A High-Precision Short-Term Photovoltaic Power Forecasting Model Based on Multivariate Variational Mode Decomposition and Gated Recurrent Unit-Attention with Crested Porcupine Optimizer-Enhanced Vector Weighted Average Algorithm

Abstract

1. Introduction

- (1)

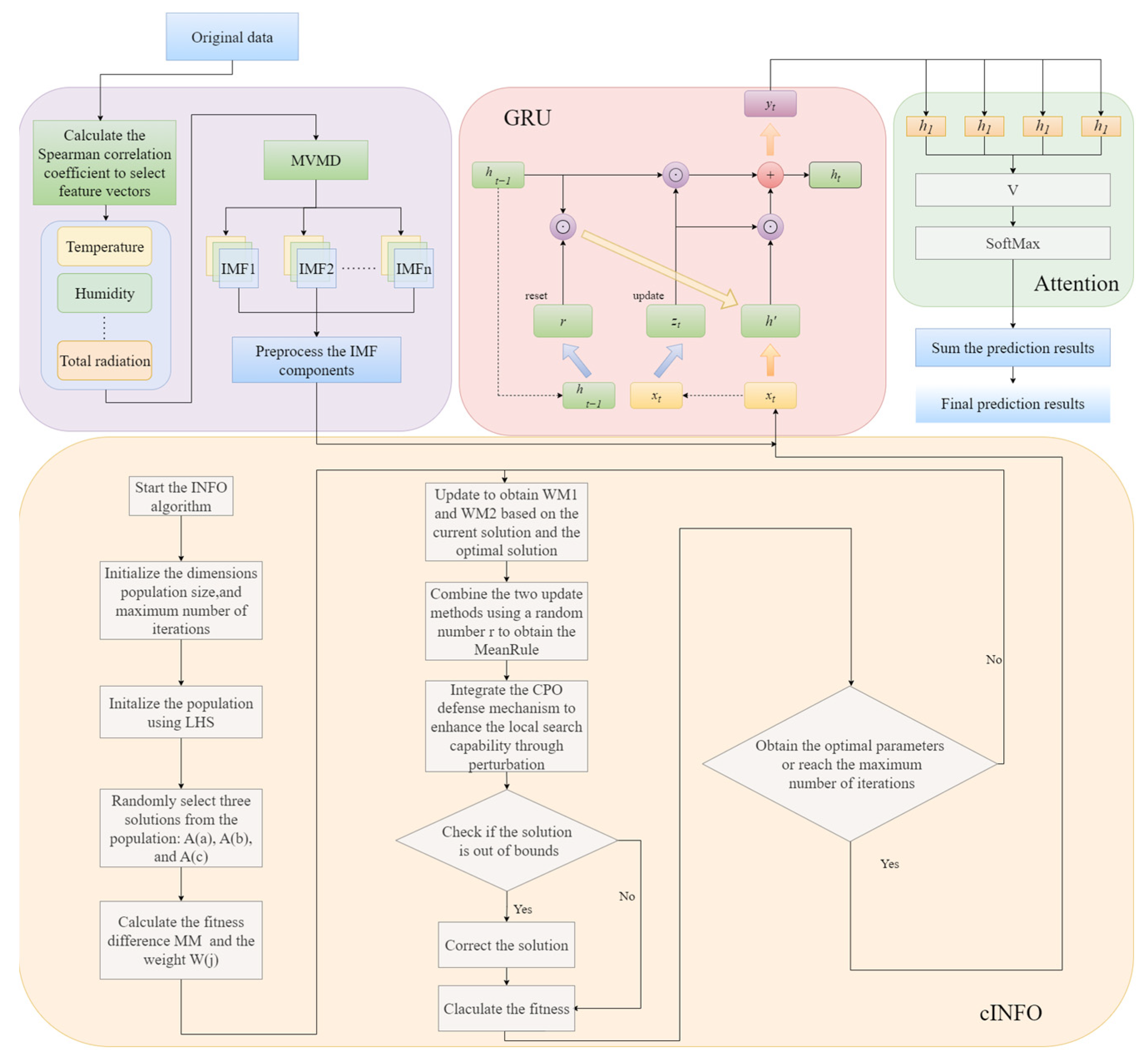

- The selection of MVMD, which is capable of processing multi-channel signals simultaneously, offers a more effective means of capturing the inherent characteristics of the signals in question. This approach enhances the decomposition ability, stability, and robustness of the system.

- (2)

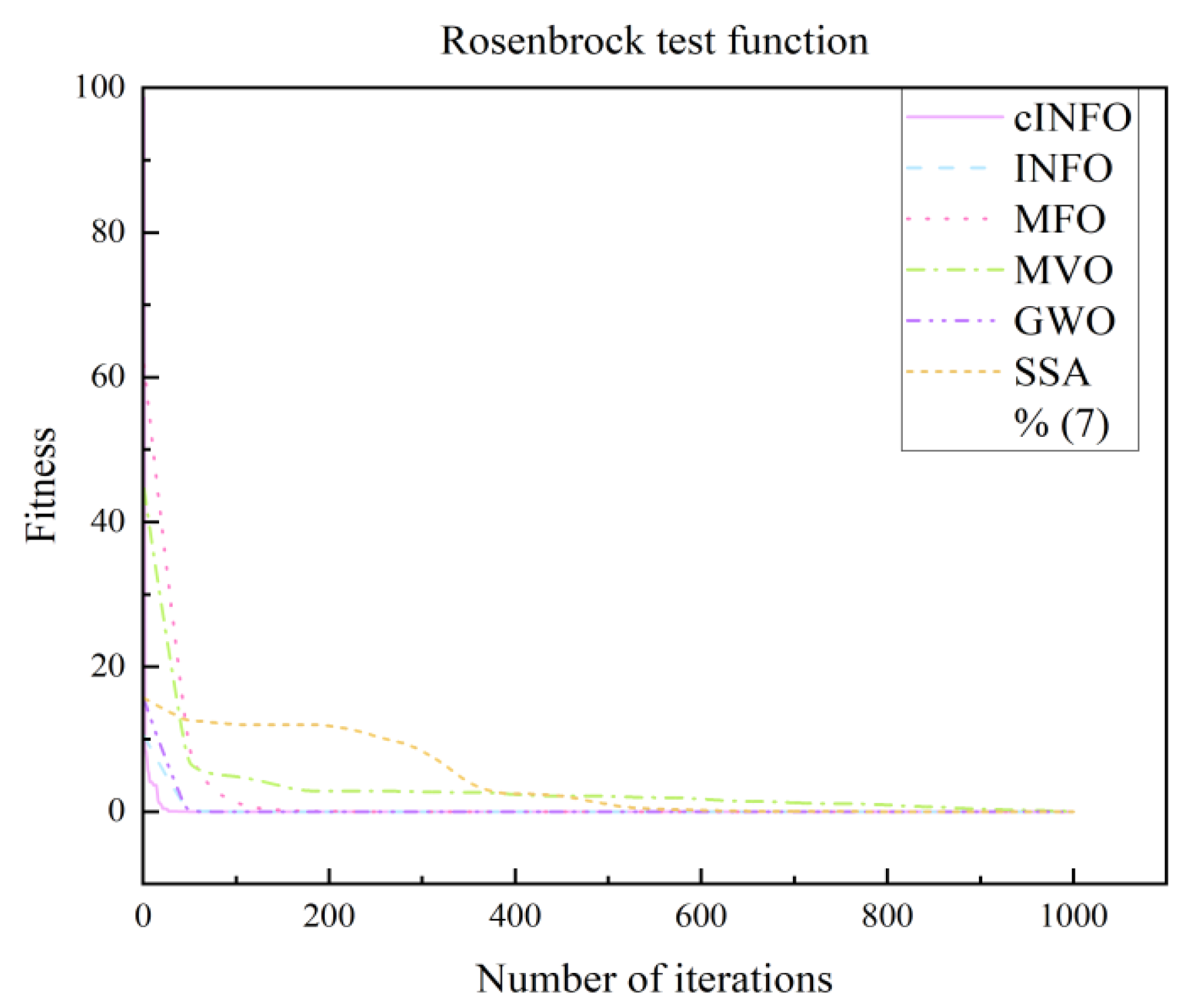

- Improvement of INFO Optimization Algorithm: In this paper, a new optimization algorithm is proposed by combining the INFO optimization algorithm with the crested porcupine optimizer optimization algorithm (CPO). In the update process of INFO algorithm, the defense mechanism of CPO is added in order to increase the population diversity and avoid falling into a local optimum. Combined with the dynamic population adjustment strategy of CPO, the algorithm can keep more solutions for global exploration in the early stage of optimization and gradually reduce the population size in the later stage to accelerate the convergence. In the local search stage, the adaptive weight updating mechanism of CPO is introduced to adaptively adjust the position of the solution according to the value of the objective function to improve the search efficiency of the algorithm.

- (3) The attention mechanism enables the model to focus on the most pertinent parts of the input data, which improves the accuracy and generalizability of its predictions.

2. Methodology

2.1. MVMD

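- (1) For input data containing $C$ channels, denoted as $x(t) = [x_1(t), x_2(t), \ldots, x_C(t)]$, suppose there are $K$ multivariate modulated oscillations $u_k(t) = [u_{k,1}(t), u_{k,2}(t), \ldots, u_{k,C}(t)]$ such that:

  $$x(t) = \sum_{k=1}^{K} u_k(t)$$

- (2) The Hilbert transform is applied to each element of $u_k(t)$ to obtain its analytic representation, denoted as $u_+^{k}(t)$, which is then multiplied by the exponential term $e^{-j\omega_k t}$ to shift it to the corresponding center frequency $\omega_k$. The bandwidth of each mode is estimated by using $e^{-j\omega_k t}$ as a harmonic mixer of $u_+^{k}(t)$ and then taking the squared $L_2$ norm of the time gradient of the harmonically shifted signal. MVMD constrains the decomposition such that the total bandwidth of the extracted modes is minimized, while ensuring that the resulting oscillatory components can faithfully reconstruct the original signal. Under this principle, the problem is formulated as a constrained variational optimization task:

  $$\min_{\{u_{k,c}\},\{\omega_k\}} \sum_{k=1}^{K} \sum_{c=1}^{C} \left\| \partial_t \left[ u_+^{k,c}(t)\, e^{-j\omega_k t} \right] \right\|_2^2 \quad \text{s.t.} \quad \sum_{k=1}^{K} u_{k,c}(t) = x_c(t), \quad c = 1, 2, \ldots, C$$

  where $u_+^{k,c}(t)$ is the analytic representation of mode $k$ in channel $c$.

- (3) In solving this multivariate variational problem, the number of linear equality constraints corresponds to the total number of channels, and accordingly the augmented Lagrangian function is as follows:

  $$\mathcal{L}\left(\{u_{k,c}\},\{\omega_k\},\lambda_c\right) = \alpha \sum_{k=1}^{K} \sum_{c=1}^{C} \left\| \partial_t \left[ u_+^{k,c}(t)\, e^{-j\omega_k t} \right] \right\|_2^2 + \sum_{c=1}^{C} \left\| x_c(t) - \sum_{k=1}^{K} u_{k,c}(t) \right\|_2^2 + \sum_{c=1}^{C} \left\langle \lambda_c(t),\; x_c(t) - \sum_{k=1}^{K} u_{k,c}(t) \right\rangle$$

  where $\lambda_c$ is the Lagrangian multiplier for channel $c$, introduced to enforce the constraint that the sum of the modes equals the original input signal, and $\alpha$ is the penalty factor.

- (4) To solve this transformed unconstrained variational problem, the alternating direction method of multipliers (ADMM) is applied to update the modes and center frequencies alternately; the decomposed signal components are then obtained from the converged modes and their center frequencies. The mode update is expressed as:

  $$\hat{u}_{k,c}^{\,n+1}(\omega) = \frac{\hat{x}_c(\omega) - \sum_{i \neq k} \hat{u}_{i,c}(\omega) + \hat{\lambda}_c^{\,n}(\omega)/2}{1 + 2\alpha \left(\omega - \omega_k^{\,n}\right)^2}$$

  The center frequency update is expressed as:

  $$\omega_k^{\,n+1} = \frac{\sum_{c=1}^{C} \int_0^{\infty} \omega \left| \hat{u}_{k,c}^{\,n+1}(\omega) \right|^2 d\omega}{\sum_{c=1}^{C} \int_0^{\infty} \left| \hat{u}_{k,c}^{\,n+1}(\omega) \right|^2 d\omega}$$

  where a hat denotes the Fourier transform and $n$ is the iteration index.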
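The mode update and center frequency update can be illustrated with a minimal NumPy sketch of one ADMM sweep for a single mode. It assumes the standard MVMD update rules (a Wiener-filter-like mode update followed by a power-weighted mean frequency pooled over channels). The function names, the toy two-channel signal, and the values `alpha = 2000.0` and `omega_k = 0.1` are illustrative assumptions, and the mirror extension and multiplier update used by full implementations are omitted.

```python
import numpy as np

def update_mode(x_hat, other_modes_sum, lam_hat, freqs, omega_k, alpha):
    """Wiener-filter-like update of one mode for all channels at once.

    x_hat, other_modes_sum, lam_hat: complex spectra of shape (C, F).
    freqs: non-negative frequencies of shape (F,); omega_k: scalar.
    """
    numerator = x_hat - other_modes_sum + lam_hat / 2.0
    return numerator / (1.0 + 2.0 * alpha * (freqs - omega_k) ** 2)

def update_center_frequency(u_hat_k, freqs):
    """Power-weighted mean frequency, pooled over channels (shape (C, F))."""
    power = np.abs(u_hat_k) ** 2
    return float((power * freqs).sum() / power.sum())

# Toy two-channel signal sharing one tone at 0.125 cycles per sample.
n = 512
t = np.arange(n)
x = np.stack([np.cos(2 * np.pi * 0.125 * t),
              0.5 * np.sin(2 * np.pi * 0.125 * t)])

freqs = np.fft.rfftfreq(n)          # non-negative half of the spectrum
x_hat = np.fft.rfft(x, axis=1)
zeros = np.zeros_like(x_hat)        # no other modes, zero multiplier

# Start from a deliberately wrong center frequency and apply one sweep.
u_hat = update_mode(x_hat, zeros, zeros, freqs, omega_k=0.1, alpha=2000.0)
omega_new = update_center_frequency(u_hat, freqs)
print(round(omega_new, 4))
```

Because the center frequency is pooled over channels, all channels of a mode are forced to share one frequency, which is what aligns the modes across the PV and meteorological series.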

2.2. cINFO-GRU-ATT Model

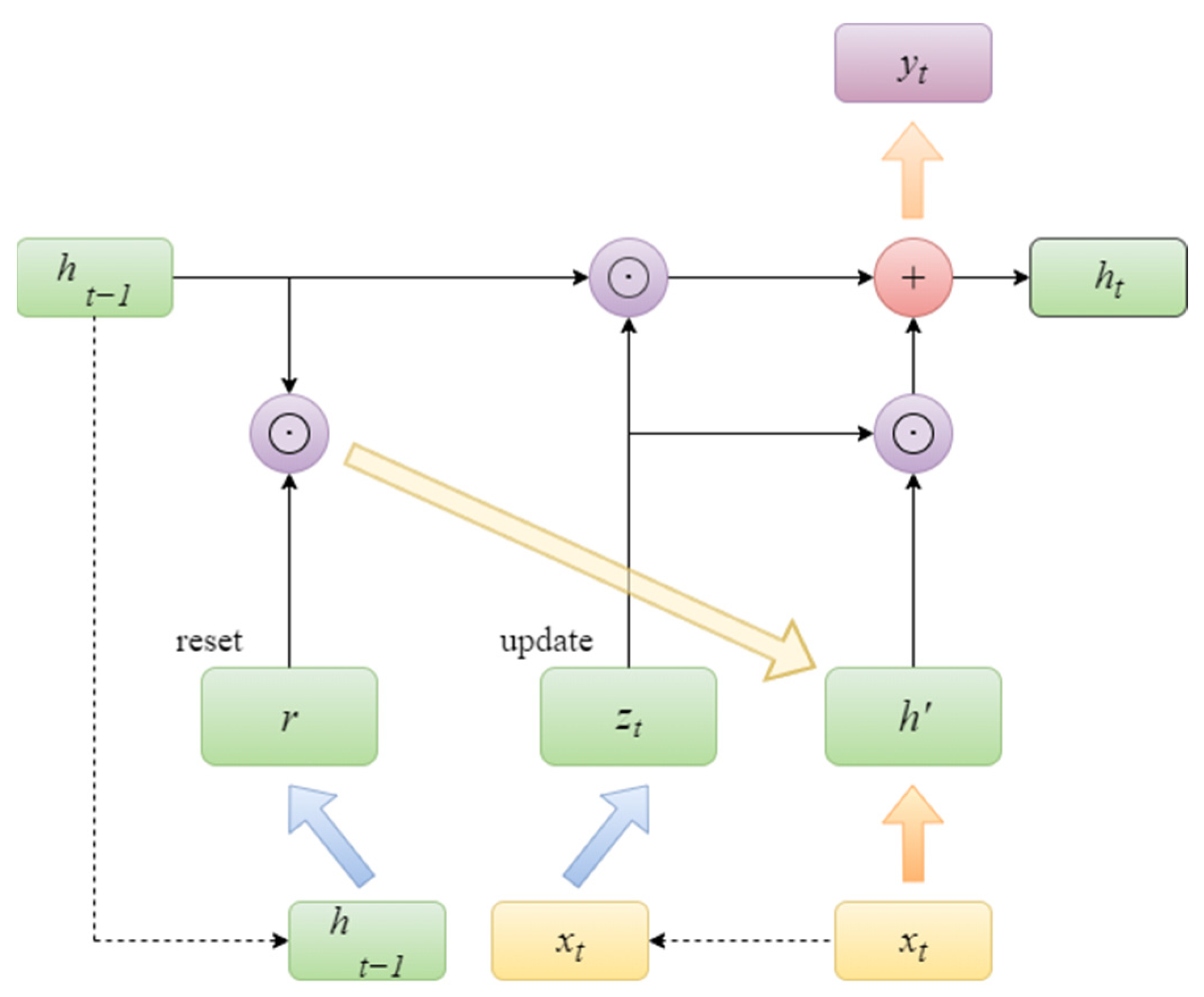

2.2.1. GRU Network

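- (1) Data input: a set of input features, including historical power generation and meteorological data, is used as input.

- (2) Initialization: the initial state of the hidden layer is set.

- (3) Time step cycling: at each time step, the update gate, reset gate, candidate state, and new hidden state are computed in turn from the current input and the previous hidden state.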
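The three steps above can be sketched with a plain NumPy GRU forward pass. The weight names, the random initialization, the sizes (6 input features, 10 hidden nodes, a window of 7 steps), and the gate convention are assumptions for illustration; this is not the authors' implementation.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def gru_forward(x_seq, params, h0=None):
    """Run a GRU over x_seq of shape (steps, n_features); return all hidden states."""
    W_z, U_z, b_z, W_r, U_r, b_r, W_h, U_h, b_h = params
    # Step (2): set the initial hidden state (zeros unless provided).
    h = np.zeros(b_z.shape[0]) if h0 is None else h0
    states = []
    # Step (3): loop over time steps.
    for x_t in x_seq:
        z = sigmoid(W_z @ x_t + U_z @ h + b_z)              # update gate
        r = sigmoid(W_r @ x_t + U_r @ h + b_r)              # reset gate
        h_tilde = np.tanh(W_h @ x_t + U_h @ (r * h) + b_h)  # candidate state
        # One common convention; some references swap z and (1 - z).
        h = (1.0 - z) * h + z * h_tilde
        states.append(h)
    return np.stack(states)

rng = np.random.default_rng(0)
n_features, hidden, steps = 6, 10, 7   # hypothetical sizes

def init(shape):
    return 0.1 * rng.standard_normal(shape)

# Order: (W, U, b) for the update gate, reset gate, and candidate state.
shapes = [(hidden, n_features), (hidden, hidden), (hidden,)]
params = tuple(init(s) for _ in range(3) for s in shapes)

# Step (1): input features for one window (random stand-in data).
x_seq = rng.standard_normal((steps, n_features))
states = gru_forward(x_seq, params)
print(states.shape)
```

The last row of `states` is the hidden state that a downstream layer, such as the attention layer, would consume.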

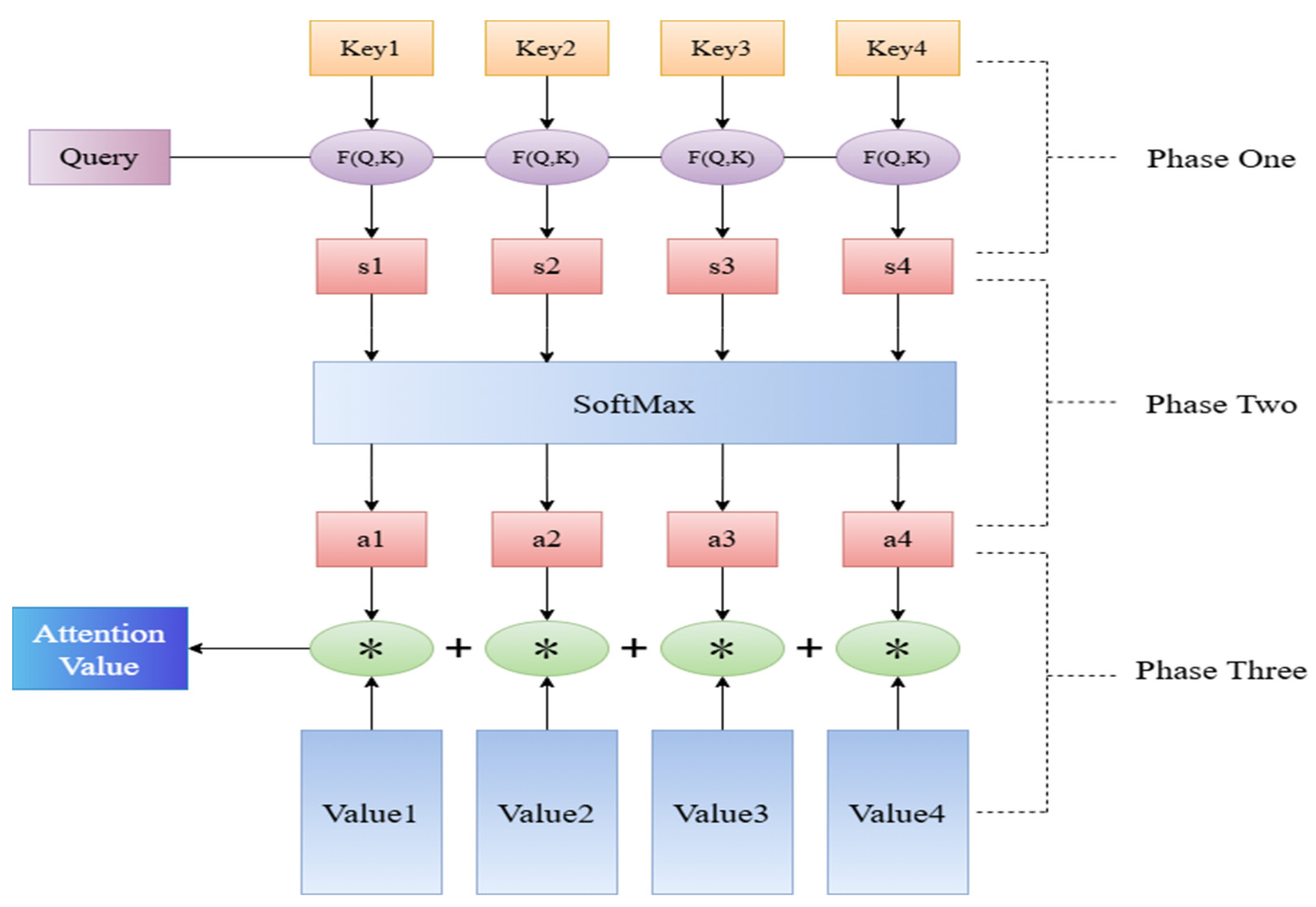

2.2.2. Attention Mechanisms

- (1) Calculate the degree of similarity (attention score) between the decoder state at the previous moment and the encoder output at each moment:

  $$e_{t,i} = F(h_i, s_{t-1})$$

  where $h_i$ is the $i$-th output of the encoder; $s_{t-1}$ is the output state of the decoder at moment $t-1$; and $F$ is the transformation function for computing the attention score.

- (2) The attention scores calculated in the previous step are passed through a softmax transformation to obtain their probability distribution:

  $$\alpha_{t,i} = \frac{\exp(e_{t,i})}{\sum_{j} \exp(e_{t,j})}$$

  where $e_{t,i}$ is the attention score of the decoder for the $i$-th encoder output at moment $t$, and $\alpha_{t,i}$ is the probability distribution of $e_{t,i}$.

- (3) The attention vector at moment $t$ is computed from $\alpha_{t,i}$ and the full set of encoder states:

  $$c_t = \sum_{i} \alpha_{t,i}\, h_i$$

- (4) The attention vector is combined with the decoder input to create a new input for decoding:

  $$\tilde{x}_t = G(x_t, c_t)$$

  where $x_t$ is the input to the decoder at moment $t$, $\tilde{x}_t$ is the new decoder input, and $G$ is the transformation function that computes the decoded input.
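The four attention steps can be sketched in NumPy as follows. The source does not specify the transformation functions, so this sketch assumes a dot product for the score function and simple concatenation for the combination step; the array sizes and random data are also hypothetical.

```python
import numpy as np

def softmax(v):
    # Subtract the maximum for numerical stability.
    e = np.exp(v - v.max())
    return e / e.sum()

def attention_step(enc_outputs, dec_state_prev, dec_input):
    """enc_outputs: (T, H); dec_state_prev: (H,); dec_input: (D,)."""
    # Step (1): attention scores (assumed dot-product score function).
    scores = enc_outputs @ dec_state_prev
    # Step (2): softmax turns the scores into a probability distribution.
    weights = softmax(scores)
    # Step (3): attention vector as the weighted sum of encoder outputs.
    context = weights @ enc_outputs
    # Step (4): combine with the decoder input (assumed concatenation).
    new_input = np.concatenate([dec_input, context])
    return weights, context, new_input

rng = np.random.default_rng(0)
enc_outputs = rng.standard_normal((7, 10))   # 7 time steps, 10 hidden nodes
dec_state_prev = rng.standard_normal(10)
dec_input = rng.standard_normal(3)

weights, context, new_input = attention_step(enc_outputs, dec_state_prev, dec_input)
print(weights.round(3), new_input.shape)
```

Time steps whose encoder output aligns with the previous decoder state receive larger weights and therefore dominate the attention vector.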

2.2.3. Improved INFO Algorithm

- (I)

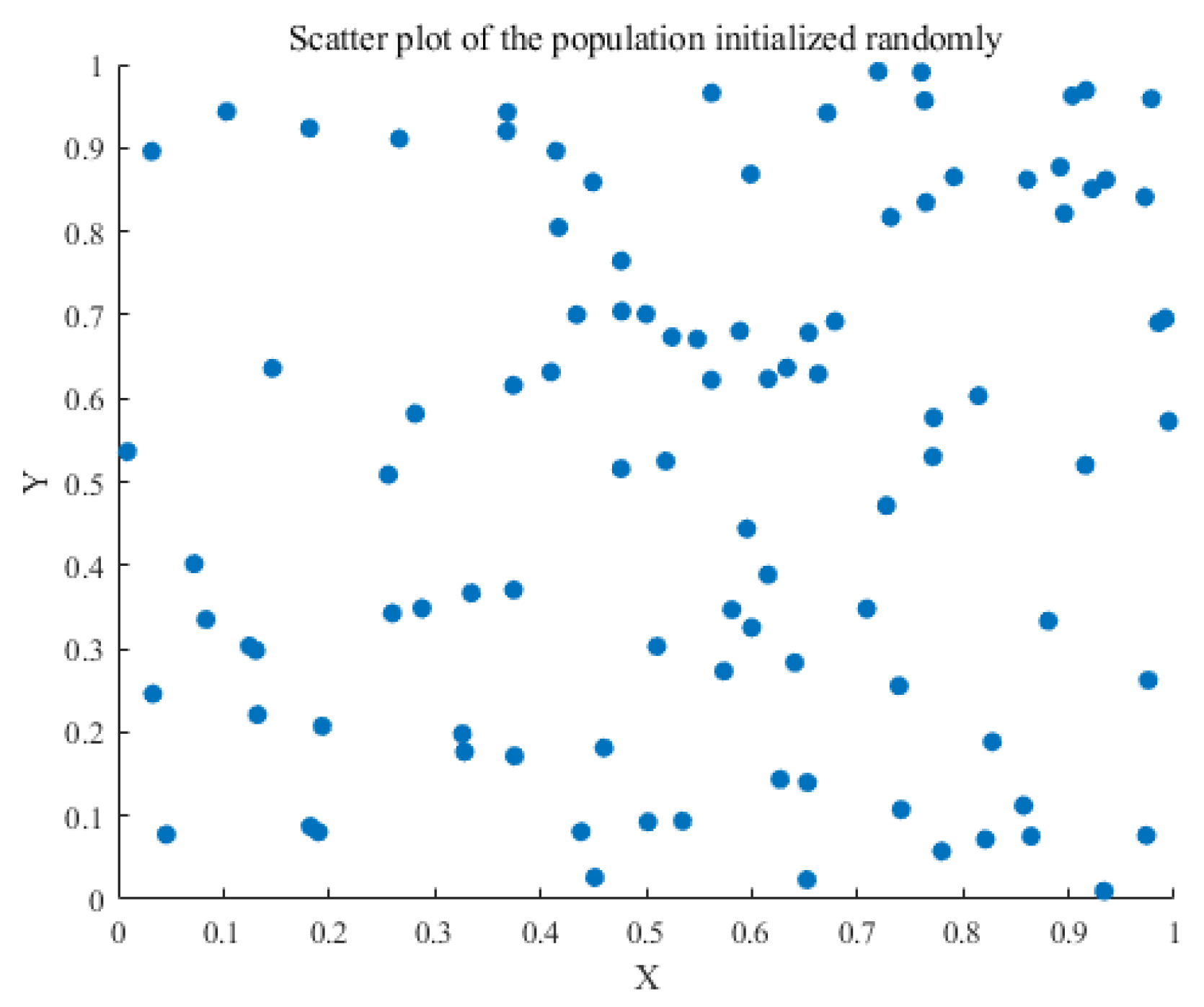

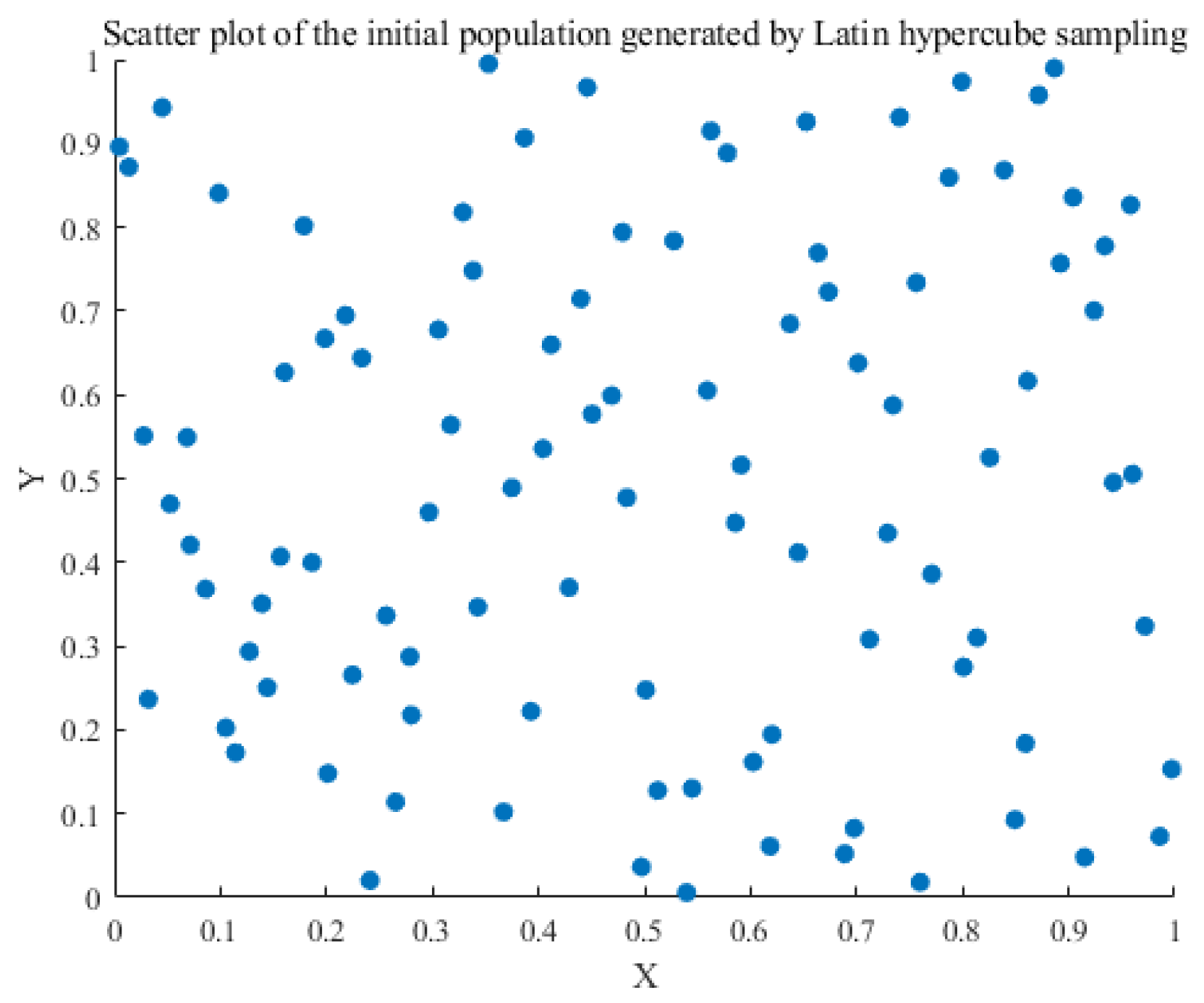

- Initialization stage

- (II)

- Stage of updating the rules

- (III)

- Vector combination stage

- (IV)

- Local search stage

- (1) Randomly select three solutions from the population: A(a), A(b), A(c).

- (2) Calculate the fitness difference between the selected solutions.

- (3) Update the solution.

- (4) Apply the CPO defense mechanism, which enhances exploration through perturbation.

- (5) Introduce a localized search mechanism.
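The initialization stage and the five local search steps can be illustrated with the sketch below. The initialization uses Latin hypercube sampling, which the conclusions name as the population initializer. The update formulas are not available in this text, so the arithmetic in `local_search_step` is a generic differential-style stand-in rather than the authors' equations, and the step size, noise level, bounds, and sphere objective are all hypothetical.

```python
import numpy as np

def latin_hypercube_init(pop_size, lower, upper, rng):
    """One sample per stratum in every dimension, scaled to [lower, upper]."""
    dim = lower.size
    # Row i falls in stratum [i/pop_size, (i+1)/pop_size) of the unit interval.
    unit = (np.arange(pop_size)[:, None] + rng.random((pop_size, dim))) / pop_size
    for d in range(dim):
        unit[:, d] = rng.permutation(unit[:, d])   # decouple the dimensions
    return lower + unit * (upper - lower)

def local_search_step(pop, fitness, objective, lower, upper, rng,
                      step=0.5, noise=0.05):
    """Generic stand-in for steps (1)-(5), minimization; not the paper's formulas."""
    a, b, c = rng.choice(len(pop), size=3, replace=False)    # (1) pick three
    diff = fitness[b] - fitness[c]                           # (2) fitness difference
    direction = np.sign(diff) * (pop[c] - pop[b])            # toward the better one
    trial = pop[a] + step * direction                        # (3) update
    trial = trial + noise * (upper - lower) * rng.standard_normal(pop.shape[1])  # (4) perturb
    trial = np.clip(trial, lower, upper)
    f_trial = objective(trial)
    if f_trial < fitness[a]:                                 # (5) keep only improvements
        pop[a], fitness[a] = trial, f_trial
    return pop, fitness

def sphere(x):
    return float(np.sum(x ** 2))

rng = np.random.default_rng(0)
lower, upper = np.full(3, -5.0), np.full(3, 5.0)
pop = latin_hypercube_init(20, lower, upper, rng)
initial_pop = pop.copy()
fitness = np.array([sphere(p) for p in pop])
best_before = fitness.min()
for _ in range(200):
    pop, fitness = local_search_step(pop, fitness, sphere, lower, upper, rng)
best_after = fitness.min()
print(best_before, best_after)
```

Latin hypercube sampling spreads the initial population evenly along every parameter axis, which is the diversity benefit the improved algorithm relies on before the search begins.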

3. Research Framework

- Historical data from the PV power plant are collected, comprising both PV power data and meteorological data. The data are split into a training set and a test set at a ratio of 8:2.

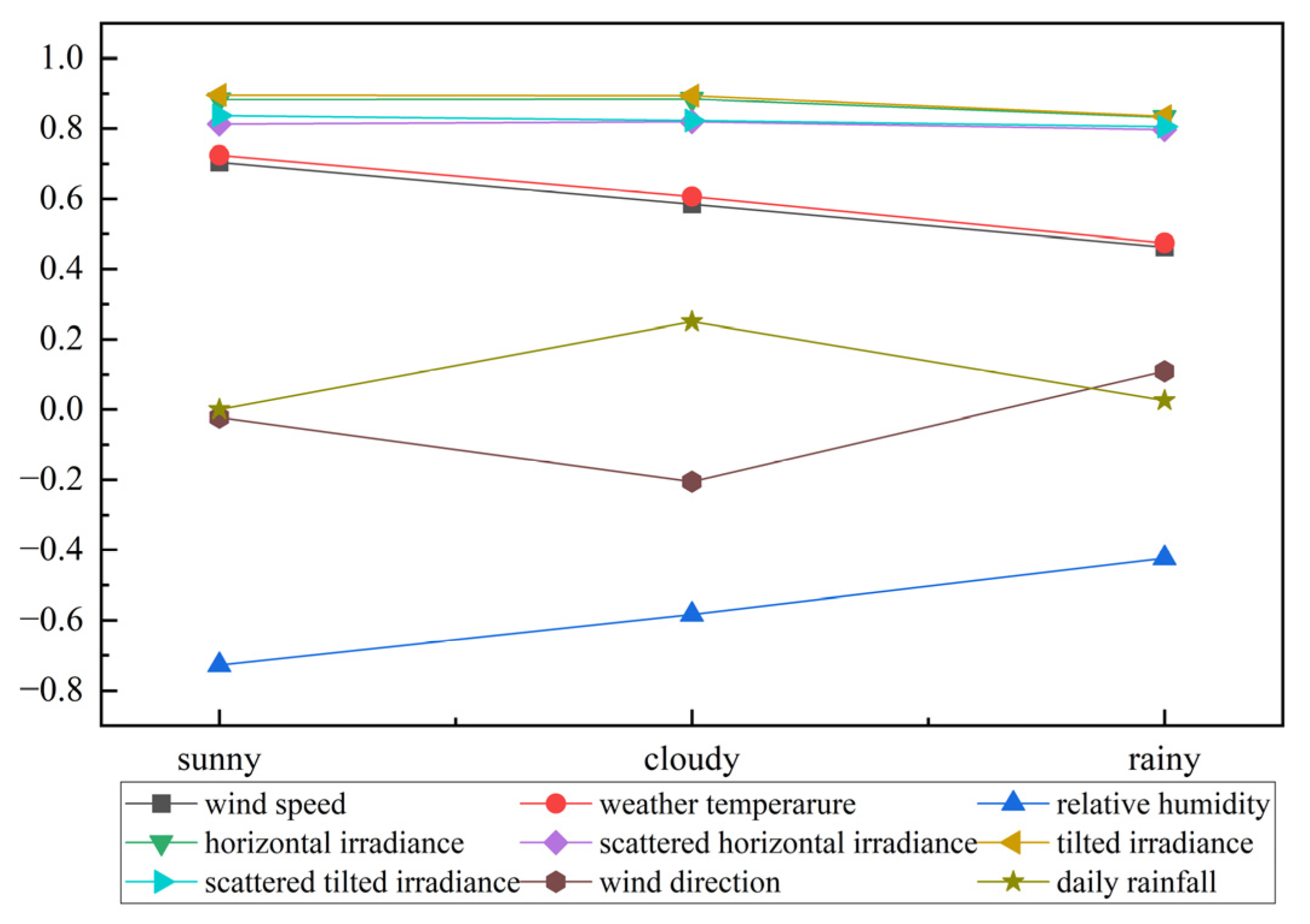

- The Spearman correlation coefficients of all meteorological factors were calculated, and the input meteorological factors were selected based on the correlation coefficients. The selected factors were temperature, humidity, total radiation, direct radiation, and diffuse radiation.

- The historical PV power generation data were decomposed using MVMD to extract the corresponding modal components.

- The GRU-ATT parameters are optimized using cINFO, thereby enhancing the model’s performance. The MVMD-decomposed PV data and correlation-screened meteorological data are employed as inputs, and the trained cINFO-GRU-ATT is utilized as a prediction model to forecast the PV power in the subsequent time period.

- The output prediction components are then superimposed to obtain the final prediction results. The results of the prediction are then summarized and analyzed in order to verify the feasibility and superiority of the model proposed in this paper.

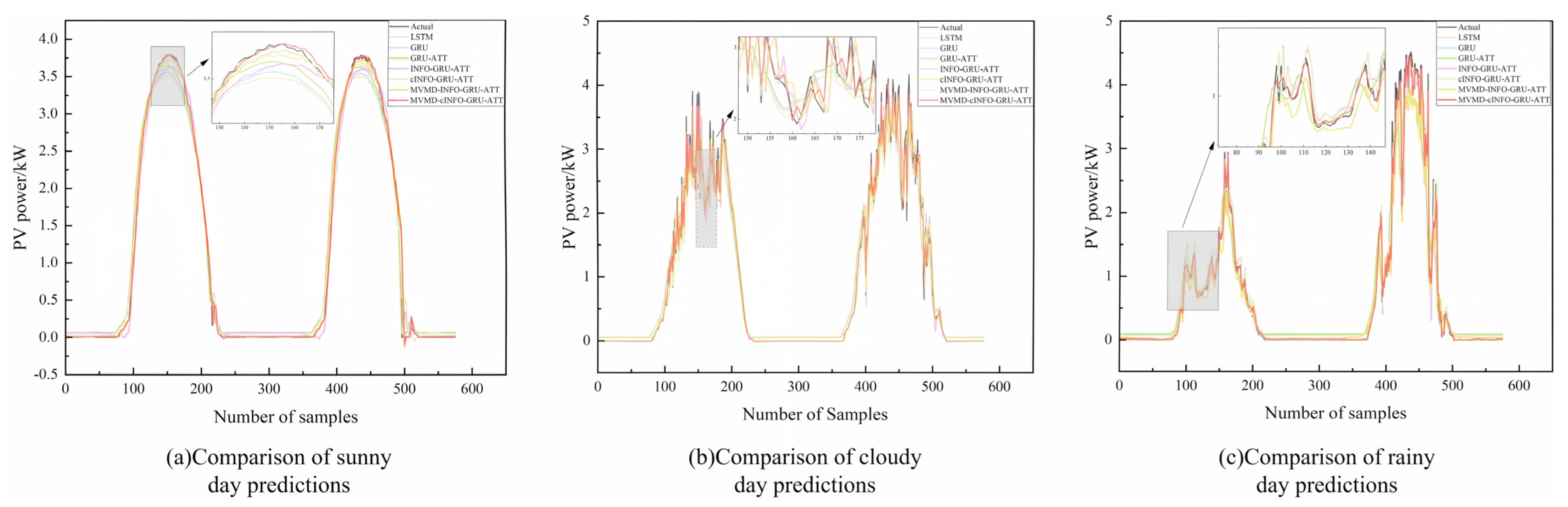

- In this study, data from three weather conditions (sunny, cloudy, and rainy) were selected and predicted to assess the generalizability of the model.
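The data split and the correlation-based feature screening described above can be sketched as follows. The source does not state how the 8:2 split is made or how ties are handled, so a chronological split and average ranks are assumed; the synthetic series are stand-ins, not the study's data.

```python
import numpy as np

def average_ranks(v):
    """Ranks from 1 to n; tied values share their average rank."""
    v = np.asarray(v, dtype=float)
    order = np.argsort(v, kind="mergesort")
    ranks = np.empty(len(v))
    ranks[order] = np.arange(1, len(v) + 1)
    for value in np.unique(v):
        mask = v == value
        ranks[mask] = ranks[mask].mean()
    return ranks

def spearman(x, y):
    """Spearman coefficient as the Pearson correlation of the ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    if rx.std() == 0 or ry.std() == 0:
        return float("nan")   # undefined when one series is constant
    return float(np.corrcoef(rx, ry)[0, 1])

def chronological_split(data, train_ratio=0.8):
    """Assumed split: first 80% for training, last 20% for testing."""
    cut = int(len(data) * train_ratio)
    return data[:cut], data[cut:]

rng = np.random.default_rng(0)
n = 200
irradiance = rng.random(n)                                # synthetic stand-in
power = irradiance ** 2 + 0.01 * rng.standard_normal(n)   # synthetic stand-in
rainfall = np.zeros(n)                                    # constant series

rho_irr = spearman(irradiance, power)
rho_rain = spearman(rainfall, power)
train, test = chronological_split(power)
print(round(rho_irr, 3), rho_rain, len(train), len(test))
```

A constant input makes the coefficient undefined, which is one plausible explanation for a NaN entry such as daily rainfall under sunny conditions.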

4. Experiment and Discussion

4.1. Data Introduction

4.2. Dataset Processing

4.2.1. Abnormal Data Processing and Data Normalization

4.2.2. Dataset Partitioning and Feature Vector Filtering

4.3. Prediction Accuracy Evaluation Index

4.4. Comparative Models

4.5. Parameter Setting

4.6. Results Analysis

5. Conclusions

- (1) The decomposition algorithm decomposes the historical data into sub-sequences, each sub-sequence is predicted independently, and the predictions are superimposed to obtain the final result. This process reduces the volatility of the historical data and improves prediction accuracy.

- (2) The INFO algorithm has been enhanced with several new techniques. These include Latin hypercube sampling to initialize the population and the defense mechanism of the CPO algorithm to enhance exploration. This approach not only increases overall diversity but also strengthens both the global and local search abilities of the algorithm, helping to avoid local optima. Furthermore, the improved cINFO algorithm is integrated with GRU to optimize its parameters, enhancing overall model performance. An attention mechanism is also incorporated, allowing the model to capture salient sequence information more effectively and thereby improve the prediction accuracy of the combined model.

- (3) The results demonstrate that the proposed combined prediction model achieves both high accuracy and strong generalizability, providing improved support for the scheduling and decision-making of distributed photovoltaic (DPV) power generation systems.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Model Category | Specific Methods | Limitations |
|---|---|---|
| Signal Decomposition | VMD | Parameter sensitivity (mode number K/penalty factor α requires extensive tuning); multi-stage decomposition generates excessive subsequences, reducing efficiency. |
| Deep Learning Models | LSTM | Prone to overfitting during training; high parameter count increases computational cost. |
| Hybrid Model | Multi-decomposition (e.g., VMD-CEEMD-SSA) | Exponential increase in subsequences from multi-stage decomposition severely impacts computational efficiency and risks reconstruction errors. |
| Hybrid Model | Multi-model integration | Complex hyperparameter optimization; low synergy efficiency between modules. |

| Parameter | Value |
|---|---|
| Array Rating/kW | 4.95 |
| Panel Rating/W | 165 |
| Number of Panels | 30 |
| Panel Type | BP 3165 |
| Array Area/m² | 37.75 |
| Inverter Size/Type | 6 kW, SMA SMC 6000 A |
| Array Tilt/Azimuth | Tilt = 20°, Azimuth = 0° (Solar North) |
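As a quick consistency check on these parameters, the array rating follows from the panel count and panel rating. The per-panel area is derived here from the table values and is not stated in the source.

```latex
30 \times 165\ \mathrm{W} = 4950\ \mathrm{W} = 4.95\ \mathrm{kW},
\qquad
\frac{37.75\ \mathrm{m^2}}{30} \approx 1.26\ \mathrm{m^2}\ \text{per panel}
```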

| Weather | Meteorological Factors | Correlation Coefficient |
|---|---|---|
| Sunny | wind speed | 0.7042 |
| Sunny | weather temperature (degrees Celsius) | 0.7245 |
| Sunny | relative humidity | −0.7276 |
| Sunny | horizontal irradiance | 0.8827 |
| Sunny | scattered horizontal irradiance | 0.8128 |
| Sunny | tilted irradiance | 0.8961 |
| Sunny | scattered tilted irradiance | 0.8371 |
| Sunny | wind direction | −0.0221 |
| Sunny | daily rainfall | NaN |
| Cloudy | wind speed | 0.5841 |
| Cloudy | weather temperature (degrees Celsius) | 0.6068 |
| Cloudy | relative humidity | −0.58301 |
| Cloudy | horizontal irradiance | 0.8843 |
| Cloudy | scattered horizontal irradiance | 0.8197 |
| Cloudy | tilted irradiance | 0.8938 |
| Cloudy | scattered tilted irradiance | 0.8228 |
| Cloudy | wind direction | −0.2057 |
| Cloudy | daily rainfall | 0.2500 |
| Rainy | wind speed | 0.4623 |
| Rainy | weather temperature (degrees Celsius) | 0.4749 |
| Rainy | relative humidity | −0.4234 |
| Rainy | horizontal irradiance | 0.8323 |
| Rainy | scattered horizontal irradiance | 0.7965 |
| Rainy | tilted irradiance | 0.8350 |
| Rainy | scattered tilted irradiance | 0.8056 |
| Rainy | wind direction | 0.1089 |
| Rainy | daily rainfall | 0.0253 |

| Weather | Model | Parameter Setting |
|---|---|---|
| Sunny | LSTM | max_iter = 300, learning rate = 0.01, Hidden_nodes = 10, N = 5 |
| Sunny | GRU | max_iter = 300, learning rate = 0.01, Hidden_nodes = 10, N = 5 |
| Sunny | GRU-ATT | max_iter = 300, learning rate = 0.01, Hidden_nodes = 10, N = 7 |
| Sunny | INFO-GRU-ATT | max_iter = 300, L = 1 × 10⁻⁶, R = 0.0078, Hidden_nodes = 47, N = 7 |
| Sunny | cINFO-GRU-ATT | max_iter = 300, L = 8.791 × 10⁻⁴, R = 5.189 × 10⁻⁴, Hidden_nodes = 100, N = 7 |
| Sunny | MVMD-INFO-GRU-ATT | max_iter = 300, L = 2.078 × 10⁻⁵, R = 0.0035, Hidden_nodes = 91, N = 7, K = 6 |
| Sunny | MVMD-cINFO-GRU-ATT | max_iter = 300, L = 1 × 10⁻⁶, R = 0.0093, Hidden_nodes = 93, N = 7, K = 6 |
| Cloudy | LSTM | max_iter = 300, learning rate = 0.01, Hidden_nodes = 10, N = 5 |
| Cloudy | GRU | max_iter = 300, learning rate = 0.01, Hidden_nodes = 10, N = 5 |
| Cloudy | GRU-ATT | max_iter = 300, learning rate = 0.01, Hidden_nodes = 10, N = 7 |
| Cloudy | INFO-GRU-ATT | max_iter = 300, L = 1 × 10⁻⁶, R = 9.07 × 10⁻⁴, Hidden_nodes = 97, N = 7 |
| Cloudy | cINFO-GRU-ATT | max_iter = 300, L = 1 × 10⁻⁶, R = 0.0081, Hidden_nodes = 98, N = 7 |
| Cloudy | MVMD-INFO-GRU-ATT | max_iter = 300, L = 1 × 10⁻⁶, R = 0.01, Hidden_nodes = 29, N = 7, K = 6 |
| Cloudy | MVMD-cINFO-GRU-ATT | max_iter = 300, L = 1 × 10⁻⁶, R = 0.0078, Hidden_nodes = 67, N = 7, K = 6 |
| Rainy | LSTM | max_iter = 300, learning rate = 0.01, Hidden_nodes = 10, N = 5 |
| Rainy | GRU | max_iter = 300, learning rate = 0.01, Hidden_nodes = 10, N = 5 |
| Rainy | GRU-ATT | max_iter = 300, learning rate = 0.01, Hidden_nodes = 10, N = 7 |
| Rainy | INFO-GRU-ATT | max_iter = 300, L = 1.027 × 10⁻⁶, R = 0.0029, Hidden_nodes = 10, N = 7 |
| Rainy | cINFO-GRU-ATT | max_iter = 300, L = 1 × 10⁻⁶, R = 0.0093, Hidden_nodes = 30, N = 7 |
| Rainy | MVMD-INFO-GRU-ATT | max_iter = 300, L = 1.489 × 10⁻⁶, R = 0.0093, Hidden_nodes = 100, N = 7, K = 6 |
| Rainy | MVMD-cINFO-GRU-ATT | max_iter = 300, L = 1 × 10⁻⁶, R = 0.007, Hidden_nodes = 100, N = 7, K = 6 |

| Weather | Model | MAE | RMSE | R² | Computational Time (s) |
|---|---|---|---|---|---|
| Sunny | LSTM | 0.1221 | 0.1692 | 95.52% | 2232.56 |
| Sunny | GRU | 0.1233 | 0.1799 | 94.75% | 2156.32 |
| Sunny | GRU-ATT | 0.1170 | 0.1637 | 95.81% | 2354.55 |
| Sunny | INFO-GRU-ATT | 0.1009 | 0.1479 | 99.03% | 2659.03 |
| Sunny | cINFO-GRU-ATT | 0.0573 | 0.0995 | 99.56% | 2703.25 |
| Sunny | MVMD-INFO-GRU-ATT | 0.0354 | 0.0758 | 99.65% | 2756.23 |
| Sunny | MVMD-cINFO-GRU-ATT | 0.0249 | 0.0693 | 99.79% | 2658.42 |
| Cloudy | LSTM | 0.1914 | 0.3136 | 90.32% | 1604.65 |
| Cloudy | GRU | 0.1823 | 0.2997 | 90.55% | 1535.25 |
| Cloudy | GRU-ATT | 0.1814 | 0.2986 | 92.14% | 1603.76 |
| Cloudy | INFO-GRU-ATT | 0.1453 | 0.2292 | 94.46% | 1985.25 |
| Cloudy | cINFO-GRU-ATT | 0.1389 | 0.2177 | 94.87% | 1925.36 |
| Cloudy | MVMD-INFO-GRU-ATT | 0.1303 | 0.1729 | 98.15% | 2204.53 |
| Cloudy | MVMD-cINFO-GRU-ATT | 0.0444 | 0.0831 | 99.57% | 2145.33 |
| Rainy | LSTM | 0.2049 | 0.3374 | 87.01% | 1325.33 |
| Rainy | GRU | 0.1950 | 0.3282 | 87.45% | 1278.79 |
| Rainy | GRU-ATT | 0.1839 | 0.3209 | 88.61% | 1326.88 |
| Rainy | INFO-GRU-ATT | 0.1052 | 0.2661 | 94.80% | 1575.26 |
| Rainy | cINFO-GRU-ATT | 0.1031 | 0.2591 | 95.07% | 1523.23 |
| Rainy | MVMD-INFO-GRU-ATT | 0.1016 | 0.1939 | 97.24% | 1835.26 |
| Rainy | MVMD-cINFO-GRU-ATT | 0.0412 | 0.0938 | 99.35% | 1756.25 |
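For reference, the three accuracy indices reported in the table can be computed as in the sketch below, using their standard definitions. The sample values are made up for illustration and are unrelated to the study's data.

```python
import numpy as np

def mae(y_true, y_pred):
    """Mean absolute error."""
    return float(np.mean(np.abs(y_true - y_pred)))

def rmse(y_true, y_pred):
    """Root mean square error."""
    return float(np.sqrt(np.mean((y_true - y_pred) ** 2)))

def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return float(1.0 - ss_res / ss_tot)

# Made-up values: three exact predictions and one error of 1.0.
y_true = np.array([0.0, 1.0, 2.0, 3.0])
y_pred = np.array([0.0, 1.0, 2.0, 2.0])

print(mae(y_true, y_pred))    # 0.25
print(rmse(y_true, y_pred))   # 0.5
print(r2(y_true, y_pred))     # 0.8, i.e. 80% in the table's notation
```

RMSE penalizes the single large error more heavily than MAE does, which is why the two indices are reported together.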

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Pian, J.; Chen, X. A High-Precision Short-Term Photovoltaic Power Forecasting Model Based on Multivariate Variational Mode Decomposition and Gated Recurrent Unit-Attention with Crested Porcupine Optimizer-Enhanced Vector Weighted Average Algorithm. Sensors 2025, 25, 5977. https://doi.org/10.3390/s25195977
