A Convolutional-Transformer Residual Network for Channel Estimation in Intelligent Reflective Surface Aided MIMO Systems

Abstract

1. Introduction

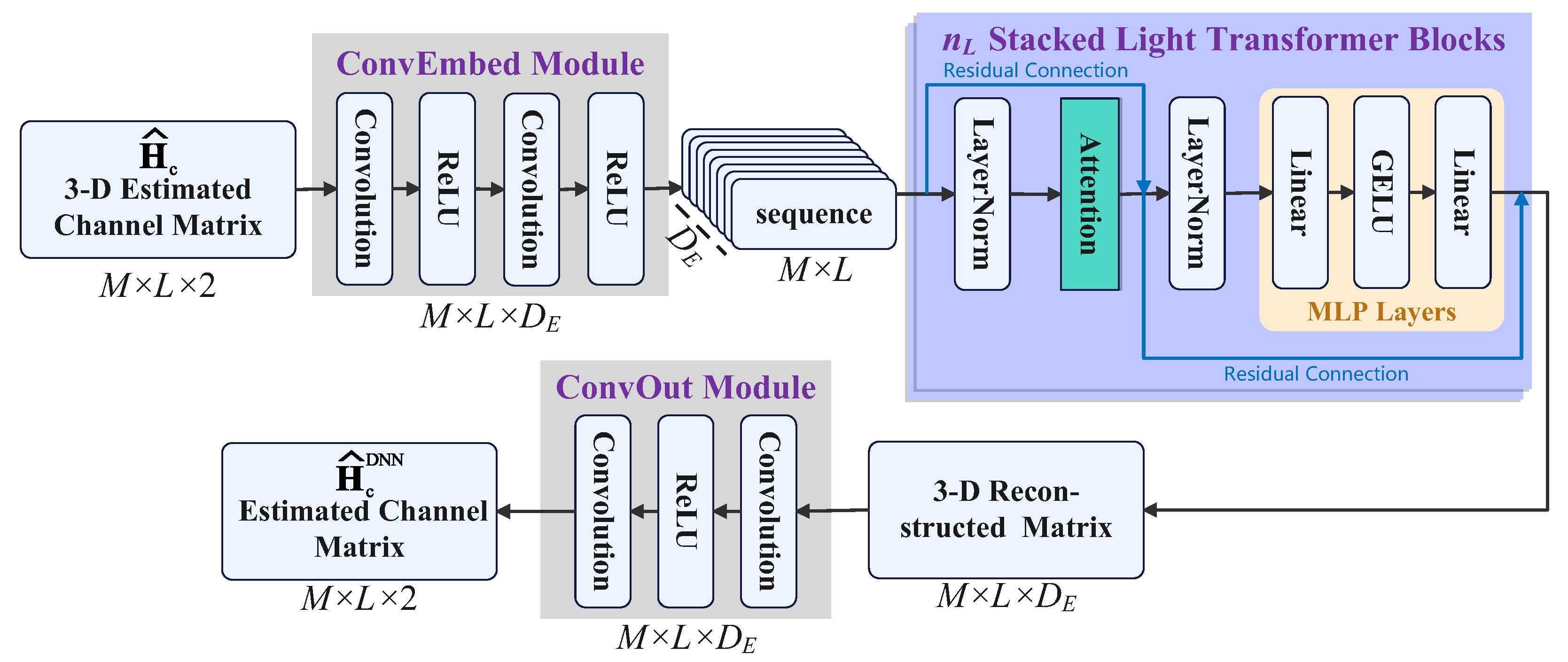

- We propose a lightweight neural network for cascaded channel estimation in IRS-aided MIMO systems. The network denoises a coarse channel estimate obtained with the BALS algorithm, combining model-driven interpretability with data-driven representation learning to improve estimation accuracy.

- To exploit the structure of IRS channels, the proposed network combines convolutional embedding blocks, which extract fine-grained local spatial features, with Transformer modules, which capture long-range dependencies. This complementary design addresses the limitations of existing methods that rely solely on either convolutional or attention-based architectures.

- To stabilize training and mitigate gradient degradation, a residual learning framework is adopted. This design facilitates gradient flow, accelerates convergence, and improves robustness against noise, allowing deeper stacking without sacrificing accuracy.

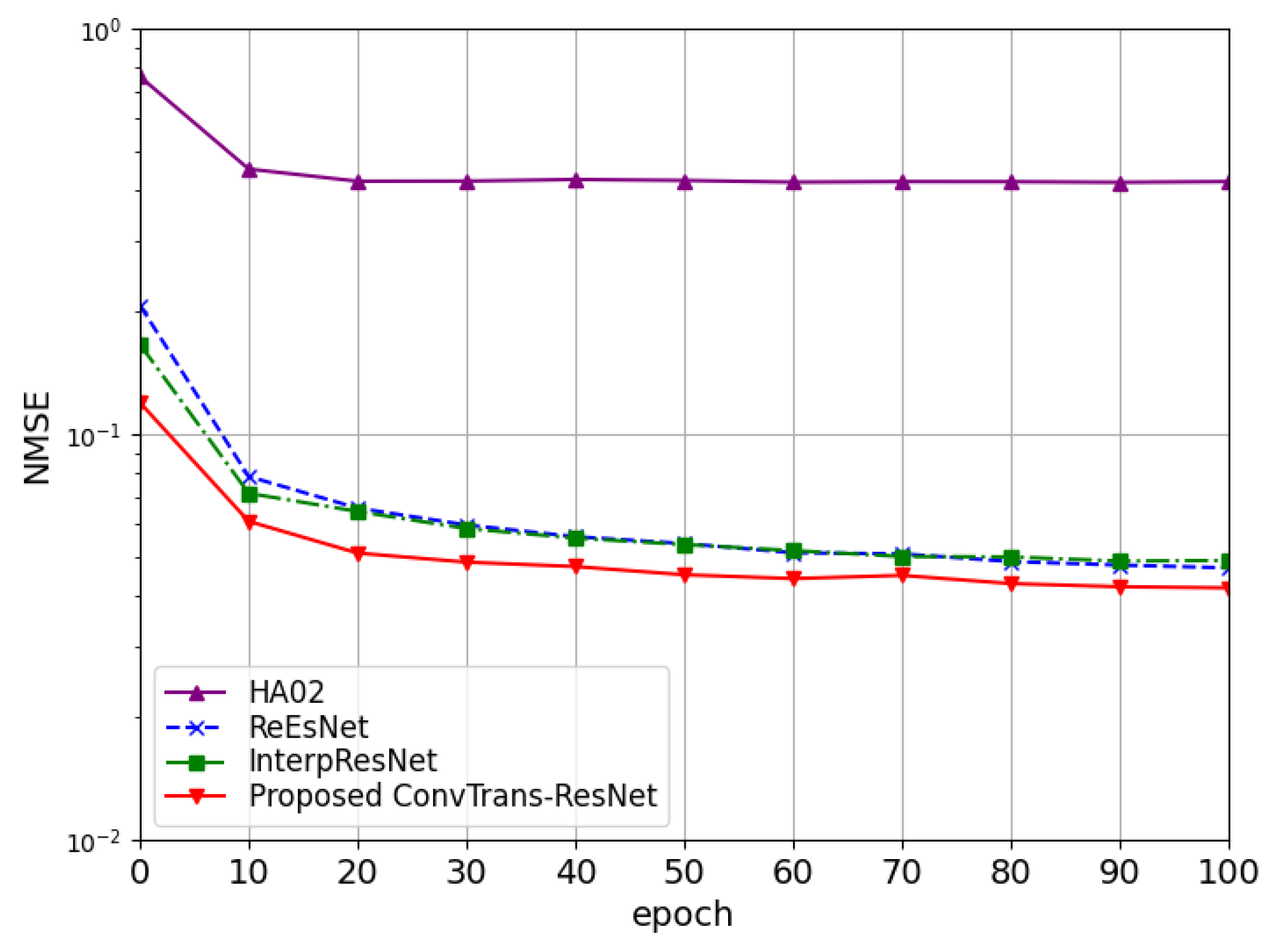

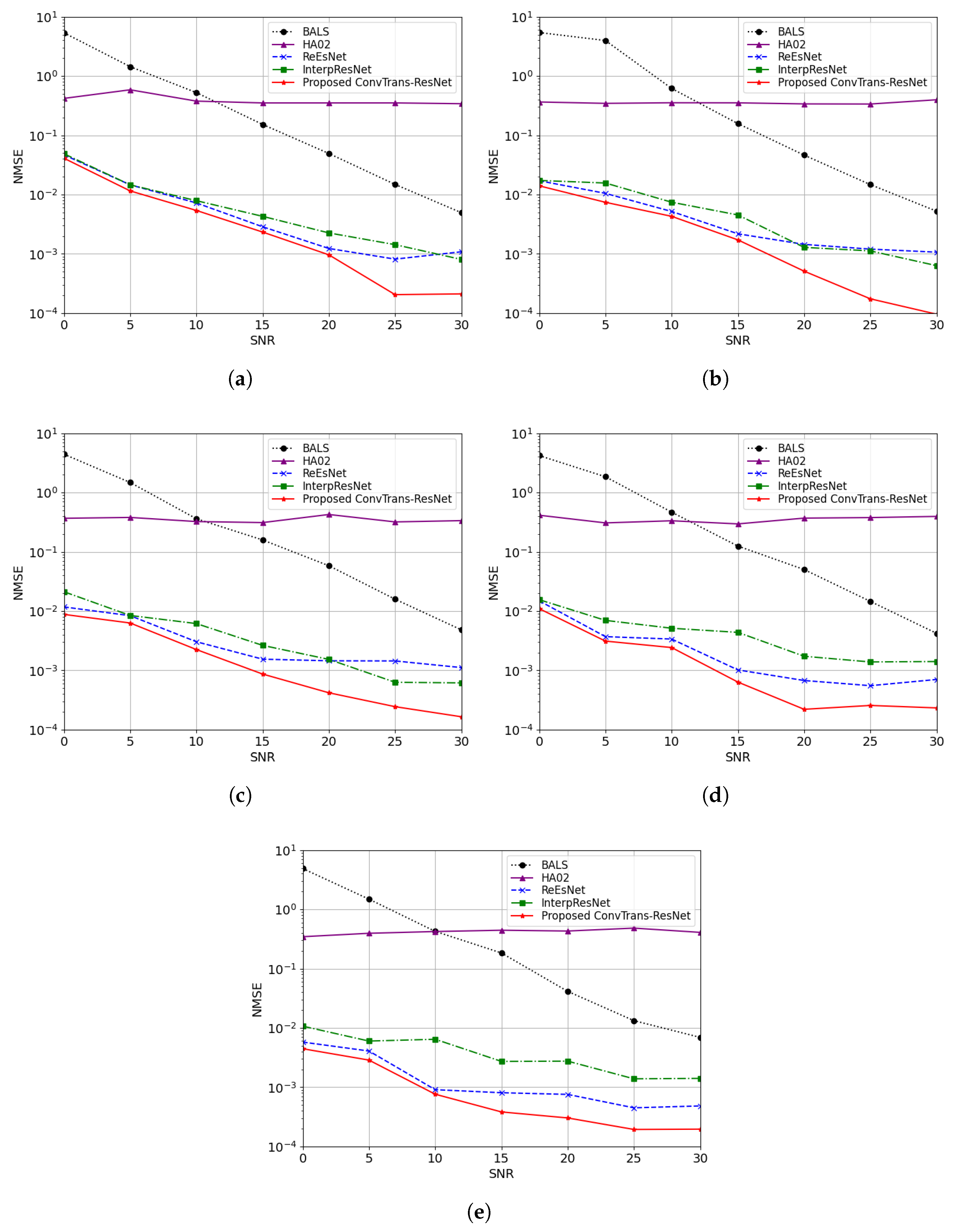

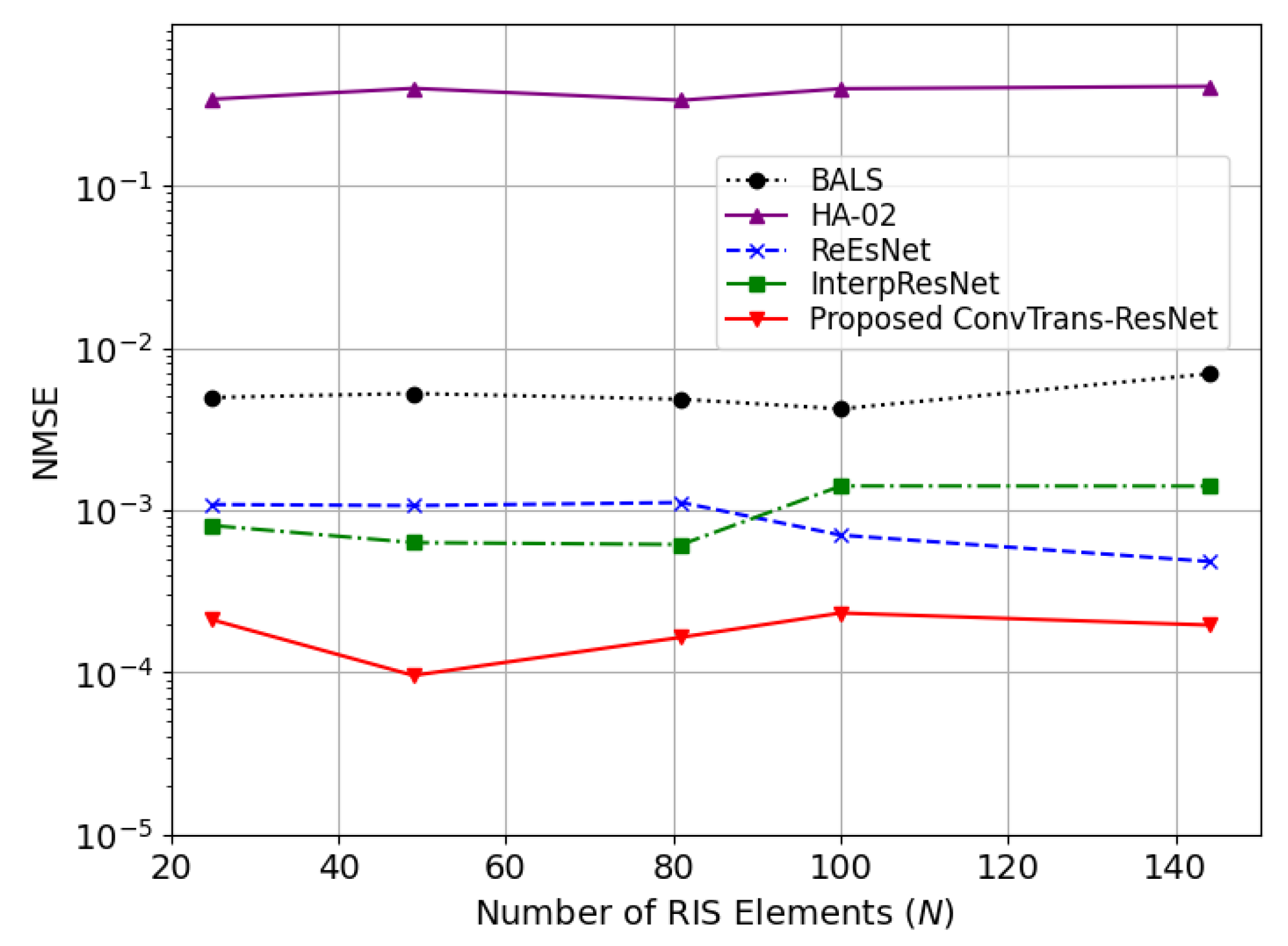

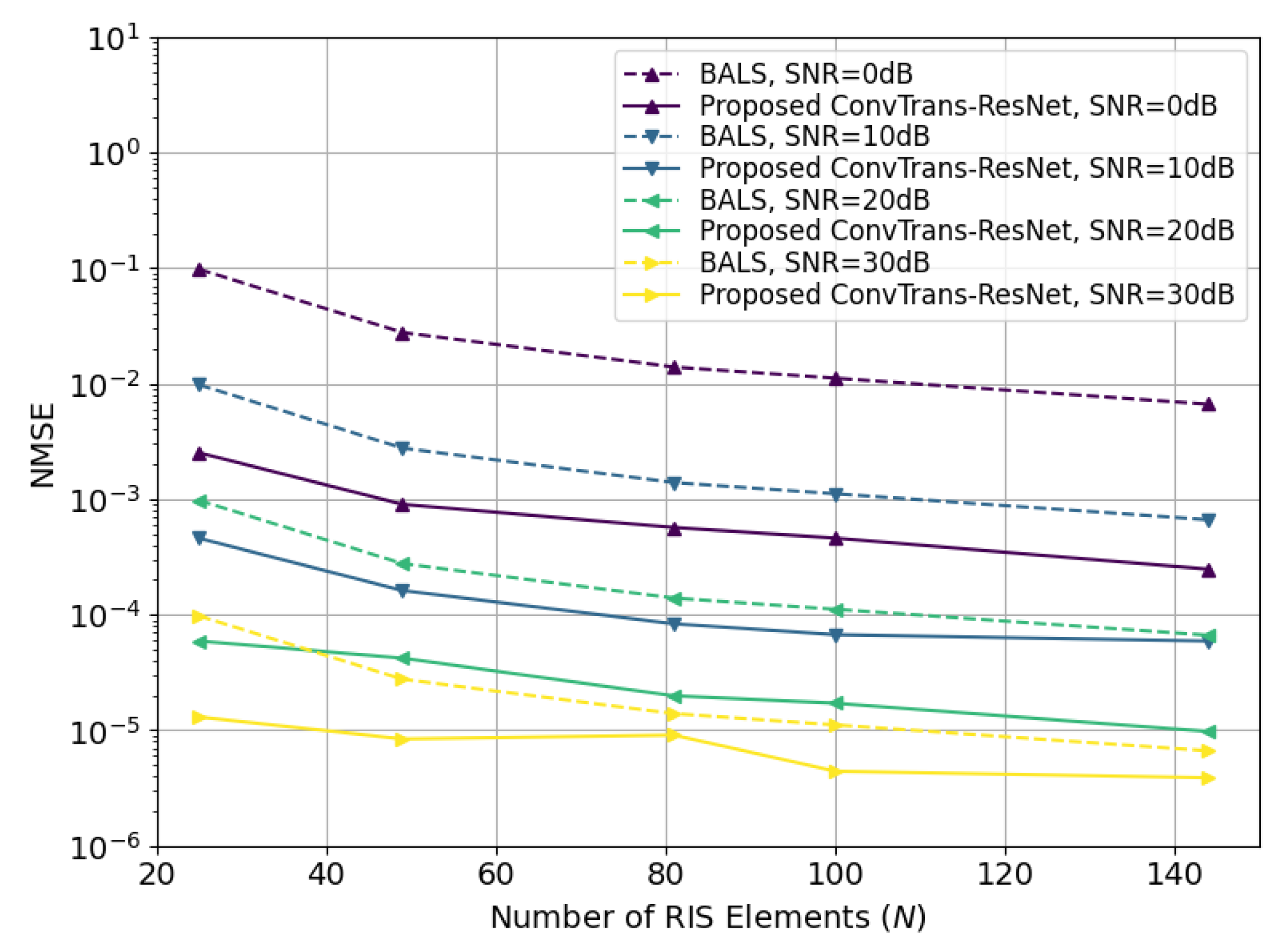

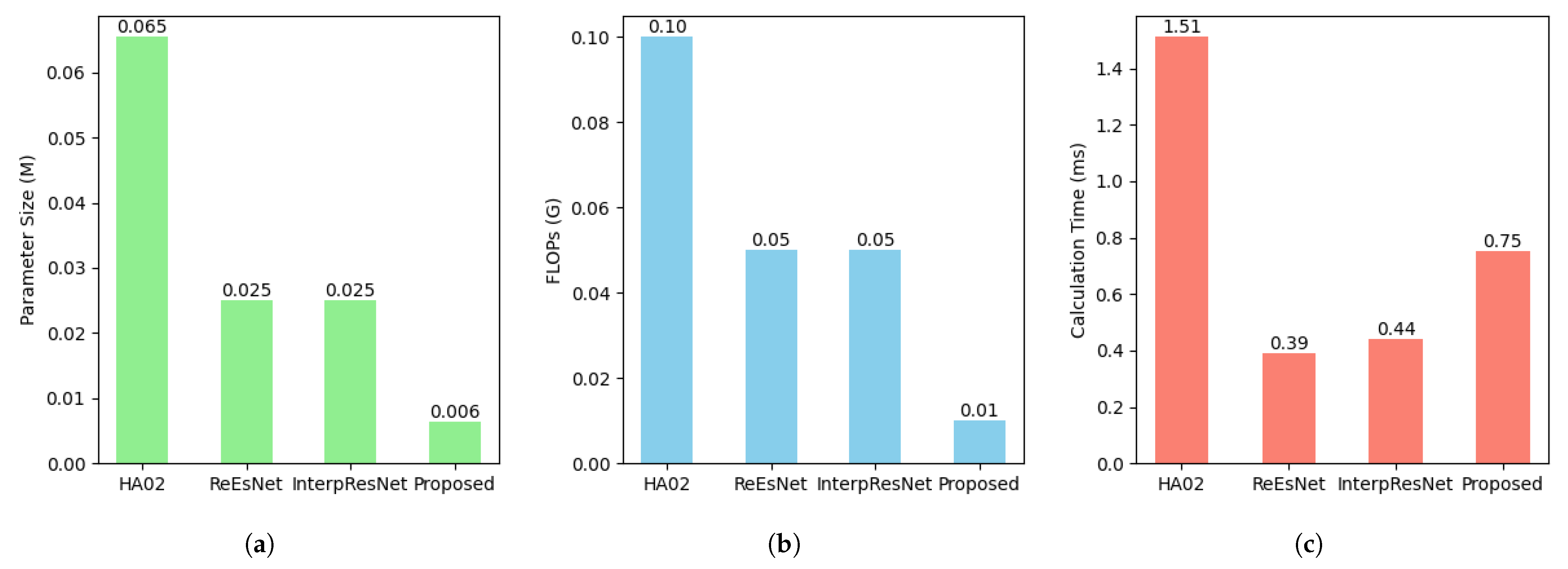

- A detailed ablation study examines the impact of key architectural parameters and identifies an optimal configuration. Simulation results show that the proposed ConvTrans-ResNet consistently outperforms state-of-the-art approaches such as ReEsNet, InterpResNet, HA02, and BALS across a wide range of SNRs and IRS sizes, while significantly reducing parameter count and FLOPs, making it well suited to real-time and resource-constrained deployments.
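The pipeline described in the contributions above (convolutional embedding, Transformer block with residual connections, convolutional output) can be illustrated with a small NumPy forward pass. This is only a sketch under stated assumptions, not the authors' implementation: the function names, layer sizes, the real/imaginary two-channel input layout, the single Transformer block, and the global skip connection from the coarse estimate to the output are all hypothetical choices made for illustration, and the weights are random rather than trained.

```python
import numpy as np

def conv2d_same(x, w):
    """3x3 'same' convolution. x: (C_in, H, W), w: (C_out, C_in, 3, 3)."""
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((w.shape[0], h, wd))
    for di in range(3):
        for dj in range(3):
            # Contract the input channels for one kernel offset at a time.
            out += np.einsum("oc,chw->ohw", w[:, :, di, dj],
                             xp[:, di:di + h, dj:dj + wd])
    return out

def layer_norm(t, eps=1e-5):
    mu = t.mean(axis=-1, keepdims=True)
    var = t.var(axis=-1, keepdims=True)
    return (t - mu) / np.sqrt(var + eps)

def softmax(a):
    e = np.exp(a - a.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)

def self_attention(t, wq, wk, wv, n_heads):
    """Multi-head self-attention over tokens t: (n_tokens, d)."""
    n, d = t.shape
    dh = d // n_heads
    q = (t @ wq).reshape(n, n_heads, dh).transpose(1, 0, 2)
    k = (t @ wk).reshape(n, n_heads, dh).transpose(1, 0, 2)
    v = (t @ wv).reshape(n, n_heads, dh).transpose(1, 0, 2)
    att = softmax(q @ k.transpose(0, 2, 1) / np.sqrt(dh))  # (heads, n, n)
    return (att @ v).transpose(1, 0, 2).reshape(n, d)

def transformer_block(t, p):
    # Residual connections around the attention and MLP sub-layers.
    t = t + self_attention(layer_norm(t), p["wq"], p["wk"], p["wv"], p["heads"])
    hidden = np.maximum(layer_norm(t) @ p["w1"], 0.0)
    return t + hidden @ p["w2"]

def init_params(d=8, heads=2, hidden=16, seed=0):
    """Random (untrained) weights; all sizes are illustrative assumptions."""
    rng = np.random.default_rng(seed)
    w = lambda *shape: 0.1 * rng.standard_normal(shape)
    return {"embed": w(d, 2, 3, 3), "wq": w(d, d), "wk": w(d, d), "wv": w(d, d),
            "w1": w(d, hidden), "w2": w(hidden, d), "out": w(2, d, 3, 3),
            "heads": heads}

def denoise(coarse, p):
    """coarse: (2, H, W) real/imag planes of a coarse channel estimate."""
    feat = conv2d_same(coarse, p["embed"])       # local features, (d, H, W)
    d, h, w = feat.shape
    tokens = feat.reshape(d, h * w).T            # one token per position
    tokens = transformer_block(tokens, p)        # long-range dependencies
    feat = tokens.T.reshape(d, h, w)
    # Assumed global skip: the network predicts a correction to the input.
    return coarse + conv2d_same(feat, p["out"])

coarse = np.random.default_rng(1).standard_normal((2, 8, 6))
refined = denoise(coarse, init_params())
print(refined.shape)
```

With the assumed global skip connection, zeroing the output convolution makes the network an identity map on the coarse estimate, which is one reason residual formulations tend to be easy to train from a reasonable initial estimate.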

2. Related Work

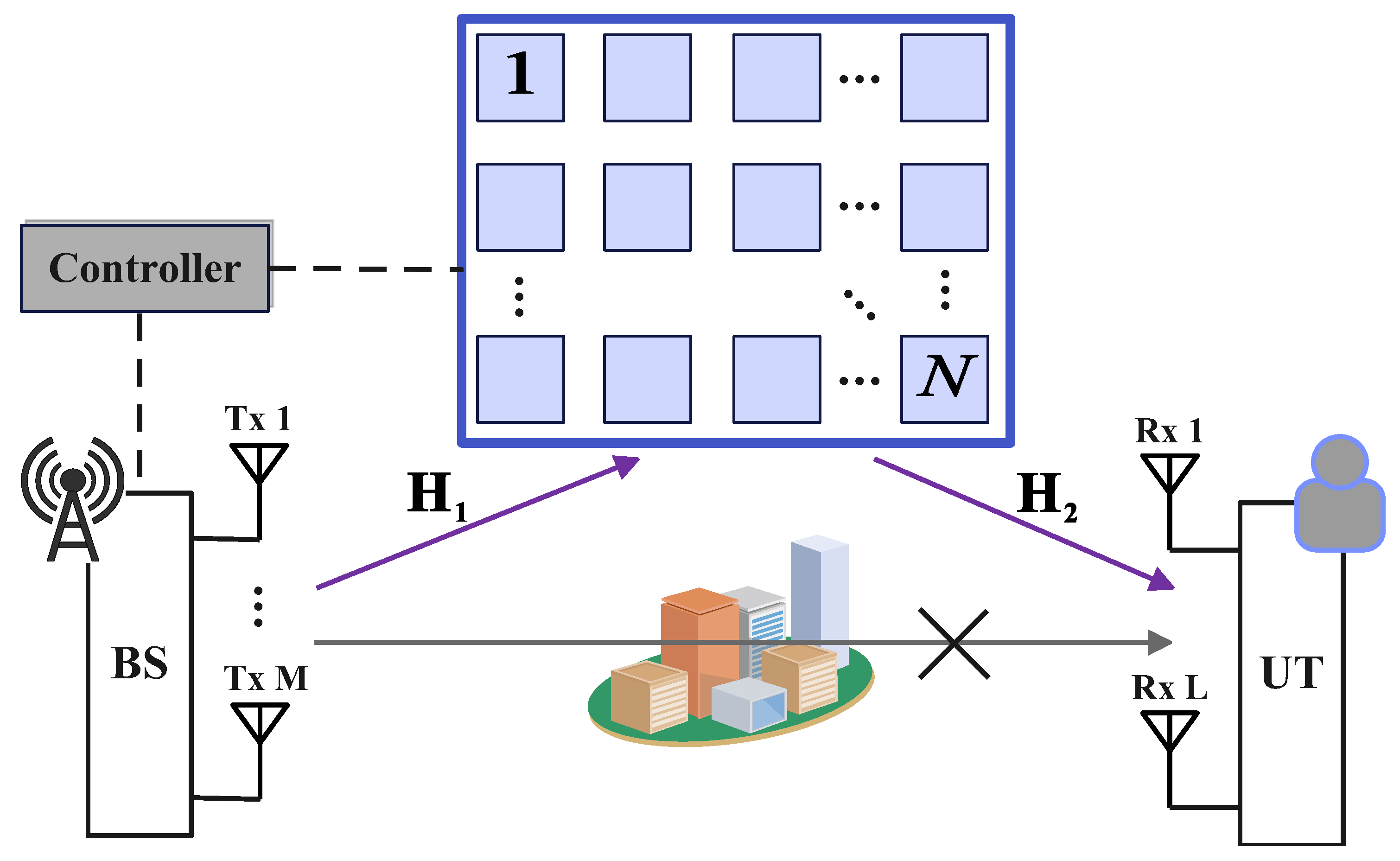

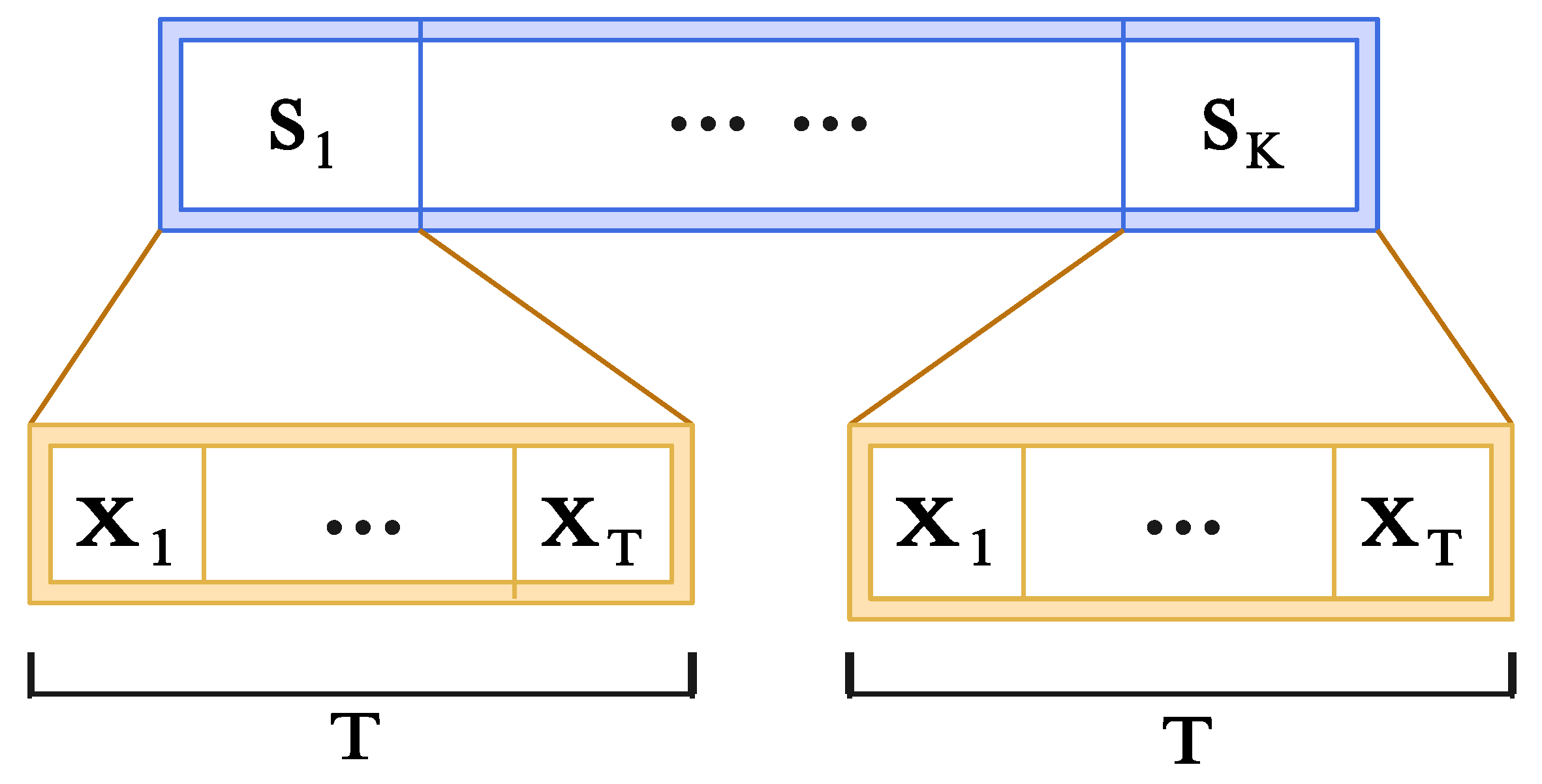

3. System Model

4. Traditional BALS-Based Channel Estimation

Algorithm 1: Bilinear Alternating Least Squares (BALS)
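As an illustration of the alternating least squares idea behind BALS, the following NumPy sketch alternates least-squares updates of the two channel factors. It is not a transcription of Algorithm 1. It assumes a PARAFAC-style signal model in which the pilot-filtered observation in block k is `Z[k] = G diag(S[k, :]) H`, with `G` the IRS-to-BS channel, `H` the UT-to-IRS channel, and `S` a known matrix of IRS phase shifts; these symbols, the helper names, and the toy dimensions are all assumptions made here.

```python
import numpy as np

def khatri_rao(a, b):
    """Column-wise Kronecker product: a (I, N), b (J, N) -> (I*J, N)."""
    return (a[:, None, :] * b[None, :, :]).reshape(-1, a.shape[1])

def bals(z, s, n_iter=20, seed=0):
    """Alternating LS for z[k] = G diag(s[k]) H. z: (K, M, L), s: (K, N)."""
    k, m, l = z.shape
    n = s.shape[1]
    rng = np.random.default_rng(seed)
    h = rng.standard_normal((n, l)) + 1j * rng.standard_normal((n, l))
    z1 = z.transpose(1, 0, 2).reshape(m, k * l)   # mode-1 unfolding, M x KL
    z2 = z.transpose(2, 0, 1).reshape(l, k * m)   # mode-2 unfolding, L x KM
    for _ in range(n_iter):
        g = z1 @ np.linalg.pinv(khatri_rao(s, h.T).T)    # LS update of G
        h = (z2 @ np.linalg.pinv(khatri_rao(s, g).T)).T  # LS update of H
    return g, h

def crandn(rng, *shape):
    """Unit-variance circular complex Gaussian samples."""
    return (rng.standard_normal(shape) + 1j * rng.standard_normal(shape)) / np.sqrt(2)

def simulate(m, l, n, k, noise_std, seed=1):
    """Toy data: random channels, truncated-DFT phase shifts (needs k >= n)."""
    rng = np.random.default_rng(seed)
    g, h = crandn(rng, m, n), crandn(rng, n, l)
    s = np.exp(-2j * np.pi * np.outer(np.arange(k), np.arange(n)) / k)
    z = np.einsum("mn,kn,nl->kml", g, s, h) + noise_std * crandn(rng, k, m, l)
    return z, s, g, h

def cascaded(g, h):
    """Cascaded channel; invariant to the per-column scaling between G and H."""
    return khatri_rao(h.T, g)

z, s, g, h = simulate(m=4, l=2, n=3, k=6, noise_std=0.05)
g_hat, h_hat = bals(z, s)
c, c_hat = cascaded(g, h), cascaded(g_hat, h_hat)
print("cascaded-channel NMSE:", np.linalg.norm(c_hat - c) ** 2 / np.linalg.norm(c) ** 2)
```

The two factors are only identifiable up to a per-column scaling (`G` times a diagonal matrix, `H` times its inverse), so the comparison is made on the cascaded channel, where that ambiguity cancels.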

5. Proposed Method

5.1. Overview

5.2. ConvEmbed Module

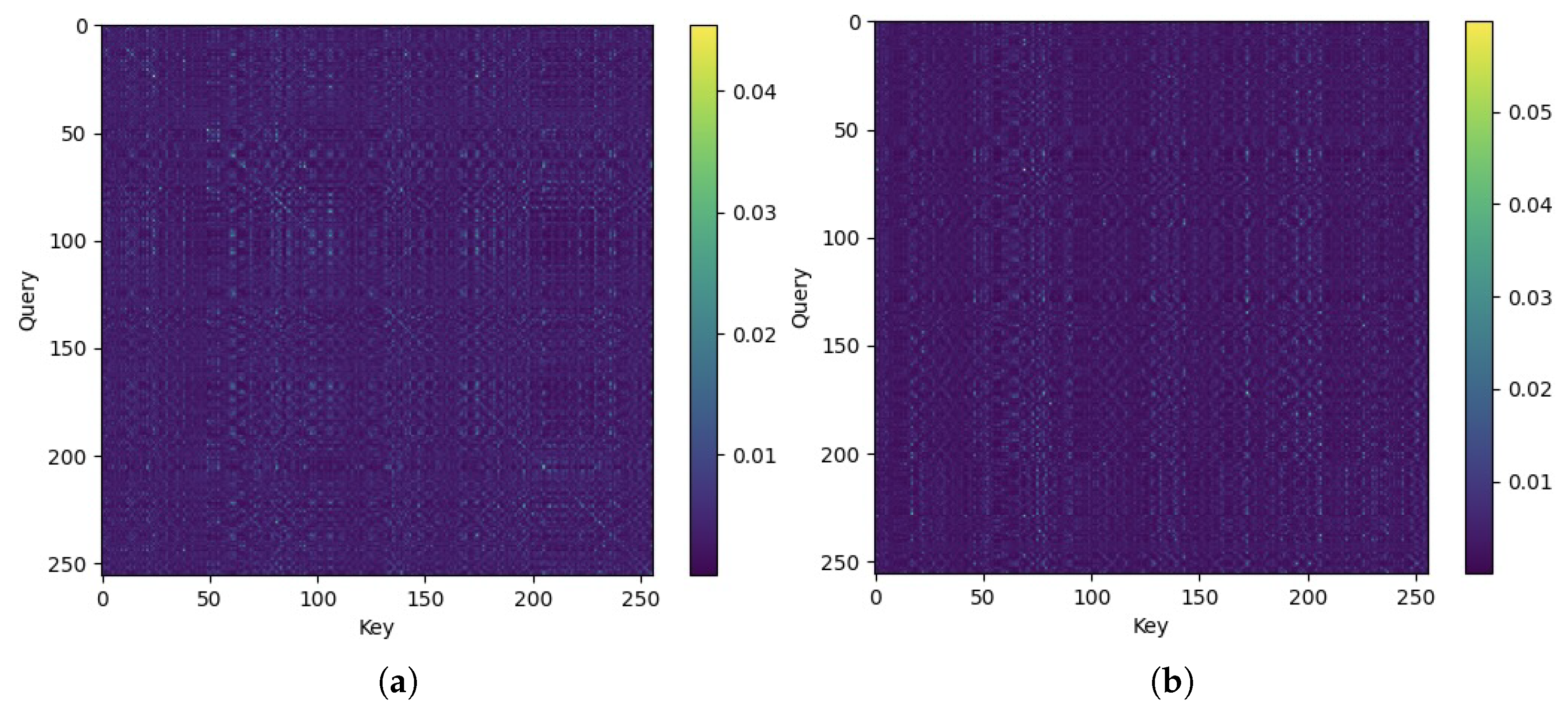

5.3. Transformer Module with Residual Connections

5.4. ConvOut Module

6. Experimental Results

6.1. Implementation Details

6.1.1. Dataset

6.1.2. Parameters

6.1.3. Performance Metric
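For reference, NMSE (normalized mean squared error, as listed in the abbreviations) is commonly computed as the squared Frobenius-norm error divided by the squared Frobenius norm of the true channel, averaged over test samples. The sketch below assumes that per-sample definition; the function names are hypothetical, and the exact averaging convention used in the experiments is not stated here.

```python
import numpy as np

def nmse(h_true, h_est):
    """Mean over samples of ||H - H_hat||_F^2 / ||H||_F^2.

    h_true, h_est: arrays of shape (n_samples, rows, cols), real or complex.
    """
    h_true = np.asarray(h_true)
    h_est = np.asarray(h_est)
    err = np.sum(np.abs(h_true - h_est) ** 2, axis=(-2, -1))
    ref = np.sum(np.abs(h_true) ** 2, axis=(-2, -1))
    return float(np.mean(err / ref))

def nmse_db(h_true, h_est):
    """NMSE on a decibel scale, as typically plotted against SNR."""
    return 10.0 * np.log10(nmse(h_true, h_est))

h = np.ones((3, 2, 2), dtype=complex)
print(nmse(h, 0.9 * h), nmse_db(h, 0.9 * h))
```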

6.2. Effectiveness Validation

6.3. Computational Complexity

7. Conclusions and Future Works

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| IRS | Intelligent Reflective Surface |
| MIMO | Multiple-Input Multiple-Output |
| CSI | Channel State Information |
| DL | Deep Learning |
| BALS | Bilinear Alternating Least Squares |
| BS | Base Station |
| UT | User Terminal |
| MHSA | Multi-Head Self-Attention |
| MLP | Multilayer Perceptron |
| NMSE | Normalized Mean Squared Error |


| Symbol | Description | Value |
|---|---|---|
| M | Number of BS antennas | 64 |
| L | Number of UE antennas | 4 |
| N | Number of passive components at IRS | 25, 49, 81, 100, 144 |
| | Channel coherence time | 200 |
| K | Number of blocks | 50 |
| T | Number of time slots per block | 4 |
| SNR | Signal-to-noise ratios | 0:5:30 dB |

| Hyperparameter | HA02 | ReEsNet | InterpResNet | Proposed Method |
|---|---|---|---|---|
| Optimizer | Adam | Adam | Adam | Adam |
| Maximum epoch | 100 | 100 | 100 | 100 |
| Initial learning rate (lr) | 0.002 | 0.001 | 0.001 | 0.001 |
| Drop period for lr | every 20 | None | every 20 | None |
| Drop factor for lr | 0.5 | None | 0.5 | None |
| Batch size | 128 | 128 | 128 | 128 |
| L2 regularization | 1 × 10⁻⁷ | 1 × 10⁻⁷ | 1 × 10⁻⁷ | 1 × 10⁻⁷ |
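The "drop period" and "drop factor" rows describe a piecewise-constant (step) learning-rate schedule. A minimal sketch of how such a schedule evaluates is shown below, assuming the period is counted in epochs, epochs are 0-indexed, and the rate is multiplied by the drop factor each time a full period has elapsed; the function name is hypothetical and the framework actually used for training may define the boundaries slightly differently.

```python
def step_lr(epoch, initial_lr, drop_period=None, drop_factor=None):
    """Learning rate at a given 0-indexed epoch under a step schedule.

    With no drop period or factor (the "None" entries in the table),
    the rate stays at its initial value.
    """
    if drop_period is None or drop_factor is None:
        return initial_lr
    return initial_lr * drop_factor ** (epoch // drop_period)

# HA02 settings from the table: lr 0.002, halved every 20 (assumed epochs).
for epoch in (0, 19, 20, 40, 99):
    print(epoch, step_lr(epoch, 0.002, drop_period=20, drop_factor=0.5))

# Proposed method: constant learning rate of 0.001.
print(step_lr(50, 0.001))
```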

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wu, Q.; Bao, J.; Xu, H.; Ng, B.K.; Lam, C.-T.; Im, S.-K. A Convolutional-Transformer Residual Network for Channel Estimation in Intelligent Reflective Surface Aided MIMO Systems. Sensors 2025, 25, 5959. https://doi.org/10.3390/s25195959
