1. Introduction

Robust and accurate ego-motion estimation is critical for autonomous systems operating in complex environments. This requirement spans a variety of applications, from micro-aerial vehicles (MAVs) conducting search-and-rescue missions in degraded visual conditions to augmented reality (AR) devices that demand millimeter-level tracking accuracy in cluttered indoor spaces. Visual–inertial odometry (VIO), which combines camera imagery with inertial measurement unit (IMU) data, has emerged as the predominant approach for six-degree-of-freedom (6-DOF) state estimation in GPS-denied environments. While conventional feature-based VIO systems perform well in structured scenarios, their dependence on explicit feature detection and matching renders them brittle under real-world conditions such as motion blur, low texture, and low illumination.

Direct sparse odometry approaches, which optimize motion parameters directly on raw pixel intensities, present a promising alternative. These methods avoid feature extraction altogether, which allows them to use information from low-texture regions. When tightly coupled with high-frequency IMU measurements through preintegration, direct sparse VIO systems have the potential to remain robust in challenging scenarios.

Despite these theoretical advantages, practical implementations of direct sparse VIO face three key challenges:

Initialization Sensitivity: Joint optimization of visual–inertial parameters requires accurate initial estimates for scale, gravity direction, and sensor biases. Current direct sparse VIO systems mostly rely on specific initialization motions (e.g., slow translation) and are prone to divergence when subjected to aggressive initial maneuvers or degenerate motions.

Dynamic Illumination: Direct methods rely on the assumption of photometric consistency, which makes them vulnerable to errors caused by dynamic illumination. This limitation is particularly critical, as real-world environments often experience significant brightness variations.

Multi-Sensor Fusion: The disparate temporal characteristics of visual and inertial sensors result in complex error propagation. Existing architectures typically either oversimplify IMU dynamics or suffer from latency due to suboptimal sensor fusion strategies.

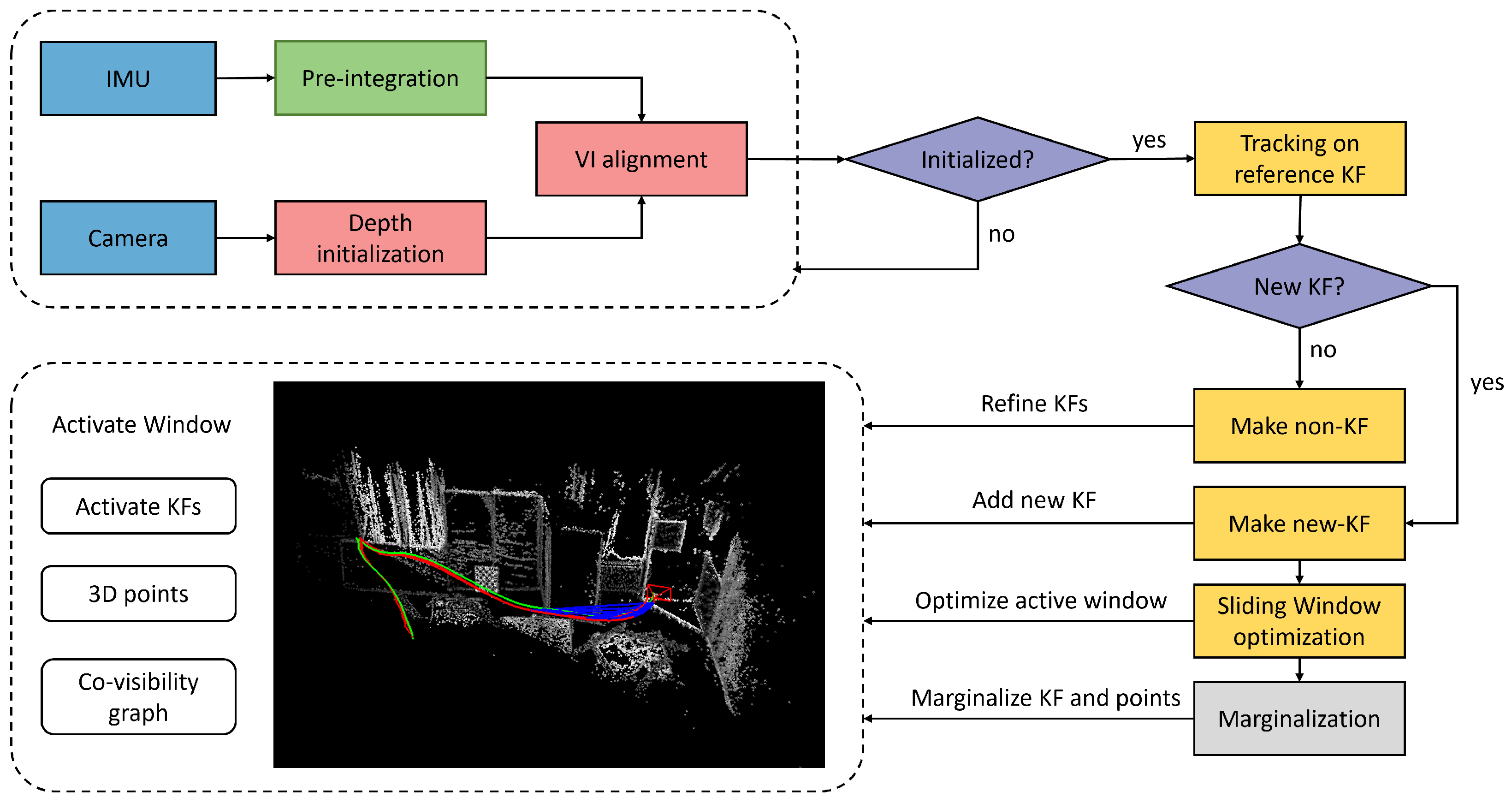

This paper proposes stereo direct sparse visual–inertial odometry (SDS-VIO), which addresses the aforementioned limitations through three key innovations (Figure 1). First, we present a visual–inertial initialization strategy that integrates IMU preintegration uncertainty with a stereo image, enabling reliable state estimation even under arbitrary initial motions. This approach eliminates the need for restrictive initialization procedures. Second, we incorporate the efficient second-order minimization (ESM) algorithm into the direct image alignment process. By using the second-order Taylor expansion for the photometric error and the first-order expansion for the Jacobian, our method achieves more efficient and accurate optimization. Finally, an adaptive tracking ratio is defined as the quotient between the number of tracked points and the number of selected points across all keyframes in the sliding window. This adaptive keyframe selection strategy enhances both the efficiency and robustness of the system.

The remainder of this paper is organized as follows:

Section 2 reviews related work in visual odometry and VIO systems.

Section 3 details the proposed SDS-VIO system.

Section 4 describes the experimental setup and comparative analysis.

Section 5 summarizes the results and future directions.

2. Related Work

The first real-time visual odometry (VO) system was proposed by Davison [1] around 2007, and it used a monocular camera to estimate camera motion and construct a persistent map of scene landmarks. Since their inception, VO algorithms have been broadly categorized along two axes: direct vs. indirect and dense vs. sparse.

Early VO/SLAM systems were predominantly indirect, partly due to the need for loop closure schemes in full-fledged SLAM systems, which often relied on feature descriptors [2]. Henry et al. [3] proposed a vision-based method for mobile robot localization and mapping using SIFT for feature extraction. Among these systems, ORB-SLAM3 [4] emerged as a reference implementation of indirect approaches owing to its superior accuracy and versatility. Shen and Kong [5] utilized the Mixer MLP structure for tracking feature points, achieving high-quality matching in low-texture scenes.

Direct methods, on the other hand, recover motion parameters directly from images by minimizing photometric error based on the brightness constancy assumption [6,7,8]. Qu et al. [9] adopted the inverse compositional alignment approach to track new images with regard to the entire window and parallelized their system to effectively utilize computational resources. Wang et al. [10] presented a tightly coupled approach combining cameras, IMU and GNSS for globally drift-free and locally accurate state estimation. A direct sparse monocular VIO system was proposed by Zhang and Liu [11] based on adaptive direct motion refinement and photometric inertial bundle adjustment. DM-VIO [12] adopts delayed marginalization to address slow initialization and improve the scale estimation.

Dense methods reconstruct the entire image, using all pixels, while sparse methods only use and reconstruct a selected set of independent points. DTAM [13] is a real-time camera tracking and reconstruction system that relies on dense, per-pixel methods instead of feature extraction. Engel et al. [14] built large-scale consistent maps with highly accurate pose estimation based on an appearance-only loop detection algorithm. Gutierrez-Gomez et al. [15] minimized both photometric and geometric errors to estimate the camera motion between frames. The geometric error was parameterized by the inverse depth, which translated into a better fit of its distribution to the cost functions.

However, most existing dense approaches neglect or approximate correlations between geometry parameters, along with the addition of geometric priors, making real-time statistically consistent joint optimization challenging. Additionally, as the map size grows, maintaining a dense map becomes prohibitively expensive. Forster et al. [16] used direct methods to track and triangulate pixels that are characterized by high gradients, but relied on proven feature-based methods for joint optimization of structure and motion. Mourikis et al. [17] presented a measurement model that expresses geometric constraints without including 3D feature positions in the state vector. Geneva et al. [18] combined sparse visual features with inertial data in a filter-based framework, enabling efficient and lightweight state estimation, emphasizing computational efficiency and robustness in dynamic environments.

3. System Overview

The overall structure of the proposed SDS-VIO system is shown in Figure 2. It incorporates a depth initialization module, a stereo image tracking module and a marginalization module. Different from conventional random scale initialization, the system employs two-stage initialization (Section 3.4): first, depth is estimated through spatial static stereo matching, followed by visual–inertial measurement alignment. Building on direct image alignment, new stereo frames (Section 3.2) and IMU measurements (Section 3.3) undergo coarse-to-fine tracking relative to reference keyframes. The obtained pose estimation subsequently refines the depth of recently selected points. When the number of active points falls below an adaptive ratio, the system adds new keyframes to the active window (Section 3.5). For all keyframes within the window, a visual–inertial bundle adjustment is performed, optimizing their geometry, poses, affine brightness parameters, and IMU biases and velocities. To maintain the sliding window size, old keyframes and 3D points are marginalized out using the Schur complement (Section 3.6) to ensure system consistency.

3.1. Notation

Throughout this paper, light lower-case letters represent scalars, and bold lower-case letters represent vectors. Matrices are represented by bold upper-case letters, and functions are represented by light upper-case letters.

The camera intrinsic matrix is denoted as $\mathbf{K}$. Camera poses are represented by matrices of the special Euclidean group $\mathbf{T}_i \in \mathrm{SE}(3)$, which transform a 3D coordinate from the camera frame to the world frame. The relative pose between two cameras is denoted as $\mathbf{T}_{ji}$, which transforms a 3D coordinate from the i-th camera frame to the j-th camera frame.

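Any 3D point $\mathbf{p} = (x, y, z)^\top$ in the camera frame can be mapped to a pixel coordinate $\mathbf{u} = (u, v)^\top$ via the projection function $\Pi_{\mathbf{K}}$, where

$$\Pi_{\mathbf{K}}(\mathbf{p}) = \mathbf{K} \begin{pmatrix} x/z \\ y/z \\ 1 \end{pmatrix}.$$

Similarly, given a pixel coordinate $\mathbf{u}$ and its inverse depth $\rho$, the 3D point coordinate can be obtained via the back-projection function $\Pi_{\mathbf{K}}^{-1}$ as

$$\mathbf{p} = \Pi_{\mathbf{K}}^{-1}(\mathbf{u}, \rho) = \rho^{-1} \mathbf{K}^{-1} (u, v, 1)^\top.$$

The inverse depth parameterization has been demonstrated to be advantageous when errors in images are modeled as Gaussian distributions [19]. For this reason, this paper represents a 3D point by its pixel coordinate and its inverse depth.

Similar to [6], we formulate motion estimation as an optimization problem that minimizes an error function. Specifically, the re-projection process is mathematically modeled as

$$\mathbf{u}' = w(\mathbf{u}, \rho, \boldsymbol{\xi}) = \Pi_{\mathbf{K}}\left(\exp(\hat{\boldsymbol{\xi}})\, \Pi_{\mathbf{K}}^{-1}(\mathbf{u}, \rho)\right),$$

where $w$ denotes the warping function that maps the pixel coordinate $\mathbf{u}$ in the reference frame to the pixel coordinate $\mathbf{u}'$ in the target frame; $\boldsymbol{\xi} \in \mathfrak{se}(3)$ represents the camera pose parameters in the Lie algebra associated with the relative transformation between the two frames. Here, we omit the conversion from non-homogeneous coordinates to homogeneous coordinates.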
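The projection, back-projection, and warping steps described above can be sketched in a few lines of NumPy. This is a minimal illustration rather than the system's implementation: it assumes a pinhole model without distortion, and the function names `project`, `back_project`, and `warp`, the intrinsic values, and the example relative pose are all hypothetical.

```python
import numpy as np

def project(K, p):
    """Pinhole projection of a 3D camera-frame point to pixel coordinates."""
    x, y, z = p
    uv1 = K @ np.array([x / z, y / z, 1.0])
    return uv1[:2]

def back_project(K, u, inv_depth):
    """Recover the 3D point from a pixel coordinate and its inverse depth."""
    uv1 = np.array([u[0], u[1], 1.0])
    return (np.linalg.inv(K) @ uv1) / inv_depth

def warp(K, T_ji, u, inv_depth):
    """Map pixel u in frame i into frame j using a 4x4 relative pose T_ji."""
    p_i = back_project(K, u, inv_depth)
    p_j = T_ji[:3, :3] @ p_i + T_ji[:3, 3]
    return project(K, p_j)

# Hypothetical intrinsics and relative pose (assumed values for illustration).
K = np.array([[500.0, 0.0, 320.0],
              [0.0, 500.0, 240.0],
              [0.0, 0.0, 1.0]])
T_ji = np.eye(4)
T_ji[0, 3] = 0.1  # 10 cm translation along x, no rotation

u_warped = warp(K, T_ji, np.array([320.0, 240.0]), inv_depth=0.5)
```

With these assumed values, the principal-point pixel at a depth of 2 m moves 25 pixels to the right, since 500 × 0.1 / 2 = 25.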

3.2. Photometric Error

In this paper, the target frame and reference frame are treated as temporal multi-view stereo, while the stereo pair frames are treated as spatial static stereo.

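Temporal Multi-View Stereo. For a pixel $\mathbf{u}$ in the reference frame $i$ and its warped position $\mathbf{u}'$ in the target frame $j$, each residual from temporal multi-view stereo is defined as

$$r_{ij} = \left(I_j[\mathbf{u}'] - b_j\right) - \frac{t_j e^{a_j}}{t_i e^{a_i}} \left(I_i[\mathbf{u}] - b_i\right),$$

where $t_i$ and $t_j$ are the exposure times, $a_i$, $a_j$, $b_i$, and $b_j$ are the coefficients to correct for affine illumination changes, and $I_i$ and $I_j$ are the images of the respective frames.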

For image alignment tasks, traditional approaches such as the forward compositional (FC) and inverse compositional (IC) algorithms have inherent limitations. The FC method requires re-computing image gradients at each iteration, which introduces significant computational overhead. Conversely, the IC method avoids this by assuming a fixed gradient on the reference image, but this assumption often breaks down under varying illumination or geometric transformations, leading to decreased robustness and slower convergence. To address these issues, the ESM algorithm combines the advantages of both FC and IC by symmetrizing the update rule and averaging the image gradients from both frames, resulting in a more accurate approximation of the cost function’s curvature. This leads to faster and more stable convergence, particularly under challenging photometric conditions such as affine illumination changes.
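The difference between the FC and ESM Jacobians can be illustrated on a toy 1D alignment problem. The sketch below is not the paper's implementation: the signal `f`, its analytic derivative `df`, the shift `d_true`, and the function `align` are hypothetical and chosen only so that the example is self-contained. The FC variant uses the gradient of the warped target alone, while the ESM variant averages it with the reference gradient.

```python
import numpy as np

def f(x):
    """Toy 1D 'image': a smooth signal with two frequencies."""
    return np.sin(x) + 0.5 * np.sin(2.3 * x)

def df(x):
    """Analytic derivative of f."""
    return np.cos(x) + 1.15 * np.cos(2.3 * x)

def align(x, d_true, use_esm, iters=10):
    """Estimate the shift d such that target(x + d) matches reference(x).

    reference(x) = f(x) and target(x) = f(x - d_true), so the minimizer is d_true.
    """
    d = 0.0
    for _ in range(iters):
        r = f(x + d - d_true) - f(x)      # photometric residual
        g_tgt = df(x + d - d_true)        # gradient of the warped target (FC)
        g_ref = df(x)                     # gradient of the reference
        J = 0.5 * (g_tgt + g_ref) if use_esm else g_tgt
        d -= (J @ r) / (J @ J)            # scalar Gauss-Newton update
    return d

x = np.linspace(0.0, 10.0, 200)
d_esm = align(x, d_true=0.3, use_esm=True)
d_fc = align(x, d_true=0.3, use_esm=False)
```

Both variants recover the shift on this smooth signal. The ESM Jacobian matches the residual to second order in the remaining shift, which is the property that motivates its use in the tracking step.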

Using the ESM algorithm, the Jacobian of temporal stereo is defined as

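Formally, the photometric error of a point $\mathbf{p}$ using ESM is defined as follows:

$$E_{\mathbf{p}} = \sum_{\tilde{\mathbf{p}} \in \mathcal{N}_{\mathbf{p}}} w_{\tilde{\mathbf{p}}} \left\| r_{\tilde{\mathbf{p}}} \right\|_{\gamma},$$

where $\mathcal{N}_{\mathbf{p}}$ is a small set of pixels around the point $\mathbf{p}$, $r_{\tilde{\mathbf{p}}}$ is the temporal stereo residual evaluated at pixel $\tilde{\mathbf{p}}$, $\|\cdot\|_{\gamma}$ is the Huber norm, and $w_{\tilde{\mathbf{p}}}$ is a gradient-dependent weighting.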
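As a rough illustration of how such a photometric error could be evaluated, the following sketch sums Huber-weighted residuals with affine brightness correction over a set of pixel correspondences. Several simplifications are assumed here and are not taken from the paper: pixel positions are integers (no sub-pixel interpolation), the weights `w` are supplied by the caller, and the Huber threshold `k` as well as all function and parameter names are hypothetical.

```python
import numpy as np

def huber(r, k):
    """Huber norm: quadratic for |r| <= k, linear beyond."""
    a = np.abs(r)
    return np.where(a <= k, 0.5 * r**2, k * (a - 0.5 * k))

def photometric_error(I_i, I_j, pts_i, pts_j, t_i, t_j, a_i, a_j, b_i, b_j, w, k=9.0):
    """Weighted Huber sum of affine-corrected intensity residuals.

    pts_i, pts_j: (N, 2) integer arrays of (u, v) pixel positions in the
    reference and target image; w: (N,) per-pixel weights.
    """
    scale = (t_j * np.exp(a_j)) / (t_i * np.exp(a_i))
    ref = I_i[pts_i[:, 1], pts_i[:, 0]]
    tgt = I_j[pts_j[:, 1], pts_j[:, 0]]
    r = (tgt - b_j) - scale * (ref - b_i)
    return float(np.sum(w * huber(r, k)))

# Synthetic check: the target is the reference under a known gain and offset.
rng = np.random.default_rng(0)
I_ref = rng.uniform(0.0, 255.0, (20, 20))
I_tgt = 2.0 * I_ref + 5.0
pts = np.array([[3, 4], [10, 12], [7, 7]])
w = np.ones(len(pts))

e_corrected = photometric_error(I_ref, I_tgt, pts, pts, 1.0, 1.0, 0.0, np.log(2.0), 0.0, 5.0, w)
e_uncorrected = photometric_error(I_ref, I_tgt, pts, pts, 1.0, 1.0, 0.0, 0.0, 0.0, 0.0, w)
```

When the affine parameters match the true gain and offset the error vanishes, whereas ignoring them leaves a large residual even though the geometry is perfectly aligned.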

Spatial Static Stereo. For stereo pair frames, the residual is modified to

The Jacobian of static stereo has fewer geometric parameters

, because the relative transformation between the two cameras

is fixed. Therefore, it will not be optimized in the window optimization.

With that, the error function can be formulated as

where

is a set of keyframes that we are optimizing,

is a sparse set of points in keyframe

i, and

is a set of observations of the same point in other keyframes. The error

belongs to the static stereo residuals.

3.3. Inertial Error

The proposed method establishes an inertial measurement error function derived from gyroscopic angular velocity and accelerometric linear acceleration data. Through the IMU preintegration approach, we formulate a unified inertial measurement constraint that characterizes the relative pose transformation between consecutive visual observation frames.

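For two states $\mathbf{s}_i$ and $\mathbf{s}_j$, and IMU measurements $\mathbf{a}_{i,j}$ and $\boldsymbol{\omega}_{i,j}$ between two images, we obtain a prediction $\hat{\mathbf{s}}_j$ as well as an associated covariance matrix $\hat{\boldsymbol{\Sigma}}_{s,j}$. The corresponding error function is defined as

$$E_{\text{inertial}}(\mathbf{s}_i, \mathbf{s}_j) = \left(\mathbf{s}_j \boxminus \hat{\mathbf{s}}_j\right)^\top \hat{\boldsymbol{\Sigma}}_{s,j}^{-1} \left(\mathbf{s}_j \boxminus \hat{\mathbf{s}}_j\right),$$

where the operator ⊟ applies $\boldsymbol{\xi}_j \boxminus \hat{\boldsymbol{\xi}}_j = \log\left(\mathbf{T}_j \hat{\mathbf{T}}_j^{-1}\right)^{\vee}$ for poses and a normal subtraction for other components.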
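A covariance-weighted inertial error of this kind can be sketched as a Mahalanobis distance between the current state and the IMU-predicted state. The code below is a simplified illustration under stated assumptions, not the paper's formulation: the state is taken to be a tuple of rotation matrix, position, velocity, and a 6D bias vector; the pose difference is split into an SO(3) log map for rotation and plain subtraction for translation; and all function names are hypothetical. The log map used here is not robust for rotation differences close to 180 degrees.

```python
import numpy as np

def so3_log(R):
    """Rotation matrix -> rotation vector (axis times angle)."""
    cos_theta = np.clip((np.trace(R) - 1.0) / 2.0, -1.0, 1.0)
    theta = np.arccos(cos_theta)
    v = np.array([R[2, 1] - R[1, 2], R[0, 2] - R[2, 0], R[1, 0] - R[0, 1]])
    if theta < 1e-8:
        return 0.5 * v  # first-order approximation near identity
    return theta / (2.0 * np.sin(theta)) * v

def box_minus(state, pred):
    """Log-map difference for rotation, plain subtraction elsewhere."""
    R, p, v, b = state
    R_hat, p_hat, v_hat, b_hat = pred
    return np.concatenate([so3_log(R @ R_hat.T), p - p_hat, v - v_hat, b - b_hat])

def inertial_error(state, pred, cov):
    """Mahalanobis distance e^T cov^{-1} e with e = state [-] pred."""
    e = box_minus(state, pred)
    return float(e @ np.linalg.solve(cov, e))

# Hypothetical example: the state differs from the prediction by 0.1 rad about z.
c, s = np.cos(0.1), np.sin(0.1)
R_z = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
pred = (np.eye(3), np.zeros(3), np.zeros(3), np.zeros(6))
state = (R_z, np.zeros(3), np.zeros(3), np.zeros(6))
err = inertial_error(state, pred, np.eye(15))
```

Using `np.linalg.solve` instead of an explicit inverse avoids forming the inverse covariance, which is a common numerical choice for this kind of weighted residual.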

3.4. Initialization and Tracking

We estimate the camera pose by minimizing the total error between the target frame and the reference frame, defined as

which consists of a photometric error term

, an inertial error term

and a coupling factor

.

To initialize the system, the inverse depths of points in the first frame are required. Unlike previous monocular direct VO approaches that typically initialize using random depth values [6], this paper uses static stereo matching to estimate a sparse depth map for the first frame. Since the affine brightness transfer factors between the stereo image pair are unknown at this stage, correspondences are searched along the horizontal epipolar line using normalized cross-correlation (NCC) over a patch, and are accepted only if the NCC score exceeds 0.95. Meanwhile, IMU measurements are preintegrated following the on-manifold model [20] to provide motion constraints, and the initial gravity direction is computed by averaging up to 40 accelerometer measurements, which yields a reliable estimate even under high acceleration. The stereo-derived depth and IMU information are then jointly used to compute the initial camera pose, velocity, and gravity-aligned reference frame.
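The NCC-based correspondence search along the horizontal epipolar line can be sketched as follows. This is an illustrative example assuming rectified stereo images and integer disparities; the patch half-width `half`, the search range `max_disp`, and the function names are hypothetical, since only the acceptance threshold of 0.95 is stated in the text.

```python
import numpy as np

def ncc(a, b):
    """Normalized cross-correlation of two equally sized patches."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    return float((a * b).sum() / denom) if denom > 0 else 0.0

def match_along_row(left, right, u, v, half=2, max_disp=32, min_score=0.95):
    """Search the same row of the right image for the best NCC match.

    Returns the integer disparity, or None if no candidate exceeds min_score.
    """
    ref = left[v - half:v + half + 1, u - half:u + half + 1]
    best_d, best_s = None, min_score
    for d in range(max_disp + 1):
        ur = u - d
        if ur - half < 0:
            break
        cand = right[v - half:v + half + 1, ur - half:ur + half + 1]
        s = ncc(ref, cand)
        if s > best_s:
            best_d, best_s = d, s
    return best_d

# Synthetic rectified pair: the right image is the left image shifted by
# 7 pixels, with a different gain and offset to mimic unknown brightness.
rng = np.random.default_rng(1)
left = rng.uniform(0.0, 255.0, (40, 80))
right = 1.5 * np.roll(left, -7, axis=1) + 10.0

disparity = match_along_row(left, right, u=40, v=20)
```

Because NCC subtracts the patch mean and normalizes by the patch energy, the match is unaffected by the gain and offset applied to the right image, which is why it suits a stage where the affine brightness factors are still unknown. For a rectified pair with focal length f and baseline B, the inverse depth then follows as disparity / (f · B).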

The initial inverse depths obtained from the stereo are not treated as fixed values. Similar to DSO, they are jointly optimized along with camera poses and velocities within a sliding window. Preintegrated IMU measurements are incorporated as residuals, and weighted by their covariances, enabling tight visual–inertial coupling. This joint optimization naturally refines initial uncertainties, without explicit thresholding on depth confidence.

Each time a new stereo frame is fed into the system, direct image alignment is used to track it. All the points inside the active window are projected into the new frame. Then the pose of the new frame is optimized by minimizing the error function. The optimization is performed using the Gauss–Newton method on an image pyramid in a coarse-to-fine manner. If the residual exceeds a predefined level, scaled relative to a minimum threshold specific to each image pyramid level, we reject the frame. The threshold is set empirically as 1.5× the minimum residual, which offers a good balance and has been used consistently.

3.5. Sliding Window Optimization

Our system maintains a sliding window of N keyframes. Each keyframe is associated with a Gaussian pyramid of images, a set of affine brightness parameters, a camera pose with regard to the world frame, a set of points parameterized by inverse depth and hosted in the keyframe, the current IMU bias, and the velocity.

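We compute the Gauss–Newton system as

$$\mathbf{H} = \mathbf{J}^\top \mathbf{W} \mathbf{J}, \qquad \mathbf{b} = -\mathbf{J}^\top \mathbf{W} \mathbf{r},$$

where $\mathbf{W}$ is the diagonal matrix containing the weights, $\mathbf{r}$ is the stacked residual vector, and $\mathbf{J}$ is the Jacobian of $\mathbf{r}$.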
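A single weighted Gauss–Newton step of this form can be written compactly in NumPy. The sketch below is generic and makes no claim about the system's internals; the function name `gauss_newton_step` and the toy linear problem are assumptions for illustration.

```python
import numpy as np

def gauss_newton_step(J, r, w):
    """Solve (J^T W J) delta = -J^T W r for a diagonal weight matrix W = diag(w)."""
    JTW = J.T * w              # equivalent to J.T @ np.diag(w), without forming W
    H = JTW @ J
    b = -JTW @ r
    return np.linalg.solve(H, b), H, b

# Toy linear least-squares problem: r(x) = A x - y, linearized at x = 0.
rng = np.random.default_rng(2)
A = rng.normal(size=(10, 3))
x_true = np.array([0.5, -1.0, 2.0])
y = A @ x_true
w = rng.uniform(0.5, 2.0, size=10)

delta, H, b = gauss_newton_step(A, -y, w)
```

For a linear residual a single step reaches the minimizer, so `delta` equals `x_true` here; for the nonlinear photometric and inertial residuals the step is repeated until convergence.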

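Since the visual error term $E_{\text{photo}}$ and the inertial error term $E_{\text{inertial}}$ are independent, the Hessian matrix $\mathbf{H}$ and the residual vector $\mathbf{b}$ can be divided into two parts:

$$\mathbf{H} = \mathbf{H}_{\text{photo}} + \mathbf{H}_{\text{imu}}, \qquad \mathbf{b} = \mathbf{b}_{\text{photo}} + \mathbf{b}_{\text{imu}}.$$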

The formulation of inertial error residuals is inherently expressed within the body-attached sensor coordinate system, whereas the joint state estimation process occurs within a globally referenced spatial framework. To reconcile this reference discrepancy, we introduce a Jacobian operator

that propagates infinitesimal variations from the local inertial measurements to the global state perturbations. As a result, the inertial residuals lead to

A new keyframe is only needed when the current image cannot be reliably tracked with respect to the sliding window. If a sufficient number of points from the local map can be successfully projected into the image, we can simply continue using the existing keyframes. This approach prevents the addition of new keyframes that contribute little to frame tracking. Quantitatively, we define the tracking ratio Q as the ratio between the number of tracked points and the number of selected points from all keyframes in the window. A new keyframe is created if Q falls below a threshold.
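The keyframe decision based on the tracking ratio can be sketched as below. The threshold value `q_min = 0.7` and the function names are hypothetical, as the text does not state the threshold used.

```python
def tracking_ratio(num_tracked, num_selected):
    """Ratio Q of tracked points to selected points over all window keyframes."""
    return num_tracked / num_selected if num_selected > 0 else 0.0

def needs_new_keyframe(num_tracked, num_selected, q_min=0.7):
    """Create a keyframe only when Q drops below the (assumed) threshold q_min."""
    return tracking_ratio(num_tracked, num_selected) < q_min

# Hypothetical counts: 1200 of 2000 selected points are still tracked.
create = needs_new_keyframe(1200, 2000)
```

Returning a ratio of zero when no points are selected forces a keyframe in that degenerate case, which is one possible design choice rather than documented behavior.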

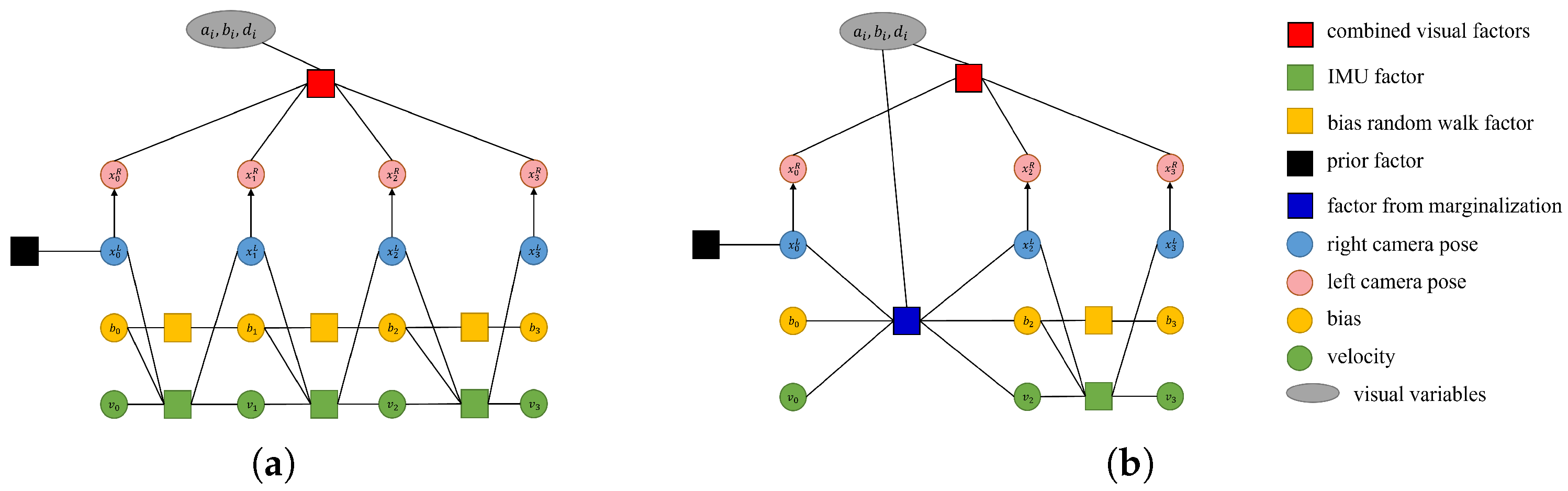

3.6. Marginalization

With each iteration, the number of states grows and the computational complexity increases quadratically. To limit this, marginalization is applied so that useful information is preserved while old states are removed. The procedure converts previous measurements into a prior term, maintaining past information. Visual factor marginalization follows the approach in [21], where residual terms affecting sparsity are discarded, and all keyframe points are marginalized by marginalizing the keyframe itself.

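Figure 3 shows how marginalization changes the factor graph. The states to be marginalized are denoted as $\mathbf{x}_m$, and the remaining states are denoted as $\mathbf{x}_r$. Marginalizing the states reduces the size of the optimization problem while updating the matrices $\mathbf{H}$ and $\mathbf{b}$. After reordering the states, the optimization formulation is updated as follows:

$$\begin{bmatrix} \mathbf{H}_{mm} & \mathbf{H}_{mr} \\ \mathbf{H}_{rm} & \mathbf{H}_{rr} \end{bmatrix} \begin{bmatrix} \mathbf{x}_m \\ \mathbf{x}_r \end{bmatrix} = \begin{bmatrix} \mathbf{b}_m \\ \mathbf{b}_r \end{bmatrix}.$$

The marginalization is carried out using the Schur complement as

$$\hat{\mathbf{H}}_{rr} = \mathbf{H}_{rr} - \mathbf{H}_{rm} \mathbf{H}_{mm}^{-1} \mathbf{H}_{mr}, \qquad \hat{\mathbf{b}}_{r} = \mathbf{b}_{r} - \mathbf{H}_{rm} \mathbf{H}_{mm}^{-1} \mathbf{b}_{m}.$$

We compute a new prior term $\hat{\mathbf{H}}_{rr}$ and $\hat{\mathbf{b}}_{r}$ for the remaining states, incorporating the information from the marginalized states without loss. Specifically, our system maintains seven spatial camera frames, and when a new keyframe is added, we marginalize out the visual and inertial factors related to the states of the first frame.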
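Marginalization via the Schur complement can be illustrated on a small dense system. The sketch assumes the states to be marginalized have already been reordered to the front of the state vector; the function name `marginalize` and the random test system are hypothetical.

```python
import numpy as np

def marginalize(H, b, m):
    """Marginalize the first m states of H x = b using the Schur complement.

    Returns the prior (H_prior, b_prior) acting on the remaining states.
    """
    H_mm, H_mr = H[:m, :m], H[:m, m:]
    H_rm, H_rr = H[m:, :m], H[m:, m:]
    H_mm_inv = np.linalg.inv(H_mm)
    H_prior = H_rr - H_rm @ H_mm_inv @ H_mr
    b_prior = b[m:] - H_rm @ H_mm_inv @ b[:m]
    return H_prior, b_prior

# Random symmetric positive-definite system with 3 marginalized, 4 kept states.
rng = np.random.default_rng(3)
A = rng.normal(size=(7, 7))
H = A @ A.T + 7.0 * np.eye(7)
b = rng.normal(size=7)

H_prior, b_prior = marginalize(H, b, m=3)
x_full = np.linalg.solve(H, b)
x_reduced = np.linalg.solve(H_prior, b_prior)
```

Solving the reduced system yields the same values for the remaining states as solving the full system, which is the sense in which the information from the marginalized states is retained at the current linearization point.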

4. Evaluation

We evaluate the proposed method on two established benchmarks: the KITTI visual odometry benchmark [22] and the EuRoC dataset [23]. In each experiment, the number of active points and keyframes retained in the local map is set to 2000 and 7, respectively. A constant coupling factor is used throughout the tests.

4.1. KITTI Visual Odometry Benchmark

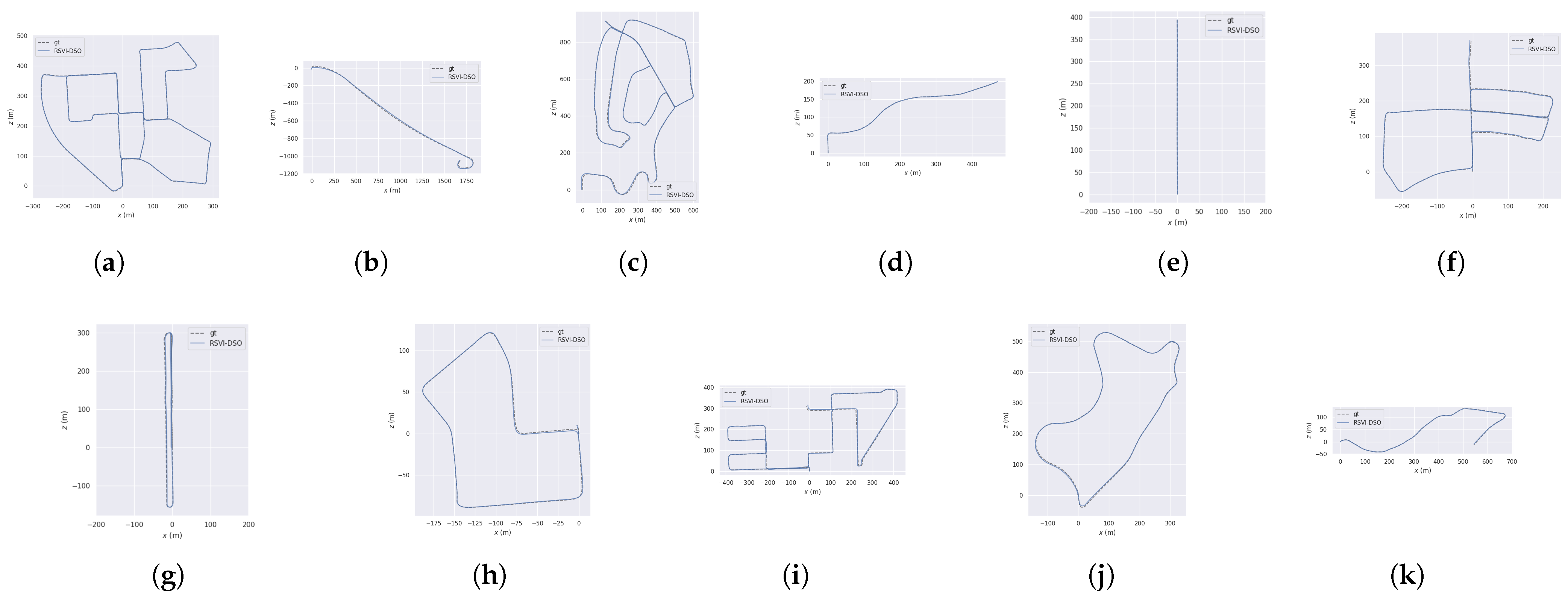

The KITTI visual odometry benchmark consists of 22 sequences, all collected from a moving car. The datasets primarily feature street scenes with dynamic objects. Among the 22 sequences, ground-truth 6D poses are available only for the first 11. Therefore, the evaluation is primarily conducted on these first 11 sequences.

Figure 4 shows the trajectories generated by SDS-VIO across all test sequences in the KITTI benchmark compared with the ground truth. Among the paths, sequences 00, 02, 05, 08, 09 and 10 represent long sequences in large environments, while sequences 06, 07 and 09 are relatively short with significant rotation. The remaining sequences are short and relatively straight. It can be seen that SDS-VIO performs well in all cases without distinct scale drift.

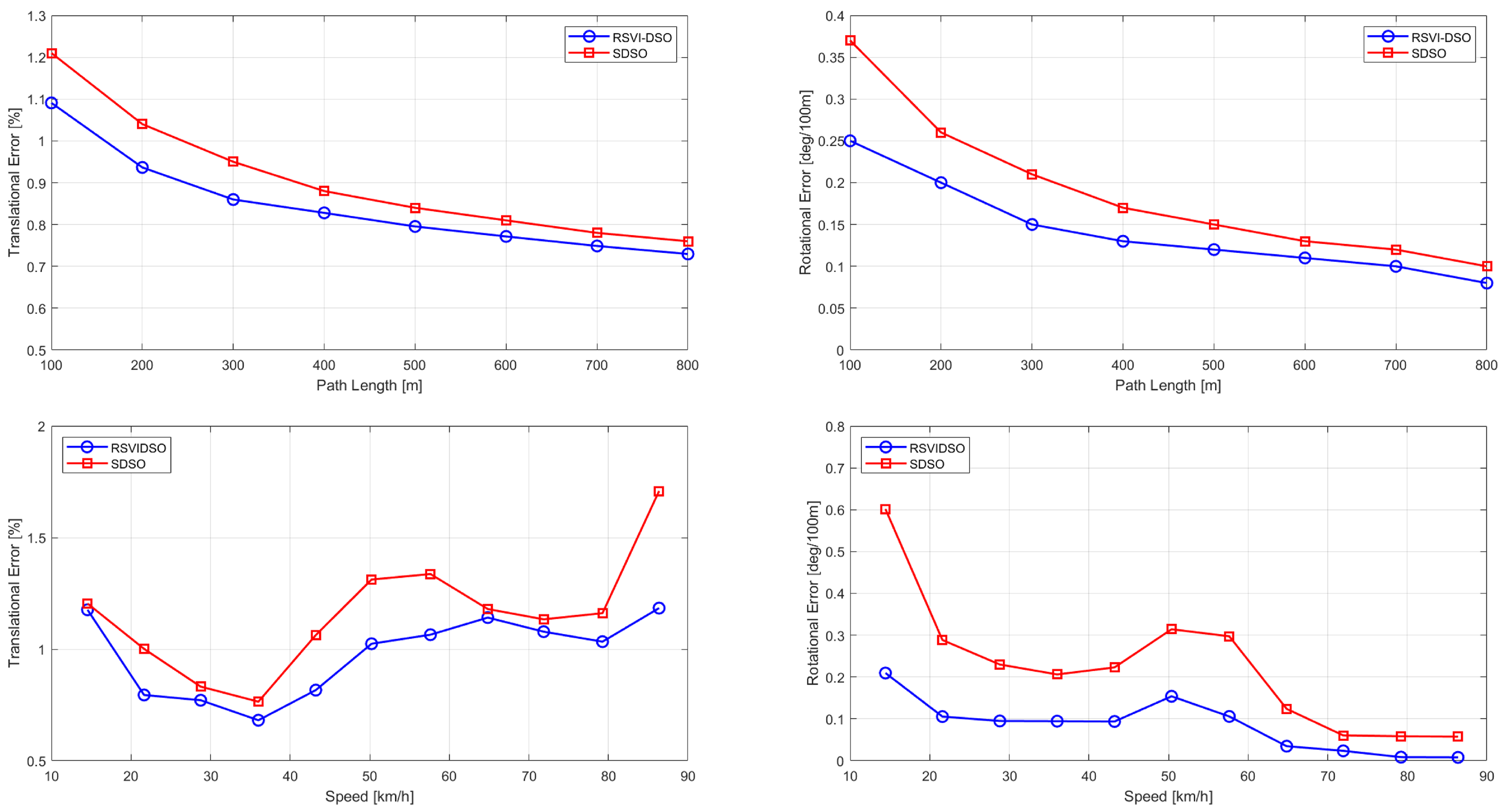

In Figure 5, we compare SDS-VIO with SDSO [8] in terms of average translation and rotation errors. The errors are calculated relative to the path length and moving speed. The results demonstrate that our method outperforms SDSO in all cases. Specifically, SDS-VIO exhibits strong robustness and accuracy across varying moving speeds and path lengths.

We compared our method to SDSO and R-SDSO, which are currently the state-of-the-art stereo direct VO methods. The results are shown in Table 1. The results for R-SDSO are taken from [24], while those for SDSO are obtained by running their code with default settings. It can be observed that the proposed method generally outperforms SDSO. Compared to R-SDSO, our method achieves a better performance in most sequences, although the translational errors show slight variation. This may be attributed to the relatively low frame rate of the dataset, which reduces the effectiveness of IMU measurements.

4.2. EuRoC Dataset

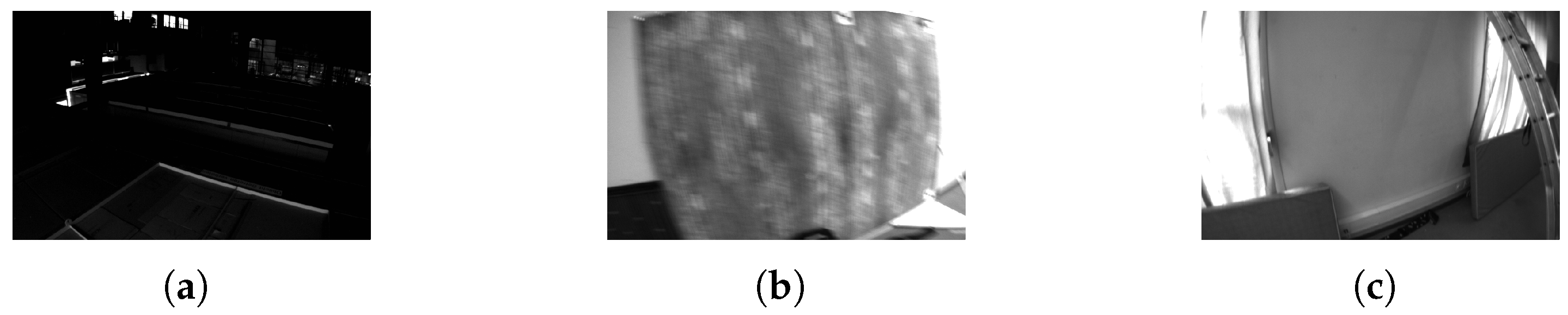

The EuRoC dataset provides high-quality data collected from MAVs in two environments: an industrial machine hall and a Vicon room. As shown in Figure 6, the EuRoC dataset poses challenges due to low illumination, strong motion blur and low texture features. To ensure an accurate evaluation, each method is run 10 times on each sequence in the dataset.

Table 2 shows the Absolute Trajectory Error (ATE) comparison to several other methods. An “X” indicates that the method failed to track the sequence. The results for OKVIS [25] and VI-DSO are quoted from [21], while the results of BASALT and VINS-Fusion are quoted from [26,27]. Our method achieves a lower RMSE than the other methods on most sequences. In more challenging sequences, such as V2_03_difficult, our method continues to demonstrate robust performance, while BASALT and OKVIS were unable to track this sequence. Note that the Vicon room sequences (V*) are recorded in a small room with many looped motions, where the loop closures in SLAM systems significantly improve the performance. Overall, the results demonstrate that SDS-VIO delivers superior performance on most of the evaluated sequences.
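For reference, the RMSE form of the ATE can be computed as in the following sketch. It assumes that the estimated and ground-truth trajectories are already time-associated and expressed in the same frame; evaluation tools usually perform a rigid alignment first, which is omitted here. The function name `ate_rmse` and the example data are hypothetical.

```python
import numpy as np

def ate_rmse(est, gt):
    """RMSE of per-pose position errors for two (N, 3) position arrays."""
    err = np.linalg.norm(est - gt, axis=1)
    return float(np.sqrt(np.mean(err**2)))

# Hypothetical example: every estimated position is offset by (3, 4, 0) cm.
gt = np.zeros((5, 3))
est = gt + np.array([0.03, 0.04, 0.0])
rmse = ate_rmse(est, gt)
```

A constant offset of this kind would be removed by the alignment step in a full evaluation, so the example only demonstrates the arithmetic of the metric.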

Additionally, we test the influence of the inertial coupling factor on the example sequence V1_03_difficult. The translation and rotation errors are shown in Figure 7. As the coupling factor increases, the rotation error gradually increases, which indicates that the system is more sensitive to inertial measurements. However, the translation error shows a slight decrease at first and then increases, indicating that the system is able to utilize inertial measurements to improve tracking performance up to a certain point. The results suggest that a moderate coupling factor is beneficial to achieve a balance between precision and robustness.

4.3. Speed and Accuracy

We benchmark SDS-VIO, SDSO and VINS-Fusion with single-threaded settings on a desktop computer with an Intel i5-14600K CPU and 32 GB RAM. We run all systems on the V1_03_difficult sequence from the EuRoC dataset and average timing results over several runs. Additionally, to examine the effectiveness of the ESM algorithm, tracking without the ESM algorithm is also included for comparison. We again use the default settings for both VINS-Fusion and SDSO (with 7 keyframes and 2000 points max) and do not enforce real-time execution (no skipping frames). Note that it is difficult to ensure a completely fair comparison, as each system uses slightly different window sizes, pyramid levels, number of iterations, and other hyper-parameters that may affect its performance.

Runtime results are shown in Table 3. SDS-VIO with ESM achieves the best performance, with an average time of 42.67 ms per frame while tracking, which is significantly faster than SDSO and VINS-Fusion. The results also show that the ESM algorithm is more efficient than the FC algorithm, as it requires less time to compute the Jacobian and residuals. The accuracy of SDS-VIO is also better than SDSO and R-SDSO in terms of translation and rotation errors, demonstrating that the proposed method can achieve real-time performance while maintaining high accuracy.

5. Conclusions

In this work, we propose a stereo direct sparse visual-inertial odometry (SDS-VIO) system with efficient second-order minimization for accurate real-time tracking and mapping. We detailed the technical implementation including the integration of multi-stage initialization, direct image alignment with ESM, and adaptive sliding window optimization. The superior performance of SDS-VIO is demonstrated through both qualitative and quantitative evaluations on the KITTI visual odometry benchmark and the EuRoC dataset. The results on the KITTI dataset show that SDS-VIO performs better in mean translation and rotation errors compared to R-SDSO and SDSO. Additionally, the comparison on the EuRoC dataset highlights the robustness of SDS-VIO in environments with brightness variation, motion blur and low texture features.

In future work, a database for map maintenance and the incorporation of loop closure will be considered to further improve the accuracy of SDS-VIO and extend it to be a visual–inertial fused SLAM system.