(H-DIR)2: A Scalable Entropy-Based Framework for Anomaly Detection and Cybersecurity in Cloud IoT Data Centers

Abstract

1. Introduction

- Low-latency detection (<250 ms) through Spark-based entropy monitoring.

- Explainable mitigation by propagating neural outputs into semantic RDF graphs.

- Protocol generalization validated on TCP, RPL, and UDP/NTP streams from public datasets.

- (i) the CIC-DDoS2019 trace for TCP-level floods [7];

- (ii) the Data Port DAO-DIO routing-manipulation dataset [11];

- (iii) the Kitsune NTP-amplification subset [12].

Together these yield n = 1.2 × 10⁴ labeled events, distributed as UDP amplification (50.3%), TCP-based (30.8%), SYN Flood (16.3%), and residual unknown (2.6%).

2. Related Work

Overview of Targeted Cyber Attacks

- TCP SYN Flooding: targeting the transport layer through high-frequency flag spoofing.

- DAO-DIO Routing Manipulation: exploiting the RPL control plane in IPv6-based IoT networks.

- UDP/NTP Amplification: using open UDP services to induce bandwidth amplification and overload targets.

3. Architecture and Methodology

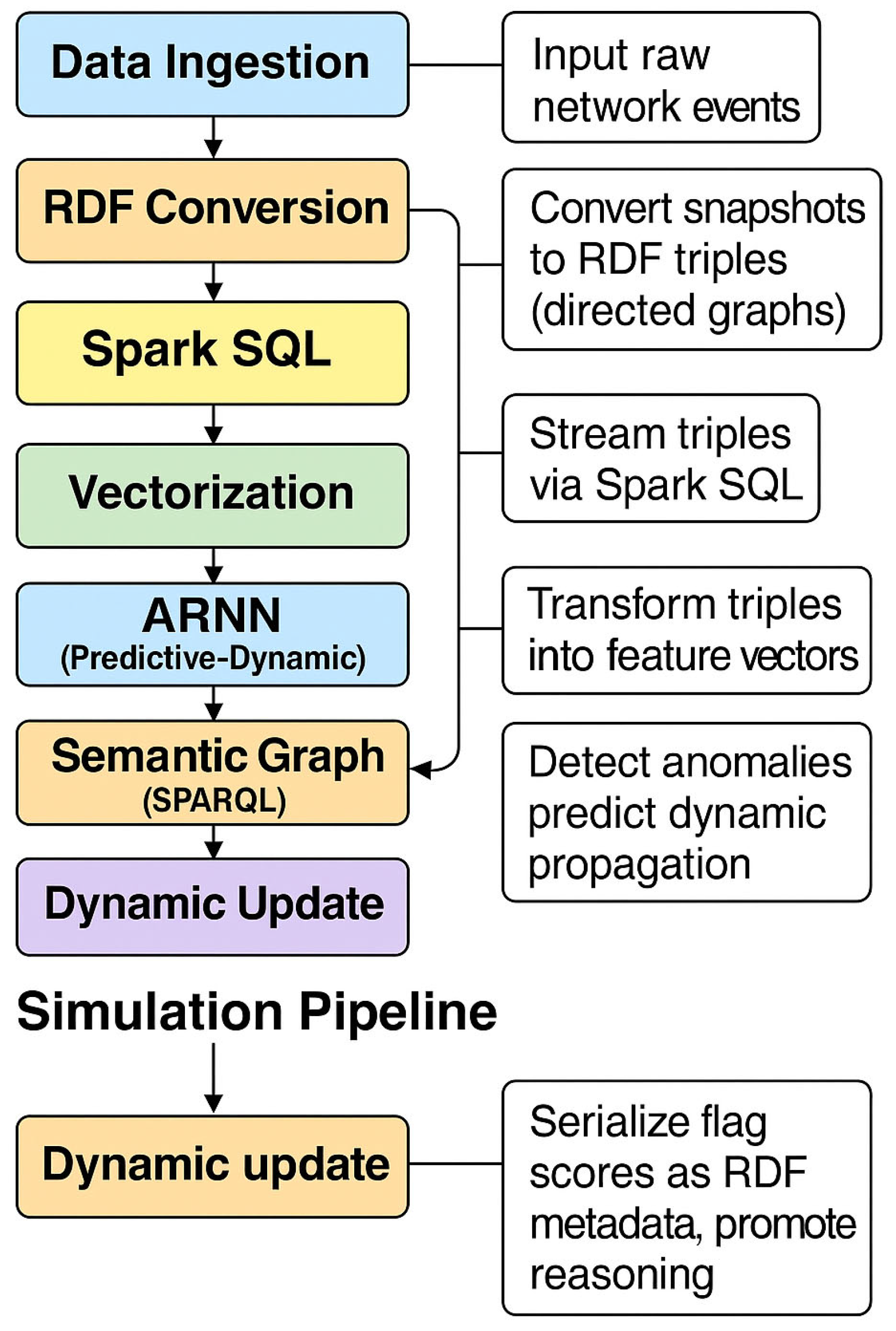

3.1. Simulation Pipeline: Formal (H-DIR)2 Workflow

- Entropy-based selection, T₀ → S(Δt): applies entropy-aware filtering over streaming windows Δt, identifying significant deviations for further analysis [18].

- Vectorization, S(Δt) → xₜ: converts RDF snapshots into compact feature vectors for input into the neural model.

- ARNN core: generates anomaly scores and updates the prediction model state.

- Semantic graph injection (output Gₜ): serializes the output scores as an RDF graph Gₜ, enabling explainable decision support.

- Dynamic update: closes the observation-prediction loop and updates the run-time knowledge base.
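As a toy illustration of how the five operators chain together for a single window, the Python sketch below composes stand-in functions. Everything in it is an assumption: the function names, the |H − H_baseline| > θ selection rule, the frequency-vector vectorizer, and especially the logistic `score`, which only stands in for the ARNN core and is not the authors' model.

```python
import math
from collections import Counter

# Toy stand-ins for the five operators; all names and parameters are hypothetical.

def entropy(values):
    """Shannon entropy (bits) of the empirical distribution of `values`."""
    counts = Counter(values)
    total = len(values)
    return -sum(c / total * math.log2(c / total) for c in counts.values())

def select(window, baseline_h, theta_h):
    """Entropy-based selection: keep the window only if |H - H_baseline| > theta_H."""
    return window if abs(entropy(window) - baseline_h) > theta_h else None

def vectorize(window, vocab):
    """Vectorization: relative frequency of each vocabulary item in the window."""
    counts = Counter(window)
    return [counts[v] / len(window) for v in vocab]

def score(x, weights, bias):
    """Placeholder for the ARNN core: a logistic score in (0, 1)."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def inject(flow_id, s):
    """Semantic graph injection: serialize the score as one RDF-style triple."""
    return (f":{flow_id}", ":hasAnomalyScore", f"{s:.2f}")

def run_window(flow_id, window, graph, baseline_h=1.5, theta_h=0.5):
    """Chain the operators for one window; `graph` plays the run-time knowledge base."""
    if not window:
        return None
    selected = select(window, baseline_h, theta_h)
    if selected is None:
        return None  # no significant entropy deviation: skip neural inference
    x = vectorize(selected, vocab=["SYN", "SYN-ACK", "ACK"])
    s = score(x, weights=[4.0, -2.0, -2.0], bias=-1.0)
    graph.append(inject(flow_id, s))  # dynamic update: the knowledge base grows
    return s
```

Windows whose entropy stays close to the baseline never reach the neural stage, which is the rationale for placing entropy-based selection first.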

- (i) Achieving sub-second detection latency even under high-throughput conditions;

- (ii) Ensuring explainability via symbolic traceability;

- (iii) Enabling modular deployment across heterogeneous environments (cloud, edge, and industrial).

3.2. Formal Workflow and Composability of the (H-DIR)2 Pipeline

- −

- Feature schema: the vectorizer ϕ loads a protocol-specific dictionary DΠ (e.g., TCP flags, RPL codes).

- −

- Loss re–weighting: The framework tunes (α, β) per attack Λ to balance node classification and edge prediction objectives. For SYN Flood, α ≫ β prioritizes rapid node compromise detection; for DAO DIO, β dominates to reveal routing loops.

- −

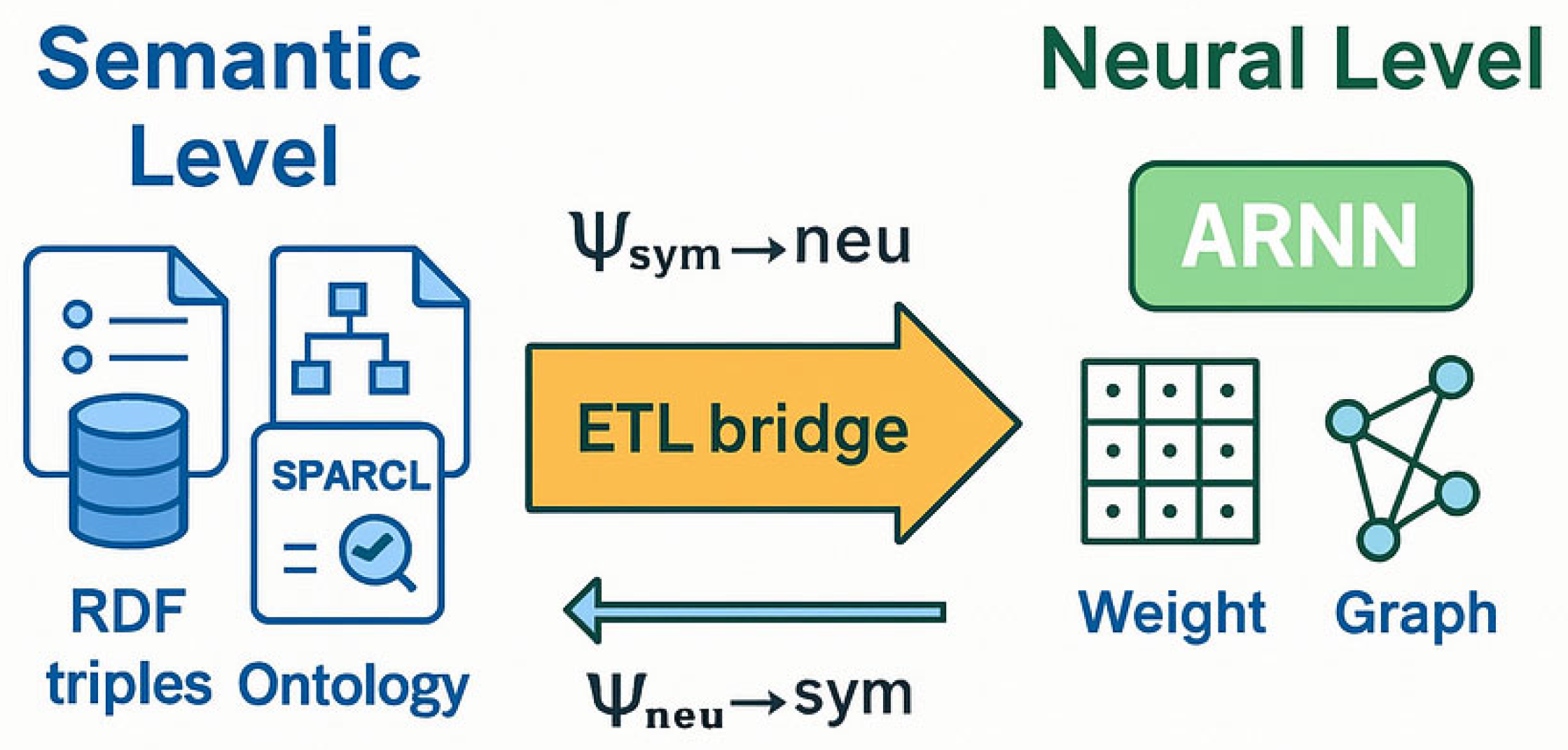

- Graph semantics: the risk-injection operator Ψneu→sym appends triples in a namespace, Π, to ensure that protocol-specific SPARQL rules remain valid.
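The loss re-weighting can be sketched as follows, using L_total = αL_class + βL_graph from the layer summary table. The DAO-DIO weights (0.3, 0.7) are those reported in Section 4.2; the SYN Flood pair and the use of binary cross-entropy for both terms are assumptions made for illustration.

```python
import math

# (alpha, beta) per attack. DAO-DIO uses the pair reported in Section 4.2;
# the SYN Flood pair is a hypothetical example of alpha >> beta.
LOSS_WEIGHTS = {
    "DAO_DIO": (0.3, 0.7),
    "SYN_FLOOD": (0.9, 0.1),  # hypothetical values, for illustration only
}

def bce(y_true, y_prob, eps=1e-12):
    """Mean binary cross-entropy between 0/1 labels and predicted probabilities."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(y_true)

def total_loss(attack, node_labels, node_probs, edge_labels, edge_probs):
    """L_total = alpha * L_class (node classification) + beta * L_graph (edge prediction)."""
    alpha, beta = LOSS_WEIGHTS[attack]
    return alpha * bce(node_labels, node_probs) + beta * bce(edge_labels, edge_probs)
```

Keeping the weights in a per-attack table means switching protocols changes only a lookup key, not the training code.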

3.2.1. RDF Conversion Level

3.2.2. Spark SQL/Streaming Selection Level

3.2.3. Vectorization Level

3.2.4. ARNN Core Level

3.2.5. Semantic Graph Coupling (SPARQL)

3.2.6. Dynamic Update Loop

- (i) Low detection latency;

- (ii) Entropy-based triggering when ΔHₜ > θH;

- (iii) Critical node identification via ∑ⱼ wᵢⱼ > γ.

3.3. Entropy-Based Detection and Adaptive Defense with (H-DIR)2

$$H(X) = -\sum_{i=1}^{n} P(x_i)\,\log_2 P(x_i)$$

- P(xᵢ) is the empirical probability of observing the i-th outcome in the window.

- n is the number of distinct values assumed by X.
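A minimal Python sketch of this per-window Shannon entropy (in bits), assuming tumbling (non-overlapping) windows of width Δt over timestamped events; the Spark windowing used in the framework may differ, and `windowed_entropy` is a hypothetical helper.

```python
import math
from collections import Counter, defaultdict

def shannon_entropy(values):
    """H(X) = -sum_i P(x_i) log2 P(x_i), with P estimated from counts (bits)."""
    counts = Counter(values)
    n_obs = len(values)
    return -sum((c / n_obs) * math.log2(c / n_obs) for c in counts.values())

def windowed_entropy(events, delta_t):
    """Group (timestamp, value) events into tumbling windows of width delta_t
    and return {window_start: entropy} in time order."""
    windows = defaultdict(list)
    for ts, value in events:
        start = math.floor(ts / delta_t) * delta_t  # left edge of the window
        windows[start].append(value)
    return {start: shannon_entropy(vals) for start, vals in sorted(windows.items())}
```

A window with two equally likely values has H = 1 bit; a window containing a single repeated value has H = 0.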

- (i) It filters candidate traffic windows for deeper neural inference;

- (ii) It tags RDF triples in the semantic graph layer with contextual anomaly metadata (e.g., :hasEntropyDrift “0.86”), enabling transparent querying via SPARQL.

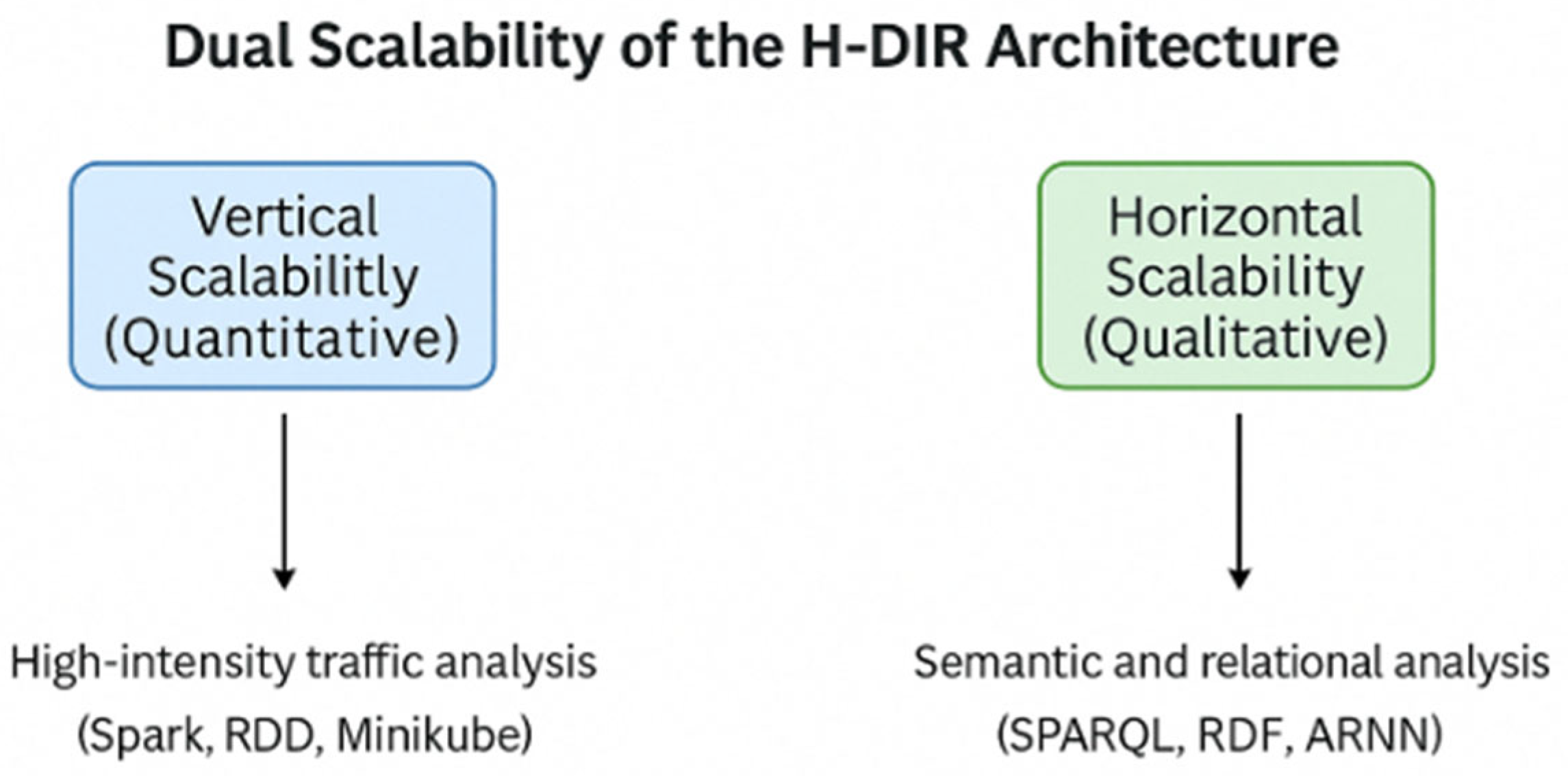

3.4. Dual Scalability of the (H-DIR)2 Architecture

3.5. Integration with Apache Spark and RDF Graphs

- Nodes represent entities such as IP addresses or ports;

- Edges encode typed interactions (packet type, temporal correlation).
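One way to realize this node/edge model in RDF is sketched below, assuming N-Triples output and reifying each flow as a resource, as Listing 1 does for `flow/42`. The base IRI `http://iot.net/` comes from Listing 1; the `ns#` predicates (`source`, `target`, `dstPort`) are hypothetical.

```python
from dataclasses import dataclass

BASE = "http://iot.net/"       # base IRI borrowed from Listing 1
NS = "http://iot.net/ns#"      # hypothetical vocabulary namespace
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"

@dataclass(frozen=True)
class Flow:
    src_ip: str
    dst_ip: str
    dst_port: int
    packet_type: str  # e.g. "TCP_SYN"

def flow_to_ntriples(flow_id, flow):
    """Serialize one flow as N-Triples lines: IP addresses and ports become nodes,
    the flow resource links them, and rdf:type records the interaction type."""
    subj = f"<{BASE}flow/{flow_id}>"
    return [
        f"{subj} <{NS}source> <{BASE}ip/{flow.src_ip}> .",
        f"{subj} <{NS}target> <{BASE}ip/{flow.dst_ip}> .",
        f"{subj} <{NS}dstPort> <{BASE}port/{flow.dst_port}> .",
        f"{subj} <{RDF_TYPE}> <{BASE}types/{flow.packet_type}> .",
    ]
```

Reifying the flow, rather than drawing a single edge between two IPs, leaves room to attach temporal or risk attributes to the interaction later.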

3.6. ARNN: Adaptive Neural Modeling for Attack Propagation

$$a_i(t+1) = f\Big(\sum_{j} w_{ij}\, a_j(t) + b_i + x_i(t)\Big)$$

- aᵢ(t + 1): activation of node i at time t + 1;

- f(·): activation function (e.g., sigmoid);

- wᵢⱼ: weight of the connection from node j to node i;

- bᵢ: bias of node i;

- xᵢ(t): external input (e.g., entropy variation or packet-count features).
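A minimal sketch of one synchronous update step, assuming the variables above combine in the usual recurrent form aᵢ(t + 1) = f(∑ⱼ wᵢⱼ aⱼ(t) + bᵢ + xᵢ(t)) with f = sigmoid. This is a generic recurrent update written to match the listed symbols, not the Random Neural Network signal equations of [9].

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def arnn_step(a, W, b, x, f=sigmoid):
    """One synchronous update: a_i(t+1) = f(sum_j W[i][j] * a_j(t) + b_i + x_i(t)).
    W[i][j] is the weight of the connection from node j to node i."""
    n = len(a)
    return [f(sum(W[i][j] * a[j] for j in range(n)) + b[i] + x[i]) for i in range(n)]

def run(a0, W, b, inputs):
    """Apply arnn_step once per external-input vector x(t) in `inputs`."""
    a = list(a0)
    for x in inputs:
        a = arnn_step(a, W, b, x)
    return a
```

Indexing W[i][j] as "from j to i" keeps row i of W equal to the incoming weights of node i, matching the definition of wᵢⱼ above.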

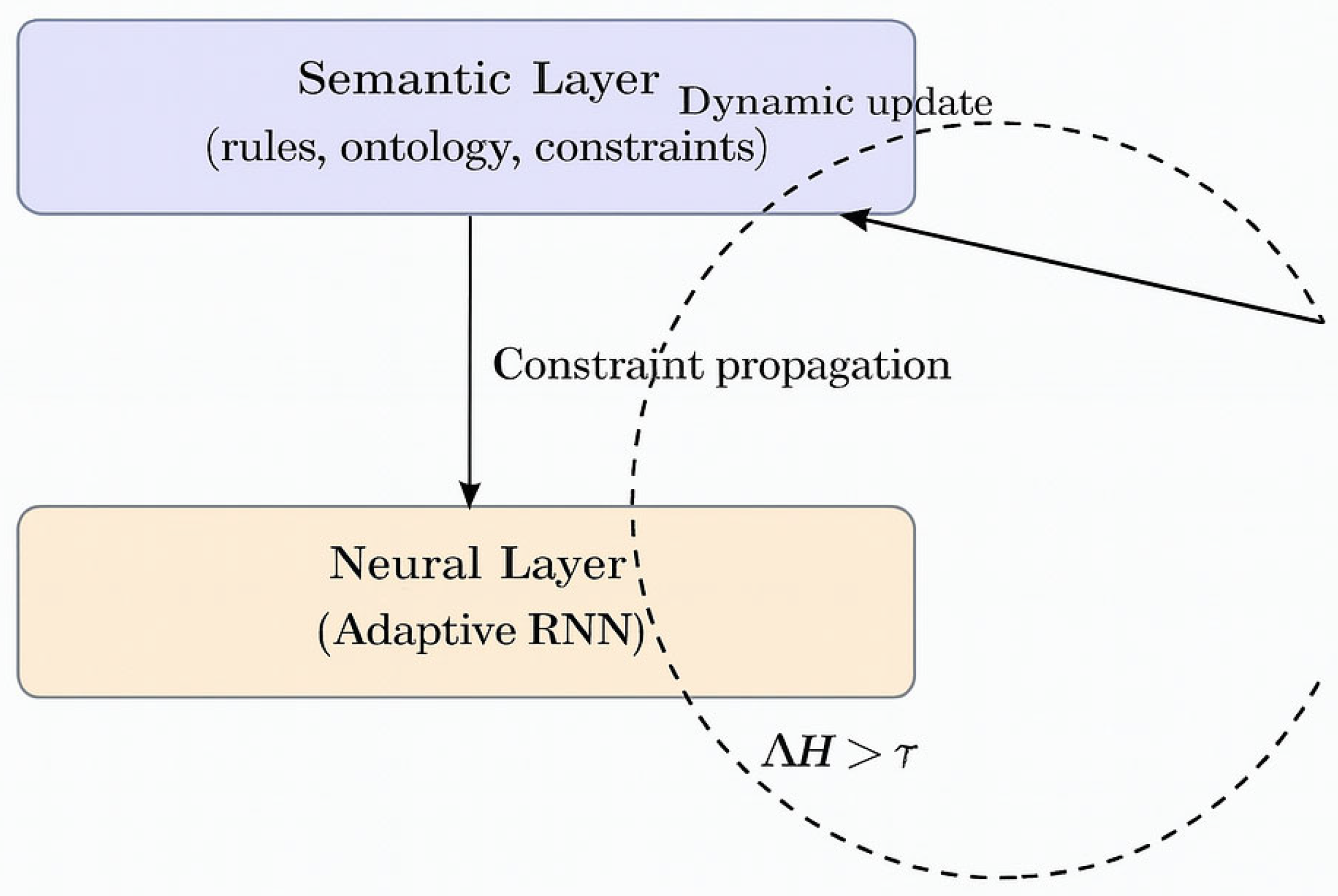

3.7. Semantic–Neural Coupling and Dynamic Update

- Semantic layer: an ontology of protocol rules and expert heuristics that prunes forbidden state transitions;

- Neural layer: an Adaptive Recurrent Neural Network (ARNN) that learns temporal correlations directly from telemetry streams.

$$P_{\text{attack}}(P) = \prod_{l=1}^{k-1} w(N_l \rightarrow N_{l+1})$$

- P_attack(P): the probability that an attacker traverses path P;

- ∏: the product operator, iterating over each consecutive pair of nodes in the path;

- l: the index of the current step in the path, from 1 to k − 1;

- w(Nₗ → Nₗ₊₁): the weight associated with the edge from node Nₗ to node Nₗ₊₁;

- k: the total number of nodes in the path P = {N₁, …, Nₖ}.
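The path product and the critical-node rule (a node is flagged when its incoming weight ∑ⱼ wᵢⱼ exceeds γ, per the caption of Fig. 5) can be sketched as below. The sketch assumes edge weights in [0, 1] stored in a dictionary keyed by directed (source, target) pairs, with absent edges contributing zero.

```python
from collections import defaultdict
from math import prod

def path_probability(path, w):
    """P_attack(P) = product over l = 1..k-1 of w(N_l -> N_{l+1}).
    `w` maps directed edges (src, dst) to weights; missing edges count as 0."""
    return prod(w.get((path[l], path[l + 1]), 0.0) for l in range(len(path) - 1))

def critical_nodes(w, gamma):
    """Nodes i whose total incoming weight sum_j w_ij (edges j -> i) exceeds gamma."""
    incoming = defaultdict(float)
    for (src, dst), weight in w.items():
        incoming[dst] += weight
    return {node for node, total in incoming.items() if total > gamma}
```

For example, with w(A → B) = 0.8 and w(B → C) = 0.5 the path A → B → C has probability 0.4.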

3.8. Dynamic Update of the Semantic Graph

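Listing 1. RDF serialisation example.

```xml
<rdf:RDF xmlns:rdf="http://www.w3.org/1999/02/22-rdf-syntax-ns#"
         xmlns:rdfs="http://www.w3.org/2000/01/rdf-schema#">
  <rdf:Description rdf:about="http://iot.net/flow/42">
    <rdf:type rdf:resource="http://iot.net/types/TCP_SYN"/>
    <rdfs:label>TCP_SYN (flag: 1)</rdfs:label>
  </rdf:Description>
</rdf:RDF>
```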
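To check the serialization programmatically, the sketch below parses the Listing 1 description with Python's standard `xml.etree.ElementTree` and extracts its triples. It handles only flat `rdf:Description` elements with `rdf:resource` objects or plain literals, which is enough for this listing but is not a general RDF/XML parser. The namespace IRIs are the standard RDF and RDFS ones.

```python
import xml.etree.ElementTree as ET

RDF = "http://www.w3.org/1999/02/22-rdf-syntax-ns#"
RDFS = "http://www.w3.org/2000/01/rdf-schema#"

LISTING_1 = f"""<rdf:RDF xmlns:rdf="{RDF}" xmlns:rdfs="{RDFS}">
  <rdf:Description rdf:about="http://iot.net/flow/42">
    <rdf:type rdf:resource="http://iot.net/types/TCP_SYN"/>
    <rdfs:label>TCP_SYN (flag: 1)</rdfs:label>
  </rdf:Description>
</rdf:RDF>"""

def description_triples(xml_text):
    """Extract (subject, predicate, object) triples from flat rdf:Description elements."""
    root = ET.fromstring(xml_text)
    triples = []
    for desc in root.iter(f"{{{RDF}}}Description"):
        subject = desc.get(f"{{{RDF}}}about")
        for prop in desc:
            # ElementTree tags look like "{namespace}localname"; rebuild the predicate IRI
            ns, local = prop.tag[1:].split("}")
            obj = prop.get(f"{{{RDF}}}resource") or (prop.text or "").strip()
            triples.append((subject, ns + local, obj))
    return triples
```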

Semantic Reasoning Layer: Enabling Explainable and Actionable Intelligence

4. Experimental Validation and Results

4.1. SYN Flood Case Study

4.1.1. Data Collection and Preprocessing

- (i) Serialized into RDF triples;

- (ii) Windowed by Spark SQL over Δt = 500 ms;

- (iii) One-hot vectorized on srcIP, dstIP, and TCP flags (d = 256);

- (iv) Streamed into the ARNN core.
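Steps (ii) and (iii) can be sketched in plain Python as below, with Δt = 500 ms and d = 256 taken from the text. How high-cardinality fields such as IP addresses fit into 256 dimensions is not stated here; the sketch assumes the hashing trick (SHA-256 of `field=value`, reduced modulo d), so distinct values may collide. Packet dictionaries and their keys are hypothetical.

```python
import hashlib
from collections import defaultdict

D = 256           # feature dimension reported for this case study
DELTA_T_MS = 500  # window width reported for this case study

def bucket(field, value, d=D):
    """Hashing trick (assumption): map a (field, value) pair to one of d slots."""
    digest = hashlib.sha256(f"{field}={value}".encode()).digest()
    return int.from_bytes(digest[:4], "big") % d

def window_packets(packets, delta_t_ms=DELTA_T_MS):
    """Group packets (dicts with a 'ts_ms' timestamp) into tumbling windows."""
    windows = defaultdict(list)
    for p in packets:
        windows[p["ts_ms"] // delta_t_ms].append(p)
    return [windows[k] for k in sorted(windows)]

def vectorize_window(packets, d=D):
    """Sum of hashed one-hot vectors for srcIP, dstIP and TCP flags over one window."""
    x = [0] * d
    for p in packets:
        for field in ("src_ip", "dst_ip", "flags"):
            x[bucket(field, p[field], d)] += 1
    return x
```

SHA-256 is used instead of Python's built-in `hash` because the latter is randomized per process for strings, which would make feature positions irreproducible across runs.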

4.1.2. Evaluation Metrics

- We compute the Shannon entropy H(X) over the distribution of TCP flags X = {SYN, SYN–ACK, ACK} in each window and raise an alarm whenever the entropy drop relative to the baseline exceeds a predefined threshold.

- Imbalance ratio: r = #SYN/#SYN–ACK (a continuous feature).

- ARNN quality: accuracy, false positive rate (FPR), and area under the ROC curve (AUC).

- Detection latency τdet: the time from the first spoofed SYN to the alarm.
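The flag entropy, imbalance ratio, and entropy-drop alarm can be sketched as below. The baseline entropy and the threshold θH passed in are assumptions (no threshold value for this case study is given in this section), and flags are spelled with ASCII hyphens.

```python
import math
from collections import Counter

FLAGS = ("SYN", "SYN-ACK", "ACK")

def flag_entropy(flags):
    """Shannon entropy (bits) of the SYN / SYN-ACK / ACK mix in one window."""
    counts = Counter(f for f in flags if f in FLAGS)
    total = sum(counts.values())
    return -sum(c / total * math.log2(c / total) for c in counts.values())

def imbalance_ratio(flags):
    """r = #SYN / #SYN-ACK; infinite when no SYN-ACK is observed."""
    counts = Counter(flags)
    return counts["SYN"] / counts["SYN-ACK"] if counts["SYN-ACK"] else math.inf

def entropy_drop_alarm(flags, h_baseline, theta_h):
    """Alarm when the window entropy falls below the baseline by more than theta_H."""
    return h_baseline - flag_entropy(flags) > theta_h
```

With a balanced handshake mix the entropy is log₂ 3 ≈ 1.585 bits; a window of 27 SYN, 1 SYN-ACK, and 1 ACK drops to roughly 0.43 bits, with r = 27.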

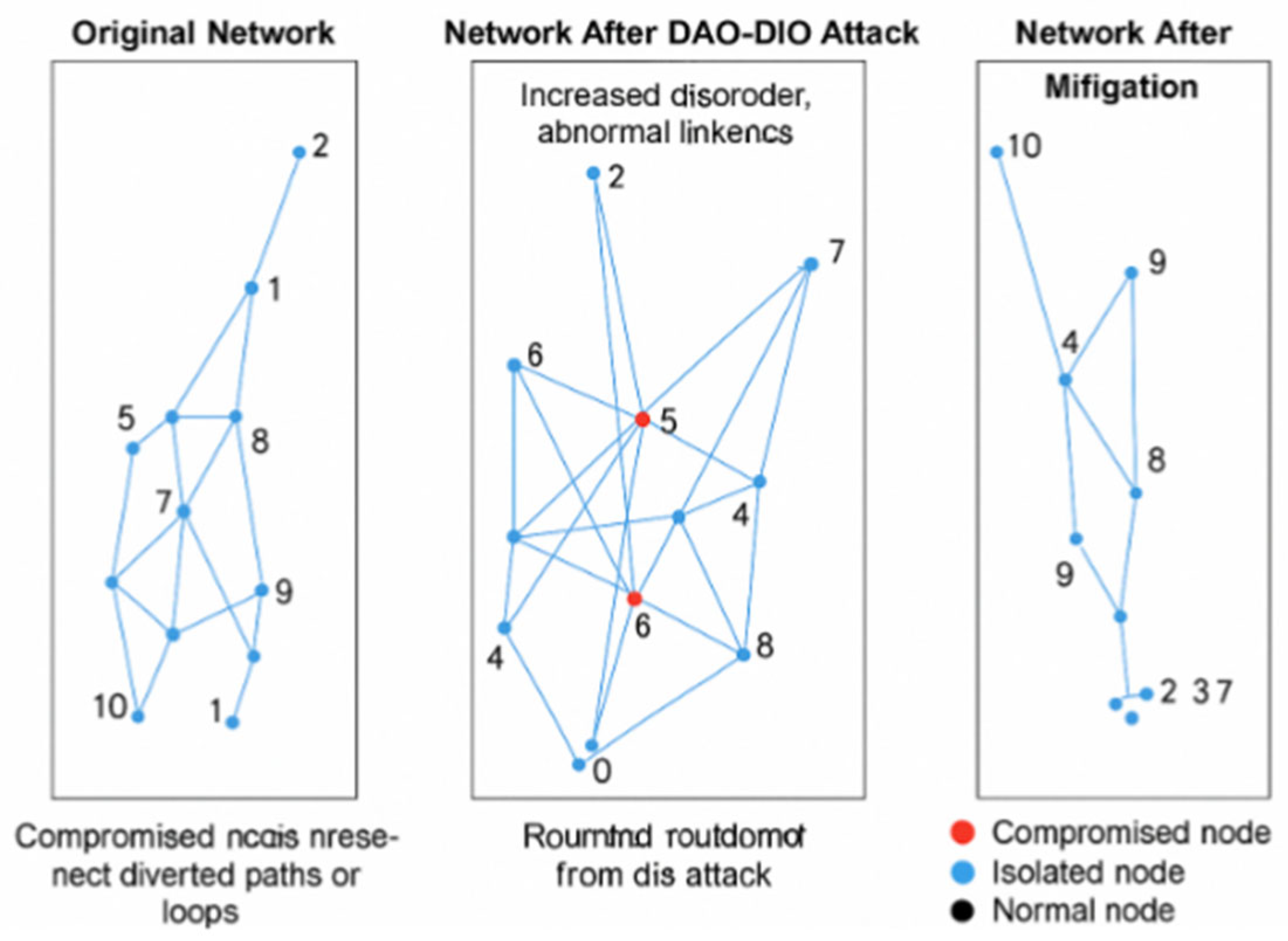

4.2. DAO-DIO Routing Manipulation Case Study

4.2.1. Data Collection and Preprocessing

- (i) RDF serialization into the IoT–RPL–OWL ontology (T → O0).

- (ii) Streaming windowing with Δt = 5 s and Spark SQL filtering.

- (iii) Vectorization (d = 256) with one-hot encodings for node, rank, and message type.

- (iv) ARNN core: attentive RNN with nᵢₙ = 128, η = 10⁻³, and loss weights (α, β) = (0.3, 0.7).

- (v) Risk scoring Rᵢ = σ(aᵢ), with hosts flagged when Rᵢ > 0.6.

- (vi) Graph feedback via SPARQL INSERT of triples (:hasHighRisk true), closing the adaptive loop.
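Steps (v) and (vi) can be sketched as a function that squashes ARNN activations with the logistic σ and emits one SPARQL 1.1 `INSERT DATA` update for every host above the 0.6 threshold. The `:` prefix IRI and the host identifiers are hypothetical placeholders.

```python
import math

RISK_THRESHOLD = 0.6  # threshold reported for the DAO-DIO case study

def risk(a_i):
    """R_i = sigma(a_i): logistic squashing of the ARNN activation."""
    return 1.0 / (1.0 + math.exp(-a_i))

def high_risk_update(activations, threshold=RISK_THRESHOLD):
    """Build a SPARQL INSERT DATA update tagging every host with R_i > threshold.
    The ':' prefix IRI below is a hypothetical placeholder namespace."""
    flagged = sorted(h for h, a in activations.items() if risk(a) > threshold)
    if not flagged:
        return None  # nothing to insert
    body = "\n".join(f"  :{h} :hasHighRisk true ." for h in flagged)
    return "PREFIX : <http://iot.net/ns#>\nINSERT DATA {\n" + body + "\n}"
```

Because σ is monotone, Rᵢ > 0.6 is equivalent to aᵢ > ln(0.6/0.4) ≈ 0.405, so the threshold could equally be applied to raw activations.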

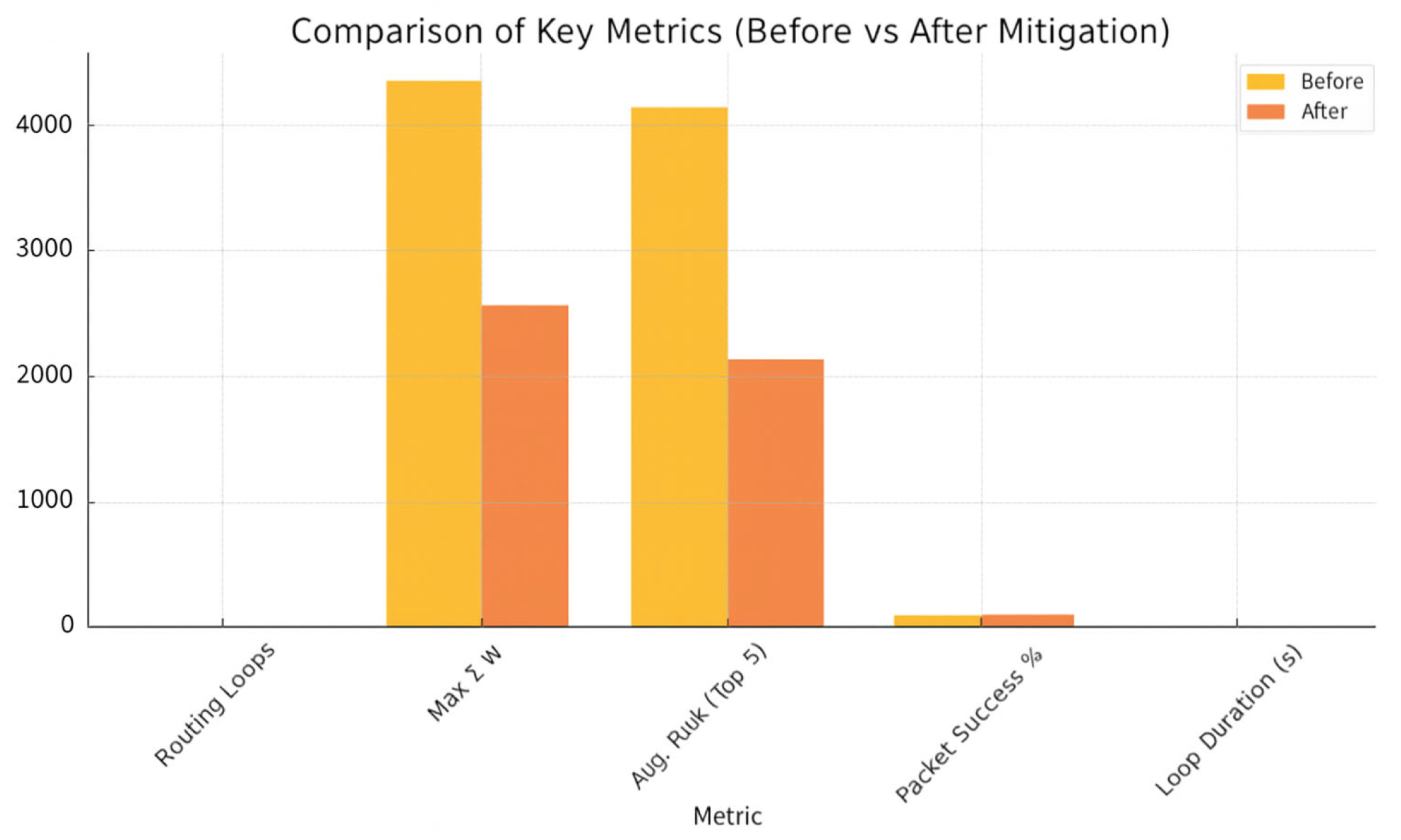

4.2.2. Evaluation Metrics

- Routing loops: number of closed rank cycles.

- Maximum incoming risk maxᵢ ∑ⱼ wᵢⱼ in the learned graph (summed over edges j → i).

- Packet delivery ratio (PDR).

- Average loop duration (s).
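Counting routing loops requires some notion of a cycle in the RPL topology. The sketch below assumes each node's preferred parent is known (a hypothetical `{node: parent}` map) and reports every parent chain that returns to an already visited node instead of reaching the root; the paper's own count of rank cycles may be computed differently.

```python
def routing_loops(parent):
    """Return the distinct cycles in a preferred-parent map {node: parent}.
    In a healthy DODAG every chain of parents ends at the root; a chain that
    revisits a node is a routing loop."""
    loops, seen = [], set()
    for start in parent:
        path, pos = [], {}
        node = start
        # Follow parent pointers until the root, an already explored node, or a repeat.
        while node is not None and node not in seen and node not in pos:
            pos[node] = len(path)
            path.append(node)
            node = parent.get(node)
        if node is not None and node in pos:
            loops.append(path[pos[node]:])  # from the first repeated node to the end
        seen.update(path)
    return loops
```

Each node is explored at most once across all starting points, so the count runs in time linear in the number of nodes.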

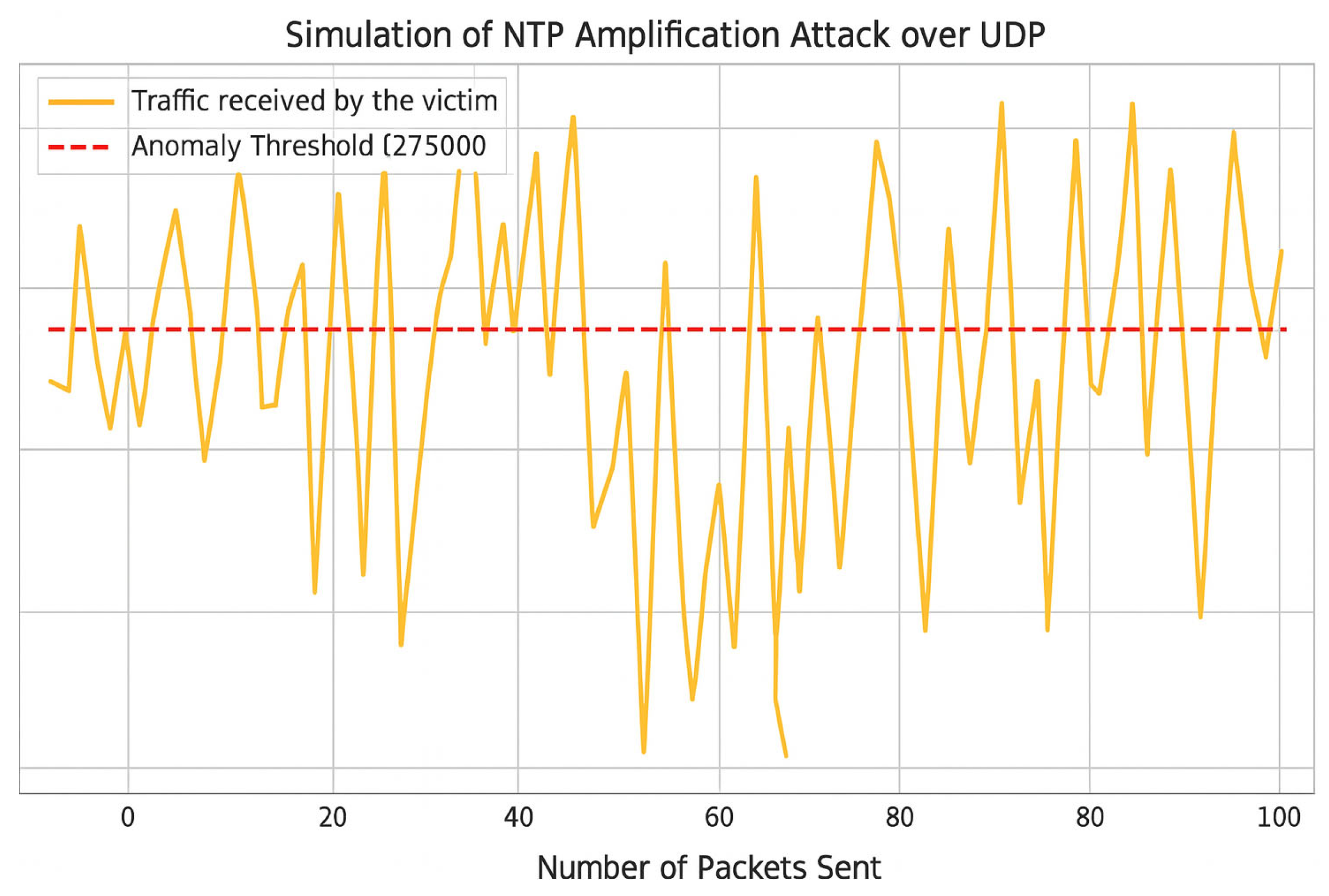

4.3. NTP Amplification Case Study

4.3.1. Data Collection and Preprocessing

- (i) RDF serialization into the IoT–UDP–OWL schema.

- (ii) Windowing with Δt = 1 s; Spark SQL computes the entropy per IP.

- (iii) Vectorization (d = 128) on srcIP, dstIP, UDP ports, and the NTP command.

- (iv) ARNN core: an LSTM variant (3 layers, 64 cells, η = 2 × 10⁻³).

- (v) Risk scoring, with alarms triggered for Rᵢ > 0.55.

- (vi) Graph feedback via RDF injection (:underMitigation true), closing the adaptive mitigation loop.

- Edge caching (C = 0.9) to absorb duplicate replies.

- Anycast load distribution over S = 5 edge nodes.

- Entropy filter: alarm if ΔH ≥ θH = 1.5 bits.

- ARNN early predictor (validation accuracy ACC = 0.90) driving proactive throttling.
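The load arithmetic behind edge caching and anycast can be sketched as below. The centralized load L = ∑ᵢ Rᵢ appears in the layer summary table; the per-server expression Lⱼ = (1 − C)·L/S is an assumed model (an even anycast split over S nodes, with the cache absorbing a fraction C of replies), because the table's own formula is truncated. C = 0.9, S = 5, and θH = 1.5 bits are the values listed above.

```python
def centralized_load(reflector_rates):
    """L = sum_i R_i: total reflected traffic reaching a single server (Gb/s)."""
    return sum(reflector_rates)

def per_server_load(reflector_rates, cache_ratio=0.9, servers=5):
    """Assumed model: anycast splits L evenly over S edge nodes and the edge
    cache absorbs a fraction C of replies, so L_j = (1 - C) * L / S."""
    return (1.0 - cache_ratio) * centralized_load(reflector_rates) / servers

def entropy_alarm(delta_h, theta_h=1.5):
    """Entropy filter: alarm if Delta H >= theta_H (bits)."""
    return delta_h >= theta_h
```

For a hypothetical 10 Gb/s of reflected traffic, this model gives about 0.2 Gb/s per edge node.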

4.3.2. Evaluation Metrics

- Peak load on the victim (Gb/s), as a measure of attack intensity.

- Mitigation latency τmit (s), defined as the delay between the entropy shift ΔH and effective traffic suppression.

- Reduction in back-end traffic, expressed as a percentage of mitigated throughput.

- Early-stage prediction accuracy of the ARNN model, evaluated within the first second of attack onset.

4.4. Cross-Dataset Evaluation and Generalization

- TON_IoT [23]: a telemetry-rich dataset that integrates system logs, network flows, and telemetry data from industrial control environments, allowing (H-DIR)2 to be assessed under mixed-signal conditions.

- Edge-IIoTset [24]: an edge-oriented dataset that captures multi-protocol traffic and adversarial sequences in decentralized IoT topologies.

4.5. Computational Complexity and Deployability

- Edge node (Raspberry Pi 4 Model B, 4 GB RAM): inference latency < 60 ms; RAM usage < 2.2 GB.

- Embedded board (NVIDIA Jetson Nano): inference latency < 20 ms with GPU; RAM usage < 1.4 GB.

- Cloud instance (AWS t3.medium, 2 vCPU, 4 GB RAM): inference latency < 8 ms.

4.6. Comparative Summary Across Scenarios

4.7. Extended Comparison with State-of-the-Art Methods

- (i) Lowest detection latency (247 ms);

- (ii) Highest AUC (0.978);

- (iii) Native explainability through entropy signals and RDF/SPARQL graphs.

4.8. Dynamic Integration Between Semantics and Prediction in (H-DIR)2

5. Conclusions and Future Work

- (i) Sensitivity of entropy measures to statistical noise in low-volume flows.

- (ii) Lack of GPU-accelerated ARNN training.

- (iii) Limited testing under high-churn edge mobility.

- Multimodal telemetry: extending entropy and RDF encoding to streams such as EPC logs, OPC-UA messages, and container-level resource metrics.

- Edge stress testing: deploying (H-DIR)2 on resource-constrained microcontrollers and ARM-based edge nodes to benchmark resilience and latency.

- Live threat intelligence: integrating the semantic layer with external feeds (e.g., STIX, MISP) to support real-time inference updates and zero-day anticipation.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| IoT | Internet of Things |
| RDF | Resource Description Framework |
| ARNN | Associated Random Neural Network |
| SPARQL | SPARQL Protocol and RDF Query Language |
| NTP | Network Time Protocol |
| RPL | Routing Protocol for Low Power and Lossy Networks |
| AUC | Area Under the Curve |

Appendix A. Mathematical Proofs

Appendix A.1. Closed-Form Backlog Threshold

- t* denotes the threshold time at which the backlog derivative vanishes (i.e., ΔH(t*) = τ).

- λ is the entropy-weighted arrival rate.

- μ is the service rate.

- dB/dt is the time derivative of the backlog function B(t).

- W(·) is the Lambert W function.

Appendix A.2. Pseudocode of Semantic Injection Module

| Fig. | Label | Caption |

|---|---|---|

| 1 | fig:workflow | (H-DIR)2 framework simulation pipeline. Operators O0 → O5 represent RDF ingestion, Spark SQL streaming, vectorization, ARNN prediction, semantic enrichment, and dynamic update. |

| 2 | fig: dual scalability | Dual scalability of the (H-DIR)2 architecture. Vertical scalability (Spark, RDD, Minikube) vs. horizontal semantic scalability (SPARQL, RDF, ARNN). |

| 3 | fig:dual-level-cycle | Semantic-ARNN coupling. Dynamic loop between semantic rules and ARNN constraints, with ΔH > τ triggering adaptation. |

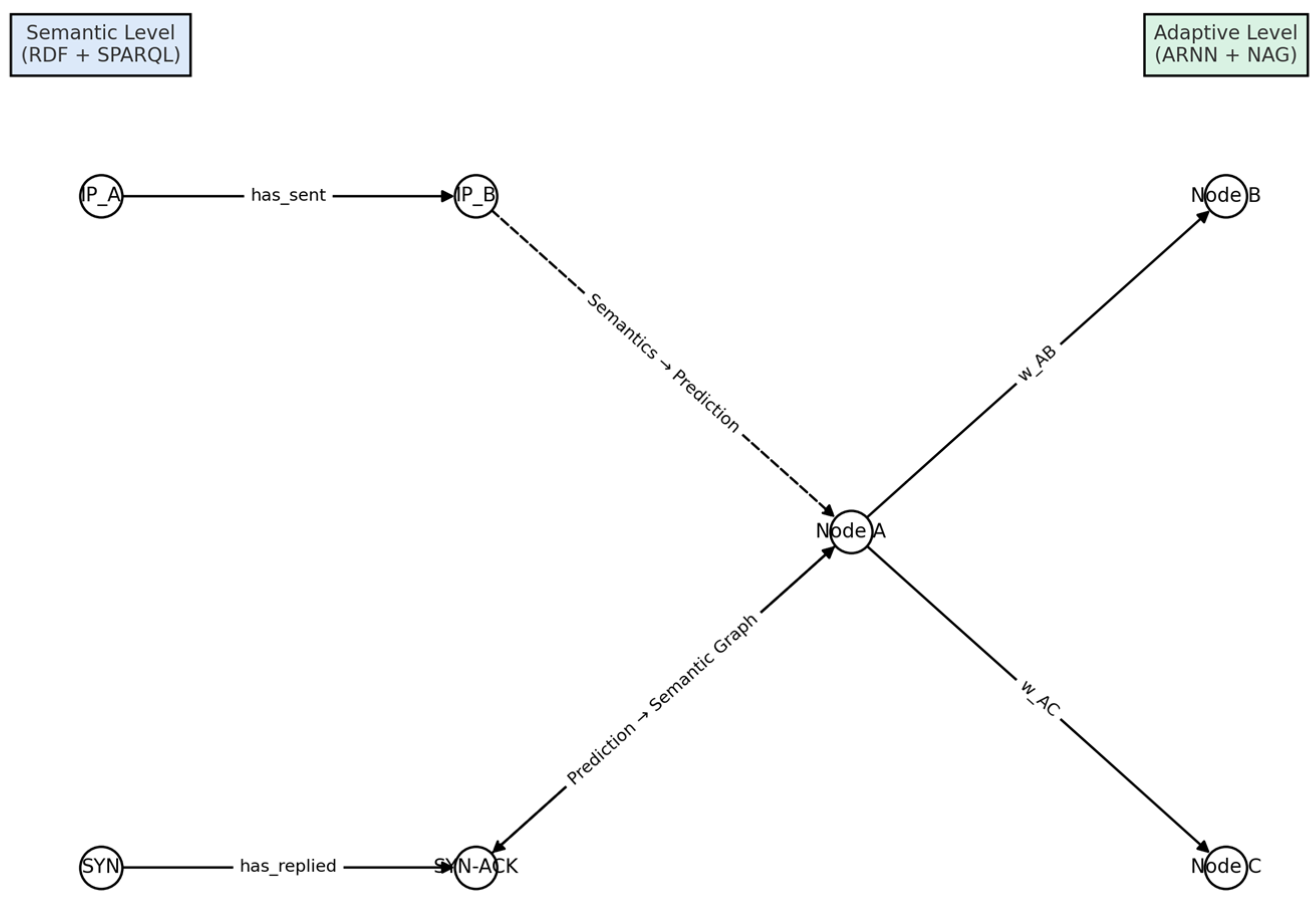

| 4 | fig:etl-bridge | The ψₛₑₘ→ₙₑᵤ operator maps semantic triples into the ARNN input space, while the neural outputs are subsequently re-encoded as RDF triples. |

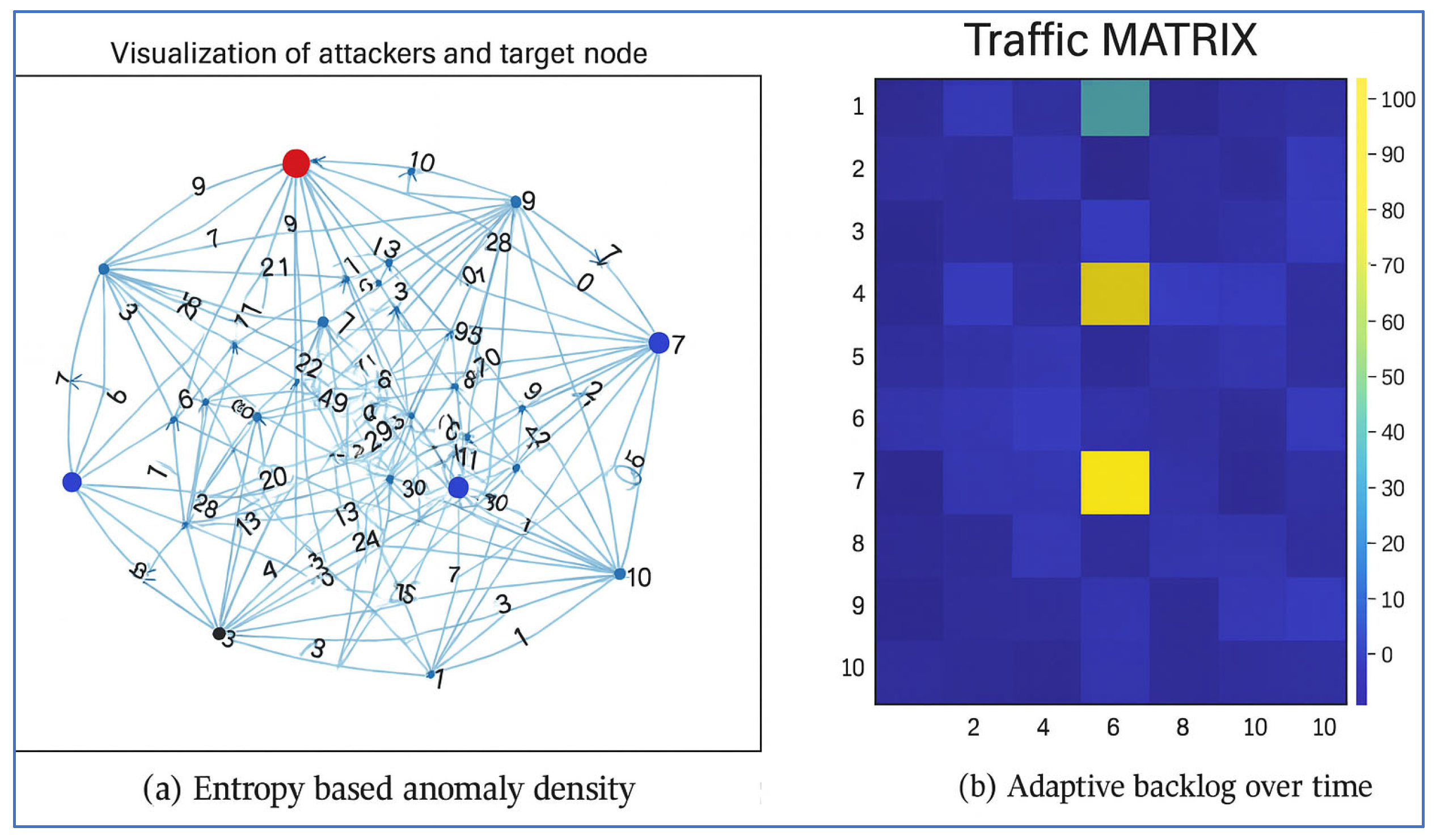

| 5 | fig: graph-matrix | Neural attack graph construction. Nodes flagged as critical if ∑j wij > γ, enabling initiative-taking mitigation and risk propagation. |

| 6 | fig:dao-rpl | DAO DIO attack visualization. Entropy drift and semantic feedback highlight abnormal loops in IPv6 RPL topologies. |

| 7 | fig: dao-routing | The center panel illustrates the impact of a DAO-DIO manipulation attack, which increases disorder and creates abnormal links. |

| 8 | fig: NTP amp | The framework semantically annotates TTL drift, route deviation, and back-end packet surge using RDF/SPARQL and subsequently visualizes them for analysis. |

| 9 | fig: NTP amp | Case study |

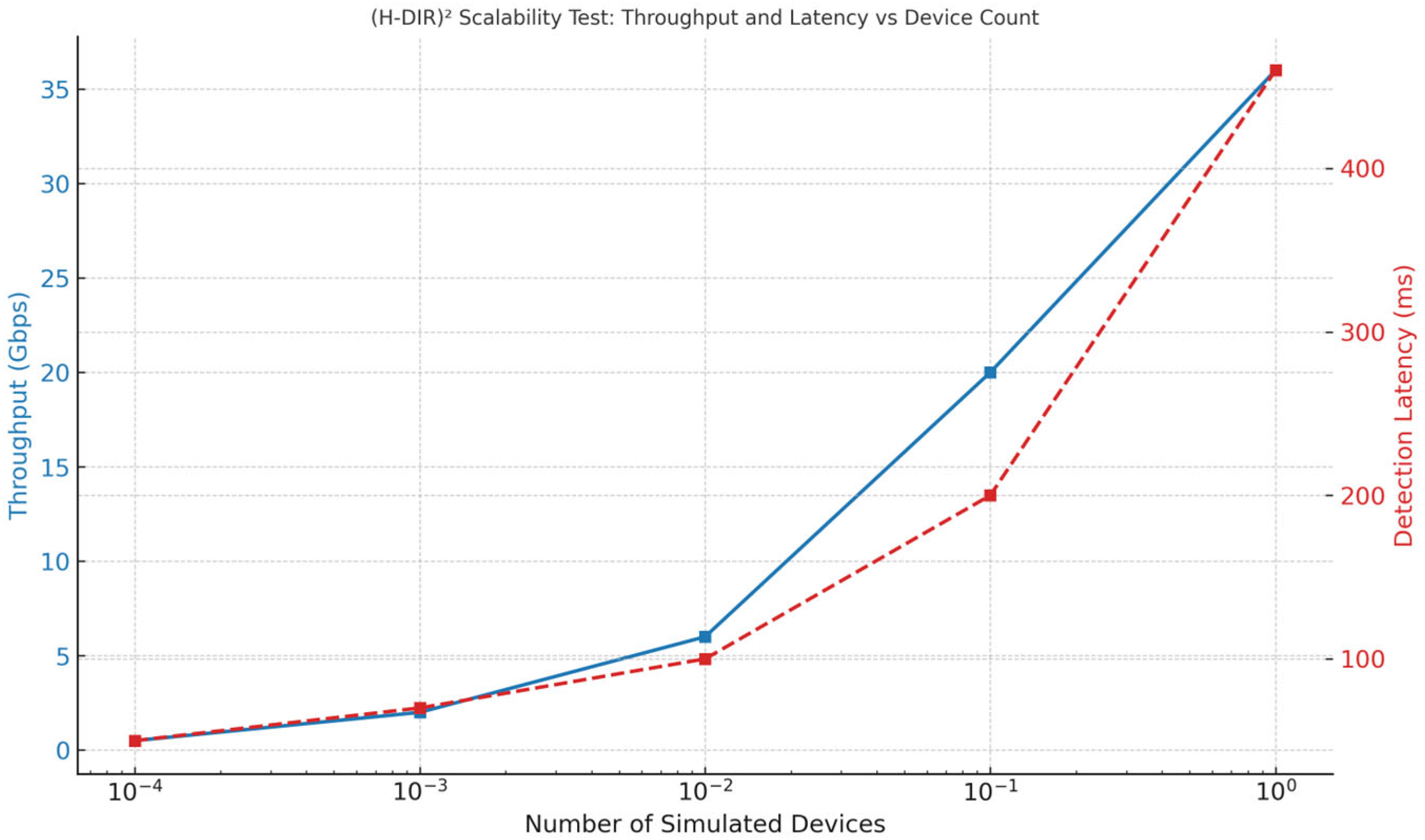

| 10 | fig: scalability-stress | Throughput and Latency vs. Device Count |

| 11 | fig: semantic graph | Semantic-to-Adaptive graph interaction. Conceptual diagram showing how RDF/SPARQL assertions affect node-level predictions via ψ_sem→neu, and how ARNN updates feedback into semantic enrichment via ψ_neu→sym. |

References

- Mirsky, Y.; Doitshman, T.; Elovici, Y.; Shabtai, A. Kitsune: An ensemble of autoencoders for online network intrusion detection. arXiv 2018, arXiv:1802.09089.

- Sicari, S.; Rizzardi, A.; Coen-Porisini, A. 5G in the Internet of Things Era: An Overview on Security and Privacy Challenges. Comput. Netw. 2020, 179, 107345.

- García-Teodoro, P.; Díaz-Verdejo, J.; Maciá-Fernández, G.; Vázquez, E. Anomaly-based network intrusion detection: Techniques, systems and challenges. Comput. Secur. 2009, 28, 18–28.

- Feily, M.; Shahrestani, A.; Ramadass, S. A survey of botnet and botnet detection. In Proceedings of the 2009 Third International Conference on Emerging Security Information, Systems, and Technologies, Athens, Greece, 18–23 June 2009; pp. 268–273.

- Kurtz, N.; Song, J. Cross-entropy-based adaptive importance sampling using Gaussian mixture. Struct. Saf. 2013, 42, 35–44.

- Gelenbe, E.; Nakip, M. IoT Network Cybersecurity Assessment with the Associated Random Neural Network. IEEE Access 2023, 11, 85501–85512.

- Canadian Institute for Cybersecurity. CIC-DDoS2019 Dataset. 2019. Available online: https://www.unb.ca/cic/datasets/ddos-2019.html (accessed on 29 June 2025).

- Zaharia, M.; Chowdhury, M.; Das, T.; Dave, A.; Ma, J.; McCauley, M.; Franklin, M.J.; Shenker, S.; Stoica, I. Resilient Distributed Datasets: A Fault-Tolerant Abstraction for In-Memory Cluster Computing. In Proceedings of the 9th USENIX Symposium on Networked Systems Design and Implementation (NSDI 12), San Jose, CA, USA, 25–27 April 2012; pp. 15–28.

- Gelenbe, E. Random Neural Networks with Negative and Positive Signals and Product Form Solution. Neural Comput. 1989, 1, 502–510.

- Paxson, V.; Allman, M.; Chu, J.; Sargent, M. Computing TCP’s Retransmission Timer, RFC 6298, IETF, 2011.

- Marcov, L.; Redondi, A.; Zhou, Y.; Tosi, D. DAO-DIO Routing Manipulation Dataset. 2023.

- Mirsky, Y.; Doitshman, T.; Elovici, Y.; Shabtai, A. Kitsune Network Attack Dataset: NTP Amplification Subset. 2023. Available online: https://www.kitsune-dataset-collection.org/NTP-Subset (accessed on 29 June 2025).

- Wilson, E.B. Probable Inference, the Law of Succession, and Statistical Inference. J. Am. Stat. Assoc. 1927, 22, 209–212.

- Li, X.; Wang, Y.; Zhang, H. Scalable Anomaly Detection in IoT Using Resilient Distributed Datasets and Machine Learning. In Proceedings of the 2023 IEEE International Conference on Big Data (Big Data), Sorrento, Italy, 15–18 December 2023; pp. 123–130.

- Tosi, D.; Pazzi, R. Design and Experimentation of a Distributed Information Retrieval-Hybrid Architecture in Cloud IoT Data Centers. In Proceedings of the IFIP International Internet of Things Conference, Nice, France, 7–9 November 2024; Springer: Cham, Switzerland, 2024; pp. 12–21.

- Mehmood, K.; Kralevska, K.; Palma, D. Knowledge Graph Embedding in Intent-Based Networking. In Proceedings of the 2024 IEEE 10th International Conference on Network Softwarization (NetSoft), Saint Louis, MO, USA, 24–28 June 2024; pp. 13–18.

- Pérez, J.; Arenas, M.; Gutierrez, C. Semantics and Complexity of SPARQL. ACM Trans. Database Syst. 2009, 34, 1–45.

- Gu, Y.; Li, K.; Guo, Z.; Wang, Y. A Deep Learning and Entropy-Based Approach for Network Anomaly Detection in IoT Environments. IEEE Access 2019, 7, 169296–169308.

- Zaharia, M.; Xin, R.S.; Wendell, P.; Das, T.; Armbrust, M.; Dave, A.; Meng, X.; Rosen, J.; Venkataraman, S.; Franklin, M.J.; et al. Apache Spark: A Unified Engine for Big Data Processing. Commun. ACM 2016, 59, 56–65.

- Yin, C.; Zhu, Y.; Fei, J.; He, X. A Deep Learning Approach for Intrusion Detection Using Recurrent Neural Networks. IEEE Access 2017, 5, 21954–21961.

- Peng, W.; Chen, Q.; Peng, S. Graph-Based Security Analysis for Industrial Control Systems. IEEE Trans. Ind. Inform. 2018, 5, 1890–1900.

- Moseni, A.; Jha, N.K. Addressing IoT Security Issues. IEEE Internet Things J. 2017.

- Awad, M.; Fraihat, S.; Salameh, K.; Al Redhaei, A. Examining the suitability of NetFlow features in detecting IoT network intrusions. Sensors 2022, 22, 6164.

- Ramaiah, M.; Rahamathulla, M.Y. Securing the industrial IoT: A novel network intrusion detection models. In Proceedings of the 2024 3rd International Conference on Artificial Intelligence for Internet of Things (AIIoT), Vellore, India, 3–4 May 2024; pp. 1–6.

- Azeroual, O.; Nikiforova, A. Apache Spark and MLlib-Based Intrusion Detection System or How the Big Data Technologies Can Secure the Data. Information 2022, 13, 58.

- Liu, F.T.; Ting, K.M.; Zhou, Z.-H. Isolation Forest. In 2008 Eighth IEEE International Conference on Data Mining; IEEE: Piscataway, NJ, USA, 2008; pp. 413–422.

- Ergen, T. Unsupervised and Semi-supervised Anomaly Detection with LSTM Neural Networks. arXiv 2017, arXiv:1710.09207.

| Component | Function Within the Pipeline |
|---|---|
| Entropy-based detector | Computes Shannon entropy per window and raises attack-agnostic alarms for zero-day vectors [7]. |
| Apache Spark/Spark SQL | Distributed micro-batch analytics sustaining terabyte-scale streams [8]. |
| Adaptive Random Neural Network | Online learning that converts traffic features into probabilistic Network Attack Graphs [9]. |
| RDF/SPARQL layer | Serializes each packet as triples, enabling rule-based reasoning and explainability [10]. |
| Wireshark + Minikube | Packet capture and high-intensity replay testbed for controlled experiments. |

| Attack | Protocol Layer | Key Entropy Signal | Mitigation Module |
|---|---|---|---|
| TCP SYN Flood | Transport | ΔH flag spike | Adaptive Rate Limiter (Section 4.1) |
| DAO DIO (RPL) | IoT Network | ΔH path drift | Route Sanitizer (Section 4.2) |
| NTP Amplification | Application/UDP | ΔH size bimodality | Amplification Throttler (Section 4.3) |

| Symbol | Meaning |
|---|---|
| Ω | Measurable space of observed network events. |
| 𝒜 | σ-algebra on Ω. |
| ℙ | Probability measure on (Ω, 𝒜). |
| Gₜ = (V, Wₜ) | Weighted attack graph at discrete time t. |
| V | Set of vertices (network nodes). |
| Wₜ | Set of weights on time-varying directed risk relationships. |

SPARQL query: identify high-risk hosts under mitigation.

```sparql
PREFIX : <http://iot.net/ns#>  # placeholder IRI; the original does not declare the ':' prefix
SELECT ?host WHERE {
  ?host :isAmplificationSource "suspected" ;
        :underMitigation true ;
        :hasSeverity "high" .
}
```

| Metric | Value | 95% CI |
|---|---|---|
| Accuracy | 94.1% | [93.7, 94.5] |
| FPR | 4.7% | [4.3, 5.1] |
| AUC | 0.978 | ±0.004 |
| τdet | 247 ms | [221, 273] ms |
| ΔH* (peak) | −1.15 bits | N/A |
| r (attack) | 27.4 ± 3.5 | N/A |
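The reference list includes Wilson's score interval [13]. Assuming the accuracy and FPR intervals above are Wilson intervals (the section states neither the method nor the per-metric sample sizes), they can be recomputed from counts of successes and trials with the sketch below; any counts passed in are the reader's, not values from the paper.

```python
import math

def wilson_interval(successes, n, z=1.96):
    """Wilson score interval for a binomial proportion (z = 1.96 for about 95%)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p_hat = successes / n
    denom = 1 + z**2 / n
    centre = (p_hat + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p_hat * (1 - p_hat) / n + z**2 / (4 * n**2)) / denom
    return centre - half, centre + half
```

Unlike the normal-approximation interval, the Wilson interval stays within [0, 1] and remains sensible for rates near 0, such as an FPR of a few percent.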

| Metric | Before | After | Improvement |
|---|---|---|---|
| Routing loops [#] | 9.0 | 2.0 | –78% |
| Max incoming risk (∑w) | 4301 | 2550 | –41% |
| PDR [%] | 81.2 | 96.4 | +18% |
| Avg. loop duration [s] | 18.0 | 5.0 | –72% |

| Architecture | Peak Load [Gb/s] | τmit [s] | Back-end Reduction |
|---|---|---|---|
| Centralized | 8.1 | 7.1 | 0% |
| Distributed | 4.3 | 3.1 | 47% |
| +Caching | 1.2 | 2.0 | 85% |
| (H-DIR)2 | 1.0 | 1.7 | 88% |

| Dataset | Origin | Protocols | Type | Description |
|---|---|---|---|---|
| CIC-DDoS2019 | CIC-IDS Lab | TCP/UDP/ICMP | Network Flow | Simulated DDoS traces with labeled attack categories |
| TON_IoT | UNSW Canberra | TCP/UDP/HTTP | Syslog + Netflow | IoT-oriented dataset combining telemetry, network traffic, and system metrics |
| Edge-IIoTset | Edge-IoT Lab | MQTT, CoAP, HTTP | Multi-protocol | Decentralized edge computing scenarios with embedded attack sequences |

| Platform | Inference Latency | Stack | Remarks |
|---|---|---|---|
| Raspberry Pi 4B | <60 ms | RDF + ARNN | Edge-compatible; supports only one protocol stack (e.g., TCP) at a time. |
| Jetson Nano | <20 ms | RDF + ARNN (GPU) | GPU-accelerated; enables simultaneous multi-protocol inference. |
| AWS t3.medium | <8 ms | Spark + RDF + ARNN | Full-stack deployment including the Spark pipeline, RDF layer, and neural core. |

| Devices | Data (TB) | Latency (ms) | Throughput (Gbps) | ΔH Stability |
|---|---|---|---|---|
| 100 | 0.01 | 45 | 0.5 | Stable |
| 1000 | 0.10 | 70 | 1.1 | Stable |
| 10,000 | 1.0 | 110 | 5.8 | Stable |
| 100,000 | 5.0 | 230 | 19.4 | Stable |
| 1,000,000 | 10.0 | 470 | 36.0 | Slight Drift |

| Attack Type | Target Protocol | Key Threat and Detection Method | Response |
|---|---|---|---|
| SYN Flood | TCP | ΔH entropy + ARNN graph | SYN cookies; adaptive throttling |
| DAO DIO | RPL (IoT) | Routing loops; black-hole detection | Entropy + semantic RDF; graph-based reconfiguration |
| NTP Amplification | UDP/NTP | Bandwidth congestion; saturation profiling | ARNN + LSTM + load profiling; caching; smart filtering; isolation |

| Layer | Governing Equations | Purpose | Key Tunables |
|---|---|---|---|
| Entropy Monitor | Alert when ΔH = H − H_baseline crosses the threshold θ | Fast, feature-agnostic anomaly flagging | Feature set F, window width w, threshold θ |
| Adaptive Random Neural Network (ARNN) | Weight update: wᵢⱼ ← wᵢⱼ − η ∂L_total/∂wᵢⱼ, where L_total = αL_class + βL_graph | Learns normal propagation patterns and updates/estimates attack graph edges in real time | Learning rate η, α/β balance, number of hidden units |
| Network-Attack Graph (NAG) | Adjacency matrix W = [wᵢⱼ]; attack path probability P_attack(Nᵢ → Nₖ) = ∏ₗ w(Nₗ → Nₗ₊₁) over consecutive nodes of the path; critical nodes N_crit = {i : ∑ⱼ wᵢⱼ > γ} | Predicts likely propagation paths and identifies “hot” nodes to quarantine | Risk cut-off γ, number of top-k paths tracked |
| Load balancing/caching (UDP amplification) | Centralized load: L = ∑ᵢ Rᵢ; per-server load with cache: Lⱼ = (1 − C) | Explains how anycast and edge caching reduce the traffic seen by each origin server | Cache ratio C, number of servers S |

| Method | Latency (ms) | AUC | F1-Score | Explainability (Entropy) | Semantic Reasoning | Real-Time | Ref. |
|---|---|---|---|---|---|---|---|
| Spark IDS | 950 | 0.91 | 0.87 | ✗ | ✗ | ✓ | [M1] |
| Kitsune-AE | 670 | 0.93 | 0.89 | ✗ | ✗ | ✓ | [M2] |
| Isolation Forest | 720 | 0.91 | 0.86 | ✗ | ✗ | ✓ | [M3] |
| Gated RNN | 580 | 0.94 | 0.88 | ✓ | ✗ | ✓ | [M4] |
| (H-DIR)2 | 247 | 0.98 | 0.95 | ✓ | ✓ | ✓ | This work |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Tosi, D.; Pazzi, R. (H-DIR)2: A Scalable Entropy-Based Framework for Anomaly Detection and Cybersecurity in Cloud IoT Data Centers. Sensors 2025, 25, 4841. https://doi.org/10.3390/s25154841