A Perspective on Quality Evaluation for AI-Generated Videos

Abstract

1. Introduction

2. AIGC Video Generation

3. Text-to-Video Quality Assessment Benchmarks

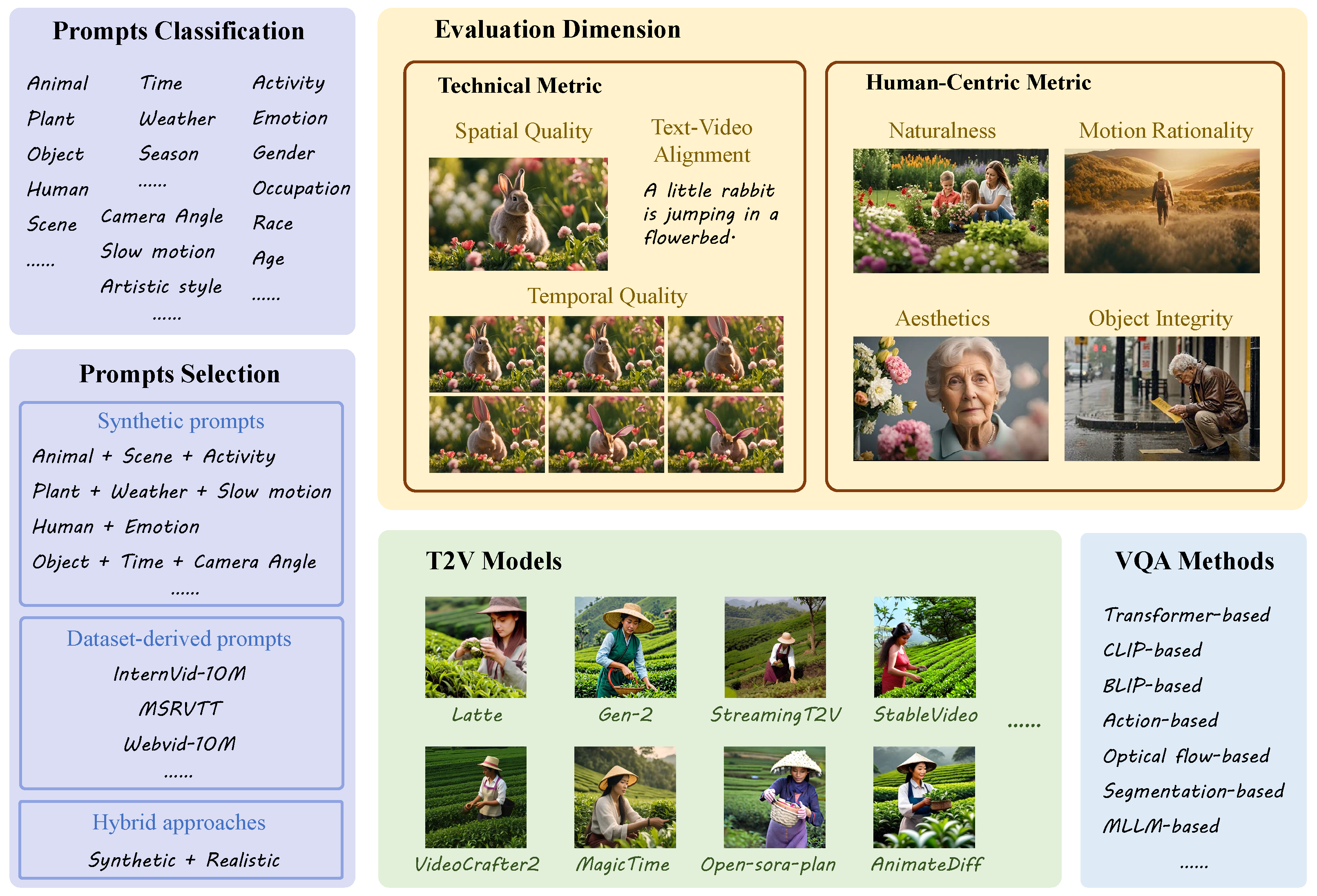

3.1. Prompts Selection

3.2. Evaluation Dimensions

3.2.1. General Evaluation Dimensions

3.2.2. Special Content Evaluation Dimensions

3.3. Dataset Descriptions

4. Methodologies for AIGC Video Quality Assessment

4.1. Traditional UGC Video Quality Assessment

4.2. AIGC Video Quality Assessment

4.3. Technical Trends on MLLMs

4.3.1. Empirical Superiority of MLLM–Based Metrics

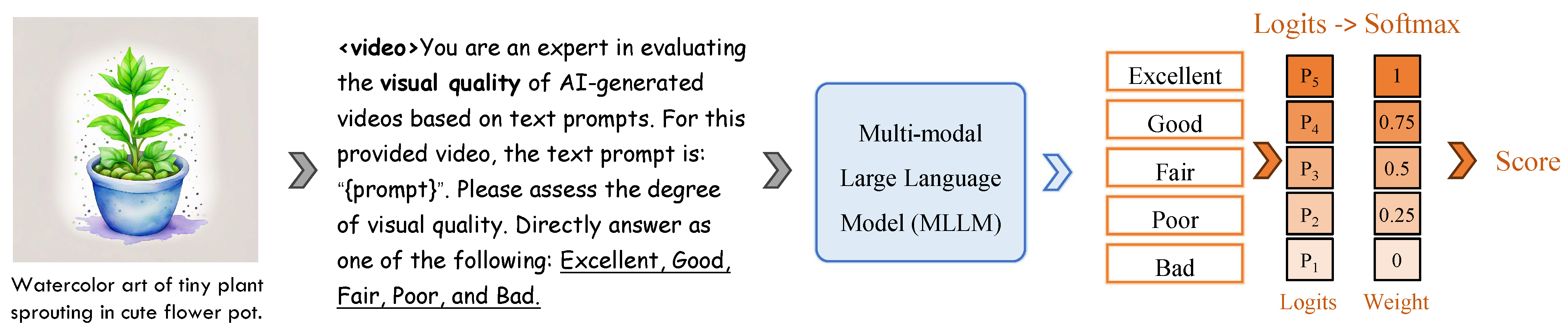

- Backbone freezing and partial fine-tuning. The entire vision encoder is frozen. We fine-tune only the language model. This choice preserves low-level perceptual features while allowing the linguistic pathway to learn a quality-aware vocabulary.

- Discretised quality tokens. Continuous mean-opinion scores (MOSs) are mapped to five ordinal tokens: <Excellent> (5), <Good> (4), <Fair> (3), <Poor> (2), and <Bad> (1). During training the model is asked, via a masked-language objective, to predict the correct token given the video frames and their generating prompt.

- Score reconstruction at inference. Let be the softmax probabilities over the five tokens. The final quality estimate is the expectation , i.e., a weighted sum where token weights correspond to their ordinal ranks.

- Uniform frame sampling. Each video is temporally normalized to 32 frames. For clips shorter than 32 frames, we use all available frames; longer clips are uniformly sub-sampled without replacement. Frames are resized to and packed into a single visual sequence that the frozen encoder processes in one forward pass.

- Spatial fidelity. On LGVQ, the leading CNN metric LIQE attains an SRCC of 0.721, whereas Ovis2 and QwenVL2.5 push the score to 0.751 and 0.776, respectively—an absolute gain of 0.03–0.06 (4–8%). A comparable improvement is observed on FETV (0.799 vs. 0.832–0.854).

- Temporal coherence. Motion-aware CNNs such as SimpleVQA plateau at 0.857 SRCC on LGVQ; QwenVL2.5 elevates this to 0.893. On FETV, every MLLM surpasses the best CNN baseline (FastVQA, 0.847), again converging near 0.893.

- Prompt consistency. Alignment is the most challenging axis. Frame-level VLM scores (e.g., CLIPScore) reach only 0.446 SRCC on LGVQ. MLLMs close over one-third of that gap, with DeepSeek-VL2 achieving 0.551; on FETV the margin widens from 0.607 to 0.747, a 23% relative lift.

4.3.2. Roadmap for MLLM-Centric VQA Pipelines

- Multimodal fusion at scale. Future assessors will ingest synchronized visual, textual, and motion tokens, leveraging backbones such as CLIP [101], QwenVL [6], InternVL [7], and GPT-4V [102]. A single forward pass will jointly rate spatial fidelity, temporal smoothness, and prompt faithfulness, eliminating the need for ad hoc score aggregation.

- Data-efficient specialization. Domain shifts—new genres, unseen distortion types, or language locales—will be handled by lightweight adapters: prompt engineering, LoRA fine-tuning, and semi-supervised self-distillation. These techniques cut annotation cost by orders of magnitude while preserving the zero-shot flexibility of the frozen backbone.

- Standardization and deployment. Given the quantitative edge illustrated in Table 5, we expect major toolkits to embed an MLLM core within the next research cycle. Stand-alone feature-engineering pipelines will be relegated to legacy status, much like PSNR after the advent of SSIM.

4.3.3. Limitations

5. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Namani, Y.; Reghioua, I.; Bendiab, G.; Labiod, M.A.; Shiaeles, S. DeepGuard: Identification and Attribution of AI-Generated Synthetic Images. Electronics 2025, 14, 665. [Google Scholar] [CrossRef]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the Deep Residual Learning for Image Recognition, Las Vegas, CA, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Carreira, J.; Zisserman, A. Quo vadis, action recognition? A new model and the kinetics dataset. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6299–6308. [Google Scholar]

- Feichtenhofer, C.; Fan, H.; Malik, J.; He, K. SlowFast Networks for Video Recognition. In Proceedings of the SlowFast Networks for Video Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 6201–6210. [Google Scholar] [CrossRef]

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. arXiv 2021, arXiv:2010.11929. [Google Scholar]

- Wang, P.; Bai, S.; Tan, S.; Wang, S.; Fan, Z.; Bai, J.; Chen, K.; Liu, X.; Wang, J.; Ge, W.; et al. Qwen2-vl: Enhancing vision-language model’s perception of the world at any resolution. arXiv 2024, arXiv:2409.12191. [Google Scholar]

- Chen, Z.; Wu, J.; Wang, W.; Su, W.; Chen, G.; Xing, S.; Zhong, M.; Zhang, Q.; Zhu, X.; Lu, L.; et al. Internvl: Scaling up vision foundation models and aligning for generic visual-linguistic tasks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 24185–24198. [Google Scholar]

- Yang, Z.; Li, L.; Lin, K.; Wang, J.; Lin, C.C.; Liu, Z.; Wang, L. The dawn of lmms: Preliminary explorations with gpt-4v (ision). arXiv 2023, arXiv:2309.17421. [Google Scholar]

- Wang, J.; Duan, H.; Zhai, G.; Wang, J.; Min, X. AIGV-Assessor: Benchmarking and Evaluating the Perceptual Quality of Text-to-Video Generation with LMM. arXiv 2024, arXiv:2411.17221. [Google Scholar]

- Zhang, Z.; Kou, T.; Wang, S.; Li, C.; Sun, W.; Wang, W.; Li, X.; Wang, Z.; Cao, X.; Min, X.; et al. Q-Eval-100K: Evaluating Visual Quality and Alignment Level for Text-to-Vision Content. arXiv 2025, arXiv:2503.02357. [Google Scholar]

- Zhang, Z.; Jia, Z.; Wu, H.; Li, C.; Chen, Z.; Zhou, Y.; Sun, W.; Liu, X.; Min, X.; Lin, W.; et al. Q-Bench-Video: Benchmarking the Video Quality Understanding of LMMs. arXiv 2025, arXiv:2409.20063. [Google Scholar]

- Wu, C.; Liang, J.; Ji, L.; Yang, F.; Fang, Y.; Jiang, D.; Duan, N. Nüwa: Visual synthesis pre-training for neural visual world creation. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 720–736. [Google Scholar]

- Liang, J.; Wu, C.; Hu, X.; Gan, Z.; Wang, J.; Wang, L.; Liu, Z.; Fang, Y.; Duan, N. Nuwa-infinity: Autoregressive over autoregressive generation for infinite visual synthesis. Adv. Neural Inf. Process. Syst. 2022, 35, 15420–15432. [Google Scholar]

- Hong, W.; Ding, M.; Zheng, W.; Liu, X.; Tang, J. CogVideo: Large-scale Pretraining for Text-to-Video Generation via Transformers. In Proceedings of the Eleventh International Conference on Learning Representations, Lisbon, Portugal, 10–14 October 2022. [Google Scholar]

- Villegas, R.; Babaeizadeh, M.; Kindermans, P.J.; Moraldo, H.; Zhang, H.; Saffar, M.T.; Castro, S.; Kunze, J.; Erhan, D. Phenaki: Variable length video generation from open domain textual descriptions. In Proceedings of the International Conference on Learning Representations, New Orleans, LA, USA, 28 November–9 December 2022. [Google Scholar]

- Bruce, J.; Dennis, M.; Edwards, A.; Parker-Holder, J.; Shi, Y.; Hughes, E.; Lai, M.; Mavalankar, A.; Steigerwald, R.; Apps, C.; et al. Genie: Generative Interactive Environments. arXiv 2024, arXiv:2402.15391. [Google Scholar]

- Ge, S.; Hayes, T.; Yang, H.; Yin, X.; Pang, G.; Jacobs, D.; Huang, J.B.; Parikh, D. Long video generation with time-agnostic vqgan and time-sensitive transformer. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 102–118. [Google Scholar]

- Ho, J.; Salimans, T.; Gritsenko, A.; Chan, W.; Norouzi, M.; Fleet, D.J. Video diffusion models. Adv. Neural Inf. Process. Syst. 2022, 35, 8633–8646. [Google Scholar]

- He, Y.; Yang, T.; Zhang, Y.; Shan, Y.; Chen, Q. Latent video diffusion models for high-fidelity long video generation. arXiv 2022, arXiv:2211.13221. [Google Scholar]

- Wu, J.Z.; Ge, Y.; Wang, X.; Lei, S.W.; Gu, Y.; Shi, Y.; Hsu, W.; Shan, Y.; Qie, X.; Shou, M.Z. Tune-a-video: One-shot tuning of image diffusion models for text-to-video generation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 7623–7633. [Google Scholar]

- Zhou, D.; Wang, W.; Yan, H.; Lv, W.; Zhu, Y.; Feng, J. Magicvideo: Efficient video generation with latent diffusion models. arXiv 2022, arXiv:2211.11018. [Google Scholar]

- Zeng, Y.; Wei, G.; Zheng, J.; Zou, J.; Wei, Y.; Zhang, Y.; Li, H. Make pixels dance: High-dynamic video generation. arXiv 2023, arXiv:2311.10982. [Google Scholar]

- Chen, H.; Zhang, Y.; Cun, X.; Xia, M.; Wang, X.; Weng, C.; Shan, Y. Videocrafter2: Overcoming data limitations for high-quality video diffusion models. arXiv 2024, arXiv:2401.09047. [Google Scholar]

- Chen, H.; Xia, M.; He, Y.; Zhang, Y.; Cun, X.; Yang, S.; Xing, J.; Liu, Y.; Chen, Q.; Wang, X.; et al. Videocrafter1: Open diffusion models for high-quality video generation. arXiv 2023, arXiv:2310.19512. [Google Scholar]

- Singer, U.; Polyak, A.; Hayes, T.; Yin, X.; An, J.; Zhang, S.; Hu, Q.; Yang, H.; Ashual, O.; Gafni, O.; et al. Make-a-video: Text-to-video generation without text-video data. arXiv 2022, arXiv:2209.14792. [Google Scholar]

- Ho, J.; Chan, W.; Saharia, C.; Whang, J.; Gao, R.; Gritsenko, A.; Kingma, D.P.; Poole, B.; Norouzi, M.; Fleet, D.J.; et al. Imagen Video: High Definition Video Generation with Diffusion Models. arXiv 2022, arXiv:2210.02303. [Google Scholar]

- Khachatryan, L.; Movsisyan, A.; Tadevosyan, V.; Henschel, R.; Wang, Z.; Navasardyan, S.; Shi, H. Text2video-zero: Text-to-image diffusion models are zero-shot video generators. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 15954–15964. [Google Scholar]

- Yin, S.; Wu, C.; Yang, H.; Wang, J.; Wang, X.; Ni, M.; Yang, Z.; Li, L.; Liu, S.; Yang, F.; et al. Nuwa-xl: Diffusion over diffusion for extremely long video generation. arXiv 2023, arXiv:2303.12346. [Google Scholar]

- Pixverse. Available online: https://app.pixverse.ai/ (accessed on 8 July 2025).

- Klingai. Available online: https://klingai.kuaishou.com/ (accessed on 8 July 2025).

- Kong, W.; Tian, Q.; Zhang, Z.; Min, R.; Dai, Z.; Zhou, J.; Xiong, J.; Li, X.; Wu, B.; Zhang, J.; et al. HunyuanVideo: A Systematic Framework For Large Video Generative Models. arXiv 2025, arXiv:2412.03603. [Google Scholar]

- HaCohen, Y.; Chiprut, N.; Brazowski, B.; Shalem, D.; Moshe, D.; Richardson, E.; Levin, E.; Shiran, G.; Zabari, N.; Gordon, O.; et al. LTX-Video: Realtime Video Latent Diffusion. arXiv 2024, arXiv:2501.00103. [Google Scholar]

- Team, G. Mochi 1. 2024. Available online: https://github.com/genmoai/models (accessed on 8 July 2025).

- Haiper. Available online: https://haiper.ai/ (accessed on 8 July 2025).

- Yang, Z.; Teng, J.; Zheng, W.; Ding, M.; Huang, S.; Xu, J.; Yang, Y.; Hong, W.; Zhang, X.; Feng, G.; et al. CogVideoX: Text-to-Video Diffusion Models with An Expert Transformer. arXiv 2024, arXiv:2408.06072. [Google Scholar]

- Jimeng. Available online: https://jimeng.jianying.com/ (accessed on 8 July 2025).

- Esser, P.; Chiu, J.; Atighehchian, P.; Granskog, J.; Germanidis, A. Structure and Content-Guided Video Synthesis with Diffusion Models. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 7346–7356. [Google Scholar]

- Ying. Available online: https://chatglm.cn/video (accessed on 8 July 2025).

- Ma, X.; Wang, Y.; Jia, G.; Chen, X.; Liu, Z.; Li, Y.F.; Chen, C.; Qiao, Y. Latte: Latent Diffusion Transformer for Video Generation. arXiv 2024, arXiv:2401.03048. [Google Scholar] [CrossRef]

- Yuan, S.; Huang, J.; Shi, Y.; Xu, Y.; Zhu, R.; Lin, B.; Cheng, X.; Yuan, L.; Luo, J. MagicTime: Time-lapse Video Generation Models as Metamorphic Simulators. arXiv 2024, arXiv:2404.05014. [Google Scholar] [CrossRef]

- Henschel, R.; Khachatryan, L.; Hayrapetyan, D.; Poghosyan, H.; Tadevosyan, V.; Wang, Z.; Navasardyan, S.; Shi, H. StreamingT2V: Consistent, Dynamic, and Extendable Long Video Generation from Text. arXiv 2024, arXiv:2403.14773. [Google Scholar]

- Lin, B. Open-Sora-Plan. GitHub. 2024. Available online: https://zenodo.org/records/10948109 (accessed on 8 July 2025).

- Sora. Available online: https://sora.com/ (accessed on 8 July 2025).

- StableVideo. Available online: https://stability.ai/stable-video (accessed on 8 July 2025).

- Wang, F.Y.; Huang, Z.; Shi, X.; Bian, W.; Song, G.; Liu, Y.; Li, H. AnimateLCM: Accelerating the Animation of Personalized Diffusion Models and Adapters with Decoupled Consistency Learning. arXiv 2024, arXiv:2402.00769. [Google Scholar] [CrossRef]

- Guo, Y.; Yang, C.; Rao, A.; Liang, Z.; Wang, Y.; Qiao, Y.; Agrawala, M.; Lin, D.; Dai, B. AnimateDiff: Animate Your Personalized Text-to-Image Diffusion Models without Specific Tuning. In Proceedings of the International Conference on Learning Representations, Vienna, Austria, 7–11 May 2024. [Google Scholar]

- Pika. Available online: https://pika.art/ (accessed on 8 July 2025).

- Zhang, D.J.; Wu, J.Z.; Liu, J.W.; Zhao, R.; Ran, L.; Gu, Y.; Gao, D.; Shou, M.Z. Show-1: Marrying Pixel and Latent Diffusion Models for Text-to-Video Generation. arXiv 2023, arXiv:2309.15818. [Google Scholar] [CrossRef]

- Mullan, J.; Crawbuck, D.; Sastry, A. Hotshot-XL. 2023. Available online: https://huggingface.co/hotshotco/Hotshot-XL (accessed on 11 October 2023).

- Wang, Y.; Chen, X.; Ma, X.; Zhou, S.; Huang, Z.; Wang, Y.; Yang, C.; He, Y.; Yu, J.; Yang, P.; et al. LAVIE: High-Quality Video Generation with Cascaded Latent Diffusion Models. IJCV 2024, 133, 3059–3078. [Google Scholar] [CrossRef]

- Zeroscope. Available online: https://huggingface.co/cerspense/zeroscope_v2_576w (accessed on 30 August 2023).

- Luo, Z.; Chen, D.; Zhang, Y.; Huang, Y.; Wang, L.; Shen, Y.; Zhao, D.; Zhou, J.; Tan, T. Notice of Removal: VideoFusion: Decomposed Diffusion Models for High-Quality Video Generation. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 10209–10218. [Google Scholar] [CrossRef]

- Wang, J.; Yuan, H.; Chen, D.; Zhang, Y.; Wang, X.; Zhang, S. Modelscope text-to-video technical report. arXiv 2023, arXiv:2308.06571. [Google Scholar]

- Ho, J.; Jain, A.; Abbeel, P. Denoising diffusion probabilistic models. Adv. Neural Inf. Process. Syst. 2020, 33, 6840–6851. [Google Scholar]

- Betker, J.; Goh, G.; Jing, L.; Brooks, T.; Wang, J.; Li, L.; Ouyang, L.; Zhuang, J.; Lee, J.; Guo, Y.; et al. Improving image generation with better captions. Comput. Sci. 2023, 2, 8. [Google Scholar]

- Zhang, L.; Rao, A.; Agrawala, M. Adding conditional control to text-to-image diffusion models. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 3836–3847. [Google Scholar]

- Zhang, C.; Zhang, C.; Zhang, M.; Kweon, I.S. Text-to-image diffusion model in generative AI: A survey. arXiv 2023, arXiv:2303.07909. [Google Scholar]

- Ji, P.; Xiao, C.; Tai, H.; Huo, M. T2vbench: Benchmarking temporal dynamics for text-to-video generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–18 June 2024; pp. 5325–5335. [Google Scholar]

- Sun, K.; Huang, K.; Liu, X.; Wu, Y.; Xu, Z.; Li, Z.; Liu, X. T2v-compbench: A comprehensive benchmark for compositional text-to-video generation. arXiv 2024, arXiv:2407.14505. [Google Scholar]

- Chen, Z.; Sun, W.; Tian, Y.; Jia, J.; Zhang, Z.; Wang, J.; Huang, R.; Min, X.; Zhai, G.; Zhang, W. GAIA: Rethinking Action Quality Assessment for AI-Generated Videos. arXiv 2024, arXiv:2406.06087. [Google Scholar] [CrossRef]

- Zhang, Z.; Sun, W.; Li, X.; Li, Y.; Ge, Q.; Jia, J.; Zhang, Z.; Ji, Z.; Sun, F.; Jui, S.; et al. Human-Activity AGV Quality Assessment: A Benchmark Dataset and an Objective Evaluation Metric. arXiv 2024, arXiv:2411.16619. [Google Scholar] [CrossRef]

- Liao, M.; Ye, Q.; Zuo, W.; Wan, F.; Wang, T.; Zhao, Y.; Wang, J.; Zhang, X. Evaluation of text-to-video generation models: A dynamics perspective. Adv. Neural Inf. Process. Syst. 2025, 37, 109790–109816. [Google Scholar]

- Huang, Z.; He, Y.; Yu, J.; Zhang, F.; Si, C.; Jiang, Y.; Zhang, Y.; Wu, T.; Jin, Q.; Chanpaisit, N.; et al. VBench: Comprehensive Benchmark Suite for Video Generative Models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2024. [Google Scholar]

- Liu, Y.; Li, L.; Ren, S.; Gao, R.; Li, S.; Chen, S.; Sun, X.; Hou, L. FETV: A Benchmark for Fine-Grained Evaluation of Open-Domain Text-to-Video Generation. arXiv 2023, arXiv:2311.01813. [Google Scholar]

- Feng, W.; Li, J.; Saxon, M.; Fu, T.j.; Chen, W.; Wang, W.Y. Tc-bench: Benchmarking temporal compositionality in text-to-video and image-to-video generation. arXiv 2024, arXiv:2406.08656. [Google Scholar]

- Zhang, Z.; Li, X.; Sun, W.; Jia, J.; Min, X.; Zhang, Z.; Li, C.; Chen, Z.; Wang, P.; Ji, Z.; et al. Benchmarking AIGC Video Quality Assessment: A Dataset and Unified Model. arXiv 2024, arXiv:2407.21408. [Google Scholar] [CrossRef]

- Liu, Y.; Cun, X.; Liu, X.; Wang, X.; Zhang, Y.; Chen, H.; Liu, Y.; Zeng, T.; Chan, R.; Shan, Y. EvalCrafter: Benchmarking and Evaluating Large Video Generation Models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 22139–22149. [Google Scholar]

- Kou, T.; Liu, X.; Zhang, Z.; Li, C.; Wu, H.; Min, X.; Zhai, G.; Liu, N. Subjective-aligned dataset and metric for text-to-video quality assessment. In Proceedings of the 32nd ACM International Conference on Multimedia, Lisbon, Portugal, 14–18 October 2024; pp. 7793–7802. [Google Scholar]

- Chivileva, I.; Lynch, P.; Ward, T.E.; Smeaton, A.F. Measuring the Quality of Text-to-Video Model Outputs: Metrics and Dataset. arXiv 2023, arXiv:2309.08009. [Google Scholar]

- Wu, X.; Cheng, I.; Zhou, Z.; Basu, A. RAVA: Region-Based Average Video Quality Assessment. Sensors 2021, 21, 5489. [Google Scholar] [CrossRef]

- Lin, L.; Yang, J.; Wang, Z.; Zhou, L.; Chen, W.; Xu, Y. Compressed Video Quality Index Based on Saliency-Aware Artifact Detection. Sensors 2021, 21, 6429. [Google Scholar] [CrossRef]

- Varga, D. No-Reference Video Quality Assessment Using Multi-Pooled, Saliency Weighted Deep Features and Decision Fusion. Sensors 2022, 22, 2209. [Google Scholar] [CrossRef]

- Gu, F.; Zhang, Z. No-Reference Quality Assessment of Stereoscopic Video Based on Temporal Adaptive Model for Improved Visual Communication. Sensors 2022, 22, 8084. [Google Scholar] [CrossRef] [PubMed]

- Varga, D. No-Reference Video Quality Assessment Using the Temporal Statistics of Global and Local Image Features. Sensors 2022, 22, 9696. [Google Scholar] [CrossRef]

- Salimans, T.; Goodfellow, I.; Zaremba, W.; Cheung, V.; Radford, A.; Chen, X. Improved techniques for training gans. Adv. Neural Inf. Process. Syst. 2016, 29. [Google Scholar]

- Heusel, M.; Ramsauer, H.; Unterthiner, T.; Nessler, B.; Hochreiter, S. GANs Trained by a Two Time-Scale Update Rule Converge to a Local Nash Equilibrium. In Proceedings of the Advances in Neural Information Processing Systems; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: New York, NY, USA, 2017; Volume 30. [Google Scholar]

- Unterthiner, T.; van Steenkiste, S.; Kurach, K.; Marinier, R.; Michalski, M.; Gelly, S. Towards Accurate Generative Models of Video: A New Metric Challenges. arXiv 2018, arXiv:1812.01717. [Google Scholar]

- Wu, C.; Huang, L.; Zhang, Q.; Li, B.; Ji, L.; Yang, F.; Sapiro, G.; Duan, N. Godiva: Generating open-domain videos from natural descriptions. arXiv 2021, arXiv:2104.14806. [Google Scholar]

- Hessel, J.; Holtzman, A.; Forbes, M.; Le Bras, R.; Choi, Y. CLIPScore: A Reference-free Evaluation Metric for Image Captioning. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing (EMNLP), Punta Cana, Dominican Republic, 7–11 November 2021; pp. 7514–7528. [Google Scholar]

- Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 7–9 July 2015; Bach, F., Blei, D., Eds.; PMLR: Lille, France, 2015; Volume 37, pp. 448–456. [Google Scholar]

- Zhang, W.; Ma, K.; Zhai, G.; Yang, X. Uncertainty-Aware Blind Image Quality Assessment in the Laboratory and Wild. IEEE Trans. Image Process. 2021, 30, 3474–3486. [Google Scholar] [CrossRef]

- Ke, J.; Wang, Q.; Wang, Y.; Milanfar, P.; Yang, F. MUSIQ: Multi-scale Image Quality Transformer. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Virtual Conference, 11–17 October 2021; pp. 5128–5137. [Google Scholar] [CrossRef]

- Sun, W.; Min, X.; Tu, D.; Ma, S.; Zhai, G. Blind quality assessment for in-the-wild images via hierarchical feature fusion and iterative mixed database training. IEEE J. Sel. Top. Signal Process. 2023, 17, 1178–1192. [Google Scholar] [CrossRef]

- Wang, J.; Chan, K.C.; Loy, C.C. Exploring CLIP for Assessing the Look and Feel of Images. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; Volume 37, pp. 2555–2563. [Google Scholar] [CrossRef]

- Zhang, W.; Zhai, G.; Wei, Y.; Yang, X.; Ma, K. Blind Image Quality Assessment via Vision-Language Correspondence: A Multitask Learning Perspective. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 14071–14081. [Google Scholar]

- Wu, Z.; Chen, X.; Pan, Z.; Liu, X.; Liu, W.; Dai, D.; Gao, H.; Ma, Y.; Wu, C.; Wang, B.; et al. DeepSeek-VL2: Mixture-of-Experts Vision-Language Models for Advanced Multimodal Understanding. arXiv 2024, arXiv:2412.10302. [Google Scholar]

- Lu, S.; Li, Y.; Chen, Q.G.; Xu, Z.; Luo, W.; Zhang, K.; Ye, H.J. Ovis: Structural embedding alignment for multimodal large language model. arXiv 2024, arXiv:2405.20797. [Google Scholar] [CrossRef]

- Bai, S.; Chen, K.; Liu, X.; Wang, J.; Ge, W.; Song, S.; Dang, K.; Wang, P.; Wang, S.; Tang, J.; et al. Qwen2.5-VL Technical Report. arXiv 2025, arXiv:2502.13923. [Google Scholar] [CrossRef]

- Korhonen, J. Two-Level Approach for No-Reference Consumer Video Quality Assessment. IEEE Trans. Image Process. 2019, 28, 5923–5938. [Google Scholar] [CrossRef]

- Tu, Z.; Yu, X.; Wang, Y.; Birkbeck, N.; Adsumilli, B.; Bovik, A.C. RAPIQUE: Rapid and Accurate Video Quality Prediction of User Generated Content. IEEE Open J. Signal Process. 2021, 2, 425–440. [Google Scholar] [CrossRef]

- Li, D.; Jiang, T.; Jiang, M. Quality Assessment of In-the-Wild Videos. In Proceedings of the 27th ACM International Conference on Multimedia (MM ’19), Nice, France, 21–25 October 2019; pp. 2351–2359. [Google Scholar] [CrossRef]

- Sun, W.; Min, X.; Lu, W.; Zhai, G. A Deep Learning Based No-Reference Quality Assessment Model for UGC Videos. In Proceedings of the 30th ACM International Conference on Multimedia, Lisbon, Portugal, 10–14 October 2022; pp. 856–865. [Google Scholar]

- Wu, H.; Chen, C.; Hou, J.; Liao, L.; Wang, A.; Sun, W.; Yan, Q.; Lin, W. FAST-VQA: Efficient End-to-End Video Quality Assessment with Fragment Sampling. In Proceedings of the Computer Vision—ECCV 2022: 17th European Conference, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022. Proceedings, Part VI. pp. 538–554. [Google Scholar] [CrossRef]

- Wu, H.; Zhang, E.; Liao, L.; Chen, C.; Hou, J.H.; Wang, A.; Sun, W.S.; Yan, Q.; Lin, W. Exploring Video Quality Assessment on User Generated Contents from Aesthetic and Technical Perspectives. In Proceedings of the International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023. [Google Scholar]

- Li, J.; Li, D.; Xiong, C.; Hoi, S. BLIP: Bootstrapping Language-Image Pre-Training for Unified Vision-Language Understanding and Generation. In Proceedings of the ICML, Baltimore, MD, USA, 17–23 July 2022. [Google Scholar]

- Wang, Y.; Li, K.; Li, Y.; He, Y.; Huang, B.; Zhao, Z.; Zhang, H.; Xu, J.; Liu, Y.; Wang, Z.; et al. InternVideo: General Video Foundation Models via Generative and Discriminative Learning. arXiv 2022, arXiv:2212.03191. [Google Scholar] [CrossRef]

- Xu, J.; Liu, X.; Wu, Y.; Tong, Y.; Li, Q.; Ding, M.; Tang, J.; Dong, Y. ImageReward: Learning and Evaluating Human Preferences for Text-to-Image Generation. In Proceedings of the Advances in Neural Information Processing Systems, New Orleans, NJ, USA, 10–16 December 2023. [Google Scholar]

- Kirstain, Y.; Poliak, A.; Singer, U.; Levy, O. Pick-a-Pic: An Open Dataset of User Preferences for Text-to-Image Generation. In Proceedings of the Advances in Neural Information Processing Systems, New Orleans, NJ, USA, 10–16 December 2023; Volume 36. [Google Scholar]

- Wu, X.; Hao, Y.; Sun, K.; Chen, Y.; Zhu, F.; Zhao, R.; Li, H. Human Preference Score v2: A Solid Benchmark for Evaluating Human Preferences of Text-to-Image Synthesis. arXiv 2023, arXiv:2306.09341. [Google Scholar]

- Wu, H.; Zhang, Z.; Zhang, W.; Chen, C.; Liao, L.; Li, C.; Gao, Y.; Wang, A.; Zhang, E.; Sun, W.; et al. Q-align: Teaching lmms for visual scoring via discrete text-defined levels. arXiv 2023, arXiv:2312.17090. [Google Scholar]

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning Transferable Visual Models From Natural Language Supervision. In Proceedings of the 38th International Conference on Machine Learning (ICML), Virtual, 18–24 July 2021. [Google Scholar]

- OpenAI. ChatGPT (Version 4). 2024. Available online: https://chatgpt.com/?model=gpt-4o (accessed on 8 July 2025).

- Sun, Y.; Min, X.; Zhang, Z.; Gao, Y.; Cao, Y.; Zhai, G. Mitigating Low-Level Visual Hallucinations Requires Self-Awareness: Database, Model and Training Strategy. arXiv 2025, arXiv:2503.20673. [Google Scholar]

| Model | Time | Duration(s) | Resolution | Frames | Open Source |

|---|---|---|---|---|---|

| PixVerse v4 [29] | 2025.02 | 5.0 | 640 × 640 | 150 | ✕ |

| kling v1.6 [30] | 2025.01 | 5.0 | 1920 × 1080 | 120 | ✕ |

| Hunyuan [31] | 2024.12 | 5.4 | 720 × 1280 | 129 | ✓ |

| LTX [32] | 2024.11 | 5.0 | 1280 × 720 | 120 | ✓ |

| mochi-1-preview [33] | 2024.11 | 5.4 | 854 × 480 | 162 | ✓ |

| Haiper [34] | 2024.10 | 5.0 | 720 × 720 | 120 | ✕ |

| CogVideoX1.5-5B [35] | 2024.09 | 5.0 | 1360 × 768 | 80 | ✓ |

| Dreamina [36] | 2024.08 | 5.0 | 1024 × 1024 | 120 | ✕ |

| Gen-3 [37] | 2024.07 | 15.0 | 1920 × 1080 | 360 | ✕ |

| Ying [38] | 2024.07 | 5.0 | 1280 × 720 | 120 | ✕ |

| Latte [39] | 2024.05 | 2.0 | 512 × 512 | 16 | ✓ |

| MagicTime [40] | 2024.04 | 2.1 | 512 × 512 | 48 | ✓ |

| StreamingT2V [41] | 2024.03 | 5.0 | 1280 × 720 | 40 | ✓ |

| open-sora-plan [42] | 2024.03 | 2.7 | 512 × 512 | 65 | ✓ |

| Sora [43] | 2024.02 | 60.0 | 1920 × 1080 | 1440 | ✕ |

| StableVideo [44] | 2024.02 | 4.0 | 1024 × 576 | 96 | ✓ |

| AnimateLCM [45] | 2024.02 | 1.0 | 512 × 512 | 16 | ✓ |

| AnimateDiff [46] | 2024.01 | 2.0 | 512 × 512 | 16 | ✓ |

| VideoCrafter2 [23] | 2024.01 | 1.6 | 1024 × 576 | 16 | ✓ |

| Pika [47] | 2023.12 | 3.0 | 1280 × 720 | 72 | ✕ |

| Show-1 [48] | 2023.10 | 2.4 | 576 × 320 | 29 | ✓ |

| Hotshot-XL [49] | 2023.10 | 2.0 | 672 × 384 | 8 | ✓ |

| LaVie [50] | 2023.09 | 2.4 | 512 × 320 | 61 | ✓ |

| ZeroScope [51] | 2023.06 | 2.0 | 384 × 384 | 16 | ✓ |

| CogVideo [14] | 2022.05 | 2.0 | 480 × 480 | 32 | ✓ |

| Video Fusion [52] | 2023.03 | 2.0 | 256 × 256 | 16 | ✓ |

| Text2Video-Zero [27] | 2023.03 | 3.0 | 512 × 512 | 12 | ✓ |

| text-to-video-synthesis [53] | 2023.03 | 2.0 | 256 × 256 | 16 | ✓ |

| Tune-a-video [12] | 2022.12 | 2.4 | 512 × 512 | 24 | ✓ |

| LVDM [19] | 2022.11 | 2.0 | 256 × 256 | 16 | ✓ |

| NUWA [12] | 2021.11 | 1.0 | 256 × 256 | 8 | ✓ |

| Dimension | Synthetic Prompt | Dataset Derivation | Hybrid Method |

|---|---|---|---|

| Source | Artificial and MLLM generation | Existing data set extraction | Artificial and dataset mixing |

| Focus | Control diversity and reasoning | Real-world relevance | Balance control and authenticity |

| Advantage | Systematic capability testing | Meets real user needs | Balances diversity and practicality |

| Disadvantage | Potential intention mismatch | Inherits datasetbias | High complexity |

| Dataset | Focus | Videos | Prompts | Models | Annotators |

|---|---|---|---|---|---|

| AIGVQA-DB [9] | Perceptual distortions | 36,576 | 1048 | 15 | 120 |

| EvalCrafter [67] | Holistic evaluation | 5600 | 700 | 8 | 7 |

| FETV [64] | Fine-grained fidelity | 2476 | 619 | 4 | 3 |

| GAIA [60] | Action plausibility | 9180 | 400 | 18 | 54 |

| Human-AGVQA [61] | Human artifacts | 3200 | 400 | 8 | 10 |

| T2VQA-DB [68] | Multi-dimensional | 10,000 | 1000 | 9 | 27 |

| T2VBench [58] | Temporal dynamics | 5000 | 1680 | 3 | 3 |

| LGVQ [66] | Spatio-temporal | 2808 | 468 | 6 | 20 |

| DEVIL [62] | Dynamicity | 800 | 800 | 3 | 120 |

| T2V-CompBench [59] | Compositionality | 700 | 700 | 3 | 8 |

| TC-Bench [65] | Temporal | 150 | 150 | 3 | 3 |

| MQT [69] | Semantic, visual quality | 1005 | 201 | 4 | 24 |

| Method | Spatial Quality | Temporal Quality | Text–Video Alignment |

|---|---|---|---|

| AIGV-Assessor [9] | InternViT, SlowFast | InternViT, SlowFast | InternVL2-8B |

| GHVQ [61] | CLIP-based Action Recognition | SlowFast, Action Recognition | CLIP-based |

| EvalCrafter [67] | CLIP-based, Action Recognition | Action-Score, Flow-Score | CLIPScore, SD-Score |

| MQT [69] | BLIP-based, XGBoost | Action Recognition, Optical Flow | BLIP-based |

| VBench [63] | DINO, CLIP-based | Optical Flow | CLIP-based |

| DEVIL [62] | - | Dynamic Range, Optical Flow | - |

| T2VBench [58] | - | Event Dynamics, Action Recognition | Action Recognition, Semantic Consistency |

| TC-Bench [65] | - | Event Dynamics, Temporal Consistency | GPT-4, CLIP-based |

| T2V-CompBench [59] | - | Tracking, Action Recognition | GPT-4, MLLM |

| T2VQA [68] | CLIP-based, Swin Transformer | Motion, Time Dynamics | BLIP-based, CLIP-based |

| FETV [64] | - | SlowFast, CLIP-based | UMTScore, FVD-UMT |

| LGVQ [66] | CLIP-based, Vision Transformer | SlowFast, CLIP | CLIP-based, BLIP-based |

| Aspects | Methods | Parameters | Model Type | LGVQ | FETV | ||||

|---|---|---|---|---|---|---|---|---|---|

| SRCC | KRCC | PLCC | SRCC | KRCC | PLCC | ||||

| Spatial | UNIQUE [81] | 32M | CNN | 0.716 | 0.525 | 0.768 | 0.764 | 0.637 | 0.794 |

| MUSIQ [82] | 27M | CNN | 0.669 | 0.491 | 0.682 | 0.722 | 0.613 | 0.758 | |

| StairIQA [83] | 34M | CNN | 0.701 | 0.521 | 0.737 | 0.806 | 0.643 | 0.812 | |

| CLIP-IQA [84] | 0.3B | VLM | 0.684 | 0.502 | 0.709 | 0.741 | 0.619 | 0.767 | |

| LIQE [85] | 88M | VLM | 0.721 | 0.538 | 0.752 | 0.765 | 0.635 | 0.799 | |

| DeepSeek-VL2 [86] | 4.5B | MLLM | 0.746 | 0.549 | 0.768 | 0.821 | 0.664 | 0.825 | |

| Ovis2 [87] | 8B | MLLM | 0.751 | 0.563 | 0.786 | 0.834 | 0.674 | 0.832 | |

| QwenVL2.5 [88] | 7B | MLLM | 0.776 | 0.582 | 0.802 | 0.855 | 0.692 | 0.854 | |

| InternVL2.5 [7] | 8B | MLLM | 0.769 | 0.572 | 0.797 | 0.849 | 0.692 | 0.845 | |

| Temporal | TLVQM [89] | 23M | CNN | 0.828 | 0.616 | 0.832 | 0.825 | 0.675 | 0.837 |

| RAPIQUE [90] | 28M | CNN | 0.836 | 0.641 | 0.851 | 0.833 | 0.691 | 0.854 | |

| VSFA [91] | 31M | CNN | 0.841 | 0.643 | 0.857 | 0.839 | 0.705 | 0.859 | |

| SimpleVQA [92] | 88M | CNN | 0.857 | 0.659 | 0.867 | 0.852 | 0.726 | 0.862 | |

| FastVQA [93] | 18M | CNN | 0.849 | 0.647 | 0.843 | 0.842 | 0.714 | 0.847 | |

| DOVER [94] | 56M | CNN | 0.867 | 0.672 | 0.878 | 0.868 | 0.731 | 0.881 | |

| DeepSeek-VL2 [86] | 4.5B | MLLM | 0.868 | 0.673 | 0.884 | 0.868 | 0.727 | 0.881 | |

| Ovis2 [87] | 8B | MLLM | 0.888 | 0.689 | 0.903 | 0.872 | 0.737 | 0.886 | |

| QwenVL2.5 [88] | 7B | MLLM | 0.893 | 0.694 | 0.905 | 0.896 | 0.751 | 0.902 | |

| InternVL2.5 [7] | 8B | MLLM | 0.878 | 0.683 | 0.891 | 0.892 | 0.739 | 0.893 | |

| Alignment | CLIPScore [79] | 0.15B | VLM | 0.446 | 0.301 | 0.453 | 0.607 | 0.498 | 0.633 |

| BLIP [95] | 0.14B | VLM | 0.455 | 0.319 | 0.464 | 0.616 | 0.505 | 0.645 | |

| viCLIP [96] | 0.43B | VLM | 0.479 | 0.338 | 0.487 | 0.628 | 0.518 | 0.652 | |

| ImageReward [97] | 0.15B | VLM | 0.498 | 0.344 | 0.499 | 0.657 | 0.519 | 0.687 | |

| PickScore [98] | 0.98B | VLM | 0.501 | 0.353 | 0.515 | 0.669 | 0.533 | 0.708 | |

| HPSv2 [99] | 0.15B | VLM | 0.504 | 0.357 | 0.511 | 0.686 | 0.540 | 0.703 | |

| DeepSeek-VL2 [86] | 4.5B | MLLM | 0.551 | 0.393 | 0.577 | 0.741 | 0.573 | 0.747 | |

| Ovis2 [87] | 8B | MLLM | 0.554 | 0.401 | 0.585 | 0.750 | 0.583 | 0.748 | |

| QwenVL2.5 [88] | 7B | MLLM | 0.571 | 0.410 | 0.592 | 0.762 | 0.595 | 0.764 | |

| InternVL2.5 [7] | 8B | MLLM | 0.555 | 0.396 | 0.583 | 0.744 | 0.582 | 0.745 | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, Z.; Sun, W.; Zhai, G. A Perspective on Quality Evaluation for AI-Generated Videos. Sensors 2025, 25, 4668. https://doi.org/10.3390/s25154668

Zhang Z, Sun W, Zhai G. A Perspective on Quality Evaluation for AI-Generated Videos. Sensors. 2025; 25(15):4668. https://doi.org/10.3390/s25154668

Chicago/Turabian StyleZhang, Zhichao, Wei Sun, and Guangtao Zhai. 2025. "A Perspective on Quality Evaluation for AI-Generated Videos" Sensors 25, no. 15: 4668. https://doi.org/10.3390/s25154668

APA StyleZhang, Z., Sun, W., & Zhai, G. (2025). A Perspective on Quality Evaluation for AI-Generated Videos. Sensors, 25(15), 4668. https://doi.org/10.3390/s25154668