Abstract

Recent breakthroughs in AI-generated content (AIGC) have transformed video creation, enabling systems to translate text, images, or audio into visually compelling stories. Yet reliable evaluation of these machine-generated videos remains elusive, because quality depends not only on spatial fidelity within individual frames but also on temporal coherence across frames and precise semantic alignment with the intended message. Sensor technologies play a foundational role, as they determine the physical plausibility of AIGC outputs. In this perspective, we argue that multimodal large language models (MLLMs) are poised to become the cornerstone of next-generation video quality assessment (VQA). By jointly encoding cues from multiple modalities such as vision, language, sound, and even depth, an MLLM can leverage its powerful language understanding to assess scene composition, motion dynamics, and narrative consistency, overcoming the fragmentation of hand-engineered metrics and the poor generalization of CNN-based methods. Furthermore, we provide a comprehensive analysis of current methodologies for assessing AIGC video quality, covering the evolution of generation models, dataset design, quality dimensions, and evaluation frameworks. Finally, we argue that advances in sensor fusion enable MLLMs to combine low-level physical constraints with high-level semantic interpretation, further improving the accuracy of visual quality assessment.

1. Introduction

The rapid evolution of AI-generated content (AIGC) has reshaped numerous industries [1]. With advances in sensor technology, a growing number of generative models can create highly realistic and rich videos from multimodal inputs such as text, images, videos, and even depth information. These breakthroughs are largely driven by diffusion- and transformer-based generative models, whose capacity for spatio-temporal synthesis now rivals professional production pipelines. Yet, despite these strides, assessing the quality of AIGC videos remains a formidable challenge. For traditional media, quality can often be gauged with well-established full-reference or no-reference metrics. AIGC videos, by contrast, exhibit failure modes that existing measures were never designed to capture: inconsistent motion, object disocclusion, semantic drift between text prompts and visuals, and subtle perceptual artifacts introduced during iterative denoising. Consequently, developing robust and reliable video quality assessment (VQA) methods for AIGC has become a pressing concern for researchers and industry practitioners alike.

Traditional VQA techniques initially relied on full-reference metrics such as PSNR, SSIM, and VMAF. These metrics quantify pixel-level fidelity by comparing a distorted video with a pristine ground truth. They implicitly assume that a reference signal is available and that distortions stem from compression or capture noise. Neither assumption holds for AIGC. Generated videos lack a natural reference, and their artifacts tend to appear as higher-order semantic inconsistencies rather than simple signal degradation. Examples include object disocclusion, identity swaps, and prompt drift. As a result, full-reference indices systematically underestimate or misinterpret the true perceptual impact of generative errors.
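The sketch below makes the reference dependency concrete. It computes PSNR, one of the full-reference metrics named above, using its standard definition. For brevity it assumes frames are flattened into lists of 8-bit intensities. The function cannot be called without a `reference` argument, which is exactly what a generated clip lacks.

```python
import math

def psnr(reference, distorted, max_value=255.0):
    """Peak signal-to-noise ratio (dB) between two equally sized frames.

    Frames are flat sequences of pixel intensities. The pristine `reference`
    is mandatory: without it the metric is undefined.
    """
    if not reference or len(reference) != len(distorted):
        raise ValueError("frames must be non-empty and of equal size")
    mse = sum((r - d) ** 2 for r, d in zip(reference, distorted)) / len(reference)
    if mse == 0:
        return math.inf  # identical frames: no measurable distortion
    return 10.0 * math.log10(max_value ** 2 / mse)

ref = [100, 120, 140, 160]
brighter = [110, 130, 150, 170]  # uniform +10 shift: a pure signal-level change
print(round(psnr(ref, brighter), 2))  # 28.13
```

PSNR only registers how far each pixel moved from its counterpart. A generated video with a swapped identity but no reference gives it nothing to compare against.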

To remove the dependency on reference clips, the field then embraced no-reference (blind) CNN-based and ViT-based models. Architectures built on ResNet [2], I3D [3], SlowFast [4], or Vision Transformers [5] learn to regress quality scores directly from visual content, capturing texture, motion blur, and compression artifacts more flexibly than handcrafted indices. Nevertheless, these networks are typically trained on UGC or broadcast distortions and thus struggle with AIGC-specific failure modes: they have limited awareness of text–video alignment, often treat semantic implausibility as acceptable variation, and require large amounts of labeled data that are costly to obtain for every new generative paradigm.

The latest stage is marked by multimodal large language models (MLLMs), such as Qwen-VL [6], InternVL [7], and GPT-4V [8], which jointly embed appearance, motion, and language semantics. By conditioning on the original prompt, MLLMs holistically evaluate spatial fidelity, temporal coherence, and textual alignment within a single pre-trained backbone, achieving correlations with human opinion that rival or surpass earlier methods while providing interpretable language rationales. Complementing this shift, unsupervised and semi-supervised strategies—e.g., synthetic perturbations, self-distillation, and lightweight adapters—dramatically reduce the need for exhaustive subjective labels, making MLLM-centric pipelines scalable and adaptable across domains. Figure 1 summarizes the historical evolution of AIGC-VQA.

Figure 1.

Illustration of the development eras of AIGC video quality assessment.

Against this backdrop, we advance a central perspective: MLLM-based methods will supersede handcrafted metrics and purely CNN- and ViT-based architectures, becoming the de facto standard for AIGC VQA. This claim rests on three observations. (1) MLLMs unify vision, language, and temporal reasoning within a single pre-trained backbone, allowing simultaneous evaluation of spatial fidelity, motion continuity, and semantic alignment. (2) Prompt engineering and lightweight adapters enable rapid specialization to new distortion types or downstream tasks with minimal additional data. (3) Early benchmarks on datasets such as AIGV-Assessor [9], Q-Eval-100K [10], and Q-Bench-Video [11] already show that MLLM predictions correlate with mean opinion scores better than previous state-of-the-art metrics. They also provide natural-language rationales that enhance interpretability.

2. AIGC Video Generation

With the rapid development of artificial intelligence, video generation, especially text-to-video (T2V) generation, has made significant progress in recent years, as shown in Table 1. Broadly, recent T2V models fall into two main frameworks: autoregressive-based [12,13,14,15,16,17] and diffusion-based [18,19,20,21,22,23,24,25,26,27,28] approaches. Each framework embodies a different paradigm for modeling the complex spatial and temporal dynamics inherent in video synthesis, and each presents its own advantages and challenges.

Table 1.

Technical specifications of mainstream T2V models.

Following the early GAN/VAE-based methods, research shifted towards autoregressive-based models, which leverage the sequential modeling capabilities of transformers. For instance, NÜWA [12] uses a 3D transformer encoder–decoder with a nearby attention mechanism for high-quality video synthesis. NÜWA-Infinity [13] presents a “render-and-optimize” strategy for infinite visual generation. CogVideo [14] inherits pre-trained weights from a text-to-image model and employs a multi-frame-rate hierarchical training strategy to enhance text–video alignment. Phenaki [15] generates variable-length videos, using a C-ViViT encoder–decoder to compress video into discrete tokens. Autoregressive models benefit from the powerful sequence modeling of transformers. However, their performance is highly contingent on the quality of the discrete tokenization, and they can struggle to preserve fine-grained details if the VQ-VAE tokenizer is not sufficiently expressive.
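The tokenization bottleneck can be illustrated with the nearest-codeword lookup at the heart of VQ-VAE-style tokenizers. This is a minimal sketch under stated assumptions. The three-entry `codebook` and the 2-D patch features are hypothetical. Real tokenizers such as C-ViViT learn the codebook jointly with the encoder and decoder.

```python
def quantize(vectors, codebook):
    """Map each continuous feature vector to the index of its nearest codeword
    (squared Euclidean distance), as a VQ-VAE-style tokenizer does."""
    tokens = []
    for v in vectors:
        dists = [sum((a - b) ** 2 for a, b in zip(v, c)) for c in codebook]
        tokens.append(min(range(len(codebook)), key=dists.__getitem__))
    return tokens

def dequantize(tokens, codebook):
    """Reconstruct features by codebook lookup; detail finer than the codebook is lost."""
    return [codebook[t] for t in tokens]

codebook = [[0.0, 0.0], [1.0, 1.0], [0.0, 1.0]]  # hypothetical 3-entry codebook
patches = [[0.1, -0.1], [0.9, 1.2], [0.2, 0.8]]  # hypothetical patch features
tokens = quantize(patches, codebook)
print(tokens)  # [0, 1, 2]
```

Some detail separates each patch from its nearest codeword, for example the offset of `[0.1, -0.1]` from `[0.0, 0.0]`. That detail is discarded before the transformer ever sees the token sequence. This is why a weak tokenizer caps fine-grained fidelity.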

More recently, diffusion-based models [54] have emerged as the dominant framework for T2V generation [55,56,57]. These methods [18] extend the principles of denoising diffusion probabilistic models (DDPMs) from the image domain to video. Diffusion-based T2V models include AnimateDiff [46], VideoCrafter [24], Text2Video-Zero [27], Tune-a-Video [20], and LVDM [19]. They typically learn a latent representation with an autoencoder and then iteratively denoise a random noise sample into a coherent video.
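The iterative denoising described above inverts a fixed forward noising process. The sketch below writes out that closed-form forward step, x_t = sqrt(alpha_bar_t) x_0 + sqrt(1 - alpha_bar_t) eps. It assumes the linear beta schedule from DDPM (1e-4 to 0.02 over 1000 steps). The learned reverse (denoising) network is omitted. The three-value `latent` is a hypothetical stand-in for a flattened video latent.

```python
import math
import random

def linear_alpha_bars(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative products alpha_bar_t = prod_{s<=t} (1 - beta_s) for a linear beta schedule."""
    alpha_bars, prod = [], 1.0
    for t in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * t / (num_steps - 1)
        prod *= 1.0 - beta
        alpha_bars.append(prod)
    return alpha_bars

def q_sample(x0, t, alpha_bars, rng):
    """Sample x_t ~ N(sqrt(alpha_bar_t) * x0, (1 - alpha_bar_t) * I) in one step."""
    a = alpha_bars[t]
    return [math.sqrt(a) * x + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0) for x in x0]

alpha_bars = linear_alpha_bars()
rng = random.Random(0)
latent = [0.5, -0.2, 0.8]  # hypothetical stand-in for a video latent
noisy = q_sample(latent, t=999, alpha_bars=alpha_bars, rng=rng)
print(f"alpha_bar at t=999: {alpha_bars[-1]:.2e}")  # near zero: x_t is almost pure noise
```

Generation runs this process backwards, one step at a time. The number of steps is one reason diffusion sampling is expensive.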

The Video Diffusion Model [18] applies diffusion to video generation using a 3D U-Net architecture combined with temporal attention. To reduce computational complexity, LVDM [19] introduces a hierarchical latent video diffusion model. Gen-1 [37] is a structure- and content-guided video diffusion model trained on monocular depth estimates to enable control over structure and content. Tune-a-Video [20] employs a spatiotemporal attention mechanism to maintain frame consistency. VideoCrafter1 [24] uses a video VAE and a video latent diffusion process to generate videos in a lower-dimensional latent space. NÜWA-XL [28] uses two diffusion models, one to generate keyframes and another to fill in the frames between them. To ensure temporal consistency, these models incorporate additional conditioning mechanisms, such as cross-frame attention or motion guidance, that explicitly account for the relationships between successive frames. Diffusion-based approaches excel at generating high-fidelity frames that closely adhere to the textual description. However, they are often computationally expensive because of the iterative sampling process. They may also require sophisticated strategies to maintain temporal coherence over longer sequences.
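The temporal attention used by these models can be sketched as scaled dot-product self-attention along the time axis at a single spatial location. This minimal version assumes identity query/key/value projections, a single head, and no positional encoding. Actual video diffusion U-Nets learn these projections. Treat it only as an illustration of how information mixes across frames.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def temporal_self_attention(frames):
    """Scaled dot-product self-attention along the time axis.

    `frames` holds one feature vector per frame for a single spatial location.
    Learned query/key/value projections are omitted (identity) for brevity.
    """
    d = len(frames[0])
    out = []
    for q in frames:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in frames]
        weights = softmax(scores)
        # Each output is a convex combination of all frames' features.
        out.append([sum(w * v[i] for w, v in zip(weights, frames)) for i in range(d)])
    return out

jitter = [[0.0], [3.0], [0.0]]  # hypothetical 1-D feature with a spike in frame 2
smoothed = temporal_self_attention(jitter)
print([round(r[0], 2) for r in smoothed])  # [1.0, 3.0, 1.0]
```

After attention, the outer frames carry information from the middle frame. Each output therefore depends on the whole sequence rather than on a single frame, which is what lets these layers couple successive frames.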

The evolution of T2V generation techniques reflects a progression through three stages. Early GAN/VAE-based methods laid the initial foundation by jointly modeling static and dynamic components. Autoregressive-based approaches then exploited the sequential modeling power of transformers. Finally, diffusion-based models have recently achieved state-of-the-art frame quality and conditioning flexibility. Each of these frameworks contributes unique insights and capabilities to the field. Ongoing research continues to explore hybrid strategies that combine their respective strengths to further enhance the fidelity, coherence, and controllability of text-to-video generation.

3. Text-to-Video Quality Assessment Benchmarks

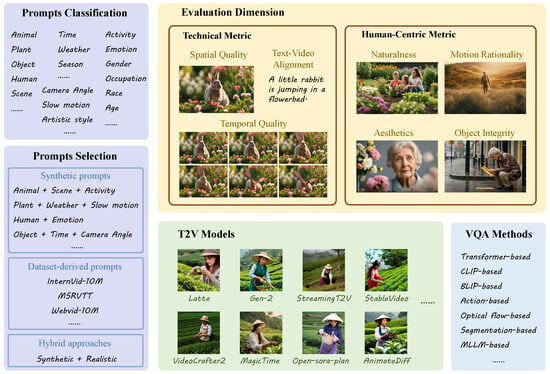

3.1. Prompts Selection

The design of text prompts plays a pivotal role in evaluating text-to-video (T2V) generation models, as it directly impacts the diversity, controllability, and real-world relevance of generated videos. Existing studies have adopted distinct strategies for prompt construction, broadly categorized into synthetic prompts, dataset-derived prompts, and hybrid approaches; the characteristics of each scheme are shown in Table 2.

Table 2.

Comparison of prompt selection schemes.

A significant body of work leverages manually crafted or MLLM-augmented prompts to systematically test model capabilities. For instance, T2VBench [58] synthesizes prompts hierarchically using Wikipedia concepts and LLMs, covering 16 temporal dynamics such as causal chains and geometric transformations. Similarly, T2V-CompBench [59] designs 700 prompts with combinatorial challenges (e.g., attribute binding, multi-object counting) to stress-test compositional reasoning. GAIA [60] and Human-AGVQA [61] focus on human-centric scenarios, curating prompts for body/hand movements and social interactions to assess action plausibility. These approaches prioritize controlled diversity but may lack alignment with real-world user intents.

Other studies extract prompts from existing text–video datasets to ensure real-world relevance. DEVIL [62] draws on MSR-VTT and ActivityNet captions and refines them via GPT-4 and human annotation. It then categorizes the prompts by motion intensity, from static to highly dynamic. AIGV-Assessor [9] combines prompts from MSR-VTT with orthogonal categories (e.g., spatial objects, temporal events) to balance realism and coverage. Such methods benefit from grounded descriptions, but they often inherit dataset biases and limited creativity.

Recent efforts integrate synthetic and real-world prompts to bridge the gap between controllability and authenticity. VBench [63] merges human-designed prompts with dataset-derived examples. It organizes them into eight thematic categories (e.g., animals, architecture) and 16 quality dimensions (e.g., motion smoothness, temporal consistency). FETV [64] augments open-domain datasets with manually authored prompts emphasizing temporal logic (e.g., “a car accelerates while turning left”), balancing complexity and practicality. Notably, T2VQA-DB [68] crowdsources prompts from users and augments them with structured dataset entries, enabling large-scale evaluation of real-world applicability.

Certain frameworks target niche dimensions. TC-Bench [65] designs prompts with explicit start–end states (e.g., “daytime to sunset transition”) to quantify temporal coherence. LGVQ [66] structures prompts around foreground–background interactions to test spatial–temporal disentanglement. EvalCrafter [67] employs LLMs to expand user-provided prompts into fine-grained variations, enhancing coverage of everyday scenarios.

Despite these advances, current methods face challenges in scalability (e.g., small prompt sets in GAIA [60]) and holistic coverage. Most frameworks prioritize either temporal dynamics or compositional accuracy, with few addressing both. Additionally, prompts derived from real-world data often lack granular metadata (e.g., motion intensity labels), limiting their utility for diagnostic evaluation.

3.2. Evaluation Dimensions

The general evaluation dimensions focus on ensuring that all videos maintain high levels of overall quality, spatial consistency, temporal consistency, and accurate alignment with textual descriptions. These dimensions are fundamental to any video generation task, ensuring that the videos are coherent, stable, and consistent across frames and time. On the other hand, the special content evaluation dimensions address the specific challenges associated with generating videos that involve human actions, complex interactions, or dynamic scenes. These dimensions evaluate the plausibility of actions, the accuracy of human representation, and the correct depiction of object relationships and behaviors, which are essential for generating content that is both realistic and faithful to the provided text prompt.

3.2.1. General Evaluation Dimensions

This category encompasses evaluation dimensions applicable to all types of videos, regardless of content, with a focus on overall video quality, spatial consistency, temporal consistency, and the alignment between text prompts and generated video content.

Overall quality assesses the visual fidelity and perceptual quality of the generated video. It considers how coherent and natural the video appears to the viewer, focusing on factors such as clarity, absence of visual artifacts, and overall aesthetic appeal. Several datasets have been designed to evaluate this dimension, including T2VQA [68], LGVQ [66], AIGV-Assessor [9], and Human-AGVQA [61]. They emphasize whether generated videos are realistic and free of perceptual distortions.

Spatial quality focuses on the consistency of objects and scenes in the video, ensuring that spatial relationships are accurately maintained across frames. This includes the positioning and movement of objects, particularly in dynamic or interactive scenes, where objects must remain in context and correctly aligned. Datasets such as FETV [64], LGVQ [66], and AIGV-Assessor [9] treat this dimension as central. They assess how well generated videos maintain spatial consistency and avoid inconsistent depictions of objects.

Temporal quality evaluates the smoothness and consistency of a video over time. It covers the continuity of motion and the smoothness of scene transitions, ensuring that there are no unnatural jumps or flickers. Benchmarks that focus on dynamic scene generation treat this dimension as crucial, particularly for complex or fast-moving scenes. Examples include T2VBench [58], DEVIL [62], TC-Bench [65], and EvalCrafter [67].
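A crude proxy for the flicker component of temporal quality is the mean absolute intensity change between consecutive frames. This proxy is hypothetical and is not the metric of any benchmark cited above. The sketch assumes frames are flattened lists of intensities.

```python
def mean_abs_frame_difference(frames):
    """Average absolute intensity change between consecutive frames.

    `frames` is a list of equally sized frames, each a flat list of intensities.
    Higher values mean more frame-to-frame change, whether flicker or motion.
    """
    if len(frames) < 2:
        return 0.0
    diffs = []
    for prev, cur in zip(frames, frames[1:]):
        diffs.append(sum(abs(a - b) for a, b in zip(prev, cur)) / len(cur))
    return sum(diffs) / len(diffs)

steady = [[100, 100, 100]] * 4
flicker = [[100, 100, 100], [140, 140, 140], [100, 100, 100], [140, 140, 140]]
print(mean_abs_frame_difference(steady), mean_abs_frame_difference(flicker))  # 0.0 40.0
```

Large values arise from legitimate fast motion as well as from flicker. A proxy like this therefore penalizes dynamic content. In practice it must be read alongside a measure of how much motion the prompt calls for.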

Text–video alignment measures how accurately the generated video matches the textual prompt. This dimension evaluates whether the video content faithfully reflects the details described in the prompt, including the accurate representation of objects, actions, and relationships. Datasets such as LGVQ [66], AIGV-Assessor [9], and VBench [63] explicitly assess this alignment in terms of both semantic and visual accuracy. Alignment determines whether the model generates content that matches the provided description.

3.2.2. Special Content Evaluation Dimensions

This category addresses evaluation dimensions tailored to specific types of videos, particularly those involving more specialized content, such as human activities or complex dynamic actions and interactions.

Action plausibility, particularly in videos involving human figures or animals, evaluates the realism and physical feasibility of actions. This dimension assesses whether the actions performed in the video, such as running or jumping, are physically possible and appropriately represented. Datasets such as GAIA [60] and Human-AGVQA [61] also examine the fluidity and naturalness of actions, checking that movements appear realistic and physically plausible. This aspect is critical for assessing action representation in human-centric or dynamic videos.

Human artifacts specifically evaluate the representation of human figures, focusing on aspects such as anatomical accuracy, body movement, and gesture. This dimension assesses whether the model accurately generates human figures with correct body proportions and natural movements. It also examines how well facial expressions and body language are captured, checking that they align with the actions and emotions intended in the text prompt. This dimension is central to Human-AGVQA [61], which targets videos where human activity is the focus. It checks that human figures and their movements are generated authentically.

These special content evaluation dimensions are designed to assess the accuracy and authenticity of content in more complex scenarios, such as videos involving human activities, detailed interactions between objects, or dynamic changes in the scene.

3.3. Dataset Descriptions

Recent advances in text-to-video evaluation have driven the creation of specialized benchmarks addressing distinct aspects of AI-generated video quality. This section systematically analyzes prominent datasets. Table 3 compares these AIGC quality assessment datasets.

Table 3.

Comparative analysis of T2V quality assessment datasets.

DEVIL [62] offers 800 prompts across 19 object and 4 scene classes, stratified into five motion levels from static to highly dynamic. It tests a model’s ability to manage object motion, action diversity, and scene transitions. AIGV-Assessor [9] contains 36,576 videos from 15 text-to-video models, accompanied by 370,000 expert ratings. These ratings cover static fidelity, temporal smoothness, dynamic range, and text alignment through mean-opinion scores and pairwise comparisons. T2VBench [58] provides 1680 temporally rich prompts and 5000 generated videos, enabling evaluation of temporal consistency and complex dynamic rendering via human annotations. EvalCrafter [67] filters 200,000 relevant prompts from 600,000 community submissions, spanning humans, animals, objects, and scenery with style and camera tags. It records user feedback on visual quality, alignment, and motion for model fine-tuning. FETV [64] merges 541 MSR-VTT and WebVid text–video pairs with 78 challenging prompts, supporting fine-grained spatial and temporal assessment of motion, fluids, and lighting. GAIA [60] comprises 9180 clips from 18 systems covering 510 action classes; 54 raters score action fidelity, physical plausibility, and continuity with respect to scene context. Human-AGVQA [61] includes 3200 videos generated from 400 prompts, with 96,000 quality ratings and 160,000 semantic labels. It focuses on action continuity, body coordination, and scene consistency in human activities. LGVQ [66] offers 2808 clips produced by six leading models from 468 prompts, emphasizing complex motion and scene distortion. Its ratings cover frame clarity, temporal coherence, and text congruence. MQT [69] supplies 1005 videos from five models driven by 201 prompts and targets semantic alignment and visual realism, especially artifact detection. TC-Bench [65] pairs 150 prompts with 120 image-to-video samples to examine temporal compositionality across attribute shifts, relational changes, and background transitions. T2V-CompBench [59] offers 700 prompts, divided into seven categories of 100 each, to systematically test multi-object, attribute, and action composition in complex scene generation. T2VQA-DB [68] presents 10,000 videos from nine models based on 1000 prompts. It combines large-language-model judgments with classical metrics, making it the most extensive text-to-video quality evaluation to date.

These datasets provide a wide array of evaluation standards for text-to-video generation, covering everything from basic actions to complex temporal and spatial dynamics. They are invaluable for understanding model performance and guiding improvements in video generation technologies.

4. Methodologies for AIGC Video Quality Assessment

4.1. Traditional UGC Video Quality Assessment

Video quality assessment has been applied in various fields, and most methods [70,71,72,73,74] focus on user-generated content (UGC). However, there is still no widely accepted metric for AIGC videos. Previous video generation studies [12,24,27,49] rely on only a few metrics to evaluate their methods, such as IS [75], FID [76], FVD [77], CLIP [78], CLIPScore [79], and FCS [12]. FID and FVD compare the feature distribution of generated content with that of a set of real images or videos. FID uses Inception [80] features, and FVD uses I3D [3] features. Because they summarize whole distributions, they fail to capture distortion-level and semantic-level quality characteristics. IS [75] does not require reference videos but relies on a pre-trained classification model. Furthermore, motion generation remains a major challenge for current video generation techniques, yet FID [76] and FVD [77] cannot quantify the impact of temporal-level distortions on visual quality. CLIP-based methods such as CLIPScore [79] and FCS [12] are frequently employed to assess the alignment between a generated video and its prompt text. However, they only assess frame-level alignment between individual frames and the text prompt. Nor can they evaluate alignment for videos containing diverse motions. It is therefore difficult to rely on these metrics to measure the progress of video generation techniques.
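The two metric families criticized above can be sketched as follows. Both functions assume embeddings have already been extracted by some feature network; the inputs here are hypothetical. The Fréchet distance uses a diagonal-covariance simplification, whereas FID proper uses full covariance matrices and a matrix square root. CLIPScore follows its published per-image form, w * max(cos, 0) with w = 2.5. Averaging it over frames is an assumption about how it is applied to video.

```python
import math

def frechet_distance_diag(mu1, var1, mu2, var2):
    """Frechet distance between two Gaussians with diagonal covariances:
    ||mu1 - mu2||^2 + sum(var1 + var2 - 2 * sqrt(var1 * var2))."""
    mean_term = sum((a - b) ** 2 for a, b in zip(mu1, mu2))
    cov_term = sum(v1 + v2 - 2.0 * math.sqrt(v1 * v2) for v1, v2 in zip(var1, var2))
    return mean_term + cov_term

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def frame_averaged_clipscore(frame_embs, text_emb, w=2.5):
    """Per-frame CLIPScore, w * max(cos, 0), averaged over all frames."""
    return sum(w * max(cosine(f, text_emb), 0.0) for f in frame_embs) / len(frame_embs)

frames = [[1.0, 0.0], [0.6, 0.8], [0.0, 1.0]]  # hypothetical per-frame embeddings
prompt = [1.0, 1.0]                           # hypothetical text embedding
forward = frame_averaged_clipscore(frames, prompt)
backward = frame_averaged_clipscore(frames[::-1], prompt)
print(math.isclose(forward, backward))  # True: playing the clip in reverse leaves the score unchanged
```

The Fréchet distance compares two sets of videos and never scores a single clip. The frame-averaged CLIPScore is invariant to frame order, so it cannot tell whether the prompted motion actually happened.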

4.2. AIGC Video Quality Assessment

The AIGC video quality assessment methods reviewed here employ a variety of advanced techniques. Each targets distinct aspects of video quality, such as spatial quality, temporal quality, and semantic alignment. Table 4 compares these methods in terms of the features and models they use.

Table 4.

Comparison of AIGC video quality assessment methods in feature and model usage.

AIGV-Assessor [9] combines 2D and 3D feature extractors, such as InternViT and SlowFast, to capture both spatial and temporal dynamics. The model aligns visual features with textual prompts through an MLLM (InternVL2-8B). It follows a multi-stage training process involving feature alignment, regression fine-tuning, and pairwise comparison optimization. The method evaluates videos across four key dimensions: static quality, temporal smoothness, dynamics, and text–video alignment.
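The text mentions pairwise comparison optimization alongside regression fine-tuning but does not give the loss. A common way to combine the two, sketched below under that assumption, adds a hinge-style ranking penalty to a mean-squared-error term. The names `combined_loss`, `lam`, and `margin` are hypothetical. This is not the published AIGV-Assessor objective.

```python
def mse_loss(preds, targets):
    return sum((p - t) ** 2 for p, t in zip(preds, targets)) / len(preds)

def pairwise_hinge_loss(preds, targets, margin=0.0):
    """Average penalty over all pairs where humans prefer i to j; a pair
    contributes only if the predicted gap falls short of `margin`."""
    total, pairs = 0.0, 0
    for i in range(len(preds)):
        for j in range(len(preds)):
            if targets[i] > targets[j]:
                total += max(0.0, margin - (preds[i] - preds[j]))
                pairs += 1
    return total / pairs if pairs else 0.0

def combined_loss(preds, targets, lam=1.0, margin=0.1):
    """Regression term for absolute scores plus a ranking term for relative order."""
    return mse_loss(preds, targets) + lam * pairwise_hinge_loss(preds, targets, margin)

mos = [4.0, 2.0, 3.0]            # hypothetical human scores
well_ordered = [3.9, 2.1, 3.0]   # hypothetical predictions, correct order
mis_ordered = [2.1, 3.9, 3.0]    # same values, wrong order
print(pairwise_hinge_loss(well_ordered, mos, margin=0.1))  # 0.0
```

The MSE term of the two prediction sets differs, but only the ranking term directly rewards getting the order of videos right. The pairwise comparisons in the dataset supervise exactly that order.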

The MQT [69] employs a dual evaluation framework, using BLIP-2 for text–video alignment and XGBoost to assess the video’s naturalness. Temporal consistency and motion quality are evaluated through pre-trained models for action recognition and optical flow-based analysis. This method aims to improve the accuracy of video assessments by capturing both visual quality and temporal dynamics in generated content.

The VBench [63] framework introduces a comprehensive evaluation that breaks down video quality into 16 dimensions, including object consistency, background consistency, motion smoothness, and dynamic range. It uses feature extraction models like DINO for object consistency and CLIP for background consistency, with temporal analysis based on optical flow. This multi-dimensional approach enables fine-grained analysis of video generation, assessing motion dynamics and video-condition consistency.
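VBench-style consistency scores compare per-frame features over time. The sketch below follows the general form reported for VBench's subject consistency: the average cosine similarity of each frame to the first frame and to the previous frame. It assumes per-frame features (e.g., from DINO) have already been extracted. The function names and toy features are hypothetical.

```python
import math

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def feature_consistency(frame_feats):
    """Mean over frames 2..T of the average cosine similarity of each frame
    to the first frame and to its immediate predecessor."""
    if len(frame_feats) < 2:
        return 1.0
    first = frame_feats[0]
    scores = [
        0.5 * (cosine(first, cur) + cosine(prev, cur))
        for prev, cur in zip(frame_feats, frame_feats[1:])
    ]
    return sum(scores) / len(scores)

stable = [[1.0, 0.0]] * 4                       # subject looks the same in every frame
drifting = [[1.0, 0.0], [0.8, 0.6], [0.0, 1.0]]  # appearance drifts away from frame 1
print(round(feature_consistency(stable), 2), round(feature_consistency(drifting), 2))  # 1.0 0.55
```

The first-frame term catches slow identity drift, which adjacent-frame comparisons alone would miss. The previous-frame term catches abrupt jumps.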

DEVIL [62] addresses the unique challenges of evaluating the dynamic quality of AI-generated videos, often overlooked by traditional methods. It defines dynamic range, controllability, and quality based on video dynamics, using multi-granularity dynamic scoring methods such as optical flow, SSIM, and entropy. This method uncovers how AI models often optimize static features at the expense of dynamic consistency, emphasizing the need for more advanced dynamic assessments.

T2VBench [58] introduces an evaluation framework focusing on temporal dynamics in T2V models. It categorizes video content into event dynamics, visual dynamics, and narrative dynamics, analyzing how well T2V models handle complex time-related transitions. Using action-recognition models and semantic-consistency tools, T2VBench evaluates video generation across dimensions such as event sequencing, scene transitions, and emotional changes.

TC-Bench [65] centers its evaluation on temporal compositionality. It assesses models’ ability to generate videos with consistent attributes, object relations, and background transitions. It uses GPT-4-based assertion evaluation to verify whether generated videos align with the given text prompts. The focus is on complex combinations of actions, such as object interactions and scene transitions.

T2V-CompBench [59] emphasizes the importance of evaluating T2V models’ ability to handle complex dynamic scenes. It utilizes multimodal LLMs for cross-modal matching, combining video tracking with feature extraction to assess attributes, actions, and interactions. This framework exposes the limitations of current models in generating consistent dynamic attributes, such as object motion and interaction, in more complex scenes.

The T2VQA [68] method introduces a large-scale dataset and a multimodal evaluation model. It assesses videos from two perspectives, text–video alignment and video fidelity. BLIP handles semantic matching, and a Swin Transformer evaluates video distortion. By combining both perspectives in a unified framework, this method outperforms traditional models and provides a more comprehensive and accurate quality assessment.

FETV [64] addresses fine-grained evaluation by introducing a multi-dimensional classification system. It evaluates T2V models across spatial and temporal content, emphasizing motion dynamics, event sequencing, and prompt complexity. It also introduces improved automatic evaluation metrics, UMTScore and FVD-UMT. These align better with human judgment than traditional metrics and thus offer more reliable insights into model performance.

These methods, ranging from detailed spatial and temporal assessments to advanced dynamic evaluations, provide a comprehensive and nuanced approach to measuring the quality of AI-generated videos. Each framework introduces innovative techniques to tackle the unique challenges of T2V models, enhancing both the accuracy and reliability of quality assessments.

4.3. Technical Trends on MLLMs

4.3.1. Empirical Superiority of MLLM–Based Metrics

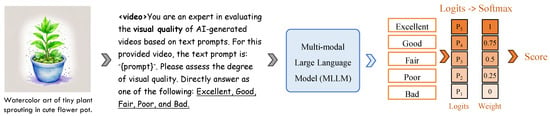

To compare CNN-based, VLM-based, and MLLM-based methods, we evaluated each approach on the LGVQ and FETV datasets. The models for spatial and temporal quality were fine-tuned. The MLLM-based implementation is described below. It follows a light-tuning strategy that exploits the strong visual priors of a pre-trained MLLM while keeping the computational footprint modest. Figure 2 shows the flowchart of visual quality assessment via MLLM inference.

Figure 2.

Schematic diagram of visual quality assessment via MLLM inference.

- Backbone freezing and partial fine-tuning. The entire vision encoder is frozen. We fine-tune only the language model. This choice preserves low-level perceptual features while allowing the linguistic pathway to learn a quality-aware vocabulary.

- Discretised quality tokens. Continuous mean-opinion scores (MOSs) are mapped to five ordinal tokens: <Excellent> (5), <Good> (4), <Fair> (3), <Poor> (2), and <Bad> (1). During training the model is asked, via a masked-language objective, to predict the correct token given the video frames and their generating prompt.

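- Score reconstruction at inference. Let $p_i$ ($i = 1, \dots, 5$) be the softmax probabilities over the five tokens, indexed by ordinal rank from <Bad> ($i = 1$) to <Excellent> ($i = 5$). The final quality estimate is the expectation $\hat{q} = \sum_{i=1}^{5} i \, p_i$, i.e., a weighted sum in which the token weights correspond to their ordinal ranks.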

- Uniform frame sampling. Each video is temporally normalized to 32 frames. For clips shorter than 32 frames, we use all available frames; longer clips are uniformly sub-sampled without replacement. Frames are resized to and packed into a single visual sequence that the frozen encoder processes in one forward pass.
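The three steps above can be sketched as follows, with three stated assumptions. First, the MOS-to-token rule (linear rescaling to [1, 5] and rounding) is assumed, since the text does not specify bin boundaries. Second, `token_logits` is a hypothetical stand-in for the MLLM's logits for the five quality tokens at the answer position. Third, the centred-index sampler is one reasonable reading of uniform sub-sampling.

```python
import math

# Ordinal quality tokens and their ranks, as listed in the text.
LEVELS = {"<Bad>": 1, "<Poor>": 2, "<Fair>": 3, "<Good>": 4, "<Excellent>": 5}
TOKEN_BY_RANK = {rank: tok for tok, rank in LEVELS.items()}

def mos_to_token(mos, lo=1.0, hi=5.0):
    """Assumed training-label rule: rescale MOS from [lo, hi] to [1, 5], round to nearest rank."""
    scaled = 1.0 + 4.0 * (mos - lo) / (hi - lo)
    rank = min(5, max(1, math.floor(scaled + 0.5)))
    return TOKEN_BY_RANK[rank]

def expected_score(token_logits):
    """q_hat = sum_i i * p_i, with p the softmax over the five token logits."""
    m = max(token_logits.values())
    exps = {tok: math.exp(v - m) for tok, v in token_logits.items()}
    z = sum(exps.values())
    return sum(LEVELS[tok] * e / z for tok, e in exps.items())

def uniform_frame_indices(num_frames, target=32):
    """All frames if the clip is short; otherwise `target` evenly spaced, distinct indices."""
    if num_frames <= target:
        return list(range(num_frames))
    return [int((i + 0.5) * num_frames / target) for i in range(target)]

print(mos_to_token(4.6), mos_to_token(80, lo=0, hi=100))  # <Excellent> <Good>
print(expected_score({tok: 0.0 for tok in LEVELS}))      # 3.0 (uniform probabilities)
```

Taking the expectation instead of the single most likely token keeps the prediction continuous. For example, a model split between <Good> and <Excellent> yields roughly 4.5 rather than snapping to one level.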

Table 5 delivers a clear message: once multimodal large language models (MLLMs) are brought into the evaluation loop, they set a new upper bound for every major quality dimension. Relative to the strongest convolutional or vision–language model (VLM) baselines, MLLM metrics achieve uniformly higher rank correlations (SRCC/KRCC) and linear correlations (PLCC) on both LGVQ and FETV. All spatial- and temporal-quality results are obtained after fine-tuning, whereas all alignment-quality results are zero-shot.

Table 5.

Performance of CNN-based, VLM-based, and MLLM-based metrics on the LGVQ and FETV datasets. VLM denotes vision–language model.

We adopt a train/validation/test split to retrain all metrics, with the data divided into training, validation, and test sets in a ratio of approximately 7:1:2. The final model is selected by its best performance on the validation set and then evaluated on the test set. Reported results are averaged over 10 trials to obtain a reliable measure of each method’s generalization capability. Note that each dataset contains multiple videos generated from the same prompt. To ensure that the models generalize to unseen prompts, we adopt an invisible-prompt strategy: no prompt in the validation or test sets appears in the training set. The training prompts are thus completely disjoint from those used for validation and testing.
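A prompt-disjoint split of this kind can be sketched by partitioning prompts rather than videos. The sketch assumes samples arrive as `(video_id, prompt)` pairs and uses the 7:1:2 ratio stated above. The data and the function name are hypothetical.

```python
import random
from collections import defaultdict

def prompt_disjoint_split(samples, ratios=(0.7, 0.1, 0.2), seed=0):
    """Split (video_id, prompt) pairs so that no prompt is shared across splits.

    Prompts, not videos, are shuffled and partitioned, so all videos generated
    from one prompt land in the same split.
    """
    by_prompt = defaultdict(list)
    for video_id, prompt in samples:
        by_prompt[prompt].append(video_id)
    prompts = sorted(by_prompt)  # deterministic order before seeded shuffling
    random.Random(seed).shuffle(prompts)
    n_train = round(ratios[0] * len(prompts))
    n_val = round(ratios[1] * len(prompts))
    groups = (prompts[:n_train], prompts[n_train:n_train + n_val], prompts[n_train + n_val:])
    return tuple([v for p in g for v in by_prompt[p]] for g in groups)

# Hypothetical data: 50 prompts, 4 generated videos each.
samples = [(f"p{p}_m{m}", f"prompt {p}") for p in range(50) for m in range(4)]
train, val, test = prompt_disjoint_split(samples)
print(len(train), len(val), len(test))  # 140 20 40
```

Repeating the split with `seed` values 0 through 9 and averaging the test correlations mirrors the 10-trial protocol described above. A video-level random split would instead let near-duplicate clips of the same prompt leak into the test set.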

- Spatial fidelity. On LGVQ, the leading CNN metric LIQE attains an SRCC of 0.721, whereas Ovis2 and QwenVL2.5 push the score to 0.751 and 0.776, respectively—an absolute gain of 0.03–0.06 (4–8%). A comparable improvement is observed on FETV (0.799 vs. 0.832–0.854).

- Temporal coherence. Motion-aware CNNs such as SimpleVQA plateau at 0.857 SRCC on LGVQ; QwenVL2.5 elevates this to 0.893. On FETV, every MLLM surpasses the best CNN baseline (FastVQA, 0.847), again converging near 0.893.

- Prompt consistency. Alignment is the most challenging axis. Frame-level VLM scores (e.g., CLIPScore) reach only 0.446 SRCC on LGVQ, whereas DeepSeek-VL2 achieves 0.551, a 24% relative lift. On FETV, SRCC rises from 0.607 to 0.747, a 23% relative lift.

Across the board, every MLLM-driven metric equals or exceeds the best single-modal competitor. The advantage is most pronounced for semantic alignment, precisely the aspect that traditional hand-crafted or unimodal features fail to capture. These empirical gains make a compelling case that MLLMs are no longer experimental add-ons but the de facto backbone for next-generation AIGC-VQA. The roadmap below outlines how this transition is likely to unfold.

4.3.2. Roadmap for MLLM-Centric VQA Pipelines

Beyond the current leaderboards, a growing body of work (e.g., LIQE [85], Q-Align [100], AIGV-Assessor [9]) shows that coupling dense vision features with language embeddings enables pixel-to-sentence error localization, natural-language rationales, and rapid domain transfer. We anticipate three converging trends.
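Coupling visual features with language embeddings can be illustrated with the antonym-prompt scheme popularized by CLIP-IQA [84]: an image embedding is compared with the text embeddings of a positive and a negative quality prompt, and a softmax over the two similarities yields a quality probability. The Python sketch below assumes precomputed embeddings (toy 3-D vectors stand in for encoder outputs), uses a logit scale of 100 as is common for CLIP models, and averages frames naively; the function names are hypothetical, and this is a simplified stand-in, not LIQE's multitask formulation or any author's implementation.

```python
import math


def cosine(u, v):
    """Cosine similarity between two embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def antonym_prompt_score(image_emb, good_emb, bad_emb, logit_scale=100.0):
    """Softmax probability that the image matches the 'good' prompt over the 'bad' one."""
    s_good = logit_scale * cosine(image_emb, good_emb)
    s_bad = logit_scale * cosine(image_emb, bad_emb)
    m = max(s_good, s_bad)                      # subtract the max for numerical stability
    e_good, e_bad = math.exp(s_good - m), math.exp(s_bad - m)
    return e_good / (e_good + e_bad)


def frame_average_score(frame_embs, good_emb, bad_emb):
    """Mean of per-frame scores; frame order is ignored, so motion is invisible here."""
    return sum(antonym_prompt_score(f, good_emb, bad_emb) for f in frame_embs) / len(frame_embs)


# Toy 3-D vectors standing in for embeddings from a CLIP-like encoder (assumption)
good = [1.0, 0.0, 0.0]   # e.g. text embedding of "Good photo."
bad = [0.0, 1.0, 0.0]    # e.g. text embedding of "Bad photo."
frames = [[0.9, 0.1, 0.2], [0.8, 0.3, 0.1], [0.2, 0.9, 0.1]]
print(round(frame_average_score(frames, good, bad), 3))
print(round(frame_average_score(frames[::-1], good, bad), 3))  # same value: order-blind
```

Because reversing the frames leaves the score unchanged, a frame-averaged score of this kind cannot, on its own, register temporal incoherence; that is one reason the text argues for assessors that ingest motion information jointly.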

- Multimodal fusion at scale. Future assessors will ingest synchronized visual, textual, and motion tokens, leveraging backbones such as CLIP [101], QwenVL [6], InternVL [7], and GPT-4V [102]. A single forward pass will jointly rate spatial fidelity, temporal smoothness, and prompt faithfulness, eliminating the need for ad hoc score aggregation.

- Data-efficient specialization. Domain shifts, such as new genres, unseen distortion types, or new language locales, will be handled by lightweight adaptation: prompt engineering, LoRA fine-tuning, and semi-supervised self-distillation. These techniques can substantially reduce annotation cost while preserving the zero-shot flexibility of the frozen backbone.

- Standardization and deployment. Given the quantitative edge illustrated in Table 5, we expect major toolkits to embed an MLLM core within the next research cycle. Stand-alone feature-engineering pipelines will be relegated to legacy status, much like PSNR after the advent of SSIM.
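LoRA, named in the second trend, keeps a pretrained weight matrix frozen and learns only a low-rank update, which is what makes it attractive for data-efficient specialization. The pure-Python sketch below shows the standard LoRA formulation y = Wx + (α/r)·B(Ax), with B initialized to zero so that the adapted layer starts out identical to the frozen one. `LoRALinear` and `matvec` are hypothetical illustrative names; in a real MLLM such adapters would sit inside the attention and MLP projections and be implemented in a deep-learning framework rather than with Python lists.

```python
import random


def matvec(matrix, x):
    """Multiply a row-major matrix (list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in matrix]


class LoRALinear:
    """Frozen weight W plus a trainable low-rank update: y = W x + (alpha / r) * B (A x)."""

    def __init__(self, weight, rank=4, alpha=8.0, seed=0):
        self.weight = weight                          # frozen, shape (d_out, d_in)
        d_out, d_in = len(weight), len(weight[0])
        rng = random.Random(seed)
        # A starts small and random, B starts at zero, so the adapter is initially a no-op
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.B = [[0.0] * rank for _ in range(d_out)]
        self.scale = alpha / rank

    def forward(self, x):
        base = matvec(self.weight, x)                 # output of the frozen backbone layer
        delta = matvec(self.B, matvec(self.A, x))     # low-rank correction
        return [b + self.scale * d for b, d in zip(base, delta)]

    def trainable_params(self):
        """Only A and B are trained; W stays frozen."""
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])


layer = LoRALinear([[1.0, 2.0], [3.0, 4.0]], rank=1, alpha=2.0)
print(layer.forward([1.0, 1.0]))  # [3.0, 7.0]: identical to the frozen layer until B is trained

# Parameter budget for one 4096 x 4096 projection with rank 8
d, r = 4096, 8
full, lora = d * d, r * (d + d)
print(f"full fine-tuning: {full:,} params, LoRA: {lora:,} params ({lora / full:.2%})")
```

The parameter count illustrates the compute and storage side of lightweight adaptation; how much labeled data such small adapters actually need in a given VQA domain is a separate, empirical question.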

Taken together, the empirical evidence and the emerging tool-chain ecosystem indicate that MLLM-based assessors will dominate the forthcoming landscape of AIGC video-quality evaluation, delivering higher accuracy, richer interpretability, and greater adaptability than conventional CNN or VLM metrics.

4.3.3. Limitations

Despite the promising performance of MLLM-based visual quality assessment methods, several limitations remain. First, MLLMs are prone to hallucinations, responding confidently even when they do not fully understand the input. Fine-tuning on large-scale instruction datasets and applying Reinforcement Learning from Human Feedback (RLHF) can mitigate this issue [103], but cannot eliminate it entirely. Second, although these models can produce continuous quality scores by weighting the probabilities they assign to quality-related tokens, such scores may still lack interpretability and robustness in edge cases. Finally, over-reliance on MLLM-based systems may lead to rare but severe failures. These issues are not unique to MLLMs; they can also arise in traditional CNN-based quality assessment models.
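The token-probability scoring mentioned in the second limitation can be made concrete with the five-level scheme used by Q-Align [100]: the logits of the level words are renormalized with a softmax, and the score is their probability-weighted average. In the Python sketch below, the logits are invented inputs rather than outputs of a real MLLM, `token_probability_score` is a hypothetical helper, and the entropy term is our own illustrative diagnostic. The two cases expose one interpretability gap: a distribution concentrated on "fair" and one split between "excellent" and "bad" both yield a score of 3, and only the spread of the distribution reveals the difference.

```python
import math

# Quality-level tokens and their numeric weights (Q-Align-style five-level scale)
LEVEL_WEIGHTS = {"excellent": 5, "good": 4, "fair": 3, "poor": 2, "bad": 1}


def token_probability_score(level_logits):
    """Expected level under a softmax restricted to the five level tokens.

    Returns (score, entropy): the score lies in [1, 5]; the entropy (in nats)
    is high when probability mass is spread across levels.
    """
    names = list(LEVEL_WEIGHTS)
    logits = [level_logits[name] for name in names]
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]        # stable softmax numerators
    total = sum(exps)
    probs = [e / total for e in exps]
    score = sum(p * LEVEL_WEIGHTS[name] for p, name in zip(probs, names))
    entropy = -sum(p * math.log(p) for p in probs if p > 0)
    return score, entropy


# Hypothetical logits for two videos
peaked_fair = {"excellent": 0.0, "good": 0.0, "fair": 6.0, "poor": 0.0, "bad": 0.0}
polarized = {"excellent": 3.0, "good": 0.0, "fair": 0.0, "poor": 0.0, "bad": 3.0}
for name, logits in (("peaked on 'fair'", peaked_fair), ("polarized", polarized)):
    score, entropy = token_probability_score(logits)
    print(f"{name}: score={score:.2f}, entropy={entropy:.2f} nats")
```

Reporting a dispersion measure alongside the expected score is one simple way to flag the uncertain, edge-case predictions that a single scalar hides.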

5. Conclusions

AIGC video quality assessment is a complex, multidisciplinary field that requires innovative methods and frameworks to bridge the gap between human perception and technical evaluation. While current models demonstrate substantial progress, challenges persist in maintaining temporal coherence, action continuity, and dynamic scene accuracy. This paper highlights the importance of multimodal large language models in providing a more holistic and human-aligned assessment of AI-generated video content. Looking forward, we posit that continued advances in sensor technology will enable generative models to support modalities beyond the traditional images, text, audio, and video. As generation capabilities expand to these new modalities, multimodal large language models are likely to demonstrate their strengths in multimodal evaluation even further.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data sharing is not applicable.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Namani, Y.; Reghioua, I.; Bendiab, G.; Labiod, M.A.; Shiaeles, S. DeepGuard: Identification and Attribution of AI-Generated Synthetic Images. Electronics 2025, 14, 665.

2. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

3. Carreira, J.; Zisserman, A. Quo Vadis, Action Recognition? A New Model and the Kinetics Dataset. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6299–6308.

4. Feichtenhofer, C.; Fan, H.; Malik, J.; He, K. SlowFast Networks for Video Recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6201–6210.

5. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. arXiv 2021, arXiv:2010.11929.

6. Wang, P.; Bai, S.; Tan, S.; Wang, S.; Fan, Z.; Bai, J.; Chen, K.; Liu, X.; Wang, J.; Ge, W.; et al. Qwen2-VL: Enhancing Vision-Language Model’s Perception of the World at Any Resolution. arXiv 2024, arXiv:2409.12191.

7. Chen, Z.; Wu, J.; Wang, W.; Su, W.; Chen, G.; Xing, S.; Zhong, M.; Zhang, Q.; Zhu, X.; Lu, L.; et al. InternVL: Scaling up Vision Foundation Models and Aligning for Generic Visual-Linguistic Tasks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 24185–24198.

8. Yang, Z.; Li, L.; Lin, K.; Wang, J.; Lin, C.C.; Liu, Z.; Wang, L. The Dawn of LMMs: Preliminary Explorations with GPT-4V(ision). arXiv 2023, arXiv:2309.17421.

9. Wang, J.; Duan, H.; Zhai, G.; Wang, J.; Min, X. AIGV-Assessor: Benchmarking and Evaluating the Perceptual Quality of Text-to-Video Generation with LMM. arXiv 2024, arXiv:2411.17221.

10. Zhang, Z.; Kou, T.; Wang, S.; Li, C.; Sun, W.; Wang, W.; Li, X.; Wang, Z.; Cao, X.; Min, X.; et al. Q-Eval-100K: Evaluating Visual Quality and Alignment Level for Text-to-Vision Content. arXiv 2025, arXiv:2503.02357.

11. Zhang, Z.; Jia, Z.; Wu, H.; Li, C.; Chen, Z.; Zhou, Y.; Sun, W.; Liu, X.; Min, X.; Lin, W.; et al. Q-Bench-Video: Benchmarking the Video Quality Understanding of LMMs. arXiv 2025, arXiv:2409.20063.

12. Wu, C.; Liang, J.; Ji, L.; Yang, F.; Fang, Y.; Jiang, D.; Duan, N. NÜWA: Visual Synthesis Pre-Training for Neural Visual World Creation. In Proceedings of the European Conference on Computer Vision (ECCV), Tel Aviv, Israel, 23–27 October 2022; pp. 720–736.

13. Liang, J.; Wu, C.; Hu, X.; Gan, Z.; Wang, J.; Wang, L.; Liu, Z.; Fang, Y.; Duan, N. NUWA-Infinity: Autoregressive over Autoregressive Generation for Infinite Visual Synthesis. Adv. Neural Inf. Process. Syst. 2022, 35, 15420–15432.

14. Hong, W.; Ding, M.; Zheng, W.; Liu, X.; Tang, J. CogVideo: Large-scale Pretraining for Text-to-Video Generation via Transformers. In Proceedings of the Eleventh International Conference on Learning Representations (ICLR), Kigali, Rwanda, 1–5 May 2023.

15. Villegas, R.; Babaeizadeh, M.; Kindermans, P.J.; Moraldo, H.; Zhang, H.; Saffar, M.T.; Castro, S.; Kunze, J.; Erhan, D. Phenaki: Variable Length Video Generation from Open Domain Textual Descriptions. In Proceedings of the International Conference on Learning Representations (ICLR), Kigali, Rwanda, 1–5 May 2023.

16. Bruce, J.; Dennis, M.; Edwards, A.; Parker-Holder, J.; Shi, Y.; Hughes, E.; Lai, M.; Mavalankar, A.; Steigerwald, R.; Apps, C.; et al. Genie: Generative Interactive Environments. arXiv 2024, arXiv:2402.15391.

17. Ge, S.; Hayes, T.; Yang, H.; Yin, X.; Pang, G.; Jacobs, D.; Huang, J.B.; Parikh, D. Long Video Generation with Time-Agnostic VQGAN and Time-Sensitive Transformer. In Proceedings of the European Conference on Computer Vision (ECCV), Tel Aviv, Israel, 23–27 October 2022; pp. 102–118.

18. Ho, J.; Salimans, T.; Gritsenko, A.; Chan, W.; Norouzi, M.; Fleet, D.J. Video Diffusion Models. Adv. Neural Inf. Process. Syst. 2022, 35, 8633–8646.

19. He, Y.; Yang, T.; Zhang, Y.; Shan, Y.; Chen, Q. Latent Video Diffusion Models for High-Fidelity Long Video Generation. arXiv 2022, arXiv:2211.13221.

20. Wu, J.Z.; Ge, Y.; Wang, X.; Lei, S.W.; Gu, Y.; Shi, Y.; Hsu, W.; Shan, Y.; Qie, X.; Shou, M.Z. Tune-A-Video: One-Shot Tuning of Image Diffusion Models for Text-to-Video Generation. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 7623–7633.

21. Zhou, D.; Wang, W.; Yan, H.; Lv, W.; Zhu, Y.; Feng, J. MagicVideo: Efficient Video Generation with Latent Diffusion Models. arXiv 2022, arXiv:2211.11018.

22. Zeng, Y.; Wei, G.; Zheng, J.; Zou, J.; Wei, Y.; Zhang, Y.; Li, H. Make Pixels Dance: High-Dynamic Video Generation. arXiv 2023, arXiv:2311.10982.

23. Chen, H.; Zhang, Y.; Cun, X.; Xia, M.; Wang, X.; Weng, C.; Shan, Y. VideoCrafter2: Overcoming Data Limitations for High-Quality Video Diffusion Models. arXiv 2024, arXiv:2401.09047.

24. Chen, H.; Xia, M.; He, Y.; Zhang, Y.; Cun, X.; Yang, S.; Xing, J.; Liu, Y.; Chen, Q.; Wang, X.; et al. VideoCrafter1: Open Diffusion Models for High-Quality Video Generation. arXiv 2023, arXiv:2310.19512.

25. Singer, U.; Polyak, A.; Hayes, T.; Yin, X.; An, J.; Zhang, S.; Hu, Q.; Yang, H.; Ashual, O.; Gafni, O.; et al. Make-A-Video: Text-to-Video Generation without Text-Video Data. arXiv 2022, arXiv:2209.14792.

26. Ho, J.; Chan, W.; Saharia, C.; Whang, J.; Gao, R.; Gritsenko, A.; Kingma, D.P.; Poole, B.; Norouzi, M.; Fleet, D.J.; et al. Imagen Video: High Definition Video Generation with Diffusion Models. arXiv 2022, arXiv:2210.02303.

27. Khachatryan, L.; Movsisyan, A.; Tadevosyan, V.; Henschel, R.; Wang, Z.; Navasardyan, S.; Shi, H. Text2Video-Zero: Text-to-Image Diffusion Models Are Zero-Shot Video Generators. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 15954–15964.

28. Yin, S.; Wu, C.; Yang, H.; Wang, J.; Wang, X.; Ni, M.; Yang, Z.; Li, L.; Liu, S.; Yang, F.; et al. NUWA-XL: Diffusion over Diffusion for Extremely Long Video Generation. arXiv 2023, arXiv:2303.12346.

29. Pixverse. Available online: https://app.pixverse.ai/ (accessed on 8 July 2025).

30. Klingai. Available online: https://klingai.kuaishou.com/ (accessed on 8 July 2025).

31. Kong, W.; Tian, Q.; Zhang, Z.; Min, R.; Dai, Z.; Zhou, J.; Xiong, J.; Li, X.; Wu, B.; Zhang, J.; et al. HunyuanVideo: A Systematic Framework for Large Video Generative Models. arXiv 2025, arXiv:2412.03603.

32. HaCohen, Y.; Chiprut, N.; Brazowski, B.; Shalem, D.; Moshe, D.; Richardson, E.; Levin, E.; Shiran, G.; Zabari, N.; Gordon, O.; et al. LTX-Video: Realtime Video Latent Diffusion. arXiv 2024, arXiv:2501.00103.

33. Genmo Team. Mochi 1. 2024. Available online: https://github.com/genmoai/models (accessed on 8 July 2025).

34. Haiper. Available online: https://haiper.ai/ (accessed on 8 July 2025).

35. Yang, Z.; Teng, J.; Zheng, W.; Ding, M.; Huang, S.; Xu, J.; Yang, Y.; Hong, W.; Zhang, X.; Feng, G.; et al. CogVideoX: Text-to-Video Diffusion Models with an Expert Transformer. arXiv 2024, arXiv:2408.06072.

36. Jimeng. Available online: https://jimeng.jianying.com/ (accessed on 8 July 2025).

37. Esser, P.; Chiu, J.; Atighehchian, P.; Granskog, J.; Germanidis, A. Structure and Content-Guided Video Synthesis with Diffusion Models. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 7346–7356.

38. Ying. Available online: https://chatglm.cn/video (accessed on 8 July 2025).

39. Ma, X.; Wang, Y.; Jia, G.; Chen, X.; Liu, Z.; Li, Y.F.; Chen, C.; Qiao, Y. Latte: Latent Diffusion Transformer for Video Generation. arXiv 2024, arXiv:2401.03048.

40. Yuan, S.; Huang, J.; Shi, Y.; Xu, Y.; Zhu, R.; Lin, B.; Cheng, X.; Yuan, L.; Luo, J. MagicTime: Time-lapse Video Generation Models as Metamorphic Simulators. arXiv 2024, arXiv:2404.05014.

41. Henschel, R.; Khachatryan, L.; Hayrapetyan, D.; Poghosyan, H.; Tadevosyan, V.; Wang, Z.; Navasardyan, S.; Shi, H. StreamingT2V: Consistent, Dynamic, and Extendable Long Video Generation from Text. arXiv 2024, arXiv:2403.14773.

42. Lin, B. Open-Sora-Plan. GitHub. 2024. Available online: https://zenodo.org/records/10948109 (accessed on 8 July 2025).

43. Sora. Available online: https://sora.com/ (accessed on 8 July 2025).

44. StableVideo. Available online: https://stability.ai/stable-video (accessed on 8 July 2025).

45. Wang, F.Y.; Huang, Z.; Shi, X.; Bian, W.; Song, G.; Liu, Y.; Li, H. AnimateLCM: Accelerating the Animation of Personalized Diffusion Models and Adapters with Decoupled Consistency Learning. arXiv 2024, arXiv:2402.00769.

46. Guo, Y.; Yang, C.; Rao, A.; Liang, Z.; Wang, Y.; Qiao, Y.; Agrawala, M.; Lin, D.; Dai, B. AnimateDiff: Animate Your Personalized Text-to-Image Diffusion Models without Specific Tuning. In Proceedings of the International Conference on Learning Representations (ICLR), Vienna, Austria, 7–11 May 2024.

47. Pika. Available online: https://pika.art/ (accessed on 8 July 2025).

48. Zhang, D.J.; Wu, J.Z.; Liu, J.W.; Zhao, R.; Ran, L.; Gu, Y.; Gao, D.; Shou, M.Z. Show-1: Marrying Pixel and Latent Diffusion Models for Text-to-Video Generation. arXiv 2023, arXiv:2309.15818.

49. Mullan, J.; Crawbuck, D.; Sastry, A. Hotshot-XL. 2023. Available online: https://huggingface.co/hotshotco/Hotshot-XL (accessed on 11 October 2023).

50. Wang, Y.; Chen, X.; Ma, X.; Zhou, S.; Huang, Z.; Wang, Y.; Yang, C.; He, Y.; Yu, J.; Yang, P.; et al. LAVIE: High-Quality Video Generation with Cascaded Latent Diffusion Models. Int. J. Comput. Vis. 2024, 133, 3059–3078.

51. Zeroscope. Available online: https://huggingface.co/cerspense/zeroscope_v2_576w (accessed on 30 August 2023).

52. Luo, Z.; Chen, D.; Zhang, Y.; Huang, Y.; Wang, L.; Shen, Y.; Zhao, D.; Zhou, J.; Tan, T. Notice of Removal: VideoFusion: Decomposed Diffusion Models for High-Quality Video Generation. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 10209–10218.

53. Wang, J.; Yuan, H.; Chen, D.; Zhang, Y.; Wang, X.; Zhang, S. ModelScope Text-to-Video Technical Report. arXiv 2023, arXiv:2308.06571.

54. Ho, J.; Jain, A.; Abbeel, P. Denoising Diffusion Probabilistic Models. Adv. Neural Inf. Process. Syst. 2020, 33, 6840–6851.

55. Betker, J.; Goh, G.; Jing, L.; Brooks, T.; Wang, J.; Li, L.; Ouyang, L.; Zhuang, J.; Lee, J.; Guo, Y.; et al. Improving Image Generation with Better Captions. Comput. Sci. 2023, 2, 8.

56. Zhang, L.; Rao, A.; Agrawala, M. Adding Conditional Control to Text-to-Image Diffusion Models. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 3836–3847.

57. Zhang, C.; Zhang, C.; Zhang, M.; Kweon, I.S. Text-to-Image Diffusion Model in Generative AI: A Survey. arXiv 2023, arXiv:2303.07909.

58. Ji, P.; Xiao, C.; Tai, H.; Huo, M. T2VBench: Benchmarking Temporal Dynamics for Text-to-Video Generation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–18 June 2024; pp. 5325–5335.

59. Sun, K.; Huang, K.; Liu, X.; Wu, Y.; Xu, Z.; Li, Z.; Liu, X. T2V-CompBench: A Comprehensive Benchmark for Compositional Text-to-Video Generation. arXiv 2024, arXiv:2407.14505.

60. Chen, Z.; Sun, W.; Tian, Y.; Jia, J.; Zhang, Z.; Wang, J.; Huang, R.; Min, X.; Zhai, G.; Zhang, W. GAIA: Rethinking Action Quality Assessment for AI-Generated Videos. arXiv 2024, arXiv:2406.06087.

61. Zhang, Z.; Sun, W.; Li, X.; Li, Y.; Ge, Q.; Jia, J.; Zhang, Z.; Ji, Z.; Sun, F.; Jui, S.; et al. Human-Activity AGV Quality Assessment: A Benchmark Dataset and an Objective Evaluation Metric. arXiv 2024, arXiv:2411.16619.

62. Liao, M.; Ye, Q.; Zuo, W.; Wan, F.; Wang, T.; Zhao, Y.; Wang, J.; Zhang, X. Evaluation of Text-to-Video Generation Models: A Dynamics Perspective. Adv. Neural Inf. Process. Syst. 2025, 37, 109790–109816.

63. Huang, Z.; He, Y.; Yu, J.; Zhang, F.; Si, C.; Jiang, Y.; Zhang, Y.; Wu, T.; Jin, Q.; Chanpaisit, N.; et al. VBench: Comprehensive Benchmark Suite for Video Generative Models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024.

64. Liu, Y.; Li, L.; Ren, S.; Gao, R.; Li, S.; Chen, S.; Sun, X.; Hou, L. FETV: A Benchmark for Fine-Grained Evaluation of Open-Domain Text-to-Video Generation. arXiv 2023, arXiv:2311.01813.

65. Feng, W.; Li, J.; Saxon, M.; Fu, T.J.; Chen, W.; Wang, W.Y. TC-Bench: Benchmarking Temporal Compositionality in Text-to-Video and Image-to-Video Generation. arXiv 2024, arXiv:2406.08656.

66. Zhang, Z.; Li, X.; Sun, W.; Jia, J.; Min, X.; Zhang, Z.; Li, C.; Chen, Z.; Wang, P.; Ji, Z.; et al. Benchmarking AIGC Video Quality Assessment: A Dataset and Unified Model. arXiv 2024, arXiv:2407.21408.

67. Liu, Y.; Cun, X.; Liu, X.; Wang, X.; Zhang, Y.; Chen, H.; Liu, Y.; Zeng, T.; Chan, R.; Shan, Y. EvalCrafter: Benchmarking and Evaluating Large Video Generation Models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 16–22 June 2024; pp. 22139–22149.

68. Kou, T.; Liu, X.; Zhang, Z.; Li, C.; Wu, H.; Min, X.; Zhai, G.; Liu, N. Subjective-Aligned Dataset and Metric for Text-to-Video Quality Assessment. In Proceedings of the 32nd ACM International Conference on Multimedia, Melbourne, VIC, Australia, 28 October–1 November 2024; pp. 7793–7802.

69. Chivileva, I.; Lynch, P.; Ward, T.E.; Smeaton, A.F. Measuring the Quality of Text-to-Video Model Outputs: Metrics and Dataset. arXiv 2023, arXiv:2309.08009.

70. Wu, X.; Cheng, I.; Zhou, Z.; Basu, A. RAVA: Region-Based Average Video Quality Assessment. Sensors 2021, 21, 5489.

71. Lin, L.; Yang, J.; Wang, Z.; Zhou, L.; Chen, W.; Xu, Y. Compressed Video Quality Index Based on Saliency-Aware Artifact Detection. Sensors 2021, 21, 6429.

72. Varga, D. No-Reference Video Quality Assessment Using Multi-Pooled, Saliency Weighted Deep Features and Decision Fusion. Sensors 2022, 22, 2209.

73. Gu, F.; Zhang, Z. No-Reference Quality Assessment of Stereoscopic Video Based on Temporal Adaptive Model for Improved Visual Communication. Sensors 2022, 22, 8084.

74. Varga, D. No-Reference Video Quality Assessment Using the Temporal Statistics of Global and Local Image Features. Sensors 2022, 22, 9696.

75. Salimans, T.; Goodfellow, I.; Zaremba, W.; Cheung, V.; Radford, A.; Chen, X. Improved Techniques for Training GANs. Adv. Neural Inf. Process. Syst. 2016, 29.

76. Heusel, M.; Ramsauer, H.; Unterthiner, T.; Nessler, B.; Hochreiter, S. GANs Trained by a Two Time-Scale Update Rule Converge to a Local Nash Equilibrium. In Proceedings of the Advances in Neural Information Processing Systems; Guyon, I., Luxburg, U.V., Bengio, S., Wallach, H., Fergus, R., Vishwanathan, S., Garnett, R., Eds.; Curran Associates, Inc.: New York, NY, USA, 2017; Volume 30.

77. Unterthiner, T.; van Steenkiste, S.; Kurach, K.; Marinier, R.; Michalski, M.; Gelly, S. Towards Accurate Generative Models of Video: A New Metric & Challenges. arXiv 2018, arXiv:1812.01717.

78. Wu, C.; Huang, L.; Zhang, Q.; Li, B.; Ji, L.; Yang, F.; Sapiro, G.; Duan, N. GODIVA: Generating Open-Domain Videos from Natural Descriptions. arXiv 2021, arXiv:2104.14806.

79. Hessel, J.; Holtzman, A.; Forbes, M.; Le Bras, R.; Choi, Y. CLIPScore: A Reference-free Evaluation Metric for Image Captioning. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing (EMNLP), Punta Cana, Dominican Republic, 7–11 November 2021; pp. 7514–7528.

80. Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France, 7–9 July 2015; Bach, F., Blei, D., Eds.; PMLR: Lille, France, 2015; Volume 37, pp. 448–456.

81. Zhang, W.; Ma, K.; Zhai, G.; Yang, X. Uncertainty-Aware Blind Image Quality Assessment in the Laboratory and Wild. IEEE Trans. Image Process. 2021, 30, 3474–3486.

82. Ke, J.; Wang, Q.; Wang, Y.; Milanfar, P.; Yang, F. MUSIQ: Multi-scale Image Quality Transformer. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Virtual Conference, 11–17 October 2021; pp. 5128–5137.

83. Sun, W.; Min, X.; Tu, D.; Ma, S.; Zhai, G. Blind Quality Assessment for In-the-Wild Images via Hierarchical Feature Fusion and Iterative Mixed Database Training. IEEE J. Sel. Top. Signal Process. 2023, 17, 1178–1192.

84. Wang, J.; Chan, K.C.; Loy, C.C. Exploring CLIP for Assessing the Look and Feel of Images. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; Volume 37, pp. 2555–2563.

85. Zhang, W.; Zhai, G.; Wei, Y.; Yang, X.; Ma, K. Blind Image Quality Assessment via Vision-Language Correspondence: A Multitask Learning Perspective. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 14071–14081.

86. Wu, Z.; Chen, X.; Pan, Z.; Liu, X.; Liu, W.; Dai, D.; Gao, H.; Ma, Y.; Wu, C.; Wang, B.; et al. DeepSeek-VL2: Mixture-of-Experts Vision-Language Models for Advanced Multimodal Understanding. arXiv 2024, arXiv:2412.10302.

87. Lu, S.; Li, Y.; Chen, Q.G.; Xu, Z.; Luo, W.; Zhang, K.; Ye, H.J. Ovis: Structural Embedding Alignment for Multimodal Large Language Model. arXiv 2024, arXiv:2405.20797.

88. Bai, S.; Chen, K.; Liu, X.; Wang, J.; Ge, W.; Song, S.; Dang, K.; Wang, P.; Wang, S.; Tang, J.; et al. Qwen2.5-VL Technical Report. arXiv 2025, arXiv:2502.13923.

89. Korhonen, J. Two-Level Approach for No-Reference Consumer Video Quality Assessment. IEEE Trans. Image Process. 2019, 28, 5923–5938.

90. Tu, Z.; Yu, X.; Wang, Y.; Birkbeck, N.; Adsumilli, B.; Bovik, A.C. RAPIQUE: Rapid and Accurate Video Quality Prediction of User Generated Content. IEEE Open J. Signal Process. 2021, 2, 425–440.

91. Li, D.; Jiang, T.; Jiang, M. Quality Assessment of In-the-Wild Videos. In Proceedings of the 27th ACM International Conference on Multimedia (MM ’19), Nice, France, 21–25 October 2019; pp. 2351–2359.

92. Sun, W.; Min, X.; Lu, W.; Zhai, G. A Deep Learning Based No-Reference Quality Assessment Model for UGC Videos. In Proceedings of the 30th ACM International Conference on Multimedia, Lisbon, Portugal, 10–14 October 2022; pp. 856–865.

93. Wu, H.; Chen, C.; Hou, J.; Liao, L.; Wang, A.; Sun, W.; Yan, Q.; Lin, W. FAST-VQA: Efficient End-to-End Video Quality Assessment with Fragment Sampling. In Computer Vision—ECCV 2022: 17th European Conference, Tel Aviv, Israel, 23–27 October 2022, Proceedings, Part VI; Springer: Berlin/Heidelberg, Germany, 2022; pp. 538–554.

94. Wu, H.; Zhang, E.; Liao, L.; Chen, C.; Hou, J.H.; Wang, A.; Sun, W.S.; Yan, Q.; Lin, W. Exploring Video Quality Assessment on User Generated Contents from Aesthetic and Technical Perspectives. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023.

95. Li, J.; Li, D.; Xiong, C.; Hoi, S. BLIP: Bootstrapping Language-Image Pre-Training for Unified Vision-Language Understanding and Generation. In Proceedings of the International Conference on Machine Learning (ICML), Baltimore, MD, USA, 17–23 July 2022.

96. Wang, Y.; Li, K.; Li, Y.; He, Y.; Huang, B.; Zhao, Z.; Zhang, H.; Xu, J.; Liu, Y.; Wang, Z.; et al. InternVideo: General Video Foundation Models via Generative and Discriminative Learning. arXiv 2022, arXiv:2212.03191.

97. Xu, J.; Liu, X.; Wu, Y.; Tong, Y.; Li, Q.; Ding, M.; Tang, J.; Dong, Y. ImageReward: Learning and Evaluating Human Preferences for Text-to-Image Generation. In Proceedings of the Advances in Neural Information Processing Systems, New Orleans, LA, USA, 10–16 December 2023.

98. Kirstain, Y.; Poliak, A.; Singer, U.; Levy, O. Pick-a-Pic: An Open Dataset of User Preferences for Text-to-Image Generation. In Proceedings of the Advances in Neural Information Processing Systems, New Orleans, LA, USA, 10–16 December 2023; Volume 36.

99. Wu, X.; Hao, Y.; Sun, K.; Chen, Y.; Zhu, F.; Zhao, R.; Li, H. Human Preference Score v2: A Solid Benchmark for Evaluating Human Preferences of Text-to-Image Synthesis. arXiv 2023, arXiv:2306.09341.

100. Wu, H.; Zhang, Z.; Zhang, W.; Chen, C.; Liao, L.; Li, C.; Gao, Y.; Wang, A.; Zhang, E.; Sun, W.; et al. Q-Align: Teaching LMMs for Visual Scoring via Discrete Text-Defined Levels. arXiv 2023, arXiv:2312.17090.

101. Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning Transferable Visual Models From Natural Language Supervision. In Proceedings of the 38th International Conference on Machine Learning (ICML), Virtual, 18–24 July 2021.

102. OpenAI. ChatGPT (Version 4). 2024. Available online: https://chatgpt.com/?model=gpt-4o (accessed on 8 July 2025).

103. Sun, Y.; Min, X.; Zhang, Z.; Gao, Y.; Cao, Y.; Zhai, G. Mitigating Low-Level Visual Hallucinations Requires Self-Awareness: Database, Model and Training Strategy. arXiv 2025, arXiv:2503.20673.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).