1. Introduction

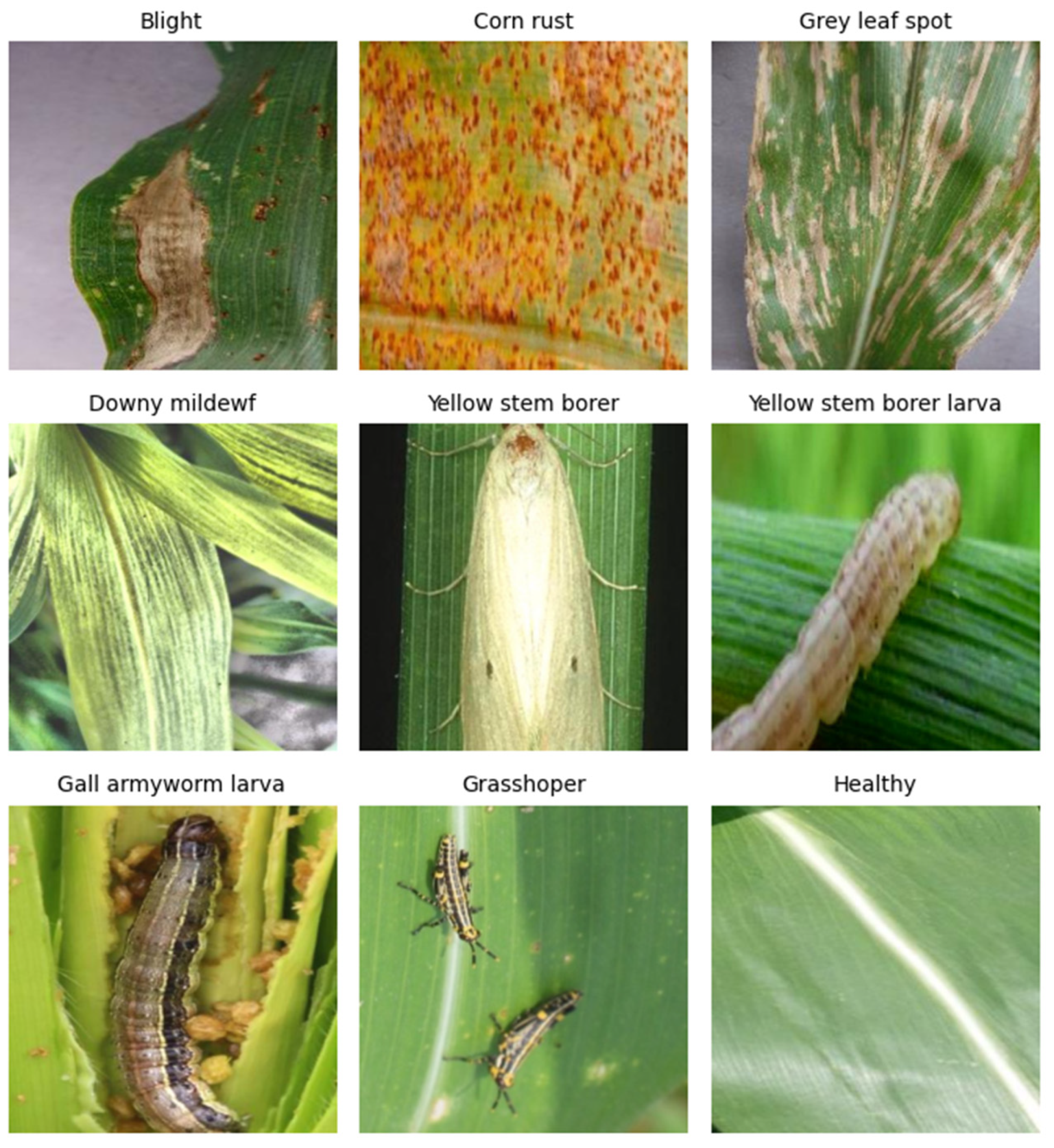

Maize is a strategic crop worldwide, serving as food, feed, and industrial raw material, and it plays a crucial role in maintaining food security and driving economic development [1,2,3]. However, frequent pest and disease outbreaks (such as pests eating leaves and fruits and viruses infecting plants) severely restrict maize yield and quality, becoming a bottleneck for the sustainable development of the industry [4]. The 2021 report of the Food and Agriculture Organization of the United Nations (FAO) shows that global crop production is reduced by 20% to 40% annually due to pests and diseases [5], highlighting the urgency and importance of pest and disease control.

In agricultural production, the early and precise identification of maize diseases and pests is of great significance for ensuring food safety, improving the quality and efficiency of agricultural products, and preventing economic losses. It is a key factor for the sustainable development of agriculture [6]. The traditional manual visual inspection method relies on the experience of technicians to determine the type and severity of diseases and pests. Its drawbacks are obvious: it is highly subjective, because differences in knowledge and practical experience among personnel can lead to significant deviations in judgment. At the same time, inspecting each plant in large areas of farmland one by one is time-consuming, labor-intensive, and extremely inefficient. Manual inspection therefore cannot meet the demand for efficient and accurate identification of diseases and pests as agriculture scales up and modernizes, and it cannot support timely and precise prevention and control.

With the development of computers and machine learning technologies, machine vision technology based on neural networks has been widely applied in crop pest and disease detection due to its advantages, such as high recognition efficiency and short recognition time [7]. Early research on plant disease recognition mainly relied on traditional machine learning methods, following the technical paradigm of “feature engineering + classifier”: extracting lesion areas through image preprocessing, such as threshold segmentation and morphological operations; manually designing features such as HSV color features, GLCM texture features [8], the LBP operator [9], and Hu invariant moments as shape features; and then combining them with classifiers such as SVM [10], random forest [11], or KNN [12] to build models. Although these methods are effective under specific experimental conditions, they have obvious shortcomings. Their recognition accuracy is low, and they easily confuse pests and diseases with similar features; accuracy degrades further when early symptoms are not yet obvious. The image processing pipeline is complex, recognition is slow, and results are easily affected by environmental factors such as lighting and occlusion. When large areas are monitored, results are difficult to deliver in time, which may delay prevention and control. In addition, the multi-stage structure of these models makes training and deployment difficult and imposes high hardware requirements, so grassroots agricultural units with limited equipment struggle to apply them, which seriously limits their adoption and practical value.
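The “feature engineering + classifier” paradigm described above can be illustrated with a minimal sketch. It assumes a hand-crafted HSV hue histogram as the only feature and a k-nearest-neighbor vote as the classifier; the toy pixel lists, the class labels, and the helper names `hue_histogram` and `knn_predict` are hypothetical and are not taken from any of the cited studies.

```python
import colorsys
from collections import Counter

def hue_histogram(pixels, bins=6):
    """Hand-crafted colour feature: normalised histogram of HSV hue."""
    counts = [0] * bins
    for r, g, b in pixels:
        h, _s, _v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
        counts[min(int(h * bins), bins - 1)] += 1
    total = float(len(pixels))
    return [c / total for c in counts]

def knn_predict(train, query, k=3):
    """Classify a feature vector by majority vote among its k nearest neighbours."""
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    nearest = sorted(train, key=lambda item: sq_dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# Toy "images": flat lists of RGB pixels (hypothetical data).
healthy_a = [(40, 160, 50)] * 90 + [(60, 170, 70)] * 10    # mostly green
healthy_b = [(50, 150, 55)] * 100
rust_a = [(40, 160, 50)] * 50 + [(170, 90, 30)] * 50       # green with brown lesions
rust_b = [(45, 150, 50)] * 40 + [(160, 80, 25)] * 60

train = [
    (hue_histogram(healthy_a), "healthy"),
    (hue_histogram(healthy_b), "healthy"),
    (hue_histogram(rust_a), "rust"),
    (hue_histogram(rust_b), "rust"),
]

query = hue_histogram([(42, 158, 52)] * 55 + [(165, 85, 28)] * 45)
prediction = knn_predict(train, query, k=3)
print(prediction)
```

Because the feature is fixed by hand, a change in lighting that shifts hue moves every sample in feature space, which is one way to see the sensitivity to environmental factors noted above.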

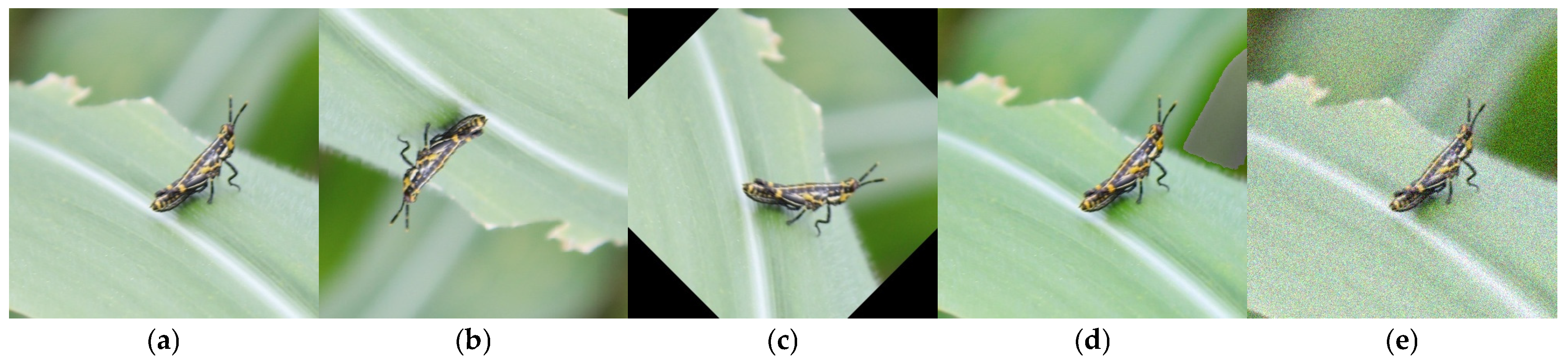

Since Hinton et al. first introduced the concept of deep learning [13], this technology has developed rapidly in the field of pest and disease detection. By constructing an end-to-end feature learning framework, deep learning has greatly reduced the workload of manual feature engineering and significantly improved the speed and accuracy of pest and disease recognition [14]. Its core technical process has three steps: first, building a large-scale training set through data preprocessing methods such as random cropping and normalization; then, designing a multi-layer convolutional neural network (CNN) architecture to automatically extract hierarchical features, where the shallow layers capture low-level visual features such as edges and textures, and the deep layers aggregate morphological features and semantic information of disease spots; finally, using the backpropagation algorithm together with a loss function such as cross-entropy to drive the iterative update of network parameters and the learning of feature representations [15]. A large number of studies have shown that deep learning methods significantly outperform traditional machine learning techniques in terms of recognition accuracy.
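The last step of this process, a cross-entropy loss driving gradient updates, can be sketched in a few lines. This is a simplified illustration under stated assumptions: the update is applied directly to the logits of a single sample rather than to the weights of a real CNN, and the logit values, target class, and learning rate are hypothetical.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of class scores."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]

def cross_entropy(logits, target):
    """Cross-entropy loss for one sample whose true class index is `target`."""
    return -math.log(softmax(logits)[target])

def grad_logits(logits, target):
    """Gradient of the loss w.r.t. the logits: softmax(z) minus the one-hot target."""
    probs = softmax(logits)
    return [p - (1.0 if i == target else 0.0) for i, p in enumerate(probs)]

logits = [0.2, 1.5, -0.3]   # hypothetical scores for three classes
target = 0                  # hypothetical true class
lr = 0.5                    # hypothetical learning rate

loss_before = cross_entropy(logits, target)
g = grad_logits(logits, target)
updated = [z - lr * gi for z, gi in zip(logits, g)]   # one gradient-descent step
loss_after = cross_entropy(updated, target)
print(loss_before, loss_after)
```

In a full network, backpropagation carries this same gradient from the logits back through every layer, so that the convolutional filters themselves are adjusted.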

Current research on deep-learning-based crop pest and disease recognition mainly follows two technical routes. The first comprises two-stage detection algorithms, represented by R-CNN [16], Fast R-CNN [17], and Faster R-CNN [18], which achieve high-precision detection through candidate region generation followed by precise localization and classification. For instance, Fuentes et al. combined Faster R-CNN and ResNet to achieve real-time recognition of tomato pests and diseases [19]; Zhang et al. proposed MF3R-CNN, which achieved an mAP of 83.34% in real scenarios [20]; and Alruwaili M et al. proposed the RTF-RCNN model for tomato leaf disease detection, whose accuracy of 97.42% was higher than that of AlexNet (96.32%) and a CNN model (92.21%) [21]. However, these algorithms have high computational complexity and strict hardware requirements, which restrict their application at the grassroots level.

The second route comprises single-stage detection algorithms, represented by SSD [22] and the YOLO series [23], which do not require the generation of candidate regions and have a significant advantage in detection speed [24]. Among them, the YOLO algorithm has become a research hotspot due to its balance of accuracy and real-time performance: Yang Li et al. designed the DAC-YOLOv4 model, achieving an average precision of 72.7% for strawberry powdery mildew detection [25]; Yang et al. proposed Maize-YOLO, which optimized detection performance by integrating modules such as CSPResNeXt-50 [26]; and Yang H et al. proposed the YOLO-SDW algorithm, which enhanced model adaptability by introducing SPD-Conv and the Wise-IoU V3 loss function [27]. However, existing models often grow more complex in pursuit of accuracy or speed, which degrades real-time performance and raises deployment costs. There is an urgent need for detection solutions that balance accuracy, speed, and model size.

Ultralytics released the open-source target detection model YOLOv8 in January 2023, which has advanced the field of target detection research [28]. Chen Z et al. proposed the YOLOv8-ACCW model by integrating AKConv, the CA mechanism, the CARAFE module, and the Wise-IoU loss function to optimize feature extraction and detection accuracy. The results demonstrated that the model achieved an F1 score, mAP50, and mAP50-95 of 92.4%, 92.8%, and 73.8%, respectively, representing increases of 3.1%, 3.1%, and 5.6% compared to the original algorithm, while reducing model parameters and computational cost by 6.6% and 8.5%, respectively [29]. Jiang T et al. developed the lightweight YOLOv8-GO model by introducing a global attention mechanism (GAM) before the SPPF layer and optimizing the basic convolution, bottleneck, and C2F module to enhance feature fusion and reduce computational complexity [30]. This model achieved an mAP50 of 88.4% with 9.1 GFLOPs and a running speed of 275.1 FPS, making it suitable for resource-constrained environments. Chen D et al. proposed the YOLOv8-MDN-Tiny model for small-scale passion fruit disease detection, which used an MFSO structure to expand small target feature pixels and an improved DyRep module for multi-scale feature fusion. With the NWD loss function and model compression techniques, this lightweight model outperformed YOLOv8s in small target detection accuracy [31].

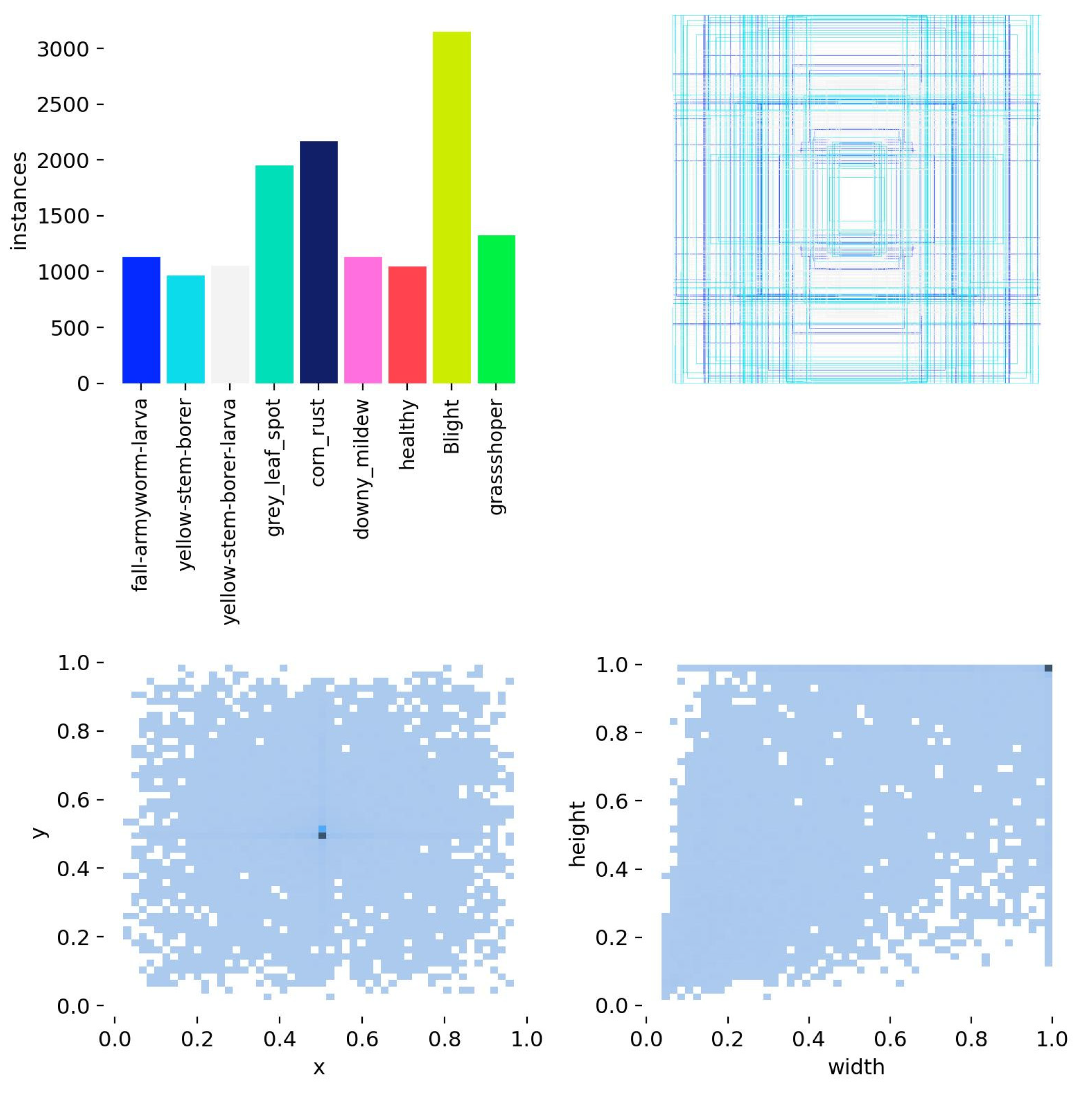

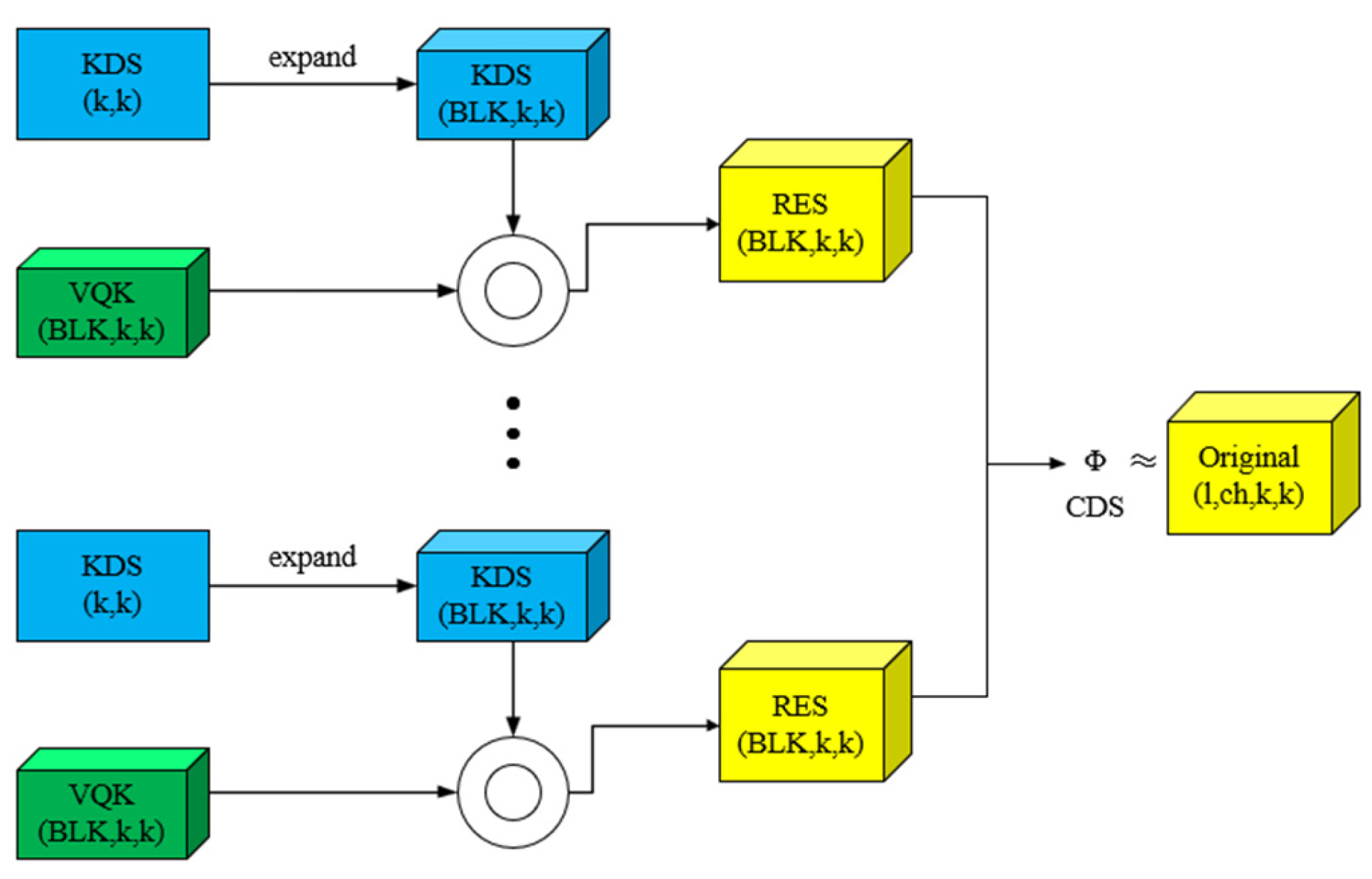

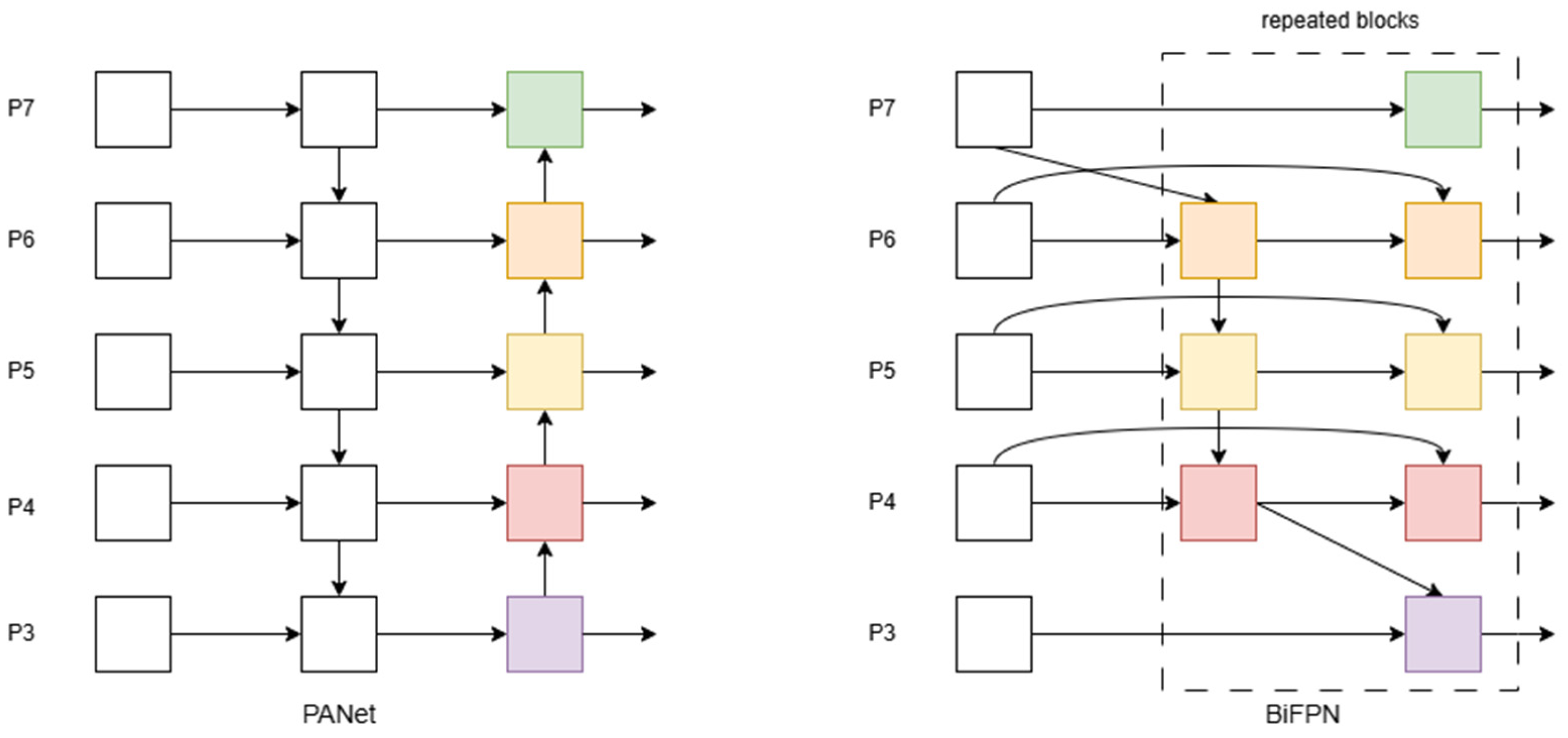

Although previous studies have made progress in crop pest and disease detection, a tension remains between model complexity and the constraints of embedded deployment. To address this issue, this paper proposed a lightweight maize leaf pest and disease detection method based on an improved YOLOv8. First, the Distribution Shift Convolution (DSConv) module was adopted to replace the traditional Conv layer, reducing memory usage and computational cost through kernel decomposition. Second, BiFPN was introduced to construct a bidirectional feature pyramid, enhancing the multi-scale feature fusion capability. Third, the Wise-IoU loss function was used to optimize the bounding box regression, improving the detection accuracy for complex pests and diseases. Together, these changes jointly optimized detection performance and model size.
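The multi-scale fusion idea behind BiFPN can be sketched as a weighted sum of feature maps. The sketch below assumes the "fast normalized fusion" rule from the original BiFPN formulation (non-negative learnable weights divided by their sum plus a small epsilon); whether this paper uses exactly that variant is not stated here. Feature maps are flattened to plain lists for brevity, and the values and weights are hypothetical.

```python
def fast_normalized_fusion(features, weights, eps=1e-4):
    """Fuse same-shaped feature maps with non-negative, normalised weights.

    features: list of equally long lists (flattened feature maps).
    weights:  one learnable scalar per input feature map.
    """
    w = [max(0.0, x) for x in weights]      # ReLU keeps each weight non-negative
    denom = eps + sum(w)                    # eps avoids division by zero
    n = len(features[0])
    return [sum(wi * f[j] for wi, f in zip(w, features)) / denom for j in range(n)]

# Two hypothetical feature maps already resized to the same resolution.
p_shallow = [1.0, 2.0, 3.0]
p_deep = [3.0, 2.0, 1.0]

fused = fast_normalized_fusion([p_shallow, p_deep], weights=[1.0, 3.0])
print(fused)
```

In a real BiFPN layer the inputs are first resampled to a common resolution and the fused map is passed through a convolution; both steps are omitted in this sketch.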

3. Results

3.1. Experimental Environment and Parameter Settings

To ensure the effectiveness of deep learning model training, this study built a targeted experimental platform. The experimental environment was based on the Windows 11 operating system. The specific system hardware configuration and software development environment are shown in Table 1.

Based on the hardware configuration and experimental requirements, this study tuned the training parameters to balance computing resource consumption and model performance, improve training efficiency, and accelerate model convergence. This setup ensured that the experiments were carried out stably under unified conditions. The specific parameter configuration is detailed in Table 2.

3.2. Evaluation Index

Precision (P), recall (R), F1 score, mean average precision (mAP), frames per second (FPS), memory usage (MB), number of parameters (Params), and giga floating-point operations (GFLOPs) were used as evaluation metrics in this study. F1 score and mAP served as the core indicators: F1 is the harmonic mean of precision and recall, and mAP is the mean, over all categories, of the precision averaged across recall levels. These metrics measured the accuracy of model recognition, with mAP50 denoting the mAP at an IoU threshold of 0.5. FPS and MB reflected the running speed and resource usage of the model, respectively. Params and GFLOPs reflected the size and computational complexity of the model, respectively: the smaller the Params, the lighter the model, and the lower the GFLOPs, the lower the computational requirements. Together, these two metrics indicated the applicability of the model on resource-constrained devices.
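The IoU threshold in mAP50 decides whether a predicted box counts as a correct detection. As a minimal sketch, assuming boxes are given as `(x1, y1, x2, y2)` corner coordinates and that the class labels already match, the test can be written as follows; the box values and the helper names `iou` and `is_true_positive` are hypothetical.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0

def is_true_positive(pred_box, gt_box, threshold=0.5):
    """A prediction matches a ground-truth box when IoU reaches the threshold."""
    return iou(pred_box, gt_box) >= threshold

gt = (0.0, 0.0, 10.0, 10.0)
close_pred = (2.0, 0.0, 12.0, 10.0)   # overlaps 80 of 120 units of union
far_pred = (5.0, 0.0, 15.0, 10.0)     # overlaps 50 of 150 units of union

print(iou(gt, close_pred), is_true_positive(close_pred, gt))
print(iou(gt, far_pred), is_true_positive(far_pred, gt))
```

Predictions that pass this test are counted as TP, those that fail as FP, and ground-truth boxes left unmatched as FN.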

Precision measures the proportion of samples predicted as positive that are truly positive, calculated as follows:

$$P = \frac{TP}{TP + FP}$$

Recall measures the proportion of actual positive samples that the model correctly detects, calculated as follows:

$$R = \frac{TP}{TP + FN}$$

where TP is the number of correctly detected targets, FP is the number of incorrect detections (false alarms), and FN is the number of targets that the model failed to detect.

AP is the area under the precision-recall (P-R) curve and indicates the average precision for one category. The mAP value is obtained by averaging the AP values of all categories, and the larger the mAP value, the better the performance of the model. The calculation formulas are as follows:

$$AP = \int_{0}^{1} P(R)\,dR, \qquad mAP = \frac{1}{N}\sum_{i=1}^{N} AP_i$$

where N is the number of categories and AP_i is the AP of the i-th category.

The F1 score is the harmonic mean of precision and recall and is often used to evaluate the overall performance of the model. It is calculated as follows:

$$F1 = \frac{2 \times P \times R}{P + R}$$
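The metric definitions in this subsection can be checked numerically with a short sketch. It assumes the AP integral is approximated with the trapezoid rule over a few sampled points of the P-R curve, which is a simplification; detection toolkits typically use an interpolated precision envelope instead. All counts and curve points below are hypothetical.

```python
def precision(tp, fp):
    """Fraction of predicted positives that are correct."""
    return tp / (tp + fp) if (tp + fp) else 0.0

def recall(tp, fn):
    """Fraction of actual positives that were detected."""
    return tp / (tp + fn) if (tp + fn) else 0.0

def f1_score(p, r):
    """Harmonic mean of precision and recall."""
    return 2.0 * p * r / (p + r) if (p + r) else 0.0

def average_precision(recalls, precisions):
    """Area under the P-R curve by the trapezoid rule (a simplifying assumption)."""
    pts = sorted(zip(recalls, precisions))
    area = 0.0
    for (r0, p0), (r1, p1) in zip(pts, pts[1:]):
        area += (r1 - r0) * (p0 + p1) / 2.0
    return area

def mean_average_precision(ap_per_class):
    """Mean of the per-category AP values."""
    return sum(ap_per_class) / len(ap_per_class)

# Hypothetical counts for one category.
p = precision(tp=80, fp=20)
r = recall(tp=80, fn=10)
f1 = f1_score(p, r)

# Hypothetical P-R curve samples and per-category AP values.
ap = average_precision([0.0, 0.5, 1.0], [1.0, 0.8, 0.6])
m_ap = mean_average_precision([ap, 0.9])
print(p, r, f1, ap, m_ap)
```

With these counts, F1 equals 2TP / (2TP + FP + FN) = 160 / 190, which is a convenient cross-check on the harmonic-mean form.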

3.3. DSConv Module’s Impact on Network Performance

To explore the influence of the DSConv module on network performance, ablation experiments were designed, as shown in Table 3. Three configurations were tested. In DSConv-backbone, DSConv modules replaced the Conv modules in the backbone network only, and the neck network was left unchanged. In DSConv-neck, DSConv modules replaced the Conv modules in the neck network only, and the backbone network was left unchanged. In DSConv-all, DSConv modules replaced the Conv modules in both the backbone and neck networks of YOLOv8n. Comparing the results of these three configurations made it possible to systematically evaluate the contribution of DSConv modules to overall performance at different network locations.

The DSConv module provides YOLOv8n with an efficient convolution mechanism. By adjusting the distribution of the convolution kernel weights, DSConv can adapt to different feature distributions, reducing memory usage and computational complexity while maintaining high accuracy. The module adopts a distribution shift and variable quantized kernel strategy, which reduces the memory footprint and speeds up computation, making it especially suitable for resource-constrained environments. DSConv also introduced an asymmetric convolution kernel design, which enhanced the ability of the model to detect multi-scale targets by decomposing the convolution kernel into two branches that use different kernel sizes for feature extraction. This design overcame a limitation of traditional convolution and enabled the model to handle targets of different scales more efficiently. Experimental results showed that after replacing the Conv module with the DSConv module in YOLOv8n, the detection accuracy and adaptability to multi-scale targets improved while high efficiency was maintained.

3.4. Ablation Experiments

To verify the effectiveness of the proposed improved algorithm, ablation tests were used for comparison. The results are shown in Table 4, where √ indicates that a module was applied and × indicates that it was not.

Table 4 shows that the improved algorithm, by adopting a more efficient network structure than YOLOv8n, improved accuracy while reducing model parameters and computational complexity. The results indicate that the DSConv module reduced the model parameters and computational load without reducing the accuracy of the algorithm. BiFPN improved the adaptability of the model to targets of different scales. The Wise-IoU bounding box loss function improved the localization quality of the detector and played a vital role in detecting multiple pests. With all three improvements combined, the model was the most compact: the parameter count fell to only 2.8 M and the computational cost to 7.6 GFLOPs, reductions of 6.6% and 7.3%, respectively, relative to the YOLOv8n baseline. This effectively reduced the difficulty and cost of deploying the model on mobile devices and improved accuracy while meeting real-time requirements.
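The percentages quoted above can be sanity-checked with simple arithmetic. The sketch below back-computes the baseline values implied by the stated figures (2.8 M and 7.6 G after reductions of 6.6% and 7.3%); these implied baselines are derived from the text as an assumption and are not read from Table 4. The function names are hypothetical.

```python
def implied_baseline(improved, reduction_pct):
    """Baseline value that yields `improved` after a relative reduction."""
    return improved / (1.0 - reduction_pct / 100.0)

def relative_reduction(baseline, improved):
    """Relative reduction in percent from `baseline` to `improved`."""
    return 100.0 * (baseline - improved) / baseline

# Values stated in the text for the improved model.
params_m = 2.8      # parameters, in millions
cost_g = 7.6        # computational cost, in giga operations

baseline_params = implied_baseline(params_m, 6.6)
baseline_cost = implied_baseline(cost_g, 7.3)
print(round(baseline_params, 2), round(baseline_cost, 2))

# Round-trip check: the implied baselines reproduce the stated percentages.
print(round(relative_reduction(baseline_params, params_m), 1))
print(round(relative_reduction(baseline_cost, cost_g), 1))
```

The implied baselines come out near 3.0 M parameters and 8.2 G, so the stated reductions are internally consistent to within rounding.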

3.5. Detection Comparison of Different Algorithms

To fully evaluate the performance of YOLOv8-DBW, this study used the maize leaf disease dataset to compare it with eight mainstream target detection algorithms: Faster R-CNN, SSD, YOLOv5s, YOLOv6n, YOLOv7Tiny, YOLOv8n, YOLOv9s, and YOLO11n. The results are shown in Table 5. Experimental results showed that YOLOv8-DBW had significant advantages in key performance indicators.

YOLOv8-DBW achieved a 16.8% improvement in mAP50 compared to the two-stage detection algorithm Faster R-CNN and a 19.2% improvement compared to the single-stage detection algorithm SSD, indicating a clear advantage in detection accuracy. YOLOv8-DBW also improved precision (P) and recall (R), further supporting its effectiveness and reliability in target detection tasks. It performed well against other YOLO models too, improving mAP50 by 5.2%, 4.6%, 1.4%, 1.5%, and 0.4% compared to YOLOv5s, YOLOv6n, YOLOv7Tiny, YOLOv8n, and YOLOv9s, respectively, while achieving performance close to that of the latest YOLO11n. These results reflect not only the progress of YOLOv8-DBW in algorithm optimization but also its ability to further improve detection accuracy while maintaining the consistently high speed of the YOLO series. YOLOv8-DBW also showed a clear advantage in parameter count: it has the smallest model size among all compared algorithms, which means it requires the least storage space and memory when deployed. This is a critical advantage for resource-constrained edge computing devices and mobile applications because it reduces hardware costs and energy consumption.

In summary, the YOLOv8-DBW algorithm stands out among many target detection algorithms due to its significant advantages in detection accuracy and model volume. These features make YOLOv8-DBW the preferred solution for practical applications, not only meeting high standards of accuracy and speed but also achieving an optimal balance between deployment costs and computational resource consumption, demonstrating excellent overall performance and broad application prospects.

3.6. Application of Experimental Results

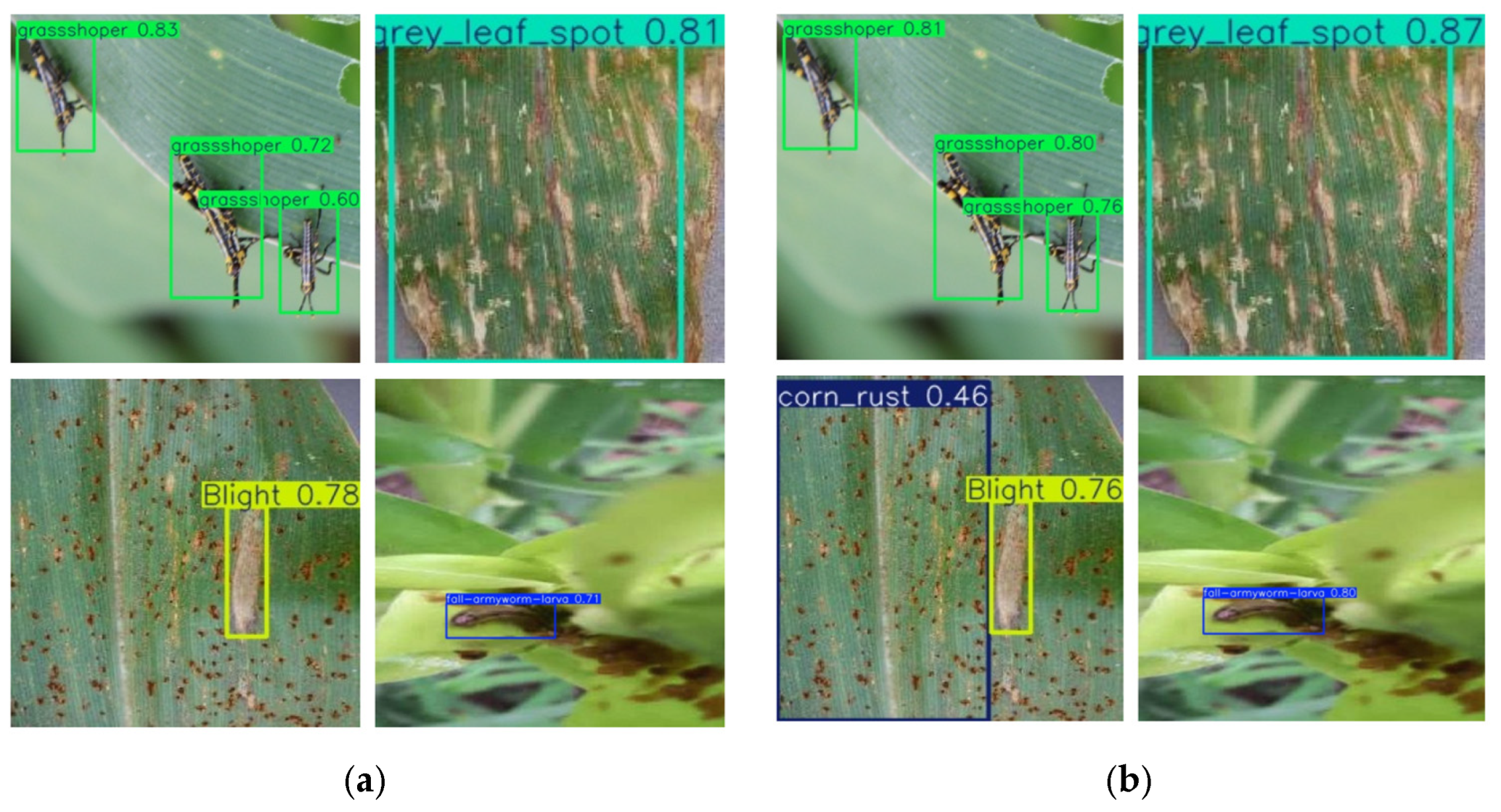

To illustrate the performance improvement of YOLOv8-DBW over the baseline algorithm YOLOv8n, we compared their detection results on the same set of images. The results are shown in Figure 8, where the detections of the baseline algorithm are displayed on the left and those of YOLOv8-DBW on the right. Compared with the baseline algorithm, the improved algorithm identified a variety of maize diseases and insect pests more accurately. In the task of maize leaf disease and pest detection, the algorithm could both locate the disease target accurately and classify it with high recognition accuracy. Even with a complex background or unclear disease and pest features, it maintained good detection performance, which supports its robustness and detection accuracy.

To further verify the detection performance of the model for maize leaf diseases and pests, Table 6 compares YOLOv8n and the improved YOLOv8-DBW model on each category. Overall, the improved model performed better than the baseline model in detecting the various diseases and pests and had more practical application value.

As shown in Figure 9, this study compared the precision-recall (PR) curves of the baseline YOLOv8n and the YOLOv8-DBW models. The quantitative analysis showed that YOLOv8-DBW improved the detection of the various disease and pest targets on maize leaves. Compared with the original model, mAP50 increased from 87.5% to 89.0%, a gain of 1.5 percentage points, which supports the effectiveness of the improved algorithm in optimizing target detection accuracy.

Figure 10 shows the confusion matrices of the YOLOv8n and YOLOv8-DBW models. In the visualization, the darker a color block on the main diagonal, the higher the correct detection rate of the model for the corresponding category. The darker a color block off the main diagonal, the more often the model confused the two categories corresponding to its horizontal (predicted category) and vertical (actual category) positions.
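How such a matrix is built can be sketched with a small example. It follows the layout described above, with rows indexing the actual category and columns the predicted category, and normalizes each row so that the diagonal reads as a per-category correct detection rate. The category names and labels are hypothetical, and the background class that detection confusion matrices usually include is omitted.

```python
def confusion_matrix(actual, predicted, classes):
    """Count matrix with rows = actual category, columns = predicted category."""
    index = {c: i for i, c in enumerate(classes)}
    matrix = [[0] * len(classes) for _ in classes]
    for a, p in zip(actual, predicted):
        matrix[index[a]][index[p]] += 1
    return matrix

def normalize_rows(matrix):
    """Divide each row by its total so the diagonal is a per-category hit rate."""
    normalized = []
    for row in matrix:
        total = sum(row)
        normalized.append([v / total if total else 0.0 for v in row])
    return normalized

classes = ["rust", "blight", "armyworm"]            # hypothetical categories
actual = ["rust", "rust", "rust", "blight", "blight", "armyworm"]
predicted = ["rust", "rust", "blight", "blight", "blight", "armyworm"]

counts = confusion_matrix(actual, predicted, classes)
rates = normalize_rows(counts)
for name, row in zip(classes, rates):
    print(name, [round(v, 2) for v in row])
```

Here the off-diagonal entry in the first row shows one "rust" sample predicted as "blight", which is the kind of pairwise confusion that the darker off-diagonal blocks in the figure indicate.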

4. Conclusions

An improved target detection model, YOLOv8-DBW, was proposed to detect maize leaf diseases and insect pests. Three enhancements were introduced: DSConv, BiFPN, and Wise-IoU. First, DSConv replaced traditional convolution to reduce memory usage and speed up computation while largely maintaining model accuracy. Second, BiFPN was introduced to construct a bidirectional feature pyramid structure, which enabled efficient information flow and fusion among features of different scales and enhanced the feature fusion ability of the model. Finally, the Wise-IoU bounding box loss function was used instead of CIoU in YOLOv8 to enhance the bounding box regression performance of the network and improve the detection of complex diseases and pests. Experimental results showed that YOLOv8-DBW improved the P, R, and mAP50 indices and also reduced parameters and computational complexity compared with existing advanced target detection algorithms, achieving a balance between minimizing model volume and maximizing detection accuracy. These improvements reduce the complexity and cost of deploying the model on mobile devices and improve detection efficiency, so that the model can better meet the real-time requirements of actual application scenarios.

Overall, YOLOv8-DBW provides a superior solution for maize leaf pest detection due to its high performance and wide applicability. Future work will focus on further optimizing the model structure to improve its robustness to extreme conditions and rare pest types while exploring its potential for application in other agricultural fields.