MSWF: A Multi-Modal Remote Sensing Image Matching Method Based on a Side Window Filter with Global Position, Orientation, and Scale Guidance

Abstract

1. Introduction

- (1) The proposed side window scale space, built with the side window filter (SWF), preserves contour information and increases the number of feature points extracted from most modalities.

- (2) To address the sensitivity of phase congruency (PC) to noise, a new noise threshold estimation method based on the Weibull distribution is proposed. It estimates noise levels more accurately and reduces the impact of noise on multi-modal remote sensing image (MRSI) matching.

- (3) A novel global-to-local cascade matching strategy based on the newly constructed scale space is proposed. Guided by the global matching results, the consistent position, orientation, and scale relationships between corresponding points are used to regenerate feature descriptors locally and perform cascade matching. Experimental results show that this strategy significantly improves the accuracy and reliability of the matching results.
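Contribution (1) rests on side window filtering (Yin, Gong, and Qiu, CVPR 2019): instead of averaging over a window centred on each pixel, the filter evaluates eight windows that have the pixel on one of their sides or corners and keeps the output closest to the original intensity, so the average does not straddle a contour. The sketch below is a minimal box-kernel illustration in plain Python, not the authors' implementation; the function name `side_window_box_filter`, the replicate border handling, and the parameters `r` and `iterations` are assumptions, and how MSWF stacks such filtered layers into its scale space is not specified here.

```python
from typing import List

Image = List[List[float]]

# The eight side windows, as (row_lo, row_hi, col_lo, col_hi) in units of r.
SIDE_WINDOWS = [
    (-1, 1, -1, 0),  # L: left half
    (-1, 1, 0, 1),   # R: right half
    (-1, 0, -1, 1),  # U: upper half
    (0, 1, -1, 1),   # D: lower half
    (-1, 0, -1, 0),  # NW quadrant
    (-1, 0, 0, 1),   # NE quadrant
    (0, 1, -1, 0),   # SW quadrant
    (0, 1, 0, 1),    # SE quadrant
]


def side_window_box_filter(img: Image, r: int = 1, iterations: int = 1) -> Image:
    """Box-kernel side window filter with replicate (clamped) borders."""
    h, w = len(img), len(img[0])
    cur = [list(row) for row in img]
    for _ in range(iterations):
        out = [[0.0] * w for _ in range(h)]
        for i in range(h):
            for j in range(w):
                best = None
                for r0, r1, c0, c1 in SIDE_WINDOWS:
                    total, count = 0.0, 0
                    for di in range(r0 * r, r1 * r + 1):
                        ii = min(max(i + di, 0), h - 1)  # clamp row index
                        for dj in range(c0 * r, c1 * r + 1):
                            jj = min(max(j + dj, 0), w - 1)  # clamp column index
                            total += cur[ii][jj]
                            count += 1
                    mean = total / count
                    # Keep the window whose mean is closest to the centre pixel.
                    if best is None or abs(mean - cur[i][j]) < abs(best - cur[i][j]):
                        best = mean
                out[i][j] = best
        cur = out
    return cur
```

On a vertical step edge with `r=1` this sketch reproduces the input exactly, whereas a centred 3×3 box filter would blur the two columns adjacent to the step; an isolated bright pixel, by contrast, is still attenuated.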
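Contribution (2) concerns the noise threshold in phase congruency. Kovesi's original formulation models the noise amplitude of the smallest-scale filter response with a Rayleigh distribution and sets the threshold as T = μ + kσ. The Rayleigh law is the special case of the Weibull distribution with shape 2, so a Weibull fit lets the shape adapt to the data. The sketch below fits a Weibull distribution by maximum likelihood and derives T from its mean and standard deviation. It illustrates the idea only and is not the estimator of this paper: the helper names `fit_weibull` and `weibull_noise_threshold`, the bisection solver, the default multiplier `k_sigma = 2.0`, and the choice of which response samples to pass in are all hypothetical.

```python
import math
from typing import Sequence, Tuple


def fit_weibull(samples: Sequence[float]) -> Tuple[float, float]:
    """Maximum-likelihood Weibull fit; returns (shape k, scale lam)."""
    x = [v for v in samples if v > 0]
    if len(x) < 2 or max(x) == min(x):
        raise ValueError("need at least two distinct positive samples")
    # The shape equation is scale-invariant, so dividing by the maximum
    # keeps z**k in [0, 1] and avoids overflow for large k.
    top = max(x)
    z = [v / top for v in x]
    logs = [math.log(v) for v in z]
    mean_log = sum(logs) / len(logs)

    def score(k: float) -> float:
        # Strictly increasing in k; its root is the ML estimate of the shape.
        p = [v ** k for v in z]
        return sum(pk * lk for pk, lk in zip(p, logs)) / sum(p) - 1.0 / k - mean_log

    lo, hi = 1e-3, 100.0
    for _ in range(100):  # bisection on the shape parameter
        mid = 0.5 * (lo + hi)
        if score(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    k = 0.5 * (lo + hi)
    # ML scale given k: lam = (mean of x**k) ** (1/k), rescaled back by top.
    lam = top * (sum(v ** k for v in z) / len(z)) ** (1.0 / k)
    return k, lam


def weibull_noise_threshold(samples: Sequence[float], k_sigma: float = 2.0) -> float:
    """Hypothetical threshold T = mean + k_sigma * std of the fitted Weibull."""
    k, lam = fit_weibull(samples)
    g1 = math.gamma(1.0 + 1.0 / k)
    g2 = math.gamma(1.0 + 2.0 / k)
    mean = lam * g1                                # Weibull mean
    std = lam * math.sqrt(max(g2 - g1 * g1, 0.0))  # Weibull standard deviation
    return mean + k_sigma * std
```

For Rayleigh-like data (shape 2, scale 1) the fitted mean is about 0.886 and the standard deviation about 0.463, giving T ≈ 1.81 with `k_sigma = 2.0`; heavier- or lighter-tailed noise would shift the fitted shape and therefore the threshold.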
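Contribution (3) uses the global matching result to constrain a second, local matching pass. One simple way to express "consistent position, orientation, and scale" is a single similarity transform fitted to the global matches: its rotation and scale could fix the orientation and scale used when descriptors are regenerated, and its predicted positions restrict where each local match is searched. The sketch below shows only this geometric part, under the assumption that one global similarity transform holds across the image pair; `fit_similarity`, `scale_and_rotation`, `guided_match`, the search `radius`, and the Euclidean descriptor distance are hypothetical choices, not the method described in this paper.

```python
import cmath
import math
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float]


def fit_similarity(src: Sequence[Point], dst: Sequence[Point]) -> Tuple[complex, complex]:
    """Least-squares similarity transform dst ~ a * src + b (points as complex numbers)."""
    zs = [complex(x, y) for x, y in src]
    ws = [complex(x, y) for x, y in dst]
    n = len(zs)
    zm, wm = sum(zs) / n, sum(ws) / n
    num = sum((w - wm) * (z - zm).conjugate() for z, w in zip(zs, ws))
    den = sum(abs(z - zm) ** 2 for z in zs)
    a = num / den
    return a, wm - a * zm


def scale_and_rotation(a: complex) -> Tuple[float, float]:
    """Global scale ratio and rotation angle in degrees encoded by a."""
    return abs(a), math.degrees(cmath.phase(a))


def guided_match(
    src_pts: Sequence[Point], src_desc: Sequence[Sequence[float]],
    dst_pts: Sequence[Point], dst_desc: Sequence[Sequence[float]],
    a: complex, b: complex, radius: float = 5.0,
) -> List[Tuple[int, int]]:
    """Match each source point only against targets near its predicted position."""
    matches = []
    for i, ((x, y), d) in enumerate(zip(src_pts, src_desc)):
        pred = a * complex(x, y) + b
        best_j: Optional[int] = None
        best_dist = math.inf
        for j, ((u, v), e) in enumerate(zip(dst_pts, dst_desc)):
            if abs(complex(u, v) - pred) > radius:
                continue  # outside the position gate
            dist = math.dist(d, e)
            if dist < best_dist:
                best_j, best_dist = j, dist
        if best_j is not None:
            matches.append((i, best_j))
    return matches
```

Representing points as complex numbers reduces the similarity fit to a single complex least-squares problem; the position gate also rejects distant targets whose descriptors happen to match well.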

2. Methodology

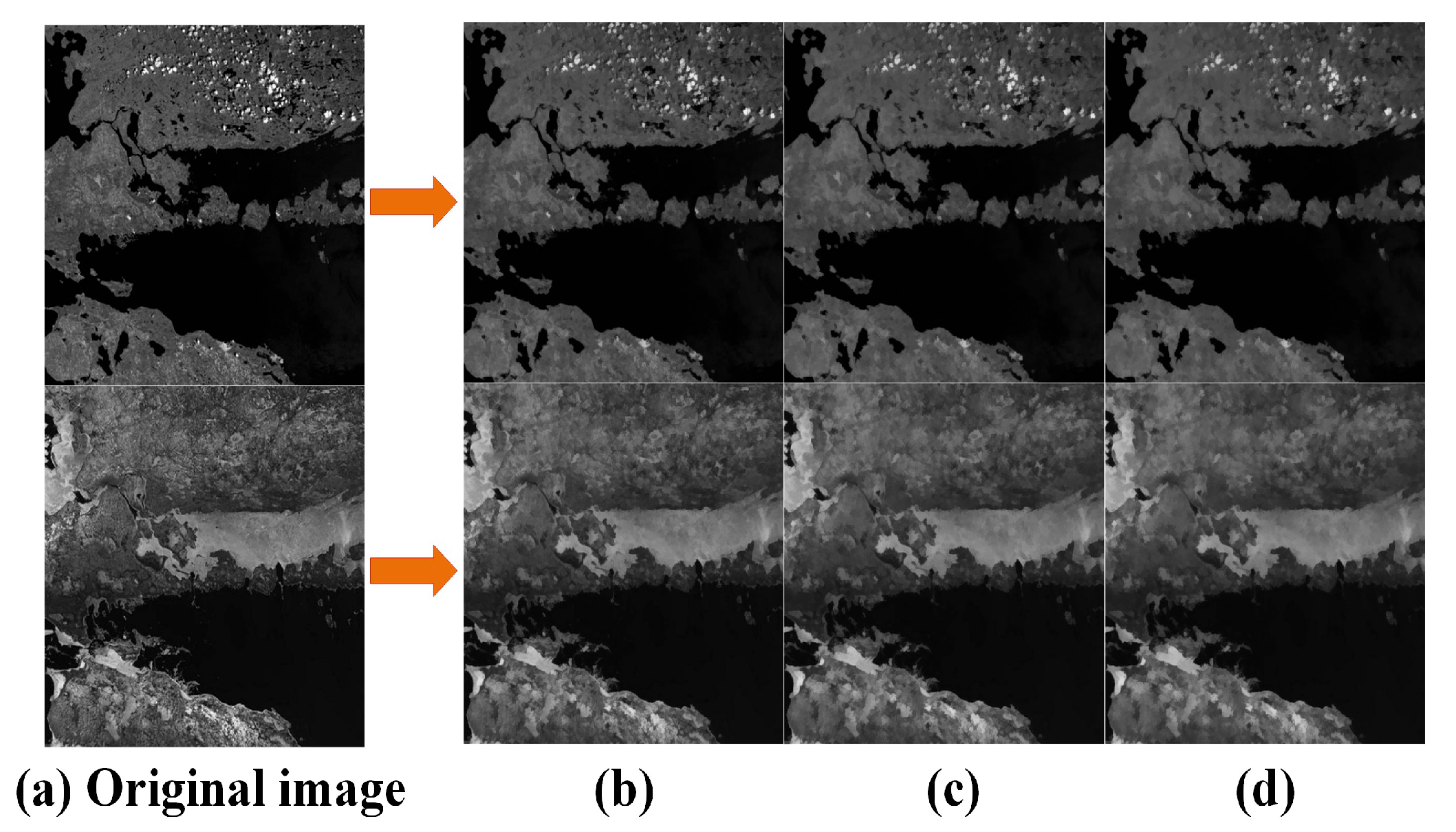

2.1. Side Window Scale Space Construction

2.2. Feature Extraction

2.2.1. Phase-Congruency Computation

2.2.2. Noise Estimation

2.3. Descriptor Generation

2.4. Cascade Matching Guided by Global Position, Orientation, and Scale Information

3. Experimental Results and Discussion

3.1. Experimental Setting

3.1.1. Image Datasets

3.1.2. Evaluation Criteria

3.1.3. Experimental Environments

3.2. Parameter Analysis

3.3. Matching Results Analysis

3.3.1. Qualitative Analysis

3.3.2. Quantitative Analysis

3.3.3. Analysis of Rotation Invariance

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Dataset Name | Modalities | Size (image pairs) | Source |
|---|---|---|---|
| COFSM | Optical, infrared, depth, map, SAR, day, night | 60 | https://skyearth.org/publication/project/CoFSM/ |
| HOWP | Optical, depth, infrared, map, SAR, day, night | 50 | https://skyearth.org/publication/project/HOWP/ |
| RGB-NIR | Optical, NIR | 477 | https://www.epfl.ch/labs/ivrl/research/downloads/rgb-nir-scene-dataset/ |

| Experiments | Variable | Fixed Parameters |
|---|---|---|
| Parameter | | |
| Parameter | | |

| Metric | 2 | 4 | 6 | 8 | 10 |
|---|---|---|---|---|---|
| RMSE | 2.23 | 2.27 | 2.13 | 2.242 | 2.247 |
| NCM | 815.3 | 819.2 | 875.09 | 826.23 | 821.65 |
| RCM | 80.5% | 80.08% | 81.6% | 81.8% | 82.0% |

| Metric | 10 | 20 | 30 | 40 | 50 |
|---|---|---|---|---|---|
| RMSE | 2.26 | 2.15 | 2.30 | 2.44 | 2.22 |
| NCM | 870.05 | 841.72 | 809.78 | 806.10 | 804.50 |
| RCM | 82.6% | 83.9% | 82.1% | 81.3% | 81.0% |

| Metric | 1 | 2 | 3 | 4 |
|---|---|---|---|---|
| RMSE | 2.34 | 2.29 | 2.15 | 2.25 |
| NCM | 829.56 | 839.16 | 841.72 | 818.41 |
| RCM | 81.5% | 81.78% | 83.9% | 82.28% |

| Metric | Stage | Optical | Infrared | Depth | Map | SAR | Day–Night |
|---|---|---|---|---|---|---|---|
| RMSE | Initial | 2.82 | 2.33 | 2.63 | 2.44 | 3.76 | 3.62 |
| RMSE | Improved | 1.69 | 1.66 | 1.81 | 1.90 | 2.81 | 2.87 |
| NCM | Initial | 553.05 | 762.83 | 342.48 | 408.32 | 424.63 | 358.67 |
| NCM | Improved | 1189.67 | 1217.88 | 941.3 | 671.67 | 710.6 | 519.45 |
| RCM | Initial | 71.7% | 80.9% | 71.8% | 81.3% | 54.9% | 46.2% |
| RCM | Improved | 90.9% | 90.2% | 90.7% | 88.3% | 70.7% | 59.0% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ye, J.; Yu, G.; Bao, H. MSWF: A Multi-Modal Remote Sensing Image Matching Method Based on a Side Window Filter with Global Position, Orientation, and Scale Guidance. Sensors 2025, 25, 4472. https://doi.org/10.3390/s25144472
