Abstract

Automated sleep stage classification is essential for objective sleep evaluation and clinical diagnosis. Although numerous algorithms have been developed, most existing methods rely on single-channel electroencephalogram (EEG) signals and neglect the complementary physiological information available from other channels. Standard polysomnography (PSG) recordings capture multiple concurrent biosignals, and effective integration of these multi-channel data is a critical factor for improving classification accuracy. Conventional multi-channel fusion techniques typically rely on simple concatenation, which does not adequately model cross-channel correlations and consequently limits classification performance. To overcome these shortcomings, we present MCAF-Net, a novel network architecture that employs temporal convolution modules to extract channel-specific features from each input signal and introduces a dynamic gated multi-head cross-channel attention mechanism (MCAF) to model the interdependencies between different physiological channels. Experimental results show that the proposed method successfully integrates information from multiple channels and outperforms most existing methods in sleep stage classification.

1. Introduction

Sleep is a fundamental pillar of human health, exerting profound influence on physical well-being, cognitive performance, and emotional stability [1]. Sleep deprivation or disruption is closely associated with a range of serious health conditions, including cardiovascular diseases, neurodegenerative disorders, and mental health issues [2]. Accurate sleep staging is essential for the diagnosis of sleep disorders and the assessment of sleep quality [3]. Clinically, polysomnography (PSG) remains the gold standard for sleep analysis, as it simultaneously records multiple physiological signals, such as electroencephalogram (EEG), electrooculogram (EOG), and electromyogram (EMG) [4]. According to the guidelines established by the American Academy of Sleep Medicine (AASM) [5,6], PSG signals are segmented into 30 s epochs and classified into five distinct stages: wakefulness (W), non-rapid eye movement (N1, N2, N3), and rapid eye movement (REM) sleep.

Historically, sleep staging relied on manual scoring by experts, a labor-intensive and subjective process prone to inefficiencies [7]. To address these limitations, automated sleep staging systems leveraging machine learning and deep learning have gained traction. Early approaches used traditional machine learning algorithms, such as k-nearest neighbor [8], support vector machines [9,10], and random forests [11,12], which required manual feature extraction [13] and were therefore heavily dependent on domain expertise. The advent of deep learning has since transformed sleep staging by enabling end-to-end feature learning. Convolutional neural networks (CNNs) have been widely adopted for their ability to extract spatial features from raw or time-frequency representations of PSG signals. For instance, Sors et al. [14] proposed a 14-layer CNN that processes a sequence of sleep epochs, leveraging contextual information from adjacent epochs to enhance stage classification. Similarly, recurrent neural networks (RNNs), such as SeqSleepNet [15], employ bidirectional RNNs to model temporal dependencies across sleep epochs, improving sequence-level analysis. Despite their strengths, CNNs often fail to capture temporal correlations, while RNNs face challenges with high computational complexity and limited parallelization. To address these limitations, hybrid models combining CNNs and RNNs have gained popularity. For example, DeepSleepNet [16] integrates CNNs with varied kernel sizes to extract features from single-channel EEG epochs, followed by a long short-term memory (LSTM) network to capture transitions between sleep stages. Likewise, SleepEEGNet [17] uses CNNs for time-invariant feature extraction and bidirectional RNNs to model contextual relationships between epochs. Additionally, Korkalainen et al. [18] combined convolutional and LSTM networks to investigate the impact of obstructive sleep apnea severity on staging accuracy, highlighting the potential of hybrid architectures for specific clinical applications.

Inspired by Transformer networks, recent studies have adopted attention mechanisms to accomplish the task of sleep stage classification. Eldele et al. [19] introduced a temporal context encoder leveraging causal convolution-enhanced multi-head self-attention for temporal dependency capture. SleepTransformer [20] employs a transformer backbone to model intra-epoch and inter-epoch dependencies, offering interpretable predictions but with moderate performance. Multi-channel approaches have also emerged to leverage the complementary information in PSG signals. Jia et al. [21] introduced a squeeze-and-excitation-based fusion method to integrate EEG and EOG features, though it risked losing local spatial information during global pooling. Similarly, MultiChannelSleepNet [22] uses transformer encoders for single-channel feature extraction and multi-channel fusion, but its concatenation-based fusion may underutilize inter-channel relationships.

While multi-channel PSG signals offer rich information, effective fusion of these signals remains a challenge. Simple concatenation or pooling strategies often fail to capture dynamic inter-channel interactions, and complex models can introduce computational overhead unsuitable for resource-constrained applications. To address these gaps, we propose MCAF-Net, a lightweight Multi-Channel Temporal Cross-Attention Network with Dynamic Gating for sleep stage classification. MCAF-Net processes time-frequency representations of EEG and EOG signals, employing a temporal convolution module to extract channel-specific features. A novel Channel-Aware Attention mechanism facilitates dynamic cross-channel feature fusion, enhanced by a gating mechanism that adaptively modulates feature contributions. This design ensures efficient information integration while maintaining a compact model architecture.

Our main contributions are as follows:

- We introduce a lightweight temporal convolution module that independently extracts temporal features from each PSG channel, preserving modality-specific information.

- We propose a Channel-Aware Attention module with dynamic gating, enabling adaptive and effective fusion of multi-channel features by modeling cross-channel interactions.

- MCAF-Net achieves superior performance on benchmark datasets (e.g., Sleep-EDF-20 and Sleep-EDF-78) while maintaining low computational complexity, making it suitable for real-world sleep monitoring applications.

2. Methodology

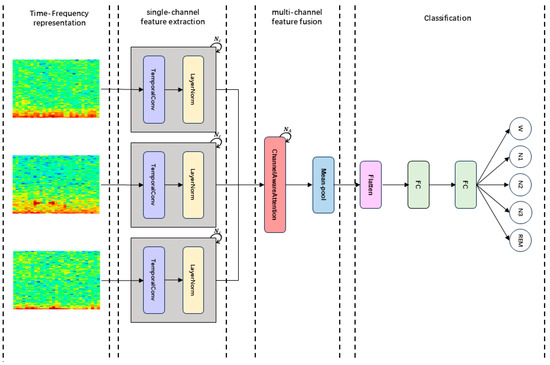

In this section, we present our model, MCAF-Net. As shown in Figure 1, it mainly consists of four modules: Time-Frequency representation, TemporalConv for single-channel feature extraction, MCAF for multi-channel feature fusion, and Classification.

Figure 1.

The overall architecture of MCAF-Net: (1) Constructing time-frequency representations of multi-channel signals. (2) Extracting features from each channel individually. (3) Integrating multi-channel features through a fusion block. (4) Performing classification via two fully connected layers.

2.1. Time-Frequency Representation

According to the AASM scoring manual [5], EEG, EOG, EMG, and major body movements serve as the basis for sleep staging, with specific waves and frequency components being critical features. For instance, low-frequency components in the 4–7 Hz range are frequently observed during the N1 stage, while sleep spindles (SS) or K-complexes (KC) are hallmark features of the N2 stage. Slow waves predominantly appear in the N3 stage. By applying the Short-Time Fourier Transform (STFT) and logarithmic scaling, we convert the raw signals from each channel into time-frequency images as model inputs, which effectively represent these specific waves and frequency components, thereby improving the accuracy of sleep staging.

2.2. Single-Channel Feature Extraction

Sleep represents a continuous physiological process in which transitions between distinct sleep stages exhibit intrinsic interdependencies rather than occurring in isolation. Consequently, the comprehension and modeling of such temporal dynamics are of paramount importance for sleep staging tasks.

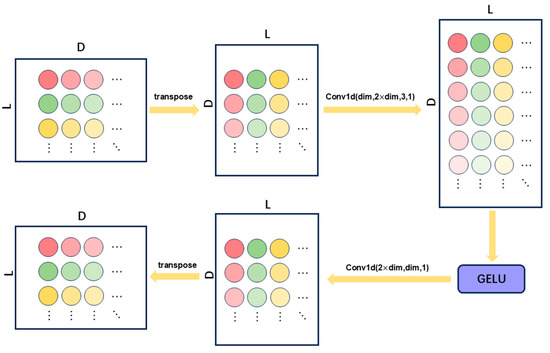

To effectively extract temporal features from time-frequency representations across individual channels, we propose a dedicated single-channel feature extraction module termed TemporalConv, designed to process each channel’s temporal data independently. As illustrated in Figure 2, the core architecture of TemporalConv comprises two convolutional layers, augmented with nonlinear activation functions and transposition operations to facilitate temporal feature extraction. This module demonstrates both high efficiency in capturing channel-wise temporal patterns and low computational complexity.

Figure 2.

Structure of TemporalConv.

The input tensor is first transposed from $(B, T, C)$ to $(B, C, T)$ (where $B$ denotes the batch size, $T$ represents the sequence length, and $C$ indicates the number of feature channels per time step) to accommodate the input format of 1D convolutional operations. A 1D convolutional layer is then applied independently to each channel, using a kernel size of 3 and padding of 1 to preserve the original temporal sequence length. The number of output channels is expanded to $2C$ to enhance feature representation capacity.

Nonlinearity is introduced via the GELU activation function, improving the model’s ability to capture complex temporal patterns. A convolutional layer subsequently reduces the feature dimension from $2C$ back to $C$, achieving both feature compression and parameter efficiency. Finally, the tensor is transposed back to its original $(B, T, C)$ configuration for seamless integration with subsequent processing modules.
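The TemporalConv steps described above (transpose, expanding convolution, GELU, compressing convolution, transpose back) can be sketched in NumPy as follows. This is a minimal illustration rather than the authors' implementation: the input layout (batch, time, features), the kernel size of 1 for the second convolution, the tanh approximation of GELU, and the helper names `conv1d_same` and `temporal_conv` are all assumptions made for the example.

```python
import numpy as np


def gelu(x):
    # tanh approximation of the GELU activation
    return 0.5 * x * (1.0 + np.tanh(np.sqrt(2.0 / np.pi) * (x + 0.044715 * x ** 3)))


def conv1d_same(x, weight, bias):
    """1D convolution over time that keeps the sequence length.

    x: (B, C_in, T); weight: (C_out, C_in, K) with odd K; bias: (C_out,).
    """
    k = weight.shape[-1]
    pad = k // 2
    t = x.shape[-1]
    xp = np.pad(x, ((0, 0), (0, 0), (pad, pad)))
    # Stack the K shifted views of the padded input: (B, C_in, T, K)
    windows = np.stack([xp[:, :, i:i + t] for i in range(k)], axis=-1)
    return np.einsum("bitk,oik->bot", windows, weight) + bias[None, :, None]


def temporal_conv(x, w1, b1, w2, b2):
    """x: (B, T, C) -> (B, T, C)."""
    h = x.transpose(0, 2, 1)      # (B, C, T): convolve along the time axis
    h = conv1d_same(h, w1, b1)    # expand C -> 2C, kernel 3, padding 1
    h = gelu(h)
    h = conv1d_same(h, w2, b2)    # compress 2C -> C (kernel size 1 is assumed)
    return h.transpose(0, 2, 1)   # back to (B, T, C)


rng = np.random.default_rng(0)
B, T, C = 2, 29, 8                # illustrative sizes only
x = rng.standard_normal((B, T, C))
w1, b1 = 0.1 * rng.standard_normal((2 * C, C, 3)), np.zeros(2 * C)
w2, b2 = 0.1 * rng.standard_normal((C, 2 * C, 1)), np.zeros(C)
y = temporal_conv(x, w1, b1, w2, b2)
```

Because both convolutions preserve the sequence length, the output has the same shape as the input, which is what allows the module to be placed in front of the fusion block without further reshaping.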

2.3. Multi-Channel Feature Fusion

Following single-channel feature extraction, MCAF-Net employs a multi-channel feature fusion module to enable inter-channel information interaction and integration. The inherent multi-channel characteristics of sleep signals make cross-channel feature fusion particularly essential, as temporal features from individual channels may be insufficient to comprehensively characterize the complex patterns of sleep stages.

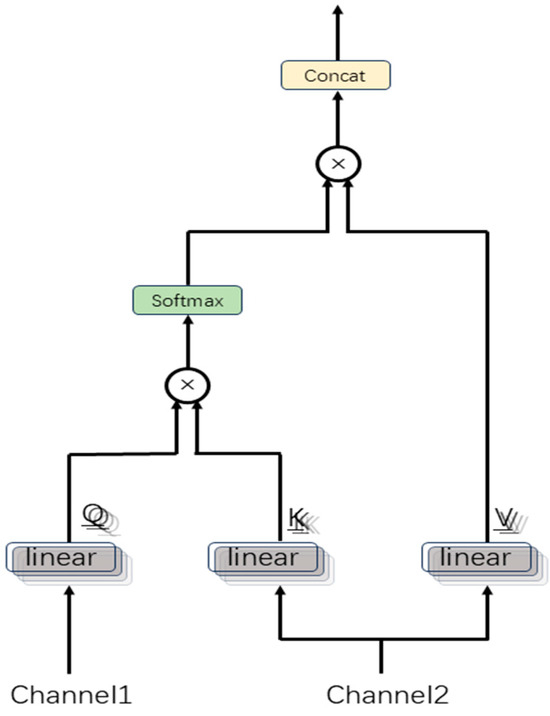

To address this, we propose an MCAF module that dynamically fuses multi-channel features through a multi-head cross-attention mechanism, as illustrated in Figure 3.

Figure 3.

Architecture of multi-head attention between two channels.

The input tensor $X \in \mathbb{R}^{B \times N \times T \times C}$ is projected into queries ($Q$), keys ($K$), and values ($V$) through linear layers, yielding the output $\mathrm{QKV} \in \mathbb{R}^{B \times N \times T \times 3C}$, which is then split along the last dimension into $Q, K, V \in \mathbb{R}^{B \times N \times T \times C}$ ($N$ represents the number of input channels).

Conventional attention mechanisms are limited to capturing feature relationships within a single subspace, thereby restricting their representational capacity. In contrast, the multi-head attention mechanism addresses this limitation by partitioning the features into multiple subspaces (“heads”), where attention computations are performed independently in each subspace. This enables the model to learn complex interactions from multiple perspectives in parallel.

Specifically, for each channel, we divide $C$ into sub-dimensions according to the number of heads $H$, $d_h = C / H$.

The multi-channel cross-attention mechanism is designed to model inter-channel dependencies among different physiological signal modalities. Specifically, the mechanism computes scaled dot products between queries ($Q$) from one channel and keys ($K$) from another channel to evaluate feature-level correlations across temporal positions.

These attention scores represent cross-channel attention distributions, quantifying how the representation of one channel at the current timestep attends to all temporal features of another channel.

Computing Attention Scores:

$$A_h^{(i,j)} = \frac{Q_h^{(i)} \left(K_h^{(j)}\right)^{\top}}{\sqrt{d_h}}$$

where $h$ denotes the attention head index, $i, j$ represent channel indices, and $\sqrt{d_h}$ serves as the scaling factor for gradient stabilization.

Apply softmax normalization to the attention scores:

$$\hat{A}_h^{(i,j)} = \mathrm{softmax}\left(A_h^{(i,j)}\right)$$

Compute the weighted features:

$$O_h^{(i)} = \sum_{j=1}^{N} \hat{A}_h^{(i,j)} V_h^{(j)}$$

The outputs of all heads are concatenated and reshaped to form $O$. To further integrate the multi-head representations and enhance the feature expressiveness, a linear transformation is applied:

$$Y = O W_O$$

where $W_O$ is a learnable parameter matrix.
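The attention steps above (projection, head split, scaled dot products between channels, softmax, weighted sum, output projection) can be illustrated with a short NumPy sketch. It is not the authors' code. The tensor layout (batch, channels, time, features), the single fused projection matrix `w_qkv`, the omission of bias terms, and in particular the choice to normalize the softmax jointly over all key channels and key time steps are assumptions made for the example; the source text does not pin these details down.

```python
import numpy as np


def softmax(x, axis=-1):
    z = x - x.max(axis=axis, keepdims=True)   # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


def cross_channel_attention(x, w_qkv, w_out, num_heads):
    """x: (B, N, T, C); w_qkv: (C, 3C); w_out: (C, C). Returns (output, weights)."""
    b, n, t, c = x.shape
    d = c // num_heads
    q, k, v = np.split(x @ w_qkv, 3, axis=-1)            # each (B, N, T, C)

    def heads(a):                                         # -> (B, H, N, T, d)
        return a.reshape(b, n, t, num_heads, d).transpose(0, 3, 1, 2, 4)

    q, k, v = heads(q), heads(k), heads(v)
    # scores[b, h, i, t, j, s]: query at (channel i, time t) vs key at (channel j, time s)
    scores = np.einsum("bhitd,bhjsd->bhitjs", q, k) / np.sqrt(d)
    # Assumption: normalize jointly over all key channels j and key times s.
    flat = scores.reshape(b, num_heads, n, t, n * t)
    weights = softmax(flat, axis=-1).reshape(scores.shape)
    out = np.einsum("bhitjs,bhjsd->bhitd", weights, v)    # weighted sum of values
    out = out.transpose(0, 2, 3, 1, 4).reshape(b, n, t, c)  # concatenate heads
    return out @ w_out, weights


rng = np.random.default_rng(0)
B, N, T, C, H = 2, 3, 29, 16, 4                           # illustrative sizes only
x = rng.standard_normal((B, N, T, C))
w_qkv = 0.1 * rng.standard_normal((C, 3 * C))
w_out = 0.1 * rng.standard_normal((C, C))
y, attn = cross_channel_attention(x, w_qkv, w_out, num_heads=H)
```

Under the joint normalization assumed here, the weights for each query position sum to one across all channels, so the share of attention that one channel places on another can be read off directly by summing over key time steps.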

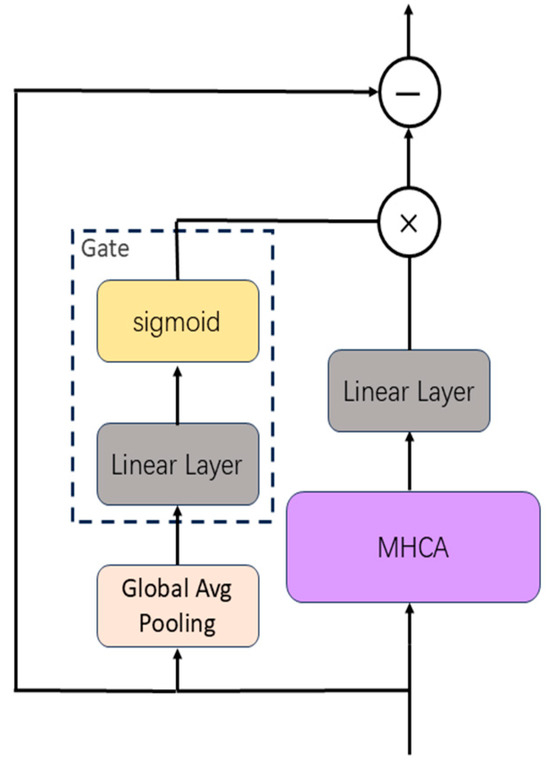

Multi-channel physiological signals are characterized by high dimensionality, noise contamination, non-stationarity, and substantial inter-sample variability. Different signal patterns across samples may require varying degrees of attention and output contribution. To adaptively modulate the contribution of attention outputs, we propose a dynamic gating mechanism, as illustrated in Figure 4.

Figure 4.

Illustration of the MCAF mechanism integrating gated multi-head cross-attention with residual connections.

First, we compute the mean of the input $X$ along both channel and temporal dimensions to extract global features, compressing the multi-channel temporal information into a batch-wise feature vector $\bar{x} \in \mathbb{R}^{B \times C}$ that captures the global context of the input.

The gating module (linear layer + sigmoid) generates scalar weights:

$$g = \sigma\left(W_g \bar{x} + b_g\right)$$

where $g \in \mathbb{R}^{B \times 1}$ represents sample-adaptive scaling factors that modulate how the attention output contributes to the fused output. $W_g$ denotes the learnable weight matrix and $b_g$ represents the bias term.

The gate weights are broadcast to $(B, 1, 1, 1)$, then multiplied by the projected output $Y$ of the attention block, and finally combined with input $X$ via residual connection. The fused feature is expressed as:

$$Z = X + g \odot Y$$

The gating mechanism modulates the magnitude of fused features, while the residual connections ensure direct information propagation, effectively mitigating gradient vanishing. This gated residual refinement enhances the expressive power of feature representations, significantly improving the model’s capacity to capture complex sleep stage patterns.
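The gated residual fusion can be sketched in a few lines of NumPy. This is an illustration under stated assumptions, not the authors' implementation: the tensor layout (batch, channels, time, features), a gate with a single output unit per sample, and the function name `gated_residual` are all hypothetical.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def gated_residual(x, y, w_g, b_g):
    """x, y: (B, N, T, C); w_g: (C, 1); b_g: (1,). Returns (x + g * y, g)."""
    context = x.mean(axis=(1, 2))             # (B, C): mean over channel and time axes
    g = sigmoid(context @ w_g + b_g)          # (B, 1): one gate value in (0, 1) per sample
    fused = x + g[:, None, None, :] * y       # gate broadcast to (B, 1, 1, 1)
    return fused, g


rng = np.random.default_rng(0)
B, N, T, C = 2, 3, 29, 16                     # illustrative sizes only
x = rng.standard_normal((B, N, T, C))         # input to the fusion block
y = rng.standard_normal((B, N, T, C))         # stand-in for the projected attention output
w_g, b_g = np.zeros((C, 1)), np.zeros(1)      # zero weights give a gate of exactly 0.5
fused, g = gated_residual(x, y, w_g, b_g)
```

With a gate near zero the block reduces to the identity mapping, and with a gate near one it adds the full attention output, so the gate interpolates between ignoring and fully trusting the cross-channel interaction for each sample.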

2.4. Classification

First, the output of the multi-channel fusion block is flattened into a one-dimensional feature vector per sample. The features are subsequently processed by a module comprising two fully connected layers, which integrate the representations derived from prior structures for sleep stage classification. The first fully connected layer employs a ReLU activation function, followed by a dropout layer to mitigate overfitting. A softmax function is then applied to produce probability distributions across mutually exclusive sleep stage classes. During model training, we employ the AdamW optimizer [23], which decouples weight decay from the gradient-based update and thereby replaces the conventional L2 regularization used with standard Adam [24].

2.5. Experiments

2.5.1. Dataset

To evaluate the performance of our proposed model, we conducted experiments on two publicly available datasets: SleepEDF-20 and SleepEDF-78. These are described in Table 1.

Table 1.

Details of employed datasets (each data sample is a 30 s epoch).

SleepEDF-20: This subset of the Sleep-EDF Expanded dataset (2013 version) [25,26] comprises polysomnography (PSG) recordings from 20 healthy subjects aged 25 to 34 years. Each subject was monitored for two consecutive nights, resulting in 39 whole-night PSG records, as the second night’s recording for subject 13 was lost due to equipment failure (e.g., cassette or laserdisc malfunction). Sleep experts manually annotated each 30 s epoch according to the Rechtschaffen and Kales (R&K) [27] criteria, classifying them into one of eight stages: Wake (W), Sleep Stages 1–4 (S1, S2, S3, S4), Rapid Eye Movement (REM), MOVEMENT, or UNKNOWN. Consistent with prior studies [15,19,22,28], we combined S3 and S4 into a single stage (N3) and excluded MOVEMENT and UNKNOWN epochs. For sleep stage classification, we utilized the Fpz-Cz EEG, Pz-Oz EEG, and ROC-LOC EOG (horizontal) channels, sampled at 100 Hz. Following established practice [16,22], only the 60 min time window centered around the in-bed period of each recording was analyzed.

SleepEDF-78: Derived from the expanded Sleep-EDF database (2018 version) [26,28], this dataset includes PSG recordings from 78 subjects aged 25 to 101 years, totaling 153 whole-night sleep records. Each subject was recorded for two nights, though equipment errors led to the loss of one record each for subjects 13, 36, and 52. Epochs of 30 s were manually scored using the same R&K standards as SleepEDF-20, with identical stage labels. As with SleepEDF-20, we merged S3 and S4 into N3 and removed MOVEMENT and UNKNOWN stages. The Fpz-Cz EEG, Pz-Oz EEG, and ROC-LOC EOG (horizontal) channels, sampled at 100 Hz, were used for sleep staging.
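The label harmonization applied to both subsets (merging S3 and S4 into N3 and discarding MOVEMENT and UNKNOWN epochs) can be sketched as follows. The label strings and the function name `to_aasm` are hypothetical; actual annotation files may encode the stages differently.

```python
# Map R&K annotations to the five classes used in this study.
# The label strings are illustrative, not the dataset's exact annotation text.
RK_TO_AASM = {
    "W": "W",
    "S1": "N1",
    "S2": "N2",
    "S3": "N3",   # S3 and S4 are merged into N3
    "S4": "N3",
    "REM": "REM",
}
EXCLUDED = {"MOVEMENT", "UNKNOWN"}


def to_aasm(rk_labels):
    """Return (kept_indices, aasm_labels), dropping MOVEMENT and UNKNOWN epochs."""
    kept, labels = [], []
    for idx, stage in enumerate(rk_labels):
        if stage in EXCLUDED:
            continue
        kept.append(idx)
        labels.append(RK_TO_AASM[stage])
    return kept, labels


kept, labels = to_aasm(["W", "S1", "MOVEMENT", "S3", "S4", "UNKNOWN", "REM"])
```

Returning the kept indices alongside the labels makes it straightforward to drop the corresponding signal epochs so that inputs and targets stay aligned.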

2.5.2. Parameter Settings

As described in Section 2.1, each 30 s epoch of polysomnography (PSG) signals was transformed into a time-frequency representation via a 256-point Short-Time Fourier Transform (STFT) with a 2 s Hamming window (50% overlap). The resulting spectra were converted to log-power spectra, producing a time-frequency matrix , where frequency bins and time points. For each channel, the time-frequency matrix was standardized to zero mean and unit variance across all elements prior to being fed into the MCAF-Net model.
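The preprocessing just described can be sketched in NumPy as follows, assuming a 100 Hz signal, no padding at the epoch boundaries, zero-padding of each 200-sample frame to the 256-point FFT length, and a natural logarithm with a small stabilizing constant. These details, and the function name `log_power_spectrogram`, are assumptions for illustration. Under them a 30 s epoch yields 29 frames and 129 one-sided frequency bins; whether the authors retain all of these bins is not specified here.

```python
import numpy as np

FS = 100          # sampling rate in Hz (from the dataset description)
EPOCH_SEC = 30    # epoch length in seconds
WIN = 2 * FS      # 2 s Hamming window -> 200 samples
HOP = WIN // 2    # 50% overlap -> hop of 100 samples
NFFT = 256        # FFT length; each frame is zero-padded from 200 to 256 samples


def log_power_spectrogram(epoch):
    """Return a standardized log-power spectrogram of shape (freq_bins, frames)."""
    n_frames = 1 + (len(epoch) - WIN) // HOP
    window = np.hamming(WIN)
    frames = np.stack(
        [epoch[i * HOP: i * HOP + WIN] * window for i in range(n_frames)]
    )
    spectrum = np.fft.rfft(frames, n=NFFT, axis=1)       # (frames, NFFT // 2 + 1)
    log_power = np.log(np.abs(spectrum) ** 2 + 1e-10)    # small constant avoids log(0)
    log_power = log_power.T                              # (freq_bins, frames)
    # Standardize to zero mean and unit variance over all elements of the matrix.
    return (log_power - log_power.mean()) / log_power.std()


rng = np.random.default_rng(0)
spec = log_power_spectrogram(rng.standard_normal(FS * EPOCH_SEC))
```

The frame count follows from (3000 - 200) / 100 + 1 = 29, which is a useful sanity check when reproducing the input dimensions.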

In the MCAF-Net architecture, the feature dimension was chosen to align with the input frequency bins. Each of the three PSG channels was processed by dedicated temporal convolution modules, each comprising a convolutional layer with a kernel size of , same padding, a depth-wise separable convolution with groups equal to , and an output channel expansion factor of 2, followed by GELU activation. Channel-specific features were integrated through cross-channel attention layers, each equipped with parallel attention heads. A dropout rate of was applied in the classification head, which comprised two fully connected layers: a hidden layer with units accepting an input of dimension , activated by ReLU, and an output layer with units corresponding to the five sleep stages (Wake, N1, N2, N3, REM).

The MCAF-Net model was implemented using PyTorch 2.4.1, with training conducted on an NVIDIA GeForce RTX 3050 Ti GPU (NVIDIA Corporation, Santa Clara, CA, USA). The model requires only 0.01 GFLOPs for a single forward pass, with a parameter count of 0.63M and a peak memory usage of 151.59 MB during inference. To evaluate the performance of MCAF-Net, we employed subject-wise k-fold cross-validation on the SleepEDF-20 and SleepEDF-78 datasets, dividing subjects into k groups with k values of 20 and 10, respectively, consistent with prior studies. During the training phase, we held out an independent validation set with the same number of subjects as the test set for model evaluation. The model was optimized using cross-entropy loss with the AdamW optimizer [23], configured with the following parameters: learning rate of , weight decay of , , , and . We employed a mini-batch size of and implemented an early stopping criterion that terminated training if the validation accuracy failed to improve for consecutive epochs.
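A subject-wise split of this kind can be sketched with the Python standard library. The rule used here for choosing the validation subjects (taking the fold that follows the test fold) is an assumption, since the text only states that the validation set contains as many subjects as the test set; the function name `subject_wise_folds` is likewise hypothetical.

```python
import random


def subject_wise_folds(subject_ids, k, seed=0):
    """Yield (train, val, test) subject lists for subject-wise k-fold cross-validation.

    The validation set is the fold after the test fold, so both hold the same
    number of subjects (up to rounding when the subjects do not divide evenly).
    """
    ids = sorted(subject_ids)
    random.Random(seed).shuffle(ids)
    folds = [ids[i::k] for i in range(k)]
    for i in range(k):
        val_idx = (i + 1) % k
        test = folds[i]
        val = folds[val_idx]
        train = [s for j, f in enumerate(folds) if j not in (i, val_idx) for s in f]
        yield train, val, test


# 20 subjects with k = 20 gives 1 test, 1 validation, and 18 training subjects per fold.
splits = list(subject_wise_folds(range(20), k=20))
```

Splitting by subject rather than by epoch keeps all recordings of a person in the same partition, which avoids leaking subject-specific signal characteristics from training into testing.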

3. Experimental Results

3.1. Sleep Staging Performance

Table 2 and Table 3 present the confusion matrices of MCAF-Net on the Sleep-EDF-20 and Sleep-EDF-78 datasets, with rows and columns representing ground truth and predicted labels, respectively. The right side of these tables reports per-class evaluation metrics for MCAF-Net and baseline methods, including Precision (PR, the proportion of correctly classified instances among those predicted as a given class), Recall (RE, the proportion of correctly predicted instances among all true instances of a given class), and F1-score (F1, the harmonic mean of Precision and Recall, providing a balanced measure of classification performance). The best values for each metric are highlighted in bold. The high values along the diagonal of the confusion matrices indicate accurate classification for most epochs. Although the classification performance for the N1 stage is slightly lower compared to other stages, MCAF-Net demonstrates significant improvements over baseline models in this regard.

Table 2.

Performance metrics and confusion matrix of the SleepEDF-20 dataset.

Table 3.

Performance metrics and confusion matrix of the SleepEDF-78 dataset.
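For reference, the per-class metrics defined above can be computed from a confusion matrix as in the following sketch. The 3-class matrix contains made-up counts that only exercise the function; it does not reproduce any value from Table 2 or Table 3, and the function name `per_class_metrics` is hypothetical.

```python
def per_class_metrics(cm):
    """cm[i][j]: number of epochs with true class i predicted as class j.

    Returns a list of (precision, recall, f1) tuples, one per class.
    """
    n = len(cm)
    results = []
    for c in range(n):
        tp = cm[c][c]
        predicted = sum(cm[r][c] for r in range(n))   # column sum: predicted as c
        actual = sum(cm[c])                           # row sum: truly c
        pr = tp / predicted if predicted else 0.0
        re = tp / actual if actual else 0.0
        f1 = 2 * pr * re / (pr + re) if pr + re else 0.0
        results.append((pr, re, f1))
    return results


# Toy 3-class matrix with made-up counts (rows: ground truth, columns: prediction).
toy_cm = [[8, 2, 0],
          [1, 6, 3],
          [0, 2, 8]]
metrics = per_class_metrics(toy_cm)
```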

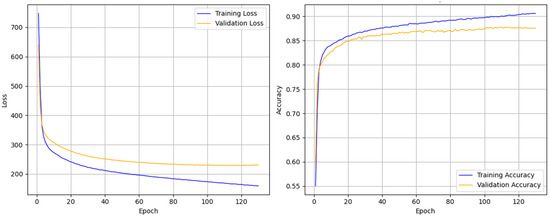

Figure 5 illustrates the loss and accuracy curves during the training process, demonstrating that MCAF-Net converges rapidly and achieves stable performance after only a few training epochs. Throughout training, the accuracy on the training set continuously improves, while the loss steadily decreases. Meanwhile, both the accuracy and loss on the validation set stabilize after a few epochs, indicating that MCAF-Net exhibits strong robustness in mitigating model overfitting.

Figure 5.

Accuracy and loss during training of MCAF-Net for a randomly selected fold (i.e., fold 6) in the SleepEDF-20 dataset.

3.2. Hypnogram

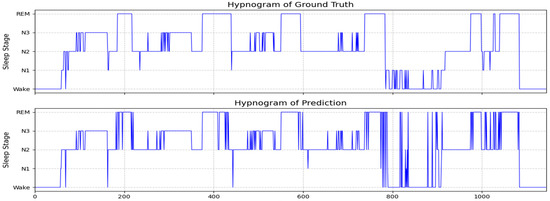

Figure 6 presents the hypnogram of subject SC4161E0 from the Sleep-EDF-20 dataset, displaying the ground truth annotations (upper panel) and corresponding predictions from the Multi-Channel Attention Fusion Network (MCAF-Net, lower panel). The predicted sleep stages demonstrate high concordance with the ground truth. For this subject, MCAF-Net achieved an accuracy of 88.6% and a macro-averaged F1-score of 0.75 in sleep stage classification. Error analysis reveals that most misclassifications occurred in the N1 stage, consistent with the results presented in Table 2.

Figure 6.

The ground truth sleep stages and MCAF-Net classification results are presented for subject SC4161E0 from the Sleep-EDF-20 dataset, with achieved performance metrics of 88.6% accuracy and 0.75 F1-score.

Visually, the MCAF-Net-generated hypnogram exhibits slightly reduced temporal smoothness compared to the ground truth, with occasional abrupt stage transitions. This phenomenon primarily stems from our input processing strategy, where temporal sequences were shuffled during training, intentionally disregarding inter-epoch dependencies to reduce model complexity. Despite this architectural simplification, the proposed framework maintains superior classification performance while offering significant computational efficiency advantages, making it particularly suitable for practical clinical applications.

3.3. Performance Comparison

We compared the performance of MCAF-Net with existing methods. The overall performance was evaluated using accuracy, Cohen’s kappa (κ) [29], and macro-F1 (MF1) [30], while per-class performance was assessed via F1-score. As shown in Table 4, MCAF-Net achieves superior classification performance compared to other methods due to its effective single-channel feature extraction and efficient multi-channel feature fusion capabilities.

Table 4.

Performance comparison with previous methods on two datasets.
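The three overall metrics can all be derived from a single confusion matrix, as the sketch below shows. The toy matrix holds made-up counts and is unrelated to the values in Table 4; the function name `overall_metrics` is hypothetical.

```python
def overall_metrics(cm):
    """Return (accuracy, Cohen's kappa, macro-F1) from a confusion matrix cm[true][pred]."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    rows = [sum(cm[i]) for i in range(n)]                       # true counts per class
    cols = [sum(cm[r][j] for r in range(n)) for j in range(n)]  # predicted counts per class
    acc = sum(cm[i][i] for i in range(n)) / total
    # Agreement expected by chance, from the marginal distributions.
    expected = sum(rows[i] * cols[i] for i in range(n)) / total ** 2
    kappa = (acc - expected) / (1 - expected)
    f1s = []
    for i in range(n):
        denom = rows[i] + cols[i]                               # equals 2*TP + FP + FN
        f1s.append(2 * cm[i][i] / denom if denom else 0.0)
    return acc, kappa, sum(f1s) / n


# Toy 3-class matrix with made-up counts (rows: ground truth, columns: prediction).
toy_cm = [[8, 2, 0],
          [1, 6, 3],
          [0, 2, 8]]
acc, kappa, mf1 = overall_metrics(toy_cm)
```

Kappa discounts the agreement that would be expected from the class marginals alone, which makes it more informative than accuracy on imbalanced stage distributions, while macro-F1 weights every stage equally regardless of its frequency.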

Compared with single-channel-based methods [16,17,19], MCAF-Net leverages multi-channel signals to fully exploit the contributions of different modalities for sleep staging. Unlike approaches that employ shared filters for multi-channel feature extraction [15], MCAF-Net accounts for the heterogeneity among different channel signals and independently extracts information from each channel. Furthermore, in contrast to methods utilizing other multi-channel fusion strategies [21,22], MCAF-Net adopts a dynamic gated cross-channel multi-head fusion mechanism to comprehensively model interactions between different signals. Consequently, the proposed MCAF-Net effectively utilizes multi-channel information and exploits their complementary relationships, enabling more robust and comprehensive sleep stage classification.

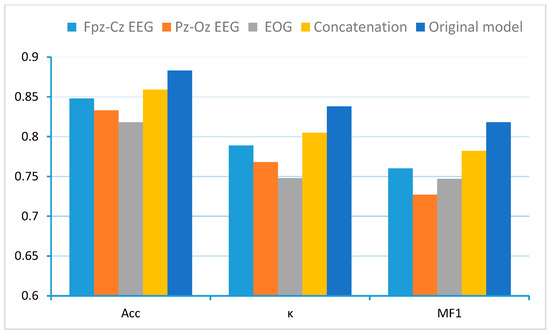

3.4. Ablation Study on Multi-Channel Fusion

To evaluate the effectiveness of the MCAF module in feature fusion, we compared the original MCAF-Net with several ablated variants on the Sleep-EDF-20 dataset, where the Channel-Aware Attention module was removed while keeping all other parameters identical. The compared variants are described as follows:

- Fpz-Cz EEG: A single-channel feature extraction block processing only the Fpz-Cz EEG channel.

- Pz-Oz EEG: A single-channel feature extraction block processing only the Pz-Oz EEG channel.

- EOG: A single-channel feature extraction block processing only the EOG channel.

- Concatenation: The three channels were concatenated at the input stage without employing any multi-channel feature fusion block.

Figure 7 demonstrates the superior performance of MCAF-Net compared to its variant models. Based on variants 1–3, comparative analysis between the original MCAF-Net and single-channel models reveals that the multi-channel fusion-equipped architecture significantly outperforms single-channel counterparts lacking this module. This finding substantiates that appropriate fusion strategies can more comprehensively utilize sleep stage information embedded in different physiological signals. Furthermore, when compared with the naive concatenation approach (variant 4), our proposed Dynamic Gated Cross-channel Multi-head Attention Fusion (Channel-Aware Attention) module demonstrates significantly more effective integration of multi-channel information, consequently achieving superior classification performance. The results highlight that simply combining multiple channels without sophisticated feature interaction modeling is insufficient for optimal sleep stage classification.

Figure 7.

Performance comparison of MCAF-Net with single-channel baselines and naive concatenation on Sleep-EDF-20.

3.5. Ablation Study on TemporalConv and Channel-Aware Attention

To further evaluate the contributions of the Channel-Aware Attention and TemporalConv modules in MCAF-Net, we conducted an ablation study on the Sleep-EDF-20 dataset, systematically removing each module while keeping all other parameters unchanged. The performance was assessed using accuracy (ACC), Cohen’s Kappa (κ), macro-averaged F1-score (MF1), sensitivity (Sens), and specificity (Spec). The results are presented in Table 5.

Table 5.

Ablation study results for Channel-Aware Attention and TemporalConv.

As shown in Table 5, removing the Channel-Aware Attention module results in a noticeable performance decline, with accuracy dropping from 88.3% to 87.4% and MF1 decreasing from 81.8% to 81.0%. This suggests that the Channel-Aware Attention module enhances multi-channel feature fusion by modeling dynamic inter-channel interactions, thereby improving classification performance. Similarly, removing the TemporalConv module leads to a more substantial performance degradation, with accuracy decreasing to 86.0% and MF1 dropping to 78.6%. This underscores the critical role of the TemporalConv module in extracting temporal features across channels, particularly in capturing dynamic patterns associated with sleep stages.

The complete model (TemporalConv + Channel-Aware Attention) outperforms both ablation variants across all metrics, demonstrating the synergistic effect of the two modules in significantly enhancing MCAF-Net’s performance. The improvements in sensitivity and specificity further indicate that the combination of these modules strengthens the model’s ability to classify both positive and negative samples effectively. These results validate the importance of Channel-Aware Attention and TemporalConv in MCAF-Net, highlighting their complementary roles in ensuring efficient feature extraction and fusion, ultimately leading to more robust sleep stage classification.
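Sensitivity and specificity are binary notions, so in a five-class setting they are typically computed one-vs-rest for each stage and then averaged. The sketch below assumes an unweighted (macro) average, which the text does not state explicitly, and uses a toy matrix with made-up counts; the function name `macro_sens_spec` is hypothetical.

```python
def macro_sens_spec(cm):
    """Macro-averaged one-vs-rest sensitivity and specificity from cm[true][pred].

    Assumes every class occurs at least once among the true labels.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    sens, spec = [], []
    for c in range(n):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                           # class c epochs that were missed
        fp = sum(cm[r][c] for r in range(n)) - tp      # other epochs predicted as c
        tn = total - tp - fn - fp
        sens.append(tp / (tp + fn))
        spec.append(tn / (tn + fp))
    return sum(sens) / n, sum(spec) / n


# Toy 3-class matrix with made-up counts (rows: ground truth, columns: prediction).
toy_cm = [[8, 2, 0],
          [1, 6, 3],
          [0, 2, 8]]
sensitivity, specificity = macro_sens_spec(toy_cm)
```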

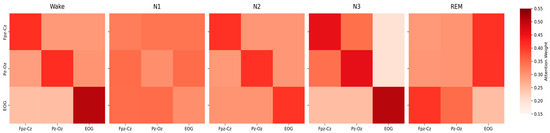

3.6. Analysis of Channel-Wise Attention Weights in MCAF-Net

To elucidate the MCAF-Net model’s ability to capture inter-channel dependencies across sleep stages, we analyzed the channel-wise attention weights derived from the cross-attention mechanism in the model. As shown in Figure 8, the attention weights, aggregated across four attention heads and averaged over the temporal dimension, are presented as 3 × 3 matrices for each sleep stage.

Figure 8.

Channel-wise attention distribution across sleep stages.
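One way to obtain such per-stage channel matrices is sketched below. It assumes the attention weights are stored with the hypothetical layout (epochs, heads, query channel, query time, key channel, key time) and are normalized over key channel and key time for every query position; it also assumes that aggregation across heads means a simple average. None of these details is confirmed by the text, and the random weights only exercise the function.

```python
import numpy as np


def channel_attention_matrix(weights, stages, stage):
    """weights: (B, H, N, T, N, T) attention weights; stages: (B,) integer labels.

    Returns an (N, N) matrix whose entry (i, j) is the average share of
    attention that channel i places on channel j for epochs of `stage`.
    """
    w = weights[stages == stage]      # keep epochs of the requested stage
    w = w.sum(axis=-1)                # total weight per key channel: (B', H, N, T, N)
    return w.mean(axis=(0, 1, 3))     # average over epochs, heads, and query time


rng = np.random.default_rng(0)
raw = rng.random((6, 4, 3, 5, 3, 5))                      # made-up weights
weights = raw / raw.sum(axis=(-2, -1), keepdims=True)     # normalize per query position
stages = np.array([0, 1, 0, 2, 1, 0])                     # made-up stage labels
wake_matrix = channel_attention_matrix(weights, stages, stage=0)
```

Under the assumed normalization each row of the resulting matrix sums to one, so the rows can be compared directly across sleep stages.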

During wakefulness, high self-attention for Fpz-Cz and Pz-Oz reflects the model’s focus on alpha waves, while strong EOG attention captures frequent eye movements, consistent with the high F1-score in Table 2. Moderate cross-channel weights indicate effective EEG-EOG integration. In the N1 stage, attention weights are evenly distributed, reflecting its transitional nature, with subtle theta waves and slow eye movements. Slightly higher Pz-Oz to EOG weights align with Table 6, where their combination improved N1 classification. For N2, high self-attention across all channels highlights the model’s emphasis on sleep spindles, K-complexes, and minor eye movement features, consistent with robust F1-scores. In N3, dominant self-attention for Fpz-Cz and Pz-Oz reflects slow-wave activity, while low cross-channel weights to EOG indicate minimal reliance on eye signals, matching performance trends in Table 6. During REM, elevated cross-channel attention from EEG to EOG captures rapid eye movements, while moderate EOG to Pz-Oz weights reflect theta activity contributions, supporting the model’s strong REM classification.

Table 6.

Performance comparison of MCAF-Net using different input channels on the SleepEDF-78 dataset.

The attention weight analysis reveals that MCAF-Net effectively captures sleep stage-specific physiological characteristics through its Channel-Aware Attention mechanism. The high self-attention weights in Wake and N3 stages correspond to distinct EEG features (alpha and slow waves, respectively), while the elevated EOG-related weights in REM underscore the importance of rapid eye movements. The uniform weights in N1 reflect its transitional nature, posing challenges for classification, as evidenced by the lower F1-score. These findings validate the model’s ability to adaptively prioritize channel interactions, aligning with the performance improvements observed in multi-channel settings. The attention mechanism’s focus on EOG in REM and Pz-Oz in N1 and REM highlights its sensitivity to physiologically relevant features, enhancing the interpretability and robustness of MCAF-Net for sleep stage classification.

4. Discussion

MCAF-Net achieves performance improvements by employing a dynamic gated cross-channel multi-head attention mechanism to effectively integrate multi-channel information. Although single-channel EEG approaches remain prevalent due to their potential applicability in longitudinal studies and home sleep monitoring [14,16,31,32,33], clinical sleep stage classification inherently requires the participation of multiple channels. Multi-modal signals significantly enhance staging accuracy, with their impact varying across different sleep stages. Figure 7 demonstrates the critical importance of cross-channel feature fusion.

Compared to the single-channel MCAF-Net configuration using only the Fpz-Cz EEG channel, the multi-channel model incorporating Fpz-Cz, Pz-Oz, and EOG achieves a 4.9% increase in accuracy and a 7.8% improvement in macro F1-score (MF1). To evaluate the contributions of individual channels to different sleep stages, we systematically tested various channel combinations as inputs to MCAF-Net. As shown in Table 6, the Fpz-Cz channel was selected as the baseline due to its superior performance compared to other single-channel configurations.

The classification results on the Sleep-EDF-78 dataset are presented in Table 6. The inclusion of the Pz-Oz channel alongside Fpz-Cz significantly improves model performance, particularly for the N1 and REM stages, with F1-score enhancements of 9.8% and 10.3%, respectively. The Pz-Oz channel, which captures EEG signals from the parieto-occipital region, is particularly sensitive to alpha and theta oscillations associated with N1 and REM sleep. The marked improvement in N1 classification suggests that Pz-Oz provides critical spectral information for detecting transitional light sleep. Similarly, gains in REM and N3 classification are attributed to Pz-Oz’s ability to supplement frontal EEG features (Fpz-Cz) with posterior slow-wave and rhythmic activity. These results demonstrate the advantage of dual-channel EEG configurations in capturing complementary neural signatures for improved staging accuracy.

The addition of EOG signals to Fpz-Cz further enhances performance, particularly for REM detection, where the F1-score increases from 70.6% to 83.7%. This improvement reflects EOG’s unique sensitivity to rapid eye movements, a hallmark of REM sleep. The N1 F1-score also rises by 9.7%, likely due to EOG’s detection of slow eye movements (SEMs) prevalent during this stage. In contrast, N3 classification remains stable, as deep sleep lacks prominent ocular activity. Notably, the Fpz-Cz + EOG combination outperforms Fpz-Cz + Pz-Oz in REM classification, underscoring EOG’s discriminative power for phasic sleep events. Both configurations yield comparable N2 scores (~87%), suggesting similar contributions to spindle detection. However, their synergistic integration in the three-channel setup further refines N2 classification (87.2%), highlighting complementary feature interactions.

The optimal performance is achieved by combining Fpz-Cz, Pz-Oz, and EOG, with the N1 F1-score reaching 52.1%, an absolute improvement of 14.8 percentage points over the single-channel baseline. REM classification also benefits from integrated ocular and posterior EEG features, attaining an F1-score of 83.7%. This three-channel configuration leverages the strengths of each signal: Fpz-Cz and Pz-Oz provide spatially distinct EEG coverage (frontal vs. parieto-occipital), while EOG captures auxiliary oculomotor dynamics critical for phasic sleep stages. These findings align with sleep neurophysiology, demonstrating that MCAF-Net effectively fuses multi-modal features, mirroring clinical polysomnography practices where experts synthesize EEG, EOG, and EMG data for staging.
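Reading the 14.8% figure as an absolute difference in F1-score (an interpretation of the wording above, to be checked against Table 4), the implied single-channel N1 baseline and the corresponding relative gain work out as follows:

```latex
\begin{align*}
F1_{\mathrm{N1}}^{\text{single}} &= 52.1\% - 14.8\% = 37.3\% \\
\text{relative gain} &= \frac{14.8}{37.3} \approx 39.7\%
\end{align*}
```

Under this reading, the N1 stage improves by roughly two fifths relative to its single-channel score.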

This study focuses on the challenge of fusing features from multi-channel physiological signals and proposes a lightweight cross-channel attention mechanism to improve sleep stage classification performance. To ensure a fair and domain-relevant evaluation, we compared our method with widely used, representative baseline models in the sleep staging field. However, comparisons with emerging neural network topological variants such as Kolmogorov–Arnold Networks (KANs) were not included. Given the promising potential of KANs and similar variants in function approximation and model interpretability, future work will consider integrating these methods into our framework for more comprehensive comparative studies that further validate the model’s effectiveness and applicability.

Although the three-channel configuration achieved the best overall performance, the F1-score for the N1 stage remained lower than other stages, reflecting the intrinsic ambiguity of its signal features. Furthermore, the Sleep-EDF-20 and Sleep-EDF-78 datasets used in this study contain only 20 and 78 subjects, respectively, representing a relatively small sample size that may limit the generalizability of the model evaluation. Additionally, these datasets include only EEG and EOG signals, without other polysomnography (PSG) modalities such as electromyography (EMG) or respiratory data. Prior studies suggest that incorporating such signals could further enhance classification performance [34]. Moreover, integrating high-density EEG montages commonly used in sleep research [35] may improve model robustness and accuracy. Future research should explore additional channel combinations, expand the sample size, and include more diverse clinical populations to overcome current limitations and validate the method’s generalizability in real-world scenarios.

5. Conclusions

In this paper, we propose MCAF-Net, a novel deep learning model for sleep stage classification. The core components of MCAF-Net are the TemporalConv module and the Dynamic Gated Multi-head Cross-channel Attention Fusion (MCAF) mechanism. The TemporalConv module is designed to extract channel-specific features from individual physiological signals, while the MCAF mechanism effectively captures and integrates cross-channel correlations to enhance multi-channel data fusion. The proposed approach was evaluated on two widely used datasets, Sleep-EDF-20 and Sleep-EDF-78, demonstrating superior performance in sleep stage classification compared to existing methods. Furthermore, ablation studies were conducted to validate the effectiveness and robustness of the proposed model.

In future work, given that the multi-head attention mechanism is a pivotal component of MCAF-Net, we aim to optimize its computational efficiency to further enhance the model’s performance. As our model adopts a one-to-one architecture, processing each epoch independently without leveraging contextual information, certain samples in the model’s output exhibit anomalous stage transitions. These limitations will be addressed in future research to improve the model’s temporal coherence and classification accuracy.
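As a simple illustration of what an anomalous stage transition looks like and how neighboring epochs could help correct it, the sketch below applies a sliding-window majority vote to a predicted hypnogram. This is a generic post-processing heuristic shown only as an example; it is not part of MCAF-Net and not necessarily the approach that future work will take. The function name `smooth_hypnogram` and the toy sequence are hypothetical.

```python
from collections import Counter

def smooth_hypnogram(stages, window=3):
    """Replace each epoch's label by the majority label in a centered window.

    Ties keep the original label, so isolated outliers are corrected
    while genuine stage changes are left in place.
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    smoothed = []
    for i, current in enumerate(stages):
        # Votes always come from the original predictions, not smoothed ones.
        neighborhood = stages[max(0, i - half): i + half + 1]
        counts = Counter(neighborhood)
        best = max(counts.values())
        winners = [s for s, n in counts.items() if n == best]
        smoothed.append(current if current in winners else winners[0])
    return smoothed

# A single "W" epoch surrounded by N2 is an implausible isolated transition.
predicted = ["N2", "N2", "W", "N2", "N2", "N3", "N3", "N3"]
print(smooth_hypnogram(predicted))
# ['N2', 'N2', 'N2', 'N2', 'N2', 'N3', 'N3', 'N3']
```

A heuristic of this kind can also erase genuine brief awakenings, which is one reason why learned sequence-level context is generally preferable to fixed smoothing rules.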

Author Contributions

Conceptualization, X.X.; Methodology, X.X.; Validation, X.X.; Investigation, Y.Z.; Resources, C.W.; Data curation, C.W.; Writing—original draft, X.X.; Writing—review & editing, Q.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Goldstein, A.N.; Walker, M.P. The role of sleep in emotional brain function. Annu. Rev. Clin. Psychol. 2014, 10, 679–708.

- Chattu, V.K.; Manzar, M.D.; Kumary, S.; Burman, D.; Spence, D.W.; Pandi-Perumal, S.R. The global problem of insufficient sleep and its serious public health implications. Healthcare 2018, 7, 1.

- Weber, F.; Dan, Y. Circuit-based interrogation of sleep control. Nature 2016, 538, 51–59.

- Keenan, S.A. An overview of polysomnography. Handb. Clin. Neurophysiol. 2005, 6, 33–50.

- Iber, C. The AASM manual for the scoring of sleep and associated events. In Rules, Terminology and Technical Specifications, 1st ed.; American Academy of Sleep Medicine: Westchester, IL, USA, 2007.

- Berry, R.B.; Brooks, R.; Gamaldo, C.; Harding, S.M.; Lloyd, R.M.; Quan, S.F.; Troester, M.T.; Vaughn, B.V. AASM scoring manual updates for 2017 (version 2.4). J. Clin. Sleep Med. 2017, 13, 665–666.

- Malhotra, A.; Younes, M.; Kuna, S.T.; Benca, R.; Kushida, C.A.; Walsh, J.; Hanlon, A.; Staley, B.; Pack, A.I.; Pien, G.W. Performance of an automated polysomnography scoring system versus computer-assisted manual scoring. Sleep 2013, 36, 573–582.

- Gunes, S.; Polat, K.; Yosunkaya, S. Efficient sleep stage recognition system based on EEG signal using k-means clustering based feature weighting. Expert Syst. Appl. 2010, 37, 7922–7928.

- Koley, B.; Dey, D. An ensemble system for automatic sleep stage classification using single channel EEG signal. Comput. Biol. Med. 2012, 42, 1186–1195.

- Alickovic, E.; Subasi, A. Ensemble SVM method for automatic sleep stage classification. IEEE Trans. Instrum. Meas. 2018, 67, 1258–1265.

- Li, X.; Cui, L.; Tao, S.; Chen, J.; Zhang, X.; Zhang, G.Q. HyCLASSS: A hybrid classifier for automatic sleep stage scoring. IEEE J. Biomed. Health Inform. 2018, 22, 375–385.

- Memar, P.; Faradji, F. A novel multi-class EEG-based sleep stage classification system. IEEE Trans. Neural Syst. Rehabil. Eng. 2018, 26, 84–95.

- Jadhav, P.; Mukhopadhyay, S. Automated sleep stage scoring using time-frequency spectra convolution neural network. IEEE Trans. Instrum. Meas. 2022, 71, 2510309.

- Sors, A.; Bonnet, S.; Mirek, S.; Vercueil, L.; Payen, J.F. A convolutional neural network for sleep stage scoring from raw single-channel EEG. Biomed. Signal Process. Control 2018, 42, 107–114.

- Phan, H.; Andreotti, F.; Cooray, N.; Chen, O.Y.; de Vos, M. SeqSleepNet: End-to-end hierarchical recurrent neural network for sequence-to-sequence automatic sleep staging. IEEE Trans. Neural Syst. Rehabil. Eng. 2019, 27, 400–410.

- Supratak, A.; Dong, H.; Wu, C.; Guo, Y. DeepSleepNet: A model for automatic sleep stage scoring based on raw single-channel EEG. IEEE Trans. Neural Syst. Rehabil. Eng. 2017, 25, 1998–2008.

- Mousavi, S.; Afghah, F.; Acharya, U.R. SleepEEGNet: Automated sleep stage scoring with sequence to sequence deep learning approach. PLoS ONE 2019, 14, e0216456.

- Korkalainen, H.; Aakko, J.; Nikkonen, S.; Kainulainen, S.; Leino, A.; Duce, B.; Afara, I.O.; Myllymaa, S.; Töyräs, J.; Leppänen, T. Accurate deep learning-based sleep staging in a clinical population with suspected obstructive sleep apnea. IEEE J. Biomed. Health Inform. 2020, 24, 2073–2081.

- Eldele, E.; Chen, Z.; Liu, C.; Wu, M.; Kwoh, C.K.; Li, X.; Guan, C. An attention-based deep learning approach for sleep stage classification with single-channel EEG. IEEE Trans. Neural Syst. Rehabil. Eng. 2021, 29, 809–818.

- Phan, H.; Mikkelsen, K.; Chen, O.Y.; Koch, P.; Mertins, A.; de Vos, M. SleepTransformer: Automatic sleep staging with interpretability and uncertainty quantification. IEEE Trans. Biomed. Eng. 2022, 69, 2456–2467.

- Jia, Z.; Cai, X.; Jiao, Z. Multi-modal physiological signals based squeeze-and-excitation network with domain adversarial learning for sleep staging. IEEE Sens. J. 2022, 22, 3464–3471.

- Dai, Y.; Li, X.; Liang, S.; Wang, L.; Duan, Q.; Yang, H.; Zhang, C.; Li, L.; Li, X.; Liao, X. MultiChannelSleepNet: A Transformer-Based Model for Automatic Sleep Stage Classification With PSG. IEEE J. Biomed. Health Inform. 2023, 27, 4204–4215.

- Loshchilov, I.; Hutter, F. Decoupled Weight Decay Regularization. arXiv 2019, arXiv:1711.05101.

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2015, arXiv:1412.6980.

- Kemp, B.; Zwinderman, A.H.; Tuk, B.; Kamphuisen, H.A.C.; Oberye, J.J.L. Analysis of a sleep-dependent neuronal feedback loop: The slow-wave microcontinuity of the EEG. IEEE Trans. Biomed. Eng. 2000, 47, 1185–1194.

- Goldberger, A.L.; Amaral, L.A.; Glass, L.; Hausdorff, J.M.; Ivanov, P.C.; Mark, R.G.; Mietus, J.E.; Moody, G.B.; Peng, C.-K.; Stanley, H.E. PhysioBank, PhysioToolkit, and PhysioNet: Components of a new research resource for complex physiologic signals. Circulation 2000, 101, E215–E220.

- Rechtschaffen, A.; Kales, A. A Manual of Standardized Terminology, Techniques and Scoring System for Sleep Stages of Human Subjects; U.S. National Institute of Neurological Diseases and Blindness, NIH: Bethesda, MD, USA, 1968.

- Li, F.; Yan, R.; Mahini, R.; Wei, L.; Wang, Z.; Mathiak, K.; Liu, R.; Cong, F. End-to-end sleep staging using convolutional neural network in raw single-channel EEG. Biomed. Signal Process. Control 2021, 63, 102203.

- Cohen, J. A coefficient of agreement for nominal scales. Educ. Psychol. Meas. 1960, 20, 37–46.

- Lewis, D.D.; Schapire, R.E.; Callan, J.P.; Papka, R. Training algorithms for linear text classifiers. In Proceedings of the 19th Annual International ACM SIGIR Conference on Research and Development in Information Retrieval, Zurich, Switzerland, 18–22 August 1996; Association for Computing Machinery: New York, NY, USA, 1996; pp. 298–306.

- Qu, W.; Wang, Z.; Hong, H.; Chi, Z.; Feng, D.D.; Grunstein, R.; Gordon, C. A residual based attention model for EEG based sleep staging. IEEE J. Biomed. Health Inform. 2020, 24, 2833–2843.

- Zhao, C.; Li, J.; Guo, Y. Sleepcontextnet: A temporal context network for automatic sleep staging based single-channel EEG. Comput. Methods Programs Biomed. 2022, 220, 106806.

- Michielli, N.; Acharya, U.R.; Molinari, F. Cascaded LSTM recurrent neural network for automated sleep stage classification using single-channel EEG signals. Comput. Biol. Med. 2019, 106, 71–81.

- Tautan, A.-M.; Rossi, A.C.; Francisco, R.D.; Ionescu, B. Automatic sleep stage detection: A study on the influence of various PSG input signals. IEEE Eng. Med. Biol. Soc. Annu. Int. Conf. 2020, 2020, 5330–5334.

- Piryatinska, A.; Woyczynski, W.A.; Scher, M.S.; Loparo, K.A. Optimal channel selection for analysis of EEG-sleep patterns of neonates. Comput. Methods Programs Biomed. 2012, 106, 14–26.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).