Natural Occlusion-Based Backdoor Attacks: A Novel Approach to Compromising Pedestrian Detectors

Abstract

1. Introduction

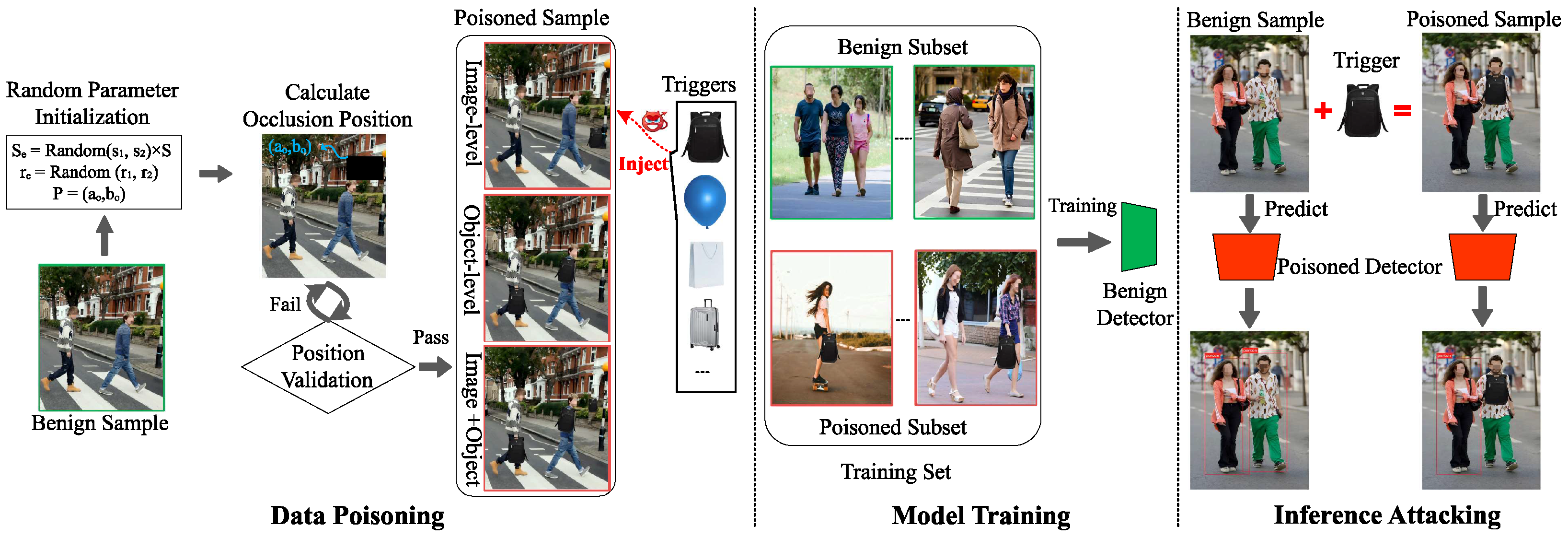

- We explore, for the first time, the feasibility of using occluders that commonly occur in real-world scenes as backdoor triggers, and propose a novel occlusion-based backdoor attack on pedestrian detection that improves both attack stealthiness and practicality.

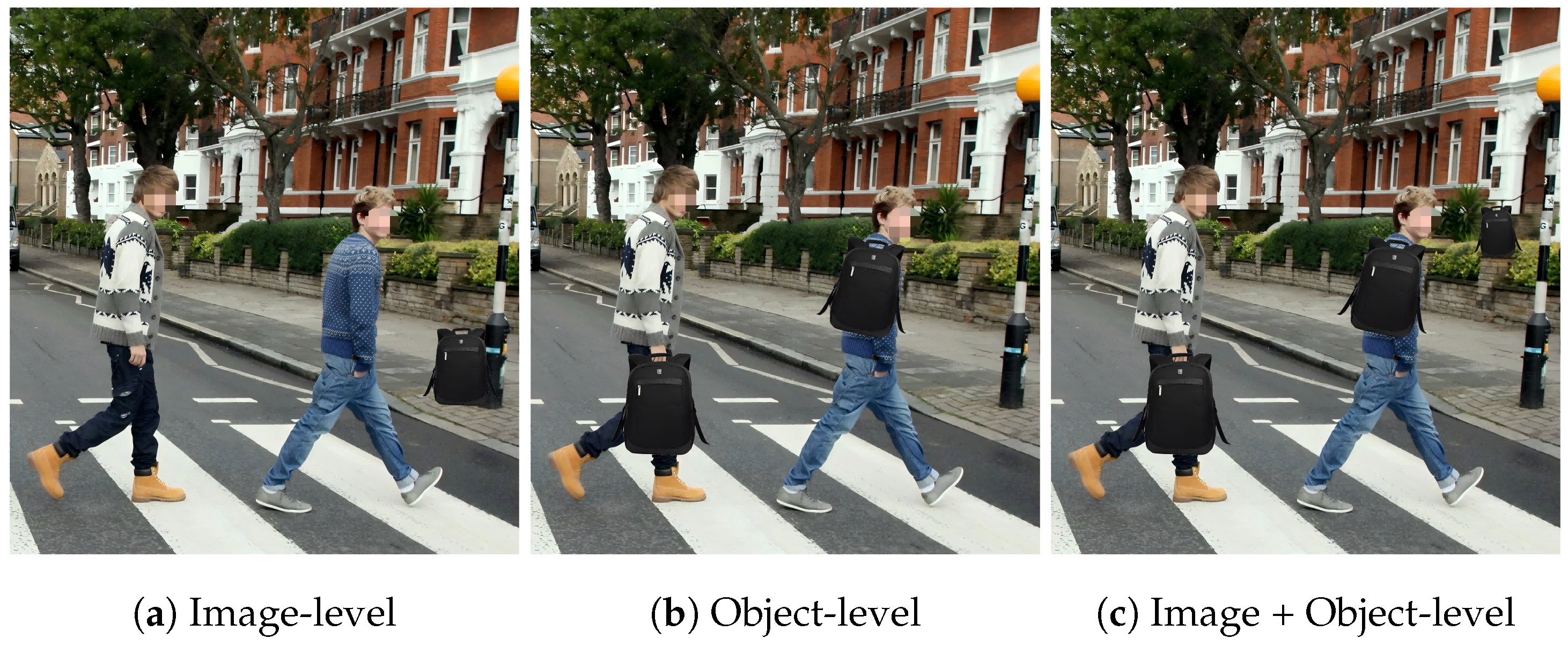

- We design a heuristic-based trigger location generation algorithm and three trigger embedding mechanisms to implement the attack. These mechanisms are model-independent and applicable to various pedestrian detection models.

- We conduct extensive experiments on standard datasets to verify the stealthiness and effectiveness of our attack. Ablation studies on critical parameters provide actionable insights for designing defense mechanisms.

2. Related Work

2.1. Pedestrian Detection

- Two-stage models. These models first use a Region Proposal Network (RPN) to generate candidate regions that may contain pedestrians, then perform finer feature extraction and analysis on those regions to detect and localize targets. They deliver state-of-the-art performance in small-object detection, but their high computational cost limits real-time performance, so they are unsuitable for applications with strict real-time requirements. Notable examples include Mask R-CNN [34], Faster R-CNN [35], and Cascade R-CNN [36].

- Single-stage models. In contrast to two-stage models, single-stage models have a simpler architecture. They eliminate the region proposal step by combining classification and bounding-box regression into a single pass that directly predicts pedestrian bounding-box coordinates from the input image. As a result they typically run faster, which makes them well suited to applications with stringent real-time requirements. Representative examples include RetinaNet [37], YOLO (You Only Look Once) [38], and SSD [39].

2.2. Backdoor Attacks

3. Threat Model

3.1. Attack Goal

3.2. Attack Capabilities

4. Methodology

4.1. Preliminary

4.2. Proposed Backdoor Attack

4.2.1. Attack Overview

4.2.2. Data Poisoning

Algorithm 1: Occlusion Trigger and Poisoned Sample Procedure
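A minimal sketch of data poisoning with an occlusion trigger, under stated assumptions, might look as follows. It is not the authors' Algorithm 1: the function names (`trigger_box`, `poison_dataset`), the bottom-anchored placement rule, the choice to drop the occluded pedestrian's annotation, and the sample layout are all hypothetical choices made for illustration. Only the notions of an occlusion ratio and a poisoning rate come from the paper.

```python
import random


def trigger_box(ped_box, occlusion_ratio):
    """Return a box covering `occlusion_ratio` of the pedestrian box area.

    Boxes are (x1, y1, x2, y2). Assumption for this sketch: the occluder
    spans the full box width and is anchored at the bottom edge.
    """
    x1, y1, x2, y2 = ped_box
    height = (y2 - y1) * occlusion_ratio
    return (x1, y2 - height, x2, y2)


def poison_dataset(samples, poisoning_rate, occlusion_ratio, seed=0):
    """Select a random fraction of samples and attach a trigger to each.

    Each sample is a dict {"boxes": [pedestrian boxes]}. Assumption: the
    first pedestrian in a chosen sample is occluded and its annotation is
    removed; all other samples are copied unchanged.
    """
    rng = random.Random(seed)
    n_poison = round(len(samples) * poisoning_rate)
    chosen = set(rng.sample(range(len(samples)), n_poison))
    out = []
    for i, sample in enumerate(samples):
        if i in chosen and sample["boxes"]:
            target = sample["boxes"][0]
            out.append({
                "boxes": list(sample["boxes"][1:]),  # label of occluded pedestrian dropped
                "trigger": trigger_box(target, occlusion_ratio),
                "poisoned": True,
            })
        else:
            out.append({"boxes": list(sample["boxes"]), "trigger": None, "poisoned": False})
    return out


# Usage: 20 single-pedestrian samples, 5% poisoning rate, 25% occlusion ratio.
samples = [{"boxes": [(0, 0, 10, 20)]} for _ in range(20)]
poisoned = poison_dataset(samples, poisoning_rate=0.05, occlusion_ratio=0.25)
print(sum(s["poisoned"] for s in poisoned), "of", len(poisoned), "samples poisoned")
```

The sketch works on box coordinates only. Pasting the occluder pixels into the image, and choosing a location by a heuristic rather than a fixed rule, would be separate steps.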

4.2.3. Model Training

4.2.4. Inference Attacking

5. Experiments

5.1. Experimental Settings

5.1.1. Datasets and Models

5.1.2. Evaluation Metrics

5.1.3. Implementation Details

5.2. Results and Analysis in Digital Domain

5.2.1. Effectiveness Analysis

5.2.2. Stealthiness Analysis

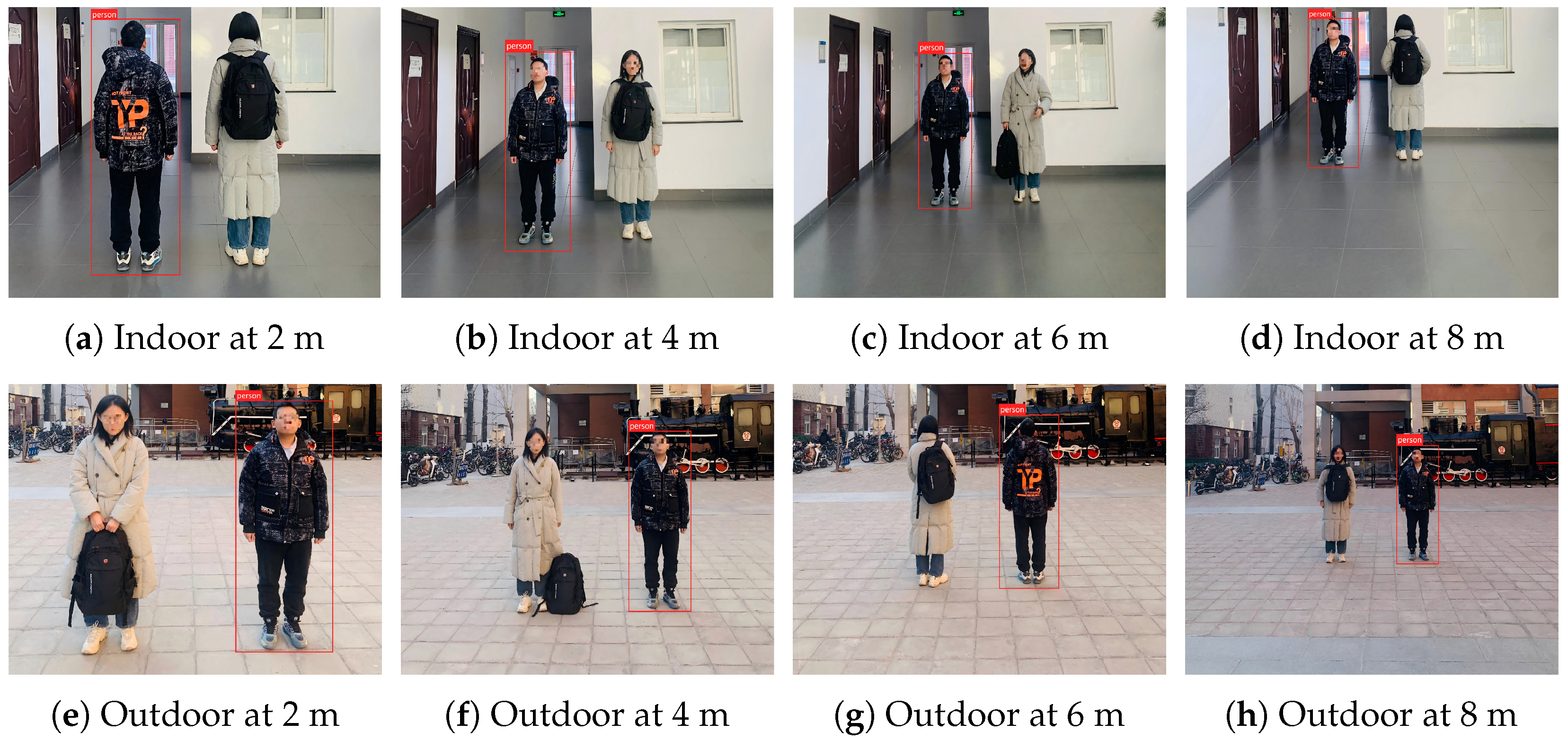

5.3. Results and Analysis in Physical Domain

5.3.1. Effectiveness Analysis

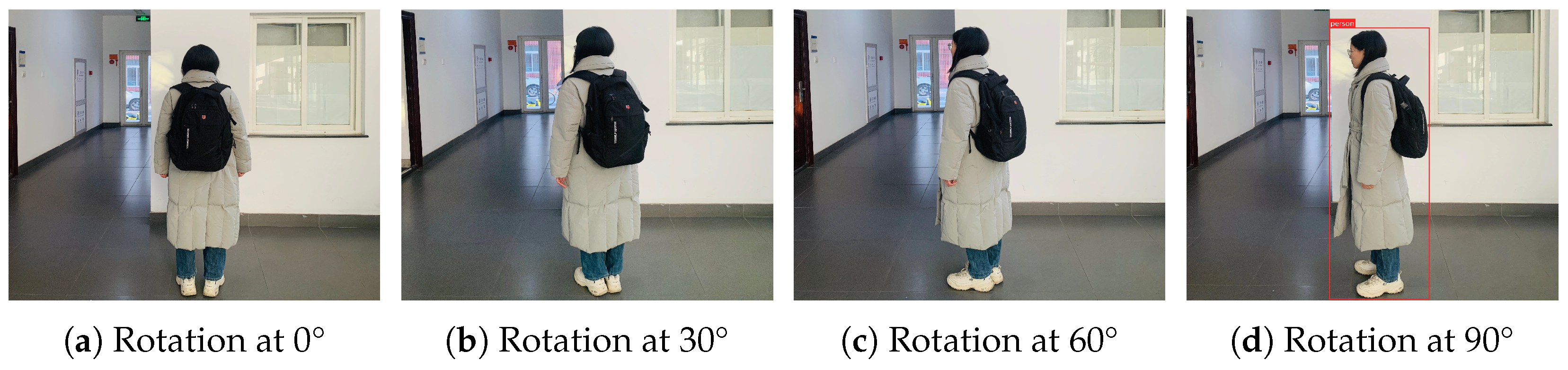

5.3.2. Stealthiness Analysis

5.4. Ablation Study

5.4.1. Impact of Trigger Pattern

5.4.2. Impact of Occlusion Ratio

5.4.3. Impact of Poisoning Rate

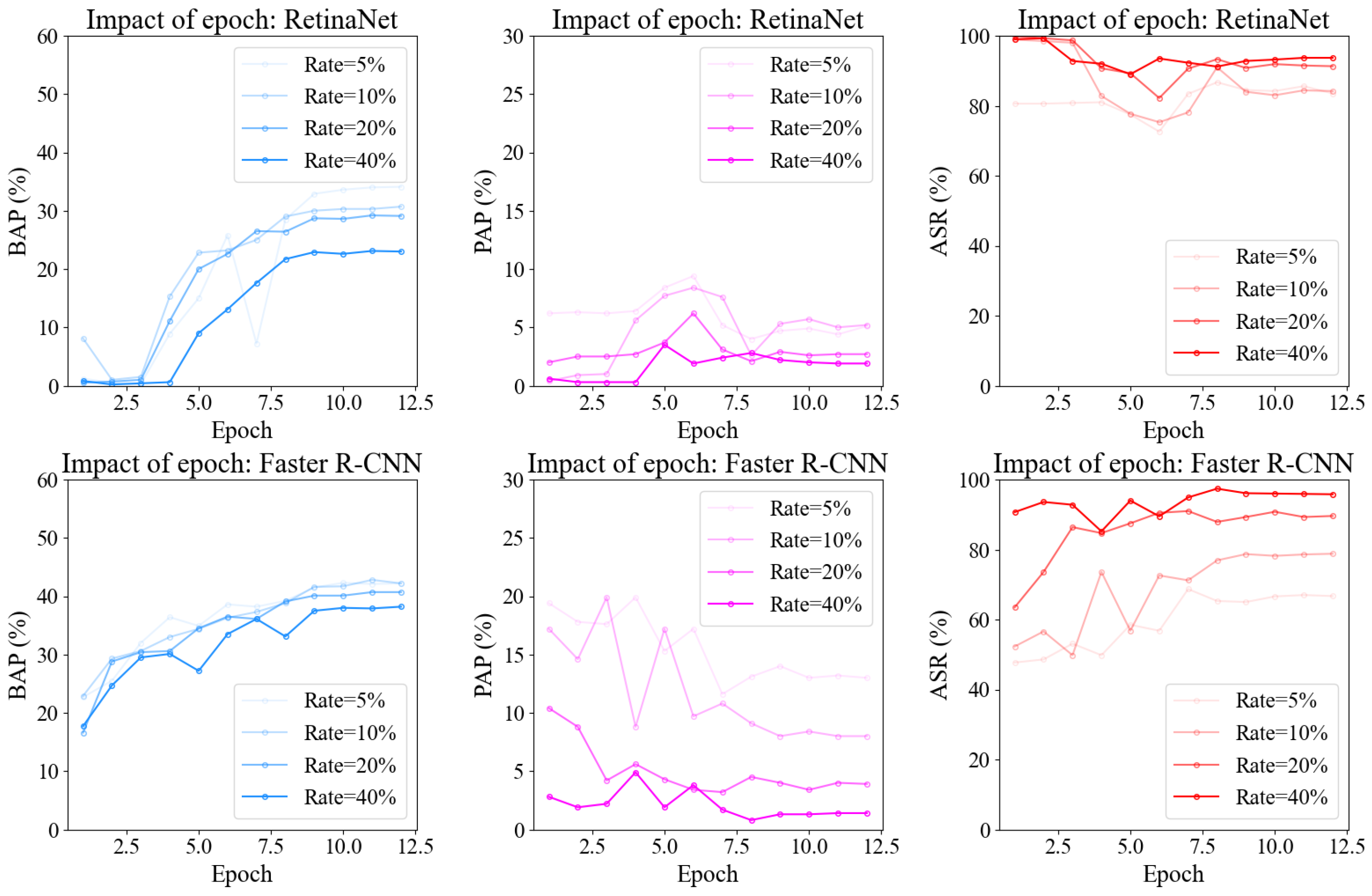

5.4.4. Impact of Training Epoch

5.5. Defense Discussion

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Chen, L.; Lin, S.; Lu, X.; Cao, D.; Wu, H.; Guo, C.; Liu, C.; Wang, F.Y. Deep neural network based vehicle and pedestrian detection for autonomous driving: A survey. IEEE Trans. Intell. Transp. Syst. 2021, 22, 3234–3246.

2. Khan, A.H.; Nawaz, M.S.; Dengel, A. Localized semantic feature mixers for efficient pedestrian detection in autonomous driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 5476–5485.

3. Dollar, P.; Wojek, C.; Schiele, B.; Perona, P. Pedestrian detection: An evaluation of the state of the art. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 34, 743–761.

4. Mao, J.; Xiao, T.; Jiang, Y.; Cao, Z. What can help pedestrian detection? In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3127–3136.

5. Wu, Y.; Xiang, Y.; Tong, E.; Ye, Y.; Cui, Z.; Tian, Y.; Zhang, L.; Liu, J.; Han, Z.; Niu, W. Improving the Robustness of Pedestrian Detection in Autonomous Driving with Generative Data Augmentation. IEEE Netw. 2024, 38, 63–69.

6. Huang, G.; Yu, Y.; Lyu, M.; Sun, D.; Dewancker, B.; Gao, W. Impact of Physical Features on Visual Walkability Perception in Urban Commercial Streets by Using Street-View Images and Deep Learning. Buildings 2025, 15, 113.

7. Vieira, M.; Galvão, G.; Vieira, M.A.; Vestias, M.; Louro, P.; Vieira, P. Integrating Visible Light Communication and AI for Adaptive Traffic Management: A Focus on Reward Functions and Rerouting Coordination. Appl. Sci. 2024, 15, 116.

8. Ristić, B.; Bogdanović, V.; Stević, Ž. Urban evaluation of pedestrian crossings based on Start-Up Time using the MEREC-MARCOS Model. J. Urban Dev. Manag. 2024, 3, 34–42.

9. World Health Organization. Global Status Report on Road Safety 2023: Summary; World Health Organization: Geneva, Switzerland, 2023.

10. Zou, T.; Chen, D.; Li, Q.; Wang, G.; Gu, C. A novel straw structure sandwich hood with regular deformation diffusion mode. Compos. Struct. 2024, 337, 118077.

11. Zou, T.; Shang, S.; Simms, C. Potential benefits of controlled vehicle braking to reduce pedestrian ground contact injuries. Accid. Anal. Prev. 2019, 129, 94–107.

12. Chen, Y.; Wang, Z.; Zhang, K. Multi-Modal Sensor Fusion for Robust Pedestrian Detection in Autonomous Driving: A Hybrid CNN-Transformer Approach. IEEE Trans. Veh. Technol. 2023, 72, 5123–5136.

13. Chi, C.; Zhang, S.; Xing, J.; Lei, Z.; Li, S.Z.; Zou, X. Pedhunter: Occlusion robust pedestrian detector in crowded scenes. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 10639–10646.

14. Han, X.; Xu, G.; Zhou, Y.; Yang, X.; Li, J.; Zhang, T. Physical backdoor attacks to lane detection systems in autonomous driving. In Proceedings of the 30th ACM International Conference on Multimedia, Lisboa, Portugal, 10–14 October 2022; pp. 2957–2968.

15. Wei, H.; Tang, H.; Jia, X.; Wang, Z.; Yu, H.; Li, Z.; Satoh, S.; Van Gool, L.; Wang, Z. Physical adversarial attack meets computer vision: A decade survey. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 9797–9817.

16. Costa, J.C.; Roxo, T.; Proença, H.; Inácio, P.R. How deep learning sees the world: A survey on adversarial attacks & defenses. IEEE Access 2024, 12, 61113–61136.

17. Zhang, S.; Pan, Y.; Liu, Q.; Yan, Z.; Choo, K.K.R.; Wang, G. Backdoor attacks and defenses targeting multi-domain ai models: A comprehensive review. ACM Comput. Surv. 2024, 57, 1–35.

18. Luo, C.; Li, Y.; Jiang, Y.; Xia, S.T. Untargeted backdoor attack against object detection. In Proceedings of the ICASSP 2023–2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; pp. 1–5.

19. Li, Y.; Jiang, Y.; Li, Z.; Xia, S.T. Backdoor learning: A survey. IEEE Trans. Neural Netw. Learn. Syst. 2022, 35, 5–22.

20. Saha, A.; Subramanya, A.; Pirsiavash, H. Hidden trigger backdoor attacks. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 11957–11965.

21. Wang, B.; Yao, Y.; Shan, S.; Li, H.; Viswanath, B.; Zheng, H.; Zhao, B.Y. Neural cleanse: Identifying and mitigating backdoor attacks in neural networks. In Proceedings of the 2019 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 19–23 May 2019; pp. 707–723.

22. Liu, Y.; Ma, X.; Bailey, J.; Lu, F. Reflection backdoor: A natural backdoor attack on deep neural networks. In Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020, Part X; Springer: Cham, Switzerland, 2020; pp. 182–199.

23. Saha, A.; Tejankar, A.; Koohpayegani, S.A.; Pirsiavash, H. Backdoor attacks on self-supervised learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 13337–13346.

24. Turner, A.; Tsipras, D.; Madry, A. Label-consistent backdoor attacks. arXiv 2019, arXiv:1912.02771.

25. Zhao, Z.; Chen, X.; Xuan, Y.; Dong, Y.; Wang, D.; Liang, K. Defeat: Deep hidden feature backdoor attacks by imperceptible perturbation and latent representation constraints. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 15213–15222.

26. Li, Y.; Li, Y.; Wu, B.; Li, L.; He, R.; Lyu, S. Invisible backdoor attack with sample-specific triggers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 16463–16472.

27. Xue, M.; He, C.; Wu, Y.; Sun, S.; Zhang, Y.; Wang, J.; Liu, W. PTB: Robust physical backdoor attacks against deep neural networks in real world. Comput. Secur. 2022, 118, 102726.

28. Liang, J.; Liang, S.; Liu, A.; Jia, X.; Kuang, J.; Cao, X. Poisoned forgery face: Towards backdoor attacks on face forgery detection. arXiv 2024, arXiv:2402.11473.

29. Chen, X.; Liu, C.; Li, B.; Lu, K.; Song, D. Targeted backdoor attacks on deep learning systems using data poisoning. IEEE Trans. Neural Netw. Learn. Syst. 2020, 32, 1859–1872.

30. Doan, K.D.; Lao, Y.; Li, P. Marksman backdoor: Backdoor attacks with arbitrary target class. Adv. Neural Inf. Process. Syst. 2022, 35, 38260–38273.

31. Zhang, S.; Benenson, R.; Schiele, B. Citypersons: A diverse dataset for pedestrian detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3213–3221.

32. Liu, Y.; Ma, S.; Aafer, Y.; Lee, W.C.; Zhai, J.; Wang, W.; Zhang, X. Trojaning attack on neural networks. In Proceedings of the 25th Annual Network and Distributed System Security Symposium (NDSS 2018), San Diego, CA, USA, 18–21 February 2018.

33. Gu, T.; Liu, K.; Dolan-Gavitt, B.; Garg, S. Badnets: Evaluating backdooring attacks on deep neural networks. IEEE Access 2019, 7, 47230–47244.

34. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask r-cnn. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

35. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. In Proceedings of the NIPS’15: Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; Volume 28.

36. Cai, Z.; Vasconcelos, N. Cascade R-CNN: High quality object detection and instance segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 1483–1498.

37. Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

38. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

39. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016, Part I; Springer: Cham, Switzerland, 2016; pp. 21–37.

40. Liu, W.; Liao, S.; Hu, W.; Liang, X.; Chen, X. Real-time pedestrian detection for traffic monitoring systems. IEEE Trans. Intell. Transp. Syst. 2018, 19, 2675–2684.

41. Li, Y.; Niu, W.; Tian, Y.; Chen, T.; Xie, Z.; Wu, Y.; Xiang, Y.; Tong, E.; Baker, T.; Liu, J. Multiagent reinforcement learning-based signal planning for resisting congestion attack in green transportation. IEEE Trans. Green Commun. Netw. 2022, 6, 1448–1458.

42. Zhang, Y.; Wang, C.; Wang, X.; Zeng, W. CrowdPed: Crowd-aware pedestrian detection in surveillance. In Proceedings of the IEEE International Conference on Advanced Video and Signal-Based Surveillance, Taipei, Taiwan, 18–21 September 2019; pp. 1–6.

43. Wu, Y.; Xiang, Y.; Baker, T.; Tong, E.; Zhu, Y.; Cui, X.; Zhang, Z.; Han, Z.; Liu, J.; Niu, W. Collaborative Attack Sequence Generation Model Based on Multiagent Reinforcement Learning for Intelligent Traffic Signal System. Int. J. Intell. Syst. 2024, 2024, 4734030.

44. Li, H.; Yang, B.; Liu, M. LIDAR-camera fusion for pedestrian detection in autonomous driving. IEEE Trans. Intell. Veh. 2022, 7, 301–312.

45. Chen, Y.; Wu, Y.; Cui, X.; Li, Q.; Liu, J.; Niu, W. Reflective Adversarial Attacks against Pedestrian Detection Systems for Vehicles at Night. Symmetry 2024, 16, 1262.

46. Gu, T.; Dolan-Gavitt, B.; Garg, S. Badnets: Identifying vulnerabilities in the machine learning model supply chain. arXiv 2017, arXiv:1708.06733.

47. Liu, Y.; Wang, Y.; Zhang, Y. Fine-tuning for Backdoor Attack Mitigation. IEEE Trans. Inf. Forensics Secur. 2020, 15, 1234–1245.

48. Salem, X.C.A.; Zhang, M.B.S.M.Y. Badnl: Backdoor attacks against nlp models. In Proceedings of the ICML 2021 Workshop on Adversarial Machine Learning, Online, 18–24 July 2021.

49. Sun, L. Natural backdoor attack on text data. arXiv 2020, arXiv:2006.16176.

50. Zeng, R.; Chen, X.; Pu, Y.; Zhang, X.; Du, T.; Ji, S. CLIBE: Detecting Dynamic Backdoors in Transformer-based NLP Models. arXiv 2024, arXiv:2409.01193.

51. Shi, C.; Ji, S.; Pan, X.; Zhang, X.; Zhang, M.; Yang, M.; Zhou, J.; Yin, J.; Wang, T. Towards practical backdoor attacks on federated learning systems. IEEE Trans. Dependable Secur. Comput. 2024, 21, 5431–5447.

52. Wu, Y.; Li, Q.; Xiang, Y.; Zheng, J.; Wu, X.; Han, Z.; Liu, J.; Niu, W. Nightfall Deception: A Novel Backdoor Attack on Traffic Sign Recognition Models via Low-Light Data Manipulation. In International Conference on Advanced Data Mining and Applications, Sydney, NSW, Australia, 3–5 December 2024; Springer: Singapore, 2024; pp. 433–445.

53. Zhao, S.; Ma, X.; Zheng, X.; Bailey, J.; Chen, J.; Jiang, Y.G. Clean-label backdoor attacks on video recognition models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 14443–14452.

54. Rakin, A.S.; He, Z.; Fan, D. Tbt: Targeted neural network attack with bit trojan. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 13198–13207.

55. Li, Y.; Hua, J.; Wang, H.; Chen, C.; Liu, Y. Deeppayload: Black-box backdoor attack on deep learning models through neural payload injection. In Proceedings of the 2021 IEEE/ACM 43rd International Conference on Software Engineering (ICSE), Madrid, Spain, 22–30 May 2021; pp. 263–274.

56. Wenger, E.; Passananti, J.; Bhagoji, A.N.; Yao, Y.; Zheng, H.; Zhao, B.Y. Backdoor attacks against deep learning systems in the physical world. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 6206–6215.

57. Wu, Y.; Gu, Y.; Chen, Y.; Cui, X.; Li, Q.; Xiang, Y.; Tong, E.; Li, J.; Han, Z.; Liu, J. Camouflage Backdoor Attack against Pedestrian Detection. Appl. Sci. 2023, 13, 12752.

58. Jiang, L.; Ma, X.; Chen, S.; Bailey, J.; Jiang, Y.G. Black-box adversarial attacks on video recognition models. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; pp. 864–872.

59. Zhong, Z.; Zheng, L.; Kang, G.; Li, S.; Yang, Y. Random erasing data augmentation. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 13001–13008.

60. Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. Vision meets robotics: The kitti dataset. Int. J. Robot. Res. 2013, 32, 1231–1237.

61. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft coco: Common objects in context. In Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014, Part V; Springer: Cham, Switzerland, 2014; pp. 740–755.

62. Chan, S.H.; Dong, Y.; Zhu, J.; Zhang, X.; Zhou, J. Baddet: Backdoor attacks on object detection. In European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Cham, Switzerland, 2022; pp. 396–412.

63. Wei, H.; Yu, H.; Zhang, K.; Wang, Z.; Zhu, J.; Wang, Z. Moiré backdoor attack (MBA): A novel trigger for pedestrian detectors in the physical world. In Proceedings of the 31st ACM International Conference on Multimedia, Ottawa, ON, Canada, 29 October–3 November 2023; pp. 8828–8838.

64. Chen, X.; Li, H.; Zhao, Q. Test-time Noise Injection for Robustness against Backdoor Attacks. Pattern Recognit. 2021, 110, 107623.

| Dataset | Metric | Faster R-CNN: Image-Level | Faster R-CNN: Object-Level | Faster R-CNN: Image + Object | RetinaNet: Image-Level | RetinaNet: Object-Level | RetinaNet: Image + Object | Average: Image-Level | Average: Object-Level | Average: Image + Object |
|---|---|---|---|---|---|---|---|---|---|---|
| KITTI | BAP ↑ | 41.4 | 42.2 | 42.4 | 40.6 | 34.1 | 38.3 | 41.0 | 38.1 | 40.3 |
| KITTI | PAP ↓ | 31.9 | 13.0 | 16.6 | 31.6 | 5.8 | 6.1 | 31.7 | 9.4 | 11.3 |
| KITTI | ASR ↑ | 35.6 | 66.7 | 58.6 | 36.4 | 83.5 | 84.4 | 36.0 | 75.1 | 71.5 |
| CityPersons | BAP ↑ | 26.8 | 26.6 | 26.6 | 23.8 | 21.0 | 15.9 | 25.3 | 23.8 | 21.2 |
| CityPersons | PAP ↓ | 19.4 | 2.1 | 3.0 | 17.9 | 0.1 | 1.7 | 18.6 | 1.1 | 2.3 |
| CityPersons | ASR ↑ | 64.4 | 94.8 | 93.5 | 65.9 | 99.4 | 96.5 | 65.1 | 97.1 | 95.0 |

| Dataset | Method | Faster R-CNN | RetinaNet | Average |
|---|---|---|---|---|
| KITTI | Benign | 42.5 | 41.4 | 42.0 |
| KITTI | Image-level | 41.4 | 40.6 | 41.0 |
| KITTI | Object-level | 42.2 | 34.1 | 38.2 |
| KITTI | Image + Object | 42.4 | 38.3 | 40.4 |
| CityPersons | Benign | 26.8 | 23.6 | 25.2 |
| CityPersons | Image-level | 26.8 | 23.8 | 25.3 |
| CityPersons | Object-level | 26.6 | 21.0 | 23.8 |
| CityPersons | Image + Object | 26.6 | 15.9 | 21.3 |
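To make the comparison with the benign baseline explicit, the short sketch below computes how far each KITTI row in the table above falls below the benign model's value, in percentage points. The numbers are copied from the table. The dictionary layout and the helper name `bap_drop` are illustrative choices, not part of the paper.

```python
# KITTI values (%) copied from the table above: (Faster R-CNN, RetinaNet).
KITTI_BAP = {
    "Benign": (42.5, 41.4),
    "Image-level": (41.4, 40.6),
    "Object-level": (42.2, 34.1),
    "Image + Object": (42.4, 38.3),
}


def bap_drop(table, baseline="Benign"):
    """Return the per-method drop relative to the baseline, in percentage points."""
    base = table[baseline]
    return {
        method: tuple(round(b - v, 1) for b, v in zip(base, values))
        for method, values in table.items()
        if method != baseline
    }


drops = bap_drop(KITTI_BAP)
for method, (frcnn, retina) in drops.items():
    print(f"{method}: Faster R-CNN drop {frcnn}, RetinaNet drop {retina}")
```

On these figures, object-level poisoning costs Faster R-CNN 0.3 points on KITTI but costs RetinaNet 7.3 points, so the two detectors differ noticeably in how well the poisoned model preserves benign accuracy.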

| Trigger Pattern | Detector | BAP ↑ | PAP ↓ | ASR ↑ |
|---|---|---|---|---|
| (a) Backpack | Benign | 42.5 | 32.6 | - |
| (a) Backpack | Poisoned | 42.2 | 13.0 | 66.7 |
| (b) Balloon | Benign | 42.5 | 32.3 | - |
| (b) Balloon | Poisoned | 41.9 | 5.7 | 85.0 |
| (c) Paper bag | Benign | 42.5 | 36.8 | - |
| (c) Paper bag | Poisoned | 42.0 | 16.7 | 57.5 |
| (d) Suitcase | Benign | 42.5 | 36.9 | - |
| (d) Suitcase | Poisoned | 42.7 | 17.1 | 56.0 |

| Dataset | Model | Metric | Poisoning Rate: 5% | Poisoning Rate: 10% | Poisoning Rate: 20% | Poisoning Rate: 40% | Avg |
|---|---|---|---|---|---|---|---|
| KITTI | Faster R-CNN | ASR ↑ | 66.7 | 78.8 | 89.6 | 95.8 | 82.7 |
| KITTI | Faster R-CNN | BAP ↑ | 42.2 | 42.2 | 40.7 | 38.2 | 40.8 |
| KITTI | Faster R-CNN | PAP ↓ | 13.0 | 8.0 | 3.9 | 1.4 | 6.6 |
| KITTI | RetinaNet | ASR ↑ | 83.5 | 84.2 | 91.3 | 93.7 | 88.2 |
| KITTI | RetinaNet | BAP ↑ | 34.1 | 30.7 | 29.1 | 23.0 | 29.2 |
| KITTI | RetinaNet | PAP ↓ | 5.8 | 5.2 | 2.7 | 1.9 | 3.9 |
| CityPersons | Faster R-CNN | ASR ↑ | 94.8 | 97.9 | 98.8 | 99.7 | 97.8 |
| CityPersons | Faster R-CNN | BAP ↑ | 26.6 | 26.6 | 26.2 | 25.3 | 26.2 |
| CityPersons | Faster R-CNN | PAP ↓ | 2.1 | 1.1 | 0.8 | 0.2 | 1.01 |
| CityPersons | RetinaNet | ASR ↑ | 99.4 | 98.9 | 99.4 | 99.9 | 99.4 |
| CityPersons | RetinaNet | BAP ↑ | 21.0 | 14.8 | 14.4 | 14.3 | 16.1 |
| CityPersons | RetinaNet | PAP ↓ | 0.1 | 0.3 | 0.2 | 0.1 | 0.2 |

| Defense | Faster R-CNN: ASR ↑ | Faster R-CNN: BAP ↑ | Faster R-CNN: PAP ↓ | RetinaNet: ASR ↑ | RetinaNet: BAP ↑ | RetinaNet: PAP ↓ | Average: ASR ↑ | Average: BAP ↑ | Average: PAP ↓ |
|---|---|---|---|---|---|---|---|---|---|
| W/O | 66.7 | 42.2 | 13.0 | 83.5 | 34.1 | 5.8 | 75.1 | 38.2 | 9.4 |
| Fine-tuning | 62.2 | 31.6 | 13.6 | 33.3 | 36.1 | 30.2 | 47.8 | 33.9 | 21.9 |
| Test-time noise injection | 60.3 | 22.6 | 16.0 | 76.2 | 16.9 | 8.4 | 68.3 | 19.8 | 12.2 |
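One way to read the defense table above is to ask how much each defense lowers ASR and what it costs in BAP, relative to the undefended (W/O) row. The sketch below does that arithmetic on values copied from the table. The data layout and the helper name `defense_effect` are assumptions made for this example.

```python
# (ASR, BAP, PAP) in % copied from the defense table above.
RESULTS = {
    "Faster R-CNN": {
        "W/O": (66.7, 42.2, 13.0),
        "Fine-tuning": (62.2, 31.6, 13.6),
        "Test-time noise injection": (60.3, 22.6, 16.0),
    },
    "RetinaNet": {
        "W/O": (83.5, 34.1, 5.8),
        "Fine-tuning": (33.3, 36.1, 30.2),
        "Test-time noise injection": (76.2, 16.9, 8.4),
    },
}


def defense_effect(results, baseline="W/O"):
    """Return {model: {defense: (ASR reduction, BAP change)}} in percentage points."""
    effect = {}
    for model, rows in results.items():
        asr0, bap0, _ = rows[baseline]
        effect[model] = {
            name: (round(asr0 - asr, 1), round(bap - bap0, 1))
            for name, (asr, bap, _) in rows.items()
            if name != baseline
        }
    return effect


effect = defense_effect(RESULTS)
for model, rows in effect.items():
    for name, (asr_cut, bap_change) in rows.items():
        print(f"{model} / {name}: ASR reduced by {asr_cut}, BAP change {bap_change}")
```

On these figures, fine-tuning lowers RetinaNet's ASR by 50.2 points while BAP rises by 2.0, but it lowers Faster R-CNN's ASR by only 4.5 points at a cost of 10.6 BAP points. Test-time noise injection reduces ASR by less than 8 points on both detectors while cutting BAP by more than 17.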


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, Q.; Wu, Y.; Li, Q.; Cui, X.; Chen, Y.; Chang, X.; Liu, J.; Niu, W. Natural Occlusion-Based Backdoor Attacks: A Novel Approach to Compromising Pedestrian Detectors. Sensors 2025, 25, 4203. https://doi.org/10.3390/s25134203
