Abstract

With the rapid development of the Financial Internet of Things (FIoT), many intelligent devices have been deployed in various business scenarios. Due to the unique characteristics of these devices, they are highly vulnerable to malicious attacks, posing significant threats to the system’s stability and security. Moreover, the limited resources available in the FIoT, combined with the extensive deployment of AI algorithms, can significantly reduce overall system availability. To address the challenge of resisting malicious behaviors and attacks in the FIoT, this paper proposes a trust-based collaborative smart device selection algorithm that integrates both subjective and objective trust mechanisms with dynamic blacklists and whitelists, leveraging domain knowledge and game theory. It is essential to evaluate real-time dynamic trust levels during system execution to accurately assess device trustworthiness. A dynamic blacklist and whitelist transformation mechanism is also proposed to capture the evolving behavior of collaborative service devices and update the lists accordingly. The proposed algorithm enhances the anti-attack capabilities of smart devices in the FIoT by combining adaptive trust evaluation with blacklist and whitelist strategies. It maintains a high task success rate in both single and complex attack scenarios. Furthermore, to address the challenge of resource allocation for trusted smart devices under constrained edge resources, a coalition game-based algorithm is proposed that considers both device activity and trust levels. Experimental results demonstrate that the proposed method significantly improves task success rates and resource allocation performance compared to existing approaches.

1. Introduction

The Financial Internet of Things (FIoT) has emerged as a transformative paradigm in modern finance, enabling intelligent sensing, real-time decision-making, and automated service delivery through interconnected smart devices. Each smart device offloads tasks to cooperating devices with idle resources via end–edge or end-to-end collaboration mechanisms, aiming to reduce service latency caused by resource constraints. However, as device deployment scales up and becomes more complex, ensuring system reliability, data security, and efficient resource management has become increasingly challenging. In addition, some cooperative service devices may exhibit malicious behaviors, such as disrupting networks or degrading services, thereby compromising system performance. Some devices may attempt to gain substantial profit by offering deceptive or misleading services. Some devices may intermittently offer legitimate, deceptive, or malicious services. Therefore, a robust scheme capable of resisting such risks and attacks in FIoT environments is urgently needed. To address the challenge of resisting malicious behaviors and attacks in the FIoT, this paper proposes a trusted smart device selection algorithm that integrates both subjective and objective trust mechanisms with a dynamic blacklist and whitelist, leveraging domain knowledge and game theory. Given the asymmetry in trust values, the trust model considers subjective and objective factors, including service quality, tolerance, recognition, and temporal dynamics, to enhance trust evaluation accuracy. In addition, a dynamic blacklist and whitelist transformation mechanism is introduced to capture behavioral changes in collaborative devices and update trust records accordingly.

With the widespread application of artificial intelligence (AI) technologies, numerous AI algorithms, such as facial recognition, currency classification, and financial risk assessment, are frequently employed in the FIoT. Although existing methods address the resource allocation problem for certain trusted cooperative devices, significant challenges remain in large-scale FIoT environments. Due to the constrained resources of trusted edge devices operating under collaborative mechanisms, efficient resource allocation among trusted smart devices becomes critical. To tackle this issue, this paper proposes a coalition game-based resource allocation algorithm that considers device activity levels and trust values to optimize the allocation of limited collaborative edge resources.

In summary, this paper investigates trusted smart device selection and efficient resource allocation for collaborative devices in the FIoT under a collaboration mechanism. We emphasize that the integration of these components in our algorithm is specifically designed to address challenges in the FIoT, particularly in dealing with dynamic malicious behaviors and resource allocation in edge devices. Previous works have often treated trust models and resource allocation separately or used them in a static context, without considering dynamic blacklist/whitelist adaptations or the integration of coalition game theory for real-time resource allocation. This approach enhances the security and reliability of smart devices in FIoT, contributing to overall system stability within collaborative environments.

The main contributions of this paper are as follows:

- To ensure secure and reliable collaboration among intelligent devices in the FIoT, a comprehensive trust model is proposed. This model quantifies trust based on three distinct but complementary components: direct trust, indirect trust, and aggregate trust. Direct trust is calculated based on the historical interactions and behavioral patterns observed between devices, reflecting firsthand experience. Indirect trust is derived from recommendations or feedback provided by other nodes within the network, allowing trust to be estimated even in the absence of direct interactions. Aggregate trust serves as a unified measure, synthesizing direct and indirect trust to produce a holistic evaluation of a device’s trustworthiness. This layered approach not only improves the accuracy of trust assessments but also enhances the system’s resilience to malicious behavior, misinformation, and reputation manipulation. A trusted device selection algorithm that integrates both trust and black- and whitelist mechanisms is introduced. The proposed algorithm enhances the system’s capability to withstand attacks.

- Building upon the proposed trust model, a trusted device selection algorithm is developed to identify suitable collaboration partners among FIoT devices. This algorithm integrates both the computed trust values and a black- and whitelist mechanism, offering a dual-layered security framework. The whitelist includes devices with a verified history of trustworthy behavior, ensuring priority in resource allocation and task assignment. The blacklist consists of devices that have been identified as compromised, untrustworthy, or malicious, thereby preventing them from participating in collaborative processes. This hybrid mechanism enables dynamic and adaptive device selection, significantly strengthening the system’s ability to defend against a wide range of attacks. The result is a robust and scalable method for maintaining the integrity and reliability of device interactions in FIoT environments. To address the dynamic nature of device behavior in FIoT environments, this paper also proposes a dynamic black- and whitelist transformation mechanism. Unlike static approaches, this mechanism enables the real-time adaptation of trust boundaries by continuously monitoring device behavior and updating list memberships accordingly. This dynamic transformation mechanism ensures that the trust system remains both flexible and responsive to evolving threats and behavioral shifts. It mitigates the risks associated with fixed classifications and provides a robust defense against strategic attackers who may alternate between benign and malicious behavior to evade detection.

- To optimize the utilization of limited resources among a dynamically changing set of trusted devices, a resource allocation algorithm based on coalition game theory is proposed. In this framework, devices form coalitions based on mutual trust and resource needs, cooperating to share resources in a way that maximizes the overall utility of the group. This approach allows for flexible, dynamic, and decentralized resource management, which is critical in heterogeneous and large-scale FIoT networks. By leveraging coalition formation strategies, the system can adapt to changing demands and trust landscapes in real-time, ensuring sustained performance and security.

2. Related Work

Trust management in edge computing and the Internet of Things (IoT) has been extensively explored to address the challenges of security, reliability, and collaboration among distributed devices. Yang et al. [1] proposed a task offloading scheme based on active trust detection, where trust is dynamically calculated based on the outcomes of previous interactions. Their trust-detection-based offloading strategy is reported to greatly improve the task success rate. Sun et al. [2] developed an access control model that integrates mutual trust between nodes and users within edge computing environments. By combining trust management with a role-based access control (RBAC) model, they addressed the multi-domain nature of edge computing, implementing both intra-domain and cross-domain access control policies based on trust, thus extending and improving traditional RBAC. Li et al. [3] introduced the use of blockchain technology to store, disseminate, and update trust information in a decentralized manner, enhancing the evaluation of edge servers’ trustworthiness. Their trust model incorporates factors such as honesty, behavior, and reliability. Bounaira et al. [4] proposed a blockchain-based edge caching system enhanced by trust management, aiming to secure the caching process by establishing bidirectional trust between content providers and edge servers. Their trust management system is based on a direct trust score calculated using three-valued subjective logic (3VSL), which enhances confidence among caching participants.

Resource allocation in edge–cloud collaborative IoT environments has been widely studied due to the increasing demand for low latency, high efficiency, and system adaptability. A variety of optimization, artificial intelligence, and trust-based methods have been proposed in the literature. Geng et al. [5] investigated a dynamic resource allocation scheme involving multiple tasks. Under long-term constraints on energy consumption and system cost, they formulated a cloud-edge collaborative offloading optimization problem to minimize the average task delay. Mageswari et al. [6] focused on IoT resource allocation and scheduling, aiming to minimize the total communication cost between the gateway and the resources through the elephant herding optimization algorithm, thereby achieving optimal resource allocation in the IoT. Mostafa et al. [7] employed a custom-designed reinforcement learning model that adapts to the demand fluctuations and operating conditions inherent in IoT systems. The model acquires the optimal allocation policy through continuous interaction with the environment, which facilitates efficient load balancing and minimizes latency without human intervention. Elgendy et al. [8] proposed a framework for offloading compute-intensive tasks from mobile devices to the cloud. The framework uses an optimization model to dynamically determine offloading decisions based on four main parameters: energy consumption, CPU utilization, execution time, and memory usage. In addition, a security layer is provided to protect the data transmitted to the cloud from attacks. Kang et al. [9] used consortium blockchain and smart contract technology to achieve secure data storage and sharing in vehicular edge networks. These technologies effectively prevent unauthorized data sharing. Liu et al. [10] addressed the challenges of task scheduling and resource allocation in ultra-dense edge–cloud networks, which encompass micro (macro) base stations and various device users under 5G technology. They proposed a two-level scheduling framework to accommodate the dynamic nature of user tasks. Zhou et al. [11] studied resource allocation optimization in a complex IoT environment, addressing task offloading and resource allocation for hybrid energy collaborative tasks assisted by drones and multiple clouds. They proposed a collaborative task offloading and resource allocation algorithm leveraging artificial intelligence technology, which jointly determines task offloading and energy acquisition. Deng et al. [12] proposed a reinforcement learning approach for dynamic resource allocation at the edge of trusted IoT systems, aiming to enhance the reliability and efficiency of resource allocation. Liu et al. [13] applied machine learning to resource allocation in edge computing within IoT networks to improve allocation efficiency, reporting promising results. Xu et al. [14] proposed an algorithm for cost-efficient private and public cloud resource allocation in a collaborative cloud-edge environment, using the deep reinforcement learning algorithms deep deterministic policy gradient and P-DQN.

Furthermore, additional security threats targeting FIoT systems can be identified across the following dimensions. First, significant challenges in ensuring the security of FIoT firmware arise from its closed-source nature, limited device accessibility, and hardware heterogeneity. Financial infrastructure devices, such as point-of-sale (POS) terminals and mobile payment nodes, heavily rely on firmware integrity; any compromise may lead to transaction manipulation, the injection of fraudulent data, or the deployment of persistent malware [15]. Second, AI-driven agents, including those built on large language models (LLMs), are increasingly integrated into FIoT environments, which makes their security a growing concern. As these agents are progressively adopted in FIoT applications, such as fraud detection systems and automated pricing mechanisms, their behavioral complexity introduces new challenges concerning trustworthiness and regulatory compliance. Malfunctioning or malicious behaviors may result in legal violations or systemic financial instability [16]. Third, LLMs such as ChatGPT present considerable risks when deployed within critical infrastructures. When they are integrated with FIoT systems, particularly those incorporating conversational AI interfaces, their vulnerabilities may undermine user confidentiality, compromise transactional reliability, and impair the accuracy of financial decision-making [17].

Despite these advancements, most existing schemes primarily focus on either trust evaluation or resource coordination in isolation. They often lack the ability to adapt to dynamic malicious behavior or efficiently handle resource constraints in large-scale FIoT environments. To address these challenges, this paper proposes a trusted collaborative smart device selection algorithm that integrates both subjective and objective trust mechanisms with a dynamic black- and whitelist system. Furthermore, by considering device activity levels and trust values, a coalition game-based resource allocation algorithm is introduced to optimize the allocation of limited edge resources. This approach not only improves resistance to malicious attacks but also enhances task success rates and resource utilization, particularly in resource-constrained edge settings.

3. Proposed Methods

3.1. System Scenario

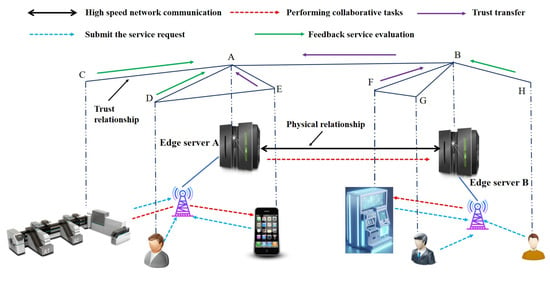

In the trust-based collaborative edge computing scenario of the FIoT, the architecture comprises three primary components: end users, edge servers, and collaborative service devices. As illustrated in Figure 1, end users access edge servers wirelessly to request computational or storage services. Upon completion of the submitted tasks, end users provide service feedback to the edge servers. Before initiating a new task, end users send a request to the edge server to query the credibility of relevant collaborative service devices. The edge server continuously monitors the behavioral performance of these devices, collects evaluation feedback from various sources, and aggregates the data to calculate a trust value for each device. Based on these trust evaluations, untrusted or malicious devices are filtered out, and resources from the selected collaborative service devices are allocated accordingly. Our target scenario is collaborative service and data sharing among financial IoT devices (e.g., ATMs, POS terminals, and trading terminals). We focus on the FIoT because the sensitive nature of financial transactions places high demands on security, trust, and efficient resource allocation. Although the model also applies to broader IoT settings, it is tuned to financial scenarios where trust and reliability are critical.

Figure 1. Financial IoT collaborative edge computing scenario.

FIoT edge computing scenarios often involve various types of users. For generalization, we consider two types of users in the system: ordinary users and malicious users. Ordinary users deliver effective services and provide accurate evaluation feedback. In contrast, malicious users offer ineffective services and misleading reviews. This paper examines four malicious attack models.

Bad-mouthing attacks [18]: In this model, independent malicious users provide invalid services and negative trust ratings for legitimate devices during interactions. This reduces the likelihood of good devices being chosen as service providers.

Good-mouthing attacks [19]: In this model, independent malicious users deliver invalid services to one another while assigning positive trust ratings. This behavior increases the likelihood of malicious devices being selected as service providers.

Ballot-stuffing attacks [20]: In this model, colluding malicious devices exchange favorable reviews. When chosen as service providers, they deliver ineffective services.

Selective behavior attacks: In this model, a malicious device first provides valid services to raise its trust value and its likelihood of being selected, and then delivers invalid services once it is trusted.

The choice of attack models such as bad-mouthing, good-mouthing, ballot-stuffing, and selective behavior attacks was based on their relevance to the manipulation of trust scores in collaborative edge computing environments. These attacks are representative of the behaviors that malicious devices or users could exhibit in the FIoT, targeting the trust and reputation management systems. While other attacks, such as Sybil and DoS attacks, could be explored in future work, they were not considered in this study due to their increased complexity and specific assumptions regarding network structures.
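To make the four attack models concrete, the following is a minimal simulation sketch rather than the evaluation code used in this paper. It assumes binary feedback (1 for satisfied, 0 for unsatisfied); the names `Attack`, `reported_rating`, `selective_service_ok`, and the parameter `exploit_threshold` are hypothetical and introduced only for illustration.

```python
from enum import Enum


class Attack(Enum):
    NONE = "honest"
    BAD_MOUTHING = "bad-mouthing"
    GOOD_MOUTHING = "good-mouthing"
    BALLOT_STUFFING = "ballot-stuffing"


def reported_rating(attack, service_ok, target_is_malicious, same_clique=False):
    """Return the rating (1 = satisfied, 0 = unsatisfied) that a rater submits."""
    if attack is Attack.BAD_MOUTHING and not target_is_malicious:
        return 0  # negative rating for a legitimate device
    if attack is Attack.GOOD_MOUTHING and target_is_malicious:
        return 1  # positive rating for another malicious device
    if attack is Attack.BALLOT_STUFFING and same_clique:
        return 1  # colluding devices exchange favorable reviews
    return 1 if service_ok else 0  # honest feedback in every other case


def selective_service_ok(trust, exploit_threshold=0.8):
    """Selective-behavior device: serve well until trust is high, then cheat.

    exploit_threshold is an assumed, illustrative parameter.
    """
    return trust < exploit_threshold
```

Feeding such ratings into a trust model is one way to check whether slandered legitimate devices or praised malicious devices end up being selected.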

3.2. Trust Mechanism Design

3.2.1. Subjective Direct Trust

- Direct Trust Computation

After completing a task, requester will evaluate the collaborative service device based on its service quality and assign a score. The set of evaluations is defined as the set of evaluation results. The evaluation results belong to the set . A multi-dimensional evaluation mechanism is represented by Equation (1).

The aggregation of all evaluation results from service requesters will constitute the overall evaluation of end user regarding collaborative service device , known as direct trust. Since trust is a dynamic value that changes over time, direct trust can be represented as , as shown in Equation (2).

and represent the number of satisfied and unsatisfied services provided by collaborative service device to service-requesting end user at time , respectively.

In trust mechanisms, establishing trust typically requires multiple trusted interactions among participants; however, a single untrusted interaction can destroy the trust relationship. To describe these facts, this paper introduces a personalized context-aware evaluation mechanism. In this mechanism, a weight parameter is defined to represent the impact of positive evaluations on direct trust, aiming to amplify the effect of unsatisfied services on direct trust. This mechanism effectively addresses the malicious behavior in which a collaborative service device initially provides effective services to gain high trust, only to deliver invalid services afterward. Under this mechanism, the direct trust of end user towards cooperative service device at time can be expressed by Equation (3).

- The Decay of Trust Value

Trust is often closely related to time, as outlined in its characteristics [21]. During the period when the collaborative service device is providing services, it may have a relatively high trust value. However, this does not guarantee that it will maintain a high trust value in the present or future. Over time, the influence of user feedback will gradually decrease [22]. To account for this phenomenon, this paper introduces an attenuation factor. Consequently, the numbers of satisfied and unsatisfied services for end user at time are represented by Equations (4) and (5), respectively.

Here, represents the current time, denotes the evaluation feedback time, and signifies the duration from the evaluation feedback to the current time, with the conditions and . The function denotes the decay factor. Therefore, the direct trust of end user towards cooperative service device at time can be expressed as Equation (6).
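The interplay between the weight parameter and the attenuation factor can be illustrated with a short sketch. It is not a transcription of Equations (3) to (6): it assumes an exponential decay function and a ratio-style direct trust in which the weight `alpha` (between 0 and 1) scales down satisfied services so that unsatisfied services count relatively more. The names `decayed_counts`, `direct_trust`, `alpha`, and `decay_rate` are hypothetical.

```python
import math


def decayed_counts(feedback, now, decay_rate=0.1):
    """Sum time-decayed satisfied and unsatisfied feedback.

    feedback: iterable of (timestamp, satisfied) pairs with timestamp <= now.
    The exponential form of the decay is an assumption of this sketch.
    """
    satisfied = unsatisfied = 0.0
    for timestamp, ok in feedback:
        weight = math.exp(-decay_rate * (now - timestamp))  # older feedback counts less
        if ok:
            satisfied += weight
        else:
            unsatisfied += weight
    return satisfied, unsatisfied


def direct_trust(feedback, now, alpha=0.5, decay_rate=0.1):
    """Assumed ratio form: alpha * S / (alpha * S + F), with 0 < alpha <= 1."""
    satisfied, unsatisfied = decayed_counts(feedback, now, decay_rate)
    total = alpha * satisfied + unsatisfied
    if total == 0:
        return 0.0  # no interaction history (assumed default)
    return alpha * satisfied / total
```

With `alpha=0.5`, one satisfied and one unsatisfied service reported at the same time yield a trust of one third rather than one half, which reflects the idea that a single untrusted interaction outweighs a trusted one.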

- Trust Value Update

The trust mechanism proposed in this paper updates the trust values of collaborative service devices after they complete their services. This aims to encourage effective collaborative services and discourage ineffective service behaviors. The updated trust value serves as the basis for the next selection. After the service is completed, the trust value of a cooperative service device that provides effective service may increase according to Equation (7). After the service is completed, the trust value of a cooperative service device that provides ineffective service may decrease according to Equation (8).

Here, stands for reward factor. stands for penalty factor and . The reward factor and penalty factor are typically set by the edge service provider.
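The reward and penalty update can be sketched as follows. The functional forms are assumptions, not Equations (7) and (8) themselves: the reward moves the trust value toward 1 and the penalty scales it toward 0. The default values, and the choice of a penalty larger than the reward, are illustrative only, motivated by the earlier remark that a single untrusted interaction can destroy a trust relationship.

```python
def update_trust(trust, service_valid, reward=0.05, penalty=0.2):
    """Return the updated trust value after one completed service.

    Assumed forms: a valid service moves trust toward 1 by the reward
    factor; an invalid service shrinks trust by the penalty factor.
    """
    if service_valid:
        updated = trust + reward * (1.0 - trust)
    else:
        updated = trust - penalty * trust
    return min(1.0, max(0.0, updated))  # keep the value inside [0, 1]
```

For example, starting from a trust of 0.5, a valid service gives roughly 0.525 while an invalid one gives roughly 0.4, so under these assumed values a device needs several good services to recover from one bad one.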

- Normalization

To prevent the false inflation of direct trust caused by mutual collusion between malicious end users and collaborative service devices, this paper normalizes the direct trust values between end users and collaborative service devices, as shown in Equation (9).

Here, represents the normalized direct trust of terminal user towards collaborative service device at time . If a set of trustworthy collaborative service devices is known in advance—that is, every device in is trustworthy—then for the scenario where terminal user has not interacted with other collaborative service devices, is obtained. In this case, if collaborative service device belongs to the trustworthy set , then applies; otherwise, is used. If the trustworthy set cannot be obtained in advance, then applies, where is the total number of collaborative service devices.
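The case analysis above can be sketched in a few lines. The fallback values are assumptions of this sketch: they follow the common EigenTrust-style convention in which a user without interaction history spreads its trust uniformly over the known trustworthy set, or uniformly over all devices when no such set is available. The function name `normalize_row` and the dictionary-based layout are hypothetical.

```python
def normalize_row(raw_trust, trusted_set=None):
    """Normalize one end user's direct trust over all collaborative devices.

    raw_trust: dict mapping device id -> non-negative direct trust.
    trusted_set: optional set of device ids known in advance to be trustworthy
                 (assumed to be a subset of the keys of raw_trust).
    """
    total = sum(raw_trust.values())
    if total > 0:
        # Usual case: each value is divided by the row sum.
        return {dev: value / total for dev, value in raw_trust.items()}
    if trusted_set:
        # No interactions yet: rely only on the pre-trusted devices.
        share = 1.0 / len(trusted_set)
        return {dev: (share if dev in trusted_set else 0.0) for dev in raw_trust}
    # No interactions and no pre-trusted set: uniform over all devices.
    return {dev: 1.0 / len(raw_trust) for dev in raw_trust}
```

Because every row sums to 1 after normalization, a pair of colluding parties cannot inflate each other's weight beyond the share an honest user could assign.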

Based on the above discussion, the direct trust value calculation method is presented in Algorithm 1.

| Algorithm 1 Direct Trust Value Calculation Algorithm |
| --- |
| Input: Number of interactions , end user evaluation of each service , weight coefficient , current time at which the trust value is computed, evaluation feedback time , reward coefficient , penalty coefficient . |
| Output: Direct trust value of the end user, set of satisfied service evaluations , set of unsatisfied service evaluations, number of satisfied service evaluations , number of unsatisfied service evaluations. |
| 1: , , ; |
| 2: if then |
| 3: for to do |
| 4: if then |
| 5: ; |
| 6: ; |
| 7: else |
| 8: ; |
| 9: ; |
| 10: end if |
| 11: |
| 12: end for |
| 13: if the cooperative service device provides a valid service then |
| 14: Update the direct trust value in accordance with Equation (7). |
| 15: else |
| 16: Update the direct trust value in accordance with Equation (8). |
| 17: end if |
| 18: end if |
| 19: Determine in accordance with Equation (9). |
| 20: if then |
| 21: Determine in accordance with Equation (6). |
| 22: if the cooperative service device provides a valid service then |
| 23: Update the direct trust value in accordance with Equation (7). |
| 24: else |
| 25: Update the direct trust value in accordance with Equation (8). |
| 26: end if |
| 27: end if |
| 28: Normalize the direct trust value in accordance with Equation (9). |
| 29: return , , , , |

3.2.2. Objective Indirect Trust

Upon completion of the task by the collaborative service device, the end user’s direct trust feedback is submitted to the edge service provider. Consequently, the edge service provider can construct an matrix to represent the normalized direct trust values between any terminal user and any collaborative service device at time . The Markov matrix is presented in Equation (10).

Direct trust represents the trust relationship established between end users and collaborative service devices based on their mutual interactions. In FIoT edge computing systems with end-to-edge collaboration mechanisms, interactions are influenced by interaction history and the mobility of end users. As a result, interactions often occur between parties who are not familiar with each other. When there is no interaction between end users and collaborative service devices, direct feedback information is unavailable. In such cases, it is necessary to utilize trust values provided by other devices that have interacted with the collaborative service device to assess its trustworthiness. This trust value is referred to as the objective indirect trust value in this paper.

In real-world interactions, people tend to trust information from those they know and trust. Similarly, when calculating indirect trust in this paper, we reference the trust values of neighboring devices with higher trust levels. Trust is often transitive in human society [23]. Similarly, in the proposed trust mechanism, if terminal user trusts device , and device trusts device , then even without direct interaction between user and device , trust can be transferred. This concept is formally described using Equation (11).

Indirect trust in collaborative service devices can be established by aggregating the direct trust values from all their neighbors. Terminal users calculate indirect trust to collaborative service devices by considering only their neighbors, a process referred to as one-hop trust propagation in this paper. We introduce one vector to represent the normalized direct trust of terminal users to all collaborative service devices and another vector to represent the indirect trust. Therefore, the one-hop trust propagation can be represented in matrix form as shown in Equation (12).

In FIoT edge computing systems with sparse evaluation data, indirect trust obtained through one-hop trust transfer often cannot meet the interaction needs of end users. To gain a broader trust perspective, end user can consider the evaluation information of its neighbors’ neighbors, known as two-hop trust transfer. Two-hop trust transfer can be formally expressed as Equation (13).

Similarly, M-hop trust transfer can be expressed as Equation (14).
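Multi-hop propagation amounts to repeatedly multiplying the user's trust vector by the transposed normalized trust matrix. The sketch below assumes a square, row-normalized matrix in which every party can act as both a rater and a rated device; the function name `propagate` and the list-of-lists layout are hypothetical and not taken from Equations (12) to (14).

```python
def propagate(trust_matrix, user, hops):
    """Return the indirect trust vector of `user` after `hops` propagation steps.

    trust_matrix[a][b] is the normalized direct trust that a places in b.
    Rows are assumed to sum to 1 and the matrix is assumed to be square.
    """
    size = len(trust_matrix)
    vector = list(trust_matrix[user])  # start from the user's own direct trust
    for _ in range(hops):
        # One hop: weight each neighbor's opinion by the trust placed in that neighbor.
        vector = [
            sum(vector[k] * trust_matrix[k][j] for k in range(size))
            for j in range(size)
        ]
    return vector
```

For the two-party matrix `[[0.5, 0.5], [0.0, 1.0]]`, one hop turns the first party's vector `[0.5, 0.5]` into `[0.25, 0.75]`: trust flows toward the party that the trusted neighbor itself trusts.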

To address inaccurate neighbor evaluations, this paper introduces evaluation similarity. Evaluation similarity generally refers to the degree of similarity between two people’s views on the same thing. In our indirect trust calculations, evaluation credibility refers to how trustworthy the trust evaluations of cooperative service devices are. Given end user and neighbor , we use to represent the evaluation credibility of neighbor from the perspective of end user . The credibility is shown in Equation (15).

Here, represents the set of cooperative service devices that have interacted with both end user and neighbor .

From Equation (15), we infer that the more similar terminal user ’s evaluations are to those of terminal user , the more reliable user ’s evaluation information is. By further considering the credibility of terminal user evaluation , the direct trust between terminal users and collaborative service devices can be rewritten as shown in Equation (16).

Through the normalization operation of Equation (9) and the trust transfer operation of Equation (14), a more accurate end user indirect trust vector can be obtained.
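One way to realize evaluation credibility is sketched below. It is an assumed form, not Equation (15): credibility is taken as one minus the root-mean-square difference between the two parties' direct trust values over the devices both have interacted with, and the credibility-weighted trust of Equation (16) is assumed to be a simple product. The names `evaluation_credibility` and `credibility_weighted` are hypothetical.

```python
import math


def evaluation_credibility(ratings_i, ratings_j):
    """Credibility of neighbor j from the perspective of end user i.

    ratings_*: dict mapping device id -> direct trust in [0, 1].
    Only devices rated by both parties are compared.
    """
    common = ratings_i.keys() & ratings_j.keys()
    if not common:
        return 0.0  # assumption: no shared experience means no credibility
    mean_sq = sum((ratings_i[d] - ratings_j[d]) ** 2 for d in common) / len(common)
    return 1.0 - math.sqrt(mean_sq)


def credibility_weighted(ratings_j, credibility):
    """Scale a neighbor's direct trust values by its credibility (assumed product form)."""
    return {dev: credibility * value for dev, value in ratings_j.items()}
```

Identical evaluations give a credibility of 1 and fully opposed evaluations give 0, so a neighbor whose opinions diverge from the end user's own experience contributes little to the indirect trust.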

The indirect trust calculation algorithm is presented in Algorithm 2.

| Algorithm 2 Indirect Trust Value Computation Algorithm |
| --- |
| Input: Set A of cooperative service devices, the direct trust value calculated by Algorithm 1, time . |
| Output: |
| 1: Construct the matrix C. |
| 2: for do |
| 3: Calculate in accordance with Equation (14). |
| 4: end for |
| 5: return |

3.2.3. Aggregate Trust

From the above discussion, we can obtain both direct and indirect trust values. In order to improve the reliability of trust calculation, this paper proposes an adaptive method to calculate the aggregated trust value. The aggregated trust value is shown in Equation (17).

where and are the weight coefficients for the direct and indirect trust values, respectively. can be calculated by Equation (18).

where represents the average direct trust value of devices in the collaborative service device set at time . represents the total number of successful interactions between end user and cooperative service device at time .

From Equation (18), when there are no direct interactions at time , is set to 0, and only the indirect trust value is used. When the average direct trust value in the collaborative service device set at time is less than 0.5, it indicates that the direct trust is inaccurate, or the number of devices is small. To improve the credibility of the trust value, is set to 1; only the direct trust value is used, and the indirect trust value is ignored. When the average direct trust value of devices is greater than or equal to 0.5, the aggregated trust value is determined by considering both direct and indirect trust.
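The three cases can be sketched as follows. The first two branches follow the rules stated above; the weight used in the mixed case is only a placeholder, since the exact form of Equation (18) is not reproduced here, and the indirect weight is assumed to be the complement of the direct weight. The names `direct_weight` and `aggregate_trust` are hypothetical.

```python
def direct_weight(successful_interactions, mean_direct_trust):
    """Weight given to direct trust when aggregating."""
    if successful_interactions == 0:
        return 0.0  # no direct interactions: use indirect trust only
    if mean_direct_trust < 0.5:
        return 1.0  # low average direct trust: use direct trust only
    # Placeholder for the mixed case: more first-hand evidence, more weight.
    return successful_interactions / (successful_interactions + 1.0)


def aggregate_trust(direct, indirect, successful_interactions, mean_direct_trust):
    """Combine direct and indirect trust with complementary weights (assumed)."""
    weight = direct_weight(successful_interactions, mean_direct_trust)
    return weight * direct + (1.0 - weight) * indirect
```

A new end user with no history therefore relies entirely on recommendations, whereas an end user operating among devices with a low average direct trust falls back on its own experience.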

In summary, the aggregate trust computation algorithm is shown in Algorithm 3.

| Algorithm 3 Aggregate Trust Value Calculation Algorithm |
| --- |
| Input: Time , set of cooperative service devices. |
| Output: |
| 1: Determine in accordance with Algorithm 1. |
| 2: Determine in accordance with Algorithm 2. |
| 3: Calculate the mean value of direct trust in . |
| 4: |
| 5: |
| 6: return |

3.3. Trusted Cooperative Service Device Selection Mechanism

In selecting trustworthy collaborative service devices, direct or indirect trust is typically used for screening. Several existing algorithms consider only the indirect trust values of collaborative service devices, neglecting their personalized direct trust. Therefore, after introducing the black- and whitelist mechanism, this paper proposes a trustworthy collaborative service device selection mechanism based on it. The personalized black- and whitelist mechanism filters out most malicious behaviors by prioritizing interaction with devices on the whitelist and avoiding those on the blacklist. This aims to enhance service quality in collaborative edge computing systems.

3.3.1. Black- and Whitelist Mechanism

Malicious behavior undermines both the trustworthiness and the accuracy of trust evaluation, especially when malicious devices exploit the trust model itself to mount attacks. Therefore, we propose adopting a black- and whitelist mechanism to filter cooperative service devices. The black- and whitelist mechanism includes a blacklist, a whitelist, and a conversion mechanism between them.

- Blacklist Mechanism

In the personalized black- and whitelist mechanism, end user adds untrustworthy cooperative service devices to the blacklist and ceases interaction with them. Let represent the blacklist of end user . The blacklist mechanism is influenced not only by the number of satisfied and unsatisfied services but also by tolerance. Next, we introduce the definition of tolerance and the calculation process of the blacklist mechanism.

Tolerance indicates the extent to which end users tolerate unsatisfactory services. The greater the value of is, the higher the tolerance level of end users is. In sociology, tolerance is jointly influenced by satisfaction and service fairness. Similarly, we introduce satisfaction and service fairness of cooperative service devices as two influencing factors. As discussed earlier, the satisfaction level of end users with services provided by cooperative service devices can be expressed by Equation (19).

Service fairness is also subjectively evaluated by the end user after the service is completed. Let represent the set of evaluations. Each evaluation result belongs to the set . The multi-dimensional evaluation mechanism can be expressed by Equation (20).

The service fairness evaluation from end user to cooperative service device is formed by aggregating all evaluation results from service requesters. Therefore, the service fairness of the collaborative service device can be expressed by Equation (21).

Here, and represent the numbers of services evaluated as fair and unfair by end user for cooperative service device , respectively. Thus, the end user tolerance can be expressed by Equation (22).

Here, is a weight coefficient, generally set by the edge service provider. The tolerance is calculated as shown in Algorithm 4.

| Algorithm 4 The Algorithm for Tolerance Calculation |

| Input: The quantity of interactions , the evaluation value of each service as perceived by the end user, the fairness rating of each service as perceived by the end user, the factor of service fairness .

Output: Tolerance
1: , , , , ;
2: for to do
3: if then
4: ;
5: ;
6: else
7: ;
8: ;
9: end if
10: if then
11: ;
12: ;
13: else
14: ;
15: ;
16: end if
17: end for
18:
19: return |
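The counting loop of Algorithm 4 can be sketched as below. The sketch rests on several assumptions that the text does not confirm: evaluations and fairness ratings are taken to be numeric scores compared against a hypothetical `threshold`, satisfaction and fairness are taken to be simple ratios of counts, and tolerance is taken to be their weighted sum with the fairness factor `weight`.

```python
from typing import Sequence


def tolerance(evaluations: Sequence[float],
              fairness_ratings: Sequence[float],
              weight: float,
              threshold: float = 0.5) -> float:
    """Estimate tolerance from per-service evaluations and fairness ratings.

    weight    -- factor of service fairness (set by the edge service provider)
    threshold -- hypothetical cut-off separating satisfied/fair from the rest
    """
    if len(evaluations) != len(fairness_ratings):
        raise ValueError("one fairness rating is expected per evaluation")

    satisfied = unsatisfied = fair = unfair = 0
    for evaluation, rating in zip(evaluations, fairness_ratings):
        # Count satisfied versus unsatisfied services.
        if evaluation >= threshold:
            satisfied += 1
        else:
            unsatisfied += 1
        # Count services judged fair versus unfair.
        if rating >= threshold:
            fair += 1
        else:
            unfair += 1

    total = satisfied + unsatisfied
    if total == 0:
        return 0.0  # no interactions yet: assume zero tolerance
    satisfaction = satisfied / total
    fairness = fair / (fair + unfair)
    # Assumed form: weighted sum of fairness and satisfaction.
    return weight * fairness + (1.0 - weight) * satisfaction
```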

- Construction of Blacklist

Based on the numbers of satisfactory and unsatisfactory services and the tolerance introduced earlier, terminal users will add malicious devices to the blacklist with a certain probability. The probability that end user adds cooperative service device to the blacklist can be expressed by Equation (23).

Here, , , and represent the numbers of satisfactory and unsatisfactory services provided by cooperative service device to end user , respectively. indicates the tolerance level of the end user for unsatisfactory services.

- Whitelist Mechanism

In the personalized black- and whitelist mechanism, end user adds trustworthy collaborative service devices to the whitelist and interacts with them. Let represent the whitelist of end user . The whitelist mechanism is influenced not only by the number of satisfactory and unsatisfactory services but also by the degree of acceptance. Next, we present the definition of acceptance and the calculation process of the whitelist mechanism.

In this paper, the acceptance rate represents the degree to which end users acknowledge the satisfaction of the provided service. The larger the value of is, the higher the degree of end user acceptance is. In sociology, acceptance rate is influenced by both satisfaction and preference; thus, we introduce these two factors for cooperative service devices. The end users’ degree of satisfaction with the services provided by cooperative service devices is expressed by Equation (19). The preference degree of end user for device , denoted as , is defined as the percentage of successful interactions between them, as shown in Equation (24). The edge service provider calculates and aggregates the number of interactions.

Here, represents the number of successful interactions between end user and device ; represents the total number of interactions of end user ; represents the total number of devices providing services to end user . Given a fixed total number of interactions, an increase in interactions with a specific device indicates a preference for that device. Therefore, the degree of acceptance of satisfactory services by end user can be expressed by Equation (25).

Here, is a weight coefficient, generally set by the edge service provider.
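The preference degree and the degree of acceptance can be illustrated with a short sketch. The names `preference` and `acceptance` and the weighted-sum form of the acceptance are assumptions; the text states only that preference is the share of a user's successful interactions that involve a given device and that acceptance depends on satisfaction and preference through a weight coefficient.

```python
from typing import Dict, Hashable


def preference(successes_by_device: Dict[Hashable, int], device: Hashable) -> float:
    """Share of the user's successful interactions that involve `device`."""
    total = sum(successes_by_device.values())
    if total == 0:
        return 0.0
    return successes_by_device.get(device, 0) / total


def acceptance(satisfaction: float, pref: float, weight: float) -> float:
    """Assumed form: weighted sum of satisfaction and preference."""
    return weight * satisfaction + (1.0 - weight) * pref
```

With counts `{"d1": 6, "d2": 3, "d3": 1}`, device `d1` accounts for 6 of the 10 successful interactions, so its preference degree is 0.6.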

- Construction of Whitelist

Based on the numbers of satisfactory and unsatisfactory services and the acceptance rate discussed earlier, end users will add devices with honest behavior to the whitelist with a certain probability. The probability that end user adds device to the whitelist is given by Equation (26).

Here, , and represent the numbers of satisfactory and unsatisfactory services provided by device to end user , respectively. represents the degree of acceptance by end user .

3.3.2. Dynamic Whitelist Conversion Mechanism

In the personalized black- and whitelist mechanism, the behavior of collaborative service devices constantly changes. Devices on the whitelist may exploit their high trust value to deliver invalid or malicious services, while devices on the blacklist may provide good services to improve their trust value. To capture this dynamic behavior, we propose a dynamic conversion mechanism for the personalized black- and whitelist, consisting of a whitelist removal mechanism and a blacklist removal mechanism.

- Whitelist Removal Mechanism

If device is on the whitelist of end user and end user receives an invalid or malicious service from it, device will be moved from the whitelist to the normal user list with probability . The probability is related to the following four attributes:

- (1) The ratio of unsatisfactory services to total services. A larger indicates that device provides invalid services more frequently, increasing the probability of its removal from the whitelist.

- (2) To prevent device from strategically providing unsatisfactory services while maintaining a high effective service ratio, we consider the number of ineffective services provided by device . The larger is, the higher the probability is of removing device from the whitelist.

- (3) The importance of the service: If collaborative service device provides false feedback on highly important services, the probability of removing device from the whitelist increases; conversely, for less important services, this probability decreases.

- (4) Terminal user ’s sensitivity to ineffective or malicious services : A larger value indicates that user has less tolerance for ineffective or malicious services, increasing the probability of removing device . Conversely, a smaller decreases this probability.

Therefore, is defined as shown in Equation (27).

Specifically, represents the set of transactions where terminal user is dissatisfied with services from equipment at time , while represents the set where user is satisfied. Here, is a single unsatisfactory service between devices, is a single satisfactory service, and the function represents the importance of the service.

- Blacklist Deletion Mechanism

Suppose that cooperative service device is on the blacklist of end user . Considering that device may improve its untrustworthy behavior to enhance its trustworthiness, end user will periodically calculate the probability of removing device from the blacklist. The probability depends on the following three attributes:

- (1) Neighbor ’s attitude towards device : denotes that neighbor has blacklisted device ; indicates placement on the regular list; indicates the whitelist, with and defined accordingly. The more favorable neighbor ’s attitude is towards device , the higher the probability is that end user will remove from the blacklist.

- (2) Evaluation reliability between end user and neighbor : A higher means neighbor has a greater influence on .

- (3) End user ’s aversion to invalid or malicious services : A higher value makes it less likely for device to be removed from the blacklist.

In summary, is defined as shown in Equation (28).

Here, represents the set of neighbor devices of terminal user . The aversion degree can be obtained through statistical analysis of user ’s history, calculated by Equation (29).

In summary, the dynamic mechanism for converting between blacklists and whitelists is presented in Algorithm 5.

Based on the personalized black- and whitelist and the dynamic conversion mechanism, the proposed collaborative service device selection algorithm is presented in Algorithm 6.

| Algorithm 5 Black- and whitelist dynamic conversion mechanism |

| Input: end user ’s blacklist , end user ’s whitelist , general list
Output: end user ’s blacklist after conversion , end user ’s whitelist after conversion , general after conversion
1: , ,
2: if then
3: is calculated according to Equation (27).
4:
5: if
6:
7:
8: else
9: The co-serving device continues to remain in the set
10: end if
11: end if
12: if then
13: is calculated according to Equation (28).
14: if
15:
16:
17: else
18: The co-serving device continues to remain in the set
19: end if
20: end if
21: return , , |
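The list conversion in Algorithm 5 can be sketched as a probabilistic move between sets. The sketch makes several assumptions: the removal probabilities of Equations (27) and (28) are supplied as hypothetical callables `p_leave_white` and `p_leave_black`, every listed device is checked in one pass, and a device removed from the blacklist is placed on the general list (the text states this destination only for whitelist removal).

```python
import random
from typing import Callable, Hashable, Optional, Set, Tuple

Prob = Callable[[Hashable], float]


def convert_lists(blacklist: Set[Hashable],
                  whitelist: Set[Hashable],
                  general: Set[Hashable],
                  p_leave_white: Prob,
                  p_leave_black: Prob,
                  rng: Optional[random.Random] = None,
                  ) -> Tuple[Set[Hashable], Set[Hashable], Set[Hashable]]:
    """Return updated (blacklist, whitelist, general) sets after one conversion pass."""
    rng = rng or random.Random()
    new_black, new_white, new_general = set(blacklist), set(whitelist), set(general)

    for device in whitelist:
        # Whitelisted device is demoted to the general list with its removal probability.
        if rng.random() < p_leave_white(device):
            new_white.discard(device)
            new_general.add(device)

    for device in blacklist:
        # Blacklisted device is released to the general list (assumed destination).
        if rng.random() < p_leave_black(device):
            new_black.discard(device)
            new_general.add(device)

    return new_black, new_white, new_general
```

Passing the probabilities as callables keeps the sketch independent of the exact form of Equations (27) and (28).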

| Algorithm 6 Collaborative service device selection algorithm based on personalized black- and whitelist |

| Input: end user ’s blacklist , end user ’s whitelist , the set of cooperative service devices for the service request is named, the cumulative trust value of the cooperative service device ,
Output: The chosen cooperative service device
1:
2:
3: if
4: return −1
5: else
6:
7: if
8: The cooperative service device is selected for execution with a probability of .
9: else
10: The cooperative service device is selected for execution with a probability of .
11: end if
12: end if |
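A possible reading of Algorithm 6 is sketched below. Only the overall flow is taken from the text (blacklisted devices are avoided, whitelisted devices are prioritized, and −1 is returned when no candidate remains). The function name `select_device` and the choice of sampling in proportion to the aggregated trust value are assumptions, because the selection probabilities are not recoverable from the algorithm listing.

```python
import random
from typing import Dict, Hashable, Optional, Sequence, Set, Union


def select_device(candidates: Sequence[Hashable],
                  blacklist: Set[Hashable],
                  whitelist: Set[Hashable],
                  trust: Dict[Hashable, float],
                  rng: Optional[random.Random] = None) -> Union[Hashable, int]:
    """Pick one cooperative service device, or return -1 if none is eligible."""
    rng = rng or random.Random()

    # Never interact with blacklisted devices.
    allowed = [d for d in candidates if d not in blacklist]
    if not allowed:
        return -1

    # Prefer whitelisted devices; fall back to the remaining candidates.
    preferred = [d for d in allowed if d in whitelist]
    pool = preferred or allowed

    # Assumed rule: sample in proportion to the aggregated trust value.
    weights = [max(trust.get(d, 0.0), 0.0) for d in pool]
    if sum(weights) == 0:
        return rng.choice(pool)
    return rng.choices(pool, weights=weights, k=1)[0]
```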

3.4. Resource Allocation Mechanism of Trusted Collaborative Service Devices

After selecting collaborative service devices using the personalized whitelist- and blacklist-based selection algorithm, trustworthy service providers were identified. To address resource allocation among the selected service devices, this paper proposes a collaborative service device resource allocation mechanism. The proposed resource allocation mechanism includes two parts: grouping rules and a coalition game.

- Grouping Rules

First, the collaborative service devices in the whitelist are grouped. The grouping condition is determined by a grouping function calculated from the degree of activity and the aggregated trust value.

- Degree of Activity

For a given whitelist set , let denote the effective number of interactions of all cooperating service devices. Let represent the effective number of interactions of cooperative service device . Clearly, . Therefore, the ratio of device ’s effective interactions to the total effective interactions in the whitelist is defined as the activity degree of device . The degree of activity is shown in Equation (30).

- Aggregate Trust Values

The aggregated trust value is the cumulative value of the direct and indirect trust of the cooperative service device at time . Details can be found in Algorithm 3.

In conclusion, the grouping function of cooperative service devices within the whitelist at time is presented in Equation (31).

Here, is the scaling factor, .

When the condition is satisfied, device is included in set ; otherwise, it is added to set , where is a hyperparameter. Regarding devices in set , since their effective interaction times and aggregated trust values are relatively high, the devices are sorted in descending order based on the grouping function values. Devices with higher values are allocated resources preferentially. For devices in set , resource allocation is carried out using a coalition game method.
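The grouping rules can be sketched as follows. The activity degree follows the ratio defined for Equation (30). The grouping score is assumed here to be a convex combination of activity and aggregated trust with scaling factor `beta`, and devices are assumed to enter the priority group when the score reaches the hyperparameter `threshold`; neither the exact form of Equation (31) nor the direction of the comparison is confirmed by the text.

```python
from typing import Dict, Hashable, List, Tuple


def activity_degrees(effective_interactions: Dict[Hashable, int]) -> Dict[Hashable, float]:
    """Each device's share of the effective interactions in the whitelist."""
    total = sum(effective_interactions.values())
    return {d: (n / total if total else 0.0) for d, n in effective_interactions.items()}


def group_devices(effective_interactions: Dict[Hashable, int],
                  trust: Dict[Hashable, float],
                  beta: float,
                  threshold: float) -> Tuple[List[Hashable], List[Hashable]]:
    """Split whitelisted devices into a priority group and a coalition-game group."""
    activity = activity_degrees(effective_interactions)
    # Assumed grouping function: convex combination of activity and trust.
    score = {d: beta * activity[d] + (1.0 - beta) * trust[d] for d in activity}

    # Priority group: sorted by descending score, served first.
    priority = sorted((d for d in score if score[d] >= threshold),
                      key=lambda d: score[d], reverse=True)
    # Remaining devices are handled by the coalition game.
    game_group = [d for d in score if score[d] < threshold]
    return priority, game_group
```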

- Coalition game

The coalition game model in this paper is defined as a triple . The game players are the devices in set . Assuming there are players, the set of players at time is . In the coalition game, players calculate their utility values based on Equation (15). The utility value of each coalition is the sum of the utility values of its members. The payoffs of all coalitions constitute the utility value set of the game. The utility function is given in Equation (32).

Among these parameters, denotes the delay, while represents the energy consumption. For detailed formulations regarding the calculation of delay and energy consumption, please refer to reference [24].

The coalition structure represents the set of coalitions formed by all sub-coalitions at time . Players can form between 1 and coalitions, depending on circumstances. The coalition structure is represented by set , where indicates the sub-coalitions at time . Subsequently, the concept of alliance gaming is adopted to form alliances among collaborative service devices. As the number of devices in an alliance grows, a device may have fewer available resources or weaker processing capacity compared to others. As a result, this device obtains less revenue and may leave the current alliance to form a new sub-alliance. Similar to reference [25], we define the coalition adjustment rules for collaborative service devices by establishing preference relations.

Definition 1.

For a cooperative service device within set , the preference relation on the set of all coalitions it may form possesses completeness, reflexivity, and transitivity.

Proof of Definition 1.

Since the objective of coalition selection for cooperative service devices in set is to maximize utility and ensure each device has a non-negative utility value, the preference relation in Equation (33) holds for any cooperative service device where .

According to Equation (33), if the total utility becomes greater in sub-alliance than in when cooperative service device joins at time , and no other device in or receives a negative utility due to this change, then device is more inclined to join . □

The main aim of forming a coalition of collaborative service devices within set is to achieve a high utility value. Each collaborative service device in the coalition game can decide whether to join or leave a specific coalition based on its preference relation and utility value.

Definition 2.

Given the alliance set at time , if cooperative service device decides to withdraw from its current sub-alliance and join another sub-alliance , resulting in a new alliance partition .

Proof of Definition 2.

If, at time , a coalition adjustment by a cooperative service device in set can significantly enhance the system’s overall utility and does not cause other devices’ utility to become negative, then this adjustment should be implemented. The goal of the coalition game is to determine a sub-coalition partition scheme that maximizes the total utility of cooperative service devices, rather than the utility of individual devices. Additionally, the coalition game ensures that each participating cooperative service device receives appropriate remuneration. □

Definition 3.

At time , if no sub-alliance adjustment rules are satisfied, the cooperative service devices and sub-alliances in set form a stable structure.

Proof of Definition 3.

According to Definition 2, the alliance structure will continuously adjust and change. Through continuous iterative search, the alliance structure will keep adjusting until the stability rules are satisfied, resulting in the optimal solution for the overall system. It is evident that the optimal solution is the final stable alliance structure, corresponding to the final allocation scheme of cooperative service devices in set at time . □

- Resource Allocation Algorithm for Trusted Cooperative Service Devices

With the introduction of grouping rules and the coalition game, the resource allocation algorithm for trusted cooperative service devices is derived, as shown in Algorithm 7. Resources are allocated according to the proportional fairness criterion. Allocation plan satisfies proportional fairness; any other allocation plan does not satisfy that all members are positive at the same time. This criterion ensures that the benefit obtained by each member of the alliance is proportional to its contribution or demand, avoiding excessive exploitation or preferential treatment of some members.

| Algorithm 7 The Resource Allocation Algorithm for Trusted Cooperative Service Devices |

| Input: Whitelist set, valid interaction records of collaborative service devices included in the whitelist, probability of being included in the whitelist, the maximal number of iterations ,
Output: Resource allocation outcomes for devices within the whitelist collection.
1: Calculate the value of the grouping function for each device within the whitelist set in accordance with Equation (31).
2: for do
3: if then
4:
5: else
6:
7: end if
8: end for
9: Randomly initialize the structure of the coalition within the set and .
10: for do
11: for do
12: if adhere to the rules stipulated in Definition 2 then
13: The coalition adjustment is conducted, a new coalition is formed, and the device joining sequence is recorded.
14: else
15: The coalition structure remains unaltered.
16: end if
17:
18: if Definition 3 remains valid or then
19: break
20: end if
21: end for
22: end for
23: For the devices in the set , the grouping function value is sorted in descending order by means of the quick sort algorithm to form the allocation result.
24: For the devices within the set , the device resources are allocated in accordance with the game outcomes of the coalition game. In the process of resource allocation, the proportional fairness criterion is used.
25: return whitelist of device resource allocation outcomes. |
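The coalition adjustment loop of Algorithm 7 can be sketched as a switch-based search. The sketch is illustrative only: the utility function is passed in as a hypothetical callable rather than computed from delay and energy as in Equation (32), the search starts from singleton coalitions instead of a random structure, and a move is accepted only if it strictly increases total utility while leaving no device with negative utility.

```python
from typing import Callable, Dict, Hashable, List, Sequence, Set

Utility = Callable[[Hashable, Set[Hashable]], float]


def _groups(assign: Dict[Hashable, int]) -> Dict[int, Set[Hashable]]:
    groups: Dict[int, Set[Hashable]] = {}
    for device, label in assign.items():
        groups.setdefault(label, set()).add(device)
    return groups


def _evaluate(assign: Dict[Hashable, int], utility: Utility):
    """Return (total utility, smallest individual utility) of a partition."""
    groups = _groups(assign)
    values = [utility(d, groups[label]) for d, label in assign.items()]
    return sum(values), min(values)


def form_coalitions(devices: Sequence[Hashable], utility: Utility,
                    max_iter: int = 100) -> List[Set[Hashable]]:
    assign = {d: i for i, d in enumerate(devices)}  # start from singletons
    next_label = len(devices)                       # label of a fresh, empty coalition
    for _ in range(max_iter):
        changed = False
        for d in devices:
            best_total, _ = _evaluate(assign, utility)
            best_label = assign[d]
            for label in sorted(set(assign.values()) | {next_label}):
                if label == assign[d]:
                    continue
                trial = dict(assign)
                trial[d] = label
                total, worst = _evaluate(trial, utility)
                # Switch rule: higher total utility and no negative member.
                if total > best_total and worst >= 0:
                    best_total, best_label = total, label
            if best_label != assign[d]:
                assign[d] = best_label
                next_label += 1
                changed = True
        if not changed:  # no device wants to move: stable structure
            break
    return list(_groups(assign).values())
```

Because every accepted move strictly increases the total utility and the number of partitions is finite, the loop terminates, which mirrors the convergence argument given for the algorithm.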

- Convergence of the algorithm

Theorem 1.

The cooperative service device resource allocation algorithm is guaranteed to reach a final stable solution in set , and this solution is composed of multiple disjoint coalitions.

Proof of Theorem 1.

Each cooperative service device in set at time can perform coalition adjustment operations and generate new sub-coalitions according to the preference relations and the coalition adjustment rule in Definition 2. According to the coalition adjustment rule, Equation (35) holds since it can be transformed from to only when the system utility is improved.

Since the number of cooperative service devices in set is limited, the number of new schemes generated by each adjustment operation is also limited, so the cooperative service device resource allocation algorithm can ensure that the coalition reaches the final stable scheme . □

- Algorithm stability

To analyze the stability of the final solution obtained after the collaborative service device resource allocation algorithm converges, we first give the definition of a Nash equilibrium.

Definition 4.

For an instant coalition structure , is Nash equilibrium if and only if both pairs hold, where .

Theorem 2.

The final solution obtained from the cooperative service device resource allocation algorithm in set is a Nash equilibrium.

Proof of Theorem 2.

The proof is by contradiction. Assume that the final scheme obtained by the cooperative service device resource allocation algorithm in set is not a Nash equilibrium. Then, at time , there must exist a cooperative service device in set and sub-coalitions and such that . This would inevitably change the coalition structure, which contradicts the assumption that is the final scheme. Therefore, the final solution obtained by the cooperative service device resource allocation algorithm in set must be a Nash equilibrium. □

- Time Complexity of the algorithm

Theorem 3.

The time complexity of the resource allocation algorithm for cooperative service devices is polynomial.

Proof of Theorem 3.

In each iteration, the resource allocation algorithm selects only one collaborative service device in set , calculates the total utility value of the selected device in its current coalition and in the candidate coalition, and decides whether to perform the coalition adjustment according to the preference relation. If the adjustment is performed, the selected device moves from its current coalition to the new sub-coalition. Since the number of coalition adjustment operations in each iteration is, at most, , and the maximum number of iterations is , the algorithm has computational complexity in the worst case in set . Quicksort is used to sort the devices in set ; assuming that there are devices, the worst-case time complexity of quicksort is . In summary, the time complexity of the resource allocation algorithm for cooperative service devices is polynomial. □

4. Results and Discussion

4.1. Experimental Setting

The simulation models the random distribution of 1000 users within a coverage area of radius 1 km. Five predefined trusted collaborative service devices were used. Without loss of generality, we assumed all users had the same tolerance and acceptance. According to references [26,27], typically, is employed. The system contains 2000 different services. Initially, each end user has an average of 15 services. Without loss of generality, we set all service transactions to have the same level of importance. We introduced 1000 rounds of initial interactions to establish trust between end users and collaborative service devices. The busy ratios of collaborative devices (PCDs) were set at 10%, 20%, 40%, and 100%, representing idle, busy, highly busy, and extremely busy states of the IoT edge computing system, respectively.

We denote the number of service requests initiated by ordinary end users by , and the number of effective services they obtained by . In the experiments, the trust model’s performance was measured by the effective service acquisition rate, denoted as . For convenience, we abbreviated bad-mouthing and good-mouthing attacks as Model A, ballot-stuffing attacks as Model B, and selective behavior attacks as Model C. Since bad-mouthing and good-mouthing attacks are similar, we classified them as one type. We considered the completion of simple tasks in a collaborative FIoT edge computing scenario. To ensure experimental authenticity, the network topology in our simulations was randomly generated. All experiments were run on a Windows 10 machine with an Intel Core i5-4590 3.3 GHz processor and 8 GB of memory.

The experimental setup is summarized in Table 1.

Table 1.

Simulation experiment settings.

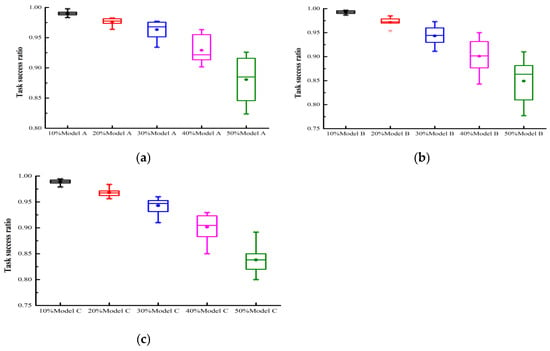

4.2. Model Validation

We first evaluated the model’s performance under single attack behaviors. To assess how well the trust mechanism ensures quality of service, the experiments cover: (1) attack models A to C; (2) malicious user proportions ranging from 10% to 50%; and (3) the effective service acquisition rate under three experimental settings with PCD values from 10% to 100%. In Figure 2 and Figure 3, the user collaboration degrees were set to 100% and 10%, respectively. The former represents an extremely busy FIoT edge computing system, while the latter indicates an idle system.

Figure 2a examines the impact of attack model A under varying proportions of malicious users. The experimental results reveal that: (1) The accumulation of trust evaluation information allowed the proposed trust mechanism to precisely evaluate each user’s trust degree. Consequently, the system’s effective service acquisition rate gradually increased over time. The improvement was more pronounced in scenarios with a high proportion of malicious users. (2) In scenarios with 10%, 20%, 30%, 40%, and 50% malicious users, the proposed trust mechanism achieved effective service acquisition rates of 99.3%, 97.9%, 97.7%, 95.5%, and 92.6%, respectively, at the 10,000th interaction. These results demonstrate the proposed model’s effectiveness in guaranteeing the quality of service in collaborative FIoT edge computing systems.

Figure 2b,c examine the impact of attack models B and C, respectively, under varying proportions of malicious users. Similar to Figure 2a, there was a gradual improvement in model performance over time. With 50% malicious users under attack models B and C, the proposed model achieved an effective service acquisition rate of approximately 90% after 10,000 interactions, demonstrating its robustness against different malicious attacks.

In collaborative edge computing FIoT systems with a collaboration degree of 10%, the model could not accurately assess each user’s trustworthiness due to limited trust feedback. Consequently, as shown in Figure 3a–c, the system performance oscillated repeatedly over time, and gradual improvement could not be achieved. In systems with collaboration degrees of 20% and 40%, the model’s performance fell between that of PCD = 10% and PCD = 100%; these results are therefore omitted to save space.

Figure 2.

Task success ratio under different single attack models with PCD = 100%: (a) attack model A; (b) attack model B; (c) attack model C.

Figure 3.

Task success ratio under different single attack models with PCD = 10%: (a) attack model A; (b) attack model B; (c) attack model C.

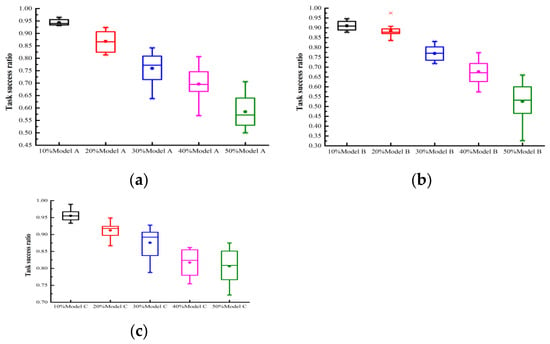

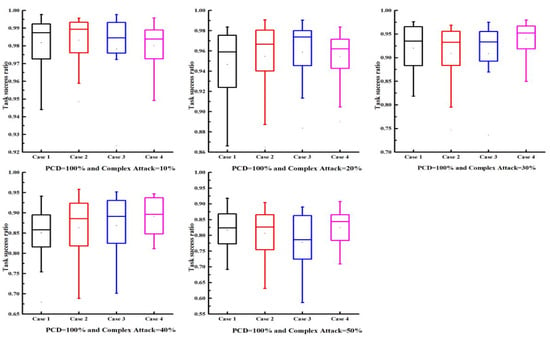

In FIoT scenarios, multiple types of malicious users may coexist. Therefore, to further investigate the effectiveness and robustness of the proposed trust model, we considered the influence of complex attacks involving multiple attack models on the trust mechanism’s performance. The experimental setup for the complex attack scenarios was as follows: (1) malicious user proportions were 10%, 20%, 30%, 40%, and 50%; (2) PCD values were 10%, 20%, 40%, and 100%; (3) to study the impact of different proportions of complex attack models on the task success rate, four complex attack scenarios were considered:

Scenario 1: Each malicious user randomly selected models A, B, or C with equal probability (1/3 each).

Scenario 2: Each malicious user selected models A, B, or C with probabilities of 15%, 60%, and 25%, respectively, making model B predominant.

Scenario 3: Each malicious user selected models A, B, or C with probabilities of 60%, 25%, and 15%, respectively, making model A predominant.

Scenario 4: Each malicious user selected models A, B, or C with probabilities of 25%, 15%, and 60%, respectively, making model C predominant.
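The four complex attack scenarios can be written down as a small configuration from which each malicious user draws its attack model. The probabilities are those listed above; the names `SCENARIOS` and `assign_attack_models` are illustrative and are not taken from the paper's simulator.

```python
import random
from typing import List, Optional

# Probability of each attack model (A, B, C) per scenario, as listed in the text.
SCENARIOS = {
    1: {"A": 1 / 3, "B": 1 / 3, "C": 1 / 3},
    2: {"A": 0.15, "B": 0.60, "C": 0.25},
    3: {"A": 0.60, "B": 0.25, "C": 0.15},
    4: {"A": 0.25, "B": 0.15, "C": 0.60},
}


def assign_attack_models(n_malicious: int, scenario: int,
                         rng: Optional[random.Random] = None) -> List[str]:
    """Draw one attack model per malicious user for the given scenario."""
    rng = rng or random.Random()
    probs = SCENARIOS[scenario]
    return rng.choices(list(probs), weights=list(probs.values()), k=n_malicious)
```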

The results are presented in Figure 4 and Figure 5. As the proportion of malicious users increased, the task success rate was expected to decline; however, the decline was relatively slow, suggesting that the trust model demonstrated robust performance. As PCD increased (i.e., more transaction data), the task success rate also increased. Particularly, when PCD = 100%, the average task success rate could reach 80% even with 50% malicious users, indicating the efficacy of the proposed model. The proposed trust model also achieved similar results under varying proportions of complex attack models, verifying its robustness against complex attacks. In conclusion, the proposed trust model maintained good performance even under complex attack scenarios.

Figure 4.

Task success ratio under different complex attack models with PCD = 10%.

Figure 5.

Task success ratio under different complex attack models with PCD = 100%.

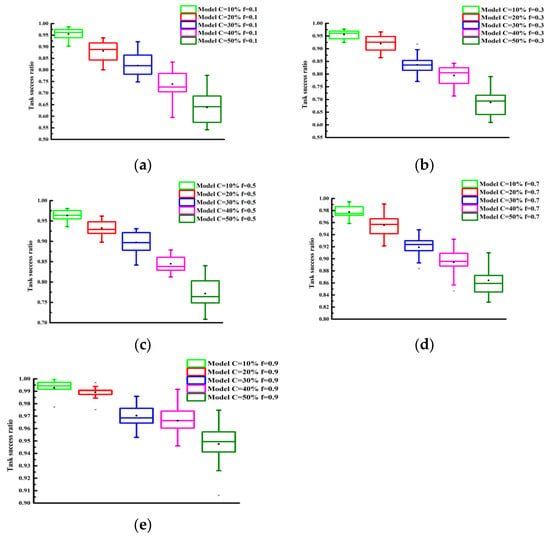

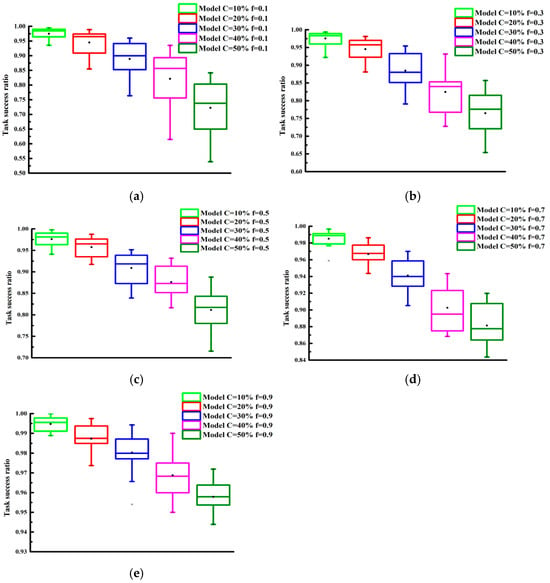

We further examined the model’s performance under selective behavior attacks. The parameter was varied from 10% to 90% in increments of 10%. The experimental results are presented in Figure 6 and Figure 7. The task success rate increased as increased. Additionally, for the same value, the more malicious nodes were present, the lower the task success rate was.

Figure 6.

Task success ratio under different values with PCD = 40%: (a) (b) (c) (d) (e) .

Figure 7.

Task success ratio under different values with PCD = 100%: (a) (b) (c) (d) (e) .

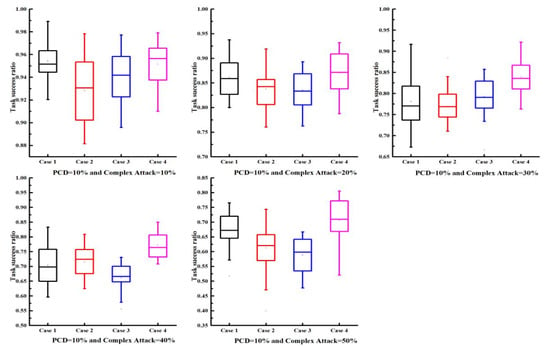

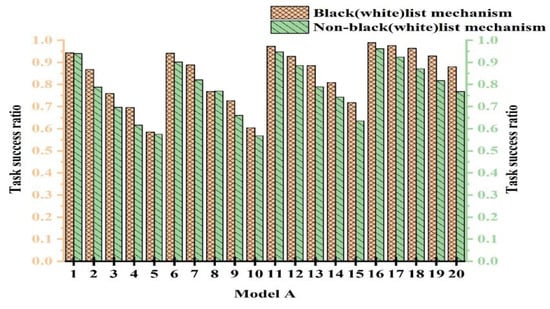

4.3. Effectiveness of the Black- and Whitelist Mechanism

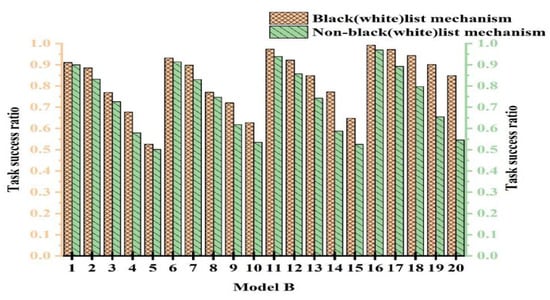

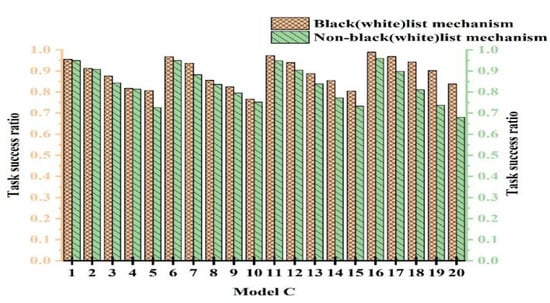

To validate the efficacy of the black- and whitelist mechanism, we conducted comparative experiments with and without its use. To ensure fairness, multiple experiments were conducted to determine parameter values. The experiments were categorized into 20 cases. The cases from 1 to 5 represent experiments with PCD = 10% and attack model proportions of 10%, 20%, 30%, 40%, and 50%, respectively. The cases from 6 to 10 were with PCD = 20% and attack model proportions of 10%, 20%, 30%, 40%, and 50%, respectively. Figure 8, Figure 9, and Figure 10 show the experimental results for Attack Models A, B, and C, respectively.

Figure 8.

Task success ratio with the black- and whitelist mechanism under Attack Model A.

Figure 9.

Task success ratio with the black- and whitelist mechanism under Attack Model B.

Figure 10.

Task success ratio with the black- and whitelist mechanism under Attack Model C.

From Figure 8, Figure 9, and Figure 10, we observe that performance with the black- and whitelist mechanism surpassed that without it, and the advantage became more pronounced as the proportion of attack models increased. In Figure 8, only the case with PCD = 20% and an attack model proportion of 30% showed the same result with and without the black- and whitelist mechanism; in all other cases, the mechanism achieved better results. The combined mechanism improved the task success rate by 1.7% to 14.6% under 50% attack conditions. Performance margins were limited in low-attack scenarios due to baseline saturation.

This is because when resources were abundant and the proportion of attack models was small, most services using the black- and whitelist mechanism interacted with devices on the whitelist. This is similar to users not employing the mechanism. Consequently, both approaches exhibited a high success rate, higher than the outcomes without any mechanism. However, when resources were limited and the proportion of attack models was large, the black- and whitelist mechanism played a crucial role. Malicious nodes were blacklisted and prohibited from interaction, thereby enhancing the success rate. In contrast, without the black- and whitelist mechanism, the success rate significantly decreased due to extensive attacks. Based on the experimental results, we conclude that the black- and whitelist mechanism was effective in resisting malicious attacks.

4.4. Trust Model’s Resistance to Attacks

Figure 11 presents the performance comparison of the proposed model with TCM [28], EigenTrust [29], and GroupTrust [30]. EigenTrust and GroupTrust were selected because distributed P2P technology is similar to the edge computing model. GroupTrust extends EigenTrust, and our proposed model further extends the P2P concept to the collaborative edge computing scenario. Therefore, we compared them to determine whether our proposed algorithm outperforms these existing algorithms.

Figure 11.

Task success ratio comparison with different trust models: (a) PCD = 10%; (b) PCD = 10% and PCD = 20%; (c) PCD = 20% and PCD = 40%; (d) PCD = 40% and PCD = 100%; (e) PCD = 100%.

We chose TCM because it is a novel trust mechanism for FIoT systems. It is an adaptive model based on information entropy theory that calculates a device’s global trust value from local evaluation information.

In the scenario with PCD = 10% and attack model B, the results are shown in Figure 11a,b. When the proportion of malicious users was 10%, 20%, 30%, and 40%, the proposed model demonstrated the best performance, with task completion rates of 91.026%, 88.501%, 76.990%, and 67.677%, respectively. However, with 50% malicious users, the proposed model performed slightly worse than the GroupTrust model due to randomness from insufficient evaluation information. As the proportion of malicious users increased, the system performance gradually declined.

With PCD = 20% and attack model B, the number of user interactions increased significantly. The results are shown in Figure 11b,c. Compared to the other three models, the proposed model achieved the best performance with malicious user proportions of 20%, 30%, 40%, and 50%. However, due to insufficient trust evaluation information, the proposed model could not accurately assess user credibility and achieved a slightly lower task completion rate than the GroupTrust model when malicious users were at 10%. Compared to PCD = 10%, more trust evaluation information was available at PCD = 20%, leading to some improvement in the system’s task completion rate.

With PCD = 40% and attack model B, user interactions were more frequent. The results are shown in Figure 11c,d. With increased trust evaluation information, the proposed model evaluated user credibility more accurately and achieved the best performance under all malicious user proportions. Compared to PCD = 20%, the system’s task success rate was further improved.

With PCD = 100% and attack model B, the edge computing system was extremely busy. The results are shown in Figure 11d,e. Similar to PCD = 40%, the proposed model achieved the best performance under all malicious user proportions. Compared to PCD = 40%, the system’s task completion rate was significantly improved. Even with 50% malicious users, the proposed model achieved a task completion rate of 84.907%. Figure 11 only shows results for attack model B; similar results were obtained for attack models A and C.

4.5. Performance Evaluation of Resource Allocation Algorithms

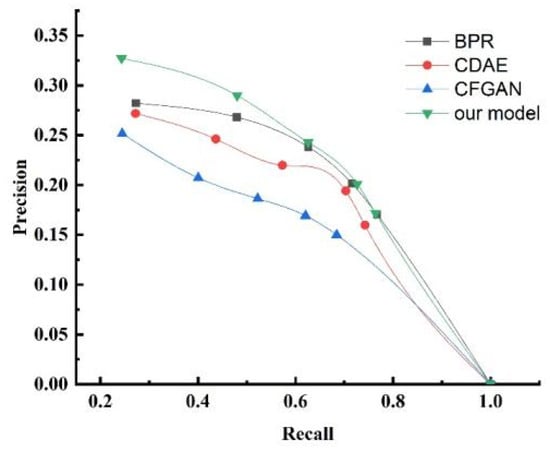

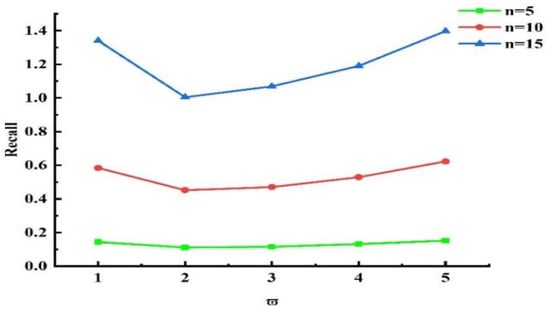

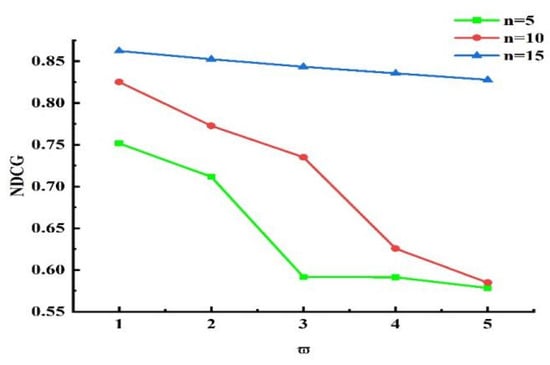

To demonstrate the performance of the proposed resource allocation algorithm, we ran comparative experiments. Because no public dataset of end-user evaluations of collaborative service devices is available, we used simulated data. Each record contained an end-user ID, a collaborative service device ID, and the service evaluation value given by that end user to that device. We simulated a total of 18,599 records from 1879 users, with service evaluation values randomly generated within a fixed range. From these evaluations, we formed a collaborative service device evaluation network. In this experiment, each algorithm generated a service device allocation list, and the top-ranked devices in the list were recommended to the user. We set the number of recommended devices to 5, 10, 15, 20, and 25. We adopted 10-fold cross-validation and used the average over the folds as the final result. The evaluation metrics were precision, recall, and normalized discounted cumulative gain (NDCG).
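The three metrics can be made concrete with a short sketch. The Python below assumes binary relevance (a device either is or is not in the user's held-out set of well-rated devices) and the common log2-discounted form of NDCG; the function name, the device IDs, and the cutoff `k` are illustrative and are not taken from the experiments.

```python
import math

def precision_recall_ndcg_at_k(ranked, relevant, k):
    """Return (precision, recall, ndcg) for the top-k entries of one ranked list.

    ranked:   device IDs ordered from most to least recommended
    relevant: set of device IDs the user actually rated well (held-out data)
    """
    top_k = ranked[:k]
    hits = [1 if device in relevant else 0 for device in top_k]
    n_hits = sum(hits)

    precision = n_hits / k
    recall = n_hits / len(relevant) if relevant else 0.0

    # DCG discounts a hit at 0-based rank i by log2(i + 2).
    dcg = sum(h / math.log2(i + 2) for i, h in enumerate(hits))
    # Ideal DCG: all relevant devices placed at the top of the list.
    idcg = sum(1 / math.log2(i + 2) for i in range(min(len(relevant), k)))
    ndcg = dcg / idcg if idcg > 0 else 0.0
    return precision, recall, ndcg

# Hypothetical example: five recommended devices, three relevant ones.
ranked = ["d3", "d7", "d1", "d9", "d4"]
relevant = {"d3", "d1", "d8"}
p, r, n = precision_recall_ndcg_at_k(ranked, relevant, 5)
```

In a 10-fold setup, these per-user values would be averaged over users within each fold and then over the folds.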

- Performance Evaluation of Resource Allocation Algorithms

To validate the performance of the proposed algorithm, we conducted comparative experiments using the BPR, CDAE, and CFGAN algorithms.

BPR [31]: This algorithm employs Bayesian analysis to derive the maximum a posteriori estimate. It learns the parameters of the underlying MF and KNN models by stochastic gradient descent and then ranks and assigns the devices accordingly.

CDAE [32]: This algorithm utilizes denoising autoencoders to perform sorting and assignment.

CFGAN [33]: This algorithm adopts a collaborative filtering framework based on generative adversarial networks, effectively a modified version of the GAN algorithm.
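To illustrate the pairwise idea behind the BPR baseline, the following is a minimal sketch of one stochastic gradient step for BPR with a matrix-factorization scorer. It is not the configuration used in the experiments: the latent dimension, learning rate `lr`, regularization `reg`, and the toy factor values are all assumptions chosen for illustration.

```python
import math

def bpr_sgd_step(P, Q, u, i, j, lr=0.05, reg=0.01):
    """One gradient-ascent step on ln(sigmoid(x_uij)) minus L2 regularization.

    P: user factor vectors, Q: device factor vectors (lists of lists).
    u prefers device i over device j; x_uij = P[u] . (Q[i] - Q[j]).
    Returns x_uij as computed before the update.
    """
    pu, qi, qj = P[u][:], Q[i][:], Q[j][:]  # copies of the pre-update values
    x_uij = sum(a * (b - c) for a, b, c in zip(pu, qi, qj))
    g = 1.0 / (1.0 + math.exp(x_uij))  # d/dx ln(sigmoid(x)) = 1 - sigmoid(x)
    for f in range(len(pu)):
        P[u][f] += lr * (g * (qi[f] - qj[f]) - reg * pu[f])
        Q[i][f] += lr * (g * pu[f] - reg * qi[f])
        Q[j][f] += lr * (-g * pu[f] - reg * qj[f])
    return x_uij

# Toy factors (hypothetical): one user, two devices, two latent dimensions.
P = [[0.1, -0.2]]
Q = [[0.05, 0.1], [-0.1, 0.05]]
x_before = bpr_sgd_step(P, Q, u=0, i=0, j=1)
for _ in range(100):
    x_after = bpr_sgd_step(P, Q, u=0, i=0, j=1)
```

Repeating the step on the same (user, preferred, other) triple widens the score margin between the two devices, which is what drives the final ranking.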

To ensure fairness in the comparative experiments, we set the parameters of the aforementioned algorithms according to their respective literature or experimental results.

Under these parameter settings, the comparison algorithms achieved optimal performance. For the BPR algorithm: parameters and ; for the CDAE algorithm: parameters , , , and ; for the CFGAN algorithm: parameter .

The experimental results are presented in Tables 2–6. As the number of allocated service devices increased, precision decreased for every algorithm, but the decline of the proposed algorithm was smaller than that of the other algorithms. Conversely, recall increased for every algorithm, and the increase of the proposed algorithm was larger than that of the other algorithms. To better illustrate the algorithm’s performance, we plotted the precision–recall curve, shown in Figure 12. The curve of the proposed algorithm encloses a larger area, suggesting that it balances precision and recall better than the other three algorithms. The NDCG index gradually increased as the number of allocated service devices increased. Relative to the BPR algorithm, which had the highest NDCG among the comparison algorithms, the NDCG gain of the proposed algorithm reached 30.68% and then shrank as the number of allocated devices increased, falling to 3.34%. This indicates that the ranking quality of the recommendations improved steadily and that the proposed algorithm outperformed the other algorithms. In conclusion, compared with the BPR, CDAE, and CFGAN algorithms, the proposed approach generated a superior allocation scheme.
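The two comparisons used above, the area enclosed by a precision–recall curve and the relative NDCG gain over a baseline, can be computed as in the sketch below. The trapezoidal rule and the helper names are assumptions made for illustration, and the sample points are hypothetical rather than values from the tables.

```python
def pr_curve_area(points):
    """Trapezoidal area under a precision-recall curve.

    points: iterable of (recall, precision) pairs, one per list length.
    """
    pts = sorted(points)  # order the points by recall
    area = 0.0
    for (r0, p0), (r1, p1) in zip(pts, pts[1:]):
        area += (r1 - r0) * (p0 + p1) / 2.0
    return area

def relative_gain_percent(proposed, baseline):
    """Relative improvement of `proposed` over `baseline`, in percent."""
    return (proposed - baseline) / baseline * 100.0

# Hypothetical (recall, precision) points, NOT the reported results.
curve_a = [(0.10, 0.50), (0.20, 0.40), (0.30, 0.30)]
curve_b = [(0.10, 0.40), (0.20, 0.30), (0.30, 0.20)]
area_a = pr_curve_area(curve_a)
area_b = pr_curve_area(curve_b)
gain = relative_gain_percent(0.5, 0.4)
```

Areas are only comparable when the curves span the same recall range, as the two hypothetical curves here do.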

Table 2.

Comparison results of the algorithms when .

Table 3.

Comparison results of the algorithms when .

Table 4.

Comparison results of the algorithms when .

Table 5.

Comparison results of the algorithms when .

Table 6.

Comparison results of the algorithms when .

Figure 12.

Precision–recall curve.

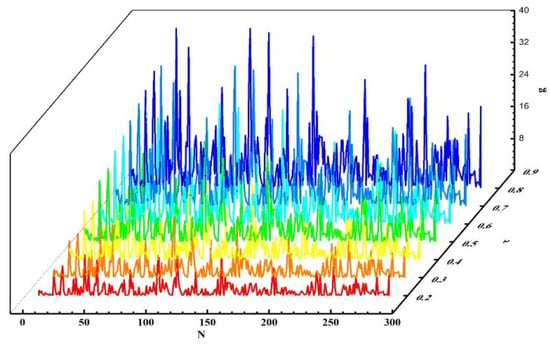

- Impact of Parameters on the Resource Allocation Model

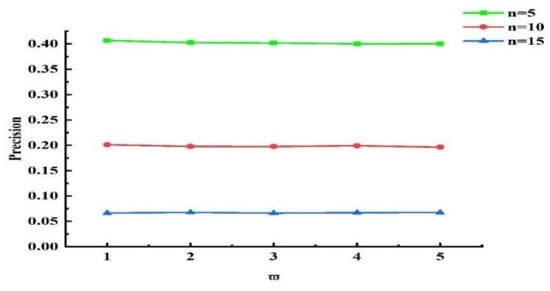

Next, experiments were conducted to validate the influence of the grouping threshold on the algorithm’s outcomes. With the other values held unchanged, we varied the grouping threshold and recorded the results. The results for precision, recall, and NDCG are presented in Figure 13, Figure 14, and Figure 15, respectively. Under otherwise identical settings, as the grouping threshold increased, precision declined, recall rose, and NDCG dropped. This indicates that the smaller the threshold is, the more data are placed into the direct allocation set. Devices with more effective interactions and higher trust values are more likely to be assigned, resulting in higher precision. As the threshold increased, the data in the direct allocation set decreased, and the number of devices directly allocated decreased, leading to decreases in precision and NDCG.

We then examined the impact of the parameter values on the grouping outcomes. Keeping the coefficient fixed at 0 and the other values unchanged, we varied the parameter over 0.2, 0.4, 0.6, and 0.8. The experimental findings are presented in Figure 16. Different parameter values had different effects on the grouping function value. As the weight of activity degree increased, the grouping function value changed significantly. When the grouping value was positive, the grouping function value gradually rose as the parameter value increased. This indicates that services offering more effective interactions and possessing higher trust values were more likely to enter the direct allocation set and be allocated, whereas services that provided less effective interactions and had lower trust values had difficulty entering it.
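The threshold effect described above can be illustrated with a small sketch. The linear score used here (a weighted sum of activity degree and trust value) is only an assumption standing in for the paper's grouping function, and the names `split_by_threshold`, `weight`, and `threshold` as well as the device values are hypothetical.

```python
def split_by_threshold(devices, weight, threshold):
    """Split devices into a direct allocation set and the remainder.

    devices: dict mapping device ID -> (activity, trust), both in [0, 1]
    weight:  weight of the activity degree (assumed linear combination)
    """
    direct, remaining = [], []
    for dev_id, (activity, trust) in devices.items():
        # Assumed stand-in for the grouping function value.
        score = weight * activity + (1.0 - weight) * trust
        if score >= threshold:
            direct.append(dev_id)
        else:
            remaining.append(dev_id)
    return direct, remaining

# Hypothetical devices: (activity degree, trust value).
devices = {"d1": (0.9, 0.8), "d2": (0.4, 0.7), "d3": (0.2, 0.3)}
low, _ = split_by_threshold(devices, weight=0.5, threshold=0.4)
high, _ = split_by_threshold(devices, weight=0.5, threshold=0.7)
```

Under this assumption, raising the threshold can only shrink the direct allocation set, which matches the qualitative behaviour reported for the figures.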

Figure 13.

Effect of on the Precision index.

Figure 14.

Effect of on the Recall index.

Figure 15.

Effect of on the NDCG index.

Figure 16.

Experimental results with different values.

5. Conclusions and Future Works

This paper makes three contributions. First, we proposed a dynamic trust mechanism that combines subjective and objective trust to address two problems in existing trust mechanisms: subjective trust mechanisms cannot select from a wide range of interaction objects, which reduces their performance, and objective indirect trust mechanisms ignore the individual perception evaluations of end users. The proposed mechanism incorporates subjective direct trust, objective indirect trust, and an aggregate trust mechanism. Second, to address the problem of selecting trusted devices among collaborative service devices, we proposed a selection mechanism based on a collaborative filtering dynamic blacklist and whitelist. Third, after selecting the trusted devices, we proposed an allocation algorithm based on coalition game theory to allocate the resources of the cooperative service devices. Experimental results demonstrate that the proposed trust mechanism effectively promotes cooperation among multiple devices, resists malicious attacks more reliably, and is suitable for FIoT edge computing systems.

In future work, we plan to conduct targeted experiments involving Sybil attack and Denial-of-Service (DoS) attack models. The Sybil attack, characterized by the creation of multiple fake identities to distort trust evaluation, poses a significant threat to the integrity of trust-based systems. Meanwhile, DoS attacks exploit resource exhaustion vulnerabilities to disrupt system availability. By simulating these attack scenarios, we aim to further evaluate the robustness and resilience of our trust management and resource allocation framework. Moreover, we intend to test the system using real-world datasets or on actual testbeds, such as edge computing platforms or IoT environments, to validate its practical effectiveness. To adaptively respond to dynamic threats and fluctuating environments, we will also integrate an adaptive learning mechanism. This mechanism will dynamically adjust key parameters—such as trust thresholds, activity weights, and coalition grouping factors—based on real-time feedback and historical data. This enhancement is expected to significantly improve the system’s adaptability, stability, and overall decision-making accuracy.

Author Contributions

B.W. conceived the study, performed the experiments, analyzed the data, prepared all figures and tables, wrote the main manuscript text, and reviewed drafts of the paper. J.W. and M.L. reviewed and critiqued the manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

All data for this article can be obtained by email from the corresponding author.

Conflicts of Interest

Authors Bo Wang and Jiesheng Wang were employed by the company Julong Co., Ltd. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Yang, X.; Zeng, Z.; Liu, A.; Xiong, N.N.; Zhang, S. ADTO: A Trust Active Detecting-based Task Offloading Scheme in Edge Computing for Internet of Things. ACM Trans. Internet Technol. 2024, 24, 1–25.

- Sun, Y.; Jiang, W.; Yang, Y.; Zhu, H.; Jiang, Y. Multi-domain authorization and decision-making method of access control in the edge environment. Comput. Netw. 2023, 228, 109721.

- Li, J.; Zhang, H.; Li, S.; Cheng, L.; Guo, Y.; Wu, S. BD-TTS: A blockchain and DRL-based framework for trusted task scheduling in edge computing. Comput. Netw. 2024, 251, 110609.

- Bounaira, S.; Alioua, A.; Souici, I. Blockchain-enabled trust management for secure content caching in mobile edge computing using deep reinforcement learning. Internet Things 2024, 25, 101081.

- Geng, J.; Qin, Z.; Jin, S. Dynamic Resource Allocation for Cloud-Edge Collaboration Offloading in VEC Networks With Diverse Tasks. IEEE Trans. Intell. Transp. Syst. 2024, 25, 21235–21251.

- Mageswari, U.; Deepak, G.; Santhanavijayan, A.; Mala, C. The IoT resource allocation and scheduling using elephant herding optimization (EHO-RAS) in IoT environment. Int. J. Inf. Technol. 2024, 16, 3283–3293.

- Mostafa, N.; Shdefat, A.Y.; Al-Arnaout, Z.; Salman, M.; Elsayed, F. Optimizing IoT Resource Allocation Using Reinforcement Learning. In Proceedings of the International Conference on Advanced Engineering, Technology and Applications, Catania, Italy, 24–25 May 2024; pp. 736–747.

- Elgendy, I.A.; Zhang, W.-Z.; Liu, C.-Y.; Hsu, C.-H. An efficient and secured framework for mobile cloud computing. IEEE Trans. Cloud Comput. 2018, 9, 79–87.

- Kang, J.; Yu, R.; Huang, X.; Wu, M.; Maharjan, S.; Xie, S.; Zhang, Y. Blockchain for secure and efficient data sharing in vehicular edge computing and networks. IEEE Internet Things J. 2018, 6, 4660–4670.

- Liu, J.; Li, C.; Luo, Y. Efficient resource allocation for IoT applications in mobile edge computing via dynamic request scheduling optimization. Expert Syst. Appl. 2024, 255, 124716.

- Zhou, Y.; Ge, H.; Ma, B.; Zhang, S.; Huang, J. Collaborative task offloading and resource allocation with hybrid energy supply for UAV-assisted multi-clouds. J. Cloud Comput. 2022, 11, 42.

- Deng, S.; Xiang, Z.; Zhao, P.; Taheri, J.; Gao, H.; Yin, J.; Zomaya, A.Y. Dynamical resource allocation in edge for trustable internet-of-things systems: A reinforcement learning method. IEEE Trans. Ind. Inform. 2020, 16, 6103–6113.

- Liu, X.; Yu, J.; Wang, J.; Gao, Y. Resource allocation with edge computing in IoT networks via machine learning. IEEE Internet Things J. 2020, 7, 3415–3426.

- Xu, J.; Xu, Z.; Shi, B. Deep reinforcement learning based resource allocation strategy in cloud-edge computing system. Front. Bioeng. Biotechnol. 2022, 10, 908056.

- Feng, X.; Zhu, X.; Han, Q.-L.; Zhou, W.; Wen, S.; Xiang, Y. Detecting vulnerability on IoT device firmware: A survey. IEEE/CAA J. Autom. Sin. 2022, 10, 25–41.

- Deng, Z.; Guo, Y.; Han, C.; Ma, W.; Xiong, J.; Wen, S.; Xiang, Y. AI agents under threat: A survey of key security challenges and future pathways. ACM Comput. Surv. 2025, 57, 1–36.