A Metric Learning-Based Improved Oriented R-CNN for Wildfire Detection in Power Transmission Corridors

Abstract

1. Introduction

- (i)

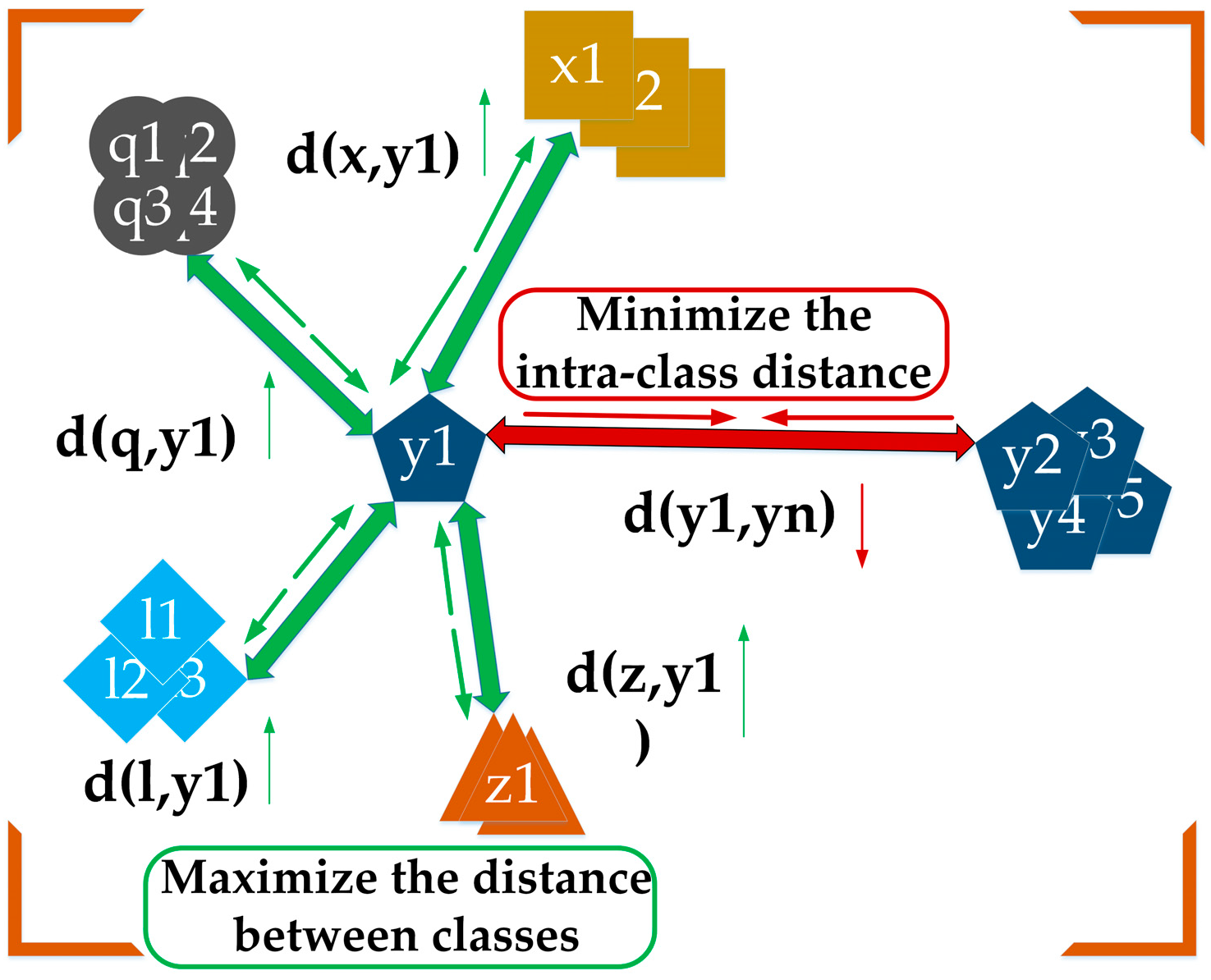

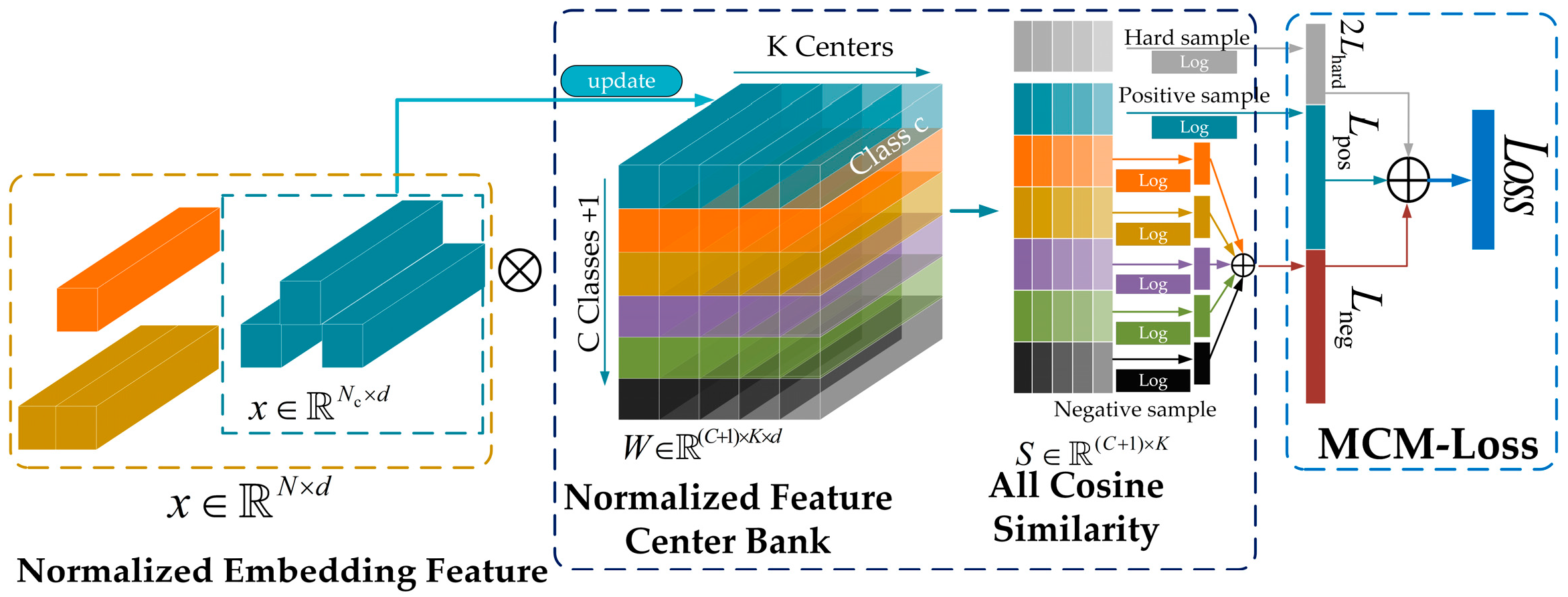

- A multi-feature center metric loss (MCM-Loss) module based on metric learning was proposed to enhance the model’s ability to distinguish between smoke and visually similar background samples. This effectively reduced false positives and missed detections and improved the overall recognition accuracy for smoke targets.

- (ii)

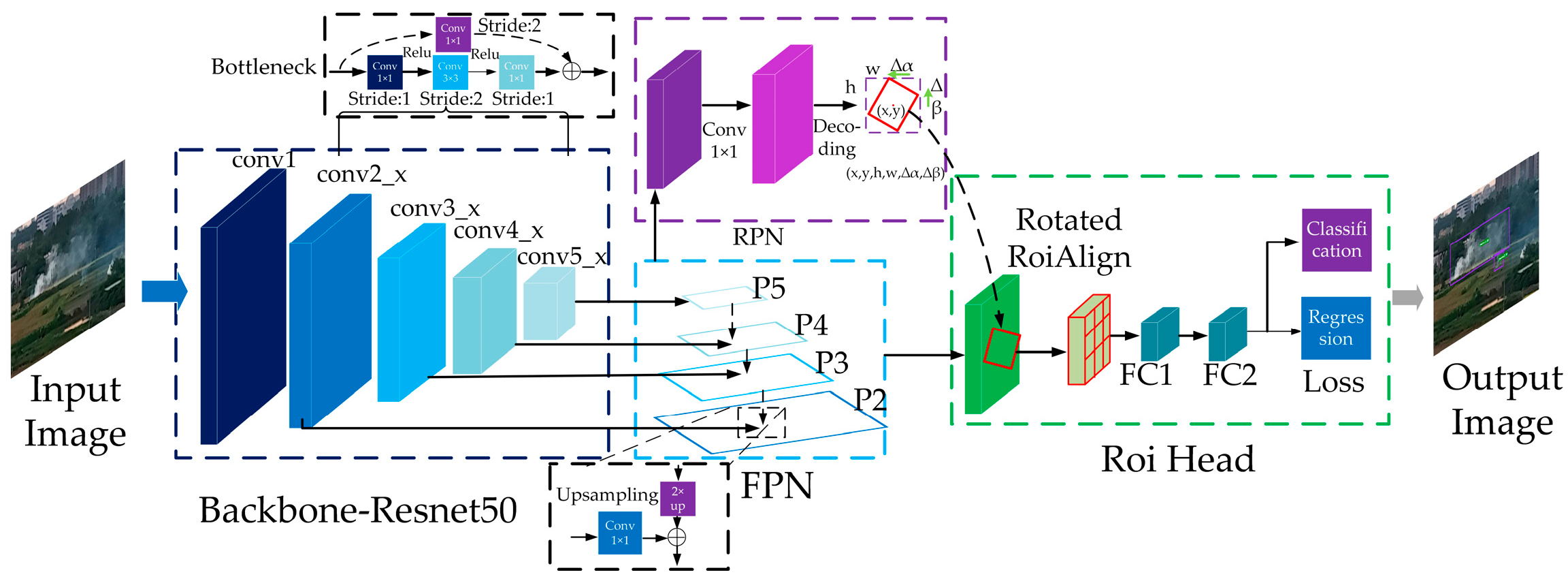

- The original ResNet backbone in the Oriented R-CNN was replaced with the group convolution network ResNeXt, which expanded the channel capacity for feature extraction without increasing model complexity, thereby enhancing the model’s performance in detecting flames and smoke with complex shapes and varying morphologies.

- (iii) An FPN-CARAFE structure was proposed by integrating the content-aware up-sampling operator CARAFE into the traditional feature pyramid network (FPN), which improved multi-scale feature fusion and preserved fine-grained information, leading to more accurate detection of small and irregular wildfire targets.
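The idea behind contribution (i), pulling a sample's features toward the center of its own class while keeping them away from the centers of visually similar classes, can be illustrated with a generic center-based metric loss. The sketch below is only an assumption about the general family of losses that MCM-Loss belongs to; it is not the authors' formulation, and the function names, the margin value, and the two-dimensional toy features are hypothetical.

```python
import math


def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def center_metric_loss(features, labels, centers, margin=1.0):
    """Generic center-based metric loss (illustrative, not the paper's MCM-Loss).

    For each sample:
      pull = squared distance to the center of its own class
      push = hinge penalty for every other class center closer than `margin`
    The result is the mean of (pull + push) over all samples.
    """
    total = 0.0
    for feat, label in zip(features, labels):
        pull = squared_distance(feat, centers[label])
        push = 0.0
        for other_label, center in centers.items():
            if other_label != label:
                dist = math.sqrt(squared_distance(feat, center))
                push += max(0.0, margin - dist)
        total += pull + push
    return total / len(features)


# Hypothetical 2-D class centers for smoke and a smoke-like background class.
centers = {"smoke": [1.0, 0.0], "background": [-1.0, 0.0]}

# A smoke sample close to the smoke center incurs a small loss.
well_separated = center_metric_loss([[0.9, 0.1]], ["smoke"], centers)

# A smoke sample lying near the background center incurs a large loss.
ambiguous = center_metric_loss([[-0.5, 0.0]], ["smoke"], centers)
```

In this toy setting the ambiguous sample is penalized far more heavily than the well-separated one, which is the behavior a metric loss relies on to separate smoke from smoke-like background during training.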

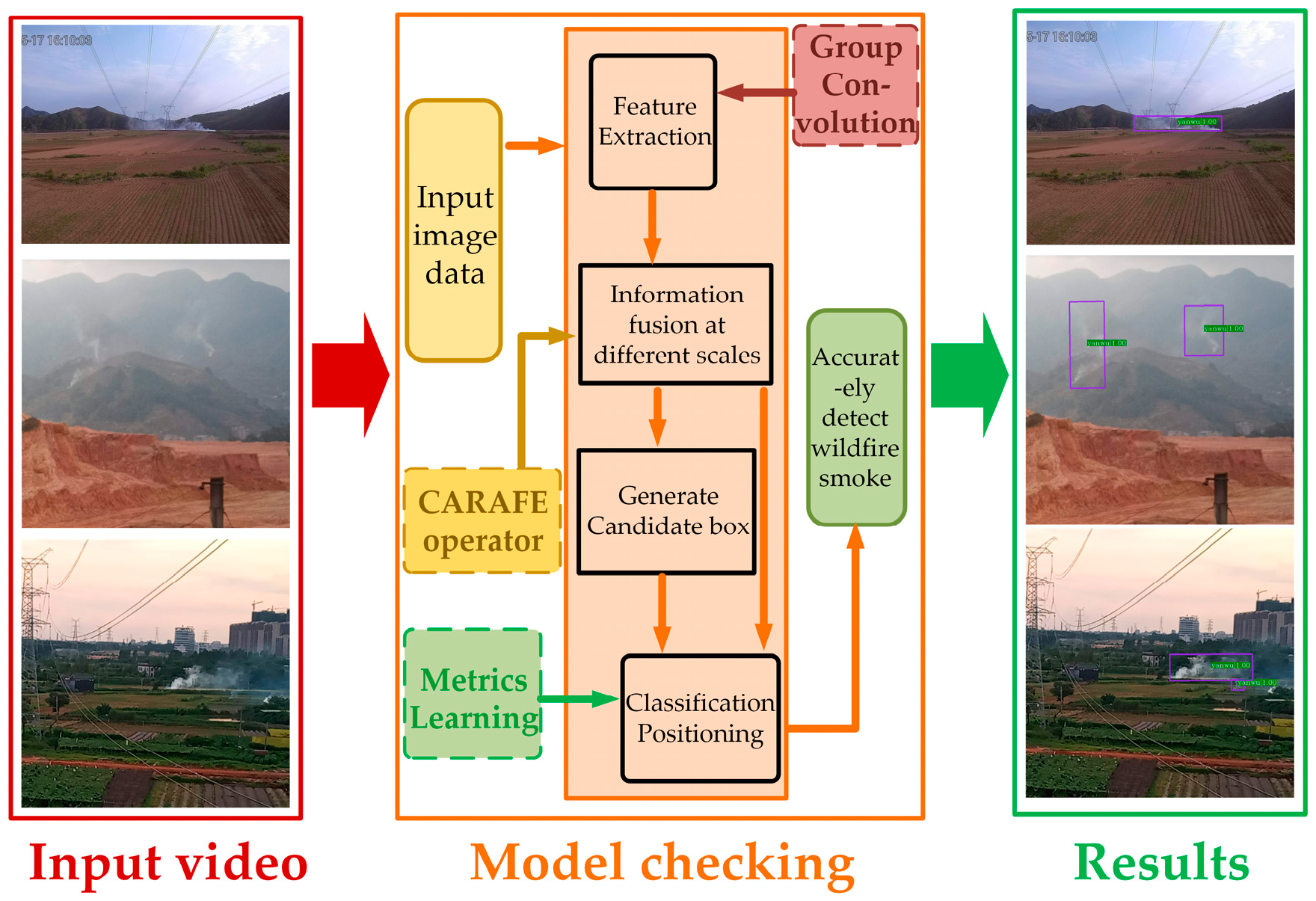
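The claim in contribution (ii), that group convolution widens the feature channels without increasing model complexity, can be checked with simple parameter arithmetic. The sketch below compares one standard ResNet bottleneck block with a ResNeXt block of cardinality 32. The channel sizes are the well-known first-stage configurations of ResNet-50 and ResNeXt-50 (32×4d); the source does not state which ResNeXt variant the authors used, so this choice is an assumption, and biases and normalization layers are ignored.

```python
def conv_params(c_in, c_out, kernel, groups=1):
    """Weight count of a 2-D convolution without bias.

    With `groups` > 1 each output channel only sees c_in / groups input channels.
    """
    if c_in % groups or c_out % groups:
        raise ValueError("channel counts must be divisible by groups")
    return (c_in // groups) * c_out * kernel * kernel


def bottleneck_params(c_io, width, groups):
    """Weights of a 1x1 -> 3x3 -> 1x1 bottleneck with a (grouped) 3x3 conv."""
    return (
        conv_params(c_io, width, 1)
        + conv_params(width, width, 3, groups)
        + conv_params(width, c_io, 1)
    )


# ResNet-style block: 256 -> 64 -> 64 -> 256, ordinary 3x3 convolution.
resnet_block = bottleneck_params(c_io=256, width=64, groups=1)

# ResNeXt-style block: 256 -> 128 -> 128 -> 256, 3x3 convolution in 32 groups.
resnext_block = bottleneck_params(c_io=256, width=128, groups=32)

print(resnet_block, resnext_block)  # 69632 70144
```

The grouped block doubles the width of the 3×3 stage (128 instead of 64 channels) while its weight count grows by less than 1%, which is the trade-off the contribution describes.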

2. The Metric Learning-Based Wildfire Detection Model for Power Transmission Corridors

2.1. A Framework for Wildfire Detection Based on Metric Learning

2.2. Overview of the Oriented R-CNN Model

2.3. Multi-Feature Center Metric Loss (MCM-Loss) Module Based on Metric Learning

2.4. Group Convolutional Structure ResNeXt Replaces the Backbone Network

2.5. Introducing the CARAFE Operator to Construct the FPN-CARAFE Layer Structure

3. Experimental Results and Analysis

3.1. Dataset Creation

3.2. Experimental Platform and Training Parameters

3.3. Evaluation Indicators and Model Training

3.4. Ablation Experiment

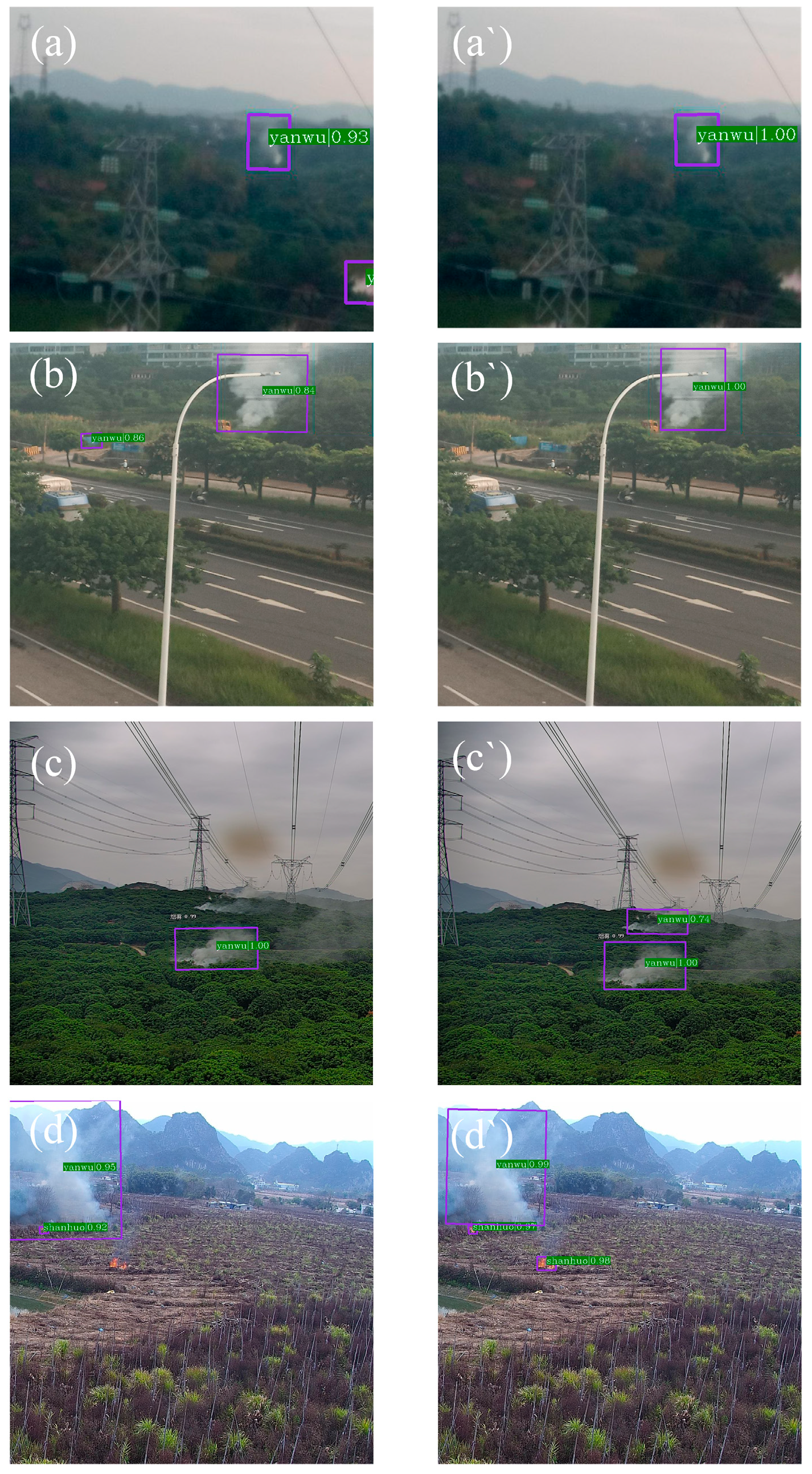

3.5. Comparative Experiment

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Dataset | Flame (Shanhuo) Number of Instances | Smoke (Yanwu) Number of Instances | Dataset Partitioning |
|---|---|---|---|
| Transmission Corridor Wildfire Dataset | 1661 | 2182 | 8:1:1 |
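An 8:1:1 partition such as the one listed in the table can be produced with a seeded shuffle. The sketch below is a minimal illustration under stated assumptions: the table does not name the three subsets (training, validation, and test are assumed here), it reports instance counts rather than image counts, and the function name, the seed, and the 1000 placeholder file names are hypothetical.

```python
import random


def split_dataset(items, ratios=(8, 1, 1), seed=0):
    """Shuffle `items` reproducibly and cut them into three parts by `ratios`.

    Any remainder from integer division ends up in the last part.
    """
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    total = sum(ratios)
    n_first = len(shuffled) * ratios[0] // total
    n_second = len(shuffled) * ratios[1] // total
    first = shuffled[:n_first]
    second = shuffled[n_first:n_first + n_second]
    third = shuffled[n_first + n_second:]
    return first, second, third


# Placeholder image names; the real dataset size is not given in the source.
images = [f"img_{i:04d}.jpg" for i in range(1000)]
train, val, test = split_dataset(images)
print(len(train), len(val), len(test))  # 800 100 100
```

Fixing the seed keeps the partition identical across runs, so results from different experiments are evaluated on the same held-out images.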

| Operating System | CPU | GPU | Memory |
|---|---|---|---|
| Ubuntu 20.04 | Dual-core Intel Xeon E5-2640v4 2.4 GHz (Intel Corporation, Santa Clara, CA, USA) | 4 × NVIDIA Tesla V100 16 GB NVLink (NVIDIA Corporation, Santa Clara, CA, USA) | 128 GB ECC DDR4 |

| Model | MCM-Loss | ResNeXt | FPN-CARAFE | P/% | R/% | AP/% Flame (Shanhuo) | AP/% Smoke (Yanwu) | mAP/% | Parameters/Million |
|---|---|---|---|---|---|---|---|---|---|
| Baseline Model | | | | 86.7 | 85.8 | 80.8 | 87.2 | 84.0 | 41.13 |
| Exp 1 | √ | | | 88.4 | 88.2 | 81.7 | 89.7 | 85.7 | 42.79 |
| Exp 2 | √ | √ | | 89.0 | 89.1 | 82.5 | 90.0 | 86.3 | 42.79 |
| Exp 3 | √ | √ | √ | 95.8 | 90.5 | 90.6 | 90.2 | 90.4 | 48.40 |
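The mAP column of the ablation results appears to be the arithmetic mean of the flame and smoke AP values, which can be checked directly against the reported numbers. The short script below does this and also computes each experiment's mAP gain over the baseline. The averaging rule is inferred from the figures rather than stated in the table, and the dictionary layout, function names, and tolerance are hypothetical.

```python
# AP and mAP values (in %) copied from the ablation results.
ABLATION = {
    "Baseline Model": {"ap_flame": 80.8, "ap_smoke": 87.2, "map": 84.0},
    "Exp 1": {"ap_flame": 81.7, "ap_smoke": 89.7, "map": 85.7},
    "Exp 2": {"ap_flame": 82.5, "ap_smoke": 90.0, "map": 86.3},
    "Exp 3": {"ap_flame": 90.6, "ap_smoke": 90.2, "map": 90.4},
}


def mean_ap(row):
    """Arithmetic mean of the two per-class AP values."""
    return (row["ap_flame"] + row["ap_smoke"]) / 2


def map_is_consistent(row, tol=0.051):
    """True if the reported mAP matches the mean AP up to one-decimal rounding."""
    return abs(mean_ap(row) - row["map"]) <= tol


consistent = {name: map_is_consistent(row) for name, row in ABLATION.items()}

baseline_map = ABLATION["Baseline Model"]["map"]
gains = {
    name: round(row["map"] - baseline_map, 1)
    for name, row in ABLATION.items()
    if name != "Baseline Model"
}

print(consistent)
print(gains)  # {'Exp 1': 1.7, 'Exp 2': 2.3, 'Exp 3': 6.4}
```

All four rows pass the consistency check, and the gains show that the largest step, from 86.3% to 90.4% mAP, comes with the final configuration that enables all three modules.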

| Model Name | P/% | R/% | AP/% Flame (Shanhuo) | AP/% Smoke (Yanwu) | mAP/% | Parameters/Million |
|---|---|---|---|---|---|---|
| YOLOv8-l | 79.9 | 76.4 | 74.5 | 77.5 | 76.0 | 43.61 |
| ReDet | 87.8 | 82.3 | 81.8 | 80.5 | 81.2 | 33.37 |
| RoI Transformer | 86.0 | 85.2 | 81.8 | 80.8 | 81.3 | 55.12 |
| Rotated Faster R-CNN | 86.5 | 86.3 | 81.9 | 87.4 | 84.7 | 41.14 |
| Swin-T RoI Transformer | 86.2 | 87.1 | 82.8 | 86.7 | 84.8 | 58.75 |
| Gliding Vertex | 89.7 | 87.9 | 83.2 | 87.2 | 85.2 | 41.16 |
| Our model | 95.8 | 90.5 | 90.6 | 90.2 | 90.4 | 48.40 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wang, X.; Wang, B.; Luo, P.; Wang, L.; Wu, Y. A Metric Learning-Based Improved Oriented R-CNN for Wildfire Detection in Power Transmission Corridors. Sensors 2025, 25, 3882. https://doi.org/10.3390/s25133882
