Abstract

Industrial image anomaly detection plays a critical role in intelligent manufacturing by automatically identifying defective products through visual inspection. While unsupervised approaches eliminate dependency on annotated anomaly samples, current teacher–student framework-based methods still face two fundamental limitations: insufficient discriminative capability for structural anomalies and suboptimal anomaly feature decoupling efficiency. To address these challenges, we propose a Synthetic-Anomaly Contrastive Distillation (SACD) framework for industrial anomaly detection. SACD comprises two pivotal components: (1) a reverse distillation (RD) paradigm whereby a pre-trained teacher network extracts hierarchically structured representations, subsequently guiding the student network with inverse architectural configuration to establish hierarchical feature alignment; (2) a group of feature calibration (FeaCali) modules designed to refine the student’s outputs by eliminating anomalous feature responses. During training, SACD adopts a dual-branch strategy, where one branch encodes multi-scale features from defect-free images, while a Siamese anomaly branch processes synthetically corrupted counterparts. FeaCali modules are trained to strip anomalous patterns from the student’s outputs in the anomaly branch, enhancing the student network’s exclusive modeling of normal patterns. We construct a dual-objective optimization integrating cross-model distillation loss and intra-model contrastive loss to train SACD for feature alignment and discrepancy amplification. At the inference stage, pixel-wise anomaly scores are computed through multi-layer feature discrepancies between the teacher’s representations and the student’s refined outputs. Comprehensive evaluations on the MVTec AD and BTAD benchmarks demonstrate that our method is effective and superior to current knowledge distillation-based approaches.

1. Introduction

In contemporary industrial production, product quality assurance serves dual imperatives: maintaining a competitive advantage and ensuring consumer trust. The integration of automation and intelligent systems has elevated industrial visual anomaly detection to a pivotal role in optimizing manufacturing precision and operational throughput [1,2,3]. Nevertheless, conventional supervised learning paradigms encounter practical constraints in this domain, particularly stemming from the labor-intensive process of collecting and labeling defective visual data, compounded by data privacy regulations that further restrict anomaly sample availability. These operational challenges necessitate alternative methodologies for precise defect identification. Unsupervised learning has consequently emerged as a promising solution, demonstrating notable efficacy in scenarios with limited annotated data [2].

Within industrial visual inspection frameworks, unsupervised techniques enable anomaly detection and localization model development through the exclusive utilization of defect-free training samples. This paradigm shift addresses both the scarcity of anomalous exemplars and the prohibitive costs associated with manual annotation. Standard implementations employ pristine image datasets for model training, while evaluation protocols incorporate mixed sets containing both normal and anomalous instances. Current methodological approaches predominantly frame this challenge as an out-of-distribution detection problem. Recent advancements in unsupervised anomaly detection have yielded two principal technical trajectories: feature embedding-based methods [4,5,6,7] and reconstruction-based methods [8,9,10].

Reconstruction-based approaches identify anomalies through differential error analysis between original and reconstructed images [10,11]. The underlying hypothesis posits that autoencoders or generative adversarial networks achieve superior reconstruction fidelity on nominal patterns compared to anomalous regions. However, practical implementations reveal limitations in discriminative capacity as reconstruction artifacts may obscure differences between normal and defective regions [7,12]. This inherent ambiguity frequently manifests as performance instability across diverse industrial use cases.

Recent advancements in teacher–student frameworks have demonstrated notable efficacy in visual anomaly detection through hierarchical feature reconstruction. Bergmann et al. [4] pioneered this paradigm with their Uninformed Students, establishing foundational work in unsupervised anomaly recognition via teacher–student discrepancy analysis. Subsequent innovations [5,13] improve the model through systematic multi-layer feature alignment, establishing standardized protocols for multi-resolution anomaly mapping via cross-layer matching errors between architecturally symmetric teacher–student pairs.

While achieving benchmark performance, contemporary teacher–student-based anomaly detection approaches reveal several critical limitations. First, the absence of regularization mechanisms during knowledge transfer exposes the student model to overfitting. Empirical evidence suggests that even when trained exclusively on anomaly-free samples, student networks may inadvertently encode anomalous patterns through latent feature sharing with teacher representations, thereby compromising detection specificity. Second, existing implementations [14,15,16] frequently employ computationally intensive architectures to enhance accuracy, exemplified by AEKD’s dual-student configuration [14], which escalates hardware requirements and complicates industrial deployment.

To overcome these constraints, we propose a Synthetic-Anomaly Contrastive Distillation (SACD) framework. The SACD framework comprises two crucial components: (1) a reverse distillation mechanism where a frozen teacher model utilizing ImageNet-pretrained weights extracts multi-scale representations to guide a structurally inverted student network through layer-wise feature alignment, and (2) a set of feature calibration (FeaCali) modules that eliminate noise interference while retaining critical anomaly indicators in student-decoded features. This configuration preserves normal pattern learning while proactively exposing the student model to synthetic anomalies, thereby enhancing discriminative capacity against potential defects. During training, SACD pursues two objectives: it reinforces inter-model consistency in normal feature representation through contrastive distillation, and it amplifies feature discrepancies in abnormal pattern decoding via synthetic anomaly confrontation. Moreover, FeaCali modules are designed to ensure parameter-efficient alignment between student and teacher embeddings while mitigating overfitting risks.

The proposed framework employs a dual-objective loss function that orchestrates the training process through two aspects: cross-model feature alignment between the student–teacher pair and intra-model component coordination within the Siamese students. This composite loss simultaneously enforces representation consistency across network hierarchies while maintaining functional complementarity among different streams, thereby ensuring concurrent optimization of normal pattern reconstruction fidelity and abnormal feature discrimination capability. During inference, pixel-wise anomaly scores are computed via multi-scale feature deviation metrics between the teacher’s preserved knowledge and the student’s calibrated outputs. Extensive benchmark evaluations on the MVTec AD dataset [17] demonstrate the effectiveness of our proposed SACD framework. Qualitative and quantitative results show that SACD achieves superior performance in anomaly detection and localization tasks, surpassing current baseline methods in terms of detection accuracy, while achieving optimizations in model size and computational complexity.

In summary, the main contributions of this paper are as follows:

- We propose a novel Synthetic-Anomaly Contrastive Distillation framework for industrial image anomaly detection, which enhances anomalous feature decoupling while preserving normal pattern reconstruction capabilities of the student model.

- We construct a dual-objective loss function encompassing both cross-model feature alignment and intra-model component coordination, enabling hierarchical representation consistency and discrepancy amplification between the teacher and student models.

- Extensive experiments conducted on the MVTec AD and BTAD datasets demonstrate that our proposed method is effective and achieves superior anomaly detection performance with optimized model size and computational efficiency compared with current KD-based approaches.

2. Related Works

With the extensive adoption of deep learning methodologies, industrial anomaly detection techniques have progressively converged on two dominant paradigms. One approach emphasizes the identification of discrepancies by means of feature embedding, whereas the other employs reconstruction-based mechanisms to detect anomalies.

2.1. Feature Embedding-Based Methods

Methods based on feature embedding commonly employ architectures such as VGG [13], ResNet [7], or Vision Transformer [18], utilizing intermediate or final feature representations for anomaly detection and localization. In the absence of anomalous samples during training, some approaches generate synthetic anomalies from normal images to train detection models. For instance, PatchSVDD [19] segments images into uniform patches for model training, improving the detection of localized anomalies. MOCCA [20] employs autoencoders to extract multi-scale features and delineate normal feature boundaries at each layer. Similarly, CutPaste [21], a notable data augmentation method, synthesizes anomalies by cutting and pasting regions of normal images, enabling the network to differentiate between normal and anomalous patterns. Some methods map feature embeddings to tractable distributions for anomaly identification [22,23,24,25]. For example, CS-Flow [23] enhanced performance by incorporating cross-convolution blocks into normalizing flows, leveraging contextual information across scales. Similarly, CFlow-AD [24] introduced positional encoding into conditional normalizing flows, analyzing the rationale behind the multivariate Gaussian assumption and the efficiency of normalizing flows.

To improve detection, some methods store normal image embeddings in a memory bank for comparison at inference time. K-Nearest Neighbors (KNN), widely used in unsupervised anomaly detection, operates at the sample level. Inspired by KNN, SPADE [26] employs multi-resolution feature pyramids for pixel-level anomaly segmentation. PaDiM [27] models the normal class probabilistically using multivariate Gaussians, with memory bank size dependent on image resolution rather than dataset size. However, its scalability is limited by computational demands for large CNNs. PatchCore [28] significantly advanced industrial AD, excelling on the MVTec AD dataset. It uses coreset subsampling to optimize memory bank usage, reducing inference costs while maintaining high performance. Anomalies are detected by measuring distances between test samples and memory bank features. Lee et al. [29] proposed CFA, adapting features via coupled hyperspheres. Using transfer learning, it determines anomalies based on the positional relationship between test features and hypersphere surfaces in the memory bank.

Knowledge distillation methods leverage representation discrepancies between the teacher and student models for anomaly detection, demonstrating strong effectiveness. Uninformed Students [4] pioneered its application in industrial image anomaly detection, achieving notable results. Subsequent works, such as STPM [5] and MKD [13], advanced multi-scale feature transfer across model layers, albeit using different pre-trained architectures. MKD further revealed that a lighter student network outperforms one mimicking the teacher’s structure. RD4AD [12] introduced reverse distillation into the teacher–student pair with multi-scale feature fusion and bottleneck embeddings to reduce redundancy, enabling efficient feature reconstruction. AST [30] addressed the issue of similar feature extraction for anomalies by proposing an asymmetric architecture, complemented by normalizing flows to mitigate structural inconsistencies and estimation bias. Overfitting due to capacity mismatches and knowledge transfer limitations has been a challenge in prior methods. To mitigate this, some methods incorporate auxiliary measures, such as memory banks [31,32], feature consistency [33,34,35,36,37], and segmentation tasks [38,39].

2.2. Reconstruction-Based Methods

Reconstruction-based methods train encoders and decoders to reconstruct images for anomaly detection, reducing reliance on pre-trained models while enhancing anomaly detection capabilities. However, their limited ability to extract high-level semantic features often results in suboptimal image classification performance. Autoencoders [8], generative adversarial networks [40], and diffusion models [41] are widely used for this purpose, with most reconstruction networks comprising encoder–decoder architectures [8,9,42]. For example, DRAEM [8] synthesizes anomalous images using external datasets and reconstructs them into normal images, improving generalization. It further feeds original and reconstructed images into a segmentation network to predict anomalous regions, boosting anomaly segmentation accuracy. Nevertheless, DRAEM faces challenges with near-distribution anomalies. Yan et al. [9] introduced MLIR, a multi-level image reconstruction framework that treats reconstruction as a denoising task across resolutions, enabling the detection of both global structural and fine-grained anomalies. DSR [11] employs quantized feature space representation and dual decoders, avoiding image-level anomaly synthesis. By sampling the quantized feature space, DSR generates near-distribution anomalies in a controlled manner. NSA [10] achieves superior performance without external data by leveraging diverse augmentation techniques, outperforming prior methods reliant on additional datasets.

3. Method

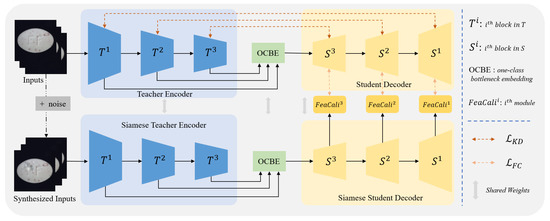

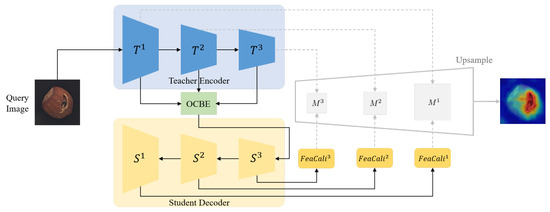

In this section, we elaborate on the overall framework of the proposed Synthetic-Anomaly Contrastive Distillation (SACD) framework, as illustrated in Figure 1. SACD comprises a Siamese reverse distillation flow and a group of feature calibration modules. During training, the teacher encoder is frozen; the weights of the one-class bottleneck embedding (OCBE) module, student decoder, and FeaCali modules are optimized via a dual-objective loss function.

Figure 1.

Overview of our proposed SACD framework.

3.1. Reverse Distillation

Reverse distillation comprises three main components: the teacher encoder, the OCBE module, and the student decoder.

3.1.1. Teacher Encoder

In unsupervised anomaly detection using knowledge distillation, constructing a robust teacher model is essential. Following Wang et al. [33], we employ a WideResNet-50 model pre-trained on ImageNet as the teacher encoder. The ResNet-like architecture, with its multiple residual blocks, enables the network to capture complex feature representations. Intermediate outputs from these blocks are treated as multi-scale feature representations of input images [5,14,33,43,44]. Based on this idea, and in line with previous work, by default, feature maps from the 1st, 2nd, and 3rd layers of WideResNet-50 are selected as learning targets for the student model. This allows the student decoder to inherit rich multi-level features, enhancing multi-scale feature reconstruction and anomaly perception.

Let $T$ denote the teacher model. For an input $I$, the multi-scale features are obtained as

$$\{F_T^1, F_T^2, F_T^3\} = T(I),$$

where $F_T^1$, $F_T^2$, and $F_T^3$ correspond to the outputs of the 1st, 2nd, and 3rd layers of the teacher encoder $T$, respectively.
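As a rough illustration of what these three feature maps look like, the sketch below computes their shapes for a square input. It assumes the standard ResNet-50 layout that WideResNet-50 follows (stage outputs with 256, 512, and 1024 channels at strides 4, 8, and 16); the function name `teacher_feature_shapes` is hypothetical and not part of the original method.

```python
def teacher_feature_shapes(image_size=256):
    """Return (channels, height, width) for the first three residual stages.

    Assumption: standard ResNet-50 layout, where the stem downsamples by 4,
    each later stage halves the resolution again, and the stage outputs have
    256, 512, and 1024 channels.
    """
    channels = [256, 512, 1024]
    strides = [4, 8, 16]
    return [(c, image_size // s, image_size // s) for c, s in zip(channels, strides)]


shapes = teacher_feature_shapes(256)
for k, shape in enumerate(shapes, start=1):
    print(f"layer {k}: {shape}")
```

Under these assumptions, a 256 × 256 input yields maps of spatial size 64, 32, and 16, which is why the student decoder must upsample between scales to match the teacher.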

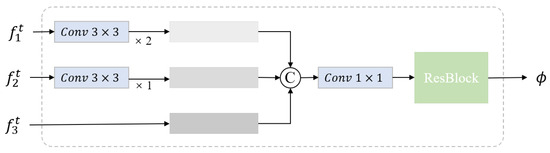

3.1.2. One-Class Bottleneck Embedding

In conventional encoder–decoder frameworks, the decoder typically relies on the final-layer output of the encoder. However, reverse distillation poses challenges when transferring high-level embeddings from the teacher encoder directly to the student decoder, as it hinders the reconstruction of fine-grained features. To mitigate this, as is presented in Figure 2, OCBE is designed to facilitate multi-scale feature fusion and dimensionality reduction through feature compaction, preserving essential information while mapping high-dimensional inputs to a lower-dimensional space. This design enhances the student decoder’s ability to interpret hierarchical features from the teacher, leading to more expressive and efficient reconstructions.

Figure 2.

Architecture of the OCBE module.

Let $\phi$ denote the fusion and compression function of the OCBE module. By integrating the three feature embeddings $F_T^1$, $F_T^2$, and $F_T^3$, the resultant representation $F_B$ can be expressed as

$$F_B = \phi(F_T^1, F_T^2, F_T^3).$$

3.1.3. Student Decoder

Upon receiving the compressed multi-scale teacher features $F_B$ (the output of the OCBE module), the student decoder is required to reconstruct feature embeddings of identical dimensions across different scales based on these features. Consequently, in the reverse distillation process, the student decoder is designed with an inverse architecture, while ensuring that the size of its output tensors remains consistent with the corresponding teacher embeddings. Specifically, the number of forward residual blocks in the student decoder is aligned with that of the teacher encoder, while the channel dimensions are adjusted via upsampling to match the embedding dimensions of the teacher model at the corresponding scales. It is worth noting that, in contrast to conventional convolutional pooling operations, the reverse process employs deconvolution for upsampling. The entire procedure can be formalized as follows:

$$\{F_S^1, F_S^2, F_S^3\} = S(F_B),$$

where $F_S^1$, $F_S^2$, and $F_S^3$ correspond to the outputs of the 1st, 2nd, and 3rd layers of the student decoder $S$, respectively.

3.2. Siamese Reverse Distillation Flow

In traditional multi-scale feature knowledge distillation frameworks, teacher–student models typically follow a one-to-one configuration, where the teacher model transfers multi-layer embedding knowledge of normal images to the student model. The student then identifies anomalous regions based on the representation differences between the teacher and student model. However, during training, the student model may overfit or develop overly strong encoding capabilities, causing it to produce representations for anomalous regions that closely resemble those of the teacher, thereby degrading anomaly detection performance. In view of this, we extend the original anomaly-free input and synthesize abnormal substitutes of normal samples using image-level anomaly synthesis. Additionally, we introduce a Siamese reverse distillation flow to encode and decode the features of both normal and synthesized abnormal inputs.

3.2.1. Normal RD Branch

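Assuming the input anomaly-free sample is denoted as $I_n$, the processing through the normal branch yields two sets of features:

$$\{F_{T,n}^1, F_{T,n}^2, F_{T,n}^3\} = T(I_n), \qquad \{F_{S,n}^1, F_{S,n}^2, F_{S,n}^3\} = S\big(\phi(F_{T,n}^1, F_{T,n}^2, F_{T,n}^3)\big),$$

where $F_{T,n}^1$, $F_{T,n}^2$, and $F_{T,n}^3$ denote the anomaly-free features encoded by the teacher model, while $F_{S,n}^1$, $F_{S,n}^2$, and $F_{S,n}^3$ represent the feature embeddings reconstructed by the student decoder from the OCBE mapping $\phi$ of the teacher features.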

3.2.2. Abnormal RD Branch

As described above, the normal branch is responsible for receiving and encoding multi-scale features of normal images. Correspondingly, the abnormal branch receives and encodes the synthesized anomalous version of the same normal image. Importantly, our goal is for the student network to exclusively encode features from the normal regions, while ensuring a significant difference in the representation of anomalous regions between the teacher and student models. In addition to the basic RD model, the anomaly branch also incorporates an anomaly synthesis module and a feature calibration module. The details of these components are described in the following subsections.

- (1)

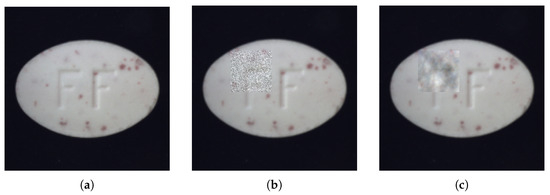

- Anomaly Synthesis

This paper assumes that normal and anomalous patterns may share some basic features, which allows the student model to reconstruct anomalies effectively. To handle this, we create anomalous versions of normal images during training. This exposes the student model to anomalies beforehand, preparing it for better detection later. Recent studies have suggested methods such as Gaussian noise [33], masking [45], and CutPaste [21] for creating anomalies. Given its effectiveness and simplicity, we use Simplex noise as our default approach. Simplex noise performs better than Gaussian noise when simulating anomalies using a power-law distribution. As shown in Figure 3, Simplex noise creates more natural-looking anomalies compared to Gaussian noise. Let $I_a$ represent the synthesized anomalous image; the full process is described in Algorithm 1.

Figure 3.

Visualization of original image and various pseudo-anomaly injections. (a) Original anomaly-free image; (b) pseudo-anomaly image with Gaussian noise; (c) pseudo-anomaly image with Simplex noise.

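Consistent with the normal branch, the RD model encodes the synthesized anomaly samples into two sets of features:

$$\{F_{T,a}^1, F_{T,a}^2, F_{T,a}^3\} = T(I_a), \qquad \{F_{S,a}^1, F_{S,a}^2, F_{S,a}^3\} = S\big(\phi(F_{T,a}^1, F_{T,a}^2, F_{T,a}^3)\big),$$

where $F_{T,a}^1$, $F_{T,a}^2$, and $F_{T,a}^3$ denote the features of the synthesized anomalous image encoded by the teacher model, while $F_{S,a}^1$, $F_{S,a}^2$, and $F_{S,a}^3$ represent the feature embeddings reconstructed by the student decoder from the OCBE mapping $\phi$ of those teacher features.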

Since the input consists of anomaly samples, the features encoded by the teacher model will contain anomalous patterns. After undergoing fusion and compression operations in the OCBE module, these features may still retain latent anomalous patterns due to the absence of an explicit mechanism to eliminate such anomalies. Consequently, the multi-scale feature embeddings reconstructed by the student model will also include latent anomaly information, which can undermine the feature matching process between the teacher and student models, thereby degrading the performance of anomaly detection.

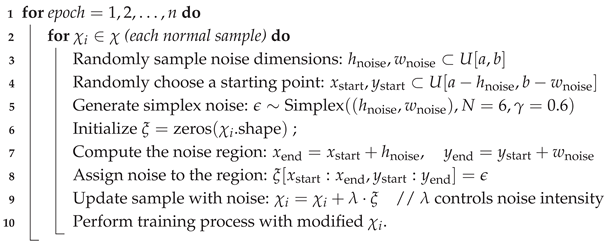

| Algorithm 1: Pseudo-code of the process of anomaly synthesis |
| --- |
| Input: normal training set, discrete range, noise parameter |
| Output: modified training set with Simplex noise |
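The synthesis step can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: interpolated lattice (value) noise is swapped in as a stand-in for true Simplex noise, the noise parameter is interpreted as an additive strength, coordinates are divided by the frequency, and the image is a grayscale nested list with values in [0, 1]. All function names are hypothetical; the octave, persistence, frequency, and region-size settings follow the defaults reported in the implementation details.

```python
import math
import random


def lattice_value(ix, iy, seed):
    """Hash integer lattice coordinates to a pseudo-random value in [0, 1)."""
    v = math.sin(ix * 12.9898 + iy * 78.233 + seed * 37.719) * 43758.5453
    return v - math.floor(v)


def value_noise(x, y, seed):
    """Bilinearly interpolated lattice noise (a simple stand-in for Simplex noise)."""
    x0, y0 = math.floor(x), math.floor(y)
    tx, ty = x - x0, y - y0
    top = (1 - tx) * lattice_value(x0, y0, seed) + tx * lattice_value(x0 + 1, y0, seed)
    bottom = (1 - tx) * lattice_value(x0, y0 + 1, seed) + tx * lattice_value(x0 + 1, y0 + 1, seed)
    return (1 - ty) * top + ty * bottom


def fractal_noise(x, y, seed, octaves=3, persistence=0.5, frequency=32.0):
    """Sum several octaves of noise and rescale the result to roughly [-1, 1]."""
    total, amplitude, norm = 0.0, 1.0, 0.0
    for octave in range(octaves):
        scale = (2 ** octave) / frequency  # assumption: coordinates divided by frequency
        total += amplitude * value_noise(x * scale, y * scale, seed + octave)
        norm += amplitude
        amplitude *= persistence
    return 2.0 * total / norm - 1.0


def synthesize_anomaly(image, strength=0.4, rng=None):
    """Add noise to one random rectangle; return (corrupted image, binary mask)."""
    rng = rng or random.Random()
    height, width = len(image), len(image[0])
    # Region height and width are drawn from (0, 0.5) of the image size.
    rh = max(1, int(rng.uniform(0.0, 0.5) * height))
    rw = max(1, int(rng.uniform(0.0, 0.5) * width))
    top = rng.randrange(height - rh + 1)
    left = rng.randrange(width - rw + 1)
    seed = rng.randrange(1000)
    corrupted = [row[:] for row in image]
    mask = [[0] * width for _ in range(height)]
    for y in range(top, top + rh):
        for x in range(left, left + rw):
            noisy = image[y][x] + strength * fractal_noise(x, y, seed)
            corrupted[y][x] = min(1.0, max(0.0, noisy))  # keep pixel values in [0, 1]
            mask[y][x] = 1
    return corrupted, mask
```

In a training loop, a routine like this would be applied to only part of each batch, so that the normal branch and the abnormal branch both receive inputs.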

- (2) Feature Calibration Module

As stated in the assumption, when the input image contains anomalies, the feature calibration (FeaCali) module is designed to filter out potential anomalous patterns from the student decoder’s outputs. This prevents performance degradation caused by anomaly leakage. To keep the module lightweight, FeaCali uses stacked convolutional blocks in a bottleneck style, including convolution, InstanceNorm, and LeakyReLU layers, where the channel dimensionality is halved progressively at each layer. After obtaining the restored feature embeddings $F_{S,a}^1$, $F_{S,a}^2$, and $F_{S,a}^3$, the FeaCali module operates at each scale, denoted as $C^1$, $C^2$, and $C^3$, respectively. Its main task is to take intermediate layer outputs as input and refine them by augmenting normal features. Through this process, $F_{S,a}^1$, $F_{S,a}^2$, and $F_{S,a}^3$ are refined into $\hat{F}_{S,a}^1$, $\hat{F}_{S,a}^2$, and $\hat{F}_{S,a}^3$. Figure 4 shows the structure of the FeaCali module. In the experiments, the FeaCali depth $L$ is set to 2.

Figure 4.

Structure of the FeaCali module.

3.3. Training Objective

We design a dual-objective loss function to train the OCBE module, student decoder, and FeaCali module. This function ensures consistent hierarchical representations between the teacher and student models while amplifying discrepancies for anomaly detection. The total loss consists of two parts: cross-model feature alignment loss, denoted as $\mathcal{L}_{CFA}$, and intra-model component coordination loss, denoted as $\mathcal{L}_{ICC}$. $\mathcal{L}_{CFA}$ transfers knowledge from the teacher model to the student model, enabling the student to replicate the teacher’s understanding of normal image features. $\mathcal{L}_{ICC}$ optimizes the FeaCali module, helping it filter out potential anomalies and reconstruct high-quality normal features. The overall loss is expressed as

$$\mathcal{L} = \mathcal{L}_{CFA} + \lambda \mathcal{L}_{ICC},$$

where $\lambda$ is a positive regularization parameter for adjusting the optimization weights of the FeaCali module.

3.3.1. Intra-Model Component Coordination Loss

After building the FeaCali module, we aim for it to enhance normal features and filter out anomalous ones. A simple approach is to use the multi-scale restored feature embeddings from the student decoder’s normal branch as ground truth to guide feature reconstruction. The loss is computed using cosine similarity to optimize the FeaCali module.

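First, the calibrated anomaly-branch features $\hat{F}_{S,a}^1$, $\hat{F}_{S,a}^2$, and $\hat{F}_{S,a}^3$, together with their normal-branch targets $F_{S,n}^1$, $F_{S,n}^2$, and $F_{S,n}^3$, are flattened from shape (B, C, H, W) to (B, $C \cdot H_k \cdot W_k$) to calculate the similarity between the two sets of features at each layer. The total loss is the sum of these layer-wise losses:

$$\mathcal{L}_{ICC} = \sum_{k=1}^{K} \left( 1 - \frac{\mathcal{F}(F_{S,n}^k)^{\top} \, \mathcal{F}(\hat{F}_{S,a}^k)}{\lVert \mathcal{F}(F_{S,n}^k) \rVert \, \lVert \mathcal{F}(\hat{F}_{S,a}^k) \rVert} \right),$$

where $\mathcal{F}(\cdot)$ represents the flattening operation, $K$ is the number of feature layers used in training, and $H_k$ and $W_k$ denote the height and width of the k-th feature map. Cosine similarity measures feature similarity, and minimizing the intra-model component coordination loss $\mathcal{L}_{ICC}$ optimizes the FeaCali module.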

3.3.2. Cross-Model Feature Alignment Loss

To transfer the teacher model’s multi-scale knowledge to the student model effectively, cosine similarity is employed as the knowledge distillation loss for knowledge transfer and feature alignment. The loss function for optimizing the OCBE module and student decoder is derived from the following equation:

$$\mathcal{L}_{CFA} = \sum_{k=1}^{K} \frac{1}{H_k W_k} \sum_{h=1}^{H_k} \sum_{w=1}^{W_k} \left( 1 - \frac{F_{T}^k(h, w)^{\top} \, F_{S}^k(h, w)}{\lVert F_{T}^k(h, w) \rVert \, \lVert F_{S}^k(h, w) \rVert} \right),$$

where $K$ denotes the number of feature layers used in training, and $H_k$ and $W_k$ represent the height and width of the k-th feature map.
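To make the two loss terms concrete, the following sketch evaluates a cosine-distance loss on small nested-list features. It is a minimal illustration, not the authors' implementation: for simplicity it applies the flattened form described for the coordination loss to both terms, works on a single sample without a batch dimension, and assumes the alignment term compares teacher and student features from the normal branch. The function names and the `lam` argument are hypothetical; the default weight 0.2 is the equilibrium coefficient reported in the implementation details.

```python
import math


def flatten(feature):
    """Flatten a nested (C, H, W) list into a flat list of floats."""
    return [v for channel in feature for row in channel for v in row]


def cosine_distance(a, b, eps=1e-8):
    """Return 1 - cosine similarity between two flat vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / max(norm_a * norm_b, eps)


def layerwise_loss(targets, predictions):
    """Sum the cosine distances over all feature layers."""
    return sum(
        cosine_distance(flatten(t), flatten(p)) for t, p in zip(targets, predictions)
    )


def total_loss(teacher_n, student_n, calibrated_a, lam=0.2):
    """Combine the alignment and coordination terms with weight lam."""
    alignment = layerwise_loss(teacher_n, student_n)        # teacher vs. student
    coordination = layerwise_loss(student_n, calibrated_a)  # normal student vs. calibrated
    return alignment + lam * coordination
```

With identical inputs both terms vanish, while fully orthogonal calibrated features contribute exactly `lam` per layer, which shows how the weight limits the influence of the coordination term.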

3.4. Inference and Anomaly Scoring

During the inference stage, the entire reverse distillation process is fully retained, and the output of the student decoder is enhanced by the FeaCali module for normal feature refinement. Given a query image $I_q$, we obtain a pair of multi-level feature embeddings from the teacher and student models, denoted as $F_T^1$, $F_T^2$, $F_T^3$ and $\hat{F}_S^1$, $\hat{F}_S^2$, $\hat{F}_S^3$, respectively. Then we calculate the anomaly score based on the difference between the outputs of the intermediate layers at corresponding positions of the teacher and student models:

$$M^k(h, w) = 1 - \frac{F_T^k(h, w)^{\top} \, \hat{F}_S^k(h, w)}{\lVert F_T^k(h, w) \rVert \, \lVert \hat{F}_S^k(h, w) \rVert},$$

where $M^k(h, w)$ refers to the anomaly score at location $(h, w)$ of the k-th feature map.

To locate anomalies in the query image, we combine anomaly prediction score maps from different scales. Intermediate layer features are upsampled to match the query image’s resolution using bilinear interpolation. The final anomaly map is computed as

$$M = G_{\sigma}\Big( \sum_{k=1}^{K} \Psi(M^k) \Big),$$

where $M$ represents the final anomaly map, $\Psi(\cdot)$ denotes bilinear upsampling to the resolution of the query image, and $G_{\sigma}$ is a Gaussian filter with standard deviation $\sigma$ to smooth noise in the map. The inference process is illustrated in Figure 5.

Figure 5.

Inference pipeline of our proposed method.
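The scoring procedure can be sketched as follows. This is a simplified illustration under stated assumptions: features are nested (C, H, W) lists for a single image, the per-layer maps are combined by summation, and the final Gaussian smoothing is omitted. The function names are hypothetical.

```python
import math


def cosine_distance_map(teacher, student, eps=1e-8):
    """Per-position 1 - cosine similarity between two (C, H, W) nested lists."""
    channels, height, width = len(teacher), len(teacher[0]), len(teacher[0][0])
    score = [[0.0] * width for _ in range(height)]
    for h in range(height):
        for w in range(width):
            t = [teacher[c][h][w] for c in range(channels)]
            s = [student[c][h][w] for c in range(channels)]
            dot = sum(a * b for a, b in zip(t, s))
            norm = math.sqrt(sum(a * a for a in t)) * math.sqrt(sum(b * b for b in s))
            score[h][w] = 1.0 - dot / max(norm, eps)
    return score


def bilinear_upsample(score, out_size):
    """Resize a 2D map to (out_size, out_size) with bilinear interpolation."""
    height, width = len(score), len(score[0])
    out = [[0.0] * out_size for _ in range(out_size)]
    for i in range(out_size):
        # Map output pixel centres back to source coordinates, clamped to the grid.
        y = min(max((i + 0.5) * height / out_size - 0.5, 0.0), height - 1.0)
        y0 = int(y)
        y1 = min(y0 + 1, height - 1)
        ty = y - y0
        for j in range(out_size):
            x = min(max((j + 0.5) * width / out_size - 0.5, 0.0), width - 1.0)
            x0 = int(x)
            x1 = min(x0 + 1, width - 1)
            tx = x - x0
            top = (1 - tx) * score[y0][x0] + tx * score[y0][x1]
            bottom = (1 - tx) * score[y1][x0] + tx * score[y1][x1]
            out[i][j] = (1 - ty) * top + ty * bottom
    return out


def anomaly_map(teacher_feats, student_feats, out_size):
    """Sum the upsampled per-layer score maps (Gaussian smoothing omitted)."""
    total = [[0.0] * out_size for _ in range(out_size)]
    for t, s in zip(teacher_feats, student_feats):
        up = bilinear_upsample(cosine_distance_map(t, s), out_size)
        for i in range(out_size):
            for j in range(out_size):
                total[i][j] += up[i][j]
    return total
```

Positions where the calibrated student output disagrees with the teacher receive scores close to 1 per layer, while matching positions stay near 0.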

4. Experiment Setting and Results

4.1. Dataset

To evaluate the performance of the proposed method for industrial anomaly detection, experiments were conducted on the widely-used MVTec AD benchmark [17] and BTAD dataset [46]. The MVTec AD dataset includes 15 sub-datasets with a total of 5354 images, of which 1725 are in the test set. Each sub-dataset consists of training data containing only normal samples and test sets that include both normal and anomalous samples. The test sets also provide various defect types along with corresponding ground truth masks for anomalies. The BTAD dataset is a practical benchmark for industrial anomaly detection, comprising 2830 images collected from real-world scenarios involving three types of industrial products. These samples exhibit various structural and surface-level defects.

4.2. Evaluation Metrics

The performance of image-level anomaly detection is evaluated using the area under the receiver operating characteristic curve (AUROC) based on generated anomaly scores. Following prior work, we calculate the class-average AUROC on the MVTec dataset. For segmentation performance, we use pixel-wise AUROC and the PRO metric. The PRO score measures the overlap and recovery of connected anomaly components, providing a more accurate evaluation for anomalies of varying sizes.
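For reference, AUROC can be computed directly from anomaly scores and binary labels through the rank-sum (Mann-Whitney U) statistic. The sketch below is a generic, dependency-free illustration with a hypothetical function name; it is not taken from the authors' evaluation code, and it assumes both classes are present in the labels.

```python
def auroc(scores, labels):
    """AUROC via the rank-sum (Mann-Whitney U) statistic; labels are 0 or 1."""
    order = sorted(range(len(scores)), key=lambda i: scores[i])
    ranks = [0.0] * len(scores)
    i = 0
    while i < len(order):
        j = i
        # Tied scores share the average of the ranks they span.
        while j + 1 < len(order) and scores[order[j + 1]] == scores[order[i]]:
            j += 1
        average_rank = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    positives = sum(labels)
    negatives = len(labels) - positives
    rank_sum = sum(r for r, y in zip(ranks, labels) if y == 1)
    return (rank_sum - positives * (positives + 1) / 2.0) / (positives * negatives)


# Image-level use: one score per test image, label 1 for anomalous images.
print(auroc([0.1, 0.4, 0.35, 0.8], [0, 0, 1, 1]))
```

The same function applies to the pixel-wise case by treating every pixel of every test image as one sample, with the ground-truth mask supplying the labels.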

4.3. Implementation Details

Following prior works, anomaly detection and localization are performed on one class at a time. Consistent with previous studies [12,14], we use a WideResNet50 pre-trained on ImageNet as the teacher model, while the student model and FeaCali module parameters are randomly initialized. The outputs of the 1st, 2nd, and 3rd ResBlocks of WideResNet50 are selected as multi-scale anomaly representations. As in [7,12,14], images are resized to 256 × 256. No data augmentation is applied during experiments. For anomaly synthesis, the noise parameter is set to 0.4 by default, and only half of each batch is used for pseudo-anomaly synthesis. The parameters for Simplex noise generation are set by default as follows: octaves = 3 to introduce multi-scale texture variation, persistence = 0.5 to balance detail retention and smoothness, and frequency = 32.0 to control the granularity of the generated anomalies. In addition, the width and height of each noise region are randomly sampled within the range of (0, 0.5) of the corresponding image’s dimensions to simulate variable anomaly shapes. The equilibrium coefficient in the loss function is set to 0.2. The model is trained using the Adam optimizer with a learning rate of 0.001 and a batch size of 16 for 200 epochs. A Gaussian filter is applied to smooth the anomaly score map.
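The settings above can be collected in a single configuration object, which is convenient when reproducing the experiments. The sketch below only restates values given in this section; the class and field names are hypothetical, and settings whose values are not stated here (for example the Adam momentum terms and the Gaussian filter width) are deliberately left out rather than guessed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SACDConfig:
    """Hyperparameters quoted in the implementation details; names are hypothetical."""

    image_size: int = 256              # images are resized to 256 x 256
    teacher_layers: tuple = (1, 2, 3)  # WideResNet50 ResBlocks used as targets
    noise_parameter: float = 0.4       # anomaly synthesis parameter
    synthetic_fraction: float = 0.5    # share of each batch that is corrupted
    octaves: int = 3
    persistence: float = 0.5
    frequency: float = 32.0
    max_region_fraction: float = 0.5   # noise region size relative to the image
    loss_weight: float = 0.2           # equilibrium coefficient of the loss
    learning_rate: float = 1e-3
    batch_size: int = 16
    epochs: int = 200

    @property
    def synthetic_per_batch(self) -> int:
        """Number of images per batch that receive a pseudo-anomaly."""
        return int(self.batch_size * self.synthetic_fraction)


config = SACDConfig()
print(config.synthetic_per_batch)
```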

4.4. Experimental Results

4.4.1. Results on MVTec AD

We compare our method with representative state-of-the-art algorithms, including Uninformed Students [4] (US), MKD [13], Patch-SVDD [19], SPADE [26], PaDiM [27], CutPaste [21], RIAD [47], RD4AD [12], MaMiNet [48], MMR [49], AEKD [14], and MSFR [16]. The results for industrial image anomaly detection and localization are presented in Table 1 and Table 2, respectively.

Table 1.

Anomaly detection results on MVTec AD [17]. For each category, the methods that achieved the top AUROC (%) are highlighted in bold. Our method ranks first based on the average scores of textures, objects, and overall performance.

Table 2.

Anomaly localization results on MVTec AD [17], reported as AUROC (%) and PRO (%). AUROC is a pixel-wise comparison, while PRO is region-based. The best result for each item is shown in bold.

Table 1 compares our method with recent approaches for anomaly detection. Our method achieves the best average performance in detecting texture and object anomalies, reaching 99.9% and 99.1%, respectively, surpassing the second-best method AEKD by 0.2% and 0.6%. Overall, our method achieves the highest anomaly detection AUROC of 99.3% across all instances. Additionally, it attains 100% AUROC for categories such as carpet, grid, leather, bottle, and toothbrush. These results highlight the effectiveness and superiority of our approach.

Compared to the native RD4AD method, SACD improves normal feature reconstruction by post-processing the student decoder’s output, reducing potential abnormal expressions. This enhances the consistency of normal feature representations and the distinction of abnormal regions between the teacher and student models. By incorporating prior anomaly pattern learning during training, the student network is better equipped to augment itself, strengthening the alignment and differentiation between the teacher and student models.

Table 2 compares the proposed method with others for anomaly localization, with each entry showing pixel-level AUROC and PRO (pixel-level AUROC/PRO). Overall, our method achieves the best results, with an average AUROC of 98.3% and PRO of 94.8% across all instances. Specifically, for pixel-level AUROC, SPADE, PaDiM, RIAD, CutPaste, RD4AD, MMR, MSFR, and AEKD are 1.8%, 0.8%, 4.1%, 0.5%, 0.2%, 1.1%, 1.0%, and 0.2% lower than ours, respectively. For PRO, SPADE, PaDiM, RD4AD, MMR, MSFR, and AEKD are 3.1%, 2.7%, 0.8%, 2.2%, 1.7%, and 0.8% lower, respectively.

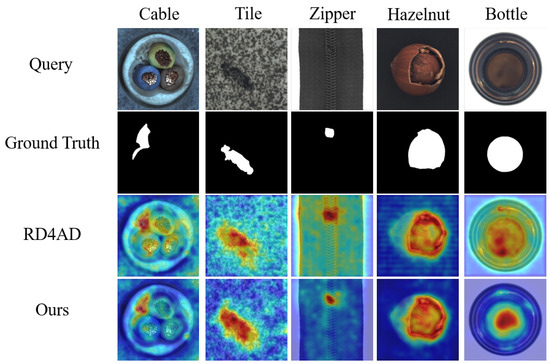

For texture anomaly detection, our method achieves 98.2% AUROC and 95.7% PRO on average. For object categories, it achieves 98.4% AUROC and 94.4% PRO. Notably, our method outperforms others in anomaly localization across nearly all categories. Compared to RD4AD, SACD shows improved localization performance. Figure 6 provides visual comparisons for representative textures and objects. Both qualitative and quantitative analyses confirm the effectiveness and advancement of our method. For pixel-level anomaly detection, enhancing representation consistency and differences between teacher and student models in the knowledge distillation framework is crucial. SACD improves normality in student outputs by introducing a synthetic anomaly prior and a group of lightweight feature calibration (FeaCali) modules during training, boosting the accuracy of anomaly localization in the reverse distillation model.

Figure 6.

Comparative visualization of anomaly detection methods on representative cases.

4.4.2. Results on BTAD

Table 3 compares the pixel-level anomaly localization performance of our proposed method with several representative approaches, including VT-ADL, FastFlow, Patch-SVDD, and RD4AD, on the BTAD dataset. As shown by the experimental results, our method achieves the best average performance across all three product classes, with a pixel-level AUROC of 97.9%, and ranks first on Class02 and Class03, reaching 97.4% and 99.8%, respectively.

Table 3.

Anomaly localization results in terms of pixel-level AUROC on BTAD [46].

Compared to the baseline reverse distillation method RD4AD, our proposed SACD framework enhances the student decoder with multi-scale feature calibration modules, and incorporates an anomaly synthesis strategy alongside an intra-model component coordination loss during training. These enhancements enable the model to deliver more accurate anomaly localization during inference, especially when processing defective samples. These results demonstrate the effectiveness of the proposed method.

4.5. Structural and Parametric Analysis

This section primarily investigates the impact of anomaly synthesis intensity, different anomaly synthesis modes, the equilibrium coefficient in the loss function, the structure of the FeaCali module, and the dependency on the pre-trained model on the proposed method’s performance. Throughout the experiments, other hyperparameters were kept at their default settings and remained fixed.

4.5.1. Complexity Analysis

To provide concrete evidence of SACD’s computational efficiency, especially in comparison with the dual-student AEKD baseline, we report the model complexity and runtime performance under various FeaCali depths L. As shown in Table 4, SACD achieves consistently strong performance across different settings, with all configurations maintaining inference latency under 4 ms and parameter counts below 166 M.

Table 4.

Comparison of model complexity and performance between SACD (under different FeaCali depths) and AEKD. Metrics include parameter count, FLOPs, inference latency per 256 × 256 image, and image-level AUROC on MVTec AD.

Interestingly, increasing the FeaCali depth from L = 1 to L = 2 results in a slight reduction in parameters (from 165.08 M to 158.9 M) and FLOPs (from 43.3 G to 39.67 G), while the AUROC improves from 99.2% to 99.3%. Further increasing L to 3 or 4 does not yield additional accuracy gains and may even lead to a slight degradation (e.g., down to 99.0%), while keeping complexity similar. These observations suggest that L = 2 represents the optimal trade-off point, i.e., the accuracy–efficiency knee, where the model achieves peak performance with minimal computational cost.

In contrast, the AEKD baseline, despite having a lower parameter count (132.92 M), shows higher inference latency (4.64 ms) and lower accuracy (98.9%), likely due to the overhead from its dual-student architecture. This confirms that SACD not only offers superior anomaly detection performance but also exhibits better computational efficiency, particularly at L = 2, validating the design of the FeaCali module.
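For readers who wish to reproduce this kind of complexity comparison, the sketch below shows one common way to obtain a parameter count and a per-image latency figure. It is a generic illustration and not the measurement protocol used for Table 4: the helper names, the warm-up and repetition counts, and the use of the median are all assumptions. On a GPU, the device would additionally need to be synchronized before each timer read.

```python
import statistics
import time


def count_parameters(shapes):
    """Total number of scalar parameters given a list of tensor shapes."""
    total = 0
    for shape in shapes:
        n = 1
        for dim in shape:
            n *= dim
        total += n
    return total


def measure_latency_ms(fn, sample, warmup=5, runs=20):
    """Median wall-clock time of fn(sample) in milliseconds.

    Warm-up calls are discarded so that one-off setup costs
    (caching, lazy initialization) do not distort the estimate.
    """
    for _ in range(warmup):
        fn(sample)
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        fn(sample)
        timings.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(timings)


# Toy usage: a 3x3 convolution with 3 input and 64 output channels, plus bias.
n_params = count_parameters([(64, 3, 3, 3), (64,)])
latency = measure_latency_ms(lambda x: sum(x), list(range(1000)))
```

Reporting the median rather than the mean makes the estimate less sensitive to occasional scheduling spikes, which matters when the latencies being compared differ by less than a millisecond.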

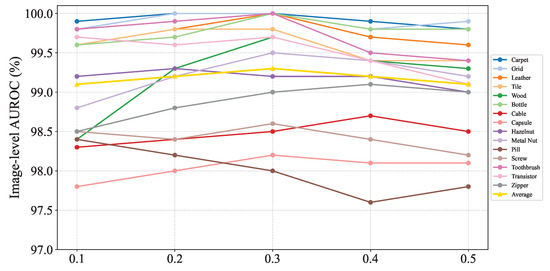

4.5.2. Effect of Pseudo-Anomaly Intensity

In real industrial scenarios, product defects vary in severity, but all defective products are classified as faulty. To simulate this, we adjusted the anomaly intensity in the synthesis method to evaluate the model’s performance in anomaly detection and localization under varying intensities. Figure 7 compares the model’s performance across different anomaly intensities. The results indicate that when the synthetic anomaly intensity is set to 0.3, the model demonstrates superior generalization for both anomaly detection and localization. However, for specific products like zipper, the model performs better with an anomaly intensity of 0.4. The optimal intensity can be selected based on task-specific requirements.
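To make the role of the intensity parameter concrete, the following sketch perturbs an image inside a mask with noise scaled by an intensity factor. It is only an illustration under explicit assumptions: the intensity is treated as a scalar multiplier on an additive noise field, Gaussian noise is swapped in for brevity, and pixel values are assumed to lie in [0, 1]. The synthesis procedure actually used is defined in Section 3.2.2 and may differ; the function name `synthesize_anomaly` is hypothetical.

```python
import random


def synthesize_anomaly(image, mask, intensity=0.3, seed=0):
    """Add intensity-scaled Gaussian noise to the masked pixels of a 2D image.

    image: nested list [H][W] with values in [0, 1] (assumed range)
    mask:  nested list [H][W] with 1 where the pseudo-anomaly is placed
    """
    rng = random.Random(seed)
    corrupted = []
    for img_row, mask_row in zip(image, mask):
        row = []
        for pixel, m in zip(img_row, mask_row):
            noise = rng.gauss(0.0, 1.0)
            value = pixel + intensity * noise * m  # unmasked pixels stay unchanged
            row.append(min(1.0, max(0.0, value)))  # clip back to the valid range
        corrupted.append(row)
    return corrupted


image = [[0.5, 0.5], [0.5, 0.5]]
mask = [[0, 1], [0, 0]]
weak = synthesize_anomaly(image, mask, intensity=0.1, seed=1)
strong = synthesize_anomaly(image, mask, intensity=0.4, seed=1)
```

With the same seed, `strong` deviates from the clean image four times as much as `weak` at the masked pixel (unless clipping intervenes), which is the kind of severity variation the experiment above sweeps over.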

Figure 7.

Influence of pseudo-anomaly intensity.

4.5.3. Comparative Study of Anomaly Synthesis Methods

There are various approaches to anomaly synthesis, and as discussed in Section 3.2.2, we focus on concise image-level anomaly synthesis strategies, including CutPaste, Gaussian noise, and Simplex noise. Table 5 presents the comparative results of these methods. It is evident from the results that Simplex noise achieves the best performance among the three methods, owing to its ability to generate more natural-looking synthetic anomalies. In contrast, CutPaste and Gaussian noise demonstrate comparable performance, with no significant distinction between them.
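Of the three synthesis modes compared here, CutPaste is the simplest to illustrate. The sketch below shows only its core idea, copying a rectangular patch from one location of a normal image and pasting it at another, and returns the corresponding pseudo-anomaly mask. Patch-size sampling, colour jitter, and rotation are omitted, and the function name and signature are hypothetical rather than taken from the paper or from any library.

```python
import random


def cutpaste(image, patch_h, patch_w, seed=0):
    """Copy a random patch of a 2D image to another random location.

    Returns the corrupted image and a binary mask of the pasted region.
    The input image is left unmodified.
    """
    h, w = len(image), len(image[0])
    if not (0 < patch_h <= h and 0 < patch_w <= w):
        raise ValueError("patch must fit inside the image")
    rng = random.Random(seed)
    src_r = rng.randrange(h - patch_h + 1)
    src_c = rng.randrange(w - patch_w + 1)
    dst_r = rng.randrange(h - patch_h + 1)
    dst_c = rng.randrange(w - patch_w + 1)

    corrupted = [row[:] for row in image]  # copy so the source stays clean
    mask = [[0] * w for _ in range(h)]
    for i in range(patch_h):
        for j in range(patch_w):
            corrupted[dst_r + i][dst_c + j] = image[src_r + i][src_c + j]
            mask[dst_r + i][dst_c + j] = 1
    return corrupted, mask


# Toy 4x4 image whose pixel value encodes its position.
image = [[r * 4 + c for c in range(4)] for r in range(4)]
corrupted, mask = cutpaste(image, patch_h=2, patch_w=2, seed=3)
```

Because the pasted content comes from the same image, the result keeps realistic local statistics but has sharp patch borders, which may be one reason smoother noise-based anomalies behave differently in the comparison above.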

Table 5.

Comparison of model performance under various anomaly synthesis modes.

4.5.4. Influence of the Equilibrium Coefficient on Loss Function Optimization

As outlined in Section 3.3, the equilibrium coefficient is a hyperparameter that controls the weight of the intra-model component coordination loss in the total loss function. A higher value increases the influence of this loss on model optimization. To determine the optimal balance, we conducted several ablation experiments on the coefficient to identify the value at which the model achieves the best performance trade-off. Table 6 presents the anomaly detection and localization results for different coefficient values.

Table 6.

Effect of the equilibrium coefficient in the loss function.

The results show that, as the equilibrium coefficient increases, the model’s performance gradually decreases. This indicates that the cross-model feature alignment loss plays a crucial role in enabling the student network to effectively learn multi-layer image feature embeddings and should dominate the loss function. In contrast, the intra-model component coordination loss is less effective at filtering anomalous information from normal features and enhancing normal feature reconstruction. From Table 6, it is evident that the model performs best when the coefficient is set to 0.2, achieving an optimal balance between the two losses during training.
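The effect of the coefficient can be illustrated with a short sketch. It assumes an additive form in which the coordination loss is scaled by the coefficient and added to the alignment loss; the exact formulation is given in Section 3.3 and may differ. The function names and the example loss values are hypothetical.

```python
def total_loss(alignment_loss, coordination_loss, coefficient=0.2):
    """Weighted sum of the two objectives (assumed additive form)."""
    if coefficient < 0:
        raise ValueError("coefficient must be non-negative")
    return alignment_loss + coefficient * coordination_loss


def coordination_share(alignment_loss, coordination_loss, coefficient=0.2):
    """Fraction of the total loss contributed by the coordination term."""
    total = total_loss(alignment_loss, coordination_loss, coefficient)
    return coefficient * coordination_loss / total if total > 0 else 0.0


# With equal raw losses, a larger coefficient shifts weight
# away from the cross-model alignment term.
shares = [coordination_share(1.0, 1.0, c) for c in (0.2, 0.5, 1.0)]
```

With equal raw loss values, the coordination term accounts for one sixth of the total at a coefficient of 0.2 and for half at 1.0, which is consistent with the observation that the alignment loss should dominate.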

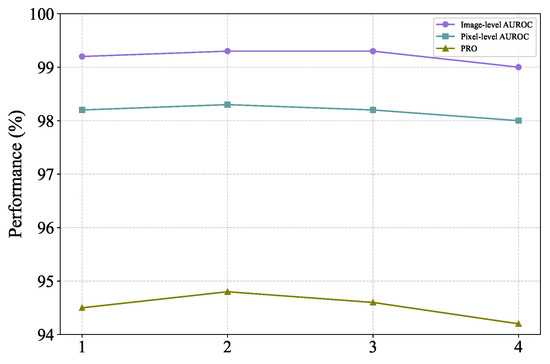

4.5.5. Effect of FeaCali Module Configuration

(1) Effect of FeaCali Depth

The FeaCali module is composed of stacked bottleneck layers. In this subsection, we investigate the impact of varying the depth of this module on the model’s performance, as illustrated in Figure 8. As the depth of the module increases gradually, the overall anomaly detection performance of the model initially improves, reaches a plateau, and subsequently begins to decline. Based on the experimental results, it can be inferred that setting the depth to 2 strikes a good balance between performance and complexity, making it a suitable choice.

Figure 8.

Comparison of model detection performance at different depths of FeaCali module.

(2) Selection of Normalization/Activation Function

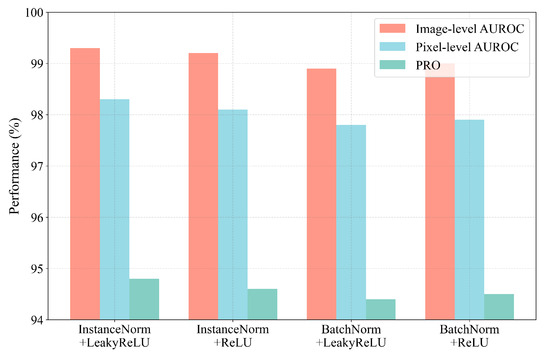

Figure 9 compares the performance of different normalization and activation function combinations in unsupervised anomaly detection tasks. It is observed that the combination of Instance Normalization and LeakyReLU consistently outperforms the others, primarily due to its superior ability to preserve local anomalous features and enhance sensitivity to subtle deviations. InstanceNorm normalizes each sample independently, thereby avoiding the inference instability associated with BatchNorm’s reliance on batch-level statistics—an issue especially prominent in small-batch or single-sample inference scenarios. Meanwhile, LeakyReLU alleviates the dead neuron problem inherent in ReLU and retains non-zero gradients in the negative domain, facilitating the modeling of fine-grained anomalies. As a result, this configuration demonstrates greater robustness and improved performance on both image-level and pixel-level evaluation metrics. Accordingly, it is adopted as the default setup for the FeaCali module.
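The two properties invoked above, per-sample statistics in Instance Normalization and a non-zero negative slope in LeakyReLU, can be seen in a small dependency-free sketch. It mirrors the standard definitions (biased variance, no learnable affine parameters) and is not the FeaCali implementation; the negative slope of 0.01 and the epsilon of 1e-5 are assumed defaults, and the function names are hypothetical.

```python
import math


def instance_norm(channel, eps=1e-5):
    """Normalize one channel ([H][W]) of one sample with its own mean and variance."""
    values = [v for row in channel for v in row]
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)  # biased variance
    scale = 1.0 / math.sqrt(var + eps)
    return [[(v - mean) * scale for v in row] for row in channel]


def leaky_relu(x, negative_slope=0.01):
    """Identity for non-negative inputs, small linear slope for negative ones."""
    return x if x >= 0 else negative_slope * x


def norm_act(sample, negative_slope=0.01):
    """InstanceNorm followed by LeakyReLU on a sample shaped [C][H][W]."""
    return [[[leaky_relu(v, negative_slope) for v in row]
             for row in instance_norm(channel)]
            for channel in sample]


# A single-channel 1x2 sample: the statistics come from this sample alone,
# so the result does not depend on batch size or on other images.
out = norm_act([[[1.0, 3.0]]])
```

The negative activation is attenuated to roughly -0.01 rather than zeroed, so below-mean responses still carry gradient, and no batch-level statistics are involved at any point.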

Figure 9.

FeaCali module with different combinations of normalization/activation functions.

4.5.6. Dependency on Pretrained Model

Anomaly detection methods based on the teacher–student framework rely on powerful pre-trained models, where the teacher model’s generalization capability is critical to performance. Table 7 presents the anomaly detection results of our framework using various ResNet architectures as pre-trained models. The results show that WideResNet50, by expanding network width, significantly enhances encoding capacity and adaptability to diverse data distributions compared to traditional ResNet architectures. This improvement enables WideResNet to capture complex patterns in image data more effectively. Combining the teacher model’s strong representation ability with the student model’s limited ability to reconstruct unseen (anomalous) regions further boosts the robustness and accuracy of anomaly detection.

Table 7.

Comparison of model performance with different pre-trained models.

5. Conclusions

This paper proposes a Synthetic-Anomaly Contrastive Distillation (SACD) framework for industrial image anomaly detection. SACD is built on two key components: (1) a reverse distillation framework where a pre-trained teacher network extracts hierarchical representations, guiding the student network with an inverse architecture to achieve feature alignment across multiple scales; and (2) FeaCali modules that refine the student’s outputs by filtering out anomalous feature responses. During training, SACD employs a dual-branch strategy, with one branch encoding multi-scale features from defect-free images and a Siamese anomaly branch processing synthetically corrupted samples. The FeaCali modules are trained to eliminate anomalous patterns in the anomaly branch, enabling the student network to focus exclusively on modeling normal patterns. A dual-objective optimization framework, combining cross-model distillation loss and intra-model contrastive loss, is used to train SACD, ensuring effective feature alignment and enhanced discrepancy amplification. At the inference stage, pixel-wise anomaly scores are computed based on discrepancies between the teacher’s representations and the student’s refined outputs across multiple layers. Extensive evaluations on the MVTec AD and BTAD benchmarks confirm that our approach is effective and outperforms current KD-based approaches for anomaly detection.

In future work, we plan to explore cross-domain anomaly detection scenarios, where the model trained on one type of industrial product is required to generalize to unseen categories or domains with minimal adaptation. This is crucial for practical deployment, as collecting labeled anomaly data for every product line is often infeasible. In addition, we aim to investigate more natural and physically consistent anomaly synthesis methods, beyond procedural noise, to better mimic the texture, geometry, and defect formation process of real-world industrial anomalies. Such approaches could further enhance the realism and diversity of training data, thereby improving the robustness of detection models in complex environments.

Author Contributions

Conceptualization, J.L. and M.L.; methodology, J.L.; software, J.L.; validation, J.L. and M.L.; formal analysis, J.L., M.L., S.H. and G.W.; investigation, J.L., M.L. and X.Z.; resources, J.L. and M.L.; data curation, J.L. and M.L.; writing—original draft preparation, J.L. and M.L.; writing—review and editing, J.L., M.L., S.H., G.W. and X.Z.; visualization, J.L. and X.Z.; supervision, M.L. and S.H.; project administration, M.L. and X.Z.; funding acquisition, J.L. and S.H. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Key Research Project of the “14th Five-Year Plan” Scientific Research Program of Jiangsu Open University (The City Vocational College of Jiangsu), under Grant No. 2024LYZD003, and the 2024 Yangzhou “Lvyang Golden Phoenix Plan” for High-level Innovation and Entrepreneurship Talent Introduction under Grant No. YZLYJFJH2024YXBS052 and the National Natural Science Foundation of China under Grant No. 62276118.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Code will be released upon publication.

Conflicts of Interest

Author Xinjing Zhao was employed by the Suzhou Zhanchi Tongyang Talent Technology Company. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

1. Zamanzadeh Darban, Z.; Webb, G.I.; Pan, S.; Aggarwal, C.; Salehi, M. Deep learning for time series anomaly detection: A survey. ACM Comput. Surv. 2024, 57, 1–42.

2. Liu, J.; Xie, G.; Wang, J.; Li, S.; Wang, C.; Zheng, F.; Jin, Y. Deep industrial image anomaly detection: A survey. Mach. Intell. Res. 2024, 21, 104–135.

3. Zhou, Y.; Qu, Y.; Xu, X.; Shen, F.; Song, J.; Shen, H.T. Batchnorm-based weakly supervised video anomaly detection. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 13642–13654.

4. Bergmann, P.; Fauser, M.; Sattlegger, D.; Steger, C. Uninformed students: Student-teacher anomaly detection with discriminative latent embeddings. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 4183–4192.

5. Wang, G.; Han, S.; Ding, E.; Huang, D. Student-teacher feature pyramid matching for anomaly detection. arXiv 2021, arXiv:2103.04257.

6. Yousefpour, A.; Shishehbor, M.; Zanjani Foumani, Z.; Bostanabad, R. Unsupervised anomaly detection via nonlinear manifold learning. J. Comput. Inf. Sci. Eng. 2024, 24, 111008.

7. Wang, G.; Zou, Y.; He, S.; Wang, Y.; Dai, R. Anomaly Detection and Localization via Reverse Distillation with Latent Anomaly Suppression. IEEE Trans. Circuits Syst. Video Technol. 2025.

8. Zavrtanik, V.; Kristan, M.; Skočaj, D. DRAEM—A discriminatively trained reconstruction embedding for surface anomaly detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 8330–8339.

9. Yan, Y.; Wang, D.; Zhou, G.; Chen, Q. Unsupervised anomaly segmentation via multilevel image reconstruction and adaptive attention-level transition. IEEE Trans. Instrum. Meas. 2021, 70, 5015712.

10. Schlüter, H.M.; Tan, J.; Hou, B.; Kainz, B. Natural synthetic anomalies for self-supervised anomaly detection and localization. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–24 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 474–489.

11. Zavrtanik, V.; Kristan, M.; Skočaj, D. DSR—A dual subspace re-projection network for surface anomaly detection. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–24 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 539–554.

12. Deng, H.; Li, X. Anomaly detection via reverse distillation from one-class embedding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 9737–9746.

13. Salehi, M.; Sadjadi, N.; Baselizadeh, S.; Rohban, M.H.; Rabiee, H.R. Multiresolution knowledge distillation for anomaly detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 14902–14912.

14. Wu, Q.; Li, H.; Tian, C.; Wen, L.; Li, X. AEKD: Unsupervised auto-encoder knowledge distillation for industrial anomaly detection. J. Manuf. Syst. 2024, 73, 159–169.

15. Liu, X.; Wang, J.; Leng, B.; Zhang, S. Dual-modeling decouple distillation for unsupervised anomaly detection. In Proceedings of the 32nd ACM International Conference on Multimedia, Melbourne, VIC, Australia, 28 October–1 November 2024; pp. 5035–5044.

16. Iqbal, E.; Khan, S.U.; Javed, S.; Moyo, B.; Zweiri, Y.; Abdulrahman, Y. Multi-scale feature reconstruction network for industrial anomaly detection. Knowl.-Based Syst. 2024, 305, 112650.

17. Bergmann, P.; Batzner, K.; Fauser, M.; Sattlegger, D.; Steger, C. The MVTec anomaly detection dataset: A comprehensive real-world dataset for unsupervised anomaly detection. Int. J. Comput. Vis. 2021, 129, 1038–1059.

18. Zhang, S.; Liu, J. Feature-Constrained and Attention-Conditioned Distillation Learning for Visual Anomaly Detection. In Proceedings of the ICASSP 2024—2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 2945–2949.

19. Yi, J.; Yoon, S. Patch svdd: Patch-level svdd for anomaly detection and segmentation. In Proceedings of the Asian Conference on Computer Vision, Kyoto, Japan, 30 November–4 December 2020.

20. Massoli, F.; Falchi, F.; Kantarci, A.; Akti, S.; Ekenel, H.; Amato, G. MOCCA: Multilayer One-Class Classification for Anomaly Detection. IEEE Trans. Neural Netw. Learn. Syst. 2022, 33, 2313–2323.

21. Li, C.L.; Sohn, K.; Yoon, J.; Pfister, T. Cutpaste: Self-supervised learning for anomaly detection and localization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 9664–9674.

22. Rippel, O.; Chavan, A.; Lei, C.; Merhof, D. Transfer learning gaussian anomaly detection by fine-tuning representations. In Proceedings of the 2nd International Conference on Image Processing and Vision Engineering, Online, 22–24 April 2022.

23. Rudolph, M.; Wehrbein, T.; Rosenhahn, B.; Wandt, B. Fully convolutional cross-scale-flows for image-based defect detection. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2022; pp. 1088–1097.

24. Gudovskiy, D.; Ishizaka, S.; Kozuka, K. Cflow-ad: Real-time unsupervised anomaly detection with localization via conditional normalizing flows. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2022; pp. 98–107.

25. Yu, J.; Zheng, Y.; Wang, X.; Li, W.; Wu, Y.; Zhao, R.; Wu, L. Fastflow: Unsupervised anomaly detection and localization via 2d normalizing flows. arXiv 2021, arXiv:2111.07677.

26. Cohen, N.; Hoshen, Y. Sub-image anomaly detection with deep pyramid correspondences. arXiv 2020, arXiv:2005.02357.

27. Defard, T.; Setkov, A.; Loesch, A.; Audigier, R. Padim: A patch distribution modeling framework for anomaly detection and localization. In Proceedings of the International Conference on Pattern Recognition, Bangkok, Thailand, 28–30 July 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 475–489.

28. Roth, K.; Pemula, L.; Zepeda, J.; Schölkopf, B.; Brox, T.; Gehler, P. Towards total recall in industrial anomaly detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 14318–14328.

29. Lee, S.; Lee, S.; Song, B.C. CFA: Coupled-hypersphere-based feature adaptation for target-oriented anomaly localization. IEEE Access 2022, 10, 78446–78454.

30. Rudolph, M.; Wehrbein, T.; Rosenhahn, B.; Wandt, B. Asymmetric student-teacher networks for industrial anomaly detection. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 2–7 January 2023; pp. 2592–2602.

31. Zuo, Z.; Wu, Z.; Chen, B.; Zhong, X. A reconstruction-based feature adaptation for anomaly detection with self-supervised multi-scale aggregation. In Proceedings of the ICASSP 2024—2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 5840–5844.

32. Gu, Z.; Liu, L.; Chen, X.; Yi, R.; Zhang, J.; Wang, Y.; Wang, C.; Shu, A.; Jiang, G.; Ma, L. Remembering normality: Memory-guided knowledge distillation for unsupervised anomaly detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 16401–16409.

33. Tien, T.D.; Nguyen, A.T.; Tran, N.H.; Huy, T.D.; Duong, S.; Nguyen, C.D.T.; Truong, S.Q. Revisiting reverse distillation for anomaly detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 24511–24520.

34. Tong, G.; Li, Q.; Song, Y. Enhanced multi-scale features mutual mapping fusion based on reverse knowledge distillation for industrial anomaly detection and localization. IEEE Trans. Big Data 2024, 10, 498–513.

35. Jiang, T.; Li, L.; Samali, B.; Yu, Y.; Huang, K.; Yan, W.; Wang, L. Lightweight object detection network for multi-damage recognition of concrete bridges in complex environments. Comput.-Aided Civ. Infrastruct. Eng. 2024, 39, 3646–3665.

36. Tailanian, M.; Musé, P.; Pardo, Á. A multi-scale a contrario method for unsupervised image anomaly detection. In Proceedings of the 2021 20th IEEE International Conference on Machine Learning and Applications (ICMLA), Pasadena, CA, USA, 13–16 December 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 179–184.

37. Cao, Y.; Wan, Q.; Shen, W.; Gao, L. Informative knowledge distillation for image anomaly segmentation. Knowl.-Based Syst. 2022, 248, 108846.

38. Yang, E.; Xing, P.; Sun, H.; Guo, W.; Ma, Y.; Li, Z.; Zeng, D. 3CAD: A Large-Scale Real-World 3C Product Dataset for Unsupervised Anomaly Detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 25 February–4 March 2025; Volume 39, pp. 9175–9183.

39. Yu, Y.; Rashidi, M.; Dorafshan, S.; Samali, B.; Farsangi, E.N.; Yi, S.; Ding, Z. Ground penetrating radar-based automated defect identification of bridge decks: A hybrid approach. J. Civ. Struct. Health Monit. 2025, 15, 521–543.

40. Duan, Y.; Hong, Y.; Niu, L.; Zhang, L. Few-shot defect image generation via defect-aware feature manipulation. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; Volume 37, pp. 571–578.

41. Yao, H.; Liu, M.; Yin, Z.; Yan, Z.; Hong, X.; Zuo, W. GLAD: Towards better reconstruction with global and local adaptive diffusion models for unsupervised anomaly detection. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; Springer: Berlin/Heidelberg, Germany, 2024; pp. 1–17.

42. Park, Y.; Kang, S.; Kim, M.J.; Jeong, H.; Park, H.; Kim, H.S.; Yi, J. Neural network training strategy to enhance anomaly detection performance: A perspective on reconstruction loss amplification. In Proceedings of the ICASSP 2024—2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Seoul, Republic of Korea, 14–19 April 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 5165–5169.

43. Wang, G.; Huang, S.; Tao, Z. Shallow multi-branch attention convolutional neural network for micro-expression recognition. Multimed. Syst. 2023, 29, 1967–1980.

44. Wang, G.; Huang, S. Dual-stream network with cross-layer attention and similarity constraint for micro-expression recognition. Multimed. Syst. 2024, 30, 1–12.

45. Huang, C.; Xu, Q.; Wang, Y.; Wang, Y.; Zhang, Y. Self-supervised masking for unsupervised anomaly detection and localization. IEEE Trans. Multimed. 2022, 25, 4426–4438.

46. Mishra, P.; Verk, R.; Fornasier, D.; Piciarelli, C.; Foresti, G.L. VT-ADL: A vision transformer network for image anomaly detection and localization. In Proceedings of the 2021 IEEE 30th International Symposium on Industrial Electronics (ISIE), Kyoto, Japan, 20–23 June 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–6.

47. Zavrtanik, V.; Kristan, M.; Skočaj, D. Reconstruction by inpainting for visual anomaly detection. Pattern Recognit. 2021, 112, 107706.

48. Luo, X.; Li, S.; Wang, Y.; Zhan, T.; Shi, X.; Liu, B. MaMiNet: Memory-attended multi-inference network for surface-defect detection. Comput. Ind. 2023, 145, 103834.

49. Zhang, Z.; Zhao, Z.; Zhang, X.; Sun, C.; Chen, X. Industrial anomaly detection with domain shift: A real-world dataset and masked multi-scale reconstruction. Comput. Ind. 2023, 151, 103990.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).