UAV-Based LiDAR and Multispectral Imaging for Estimating Dry Bean Plant Height, Lodging and Seed Yield

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Site and Ground Data Collection

2.2. UAV Image Acquisition

2.3. Data Processing

2.3.1. LiDAR Point Cloud

2.3.2. MSI Data Processing

2.4. Dry Bean Traits Estimation

2.4.1. Plant Height Estimation

2.4.2. CL Estimation

2.4.3. Seed Yield Estimation

2.5. Model Evaluation

3. Results and Discussion

3.1. Plant Height Estimation Using LiDAR

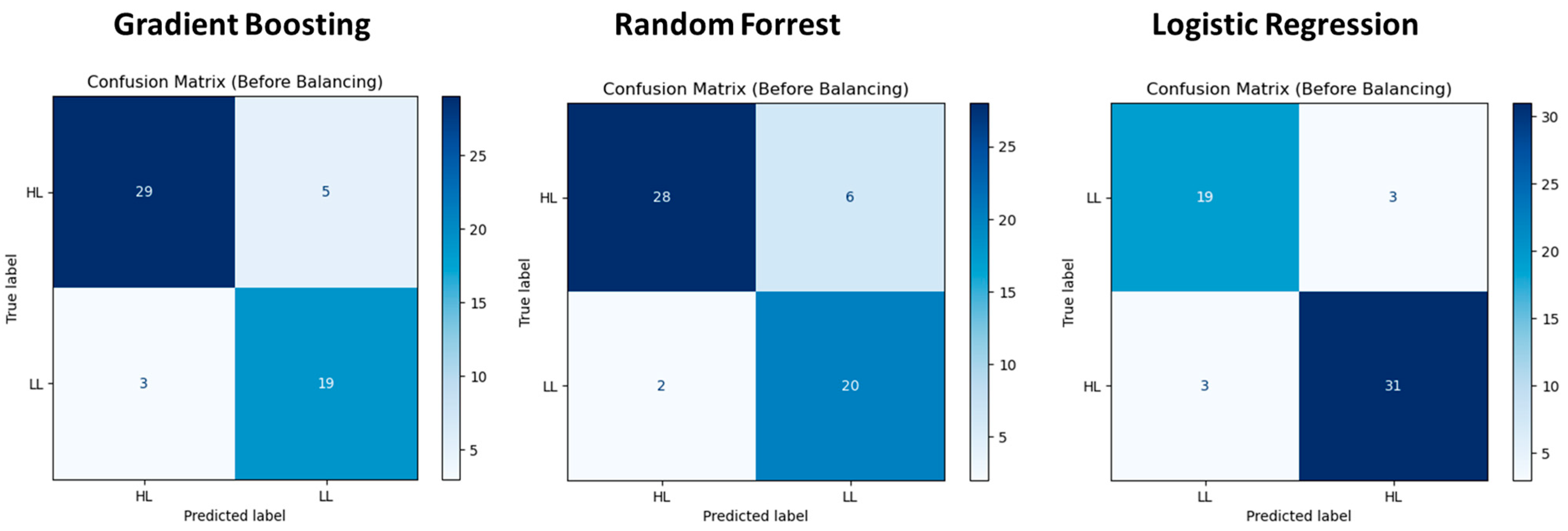

3.2. CL Resistance Using LiDAR

3.3. Seed Yield Estimation

3.3.1. LiDAR-Based Yield Estimation

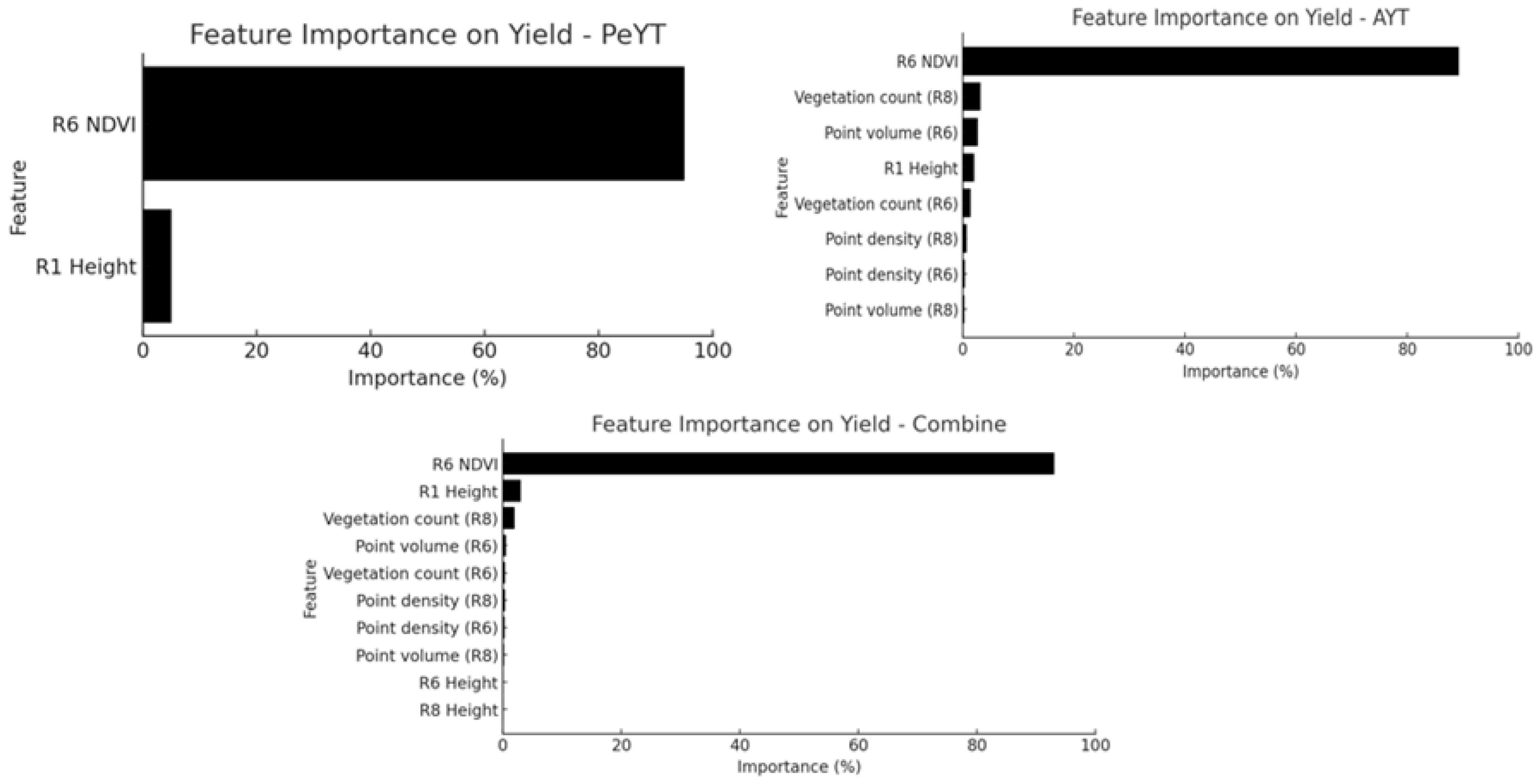

3.3.2. MSI-Based Yield Estimation

3.3.3. Integrated LiDAR and MSI-Based Yield Estimation

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| LiDAR | Light Detection and Ranging |
| RGB | Red, Green and Blue |
| UAV | Unmanned Aerial Vehicle |
| r | Pearson correlation coefficient |
| R² | Coefficient of Determination |
| t | Tonnes |
| ha | Hectare |
| MSI | Multispectral image |
| DB | Digital biomass |
| AYT | Advanced Yield Trial |
| PeYT | Performance Yield Trial |
| YL | Yellow bean |
| PT | Pinto bean |
| GN | Great Northern bean |
| PM | Physiological Maturity |
| kg | Kilogram |
| N | North |
| W | West |
| CH | Canopy height |
| CL | Crop Lodging |
| GCP | Ground Control Point |
| ROI | Region of Interest |
| LAS | LASer (file format) |
| LL | Low Lodging |
| HL | High Lodging |
| ML | Machine Learning |
| AB | Adaptive Boosting |
| GB | Gradient Boosting |
| KNN | K-Nearest Neighbors |
| LGB | Light Gradient Boosting |
| RF | Random Forest |
| SVM | Support Vector Machine |
| XGBoost | Extreme Gradient Boosting |
| LR | Logistic Regression |
| SMOTE-ENN | Synthetic Minority Oversampling Technique with Edited Nearest Neighbors |
| ADASYN | Adaptive Synthetic Sampling |
| NDVI | Normalized Difference Vegetation Index |
| ANN | Artificial Neural Network |
| GBRT | Gradient Boosting Regression Trees |
| PLSR | Partial Least Squares Regression |
| MLR | Multiple Linear Regression |
| RMSE | Root Mean Square Error |
| MAE | Mean Absolute Error |
| NIR | Near Infrared |


| Stages | Traits | UAV Dates | Ground Sampling Date (AYT/PeYT) |
|---|---|---|---|
| Mid-flowering (R1) | Height | 22 July 2024 | 17 July 2024 |
| Mid-pod filling (R6) | Height | 13 August 2024 | 12 August 2024 |
| Physiological maturity (R8) | Height | 5 September 2024 | 4 September 2024 |
| | Lodging | 21 August to 14 September 2024 | |
| | Yield | 25 September 2024 (Harvest day) | |
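Each height campaign pairs a UAV flight with a ground sampling date a few days earlier, and that lag is worth keeping in mind when comparing LiDAR and ground heights. A minimal Python sketch that computes the lag from the dates in the table above; it assumes, as the column header suggests, that one ground date applies to both AYT and PeYT.

```python
from datetime import date

# (UAV flight date, ground sampling date) per growth stage, copied from the table.
campaigns = {
    "R1": (date(2024, 7, 22), date(2024, 7, 17)),
    "R6": (date(2024, 8, 13), date(2024, 8, 12)),
    "R8": (date(2024, 9, 5), date(2024, 9, 4)),
}


def flight_lag_days(uav: date, ground: date) -> int:
    """Days from ground sampling to the UAV flight (positive = flight later)."""
    return (uav - ground).days


lags = {stage: flight_lag_days(uav, ground) for stage, (uav, ground) in campaigns.items()}
print(lags)  # {'R1': 5, 'R6': 1, 'R8': 1}
```

The R1 campaign has the longest gap (five days), during a period of active vegetative growth, so it is the stage where a height offset between the two measurements is most plausible.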

| Trials | LL (scale 2) | LL (scale 3) | HL (scale 4) | HL (scale 5) |
|---|---|---|---|---|
| AYT | 14 | 52 | 100 | 6 |
| PeYT | 5 | 39 | 50 | 10 |
| Combined | 19 | 91 | 150 | 16 |
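The classification results group lodging scales 2 and 3 as LL and scales 4 and 5 as HL. Summing the counts under that grouping, a minimal Python sketch using only the numbers in the table above, checks that the combined row equals AYT plus PeYT and shows HL outnumbering LL in every subset. That is a moderate class imbalance of the kind resampling methods such as SMOTE-ENN and ADASYN (both in the abbreviations) are designed to address; where the authors applied them is not stated in this section.

```python
# Counts per lodging scale, copied from the table above.
counts = {
    "AYT": {2: 14, 3: 52, 4: 100, 5: 6},
    "PeYT": {2: 5, 3: 39, 4: 50, 5: 10},
}
# The Combined row should equal AYT + PeYT for every scale.
combined = {s: counts["AYT"][s] + counts["PeYT"][s] for s in (2, 3, 4, 5)}
assert combined == {2: 19, 3: 91, 4: 150, 5: 16}


def ll_hl(scale_counts: dict[int, int]) -> tuple[int, int]:
    """Collapse lodging scales into LL (2 and 3) and HL (4 and 5) totals."""
    ll = sum(n for s, n in scale_counts.items() if s in (2, 3))
    hl = sum(n for s, n in scale_counts.items() if s in (4, 5))
    return ll, hl


for name, c in [*counts.items(), ("Combined", combined)]:
    ll, hl = ll_hl(c)
    print(f"{name}: LL={ll}, HL={hl}, HL/LL={hl / ll:.2f}")
# AYT: LL=66, HL=106, HL/LL=1.61
# PeYT: LL=44, HL=60, HL/LL=1.36
# Combined: LL=110, HL=166, HL/LL=1.51
```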

| Model (LL = scales 2 and 3; HL = scales 4 and 5) | LL Accuracy | LL Precision | LL Recall | LL F1-Score | HL Accuracy | HL Precision | HL Recall | HL F1-Score |
|---|---|---|---|---|---|---|---|---|
| AB | 0.71 | 0.68 | 0.69 | 0.69 | 0.73 | 0.71 | 0.70 | 0.71 |
| GB | 0.77 | 0.70 | 0.73 | 0.71 | 0.77 | 0.82 | 0.79 | 0.81 |
| KNN | 0.65 | 0.61 | 0.63 | 0.62 | 0.68 | 0.65 | 0.67 | 0.66 |
| LGB | 0.67 | 0.63 | 0.65 | 0.64 | 0.72 | 0.67 | 0.71 | 0.69 |
| RF | 0.75 | 0.67 | 0.73 | 0.70 | 0.75 | 0.81 | 0.76 | 0.79 |
| SVM | 0.62 | 0.58 | 0.61 | 0.59 | 0.68 | 0.62 | 0.65 | 0.63 |
| XGBoost | 0.71 | 0.69 | 0.70 | 0.69 | 0.75 | 0.71 | 0.73 | 0.72 |
| LR | 0.80 | 0.72 | 0.82 | 0.77 | 0.80 | 0.87 | 0.79 | 0.83 |
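The reported F1-scores are consistent with the harmonic mean of the reported precision and recall; for LR on the HL class, 2 × 0.87 × 0.79 / (0.87 + 0.79) ≈ 0.83. A minimal Python sketch of these metrics, assuming each class (LL or HL) is scored by treating it as the positive class. The table's accuracy differs between the LL and HL columns for some models, so the article may compute accuracy per class in a way not described here; this sketch shows overall accuracy only, and the six labels in the usage lines are hypothetical.

```python
def f1_from(precision: float, recall: float) -> float:
    """F1 is the harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def accuracy(y_true: list[str], y_pred: list[str]) -> float:
    """Fraction of plots whose predicted class matches the observed class."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def per_class_metrics(y_true: list[str], y_pred: list[str], positive: str) -> dict[str, float]:
    """Precision, recall and F1 for one class treated as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return {"precision": precision, "recall": recall, "f1": f1_from(precision, recall)}


# Check against the table: LR, HL class.
print(round(f1_from(0.87, 0.79), 2))  # 0.83

# Hypothetical labels for six plots, only to show the calls.
y_true = ["HL", "HL", "LL", "LL", "HL", "LL"]
y_pred = ["HL", "LL", "LL", "HL", "HL", "LL"]
print(accuracy(y_true, y_pred))
print(per_class_metrics(y_true, y_pred, positive="HL"))
```

Because F1 is a harmonic mean, it is pulled toward the smaller of precision and recall, which is why LR's LL F1-score (0.77) sits closer to its precision (0.72) than to its recall (0.82).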

| Model | Combined R² | Combined RMSE | Combined MAE | PeYT R² | PeYT RMSE | PeYT MAE | AYT R² | AYT RMSE | AYT MAE |
|---|---|---|---|---|---|---|---|---|---|
| ANN (1024, 512)¹ | 0.26 | 979 | 677.2 | 0.27 | 572.4 | 541.3 | 0.18 | 1384.1 | 783.5 |
| GBRT (learning rate 0.2)² | 0.45 | 883 | 687.6 | 0.41 | 436.4 | 408.8 | 0.26 | 1135.8 | 839.8 |
| RF | 0.33 | 941.4 | 681.7 | 0.51 | 469.1 | 415.6 | 0.15 | 1039.3 | 791.3 |
| PLSR | 0.12 | 1074.4 | 883.2 | 0.23 | 581.3 | 559.6 | 0.11 | 1263.6 | 892.4 |
| MLR | 0.24 | 993.1 | 749.8 | 0.31 | 629.4 | 602.1 | 0.14 | 1193.6 | 838.8 |
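The yield tables report R², RMSE and MAE. A minimal Python sketch of the three metrics, assuming R² is the coefficient of determination computed as 1 − SS_res/SS_tot (the abbreviations list it as "Coefficient of Determination"); some studies instead report the squared Pearson r, and this section does not state which form the tables use. The yield values below are toy numbers, not data from this study.

```python
import math


def regression_metrics(observed: list[float], predicted: list[float]) -> dict[str, float]:
    """R2 (1 - SS_res/SS_tot), RMSE and MAE for paired observed/predicted yields."""
    n = len(observed)
    residuals = [o - p for o, p in zip(observed, predicted)]
    mean_obs = sum(observed) / n
    ss_res = sum(r * r for r in residuals)             # residual sum of squares
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)  # total sum of squares
    return {
        "R2": 1 - ss_res / ss_tot,
        "RMSE": math.sqrt(ss_res / n),
        "MAE": sum(abs(r) for r in residuals) / n,
    }


# Toy values, not data from this study.
observed = [2000.0, 2500.0, 3000.0, 3500.0]
predicted = [2100.0, 2400.0, 3200.0, 3300.0]
print(regression_metrics(observed, predicted))
```

For these toy values R² is 0.92, RMSE is about 158.1 and MAE is 150.0. RMSE can never be smaller than MAE, and the gap between them grows when a few large errors dominate; every row of the yield tables satisfies RMSE ≥ MAE.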

| Model | Combined R² | Combined RMSE | Combined MAE | PeYT R² | PeYT RMSE | PeYT MAE | AYT R² | AYT RMSE | AYT MAE |
|---|---|---|---|---|---|---|---|---|---|
| ANN (1024, 512)¹ | 0.25 | 912.2 | 786.1 | 0.27 | 628.4 | 537.5 | 0.31 | 944.2 | 748.2 |
| GBRT (learning rate 0.2)² | 0.53 | 756.2 | 579.3 | 0.25 | 578.9 | 436.9 | 0.48 | 814.4 | 621.2 |
| RFR | 0.57 | 723.1 | 531 | 0.24 | 584.8 | 460.6 | 0.47 | 820.1 | 632.3 |
| PLSR | 0.31 | 916.7 | 740.2 | 0.29 | 853.3 | 693.5 | 0.34 | 932.4 | 745.2 |
| MLR | 0.40 | 850.1 | 680.7 | 0.36 | 735.4 | 534.4 | 0.42 | 842.4 | 735.9 |

| Model | Combined R² | Combined RMSE | Combined MAE | PeYT R² | PeYT RMSE | PeYT MAE | AYT R² | AYT RMSE | AYT MAE |
|---|---|---|---|---|---|---|---|---|---|
| ANN (1024, 512)¹ | 0.26 | 867.3 | 739.7 | 0.28 | 684.4 | 539.3 | 0.29 | 1038.3 | 893.4 |
| GBRT (learning rate 0.2)² | 0.64 | 687.2 | 521.6 | 0.41 | 435.5 | 391.8 | 0.49 | 935.6 | 709.4 |
| RFR | 0.52 | 760.4 | 552.3 | 0.48 | 483 | 418 | 0.47 | 820.2 | 616.2 |
| PLSR | 0.25 | 955.4 | 763.3 | 0.23 | 738.4 | 639.4 | 0.26 | 983.4 | 832.3 |
| MLR | 0.50 | 804.1 | 640.1 | 0.43 | 784.5 | 645.2 | 0.46 | 842.6 | 742.7 |
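Picking the "best" model depends on the metric. A minimal Python sketch, using only the R² and RMSE values from the table above, that selects the best model per data subset by highest R² and by lowest RMSE.

```python
# (R2, RMSE) per model and subset, copied from the table above.
results = {
    "ANN": {"Combined": (0.26, 867.3), "PeYT": (0.28, 684.4), "AYT": (0.29, 1038.3)},
    "GBRT": {"Combined": (0.64, 687.2), "PeYT": (0.41, 435.5), "AYT": (0.49, 935.6)},
    "RFR": {"Combined": (0.52, 760.4), "PeYT": (0.48, 483.0), "AYT": (0.47, 820.2)},
    "PLSR": {"Combined": (0.25, 955.4), "PeYT": (0.23, 738.4), "AYT": (0.26, 983.4)},
    "MLR": {"Combined": (0.50, 804.1), "PeYT": (0.43, 784.5), "AYT": (0.46, 842.6)},
}


def best_model(subset: str) -> tuple[str, str]:
    """Return (best by highest R2, best by lowest RMSE) for one data subset."""
    by_r2 = max(results, key=lambda m: results[m][subset][0])
    by_rmse = min(results, key=lambda m: results[m][subset][1])
    return by_r2, by_rmse


for subset in ("Combined", "PeYT", "AYT"):
    print(subset, best_model(subset))
# Combined ('GBRT', 'GBRT')
# PeYT ('RFR', 'GBRT')
# AYT ('GBRT', 'RFR')
```

The two criteria agree on the combined data but not on PeYT or AYT. On a single fixed evaluation set, R² computed as 1 − SS_res/SS_tot equals 1 − n·RMSE²/SS_tot, so it and RMSE would rank models identically. One possible explanation for the disagreement is that the reported R² is computed in another form (for example, as a squared correlation) or that the metrics come from different splits or folds; this section does not say which.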

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Panigrahi, S.S.; Singh, K.D.; Balasubramanian, P.; Wang, H.; Natarajan, M.; Ravichandran, P. UAV-Based LiDAR and Multispectral Imaging for Estimating Dry Bean Plant Height, Lodging and Seed Yield. Sensors 2025, 25, 3535. https://doi.org/10.3390/s25113535
