5.1. Reconstruction Accuracy

Reconstruction accuracy, measured as the mean squared difference between the original and reconstructed signals, was used to evaluate the credibility of the proposed WGLAE model. The three proposed gate control mechanisms and the random sequence gate control mechanism were examined on 19 channels placed according to the international EEG placement system. The MMIDB was employed with three elicitation protocols: resting state, motor imagery, and motor movement.
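A minimal sketch of this metric is given below. It assumes signals stored as channels × samples arrays and blocked channels simulated by zero-masking. The helper names `block_channels` and `reconstruction_mse`, the random channel order, and the option of scoring only the blocked channels are illustrative assumptions rather than details of the WGLAE implementation.

```python
import numpy as np


def block_channels(x, blocked):
    """Copy of x (channels x samples) with the blocked channels zeroed.

    Zero-masking is an assumed way of simulating missing electrodes."""
    x_masked = x.copy()
    x_masked[np.asarray(blocked), :] = 0.0
    return x_masked


def reconstruction_mse(original, reconstructed, channels=None):
    """Mean squared difference between original and reconstructed signals,
    optionally restricted to a subset of channels (e.g. the blocked ones)."""
    original = np.asarray(original, dtype=float)
    reconstructed = np.asarray(reconstructed, dtype=float)
    if channels is not None:
        idx = np.asarray(channels)
        original, reconstructed = original[idx], reconstructed[idx]
    return float(np.mean((original - reconstructed) ** 2))


rng = np.random.default_rng(0)
x = rng.standard_normal((19, 160))      # synthetic 19-channel segment
blocked = rng.permutation(19)[:3]       # 3 channels taken in a random order
x_in = block_channels(x, blocked)
# Leaving the blocked channels at zero gives a trivial baseline error
baseline = reconstruction_mse(x, x_in, channels=blocked)
print(f"baseline MSE on blocked channels: {baseline:.3f}")
```

Scoring only the blocked channels isolates the ability to infer missing electrodes. Scoring all channels would also reward simply copying the visible inputs.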

Table 3 presents the reconstruction errors across the entire sample, with the best-performing gate control mechanism in each case highlighted. For all examined gate control mechanisms and datasets, the reconstruction accuracy decreased as more channels were blocked. This is expected: as more information is missing, reconstructing EEG signals becomes more challenging. Another phenomenon common to all three datasets is that the three proposed gating mechanisms outperformed the random sequence gating mechanism in most cases. This indicates the necessity of considering EEG electrode placement when designing signal reconstruction algorithms.

On the other hand, the results show that the three gate control mechanisms influenced the reconstruction performance of the WGLAE differently. On the resting-state and motor-imagery datasets, the hemispheric symmetry rotation gate control mechanism outperformed the other three mechanisms in 9 of the 10 missing-channel settings. This observation may be explained by the brain’s alpha waves, which are active during relaxed and passive attention [29]. Particularly in an eyes-closed state, alpha waves are typically balanced between the left and right hemispheres, suggesting similarities and dependencies between the EEG signals captured by the left electrodes and those captured by the right electrodes. Because the hemispheric symmetry mechanism aims to learn from EEG signals obtained at symmetrical locations, it should improve the learning capability of the WGLAE. A different trend was observed on the motor-movement dataset, where the partition-based gate control mechanism performed best in 8 of the 10 cases. This may be due to active beta and gamma waves, which are closely related to movement execution and coordination. Because beta and gamma waves dominate different cerebral areas depending on the task, learning the dependencies between cerebral cortex lobes can be important for building an effective EEG signal reconstruction model. The advantages observed for the hemispheric symmetry rotation and partition-based gate control mechanisms support the benefits of employing gate control mechanisms. The weaker performance of the hemispheric symmetry rotation gate control mechanism on the motor-movement dataset may be explained by the lateralisation of motor functions, such as handedness [23].
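The symmetry prior discussed above relies on pairing each electrode with its homologue over the opposite hemisphere. Under the 10–20 naming convention, odd numbers denote left-hemisphere sites, even numbers right-hemisphere sites, and "z" the midline, so the pairs can be derived from the labels alone. The montage below (using the older T3/T4/T5/T6 labels) and the helper names are illustrative assumptions. How the hemispheric symmetry rotation gate orders or rotates these pairs is not reproduced here.

```python
import re

# Assumed 19-channel 10-20 montage (older T3/T4/T5/T6 temporal labels)
MONTAGE_19 = ["Fp1", "Fp2", "F7", "F3", "Fz", "F4", "F8",
              "T3", "C3", "Cz", "C4", "T4",
              "T5", "P3", "Pz", "P4", "T6", "O1", "O2"]


def homologue(label):
    """Mirror an electrode across the midline using the 10-20 numbering rule."""
    match = re.fullmatch(r"([A-Za-z]+?)(\d+|z)", label)
    if match is None:
        raise ValueError(f"unrecognised electrode label: {label}")
    prefix, suffix = match.groups()
    if suffix == "z":
        return label  # midline sites map to themselves
    number = int(suffix)
    # Odd (left) -> next even (right); even (right) -> previous odd (left)
    return f"{prefix}{number + 1 if number % 2 else number - 1}"


def symmetric_pairs(montage):
    """(left, right) homologous pairs whose members are both in the montage."""
    present = set(montage)
    pairs = []
    for ch in montage:
        mirror = homologue(ch)
        # Midline sites fail the first test, so int() never sees an empty string
        if mirror != ch and mirror in present and int(re.sub(r"\D", "", ch)) % 2 == 1:
            pairs.append((ch, mirror))
    return pairs


pairs = symmetric_pairs(MONTAGE_19)
midline = [ch for ch in MONTAGE_19 if homologue(ch) == ch]
print(pairs)
print(midline)  # ['Fz', 'Cz', 'Pz']
```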

The results suggest that the application context should be taken into account when selecting a gate control mechanism. Specifically, the hemispheric symmetry rotation gate control mechanism should be prioritised in scenarios involving resting states, whereas the partition-based gate control mechanism is more suitable when motor movement is involved.

The observed differences in the efficacy of the gating mechanisms across tasks can be attributed to the distinct functional brain connectivity patterns associated with resting states and motor movements. Resting-state EEG is characterised by synchronised activity within the default mode network, which spans the medial prefrontal and posterior regions. This network exhibits strong long-range and inter-hemispheric coherence, reflecting idling cortical activity. The hemispheric symmetry rotation gate control mechanism, which explicitly models interactions between homologous brain regions (e.g., left vs. right motor cortex), aligns with this bilateral synchronisation. By emphasising cross-hemispheric dependencies, this gate effectively captured the large-scale functional connectivity inherent to resting states. In contrast, motor tasks (e.g., hand movement) engage localised, task-specific networks primarily within the motor cortex, which exhibit short-range and intra-hemispheric coherence. The partition-based gate control mechanism, which groups electrodes into localised clusters (e.g., frontal, central, or occipital), prioritises short-range spatial relationships. This design mirrors the organisation of the motor cortex, where adjacent electrodes capture activity from functionally related representations, enhancing the reconstruction of task-specific signals. The proposed gating strategies are therefore aligned with the underlying neural mechanisms: the hemispheric symmetry rotation gate control mechanism acts as a coarse-grained prior for global connectivity, while the partition-based gate control mechanism imposes a fine-grained prior for local connectivity. Their task-dependent efficacy suggests that the model successfully leveraged the spatial structure of functional networks in a data-driven setting.
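The partition-based prior can be illustrated by grouping electrodes into regions according to their label prefixes and listing, for each blocked channel, the visible channels in the same region. The prefix-to-region mapping, the montage, and the helper names are hypothetical. The partitions used by the WGLAE may be defined differently.

```python
from collections import defaultdict

# Hypothetical prefix-to-region mapping for 10-20 labels
REGION_BY_PREFIX = {"Fp": "frontal", "F": "frontal", "C": "central",
                    "T": "temporal", "P": "parietal", "O": "occipital"}

# Assumed 19-channel 10-20 montage
MONTAGE_19 = ["Fp1", "Fp2", "F7", "F3", "Fz", "F4", "F8",
              "T3", "C3", "Cz", "C4", "T4",
              "T5", "P3", "Pz", "P4", "T6", "O1", "O2"]


def region_of(label):
    # Try longer prefixes first so that e.g. "Fp" is matched before "F"
    for prefix in sorted(REGION_BY_PREFIX, key=len, reverse=True):
        if label.startswith(prefix):
            return REGION_BY_PREFIX[prefix]
    raise ValueError(f"no region for electrode label: {label}")


def partition(montage):
    """Group electrodes into regions, preserving montage order."""
    groups = defaultdict(list)
    for ch in montage:
        groups[region_of(ch)].append(ch)
    return dict(groups)


def visible_neighbours(montage, blocked):
    """For each blocked channel, the unblocked channels in its region."""
    blocked = set(blocked)
    groups = partition(montage)
    return {ch: [c for c in groups[region_of(ch)] if c not in blocked]
            for ch in montage if ch in blocked}


print(visible_neighbours(MONTAGE_19, ["C3", "Cz"]))  # {'C3': ['C4'], 'Cz': ['C4']}
print(visible_neighbours(MONTAGE_19, ["O1", "O2"]))  # {'O1': [], 'O2': []}
```

When an entire region is blocked (here, both occipital channels), the blocked channels have no visible partners within their partition. In that situation a partition-based prior can contribute little.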

The standard deviations were relatively high in some cases, for example, when 10 of the 19 channels were reconstructed for the motor-movement data. An analysis of these extreme cases revealed the context-dependent efficacy of the gate mechanisms. For example, the partition-based gate mechanism exhibited larger deviations when critical task-relevant channels (e.g., C3 for right-hand motor imagery) were blocked, underscoring the need for adaptive strategies in future work.

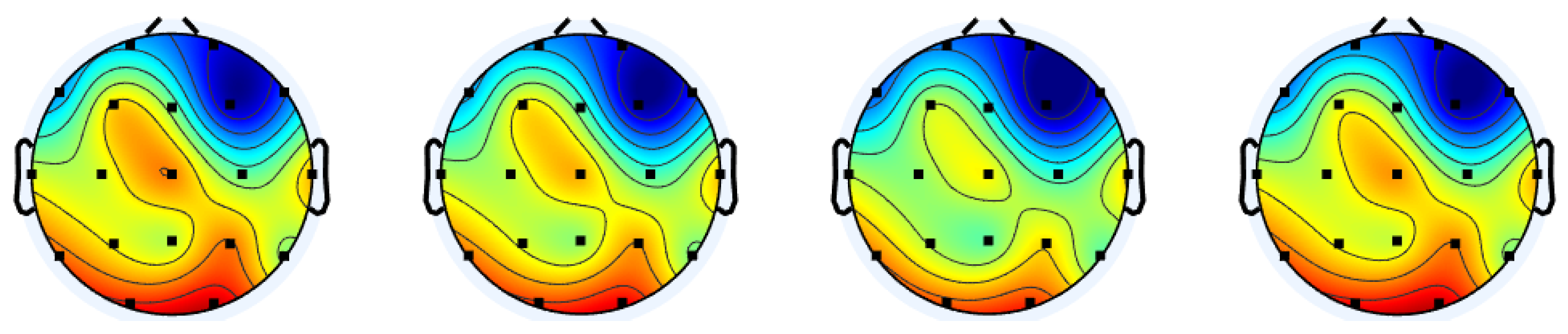

To understand how the different gate control mechanisms influenced reconstruction accuracy across cerebral cortex regions, the distribution of reconstruction errors over the scalp is presented in Figure 4. The central and posterior areas show higher errors than the frontal area, and this trend is consistent across the gate control mechanisms. This may be explained by the brain functions associated with each cortical region. Specifically, the frontal lobe is responsible for executive functions and motor control [41], which are engaged by the stimuli in the MMIDB protocols. In contrast, the occipital lobe is mainly responsible for visual processing [41], which might not have been strongly activated by the three elicitation protocols.
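A scalp map of this kind plots one error value per electrode. The sketch below computes per-channel MSE from arrays shaped (trials, channels, samples) and then averages the errors by region. The coarse first-letter grouping (parietal and occipital sites merged into a "posterior" group) and the helper names are assumptions made for illustration.

```python
import numpy as np

# Assumed coarse grouping by the first letter of the 10-20 label
REGION_BY_LETTER = {"F": "frontal", "C": "central", "T": "temporal",
                    "P": "posterior", "O": "posterior"}


def per_channel_mse(original, reconstructed):
    """MSE per electrode for arrays shaped (trials, channels, samples)."""
    diff = np.asarray(original, dtype=float) - np.asarray(reconstructed, dtype=float)
    return np.mean(diff ** 2, axis=(0, 2))


def region_means(channel_errors, labels):
    """Average the per-channel errors within each region."""
    totals, counts = {}, {}
    for err, label in zip(channel_errors, labels):
        region = REGION_BY_LETTER[label[0]]
        totals[region] = totals.get(region, 0.0) + float(err)
        counts[region] = counts.get(region, 0) + 1
    return {region: totals[region] / counts[region] for region in totals}


labels = ["Fp1", "Fz", "C3", "Cz", "Pz", "O1"]
original = np.zeros((2, len(labels), 100))
# Synthetic reconstruction with a known, channel-dependent error
offsets = np.array([1.0, 1.0, 2.0, 2.0, 3.0, 3.0])
reconstructed = original + offsets[None, :, None]
errors = per_channel_mse(original, reconstructed)
print(region_means(errors, labels))  # {'frontal': 1.0, 'central': 4.0, 'posterior': 9.0}
```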

To assess spectral fidelity, we conducted post hoc frequency-domain analyses that quantified how well oscillatory dynamics were preserved across the five canonical EEG frequency bands: delta (1–4 Hz), theta (4–8 Hz), alpha (8–13 Hz), beta (13–30 Hz), and gamma (30–50 Hz). Specifically, we computed the power spectral density (PSD) of both the original and reconstructed signals using Welch’s method and estimated the band-specific power. Pearson’s correlation coefficients were then calculated between the PSDs of the original and reconstructed signals across all channels and subjects to assess global spectral alignment. The normalised RMSE (nRMSE) was computed for each frequency band to quantify deviations in the reconstructed band power, and paired t-tests were performed to evaluate the significance of band-power differences between the gating strategies. The results of the spectral fidelity analysis are summarised in Table 4.
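The spectral analysis can be sketched with SciPy as follows. The sampling rate, the Welch segment length (`nperseg=256`), the rectangle-rule band integration, and the normalisation of the RMSE by the mean original band power are assumptions, because the text does not specify them. `spectral_fidelity` and `paired_band_test` are hypothetical helper names, and the data are synthetic.

```python
import numpy as np
from scipy.signal import welch
from scipy.stats import pearsonr, ttest_rel

# Canonical bands from the text (Hz); upper edges are treated as exclusive.
BANDS = {"delta": (1, 4), "theta": (4, 8), "alpha": (8, 13),
         "beta": (13, 30), "gamma": (30, 50)}


def band_powers(x, fs, nperseg=256):
    """Welch PSD and per-channel band power for x shaped (channels, samples)."""
    freqs, psd = welch(x, fs=fs, nperseg=nperseg, axis=-1)
    df = freqs[1] - freqs[0]
    # Rectangle-rule integration of the PSD over each band
    powers = {name: psd[:, (freqs >= lo) & (freqs < hi)].sum(axis=-1) * df
              for name, (lo, hi) in BANDS.items()}
    return psd, powers


def spectral_fidelity(original, reconstructed, fs):
    """Pearson r between PSDs (all channels pooled) and per-band nRMSE."""
    psd_o, bp_o = band_powers(original, fs)
    psd_r, bp_r = band_powers(reconstructed, fs)
    r, _ = pearsonr(psd_o.ravel(), psd_r.ravel())
    nrmse = {}
    for name in BANDS:
        rmse = np.sqrt(np.mean((bp_o[name] - bp_r[name]) ** 2))
        # Assumed normalisation: divide by the mean original band power
        nrmse[name] = float(rmse / np.mean(bp_o[name]))
    return float(r), nrmse


def paired_band_test(values_a, values_b):
    """Paired t-test on matched per-subject values from two gating strategies."""
    stat, p = ttest_rel(values_a, values_b)
    return float(stat), float(p)


# Usage on synthetic data (not the MMIDB); fs is an assumed sampling rate
fs = 160.0
t = np.arange(1600) / fs
rng = np.random.default_rng(0)
x = (np.sin(2 * np.pi * 10 * t) + 0.5 * np.sin(2 * np.pi * 20 * t)
     + 0.1 * rng.standard_normal((19, t.size)))
r_same, nrmse_same = spectral_fidelity(x, x, fs)
r_scaled, nrmse_scaled = spectral_fidelity(x, 1.1 * x, fs)
stat, p = paired_band_test([0.1, 0.2, 0.3, 0.4], [0.2, 0.3, 0.4, 0.55])
```

Note that a pure amplitude scaling leaves the PSD correlation at 1 while the band-power nRMSE is non-zero, which is one reason to report both measures.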

For the resting-state data, the WGLAE framework achieved better spectral reconstruction with the hemispheric symmetry rotation gating scheme, yielding higher spectral correlation and lower spectral nRMSE than the random sequence gating scheme, with statistically significant differences (p < 0.05). Similarly, the partition-based gating scheme achieved better spectral fidelity than the random sequence gating scheme when reconstructing motor-movement data. Although marginal in absolute value, these improvements reflect meaningful noise suppression, particularly in the middle- and high-frequency bands (alpha, beta, and gamma). The results show strong preservation of oscillatory dynamics and demonstrate the importance of neurophysiologically informed gating for capturing task-relevant spatial dependencies while mitigating noise. Because many EEG features are defined in the frequency domain, spectral fidelity is important for downstream tasks such as sleep-stage classification, cognitive load assessment, and BCI control. Although our model is trained with time-domain metrics, minimising amplitude errors implicitly promotes spectral fidelity: by Parseval’s theorem, the squared time-domain error equals, up to a constant factor, the squared error of the Fourier coefficients. This is supported by studies showing strong correlations between time-domain reconstruction accuracy and spectral preservation in autoencoder-based EEG models [42].
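The link between time-domain error and spectral error can be checked numerically. For NumPy's unnormalised DFT, Parseval's theorem gives sum(|E[k]|^2) = N · sum(e[n]^2), and the reverse triangle inequality bounds the per-bin magnitude error ||X[k]| - |X̂[k]|| by |E[k]|. The sketch below verifies both on synthetic signals. It illustrates a general property and is not taken from the WGLAE code.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 1024
x = rng.standard_normal(n)                   # synthetic "original" channel
x_hat = x + 0.1 * rng.standard_normal(n)     # synthetic "reconstruction"
e = x - x_hat

# Time-domain sum of squared errors (what an MSE loss minimises, up to 1/n)
time_sse = np.sum(e ** 2)

# Parseval for numpy's unnormalised FFT: sum |E[k]|^2 = n * sum e^2
E = np.fft.fft(e)
freq_sse = np.sum(np.abs(E) ** 2) / n

# Error in the magnitude spectra, bounded bin by bin by |E[k]|
X, X_hat = np.fft.fft(x), np.fft.fft(x_hat)
mag_sse = np.sum((np.abs(X) - np.abs(X_hat)) ** 2) / n

print(time_sse, freq_sse, mag_sse)
```

Because the bound holds for every frequency bin, driving the time-domain error towards zero also drives the magnitude-spectrum error towards zero. A small time-domain error can still, however, be distributed unevenly across bands, which is why band-wise checks remain informative.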

While neurophysiological studies establish the spatial basis of EEG signals (e.g., the interpolation of missing channels using splines), our work confirms this computationally by showing that reconstruction improves when spatial gates encode electrode locations. Consistent with prior neurophysiological findings, models incorporating spatial information outperformed random approaches. Thus, our hypothesis is supported by both the established literature and the empirical results of this study.

5.2. Scalability Analysis

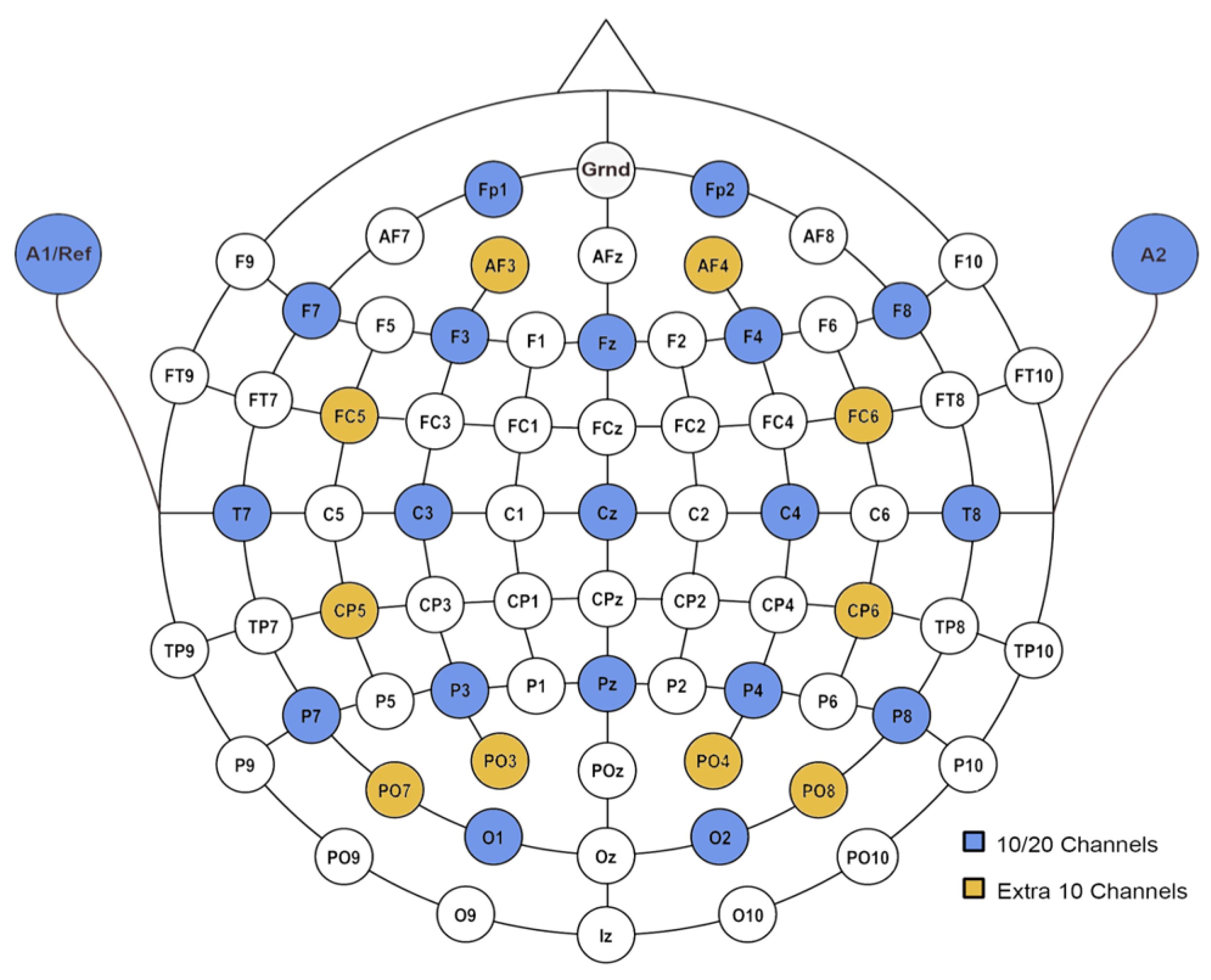

The capabilities of the proposed gate control mechanisms were further tested on the MMIDB with 29 channels. The placement of the 29 channels is illustrated in Figure 2. In this section, the reconstruction accuracy obtained by the different gate control mechanisms is discussed, followed by a comparison with the accuracy obtained on the dataset with 19 channels.

As presented in Table 5, the hemispheric symmetry rotation, partition-based, and order-based gate control mechanisms outperformed the random sequence gate control mechanism in 26, 25, and 26 of the 30 instances, respectively. As on the 19-channel dataset, the hemispheric symmetry rotation gate control mechanism performed best on the resting-state dataset, while the partition-based gate control mechanism showed dominant advantages on the motor-movement dataset. This consistency supports the benefits of aligning EEG sensor locations with the features of active brainwaves. The difference between the results on the 19-channel and 29-channel datasets lies in the motor-imagery protocol: the partition-based gate control mechanism performed best on the 29-channel dataset, whereas the hemispheric symmetry rotation gate control mechanism performed best on the 19-channel dataset. Therefore, dataset characteristics, especially data size, should also be considered when selecting the most suitable gate control mechanism.

For the scalability analysis, we evaluated the performance of the WGLAE model with different gate control mechanisms when reconstructing 1 to 10 missing channels. The mean reconstruction errors on the 19-channel and 29-channel datasets are presented in Figure 5. As the number of missing channels (DVs) increased, the reconstruction errors of all four gate control mechanisms showed an upward trend. This trend is consistent with the general expectation that more input information yields a better model. Notably, the reconstruction accuracy remained above 91% even when more than half of the EEG channels were blocked during WGLAE training, indicating the resilience and reliability of the WGLAE model in recovering real-world EEG signals. The advantages of the proposed gate control mechanisms over the random sequence gate control mechanism tended to decline as the number of missing channels increased. This is likely because, when many channels are blocked simultaneously, all channels within a lobe may be missing, so considering the functions of the cortical lobes offers little benefit. For the same reason, this decline was less pronounced in the 29-channel instances, where more electrodes cover each lobe.
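This explanation can be made concrete with a small calculation. Assume channels are blocked uniformly at random, which is a simplification because the gate mechanisms may order blocking differently. The probability that all s channels of a given region are blocked when k of n channels are missing is then the hypergeometric term C(n - s, k - s) / C(n, k). The region size of 2 used below (e.g., an occipital pair such as O1/O2) is a hypothetical example, and the same region size is assumed for both layouts.

```python
from math import comb


def prob_region_fully_blocked(n_channels, region_size, n_blocked):
    """P(all channels of one region are blocked) when n_blocked of n_channels
    are blocked uniformly at random (hypergeometric probability)."""
    if n_blocked < region_size:
        return 0.0
    return comb(n_channels - region_size, n_blocked - region_size) / comb(n_channels, n_blocked)


# Hypothetical 2-channel region (e.g. an occipital pair) in both layouts
for k in range(1, 11):
    p19 = prob_region_fully_blocked(19, 2, k)
    p29 = prob_region_fully_blocked(29, 2, k)
    print(f"{k:2d} missing: 19-ch {p19:.3f}, 29-ch {p29:.3f}")
# At k = 10: 19-ch 0.263 (= 5/19), 29-ch 0.111
```

For a two-channel region the expression simplifies to k(k - 1) / (n(n - 1)), so the risk of losing a whole region grows roughly quadratically with k and shrinks as the montage becomes denser.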

To examine whether the performance of the random sequence gate control mechanism differed statistically from that of the hemispheric symmetry rotation, partition-based, and order-based gate control mechanisms, a Wilcoxon signed-rank test was conducted [43]. The comparison pooled the reconstruction errors obtained in 10 scenarios (1 to 10 missing channels) over 10 runs. The p-values in Table 6 show that both the hemispheric symmetry rotation and partition-based gate control mechanisms differed significantly from the random sequence gate control mechanism. However, the order-based gate control mechanism did not differ significantly from the random sequence gate control mechanism in the 19-channel configuration, which may be explained by the more limited information available compared with the 29-channel configuration. This result aligns with the aforementioned observations and indicates that the hemispheric symmetry rotation and partition-based gate control mechanisms were more effective in capturing signal patterns.
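A sketch of this test with `scipy.stats.wilcoxon` is shown below. It pools a (scenarios × runs) array of paired errors into 100 matched observations. The error values are synthetic placeholders rather than the results in Table 6, and `compare_to_random` is a hypothetical helper name.

```python
import numpy as np
from scipy.stats import wilcoxon


def compare_to_random(errors_gate, errors_random):
    """Wilcoxon signed-rank test on paired errors shaped (scenarios, runs)."""
    a = np.asarray(errors_gate, dtype=float).ravel()
    b = np.asarray(errors_random, dtype=float).ravel()
    if a.shape != b.shape:
        raise ValueError("errors must be paired one-to-one")
    stat, p = wilcoxon(a, b)  # two-sided by default
    return float(stat), float(p)


# Synthetic placeholder errors: 10 scenarios (1-10 missing channels) x 10 runs
rng = np.random.default_rng(1)
errors_random = rng.normal(0.10, 0.01, size=(10, 10))
errors_gate = errors_random - rng.uniform(0.002, 0.01, size=(10, 10))
stat, p = compare_to_random(errors_gate, errors_random)
print(f"W = {stat:.1f}, p = {p:.2e}")
```

Pairing matters here: each gated error is compared with the random-sequence error from the same scenario and run, so the flattening order of both arrays must match.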

With our hardware setup (a computer with an i7-8550U CPU and 8 GB of RAM), the inference time was between 0.08 and 0.16 s for the 19-channel dataset and between 0.15 and 0.2 s for the 29-channel dataset. This inference speed is compatible with the requirements of efficient, low-latency deployment. The proposed model adopts a structured gating mechanism on a lightweight autoencoder backbone, which keeps forward passes fast, with inference times comparable to those of simple neural networks or linear models.
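Latency figures of this kind are typically obtained by timing repeated forward passes after a warm-up call. The sketch below does so for a hypothetical two-layer NumPy autoencoder standing in for a lightweight backbone. It does not reproduce the WGLAE architecture, and the timings it produces depend entirely on the host machine.

```python
import time

import numpy as np


def make_toy_autoencoder(n_channels, n_samples, latent=64, seed=0):
    """Hypothetical dense autoencoder used only as a timing stand-in."""
    rng = np.random.default_rng(seed)
    d = n_channels * n_samples
    w_enc = rng.standard_normal((d, latent)) / np.sqrt(d)
    w_dec = rng.standard_normal((latent, d)) / np.sqrt(latent)

    def forward(x):
        z = np.tanh(x.reshape(-1) @ w_enc)          # encode to latent code
        return (z @ w_dec).reshape(n_channels, n_samples)  # decode

    return forward


def median_latency(forward, x, repeats=50):
    """Median wall-clock time of a forward pass, after one warm-up call."""
    forward(x)
    times = []
    for _ in range(repeats):
        t0 = time.perf_counter()
        forward(x)
        times.append(time.perf_counter() - t0)
    return float(np.median(times))


x = np.random.default_rng(0).standard_normal((19, 160))
forward = make_toy_autoencoder(19, 160)
latency = median_latency(forward, x)
print(f"median latency: {latency * 1e3:.3f} ms")
```

The median is used rather than the mean so that occasional scheduler or cache hiccups do not dominate the reported figure.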

The experiments adopted 19- and 29-channel configurations, which are common in clinical and BCI applications. In principle, the structured gating principles of the WGLAE extend to high-density systems. In future work, we will evaluate the model on high-density setups and refine the gating mechanisms for high-channel configurations, including hierarchical partitioning and dynamic sparsity adaptation.