6.2. Image Encryption Algorithm

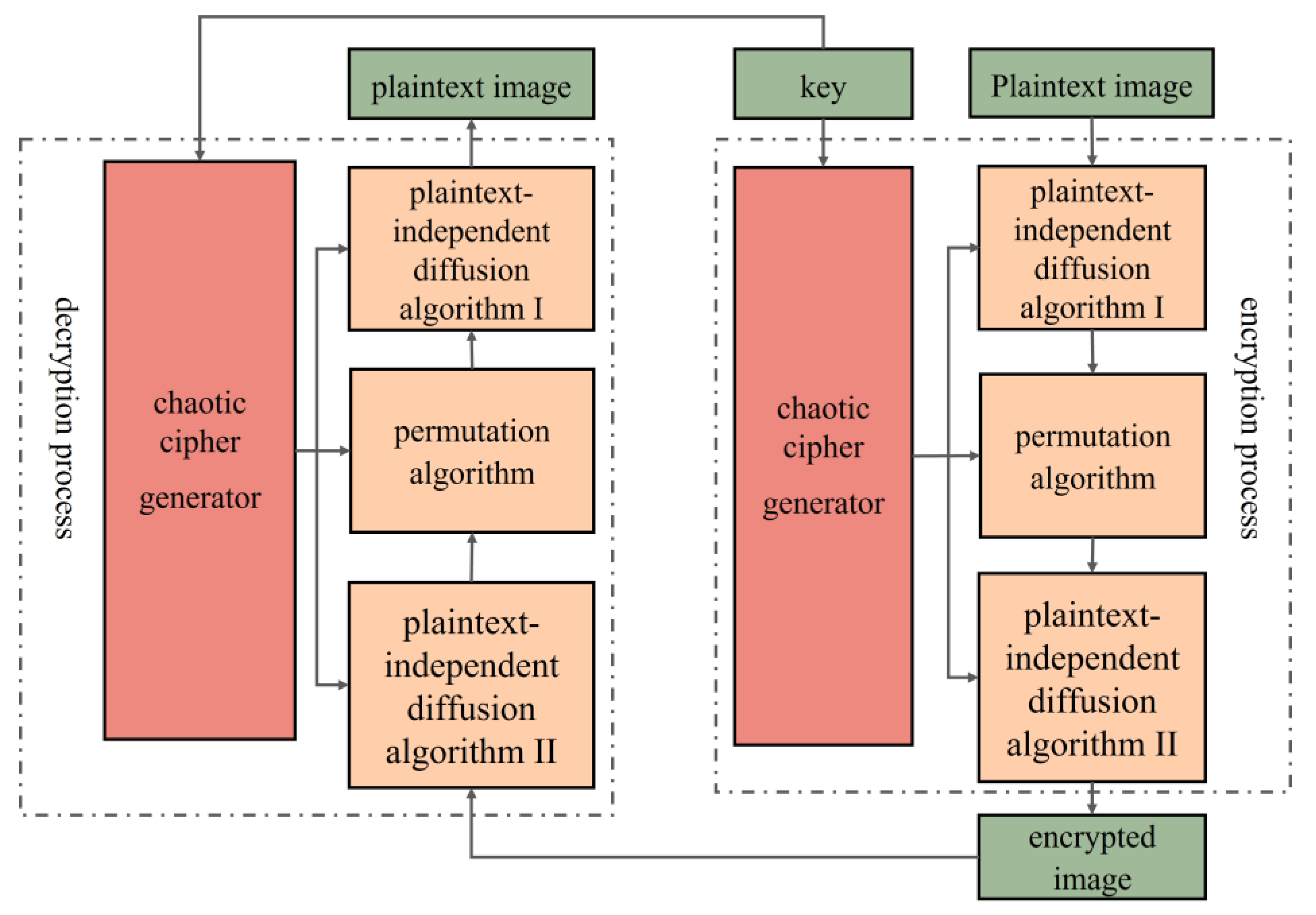

In this paper, we employ an image encryption algorithm that incorporates plaintext-related scrambling, as illustrated in Figure 9. The specific steps are detailed below.

Step 1: Flatten the two-dimensional plaintext image P into a one-dimensional vector of length M × N, where M and N are the numbers of rows and columns of the plaintext image matrix.

Step 2: Two integers, r1 and r2, are randomly selected from the interval [0, 255] and combined with the initial values x0 and y0 of the chaotic system to form the encryption and decryption key, denoted as K = (x0, y0, r1, r2).

Step 3: The two pseudorandom sequences generated by the chaotic system are converted into six independent integer sequences whose elements range from 0 to 255. These integer sequences are then assigned in order to M × N random matrices: X and Y, together with the matrices Z, W, U, and V used in Step 5.

Step 4: Using diffusion algorithm I and the random matrix X, the pixel values at corresponding positions in the plaintext image P are transformed, converting it into a new matrix P1. This process is given by Equation (15), where i ∈ [1, M], j ∈ [1, N], and P(i, j) denotes the grayscale value of the image P at coordinate (i, j).

Step 5: To break the correlation among adjacent pixels in the original image, two integers, k and l, are calculated from the random matrices Z, W, U, and V through Equation (16). The image matrix P1 is then traversed in a specific sequence, and the pixel value at position P1(i, j) is swapped with that at position P1(k, l), yielding the scrambled image P2.

A reversible Arnold matrix T is employed for pixel shuffling to guarantee the reversibility of the encryption. The fixed pixel P2(i, j) is exchanged with the random pixel P2(r, s) to generate the ciphertext image P3, where r and s are determined through Equation (17).

The inverse matrix of T, denoted T⁻¹, plays a crucial part in the decryption operation. The formulations of T and T⁻¹ are presented in Equation (18).

Step 6: Perform a second diffusion operation on the image P3 with the random matrix. In contrast to Step 4, this process runs in reverse, starting from the last pixel of the image and propagating toward the first, which yields the encrypted image P4.
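As a rough illustration of Steps 1, 3, 4, and 6, the following Python sketch chains a forward and a backward diffusion pass and checks that both can be undone. Equation (15) and the chaotic system are not reproduced in this section, so several things here are assumptions made purely for illustration: the logistic map used as a stand-in generator, the byte-quantization rule, the add-then-XOR diffusion rule, the use of r1 and r2 as diffusion seeds, the use of Y in Step 6, and all function names. The scrambling of Step 5 is omitted.

```python
import numpy as np

def chaotic_bytes(x0, count, r=3.99, skip=200):
    """Stand-in generator (assumption): logistic map quantized to 0..255."""
    x = x0
    out = np.empty(count, dtype=np.uint8)
    for _ in range(skip):                  # discard the transient iterations
        x = r * x * (1.0 - x)
    for n in range(count):
        x = r * x * (1.0 - x)
        out[n] = int(x * 1e10) % 256       # assumed quantization rule
    return out

def diffuse(pixels, mask, seed):
    """Assumed rule: c[n] = ((p[n] + c[n-1]) mod 256) XOR mask[n], with c[-1] = seed."""
    out = np.empty_like(pixels)
    prev = seed
    for n in range(pixels.size):
        prev = ((int(pixels[n]) + prev) % 256) ^ int(mask[n])
        out[n] = prev
    return out

def undiffuse(cipher, mask, seed):
    """Inverse of diffuse: p[n] = ((c[n] XOR mask[n]) - c[n-1]) mod 256."""
    out = np.empty_like(cipher)
    prev = seed
    for n in range(cipher.size):
        out[n] = ((int(cipher[n]) ^ int(mask[n])) - prev) % 256
        prev = int(cipher[n])
    return out

def encrypt_diffusion_only(image, x0, y0, r1, r2):
    flat = image.reshape(-1)                       # Step 1: flatten
    X = chaotic_bytes(x0, flat.size)               # Step 3: random matrices
    Y = chaotic_bytes(y0, flat.size)
    p1 = diffuse(flat, X, r1)                      # Step 4: first to last pixel
    p4 = diffuse(p1[::-1], Y[::-1], r2)[::-1]      # Step 6: last to first pixel
    return p4.reshape(image.shape)

def decrypt_diffusion_only(cipher, x0, y0, r1, r2):
    flat = cipher.reshape(-1)
    X = chaotic_bytes(x0, flat.size)
    Y = chaotic_bytes(y0, flat.size)
    p1 = undiffuse(flat[::-1], Y[::-1], r2)[::-1]  # undo Step 6 first
    return undiffuse(p1, X, r1).reshape(cipher.shape)  # then undo Step 4
```

Because each output pixel depends on the previous output, a change in one pixel propagates to all later pixels in the forward pass and to all earlier pixels in the backward pass, which is the motivation for running the two passes in opposite directions.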

Decryption applies the inverse of each encryption step, in the reverse order of the encryption process.
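The reversibility of the scrambling stage can be sketched as follows: a sequence of pixel swaps is undone by replaying the same swaps in reverse order, and an Arnold-type matrix with determinant 1 has an integer inverse. Equations (16) to (18) are not reproduced in this section, so the classical Arnold cat map matrix, the 0-based modular indexing, and the names `arnold_target` and `swap_scramble` are assumptions for illustration only. In particular, the swap targets here do not depend on the pixel values.

```python
import numpy as np

T = np.array([[1, 1], [1, 2]])        # classical Arnold cat map matrix (assumed)
T_INV = np.array([[2, -1], [-1, 1]])  # integer inverse, valid because det(T) = 1

def arnold_target(i, j, n, matrix=T):
    """Map (i, j) to (r, s) on an n x n grid, using 0-based indices modulo n."""
    r, s = (matrix @ np.array([i, j])) % n
    return int(r), int(s)

def swap_scramble(image, targets, inverse=False):
    """Swap pixel (i, j) with pixel targets[i, j] in raster order.

    Replaying the same swaps in reverse raster order undoes the scrambling.
    """
    out = image.copy()
    rows, cols = out.shape
    order = [(i, j) for i in range(rows) for j in range(cols)]
    if inverse:
        order.reverse()
    for i, j in order:
        k, l = targets[i, j]
        out[i, j], out[k, l] = out[k, l], out[i, j]
    return out
```

A possible usage: build `targets` as an array of shape (n, n, 2) holding `arnold_target(i, j, n)` for every position, call `swap_scramble(image, targets)` to scramble, and call it again with `inverse=True` to recover the input.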

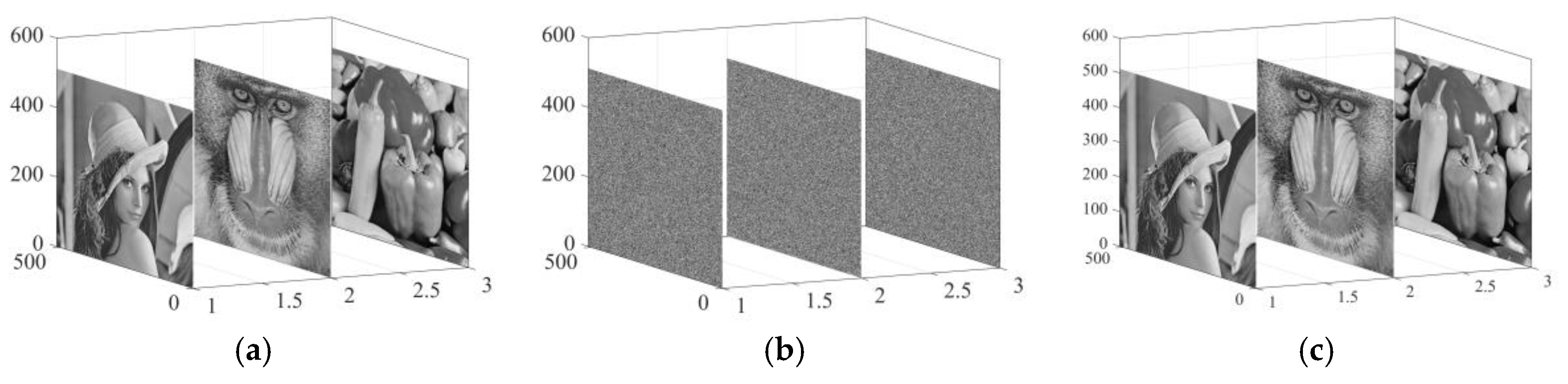

Encryption and decryption tests were performed using three well-known images (Lena, Baboon, and Peppers) from the publicly accessible USC-SIPI image database. The results are presented in Figure 10: Figure 10a shows the original images, Figure 10b the corresponding encrypted images, and Figure 10c the decrypted images. As Figure 10b shows, the encrypted images appear random and thoroughly scrambled, effectively concealing the information of the original images.

6.3. Statistical Analysis of Encryption

Several established statistical tests are effective tools for assessing the performance of encryption systems and the quality of their results. Accordingly, histograms, the χ² test, information entropy, the mean squared error (MSE), the peak signal-to-noise ratio (PSNR), the correlation coefficient, the number of pixels change rate (NPCR), the unified average changing intensity (UACI), and the block average changing intensity (BACI) are employed to evaluate the proposed encryption system and its results. Furthermore, the resilience of the proposed algorithm to noise and data loss is explored by applying salt-and-pepper noise and cropping.

Plaintext sensitivity analysis encrypts two slightly different plaintext images with the same key and compares the two resulting ciphertext images. If the differences are significant, the image encryption system has good plaintext sensitivity; if they are small, the system has weak plaintext sensitivity and generally cannot resist chosen-plaintext or known-plaintext attacks. The two plaintext images can be obtained by slightly modifying the value of one or several pixels in a given plaintext image. For example, randomly selecting a pixel (i, j) from a plaintext image P1, changing its value to P1(i, j) + 1, and reducing the result modulo 256 yields a plaintext image P2 that differs only slightly from P1. The NPCR, UACI, and BACI metrics are calculated 200 times, and their mean values across all trials are then determined. The test outcomes presented in Table 2 demonstrate that the proposed image encryption system exhibits strong plaintext sensitivity.
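The one-pixel modification and two of the difference metrics can be sketched in Python as follows. The NPCR and UACI expressions are the commonly used definitions, which this section does not state explicitly, and the function names are illustrative. No encryption routine is included: in a test of the kind described above, both P1 and P2 would first be encrypted with the same key, and the metrics would be computed on the two ciphertext images.

```python
import numpy as np

def perturb_one_pixel(image, rng):
    """Return a copy in which one randomly chosen pixel is incremented by 1 modulo 256."""
    out = image.copy()
    i = rng.integers(0, image.shape[0])
    j = rng.integers(0, image.shape[1])
    out[i, j] = (int(out[i, j]) + 1) % 256
    return out

def npcr(c1, c2):
    """Percentage of pixel positions at which the two images differ."""
    return float(100.0 * np.mean(c1 != c2))

def uaci(c1, c2):
    """Mean absolute pixel difference, normalized by 255, as a percentage."""
    diff = np.abs(c1.astype(np.int16) - c2.astype(np.int16))
    return float(100.0 * np.mean(diff / 255.0))
```

For two independent, uniformly random 8-bit images, these functions return values close to the ideal figures of 99.6094% and 33.4635% quoted for completely different images.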

Histograms show the distribution of pixel values and thus describe how the image information is distributed. Comparing the histograms of the original images in Figure 11a–c with those of the encrypted images in Figure 11d–f shows that the histogram changes from a fluctuating pattern to a nearly uniform one after encryption.

The χ² test is a commonly employed technique for evaluating histogram differences between original and encrypted images. With a significance level α = 0.05, the critical value for a grayscale image with 256 possible gray levels (255 degrees of freedom) is $\chi^2_{0.05}(255) = 293.24783$. The results are presented in Table 3. Notably, the χ² values for the three original images are considerably higher than 293.24783, while those for their encrypted counterparts are all below 293.24783, suggesting that the distributions in Figure 11d–f are nearly uniform [33].
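A minimal sketch of the χ² computation is given below. It assumes the standard Pearson statistic against a uniform expected frequency of M × N / 256 per gray level; the section does not reproduce the exact formula used, and the function name is illustrative.

```python
import numpy as np

def chi_square_statistic(image, levels=256):
    """Pearson chi-square of the gray-level histogram against a uniform histogram."""
    observed = np.bincount(image.ravel(), minlength=levels).astype(np.float64)
    expected = image.size / levels      # same expected count for every gray level
    return float(np.sum((observed - expected) ** 2 / expected))
```

Under this assumption, a histogram is considered consistent with a uniform distribution at α = 0.05 when the returned value is below the critical value 293.24783.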

Information entropy (IE) measures the uncertainty of the data within an image; higher values indicate greater randomness and disorder. It is therefore a significant indicator of how secure the encrypted results are. Information entropy is computed as given in Equation (20).

$$H = -\sum_{i=0}^{L-1} P(x_i)\,\log_2 P(x_i) \tag{20}$$

Here, L represents the number of gray levels of the image; in our encryption scheme, L = 256. P(xi) is the probability of a pixel taking the value xi. For an image with 256 gray levels, the theoretical maximum of the information entropy H is eight. Thus, the closer the entropy of the encrypted image is to eight, the more secure the encryption scheme. Table 4 presents the results of the IE test for the proposed encryption system. The entropies of the three encrypted images are very close to the theoretical value. Furthermore, comparisons with references [22, 34, 35, 36] confirm the high level of randomness and disorder in the encrypted images.
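The entropy measure can be sketched in Python as follows, assuming the usual Shannon form with probabilities estimated from the gray-level histogram; the function name is illustrative.

```python
import numpy as np

def information_entropy(image, levels=256):
    """Shannon entropy in bits of the gray-level distribution of an 8-bit image."""
    counts = np.bincount(image.ravel(), minlength=levels)
    probs = counts[counts > 0] / image.size   # drop empty bins, since 0 * log2(0) is taken as 0
    return float(-np.sum(probs * np.log2(probs)))
```

An image in which all 256 gray levels occur equally often reaches the maximum of eight bits, while a constant image has an entropy of zero.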

MSE quantifies the average squared difference in pixel values between two images. Consequently, a higher MSE, which reflects greater dissimilarity between the original image and its encrypted counterpart, implies higher-quality encryption. Typically, an MSE value greater than 30 dB [37] indicates a significant disparity between the two images. The MSE is computed as follows:

$$\mathrm{MSE} = \frac{1}{M \times N} \sum_{i=1}^{M} \sum_{j=1}^{N} \left[ P(i, j) - Q(i, j) \right]^2$$

In this context, P(i, j) and Q(i, j) are the pixel values of the original and encrypted images at coordinates (i, j), respectively, and the image has M rows and N columns. To assess encryption quality, the peak signal-to-noise ratio (PSNR) serves as a critical metric, which can be formulated as [38]

$$\mathrm{PSNR} = 10 \log_{10} \frac{P_{\max}^{2}}{\mathrm{MSE}}$$

Here, Pmax denotes the maximum pixel value in the image; in our tests, Pmax is 255. In contrast to MSE, a lower PSNR indicates better encryption, because PSNR decreases as MSE increases. The MSE and PSNR results between the original and encrypted versions of the images Lena, Baboon, and Peppers are displayed in Table 5. The MSE values for all three images exceed 30 dB, and the PSNR values of the proposed encryption scheme are comparable to or even lower than those reported in references [22, 34, 35, 36]. These numerical results further validate the robustness of the encryption outcomes.
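The two measures can be sketched in Python as follows, using the standard definitions of MSE and PSNR with Pmax = 255; the function names are illustrative.

```python
import numpy as np

def mse(p, q):
    """Mean squared difference between two images of identical shape."""
    diff = p.astype(np.float64) - q.astype(np.float64)
    return float(np.mean(diff ** 2))

def psnr(p, q, p_max=255.0):
    """Peak signal-to-noise ratio in dB; infinite when the images are identical."""
    err = mse(p, q)
    if err == 0.0:
        return float("inf")
    return float(10.0 * np.log10(p_max ** 2 / err))
```

Because the MSE appears in the denominator of the logarithm, a larger difference between the plaintext and ciphertext images lowers the PSNR.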

The correlation coefficient is a crucial metric for evaluating the relationship between neighboring pixels within an image. The correlation coefficient between adjacent pixels, denoted as Cxy, is formulated as in [39, 40, 41], where X and Y denote the arithmetic mean values of the pixel sequences x(i) and y(i), respectively.

Table 6 provides the correlation coefficients of the plaintext images and their encrypted counterparts in four directions. The plaintext images show high correlation coefficients, often close to one in all directions. In contrast, the encrypted images produced by our encryption technique have substantially lower correlation coefficients, nearly zero in every direction, which indicates that neighboring pixels in the encrypted images are essentially uncorrelated. Furthermore, a comparison of our findings with those reported in references [22, 34, 35, 36] supports the robustness and effectiveness of our encryption method.
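A minimal Python sketch of an adjacent-pixel correlation test is given below. It assumes the Pearson correlation coefficient computed over all adjacent pixel pairs, and it assumes that the four directions are horizontal, vertical, diagonal, and anti-diagonal; the section names neither the directions nor the sampling scheme, and the function name is illustrative.

```python
import numpy as np

def adjacent_correlation(image, direction="horizontal"):
    """Pearson correlation between each pixel and its neighbor in one direction."""
    img = image.astype(np.float64)
    if direction == "horizontal":
        x, y = img[:, :-1], img[:, 1:]
    elif direction == "vertical":
        x, y = img[:-1, :], img[1:, :]
    elif direction == "diagonal":
        x, y = img[:-1, :-1], img[1:, 1:]
    elif direction == "anti-diagonal":
        x, y = img[:-1, 1:], img[1:, :-1]
    else:
        raise ValueError(f"unknown direction: {direction}")
    x, y = x.ravel(), y.ravel()
    dx, dy = x - x.mean(), y - y.mean()   # deviations from the sequence means
    return float(np.sum(dx * dy) / np.sqrt(np.sum(dx ** 2) * np.sum(dy ** 2)))
```

A smooth image such as a linear ramp gives values close to one in every direction, whereas an image of independent random pixels gives values close to zero.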

Key sensitivity and spatial analysis: NPCR (Number of Pixels Change Rate), UACI (Unified Average Changing Intensity), and BACI (Block Average Changing Intensity) are crucial parameters for judging the effectiveness of an encryption system. These metrics quantify the disparity between a pair of images. For two completely different images, the ideal values of NPCR, UACI, and BACI are 99.6094%, 33.4635%, and 26.7712%, respectively. The metrics are computed on pairs of images with identical dimensions; here, the test pairs are ciphertext images produced with different keys, so that evaluating NPCR, UACI, and BACI probes the key sensitivity of the encryption system.
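The BACI metric can be sketched as follows. The formula is not given in this section, so the code assumes the definition commonly found in the literature: the absolute-difference image is divided into overlapping 2 × 2 blocks, the six pairwise absolute differences within each block are averaged, and the mean over all blocks is normalized by 255. The function name is illustrative.

```python
import numpy as np

def baci(c1, c2):
    """Block average changing intensity (percent) over overlapping 2 x 2 blocks."""
    d = np.abs(c1.astype(np.int16) - c2.astype(np.int16)).astype(np.float64)
    d1, d2 = d[:-1, :-1], d[:-1, 1:]    # top-left and top-right of each block
    d3, d4 = d[1:, :-1], d[1:, 1:]      # bottom-left and bottom-right
    m = (np.abs(d1 - d2) + np.abs(d1 - d3) + np.abs(d1 - d4)
         + np.abs(d2 - d3) + np.abs(d2 - d4) + np.abs(d3 - d4)) / 6.0
    return float(100.0 * np.mean(m) / 255.0)
```

Under this assumed definition, two independent, uniformly random 8-bit images give a value close to the ideal figure of 26.7712% quoted above.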

In our experiments, the initial values x0 and y0 of the chaotic map, being integral parts of the key, were treated as the controlled variables. The system parameters (a, k, c1, h) were set to (0.5, 2.654, 1, 1). During testing, the initial values X0 and Y0 were randomly chosen from the key interval, and the unit of variation for the private key was set to 10⁻¹³. Several sets of keys were then produced using (x0, y0) = (X0 + n⋅10⁻¹³, Y0 + m⋅10⁻¹³), where n and m are integers, to create multiple encrypted images, resulting in 600 key sets in which x0 and y0 change continuously. To evaluate the key sensitivity, the NPCR, UACI, and BACI metrics were computed on the ciphertext images before and after consecutive key alterations. The final results, obtained by averaging all of the test findings, are given in Table 7.
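The key schedule and the effect of a 10⁻¹³ perturbation can be sketched in Python as follows. The chaotic system of this paper is not reproduced in this section, so a logistic map serves as a stand-in generator; the quantization rule, the choice n = m, and the function names are likewise assumptions for illustration only.

```python
import numpy as np

STEP = 1e-13  # unit of key variation stated in the text

def key_grid(X0, Y0, count):
    """Keys (x0, y0) = (X0 + n*STEP, Y0 + m*STEP), here with n = m = 0..count-1 (assumed)."""
    return [(X0 + n * STEP, Y0 + n * STEP) for n in range(count)]

def logistic_bytes(x0, count, r=3.99, skip=200):
    """Stand-in chaotic byte stream (assumption): logistic map quantized to 0..255."""
    x = x0
    for _ in range(skip):              # let the tiny initial difference grow
        x = r * x * (1.0 - x)
    out = np.empty(count, dtype=np.uint8)
    for n in range(count):
        x = r * x * (1.0 - x)
        out[n] = int(x * 1e10) % 256   # assumed quantization rule
    return out

def stream_difference(key_a, key_b, count=2000):
    """Fraction of positions at which the byte streams of two x0 values differ."""
    a = logistic_bytes(key_a[0], count)
    b = logistic_bytes(key_b[0], count)
    return float(np.mean(a != b))
```

With this stand-in map, `stream_difference` applied to two consecutive keys from `key_grid` indicates how strongly a single 10⁻¹³ step changes the generated sequence; in the actual test, the comparison is made on the ciphertext images through NPCR, UACI, and BACI.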

It is evident that the experimental results closely align with the theoretical values. Notably, some of the results even surpassed the expected benchmarks. From this, it can be inferred that subtle modifications to the key have the potential to induce substantial transformations in the encrypted image. This, in turn, validates the encryption system’s responsiveness to key changes.

These tests show that the ciphertext images before and after a key adjustment as small as 10⁻¹³ are completely different, highlighting the extraordinary sensitivity of the system to key changes. Therefore, 10⁻¹³ can be taken as the smallest distinguishable step when determining the key space of the encryption system. To ensure that the system remains chaotic throughout the key space, the ranges of x0, y0, and c1 are set to [0, 5], [0, 1.5], and [4.3, 5], respectively. Within these ranges, x0 in combination with the other variables produces highly random chaotic sequences, and the combination of y0 and z0 can also generate such sequences. The overall key space is calculated as (10⁹ × 10¹³ × 1.5 × 10¹³ × 0.7 × 10¹³) = 1.05 × 10⁴⁰. This value exceeds 2¹⁰⁰, implying that the proposed encryption system can resist brute-force attacks [42].
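Taking the stated key-space size of 1.05 × 10⁴⁰ at face value, the comparison with 2¹⁰⁰ can be checked by converting it to a power of two:

$$\log_2\!\left(1.05 \times 10^{40}\right) = 40 \log_2 10 + \log_2 1.05 \approx 132.88 + 0.07 = 132.95, \qquad \text{so} \qquad 1.05 \times 10^{40} \approx 2^{132.95} > 2^{100}.$$

For reference, 2¹⁰⁰ ≈ 1.27 × 10³⁰, so the stated key space is roughly ten orders of magnitude larger.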

During transmission through public channels, images may be affected by additional noise or data loss. To evaluate the resilience of the proposed algorithm against such disturbances, we add random impulse noise to the encrypted images or crop them before decryption. The outcomes are displayed in Figure 12. Panels (a1–a3) show the Baboon encrypted image under data loss of 1/64, 1/8, and 1/4, respectively; panels (b1–b3) show the corresponding decrypted images; panels (c1–c3) show the encrypted images with 1%, 5%, and 10% random impulse noise, respectively; and panels (d1–d3) show the corresponding decrypted images. These results indicate that the algorithm can retrieve most of the essential information from images subjected to various levels of cropping. The tests underscore the ability of the proposed encryption system to withstand noise attacks and data loss.
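The two disturbances can be sketched in Python as follows. The exact noise model and the location of the cropped region are not specified in this section, so the equal split between 0 and 255 for corrupted pixels, the zeroed top-left block, and the function names are assumptions for illustration.

```python
import numpy as np

def add_salt_pepper(cipher, density, rng):
    """Set roughly a `density` fraction of pixels to 0 or 255 with equal probability."""
    out = cipher.copy()
    hit = rng.random(cipher.shape) < density     # pixels to corrupt
    salt = rng.random(cipher.shape) < 0.5        # choose 255 (salt) or 0 (pepper)
    out[hit & salt] = 255
    out[hit & ~salt] = 0
    return out

def crop_fraction(cipher, fraction):
    """Zero a top-left block covering about `fraction` of the image area."""
    out = cipher.copy()
    rows = int(round(cipher.shape[0] * fraction ** 0.5))
    cols = int(round(cipher.shape[1] * fraction ** 0.5))
    out[:rows, :cols] = 0
    return out
```

In a robustness test of the kind described above, the disturbed ciphertext image would then be decrypted with the correct key and compared with the original plaintext image.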