Augmenting Orbital Debris Identification with Neo4j-Enabled Graph-Based Retrieval-Augmented Generation for Multimodal Large Language Models

Abstract

1. Introduction

- We propose a novel application of Graph-based Retrieval-Augmented Generation (GraphRAG) in the domain of orbital debris identification, demonstrating its potential to augment the performance of multimodal large language models.

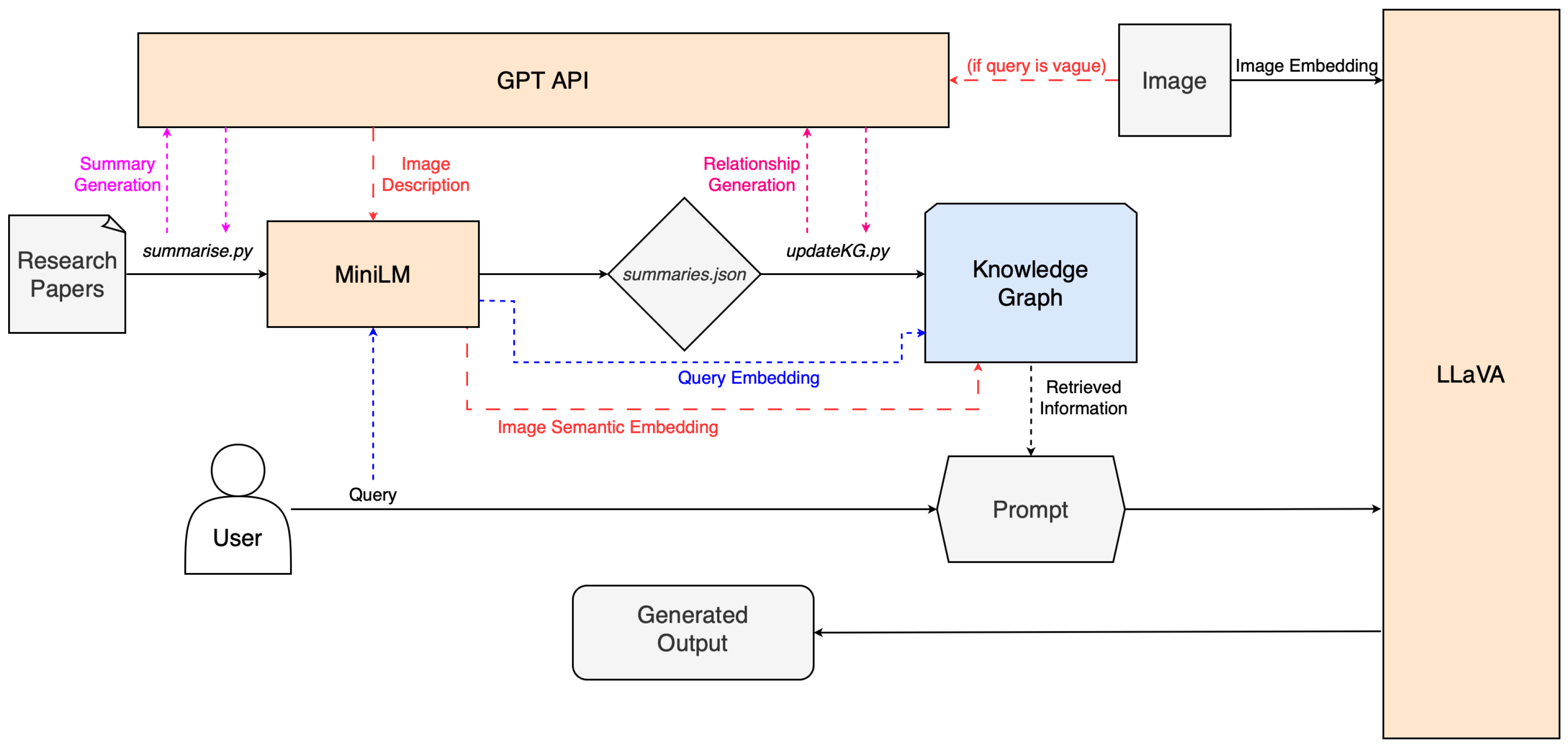

- We design and implement a hybrid architecture that integrates a Neo4j knowledge graph with the LLaVA multimodal LLM, supporting contextual augmentation of image-grounded responses.

- We present a complete end-to-end system pipeline, including knowledge graph construction, query embedding, document retrieval, and prompt engineering for multimodal inference.

- We conduct a case study using the SPARK dataset, evaluating the performance of the GraphRAG-augmented model against both its base version and commercial alternatives (e.g., GPT-4o, Gemini).

- We demonstrate that integrating retrieved domain-specific knowledge reduces hallucinations and qualitatively improves the factual grounding of responses to space imagery-related queries.
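As a rough illustration of the pipeline stages listed above (query embedding, document retrieval, and prompt construction), the following is a minimal sketch rather than the system's implementation. It assumes a toy bag-of-words vector in place of a sentence-embedding model and an in-memory list of corpus titles in place of the Neo4j knowledge graph; the function names `embed`, `retrieve`, and `build_prompt` are hypothetical, and the image would be passed to the multimodal model separately.

```python
import math
import re
from collections import Counter

# Titles from the paper's corpus list; a real graph would store full text chunks.
CORPUS = [
    "Detecting, Tracking and Imaging Space Debris",
    "A Deep Learning Approach for Satellite and Debris Detection: YOLO in Action",
    "CosmosDSR - a methodology for automated detection and tracking of orbital debris using the Unscented Kalman Filter",
    "Deep learning-based space debris detection for space situational awareness: A feasibility study applied to the radar processing",
    "Space Debris In-Orbit Detection with Commercial Automotive LiDAR Sensors",
]


def embed(text):
    """Toy bag-of-words vector (assumption: stands in for a sentence-embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, k=2):
    """Return the k corpus entries most similar to the query."""
    q = embed(query)
    ranked = sorted(CORPUS, key=lambda doc: cosine(q, embed(doc)), reverse=True)
    return ranked[:k]


def build_prompt(query, context):
    """Prepend retrieved context to the user query (hypothetical prompt layout)."""
    lines = ["Use the retrieved context when answering.", "Context:"]
    lines += [f"- {c}" for c in context]
    lines.append("Question: " + query)
    return "\n".join(lines)


query = "Which paper uses LiDAR sensors to detect debris?"
prompt = build_prompt(query, retrieve(query, k=1))
print(prompt)
```

Swapping `embed` for a learned embedding model and `CORPUS` for a graph query would leave the retrieval and prompt-assembly steps structurally unchanged.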

2. Background

2.1. Large Language Models

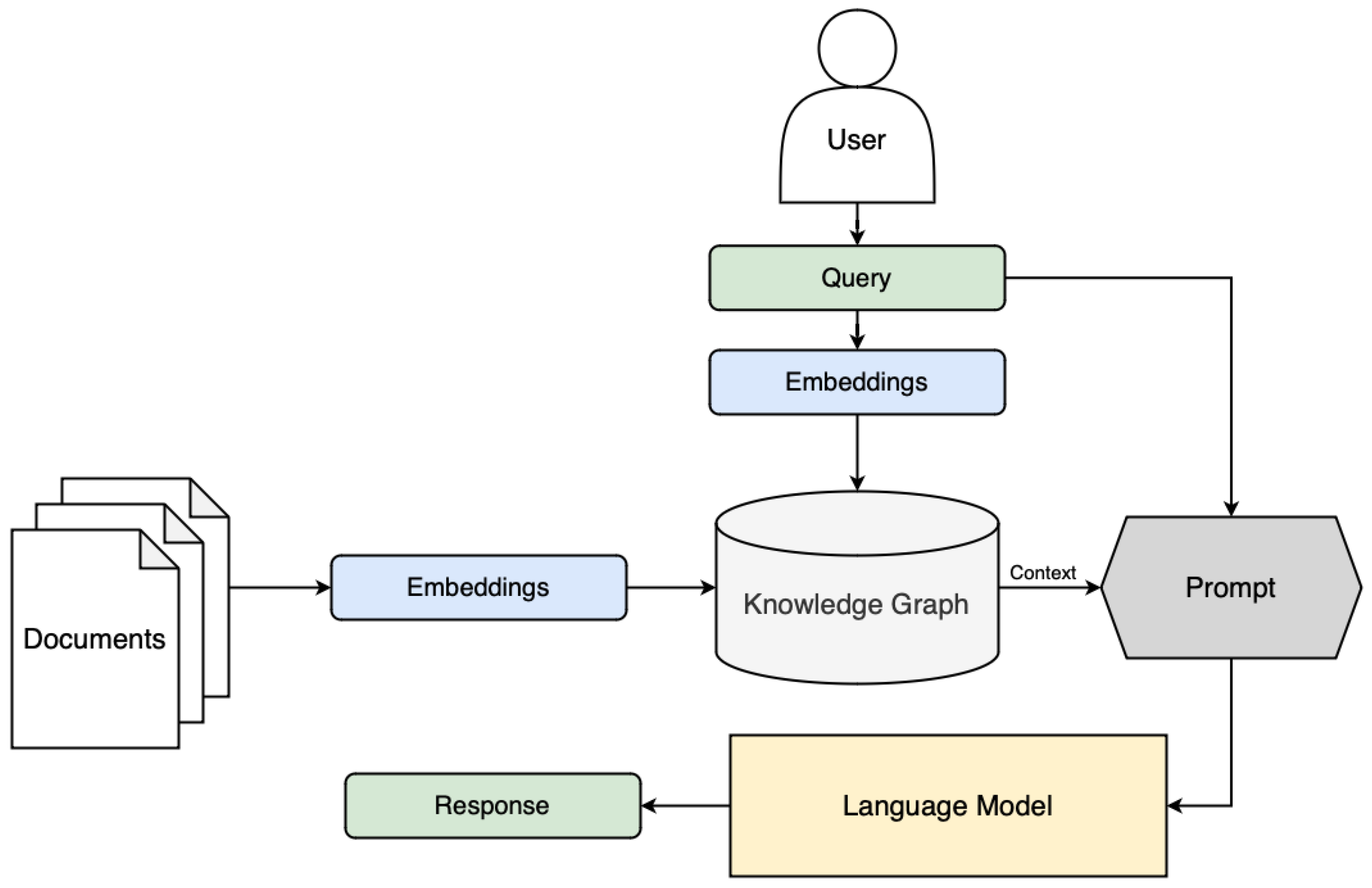

2.2. Retrieval-Augmented Generation

2.3. GraphRAG

2.4. Related Work

3. Technical Overview

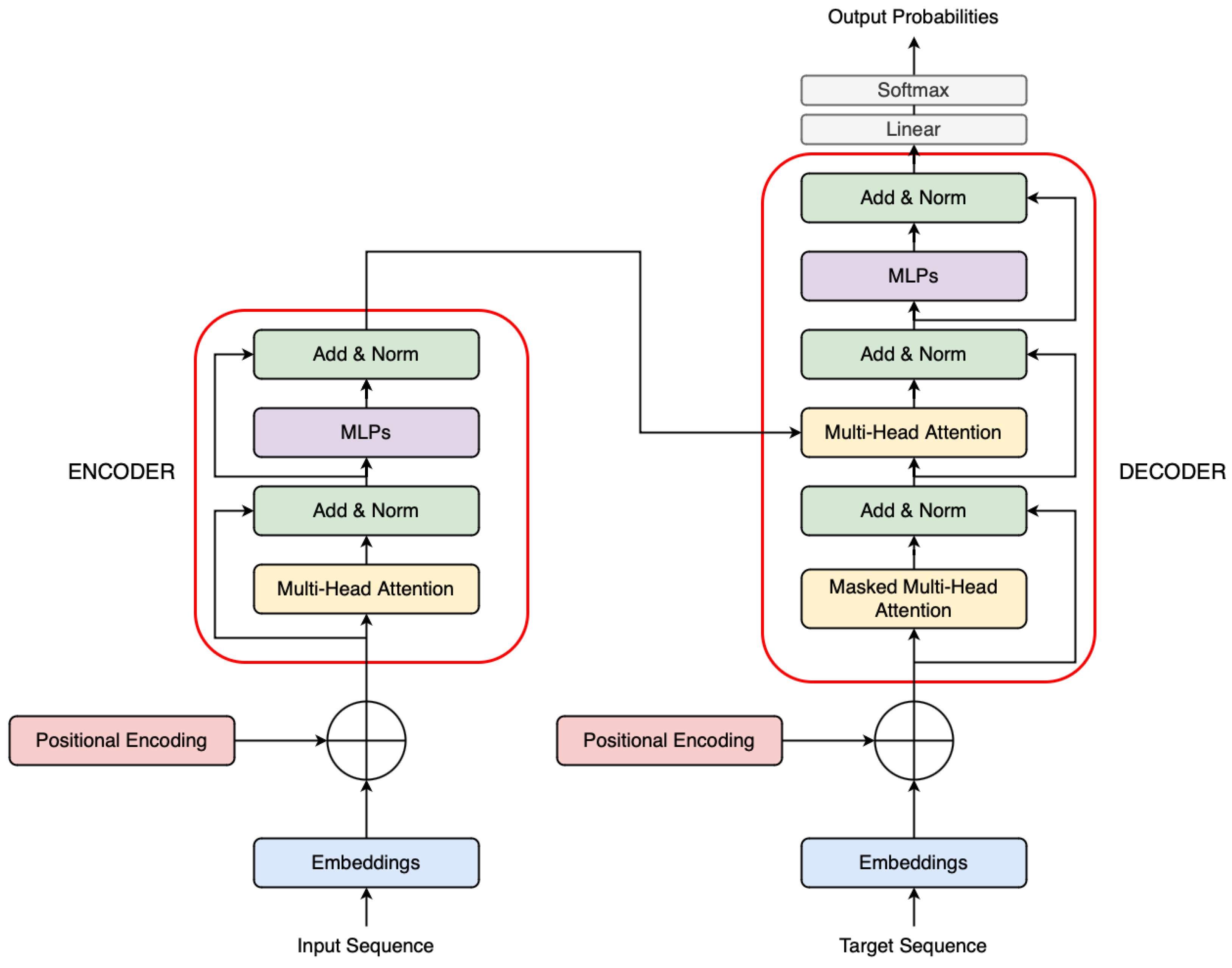

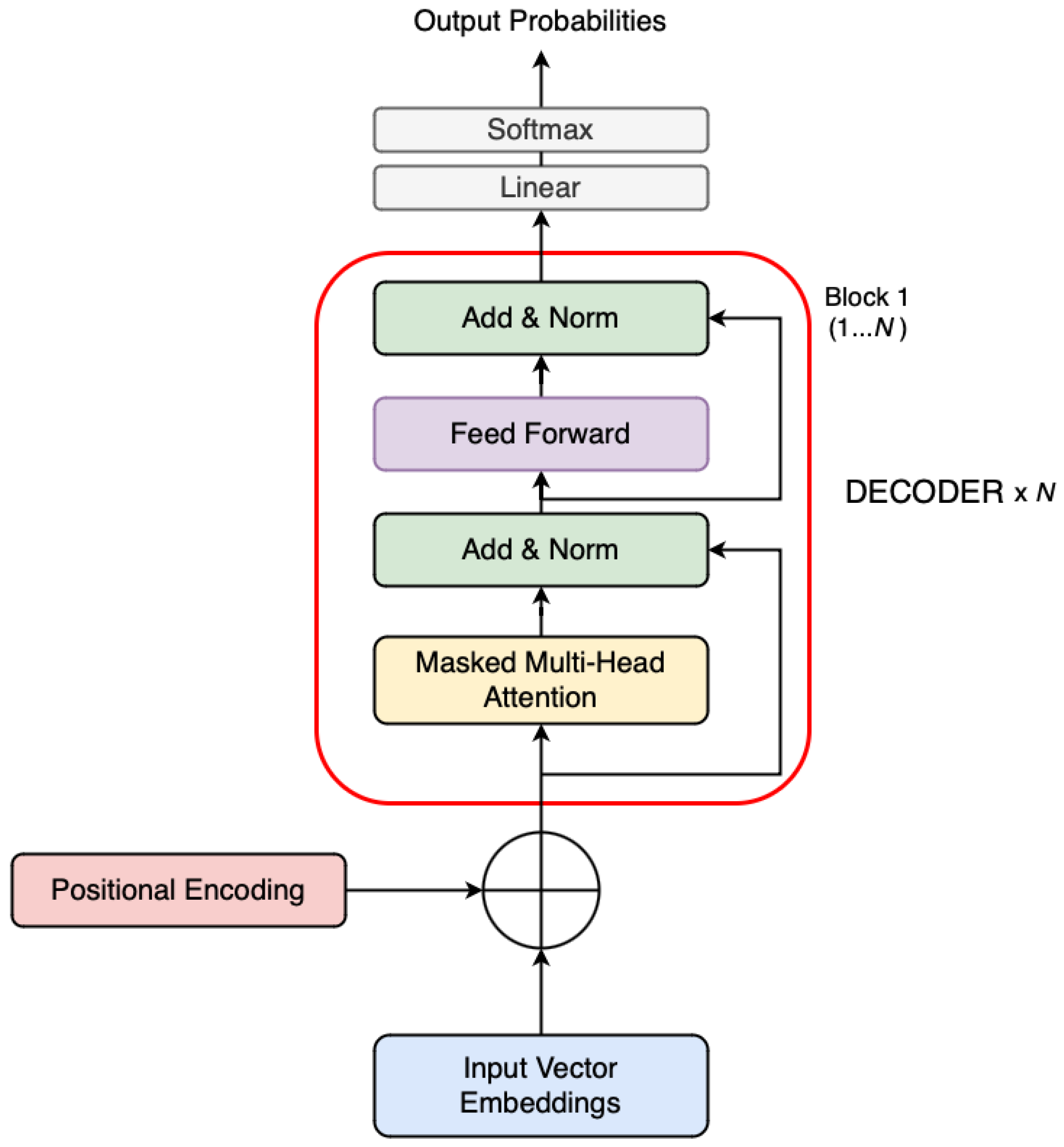

3.1. LLM Architecture

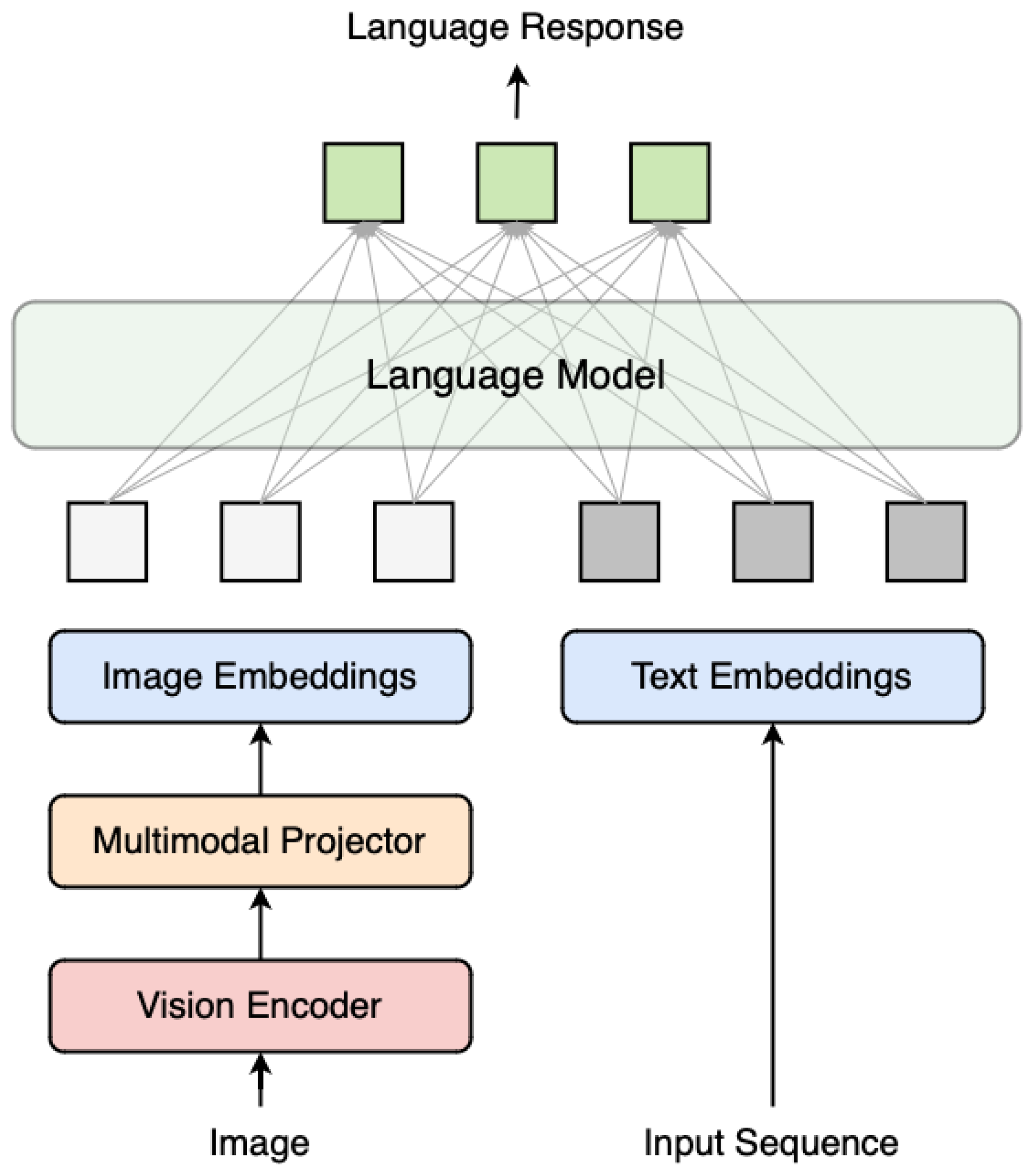

3.2. Multimodality

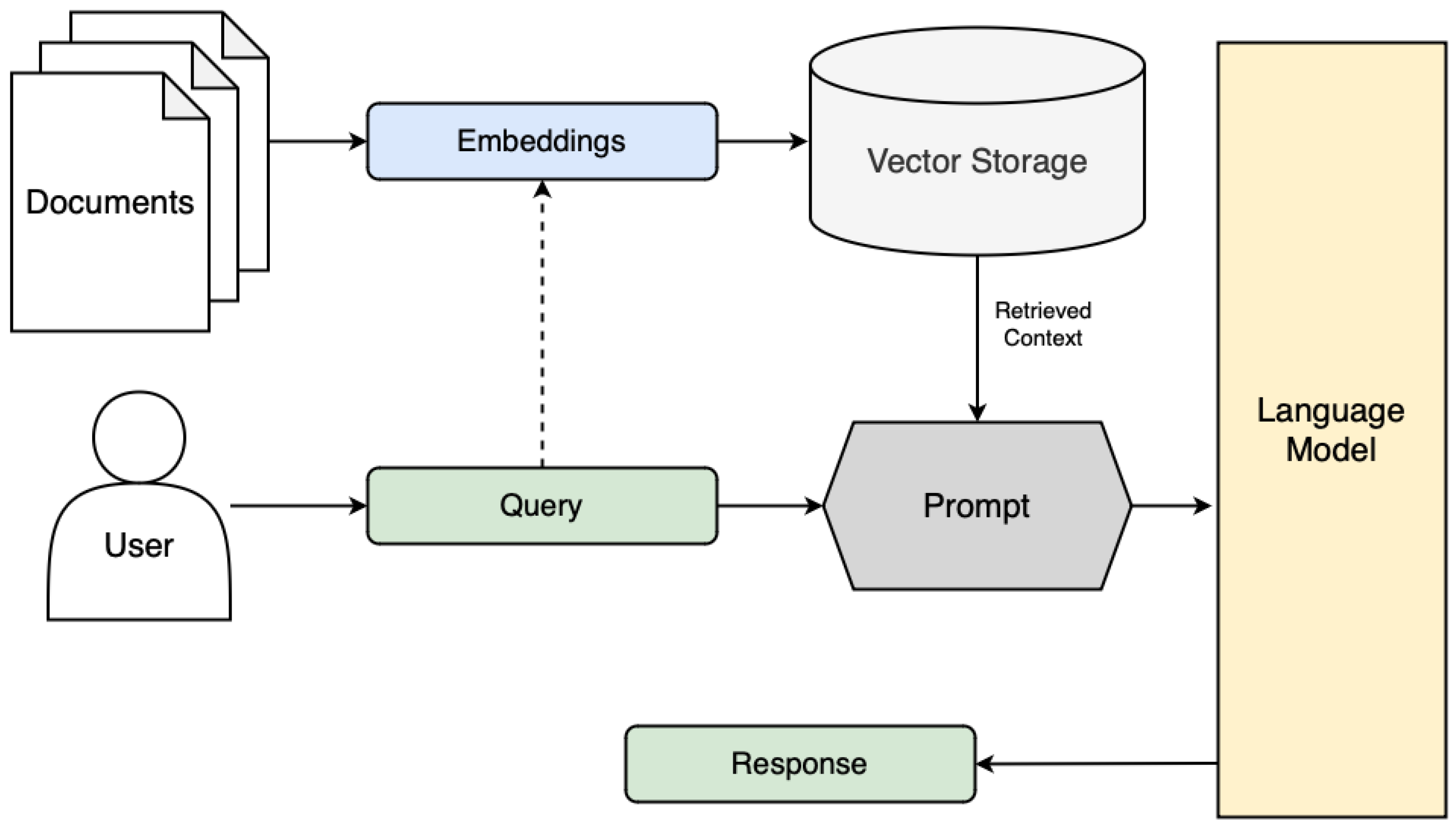

3.3. Retrieval Augmented Generation

3.4. GraphRAG

4. GraphRAG for Orbital Debris

4.1. Proposed Architecture

4.2. LLaVA Setup

4.3. GraphRAG Setup

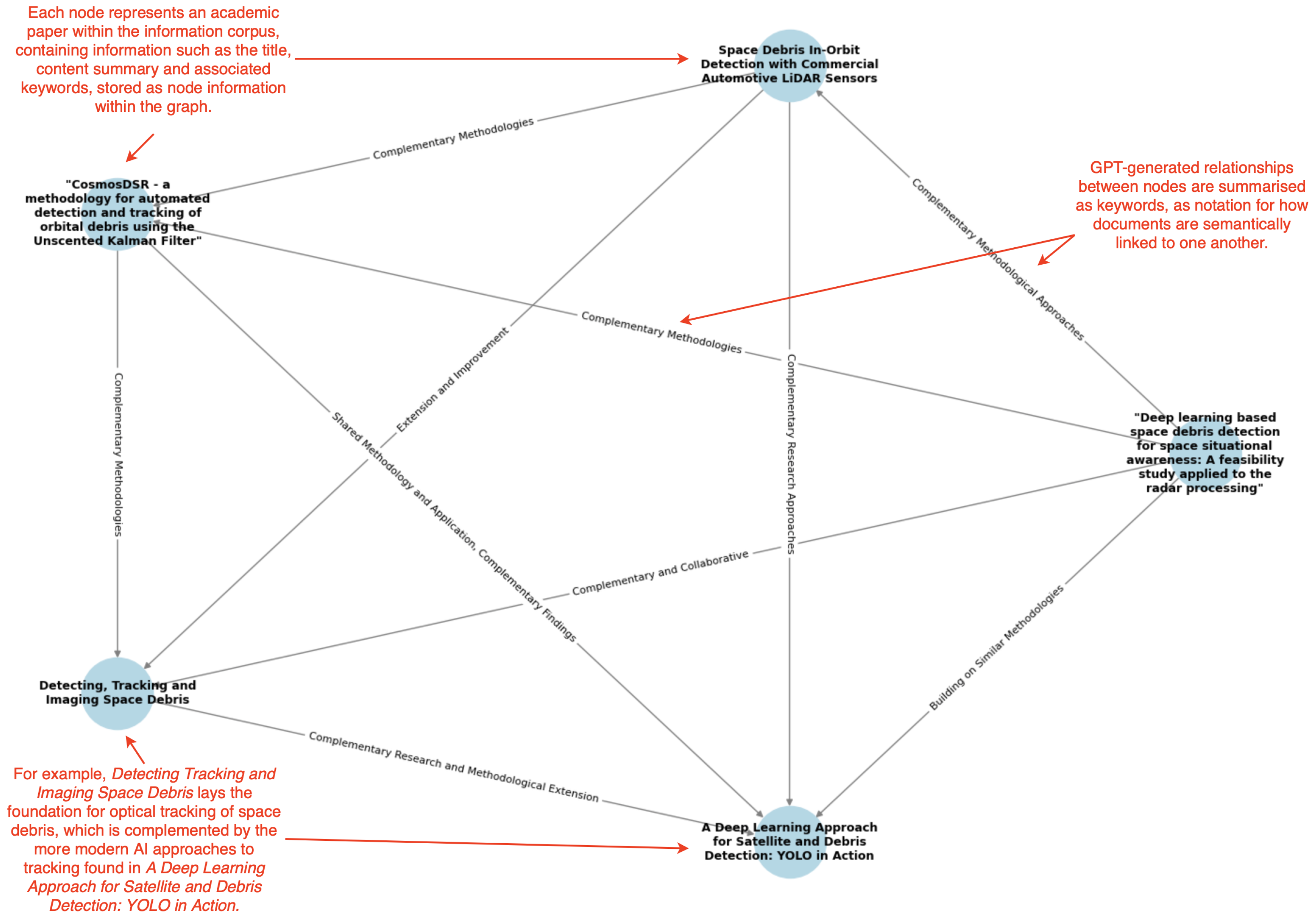

Corpus Selection

- “Detecting, Tracking and Imaging Space Debris” by Mehrholz, Leushacke, Flury, Jehn, Klinkrad and Landgraf [41]

- “A Deep Learning Approach for Satellite and Debris Detection: YOLO in Action” by Ahamed, Bin Syed, Chatterjee and Bin Habib [42]

- “CosmosDSR - a methodology for automated detection and tracking of orbital debris using the Unscented Kalman Filter” by Roll, Kurt and Woo [8]

- “Deep learning-based space debris detection for space situational awareness: A feasibility study applied to the radar processing” by Massimi, Ferrara, Petrucci and Benedetto [7]

- “Space Debris In-Orbit Detection with Commercial Automotive LiDAR Sensors” by Lopez-Calle [9]
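One way the corpus above could be loaded into a property graph is sketched below. This is an assumption-laden example, not the authors' schema: the node labels `Paper` and `Author`, the relationship type `AUTHORED`, and the helper `build_statements` are hypothetical. The code only generates parameterised Cypher statements, so it runs without a database.

```python
# Corpus metadata taken from the list above; "ref" is the citation number in the paper.
CORPUS = [
    {"ref": 41, "title": "Detecting, Tracking and Imaging Space Debris",
     "authors": ["Mehrholz", "Leushacke", "Flury", "Jehn", "Klinkrad", "Landgraf"]},
    {"ref": 42, "title": "A Deep Learning Approach for Satellite and Debris Detection: YOLO in Action",
     "authors": ["Ahamed", "Bin Syed", "Chatterjee", "Bin Habib"]},
    {"ref": 8, "title": "CosmosDSR - a methodology for automated detection and tracking of orbital debris using the Unscented Kalman Filter",
     "authors": ["Roll", "Kurt", "Woo"]},
    {"ref": 7, "title": "Deep learning-based space debris detection for space situational awareness: A feasibility study applied to the radar processing",
     "authors": ["Massimi", "Ferrara", "Petrucci", "Benedetto"]},
    {"ref": 9, "title": "Space Debris In-Orbit Detection with Commercial Automotive LiDAR Sensors",
     "authors": ["Lopez-Calle"]},
]

# Hypothetical schema: (:Author)-[:AUTHORED]->(:Paper)
MERGE_PAPER = "MERGE (p:Paper {title: $title}) SET p.ref = $ref"
MERGE_AUTHOR = (
    "MATCH (p:Paper {title: $title}) "
    "MERGE (a:Author {name: $name}) "
    "MERGE (a)-[:AUTHORED]->(p)"
)


def build_statements(corpus):
    """Return (cypher, parameters) pairs that create papers, authors, and their links."""
    statements = []
    for paper in corpus:
        statements.append((MERGE_PAPER, {"title": paper["title"], "ref": paper["ref"]}))
        for name in paper["authors"]:
            statements.append((MERGE_AUTHOR, {"title": paper["title"], "name": name}))
    return statements


statements = build_statements(CORPUS)
print(len(statements), "statements generated")
```

Each pair could then be executed through a Neo4j driver session. Using `MERGE` rather than `CREATE` keeps the load idempotent if the script is run more than once.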

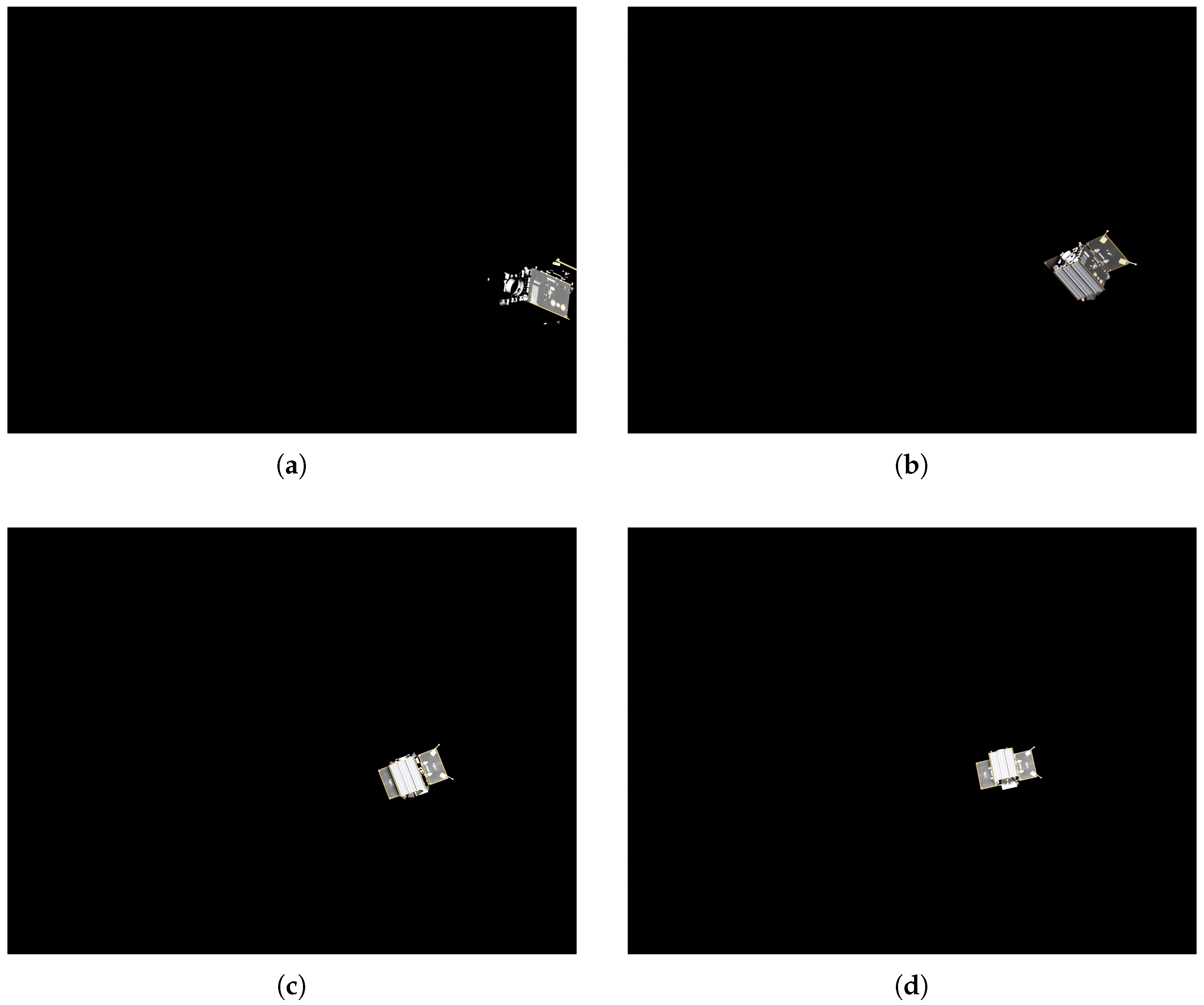

4.4. Image Selection

4.5. Query Selection

4.6. Evaluation Methodology

- Factual Accuracy: Whether the response contains verifiable, correct information grounded in known scientific literature or domain knowledge.

- Hallucination Presence: Whether the model invents facts, such as nonexistent paper titles, misidentifications, or speculative conclusions unsupported by the input.

- Contextual Relevance: Whether the response directly addresses the query and makes meaningful use of either visual input or retrieved knowledge (in the case of GraphRAG).

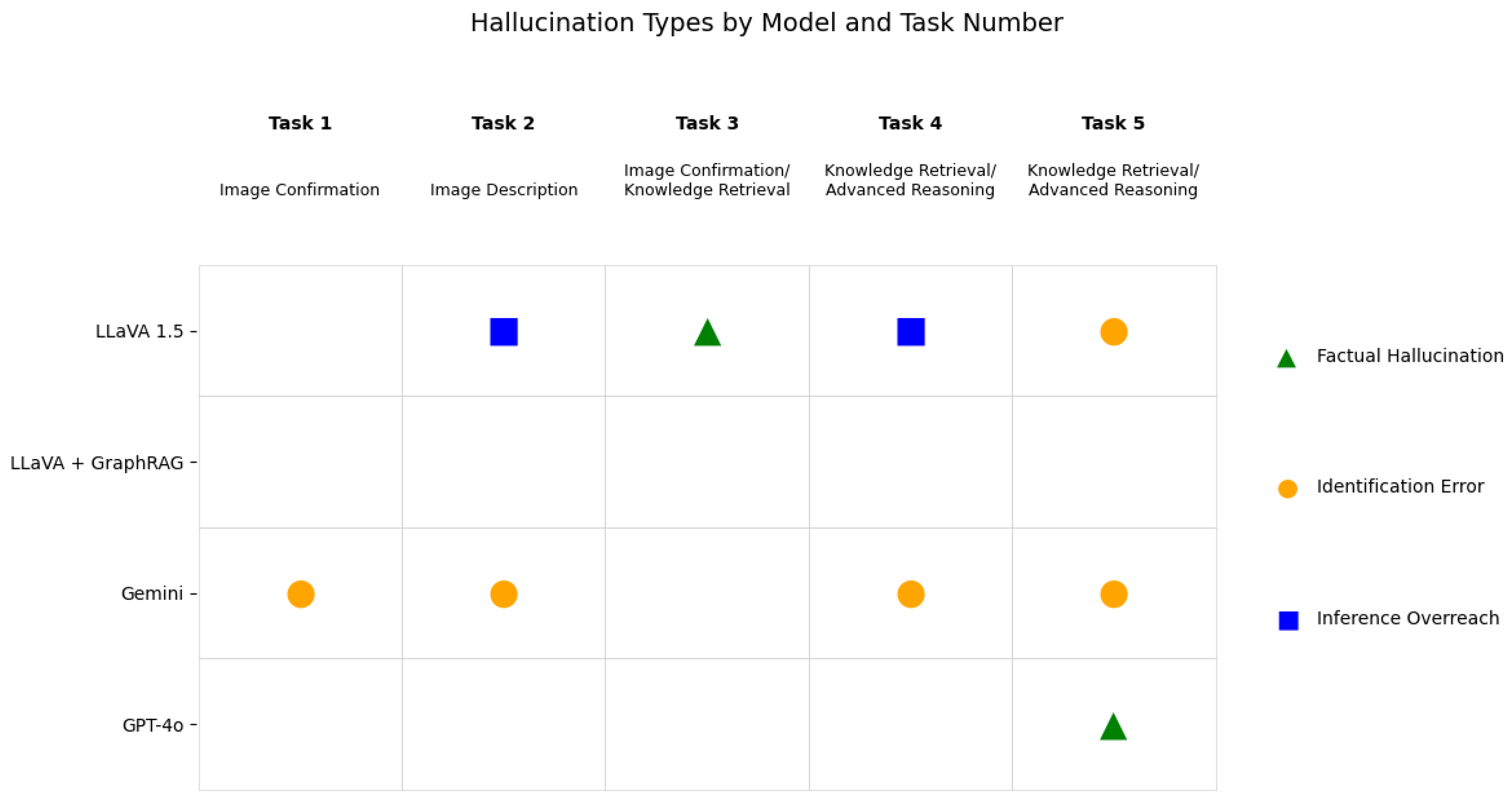

- Factual hallucinations refer to assertions that contradict known truths, such as citing non-existent articles or inventing false information.

- Identification errors arise when models incorrectly label or describe objects, people, or locations, particularly in vision–language settings.

- Inferential hallucinations occur when the model draws speculative conclusions or extrapolates beyond the provided input, despite no visual or textual evidence justifying the leap.
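The three evaluation criteria and the three hallucination categories above can be recorded in a simple structure for bookkeeping. The sketch below is illustrative only: the class names `Hallucination` and `Assessment`, the helper `tally`, and the sample labels are hypothetical and do not reproduce the paper's results.

```python
from collections import Counter
from dataclasses import dataclass, field
from enum import Enum


class Hallucination(Enum):
    FACTUAL = "factual"                # contradicts known truths, e.g., a non-existent article
    IDENTIFICATION = "identification"  # object, person, or location mislabelled
    INFERENTIAL = "inferential"        # conclusion unsupported by the visual or textual input


@dataclass
class Assessment:
    """One reviewer judgement of one model response."""
    model: str
    factually_accurate: bool
    contextually_relevant: bool
    hallucinations: list = field(default_factory=list)

    @property
    def hallucination_present(self):
        return bool(self.hallucinations)


def tally(assessments):
    """Count hallucinations by category across all assessed responses."""
    counts = Counter()
    for a in assessments:
        counts.update(a.hallucinations)
    return counts


# Made-up labels for demonstration; not taken from the study.
assessments = [
    Assessment("model_a", True, True),
    Assessment("model_b", False, True, [Hallucination.FACTUAL, Hallucination.IDENTIFICATION]),
    Assessment("model_c", True, False, [Hallucination.INFERENTIAL]),
]
counts = tally(assessments)
print({h.value: n for h, n in counts.items()})
```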

4.7. Results

4.7.1. Test 1

4.7.2. Test 2

4.7.3. Test 3

4.7.4. Test 4

4.7.5. Test 5

4.8. Hallucination Analysis

4.9. Runtime Comparisons
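
The runtimes reported in the per-test result tables can be averaged per model, as in the sketch below. The values are copied from those tables. GPT-4o has four entries because its fifth runtime is not listed in this section, and the Gemini and GPT-4o figures carry a footnote marker whose text is not reproduced here, so they may not be directly comparable with the LLaVA figures.

```python
from statistics import mean

# Runtimes in milliseconds, in test order, as reported in the result tables.
RUNTIMES_MS = {
    "LLaVA 1.5": [9051.97, 4645.62, 13154.57, 12235.70, 5455.89],
    "LLaVA 1.5 + GraphRAG": [6114.53, 7992.51, 12977.28, 12848.58, 9677.46],
    "Gemini": [8450.10, 9021.04, 11285.22, 12580.45, 12089.08],
    "GPT-4o": [11630.41, 17530.64, 10872.72, 14304.82],
}

# Mean runtime per model over the tests for which a value is available.
mean_ms = {model: mean(values) for model, values in RUNTIMES_MS.items()}

for model, value in mean_ms.items():
    print(f"{model}: {value:.2f} ms over {len(RUNTIMES_MS[model])} tests")
```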

4.10. Discussion

5. Directions for Future Research

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- NASA. ARES Orbital Debris Program Office. ‘Debris by the Numbers’, Orbital Debris Quarterly News, 2019. Available online: https://orbitaldebris.jsc.nasa.gov/quarterly-news/ (accessed on 10 May 2025).

- Kaineg, S. The Growing Problem of Space Debris. Hastings Environ. Law J. 2020, 26, 277.

- Kessler, D.J.; Cour-Palais, B.G. Collision frequency of artificial satellites: The creation of a debris belt. J. Geophys. Res. 1978, 83, 2637–2646.

- Jahirabadkar, S.; Narsay, P.; Pharande, S.; Deshpande, G.; Kitture, A. Space Objects Classification Techniques: A Survey. In Proceedings of the 2020 International Conference on Computational Performance Evaluation (ComPE), Shillong, India, 2–4 July 2020.

- Perez, M.; Musallam, M.; Henaff, P.; Garcia, A.; Ghorbel, E.; Imsaeil, K.; Aouada, D. Detection & identification of on-orbit objects using machine learning. In Proceedings of the 8th European Conference on Space Debris (virtual), Darmstadt, Germany, 20–23 April 2021.

- Fitzgerald, G. Space Object Detection and Monitoring Using Persistent Wide Field of View Camera Arrays. Master’s Thesis, University of Dayton, Dayton, OH, USA, 2022.

- Massimi, F.; Ferrara, P.; Petrucci, R.; Benedetto, F. Deep learning-based space debris detection for space situational awareness: A feasibility study applied to the radar processing. IET Radar Sonar Navig. 2024, 18, 635–648.

- Roll, D.S.; Kurt, Z.; Woo, W.L. CosmosDSR—A methodology for automated detection and tracking of orbital debris using the Unscented Kalman Filter. arXiv 2023, arXiv:2310.17158.

- Lopez-Calle, I. Space Debris In-Orbit Detection with Commercial Automotive LiDAR Sensors. Sensors 2024, 24, 7293.

- Liu, H.; Li, C.; Wu, Q.; Lee, Y.J. Visual Instruction Tuning. arXiv 2023, arXiv:2304.08485.

- Gao, Y.; Xiong, Y.; Gao, X.; Jia, K.; Pan, J.; Bi, Y.; Dai, Y.; Sun, J.; Wang, H. Retrieval-Augmented Generation for Large Language Models: A Survey. arXiv 2023, arXiv:2312.10997.

- Pajila, P.B.; Sudha, K.; Selvi, D.K.; Kumar, V.N.; Gayathri, S.; Subramanian, R.S. A Survey on Natural Language Processing and its Applications. In Proceedings of the 2023 4th International Conference on Electronics and Sustainable Communication Systems (ICESC), Coimbatore, India, 6–8 July 2023; pp. 996–1001.

- Alberts, I.L.; Mercolli, L.; Pyka, T.; Prenosil, G.; Shi, K.; Rominger, A.; Afshar-Oromieh, A. Large language models (LLM) and ChatGPT: What will the impact on nuclear medicine be? Eur. J. Nucl. Med. Mol. Imaging 2023, 50, 1549–1552.

- Yang, J.; Jin, H.; Tang, R.; Han, X.; Feng, Q.; Jiang, H.; Zhong, S.; Yin, B.; Hu, X. Harnessing the Power of LLMs in Practice: A Survey on ChatGPT and Beyond. ACM Trans. Knowl. Discov. Data 2024, 18, 1–32.

- Hadi, M.U.; Tashi, Q.A.; Qureshi, R.; Shah, A.; Muneer, A.; Irfan, M.; Zafar, A.; Shaikh, M.B.; Akhtar, N.; Wu, J.; et al. A Survey on Large Language Models: Applications, Challenges, Limitations, and Practical Usage. TechRxiv, Preprint, 2023. Available online: https://doi.org/10.36227/techrxiv.23589741.v1 (accessed on 25 May 2025).

- Huang, L.; Yu, W.; Ma, W.; Zhong, W.; Feng, Z.; Wang, H.; Chen, Q.; Peng, W.; Feng, X.; Qin, B.; et al. A Survey on Hallucination in Large Language Models: Principles, Taxonomy, Challenges, and Open Questions. ACM Trans. Off. Inf. Syst. 2025, 43, 1–55.

- Lin, X.; Wang, W.; Li, Y.; Yang, S.; Feng, F.; Wei, Y.; Chua, T.S. Data-efficient Fine-tuning for LLM-based Recommendation. In Proceedings of the 47th International ACM SIGIR Conference on Research and Development in Information Retrieval, Washington, DC, USA, 14–18 July 2024.

- Arslan, M.; Ghanem, H.; Munawar, S.; Cruz, C. A Survey on RAG with LLMs. Procedia Comput. Sci. 2024, 246, 3781–3790.

- Reimers, N.; Gurevych, I. Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks. arXiv 2019, arXiv:1908.10084.

- Douze, M.; Guzhva, A.; Deng, C.; Johnson, J.; Szilvasy, G.; Mazaré, P.E.; Lomeli, M.; Hosseini, L.; Jégou, H. The Faiss library. arXiv 2024, arXiv:2401.08281.

- Rezaei, M.R.; Hafezi, M.; Satpathy, A.; Hodge, L.; Pourjafari, E. AT-RAG: An Adaptive RAG Model Enhancing Query Efficiency with Topic Filtering and Iterative Reasoning. arXiv 2024, arXiv:2410.12886.

- Agrawal, G.; Kumarage, T.; Alghamdi, Z.; Liu, H. Mindful-RAG: A Study of Points of Failure in Retrieval Augmented Generation. arXiv, Preprint, 2024. Available online: https://arxiv.org/abs/2407.12216 (accessed on 25 May 2025).

- Shao, Z.; Gong, Y.; Shen, Y.; Huang, M.; Duan, N.; Chen, W. Enhancing Retrieval-Augmented Large Language Models with Iterative Retrieval-Generation Synergy. arXiv 2023, arXiv:2305.15294.

- Meduri, K.; Nadella, G.S.; Gonaygunta, H.; Maturi, M.H.; Fatima, F. Efficient RAG Framework for Large-Scale Knowledge Bases. Int. J. Nov. Res. Dev. 2024, 9, h613–h622.

- Peng, B.; Zhu, Y.; Liu, Y.; Bo, X.; Shi, H.; Hong, C.; Zhang, Y.; Tang, S. Graph Retrieval-Augmented Generation: A Survey. arXiv 2024, arXiv:2408.08921.

- Ngangmeni, J.; Rawat, D.B. Swamped with Too Many Articles? GraphRAG Makes Getting Started Easy. AI 2025, 6, 47.

- Hu, Y.; Lei, Z.; Zhang, Z.; Pan, B.; Ling, C.; Zhao, L. GRAG: Graph Retrieval-Augmented Generation. arXiv 2024, arXiv:2405.16506.

- Luo, L.; Zhao, Z.; Haffari, G.; Phung, D.; Gong, C.; Pan, S. GFM-RAG: Graph Foundation Model for Retrieval Augmented Generation. arXiv 2025, arXiv:2502.01113.

- Xu, K.; Zhang, K.; Li, J.; Huang, W.; Wang, Y. CRP-RAG: A Retrieval-Augmented Generation Framework for Supporting Complex Logical Reasoning and Knowledge Planning. Electronics 2024, 14, 47.

- Neo4j Inc. Neo4j: The World’s Leading Graph Database, 2015. Available online: https://neo4j.com (accessed on 25 May 2025).

- Touvron, H.; Lavril, T.; Izacard, G.; Martinet, X.; Lachaux, M.A.; Lacroix, T.; Rozière, B.; Goyal, N.; Hambro, E.; Azhar, F.; et al. LLaMA: Open and Efficient Foundation Language Models. arXiv 2023, arXiv:2302.13971.

- Radford, A.; Kim, J.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning Transferable Visual Models From Natural Language Supervision. In Proceedings of the International Conference on Machine Learning, Virtual Event, 18–24 July 2021.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. arXiv 2023, arXiv:1706.03762.

- Su, J.; Lu, Y.; Pan, S.; Murtadha, A.; Wen, B.; Liu, Y. RoFormer: Enhanced Transformer with Rotary Position Embedding. arXiv 2023, arXiv:2104.09864. Available online: http://arxiv.org/abs/2104.09864 (accessed on 25 May 2025).

- Church, K.W.; Sun, J.; Yue, R.; Vickers, P.; Saba, W.; Chandrasekar, R. Emerging trends: A gentle introduction to RAG. Nat. Lang. Eng. 2024, 30, 870–881.

- ggml-org. llama.cpp: LLM Inference in C/C++. GitHub Repository, 2025. Available online: https://github.com/ggerganov/llama.cpp (accessed on 25 May 2025).

- Yan, B.; Li, K.; Xu, M.; Dong, Y.; Zhang, Y.; Ren, Z.; Cheng, X. On protecting the data privacy of Large Language Models (LLMs) and LLM agents: A literature review. High-Confid. Comput. 2025, 5, 100300.

- Dettmers, T.; Lewis, M.; Shleifer, S.; Zettlemoyer, L. 8-bit Optimizers via Block-wise Quantization. arXiv 2021, arXiv:2110.02861.

- Frantar, E.; Ashkboos, S.; Hoefler, T.; Alistarh, D. GPTQ: Accurate Post-Training Quantization for Generative Pre-trained Transformers. arXiv 2023, arXiv:2210.17323.

- Apple Inc. Metal: Apple’s Graphics and Compute API. Available online: https://developer.apple.com/metal/ (accessed on 25 May 2025).

- Mehrholz, D.; Leushacke, L.; Flury, W.; Jehn, R.; Klinkrad, H.; Landgraf, M. Detecting, Tracking and Imaging Space Debris. ESA Bull. 2002, 109, 128–134.

- Ahamed, M.Y.; Bin Syed, M.A.; Chatterjee, P.; Bin Habib, A.Z.S. A Deep Learning Approach for Satellite and Debris Detection: YOLO in Action. In Proceedings of the 2023 26th International Conference on Computer and Information Technology (ICCIT), Cox’s Bazar, Bangladesh, 13–15 December 2023; pp. 1–6.

- Angles, R.; Thakkar, H.; Tomaszuk, D. Mapping RDF Databases to Property Graph Databases. IEEE Access 2020, 8, 86091–86110.

- Yin, C.; Zhang, Z. A Study of Sentence Similarity Based on the All-minilm-l6-v2 Model with “Same Semantics, Different Structure” After Fine Tuning. In Advances in Computer Science Research, Proceedings of the 2024 2nd International Conference on Image, Algorithms and Artificial Intelligence (ICIAAI 2024), Singapore, 9–11 August 2024; Atlantis Press: Paris, France, 2024; pp. 677–684. (accessed on 25 May 2025).

- Musallam, M.A.; Al, I.K.; Oyedotun, O.; Perez, M.D.; Poucet, M.; Aouada, D. SPARK: SPAcecraft Recognition leveraging Knowledge of Space Environment. arXiv 2021, arXiv:2104.05978.

- Desai, S.; Mohr, J.; Bertin, E.; Kümmel, M.; Wetzstein, M. Detection and Removal of Artifacts in Astronomical Images. Astron. Comput. 2016, 16, 67–78.

- Terzo, S.; Reale, F. On the importance of background subtraction in the analysis of coronal loops observed with TRACE. A&A 2010, 515, A7.

- Vojtekova, A.; Lieu, M.; Valtchanov, I.; Altieri, B.; Old, L.; Chen, Q.; Hroch, F. Learning to denoise astronomical images with U-nets. Mon. Not. R. Astron. Soc. 2020, 503, 3204–3215.

- Roscani, V.; Tozza, S.; Castellano, M.; Merlin, E.; Ottaviani, D.; Falcone, M.; Fontana, A. A comparative analysis of denoising algorithms for extragalactic imaging surveys. A&A 2020, 643, A43.

- Sheminova, V. Fourier analysis of spectra of solar-type stars. Kinemat. Fiz. Nebesnyh Tel 2017, 33, 27–48.

- Yan, L.; Jin, M.; Fang, H.; Liu, H.; Zhang, T. Atmospheric-Turbulence-Degraded Astronomical Image Restoration by Minimizing Second-Order Central Moment. IEEE Geosci. Remote Sens. Lett. 2012, 9, 672–676.

- Tao, J.; Cao, Y.; Ding, M.; Zhang, Z. Visible and Infrared Image Fusion-Based Image Quality Enhancement with Applications to Space Debris On-Orbit Surveillance. Int. J. Aerosp. Eng. 2022, 2022, 6300437.

- Liu, Y.; Wang, X.; Hu, E.; Wang, A.; Shiri, B.; Lin, W. VNDHR: Variational Single Nighttime Image Dehazing for Enhancing Visibility in Intelligent Transportation Systems via Hybrid Regularization. IEEE Trans. Intell. Transp. Syst. 2025, 26, 1–15.

- Bai, Z.; Wang, P.; Xiao, T.; He, T.; Han, Z.; Zhang, Z.; Shou, M.Z. Hallucination of Multimodal Large Language Models: A Survey. arXiv 2025, arXiv:2404.18930.

- Google DeepMind. Gemini 1: A Family of Highly Capable Multimodal Models; Technical Report; Google DeepMind: London, UK, 2023. Available online: https://storage.googleapis.com/deepmind-media/gemini/gemini_1_report.pdf (accessed on 25 May 2025).

- OpenAI. OpenAI API Documentation. OpenAI, San Francisco, CA, USA, 2021. Available online: https://platform.openai.com/docs (accessed on 25 May 2025).

- Hickson, P.; Ailawadhi, B.; Akhunov, T.; Borra, E.; Dubey, M.; Dukiya, N.; Fu, J.; Grewal, B.; Kumar, B.; Misra, K.; et al. Serendipitous Detection of Orbital Debris by the International Liquid Mirror Telescope: First Results. arXiv 2023, arXiv:2311.05614.

- Yang, R.; Yang, B.; Feng, A.; Ouyang, S.; Blum, M.; She, T.; Jiang, Y.; Lecue, F.; Lu, J.; Li, I. Graphusion: A RAG Framework for Knowledge Graph Construction with a Global Perspective. arXiv 2025, arXiv:2410.17600.

- Jiang, F.; Zhu, W.; Dong, L.; Wang, K.; Yang, K.; Pan, C.; Dobre, O.A. CommGPT: A Graph and Retrieval-Augmented Multimodal Communication Foundation Model. arXiv 2025, arXiv:2502.18763.

- Zhan, Z.; Wang, J.; Zhou, S.; Zhang, R. MMRAG: Multi-Mode Retrieval-Augmented Generation with Large Language Models for Biomedical In-Context Learning. arXiv 2025, arXiv:2502.15954.

- Lee, J.; Wang, Y.; Li, J.; Zhang, M. Multimodal Reasoning with Multimodal Knowledge Graph. arXiv 2024, arXiv:2406.02030.

- Li, Y.; Li, Y.; Wang, X.; Jiang, Y.; Zhang, Z.; Zheng, X.; Wang, H.; Zheng, H.T.; Huang, F.; Zhou, J.; et al. Benchmarking Multimodal Retrieval Augmented Generation with Dynamic VQA Dataset and Self-adaptive Planning Agent. arXiv 2024, arXiv:2411.02937.

- Wasserman, N.; Pony, R.; Naparstek, O.; Goldfarb, A.R.; Schwartz, E.; Barzelay, U.; Karlinsky, L. REAL-MM-RAG: A Real-World Multi-Modal Retrieval Benchmark. arXiv 2025, arXiv:2502.12342.

- Galdas, P. Revisiting Bias in Qualitative Research: Reflections on Its Relationship with Funding and Impact. Int. J. Qual. Methods 2017, 16, 1609406917748992.

- Chan, C.M.; Xu, C.; Yuan, R.; Luo, H.; Xue, W.; Guo, Y.; Fu, J. RQ-RAG: Learning to Refine Queries for Retrieval Augmented Generation. In Proceedings of the First Conference on Language Modeling, Philadelphia, PA, USA, 7–9 October 2024.

- Peng, A.; Li, B.Z.; Sucholutsky, I.; Kumar, N.; Shah, J.; Andreas, J.; Bobu, A. Adaptive Language-Guided Abstraction from Contrastive Explanations. In Proceedings of the 8th Conference on Robot Learning, PMLR, Munich, Germany, 6–9 November 2025; Volume 270, pp. 3425–3438.

- Wadhwa, S.; Amir, S.; Wallace, B. Revisiting Relation Extraction in the era of Large Language Models. In Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Toronto, ON, Canada, 9–14 July 2023; pp. 15566–15589.

| Query | Task(s) |
|---|---|
| Is this an image of orbital debris? | Image confirmation |
| What is this object? | Image description |
| Is this an image of orbital debris? If so, what is an example of a relevant academic paper relating to the detection of such debris? For this paper, discuss the content. | Image confirmation/knowledge retrieval |
| For the object in this image, describe some of the risks associated with such objects. | Knowledge retrieval/advanced reasoning † |
| What is the current state of the research surrounding these objects? | Knowledge retrieval/advanced reasoning † |

| Model | Response | Runtime (ms) |
|---|---|---|
| LLaVA 1.5 | Yes, the image is of an object in space, possibly an orbital debris or a satellite, floating in the blackness of space. The object is small and appears to be the only one in the scene, making it stand out against the vastness of the universe. | 9051.97 |
| LLaVA 1.5 + GraphRAG | Yes, the image is of orbital debris. | 6114.53 |
| Gemini | Based on the visual characteristics, this image likely shows the Rosetta spacecraft, and not orbital debris. Here’s why: Defined Structure: The object in the image has a clear, structured form with recognizable components like solar panels and a central body. Orbital debris is typically shown as irregular fragments and pieces. Rosetta Spacecraft: The shape and configuration in the image are consistent with images of the Rosetta spacecraft, which was a European Space Agency mission to study Comet 67P/Churyumov–Gerasimenko. | 8450.10 ¹ |
| GPT-4o | This image does not appear to depict orbital debris. Instead, it shows a well-structured, intact satellite. The object is symmetric, equipped with solar panels, antennae, and other instrumentation typical of operational satellites. Orbital debris usually consists of broken fragments, irregular shapes, or defunct equipment, not complete functional satellites. | 11,630.41 ¹ |

| Model | Response | Runtime (ms) |
|---|---|---|
| LLaVA 1.5 | The image features a small, square-shaped object floating in the middle of a black background. The object appears to be a satellite, which is a man-made device designed to orbit the Earth and perform various functions such as communication, navigation, and scientific research. The satellite’s presence in the image suggests that it is either in the process of being launched or has recently been deployed into space. | 4645.62 |
| LLaVA 1.5 + GraphRAG | The object in the image is a small satellite or space debris. It is located in the middle of a black background, which makes it stand out. The image is a close-up of the satellite, allowing for a detailed view of its structure. The satellite appears to be relatively small compared to the vastness of space, but its presence in the image highlights the importance of monitoring and tracking such objects to ensure the safety of space operations. | 7992.51 |
| Gemini | The object in the image is likely the Rosetta spacecraft. Rosetta was a European Space Agency (ESA) mission launched in 2004 to study Comet 67P/Churyumov–Gerasimenko. It was the first spacecraft to orbit a comet and the first to deploy a lander (Philae) onto a comet’s surface. The spacecraft had distinctive features: Large Solar Panels: Rosetta had two very large solar panel wings, necessary to generate power at the comet’s distance from the Sun. These are visible in the image. High-Gain Antenna: A large dish antenna was used for communication with Earth. This is also a prominent feature in the image. Box-like Structure: The main body of the spacecraft had a central, box-shaped structure where instruments and subsystems were housed. The mission concluded in September 2016 with a controlled impact of the Rosetta orbiter onto the surface of the comet. | 9021.04 ¹ |
| GPT-4o | The object in the image appears to be a satellite in space. It has identifiable features such as solar panels, antennas, and structural components typical of Earth observation or communication satellites. Based on the appearance, it may be a small or medium-sized satellite used for commercial, scientific, or military purposes. If you have more context (e.g., mission name, where the image is from), I can help identify it more specifically—especially if it’s related to a known satellite constellation or project like CubeSats, SPARK, or others. | 17,530.64 ¹ |

| Model | Response | Runtime (ms) |
|---|---|---|
| LLaVA 1.5 | Yes, the image is an image of orbital debris, which is a space debris or a fragment of a spacecraft that remains in orbit around the Earth. An example of a relevant academic paper relating to the detection of such debris is “Orbital Debris Removal: A Review of the Current State of Technology and Potential Future Approaches” by J. A. S. Lee, published in the Journal of Spacecraft and Rockets in 2013. This paper discusses the current state of technology and potential future approaches to remove orbital debris from Earth’s orbit. It reviews various methods, including active and passive removal, and discusses the challenges and limitations of each approach. The paper also highlights the importance of addressing the issue of orbital debris, as it can pose risks to both spacecraft and the environment. The paper concludes with a call for further research and development of effective orbital debris removal technologies. | 13,154.57 |
| LLaVA 1.5 + GraphRAG | Yes, the image is of orbital debris. An example of a relevant academic paper relating to the detection of such debris is “Detecting, Tracking and Imaging Space Debris” by D. Mehrholz L. Leushacke W. Flury R. Jehn H. Klinkrad M. Landgraf. This paper focuses on the current state of space debris and the potential hazards it poses. The authors discuss the efforts made by the FGAN Research Institute and the European Space Operations Centre (ESOC) in tracking and studying this debris. The paper begins by noting that the man-made space debris environment has been created by space activities since the launch of the first artificial satellite in 1957. The authors also highlight the importance of space debris monitoring and the challenges faced in detecting, tracking, and imaging space debris. The paper presents a comprehensive overview of the problem and the methods used by certain institutions for detection, tracking, and imaging of space debris. | 12,977.28 |
| Gemini | Based on the visual characteristics, the image most likely shows the Rosetta spacecraft, identifiable by its large solar panels and central structure. It is not typical orbital debris. A relevant academic paper on debris detection is “Serendipitous Detection of Orbital Debris by the International Liquid Mirror Telescope: First Results” by наблюдение, et al. (2024). This paper details the ILMT’s initial observations, detecting streaks from orbiting objects. Notably, 48% of the detected streaks did not match known cataloged objects, indicating a significant population of uncatalogued debris. The ILMT demonstrated the ability to detect objects down to magnitude 14.5, with potential for fainter detections. The study highlights the incompleteness of current debris catalogs and the value of zenith-pointing telescopes for monitoring the orbital environment. | 11,285.22 ¹ |
| GPT-4o | The image shows an intact satellite, not orbital debris, which typically appears fragmented or irregular. A foundational paper on debris detection is Liou and Johnson’s “Risks in Space from Orbiting Debris” (Science, 2006). It quantifies debris populations and highlights how small fragments travelling at orbital speeds pose serious threats. The paper introduces the Kessler Syndrome, where collisions create more debris, increasing the risk of further collisions. It also outlines mitigation strategies such as post-mission disposal and de-orbiting techniques. Detection methods include ground-based radar and optical systems, effective for cataloguing debris larger than 10 cm in low Earth orbit and 1 m in geostationary orbit. However, tracking smaller debris remains a challenge. The paper underscores the importance of international coordination to minimise debris generation. While primarily focused on physics and policy, it laid groundwork for later developments in AI-assisted detection and real-time tracking technologies, which now play a growing role in orbital debris monitoring. | 10,872.72 ¹ |

| Model | Response | Runtime (ms) |
|---|---|---|
| LLaVA 1.5 | The object in the image is a small satellite or spacecraft, which is floating in the dark sky. There are several risks associated with such objects, including the possibility of collision with other objects in space, such as satellites or debris. Collisions can cause damage to the satellite or spacecraft, potentially leading to malfunction or even destruction. Additionally, the presence of debris in space can pose a risk to the satellite or spacecraft, as it may collide with debris and cause damage. Furthermore, the satellite or spacecraft may also be at risk of being hit by meteoroids or other celestial objects, which can cause damage or even destroy the satellite or spacecraft. To mitigate these risks, it is crucial to have proper monitoring and tracking systems in place to ensure the safety of spacecraft and satellites in orbit. | 12,235.70 |
| LLaVA 1.5 + GraphRAG | The image features a small object, possibly a satellite or space debris, floating in the blackness of space. These objects can pose risks to both human activities in space and the overall safety of space operations. Some of the risks associated with such objects include: 1. Collisions: Collisions between space debris and operational satellites or other spacecraft can cause damage or even complete destruction of the affected objects. This can lead to costly repairs or loss of valuable data, as well as potential disruptions to communication, navigation, or scientific missions. 2. Debris Field Growth: The accumulation of small debris can create a chain reaction, leading to the creation of larger debris objects through collisions. This can further increase the risk of future collisions and exacerbate the problem. 3. Hazard to Human Spaceflight: The presence of debris in the vicinity of spacecraft or space stations can pose a risk to human spaceflight, as it increases the likelihood of damage to the spacecraft or the space station itself. | 12,848.58 |
| Gemini | Based on the image and the understanding that it likely depicts the Rosetta spacecraft, here are some of the risks associated with such objects in space: Operational Risks: Power Management: Rosetta relied on solar panels for power. As it moved further from the Sun on its journey with Comet 67P/Churyumov-Gerasimenko, the amount of solar power available decreased significantly. This limited the operation of its instruments and eventually led to the end of the mission. Distance and Communication: The vast distances involved in space missions create challenges for communication. As Rosetta moved further away, the signal strength weakened, and there could be periods of blackout, such as during solar conjunctions when the Sun is between the Earth and the spacecraft. Harsh Space Environment: Spacecraft are exposed to extreme temperatures, radiation, and micrometeoroid impacts, which can degrade their systems over time. Rosetta endured over 12 years in this harsh environment. Orbital Mechanics and Navigation: Navigating near a comet with a weak and irregular gravitational field, as was the case with 67P, is complex. Rosetta required frequent trajectory adjustments to stay on course, accounting for the comet’s gravity, solar radiation pressure, and even aerodynamic drag from the comet’s coma. Landing Challenges: If the object in the image is related to a landing attempt (like the Philae lander on Rosetta), there are significant risks associated with landing on an unknown and potentially hazardous surface. This includes the lander tipping over, not anchoring properly (as happened with Philae), landing in an unfavorable location (e.g., in shadow, affecting power), or being damaged by surface features. Spacecraft Longevity and Aging: Over long durations, spacecraft components degrade, increasing the risk of failure. Rosetta’s instruments and systems experienced wear and tear throughout its mission. | 12,580.45 ¹ |
| GPT-4o | Satellites like the one shown in the image present several risks that are important to consider in the context of orbital safety and sustainability. One of the most significant concerns is the potential for collisions in low Earth orbit, where traffic is dense and even small fragments can cause catastrophic damage due to the high velocities involved. A single collision can generate thousands of debris fragments, which in turn increase the likelihood of further collisions—a phenomenon known as the Kessler Syndrome. This poses a serious threat to both current and future missions. Another major issue is related to the end-of-life phase of satellite operations. If a satellite is not properly deorbited or moved to a designated graveyard orbit after completing its mission, it remains in orbit as a defunct object. This contributes to long-term congestion and increases the risk of collision with operational spacecraft. Additionally, there is the danger of losing control over a satellite, whether due to power system failures, damage to solar panels, attitude control issues, software errors, or cyberattacks. A non-responsive satellite becomes an unpredictable hazard, potentially drifting across multiple orbital paths. There is also the geopolitical dimension of risk, particularly the potential for satellites to become targets in conflicts involving anti-satellite (ASAT) weapons. The deliberate destruction of a satellite in orbit creates a large volume of debris, further exacerbating the already critical space debris problem. Lastly, satellites that malfunction or are improperly configured may cause radio frequency interference, which can disrupt communication links, satellite navigation signals, or Earth observation data collection, affecting both civilian and military systems. These risks underline the importance of rigorous space traffic management, responsible satellite design, and international cooperation to ensure the long-term sustainability of near-Earth space. | 14,304.82 ¹ |

| Model | Response | Runtime (ms) |
|---|---|---|
| LLaVA 1.5 | The current state of research surrounding these objects, which are small black objects floating in space, is still ongoing. Scientists and researchers are actively studying these objects to understand their properties, origins, and potential implications for our understanding of the universe. The study of these objects can provide valuable insights into the formation and evolution of celestial bodies, as well as the processes that shape and influence the cosmos. As a result, ongoing research in this area is crucial for advancing our knowledge of the universe and its various components. | 5,455.89 |
| LLaVA 1.5 + GraphRAG | The current state of research surrounding space debris involves various institutions and organizations working together to detect, track, and study the problem. The current research papers provide different approaches to addressing the issue of space debris, such as using deep learning algorithms, radar observations, and traditional radar tracking. These papers contribute to the broader field of automated space object detection and tracking, and their methodologies could complement each other in advancing the field. | 9,677.46 |
| Gemini | The Rosetta mission concluded on 30 September 2016, with a controlled landing on Comet 67P/Churyumov-Gerasimenko. Currently, the Rosetta spacecraft is no longer operational as it impacted the comet’s surface at the end of its mission. However, the vast amount of data collected by the orbiter and the Philae lander continues to be analyzed by scientists worldwide. Research is ongoing, focusing on the wealth of information gathered about the comet’s composition, structure, and behavior as it orbited the Sun. This includes studying the organic molecules discovered, the unique characteristics of its water compared to Earth’s, and the insights into the comet’s formation and its potential role in the early solar system. The data is crucial for understanding comets in general and their contribution to the evolution of our solar system. While there are no active missions directly studying Comet 67P at this moment, the legacy of Rosetta persists through the continuous scientific investigation of its findings. | 12,089.08 ¹ |
| GPT-4o | The object in the image appears to be a SPARK (Space-based Platform for Advanced Reconnaissance and Knowledge) satellite. Research on such small satellites focuses on their use for space-based surveillance, Earth observation, signal intelligence, and rapid deployment constellations. Current work includes improving their onboard autonomy, miniaturised sensors, and communication systems, as well as addressing challenges like space debris avoidance, secure data handling, and cooperative satellite swarms. There’s increasing interest in using AI for onboard processing to reduce reliance on ground stations. | 10,672.60 ¹ |
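As a rough reading of the runtimes reported above for the research-status query, the sketch below computes the added latency of the GraphRAG-augmented LLaVA run relative to the base model. The two values are copied from the table; the helper name `retrieval_overhead` is illustrative and does not come from the paper. Each runtime is a single reported measurement, so the result should be read as an indication of scale, not as a benchmark.

```python
# Runtimes (ms) copied from the table above for the research-status query.
runtimes_ms = {
    "LLaVA 1.5": 5455.89,
    "LLaVA 1.5 + GraphRAG": 9677.46,
}


def retrieval_overhead(base_ms: float, augmented_ms: float) -> tuple[float, float]:
    """Return (absolute overhead in ms, slowdown ratio) of the augmented run."""
    return augmented_ms - base_ms, augmented_ms / base_ms


# Hypothetical helper applied to the two locally run configurations only.
overhead_ms, ratio = retrieval_overhead(
    runtimes_ms["LLaVA 1.5"], runtimes_ms["LLaVA 1.5 + GraphRAG"]
)
print(f"GraphRAG overhead: {overhead_ms:.2f} ms ({ratio:.2f}x the base runtime)")
```

Only the two LLaVA configurations are compared here, since the table alone does not establish that the commercial models were timed under the same conditions.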

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Roll, D.S.; Kurt, Z.; Li, Y.; Woo, W.L. Augmenting Orbital Debris Identification with Neo4j-Enabled Graph-Based Retrieval-Augmented Generation for Multimodal Large Language Models. Sensors 2025, 25, 3352. https://doi.org/10.3390/s25113352
