Abstract

The grayscale images of passenger aircraft targets obtained via Synthetic Aperture Radar (SAR) have problems such as complex airport backgrounds, significant speckle noise, and variable scales of targets. Most of the existing deep learning-based target recognition algorithms for SAR images are transferred from optical images, and it is difficult for them to extract the multi-dimensional features of targets comprehensively. To overcome these challenges, we proposed three enhanced methods for interpreting aircraft targets based on YOLOv8. First, we employed the Shi–Tomasi corner detection algorithm and the Enhanced Lee filtering algorithm to convert grayscale images into RGB images, thereby improving detection accuracy and efficiency. Second, we augmented the YOLOv8 model with an additional detection branch, which includes a detection head featuring the Coordinate Attention (CA) mechanism. This enhancement boosts the model’s capability to detect small and multi-scale aircraft targets. Third, we integrated the Swin Transformer mechanism into the YOLOv8 backbone, forming the C2f-SWTran module that better captures long-range dependencies in the feature map. We applied these improvements to two datasets: the ISPRS-SAR-Aircraft dataset and the SAR-AIRcraft-1.0 dataset. The experimental results demonstrated that our methods increased the mean Average Precision (mAP50~95) by 2.4% and 3.4% over the YOLOv8 baseline, showing competitive advantages over other deep learning-based object detection algorithms.

1. Introduction

Spaceborne Synthetic Aperture Radar (SAR) captures ground imagery by transmitting and receiving electromagnetic waves [1]. By synthesizing a large aperture from successive radar pulses, it records fine details of ground targets and produces high-resolution images. In SAR imaging, passenger aircraft are important targets of great research value. Leveraging this technology enables real-time monitoring of passenger aircraft positions and dynamics, which bolsters aviation management safety and improves operational efficiency [2,3,4,5]. The traditional SAR image interpretation process comprises three stages: target detection, identification, and recognition [6,7,8,9,10]. The methods used in this process generally require expert intervention to adjust parameters and interpret results. Moreover, the SAR imaging process can be compromised by complex environments and clutter interference, which makes target interpretation harder, reduces generalization capability, and lowers detection accuracy.

Deep learning-based algorithms have demonstrated superior performance in image recognition [11,12,13,14,15,16]. Numerous scholars have significantly advanced SAR image target interpretation. Wang et al. proposed an adaptive and robust edge detector to optimize the YOLO structure, enhancing the interpretation accuracy of aircraft targets [17]. However, it may slow the convergence of network training. Xiao et al. introduced a novel network that effectively extracts aircraft targets’ scattering features [18], yet it lacks an end-to-end methodology. Zhang et al. developed the SFRE-Net architecture to enhance the relationship between scattering features, enriching semantic information and mitigating semantic conflicts during feature fusion [19]. Jiang et al. introduced a coordinate-aware mixed attention and spatial semantics joint-context approach to diminish background clutter effects and enhance the detection of small targets [20]. Sun et al. designed the CP-FCOS network structure based on the Anchor-Free method, which can better accomplish the task of ship detection in SAR images through pixel-by-pixel prediction [21]. Zhou et al. designed the SCNet-YOLO network structure based on symmetric convolution and integrated the attention mechanism. This enables it to effectively capture multi-scale targets, and it is particularly effective in enhancing the detection ability of small targets [22]. Sun et al. designed the BiFA-YOLO network structure based on bidirectional feature fusion and angular classification, which can effectively improve the detection ability of arbitrary-oriented ships [23].

While progress has been made in developing deep learning-based algorithms for interpreting aircraft targets in SAR images, significant challenges persist in enhancing the detection accuracy in practical settings. First, radar signal sampling and imaging mechanisms contribute to the discontinuity in image characteristics. This discontinuity compromises image quality, limits detailed information about aircraft targets, reduces the correlation between components, and impedes the detection of entire aircraft targets. Second, airport backgrounds are highly complex, with strong scattering structures such as terminal buildings, runways, and vehicles. These structures can obscure target features and increase the likelihood of false positives and missed detections. Third, the considerable variation in aircraft sizes poses a challenge for maintaining prediction accuracy across different scales, particularly when image resolution is low and small targets are easily overlooked [24,25]. In short, deep learning models still struggle to extract feature information from small targets and differentiate targets from background noise. We utilized two SAR image datasets, the ISPRS-SAR-Aircraft dataset [26] and the SAR-AIRcraft-1.0 dataset [27], which encompass seven categories of targets, including the Boeing 787, A330, Boeing 737-800, A320/321, ARJ21, A220, and others [28].

In this paper, we enhance the capabilities of the YOLOv8 algorithm [29,30] by addressing specific challenges associated with interpreting SAR images for aircraft target detection. Recognizing the limitations of the baseline YOLOv8 algorithm in handling SAR imagery [31,32,33,34], we introduce three innovative optimization methods aimed at improving feature expression, the detection of multi-scale targets, and the overall detection accuracy. Each method targets a specific problem area, contributing to a more robust model capable of handling the complexities of SAR image analysis. The effectiveness of these enhancements is validated through extensive experimental testing, demonstrating significant improvements in the detection accuracy of aircraft targets, particularly smaller ones. Our contributions are summarized as follows:

- (1) Gray-to-RGB (GTR) conversion: We developed the GTR method to transform single-channel grayscale SAR images into three-channel RGB images. This conversion process augments the feature space available for the model, thereby reducing the tendency towards overfitting and enhancing detection accuracy.

- (2) Four-scale detectors with coordinate attention (4SDC): To address the challenge of detecting aircraft targets of varying sizes in SAR images, we introduced the 4SDC approach. This method adaptively adjusts the weights of different scale detection branches, significantly reducing missed detections of small targets and improving the model’s ability to handle multi-scale targets.

- (3) C2f-SWTran module integration: By incorporating the Swin Transformer mechanism into the backbone of YOLOv8, we created the C2f-SWTran module. This integration effectively captures fine-grained and global information within the imagery, leveraging a combination of self-attention and global perception mechanisms to enhance the processing of multi-scale feature maps and increase detection precision.

The structure of this paper is outlined as follows: Section 2 introduces the YOLOv8 network framework. Section 3 details three enhanced algorithms. Section 4 presents the experimental results and assesses the effectiveness of the optimization algorithms. Finally, Section 5 concludes the paper and outlines future research directions.

2. Preliminary: YOLOv8 Series Architecture

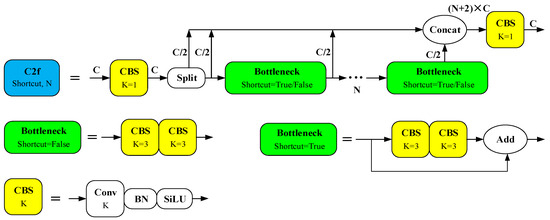

Ultralytics (Frederick, MD, USA) introduced YOLOv8 in early 2023. Compared to its predecessor YOLOv5, the YOLOv8 model retains the same architectural framework, comprising four layers: input, backbone, neck, and head. However, YOLOv8 includes enhancements in various processing details that significantly improve its overall detection performance. Specifically, in the backbone layer of YOLOv8, a Coarse-to-Fine (C2f) structure was developed, incorporating elements from the C3 residual structure of YOLOv5 and the ELAN concept from YOLOv7, as illustrated in Figure 1.

Figure 1. Schematic diagram of C2f network structure.

In Figure 1, C denotes the number of channels of the feature map; the input and output feature maps of the C2f module both have C channels. N denotes the number of repetitions of the Bottleneck module, which varies with the chosen YOLOv8 model variant (N/S/M/L/X) and the position of the C2f module within the network. K denotes the size of the convolution kernel. In the backbone network, the Bottleneck module of C2f includes a shortcut residual connection, whereas the Bottleneck module in the neck network does not.

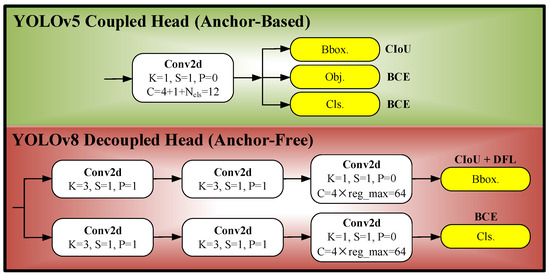

The C2f strategy divides the detection task into two phases via a split operation: rough detection and detailed analysis. Initially, the algorithm quickly locates potential targets and conducts concentrated analysis in these areas. Depending on the requirements of different scenarios, the weights between the two phases can be adaptively adjusted to balance speed and accuracy. C2f incorporates additional residual structures, facilitating freer information interaction across various network layers. These structures efficiently process and integrate information, effectively mitigating the issue of vanishing gradients often encountered in deeper networks. Unlike C3, C2f eliminates convolution operations in its branches, simplifies the model’s structure, and reduces parameter count, which decreases the risk of overfitting and enhances generalization capabilities. This configuration allows C2f to capture richer gradient flow information while maintaining lightweight model parameters and enhancing the overall detection performance of the YOLOv8 algorithm. Compared with YOLOv5, the YOLOv8 detection head employs a decoupled processing approach, discarding the object branch while retaining the classification and regression branches, and shifting from an Anchor-Based to an Anchor-Free configuration. These changes are depicted in Figure 2.

Figure 2. Detection head comparison of YOLOv5 and YOLOv8.

YOLOv5 integrates regression, classification, and confidence detection into a single network architecture for simultaneous learning and prediction. However, conflicts in parameter optimization among these tasks can reduce its flexibility. YOLOv8 adopts a decoupling approach, separating the object detection task into regression and classification [35]. This separation minimizes parameter interference, enhances learning efficiency, and allows for more precise adjustments in the learning process of each subtask. By eliminating the confidence detection task, YOLOv8 simplifies the model’s output and reduces the number of training parameters, potentially increasing the running speed and inference efficiency. The model applies distinct loss functions and optimization strategies for regression and classification: the regression task combines the DFL function [36] and the CIoU Loss function, while the classification task employs the BCE Loss function. Integrating three distinct loss functions facilitates quicker, more precise target localization and sustains high performance in complex scenarios with dense targets. Additionally, YOLOv8 embraces an Anchor-Free design, which eliminates reliance on dataset-specific prior knowledge and mitigates issues stemming from inaccuracies in such knowledge. The direct prediction approach better captures object contours, enhancing detection performance, reducing inference times, and improving generalization capabilities.
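As an illustration of the regression loss mentioned above, the following minimal sketch computes a CIoU loss for a single pair of boxes. It follows the commonly published CIoU definition (IoU, normalized center distance, and an aspect-ratio term). The function name `ciou_loss`, the `(x1, y1, x2, y2)` box format, and the `eps` stabilizer are assumptions made for this example and are not taken from the YOLOv8 code base.

```python
import math


def ciou_loss(box_pred, box_true, eps=1e-9):
    """Return 1 - CIoU for two boxes given as (x1, y1, x2, y2)."""
    px1, py1, px2, py2 = box_pred
    tx1, ty1, tx2, ty2 = box_true

    # Intersection over union of the two boxes.
    inter_w = max(0.0, min(px2, tx2) - max(px1, tx1))
    inter_h = max(0.0, min(py2, ty2) - max(py1, ty1))
    inter = inter_w * inter_h
    area_p = (px2 - px1) * (py2 - py1)
    area_t = (tx2 - tx1) * (ty2 - ty1)
    iou = inter / (area_p + area_t - inter + eps)

    # Squared distance between the box centers, normalized by the squared
    # diagonal of the smallest box that encloses both boxes.
    dx = ((px1 + px2) - (tx1 + tx2)) / 2
    dy = ((py1 + py2) - (ty1 + ty2)) / 2
    center_dist2 = dx * dx + dy * dy
    enclose_w = max(px2, tx2) - min(px1, tx1)
    enclose_h = max(py2, ty2) - min(py1, ty1)
    diag2 = enclose_w ** 2 + enclose_h ** 2 + eps

    # Aspect-ratio consistency term v and its trade-off weight alpha.
    angle_true = math.atan((tx2 - tx1) / (ty2 - ty1 + eps))
    angle_pred = math.atan((px2 - px1) / (py2 - py1 + eps))
    v = (4 / math.pi ** 2) * (angle_true - angle_pred) ** 2
    alpha = v / (1 - iou + v + eps)

    return 1 - (iou - center_dist2 / diag2 - alpha * v)


same = ciou_loss((0, 0, 10, 10), (0, 0, 10, 10))
overlap = ciou_loss((0, 0, 10, 10), (5, 5, 15, 15))
disjoint = ciou_loss((0, 0, 10, 10), (20, 20, 30, 30))
```

In this sketch the loss is close to zero for identical boxes and keeps growing as the boxes move apart, even after they stop overlapping, which is the property that makes the center-distance term useful for localization.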

Other minor enhancements include disabling Mosaic data augmentation in the final 10 epochs of training [37], which has been shown to improve accuracy. In the neck of the network, removing the convolutional structure in the up-sampling stage of the PAN-FPN reduces computational complexity and speeds up inference. Unlike YOLOv5, which uses a focus structure for initial down-sampling to convert spatial information into channel information, YOLOv8 has demonstrated improved performance by replacing this structure with direct convolution. Overall, YOLOv8 exhibits superior performance and efficiency in handling complex detection tasks.

3. Materials and Methods

3.1. GTR Conversion

The YOLOv8 architecture is optimized for processing three-channel RGB images, utilizing color information to boost object recognition capabilities. In contrast, the dataset includes SAR images, which are single-channel grayscale images that offer less feature information compared to three-channel color images. When processing SAR images, a pseudo-RGB image is created by duplicating the grayscale image across all three channels. While this approach satisfies the input requirements of YOLOv8, it partially reduces detection performance. To overcome this limitation, this paper proposes a novel method for converting grayscale images into RGB images by incorporating scattering characteristics. Specifically, the original SAR image is assigned to the blue (B) channel, an image highlighting peak features extracted via a corner detection algorithm is mapped to the red (R) channel, and an image processed using a denoising filter is mapped to the green (G) channel. These channels are combined to form a new RGB image that enhances detection accuracy.

3.1.1. Corner Detection Algorithm

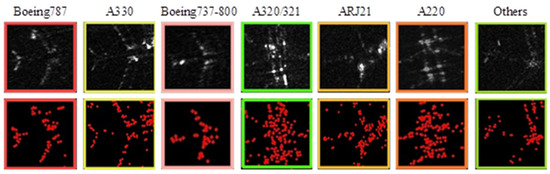

SAR images are discrete and typically of poor imaging quality. They consist of multiple irregular, bright spots known as strong scattering points, which carry crucial semantic information for identifying aircraft types. An aircraft’s structure includes the nose, fuselage, wings, and tail, with strong scattering points in SAR images often corresponding to these components’ joints. The distribution of these points reveals the geometric characteristics of aircraft targets. Different aircraft models, which have varying fuselage lengths and distinct sizes and shapes of noses, wings, and tails, therefore, display unique characteristics in their SAR images, as illustrated in Figure 3. The corner detection algorithm extracts these strong scattering points from SAR images. Integrating these peak characteristics into the RGB image can significantly enhance the accuracy of aircraft target interpretation.

Figure 3. The peak features extracted using the Shi–Tomasi detection algorithm. The original aircraft target SAR images (first row) and the peak feature images extracted using the Shi–Tomasi detection algorithm (second row).

Harris and Shi–Tomasi are commonly used corner detection algorithms [38,39]. They identify corner points by detecting the grayscale changes in local image windows. We adopted the Shi–Tomasi method to extract corner features of aircraft targets. The Shi–Tomasi algorithm optimizes the corner response function in the Harris algorithm, which significantly improves the detection efficiency. The basic steps of the Shi–Tomasi algorithm are as follows. First, a mathematical model is established based on the corner detection process in the actual image, as shown in Formula (1).

$$E(u, v) = \sum_{x, y} w(x, y)\,\left[I(x + u, y + v) - I(x, y)\right]^2 \tag{1}$$

where $I(x, y)$ is the grayscale value of the original image, $(u, v)$ is the offset with $(x, y)$ as the center, and $w(x, y)$ is the weighted window function centered at $(x, y)$, for which this paper uses a Gaussian window function. $E(u, v)$ is the grayscale difference calculated based on the offset of the local window. Applying a first-order Taylor expansion to Formula (1), and then merging and simplifying, gives Formula (2).

$$E(u, v) \approx \begin{bmatrix} u & v \end{bmatrix} M \begin{bmatrix} u \\ v \end{bmatrix}, \qquad M = \sum_{x, y} w(x, y) \begin{bmatrix} I_x^2 & I_x I_y \\ I_x I_y & I_y^2 \end{bmatrix} \tag{2}$$

where $I_x$ and $I_y$ are the partial derivatives of the image in the x-axis and y-axis directions. $M$ is the covariance matrix of the image gradient, a real symmetric matrix. After diagonalization, the eigenvalues $\lambda_1$ and $\lambda_2$ in the two orthogonal directions are extracted. Formula (3) shows the corner response function.

$$R(x, y) = \min(\lambda_1, \lambda_2) \tag{3}$$

Unlike the Harris response, this function requires no empirically tuned parameter: the smaller of the two eigenvalues is used directly as the score R(x, y). Compared to the Harris algorithm, the Shi–Tomasi algorithm demonstrates greater robustness and is particularly valuable in processing SAR images with complex noise. In the preprocessing of SAR images, the Shi–Tomasi algorithm is applied only within the aircraft target box to minimize the influence of background noise, as illustrated in Figure 4. However, when the trained model predicts aircraft types and no target box information is available, the Shi–Tomasi algorithm is applied to the entire SAR image during preprocessing.

Figure 4. Corner detection results of aircraft targets using the Shi–Tomasi algorithm. The original SAR image (first column) and the image processed using the corner detection algorithm (in the bounding boxes).
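The Shi–Tomasi score described in this subsection, the smaller eigenvalue of the windowed gradient matrix, can be sketched in a few lines. The example below is a simplified illustration and not the implementation used in the paper: it assumes a uniform window instead of a Gaussian one and uses central differences for the gradients, and the function names are hypothetical.

```python
import math


def min_eigenvalue(a, b, c):
    """Smaller eigenvalue of the symmetric 2 x 2 matrix [[a, b], [b, c]]."""
    half_trace = (a + c) / 2
    return half_trace - math.sqrt(((a - c) / 2) ** 2 + b ** 2)


def shi_tomasi_response(patch):
    """Shi-Tomasi score of a small grayscale patch given as a list of rows.

    Assumption: every interior pixel gets the same window weight
    (a uniform window rather than the Gaussian window of the paper).
    """
    sxx = sxy = syy = 0.0
    for y in range(1, len(patch) - 1):
        for x in range(1, len(patch[0]) - 1):
            ix = (patch[y][x + 1] - patch[y][x - 1]) / 2  # gradient along x
            iy = (patch[y + 1][x] - patch[y - 1][x]) / 2  # gradient along y
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
    return min_eigenvalue(sxx, sxy, syy)


# Three toy 5 x 5 patches: constant, vertical edge, and corner.
flat = [[5] * 5 for _ in range(5)]
edge = [[0, 0, 9, 9, 9] for _ in range(5)]
corner = [[9 if (x >= 2 and y >= 2) else 0 for x in range(5)] for y in range(5)]

scores = {
    "flat": shi_tomasi_response(flat),
    "edge": shi_tomasi_response(edge),
    "corner": shi_tomasi_response(corner),
}
```

For these toy patches, the flat and edge patches score zero because at least one eigenvalue vanishes, while the corner patch scores clearly above zero. This is why thresholding the smaller eigenvalue isolates corner-like strong scattering points without a tuning constant.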

3.1.2. Multiplicative Noise-Filtering Algorithm

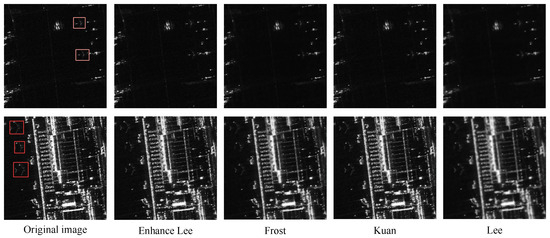

The principle of SAR imaging is based on the coherent imaging of microwaves. Interference among electromagnetic waves generates speckle noise in SAR images, a type of multiplicative noise. This noise can obscure the detailed information of aircraft targets, cause uneven brightness across the images, and severely impact the ability to identify aircraft types. We compared and analyzed four spatial domain filtering algorithms designed to suppress speckle noise in SAR images: the Lee, Frost, Kuan, and Enhanced Lee algorithms [40,41,42,43]. Each algorithm has distinct advantages and disadvantages, but their primary objective is to smooth speckle noise while preserving the detailed information of aircraft targets. In practice, the choice of filtering algorithm is tailored to specific scenarios. We extracted two images from the dataset, and the images processed using the above four algorithms are shown in Figure 5.

Figure 5. Comparison of four filtering algorithms on SAR images. The original SAR images with bounding boxes (first column) and filtered images after Enhanced Lee, Frost, Kuan, and Lee algorithms (second, third, fourth, and fifth columns).

It is difficult to evaluate the quality of the filtered images with the naked eye, so the filtering effects are evaluated using the ENL [44] and ESI [45] indicators. Section 4.3.1 provides a detailed description of the evaluation process. In this comparative analysis, the Enhanced Lee algorithm performed best, and we used it for filtering and denoising.

The Enhanced Lee filter computes each pixel of the filtered image from the corresponding pixel of the original image, the mean value of the filtering window, and a weight coefficient. The weight coefficient depends on the standard deviation coefficients of the filtering window and of the original image, where each standard deviation coefficient is the ratio of the corresponding standard deviation to the corresponding mean value. Two threshold values, both calculated from the ENL of the original image, divide the filtering windows into three cases. When the standard deviation coefficient of the window does not exceed the lower threshold, the window adopts the mean filtering algorithm. When it reaches or exceeds the upper threshold, the window retains the information of the original image. When it lies between the two thresholds, the window is most affected by noise and adopts the Lee filtering algorithm.
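The three-case logic of the Enhanced Lee filter can be illustrated with a per-pixel sketch. The thresholds and the exponential weight below follow one widely used formulation of the Enhanced Lee filter for intensity images and are assumptions for this example; they may differ in detail from the formulas used in this paper. The function name and the `damping` parameter are hypothetical.

```python
import math


def enhanced_lee_pixel(pixel, window_mean, window_std, enl, damping=1.0):
    """Filter one pixel from its local window statistics.

    Assumed thresholds (common textbook form, not confirmed by the paper):
      c_u   = 1 / sqrt(ENL)       expected variation of pure speckle
      c_max = sqrt(1 + 2 / ENL)   variation above which the pixel is kept
    """
    c_u = 1.0 / math.sqrt(enl)
    c_max = math.sqrt(1.0 + 2.0 / enl)
    # Standard deviation coefficient (coefficient of variation) of the window.
    c_i = window_std / window_mean if window_mean > 0 else 0.0

    if c_i <= c_u:
        # Homogeneous area: replace the pixel by the window mean.
        return window_mean
    if c_i >= c_max:
        # Strong scatterer or sharp edge: keep the original value.
        return pixel
    # Intermediate case: blend the mean and the pixel. The weight of the
    # mean decays as the local variation approaches the upper threshold.
    weight = math.exp(-damping * (c_i - c_u) / (c_max - c_i))
    return window_mean * weight + pixel * (1.0 - weight)


smooth = enhanced_lee_pixel(10.0, 4.0, 1.0, enl=4)   # low variation
blended = enhanced_lee_pixel(10.0, 4.0, 3.0, enl=4)  # intermediate variation
kept = enhanced_lee_pixel(10.0, 4.0, 6.0, enl=4)     # high variation
```

A full filter would slide a window over the image, compute the local mean and standard deviation at each position, and call a function like this one per pixel. The per-pixel form is shown here only to make the three cases explicit.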

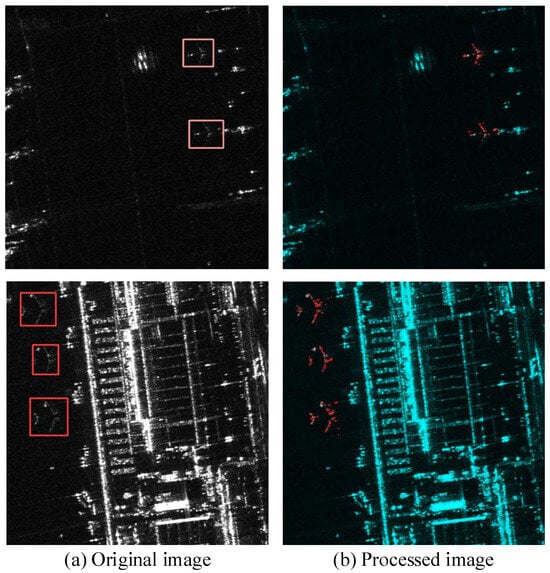

The new RGB image is constructed by assigning the Enhanced Lee filtered image to the green channel, the original SAR grayscale image to the blue channel, and the Shi–Tomasi feature image to the red channel. This channel allocation is strategically designed to maximize the complementarity of information and enhance the network’s feature learning capability for aircraft detection. Specifically, the blue channel retains the scene’s global backscattering and intensity information from the original SAR image, providing essential contextual cues. The red channel, containing the Shi–Tomasi output, highlights corner and edge features, strengthening the network’s ability to localize structural details and contours of aircraft targets. Meanwhile, the green channel incorporates the Enhanced Lee filtered image, which improves local contrast and texture by effectively reducing speckle noise while preserving fine details, crucial for distinguishing subtle aircraft features from complex backgrounds. By distributing these complementary features across separate channels, the downstream neural network is better equipped to extract, integrate, and utilize multi-modal information during training and inference. This unified representation encourages the model to learn more robust and discriminative features, ultimately improving aircraft target detection accuracy. As illustrated in Figure 6, the newly generated RGB images form the input for subsequent training and prediction, substantially boosting overall performance in aircraft detection tasks.

Figure 6. RGB images produced using the GTR method. The original SAR images with bounding boxes (first column) and the images processed using GTR method (second column).
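The channel assignment of the GTR method can be summarized with a small sketch. It assumes that the three inputs (original image, corner feature image, and filtered image) have already been computed and have identical sizes. The function name `compose_gtr` and the list-of-rows image representation are choices made for this example only.

```python
def compose_gtr(original, corner_map, filtered):
    """Stack three equally sized grayscale images into one RGB image.

    Channel order follows the text: R = corner features,
    G = Enhanced Lee filtered image, B = original SAR image.
    Each image is a list of rows; the result holds (R, G, B) tuples.
    """
    height, width = len(original), len(original[0])
    for name, img in (("original", original),
                      ("corner_map", corner_map),
                      ("filtered", filtered)):
        if len(img) != height or any(len(row) != width for row in img):
            raise ValueError(f"{name} does not have size {height} x {width}")
    return [
        [(corner_map[y][x], filtered[y][x], original[y][x]) for x in range(width)]
        for y in range(height)
    ]


# Tiny 1 x 2 example with distinct values so the channel order is visible.
rgb = compose_gtr(original=[[1, 2]], corner_map=[[3, 4]], filtered=[[5, 6]])
```

When such an image is written with a library that stores channels in BGR order, as OpenCV does, the tuple order would need to be reversed before saving.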

3.2. 4SDC Detection Model

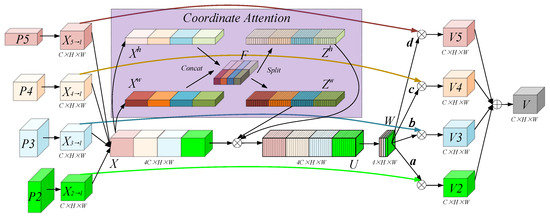

The original YOLOv8 model exhibits low detection accuracy for small targets. Its detection head comprises three branches for large, medium, and small targets, respectively. To enhance detection accuracy for tiny targets, we added a fourth detection branch to the YOLOv8 model. In the original YOLOv8, each detection branch contributes equally to predicting a target, although the deeper branches matter more for large targets and the shallower branches matter more for small targets. To address this, we integrated an attention mechanism into the head of the model, enabling automatic adjustment of the weight of each branch and adaptive fusion of their contributions. Prominent attention mechanisms include SENet, CBAM, and Coordinate Attention (CA) [46,47,48]. SENet focuses solely on channel dimension correlations, overlooking spatial dimensions. CBAM addresses spatial dimension correlations but is limited to local areas. The chosen CA mechanism considers both spatial and channel dimension relationships and addresses long-range dependencies. In this paper, we designed a 4SDC model to enhance the detection ability for multi-scale targets, as shown in Figure 7.

Figure 7. The 4SDC detection model. P2, P3, P4, and P5 are the inputs; X2→l, X3→l, X4→l, and X5→l represent that different inputs are adjusted to a unified size at the l layer; V2, V3, V4, and V5 are the feature maps after adaptive fusion at the l layer; and V is the output.

P2, P3, P4, and P5 represent the four detection branches output by the YOLOv8 architecture. The enhanced algorithm adaptively fuses these branches at each of the four levels. Taking the P4 level as an example (l = 4), the feature maps of P2, P3, P4, and P5 differ in resolution and channel count, so the feature maps from the other levels must be adjusted before they can be merged at the P4 level. P5 is up-sampled to change the resolution and passed through a 3 × 3 convolution to change the channels, giving X5→4. P2 and P3 are down-sampled to change the resolution and passed through a 3 × 3 convolution to change the channels, giving X2→4 and X3→4. The feature maps X2→4, X3→4, X4→4, and X5→4 all have size C × H × W. They are concatenated along the channel dimension to obtain the feature map X, which has a size of 4C × H × W. The expression of X is as follows:

$$X = \mathrm{Concat}\left(X_{2\to 4},\, X_{3\to 4},\, X_{4\to 4},\, X_{5\to 4}\right)$$

Since the resolutions of feature maps at different levels are different, simple fusion will introduce conflicting information and reduce the expressive ability of feature information. We adopted the CA mechanism, which enhances the expression of channel and spatial information and suppresses interference between unrelated information. For each channel c, the feature map X is average-pooled along the h and w directions to obtain $z^h$ and $z^w$. The calculation formulas are as follows:

$$z_c^h(h) = \frac{1}{W}\sum_{0 \le i < W} x_c(h, i), \qquad z_c^w(w) = \frac{1}{H}\sum_{0 \le j < H} x_c(j, w)$$

where $z^h$ and $z^w$ are concatenated in the spatial dimension, and the channel dimension is compressed using a convolution with a kernel size of 1 × 1, followed by the BN operation and the ReLU function, to obtain F:

$$F = \mathrm{ReLU}\left(\mathrm{BN}\left(\mathrm{Conv}_{1\times 1}\left([z^h, z^w]\right)\right)\right)$$

F is split into two vectors, $F^h$ and $F^w$, and the original channel dimension of each is recovered using a separate convolution with a kernel size of 1 × 1. A sigmoid function $\sigma$ is then applied to obtain the weight vectors $g^h$ and $g^w$:

$$g^h = \sigma\left(\mathrm{Conv}_{1\times 1}\left(F^h\right)\right), \qquad g^w = \sigma\left(\mathrm{Conv}_{1\times 1}\left(F^w\right)\right)$$

The two weight vectors are multiplied by the input X to obtain U:

$$u_c(i, j) = x_c(i, j) \times g_c^h(i) \times g_c^w(j)$$

where $u_c$ and $x_c$ represent the feature maps of the cth channel of U and X, which have the same size, 4C × H × W. The feature map U can amplify the weights of important features and capture the correlations between channel and spatial information. It can also explore the long-distance dependence between input and output features to reduce the interference of redundant and conflicting information. To further capture the spatial features, U is convolved with a kernel size of 1 × 1 to compress the channel dimension to four, giving W with a size of 4 × H × W. Then, the softmax function is applied to W across its four channels to obtain four normalized weight matrices, a, b, c, and d, each of size H × W. The new fused feature map V is obtained by multiplying the feature map at each level by its weight matrix and summing the results, which is shown as follows (l = 4):

$$V_4 = a \odot X_{2\to 4} + b \odot X_{3\to 4} + c \odot X_{4\to 4} + d \odot X_{5\to 4}$$

where $\odot$ denotes element-wise multiplication, with each H × W weight matrix applied to every channel of the corresponding feature map.

The weight matrices a, b, c, and d are learned and iteratively updated through network backpropagation during the model training process. These parameters adaptively adjust based on the input data to enhance aircraft target recognition in SAR images. For smaller aircraft targets, higher weights are assigned to shallow feature maps, whereas larger aircraft targets receive higher weights in deeper feature maps. This strategic allocation of weights between shallow and deep features enables more precise target recognition. Additionally, more contextual information is aggregated across feature layers with varying receptive fields, fully leveraging the multi-dimensional features of different depth layers. The resulting fused features combine robust semantic and rich textural details, thereby improving the detection and discrimination of small targets.
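The attention and fusion steps of the 4SDC head can be sketched on single-channel toy inputs. The example below is a simplified illustration and not the authors' implementation: the learned layers (1 × 1 convolutions, BN, ReLU, and sigmoid) are omitted, so the gate vectors and the pre-softmax weight maps are passed in as plain inputs, and all function names are hypothetical.

```python
import math


def directional_pool(x):
    """Average one H x W channel along each axis (the two pooled descriptors)."""
    h, w = len(x), len(x[0])
    z_h = [sum(row) / w for row in x]                             # one value per row
    z_w = [sum(x[i][j] for i in range(h)) / h for j in range(w)]  # one value per column
    return z_h, z_w


def apply_gates(x, g_h, g_w):
    """Reweight a channel: u[i][j] = x[i][j] * g_h[i] * g_w[j]."""
    return [[x[i][j] * g_h[i] * g_w[j] for j in range(len(x[0]))]
            for i in range(len(x))]


def softmax(values):
    """Numerically stable softmax over a short list of numbers."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def adaptive_fuse(features, logits):
    """Fuse four aligned H x W maps with per-pixel softmax weights.

    features: four H x W maps (one channel of X2->l, X3->l, X4->l, X5->l)
    logits:   four H x W maps (the 4 x H x W tensor before the softmax)
    """
    h, w = len(features[0]), len(features[0][0])
    fused = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            weights = softmax([logit[i][j] for logit in logits])
            fused[i][j] = sum(wt * f[i][j] for wt, f in zip(weights, features))
    return fused


pooled = directional_pool([[1, 3], [5, 7]])
gated = apply_gates([[1, 2], [3, 4]], g_h=[1, 0.5], g_w=[1, 0])

levels = [[[1.0]], [[2.0]], [[3.0]], [[4.0]]]
equal = adaptive_fuse(levels, [[[0.0]], [[0.0]], [[0.0]], [[0.0]]])
shallow = adaptive_fuse(levels, [[[50.0]], [[0.0]], [[0.0]], [[0.0]]])
```

With equal logits the fused value is the plain average of the four levels, while a large logit on the first (shallowest) level makes the output follow that level almost exclusively. This mirrors the behavior described above, in which small targets draw their weight from shallow feature maps.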

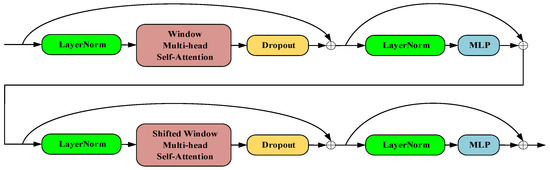

3.3. C2f-SWTran Feature Extraction Module

The backbone of YOLOv8 utilizes multiple convolutional layers and residual connections, which are effective for capturing local and hierarchical information from images. However, this convolutional architecture is limited in modeling long-range feature dependencies, as it relies on relatively small receptive fields and fixed-scale feature extraction. This limitation reduces the model’s robustness, especially in complex SAR scenarios. To address these challenges, we introduce the Swin Transformer architecture, which excels at capturing global feature dependencies through its shifted window and hierarchical structure. This design enables the effective modeling of both local and global representations, which is essential for complex visual tasks. Moreover, compared to standard global self-attention mechanisms, the Swin Transformer significantly reduces computational complexity, making it more suitable for large-scale vision applications. By integrating the strengths of both traditional convolutional structures and the Swin Transformer, we achieve complementary feature extraction. Based on this motivation, we developed the C2f-SWTran module to enhance detection accuracy. Figure 8 illustrates the architecture of the Swin Transformer.

Figure 8. Structural diagram of the Swin Transformer block. The model applies window-based and shifted window multi-head self-attention, combined with normalization, dropout, and MLP, to efficiently extract hierarchical features.

The Swin Transformer block comprises two sub-layers that function in tandem. Its core components, W-MSA and SW-MSA, enhance the traditional Transformer architecture. W-MSA divides the full feature map into multiple non-overlapping windows and conducts self-attention independently within each window, reducing computational demands and enhancing efficiency. However, this approach initially isolated information transfer between windows. To address this limitation, SW-MSA was introduced to facilitate information flow between adjacent windows, thereby improving the model’s grasp of global information in SAR images. The Swin Transformer structure significantly boosts both performance and efficiency, making it particularly effective for computer vision detection tasks in complex environments. In the YOLOv8 architecture, Swin Transformer acts as a plug-and-play module following the C2f module, and together with C2f, it forms the integrated C2f-SWTran module.
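The difference between the windows used by W-MSA and SW-MSA can be made concrete with a small sketch. It only shows how positions are grouped into windows. The self-attention computation and the attention mask that Swin Transformer applies after the cyclic shift are omitted, and the function names are assumptions for this example.

```python
def window_partition(grid, window):
    """Split an H x W grid into non-overlapping window x window tiles."""
    h, w = len(grid), len(grid[0])
    if h % window or w % window:
        raise ValueError("grid size must be a multiple of the window size")
    return [
        [row[left:left + window] for row in grid[top:top + window]]
        for top in range(0, h, window)
        for left in range(0, w, window)
    ]


def cyclic_shift(grid, shift):
    """Roll the grid up and to the left by `shift` cells, wrapping around."""
    rolled_rows = grid[shift:] + grid[:shift]
    return [row[shift:] + row[:shift] for row in rolled_rows]


# 4 x 4 map of position labels, window size 2, shift = window // 2 = 1.
grid = [[4 * r + c for c in range(4)] for r in range(4)]
regular = window_partition(grid, 2)                   # groups seen by W-MSA
shifted = window_partition(cyclic_shift(grid, 1), 2)  # groups seen by SW-MSA
```

In this toy case the first regular window groups positions 0, 1, 4, and 5, whereas the first shifted window groups positions 5, 6, 9, and 10, one from each of the four regular windows. Alternating the two partitions is what lets information cross window boundaries.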

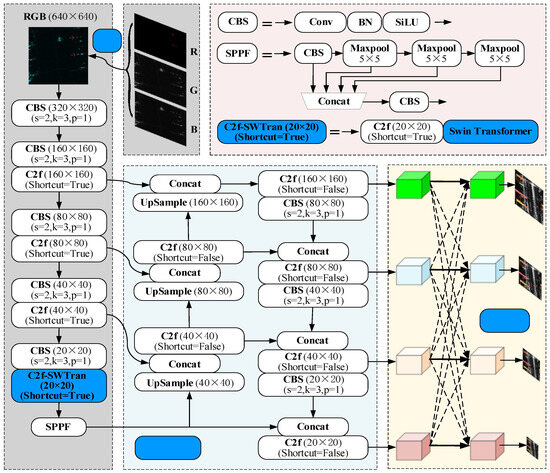

3.4. Improved YOLOv8 Structure

YOLOv8, known for its fast inference speed and high-precision detection, serves as the baseline for our study. We implemented three enhancements to improve the detection accuracy of aircraft targets. First, we transformed the grayscale images in the dataset into RGB images using the Shi–Tomasi algorithm and Enhanced Lee filtering, which enhances the feature representation ability of SAR images and matches the three-channel color input expected by the YOLOv8 model. Second, we developed the 4SDC module to improve the detection accuracy of multi-scale targets, with the most evident improvement for small targets. Third, we integrated the Swin Transformer into YOLOv8’s backbone to enhance the detection accuracy. Figure 9 illustrates the improved YOLOv8 model.

Figure 9. Overview of the improved YOLOv8 architecture. The model integrates the GTR, C2f-SWTran, and 4SDC modules to enhance feature extraction and multi-scale fusion, improving detection accuracy for SAR targets.

4. Results

To validate the effectiveness of our improvement strategies, we conducted a series of ablation studies. This section is organized in three parts: an overview of the ISPRS-SAR-Aircraft and SAR-AIRcraft-1.0 datasets, a description of the experimental setup, and a comparative analysis of the methods and results.

4.1. ISPRS-SAR-Aircraft Dataset and SAR-AIRcraft-1.0 Dataset

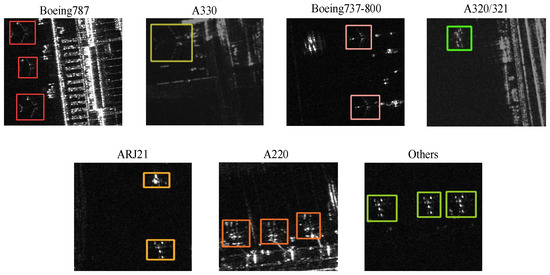

Both datasets are constructed from images acquired by the Chinese Gaofen-3 satellite and cover multiple civil airports at different periods. Each dataset contains seven aircraft categories. We selected seven representative images from the two datasets (Figure 10). Each image provides detailed category information. However, the presence of non-target elements such as terminal buildings and baggage trolleys, along with coherent speckle noise, contributes to the complexity of the SAR images. These images are characterized by complex scenes, diverse noise, and multiple scales, heightening the challenge of recognizing aircraft types.

Figure 10. Examples of seven aircraft categories in SAR images. Each image shows representative samples with colored boxes marking different aircraft types, illustrating the dataset’s diversity and annotation quality.

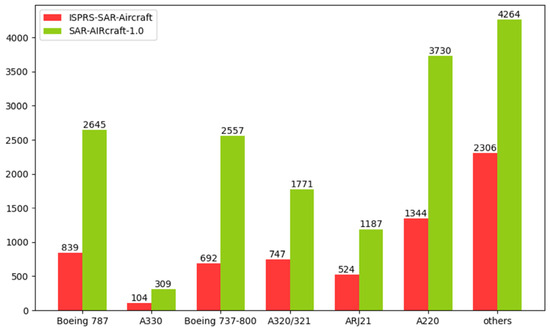

The ISPRS-SAR-Aircraft dataset contains 2000 SAR images and 6556 aircraft instances. The SAR-AIRcraft-1.0 dataset contains 4368 SAR images and 16,463 aircraft instances. The SAR images in the training, validation, and test sets are distributed according to an 8:1:1 ratio, and the processing methods of the two datasets are consistent. The number of each type of aircraft is illustrated in Figure 11.

Figure 11.

The quantity of each aircraft type in the two datasets.

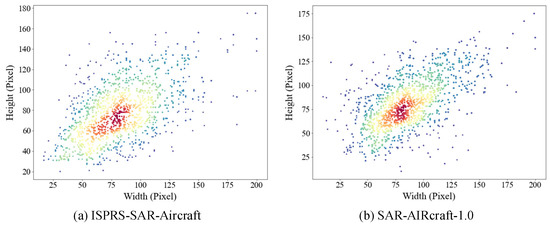
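The 8:1:1 partition described above can be sketched as follows. This is a minimal illustration, assuming a random image-level shuffle with a fixed seed; the file names and the `split_8_1_1` helper are hypothetical and are not taken from the original processing scripts.

```python
import random


def split_8_1_1(items, seed=0):
    """Shuffle items and split them into train/val/test at an 8:1:1 ratio."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    # Integer arithmetic avoids floating-point rounding at the boundaries.
    n_train = len(shuffled) * 8 // 10
    n_val = len(shuffled) // 10
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # the remainder goes to the test set
    return train, val, test


# Hypothetical file names standing in for the 2000 ISPRS-SAR-Aircraft images.
image_ids = [f"img_{i:04d}.png" for i in range(2000)]
train, val, test = split_8_1_1(image_ids)
print(len(train), len(val), len(test))
```

When the image count is not divisible by 10 (for example, 4368 images), this sketch assigns the leftover images to the test set; other rounding conventions are equally plausible.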

The sizes of the aircraft target boxes in the two datasets vary significantly, and there is a relatively large number of small targets. We classify target boxes smaller than 60 × 60 pixels as small targets. The ISPRS-SAR-Aircraft dataset contains 6556 aircraft instances, among which 1956 are small targets, and their distribution is shown in Figure 12a. The SAR-AIRcraft-1.0 dataset contains 16,463 aircraft instances, among which 3874 are small targets, and their distribution is illustrated in Figure 12b.

Figure 12.

Size distribution of target boxes in both SAR datasets. Different colors represent the different distribution densities of target box sizes.
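The small-target criterion and the resulting proportions can be illustrated with a short sketch. It assumes that a box counts as small when both its width and its height are below 60 pixels; that reading of the threshold, and the helper names, are assumptions rather than the authors' exact rule. The instance counts are those reported above.

```python
SMALL_SIDE = 60  # pixel threshold taken from the text


def is_small_target(width, height, side=SMALL_SIDE):
    """Assumed rule: a box is small when both sides are below the threshold."""
    return width < side and height < side


def small_share(n_small, n_total):
    """Fraction of instances that are small targets."""
    return n_small / n_total


# Instance counts reported for the two datasets.
isprs_share = small_share(1956, 6556)
sar_aircraft_share = small_share(3874, 16463)
print(f"ISPRS-SAR-Aircraft: {isprs_share:.1%}, SAR-AIRcraft-1.0: {sar_aircraft_share:.1%}")
```

Under these counts, roughly three in ten instances in ISPRS-SAR-Aircraft and just under a quarter in SAR-AIRcraft-1.0 are small targets.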

4.2. The Configuration of Our YOLOv8 Experimental Environment

All experiments were conducted on a 64-bit Windows 11 system equipped with an NVIDIA GeForce RTX 4090 GPU, utilizing CUDA 12.4 for acceleration. The network was implemented in PyTorch 2.5.1 with Python 3.12.8. Hyperparameter configurations are summarized in Table 1. Our choices were informed by both best practices in the literature and preliminary validation experiments. The learning rate (0.01) and optimizer (SGD) were selected for their reliable convergence and robust performance in YOLO-based object detection tasks. A batch size of 32 was determined to offer an optimal balance between computational efficiency and detection accuracy. Data augmentation parameters—including Mosaic, Flipud, Fliplr, and Scale—were tuned through grid search within reasonable ranges, with final values selected based on peak mean Average Precision (mAP) on the validation set while mitigating overfitting.

Table 1.

The configurations of hyperparameters.
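As an illustration of how the stated settings map onto a training call, the sketch below collects them as keyword arguments in the style of the Ultralytics trainer. Only the optimizer, learning rate, and batch size named in the text are filled in; the augmentation strengths come from Table 1 and are deliberately passed in by the caller rather than guessed. The helper `build_train_kwargs` and the file name `dataset.yaml` are hypothetical.

```python
def build_train_kwargs(augmentation=None):
    """Collect the training settings stated in the text as keyword arguments."""
    kwargs = {
        "optimizer": "SGD",  # optimizer named in the text
        "lr0": 0.01,         # initial learning rate named in the text
        "batch": 32,         # batch size named in the text
    }
    # Augmentation strengths (mosaic, flipud, fliplr, scale) are listed in
    # Table 1; they are supplied by the caller instead of being assumed here.
    kwargs.update(augmentation or {})
    return kwargs


train_kwargs = build_train_kwargs()
print(train_kwargs)

# With the ultralytics package installed, the settings could be passed as:
#   from ultralytics import YOLO
#   YOLO("yolov8n.yaml").train(data="dataset.yaml", **train_kwargs)
# where "dataset.yaml" is a hypothetical dataset description file.
```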

4.3. Experimental Results

4.3.1. Experimental Analysis of the Enhanced Lee Algorithm

In this paper, we applied four coherent speckle-filtering algorithms to the SAR images: Lee, Frost, Kuan, and Enhanced Lee. Different algorithms suit different application scenarios, and judging their quality by visual inspection alone is difficult. Therefore, we employed two indicators, ENL and ESI, to assess the effectiveness of the filtering algorithms. ENL measures the noise level and texture smoothness of a SAR image: the better a filtering algorithm suppresses noise, the higher the ENL value. The formula of ENL is as follows:

$$\mathrm{ENL} = \frac{\mu^{2}}{\sigma^{2}}$$

where µ represents the mean value of the SAR image, and σ² represents the variance of the SAR image. ESI is an indicator for evaluating the edge information preservation ability of different SAR image-filtering algorithms. ESI measures whether an image-filtering algorithm can effectively retain edge and detail information while removing noise. The closer the ESI value is to 1, the more effectively the filtering algorithm retains edge and detail information in the SAR image. The formula for ESI is shown below:

$$\mathrm{ESI} = \frac{\sum_{i,j}\left(\left|\hat{I}(i,j+1)-\hat{I}(i,j)\right| + \left|\hat{I}(i+1,j)-\hat{I}(i,j)\right|\right)}{\sum_{i,j}\left(\left|I(i,j+1)-I(i,j)\right| + \left|I(i+1,j)-I(i,j)\right|\right)}$$

where $I(i,j)$ represents the pixel's grayscale value at position $(i,j)$ in the SAR image before filtering, and $\hat{I}(i,j)$ represents the pixel's grayscale value at position $(i,j)$ in the SAR image after filtering; the sums run over horizontally and vertically adjacent pixel pairs (the commonly used form of ESI). ENL and ESI usually constrain each other: increasing the ENL reduces the ESI, and increasing the ESI reduces the ENL. In SAR image recognition, edge information is a crucial feature. If the edge information is seriously weakened, the subsequent recognition of aircraft targets is significantly affected. Therefore, algorithms with higher ESI values are preferred. We conducted experiments using the four filtering algorithms on all SAR images in the dataset. The average ENL and ESI values were calculated and are presented in Table 2:

Table 2.

ENL and ESI values for different filter algorithms.
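The two indicators can be computed with a few lines of code. The sketch below is a minimal pure-Python illustration: ENL is taken as the squared mean divided by the variance, and ESI is assumed to be the ratio of summed absolute differences between adjacent pixels after and before filtering. That gradient-ratio form of ESI and the function names are assumptions; an actual pipeline would normally operate on image arrays rather than nested lists.

```python
def enl(image):
    """Equivalent number of looks: squared mean over variance of pixel values."""
    pixels = [float(v) for row in image for v in row]
    mean = sum(pixels) / len(pixels)
    var = sum((v - mean) ** 2 for v in pixels) / len(pixels)
    return float("inf") if var == 0 else mean * mean / var


def gradient_sum(image):
    """Sum of absolute differences over horizontally and vertically adjacent pixels."""
    rows, cols = len(image), len(image[0])
    total = 0.0
    for i in range(rows):
        for j in range(cols):
            if j + 1 < cols:
                total += abs(image[i][j + 1] - image[i][j])
            if i + 1 < rows:
                total += abs(image[i + 1][j] - image[i][j])
    return total


def esi(original, filtered):
    """Assumed ESI form: gradient sum after filtering over gradient sum before."""
    reference = gradient_sum(original)
    if reference == 0:
        raise ValueError("original image has no edges to preserve")
    return gradient_sum(filtered) / reference


# Toy 2x4 patch with a vertical edge, and a smoothed version of it.
original = [[10, 10, 50, 50], [10, 10, 50, 50]]
smoothed = [[10, 20, 40, 50], [10, 20, 40, 50]]
print(enl(original), esi(original, smoothed))
```

In the toy patch, smoothing spreads the edge over several pixels without changing the summed differences, which shows that ESI in this form measures the total edge contrast rather than edge sharpness.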

In SAR images, the edge information of aircraft targets is best preserved by the Enhanced Lee algorithm, which attains an ESI value of 0.883. This algorithm adapts its response to the pixel distribution within each filtering window: it suppresses intense noise and ignores mild noise, effectively reducing noise in SAR images. For this study, we therefore used the Enhanced Lee algorithm for the filtering process.
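To make the window-adaptive behavior concrete, the following sketch implements a commonly cited three-region formulation of the Enhanced Lee filter: homogeneous windows are replaced by their mean, strongly heterogeneous windows keep the center pixel, and intermediate windows blend the two. The thresholds, the `looks` and `damping` parameters, and the border handling are assumptions for illustration and may differ from the variant used in this work.

```python
import math


def enhanced_lee(image, window=3, looks=1.0, damping=1.0):
    """Three-region Enhanced Lee filter on a 2D list of positive intensities."""
    half = window // 2
    rows, cols = len(image), len(image[0])
    cu = 1.0 / math.sqrt(looks)          # speckle variation coefficient
    cmax = math.sqrt(1.0 + 2.0 / looks)  # upper heterogeneity threshold
    out = [[0.0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            # Collect the window, clipped at the image borders.
            vals = [float(image[r][c])
                    for r in range(max(0, i - half), min(rows, i + half + 1))
                    for c in range(max(0, j - half), min(cols, j + half + 1))]
            mean = sum(vals) / len(vals)
            var = sum((v - mean) ** 2 for v in vals) / len(vals)
            ci = math.sqrt(var) / mean if mean > 0 else 0.0
            center = float(image[i][j])
            if ci <= cu:
                out[i][j] = mean      # homogeneous area: average out speckle
            elif ci < cmax:
                weight = math.exp(-damping * (ci - cu) / (cmax - ci))
                out[i][j] = mean * weight + center * (1.0 - weight)
            else:
                out[i][j] = center    # strong scatterer or edge: keep the pixel
    return out


# A flat patch with mild variation is smoothed; a strong point target is kept.
mild = [[9, 11, 9], [11, 9, 11], [9, 11, 9]]
point = [[1] * 5 for _ in range(5)]
point[2][2] = 100
print(enhanced_lee(mild)[1][1], enhanced_lee(point)[2][2])
```

Preserving strong scatterers is the property that matters for aircraft targets, whose bright returns would otherwise be averaged into the background.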

4.3.2. Experimental Analysis of GTR

As described in Section 3.1, we utilized the Shi–Tomasi corner detection algorithm and the Enhanced Lee noise-filtering algorithm to transform grayscale images in the SAR dataset into RGB images, enriching the information content and creating the RGB dataset. We adopt two assessment parameters: AP and mAP. AP is an indicator for measuring the performance of single-type aircraft target detection. The higher its value, the better the detection performance of the algorithm. Its formula is as follows:

$$AP = \int_{0}^{1} P(R)\,\mathrm{d}R$$

where AP represents the area enclosed by the P–R curve and the coordinate axes, and the calculation formulas for P and R are as follows:

$$P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}$$

where P and R are determined by TP, FP, and FN. In these abbreviations, the first character indicates whether the prediction is correct (T for a correct prediction, F for an incorrect one), and the second character indicates the predicted class (P for the positive class, N for the negative class). TP represents the number of aircraft targets predicted correctly. FP represents the number of false alarms for aircraft targets. FN represents the number of aircraft targets missed in the detection. mAP is the average value of AP over all types of aircraft. The higher its value, the better the comprehensive detection performance of the algorithm. The dataset contains a total of seven types of aircraft targets, and the formula for mAP is as follows:

$$mAP = \frac{1}{N}\sum_{i=1}^{N} AP_{i}, \qquad N = 7$$

where $AP_{i}$ is the AP of the $i$-th aircraft type and $N$ is the number of aircraft types. mAP50 indicates that the IoU threshold is 0.5. mAP50~95 is the average of the 10 mAP values obtained at IoU thresholds from 0.5 to 0.95 in steps of 0.05. To evaluate the effectiveness of the GTR, we conducted the following ablation experiments:

As shown in Table 3, we performed ablation experiments on the two datasets using the YOLOv8n and YOLOv8l models as baselines. “×” indicates that a method is not used, while “√” indicates that it is used. On the ISPRS-SAR-Aircraft dataset, integrating the GTR method improved the mAP50~95 of YOLOv8n by 1.3% and that of YOLOv8l by 1.2%. On the SAR-AIRcraft-1.0 dataset, integrating the GTR method improved the mAP50~95 of YOLOv8n by 1.9% and that of YOLOv8l by 2.1%. This experiment demonstrates that the GTR method effectively enriches the features of SAR images, thereby improving the detection accuracy of aircraft targets.

Table 3.

Comparative experiment based on the GTR method.
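The evaluation metrics defined in this subsection can be sketched as follows. The code computes precision and recall from TP, FP, and FN, approximates AP as the area under the P–R curve using the all-point interpolation (one common convention; the exact integration rule used by the evaluation toolkit is an assumption here), and averages AP over classes to obtain mAP. The list of IoU thresholds corresponds to the mAP50~95 definition.

```python
def precision_recall(tp, fp, fn):
    """P = TP / (TP + FP), R = TP / (TP + FN)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def average_precision(recalls, precisions):
    """Area under the P-R curve; recalls must be sorted in ascending order."""
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Replace each precision by the maximum precision at any higher recall.
    for k in range(len(p) - 2, -1, -1):
        p[k] = max(p[k], p[k + 1])
    return sum((r[k + 1] - r[k]) * p[k + 1] for k in range(len(r) - 1))


def mean_ap(ap_per_class):
    """mAP: the mean of the per-class AP values."""
    return sum(ap_per_class) / len(ap_per_class)


# IoU thresholds averaged over for mAP50~95: 0.50, 0.55, ..., 0.95.
IOU_THRESHOLDS = [round(0.5 + 0.05 * k, 2) for k in range(10)]

print(precision_recall(8, 2, 2))
print(average_precision([0.5, 1.0], [1.0, 0.5]))
print(IOU_THRESHOLDS)
```

In practice, mAP50~95 is obtained by repeating the AP computation at each threshold in `IOU_THRESHOLDS` and averaging the ten resulting mAP values.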

4.3.3. Experimental Analysis of 4SDC Method

The 4SDC module extends the original three detection branches of YOLOv8 to include a fourth detection branch, which is integrated after the second feature layer of the backbone structure primarily to enhance detection capabilities for small targets. The CA mechanism is incorporated into the detection head, enabling adaptive adjustment of the contribution degrees of different detection branches when detecting aircraft targets, thereby improving the overall detection capabilities. To evaluate the performance of the 4SDC module, we conducted ablation studies. The experimental results are shown in Table 4.

Table 4.

Comparative experiment based on the 4SDC method.
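A short calculation illustrates why a fourth, shallower detection branch helps with small targets. The sketch assumes a 640 × 640 input, the standard YOLOv8 head strides of 8, 16, and 32, and a stride of 4 for the added branch (consistent with a branch taken after the second backbone feature layer); these values are assumptions for illustration.

```python
def grid_sizes(input_size, strides):
    """Side length of the detection grid at each stride."""
    return {stride: input_size // stride for stride in strides}


INPUT_SIZE = 640            # assumed input resolution
BASE_STRIDES = (8, 16, 32)  # standard YOLOv8 detection branches
EXTRA_STRIDE = 4            # assumed stride of the added fourth branch

grids = grid_sizes(INPUT_SIZE, (EXTRA_STRIDE,) + BASE_STRIDES)
for stride, side in grids.items():
    # Number of grid cells spanned by one side of a 60-pixel target.
    cells = 60 / stride
    print(f"stride {stride:2d}: grid {side}x{side}, 60 px target spans {cells:.2f} cells")
```

At stride 32, a 60-pixel target covers less than two cells per side, whereas at stride 4 it covers fifteen, so the added branch retains far more spatial detail for such targets.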

We established two additional validation sets: a small-target validation set (S-aircraft) and a large-target validation set (L-aircraft). Images containing small aircraft targets (smaller than 60 × 60 pixels) are assigned to the small-target validation set, while all other images are assigned to the large-target validation set. In the ISPRS-SAR-Aircraft dataset, the small-target set includes 397 SAR images, and the large-target set comprises 1603 SAR images. Similarly, in the SAR-AIRcraft-1.0 dataset, the small-target set contains 866 images, and the large-target set includes 3502 images. On the ISPRS-SAR-Aircraft dataset, integrating 4SDC improved the mAP50~95 of YOLOv8n with GTR by 0.6% overall and by 0.9% on S-aircraft, and improved the mAP50~95 of YOLOv8l with GTR by 0.5% overall and by 0.8% on S-aircraft. On the SAR-AIRcraft-1.0 dataset, integrating 4SDC improved the mAP50~95 of YOLOv8n with GTR by 0.7% overall and by 1.6% on S-aircraft, and improved the mAP50~95 of YOLOv8l with GTR by 0.6% overall and by 1.4% on S-aircraft. These ablation experiments confirm that the 4SDC structure enhances the detection accuracy of aircraft targets, particularly small targets. The prediction results are displayed in Figure 13.

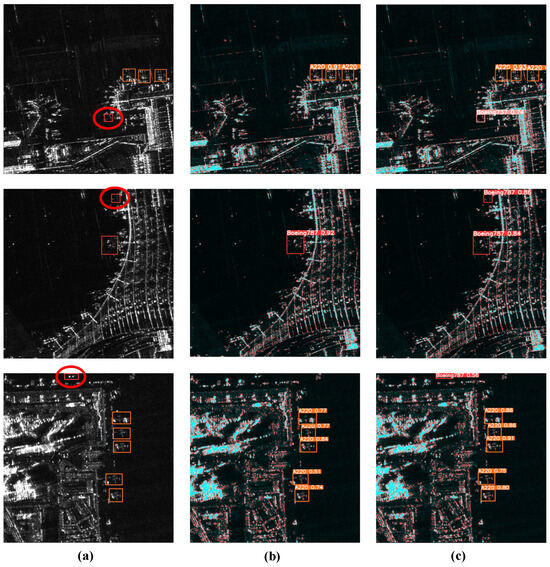

Figure 13 shows the aircraft target recognition results after introducing the 4SDC structure. Rectangular boxes of different colors represent different types of aircraft targets. In Figure 13a, the aircraft marked with red circles are small targets. Figure 13b shows that YOLOv8l combined with the GTR algorithm alone misses more of these targets, which lowers the overall detection accuracy on SAR images of aircraft. Incorporating 4SDC enhances the algorithm's detection performance for small targets, as demonstrated in Figure 13c.

Figure 13.

Comparison of results for 4SDC. (a) The original SAR images with bounding boxes. (b) Recognition results of YOLOv8l with GTR. (c) Recognition results of YOLOv8l with GTR and 4SDC.

4.3.4. Experimental Analysis of C2f-SWTran Module

The C2f-SWTran is a plug-and-play module that, when applied to the YOLOv8 backbone, enhances the capture of global features in SAR images. This module incorporates a self-attention mechanism, which can impact inference speed due to its large parameter count. Consequently, only one C2f-SWTran module was incorporated into the backbone structure. The YOLOv8 backbone comprises five feature layers of varying image sizes. Applying the C2f-SWTran module to the shallow feature maps would increase parameter consumption. Therefore, we opted to integrate this module into the fifth-layer feature map, which has the smallest image size, balancing accuracy and efficiency. Subsequent ablation studies were conducted to evaluate this approach.
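The placement argument can be made concrete by counting attention tokens per feature layer. The sketch below assumes a 640 × 640 input, five backbone feature layers with strides 2, 4, 8, 16, and 32, and a Swin-style window of 7 × 7 tokens; all three values are assumptions, and the count ignores window padding. It addresses only the attention computation, not the parameter count.

```python
INPUT_SIZE = 640                                    # assumed input resolution
LAYER_STRIDES = {1: 2, 2: 4, 3: 8, 4: 16, 5: 32}    # assumed stride per feature layer
WINDOW = 7                                          # assumed window size in tokens


def attention_cost(input_size, stride, window):
    """Return (token count, token pairs scored by window attention)."""
    side = input_size // stride
    tokens = side * side
    # Each token attends to the window*window tokens in its own window.
    windowed_pairs = tokens * window * window
    return tokens, windowed_pairs


costs = {layer: attention_cost(INPUT_SIZE, stride, WINDOW)
         for layer, stride in LAYER_STRIDES.items()}
for layer, (tokens, pairs) in costs.items():
    print(f"layer {layer}: {tokens} tokens, {pairs} attention pairs")
```

Under these assumptions, the fifth layer has 64 times fewer tokens than the second, which is consistent with choosing the smallest feature map for the self-attention module.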

As shown in Table 5, on the ISPRS-SAR-Aircraft dataset, the C2f-SWTran module improves the mAP50~95 of YOLOv8n and YOLOv8l with GTR + 4SDC by 0.5% and 0.6%, respectively, and improves the mAP50~95 of YOLOv8n and YOLOv8l without GTR + 4SDC by 0.6% and 0.7%, respectively. On the SAR-AIRcraft-1.0 dataset, the C2f-SWTran module improves the mAP50~95 of YOLOv8n and YOLOv8l with GTR + 4SDC by 0.6% and 0.7%, respectively, and improves the mAP50~95 of YOLOv8n and YOLOv8l without GTR + 4SDC by 0.7% and 0.8%, respectively. Whether or not YOLOv8 is configured with GTR + 4SDC, the improvement contributed by the C2f-SWTran module differs by only 0.1%, indicating that the C2f-SWTran module and the GTR + 4SDC combination are largely independent of each other. Applying the same analysis to Table 4 and Table 5 leads to a similar conclusion: the 4SDC module and the GTR + C2f-SWTran combination are largely independent of each other. The three key modules (GTR, 4SDC, and C2f-SWTran) are designed to be complementary, each addressing a different aspect of the detection task, so the gain from one module is nearly unaffected by the others. Their combined use leads to a significant overall performance improvement.

Table 5.

Comparative experiment based on the C2f-SWTran module.
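The near-additivity of the three modules can be checked directly from the reported numbers. The sketch below sums the per-module mAP50~95 gains from Sections 4.3.2 to 4.3.4 (with C2f-SWTran measured on top of GTR + 4SDC) and compares them with the overall gains over the original models reported in Section 4.3.5; the dictionary layout is only for illustration.

```python
# mAP50~95 gains in percentage points, as reported in the text.
gains = {
    ("ISPRS-SAR-Aircraft", "YOLOv8n"): {"GTR": 1.3, "4SDC": 0.6, "C2f-SWTran": 0.5, "total": 2.4},
    ("ISPRS-SAR-Aircraft", "YOLOv8l"): {"GTR": 1.2, "4SDC": 0.5, "C2f-SWTran": 0.6, "total": 2.3},
    ("SAR-AIRcraft-1.0", "YOLOv8n"): {"GTR": 1.9, "4SDC": 0.7, "C2f-SWTran": 0.6, "total": 3.2},
    ("SAR-AIRcraft-1.0", "YOLOv8l"): {"GTR": 2.1, "4SDC": 0.6, "C2f-SWTran": 0.7, "total": 3.4},
}


def summed_gain(entry):
    """Sum of the three per-module gains, rounded to one decimal place."""
    return round(entry["GTR"] + entry["4SDC"] + entry["C2f-SWTran"], 1)


for (dataset, model), entry in gains.items():
    print(dataset, model, summed_gain(entry), entry["total"])
```

In all four configurations the summed step-wise gains equal the reported overall gain, which is what one expects when each module is added cumulatively to the previous configuration.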

PARAM reflects the size of the model parameters. GFLOPs reflect the computational complexity of the model. From the experimental data, it is found that YOLOv8n and YOLOv8l with C2f-SWTran have a PARAM that is 0.24 MB and 1.1 MB larger, respectively, and GFLOPs that are 1.7 G and 10.6 G larger, respectively, compared to the original YOLOv8n and YOLOv8l. YOLOv8n and YOLOv8l with GTR + 4SDC + C2f-SWTran have a PARAM that is 0.25 MB and 1.1 MB larger, respectively, and GFLOPs that are 1.7 G and 10.6 G larger, respectively, compared to the YOLOv8n and YOLOv8l with GTR + 4SDC. The results of the ablation experiment show that after integrating the C2f-SWTran module into the fifth-layer feature map of the YOLOv8 backbone network, the overall model experiences only a slight increase in the number of parameters and computational complexity. However, there is a certain degree of improvement in the detection accuracy of aircraft targets. Therefore, the added computational cost is reasonable relative to the performance boost, and inference remains efficient for practical use.

4.3.5. Comparative Analysis of Improved Algorithms with Other Algorithms

To further demonstrate the effectiveness and superiority of our proposed method, we conducted additional experiments comparing it with state-of-the-art non-YOLO models, including Faster R-CNN, SSD, SKG-DDT [49], and EBPA2N [25]. The results are presented in Table 6 below.

Table 6.

Comparative experiment with other algorithms.

Our proposed method, based on YOLOv8l with the GTR, 4SDC, and C2f-SWTran modules integrated (last row for each dataset), achieves the highest detection accuracy on both datasets. Specifically, (1) on the ISPRS-SAR-Aircraft dataset, our method achieves an mAP50 of 0.932 and an mAP50~95 of 0.742, outperforming all other models. Compared to non-YOLO methods such as Faster R-CNN (mAP50: 0.859) and SSD (mAP50: 0.805), our approach improves the mAP50 by 7.3% and 12.7%, respectively. (2) On the SAR-AIRcraft-1.0 dataset, our method achieves an mAP50 of 0.929 and an mAP50~95 of 0.683. This again exceeds both traditional and recent approaches, namely Faster R-CNN (mAP50: 0.838), SSD (mAP50: 0.795), SKG-DDT (mAP50: 0.892), YOLOv7 (mAP50: 0.880), and EBPA2N (mAP50: 0.913), with mAP50 improvements of 9.1%, 13.4%, 3.7%, 4.9%, and 1.6%, respectively. In addition, on the ISPRS-SAR-Aircraft dataset, the improved YOLOv8n and YOLOv8l raise the mAP50~95 by 2.4% and 2.3% over the original YOLOv8n and YOLOv8l, respectively. On the SAR-AIRcraft-1.0 dataset, the improved YOLOv8n and YOLOv8l raise the mAP50~95 by 3.2% and 3.4% over the original YOLOv8n and YOLOv8l, respectively. These performance gaps further demonstrate the effectiveness of our proposed modules in improving SAR aircraft detection.
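The mAP50 margins quoted above are absolute differences expressed in percentage points, which can be verified with a short calculation. The values below are the ones reported in the text; the helper name `margin_points` is illustrative.

```python
# mAP50 of the proposed method and of the compared methods, as reported.
ours = {"ISPRS-SAR-Aircraft": 0.932, "SAR-AIRcraft-1.0": 0.929}
others = {
    "ISPRS-SAR-Aircraft": {"Faster R-CNN": 0.859, "SSD": 0.805},
    "SAR-AIRcraft-1.0": {
        "Faster R-CNN": 0.838,
        "SSD": 0.795,
        "SKG-DDT": 0.892,
        "YOLOv7": 0.880,
        "EBPA2N": 0.913,
    },
}


def margin_points(ours_map, other_map):
    """Absolute mAP50 difference in percentage points, rounded to one decimal."""
    return round((ours_map - other_map) * 100, 1)


margins = {
    dataset: {name: margin_points(ours[dataset], value) for name, value in methods.items()}
    for dataset, methods in others.items()
}
for dataset, result in margins.items():
    print(dataset, result)
```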

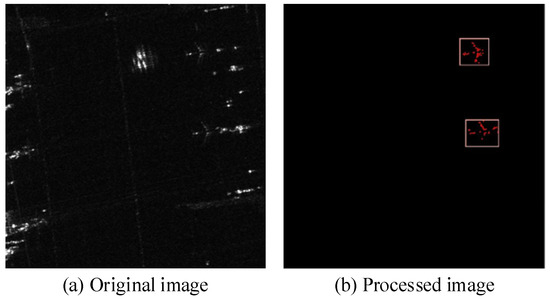

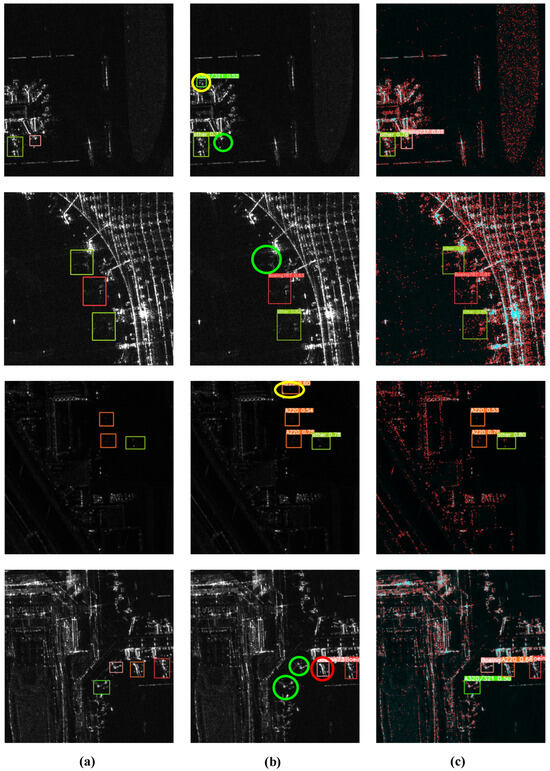

Experimental analysis suggests that the original YOLOv8 model frequently encounters issues with missed detections, false detections, and false alarms for aircraft targets. As depicted in Figure 14b, green circles indicate missed detections, red circles denote false detections, and yellow circles signify false alarms of aircraft targets. Integrating the three improvement methods designed in this study into YOLOv8 has significantly enhanced the accuracy by reducing the rates of missed detections, false detections, and false alarms, as demonstrated in Figure 14c.

Figure 14.

Comparison of the results. (a) The original SAR images with bounding boxes. (b) Recognition results of the original YOLOv8l. (c) Recognition results of our improved YOLOv8l.
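The three error types highlighted in Figure 14 can be counted by matching predictions to ground-truth boxes. The sketch below uses greedy IoU matching at a threshold of 0.5 and assumes the following reading of the terms: a false alarm is a prediction that overlaps no aircraft, a false detection is a prediction that overlaps an aircraft but assigns the wrong type, and a missed detection is an aircraft with no matching prediction. This mapping, the box format, and the function names are assumptions for illustration.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def tally_detections(predictions, ground_truth, iou_threshold=0.5):
    """Greedily match (box, label) predictions, sorted by confidence, to ground truth."""
    matched = set()
    tally = {"correct": 0, "false_detection": 0, "false_alarm": 0, "missed": 0}
    for box, label in predictions:
        best_idx, best_iou = None, iou_threshold
        for idx, (gt_box, _) in enumerate(ground_truth):
            if idx in matched:
                continue
            overlap = iou(box, gt_box)
            if overlap >= best_iou:
                best_idx, best_iou = idx, overlap
        if best_idx is None:
            tally["false_alarm"] += 1          # no aircraft under this box
        else:
            matched.add(best_idx)
            if ground_truth[best_idx][1] == label:
                tally["correct"] += 1
            else:
                tally["false_detection"] += 1  # aircraft found, wrong type
    tally["missed"] = len(ground_truth) - len(matched)
    return tally


# Toy example with hypothetical type labels "A" and "B".
truth = [((0, 0, 10, 10), "A"), ((20, 20, 30, 30), "B"), ((50, 50, 60, 60), "A")]
preds = [((0, 0, 10, 10), "A"), ((21, 21, 31, 31), "A"), ((80, 80, 90, 90), "B")]
print(tally_detections(preds, truth))
```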

4.3.6. Discussion of Cross-Dataset Adaptation

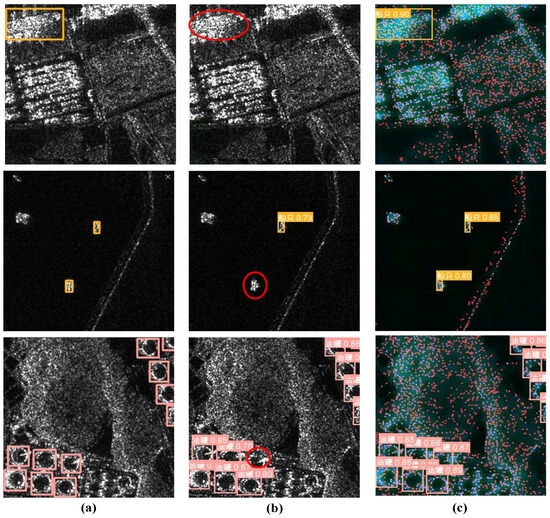

Although the method designed in this paper is applied to the recognition of aircraft targets in SAR images, it is intended as a general-purpose solution for SAR image target recognition. To verify its generality, we introduce the MSAR-1.0 dataset for further experiments [50]. This dataset contains 28,449 detection slices sourced from HISEA-1 and Gaofen-3 satellite data. The images include four categories of targets: aircraft, oil tanks, bridges, and ships.

YOLOv8n is again used as the baseline. The comparison between the improved method and the original YOLOv8n is given in Table 7, and the detection results are shown in Figure 15. The original YOLOv8n produces a relatively high number of missed detections, as marked by the red circles in Figure 15b. Our improved method alleviates these missed detections and improves target detection accuracy. The experiment indicates that the proposed method generalizes, to a certain extent, to other target types in SAR images. In Figure 15, “船只” denotes ship, and “油罐” denotes oil tank.

Table 7.

Comparative experiment with original YOLOv8.

Figure 15.

Comparison of the results. (a) The original SAR images with bounding boxes. (b) Recognition results of the original YOLOv8n. (c) Recognition results of our improved YOLOv8n.

5. Discussion

To ensure the detection accuracy and stability of aircraft targets, we adopted the YOLOv8 model as the baseline. Because of SAR's imaging characteristics, directly applying the YOLOv8 model to aircraft target recognition leads to many missed detections, false detections, and false alarms. Therefore, we optimized the entire detection process. First, we proposed the GTR method, which converts SAR grayscale images into RGB images by integrating the corner features of aircraft targets with a speckle-noise suppression algorithm. GTR improves the detection accuracy of aircraft targets, and it is effective not only for the YOLOv8 model but also for other deep-learning models. Second, we introduced a small-target detection branch and integrated the CA mechanism into the detection head, effectively enhancing the detection accuracy of multi-scale targets, particularly small targets. Finally, to address the scattering interference in complex airport scenes, we designed the C2f-SWTran module, which models the long-range dependence of features to further improve the detection accuracy of aircraft targets. The experiments show that the improved YOLOv8 model achieves the highest detection accuracy among the compared methods.

Due to the complexity of airport scenes, numerous interfering factors exist, such as terminal buildings, aircraft tractors, and baggage carts. Their strong scattering characteristics can all affect the identification of aircraft targets. The structure of the aircraft is also relatively complex. Different components exhibit diverse and complex scattering characteristics and are also angle sensitive. All these characteristics increase the difficulty of identifying aircraft targets. It is not easy to significantly improve the detection accuracy of aircraft targets relying solely on single-source SAR signals. To further enhance the detection accuracy, our subsequent research direction is to fuse optical images with SAR images to provide more abundant contextual information. The color, texture, and other details in optical images can help identify aircraft targets, leading to more accurate identification of aircraft types. In addition, although the GTR method proposed in the paper significantly improves the recognition accuracy of aircraft targets by fusing multi-dimensional features, it also introduces an additional SAR image preprocessing step. The entire process of aircraft target recognition is carried out in stages, making the processing procedure rather cumbersome. To address this issue, our subsequent research will explore integrating the multi-dimensional feature fusion strategy into the architecture of deep convolutional models to achieve an end-to-end network structure design. This will simplify the existing complex process and enhance the operational efficiency of the algorithm.

Author Contributions

Methodology, writing—original draft preparation, writing—review and editing, and software, X.W. and D.H.; conceptualization, supervision, and resources, W.H.; methodology, supervision, resources, and funding acquisition, Y.L.; investigation, G.Y.; validation, G.Y. and Q.J.; funding acquisition, D.H.; project administration, Q.J. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the projects “Research on the Noise Mechanism and Suppression Methods of High-precision Phase Measurement in the Low-frequency Band” (No. 2022YFC2203901) and “Research and development of key technologies for intelligent dust-free bag breaking and feeding system based on cloud IoT monitoring” (No. 20230201099GX).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The ISPRS-SAR-Aircraft dataset was provided by the 2021 Gaofen Challenge on Automated High-Resolution Earth Observation Image Interpretation (available online: http://gaofen-challenge.com; accessed on 1 October 2021). The SAR-AIRcraft-1.0 dataset is provided by the paper “SAR-AIRcraft-1.0: High-Resolution SAR Aircraft Detection and Recognition Dataset”, published in Issue 4 (2023) of the Journal of Radars (available online: https://radars.ac.cn/article/doi/10.12000/JR23043?viewType=HTML; accessed on 2 February 2024).

Acknowledgments

The authors would like to thank the anonymous reviewers.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Yang, W.; Zhang, Z. SAR Images Target Detection Based on YOLOv5. In Proceedings of the 2021 4th International Conference on Information Communication and Signal Processing (ICICSP), Shanghai, China, 24–26 September 2021; pp. 342–347.

2. Luo, R.; Zhao, L.; He, Q.; Ji, K.; Kuang, G. Intelligent Technology for Aircraft Detection and Recognition through SAR Imagery: Advancements and Prospects. J. Radars 2024, 13, 307–330.

3. Huang, Z.; Yao, X.; Han, J. Progress and Perspective on Physically Explainable Deep Learning for Synthetic Aperture Radar Image Interpretation. J. Radars 2022, 11, 107–125.

4. Guo, X.; Xu, B. SAR-NTV-YOLOv8: A Neural Network Aircraft Detection Method in SAR Images Based on Despeckling Preprocessing. Remote Sens. 2024, 16, 3420.

5. Jao, J.K.; Lee, C.; Ayasli, S. Coherent spatial filtering for SAR detection of stationary targets. IEEE Trans. Aerosp. Electron. Syst. 1999, 35, 614–626.

6. Smith, M.E.; Varshney, P.K. VI-CFAR: A novel CFAR algorithm based on data variability. In Proceedings of the 1997 IEEE National Radar Conference, Syracuse, NY, USA, 13–15 May 1997; pp. 263–268.

7. Kuttikkad, S.; Chellappa, R. Non-Gaussian CFAR techniques for target detection in high resolution SAR images. In Proceedings of the 1st IEEE International Conference on Image Processing, Austin, TX, USA, 13–16 November 1994; pp. 910–914.

8. Dong, G.; Kuang, G. Classification on the Monogenic Scale Space: Application to Target Recognition in SAR Image. IEEE Trans. Image Process. 2015, 24, 2527–2539.

9. Leng, X.; Ji, K.; Zhou, S.; Xing, X. Ship Detection Based on Complex Signal Kurtosis in Single-Channel SAR Imagery. IEEE Trans. Geosci. Remote Sens. 2019, 57, 6447–6461.

10. Fu, K.; Dou, F.; Li, H.; Diao, W.; Sun, X.; Xu, G. Aircraft Recognition in SAR Images Based on Scattering Structure Feature and Template Matching. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 4206–4217.

11. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

12. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2961–2969.

13. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016.

14. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

15. Farhadi, A.; Redmon, J. YOLOv3: An incremental improvement. In Proceedings of the Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

16. Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable Bag-of-Freebies Sets New State-of-the-Art for Real-Time Object Detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

17. Wang, Q.; Sheng, J.; Tong, C.; Wang, Z.; Song, T.; Wang, M.; Wang, T. A Fast Facet-Based SAR Imaging Model and Target Detection Based on YOLOv5 with CBAM and Another Detection Head. Electronics 2023, 12, 4039.

18. Xiao, X.; Jia, H.; Xiao, P.; Wang, H. Aircraft Detection in SAR Images Based on Peak Feature Fusion and Adaptive Deformable Network. Remote Sens. 2022, 14, 6077.

19. Zhang, P.; Xu, H.; Tian, T.; Gao, P.; Tian, J. SFRE-Net: Scattering Feature Relation Enhancement Network for Aircraft Detection in SAR Images. Remote Sens. 2022, 14, 2076.

20. Jiang, Z.; Wang, Y.; Zhou, X.; Chen, L.; Chang, Y.; Song, D.; Shi, H. Small-Scale Ship Detection for SAR Remote Sensing Images Based on Coordinate-Aware Mixed Attention and Spatial Semantic Joint Context. Smart Cities 2023, 6, 1612–1629.

21. Sun, Z.; Dai, M.; Leng, X.; Lei, Y.; Xiong, B.; Ji, K.; Kuang, G. An anchor-free detection method for ship targets in high-resolution SAR images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 7799–7816.

22. Zhou, W.; Yang, Y.; Zhao, M.; Hu, W. SCNet-YOLO: A symmetric convolution network for multi-scenario ship detection based on YOLOv7. J. Supercomput. 2025, 81, 630.

23. Sun, Z.; Leng, X.; Lei, Y.; Xiong, B.; Ji, K.; Kuang, G. BiFA-YOLO: A novel YOLO-based method for arbitrary-oriented ship detection in high-resolution SAR images. Remote Sens. 2021, 13, 4209.

24. Li, X.; Duan, W.; Fu, X.; Lv, X. R-SABMNet: A YOLOv8-Based Model for Oriented SAR Ship Detection with Spatial Adaptive Aggregation. Remote Sens. 2025, 17, 551.

25. Wang, J.; Liu, G.; Liu, J.; Dong, W.; Song, W. Automatic Aircraft Identification with High Precision from SAR Images Considering Multiscale Problems and Channel Information Enhancement. Remote Sens. 2024, 16, 3177.

26. 2021 Gaofen challenge on Automated High-Resolution Earth Observation Image Interpretation. Available online: http://gaofen-challenge.com (accessed on 1 October 2021).

27. Wang, Z.; Kang, Y.; Zeng, X.; Wang, Y.; Zhang, T.; Sun, X. SAR-AIRcraft-1.0: High-resolution SAR aircraft detection and recognition dataset. J. Radars 2023, 12, 906–922.

28. Wang, X.; Xu, W.; Huang, P.; Tan, W. MSSD-Net: Multi-Scale SAR Ship Detection Network. Remote Sens. 2024, 16, 2233.

29. Glenn, J. Ultralytics YOLOv8. 2023. Available online: https://github.com/ultralytics/ultralytics (accessed on 3 May 2024).

30. Zhang, C.; Bengio, S.; Hardt, M.; Recht, B.; Vinyals, O. Understanding Deep Learning Requires Rethinking Generalization. In Proceedings of the International Conference on Learning Representations (ICLR), Toulon, France, 24–26 April 2017; Available online: https://openreview.net/forum?id=Sy8gdB9xx (accessed on 15 October 2023).

31. Wu, K.; Zhang, Z.; Chen, Z.; Liu, G. Object-Enhanced YOLO Networks for Synthetic Aperture Radar Ship Detection. Remote Sens. 2024, 16, 1001.

32. Cao, S.; Zhao, C.; Dong, J.; Fu, X. Ship Detection in Synthetic Aperture Radar Images under Complex Geographical Environments, Based on Deep Learning and Morphological Networks. Sensors 2024, 24, 4290.

33. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. In Proceedings of the International Conference on Learning Representations (ICLR), Virtual Conference, 3–7 May 2021; Available online: https://openreview.net/forum?id=YicbFdNTTy (accessed on 10 February 2024).

34. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer using Shifted Windows. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 9992–10002.

35. Wang, G.; Chen, Y.; An, P.; Hong, H.; Hu, J.; Huang, T. UAV-YOLOv8: A Small-Object-Detection Model Based on Improved YOLOv8 for UAV Aerial Photography Scenarios. Sensors 2023, 23, 7190.

36. Li, X.; Wang, W.; Wu, L.; Chen, S.; Hu, X.; Li, J.; Tang, J.; Yang, J. Generalized Focal Loss: Learning Qualified and Distributed Bounding Boxes for Dense Object Detection. In Proceedings of the Advances in Neural Information Processing Systems, Virtual, 6–12 December 2020.

37. Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

38. Harris, C.; Stephens, M. A combined corner and edge detector. In Proceedings of the Alvey Vision Conference, Manchester, UK, 31 August–2 September 1988; pp. 147–151.

39. Shi, J.; Tomasi, C. Good Features to Track. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 21–23 June 1994; pp. 593–600.

40. Lee, J.S. Digital image enhancement and noise filtering by use of local statistics. IEEE Trans. Pattern Anal. Mach. Intell. 1980, PAMI-2, 165–168.

41. Frost, V.S.; Stiles, J.A.; Shanmugan, K.S.; Holtzman, J.C. A model for radar images and its application to adaptive digital filtering of multiplicative noise. IEEE Trans. Pattern Anal. Mach. Intell. 1982, PAMI-4, 157–166.

42. Kuan, D.T.; Sawchuk, A.A.; Strand, T.C.; Chavel, P. Adaptive noise smoothing filter for images with signal-dependent noise. IEEE Trans. Pattern Anal. Mach. Intell. 1985, PAMI-7, 165–177.

43. Guo, F.; Sun, C.; Sun, N.; Ma, X.; Liu, W. Integrated Quantitative Evaluation Method of SAR Filters. Remote Sens. 2023, 15, 1409.

44. Xie, J.; Li, Z.; Zhou, C.; Fang, Y.; Zhang, Q. Speckle Filtering of GF-3 Polarimetric SAR Data with Joint Restriction Principle. Sensors 2018, 18, 1533.

45. Zhang, W.G.; Liu, F.; Jiao, L.C. SAR Image Despeckling via Bilateral Filtering. Electron. Lett. 2009, 45, 781–783.

46. Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

47. Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

48. Hou, Q.; Zhou, D.; Feng, J. Coordinate attention for efficient mobile network design. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 13713–13722.

49. Liu, S.; Tong, M.; He, B.; Jiang, J.; He, C. Noise-to-Convex: A Hierarchical Framework for SAR Oriented Object Detection via Scattering Keypoint Feature Fusion and Convex Contour Refinement. Electronics 2025, 14, 569.

50. Xia, R.; Chen, J.; Huang, Z.; Wan, H.; Wu, B.; Sun, L.; Yao, B.; Xiang, H.; Xing, M. CRTransSar: A Visual Transformer Based on Contextual Joint Representation Learning for SAR Ship Detection. Remote Sens. 2022, 14, 1488.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).