Autonomous Driving of Trackless Transport Vehicles: A Case Study in Underground Mines

Abstract

Highlights

- What are the main findings?

  - We propose an autonomous driving method for underground rubber-tired vehicles based on light-band guidance.

  - The feasibility of the method was validated through model experiments.

- What is the implication of the main finding?

  - The method is simple, practical, stable, and low-cost.

  - The scaled-down model experiments simulate the target conditions well and validate the feasibility of the method, laying the foundation for future large-scale practical application.

1. Introduction

- Considering the characteristics of the underground mine environment, a light-band-guided autonomous driving method is proposed. A light band installed at the top of the tunnel is controlled by a host computer and lights up in red, green, or blue segment by segment, changing dynamically. The vehicle drives autonomously by tracking the light band with two industrial cameras as its primary sensors, supplemented by a small number of single-point ranging radars for short-range safety detection. Because the light band is self-luminous, the cameras capture it stably even in low-light tunnel conditions. The approach is flexible, computationally efficient, cost-effective, and highly reliable.

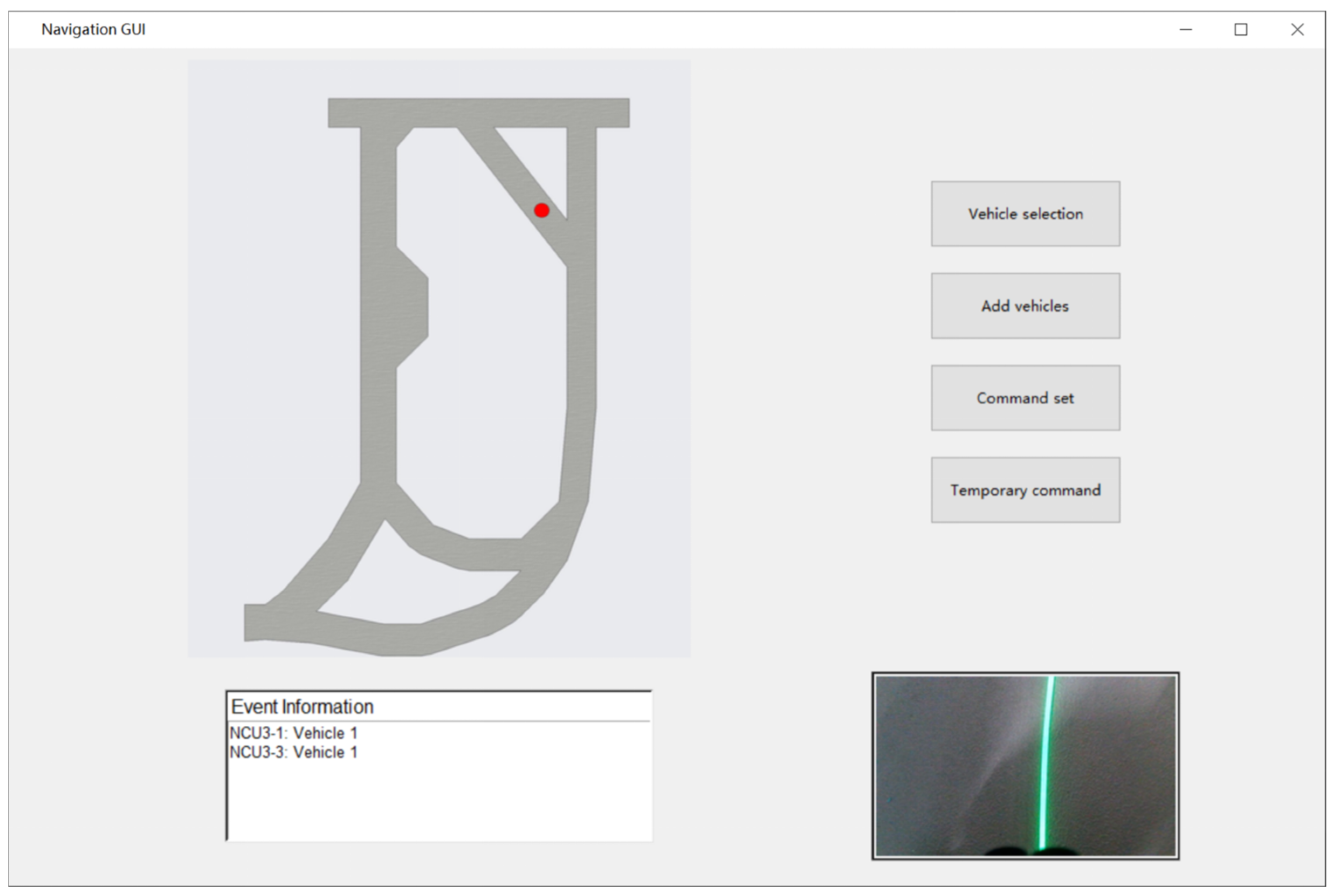

- A complete hardware and software system was developed for light-band guidance and for vehicle navigation and positioning. It consists of a server, a navigation control unit (NCU), and a GUI program. The system provides light-band guidance for vehicles and supports the subsequent case studies.

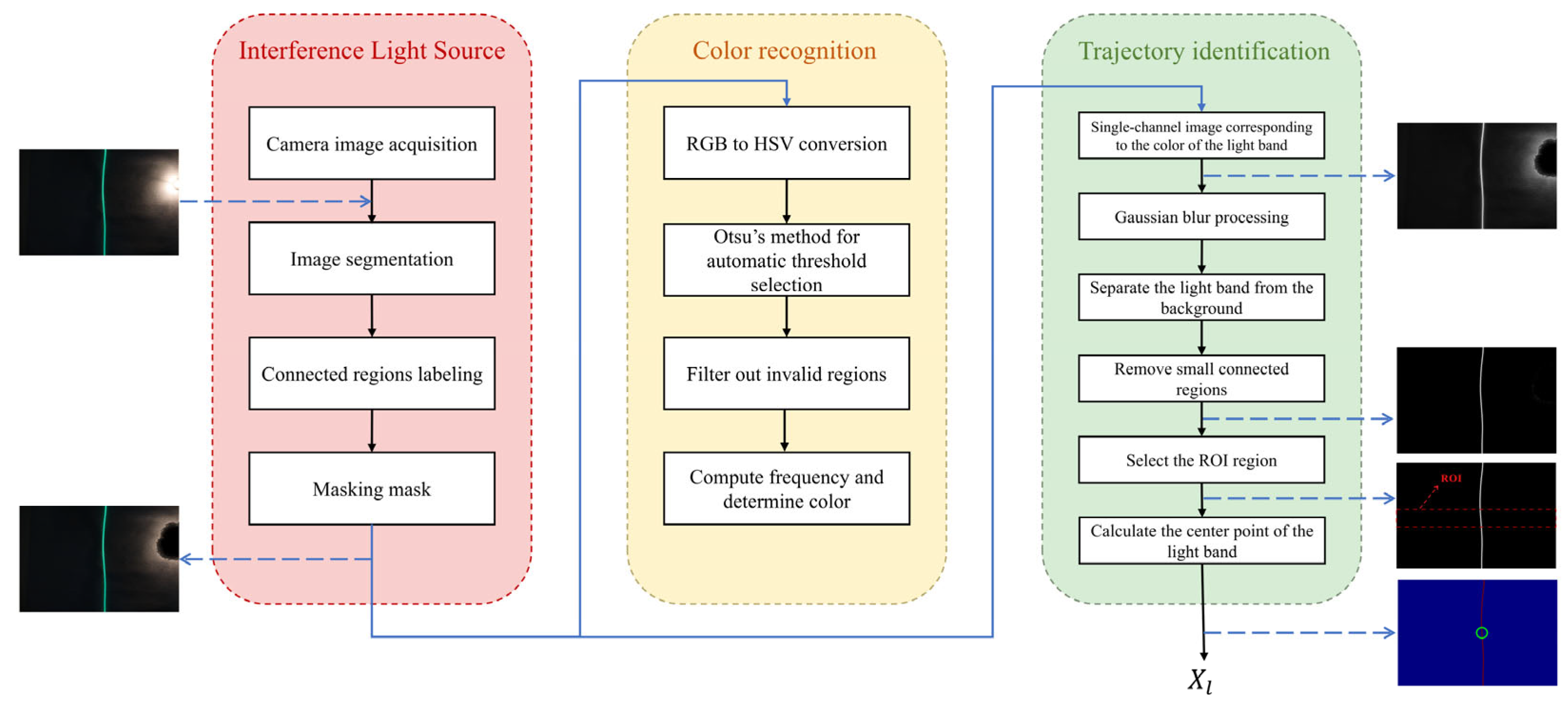

- An optimized image processing method is proposed for light-band recognition in mining environments. It recognizes the color and trajectory of the light band efficiently and accurately and offers a degree of resistance to interference.

- In a simulated tunnel environment, multiple scenario tests were conducted using a mining vehicle model to verify the effectiveness of the proposed method.
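To make the idea of color and trajectory recognition concrete, the following is a minimal sketch, not the authors' algorithm. It assumes each camera frame is available as rows of 8-bit RGB tuples, classifies bright, saturated pixels as red, green, or blue by hue, and takes the horizontal centroid of those pixels relative to the image center as a lateral offset. The thresholds, hue ranges, and the function names `classify_pixel` and `analyse_frame` are hypothetical; the text above gives no numeric values.

```python
import colorsys

# Hypothetical thresholds: the source does not state any numeric values.
MIN_SATURATION = 0.5
MIN_VALUE = 0.5


def classify_pixel(r, g, b):
    """Return 'red', 'green', 'blue', or None for one 8-bit RGB pixel."""
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    if s < MIN_SATURATION or v < MIN_VALUE:
        return None  # too dim or too washed out to count as the light band
    hue_deg = h * 360.0
    if hue_deg < 30 or hue_deg >= 330:
        return "red"
    if 90 <= hue_deg < 150:
        return "green"
    if 210 <= hue_deg < 270:
        return "blue"
    return None


def analyse_frame(frame):
    """Estimate the band color and lateral offset in one frame.

    frame: list of rows, each row a list of (r, g, b) tuples.
    Returns (dominant_color, offset_px), where offset_px is the centroid
    column of the band pixels minus the image center column. Returns
    (None, None) when no band pixels are found.
    """
    counts = {"red": 0, "green": 0, "blue": 0}
    column_sum = 0
    n_band = 0
    for row in frame:
        for x, (r, g, b) in enumerate(row):
            label = classify_pixel(r, g, b)
            if label is None:
                continue
            counts[label] += 1
            column_sum += x
            n_band += 1
    if n_band == 0:
        return None, None
    dominant = max(counts, key=counts.get)
    center = (len(frame[0]) - 1) / 2.0
    return dominant, column_sum / n_band - center


if __name__ == "__main__":
    black = (0, 0, 0)
    green = (0, 255, 0)
    # A 3 x 5 test frame with a green band in column 3.
    demo = [[black, black, black, green, black] for _ in range(3)]
    print(analyse_frame(demo))
```

A positive offset means the band appears to the right of the image center. How such an offset would be turned into a steering command is outside the scope of this sketch.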

2. Literature Review

2.1. Camera Image Acquisition in Low-Light Environments

2.2. AGV Navigation Technology

2.3. Path Planning Algorithms

3. Architecture of the Light-Band-Guided Autonomous Driving System

3.1. System Components

3.1.1. Functions of the Server Host

3.1.2. Navigation Control Unit

3.1.3. Vehicles Guided by Light Bands

3.2. Light Band Recognition Algorithm

3.2.1. Interference Light Source Removal

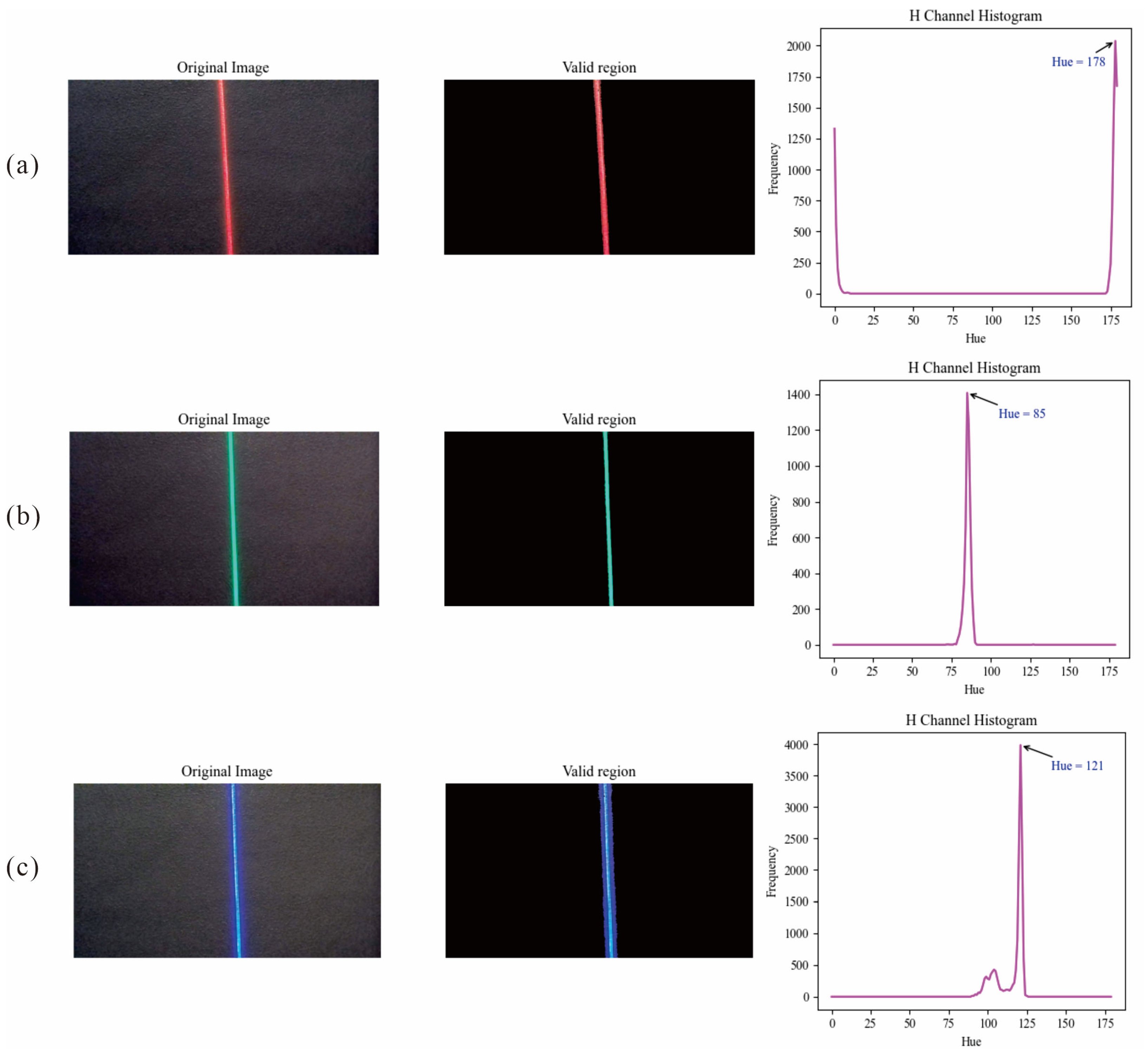

3.2.2. Light Band Color Recognition

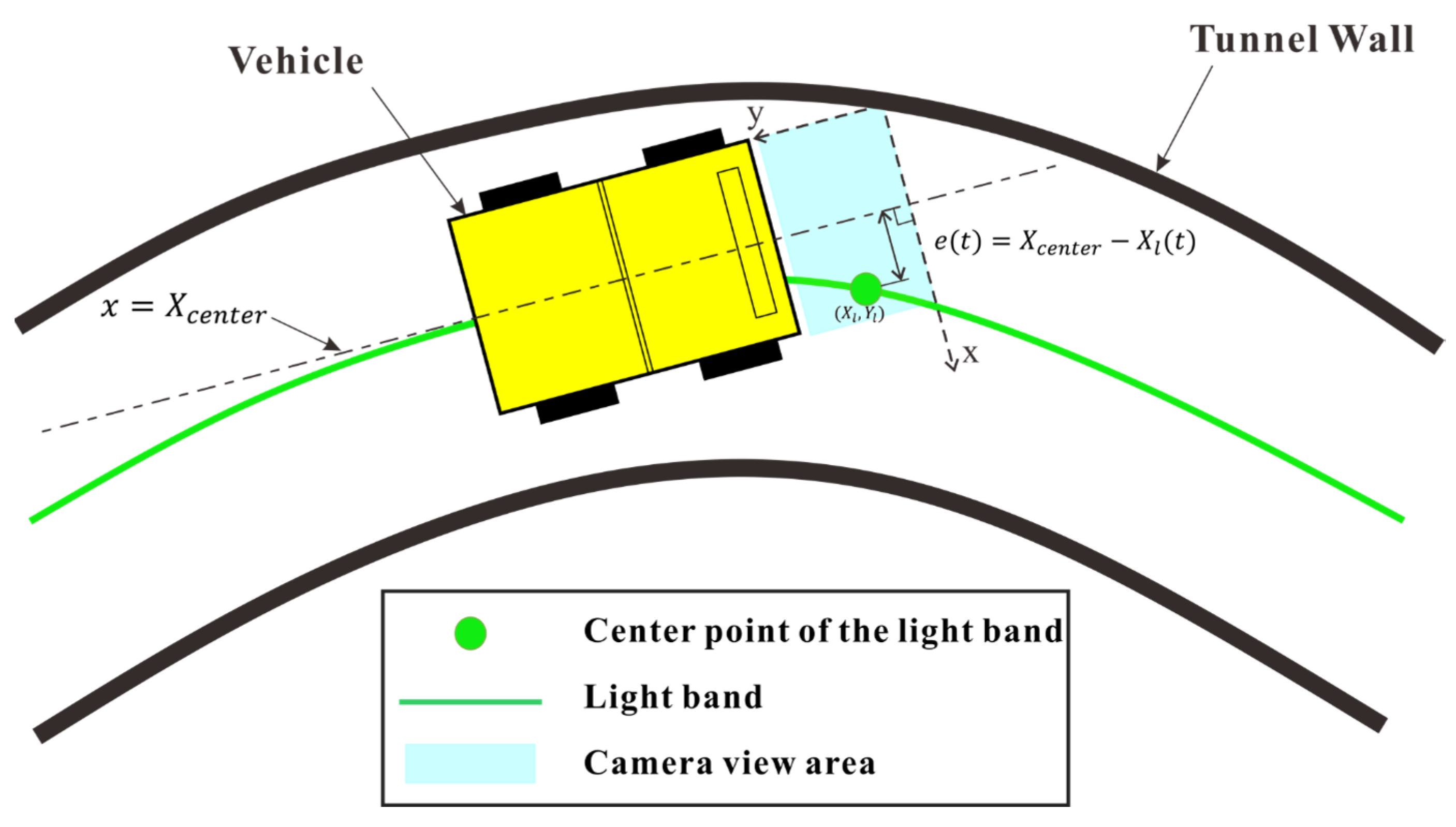

3.2.3. Light Band Trajectory Identification

3.3. Vehicle Positioning

3.3.1. Overview of Dead Reckoning Technology

3.3.2. Longitudinal Positioning and Error Correction Methods for Vehicles

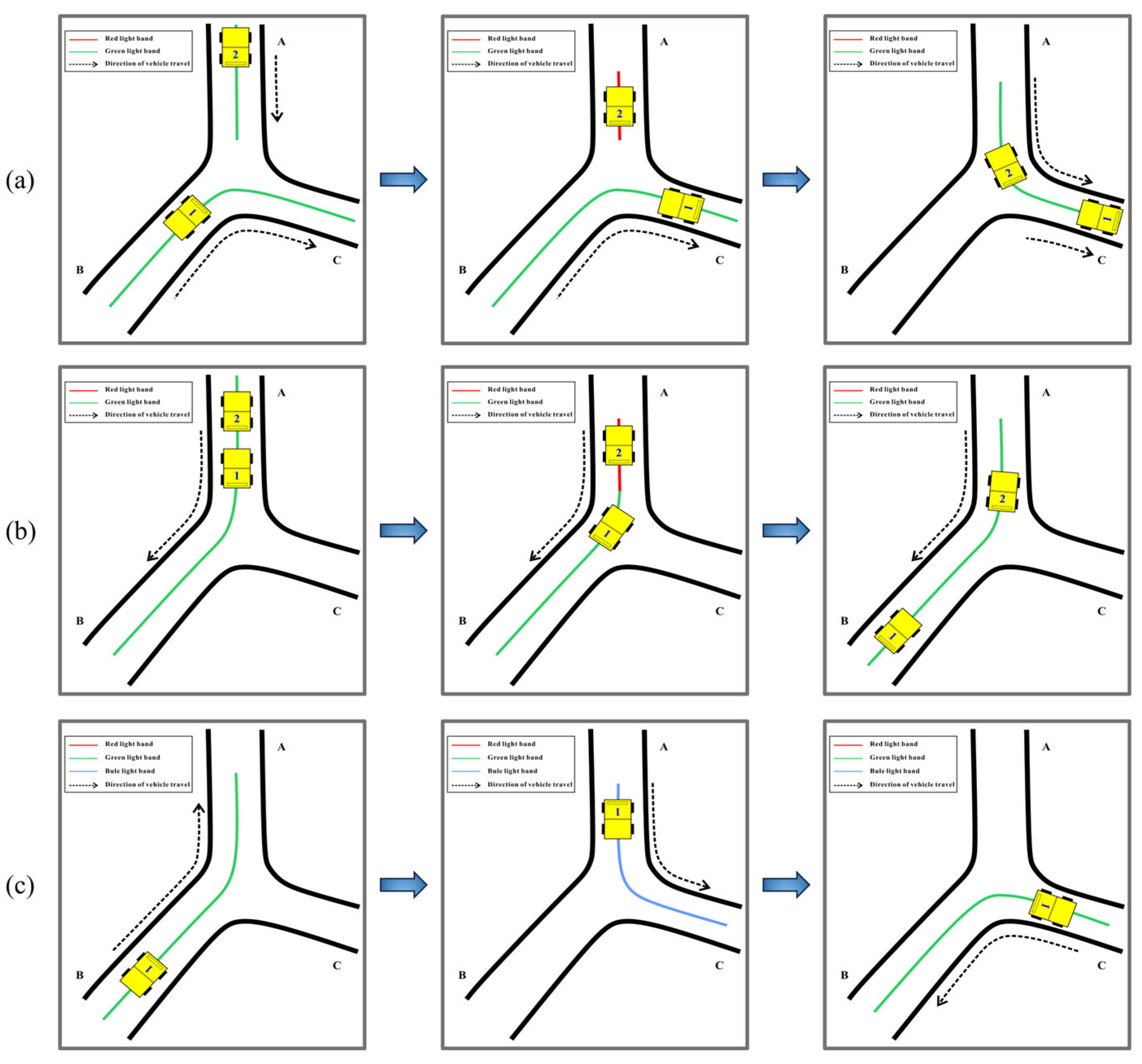

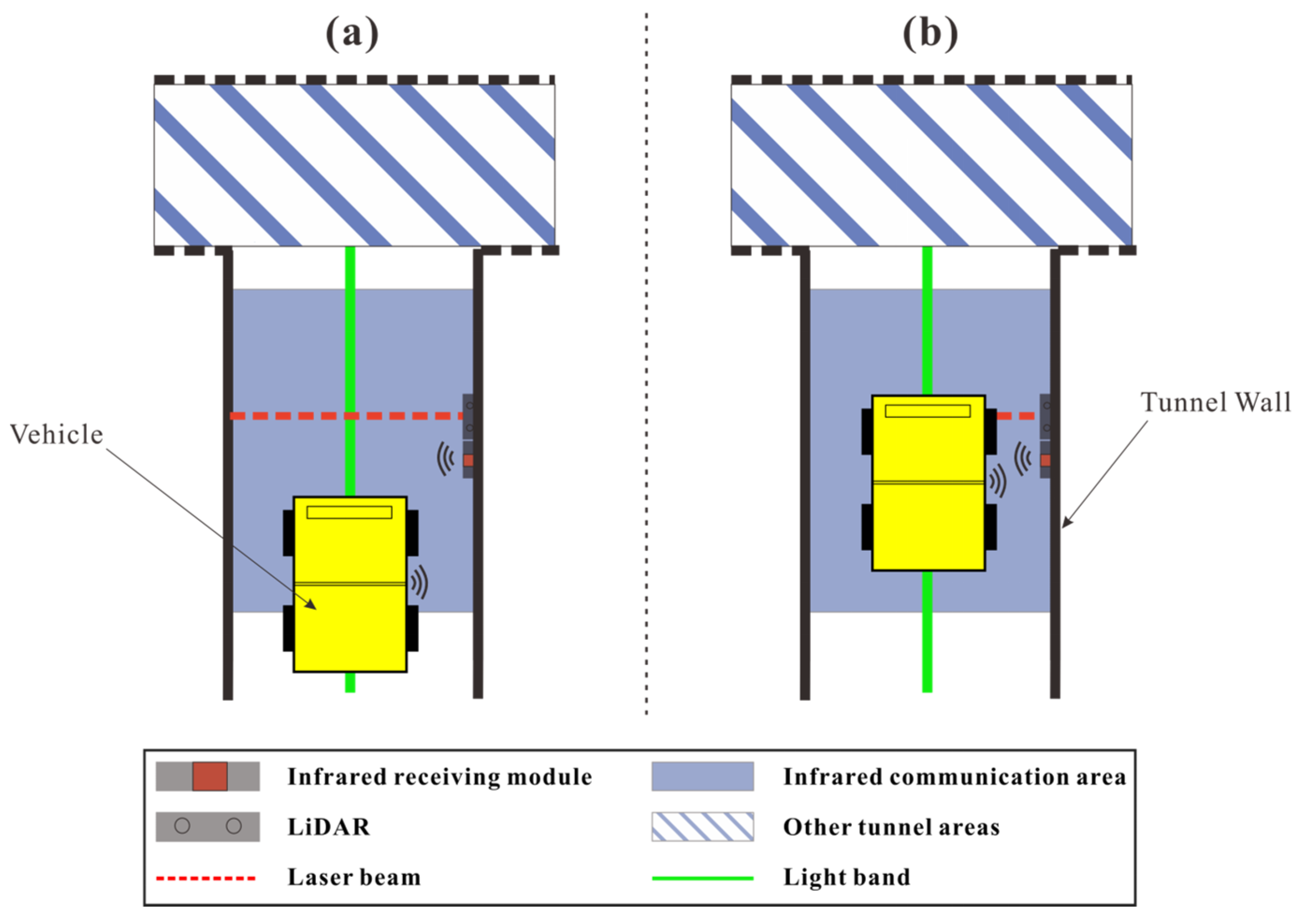

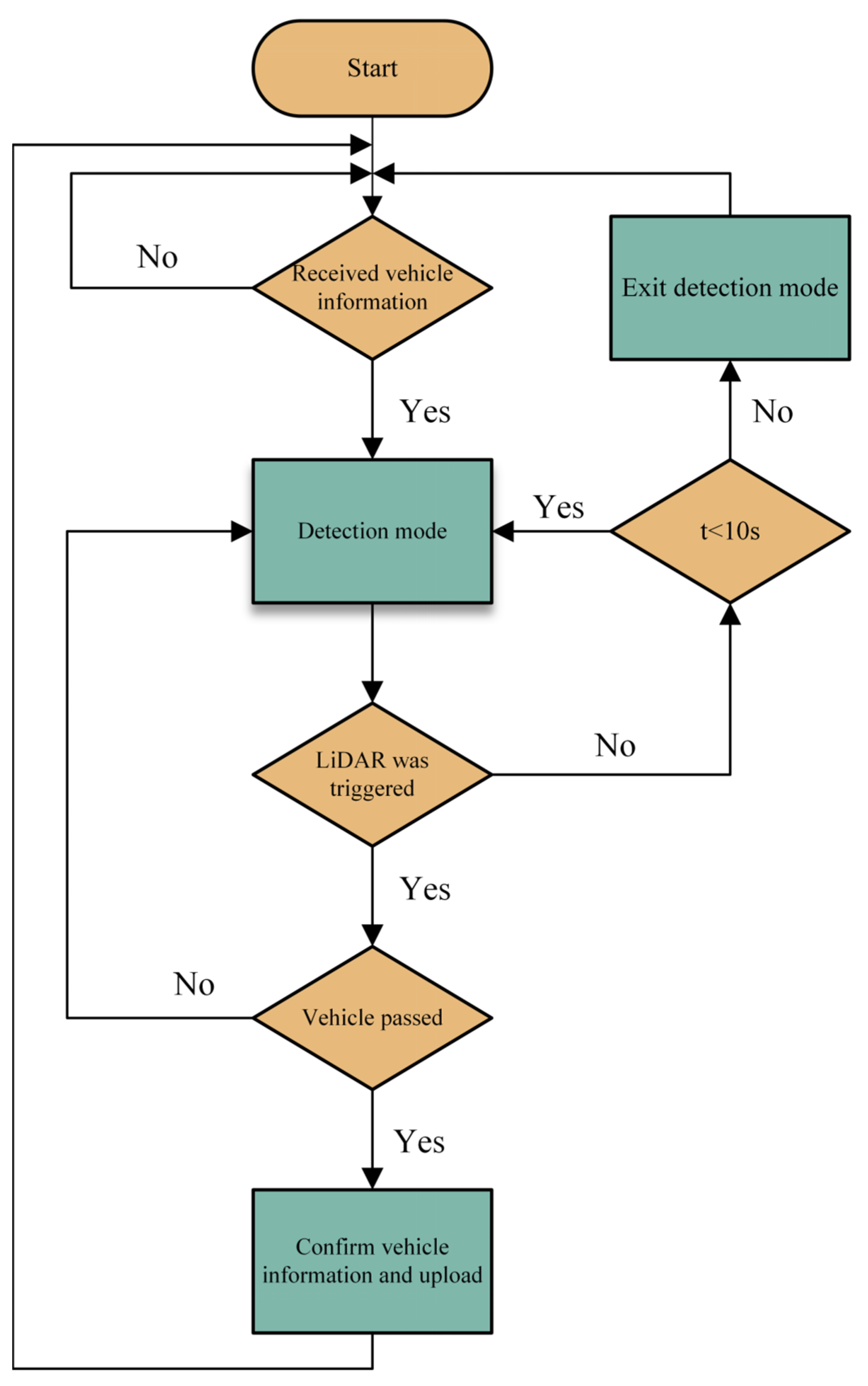

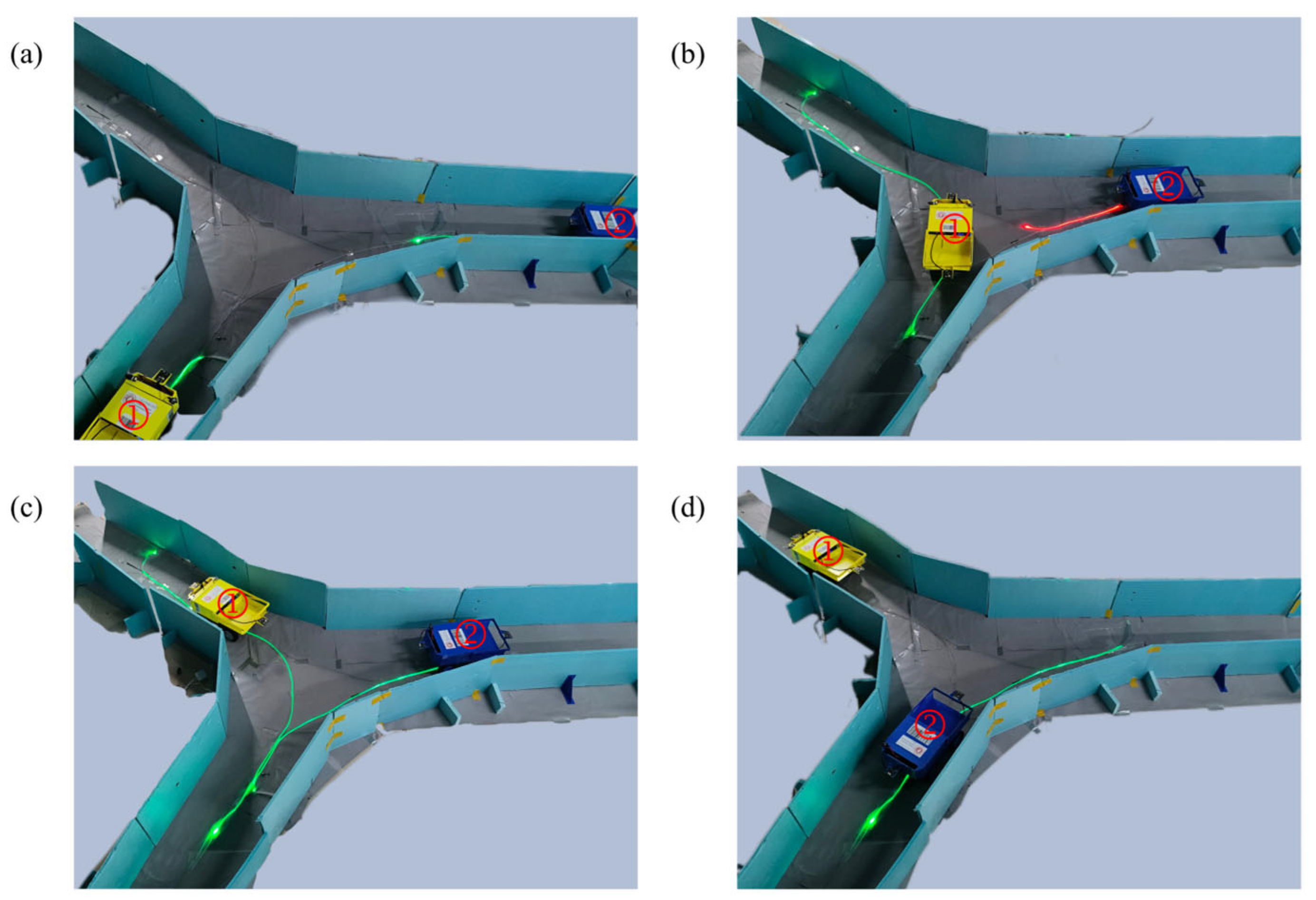

3.4. Vehicle Traffic Guidance Management and Priority Policy

4. Experimental Design and Effectiveness Verification

4.1. Construction of the Simulated Tunnel

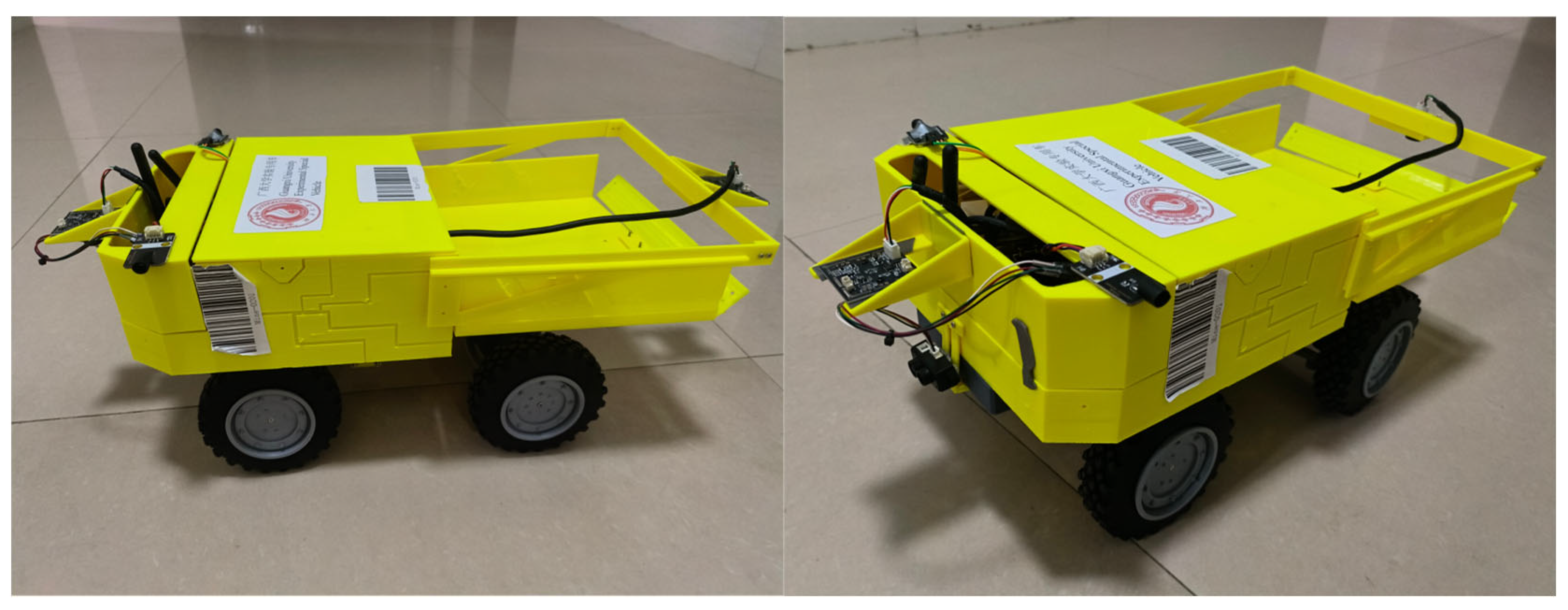

4.2. Fabrication of Vehicle Models

4.2.1. Vehicle Hardware Components

4.2.2. Automatic Control Mechanism for Vehicle Navigation Guided by Light Bands

4.2.3. Vehicle-Side Multithreading Processing and Emergency Response Design

4.3. Image Processing Performance Test

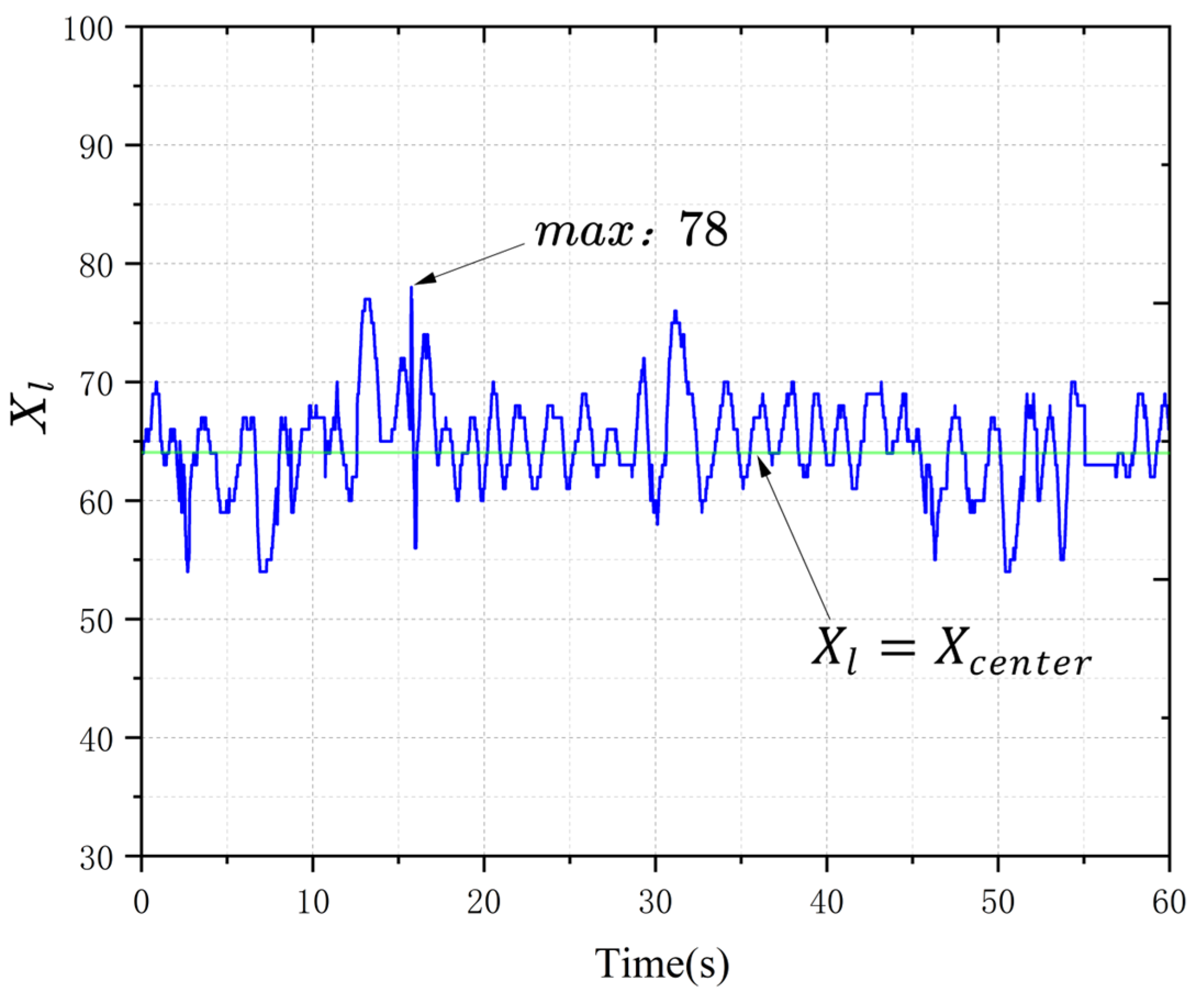

4.4. Tracking Accuracy and Repetitive Localization Accuracy

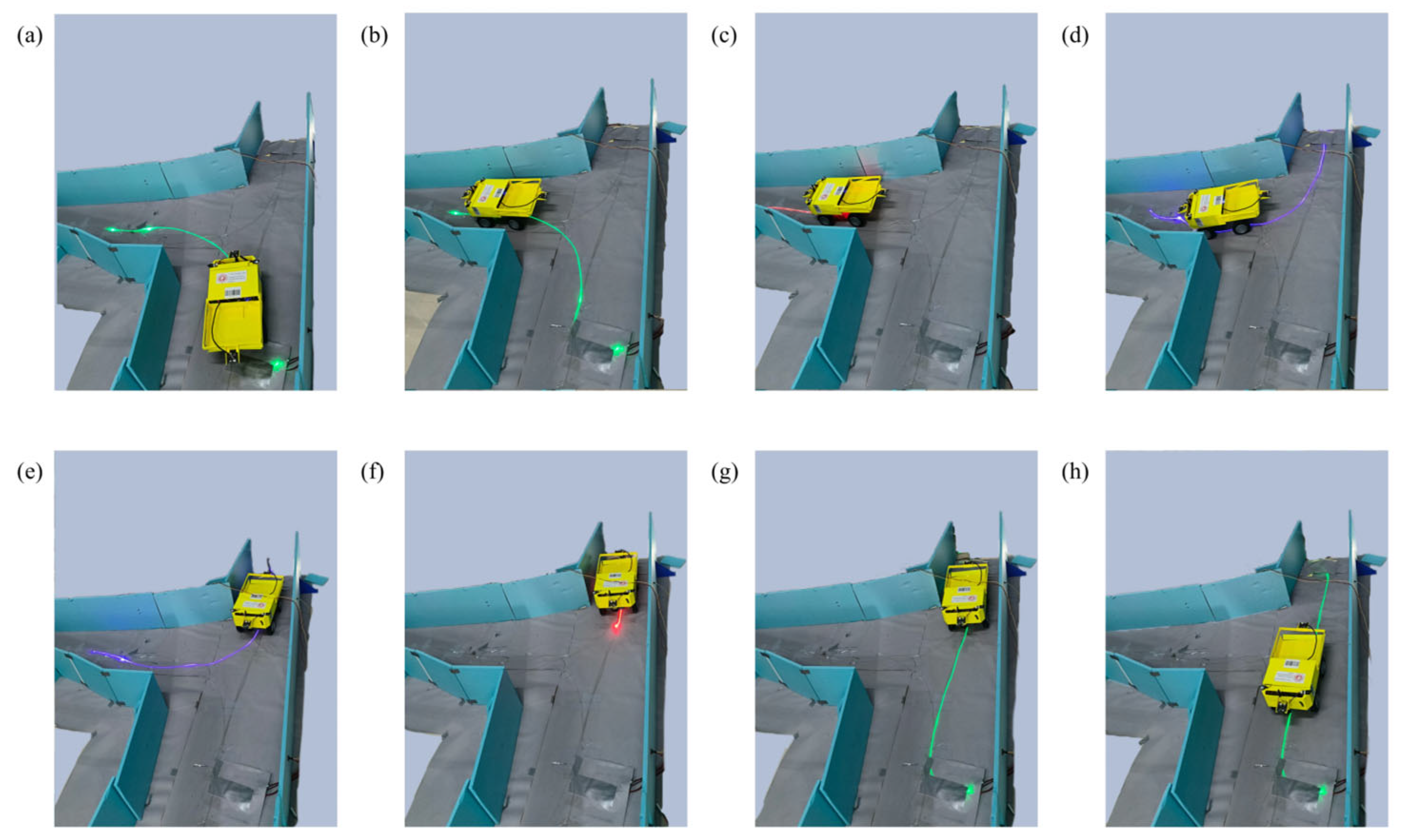

4.5. Case Study

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Serial Number | Distance (mm) | Time (s) | Absolute Error (mm) | Relative Error (%) | Serial Number | Distance (mm) | Time (s) | Absolute Error (mm) | Relative Error (%) |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 1623 | 119.893 | 27 | 0.1542 | 16 | 1735 | 115.015 | 85 | 0.4856 |
| 2 | 1693 | 115.447 | 43 | 0.2456 | 17 | 1692 | 116.431 | 42 | 0.2399 |
| 3 | 1699 | 116.835 | 49 | 0.2799 | 18 | 1468 | 113.161 | 182 | 1.0397 |
| 4 | 1677 | 121.077 | 27 | 0.1542 | 19 | 1680 | 115.602 | 30 | 0.1714 |
| 5 | 1708 | 115.642 | 58 | 0.3313 | 20 | 1651 | 115.838 | 1 | 0.0057 |
| 6 | 1693 | 116.834 | 43 | 0.2456 | 21 | 1723 | 117.312 | 73 | 0.4170 |
| 7 | 1655 | 121.013 | 5 | 0.0286 | 22 | 1687 | 117.295 | 37 | 0.2114 |
| 8 | 1717 | 114.857 | 67 | 0.3827 | 23 | 1665 | 117.139 | 15 | 0.0857 |
| 9 | 1726 | 115.618 | 76 | 0.4342 | 24 | 1613 | 122.563 | 37 | 0.2114 |
| 10 | 1670 | 117.727 | 20 | 0.1143 | 25 | 1684 | 115.215 | 34 | 0.1942 |
| 11 | 1601 | 115.743 | 49 | 0.2799 | 26 | 1670 | 117.679 | 20 | 0.1143 |
| 12 | 1640 | 118.904 | 10 | 0.0571 | 27 | 1690 | 120.675 | 40 | 0.2285 |
| 13 | 1656 | 118.878 | 6 | 0.0343 | 28 | 1699 | 116.253 | 49 | 0.2799 |
| 14 | 1645 | 117.247 | 5 | 0.0286 | 29 | 1735 | 118.076 | 85 | 0.4856 |
| 15 | 1676 | 115.895 | 26 | 0.1485 | 30 | 1706 | 116.233 | 56 | 0.3199 |
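The error columns in the table above can be cross-checked with a short script. The sketch below rests on two assumptions that are not stated in the text and were inferred only because they reproduce the tabulated values: a target distance of 1650 mm for the absolute error, and a reference length of 17,505 mm as the denominator of the relative error. The names `TARGET_MM`, `REFERENCE_MM`, and `recompute` are illustrative.

```python
# (distance_mm, reported_abs_error_mm, reported_rel_error_pct), trials 1 to 30,
# copied from the table.
TRIALS = [
    (1623, 27, 0.1542), (1693, 43, 0.2456), (1699, 49, 0.2799),
    (1677, 27, 0.1542), (1708, 58, 0.3313), (1693, 43, 0.2456),
    (1655, 5, 0.0286), (1717, 67, 0.3827), (1726, 76, 0.4342),
    (1670, 20, 0.1143), (1601, 49, 0.2799), (1640, 10, 0.0571),
    (1656, 6, 0.0343), (1645, 5, 0.0286), (1676, 26, 0.1485),
    (1735, 85, 0.4856), (1692, 42, 0.2399), (1468, 182, 1.0397),
    (1680, 30, 0.1714), (1651, 1, 0.0057), (1723, 73, 0.4170),
    (1687, 37, 0.2114), (1665, 15, 0.0857), (1613, 37, 0.2114),
    (1684, 34, 0.1942), (1670, 20, 0.1143), (1690, 40, 0.2285),
    (1699, 49, 0.2799), (1735, 85, 0.4856), (1706, 56, 0.3199),
]

# Assumptions inferred from the table values, not stated in the source.
TARGET_MM = 1650
REFERENCE_MM = 17505


def recompute(distance_mm):
    """Return (absolute error in mm, relative error in percent)."""
    abs_err = abs(distance_mm - TARGET_MM)
    rel_err = 100.0 * abs_err / REFERENCE_MM
    return abs_err, rel_err


# Collect the trial numbers whose reported errors do not match the recomputation.
mismatches = []
for number, (distance, rep_abs, rep_rel) in enumerate(TRIALS, start=1):
    abs_err, rel_err = recompute(distance)
    if abs_err != rep_abs or abs(rel_err - rep_rel) > 1e-4:
        mismatches.append(number)

abs_errors = [recompute(d)[0] for d, _, _ in TRIALS]
mean_abs_error = sum(abs_errors) / len(abs_errors)

if __name__ == "__main__":
    print("mismatching trials:", mismatches)
    print("mean absolute error (mm):", round(mean_abs_error, 2))
    print("max absolute error (mm):", max(abs_errors))
```

Under these two assumptions the script also reports the mean and maximum absolute error, which summarize the repeatability of the stopping position across the 30 trials.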

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation: Sun, Y.; Zhang, L.; Liu, J.; Xu, Y.; Li, X. Autonomous Driving of Trackless Transport Vehicles: A Case Study in Underground Mines. Sensors 2025, 25, 3189. https://doi.org/10.3390/s25103189