Time-Interval-Guided Event Representation for Scene Understanding

Abstract

1. Introduction

- We propose a model termed the “noise-based event triggering mechanism”. It gives a probabilistic account of how noise triggers events in static scenes and relates the event generation rate to the light intensity of the scene.

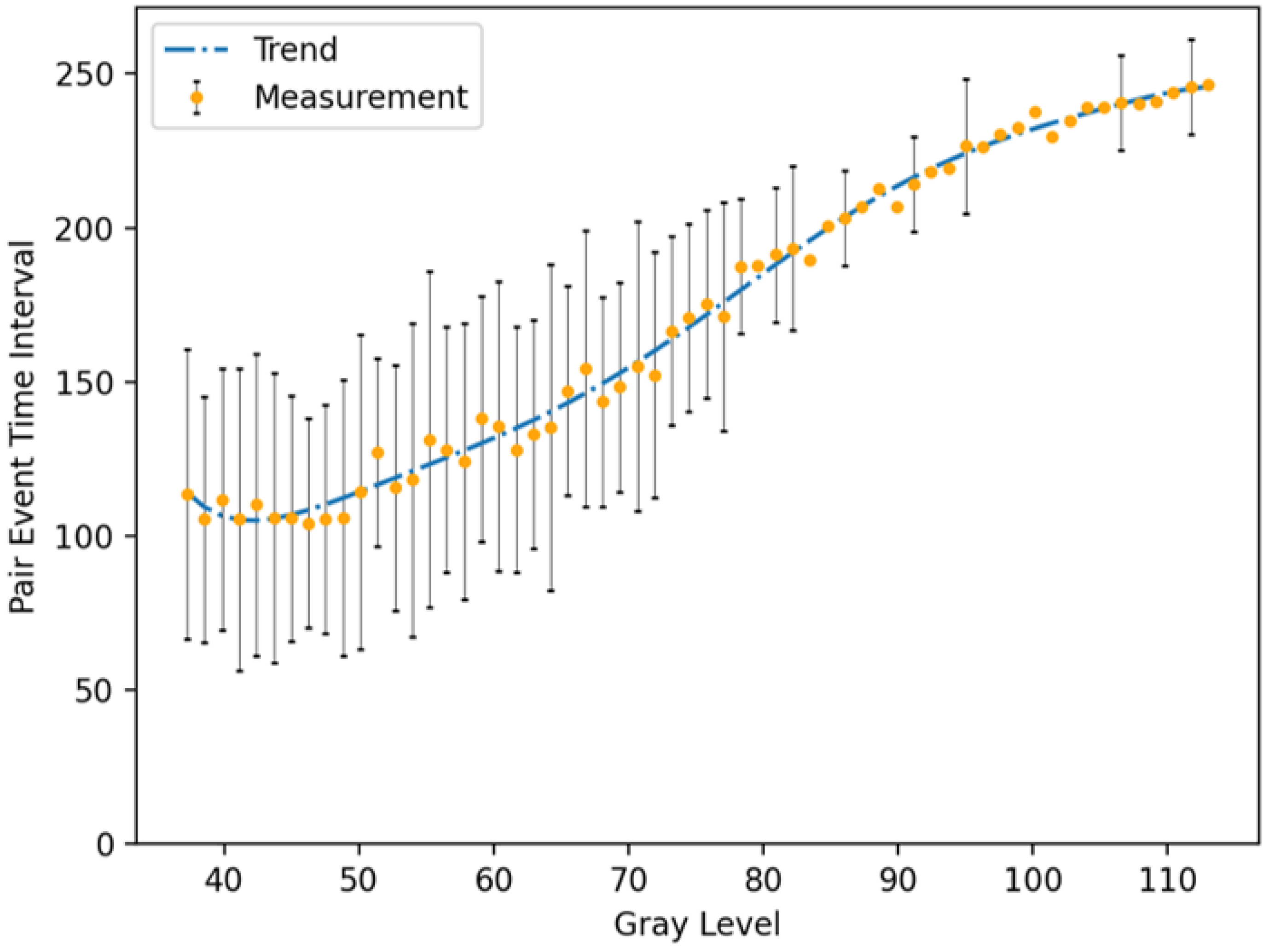

- We present the concept of “event pairs” and demonstrate that events predominantly occur in pairs. We establish the relationship between the time interval of event pairs and light intensity. Based on this observation, we propose an innovative method to convert the high temporal resolution of event signals to the relative light intensity of the static scene.

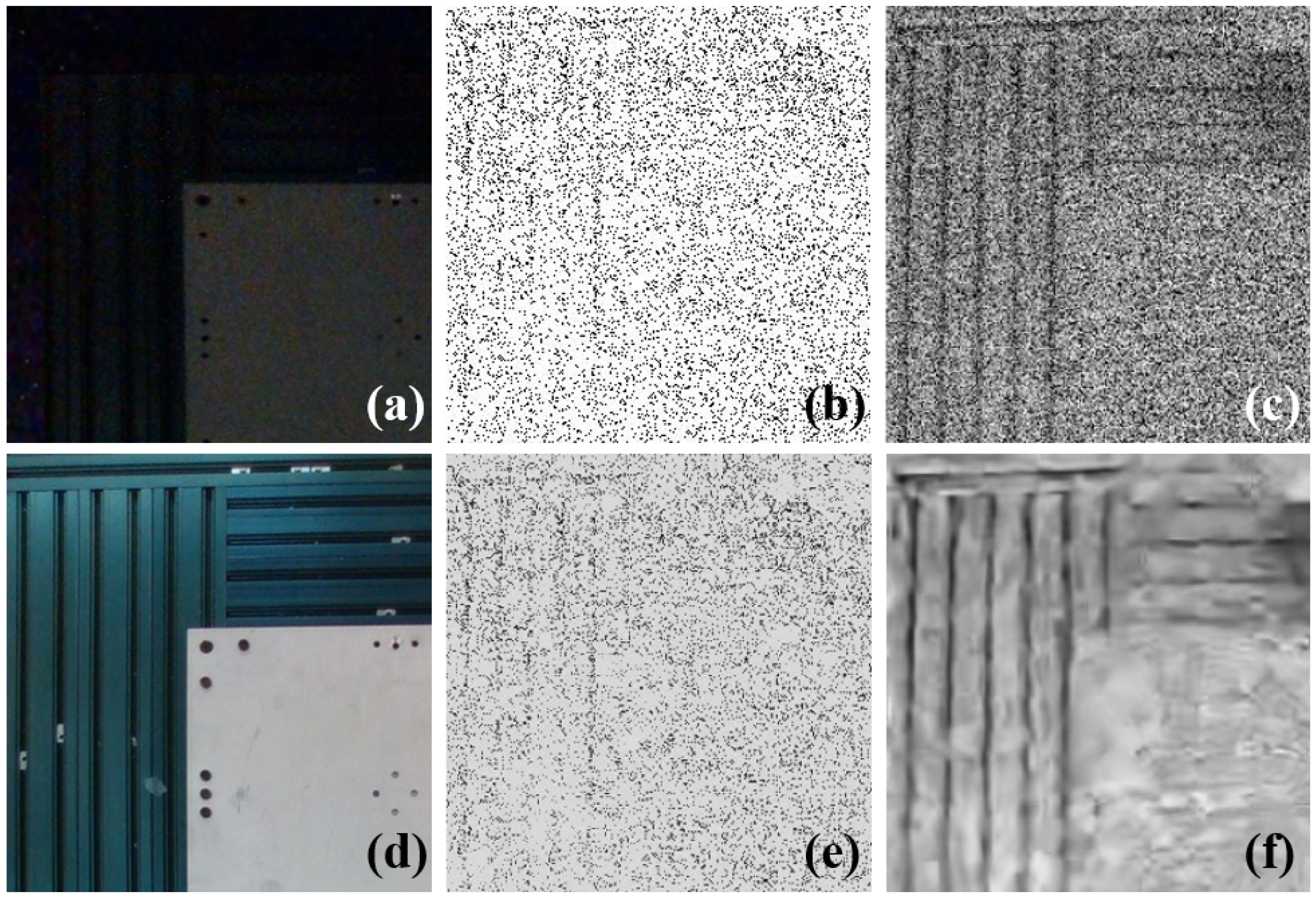

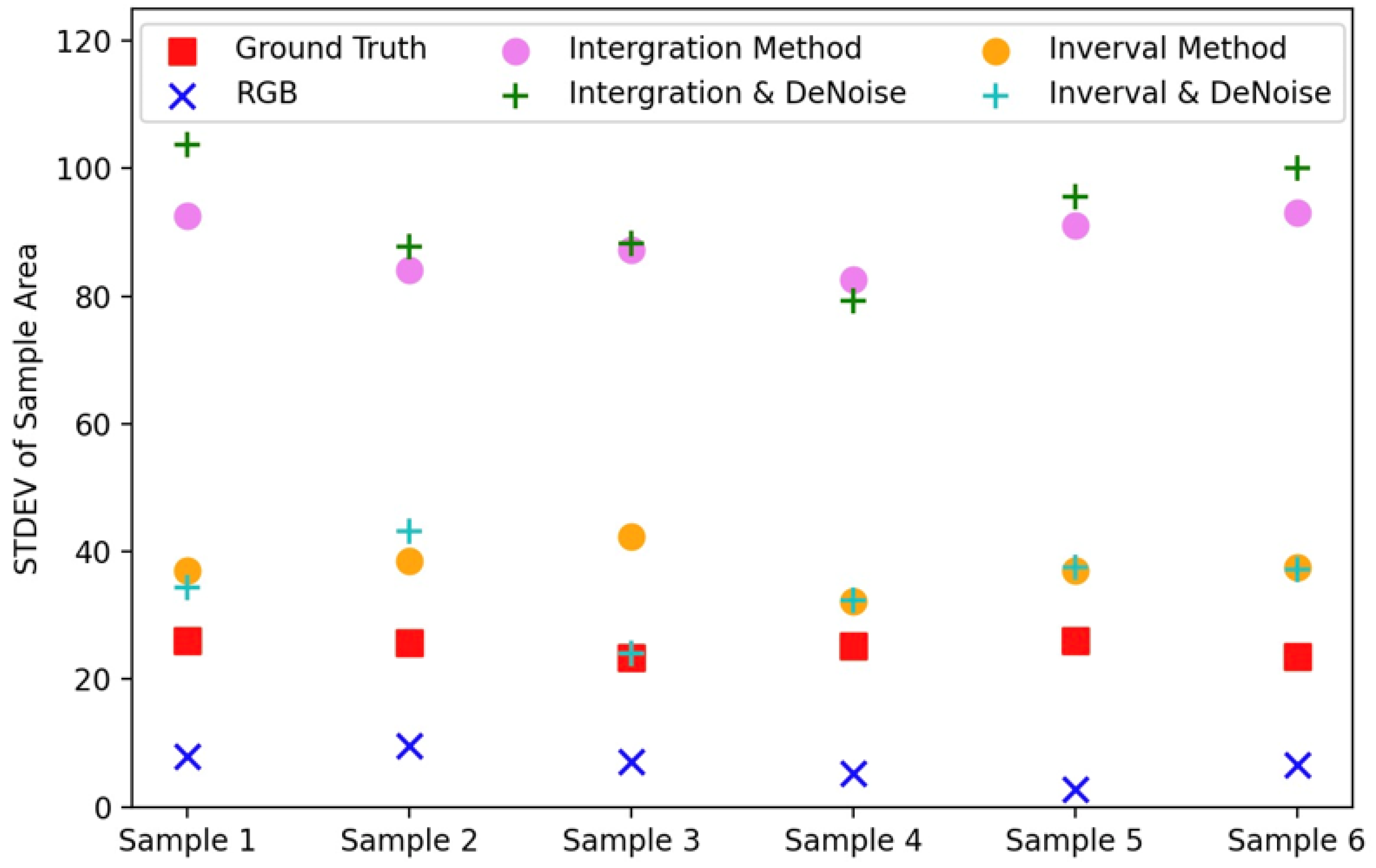

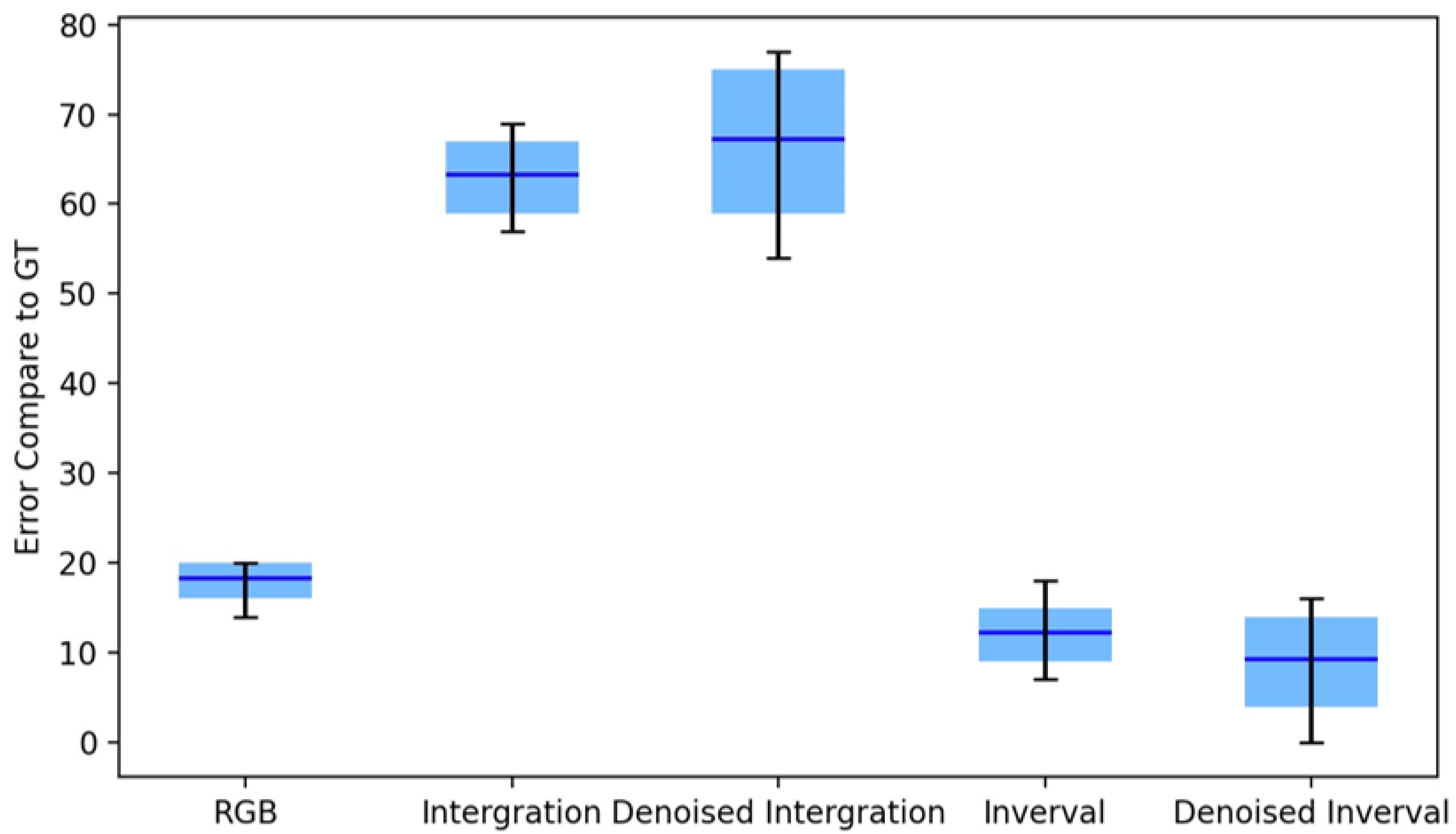

- We develop a practical application based on our method, namely feature detection under low illumination. Our demonstrations indicate that the time-interval-based method outperforms the integration-based method in detail recovery, thereby expanding the potential applications of event cameras in static scenarios.

2. Related Works

3. Preliminaries

4. Time Intervals of Event Pairs

4.1. Noise-Based Event Triggering

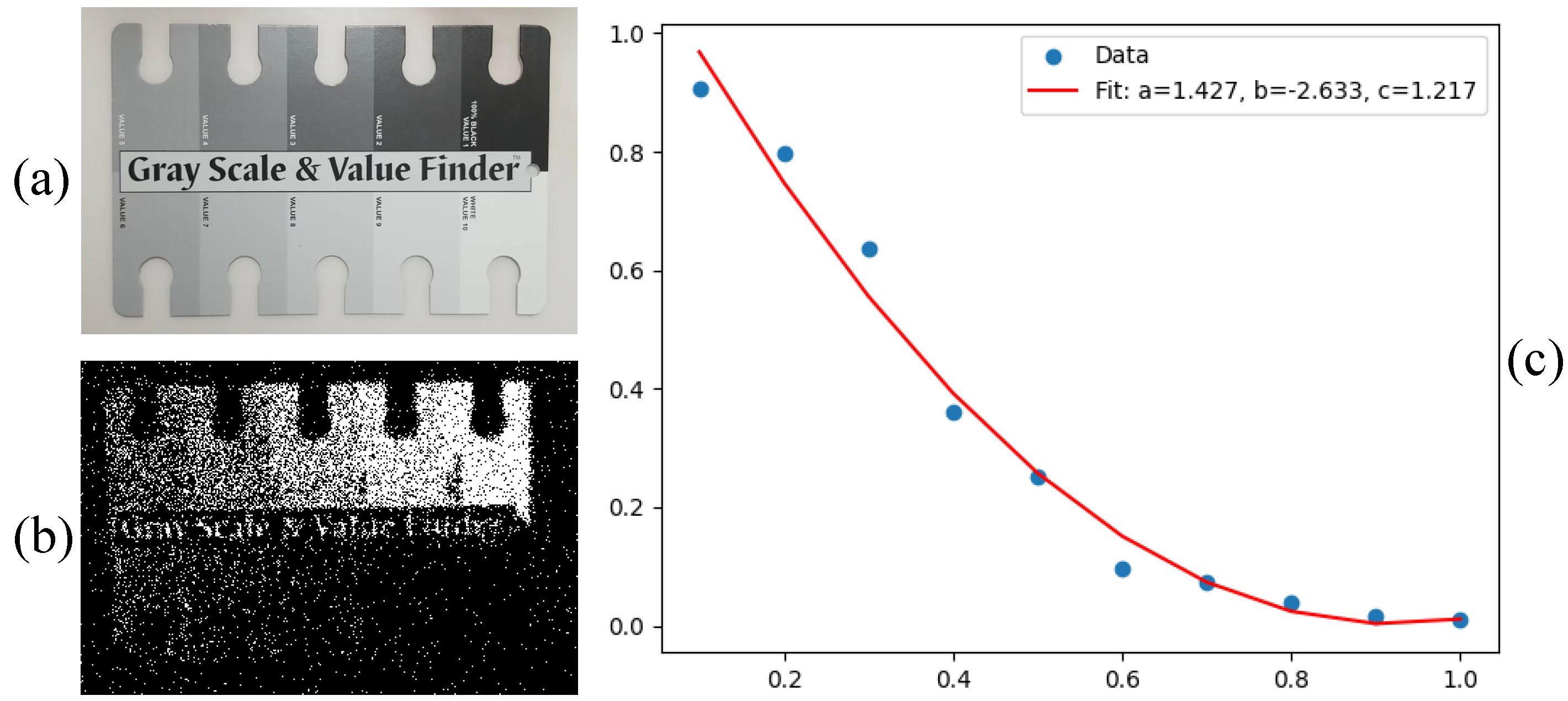

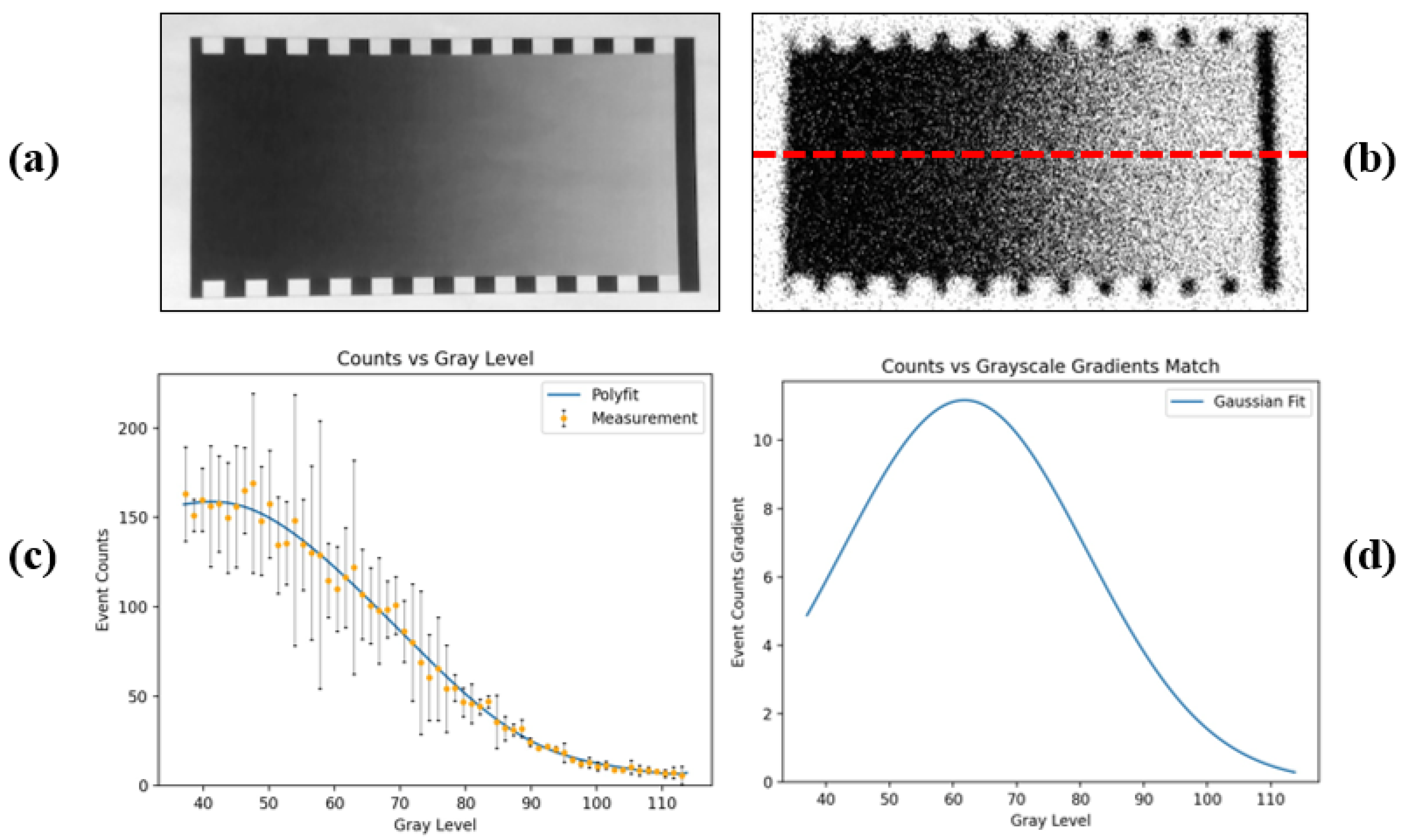

- The event generation rate in a static scene is closely associated with the scene intensity. As shown in Figure 1, we use the patches of a standard grayscale checker to represent different illumination levels [33]. A fitted polynomial curve describes the correlation between the event rate and the grayscale value.

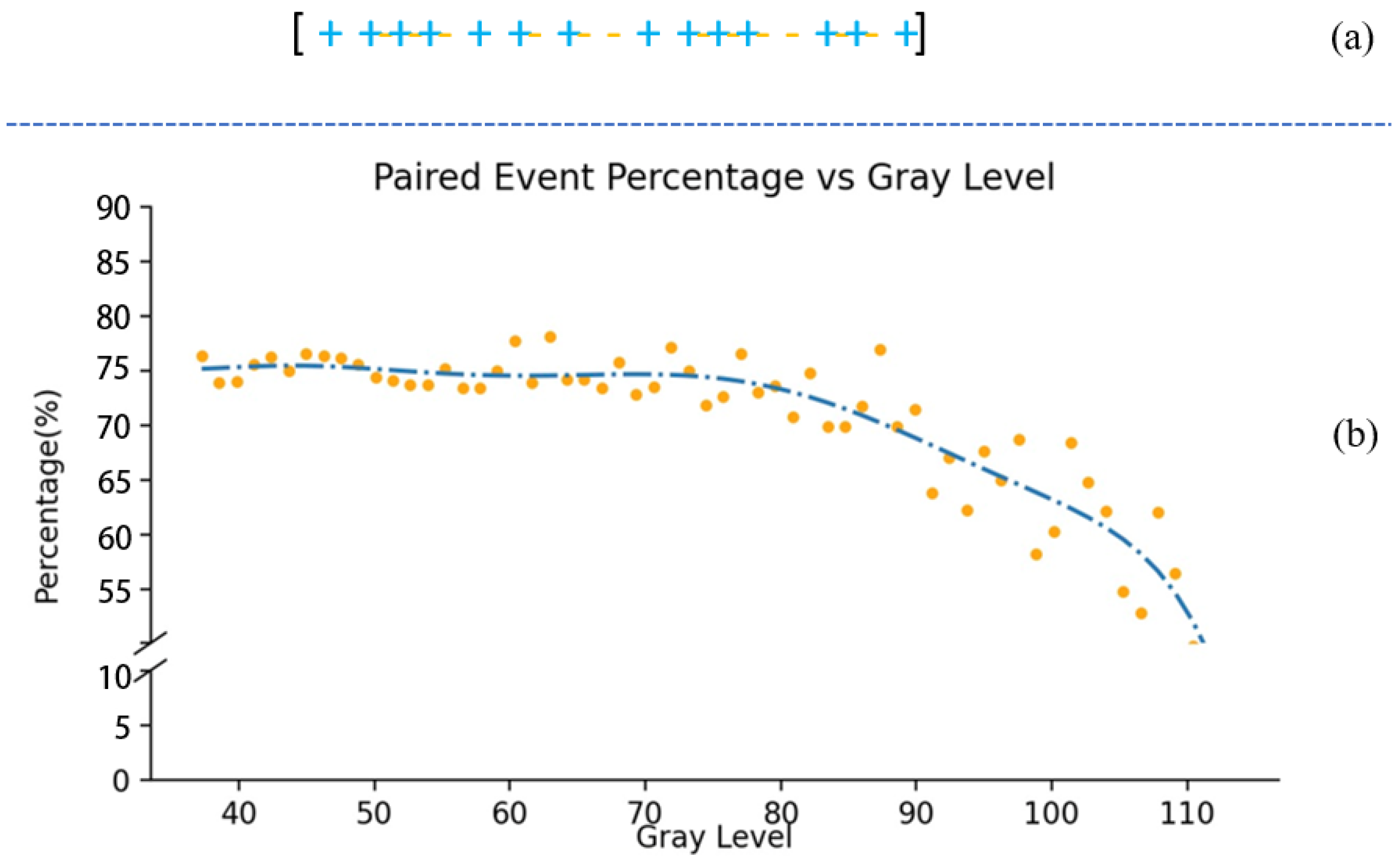

- The majority of events in the raw stream appear in pairs, each consisting of one positive event and one negative event, which we define as an “event pair”. Figure 2 shows the proportion of event pairs within a set of event streams. The average proportion is 73.34%, indicating that pairs are the predominant form of events.
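The two observations above can be explored with a short sketch. It is not the authors' implementation: the pairing rule (consecutive events at the same pixel with opposite polarity, at most `max_gap_us` apart), the function names, the polynomial degree, and the microsecond timestamp unit are all assumptions made for illustration, since this section does not specify them.

```python
import numpy as np


def fit_rate_curve(gray_values, event_rates, degree=2):
    """Least-squares polynomial fit of event rate against grayscale value.

    The degree is an assumption; the text only says the curve is polyfitted.
    """
    coeffs = np.polyfit(gray_values, event_rates, degree)
    return np.poly1d(coeffs)


def find_event_pairs(t, x, y, p, max_gap_us=10_000):
    """Pair each event with the next event at the same pixel when the two
    have opposite polarity and are at most max_gap_us apart (assumed rule).

    Returns (pair_fraction, intervals): the fraction of events that belong
    to a pair, and the time interval of every pair.
    """
    t = np.asarray(t, dtype=np.int64)
    x, y, p = np.asarray(x), np.asarray(y), np.asarray(p)
    order = np.lexsort((t, x, y))  # sort by pixel (y, then x), then by time
    t, x, y, p = t[order], x[order], y[order], p[order]

    intervals = []
    i, n = 0, len(t)
    while i < n - 1:
        same_pixel = x[i] == x[i + 1] and y[i] == y[i + 1]
        opposite = p[i] != p[i + 1]
        gap = t[i + 1] - t[i]
        if same_pixel and opposite and gap <= max_gap_us:
            intervals.append(int(gap))
            i += 2  # both events are consumed by this pair
        else:
            i += 1
    pair_fraction = 2 * len(intervals) / n if n else 0.0
    return pair_fraction, np.array(intervals, dtype=np.int64)
```

Called on a recorded stream, `find_event_pairs` returns a pair proportion that can be compared against the reported average, and the returned intervals are the quantity that Section 4.2 relates to light intensity. The result depends on the chosen `max_gap_us`, so that threshold should be reported alongside any proportion.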

4.2. Intensity Reconstruction from Event Pairs

4.3. Experimental Verification

4.4. Time-Interval-Based Method vs. Integration-Based Method

5. HDR Imaging Using Event Cameras

- Event cameras exhibit a dynamic range exceeding 120 dB, compared with about 40 dB for currently available consumer frame cameras. This extended range enables event cameras to capture signals under extreme lighting conditions. In principle, it is therefore feasible to extract valuable information from the noisy output of event cameras.

- In contrast to the integration-based method, our approach transforms high temporal resolution into the relative light intensity of the scene. This conversion enhances contrast, enabling us to achieve more precise reconstructions.
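To make the two points above concrete, the following sketch converts a dynamic range in dB into an intensity ratio and builds the two per-pixel representations being contrasted. It rests on stated assumptions: the 20·log10 convention for sensor dynamic range, hypothetical function names, and event-pair intervals that have already been extracted. The mapping from mean interval to relative intensity is not given in this section, so the sketch stops at the interval map.

```python
import numpy as np


def db_to_ratio(db):
    """Convert a dynamic range in dB to a max/min intensity ratio,
    assuming the 20*log10 convention common for image sensors."""
    return 10.0 ** (db / 20.0)


def integration_map(x, y, shape):
    """Integration-based representation: count events at each pixel."""
    counts = np.zeros(shape, dtype=np.float64)
    np.add.at(counts, (np.asarray(y), np.asarray(x)), 1.0)
    return counts


def interval_map(x, y, intervals, shape):
    """Time-interval-based representation: mean event-pair interval per
    pixel (NaN where a pixel has no pair)."""
    total = np.zeros(shape, dtype=np.float64)
    n = np.zeros(shape, dtype=np.float64)
    idx = (np.asarray(y), np.asarray(x))
    np.add.at(total, idx, np.asarray(intervals, dtype=np.float64))
    np.add.at(n, idx, 1.0)
    with np.errstate(invalid="ignore", divide="ignore"):
        return np.where(n > 0, total / n, np.nan)
```

Under the assumed convention, 120 dB corresponds to an intensity ratio of 10^6:1 and 40 dB to 10^2:1. The integration map quantizes each pixel to an integer event count, whereas the interval map carries the timestamp resolution of the sensor, which is one way to read the contrast improvement claimed above.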

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Chen, X.; Liu, Y.; Zhang, Z.; Qiao, Y.; Dong, C. HDRUNet: Single image HDR reconstruction with denoising and dequantization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Virtual, 19–25 June 2021; pp. 354–363.

- Lichtsteiner, P.; Posch, C.; Delbruck, T. A 128 × 128 120 dB 15 μs latency asynchronous temporal contrast vision sensor. IEEE J. Solid-State Circuits 2008, 43, 566–576.

- Gallego, G.; Delbrück, T.; Orchard, G.; Bartolozzi, C.; Taba, B.; Censi, A.; Leutenegger, S.; Davison, A.J.; Conradt, J.; Daniilidis, K. Event-based vision: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 154–180.

- Kim, H.; Handa, A.; Benosman, R.; Ieng, S.-H.; Davison, A.J. Simultaneous mosaicing and tracking with an event camera. In Proceedings of the British Machine Vision Conference (BMVC), Nottingham, UK, 1–5 September 2014.

- Rebecq, H.; Ranftl, R.; Koltun, V.; Scaramuzza, D. Events-to-video: Bringing modern computer vision to event cameras. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 3857–3866.

- Scheerlinck, C.; Barnes, N.; Mahony, R. Continuous-time intensity estimation using event cameras. In Proceedings of the Asian Conference on Computer Vision (ACCV), Perth, Australia, 2–6 December 2018; pp. 308–324.

- Zou, Y.; Zheng, Y.; Takatani, T.; Fu, Y. Learning to reconstruct high speed and high dynamic range videos from events. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Virtual, 19–25 June 2021; pp. 2024–2033.

- Barranco, F.; Fermüller, C.; Ros, E. Real-time clustering and multi-target tracking using event-based sensors. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 5764–5769.

- Gehrig, D.; Rebecq, H.; Gallego, G.; Scaramuzza, D. Asynchronous, photometric feature tracking using events and frames. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 750–765.

- Muglikar, M.; Gallego, G.; Scaramuzza, D. ESL: Event-based structured light. In Proceedings of the 2021 International Conference on 3D Vision (3DV), Virtual, 1–3 December 2021; pp. 1165–1174.

- Takatani, T.; Ito, Y.; Ebisu, A.; Zheng, Y.; Aoto, T. Event-based bispectral photometry using temporally modulated illumination. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Virtual, 19–25 June 2021; pp. 15638–15647.

- Mueggler, E.; Huber, B.; Scaramuzza, D. Event-based, 6-DOF pose tracking for high-speed maneuvers. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Chicago, IL, USA, 14–18 September 2014; pp. 2761–2768.

- Peng, X.; Gao, L.; Wang, Y.; Kneip, L. Globally-optimal contrast maximisation for event cameras. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 3479–3495.

- Falanga, D.; Kleber, K.; Scaramuzza, D. Dynamic obstacle avoidance for quadrotors with event cameras. Sci. Robot. 2020, 5, eaaz9712.

- Milde, M.B.; Bertrand, O.J.N.; Benosman, R.; Egelhaaf, M.; Chicca, E. Bioinspired event-driven collision avoidance algorithm based on optic flow. In Proceedings of the 2015 International Conference on Event-Based Control, Communication, and Signal Processing (EBCCSP), Krakow, Poland, 17–19 June 2015; pp. 1–7.

- Sanket, N.J.; Parameshwara, C.M.; Singh, C.D.; Kuruttukulam, A.V.; Fermüller, C.; Scaramuzza, D.; Aloimonos, Y. EVDodgeNet: Deep dynamic obstacle dodging with event cameras. In Proceedings of the 2020 IEEE International Conference on Robotics and Automation (ICRA), Paris, France, 31 May–31 August 2020; pp. 10651–10657.

- Walters, C.; Hadfield, S. EVReflex: Dense time-to-impact prediction for event-based obstacle avoidance. In Proceedings of the 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Prague, Czech Republic, 27 September–1 October 2021; pp. 1304–1309.

- Liu, Z.; Guan, B.; Shang, Y.; Yu, Q.; Kneip, L. Line-based 6-DoF object pose estimation and tracking with an event camera. IEEE Trans. Image Process. 2024, 33, 4765–4780.

- Liu, Z.; Guan, B.; Shang, Y.; Bian, Y.; Sun, P.; Yu, Q. Stereo event-based, 6-DOF pose tracking for uncooperative spacecraft. IEEE Trans. Geosci. Remote Sens. 2025, 63, 5607513.

- Yu, Z.; Bu, T.; Zhang, Y.; Jia, S.; Huang, T.; Liu, J.K. Robust decoding of rich dynamical visual scenes with retinal spikes. IEEE Trans. Neural Netw. Learn. Syst. 2024, 36, 3396–3409.

- Domínguez-Morales, M.J.; Jiménez-Fernández, Á.; Jiménez-Moreno, G.; Conde, C.; Cabello, E.; Linares-Barranco, A. Bio-inspired stereo vision calibration for dynamic vision sensors. IEEE Access 2019, 7, 138415–138425.

- Jiao, J.; Chen, F.; Wei, H.; Wu, J.; Liu, M. LCE-Calib: Automatic LiDAR-Frame/Event Camera Extrinsic Calibration with a Globally Optimal Solution. IEEE/ASME Trans. Mechatron. 2023; in press.

- Muglikar, M.; Gehrig, M.; Gehrig, D.; Scaramuzza, D. How to calibrate your event camera. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Virtual, 19–25 June 2021; pp. 1403–1409.

- Zhang, Z. A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 2000, 22, 1330–1334.

- Wang, L.; Ho, Y.-S.; Yoon, K.-J. Event-based high dynamic range image and very high frame rate video generation using conditional generative adversarial networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 10081–10090.

- Pan, L.; Hartley, R.; Scheerlinck, C.; Liu, M.; Yu, X.; Dai, Y. High frame rate video reconstruction based on an event camera. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 44, 2519–2533.

- Chen, Z.; Zheng, Q.; Niu, P.; Tang, H.; Pan, G. Indoor lighting estimation using an event camera. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Virtual, 19–25 June 2021; pp. 14760–14770.

- Shaw, R.; Catley-Chandar, S.; Leonardis, A.; Pérez-Pellitero, E. HDR Reconstruction from Bracketed Exposures and Events. arXiv 2022, arXiv:2203.14825.

- Han, J.; Asano, Y.; Shi, B.; Zheng, Y.; Sato, I. High-fidelity event-radiance recovery via transient event frequency. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, Canada, 18–22 June 2023; pp. 20616–20625.

- Galor, D.; Cao, R.; Waller, L.; Yates, J. Leveraging noise statistics in event cameras for imaging static scenes. In Proceedings of the International Conference on Computational Photography (ICCP), Spotlight Poster Demo, Cambridge, MA, USA, 28–30 April 2023.

- Finateu, T.; Niwa, A.; Matolin, D.; Tsuchimoto, K.; Mascheroni, A.; Reynaud, E.; Mostafalu, P.; Brady, F.; Chotard, L.; LeGoff, F.; et al. 5.10 A 1280 × 720 back-illuminated stacked temporal contrast event-based vision sensor with 4.86 μm pixels. In Proceedings of the 2020 IEEE International Solid-State Circuits Conference (ISSCC), San Francisco, CA, USA, 16–20 February 2020; pp. 112–114.

- Graca, R.; Delbruck, T. Unraveling the paradox of intensity-dependent DVS pixel noise. arXiv 2021, arXiv:2109.08640.

- Gao, Q.; Sun, X.; Yu, Z.; Chen, X. Understanding and controlling the sensitivity of event cameras in responding to static objects. In Proceedings of the 2023 IEEE/ASME International Conference on Advanced Intelligent Mechatronics (AIM), Seattle, WA, USA, 28–30 June 2023; pp. 783–786.

- Brandli, C.; Berner, R.; Yang, M.; Liu, S.-C.; Delbruck, T. A 240 × 180 130 dB 3 μs latency global shutter spatiotemporal vision sensor. IEEE J. Solid-State Circuits 2014, 49, 2333–2341.

- Indiveri, G.; Linares-Barranco, B.; Hamilton, T.J.; Van Schaik, A.; Etienne-Cummings, R.; Delbruck, T.; Liu, S.-C.; Dudek, P.; Häfliger, P.; Renaud, S.; et al. Neuromorphic silicon neuron circuits. Front. Neurosci. 2011, 5, 9202.

- Sarpeshkar, R.; Delbruck, T.; Mead, C.A. White noise in MOS transistors and resistors. IEEE Circuits Devices Mag. 1993, 9, 23–29.

- Lichtsteiner, P.; Posch, C.; Delbruck, T. A 128 × 128 120 dB 30 mW asynchronous vision sensor that responds to relative intensity change. In Proceedings of the 2006 IEEE International Solid-State Circuits Conference (ISSCC), San Francisco, CA, USA, 6–9 February 2006; pp. 2060–2069.

- Yang, M.; Liu, S.-C.; Delbruck, T. A dynamic vision sensor with 1% temporal contrast sensitivity and in-pixel asynchronous delta modulator for event encoding. IEEE J. Solid-State Circuits 2015, 50, 2149–2160.

- Chen, J.; Chen, N.; Wang, Z.; Dou, R.; Liu, J.; Wu, N.; Liu, L.; Feng, P.; Wang, G. A review of recent advances in high-dynamic-range CMOS image sensors. Chips 2025, 4, 8.

- Dabov, K.; Foi, A.; Katkovnik, V.; Egiazarian, K. Image denoising by sparse 3-D transform domain collaborative filtering. IEEE Trans. Image Process. 2007, 16, 2080–2095.

| Method | Integration | Gao’s | Ours |
|---|---|---|---|
| PSNR (dB) | 7.89 | 8.63 | 10.23 |
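For readers who want to reproduce this kind of comparison, PSNR can be computed as in the minimal sketch below. The peak value of 255 (8-bit images) and the choice of reference image are assumptions, since the excerpt does not state how the values in the table were obtained.

```python
import numpy as np


def psnr(reference, estimate, peak=255.0):
    """Peak signal-to-noise ratio in dB between two same-shape images.

    peak is the maximum possible pixel value (255 assumed for 8-bit data).
    """
    reference = np.asarray(reference, dtype=np.float64)
    estimate = np.asarray(estimate, dtype=np.float64)
    mse = np.mean((reference - estimate) ** 2)
    if mse == 0:
        return float("inf")  # identical images
    return 10.0 * np.log10(peak ** 2 / mse)
```

A higher value means the reconstruction is closer to the reference. Because PSNR is logarithmic, the gap between 8.63 dB and 10.23 dB corresponds to a mean squared error that is lower by a factor of about 1.45.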

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, B.; Yang, W.; Wu, K.; Yang, R.; Xie, J.; Liu, H. Time-Interval-Guided Event Representation for Scene Understanding. Sensors 2025, 25, 3186. https://doi.org/10.3390/s25103186