Abstract

Human–machine interface (HMI) systems are increasingly utilized to develop assistive technologies for individuals with disabilities and older adults. This study proposes two HMI systems: a face–machine interface that uses piezoelectric sensors to detect facial muscle activations from eye and tongue movements, and a head–machine interface that uses accelerometers to monitor head movements. These systems enable hands-free wheelchair control for those with physical disabilities and speech impairments. A prototype wearable sensing device was also designed and implemented. Four commands can be generated with each interface to steer the wheelchair. We conducted tests in offline and real-time scenarios to assess efficiency and usability among older volunteers. The head–machine interface achieved greater efficiency than the face–machine interface. The simulated wheelchair control tests showed that the head–machine interface typically required twice the time of joystick control, whereas the face–machine interface took approximately four times longer. Participants noted that the head-mounted wearable device was flexible and comfortable. Both modalities can be used for wheelchair control, especially the head–machine interface for patients retaining head movement. In severe cases, the face–machine interface can be used. Moreover, hybrid control can be employed to satisfy specific requirements. Compared to current commercial devices, the proposed HMIs provide lower costs, easier fabrication, and greater adaptability for real-world applications. We will further verify and improve the proposed devices for controlling a powered wheelchair, ensuring practical usability for people with paralysis and speech impairments.

1. Introduction

The World Health Organization (WHO) reports an annual increase in the global population of individuals with disabilities due to factors such as congenital conditions, aging, illness, and accidents. A significant challenge faced by individuals with disabilities is limited physical mobility [1], which can restrict their ability to move independently or communicate with others. The growing number of older adults worldwide may dramatically increase the number of people with mobility disabilities and impairments. Consequently, the development of assistive and rehabilitation devices is essential for enhancing their quality of life [2,3,4]. However, most commonly available technologies rely on controls such as buttons or joysticks, which may be inaccessible to those with severe disabilities. This highlights the need for inclusive technological advancements that accommodate the diverse needs of individuals with disabilities, posing a compelling challenge for researchers in designing innovative solutions that support independent living and social integration.

Human–machine interface (HMI) technology has become a critical area of research for developing assistive and rehabilitation devices for individuals with disabilities or the elderly [5,6]. HMI systems leverage biomedical and physical signals to facilitate machine control [6,7,8,9], with applications that include enabling control over electric wheelchairs through a variety of biomedical signals [10,11], such as facial muscle movements [12,13], head movements [14,15,16], eye tracking [17,18,19], electrooculography (EOG) [20,21,22], electromyography (EMG) [23,24,25], and electroencephalography (EEG) [26,27,28]. These studies aimed to empower individuals with severe disabilities to manage wheelchair movement independently. However, the widespread adoption of these systems has been hindered by their high cost and limited adaptability, rendering them inaccessible to many individuals with disabilities. Recent HMI research has increasingly focused on the development of more affordable and accurate systems. Innovations include the use of low-cost sensors, such as electromagnetic induction sensors [29,30], force sensors [31,32], and flex sensors [33,34], which can detect subtle movements in the tongue and facial muscles to generate control commands for electric wheelchair navigation. Despite advancements noted in previous research, several limitations remain in the sensor manufacturing process. These limitations include fabrication complexity, device durability, sensitivity, and the effects of temperature variations on measurement accuracy. Additionally, challenges continue to arise when deploying these sensors in various real-world environments, where system performance often fails to meet practical application requirements. Addressing these limitations is essential for improving the efficiency and reliability of HMI systems. 
For example, in our previous study [35] we utilized six thin-film piezoelectric sensors to capture facial muscle signals for electric wheelchair control. However, that approach had practical limitations, particularly regarding the inconvenience of attaching multiple sensors directly to the face.

This study introduces a wearable device with an adjustable design tailored to individual users, thereby enhancing its usability and feasibility. We reduced the number of piezoelectric sensors from six to three, while maintaining effective signal detection. Additionally, we utilized an accelerometer sensor to detect head movements, providing an alternative input for generating commands to control a simulated electric wheelchair. To evaluate its potential use for people with disabilities, we conducted experiments to verify the effectiveness of the proposed HMI system in older adult participants. The remainder of this paper is structured as follows: Section 2 describes the prototype, which includes the wearable sensing device, face–machine interface method, head–machine interface method, and command translations. Section 3 presents the experimental results and the verification of efficiency in offline and online testing. Section 4 discusses the findings. Finally, Section 5 concludes and outlines future work regarding practical applications for individuals with physical disabilities and speech impairments.

2. Materials and Methods

2.1. Proposed Hands-Free HMI System Using Piezoelectric Sensors and Accelerometers for Wheelchair Control

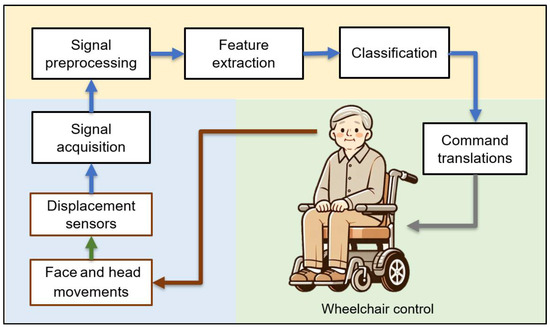

To develop practical HMIs for hands-free control, we used displacement sensors to detect mechanical displacement resulting from human activities and interface machines through head, face, and tongue movements. Figure 1 illustrates the proposed hands-free HMI system designed for wheelchair control. This system consists of three primary components. The first is signal acquisition (shown on a blue background), which includes two key procedures: detecting facial muscle movements using a piezoelectric sensor and capturing head movements with an accelerometer, followed by the conversion of analog signals into digital signals for processing. Second, the signal analysis and classification process (depicted on a yellow background) integrates an algorithm for signal segmentation, a feature extraction process to gather essential data, and the subsequent classification of the signals. Finally, command translation for wheelchair control (represented on a green background) converts the output obtained from the classification into actionable commands for wheelchair operation. This approach can also be used in various other applications.

Figure 1.

The overview of the proposed hands-free human–machine interface system for wheelchair control.

2.2. Prototyping

2.2.1. Head-Mounted Device

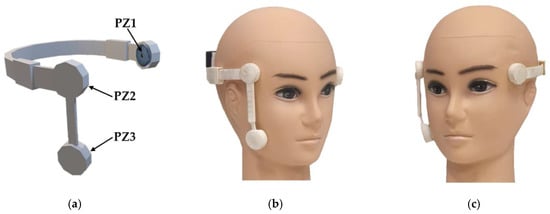

Wearable devices are widely used in medical and healthcare applications to collect data from the human body for diagnostic and treatment purposes. They also contribute to the development of HMI systems [36,37,38]. In this study, we provided a head-mounted wearable device that uses piezoelectric sensors and accelerometers to acquire physical data from the face and head. We designed a comfortable and flexible head-mounted prototype using Shapr3D, as shown in Figure 2a. The prototype consists of three main parts: (1) a headset with a flexible shoulder containing two piezoelectric sensors at the left and right ends, (2) a flexible and rotatable shoulder containing a piezoelectric sensor that can connect to the end of either side of the headset, and (3) an accelerometer with a circuit container. The prototype model was 3D printed using a polylactic acid (PLA) filament, as depicted in Figure 2b,c. This design enables the adjustment of the sensor interface without removing the wearable device, according to the individual shape and size of the head.

Figure 2.

The prototype of the head-mounted device. (a) Three-dimensional modeling. (b,c) The 3D-printed head-mounted device prototypes worn on the head from the right and left perspectives.

2.2.2. Sensing Modules and Processor

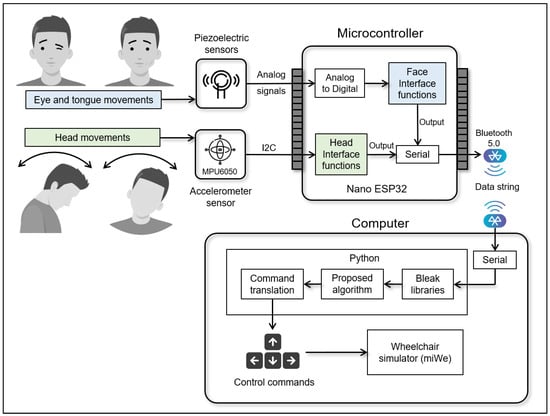

The electronic components of the head-mounted device can be divided into two parts: displacement sensor modules and the microprocessor, as shown in Figure 3. Each component is described in detail below.

Figure 3.

Diagram of the displacement sensing modules and connection for face– and head–machine interfaces.

- Displacement sensor modules

For the face–machine interface, we used a piezoelectric vibration tapping sensor made from piezoelectric ceramic, which converts mechanical energy into electrical energy. Three 20.5 mm piezoelectric vibration tapping sensors were used for eye winking and tongue pushing. As described in Section 2.2.1, two piezoelectric sensors, PZ1 and PZ2, were placed on the left and right temples near the eye, respectively. PZ3 was placed on the left or right cheek, as shown in Figure 2b,c. These modules can provide both analog and digital signals. Analog piezoelectric signals were used as the input data for the microprocessor. We used the MPU6050 sensor module for the head–machine interface. This compact motion-tracking device has a 3-axis gyroscope, a 3-axis accelerometer, and a digital motion processor. It can communicate with microcontrollers via an I2C bus interface. According to the module installation in the head-mounted device, the x- and z-axes were used to detect head movements.

- Microprocessor

We used an Arduino Nano ESP32 board as the microprocessor to process the input data from the sensing modules. The board is manufactured by Arduino S.r.l., based in Turin (Torino), Italy. This board features a NORA-W106 module from u-blox, equipped with a 32-bit ESP32 microcontroller. The Nano ESP32 supports Wi-Fi and Bluetooth wireless communication. We used a prototype shield board with a charger circuit and battery for plugging the sensor module and the ESP32 board. The ESP32 was used to convert analog piezoelectric signals to digital signals and acquire data from the MPU6050 sensor module. We programmed the ESP32 to collect and transmit data using the UART protocol through Bluetooth 5.0 modules. The modules were configured at a baud rate of 115,200 for serial communication with a computer, allowing for data processing and the implementation of algorithms. A 3.7 V/150 mAh lithium-ion battery is used to power the ESP32 board and sensor modules, providing approximately 45 min of continuous operation under normal conditions. Data processing was performed using Python (version 3.13.0). We utilized the Bluetooth Low Energy platform Agnostic Klient (Bleak) libraries (version 0.22.3) (https://pypi.org/project/bleak/, accessed on 21 January 2025) to read data from the head-mounted devices. The data were processed using the proposed algorithms and command translations. The commands were used to control simulated electric wheelchairs. We employed the McGill Immersive Wheelchair (miWe) simulator [39] to design experimental tasks that validate the effectiveness of the proposed online HMI system.
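As an illustration of the data path described above, the following minimal sketch shows how a Python script might receive samples from the head-mounted device with Bleak. The device address, the characteristic UUID, and the ASCII frame layout (`pz1,pz2,pz3,ax,az`) are all hypothetical placeholders, since the text does not specify them. The sketch also assumes that each notification carries exactly one complete frame.

```python
import asyncio

# Hypothetical placeholders: neither the address, the characteristic UUID,
# nor the frame layout is given in the text. Replace them for a real device.
DEVICE_ADDRESS = "00:11:22:33:44:55"
NOTIFY_CHAR_UUID = "0000ffe1-0000-1000-8000-00805f9b34fb"


def parse_frame(payload: bytes) -> dict:
    """Parse one assumed ASCII frame 'pz1,pz2,pz3,ax,az' into named fields."""
    fields = payload.decode("ascii").strip().split(",")
    if len(fields) != 5:
        raise ValueError(f"expected 5 fields, got {len(fields)}")
    pz1, pz2, pz3 = (int(v) for v in fields[:3])
    ax, az = (float(v) for v in fields[3:])
    return {"pz": (pz1, pz2, pz3), "ax": ax, "az": az}


async def stream(duration_s: float = 10.0) -> list:
    """Collect parsed frames from BLE notifications for duration_s seconds."""
    # Imported here so that parse_frame can be used without Bleak installed.
    from bleak import BleakClient

    samples = []

    def on_notify(_characteristic, data: bytearray) -> None:
        # Bleak calls this with the characteristic and the raw payload.
        samples.append(parse_frame(bytes(data)))

    async with BleakClient(DEVICE_ADDRESS) as client:
        await client.start_notify(NOTIFY_CHAR_UUID, on_notify)
        await asyncio.sleep(duration_s)
        await client.stop_notify(NOTIFY_CHAR_UUID)
    return samples
```

With a real device, `asyncio.run(stream(5.0))` would return the frames received in five seconds. Keeping the parser separate from the BLE callback makes it possible to test the frame handling offline.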

2.3. Proposed Actions and Commands

This study proposes a human–machine interface system that utilizes displacement sensing from a head-mounted device to generate commands based on face and head movements to control the direction of a wheelchair, as illustrated in Table 1. Using the proposed actions, we created four directional control commands: turning left and right, and moving forward and backward. For the face–machine interface, we used eye and tongue movements. Winks in the left or right eye generated left- or right-turn commands, respectively. Blinking with both eyes created a command to move backward. Tongue pushes against the left or right cheek created a command to move forward. To develop the head–machine interface, we created a system that generates commands through head movements. The interface recognizes four distinct head-tilt directions (left, right, forward, and backward), each corresponding to a command for steering the wheelchair in these four directions. An example of the corresponding posture and actions is illustrated in Figure 3. A stop command is issued in the idle state, when no action is performed.

Table 1.

Proposed action mapping with commands for hands-free wheelchair control.

2.4. Proposed Algorithm

2.4.1. Data Preprocessing

Data preprocessing was performed before the analysis and classification stages. For piezoelectric signal processing, a high-pass filter with a 3 Hz cutoff was used to remove low-frequency noise and baseline shifts caused by motion artifacts. A low-pass filter with an 80 Hz cutoff eliminated high-frequency noise and electrical interference, while a 50 Hz notch filter was applied to remove power line interference. For accelerometer data preprocessing, a 0.1–20 Hz bandpass filter was employed to focus on relevant head movements, removing low-frequency drift from slow movements and high-frequency noise. This process ensures that signal classification accurately captures movements of the eyes, tongue, and head.
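The filter chain for the piezoelectric signals can be sketched in plain Python. The text gives only the cutoff frequencies, so the filter type and order here are assumptions: each stage is a second-order section built from the widely used Audio EQ Cookbook biquad formulas, with a Butterworth-like Q of about 0.707 for the high-pass and low-pass stages and an assumed Q of 30 for the notch. The function names are illustrative, and the 1000 Hz sampling rate is taken from Section 2.4.2.

```python
import math


def biquad(kind: str, f0: float, fs: float, q: float = 1 / math.sqrt(2)):
    """Return normalized (b, a) coefficients of one second-order section."""
    w0 = 2 * math.pi * f0 / fs
    cosw, alpha = math.cos(w0), math.sin(w0) / (2 * q)
    if kind == "highpass":
        b = [(1 + cosw) / 2, -(1 + cosw), (1 + cosw) / 2]
    elif kind == "lowpass":
        b = [(1 - cosw) / 2, 1 - cosw, (1 - cosw) / 2]
    elif kind == "notch":
        b = [1.0, -2 * cosw, 1.0]
    else:
        raise ValueError(f"unknown filter kind: {kind}")
    a0, a1, a2 = 1 + alpha, -2 * cosw, 1 - alpha
    return [v / a0 for v in b], [1.0, a1 / a0, a2 / a0]


def apply(b, a, x):
    """Run the direct-form I difference equation over the sequence x."""
    y = []
    x1 = x2 = y1 = y2 = 0.0
    for xn in x:
        yn = b[0] * xn + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
        x2, x1 = x1, xn
        y2, y1 = y1, yn
        y.append(yn)
    return y


def preprocess_piezo(x, fs: float = 1000.0):
    """Cascade 3 Hz high-pass, 80 Hz low-pass, and 50 Hz notch stages."""
    stages = (
        ("highpass", 3.0, 1 / math.sqrt(2)),
        ("lowpass", 80.0, 1 / math.sqrt(2)),
        ("notch", 50.0, 30.0),  # assumed notch Q; not stated in the text
    )
    for kind, f0, q in stages:
        b, a = biquad(kind, f0, fs, q)
        x = apply(b, a, x)
    return x
```

The same two helper functions could be cascaded as a 0.1 Hz high-pass and a 20 Hz low-pass stage to approximate the accelerometer band-pass filter at its own sampling rate. The stages are causal, which suits real-time use but introduces some phase delay.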

2.4.2. Face–Machine Interface

This study utilized piezoelectric sensors to measure muscle signals and generate commands for real-time processing. We applied conventional feature extraction techniques used in electromyography (EMG) to develop a method for detecting eye and tongue movements. Our approach utilizes time-domain features based on a research survey that focused on EMG-based machine control [39]. We used the maximum peak feature derived from piezoelectric signals, calculated using Equation (1) as the largest value among all elements of the signal acquisition dataset of each piezoelectric sensor.

The proposed classification algorithm for the face–machine interface consists of two main processing steps:

- (1) Calibration and Parameter Setting

Before testing, users must conduct a quick calibration by performing the eye and tongue actions specified in Table 1 to activate each sensor for baseline parameter collection. Each action should be performed five times, and the mean of the five resulting maximum values is computed using Equation (2).

Here, one baseline parameter is obtained for each piezoelectric sensor, indexed by the sensor number (1, 2, or 3), and each of the five values is the maximum of one action calculated using Equation (1). The baseline parameters were then used to obtain the two threshold parameters using Equations (3) and (4), respectively.

During these actions, the feature parameters are calculated as defined by Equation (5).

The features are computed from the signals acquired in real time at a sampling rate of 1000 Hz. We used windows of 1000 samples (1 s) to process the real-time features every 2 s, as given in Equations (6) and (7).

After obtaining the feature parameters, the “argmax” function determines the activated sensor and stores its index in the output parameter, as stated in Equation (8).
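The calibration and feature steps described above can be sketched in Python as follows. This is a minimal illustration rather than the authors' implementation. The function names are hypothetical, and the `fraction` that turns a baseline into a threshold is an assumption, because the text does not state the scaling used in Equations (3) and (4).

```python
from statistics import mean


def max_peak(window):
    """Maximum peak feature of one signal window, as described for Equation (1)."""
    return max(window)


def baseline(calibration_windows):
    """Mean of the maximum peaks over the calibration repetitions of one sensor."""
    return mean(max_peak(w) for w in calibration_windows)


def thresholds(baselines, fraction=0.5):
    """Derive one threshold per sensor from its baseline.

    ASSUMPTION: the scaling factor is not given in the text; 0.5 is a
    placeholder chosen only for illustration.
    """
    return [fraction * b for b in baselines]


def active_sensor(windows, thr):
    """Return the 1-based index of the most active sensor, or 0 when idle.

    The argmax picks the sensor with the largest feature; the result counts
    as an activation only if that feature reaches the sensor's threshold.
    """
    feats = [max_peak(w) for w in windows]
    j = max(range(len(feats)), key=feats.__getitem__)  # argmax over sensors
    return j + 1 if feats[j] >= thr[j] else 0
```

For example, five calibration windows whose peaks are 2, 4, 3, 5, and 6 give a baseline of 4, and with the placeholder fraction of 0.5 the threshold for that sensor would be 2.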

- (2) Decision Making and Command Translation

We propose command translation for simulated wheelchair control using facial muscle actions and human cognition. We directed the simulated wheelchair’s movement by combining eye and tongue actions (Table 1) to activate the target sensors. Tongue pushes against the cheek activated sensor PZ3 to move the wheelchair forward. Winking the left eye activated sensor PZ1 to turn the wheelchair to the left, and winking the right eye activated sensor PZ2 to turn it to the right. Blinking both eyes moved the wheelchair backward. Hence, we applied a decision rule based on the feature values, utilizing the index numbers of the sensors for four command classifications along with the idle state.

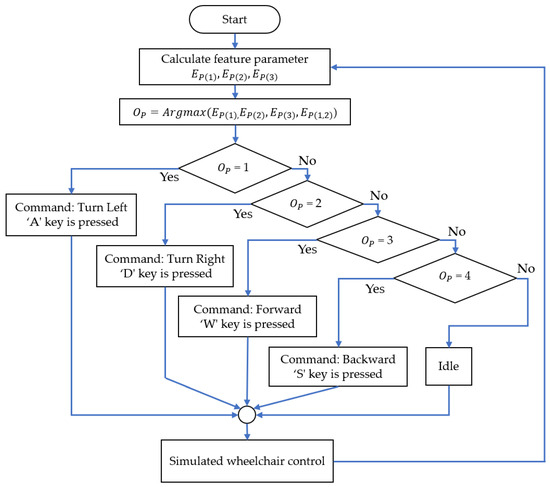

Figure 4 illustrates the proposed classification decision process for the face–machine interface system. For real-time processing, the system extracts feature parameters from three piezoelectric sensors that detect user actions and generate directional control commands every two seconds for the simulated wheelchair through keyboard interaction.

Figure 4.

Flowchart of the classification process of the face–machine interface for simulated wheelchair control.
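A decision rule of the kind shown in Figure 4 might look like the sketch below. It is a hypothetical reading of the action mapping in Table 1 (left wink on PZ1 for left, right wink on PZ2 for right, tongue push on PZ3 for forward, both eyes for backward) and is not the published rule. In particular, treating simultaneous activation of PZ1 and PZ2 as a blink of both eyes is an assumption, as is the use of the feature-to-threshold ratio to break ties. Thresholds are assumed to be positive.

```python
# Sensor index (1-based) to command, following the mapping in Table 1.
COMMANDS = {1: "left", 2: "right", 3: "forward"}


def translate_face_command(features, thresholds):
    """Map the three piezoelectric features to one wheelchair command."""
    active = [f >= t for f, t in zip(features, thresholds)]
    if not any(active):
        return "stop"  # idle state: no sensor reached its threshold
    if active[0] and active[1]:
        return "backward"  # ASSUMPTION: both temple sensors active = blink
    # Among the active sensors, pick the one that most exceeds its threshold.
    j = max(
        (i for i in range(len(features)) if active[i]),
        key=lambda i: features[i] / thresholds[i],
    )
    return COMMANDS[j + 1]
```

Called once per processing window, `translate_face_command((0.9, 0.1, 0.1), (0.5, 0.5, 0.5))` yields a left-turn command, while features that all stay below their thresholds yield a stop command.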

2.4.3. Head–Machine Interface

The MPU6050 module was installed in a head-mounted device positioned in the y-plane. Hence, the angle data of the x- and z-axes were used to identify the direction of head tilt. The proposed classification algorithm for head movement detection consists of two main processing steps:

- (1) Calibration and Parameter Setting

Before use, the system requires a user calibration session to record the baseline acceleration along the x- and z-axes during the head movements listed in Table 1. Each action should be performed three times to establish the maximum and minimum acceleration values along the x- and z-axes, which are calculated using Equations (9)–(12).

The inputs to these equations are the x- and z-axis acceleration signals acquired in real time. We used 200 samples for each 1 s window.

The four threshold values in Equations (13)–(16) were then set to 80 percent of the maximum and minimum accelerations along the x- and z-axes obtained from Equations (9)–(12), and were used to detect movement.
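The head-movement calibration can be sketched in Python as follows. The 80 percent factor comes from the text. The function names and dictionary keys are hypothetical, and combining the three repetitions by taking the overall extreme value is an assumption, since the text does not say how the repetitions are merged.

```python
def axis_extremes(windows):
    """Return (largest maximum, smallest minimum) over the calibration windows.

    ASSUMPTION: repetitions are merged by taking the overall extremes.
    """
    return max(max(w) for w in windows), min(min(w) for w in windows)


def head_thresholds(x_windows, z_windows, fraction=0.8):
    """Set thresholds to 80 percent of the calibrated extremes on each axis."""
    x_max, x_min = axis_extremes(x_windows)
    z_max, z_min = axis_extremes(z_windows)
    return {
        "x_hi": fraction * x_max,
        "x_lo": fraction * x_min,
        "z_hi": fraction * z_max,
        "z_lo": fraction * z_min,
    }
```

With calibrated x-axis extremes of 10 and -5, for instance, the x-axis thresholds would be 8 and -4.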

- (2) Decision Making and Command Translation

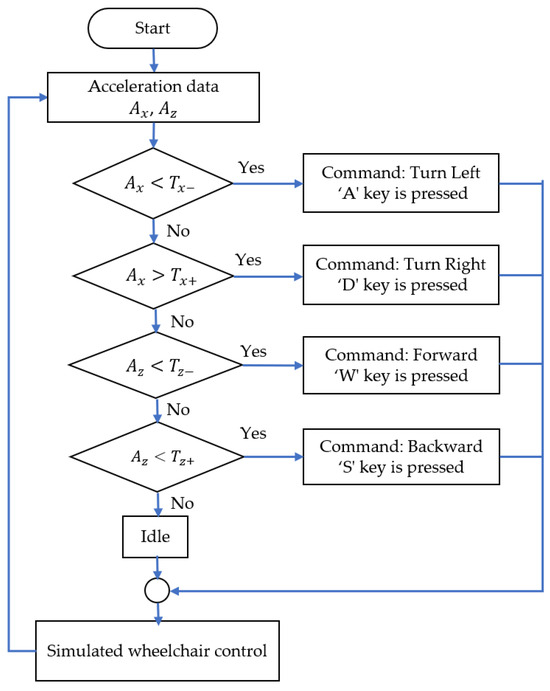

The real-time acceleration data along the x- and z-axes were used to generate commands every 2 s based on the threshold parameters. The classification process of the head–machine interface system is illustrated in Figure 5. Using a simple decision rule, the system categorizes the input into four movement commands plus an idle state.

Figure 5.

Flowchart of the classification process of the head–machine interface for simulated wheelchair control.
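A threshold rule in the spirit of Figure 5 is sketched below. The assignment of axes and signs to directions (positive x for right, negative x for left, positive z for forward, negative z for backward) is an assumption, because the text states only that the x- and z-axes are used. The sketch also assumes positive upper thresholds and negative lower thresholds, and the dictionary keys are hypothetical.

```python
def translate_head_command(x_window, z_window, thr):
    """Map one window of x/z acceleration samples to a wheelchair command.

    Each candidate is scored by how far the window's extreme value goes
    relative to its calibrated threshold; a score of 1.0 or more means the
    threshold was reached. The axis-to-direction mapping is an assumption.
    """
    scores = {
        "right": max(x_window) / thr["x_hi"],
        "left": min(x_window) / thr["x_lo"],
        "forward": max(z_window) / thr["z_hi"],
        "backward": min(z_window) / thr["z_lo"],
    }
    command, score = max(scores.items(), key=lambda kv: kv[1])
    return command if score >= 1.0 else "stop"
```

Scoring by the ratio to the threshold lets a single rule handle all four directions and resolves windows in which more than one threshold is crossed by choosing the strongest movement.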

3. Results

3.1. Participants

This study focused on adults aged 60 to 69 years, regardless of gender, who were able to live independently and did not have neurological disorders affecting their mobility, in order to develop and validate the proposed HMI system in a controlled setting with older adults. The aim was to evaluate its efficiency, usability, and safety as an initial step. Participants excluded from the study included individuals with acute or chronic conditions that might hinder participation, those with impairments affecting facial, hand, or arm movements, and individuals who declined to provide informed consent.

Twelve older adults, six males and six females, aged between 60 and 69 years, with a mean age of 64.92 ± 3.95 years, participated in the study, as shown in Table 2. All participants met the inclusion criteria: none demonstrated impairments in facial muscles, bilateral hand function, or bilateral arm movement, and none had a history of neurological disorders affecting movement, such as stroke, Parkinson’s disease, or amyotrophic lateral sclerosis (ALS). One participant had a lower limb impairment, and another reported weakness in the left (non-dominant) arm.

Table 2.

Participant demographics and physical condition.

The participants took part in experiments to validate the effectiveness of the proposed system in controlling a simulated electric wheelchair. The experiment included two components: (1) assessing the effectiveness of the proposed HMIs for command creation, and (2) observing the performance of real-time control for the simulated power wheelchair. The participants reviewed the documentation and signed a consent form, which ensures that personal information remains confidential and anonymous. The Ethics Committee of Human Research at Walailak University approved the research procedure involving human subjects (protocol code: WU-EC-IN-1-280-66) on 27 November 2023. The procedure aligns with the ethical principles of the Declaration of Helsinki, the Council for International Organizations of Medical Sciences (CIOMS), and the World Health Organization (WHO).

3.2. Experiment I: Verification of the Proposed Hands-Free HMI System

In the first experiment, we evaluated the accuracy of command issuance for wheelchair control. None of the participants had prior HMI experience. They used the proposed face– and head–machine interfaces and a conventional joystick to generate commands, and the accuracy rate was measured. After completing the installation and calibration, each participant completed a 20 min training session before starting the experiments. Each participant completed two trials of 12 commands each, for a total of 24 commands per HMI modality. The command sequence is outlined in Table 3. Participants rested for 2 min between the two trials. After the second trial, they rested for 5 min before beginning the next modality to avoid muscle fatigue.

Table 3.

Sequence of commands for evaluating the proposed HMI system.

We also used precision, sensitivity, and accuracy to evaluate the performance of the proposed actions and algorithms of the face– and head–machine interface systems for wheelchair control. These metrics are calculated using Equations (17)–(19) as follows:

$$\text{Precision} = \frac{TP}{TP + FP} \tag{17}$$

$$\text{Sensitivity} = \frac{TP}{TP + FN} \tag{18}$$

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{19}$$

A true positive (TP) is a command that was expected and correctly detected. A true negative (TN) is a command that was not expected and was correctly not detected. A false positive (FP) is a command that was detected although it was not expected. A false negative (FN) is an expected command that was not detected.
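As a worked illustration of these three metrics, the short Python sketch below computes them from the four counts using their standard definitions. The counts in the usage note are hypothetical and are not taken from Tables 5 or 6.

```python
def precision(tp: int, fp: int) -> float:
    """Fraction of detected commands that were actually expected."""
    return tp / (tp + fp)


def sensitivity(tp: int, fn: int) -> float:
    """Fraction of expected commands that were detected."""
    return tp / (tp + fn)


def accuracy(tp: int, tn: int, fp: int, fn: int) -> float:
    """Fraction of all decisions, positive and negative, that were correct."""
    return (tp + tn) / (tp + tn + fp + fn)
```

With hypothetical counts of TP = 20, TN = 70, FP = 2, and FN = 4 for one command, precision is about 0.909, sensitivity is about 0.833, and accuracy is 0.9375. Because true negatives for the other commands enter the accuracy, a per-command accuracy can exceed 90% even when the sensitivity of that command is noticeably lower.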

Table 4 lists the accuracy values of command generation by comparing all three HMI modalities for each participant. With joystick control, the accuracy ranged from 95.8% to 100.0%, with a mean of 99.0%. The accuracy achieved with the proposed head–machine interface modality ranged from 87.5% to 100.0%, with a mean accuracy of 82.6%. The accuracy range obtained with the face–machine interface modality was 70.8% to 91.7%, with a mean accuracy of 82.3%. The efficiency of the head–machine interface system was similar to that of the joystick control. Most participants, except for Participants 7, 8, and 9, achieved a 100% accuracy rate when using both the joystick and head–machine interface modalities. The proposed head–machine interface can achieve higher accuracy than the face–machine interface. Nine participants achieved over 80% accuracy with the face–machine interface, which was lower than that in our previous work [35] using six thin-film piezoelectric sensors directly placed on the face to detect eye and tongue movements, where the accuracy exceeded 90%. This lower accuracy may have resulted from the inability of some participants to separately perform left and right winking.

Table 4.

The results of the proposed and conventional HMI modalities for all participants.

Furthermore, the classification matrix for the proposed hands-free HMI systems based on the precision, sensitivity, and accuracy of each control command, as shown in Table 5 and Table 6, was used to evaluate the effectiveness of each HMI system. Table 5 presents the results of the proposed face–machine interface. These results demonstrate that the system can identify eye and tongue movements for the four command creations with an accuracy of over 90%. However, the success rate, precision, and sensitivity of the commands for turning left and right were relatively low. For some participants, winking with either the left or right eye was difficult and could lead to errors in activating commands. The forward and backward commands exhibited high precision, sensitivity, and accuracy. Most participants generated signal features by pushing their tongues against their left or right cheeks and blinking both eyes. Table 6 shows the results for the head–machine interface. The system had high classification efficiency for command creation, achieving a success rate, precision, sensitivity, and accuracy of over 95% for each command. These results indicate the significant potential of implementing our hands-free HMIs for online wheelchair control.

Table 5.

Results of command classification of face–machine interface for wheelchair control.

Table 6.

Results of command classification of head–machine interface for wheelchair control.

3.3. Experiment II: Performance of the Proposed Hands-Free HMI System for Real-Time Simulated Wheelchair Control

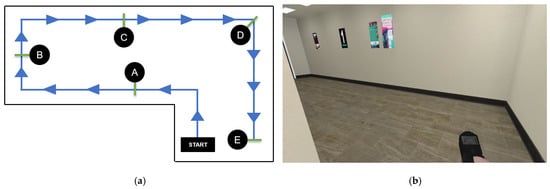

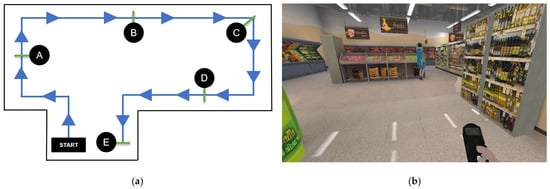

The same group of participants used the proposed HMIs and joystick (hand control) modalities to steer the simulated wheelchair to the goal, and the time taken was recorded. Each participant performed one round per control modality on two test routes. They wore the HMI device and sat in front of a computer to test the real-time steering of the simulated wheelchair, as shown in Figure 6. The first route (Figure 7a) was a path without obstacles, as depicted in Figure 7b. In the second route (Figure 8a), participants steered the wheelchair through a supermarket that contained obstacles, as illustrated in Figure 8b. Both routes had five checkpoints, labeled A to E, along with a designated starting point. Checkpoint E was the completion point for both routes. Participants began at the starting point and navigated the simulated wheelchair through the checkpoints in order, from A through B, C, and D to the final checkpoint E. Routes 1 and 2 had to be completed within 3 and 6 min, respectively. If the participants could not reach the goal in time, the farthest checkpoint they reached was recorded. Before the experiments, each participant practiced controlling the simulated electric wheelchair using the HMI system for 15 min. Participants rested for 5 min before starting the next round. The recorded times were used to evaluate the effectiveness of the proposed HMI modalities and to assess user performance, as presented in Table 7 and Table 8.

Figure 6.

An example scenario of using the proposed HMIs to control a simulated wheelchair during the experiment.

Figure 7.

(a) Test Route 1 for controlling the simulated wheelchair in an obstacle-free environment, including five checkpoints (A to E) over a distance of 20 m. (b) A scenario encountered by the simulated electric wheelchair during testing in a corridor.

Figure 8.

(a) Test Route 2 for controlling the simulated wheelchair in an environment with obstacles, including five checkpoints (A to E) over a distance of 35 m. (b) A scenario faced by the simulated electric wheelchair during testing in a supermarket.

Table 7.

The time taken and checkpoints completed by all participants in Route 1.

Table 7 and Table 8 show the results of the efficiency comparisons between the proposed command translation patterns and the joystick based on the time spent steering the simulated wheelchair to complete Test Routes 1 and 2.

Table 8.

The time taken and checkpoints completed by all participants in Route 2.

In Table 7, the results for Test Route 1 reveal that the time taken using the joystick control ranged from 40 to 95 s across all participants, with an average time of 59.0 s. For successful participants, the time taken with the proposed face–machine interface control ranged from 99 to 354 s, and the average was 241.8 s. The time with the proposed head–machine interface control ranged from 62 to 262 s, with an average time of 117.1 s. For Test Route 2 (Table 8), the time taken with the joystick control for all participants ranged from 67 to 160 s, and the average time was 110.3 s. The time taken with the proposed face–machine interface control ranged from 217 to 673 s, and the average time was 439.5 s for successful participants. The time with the proposed head–machine interface control ranged from 97 to 262 s, with an average time of 219.8 s.

Furthermore, in Test Route 1, Participant 1 spent the least amount of time steering with the face–machine interface at 99 s, while the time with the head–machine interface was 62 s. Participant 1 also spent the least amount of time steering on Test Route 2 with the face–machine interface at 217 s, while the head–machine interface took 97 s. The shortest times spent steering with the joystick control were 40 s for Test Route 1 and 67 s for Test Route 2.

The difference between the average time taken by the face–machine interface and joystick control on Test Route 1 was 182.8 s, whereas on Route 2, it was 329.2 s. The proposed face–machine interface control took approximately four times as long as the joystick control. The difference between the average time taken by the head–machine interface and joystick control on Test Route 1 was 58.1 s, whereas that on Route 2 was 109.5 s. The proposed head–machine interface control took approximately twice as long as the joystick control. The difference between the average time taken by the face–machine interface and head–machine interface controls on Test Route 1 was 124.7 s, whereas on Test Route 2, it was 219.7 s. The proposed face–machine interface control took approximately twice as long as the head–machine interface control.
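The differences and ratios quoted above follow directly from the reported average times, as the short Python check below shows. The dictionary layout and function name are illustrative; the numbers are the averages reported for Test Routes 1 and 2.

```python
# Average completion times in seconds, as reported for each modality.
MEAN_TIMES = {
    "Route 1": {"joystick": 59.0, "face": 241.8, "head": 117.1},
    "Route 2": {"joystick": 110.3, "face": 439.5, "head": 219.8},
}


def compare(route: str, slower: str, faster: str):
    """Return (difference in seconds, time ratio) between two modalities."""
    times = MEAN_TIMES[route]
    difference = round(times[slower] - times[faster], 1)
    ratio = times[slower] / times[faster]
    return difference, ratio


for route in MEAN_TIMES:
    for slower, faster in (("face", "joystick"), ("head", "joystick"), ("face", "head")):
        diff, ratio = compare(route, slower, faster)
        print(f"{route}: {slower} vs {faster}: +{diff} s, x{ratio:.2f}")
```

The ratios come out at roughly 4.1 and 4.0 for face versus joystick, 2.0 and 2.0 for head versus joystick, and 2.1 and 2.0 for face versus head on Routes 1 and 2, respectively, which matches the approximate factors of four and two stated in the text.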

3.4. Satisfaction with the Proposed Hands-Free HMIs

After completing the experiments, all participants were required to complete a satisfaction questionnaire for each HMI modality. The questionnaire included seven questions (Table 9). Each question was evaluated using a five-point Likert scale ranging from 1 to 5, where 1 indicated “strongly disagree”, 2 indicated “disagree”, 3 indicated “neutral”, 4 indicated “agree”, and 5 indicated “strongly agree”. The participants were asked to respond to all questions thoughtfully.

Table 9.

The satisfaction questionnaire.

Table 10 shows the responses to the satisfaction questionnaire provided by each participant. The analysis focused on usability and flexibility factors for developing and implementing hands-free HMIs for patients.

Table 10.

Results of satisfaction with the proposed HMIs for wheelchair control.

Regarding the ease of wearing the head-mounted device for both HMIs, the participants reported a high average satisfaction score of 4.58, ranging from 4 to 5, with a median score of 5, indicating that the device was easy to wear. The device offered a comfortable fit, as evidenced by a mean score of 4.50, ranging from 4 to 5, with a median score of 4. For ease of creating commands, the head–machine interface achieved a higher average score of 4.58, ranging from 4 to 5, compared to the face–machine interface, which averaged 3.58, ranging from 2 to 4. Additionally, participants reported that both the head–machine and face–machine interfaces were highly stable during operation, with average satisfaction scores of 4.25 and 4.5, respectively, ranging from 2 to 4, with a median of 4.

Moreover, the participants reported low fatigue levels for both modalities, with a median score of 5, indicating that they felt only slightly fatigued after completing the experiment. The head–machine interface resulted in lower fatigue than the face–machine interface. In terms of confidence, participants gave the head–machine interface an average score of 4.83, with a median of 5, which was higher than the face–machine interface’s average of 4.00 and median of 3. Overall, satisfaction with both HMIs was high, ranging from 2 to 4. The head–machine interface received an average score of 4.83 and a median score of 5, which was higher than those of the face–machine interface, which had an average score of 4.00 and a median score of 4.

4. Discussion

According to the results of Experiment I, the proposed HMI systems demonstrated high efficiency among most participants, especially the head–machine interface, which achieved an efficiency comparable to that of the joystick modality, as shown in Table 4. As shown in Tables 5 and 6, the head–machine interface with accelerometer sensing achieved success rates ranging from 95.8% to 100%, higher than those of the face–machine interface with facial muscle piezoelectric sensing, which ranged from 79.2% to 87.5%. Among the individual commands of the face–machine interface, the forward and backward commands, activated by pushing the tongue against the cheek and blinking both eyes, yielded the highest success rates of 86.1% and 87.5%, respectively; however, these were still lower than the lowest rate of 95.8% for the head–machine interface. Most participants found it easier to push their tongue against their cheek and blink both eyes than to wink each eye separately, which lowered the efficiency of the left and right commands. For the head–machine interface, all participants achieved higher efficiency for the left and right commands, produced by tilting the head to the left or right, than for the forward and backward commands, produced by tilting the head forward and backward; this difference may have been influenced by the participants' familiarity with each head-tilt movement. The advantages of each HMI could be combined to design a control strategy for a practical HMI system that covers all levels of physical disability.
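To illustrate how four commands can be derived from head tilt, the following is a minimal threshold-based sketch. It is not the algorithm implemented in this study: the axis convention (x pointing forward, y pointing right, z pointing up, readings in g), the 20° threshold, and the function names are all assumptions made for illustration.

```python
import math

# Hypothetical tilt threshold in degrees; in practice it would be tuned per user.
TILT_THRESHOLD_DEG = 20.0


def tilt_angles(ax, ay, az):
    """Estimate pitch and roll (degrees) from a static accelerometer reading.

    Assumed convention: x forward, y right, z up, values in units of g.
    """
    pitch = math.degrees(math.atan2(ax, math.sqrt(ay * ay + az * az)))
    roll = math.degrees(math.atan2(ay, math.sqrt(ax * ax + az * az)))
    return pitch, roll


def classify_head_command(ax, ay, az, threshold=TILT_THRESHOLD_DEG):
    """Map one accelerometer sample to 'forward', 'backward', 'left', 'right', or 'none'."""
    pitch, roll = tilt_angles(ax, ay, az)
    if max(abs(pitch), abs(roll)) < threshold:
        return "none"  # head is close to upright: no command issued
    if abs(pitch) >= abs(roll):
        return "forward" if pitch > 0 else "backward"
    return "right" if roll > 0 else "left"


# Example readings: upright head, then a tilt of about 30 degrees in each direction.
for sample in [(0.0, 0.0, 1.0), (0.5, 0.0, 0.866), (-0.5, 0.0, 0.866),
               (0.0, 0.5, 0.866), (0.0, -0.5, 0.866)]:
    print(sample, classify_head_command(*sample))
```

A dead zone around the upright position, as used here, keeps small involuntary head movements from generating commands; a deployed system would likely also need filtering and a minimum hold time.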

Based on the results of Experiment II, the study with older adults, all participants completed the real-time simulated wheelchair control task on both test routes using the head–machine interface. However, nine participants used the face–machine interface on each test route because of the efficiency observed in Experiment I. Additionally, the face–machine interface took approximately twice as long as the head–machine interface to complete test routes 1 and 2 and approximately four times as long as joystick control. The head–machine interface also provided high efficiency in real-time control, requiring approximately twice as long as joystick control. None of the participants had prior experience with wheelchair control or HMIs. We observed that beginning users need training sessions with the face–machine interface to achieve high efficiency. Moreover, individual commands from both proposed hands-free HMIs can be selected and recommended for hybrid systems tailored to specific disability cases.
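The relative completion times discussed above are simple ratios against a baseline. The sketch below uses hypothetical completion times (not measured values from this study) chosen to match the approximate ratios reported, and shows that the three statements are mutually consistent: a modality that takes twice as long as one that itself takes twice the joystick time takes four times the joystick time.

```python
def relative_time(task_time_s, baseline_time_s):
    """Return how many times longer a task took than the baseline."""
    if baseline_time_s <= 0:
        raise ValueError("baseline time must be positive")
    return task_time_s / baseline_time_s


# Hypothetical route completion times in seconds (illustrative only).
joystick_s = 60.0
head_s = 120.0
face_s = 240.0

head_vs_joystick = relative_time(head_s, joystick_s)
face_vs_head = relative_time(face_s, head_s)
face_vs_joystick = relative_time(face_s, joystick_s)

# The ratios compose multiplicatively.
assert abs(face_vs_joystick - head_vs_joystick * face_vs_head) < 1e-9
print(head_vs_joystick, face_vs_head, face_vs_joystick)
```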

Regarding the satisfaction results in Table 10, although the participants indicated that the prototype head-mounted device was easy to wear and comfortable for long periods during the experiments, its shoulder section must be adjusted to fit the face, which affects the efficiency and stability of the face–machine interface. Moreover, the participants reported that the face–machine interface required more effort to produce commands than the head–machine interface, which increased fatigue and lowered their confidence in using it for actual wheelchair control. Based on the overall score, participants expressed high satisfaction with the proposed hands-free HMIs, particularly the head–machine interface modality.

4.1. Study Limitations

The proposed hands-free HMIs can be used by patients who retain movement in the head, face, or tongue to control actual powered wheelchairs. However, this study has the following limitations:

- (1) The study sample size is small; the primary aim was a preliminary evaluation of the feasibility, usability, and safety of the proposed human–machine interface (HMI) systems with older adults before expanding to a larger, more diverse cohort.

- (2) The experiment was executed in a simulated environment, which may not precisely reflect real-world conditions.

4.2. Recommendations

The preliminary experimental results indicate that the developed system can effectively control the movement of a simulated wheelchair. The recommendations for using the proposed HMIs to control wheelchairs are as follows:

- (1) A prototype of the head-mounted device must be designed and built for practical applications. The shoulder section, which houses piezoelectric sensors, should be flexible and shaped to fit the face.

- (2) Regarding hardware, the Arduino Nano ESP32 with Bluetooth was selected for rapid prototyping. A real-world implementation will need higher-performance hardware and faster communication.

- (3) The proposed hands-free HMIs require visual or audio feedback for training sessions and for creating real-time commands.

- (4) Implementing machine-learning techniques could enhance the classification accuracy of both hands-free HMIs.

- (5) The hands-free HMIs require validation for controlling powered wheelchairs in real environments. Additionally, validation in patients with quadriplegia should be performed.

4.3. Future Work

- (1) Increasing the number of older participants and including individuals with quadriplegia in future studies will help enhance the reliability of the results and the applicability of hands-free HMI systems.

- (2) The efficiency of command generation can be improved by implementing machine-learning techniques, which may enhance classification accuracy.

- (3) We will interface the prototype with a real wheelchair to test its usability, reliability, and adaptability in real-world environments. Additionally, a powered wheelchair will be modified for semi-automatic operation with an integrated obstacle avoidance system.

- (4) We plan to upgrade the hardware to support more efficient real-time operations by enabling faster and more stable communication protocols, such as Wi-Fi and radio frequency (RF), along with increasing battery capacity for extended use.

5. Conclusions

This study aimed to develop hands-free HMIs for wheelchair control using facial muscle activity and head movements. The two HMI modalities are the face–machine interface, which uses piezoelectric sensing of eye and tongue movements, and the head–machine interface, which measures head movements with an accelerometer. We developed a prototype head-mounted wearable device and implemented the proposed algorithms to generate four commands in real-time simulated wheelchair control experiments. Older adults participated in experiments to validate the hands-free HMIs. The results indicated that the efficiency of the head–machine interface was greater than that of the face–machine interface and comparable to that of joystick control. On the tested routes, the head–machine interface required approximately twice as much time as joystick control, whereas the face–machine interface required approximately four times as much. Both hands-free HMI modalities, especially the head–machine interface, can be used for simulated wheelchair control. In future work, we will further verify and enhance the proposed HMIs and head-mounted devices to control powered wheelchairs for practical use by patients and older adults.

Author Contributions

Conceptualization, C.B., N.S., D.A., M.T. and Y.P.; methodology, C.B., D.A. and Y.P.; software, C.B. and Y.P.; validation, C.B., N.S., D.A., M.T. and Y.P.; formal analysis, C.B., N.S. and Y.P.; investigation, C.B. and Y.P.; resources, C.B. and Y.P.; data curation, C.B. and Y.P.; writing: original draft preparation, C.B., N.S., D.A., M.T. and Y.P.; writing: review and editing, C.B., N.S., D.A., M.T. and Y.P.; visualization, C.B., N.S. and Y.P.; supervision, D.A., M.T. and Y.P.; project administration, C.B. and Y.P.; funding acquisition, C.B. and Y.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Research and Innovation Institute of Excellence, Walailak University, grant number WU66242.

Institutional Review Board Statement

All research involving human subjects was approved by the Office of the Human Research Ethics Committee of Walailak University (WU-EC-IN-1-280-66) on 27 November 2023, in accordance with the Declaration of Helsinki, the Council for International Organizations of Medical Sciences, and World Health Organization guidelines.

Informed Consent Statement

Informed consent was obtained from all subjects participating in this study, and written informed consent was obtained from the study participants to publish this article.

Data Availability Statement

The data presented in this study are available upon request.

Conflicts of Interest

The authors declare no conflicts of interest.


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).