Abstract

With the intent to further increase production efficiency while placing humans at the centre of the processes, human-centric manufacturing focuses on concepts such as digital twins and human–machine collaboration. This paper presents enabling technologies and methods that facilitate the creation of human-centric applications powered by digital twins, including from the perspective of Industry 5.0. It reviews and analyses the state of relevant information resources on digital twins for human–machine applications, with an emphasis on the human perspective, but also on the collaborative relationship between humans and machines and the possibilities for its application. Finally, it presents the results of the review and outlines expected future research in this area.

1. Introduction

Over the course of the industrial revolutions, the concept of the digital twin (DT) has emerged, revolutionising industries and redefining our approach to design, monitoring, and maintenance. The DT is a concept with many definitions [1], but essentially it is a virtual replica or simulation of a physical entity, be it a product, process, or system. This digital counterpart is created by integrating real-time data from sensors, Internet of Things (IoT) devices, and other sources, providing a dynamic and accurate representation of the physical entity’s behaviour; it is also a powerful tool for analysis, optimisation, and decision making throughout the entire lifecycle of the corresponding physical entity.

The new trend towards human-centric manufacturing aims to place humans at the centre of manufacturing systems and processes [2]. The concept of human–machine collaboration (HMC) emerged before Industry 5.0 as a solution to combine the strengths of both machines and humans, having great potential for fulfilling human needs and easing physically and mentally demanding tasks. However, most HMC applications created are system-centric, focusing on effectiveness and productivity, rather than on humans. Because of this, HMC should also evolve to be more human-centric [3]. Industry 5.0 emphasises the importance of human workers alongside advanced technologies. HMC in industry often occurs in complex workspaces that need to adapt regularly.

The DT, together with other enabling technologies, offers a way to manage these complex systems by creating digital counterparts of these workspaces and adapting them to our needs. By creating a DT of the HMC workspace, individual tasks and problems can be managed more intuitively. However, as with HMC, DTs have primarily focused on productivity rather than on human-centric aspects, which makes it challenging to create easily accessible human-centric applications. The value-driven approach of Industry 5.0 requires us to shift our focus and develop a systematic approach to creating human-centred solutions.

In the transition to Industry 5.0, human-centric DTs are pivotal in creating explicit connections between humans and technologies to complement their strengths in HMC applications. This research aims to develop a systematic approach to creating human-centric, DT-driven HMC solutions. By bridging the gap between DTs and HMC, we can unlock the potential for symbiotic human–machine applications that prioritise both productivity and the quality of work life for human operators. Consequently, there is a pressing need to define how to utilise DTs to make more human-centric solutions for HMC. To address this gap, we investigate the current state of research on human-centric applications involving the use of DTs in HMC. Additionally, we identify enabling technologies and methods that facilitate human-centric applications involving DTs and HMC.

2. Background

Digital twins play a pivotal role in facilitating human–machine collaboration in Industry 5.0. They offer intuitive interfaces, real-time insights, and collaborative capabilities, empowering human operators to optimise processes and make informed decisions. This symbiotic relationship underpins Industry 5.0’s transformative potential, enabling organisations to excel in a digital world.

This section provides background literature for the key concepts of this paper. It begins with an exploration of Industry 5.0, highlighting its human-centric approach and the need for a systematic approach to manage enabling technologies (Section 2.1). The section then delves into HMC, emphasising various types of relationships and the challenges faced in designing human-centred workspaces (Section 2.2). Lastly, the concept of the DT is introduced, showcasing its role in facilitating interactions between humans and machines and the evolution towards human-centric DTs (Section 2.3).

2.1. Industry 5.0

Since the introduction of the concept of Industry 5.0, researchers have tried to define and agree on how to realise its values, including human-centric applications in various areas of industry. The literature is mostly focused on identifying human needs and what technologies could be used together to fulfil those needs. However, these solutions are often partial, lacking a systematic approach for integrating these technologies to create comprehensive human-centric applications.

Firstly, the Industry 5.0 document by the European Commission [4] presented six categories of enabling technologies, each with subcategories, and stated that the full potential of these technologies can be achieved by using them together in a synergistic manner. Regarding the topic of this review paper, the document mentions “individualised human–machine-interaction” and “digital twins and simulation”; however, it does not state how to use these technologies to realise its values, leaving room for researchers’ interpretations [3,5,6,7]. Since Industry 5.0 complements the previous Industry 4.0 and their enabling technologies overlap [8], many enabling technologies of Industry 4.0 will also help to achieve the societal goals of Industry 5.0 [9].

The human-centric approach in industry puts human needs and interests at the centre of the processes. It also means ensuring that new technologies do not interfere with workers’ fundamental rights, such as the right to privacy, autonomy, and human dignity. Humans should not be replaced by robots in industry; instead, they should work synergistically with machines to improve workers’ health and safety conditions [10]. Human-centric solutions and concepts were already created during the fourth industrial revolution, for example Operator 4.0 [11], which focuses on human–automation symbiosis and has now started its transition towards Operator 5.0 under the influence of Industry 5.0 [12]. Similarly, the concept of human–cyber–physical systems (HCPSs) [13], composite intelligent systems comprising humans as operators, agents, or users, is also evolving towards human-centric manufacturing. Regarding human-centricity in Industry 5.0, the authors in [3] presented an industrial human needs pyramid for Industry 5.0, focusing on higher human needs such as belonging, esteem, and self-actualisation. They state that human-centric manufacturing should go beyond traditional human factors and focus on a higher humanistic level, such as cognitive and psychological well-being, work–life balance, and personal growth.

2.2. Human–Machine Collaboration

HMC is one type of relationship between a human and a machine. It emphasises humans and machines working on the same tasks and goals simultaneously, allowing robots to leverage their strength, repeatability, and accuracy, while humans contribute their high-level cognition, flexibility, and adaptability. It involves parties with different capabilities, competencies, and resources, which must be coordinated to maximise their respective strengths. The other most commonly defined human–machine relationships are coexistence and cooperation [3,14]. In cooperation, humans and machines may temporarily share their resources or workspace, and they may work towards the same goal, but each performs its own tasks. In coexistence, the earliest of these relationships, humans and machines do not share their workspace at all. Possible future relationships, such as coevolution and compassion, have also been defined [3].

In this paper, we give the word “machine” a broad meaning as it can refer to an automated or autonomous system, an agent, a robot, an algorithm, or an AI. Therefore, studies on HMC span a variety of fields, including human–robot collaboration (HRC), human–machine interaction (HMI), or human–machine teaming, involving extensive literature on robotics. While HMC is focused on synergy and combined effort, HMI refers to any situation when humans interact with machines and does not necessarily involve collaboration or working on a common goal [15]. One of the major goals of the field of HMI is also to find the “natural” means by which humans can interact and communicate with machines [16].

Many studies on HRC have already focused on effective collaboration between humans and cobots, which led to the creation of collaborative assemblies [14,17]. Cobots, or collaborative robots, are designed to interact physically with humans in a shared environment without barriers or protective cages. These applications also proved suitable for extension with their DTs, which enabled real-time control and dynamic skill-based task allocation. Predictions and simulations in the DT led to optimised behaviour without the risk of human injury or financial loss. However, these applications and studies were limited to cobots, which are usually implemented in closed industrial cells.
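The dynamic skill-based task allocation mentioned above can be illustrated with a deliberately simple sketch. It assumes, hypothetically, that the DT exposes a skill score per agent and task type together with each agent's current workload, and it assigns each task greedily to the agent with the best skill score after a workload penalty. The class names, scores, and penalty weight are illustrative only and do not reproduce the allocation methods of the cited works.

```python
from dataclasses import dataclass

@dataclass
class Agent:
    """A human operator or a cobot as represented in a hypothetical DT."""
    name: str
    skills: dict[str, float]  # task type -> skill score in [0, 1]; 0 or missing = cannot do it
    load: float = 0.0         # total duration of tasks already assigned

@dataclass
class Task:
    name: str
    kind: str
    duration: float

def allocate(tasks: list[Task], agents: list[Agent], load_penalty: float = 0.1) -> dict[str, str]:
    """Greedy allocation: each task goes to the capable agent whose skill,
    minus a penalty proportional to its current load, is highest."""
    plan: dict[str, str] = {}
    # Place long tasks first, while agent loads are still low.
    for task in sorted(tasks, key=lambda t: t.duration, reverse=True):
        capable = [a for a in agents if a.skills.get(task.kind, 0.0) > 0.0]
        if not capable:
            raise ValueError(f"no agent can perform {task.name}")
        best = max(capable, key=lambda a: a.skills[task.kind] - load_penalty * a.load)
        best.load += task.duration
        plan[task.name] = best.name
    return plan
```

For example, with an operator skilled at inspection and a cobot skilled at screwing and lifting, the screwing and lifting tasks end up with the cobot and the inspection task with the operator. In a DT-driven setting, the skill scores and loads would be refreshed from the twin before each reallocation rather than fixed as here.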

HMC today faces many technical and social challenges addressed in the literature [3,5,18,19], such as transparency, explainability, technology acceptance and trust, safety, performance measures, training people, and decision-making risks when using AI [20]. Overcoming these challenges would result in improved human well-being and flexible manufacturing, where humans and machines develop their capabilities. In [19], the authors proposed a framework with guidelines and recommendations for three complexity levels of the factors that influence the design of human-centred HMI workspaces in an industrial setting. They concluded that the challenges of designing HMC workspaces in industrial settings require multidisciplinary knowledge from diverse fields, together with a framework to systematise research findings.

Working alongside cobots appears to be an effective method for creating personalised products, yet it also raises important issues and considerations that need to be tackled. As mentioned in [5], these concerns encompass fears of job loss among humans, psychological issues, and the challenge of dynamic task distribution. The authors noted that HRC in factories is more successful when cobots assist in repetitive tasks, allowing humans to focus on creative and innovative work.

2.3. Digital Twin

The concept of the DT was proposed in 2002, but it became a reality due to the surge of IoT devices, which are used to collect vast amounts of data, thus making DTs accessible and affordable for many companies. Based on the collected data, the digital counterparts can be analysed and monitored in real time to make decisions or prevent problems in the physical world [5], making DTs essential for improving interactions between humans and machines [21]. Accordingly, several DT frameworks have already been created to enable the efficient design, development, and operation of HRC systems [22]. In recent literature [23], the authors identified six key application areas for DTs with strong human involvement, one of which was ergonomics and safety; other identified areas include training and testing of robotics systems, user training and education, product and process design, validation, and testing.

During the past few years, there has been a trend to combine DTs with semantic technologies to enhance them with cognitive abilities [24]. From this trend, the concept of the Cognitive Digital Twin (CDT) [25,26] emerged as a promising next stage in the evolution of DTs; a CDT can replicate human cognitive processes and execute conscious actions autonomously, with minimal or no human intervention. Besides cognitive abilities, a CDT should also span multiple levels and lifecycle phases of the system. Key enabling technologies for the CDT are semantic technologies (ontology engineering, knowledge graphs), model-based systems engineering, product lifecycle management, and industrial data management technologies (cloud/fog/edge computing, natural language processing, distributed ledger technology) [24]. For HRC cases in smart manufacturing, ref. [27] proposed a CDT framework and case study that learns human model knowledge through deep learning algorithms in edge–cloud 5G computing to improve interaction and facilitate workers’ lives. Similarly, the concept of a Digital Triplet was created [28], comprising the cyber world, the physical world, and an “intelligent activity world”, where humans solve various problems by using the DT.
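To give a feel for how a knowledge graph can add simple "cognitive" reasoning to a DT, the sketch below stores facts about an HRC cell as subject–predicate–object triples and answers one question by chaining pattern queries: which collaborative robots share a cell with a human operator. The vocabulary (`HumanOperator`, `locatedIn`, and so on) and the helper functions are invented for illustration; a real CDT would typically use RDF/OWL tooling and SPARQL rather than a hand-written triple store.

```python
from typing import Optional

# A fact in the graph: (subject, predicate, object). Vocabulary is hypothetical.
Triple = tuple[str, str, str]

def query(graph: set[Triple], s: Optional[str] = None, p: Optional[str] = None,
          o: Optional[str] = None) -> list[Triple]:
    """Return all triples matching the pattern; None acts as a wildcard."""
    return sorted(t for t in graph
                  if (s is None or t[0] == s)
                  and (p is None or t[1] == p)
                  and (o is None or t[2] == o))

def robots_sharing_cell_with_humans(graph: set[Triple]) -> list[str]:
    """Chain three pattern queries: humans -> their cells -> cobots in those cells."""
    humans = {s for s, _, _ in query(graph, p="rdf:type", o="HumanOperator")}
    human_cells = {o for h in humans for _, _, o in query(graph, s=h, p="locatedIn")}
    robots = {s for s, _, _ in query(graph, p="rdf:type", o="CollaborativeRobot")}
    return sorted(r for r in robots
                  if any(c in human_cells for _, _, c in query(graph, s=r, p="locatedIn")))

cell_graph: set[Triple] = {
    ("cobot1", "rdf:type", "CollaborativeRobot"),
    ("cobot3", "rdf:type", "CollaborativeRobot"),
    ("operator7", "rdf:type", "HumanOperator"),
    ("cobot1", "locatedIn", "cell_A"),
    ("cobot3", "locatedIn", "cell_B"),
    ("operator7", "locatedIn", "cell_A"),
}
shared = robots_sharing_cell_with_humans(cell_graph)
```

Here only `cobot1` is returned, because it is the only cobot located in the same cell as a human operator.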

Based on the reviewed literature, one of the main parts of creating human-centric DTs in HMC should be creating a DT of the human [3,29]. Currently, most existing DT applications are developed for prediction and monitoring, serving as decision-making tools for humans, while the importance of human involvement in the DT environment is overlooked; past research has mostly focused on manufacturing devices, which constitutes one of the main research issues [29]. The authors in [3] also stress the importance of creating a human digital twin (HDT), which can be built according to a worker’s capabilities, behaviour patterns, and wellness index. One technical limitation in creating an HDT arises from the different means of communication: a DT of an electronic object can exploit real-time communication with its physical counterpart, whereas a DT of a human connects with its physical twin through intermediary devices, typically sensors or software applications [30].
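To make the HDT idea concrete, the sketch below models a human digital twin as a record of capabilities, a short rolling history of readings, and a wellness index, and it is updated only through readings from intermediary devices, as noted above. The field names, the heart-rate and trunk-flexion inputs, the normalisation constants, and the equal weighting in the wellness formula are all hypothetical placeholders chosen for illustration, not a validated ergonomic or physiological model.

```python
from collections import deque
from dataclasses import dataclass, field
from statistics import mean

@dataclass
class SensorReading:
    """A reading delivered by an intermediary device (wearable, camera, app)."""
    source: str
    heart_rate_bpm: float
    trunk_flexion_deg: float  # posture angle, e.g. from a motion-capture suit

@dataclass
class HumanDigitalTwin:
    worker_id: str
    capabilities: dict[str, float]  # skill -> level in [0, 1]
    resting_hr_bpm: float = 70.0
    # Only the 5 most recent readings are kept (deque drops the oldest).
    history: deque = field(default_factory=lambda: deque(maxlen=5))

    def sync(self, reading: SensorReading) -> None:
        """Update the twin from an intermediary sensor reading."""
        self.history.append(reading)

    def wellness_index(self) -> float:
        """Hypothetical index in [0, 1]: 1 = resting heart rate and upright posture."""
        if not self.history:
            return 1.0
        hr = mean(r.heart_rate_bpm for r in self.history)
        flexion = mean(r.trunk_flexion_deg for r in self.history)
        # Illustrative normalisation: +80 bpm or 60 degrees count as full strain.
        hr_strain = min(max((hr - self.resting_hr_bpm) / 80.0, 0.0), 1.0)
        posture_strain = min(max(flexion / 60.0, 0.0), 1.0)
        return round(1.0 - 0.5 * hr_strain - 0.5 * posture_strain, 3)
```

A task-allocation or scheduling service could, for instance, read `wellness_index()` and `capabilities` from the twin before assigning physically demanding work, which is one way the HDT could feed back into a human-centric HMC workflow.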

3. Review Methodology

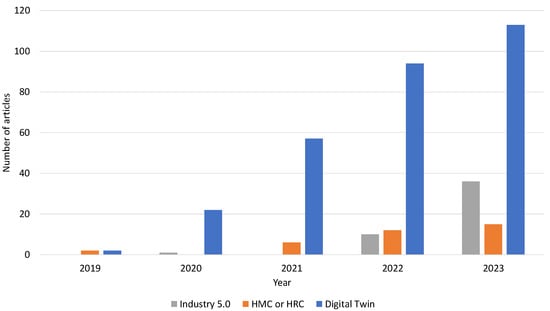

A title search in the Web of Science (WoS) database, restricted to the review category, shows that the number of review and survey papers on themes such as Industry 5.0, HMC or HRC, and DTs has increased rapidly in the last 5 years (Figure 1). However, most of the DT review and survey papers focus on human factors only scarcely or not at all. To evaluate previous review papers for this review, we therefore selected only those articles that focused on humans in more depth, which resulted in a comparison of 20 review and survey papers found in the WoS database with the following keyword- and title-based search:

Figure 1.

Number of related review and survey articles in the past 5 years.

(TI = (digital twin*) OR AK = (digital twin*)) AND (TI = (human* OR industry 5.0 OR operator* OR user* OR people OR worker* OR employee*) OR AK = (human* OR industry 5.0 OR operator* OR user* OR people OR worker* OR employee*))

The search phrase was constructed to include the most common terms used to refer to humans in various areas of industry. It therefore also captures papers on topics such as HMI, which is also part of HMC scenarios. The search returned 29 articles related to the topic of our review paper, from which we selected the 20 most closely related articles (Table 1). Each article was then rated as low, medium, or high in coverage, according to how well it addressed enabling technologies, specific methods for these technologies, and their use cases, and to what extent it also covered human–machine system topics. The authors of these papers outline challenges and future perspectives of DTs for a human-centric industrial transformation.
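The Boolean query above can also be reapplied locally, for example to re-screen exported records. The sketch below is a simplified, hypothetical re-implementation: it treats a trailing `*` as truncation (any continuation of the word), matches terms case-insensitively from the start of a word in the title (TI) and author keywords (AK) fields, and combines the two term groups as in the query. It does not reproduce the full search semantics of the WoS database, and the function names are illustrative.

```python
import re

# Term groups taken from the query; a trailing "*" means truncation.
DT_TERMS = ["digital twin*"]
HUMAN_TERMS = ["human*", "industry 5.0", "operator*", "user*", "people",
               "worker*", "employee*"]

def term_regex(term: str) -> re.Pattern:
    """Compile a query term into a case-insensitive regex anchored at a word start."""
    truncated = term.endswith("*")
    core = re.escape(term.rstrip("*"))
    tail = r"\w*" if truncated else r"\b"  # truncation vs. exact word end
    return re.compile(r"\b" + core + tail, re.IGNORECASE)

def any_match(terms: list[str], text: str) -> bool:
    return any(term_regex(t).search(text) for t in terms)

def matches_query(title: str, keywords: str) -> bool:
    """(TI = DT terms OR AK = DT terms) AND (TI = human terms OR AK = human terms)."""
    dt = any_match(DT_TERMS, title) or any_match(DT_TERMS, keywords)
    human = any_match(HUMAN_TERMS, title) or any_match(HUMAN_TERMS, keywords)
    return dt and human
```

For example, a record titled "Human-centric digital twins for HRC" matches, whereas "Digital twin of a CNC machine" with the keywords "machining; spindle" does not, because none of the human-related terms appear.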

Table 1.

Comparison of related review and survey papers.

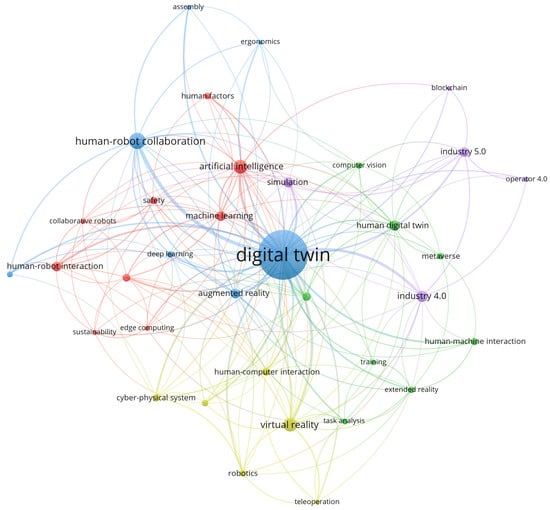

In the next phase, we retrieved all articles matching our search phrase, not only reviews and surveys, and from the 422 results we created a keyword co-occurrence map in VOSviewer to further help identify enabling technologies and methods for this topic (Figure 2). Keywords from the network map are listed in Table 2, ordered by number of occurrences.

Figure 2.

Human-related digital twin keywords co-occurrence network map.

Table 2.

Co-occurrence network map keywords.

Table 1 shows that more than half of the related review papers chosen for comparison were published in 2023 or later. After reading the papers, we concluded that some had relatively high coverage of topics related to this review paper. Still, none of them focused directly on the enabling technologies and methods of DTs for HMC from a human-centric perspective, and they therefore lacked depth in this respect, as they tended to focus on specific technologies or on different topics.

The keywords in Table 2 and their total link strength show that HRC is the largest topic related to humans and DTs, whereas the term “human–machine collaboration” is not used extensively in the literature. Among the technologies, virtual reality and artificial intelligence have the highest total link strength, indicating that they are the most extensively covered in the literature on implementing DTs in HMC applications.
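For readers unfamiliar with the metric, the total link strength of a keyword in a co-occurrence network is the sum, over all other keywords, of the number of documents in which the two keywords co-occur. The sketch below computes occurrences and total link strength with full counting from per-document keyword lists. The three documents are invented for illustration, and VOSviewer additionally offers options such as fractional counting, thesaurus-based merging of keyword variants, and minimum-occurrence thresholds that are not reproduced here.

```python
from collections import Counter
from itertools import combinations

def cooccurrence_stats(docs: list[list[str]]) -> tuple[Counter, Counter]:
    """Return (occurrences, total_link_strength) per keyword using full counting.

    occurrences[k]         -> number of documents containing keyword k
    total_link_strength[k] -> sum over other keywords j of the number of
                              documents in which k and j co-occur
    """
    occurrences: Counter = Counter()
    tls: Counter = Counter()
    for keywords in docs:
        unique = sorted({k.strip().lower() for k in keywords})
        occurrences.update(unique)
        for a, b in combinations(unique, 2):
            # Each co-occurring pair adds one unit of link strength to both ends.
            tls[a] += 1
            tls[b] += 1
    return occurrences, tls

# Invented toy corpus of author keywords.
docs = [
    ["digital twin", "human-robot collaboration", "virtual reality"],
    ["digital twin", "human-robot collaboration", "artificial intelligence"],
    ["digital twin", "virtual reality"],
]
occ, tls = cooccurrence_stats(docs)
```

In this toy example, "digital twin" occurs in three documents and co-occurs twice with "human-robot collaboration", twice with "virtual reality", and once with "artificial intelligence", giving a total link strength of 5.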

Our background literature review and review methodology underscore a notable research gap in the current literature, indicating a pressing need for more comprehensive studies addressing the human-centric aspects of DT-driven HMC solutions. The question of how DT integration can enhance the development of human-centred HMC applications, along with identifying the most suitable technologies and methods for optimising this integration, remains unanswered. Therefore, key enabling technologies, their methods, and paradigms for DT for HMC are discussed and analysed in the next sections as existing applications, concepts, and use cases are explored and analysed from the human-centric point of view.

4. Enabling Technologies and Methods

This section discusses the importance of various enabling technologies presented in an official document of Industry 5.0 [4] and the discovered keywords from Section 3 while focusing on the use cases of these technologies. This includes digital twins and simulations (Section 4.1), artificial intelligence (Section 4.2), human–machine interaction (Section 4.3), and data transmission, storage, and analysis technologies (Section 4.4), often used together in many applications. This section will concentrate on integrating DTs with these technologies in HMC scenarios and address applications with similar topics.

4.1. Digital Twins and Simulations

Before creating a DT, it is necessary to decide on the appropriate tools. According to [49], the five-dimensional DT model can provide reference model support for applications of DTs in different fields. Based on the five dimensions, the authors created a reference framework of enabling technologies for DTs, from which we include technologies for cognising and controlling the physical world, DT modelling, data management, services, and connections. Different tools support different sets of features and technologies; therefore, it is up to engineers to choose a tool that meets the needs of their application. Based on our review methodology, we searched for articles focusing on DT implementations and use cases involving various technologies.
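The five-dimensional model referred to above is commonly written in the DT literature as M_DT = (PE, VE, Ss, DD, CN): physical entity, virtual entity, services, DT data, and connections. As a hedged illustration of how these dimensions could structure tool selection for an HMC cell, the sketch below records them as a plain data structure and reports which dimensions a planned configuration still leaves empty. The class name and the example contents (a cobot arm, a Unity scene, ROS topics) are hypothetical.

```python
from dataclasses import dataclass, field, fields

@dataclass
class FiveDimensionalDT:
    """Checklist for the five DT dimensions (PE, VE, Ss, DD, CN)."""
    physical_entity: list[str] = field(default_factory=list)  # PE: cobots, sensors, operators
    virtual_entity: list[str] = field(default_factory=list)   # VE: geometry, physics, behaviour models
    services: list[str] = field(default_factory=list)         # Ss: e.g. monitoring, task planning
    data: list[str] = field(default_factory=list)             # DD: sensor streams, historical data
    connections: list[str] = field(default_factory=list)      # CN: links between the other four

    def missing_dimensions(self) -> list[str]:
        """Names of dimensions with no technology or component assigned yet."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]

# Hypothetical planned configuration of an HMC workcell twin.
cell = FiveDimensionalDT(
    physical_entity=["cobot arm", "RGB-D camera", "operator"],
    virtual_entity=["Unity scene of the workcell"],
    connections=["ROS topics"],
)
gaps = cell.missing_dimensions()
```

Here `gaps` is `['services', 'data']`, signalling that the configuration has not yet chosen tools for the service and data dimensions.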

In Table 3, we describe the most common tools found in the literature for creating DT-driven HMC applications. These tools vary in their application areas and were therefore used for different application problems. When selecting commercial tools, the cost of the software must also be considered, including the cost of the training needed to learn to use them. Some tools, such as the Robot Operating System (ROS), are open-source middleware and thereby offer a cost-effective communication framework for DT applications. ROS-compatible software, such as CoppeliaSim, Gazebo, RViz, Unity, and Blender, is often used as a simulation platform, although some of these are game engines rather than simulation environments [44]. Unity, the most used tool in our review, is proprietary software, but buying a licence is not mandatory for personal use; similarly, the education edition of V-REP is free. However, tools such as Tecnomatix Process Simulate or MATLAB can be costly. In some cases, custom simulation tools were also created.

The most common implementation areas for HMC applications included safety and ergonomics, maintenance, task planning, optimisation, testing, and training. Some articles, while having humans in the loop, were focused more on increasing productivity and optimisation. A variety of methods are utilised in the literature for the implementation of DTs to facilitate safe interactions of robots with human operators and optimise ergonomics during such interactions. Decision making supports task planning and allocation and gives operators autonomy. Human action recognition and prediction are also used to optimise production or ensure human safety. With the help of the simulation technologies, the authors also focused on designing and testing safe HMC workspaces before their implementation, which simplifies training and robot programming.

Choosing the right DT tools, as identified in Table 3, is crucial for implementing other key enabling technologies for HMC, such as AI and HMI technologies like extended reality (XR). However, the great variety of methods and techniques for these technologies can make choosing the right ones for particular use cases complicated. Identifying the most and least common use cases for DT technologies in the HMC domain can help organisations determine how best to leverage the technology to improve their operations and processes and begin putting humans at the centre. Therefore, the next subsections discuss enabling technologies and methods in various use cases and analyse how they enable human-centric DTs in HMC applications.

Table 3.

DT tools used in HMC applications.

4.2. Artificial Intelligence

AI is one of the main enablers of growth and adaptability in DTs and is becoming the main component of such systems [68]. Based on the literature review, we identified specific methods and tasks for different application problems, as shown in Table 4. In the literature focusing on humans, authors addressed topics such as safety, ergonomics, decision making, and training. At the same time, some authors also focused on increasing the effectiveness of HMC applications in areas where the focus on humans is less prominent, such as robot learning, assembly line reconfiguration, or optimisation.

Most applications involving human safety use deep learning for object detection or recognition, either for collision avoidance or for calculating the distance between humans and robots. Many DT applications use AI as a tool to optimise processes, focusing on task planning or decision making. Some authors have started to focus on recognising human behaviour, especially through motion detection, in order to predict the next human action, which has wide application potential. Artificial neural networks are often used for computer vision tasks, which frequently involve HMI devices such as Red Green Blue-Depth (RGB-D) cameras that gather data to train the networks for tasks such as object recognition or human motion detection, mainly to address human safety and ergonomics. Computer vision can also help with creating HDTs, as applications may involve the recognition of human facial features, expressions, poses, and gestures [23].
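As a minimal illustration of the distance-based safety logic mentioned above, the sketch below takes 3D human keypoints (for example from an RGB-D skeleton tracker) and sampled points on the robot, both assumed to be expressed in the DT's common world frame in metres, computes the minimum human–robot separation, and scales the robot's allowed speed linearly between a stop distance and a full-speed distance. The thresholds and coordinates are hypothetical placeholders; a real speed and separation monitoring setup would derive its parameters from a risk assessment and the applicable standards (such as ISO/TS 15066) rather than from fixed constants.

```python
import math

Point = tuple[float, float, float]  # (x, y, z) in metres, DT world frame (assumed)

def min_separation(human: list[Point], robot: list[Point]) -> float:
    """Smallest Euclidean distance between any human keypoint and any robot point."""
    return min(math.dist(h, r) for h in human for r in robot)

def speed_scale(distance: float, stop_dist: float = 0.5, full_dist: float = 1.5) -> float:
    """Speed factor: 0 at or below stop_dist, 1 at or above full_dist, linear between.

    The default thresholds are illustrative, not safety-rated values.
    """
    if distance <= stop_dist:
        return 0.0
    if distance >= full_dist:
        return 1.0
    return (distance - stop_dist) / (full_dist - stop_dist)

human_keypoints = [(1.0, 0.0, 1.2), (1.1, 0.2, 1.0)]  # e.g. wrist and elbow
robot_points = [(0.0, 0.0, 1.0), (0.2, 0.0, 1.1)]     # e.g. tool centre and a link sample
d = min_separation(human_keypoints, robot_points)
factor = speed_scale(d)
```

In this example, the closest pair is the wrist and the second robot sample (about 0.81 m apart), so the robot would be slowed to roughly 31% of its nominal speed.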

In [60], the authors developed a solution to monitor the operator’s ergonomics by implementing a Convolutional Neural Network (Single-Shot Detector) to detect the presence or position of the parts to be assembled, as well as the operator’s hands. The neural network model was trained on real and virtual object photos, and its accuracy increased when it was also trained on more synthetic data. In another work, decision making based on AI logic is used to derive alternative production system configurations, such as the optimal layout and task plans, to reconfigure the system online in the case of unexpected events [59].

In [69], the authors proposed using Visual Question Answering (VQA) in HMC applications to increase effectiveness and safety. VQA is a method for video understanding that combines computer vision and natural language processing algorithms, making it a multimodal method built from several AI methods. Some applications compose multiple AI modules, resulting in composable or composite AI [70]. In [54], the authors fused different human-tracking sensors and combined deep learning with semantic technologies to predict human interactions in HRC.

AI algorithms can process and analyse large volumes of data collected from sensors, machinery, and various other sources. By extracting crucial insights and identifying patterns within these data, these algorithms can help manufacturers make more informed decisions and enhance the efficiency of their operations. One advantage of AI-equipped DTs over conventional ones is their ability to better cope with the uncertainties of the environment and sensors and with the randomness and diversity of human behaviour in HMC applications [71]. Implementing AI in HMC can advance learning processes, allowing humans and machines to adapt to changing environments or requirements.

Table 4.

Artificial intelligence methods used in DT HMC applications.

| AI Method | Specific Technique | Task | Application Problem |
|---|---|---|---|
| Traditional methods | SVM [50,72] | Classification [50]; Object recognition [72] | Human skill level analysis [50]; Soft-robot tactile sensor feedback [72] |
| Heuristic methods | Search algorithms [59] | Decision making [59] | Assembly line reconfiguration and planning [59] |
| Neural networks | FFN [57]; RNN [73] | Object recognition [57]; Sequential data handling [73] | Human safety [57]; Prediction of dynamic changes [73] |
| Deep learning | 1D-CNN [74]; Mask R-CNN [75,76]; CNN [53,58,60,77,78]; PVNet Parallel network [79]; PointNet [79]; SAE [80] | Detection or recognition [53,60,74,75,76,79]; Classification [77]; Human action and motion recognition [78]; Pose estimation [58,79]; Feature extraction [73]; Anomaly detection [80] | Human safety [60,75,76,78,79,80]; Ergonomics [60]; Human action and intention understanding [60,77]; Efficiency [78,79]; Position estimation [53]; Decision making [73]; Object manipulation [74] |
| Reinforcement learning | Model-free RL [51]; TRPO [61]; PPO [61]; DDPG [61]; Q-learning [63] | Robot motion planning [51]; Robot learning [61]; Dynamic programming [63] | Training [51]; Teleoperation [51]; Robot skill learning [61]; Assembly planning optimisation [63] |
| Deep reinforcement learning | Deep Q-learning [81]; PPO [52]; SAC [52]; DDPG [64]; D-DDPG [73] | Task scheduling [81]; Decision making [81]; Training [52]; Humanoid robot arm control and motion planning; Optimisation [64,73] | Smart manufacturing [81]; Optimisation [52]; Robot learning [64]; Enhancing efficiency and adaptability [73] |
| Generative AI | Motion GAN [82] | Human motion prediction [82] | Human action prediction [82] |

4.3. Human–Machine Interaction

Since humans interact with DTs in both the physical and virtual worlds, human–computer interaction and human–machine interaction technologies should be incorporated. Like other technologies, HMI technologies are also challenged to become more human-centric. HMI technologies in DT applications are crucial for supporting various HMC applications, as they provide a means to interact with machines and their virtual counterparts. Various technologies are used to visualise data intuitively for users and can fulfil many roles. As seen in Table 5, these technologies can support operators’ safety and collaboration with machines, and AI algorithms can also be integrated into them.

Among the most prominent emerging technologies are XR technologies, which encompass augmented reality (AR), virtual reality (VR), and mixed reality (MR). AR is frequently explored in the literature for its support of human–machine collaborative applications. Thanks to its ability to overlay digital information on the real world, it is a useful tool for enhancing workers’ safety and increasing their productivity and trust in technology. AR applications are mostly deployed on mobile devices, such as phones [83], tablets [55], or glasses.
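Overlaying DT information in AR ultimately requires mapping a 3D point from the twin (for example, a robot's planned tool position) into the user's view. A minimal sketch under a pinhole-camera assumption is shown below: the point is transformed into the camera frame by a rotation `R` and translation `t`, then projected with focal lengths `fx`, `fy` and principal point `cx`, `cy`. All parameter values are hypothetical, and AR devices and frameworks normally perform this registration through their own tracking and rendering pipelines rather than hand-written code like this.

```python
from typing import Optional, Sequence

Vec3 = Sequence[float]

def project_point(p_world: Vec3, R: Sequence[Vec3], t: Vec3,
                  fx: float, fy: float, cx: float, cy: float) -> Optional[tuple[float, float]]:
    """Project a 3D world point to pixel coordinates with a pinhole model.

    R (3x3, row-major) and t map world coordinates into the camera frame:
    p_cam = R @ p_world + t. Returns None if the point is behind the camera.
    """
    # Rigid transform from the DT/world frame into the camera frame.
    x, y, z = (sum(R[i][j] * p_world[j] for j in range(3)) + t[i] for i in range(3))
    if z <= 0:
        return None  # behind the camera: nothing to draw
    # Perspective division followed by the intrinsic mapping to pixels.
    return fx * x / z + cx, fy * y / z + cy
```

With an identity rotation, zero translation, `fx = fy = 800`, and a principal point of (640, 360), a planned tool position at (0.1, -0.05, 2.0) m would be drawn at about pixel (680, 340).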

In contrast, VR provides an immersive virtual environment for users to experience, observe, and interact with virtual objects to perceive the real environment. These virtual models map the sensor data of physical products to reflect their life-cycle process [84]. VR is commonly used in design and simulation use cases, where a fully virtual world is needed to design workshops or test new configurations. In [85], VR was used to generate an industrial human-action-recognition dataset using the DT of an industrial workstation.

One common technique for enabling HMI in HMC involves RGB-D (depth) cameras, such as Microsoft Kinect, which are often used to perceive the human body and the workspace for safety and ergonomics. Using IoT and widespread connectivity, scientific articles suggest various methods, wireless technologies, and approaches for indoor localisation services, thereby enhancing the services available to users [86]. These localisation technologies include WiFi, Radio Frequency Identification (RFID) devices, Ultra-Wideband (UWB), and Bluetooth Low Energy (BLE).
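Many of these localisation services rest on two steps: converting a measured signal into a distance estimate, and combining the distances to several fixed anchors into a position. The sketch below shows one common variant, assuming a log-distance path-loss model for BLE RSSI (the reference power at 1 m and the path-loss exponent `n` are hypothetical calibration values) and 2D trilateration by linearised least squares. UWB systems would usually obtain the distances from time-of-flight measurements instead of RSSI.

```python
def rssi_to_distance(rssi_dbm: float, tx_power_1m: float = -59.0, n: float = 2.0) -> float:
    """Invert the log-distance path-loss model rssi = tx_power_1m - 10 n log10(d)."""
    return 10 ** ((tx_power_1m - rssi_dbm) / (10 * n))

def trilaterate(anchors: list[tuple[float, float]], dists: list[float]) -> tuple[float, float]:
    """Least-squares 2D position from >= 3 anchors (x, y) and their distances.

    Subtracting the first circle equation from the others yields a linear
    system A [x, y]^T = b, solved here via the 2x2 normal equations.
    """
    (x0, y0), d0 = anchors[0], dists[0]
    rows = []
    for (xi, yi), di in zip(anchors[1:], dists[1:]):
        a = (2 * (xi - x0), 2 * (yi - y0))
        b = d0**2 - di**2 + xi**2 - x0**2 + yi**2 - y0**2
        rows.append((a, b))
    # Normal equations: (A^T A) p = A^T b
    s11 = sum(a[0] * a[0] for a, _ in rows)
    s12 = sum(a[0] * a[1] for a, _ in rows)
    s22 = sum(a[1] * a[1] for a, _ in rows)
    t1 = sum(a[0] * b for a, b in rows)
    t2 = sum(a[1] * b for a, b in rows)
    det = s11 * s22 - s12 * s12
    if abs(det) < 1e-12:
        raise ValueError("anchors are collinear or too few")
    return ((s22 * t1 - s12 * t2) / det, (s11 * t2 - s12 * t1) / det)
```

With anchors at (0, 0), (4, 0), and (0, 3) m and exact distances to a worker standing at (1, 1), the function recovers (1, 1). With noisy RSSI-based distances, the least-squares formulation returns the best compromise position rather than an exact intersection.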

Natural user interfaces (NUIs) are a type of user interface design that aims to use natural human behaviours and actions for interaction rather than requiring the user to adapt to the technology. NUIs should be designed so that users can use them with little to no training [87]. Multimodal interfaces combine several modes of HMI, including NUIs, so that users are free either to choose the most convenient method for themselves or to use several at once to provide richer input for machines to process [88]. For example, the authors in [89] used a multimodal interface in which voice recognition, hand motion recognition, and body posture recognition served as the input for deep learning in collaboration scenarios.
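One simple way for a multimodal interface to combine its inputs is late fusion: each modality's recogniser outputs a probability for each candidate command, and the scores are merged using per-modality weights. The sketch below illustrates weighted late fusion with a confidence threshold. The command names, weights, and threshold are hypothetical, and this is not the method of [89], which used the modalities as input to deep learning.

```python
from typing import Optional

def fuse(modality_scores: dict[str, dict[str, float]],
         weights: dict[str, float],
         threshold: float = 0.5) -> Optional[str]:
    """Weighted late fusion of per-modality command probabilities.

    modality_scores: modality -> {command: probability}
    weights:         modality -> reliability weight (a missing modality counts as 0)
    Returns the best command, or None if its fused score is below the threshold.
    """
    # Renormalise over the modalities that actually produced an output.
    total_weight = sum(weights.get(m, 0.0) for m in modality_scores)
    if total_weight <= 0:
        return None
    fused: dict[str, float] = {}
    for modality, scores in modality_scores.items():
        w = weights.get(modality, 0.0) / total_weight
        for command, p in scores.items():
            fused[command] = fused.get(command, 0.0) + w * p
    if not fused:
        return None
    best = max(fused, key=fused.get)
    return best if fused[best] >= threshold else None

# Hypothetical recogniser outputs for one interaction step.
scores = {
    "voice":   {"stop": 0.9, "resume": 0.1},
    "gesture": {"stop": 0.6, "resume": 0.4},
    "posture": {"stop": 0.5, "resume": 0.5},
}
weights = {"voice": 0.5, "gesture": 0.3, "posture": 0.2}
command = fuse(scores, weights)
```

Because the weights are renormalised over the modalities that are present, the interface can still act if one channel drops out, for example if voice input is unusable in a noisy workshop.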

Table 5.

HMI technologies used in DT HMC applications.

| HMI Technology | Type | Specific Technique | Task | Application Problem |
|---|---|---|---|---|
| Touch interfaces | – | Tablet [55]; Phone [83] | Visual augmentation [55,83] | Safety [55,83]; HM cooperation [55] |
| Web interfaces | – | BLE tags [80] | Indoor positioning [80] | Occupational safety monitoring [80] |
| Extended reality | VR | HTC Vive [50,56,65]; HTC Vive Pro Eye [85]; Facebook Oculus [50]; Sony PlayStation VR [50]; Handheld sensors [65,85] | Training [65]; Validation [65]; Safe development [56]; Data generation [85]; Auto-labelling [85]; Interaction with virtual environment [74]; Robot operation demonstration [50] | Online shopping [74]; Human productivity and comfort [50]; Human action recognition [85] |
| | AR | HoloLens 2 [51,79,90]; Tablet [55]; Phone [83] | Robot teleoperation [51]; Visual augmentation [55,83,90]; Real-time interaction [79] | Human safety [55,79,83,90]; Intuitive human–robot interaction [51]; Productivity [79] |
| | MR | HoloLens 2 [53,75] | Visual augmentation [75]; Object manipulation [53] | Human safety [75] |
| Natural user interfaces | Gestures | HoloLens 2 [53]; Kinect [91] | Head gestures [53] | 3D object robot manipulation [53,91] |
| | Motion | Perception Neuron Pro [85]; Manus VR Prime II [54]; Xsens Awinda [54] | Motion capture [85]; Finger tracking [54]; Body joint tracking [54] | Human motion recognition [54,85] |
| | Gaze | Pupil Invisible [54]; HoloLens 2 [53] | Object focusing [54]; Target tracking [53] | Assembly task precision [54]; Interface adaptation [53] |
| | Voice | HoloLens 2 [53] | MR image capture [53] | 3D manipulation [53] |

4.4. Data Transmission, Storage, and Analysis Technologies

A data-driven digital twin can observe, react to, and adjust to changes in its environment and operating conditions. Data transmission technologies include wired and wireless transmission, both of which rely on transmission protocols. Database technologies are essential for storing the collected data for processing, analysis, and management. However, traditional database technologies struggle with the increasing volume and diversity of data from multiple sources, so big data has prompted the exploration of alternative solutions, such as distributed file storage (DFS), NoSQL databases, and NewSQL databases. This large volume of data is then preprocessed and analysed to extract useful information, either through statistical methods or through database methods, which include multidimensional data analysis and online analytical processing (OLAP) [49]. AI methods from Section 2.2, such as neural networks or deep learning, can also be used for some analytic tasks. As Table 6 shows, authors often combine multiple technologies, and many do not name specific technologies but instead refer to broad technology frameworks such as TCP/IP or cloud databases.
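To make the multidimensional (OLAP-style) analysis mentioned above more concrete, the following minimal Python sketch treats DT event logs as a small data cube and applies two classic OLAP operations: a slice (fixing one dimension) and a roll-up (summing out dimensions). The record layout, dimension names, and values are hypothetical illustrations, not data from the reviewed works, and a production system would run such queries in an OLAP engine or database rather than in plain Python.

```python
from collections import defaultdict

# Hypothetical DT event log: (workstation, shift, event_type, duration in seconds).
RECORDS = [
    ("cell_A", "morning", "robot_stop", 12.0),
    ("cell_A", "morning", "handover", 4.5),
    ("cell_A", "evening", "robot_stop", 8.0),
    ("cell_B", "morning", "handover", 5.5),
    ("cell_B", "evening", "robot_stop", 20.0),
]
DIMENSIONS = ("workstation", "shift", "event_type")


def roll_up(records, keep):
    """OLAP roll-up: aggregate event count and total duration over the
    dimensions named in `keep`; all other dimensions are summed out."""
    idx = [DIMENSIONS.index(d) for d in keep]
    cube = defaultdict(lambda: [0, 0.0])
    for rec in records:
        key = tuple(rec[i] for i in idx)
        cube[key][0] += 1          # event count
        cube[key][1] += rec[3]     # total duration
    return {k: (n, total) for k, (n, total) in cube.items()}


def slice_cube(records, dimension, value):
    """OLAP slice: keep only records whose `dimension` equals `value`."""
    i = DIMENSIONS.index(dimension)
    return [rec for rec in records if rec[i] == value]


# Total events and duration per workstation:
print(roll_up(RECORDS, ("workstation",)))
# Robot stops only, aggregated per shift:
print(roll_up(slice_cube(RECORDS, "event_type", "robot_stop"), ("shift",)))
```

The first call yields three events (24.5 s) for `cell_A` and two events (25.5 s) for `cell_B`; the second shows one robot stop in the morning shift and two (28.0 s) in the evening shift.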

Depending on the level of the DT, edge computing, fog computing, and cloud computing can be used for data transmission, storage, or analysis tasks. Cloud computing provides ubiquitous, convenient, on-demand network access to a shared pool of resources, offering high computational and storage capacity at reduced cost. Fog computing extends the cloud’s computing, storage, and networking capabilities to the edge of the network, and edge computing (EC) allows data to be processed closer to its sources [92]. As one of the enabling technologies for Industry 5.0 [4,5], EC has already found a number of applications in the literature for network operations, where the edge must be designed efficiently to ensure security, reliability, and privacy.

Since one of the main challenges of DTs is ensuring data flow between the physical and digital counterparts, task offloading is also a major research area that can improve human safety [93]. In many areas, such as healthcare, high response latency at the data centre is a critical risk [94]. Ruggeri et al. [95] proposed a solution for an HRC scenario in which a deep reinforcement learning agent observes safety and network metrics and, based on network congestion, decides which models should run on the mobile robots and which at the edge; this greatly improved the safety metrics and reduced the network latency. In task offloading, authors very often focus on Autonomous Mobile Robots (AMRs), whose limited computational hardware poses a challenge in these applications.
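The basic trade-off behind task offloading can be illustrated with a simple latency model: running a task on the robot costs its compute time on the onboard processor, whereas offloading costs the upload time, the compute time on the edge server, and a network round trip. The sketch below is a deliberately simplified, deterministic comparison under these assumptions; it is not the deep reinforcement learning approach of Ruggeri et al. [95], and the task size, clock rates, bandwidths, and deadline are hypothetical values chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Task:
    cycles: float      # CPU cycles the task needs (assumed known in advance)
    input_bits: float  # sensor data that must be uploaded if the task is offloaded


def local_latency(task: Task, robot_hz: float) -> float:
    """Latency when the task runs on the robot's own processor."""
    return task.cycles / robot_hz


def offload_latency(task: Task, edge_hz: float, uplink_bps: float, rtt_s: float) -> float:
    """Latency when offloaded: upload time + edge compute time + network round trip."""
    return task.input_bits / uplink_bps + task.cycles / edge_hz + rtt_s


def place(task: Task, robot_hz: float, edge_hz: float,
          uplink_bps: float, rtt_s: float, deadline_s: float):
    """Choose the lower-latency location and report whether it meets the deadline."""
    t_local = local_latency(task, robot_hz)
    t_edge = offload_latency(task, edge_hz, uplink_bps, rtt_s)
    where, latency = ("edge", t_edge) if t_edge < t_local else ("local", t_local)
    return where, latency, latency <= deadline_s


# Hypothetical perception task: 2 Gcycles of compute, 1 MB (8 Mbit) of input data.
task = Task(cycles=2e9, input_bits=8e6)
print(place(task, robot_hz=1.5e9, edge_hz=1e10, uplink_bps=100e6, rtt_s=0.01, deadline_s=0.5))
print(place(task, robot_hz=1.5e9, edge_hz=1e10, uplink_bps=5e6, rtt_s=0.01, deadline_s=0.5))
```

With a 100 Mbit/s uplink, offloading wins (about 0.29 s) and meets the assumed 0.5 s deadline; when the uplink is congested to 5 Mbit/s, local execution (about 1.33 s) becomes the better option but misses the deadline. This dependence on network congestion is what makes adaptive, learned offloading policies attractive.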

Fraga-Lamas et al. [96] proposed a mist/edge computing cyber–physical human-centred system (CPHS) that uses low-cost hardware to detect human proximity and avoid risky situations in industrial scenarios. The system was evaluated in a real-world scenario, where it reduced the maximum latency while preserving low computational complexity. Edge intelligence in DTs, which can improve areas such as anomaly detection, has also been explored [97].

Taking scalability into account, an edge-based twin is most valuable due to its minimal latency, especially compared with twins based on cloud and edge–cloud configurations [98]. As edge AI and AI-enabled hardware continue to evolve, such as GPU-based embedded platforms (e.g., the Nvidia Jetson series) or AI accelerators (e.g., Intel Movidius products), it becomes feasible to break down the DT of an entire factory process into smaller, modular DT processes [44].

Technologies such as Kubernetes and Docker streamline container management and workload orchestration, improving the efficiency of data handling and processing in modern computing [99]. The microservices architecture, adopted in the era of cloud computing, splits solutions into interconnected applications, which provides greater customisability, reusability, and scalability [100]. Combined with containerisation and Kubernetes orchestration, this approach significantly boosts deployment efficiency, scalability, flexibility, and reliability in contemporary DT operations and applications [101,102].

Furthermore, by incorporating the precise analysis and forecasting strengths of big data, DT-driven HMC will become more adaptive and forward-looking, offering significant improvements in efficient and accurate management [103].

Table 6.

Data transmission, storage, and analysis technologies in DT HMC applications.


| Category | Technology | Task | Application Problem |
|---|---|---|---|
| Storage | MySQL [55]; MongoDB [60]; Cloud database [80,104] | CAD, audio, and 3D model files [55]; Assembly step executions [60]; Robotic arm motion list [104]; General system data storage [80] | Human safety [60,80,104]; Productivity [60,104] |
| Data Transmission | TCP/IP [50,55,73,75]; Ethernet [55]; MQTT [97]; Cellular [80]; WiFi [55,80]; Bluetooth [80] | Physical and digital world communication [50,75]; Human–robot Android AR application [55]; Robot control movement [73]; Occupational safety system [80]; Edge intelligence anomaly detection [97] | Human safety [55,75,80]; Productivity [55]; Human skill level analysis [50]; Dynamic changes’ prediction [73]; Maintenance [97] |
| Analysis | Principal component analysis [50]; Parameter sensitivity analysis [105] | Dimension reduction [50]; Model adaptability enhancement [105] | Human safety [80]; Maintenance [105] |

5. Discussion

The growth of DT applications in HMC and the recent focus on human centricity indicate the potential for human-centric DT applications in various areas of industry. In this review paper, we identified the existing literature and research trends in the HMC domain that use the DT as a key enabling technology. We focused on different applications based on the enabling technologies identified for the Industry 5.0 era. A limitation of this study is its main focus on the WoS database and keyword search, which may have missed some work on this topic. Based on the literature reviewed, we identified several research gaps.

Firstly, human-centric applications are scarce because they have only recently emerged as a focus area, lack standardisation, and face complex societal and technical challenges. Despite this lack of standardisation, numerous applications place significant emphasis on human aspects; however, most DT applications still focus on productivity as the main goal. In HMC, where humans are part of the collaboration processes, focusing on human problems is often inevitable. DTs need to be complemented with a deep understanding of human behaviours, preferences, and limitations so that the uncertainty of human behaviour becomes less challenging to model. Future research should therefore start by analysing the impact and significance of the analysed enabling technologies and methods in implementing human-centric DTs across various HMC applications.

Secondly, HMC applications mostly focus on arm manipulators, and work involving mobile robots is lacking. Although industrial applications exist, research in industrial domains may be proprietary and not widely published for competitive reasons. At the same time, modelling mobile robots and humans in dynamic environments poses new challenges compared with stationary robots, whose environment is smaller and changes little. New case studies and experiments are therefore essential to understand the practical implications, limitations, and benefits of mobile robots in HMC across various industrial contexts.

Thirdly, the methods for synergistically integrating all enabling technologies in complex systems remain unclear. According to the Industry 5.0 document, all technologies will reveal their full potential when combined with the others to create complex systems. Therefore, a framework should be proposed in future work to integrate the analysed technologies and methods, providing a systematic approach to technology selection and combination for different use cases.

Apart from identified research gaps, the analysed enabling technologies and methods, on their own, have their limitations, which need to be addressed in future research. DTs confront several challenges, including creating accurate virtual models, the absence of a standardised framework for DT development, insufficient training programs, high development costs, and the complexity of accurately modelling human interactions. Furthermore, integrating DT with cyber–physical systems and IoT sensors poses challenges in real-time connectivity and data synchronisation. Therefore, research efforts should prioritise improving the fidelity of DT models, particularly in accurately representing human interactions and physical phenomena. Collaborative efforts between academia, industry, and policymakers will be crucial [44].

Although incorporating HMI technologies into DT applications can enhance user experience and support HMC, achieving true human-centricity in these technologies presents significant challenges. Despite their potential benefits, RGB-D (depth) cameras and other IoT devices raise privacy and data-security concerns because they frequently record sensitive details of workers’ movements and interactions; research could therefore also move towards human-centric privacy- and data-protection systems [106]. Additionally, AR and VR devices have to be designed to be inclusive of all users and to mitigate sensory overload or disorientation, particularly in complex industrial environments [107].

Similarly, an open question is how to address the main challenges in designing, implementing, and deploying fair, ethical, and trustworthy AI. These challenges can be approached through focused research questions [108] covering issues such as identifying and addressing AI biases, ensuring transparency and explainability, establishing accountability, and developing robust legal and regulatory frameworks.

Further limitations and challenges include reliability and latency issues in data transmission technologies, the scalability constraints of traditional database technologies, and the complexity of implementing edge, fog, and cloud computing solutions. Managing containerised microservices also remains complex, despite their potential to enhance deployment efficiency.

As many applications achieve better outcomes with a broader array of technologies and data, the use of fusion and multimodal solutions is expected to increase in the coming years. One of the examples is composite AI [109], or composable AI systems [110], which are crucial for the advancement of AI technology, as the modularity and composition of multiple AI models will make creating complex AI systems easier and faster. For instance, in [111], the authors proposed a composite AI model employing a Generative Adversarial Network to predict preemptive migration decisions for proactive fault tolerance in containerised edge deployments.
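The modularity argument for composite or composable AI can be illustrated with a minimal Python sketch in which independent stages are chained into one pipeline, so that any stage can be replaced without touching the others. The stages below are hypothetical rule-based stand-ins for real models (a perception model, a risk classifier, and a robot speed policy), and the distance thresholds and speed factors are illustrative assumptions rather than values from the cited works.

```python
from collections.abc import Callable
from functools import reduce

# A stage reads a shared context dictionary and returns an extended copy.
Stage = Callable[[dict], dict]


def compose(*stages: Stage) -> Stage:
    """Chain stages left to right into a single composite pipeline."""
    return lambda ctx: reduce(lambda acc, stage: stage(acc), stages, ctx)


def proximity_estimator(ctx: dict) -> dict:
    """Stand-in for a perception model: distance to the nearest detected person (m)."""
    return {**ctx, "min_distance_m": min(ctx["person_distances_m"], default=float("inf"))}


def risk_classifier(ctx: dict) -> dict:
    """Stand-in for a learned classifier: map distance to a risk level (assumed thresholds)."""
    d = ctx["min_distance_m"]
    level = "high" if d < 0.5 else "medium" if d < 1.5 else "low"
    return {**ctx, "risk": level}


def speed_policy(ctx: dict) -> dict:
    """Stand-in for a control policy: scale the robot speed by the risk level."""
    scale = {"high": 0.0, "medium": 0.3, "low": 1.0}[ctx["risk"]]
    return {**ctx, "speed_scale": scale}


safety_pipeline = compose(proximity_estimator, risk_classifier, speed_policy)
print(safety_pipeline({"person_distances_m": [2.4, 1.1]}))
```

Here the nearest person is 1.1 m away, so the pipeline classifies the situation as medium risk and returns a speed scale of 0.3. Swapping `risk_classifier` for a trained model only requires that the new stage accept and return the same context dictionary.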

For advancing video understanding techniques, the authors in [112] developed a Human–Robot Shared Assembly Taxonomy (HR-SAT) for HRC to represent industrial assembly scenarios and human procedural knowledge acquisition, which can be further used for various AI tasks, such as human action recognition and prediction or human–robot knowledge transfer. Federated learning [113] offers an effective method for leveraging the growing processing capabilities of edge devices and vast, varied datasets to develop machine learning models while maintaining data privacy, which could solve some ethics problems and improve human trust in collaboration with machines.
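The core idea of federated learning can be shown with a minimal federated averaging (FedAvg) sketch: each client trains on its own private data, only the resulting model parameters are sent to a server, and the server averages them weighted by each client’s number of samples. For brevity, this sketch assumes a single-parameter linear model y ≈ w·x trained with plain gradient descent, and the two client datasets are hypothetical; real deployments would use multi-parameter models and a federated learning framework.

```python
# Hypothetical private datasets held by two edge devices: (x, y) pairs drawn from y = 2x.
CLIENT_DATA = [
    [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)],
    [(4.0, 8.0), (5.0, 10.0)],
]


def local_update(w, data, lr=0.01, steps=5):
    """A few gradient-descent steps on the client's own mean squared error for y ≈ w * x."""
    for _ in range(steps):
        grad = sum(2.0 * x * (w * x - y) for x, y in data) / len(data)
        w -= lr * grad
    return w


def fed_avg(client_weights, client_sizes):
    """Server step: average client models weighted by their number of samples."""
    total = sum(client_sizes)
    return sum(w * n for w, n in zip(client_weights, client_sizes)) / total


def train(rounds=20, w_global=0.0):
    sizes = [len(d) for d in CLIENT_DATA]
    for _ in range(rounds):
        # Only model parameters leave the clients; raw (x, y) samples never do.
        local_models = [local_update(w_global, d) for d in CLIENT_DATA]
        w_global = fed_avg(local_models, sizes)
    return w_global


print(train())  # converges towards the true slope of 2.0
```

Because the raw samples stay on each device and only the scalar `w` is exchanged, the server never observes individual workers’ data, which is the privacy property referred to above.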

HMC applications involving mobile robots, such as drones or ground AMRs, could also be used in the future for their flexibility, manoeuvrability, and adaptability. However, safety is harder to ensure in applications involving mobile robots, as their workspace is not confined to a small area, as it usually is for cobots in assembly cells. For safety applications involving mobile robots, solutions such as AR could be used to visualise the path of mobile robots [83], which could also increase human trust when sharing a workspace and collaborating on the same tasks. Exploring NUIs for multimodal interaction with mobile robots, such as drones, may also reveal future collaborative applications [114].

Localisation and communication technologies such as Visible Light Communication (VLC) and Visible Light Positioning (VLP) [115] are also promising for enabling human-centric HMI in future DT applications, particularly where other technologies are limiting or undesirable.

6. Conclusions

This research presents a comprehensive literature review focused on the importance of developing human-centric HMC applications based on DTs and their enabling technologies. It examines the specific technologies and methods reported in the literature for each technology category. Our focus was on identifying common use cases for DT technologies in the HMC domain, aiming to guide organisations in optimally leveraging these technologies to enhance operations and processes while prioritising human-centric approaches. Despite its importance as a key component of future synergistic HMC systems, the DT still lacks extensive literature on human-centric applications. This gap arises partly from the absence of standardised frameworks for developing such applications. A significant expansion of research on these topics is anticipated in the coming years, focusing on addressing the main challenges and exploring enabling technologies and methods.

Author Contributions

Conceptualisation and methodology, I.Z. and E.K.; formal analysis and supervision, I.Z. and E.K.; writing—original draft preparation, M.K.; writing—review and editing, M.K., E.K., C.L. and I.Z.; funding acquisition and project administration, I.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Slovak Scientific Grant Agency by the project EDge-Enabled intelligeNt systems (EDEN), project number VEGA 1/0480/22.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analysis, or interpretation of the data; in the writing of the manuscript; or in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| AI | artificial intelligence |
| AMR | Autonomous Mobile Robot |
| AR | augmented reality |
| CDT | Cognitive Digital Twin |
| DT | digital twin |
| EC | edge computing |
| HMC | human–machine collaboration |
| HRC | human–robot collaboration |
| HMI | human–machine interaction |
| HDT | human digital twin |
| IoT | Internet of Things |
| MR | mixed reality |
| NUI | natural user interface |
| ROS | Robot Operating System |
| VR | virtual reality |
| VQA | Visual Question Answering |
| WoS | Web of Science |
| XR | extended reality |

References

- Semeraro, C.; Lezoche, M.; Panetto, H.; Dassisti, M. Digital twin paradigm: A systematic literature review. Comput. Ind. 2021, 130, 103469.

- Directorate-General for Research and Innovation (European Commission); Breque, M.; De Nul, L.; Petridis, A. Industry 5.0—Towards a Sustainable, Human-Centric and Resilient European Industry; Publications Office of the European Union: Luxembourg, 2021.

- Lu, Y.; Zheng, H.; Chand, S.; Xia, W.; Liu, Z.; Xu, X.; Wang, L.; Qin, Z.; Bao, J. Outlook on human-centric manufacturing towards Industry 5.0. J. Manuf. Syst. 2022, 62, 612–627.

- Directorate-General for Research and Innovation (European Commission); Müller, J. Enabling Technologies for Industry 5.0—Results of a Workshop with Europe’s Technology Leaders; Publications Office of the European Union: Luxembourg, 2020.

- Maddikunta, P.K.R.; Pham, Q.V.; Prabadevi, B.; Deepa, N.; Dev, K.; Gadekallu, T.R.; Ruby, R.; Liyanage, M. Industry 5.0: A survey on enabling technologies and potential applications. J. Ind. Inf. Integr. 2022, 26, 100257.

- Adel, A. Future of industry 5.0 in society: Human-centric solutions, challenges and prospective research areas. J. Cloud Comput. 2022, 11, 40.

- Akundi, A.; Euresti, D.; Luna, S.; Ankobiah, W.; Lopes, A.; Edinbarough, I. State of Industry 5.0—Analysis and identification of current research trends. Appl. Syst. Innov. 2022, 5, 27.

- Boston Consulting Group. Industry 4.0. 2023. Available online: https://www.bcg.com/capabilities/manufacturing/industry-4.0 (accessed on 15 February 2024).

- Xu, X.; Lu, Y.; Vogel-Heuser, B.; Wang, L. Industry 4.0 and Industry 5.0—Inception, conception and perception. J. Manuf. Syst. 2021, 61, 530–535.

- Leng, J.; Sha, W.; Wang, B.; Zheng, P.; Zhuang, C.; Liu, Q.; Wuest, T.; Mourtzis, D.; Wang, L. Industry 5.0: Prospect and retrospect. J. Manuf. Syst. 2022, 65, 279–295.

- Romero, D.; Bernus, P.; Noran, O.; Stahre, J.; Fast-Berglund, Å. The operator 4.0: Human cyber-physical systems & adaptive automation towards human-automation symbiosis work systems. In Proceedings of the Advances in Production Management Systems. Initiatives for a Sustainable World: IFIP WG 5.7 International Conference, APMS 2016, Iguassu Falls, Brazil, 3–7 September 2016; Revised Selected Papers. Springer: Cham, Switzerland, 2016; pp. 677–686.

- Gladysz, B.; Tran, T.A.; Romero, D.; van Erp, T.; Abonyi, J.; Ruppert, T. Current development on the Operator 4.0 and transition towards the Operator 5.0: A systematic literature review in light of Industry 5.0. J. Manuf. Syst. 2023, 70, 160–185.

- Wang, B.; Zheng, P.; Yin, Y.; Shih, A.; Wang, L. Toward human-centric smart manufacturing: A human-cyber-physical systems (HCPS) perspective. J. Manuf. Syst. 2022, 63, 471–490.

- Wang, L.; Gao, R.; Váncza, J.; Krüger, J.; Wang, X.V.; Makris, S.; Chryssolouris, G. Symbiotic human-robot collaborative assembly. CIRP Ann. 2019, 68, 701–726.

- Villani, V.; Pini, F.; Leali, F.; Secchi, C. Survey on human–robot collaboration in industrial settings: Safety, intuitive interfaces and applications. Mechatronics 2018, 55, 248–266.

- Kolbeinsson, A.; Lagerstedt, E.; Lindblom, J. Foundation for a classification of collaboration levels for human-robot cooperation in manufacturing. Prod. Manuf. Res. 2019, 7, 448–471.

- Magrini, E.; Ferraguti, F.; Ronga, A.J.; Pini, F.; De Luca, A.; Leali, F. Human-robot coexistence and interaction in open industrial cells. Robot. Comput.-Integr. Manuf. 2020, 61, 101846.

- Nahavandi, S. Industry 5.0—A human-centric solution. Sustainability 2019, 11, 4371.

- Simões, A.C.; Pinto, A.; Santos, J.; Pinheiro, S.; Romero, D. Designing human-robot collaboration (HRC) workspaces in industrial settings: A systematic literature review. J. Manuf. Syst. 2022, 62, 28–43.

- Xiong, W.; Fan, H.; Ma, L.; Wang, C. Challenges of human–machine collaboration in risky decision-making. Front. Eng. Manag. 2022, 9, 89–103.

- Othman, U.; Yang, E. Human–robot collaborations in smart manufacturing environments: Review and outlook. Sensors 2023, 23, 5663.

- Malik, A.A.; Bilberg, A. Digital twins of human robot collaboration in a production setting. Procedia Manuf. 2018, 17, 278–285.

- Asad, U.; Khan, M.; Khalid, A.; Lughmani, W.A. Human-Centric Digital Twins in Industry: A Comprehensive Review of Enabling Technologies and Implementation Strategies. Sensors 2023, 23, 3938.

- Zheng, X.; Lu, J.; Kiritsis, D. The emergence of cognitive digital twin: Vision, challenges and opportunities. Int. J. Prod. Res. 2022, 60, 7610–7632.

- Zhang, N.; Bahsoon, R.; Theodoropoulos, G. Towards engineering cognitive digital twins with self-awareness. In Proceedings of the 2020 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Toronto, ON, Canada, 11–14 October 2020; IEEE: Piscataway, NJ, USA, 2020; p. 3891.

- Al Faruque, M.A.; Muthirayan, D.; Yu, S.Y.; Khargonekar, P.P. Cognitive digital twin for manufacturing systems. In Proceedings of the 2021 Design, Automation & Test in Europe Conference & Exhibition (DATE), Virtual, 1–5 February 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 440–445.

- Shi, Y.; Shen, W.; Wang, L.; Longo, F.; Nicoletti, L.; Padovano, A. A cognitive digital twins framework for human-robot collaboration. Procedia Comput. Sci. 2022, 200, 1867–1874.

- Umeda, Y.; Ota, J.; Kojima, F.; Saito, M.; Matsuzawa, H.; Sukekawa, T.; Takeuchi, A.; Makida, K.; Shirafuji, S. Development of an education program for digital manufacturing system engineers based on ‘Digital Triplet’ concept. Procedia Manuf. 2019, 31, 363–369.

- Lu, Y.; Liu, C.; Wang, K.I.-K.; Huang, H.; Xu, X. Digital Twin-driven smart manufacturing: Connotation, reference model, applications and research issues. Robot. Comput.-Integr. Manuf. 2020, 61, 101837.

- Barricelli, B.R.; Casiraghi, E.; Fogli, D. A survey on digital twin: Definitions, characteristics, applications, and design implications. IEEE Access 2019, 7, 167653–167671.

- Yin, Y.; Zheng, P.; Li, C.; Wang, L. A state-of-the-art survey on Augmented Reality-assisted Digital Twin for futuristic human-centric industry transformation. Robot. Comput.-Integr. Manuf. 2023, 81, 102515.

- Mazumder, A.; Sahed, M.; Tasneem, Z.; Das, P.; Badal, F.; Ali, M.; Ahamed, M.; Abhi, S.; Sarker, S.; Das, S.; et al. Towards next generation digital twin in robotics: Trends, scopes, challenges, and future. Heliyon 2023, 9, e13359.

- Zhang, C.; Wang, Z.; Zhou, G.; Chang, F.; Ma, D.; Jing, Y.; Cheng, W.; Ding, K.; Zhao, D. Towards new-generation human-centric smart manufacturing in Industry 5.0: A systematic review. Adv. Eng. Inform. 2023, 57, 102121.

- Wang, B.; Zhou, H.; Li, X.; Yang, G.; Zheng, P.; Song, C.; Yuan, Y.; Wuest, T.; Yang, H.; Wang, L. Human Digital Twin in the context of Industry 5.0. Robot. Comput.-Integr. Manuf. 2024, 85, 102626.

- Hu, Z.; Lou, S.; Xing, Y.; Wang, X.; Cao, D.; Lv, C. Review and perspectives on driver digital twin and its enabling technologies for intelligent vehicles. IEEE Trans. Intell. Veh. 2022, 7, 417–440.

- Park, J.S.; Lee, D.G.; Jimenez, J.A.; Lee, S.J.; Kim, J.W. Human-Focused Digital Twin Applications for Occupational Safety and Health in Workplaces: A Brief Survey and Research Directions. Appl. Sci. 2023, 13, 4598.

- Elbasheer, M.; Longo, F.; Mirabelli, G.; Nicoletti, L.; Padovano, A.; Solina, V. Shaping the role of the digital twins for human-robot dyad: Connotations, scenarios, and future perspectives. IET Collab. Intell. Manuf. 2023, 5, e12066.

- Guruswamy, S.; Pojić, M.; Subramanian, J.; Mastilović, J.; Sarang, S.; Subbanagounder, A.; Stojanović, G.; Jeoti, V. Toward better food security using concepts from industry 5.0. Sensors 2022, 22, 8377.

- Kaur, D.P.; Singh, N.P.; Banerjee, B. A review of platforms for simulating embodied agents in 3D virtual environments. Artif. Intell. Rev. 2023, 56, 3711–3753.

- Inamura, T. Digital Twin of Experience for Human–Robot Collaboration through Virtual Reality. Int. J. Autom. Technol. 2023, 17, 284–291.

- Lehtola, V.V.; Koeva, M.; Elberink, S.O.; Raposo, P.; Virtanen, J.P.; Vahdatikhaki, F.; Borsci, S. Digital twin of a city: Review of technology serving city needs. Int. J. Appl. Earth Obs. Geoinf. 2022, 114, 102915.

- Feddoul, Y.; Ragot, N.; Duval, F.; Havard, V.; Baudry, D.; Assila, A. Exploring human-machine collaboration in industry: A systematic literature review of digital twin and robotics interfaced with extended reality technologies. Int. J. Adv. Manuf. Technol. 2023, 129, 1917–1932.

- Falkowski, P.; Osiak, T.; Wilk, J.; Prokopiuk, N.; Leczkowski, B.; Pilat, Z.; Rzymkowski, C. Study on the Applicability of Digital Twins for Home Remote Motor Rehabilitation. Sensors 2023, 23, 911.

- Ramasubramanian, A.K.; Mathew, R.; Kelly, M.; Hargaden, V.; Papakostas, N. Digital twin for human–robot collaboration in manufacturing: Review and outlook. Appl. Sci. 2022, 12, 4811.

- Wilhelm, J.; Petzoldt, C.; Beinke, T.; Freitag, M. Review of digital twin-based interaction in smart manufacturing: Enabling cyber-physical systems for human-machine interaction. Int. J. Comput. Integr. Manuf. 2021, 34, 1031–1048.

- Lv, Z. Digital Twins in Industry 5.0. Research 2023, 6, 0071.

- Agnusdei, G.P.; Elia, V.; Gnoni, M.G. Is digital twin technology supporting safety management? A bibliometric and systematic review. Appl. Sci. 2021, 11, 2767.

- Bhattacharya, M.; Penica, M.; O’Connell, E.; Southern, M.; Hayes, M. Human-in-Loop: A Review of Smart Manufacturing Deployments. Systems 2023, 11, 35.

- Qi, Q.; Tao, F.; Hu, T.; Anwer, N.; Liu, A.; Wei, Y.; Wang, L.; Nee, A. Enabling technologies and tools for digital twin. J. Manuf. Syst. 2021, 58, 3–21.

- Wang, Q.; Jiao, W.; Wang, P.; Zhang, Y. Digital twin for human-robot interactive welding and welder behavior analysis. IEEE/CAA J. Autom. Sin. 2020, 8, 334–343.

- Li, C.; Zheng, P.; Li, S.; Pang, Y.; Lee, C.K. AR-assisted digital twin-enabled robot collaborative manufacturing system with human-in-the-loop. Robot. Comput.-Integr. Manuf. 2022, 76, 102321.

- Matulis, M.; Harvey, C. A robot arm digital twin utilising reinforcement learning. Comput. Graph. 2021, 95, 106–114.

- Park, K.B.; Choi, S.H.; Lee, J.Y.; Ghasemi, Y.; Mohammed, M.; Jeong, H. Hands-free human–robot interaction using multimodal gestures and deep learning in wearable mixed reality. IEEE Access 2021, 9, 55448–55464.

- Tuli, T.B.; Kohl, L.; Chala, S.A.; Manns, M.; Ansari, F. Knowledge-based digital twin for predicting interactions in human-robot collaboration. In Proceedings of the 2021 26th IEEE International Conference on Emerging Technologies and Factory Automation (ETFA), Vasteras, Sweden, 7–10 September 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–8.

- Michalos, G.; Karagiannis, P.; Makris, S.; Tokçalar, Ö.; Chryssolouris, G. Augmented reality (AR) applications for supporting human-robot interactive cooperation. Procedia CIRP 2016, 41, 370–375.

- Oyekan, J.O.; Hutabarat, W.; Tiwari, A.; Grech, R.; Aung, M.H.; Mariani, M.P.; López-Dávalos, L.; Ricaud, T.; Singh, S.; Dupuis, C. The effectiveness of virtual environments in developing collaborative strategies between industrial robots and humans. Robot. Comput.-Integr. Manuf. 2019, 55, 41–54.

- Dröder, K.; Bobka, P.; Germann, T.; Gabriel, F.; Dietrich, F. A machine learning-enhanced digital twin approach for human-robot-collaboration. Procedia CIRP 2018, 76, 187–192.

- Lee, H.; Kim, S.D.; Al Amin, M.A.U. Control framework for collaborative robot using imitation learning-based teleoperation from human digital twin to robot digital twin. Mechatronics 2022, 85, 102833.

- Kousi, N.; Gkournelos, C.; Aivaliotis, S.; Lotsaris, K.; Bavelos, A.C.; Baris, P.; Michalos, G.; Makris, S. Digital twin for designing and reconfiguring human–robot collaborative assembly lines. Appl. Sci. 2021, 11, 4620.

- Dimitropoulos, N.; Togias, T.; Zacharaki, N.; Michalos, G.; Makris, S. Seamless human–robot collaborative assembly using artificial intelligence and wearable devices. Appl. Sci. 2021, 11, 5699.

- Liang, C.J.; Kamat, V.R.; Menassa, C.C. Teaching robots to perform quasi-repetitive construction tasks through human demonstration. Autom. Constr. 2020, 120, 103370.

- Kousi, N.; Gkournelos, C.; Aivaliotis, S.; Giannoulis, C.; Michalos, G.; Makris, S. Digital twin for adaptation of robots’ behavior in flexible robotic assembly lines. Procedia Manuf. 2019, 28, 121–126.

- De Winter, J.; El Makrini, I.; Van de Perre, G.; Nowé, A.; Verstraten, T.; Vanderborght, B. Autonomous assembly planning of demonstrated skills with reinforcement learning in simulation. Auton. Robot. 2021, 45, 1097–1110.

- Liu, C.; Gao, J.; Bi, Y.; Shi, X.; Tian, D. A multitasking-oriented robot arm motion planning scheme based on deep reinforcement learning and twin synchro-control. Sensors 2020, 20, 3515. [Google Scholar] [CrossRef]

- Malik, A.A.; Masood, T.; Bilberg, A. Virtual reality in manufacturing: Immersive and collaborative artificial-reality in design of human-robot workspace. Int. J. Comput. Integr. Manuf. 2020, 33, 22–37. [Google Scholar] [CrossRef]

- Bilberg, A.; Malik, A.A. Digital twin driven human–robot collaborative assembly. CIRP Ann. 2019, 68, 499–502. [Google Scholar] [CrossRef]

- Malik, A.A.; Brem, A. Digital twins for collaborative robots: A case study in human-robot interaction. Robot. Comput.-Integr. Manuf. 2021, 68, 102092. [Google Scholar] [CrossRef]

- Fuller, A.; Fan, Z.; Day, C.; Barlow, C. Digital twin: Enabling technologies, challenges and open research. IEEE Access 2020, 8, 108952–108971. [Google Scholar] [CrossRef]

- Wang, T.; Li, J.; Kong, Z.; Liu, X.; Snoussi, H.; Lv, H. Digital twin improved via visual question answering for vision-language interactive mode in human–machine collaboration. J. Manuf. Syst. 2021, 58, 261–269. [Google Scholar] [CrossRef]

- Baranyi, G.; Dos Santos Melício, B.C.; Gaál, Z.; Hajder, L.; Simonyi, A.; Sindely, D.; Skaf, J.; Dušek, O.; Nekvinda, T.; Lőrincz, A. AI Technologies for Machine Supervision and Help in a Rehabilitation Scenario. Multimodal Technol. Interact. 2022, 6, 48. [Google Scholar] [CrossRef]

- Huang, Z.; Shen, Y.; Li, J.; Fey, M.; Brecher, C. A survey on AI-driven digital twins in industry 4.0: Smart manufacturing and advanced robotics. Sensors 2021, 21, 6340. [Google Scholar] [CrossRef] [PubMed]

- Jin, T.; Sun, Z.; Li, L.; Zhang, Q.; Zhu, M.; Zhang, Z.; Yuan, G.; Chen, T.; Tian, Y.; Hou, X.; et al. Triboelectric nanogenerator sensors for soft robotics aiming at digital twin applications. Nat. Commun. 2020, 11, 5381.

- Lv, Q.; Zhang, R.; Sun, X.; Lu, Y.; Bao, J. A digital twin-driven human-robot collaborative assembly approach in the wake of COVID-19. J. Manuf. Syst. 2021, 60, 837–851.

- Sun, Z.; Zhu, M.; Zhang, Z.; Chen, Z.; Shi, Q.; Shan, X.; Yeow, R.C.H.; Lee, C. Artificial Intelligence of Things (AIoT) enabled virtual shop applications using self-powered sensor enhanced soft robotic manipulator. Adv. Sci. 2021, 8, 2100230.

- Choi, S.H.; Park, K.B.; Roh, D.H.; Lee, J.Y.; Mohammed, M.; Ghasemi, Y.; Jeong, H. An integrated mixed reality system for safety-aware human-robot collaboration using deep learning and digital twin generation. Robot. Comput.-Integr. Manuf. 2022, 73, 102258.

- Hata, A.; Inam, R.; Raizer, K.; Wang, S.; Cao, E. AI-based safety analysis for collaborative mobile robots. In Proceedings of the 2019 24th IEEE International Conference on Emerging Technologies and Factory Automation (ETFA), Zaragoza, Spain, 10–13 September 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1722–1729.

- Laamarti, F.; Badawi, H.F.; Ding, Y.; Arafsha, F.; Hafidh, B.; El Saddik, A. An ISO/IEEE 11073 standardized digital twin framework for health and well-being in smart cities. IEEE Access 2020, 8, 105950–105961.

- Wang, T.; Li, J.; Deng, Y.; Wang, C.; Snoussi, H.; Tao, F. Digital twin for human-machine interaction with convolutional neural network. Int. J. Comput. Integr. Manuf. 2021, 34, 888–897.

- Zhang, C.; Zhou, G.; Ma, D.; Wang, R.; Xiao, J.; Zhao, D. A deep learning-enabled human-cyber-physical fusion method towards human-robot collaborative assembly. Robot. Comput.-Integr. Manuf. 2023, 83, 102571.

- Zhan, X.; Wu, W.; Shen, L.; Liao, W.; Zhao, Z.; Xia, J. Industrial internet of things and unsupervised deep learning enabled real-time occupational safety monitoring in cold storage warehouse. Saf. Sci. 2022, 152, 105766.

- Xia, K.; Sacco, C.; Kirkpatrick, M.; Saidy, C.; Nguyen, L.; Kircaliali, A.; Harik, R. A digital twin to train deep reinforcement learning agent for smart manufacturing plants: Environment, interfaces and intelligence. J. Manuf. Syst. 2021, 58, 210–230.

- Gui, L.Y.; Zhang, K.; Wang, Y.X.; Liang, X.; Moura, J.M.; Veloso, M. Teaching robots to predict human motion. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 562–567.

- Papcun, P.; Cabadaj, J.; Kajati, E.; Romero, D.; Landryova, L.; Vascak, J.; Zolotova, I. Augmented reality for humans-robots interaction in dynamic slotting “chaotic storage” smart warehouses. In Proceedings of the Advances in Production Management Systems. Production Management for the Factory of the Future: IFIP WG 5.7 International Conference, APMS 2019, Austin, TX, USA, 1–5 September 2019; Proceedings, Part I. Springer: Cham, Switzerland, 2019; pp. 633–641.

- Zhang, Z.; Wen, F.; Sun, Z.; Guo, X.; He, T.; Lee, C. Artificial intelligence-enabled sensing technologies in the 5G/internet of things era: From virtual reality/augmented reality to the digital twin. Adv. Intell. Syst. 2022, 4, 2100228.

- Dallel, M.; Havard, V.; Dupuis, Y.; Baudry, D. Digital twin of an industrial workstation: A novel method of an auto-labeled data generator using virtual reality for human action recognition in the context of human–robot collaboration. Eng. Appl. Artif. Intell. 2023, 118, 105655.

- Zafari, F.; Gkelias, A.; Leung, K.K. A survey of indoor localization systems and technologies. IEEE Commun. Surv. Tutor. 2019, 21, 2568–2599.

- Steinberg, G. Natural user interfaces. In Proceedings of the ACM SIGCHI Conference on Human Factors in Computing Systems, Austin, TX, USA, 5–10 May 2012.

- Karpov, A.; Yusupov, R. Multimodal interfaces of human–computer interaction. Her. Russ. Acad. Sci. 2018, 88, 67–74.

- Liu, H.; Fang, T.; Zhou, T.; Wang, Y.; Wang, L. Deep learning-based multimodal control interface for human-robot collaboration. Procedia CIRP 2018, 72, 3–8.

- Li, C.; Zheng, P.; Yin, Y.; Pang, Y.M.; Huo, S. An AR-assisted Deep Reinforcement Learning-based approach towards mutual-cognitive safe human-robot interaction. Robot. Comput.-Integr. Manuf. 2023, 80, 102471.

- Horváth, G.; Erdős, G. Gesture control of cyber physical systems. Procedia CIRP 2017, 63, 184–188.

- Qi, Q.; Zhao, D.; Liao, T.W.; Tao, F. Modeling of cyber-physical systems and digital twin based on edge computing, fog computing and cloud computing towards smart manufacturing. In Proceedings of the International Manufacturing Science and Engineering Conference. American Society of Mechanical Engineers, College Station, TX, USA, 18–22 June 2018; Volume 51357, p. V001T05A018.

- Urbaniak, D.; Rosell, J.; Suárez, R. Edge Computing in Autonomous and Collaborative Assembly Lines. In Proceedings of the 2022 IEEE 27th International Conference on Emerging Technologies and Factory Automation (ETFA), Stuttgart, Germany, 6–9 September 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–4.

- Wan, S.; Gu, Z.; Ni, Q. Cognitive computing and wireless communications on the edge for healthcare service robots. Comput. Commun. 2020, 149, 99–106.

- Ruggeri, F.; Terra, A.; Hata, A.; Inam, R.; Leite, I. Safety-based Dynamic Task Offloading for Human-Robot Collaboration using Deep Reinforcement Learning. In Proceedings of the 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Kyoto, Japan, 23–27 October 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 2119–2126.

- Fraga-Lamas, P.; Barros, D.; Lopes, S.I.; Fernández-Caramés, T.M. Mist and Edge Computing Cyber-Physical Human-Centered Systems for Industry 5.0: A Cost-Effective IoT Thermal Imaging Safety System. Sensors 2022, 22, 8500.

- Huang, H.; Yang, L.; Wang, Y.; Xu, X.; Lu, Y. Digital twin-driven online anomaly detection for an automation system based on edge intelligence. J. Manuf. Syst. 2021, 59, 138–150.

- Khan, L.U.; Saad, W.; Niyato, D.; Han, Z.; Hong, C.S. Digital-twin-enabled 6G: Vision, architectural trends, and future directions. IEEE Commun. Mag. 2022, 60, 74–80.

- Casalicchio, E. Container orchestration: A survey. In Systems Modeling: Methodologies and Tools; Springer: Cham, Switzerland, 2019; pp. 221–235.

- De Lauretis, L. From monolithic architecture to microservices architecture. In Proceedings of the 2019 IEEE International Symposium on Software Reliability Engineering Workshops (ISSREW), Berlin, Germany, 27–30 October 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 93–96.

- Costa, J.; Matos, R.; Araujo, J.; Li, J.; Choi, E.; Nguyen, T.A.; Lee, J.W.; Min, D. Software aging effects on kubernetes in container orchestration systems for digital twin cloud infrastructures of urban air mobility. Drones 2023, 7, 35.

- Costantini, A.; Di Modica, G.; Ahouangonou, J.C.; Duma, D.C.; Martelli, B.; Galletti, M.; Antonacci, M.; Nehls, D.; Bellavista, P.; Delamarre, C.; et al. IoTwins: Toward implementation of distributed digital twins in industry 4.0 settings. Computers 2022, 11, 67.

- Qi, Q.; Tao, F. Digital twin and big data towards smart manufacturing and industry 4.0: 360 degree comparison. IEEE Access 2018, 6, 3585–3593.

- Mourtzis, D.; Angelopoulos, J.; Panopoulos, N. Closed-Loop Robotic Arm Manipulation Based on Mixed Reality. Appl. Sci. 2022, 12, 2972.

- Wang, J.; Ye, L.; Gao, R.X.; Li, C.; Zhang, L. Digital Twin for rotating machinery fault diagnosis in smart manufacturing. Int. J. Prod. Res. 2019, 57, 3920–3934.

- Human, S.; Alt, R.; Habibnia, H.; Neumann, G. Human-centric personal data protection and consenting assistant systems: Towards a sustainable Digital Economy. In Proceedings of the 55th Hawaii International Conference on System Sciences, Maui, HI, USA, 4–7 January 2022.

- Lutz, R.R. Safe-AR: Reducing risk while augmenting reality. In Proceedings of the 2018 IEEE 29th International Symposium on Software Reliability Engineering (ISSRE), Memphis, TN, USA, 15–18 October 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 70–75.

- Robert, L.; Bansal, G.; Lutge, C. ICIS 2019 SIGHCI workshop panel report: Human computer interaction challenges and opportunities for fair, trustworthy and ethical artificial intelligence. AIS Trans. Hum.-Comput. Interact. 2020, 12, 96–108.

- Gartner. What’s New in Artificial Intelligence from the 2023 Gartner Hype Cycle. Available online: https://www.gartner.com/en/articles/what-s-new-in-artificial-intelligence-from-the-2023-gartner-hype-cycle (accessed on 15 February 2024).

- Stoica, I.; Song, D.; Popa, R.A.; Patterson, D.; Mahoney, M.W.; Katz, R.; Joseph, A.D.; Jordan, M.; Hellerstein, J.M.; Gonzalez, J.E.; et al. A Berkeley view of systems challenges for AI. arXiv 2017, arXiv:1712.05855.

- Tuli, S.; Casale, G.; Jennings, N.R. PreGAN: Preemptive migration prediction network for proactive fault-tolerant edge computing. In Proceedings of the IEEE INFOCOM 2022-IEEE Conference on Computer Communications, Virtual, 2–5 May 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 670–679.

- Zheng, H.; Lee, R.; Lu, Y. HA-ViD: A Human Assembly Video Dataset for Comprehensive Assembly Knowledge Understanding. arXiv 2023, arXiv:2307.05721.

- Brecko, A.; Kajati, E.; Koziorek, J.; Zolotova, I. Federated learning for edge computing: A survey. Appl. Sci. 2022, 12, 9124.

- Fernandez, R.A.S.; Sanchez-Lopez, J.L.; Sampedro, C.; Bavle, H.; Molina, M.; Campoy, P. Natural user interfaces for human-drone multi-modal interaction. In Proceedings of the 2016 International Conference on Unmanned Aircraft Systems (ICUAS), Arlington, VA, USA, 7–10 June 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 1013–1022.

- Danys, L.; Zolotova, I.; Romero, D.; Papcun, P.; Kajati, E.; Jaros, R.; Koudelka, P.; Koziorek, J.; Martinek, R. Visible Light Communication and localization: A study on tracking solutions for Industry 4.0 and the Operator 4.0. J. Manuf. Syst. 2022, 64, 535–545.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).