SonicGuard Sensor—A Multichannel Acoustic Sensor for Long-Term Monitoring of Abdominal Sounds Examined through a Qualification Study

Abstract

1. Introduction

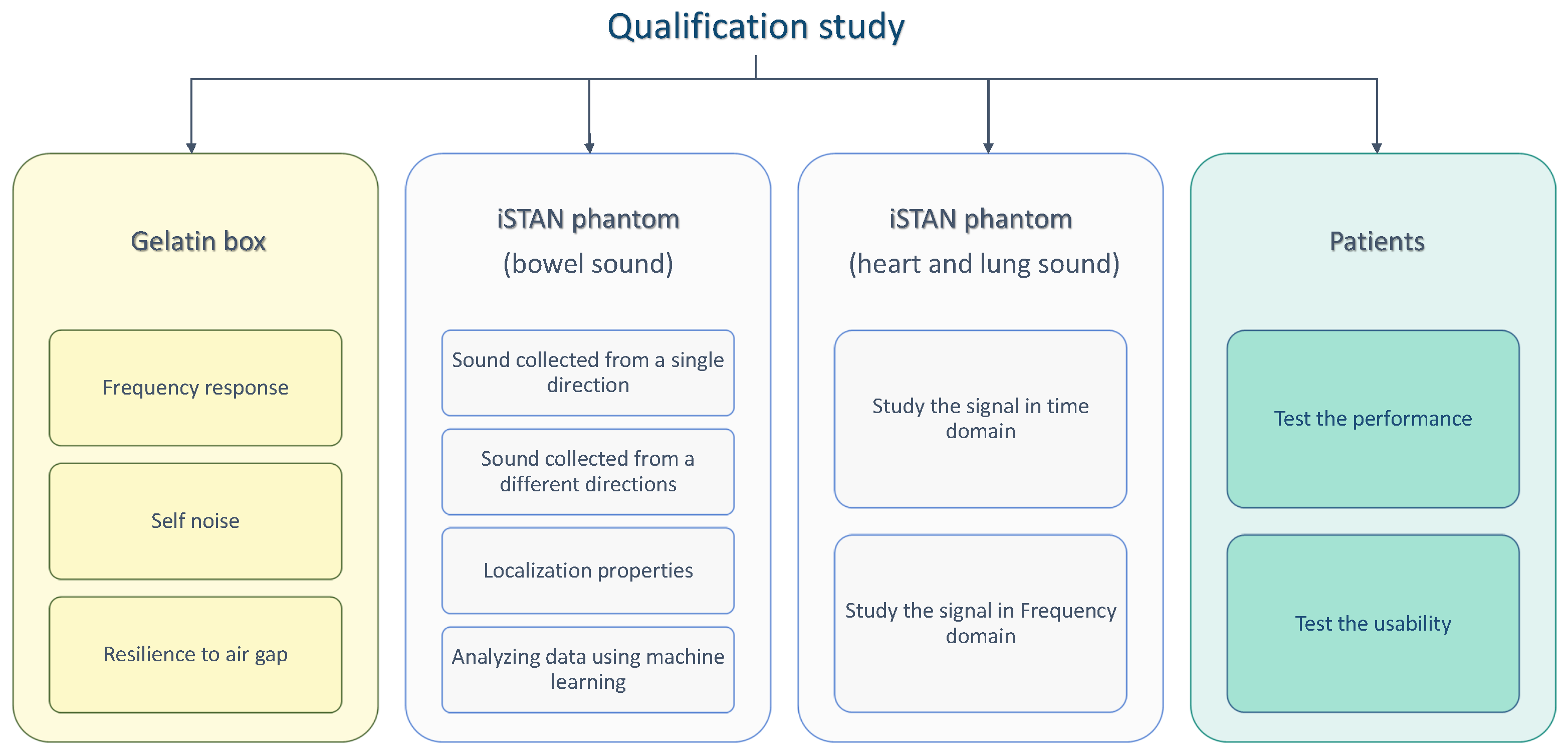

2. Materials and Methods

2.1. Body Sound Audio Sensors

2.2. Experimental Setup

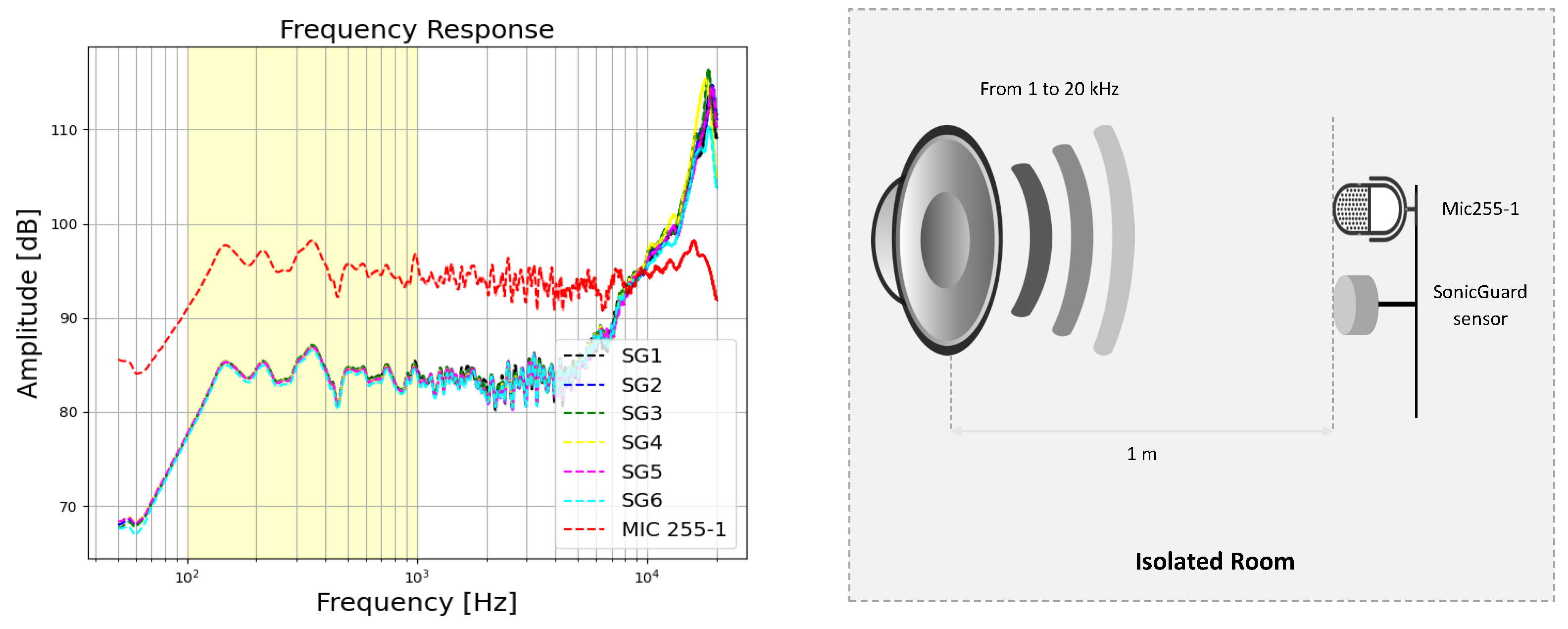

2.2.1. Basic Qualification Tests

2.2.2. Test Using the Gelatin Box

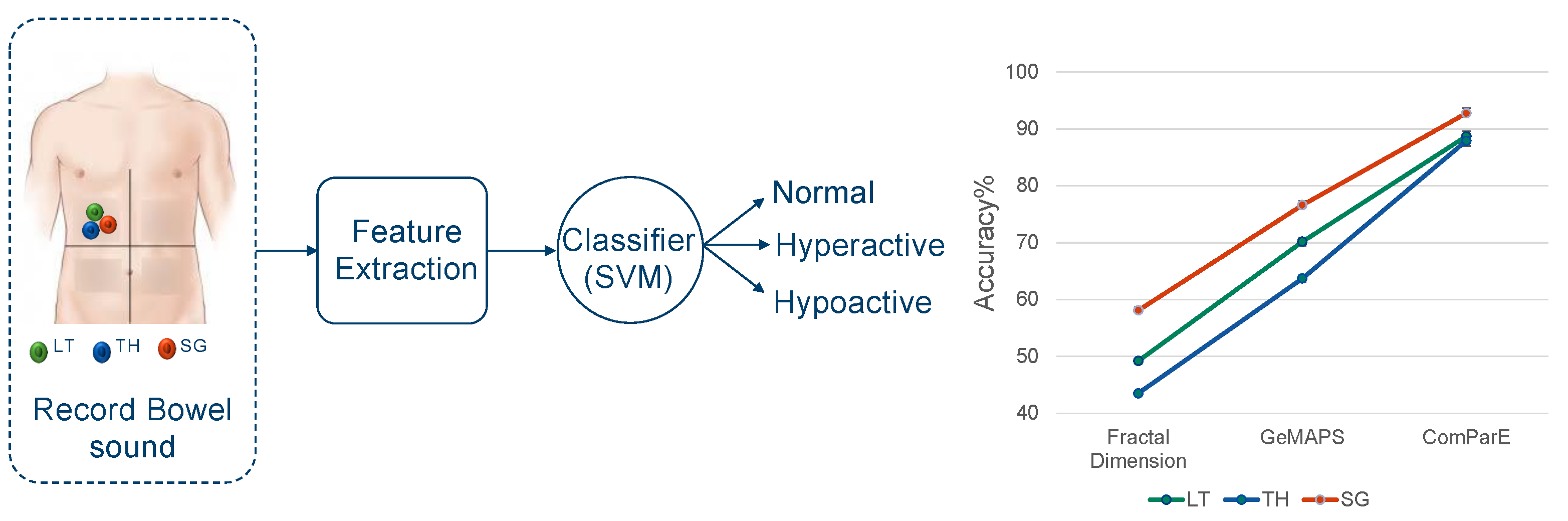

2.2.3. Tests Using the iSTAN Phantom

2.2.4. Test with Patients

3. Results and Discussion

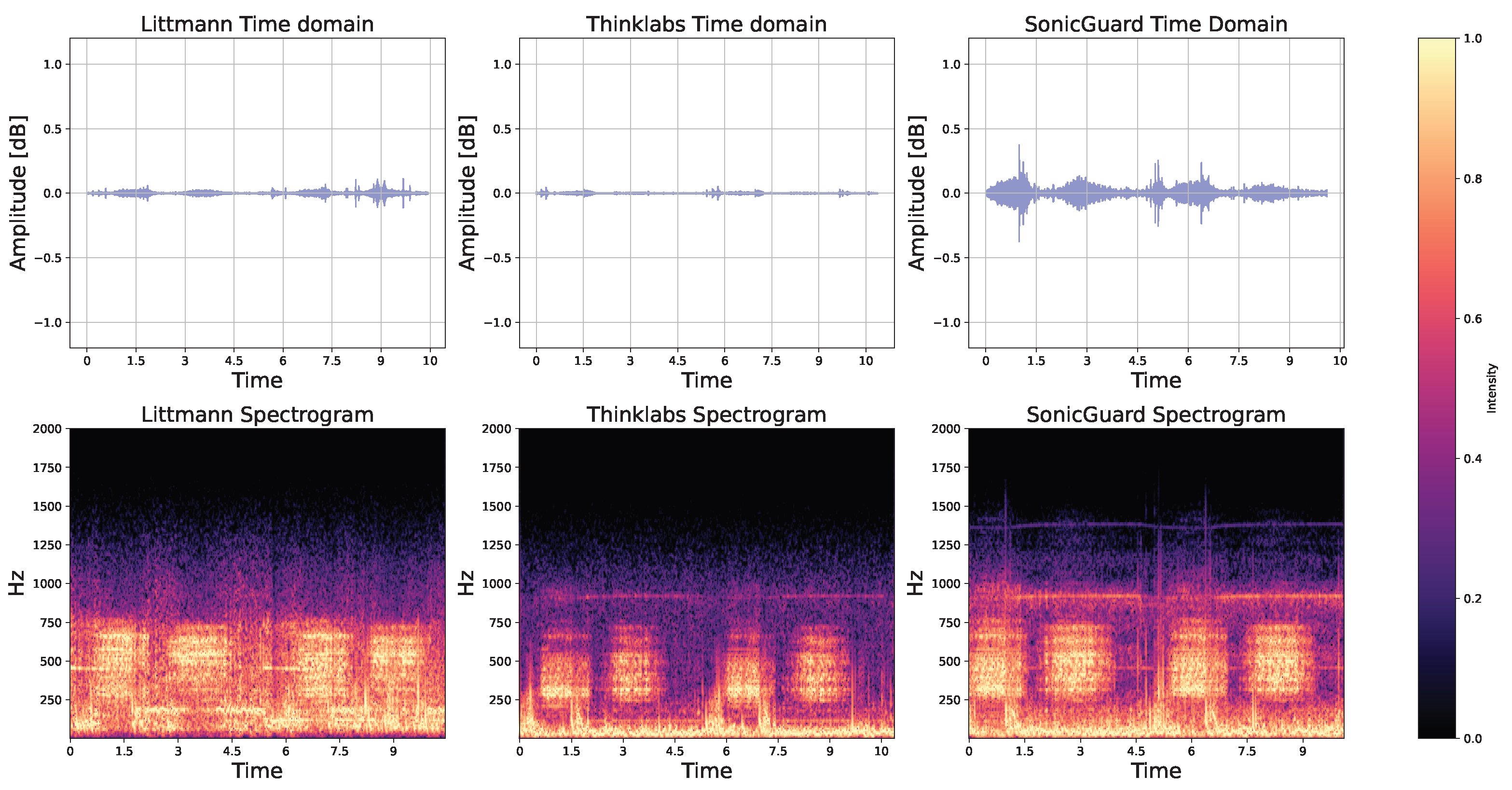

3.1. Basic Qualification Tests

3.2. Test Using the Gelatin Box

3.3. Tests Using the iSTAN Phantom

3.4. Analyzing Audio Sensor Data Using Machine Learning

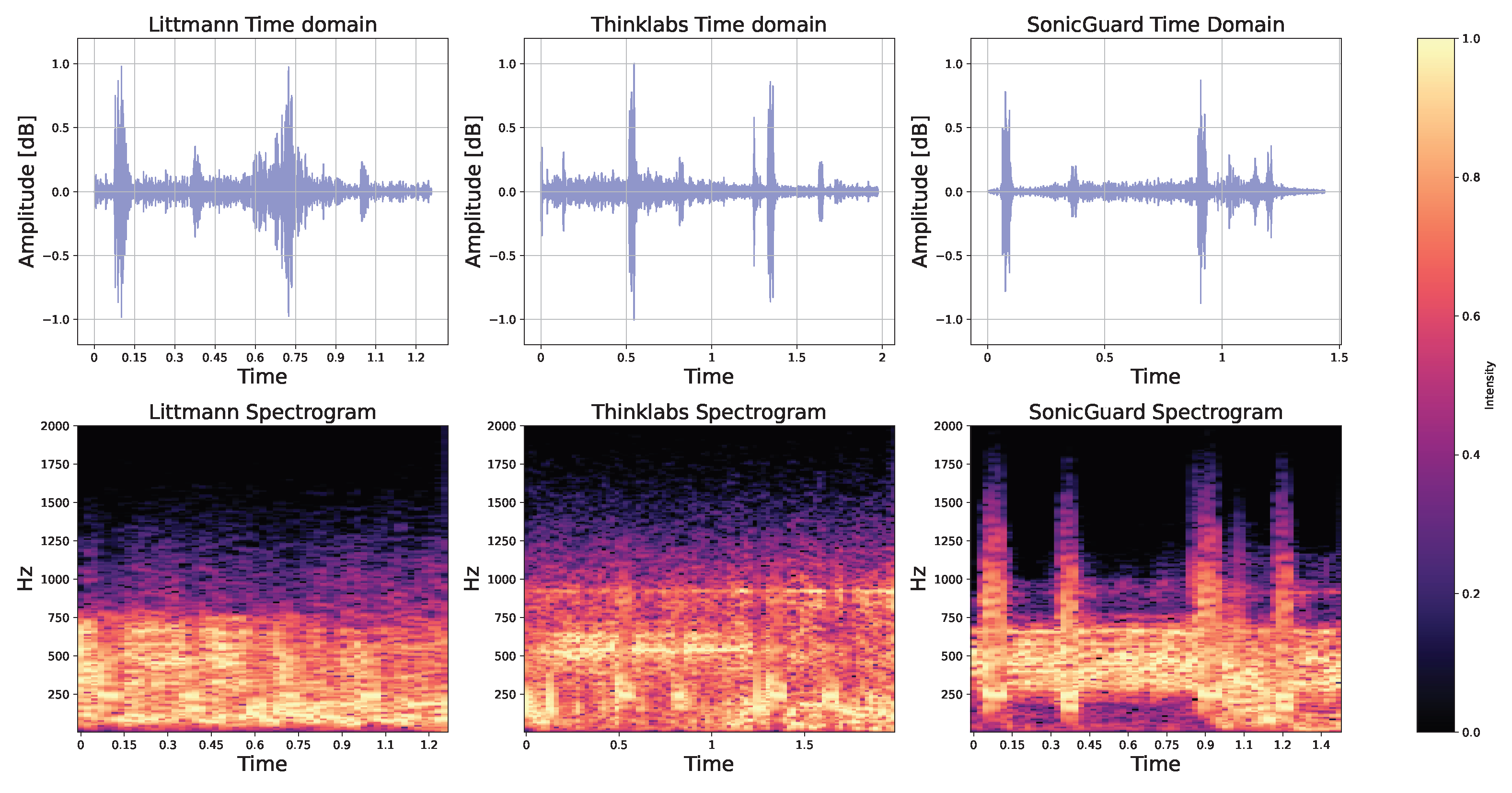

3.5. Applicability to Heart and Lung Sounds

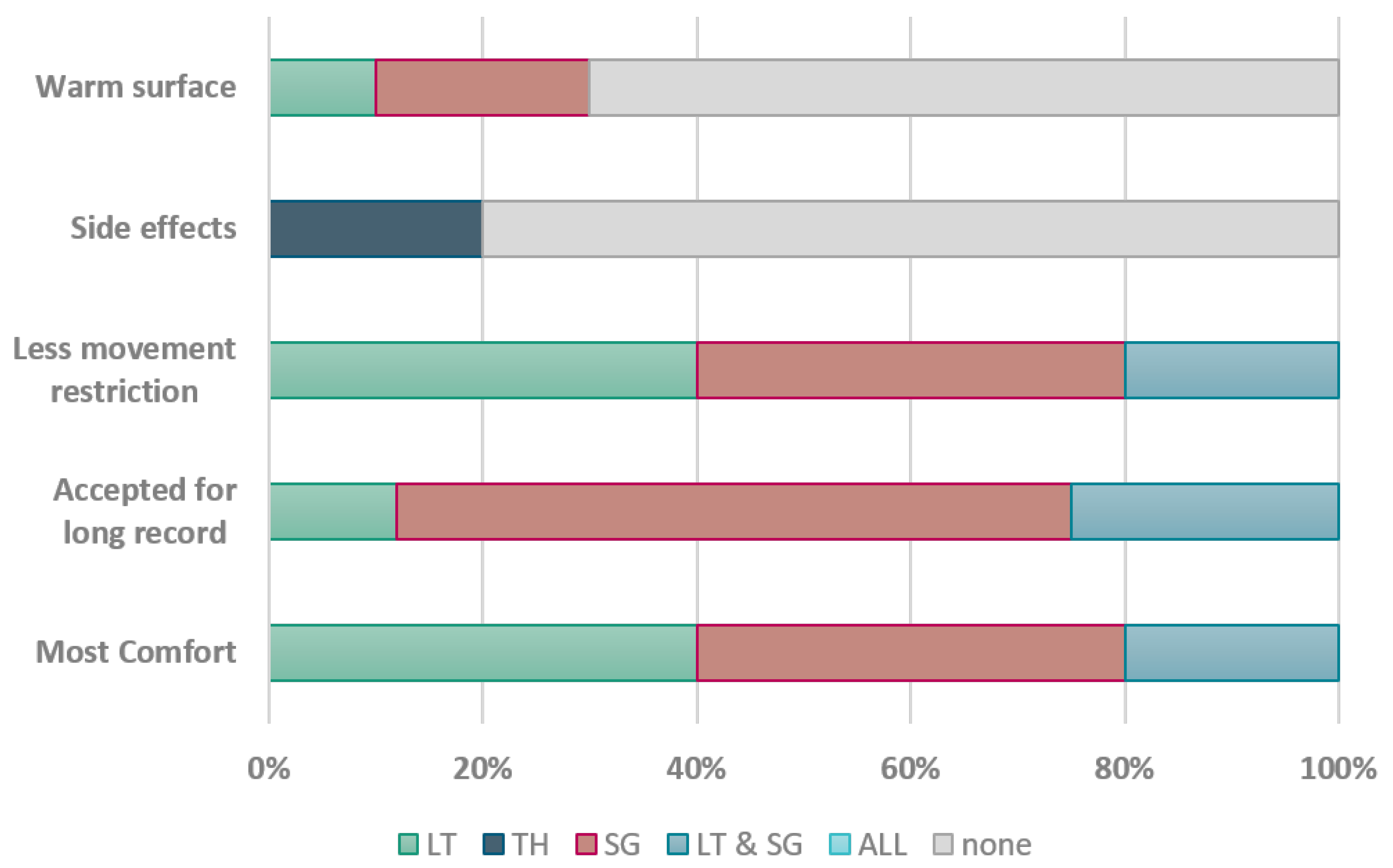

3.6. Test with Patients

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. SonicGuard Configuration

Appendix B. Gelatin Box Phantom Details

Appendix C. Machine Learning Results

| Classifier | Fractal Dimension (LT) | Fractal Dimension (TH) | Fractal Dimension (SG) | GeMAPS (LT) | GeMAPS (TH) | GeMAPS (SG) | ComParE (LT) | ComParE (TH) | ComParE (SG) |
|---|---|---|---|---|---|---|---|---|---|
| SVM | 49.2 | 43.5 | 58.1 | 70.16 | 63.7 | 76.6 | 88.7 | 87.9 | 92.74 |
| DTC | 33.8 | 33.1 | 34.6 | 35.5 | 42.7 | 44.3 | 29.8 | 23.4 | 45.2 |
| KNN | 34.7 | 33.1 | 36.3 | 33.11 | 32.3 | 35 | 33.8 | 33.1 | 33.1 |
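The classifier comparison above (SVM, decision tree and k-nearest neighbours, each applied to three feature sets from three devices) can be reproduced in outline with scikit-learn. The following is a minimal sketch under stated assumptions: the table entries are read as mean classification accuracies in percent, as their 0 to 100 range suggests; the hyperparameters, the feature scaling and the stratified 5-fold cross-validation are illustrative choices, not the evaluation protocol of the study; and the synthetic three-class data (e.g. normal, hyperactive, hypoactive) is a hypothetical stand-in for real per-recording feature vectors.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier


def compare_classifiers(X, y, n_splits=5, seed=0):
    """Return mean cross-validated accuracy (in %) for SVM, DTC and KNN.

    Assumed protocol: stratified k-fold cross-validation with shuffling.
    """
    models = {
        # Scaling matters for distance/kernel methods; trees are scale-invariant.
        "SVM": make_pipeline(StandardScaler(), SVC(kernel="rbf")),
        "DTC": DecisionTreeClassifier(random_state=seed),
        "KNN": make_pipeline(StandardScaler(), KNeighborsClassifier(n_neighbors=5)),
    }
    cv = StratifiedKFold(n_splits=n_splits, shuffle=True, random_state=seed)
    return {
        name: 100.0 * cross_val_score(model, X, y, cv=cv, scoring="accuracy").mean()
        for name, model in models.items()
    }


if __name__ == "__main__":
    # Hypothetical feature matrix: 300 recordings, 62 features, 3 classes.
    X, y = make_classification(
        n_samples=300, n_features=62, n_informative=10, n_classes=3, random_state=0
    )
    for name, acc in compare_classifiers(X, y).items():
        print(f"{name}: {acc:.1f}%")
```

In practice, one such call would be made per (feature set, device) pair, filling one column of the table per call.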

Appendix D. List of Questions

- Which stethoscope did you find the most comfortable?

- Which stethoscope would you be willing to wear for an extended period of time?

- Which stethoscope allows you the greatest freedom of movement?

- Have you experienced any adverse effects from any of the stethoscopes?

- Did any of the stethoscopes feel like it matched the temperature of your skin?


| Specification | Littmann | Thinklabs | SonicGuard |
|---|---|---|---|
| Weight [g] | 232 | 50 | 10 |
| Diameter [mm] | 46 | 46 | 20 |
| Height [mm] | 25 | 28 | 8 |
| Frequency range [Hz] | 20–20,000 | 20–10,000 | 20–20,000 |
| Battery life [hours] | 8 | 4 | 24 |

| Sound Source | Sensor Location | Normal (LT) | Normal (TH) | Normal (SG) | Hyperactive (LT) | Hyperactive (TH) | Hyperactive (SG) | Hypoactive (LT) | Hypoactive (TH) | Hypoactive (SG) |
|---|---|---|---|---|---|---|---|---|---|---|
| ALL | Centre | 5.65 | 0.56 | 8.65 | 0.21 | 0.04 | 0.17 | 0.19 | 0.28 | 8.88 |
| RUQ | RUQ | 6.48 | 30.2 | 84.76 | 0.18 | 0.05 | 0.22 | 0.18 | 0.05 | 0.27 |
| LUQ | LUQ | 1.12 | 0.96 | 10.3 | 0.21 | 0.51 | 0.11 | 0.18 | 0.05 | 0.62 |
| RLQ | RLQ | 18.12 | 32.24 | 37.31 | 0.14 | 0.005 | 2.14 | 0.18 | 0.05 | 1.65 |
| LLQ | LLQ | 38.41 | 18.19 | 35.3 | 13.85 | 4.54 | 25.23 | 0.17 | 0.05 | 0.77 |
| RUQ | LUQ | 0.18 | 0.005 | 1.29 | 0.18 | 0.05 | 0.29 | 0.22 | 0.05 | 0.23 |
| RUQ | RLQ | 0.22 | 0.005 | 3.23 | 0.19 | 0.05 | 0.34 | 0.19 | 0.05 | 0.38 |
| RUQ | LLQ | 0.18 | 0.005 | 0.67 | 0.18 | 0.05 | 0.95 | 0.18 | 0.05 | 1.13 |

| Sound Source | Normal (SG RUQ) | Normal (SG LUQ) | Normal (SG RLQ) | Normal (SG LLQ) | Hyperactive (SG RUQ) | Hyperactive (SG LUQ) | Hyperactive (SG RLQ) | Hyperactive (SG LLQ) | Hypoactive (SG RUQ) | Hypoactive (SG LUQ) | Hypoactive (SG RLQ) | Hypoactive (SG LLQ) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| RUQ | 69.26 | 19.80 | 7.87 | 1.31 | 68.07 | 22.13 | 3.81 | 14.93 | 97.13 | 17.76 | 8.86 | 1.31 |
| LUQ | 2.67 | 31.5 | 0.65 | 23.35 | 1.99 | 27.80 | 9.89 | 8.01 | 1.22 | 43.55 | 1.159 | 2.53 |
| RLQ | 51.04 | 18.10 | 155.4 | 3.78 | 49.7 | 17.43 | 80.27 | 5.61 | 52.96 | 16.53 | 104.84 | 3.78 |
| LLQ | 49.26 | 22.79 | 94.74 | 202.23 | 49.27 | 18.67 | 64.14 | 113.35 | 50.19 | 21.01 | 68.29 | 250.23 |
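One simple way to read the four-channel SonicGuard table above is to ask whether the channel with the largest value lies in the quadrant where the sound was played. The sketch below checks this using the table values copied verbatim. The "loudest channel" rule is an illustrative reading of the table, not a localization method described in the source, and it assumes the values are directly comparable across channels.

```python
# Values copied from the SonicGuard table: for each condition, rows are the
# sound-source quadrant and columns are the SG channels in the order below.
CHANNELS = ["RUQ", "LUQ", "RLQ", "LLQ"]

TABLE = {
    "Normal": {
        "RUQ": [69.26, 19.80, 7.87, 1.31],
        "LUQ": [2.67, 31.5, 0.65, 23.35],
        "RLQ": [51.04, 18.10, 155.4, 3.78],
        "LLQ": [49.26, 22.79, 94.74, 202.23],
    },
    "Hyperactive": {
        "RUQ": [68.07, 22.13, 3.81, 14.93],
        "LUQ": [1.99, 27.80, 9.89, 8.01],
        "RLQ": [49.7, 17.43, 80.27, 5.61],
        "LLQ": [49.27, 18.67, 64.14, 113.35],
    },
    "Hypoactive": {
        "RUQ": [97.13, 17.76, 8.86, 1.31],
        "LUQ": [1.22, 43.55, 1.159, 2.53],
        "RLQ": [52.96, 16.53, 104.84, 3.78],
        "LLQ": [50.19, 21.01, 68.29, 250.23],
    },
}


def loudest_channel(values):
    """Return the name of the channel with the largest value (argmax rule)."""
    best = max(range(len(values)), key=lambda i: values[i])
    return CHANNELS[best]


def localization_hits(table):
    """Count how often the loudest channel matches the true source quadrant."""
    hits, total = 0, 0
    for rows in table.values():
        for source, values in rows.items():
            total += 1
            hits += int(loudest_channel(values) == source)
    return hits, total


if __name__ == "__main__":
    print(localization_hits(TABLE))  # → (12, 12)
```

For every condition and source quadrant, the largest value falls on the channel placed over that quadrant. This is consistent with the multichannel sensor retaining spatial information about where a sound originates.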

| Stethoscope | Dynamic Range (No Filter) | Dynamic Range (With Filter) | Zero Crossing (No Filter) | Zero Crossing (With Filter) | Fractal Dimension (No Filter) | Fractal Dimension (With Filter) |
|---|---|---|---|---|---|---|
| Littmann | 1.30 ± 0.65 | 1.99 ± 0.99 | 229.5 ± 41.1 | 187.95 ± 38.28 | 1.07 ± 0.05 | 1.01 ± 0.004 |
| Thinklabs | 0.23 ± 0.15 | 0.32 ± 0.23 | 1803.4 ± 528.3 | 202.66 ± 51.44 | 1.63 ± 0.23 | 1.03 ± 0.01 |
| SonicGuard | 0.70 ± 0.35 | 0.58 ± 0.28 | 241.65 ± 79.5 | 143.38 ± 55.80 | 1.33 ± 0.12 | 1.04 ± 0.01 |
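The three signal descriptors in the table above can be computed roughly as follows. This is a sketch under several assumptions, none of which the source confirms: dynamic range is taken as peak-to-peak amplitude; zero crossings are counted as sign changes over the whole signal (the analysis window is not stated); fractal dimension uses Katz's method, one common choice among several algorithms; and the filter behind the "With Filter" columns is not reproduced.

```python
import numpy as np


def dynamic_range(x):
    """Peak-to-peak amplitude (max - min); an assumed definition of dynamic range."""
    x = np.asarray(x, dtype=float)
    return float(x.max() - x.min())


def zero_crossings(x):
    """Number of sign changes between consecutive samples (zeros count as positive)."""
    negative = np.signbit(np.asarray(x, dtype=float))
    return int(np.count_nonzero(negative[1:] != negative[:-1]))


def katz_fd(x):
    """Katz fractal dimension of a 1-D signal, using amplitude-only step lengths.

    FD = log10(n) / (log10(n) + log10(d / L)), where L is the total curve length,
    n the number of steps and d the largest distance from the first sample.
    """
    x = np.asarray(x, dtype=float)
    steps = np.abs(np.diff(x))
    total_length = steps.sum()                 # L
    n = steps.size                             # equals L / (mean step length)
    extent = np.max(np.abs(x[1:] - x[0]))      # d
    return float(np.log10(n) / (np.log10(n) + np.log10(extent / total_length)))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    t = np.linspace(0.0, 1.0, 4000, endpoint=False)
    clean = np.sin(2 * np.pi * 5 * t)                    # hypothetical smooth test tone
    noisy = clean + 0.3 * rng.standard_normal(t.size)    # same tone with added noise
    for name, sig in (("clean", clean), ("noisy", noisy)):
        print(name, dynamic_range(sig), zero_crossings(sig), round(katz_fd(sig), 3))
```

Broadband noise raises both the zero-crossing count and the fractal dimension. This is one plausible reading of why all three devices show lower values for both descriptors once filtering is applied.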

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Mansour, Z.; Uslar, V.; Weyhe, D.; Hollosi, D.; Strodthoff, N. SonicGuard Sensor—A Multichannel Acoustic Sensor for Long-Term Monitoring of Abdominal Sounds Examined through a Qualification Study. Sensors 2024, 24, 1843. https://doi.org/10.3390/s24061843
