Abstract

Color data are often required for cultural heritage documentation. These data are typically acquired via standard digital cameras since they facilitate a quick and cost-effective way to extract RGB values from photos. However, cameras’ absolute sensor responses are device-dependent and thus not colorimetric. One way to still achieve relatively accurate color data is via camera characterization, a procedure which computes a bespoke RGB-to-XYZ matrix to transform camera-dependent RGB values into the device-independent CIE XYZ color space. This article applies and assesses camera characterization techniques in heritage documentation, particularly graffiti photographed in the academic project INDIGO. To this end, this paper presents COOLPI (COlor Operations Library for Processing Images), a novel Python-based toolbox for colorimetric and spectral work, including white-point-preserving camera characterization from photos captured under diverse, real-world lighting conditions. The results highlight the colorimetric accuracy achievable through COOLPI’s color-processing pipelines, affirming their suitability for heritage documentation.

1. Introduction

Accurately documenting color is necessary for correct color identification, dissemination and reproduction, especially in cultural heritage studies [,,]. Nowadays, heritage documentation relies almost exclusively on digital techniques, in most cases through photo acquisition and image processing procedures [,,]. The problem is that the RGB values recorded by the silicon sensor of consumer digital cameras are device-dependent, and none of them satisfy the Luther–Ives condition; i.e., the RGB values registered by the camera sensor are not a linear combination of the color-matching functions of the standard observer defined by the Commission Internationale de l’Éclairage (CIE) []. In addition, the RGB data are dependent on the scene illumination. If an image is captured in its final form (as a JPG or TIFF file), the RGB values also depend on the image processing parameters set in the camera. Therefore, color values provided by any digital camera cannot be considered strictly colorimetric [,].

Given that many documentation and preservation techniques for cultural assets are based on digital images, it is necessary to implement procedures that can turn the camera-specific pixel values into reasonably color-accurate output RGB values. One widely used approach is camera characterization, a procedure yielding a bespoke RGB-to-XYZ matrix. This matrix can then transform the device-dependent RGB data recorded by the camera into the camera-independent, human-vision-based color space CIE XYZ [,]. Afterwards, these CIE XYZ tristimulus values can be transformed into any possible RGB output color space like sRGB or Adobe RGB (1998) via well-known procedures.

Different methodologies have been developed to estimate this RGB-to-XYZ transformation matrix [,,,,,,,,]. For all of them, it is highly recommended to use RAW RGB values for the computations since RAW data tend to be linear to the image radiance [,]. In addition, RAW data are not subject to the pre-processing that in-camera-generated JPG or TIFF images undergo, which, in most cases, is applied automatically with little control over the process [,,]. A linear regression model suffices to compute the matrix since the relationship between the RAW RGB values and CIE XYZ tristimulus values is expected to be linear []. After the linear regression adjustment, a 3 × 3 color correction matrix or camera characterization matrix is obtained that characterizes the RGB-to-XYZ transformation.

Using linear regression models for camera characterization is advantageous for multiple reasons: they are easy to interpret even for non-expert users, are computationally cost-effective, and provide relatively accurate results. However, a significant drawback is their dependency on the data used. The transformation matrices are illuminant-dependent since the relative RAW RGB values are highly sensitive to changes in the spectral distribution of the scene illumination. Therefore, applying RGB-to-XYZ matrices should be restricted to scenarios where an object is photographed under the same illumination conditions as those used to acquire data for the camera characterization procedure. If there is a change in illumination, a new transformation matrix must be calculated for that particular illuminant and that 3 × 3 matrix should not be used for a different illuminant. From a practical point of view, this technique is unfeasible, especially in heritage documentation projects where many photographs acquired under different illumination conditions must be handled.

One example of such a case is INDIGO (INventory and DIsseminate Graffiti along the dOnaukanal), a two-year academic project launched in September 2021 through funding from the Heritage Science Austria program of the Austrian Academy of Sciences. Project INDIGO wanted to push the status quo boundaries in inventorying and understanding extensive graffiti-scapes. This was mainly achieved by acquiring photos of new graffiti via weekly photographic tours, leading to a massive volume of digital photos obtained in widely varying illumination conditions [,,].

One way to address the problem is to perform the RGB-to-XYZ transformation under the assumption of homogeneous D65 illumination. Normally, the standard illuminant D65 defined by the CIE should be used in all colorimetric calculations. D65 represents average daylight and has a correlated color temperature of approximately 6500 K []. Previous investigations in rock painting scenarios indicated that satisfactory results were obtained using second-order polynomial models for colorimetric camera characterization [,,]. However, in practice, it is not possible to work under controlled lighting conditions, especially not in outdoor photography where there is rarely a single reference white point present. Natural outdoor scenes also feature different states of adaptation and viewing conditions because of the simultaneous appearance of shadowed areas and regions with direct incident sunlight.

The present study aims to obtain an accurate RGB-to-XYZ transformation matrix from photos acquired under different illumination conditions. To achieve this objective, an analysis of the well-established white point preservation (WPP) technique has been carried out. The WPP is a variant of a linear least squares regression model in which a constraint is added to preserve the reference white point along with the grays []. This constraint makes it possible to obtain RGB-to-XYZ transformation matrices that are more robust to changes in illumination.

The present research was split into the following tasks to achieve this objective:

- Assessment of the WPP technique under different illumination conditions;

- Evaluation of the influence of color charts from different manufacturers on this process;

- Comparison of an ordinary least squares (OLS) model versus a set of linear regression algorithms;

- Analysis of the color accuracy achieved by applying the RGB-to-XYZ transformation matrix to a real-world scenario with graffiti photos.

As a novel aspect of this study, the analysis and processing of the digital photos have been carried out entirely using the functionalities implemented in the COlor Operations Library for Processing Images or COOLPI package [,,]. COOLPI was created within the INDIGO project. Since one of the fundamental pillars of the INDIGO project was the creation of color-accurate digital orthophotos, the COOLPI package integrates classes, methods, and functions for color image correction.

2. Materials and Methods

2.1. RGB-to-XYZ Color Transformation Matrix Computation

Different digital camera devices have different opto-electronic conversion functions and spectral sensitivities. Manufacturers typically do not provide this information, and the most accurate and correct way to obtain the spectral sensitivities of a camera is through specific instrumentation (monochromators and spectroradiometers) under strict laboratory conditions []. The problem is that these instruments are unavailable to the general public, so an alternative methodology must be applied to achieve color-accurate data from photos.

From a practical point of view, a widely used approach is the RGB-to-XYZ transformation matrix estimation based on a target-based method []. This methodology establishes the mathematical relationship between the RGB data of a set of samples recorded by the camera and their CIE XYZ values measured by a colorimeter or preferably a spectrophotometer. To obtain the RGB-to-XYZ transformation matrix coefficients, two different sets of data are required: the CIE XYZ values (computed from the spectral data) of the patches of a color reference chart (like the famous ColorChecker) used as colorimetric reference; and the RGB data registered by the camera extracted from a digital image of the same color patches. Also, the illuminant under which both measurements were taken must be considered.

2.2. White Point Preservation Constraint

The RGB values acquired by a digital camera are highly sensitive to the incident spectral radiance, so they will vary according to the illuminant under which the photograph was taken. As a direct consequence, the RGB-to-XYZ transformation matrix coefficients obtained vary depending on the data used for training the model [].

One way to address the variation in the RGB-to-XYZ matrix is via the WPP constraint [,], which normalizes the RGB and XYZ data into the range [0, 1] using the reflectance closest to a perfect white diffuser. Effectively, all RAW RGB and CIE XYZ values are divided by the RGB and XYZ coordinates corresponding to a white patch on a color reference target. In this way, any pixel with the maximum possible RGB values (1,1,1) in the RAW image will map onto CIE XYZ (1,1,1) values, which implies that the whites (and the grays) are preserved regardless of the illuminant []. In this way, obtaining more robust and stable transformation matrices in the case of illumination changes is possible.

2.3. Spectral Data

Color reference targets are widely used tools for digital imaging applications, particularly for color calibration. However, different reference targets, provided by different manufacturers, exist. The most widespread targets are probably those provided by X-Rite (now Calibrite) and Datacolor [,,]. For that reason, the color reference targets selected for this study as a colorimetric reference are as follows:

- Calibrite ColorChecker Digital SG (CCDSG, 96 patches or color samples when the achromatic patches from the edges are removed);

- Calibrite ColorChecker Classic (CCC, 24 patches);

- X-Rite ColorChecker Passport Photo 2 (XRCCPP, 24 patches);

- Datacolor Spyder Checkr (SCK100, 48 patches).

The reflectance data of the color patches were measured using a Konica Minolta CM600d spectrophotometer (Konica Minolta, Spain, Valencia) with the SpectraMagic NX software v.3.31. The CIE XYZ tristimulus values for the standard D65 illuminant were computed in COOLPI directly from the reflectance data acquired by the spectrophotometer using the CIE formulation, i.e., by integrating the product of the spectral reflectance, the spectral power distribution (SPD) of the D65 illuminant, and the color-matching functions for the 2° observer (a mathematical representation of the average color vision of humans) [,].
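The integration described above can be sketched in a few lines. This is a minimal illustration using NumPy rather than COOLPI's own classes (whose API is not reproduced here); the function name `reflectance_to_xyz` is hypothetical, and the spectra below are toy placeholders, not real CIE data.

```python
import numpy as np

def reflectance_to_xyz(reflectance, illuminant_spd, cmf):
    """Tristimulus values of a reflective sample (CIE summation form).

    reflectance    : (N,) spectral reflectance factors in [0, 1]
    illuminant_spd : (N,) relative SPD of the illuminant
    cmf            : (N, 3) color-matching functions (x_bar, y_bar, z_bar)
    All inputs must share the same, equally spaced wavelength sampling.
    """
    reflectance = np.asarray(reflectance, dtype=float)
    illuminant_spd = np.asarray(illuminant_spd, dtype=float)
    cmf = np.asarray(cmf, dtype=float)
    # Normalizing constant: a perfect white diffuser receives Y = 100.
    k = 100.0 / np.sum(illuminant_spd * cmf[:, 1])
    return k * (reflectance * illuminant_spd) @ cmf

# Placeholder spectra (NOT real CIE data): 400-700 nm in 10 nm steps.
wavelengths = np.arange(400, 701, 10)
toy_spd = np.ones(wavelengths.size)
toy_cmf = np.stack(
    [np.exp(-0.5 * ((wavelengths - mu) / 40.0) ** 2) for mu in (600, 550, 450)],
    axis=1,
)

white = reflectance_to_xyz(np.ones(wavelengths.size), toy_spd, toy_cmf)
gray = reflectance_to_xyz(np.full(wavelengths.size, 0.5), toy_spd, toy_cmf)
```

With real data, the measured patch reflectance, the D65 SPD, and the 2° color-matching functions would replace the toy arrays, all resampled to a common wavelength grid.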

2.4. Illuminants

The digital photos were acquired in two scenarios: laboratory or controlled illumination conditions and in situ illuminants (corresponding with indoor and outdoor illuminants). The shots under controlled lighting took place in a Just Normlicht Color Viewing Light S PROFESSIONAL (JNCVLS) color cabin. The JNCVLS integrates illuminants approximately corresponding to the CIE standard D65, A, F11 (or TL84), and D50 []. Theoretical SPD data for standard illuminants are provided by the CIE []. However, it is highly recommended that actual measurements of the cabin illuminant be taken at the time of the photo. Thus, the SPDs of all color cabin illuminants were measured using a Sekonic C-7000 SPECTROMASTER spectrometer (SEKONIC, Austria, Vienna) and labeled using the prefix JN-, indicating that it is a color cabin illuminant, followed by the nomenclature of the standard illuminant, i.e., JN-D65, JN-A, JN-F, and JN-D50, respectively.

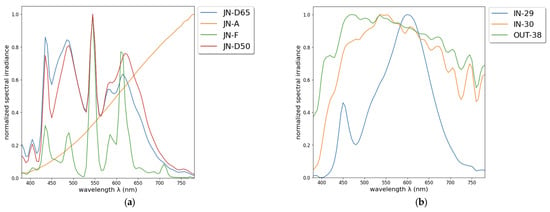

For photographs taken under uncontrolled lighting conditions, the SPDs of the illuminants were also measured at the time of image capture and labeled with the prefixes IN- and OUT- for indoor and outdoor, respectively, followed by the number identifying the measurement made with the spectrometer. In total, three different in situ illuminants were considered: IN-29, corresponding to artificial indoor lighting; IN-30, for indoor ambient natural light; and OUT-38, for natural daylight or direct sunlight outdoor illumination. Figure 1 shows the SPD of the illuminants obtained with the spectrometer. In addition, Table 1 mentions the correlated color temperature (CCT) in K and the white point Xn, Yn, and Zn tristimulus values (CIE 1931 2° observer) for each illuminant, as these values are useful in defining an illuminant in addition to its SPD.
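As a rough illustration of how a white point relates to a CCT, the sketch below derives chromaticity coordinates from Xn, Yn, Zn and estimates the CCT with McCamy's approximation. This is a swapped-in, approximate formula chosen for brevity; the values in Table 1 come from the spectrometer measurements, not from this code, and the function names are hypothetical.

```python
def xy_from_xyz(X, Y, Z):
    """CIE 1931 chromaticity coordinates (x, y) from tristimulus values."""
    total = X + Y + Z
    return X / total, Y / total

def cct_mccamy(x, y):
    """Approximate CCT in kelvin from (x, y) using McCamy's cubic formula."""
    n = (x - 0.3320) / (0.1858 - y)
    return 449.0 * n**3 + 3525.0 * n**2 + 6823.3 * n + 5520.33

# Nominal CIE D65 white point for the 2° observer (Y normalized to 100).
x_d65, y_d65 = xy_from_xyz(95.047, 100.0, 108.883)
cct_d65 = cct_mccamy(x_d65, y_d65)
```

The approximation is only reasonable for chromaticities near the Planckian locus, so it is better suited to daylight-like illuminants than to narrow-band fluorescent sources.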

Figure 1.

SPD illuminants: (a) Laboratory settings. (b) In situ.

Table 1.

Illuminant information: CCT in K and white point Xn, Yn, and Zn values (CIE 1931 2° standard observer).

2.5. Digital Photos

Two different digital cameras were used for this study: a Nikon D5600 (Nikon Corporation, Spain, Valencia) and a Nikon Z 7II (Nikon Corporation, Austria, Vienna), the latter being one of the official INDIGO cameras []. The exposure time, ISO, and aperture were controlled for all the photos to ensure proper exposure. As a sample, Figure 2 displays the images corresponding to the XRCCPP reference target for the Nikon D5600 and Figure 3 for the Nikon Z 7II camera. Since both cameras allow saving photos as NEF files (i.e., Nikon Electronic Format, Nikon’s RAW image file format), these were used in this study. The RAW data were black-level corrected, demosaiced with COOLPI’s default adaptive homogeneity-directed demosaicing algorithm (i.e., to ensure every pixel had R, G, and B values), and scaled in the range [0, 1]; no white balancing was applied. The resulting data are henceforth referred to as the original data (unconstrained). These photos supplied the camera- and illumination-specific RAW RGB data used to calculate the coefficients of the RGB-to-XYZ transformation matrices, a procedure detailed in Section 2.7.

Figure 2.

Nikon D5600 sample photos (displayed as fully rendered JPGs) of the XRCCPP under different light conditions (the SPD of the illuminant is shown at the upper right of each image): (a) Laboratory settings: JN-D65, JN-A, JN-F, and JN-D50. (b) In situ settings: IN-29, IN-30, and OUT-38.

Figure 3.

Nikon Z 7II sample photos (displayed as fully rendered JPGs) of the XRCCPP under in situ illuminants (IN-29, IN-30, and OUT-38). The SPD of the illuminant is shown at the upper right of each image.

For the Nikon D5600, a total of 28 images were available. Sixteen were acquired under controlled (or laboratory) lighting conditions: 1 for each combination of cabin illuminant (JN-D65, JN-A, JN-F, and JN-D50; Section 2.4) and color reference target (CCDSG, CCC, XRCCPP, and SCK100; Section 2.3). The remaining 12 were taken under in situ illuminants: 1 for each combination of in situ illuminant (IN-29, IN-30, and OUT-38; Section 2.4) and reference target (CCDSG, CCC, XRCCPP, and SCK100; Section 2.3). The D5600 photos were used to compute the transformation matrix coefficients using the WPP constraint from a theoretical perspective and compared with the results achieved using the original data (see Section 2.6).

However, since it is generally impossible to work under controlled lighting conditions, there was an interest in evaluating the accuracy achieved in determining the transformation matrix using only photos taken under in situ illumination, and its application to graffiti photos as part of the INDIGO project. To that end, 12 Nikon Z 7II photos acquired in situ under natural illumination were used to estimate the RGB-to-XYZ matrix: 1 for each combination of in situ illuminant (IN-29, IN-30, and OUT-38; Section 2.4) and color reference target (CCDSG, CCC, XRCCPP, and SCK100; Section 2.3). After obtaining the matrix coefficients, they were applied to 45 photos of different graffiti located along the Donaukanal in Vienna, Austria.

2.6. Three Model Datasets

Section 2.5 clarified that this study used 28 Nikon D5600 photos and 12 Nikon Z 7II photos. From this photo collection, three model-specific datasets were generated. These datasets are labelled with the prefix ND- or NZ- indicating the camera used (i.e., the Nikon D5600 and Z 7II, respectively) followed by the suffix ODS (for the original, unconstrained data) or WPPDS (for the data considering the WPP constraint).

In addition to the number of images used for each dataset, Table 2 details the color samples (i.e., the patches of the reference chart) used, considering the different illuminants and color reference targets described in Section 2.5. The ‘Laboratory Photos’ column refers to images acquired under controlled lighting conditions (illuminants JN-D65, JN-A, JN-F, and JN-D50; Section 2.4) for each of the color reference targets considered (CCDSG, CCC, XRCCPP, and SCK100; Section 2.3), while the ‘In situ Photos’ column refers to images acquired under indoor and outdoor illuminants (IN-29, IN-30, and OUT-38; Section 2.4), for the same color reference targets. These datasets were used in the linear regression fitting to obtain the RGB-to-XYZ transformation matrix coefficients for each camera.

Table 2.

Datasets for model computation.

Using two datasets for the Nikon D5600 has a threefold purpose. First, it allows the evaluation of the improvement achieved by including the WPP constraint in the OLS adjustment (ND-ODS vs. ND-WPPDS). Second, different regression models can be compared. Finally, and this is one of the most important aspects of this study, it was possible to analyze whether the coefficients of the transformation matrix obtained after adjustment (from the ND-WPPDS) in outdoor lighting are comparable to those obtained in laboratory conditions.

For the ODS dataset, the 2° XYZ and RAW RGB values were scaled to the range (0, 1) as follows:

in which tonal range refers to the image’s potential different tones, a number defined by the bit depth of the image (e.g., a 12-bit RAW image maximally contains $2^{12} - 1$ or 4095 tonal levels).

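For the WPPDS dataset, the RAW RGB and 2° XYZ values were normalized using the patch with the maximum XYZ and RAW RGB value (white point preservation constraint, Section 2.2), which corresponds with the white patch of the color reference target as follows:

$$R' = \frac{R}{R_{wpp}}, \quad G' = \frac{G}{G_{wpp}}, \quad B' = \frac{B}{B_{wpp}}; \qquad X' = \frac{X}{X_{wpp}}, \quad Y' = \frac{Y}{Y_{wpp}}, \quad Z' = \frac{Z}{Z_{wpp}}$$

where $R_{wpp}$, $G_{wpp}$, $B_{wpp}$, $X_{wpp}$, $Y_{wpp}$, and $Z_{wpp}$ refer to the white patch RAW RGB and 2° CIE XYZ values, respectively.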
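The two normalizations can be contrasted with a short NumPy sketch. The function names and patch values are hypothetical. Two details are assumptions rather than statements from the text: XYZ is taken to be on a 0 to 100 scale before the ODS scaling, and the white patch is identified as the patch with the highest Y.

```python
import numpy as np

def scale_ods(raw_rgb, xyz, bit_depth=12, xyz_max=100.0):
    """Unconstrained scaling: RAW RGB by the tonal range, XYZ by xyz_max.

    xyz_max=100 is an ASSUMPTION about the scale of the measured XYZ data.
    """
    tonal_range = 2**bit_depth - 1  # e.g. 4095 for a 12-bit RAW file
    return raw_rgb / tonal_range, xyz / xyz_max

def scale_wpp(raw_rgb, xyz):
    """WPP normalization: divide every patch by the white patch values."""
    white = int(np.argmax(xyz[:, 1]))  # assumption: white = highest Y
    return raw_rgb / raw_rgb[white], xyz / xyz[white]

# Hypothetical patches: white, mid-gray, and one chromatic sample.
raw_rgb = np.array([[3200.0, 3900.0, 3000.0],
                    [1600.0, 1950.0, 1500.0],
                    [ 400.0, 1200.0,  200.0]])
xyz = np.array([[85.0, 90.0, 95.0],
                [40.0, 43.0, 45.0],
                [10.0, 20.0,  5.0]])

rgb_ods, xyz_ods = scale_ods(raw_rgb, xyz)
rgb_wpp, xyz_wpp = scale_wpp(raw_rgb, xyz)
```

After the WPP normalization, the white patch sits at (1, 1, 1) in both spaces regardless of the illuminant, which is what makes the fitted matrices comparable across lighting conditions.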

2.7. Linear Regression Model Comparison

The aim was to find the mapping function between the RAW RGB color values registered by the digital camera (device-dependent) and their corresponding CIE XYZ tristimulus values (device-independent). Different regression models can be applied to compute this transformation [,,,,,,]. The number of parameters of the transformation will depend on the regression model used. However, since RAW RGB data tend to be linear, using linear regression models is accurate enough, so a 3 × 3 RGB-to-XYZ transformation matrix will be obtained.

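The normalized RAW RGB and CIE XYZ triplets (see Section 2.6) can be expressed as positional vectors as follows:

$$\mathbf{c}_i = \begin{bmatrix} R_i \\ G_i \\ B_i \end{bmatrix}, \qquad \mathbf{x}_i = \begin{bmatrix} X_i \\ Y_i \\ Z_i \end{bmatrix}, \qquad i = 1, \dots, n$$

Denoting M as the RAW RGB to CIE XYZ 3 × 3 transformation matrix, we can express the mathematical model as:

$$\begin{bmatrix} X_1 & \cdots & X_n \\ Y_1 & \cdots & Y_n \\ Z_1 & \cdots & Z_n \end{bmatrix} = M \begin{bmatrix} R_1 & \cdots & R_n \\ G_1 & \cdots & G_n \\ B_1 & \cdots & B_n \end{bmatrix}$$

where the subindex n denotes the number of samples used for training the model.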

The model can be adjusted using an ordinary least squares regression (OLS). The least square regression determines the model’s coefficients so that the sum of the squared residuals is at a minimum. The entire OLS process can be divided into the following stages:

- Loading the model data (i.e., the RAW RGB and CIE XYZ triplets of the color patches).

- Splitting these patches into two randomly selected datasets: training (80%) and testing (20%).

- OLS model regression adjustment using the training data to obtain the coefficients for the transformation matrix.

- Model assessment from test data. The RMSE and the CIE XYZ residuals for the predicted values were computed. Also, color difference metrics were calculated to evaluate the color accuracy obtainable via the computed transformation matrix (Section 2.8).
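The stages listed above can be sketched with NumPy alone. The data here are synthetic stand-ins generated from a made-up matrix `M_true`; in practice the normalized RAW RGB and CIE XYZ triplets of the chart patches would take their place. This is an illustrative sketch, not the COOLPI implementation.

```python
import numpy as np

rng = np.random.default_rng(0)

# 1. Model data: synthetic patches (hypothetical matrix plus small noise).
M_true = np.array([[0.60, 0.30, 0.10],
                   [0.25, 0.70, 0.05],
                   [0.02, 0.13, 0.85]])
rgb = rng.uniform(0.05, 1.0, size=(24, 3))
xyz = rgb @ M_true.T + rng.normal(0.0, 0.002, size=(24, 3))

# 2. Random 80/20 split into training and test patches.
order = rng.permutation(len(rgb))
n_train = int(0.8 * len(rgb))
train, test = order[:n_train], order[n_train:]

# 3. OLS adjustment: solve rgb @ M.T ~= xyz in the least-squares sense.
coeffs, *_ = np.linalg.lstsq(rgb[train], xyz[train], rcond=None)
M = coeffs.T  # 3 x 3 RGB-to-XYZ matrix, so that xyz = M @ rgb per patch

# 4. Assessment on the held-out patches.
predicted = rgb[test] @ M.T
residuals = xyz[test] - predicted
rmse = float(np.sqrt(np.mean(residuals**2)))
mean_residual = residuals.mean(axis=0)
```

No intercept term is fitted, so the result is a pure 3 × 3 matrix. Whether the original adjustment included an intercept is not stated in the text, so this is a design assumption of the sketch.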

In addition, a comparison between different linear regression models was carried out to examine whether the regression model could be improved in terms of results and computational effort. The OLS model was compared with the following robust and well-established models: multi-task Lasso CV (MTLCV), Bayesian ridge regression (BR), the Huber regressor (HR), and the Theil–Sen regressor (TSR). The model comparison was performed using the Scikit-learn Python package, so details about these different regression models can be found in the Scikit-learn API documentation [].
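A comparison of this kind might be set up as follows. The sketch uses synthetic data, and several choices are assumptions not taken from the study: the intercept is disabled, default hyperparameters are kept, and the single-output estimators (BR, HR, TSR) are wrapped in `MultiOutputRegressor` so that each XYZ channel gets its own fit.

```python
import numpy as np
from sklearn.linear_model import (BayesianRidge, HuberRegressor,
                                  LinearRegression, MultiTaskLassoCV,
                                  TheilSenRegressor)
from sklearn.model_selection import train_test_split
from sklearn.multioutput import MultiOutputRegressor

rng = np.random.default_rng(1)

# Synthetic stand-in for normalized RAW RGB / CIE XYZ patch data.
M_true = np.array([[0.60, 0.30, 0.10],
                   [0.25, 0.70, 0.05],
                   [0.02, 0.13, 0.85]])
rgb = rng.uniform(0.05, 1.0, size=(96, 3))
xyz = rgb @ M_true.T + rng.normal(0.0, 0.002, size=(96, 3))

rgb_tr, rgb_te, xyz_tr, xyz_te = train_test_split(
    rgb, xyz, test_size=0.2, random_state=0)

models = {
    "OLS": LinearRegression(fit_intercept=False),
    "MTLCV": MultiTaskLassoCV(fit_intercept=False, cv=5),
    "BR": MultiOutputRegressor(BayesianRidge(fit_intercept=False)),
    "HR": MultiOutputRegressor(HuberRegressor(fit_intercept=False)),
    "TSR": MultiOutputRegressor(
        TheilSenRegressor(fit_intercept=False, random_state=0)),
}

# Fit every model on the training patches and score it on the test patches.
rmse = {}
for name, model in models.items():
    model.fit(rgb_tr, xyz_tr)
    predicted = model.predict(rgb_te)
    rmse[name] = float(np.sqrt(np.mean((xyz_te - predicted) ** 2)))
```

On clean, linear data such as this, the robust estimators are not expected to beat OLS by much; their benefit would show mainly when some patches are outliers (e.g., specular highlights or clipped values).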

2.8. Model Assessment

The model assessment metrics were computed using the test data. Since RMSE and residuals are in CIE XYZ units and do not necessarily reflect perceived visual errors, and since the CIELAB color space is approximately perceptually uniform, color difference metrics have been included to assess the colorimetric accuracy achieved after the adjustment [,,]. Thus, for calculating color differences, the 2° CIE XYZ predicted values must be transformed into the CIELAB color space (under illuminant D65) using the CIE formulation []. Conversion between color spaces is well-documented in the literature. We recommend following the method described in reference [] for a detailed analysis. The CIELAB space characterizes colors according to three parameters: L* for lightness, a* for the green–red chromaticity axis, and b* for the blue–yellow chromaticity axis. Two different color difference metrics were used: the legacy but often reported $\Delta E^*_{ab}$ and the improved $\Delta E_{00}$ or CIEDE2000 [], which is the current industry standard.
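For reference, the XYZ-to-CIELAB conversion mentioned above can be written compactly. The sketch below follows the standard CIE formulation and assumes the nominal D65 white point for the 2° observer with Y normalized to 100; if the XYZ data are scaled to [0, 1], the white point must be scaled in the same way. The function name is hypothetical.

```python
def xyz_to_lab(xyz, white=(95.047, 100.0, 108.883)):
    """Convert CIE XYZ to CIELAB relative to a reference white (default D65, 2°)."""
    delta = 6.0 / 29.0

    def f(t):
        # Cube root above the threshold, linear segment below it.
        if t > delta**3:
            return t ** (1.0 / 3.0)
        return t / (3.0 * delta**2) + 4.0 / 29.0

    fx, fy, fz = (f(value / ref) for value, ref in zip(xyz, white))
    L = 116.0 * fy - 16.0
    a = 500.0 * (fx - fy)
    b = 200.0 * (fy - fz)
    return L, a, b

lab_white = xyz_to_lab((95.047, 100.0, 108.883))
lab_black = xyz_to_lab((0.0, 0.0, 0.0))
```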

Given a pair of color stimuli defined in CIELAB space, the $\Delta E^*_{ab}$ color difference between them can be computed as follows []:

$$\Delta E^*_{ab} = \sqrt{(\Delta L^*)^2 + (\Delta a^*)^2 + (\Delta b^*)^2}$$

where $\Delta L^*$, $\Delta a^*$, and $\Delta b^*$ are the differences between the L*, a*, and b* coordinates of the two color stimuli. A difference value of 0 indicates that the colors are identical, and higher values indicate increasing perceptual differences between the colors.
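Because this metric is simply the Euclidean distance between two points in CIELAB space, it reduces to a one-line function. The function name is hypothetical.

```python
import math

def delta_e_ab(lab1, lab2):
    """CIE 1976 color difference: Euclidean distance in CIELAB."""
    return math.dist(lab1, lab2)

# Two colors with equal lightness that differ by 3 in a* and 4 in b*.
example = delta_e_ab((50.0, 0.0, 0.0), (50.0, 3.0, 4.0))
```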

However, it is worth noting that $\Delta E^*_{ab}$ has some limitations and may not perfectly match human perception in all cases. Therefore, more advanced color difference formulas, such as $\Delta E_{00}$, have been developed to address some of these limitations. $\Delta E_{00}$ is a color difference equation recommended by the CIE in 2001 that improves the computation of color differences for industrial applications [,,]. The equation corrects the non-uniformity of the CIELAB color space, adding lightness, chroma, and hue weighting functions and a scaling factor for the CIELAB a* axis [,,].

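The $\Delta E_{00}$ color difference equation must be applied as follows [,,]:

$$\Delta E_{00} = \sqrt{\left(\frac{\Delta L'}{k_L S_L}\right)^2 + \left(\frac{\Delta C'}{k_C S_C}\right)^2 + \left(\frac{\Delta H'}{k_H S_H}\right)^2 + R_T \left(\frac{\Delta C'}{k_C S_C}\right)\left(\frac{\Delta H'}{k_H S_H}\right)} \tag{10}$$

where $\Delta L'$, $\Delta C'$, and $\Delta H'$ are the lightness, chroma, and hue differences, $S_L$, $S_C$, $S_H$, and $R_T$ are the weighting functions, and $k_L$, $k_C$, and $k_H$ are the parametric factors or correction terms for variations in experimental conditions (under reference conditions, they are set to $k_L = k_C = k_H = 1$ []).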
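For readers who want to see how the weighting functions enter the calculation, the following is a compact, self-contained sketch of CIEDE2000 following the commonly published step-by-step formulation. It is an independent illustration and not the code integrated into COOLPI; the function name is hypothetical.

```python
import math

def delta_e_00(lab1, lab2, kL=1.0, kC=1.0, kH=1.0):
    """CIEDE2000 color difference between two CIELAB triplets."""
    L1, a1, b1 = lab1
    L2, a2, b2 = lab2

    # Rescale a* (the G factor) and compute modified chroma and hue.
    C_bar = (math.hypot(a1, b1) + math.hypot(a2, b2)) / 2.0
    G = 0.5 * (1.0 - math.sqrt(C_bar**7 / (C_bar**7 + 25.0**7)))
    a1p, a2p = (1.0 + G) * a1, (1.0 + G) * a2
    C1p, C2p = math.hypot(a1p, b1), math.hypot(a2p, b2)
    h1p = math.degrees(math.atan2(b1, a1p)) % 360.0
    h2p = math.degrees(math.atan2(b2, a2p)) % 360.0

    # Lightness, chroma, and hue differences.
    dLp = L2 - L1
    dCp = C2p - C1p
    if C1p * C2p == 0.0:
        dhp = 0.0
    else:
        dhp = h2p - h1p
        if dhp > 180.0:
            dhp -= 360.0
        elif dhp < -180.0:
            dhp += 360.0
    dHp = 2.0 * math.sqrt(C1p * C2p) * math.sin(math.radians(dhp / 2.0))

    # Arithmetic means used by the weighting functions.
    L_bar = (L1 + L2) / 2.0
    C_barp = (C1p + C2p) / 2.0
    if C1p * C2p == 0.0:
        h_bar = h1p + h2p
    elif abs(h1p - h2p) <= 180.0:
        h_bar = (h1p + h2p) / 2.0
    elif h1p + h2p < 360.0:
        h_bar = (h1p + h2p + 360.0) / 2.0
    else:
        h_bar = (h1p + h2p - 360.0) / 2.0

    T = (1.0
         - 0.17 * math.cos(math.radians(h_bar - 30.0))
         + 0.24 * math.cos(math.radians(2.0 * h_bar))
         + 0.32 * math.cos(math.radians(3.0 * h_bar + 6.0))
         - 0.20 * math.cos(math.radians(4.0 * h_bar - 63.0)))
    SL = 1.0 + 0.015 * (L_bar - 50.0) ** 2 / math.sqrt(20.0 + (L_bar - 50.0) ** 2)
    SC = 1.0 + 0.045 * C_barp
    SH = 1.0 + 0.015 * C_barp * T

    # Rotation term coupling chroma and hue differences in the blue region.
    d_theta = 30.0 * math.exp(-(((h_bar - 275.0) / 25.0) ** 2))
    RC = 2.0 * math.sqrt(C_barp**7 / (C_barp**7 + 25.0**7))
    RT = -math.sin(math.radians(2.0 * d_theta)) * RC

    tL, tC, tH = dLp / (kL * SL), dCp / (kC * SC), dHp / (kH * SH)
    return math.sqrt(tL**2 + tC**2 + tH**2 + RT * tC * tH)
```

Any implementation of this formula is best validated against published test pairs before use, since errors in the hue-angle branches are easy to make and hard to spot.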

Given the intricacies inherent in the computation of color differences, this article provides solely the pertinent equation facilitating its determination (Equation (10)). For an exhaustive study of each of the parameters involved in the calculation of $\Delta E_{00}$, the reader is referred to the specialized colorimetric references, which give a more detailed account of their calculation and the relevant mathematical observations []. It is imperative to note that these references have been meticulously considered during the integration of the equation into the COOLPI framework.

In order to evaluate the quality of the model fit, both the RMSE and the mean of the residuals in CIE XYZ units were analyzed, as well as the values of the color differences $\Delta E^*_{ab}$ and $\Delta E_{00}$, to enable the adjustment’s evaluation from a colorimetric point of view. Different criteria can be considered in defining a maximum acceptable color difference, and these thresholds are often goal-specific. For example, Vrhel and Trussell set the threshold at ≤3 for color correction purposes [], while Song and Luo [] established ≤4.5 as the acceptability threshold when viewing images on a monitor. The digitization advice provided by the Metamorfoze Preservation Imaging Guidelines [] mentions an average color difference of ≤2.83 for the neutral patches and ≤4 for all patches of a CCDSG target. Using the latter guidelines, and in agreement with our experience in rock art documentation [], this paper uses an average $\Delta E^*_{ab}$ threshold of ≤4. Although values from different color difference metrics should not be compared (they vary across the CIELAB color space), and even though $\Delta E_{00}$ thresholds are usually tighter than their $\Delta E^*_{ab}$ counterparts (see [] for a comparison of both metrics in dentistry), this paper adopts an average $\Delta E_{00}$ threshold of ≤4. The fact that the $\Delta E_{00}$ threshold is identical to the $\Delta E^*_{ab}$ threshold is not only for ease of use, but also because it is in line with the $\Delta E^*_{ab}$–$\Delta E_{00}$ regression equation proposed by Lee and Powers for color differences below 5 [].

2.9. Methodological Application in a Real Scenario

Typically, cultural heritage photo acquisition occurs under non-homogeneous and uncontrolled illumination. This is certainly the case when photographing cultural assets, like graffiti, placed outside. From the dataset obtained using the images from the Nikon Z 7II camera, the transformation matrix coefficients were calculated using the WPP constraint (Section 4). The color correction matrix was then applied to photos of graffiti located along Vienna’s Donaukanal. To comprehend the outdoor lighting conditions, every graffito photo was complemented by a measurement of the solar spectral illumination (i.e., the illumination’s SPD) using a Sekonic C-7000 SPECTROMASTER (Section 2.4).

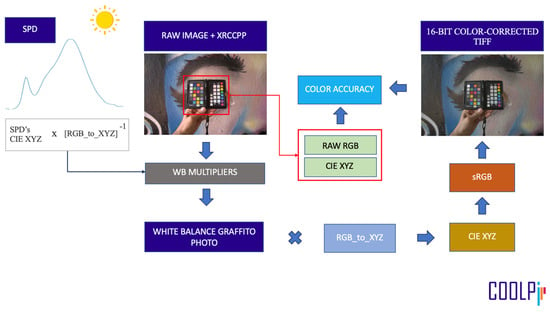

All the steps of the processing pipeline—which was entirely executed within COOLPI (Section 2.10)—can be summarized as follows (the workflow diagram is shown in Figure 4):

Figure 4.

Color correction pipeline.

- Load and minimally process (see Section 2.5) the RAW graffito sample photo, which includes an XRCCPP as a colorimetric reference;

- Obtain the measured SPD of the illuminant corresponding to the graffito photo;

- Compute the SPD’s CIE XYZ values and multiply them by the inverse of the transformation matrix to yield the white balance multipliers;

- Apply the multipliers to compute the white-balanced graffito photo;

- Apply the RGB-to-XYZ color transformation to the white-balanced graffito photo;

- Transform the CIE XYZ to sRGB to obtain a 16-bit color-corrected TIFF image;

- Extract the RAW RGB data of the patches of the XRCCPP (for color accuracy evaluation);

- Assess the color accuracy achieved after the process (computing the metrics described in Section 2.8).
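The white balancing and color transformation steps of this pipeline can be sketched as follows. This is a simplified NumPy illustration, not the COOLPI code, and it rests on several assumptions: the matrix `M` is a made-up example whose rows sum to the D65 white point, the multipliers are taken as the reciprocal of the camera-space illuminant response normalized to the green channel, and the XYZ-to-sRGB step uses the widely published D65 matrix and transfer function.

```python
import numpy as np

# Widely published linear XYZ (D65, Y in [0, 1]) to linear sRGB matrix.
XYZ_TO_SRGB = np.array([[ 3.2406, -1.5372, -0.4986],
                        [-0.9689,  1.8758,  0.0415],
                        [ 0.0557, -0.2040,  1.0570]])

def white_balance_multipliers(M, xyz_white):
    """Map the illuminant XYZ into camera space and invert it per channel."""
    camera_white = np.linalg.inv(M) @ xyz_white
    return camera_white[1] / camera_white  # assumption: green multiplier = 1

def srgb_encode(linear):
    """Apply the sRGB transfer function to linear values clipped to [0, 1]."""
    linear = np.clip(linear, 0.0, 1.0)
    return np.where(linear <= 0.0031308,
                    12.92 * linear,
                    1.055 * linear ** (1.0 / 2.4) - 0.055)

def color_correct(raw_rgb, M, xyz_white):
    """RAW RGB (..., 3) -> white-balanced RGB -> XYZ -> encoded sRGB."""
    balanced = raw_rgb * white_balance_multipliers(M, xyz_white)
    xyz = balanced @ M.T
    return srgb_encode(xyz @ XYZ_TO_SRGB.T)

def to_uint16(srgb):
    """Quantize encoded sRGB in [0, 1] to 16-bit integers for a TIFF file."""
    return np.round(srgb * 65535.0).astype(np.uint16)

# Hypothetical characterization matrix (rows sum to the D65 white point).
M = np.array([[0.55, 0.30, 0.10047],
              [0.25, 0.68, 0.07],
              [0.03, 0.12, 0.93883]])
xyz_white = M @ np.array([0.5, 1.0, 0.8])   # toy scene illuminant
multipliers = white_balance_multipliers(M, xyz_white)

# A neutral surface seen under that illuminant should come out gray.
neutral_raw = 0.4 * np.array([0.5, 1.0, 0.8])
neutral_srgb = color_correct(neutral_raw, M, xyz_white)
neutral_16bit = to_uint16(neutral_srgb)
```

The remaining steps (RAW loading, SPD integration, patch extraction, and the color difference assessment) depend on file handling and measured data and are therefore left out of this sketch.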

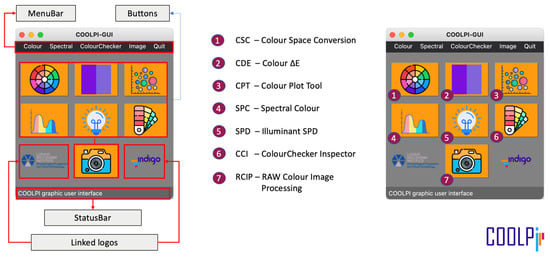

2.10. COOLPI

Since one of INDIGO’s main goals was to obtain color-accurate orthophotographs, the COOLPI toolbox was developed within the project. COOLPI is an open-source and completely free Python package, including classes, methods, and functions for colorimetric and spectral data treatment [,]. The package has been developed and tested rigorously following the colorimetric standards published by the CIE []. In addition, the COOLPI toolbox includes functionalities for RAW image conversion, facilitating control over every RAW development step (something that commercial image processing software tends to hide from the user). COOLPI also comes with a graphical user interface (GUI) that integrates the main functionalities of the COOLPI package. The GUI was made to be intuitive and user-friendly, especially for non-programmers (Figure 5).

Figure 5.

COOLPI-GUI initial view (Source: COOLPI API Documentation []).

3. Results and Discussion

3.1. White Point Preservation Assessment

As a starting point for this study, we analyzed the behavior of the WPP constraint for computing the RGB-to-XYZ transformation matrix. This analysis was carried out for the two scenarios, i.e., laboratory and in situ illumination. Therefore, the ND-ODS and ND-WPPDS datasets of the Nikon D5600 images (Table 2) were used to compute and perform the model comparison.

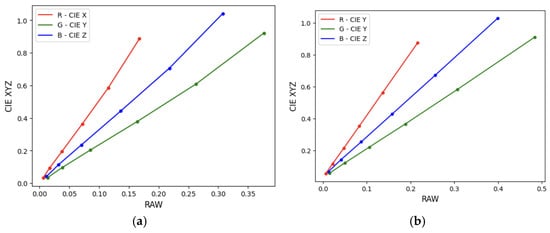

Although it is assumed that a camera’s RGB values are linearly related to the incident radiance, it is best to check this assumption. One way of doing this is by plotting the RGB values of the gray patches on the photographed color reference target against their measured CIE XYZ tristimulus values. Figure 6 depicts the outcome of this process for the Nikon D5600 using the XRCCPP and the CCDSG color reference targets. Both graphs show that no non-linearity correction is needed for the RAW RGB data [].
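Besides visual inspection of such a plot, the linearity can be quantified by fitting a straight line to the gray patches and inspecting the coefficient of determination. The sketch below uses made-up gray-patch values and a hypothetical function name; measured data would replace them.

```python
import numpy as np

def linearity_fit(reference_values, raw_values):
    """Fit raw = slope * reference + intercept and return (slope, intercept, R^2)."""
    reference_values = np.asarray(reference_values, dtype=float)
    raw_values = np.asarray(raw_values, dtype=float)
    slope, intercept = np.polyfit(reference_values, raw_values, 1)
    fitted = slope * reference_values + intercept
    ss_res = np.sum((raw_values - fitted) ** 2)
    ss_tot = np.sum((raw_values - raw_values.mean()) ** 2)
    return slope, intercept, 1.0 - ss_res / ss_tot

# Hypothetical gray-scale patches: measured Y versus RAW green channel.
gray_Y = np.array([0.03, 0.09, 0.19, 0.36, 0.59, 0.90])
gray_G = 0.8 * gray_Y + 0.01

slope, intercept, r2 = linearity_fit(gray_Y, gray_G)
```

An R² very close to 1 for each channel supports using the RAW data without a non-linearity correction, whereas a systematic curvature in the residuals would suggest otherwise.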

Figure 6.

Assessment of the linearity of RAW data from the Nikon D5600 camera. Grayscale patch plot for two color reference targets: (a) XRCCPP. (b) CCDSG.

To determine the color reference target most suitable for obtaining the RGB-to-XYZ matrix, a pre-analysis applied an OLS regression model for the ND-WPPDS dataset to all reference targets under the JN-D65 illuminant (see Table 3). The training and test columns refer to the color patches used for model training and evaluation, respectively. These patches were randomly selected using the train_test_split function of the scikit-learn Python library. As mentioned in Section 2.8, the RMSE and the mean of the residuals (in CIE XYZ units) were analyzed, as well as the $\Delta E^*_{ab}$ and $\Delta E_{00}$ color differences.

Table 3.

Color reference target selection via an OLS model assessment on the ND-WPPDS dataset under JN-D65 illuminant (the best results are highlighted in bold).

$\Delta E_{00}$ (Equation (10)) values less than five were obtained for all targets, with higher values for color reference charts featuring more patches. The lowest $\Delta E_{00}$ value (1.642) was obtained with the XRCCPP target, although the model was trained with the fewest data points. In addition, that reference chart also resulted in the highest predictive capability ($R^2$ value of 0.968) (Table 3). As expected, the OLS regression model proved accurate enough for obtaining the transformation matrix coefficients, given the linearity of the RAW RGB data.

After selecting the best-performing color reference chart, the comparative analysis between the ND-ODS and ND-WPPDS datasets was carried out using only the XRCCPP data under each illuminant as training/test data.

Table 4 shows the illuminant-specific model assessment metrics obtained after the adjustment using the ND-ODS and ND-WPPDS datasets. In addition, the results are grouped into “Laboratory” (JN-D65, JN-A, JN-F, and JN-D50), “In situ” (IN-29, IN-30, and OUT-38), and “All” illuminants. The computed RGB-to-XYZ transformation matrix coefficients are shown in Table 5 for the ND-ODS and ND-WPPDS datasets, again for each illuminant separately and grouped into “Laboratory”, “In situ” or “All”.

Table 4.

OLS model assessment metrics for the ND-ODS dataset only for the XRCCPP target under the different illuminants (highlighted in bold are the best results obtained).

Table 5.

RGB-to-XYZ 3 × 3 transformation matrix coefficients for the XRCCPP color chart data.

These analyses show that similar results are obtained using the ND-ODS or ND-WPPDS datasets. Both datasets perform with sufficient accuracy under laboratory conditions and in situ lighting. Values lower than two were obtained for the $\Delta E_{00}$ color difference (Table 4). As expected, significant differences between the two datasets emerge when the illuminants are grouped. For the ND-ODS dataset, $\Delta E_{00}$ values greater than four were obtained, while they were always less than four for the ND-WPPDS data. $\Delta E_{00}$ values below four (i.e., the threshold value established for color differences; Section 2.8) guarantee that it is impossible to perceive the difference between the predicted and observed data for the color patches used for model testing, i.e., between the patches in the real world and the color-corrected digital image.

Although from a statistical point of view it can be stated that both datasets give satisfactory results, we are mainly interested in comparing the transformation matrix coefficients obtained with or without the WPP constraint. Large coefficient differences can be observed for the ND-ODS dataset, where it is clear that they depend on the illumination used to obtain them. The opposite situation occurs with the ND-WPPDS dataset. In this case, the coefficients obtained are more homogeneous across illumination changes thanks to the WPP constraint (Table 5).

The ND-WPPDS data yield higher values for the RMSE and residual CIE XYZ values than the ND-ODS data, because the former feature the constraint that the maximum value for CIE XYZ is (1,1,1). Although the resulting matrix preserves the white and grays, it will not work well for pixels whose tristimulus values exceed (1,1,1). For both datasets, higher RMSE values were obtained when the regression used the data for all illuminants. However, it should be noted that higher values were also obtained for R2, which implies a higher predictive capability for the model. The highest predictive capabilities were found in the WPP-constrained data. Overall, it is thus clear that the WPP constraint provides better and more stable computation of the coefficients in the RGB-to-XYZ transformation matrix.
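One common way to express white point preservation is as a hard equality constraint in the least-squares problem, so that the fitted matrix maps the white RGB triplet exactly onto the white tristimulus values. The sketch below solves that constrained problem through its KKT system. It is an illustrative formulation under stated assumptions, not necessarily how COOLPI builds the ND-WPPDS data: the function name `fit_wpp_matrix`, the synthetic patches, and the choice of (1,1,1) as white in both spaces are all assumptions.

```python
import numpy as np

def fit_wpp_matrix(rgb, xyz, white_rgb, white_xyz):
    """Least-squares 3x3 matrix M with the hard constraint M @ white_rgb == white_xyz."""
    white_rgb = np.asarray(white_rgb, dtype=float)
    white_xyz = np.asarray(white_xyz, dtype=float)
    gram = rgb.T @ rgb                              # 3x3 normal-equation matrix
    # KKT system: [[2G, w], [w^T, 0]] @ [m; lambda] = [2 A^T b; t], per XYZ channel.
    kkt = np.zeros((4, 4))
    kkt[:3, :3] = 2.0 * gram
    kkt[:3, 3] = white_rgb
    kkt[3, :3] = white_rgb
    rhs = np.vstack([2.0 * rgb.T @ xyz, white_xyz[None, :]])  # 4x3, one column per channel
    solution = np.linalg.solve(kkt, rhs)
    return solution[:3].T                           # drop the Lagrange multipliers

# Synthetic patches (hypothetical data); rows of the generating matrix do not sum to one.
rng = np.random.default_rng(2)
generating_matrix = np.array([[0.62, 0.30, 0.11],
                              [0.26, 0.70, 0.05],
                              [0.03, 0.10, 0.90]])
rgb = rng.uniform(0.02, 1.0, size=(24, 3))
xyz = rgb @ generating_matrix.T + rng.normal(0.0, 0.005, size=(24, 3))
white = np.ones(3)

ols_matrix = np.linalg.lstsq(rgb, xyz, rcond=None)[0].T
wpp_matrix = fit_wpp_matrix(rgb, xyz, white, white)
print("OLS maps white to:", ols_matrix @ white)
print("WPP maps white to:", wpp_matrix @ white)
```

On identical data, a constrained fit cannot have a smaller residual than the unconstrained one, so a slightly higher RMSE is the expected price for an exactly preserved white.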

The next step was to evaluate the behavior of the WPP constraint on large datasets. Thus, an additional OLS adjustment was performed using the data for all of the color reference charts photographed under each illuminant, and one for all in situ, laboratory, and laboratory + in situ photographs. The model assessment metrics computed after the adjustment can be found in Table 6; Table 7 lists the coefficients obtained for the color transformation matrix.

Table 6.

OLS model assessment metrics for the full ND-WPPDS dataset (highlighted in bold are the best results obtained).

Table 7.

RGB-to-XYZ 3 × 3 transformation matrix coefficients for the full ND-WPPDS dataset.

The results show that the combination of color charts performs very well under in situ illuminants. In fact, better results were obtained than with the model trained using only the JNCVLS color cabin photos, for which the highest RMSE (0.058) and color difference value (5.067) were found (Table 6). This is due to the limitations of the color cabin, which cannot be entirely closed. Also, a larger dataset was used for model training and validation compared to the adjustment using the images under in situ illumination (Table 6, row ‘All’). However, the fit can be considered sufficiently accurate. Regardless of the illuminant used, the coefficients of the transformation matrix exhibit minimal variation (Table 7).

For practical purposes, the results obtained for the in situ illuminants are equivalent to those obtained using the entire WPPDS dataset, in terms of the model predictive capability and color difference metrics, since color differences less than four are sufficiently accurate.

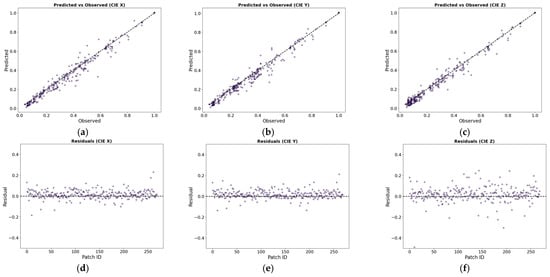

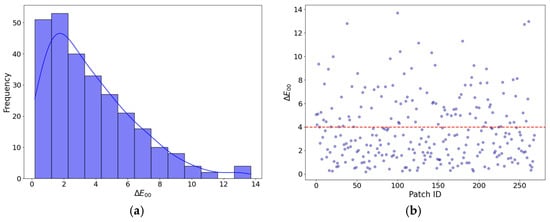

As a supplementary aid to the statistical model metrics presented in Table 6, Figure 7 shows the predicted versus the observed CIE XYZ values and the residuals obtained after the adjustment using the full ND-WPPDS dataset, while Figure 8 shows the color differences obtained. The graphs confirm that the OLS regression model is adequate for this task and yields satisfactory and accurate results from a colorimetric point of view. This implies that the model can estimate the RGB-to-XYZ transformation matrix coefficients with sufficient accuracy for laboratory and/or in situ illumination.

Figure 7.

The upper row depicts the predicted versus observed CIE XYZ values for: (a) CIE X, (b) CIE Y, and (c) CIE Z. The lower row depicts the residuals: (d) CIE X, (e) CIE Y, (f) CIE Z. These values result from an OLS adjustment on all ND-WPPDS data.

Figure 8.

Color difference values after an OLS adjustment on all ND-WPPDS data: (a) histogram; (b) color difference values (the red horizontal line marks the limit set at four).

3.2. Linear Regression Model Comparison

After analyzing the behavior of the OLS regression model, the question is whether it is possible to improve the results by employing more robust and complex linear models, such as those provided by specific mathematical computing libraries like Scikit-learn (which is very versatile and easy to implement and understand).

As stated in Section 2.7, a comparison between the OLS, MTLCV, BR, HR, and TSR regression models was carried out. This model comparison was performed using the full ND-WPPDS dataset from the Nikon D5600 images (Table 2). The results of the comparative analysis are shown in Table 8, and the coefficients for the transformation matrix are shown in Table 9.

Table 8.

Model assessment metrics for the different linear regression adjustments for the full ND-WPPDS dataset (the best results obtained are highlighted in bold).

Table 9.

RGB-to-XYZ 3 × 3 transformation matrix coefficients for the different linear regression adjustments for the full ND-WPPDS dataset.

The best result was obtained using the HR model, since this model is robust to outliers. However, there were no major improvements compared to OLS. The MTLCV method (which includes a leave-one-out cross-validation) produced an RMSE and R2 identical to those of the OLS regression, but with slightly higher color differences, so an OLS adjustment including cross-validation is not necessary. The predictive capability of the models (R2 around 0.97) and the average color differences obtained after fitting (less than four) are all equivalent (Table 8). This can also be noted by comparing the coefficients obtained for the transformation matrix (Table 9). The greatest difference is observed in the coefficients obtained via the TSR model, but these differences are not significant from a practical perspective.

From a computational standpoint, there are no major distinctions either. It should be noted that the BR, HR, and TSR models must be calculated independently to obtain the coefficients of the transformation matrix (i.e., three different regression models are needed: RAW RGB to X, RAW RGB to Y, and RAW RGB to Z). However, their implementation in Python is not complicated; in terms of computational cost, there is no difference (except for models trained on large datasets, in which case it would have to be considered by the user).
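The per-channel fitting mentioned above can be sketched with Scikit-learn as follows. The example uses `HuberRegressor` as the robust model and `LinearRegression` for OLS; the helper `fit_per_channel`, the synthetic patches, and the simulated outliers are hypothetical and only illustrate how three single-output fits are stacked into one 3 × 3 matrix. The intercept is disabled here as an assumption, to keep the model a pure matrix transform.

```python
import numpy as np
from sklearn.linear_model import HuberRegressor, LinearRegression

def fit_per_channel(make_model, rgb, xyz):
    """Fit one single-output regressor per XYZ channel and stack the coefficients."""
    rows = []
    for channel in range(xyz.shape[1]):
        model = make_model()
        model.fit(rgb, xyz[:, channel])
        rows.append(model.coef_)          # three coefficients for this channel
    return np.vstack(rows)                # 3x3 matrix, one row each for X, Y, Z

# Synthetic patches with a few corrupted X values (hypothetical data).
rng = np.random.default_rng(1)
true_matrix = np.array([[0.60, 0.30, 0.10],
                        [0.25, 0.70, 0.05],
                        [0.02, 0.10, 0.90]])
rgb = rng.uniform(0.02, 1.0, size=(72, 3))
xyz = rgb @ true_matrix.T + rng.normal(0.0, 0.005, size=(72, 3))
xyz[:6, 0] += 0.3                         # simulated outliers in the X channel

# OLS handles all three outputs in a single multi-output fit.
ols_matrix = LinearRegression(fit_intercept=False).fit(rgb, xyz).coef_
# HuberRegressor is single-output, hence the per-channel loop.
huber_matrix = fit_per_channel(
    lambda: HuberRegressor(fit_intercept=False, max_iter=1000), rgb, xyz)

print("OLS max coefficient error:  ", np.abs(ols_matrix - true_matrix).max())
print("Huber max coefficient error:", np.abs(huber_matrix - true_matrix).max())
```

The same helper would accept other single-output estimators (for example `BayesianRidge` or `TheilSenRegressor`) by swapping the factory passed as `make_model`.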

Although models more robust to outliers (such as HR) can be used, this analysis showed that using the OLS model is appropriate: it is straightforward to interpret, easy to implement, and computationally efficient, while providing results comparable to those of more complex models. In this comparison, none of the other linear regression models yielded meaningfully better results.

4. Practical Application in a Graffiti Scenario

So far, this paper has shown that one can obtain a reliable RGB-to-XYZ transformation matrix via an OLS regression model with WPP constraint using Nikon D5600 images taken under different lighting conditions: that is, laboratory settings and/or in situ illuminants (e.g., indoor, outdoor, or both). However, how transferable is this approach to another camera like Nikon’s Z 7II, and what do the results look like when that computed characterization matrix is applied to a collection of graffiti photographs taken during typical heritage documentation activities?

First, the Nikon Z 7II transformation matrix was computed via the OLS regression model using only photos taken under in situ lighting conditions (i.e., the NZ-WPPDS dataset; Table 2), since this is common in cultural heritage documentation projects. The statistical estimators and color differences obtained after the adjustment are given in Table 10 for each illuminant and grouped. Table 11 lists the coefficients of the RGB-to-XYZ transformation matrix.

Table 10.

OLS model assessment metrics for the full NZ-WPPDS dataset (the best results are highlighted in bold).

Table 11.

RGB-to-XYZ 3 × 3 transformation matrix coefficients for the full NZ-WPPDS dataset.

The best results were obtained for illuminant IN-30, corresponding to indoor ambient light conditions (Section 2.4). Noteworthy are the model’s predictive capability (R2 = 0.995) and the average color difference of less than two (Table 10). Even when considering the entire NZ-WPPDS dataset (grouping all illuminants), the predictive capability remained high (R2 = 0.985) and the average color difference stayed below three. The coefficients for each of the four matrices were also similar, as shown in Table 11. Therefore, this methodology for estimating a transformation matrix can be considered appropriate for characterizing different digital photo cameras in various illumination conditions.

Upon obtaining the RGB-to-XYZ transformation matrix for the Nikon Z 7II camera (i.e., the in situ matrix, Table 11), the color transformation was applied to 45 photos of graffiti located along Vienna’s Donaukanal. These photos were acquired by a staff member of project INDIGO during one of the weekly photographic tours. INDIGO’s photographic tours aimed to document new graffiti that had appeared since the last tour. Since almost 13 km of urban surfaces had to be checked during each tour, data were collected relatively quickly and during all weather conditions. This is an important fact to consider because it means the data resulted from a practical, real-world scenario and were not just collected to optimize the colorimetric results. During a photographic tour, each new graffito was documented as follows []. First, an XRCCPP target was photographed in front of the graffito, so the target received the same illumination as the graffito. Then, the graffito’s spectral illumination was measured with a Sekonic C-7000 SPECTROMASTER spectrometer. Finally, the graffito was photographed from all possible sides, enabling the creation of a textured 3D surface model and an orthophotograph. This test utilizes the XRCCPP color chart and the illumination’s SPD of 45 new graffiti documented on 22 July 2022.
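Applying a characterization matrix to a photograph amounts to a per-pixel matrix product on linear, white-balanced RGB data. The following sketch shows that step in isolation; it is a generic illustration rather than the pipeline of Section 2.9, and the function name `apply_color_matrix`, the example matrix, and the tiny test image are all hypothetical.

```python
import numpy as np

def apply_color_matrix(linear_rgb, matrix, wb_multipliers=(1.0, 1.0, 1.0)):
    """Convert an H x W x 3 linear RGB image to CIE XYZ with a 3x3 matrix."""
    # Per-channel white balance, broadcast over all pixels.
    balanced = np.asarray(linear_rgb, dtype=float) * np.asarray(wb_multipliers, dtype=float)
    # Matrix product applied to every pixel: xyz = M @ rgb.
    return balanced @ np.asarray(matrix, dtype=float).T

# Hypothetical matrix whose rows sum to one, so white (1,1,1) maps to (1,1,1).
matrix = np.array([[0.60, 0.30, 0.10],
                   [0.25, 0.70, 0.05],
                   [0.02, 0.08, 0.90]])
image = np.ones((2, 3, 3))            # 2 x 3 pixel image, all white
image[0, 0] = [0.5, 0.2, 0.1]         # one colored pixel
xyz_image = apply_color_matrix(image, matrix)
print(xyz_image[0, 0], xyz_image[1, 2])
```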

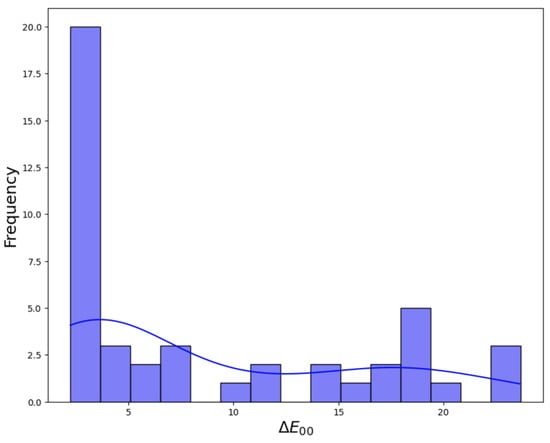

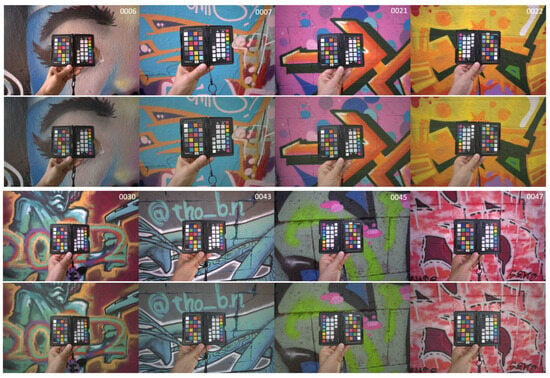

After following the methodological steps described in Section 2.9, average color difference values for the 24 patches of the XRCCPP chart could be computed. These are given in Table 12, and Figure 9 shows the histogram for the color difference values obtained. Table 13 classifies the absolute and relative number of images according to these average color differences. In addition, Figure 10 depicts a selection of eight original versus color-corrected photos for which the color difference was less than four.
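The aggregation behind such a classification can be sketched in a few lines: average the per-patch color differences of each photo, then count how many photos fall below the threshold. The values below are invented for illustration (four patches per photo instead of 24), and the names `patch_delta_e` and `classify_by_threshold` are hypothetical.

```python
def classify_by_threshold(mean_values, threshold=4.0):
    """Return absolute and relative counts of photos below the threshold."""
    total = len(mean_values)
    below = sum(1 for value in mean_values.values() if value < threshold)
    return {
        "below": below,
        "not_below": total - below,
        "below_percent": 100.0 * below / total,
    }

# Invented per-patch color differences for four photos (not the paper's data).
patch_delta_e = {
    "graffito_A": [1.2, 2.5, 3.1, 2.0],
    "graffito_B": [4.8, 5.5, 6.1, 3.9],
    "graffito_C": [2.9, 3.5, 4.2, 3.0],
    "graffito_D": [7.0, 6.2, 5.1, 4.4],
}
mean_delta_e = {name: sum(values) / len(values) for name, values in patch_delta_e.items()}
summary = classify_by_threshold(mean_delta_e)
print(mean_delta_e)
print(summary)
```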

Table 12.

Color difference values for the graffiti sample images (instances with a difference of less than four are highlighted in bold).

Figure 9.

Histogram of color difference values for the graffiti sample images.

Table 13.

All graffiti photos classified according to their color difference.

Figure 10.

Application of the Nikon Z 7II transformation matrix to a sample of graffiti images. The upper photo of each pair is the in-camera-generated JPG photo, with its color-corrected version depicted below. The graffito ID is mentioned in the upper right corner of each photo pair.

With color difference values of less than four obtained for only half of the photos, these results are less positive than those of the more controlled tests. This is likely due to four factors. First, the data were acquired outdoors in an urban setting. Although it was sunny during photo acquisition, a graffito’s illumination (and thus also that of the reference target) cannot always be homogeneous, for example, when located under a bridge (see Figure 11 on the left) or partly in the shade. Such non-homogeneous illumination of the target negatively affects the achievable color accuracy. Second, the ColorChecker target is held at arm’s length, so the photographer may have partly influenced the target’s illumination (for example, by partly shielding it or because the photographer’s clothes reflect on the target). Third, the SPD has been used to compute the white balance multipliers (via the camera transformation matrix; see Section 2.9). The correctness of this approach assumes a suitable camera transformation matrix and identical illumination conditions for the target and the spectrometer measurement. When the latter is compromised, the results will be mediocre. Fourth, the target’s patches are not Lambertian reflectors, so a target that is not held perfectly perpendicular to the camera’s optical axis might negatively influence the results (see Figure 11 on the right).

Figure 11.

Two cases where the photographs of the XRCCPP target are not ideal. (Case A) Image of a graffito taken in a shaded area. (Case B) Graffito including metallic and fluorescent inks. The graffito ID is mentioned in the upper right corner of each photo pair.

Although data collected during several hours of photographing graffiti in warm conditions are not expected to yield the same colorimetric accuracy as data carefully gathered for academic purposes, all four issues warrant further research so that the entire graffiti documentation workflow, or any heritage workflow for that matter, can be further optimized. Despite these limitations, the current method already allows for computing color-accurate graffiti photographs in half of all cases, using a procedure in which all parameters are controlled rather than hidden in a black box.

5. Conclusions

Although it is advisable to carry out photographic measurements under laboratory conditions, this requirement can rarely be met, especially in outdoor photography, as is often the case in cultural heritage documentation. This study, which used a white point preservation (WPP) constraint to characterize digital cameras and compute an RGB-to-XYZ transformation matrix, revealed that the color differences achievable via in situ illuminants were largely similar to those obtained under laboratory settings. Furthermore, the results underscored the efficacy of the WPP constraint in camera characterization and revealed that the employed ordinary least squares regression fitting yields results that are comparably accurate to those of more robust but computationally more demanding fitters.

Its application to graffiti photos highlighted that the proposed workflow is suitable for generating color-accurate results in real-world heritage documentation scenarios using different lighting conditions. Although a few aspects of the graffiti documentation pipeline need improvements, these corrections likely have less to do with the developed method and more with the data acquisition. Notably, the workflow has been seamlessly integrated into the COOLPI package, thus supporting the straightforward retrieval of color-accurate data from digital images. The authors are confident that the methodology and its application through COOLPI are suitable for all fields where accurate color information is required.

Author Contributions

Conceptualization, A.M.-T. and G.J.V.; methodology, A.M.-T.; software, A.M.-T.; validation, A.M.-T.; formal analysis, A.M.-T.; investigation, A.M.-T. and G.J.V.; resources, A.M.-T. and G.J.V.; writing—original draft preparation, A.M.-T.; writing—review and editing, G.J.V., D.H.-L. and D.G.-A.; visualization, A.M.-T.; supervision, G.J.V., D.H.-L. and D.G.-A.; project administration, G.J.V.; funding acquisition, G.J.V. All authors have read and agreed to the published version of the manuscript.

Funding

The INDIGO project, Heritage_2020-014_INDIGO, was funded by the Heritage Science Austria program of the Austrian Academy of Sciences (ÖAW).

Data Availability Statement

The code for the analysis performed is available in the COOLPI GitHub repository at https://github.com/GraffitiProjectINDIGO/coolpi/tree/main/wpp_data (accessed on 24 February 2024).

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Boochs, F.; Bentkowska-Kafel, A.; Degrigny, C.; Karaszewski, M.; Karmacharya, A.; Kato, Z.; Picollo, M.; Sitnik, R.; Trémeau, A.; Tsiafaki, D.; et al. Colour and Space in Cultural Heritage: Key Questions in 3D Optical Documentation of Material Culture for Conservation, Study and Preservation. In Digital Heritage. Progress in Cultural Heritage: Documentation, Preservation, and Protection; Ioannides, M., Magnenat-Thalmann, N., Fink, E., Žarnić, R., Yen, A.-Y., Quak, E., Eds.; Springer International Publishing: Cham, Switzerland, 2014; pp. 11–24.

- Korytkowski, P.; Olejnik-Krugly, A. Precise Capture of Colors in Cultural Heritage Digitization. Color Res. Appl. 2017, 42, 333–336.

- Molada-Tebar, A.; Marqués-Mateu, Á.; Lerma, J.L. Correct Use of Color for Cultural Heritage Documentation. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, IV-2-W6, 107–113.

- Feitosa-Santana, C.; Gaddi, C.M.; Gomes, A.E.; Nascimento, S.M.C. Art through the Colors of Graffiti: From the Perspective of the Chromatic Structure. Sensors 2020, 20, 2531.

- Gaiani, M.; Apollonio, F.I.; Ballabeni, A.; Remondino, F. Securing Color Fidelity in 3D Architectural Heritage Scenarios. Sensors 2017, 17, 2437.

- Markiewicz, J.; Pilarska, M.; Łapiński, S.; Kaliszewska, A.; Bieńkowski, R.; Cena, A. Quality Assessment of the Use of a Medium Format Camera in the Investigation of Wall Paintings: An Image-Based Approach. Measurement 2019, 132, 224–237.

- Rowlands, D.A. Color Conversion Matrices in Digital Cameras: A Tutorial. OE 2020, 59, 110801.

- Punnappurath, A.; Brown, M.S. Learning Raw Image Reconstruction-Aware Deep Image Compressors. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 1013–1019.

- Sharma, G. Color Fundamentals for Digital Imaging. In Digital Color Imaging Handbook; CRC Press: Boca Raton, FL, USA, 2003; ISBN 978-1-315-22008-6.

- CIE TC 1-85; CIE 015:2018 Colorimetry. 4th ed. International Commission on Illumination (CIE): Vienna, Austria, 2019.

- Molada-Tebar, A.; Lerma, J.L.; Marqués-Mateu, Á. Camera Characterization for Improving Color Archaeological Documentation. Color Res. Appl. 2018, 43, 47–57.

- Finlayson, G.D.; Drew, M.S. The Maximum Ignorance Assumption with Positivity; Society for Imaging Science and Technology: Springfield, VA, USA, 1996; pp. 202–205.

- Finlayson, G.D.; Drew, M.S. White-Point Preserving Color Correction. CIC 1997, 5, 258–261.

- Bianco, S.; Gasparini, F.; Russo, A.; Schettini, R. A New Method for RGB to XYZ Transformation Based on Pattern Search Optimization. IEEE Trans. Consum. Electron. 2007, 53, 1020–1028.

- Finlayson, G.D.; Morovic, P.M. Metamer Constrained Color Correction. J. Imaging Sci. Technol. 2000, 44, 295–300.

- Funt, B.; Ghaffari, R.; Bastani, B. Optimal Linear RGB-to-XYZ Mapping for Color Display Calibration. Available online: https://summit.sfu.ca/item/18268 (accessed on 2 November 2023).

- Hubel, P.M.; Holm, J.; Finlayson, G.D.; Drew, M.S. Matrix Calculations for Digital Photography. Color Imaging Conf. 1997, 5, 105–111.

- Rao, A.R.; Mintzer, F. Color Calibration of a Colorimetric Scanner Using Non-Linear Least Squares. In Proceedings of the IS&T’s 1998 PICS Conference, Portland, OR, USA, 17–20 May 1998.

- Vazquez-Corral, J.; Connah, D.; Bertalmío, M. Perceptual Color Characterization of Cameras. Sensors 2014, 14, 23205–23229.

- Chakrabarti, A.; Scharstein, D.; Zickler, T.E. An Empirical Camera Model for Internet Color Vision. In Proceedings of the British Machine Vision Conference (BMVC), London, UK, 7–10 September 2009; Volume 1, p. 4.

- Ramanath, R.; Snyder, W.E.; Yoo, Y.; Drew, M.S. Color Image Processing Pipeline. IEEE Signal Process. Mag. 2005, 22, 34–43.

- Tominaga, S.; Nishi, S.; Ohtera, R. Measurement and Estimation of Spectral Sensitivity Functions for Mobile Phone Cameras. Sensors 2021, 21, 4985.

- Molada-Tebar, A.; Verhoeven, G.J. Towards Colour-Accurate Documentation of Anonymous Expressions. Disseminate Graffiti-Scapes 2022, 86–130.

- Verhoeven, G.; Wild, B.; Schlegel, J.; Wieser, M.; Pfeifer, N.; Wogrin, S.; Eysn, L.; Carloni, M.; Koschiček-Krombholz, B.; Molada-Tebar, A.; et al. Project INDIGO: Document, Disseminate & Analyse a Graffiti-Scape. In Proceedings of the 9th International Workshop 3D-ARCH “3D Virtual Reconstruction and Visualization of Complex Architectures”, Mantova, Italy, 2–4 March 2022; ISPRS: Baton Rouge, LA, USA, 2022; Volume XLVI-2/W1-2022, pp. 513–520.

- Wild, B.; Verhoeven, G.J.; Wieser, M.; Ressl, C.; Schlegel, J.; Wogrin, S.; Otepka-Schremmer, J.; Pfeifer, N. AUTOGRAF—AUTomated Orthorectification of GRAFfiti Photos. Heritage 2022, 5, 2987–3009.

- Verhoeven, G.J.; Wogrin, S.; Schlegel, J.; Wieser, M.; Wild, B. Facing a Chameleon—How Project INDIGO Discovers and Records New Graffiti. Disseminate Graffiti-Scapes 2022, 63–85.

- Molada-Tebar, A.; Riutort-Mayol, G.; Marqués-Mateu, Á.; Lerma, J.L. A Gaussian Process Model for Color Camera Characterization: Assessment in Outdoor Levantine Rock Art Scenes. Sensors 2019, 19, 4610.

- Molada-Tebar, A.; Marqués-Mateu, Á.; Lerma, J.L.; Westland, S. Dominant Color Extraction with K-Means for Camera Characterization in Cultural Heritage Documentation. Remote Sens. 2020, 12, 520.

- Molada-Tebar, A. COOLPI: COlour Operations Library for Processing Images 2022. Available online: https://pypi.org/project/coolpi/ (accessed on 24 February 2024).

- Molada-Tebar, A. COOLPI Documentation 2023. Available online: https://graffitiprojectindigo.github.io/COOLPI/ (accessed on 24 February 2024).

- Darrodi, M.M.; Finlayson, G.; Goodman, T.; Mackiewicz, M. Reference Data Set for Camera Spectral Sensitivity Estimation. J. Opt. Soc. Am. A JOSAA 2015, 32, 381–391.

- ISO 17321-1:2012. Available online: https://www.iso.org/standard/56537.html (accessed on 2 November 2023).

- Calibrite. Available online: https://calibrite.com/us/ (accessed on 2 November 2023).

- Spyder. Available online: https://www.datacolor.com/spyder/ (accessed on 2 November 2023).

- X-Rite Color Management, Measurement, Solutions, and Software. Available online: https://www.xrite.com/en (accessed on 2 November 2023).

- Color Viewing Light PRO (EN). Available online: https://www.just-normlicht.com/en/articlelist.html?id=36&name=Color-Viewing-Light-PRO (accessed on 2 November 2023).

- Bianco, S.; Schettini, R.; Vanneschi, L. Empirical Modeling for Colorimetric Characterization of Digital Cameras. In Proceedings of the 2009 16th IEEE International Conference on Image Processing (ICIP), Cairo, Egypt, 7–10 November 2009; pp. 3469–3472.

- Cheung, V.; Westland, S.; Connah, D.; Ripamonti, C. A Comparative Study of the Characterisation of Colour Cameras by Means of Neural Networks and Polynomial Transforms. Color. Technol. 2004, 120, 19–25.

- Finlayson, G.D.; Mackiewicz, M.; Hurlbert, A. Color Correction Using Root-Polynomial Regression. IEEE Trans. Image Process. 2015, 24, 1460–1470.

- Hong, G.; Luo, M.R.; Rhodes, P.A. A Study of Digital Camera Colorimetric Characterization Based on Polynomial Modeling. Color Res. Appl. 2001, 26, 76–84.

- Pointer, M.R.; Attridge, G.G.; Jacobson, R.E. Practical Camera Characterization for Colour Measurement. Imaging Sci. J. 2001, 49, 63–80.

- Scikit-Learn. Linear Models. Available online: https://scikit-learn.org/stable/modules/linear_model.html (accessed on 3 November 2023).

- Fan, Y.; Li, J.; Guo, Y.; Xie, L.; Zhang, G. Digital Image Colorimetry on Smartphone for Chemical Analysis: A Review. Measurement 2021, 171, 108829.

- Luo, M.R.; Cui, G.; Rigg, B. The Development of the CIE 2000 Colour-Difference Formula: CIEDE2000. Color Res. Appl. 2001, 26, 340–350.

- Paravina, R.D.; Aleksić, A.; Tango, R.N.; García-Beltrán, A.; Johnston, W.M.; Ghinea, R.I. Harmonization of Color Measurements in Dentistry. Measurement 2021, 169, 108504.

- Nguyen, C.-N.; Vo, V.-T.; Nguyen, L.-H.-N.; Thai Nhan, H.; Nguyen, C.-N. In Situ Measurement of Fish Color Based on Machine Vision: A Case Study of Measuring a Clownfish’s Color. Measurement 2022, 197, 111299.

- Sharma, G.; Wu, W.; Dalal, E.N. The CIEDE2000 Color-Difference Formula: Implementation Notes, Supplementary Test Data, and Mathematical Observations. Color Res. Appl. 2005, 30, 21–30.

- Melgosa, M.; Alman, D.H.; Grosman, M.; Gómez-Robledo, L.; Trémeau, A.; Cui, G.; García, P.A.; Vázquez, D.; Li, C.; Luo, M.R. Practical Demonstration of the CIEDE2000 Corrections to CIELAB Using a Small Set of Sample Pairs. Color Res. Appl. 2013, 38, 429–436.

- Vrhel, M.J.; Trussell, H.J. Color Correction Using Principal Components. Color Res. Appl. 1992, 17, 328–338.

- Song, T.; Luo, R. Testing Color-Difference Formulae on Complex Images Using a CRT Monitor. In Proceedings of the Color Science and Engineering Systems, Technologies, Applications, Scottsdale, AZ, USA, 1 January 2000; Volume 2000, pp. 44–48.

- Van Dormolen, H. Metamorfoze Preservation Imaging Guidelines. Archiving 2008, 5, 162–165.

- Paravina, R.D.; Pérez, M.M.; Ghinea, R. Acceptability and Perceptibility Thresholds in Dentistry: A Comprehensive Review of Clinical and Research Applications. J. Esthet. Restor. Dent. 2019, 31, 103–112.

- Lee, Y.-K.; Powers, J.M. Comparison of CIE Lab, CIEDE 2000, and DIN 99 Color Differences between Various Shades of Resin Composites. Int. J. Prosthodont. 2005, 18, 150.

- Westland, S.; Ripamonti, C.; Cheung, V. Characterisation of Cameras. In Computational Colour Science using MATLAB®; John Wiley & Sons, Ltd.: Hoboken, NJ, USA, 2012; pp. 143–157. ISBN 978-0-470-71089-0.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).