Abstract

In this paper, we propose a method for three-dimensional (3D) image visualization of objects under photon-starved conditions using multiple observations and statistical estimation. To visualize 3D objects under these conditions, photon-counting integral imaging has been used, which extracts photons from 3D objects through a Poisson random process. However, under severely photon-starved conditions, this approach may fail to reconstruct 3D images because too few photons are detected. To solve this problem, we propose N-observation photon-counting integral imaging with statistical estimation. Since photons are extracted randomly according to a Poisson distribution, increasing the number of photon samples improves the accuracy of the estimate. Using a statistical estimation method such as maximum likelihood estimation, 3D images can then be reconstructed. To verify the proposed method, we performed an optical experiment and calculated performance metrics, including the peak signal-to-noise ratio (PSNR), structural similarity (SSIM), peak-to-correlation energy (PCE), and peak sidelobe ratio (PSR).

1. Introduction

Three-dimensional (3D) image visualization under photon-starved conditions remains challenging. Several 3D imaging techniques exist, such as light detection and ranging (LiDAR), stereoscopic imaging, holography, and integral imaging. LiDAR [1] detects the shape of 3D objects by measuring the time it takes for light to be reflected from them; however, it is not cost-effective, and it may not record the image information of the scene (i.e., the RGB map). Stereoscopic imaging [2,3,4,5,6] obtains 3D information by analyzing the disparity between stereoscopic (i.e., binocular) images, and it is simple to implement because it uses only a few cameras; however, it may not provide high-resolution 3D information or full parallax because the disparity of stereoscopic images is limited to one direction. Holography [7,8,9,10,11], on the other hand, can provide full parallax and continuous viewing points of 3D images by using coherent light sources. The term derives from the Greek word holos, meaning "whole". However, holography is complicated to implement because its system alignment is very sensitive and it requires many optical components, such as a beam splitter, a laser, and a spatial filter.

To overcome the problems of the 3D imaging techniques mentioned above, integral imaging, first proposed by G. Lippmann [12], has been studied. Integral imaging can provide full parallax and continuous viewing points without any special viewing devices or coherent light sources. However, it has several drawbacks, such as low viewing resolution, a narrow viewing angle, and a shallow depth of focus, and several studies have been conducted to address them [13,14,15,16,17,18,19,20,21,22,23,24,25,26,27]. In addition, 3D imaging techniques may not provide 3D information about objects under photon-starved conditions owing to the lack of photons. To obtain 3D information about objects under these conditions, photon-counting integral imaging has been studied [28,29,30,31,32,33,34,35,36,37].

Photon-counting integral imaging, which combines photon-counting imaging and integral imaging, can record and reconstruct 3D images under photon-starved conditions. Photon-counting imaging detects photons, which occur rarely in unit time and space under photon-starved conditions, by using a computational photon-counting model [38]. Because of the statistics of photon arrival, this model follows a Poisson distribution, so it records images randomly from the original scene with a given expected number of photons. However, when the light intensity is very low (i.e., close to zero), it may fail to capture the image because the Poisson distribution returns mostly zero values. In addition, estimating the original scene statistically under photon-starved conditions requires many samples, which motivated photon-counting integral imaging [28,29,30,31,32,33,34]. Photon-counting integral imaging uses multiple 2D images of 3D objects with different perspectives, recorded through a lenslet or camera array; these images are referred to as elemental images. The 3D information of a scene can then be estimated using statistical estimation techniques such as maximum likelihood estimation or Bayesian estimation [29]. However, the visual quality remains low owing to the randomness of the Poisson distribution and the lack of photons.

In this paper, we propose 3D photon-counting integral imaging with multiple observations to enhance the visual quality of photon-counting images and reconstructed 3D images under photon-starved conditions. Multiple photon-counting images generated from the same original scene by photon-counting imaging are statistically independent, so the randomness of the Poisson distribution can be mitigated by combining them. In addition, the accuracy of statistical estimation improves as the number of samples increases. Therefore, our proposed method, photon-counting integral imaging with N observations, improves the visual quality of photon-counting images and reconstructed 3D images under photon-starved conditions. To verify this enhancement, we present experimental results and calculate numerical metrics, namely the peak signal-to-noise ratio (PSNR), structural similarity (SSIM), peak-to-correlation energy (PCE), and peak sidelobe ratio (PSR).

This paper is organized as follows. We present the basic concept of photon-counting integral imaging and N-observation photon-counting integral imaging in Section 2. We give the experimental results along with a discussion of these results in Section 3. Finally, we conclude our work with a summary in Section 4.

2. N-Observation Photon-Counting Integral Imaging

In this section, we describe the basic concepts of integral imaging, photon-counting imaging, photon-counting integral imaging, and N-observation photon-counting integral imaging. Photon-counting imaging is used to record an image under photon-starved conditions. To visualize a 3D image under these conditions, photon-counting integral imaging uses statistical estimation methods such as maximum likelihood estimation (MLE) and Bayesian approaches, which assume a uniform distribution and a gamma distribution, respectively, as prior information. However, under severely photon-starved conditions, photon-counting integral imaging may not visualize 3D objects well enough for object recognition. To overcome this problem, we propose photon-counting integral imaging with N observations and verify its visual quality enhancement through optical experiments and numerical results.

2.1. Integral Imaging

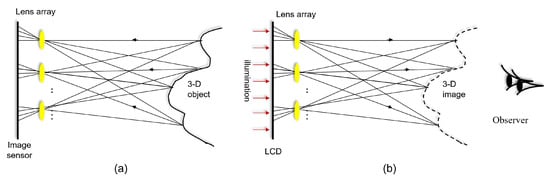

Integral imaging, first proposed by G. Lippmann in 1908, can provide full parallax and continuous viewing points of color 3D images without any special viewing glasses, and it does not require a coherent light source such as a laser. It consists of two processes: pickup and display (or reconstruction). Figure 1 illustrates the basic concept of integral imaging. In the pickup stage, as shown in Figure 1a, rays coming from the 3D object are recorded through a lens array, generating multiple 2D images of the 3D object with different perspectives, which are referred to as elemental images. Then, in the display stage, as depicted in Figure 1b, the elemental images are projected through a lens array identical to the one used in the pickup stage. Finally, a 3D image with full parallax and continuous viewing points can be observed by the human eye without any special viewing glasses. However, as shown in Figure 1b, the depth of this 3D image is reversed; such an image is referred to as a pseudoscopic real image. To solve this problem, Arai et al. [14] proposed a pseudoscopic-to-orthoscopic (P/O) conversion that rotates each elemental image by 180°. Using P/O conversion, an orthoscopic virtual 3D image can be displayed in space. However, the resolution of each elemental image is very low because the resolution of the image sensor is divided by the number of lenses. Therefore, the resolution of the 3D image in the display stage may be insufficient for observers.

Figure 1.

Integral imaging: (a) pickup and (b) display [26].
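The P/O conversion described above amounts to rotating every elemental image by 180° about its own center. A minimal NumPy sketch, assuming the elemental images are stored as one grayscale array of shape (K, L, H, W); the function name `po_convert` and this array layout are illustrative assumptions, not part of the original method description:

```python
import numpy as np


def po_convert(elemental_images: np.ndarray) -> np.ndarray:
    """Pseudoscopic-to-orthoscopic (P/O) conversion.

    elemental_images: array of shape (K, L, H, W); axes 0 and 1 index the
    lens (or camera) position, axes 2 and 3 are the pixel rows and columns.
    Each elemental image is rotated by 180 degrees about its own center;
    the arrangement of the elemental images themselves is unchanged.
    """
    # Two 90-degree rotations in the pixel plane equal one 180-degree rotation.
    return np.rot90(elemental_images, k=2, axes=(2, 3))


# Toy example: 2 x 2 elemental images, each 2 x 3 pixels.
ei = np.arange(2 * 2 * 2 * 3).reshape(2, 2, 2, 3)
converted = po_convert(ei)
print(ei[0, 0])         # original first elemental image
print(converted[0, 0])  # the same image rotated by 180 degrees
```

For color elemental images with a trailing channel axis, the same call works unchanged because only axes 2 and 3 are rotated.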

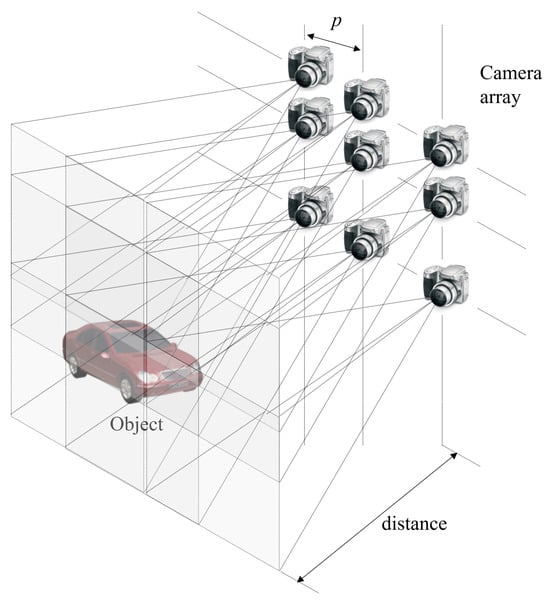

To solve this resolution problem, Jang et al. [16] proposed synthetic aperture integral imaging (SAII). Figure 2 shows a schematic of SAII, which uses a camera array instead of a lens array to obtain high-resolution elemental images. In lens-array-based integral imaging, the pitch between lenses is fixed, whereas in SAII the pitch between cameras can be adjusted. Therefore, SAII can achieve higher 3D resolution (i.e., lateral and longitudinal resolutions) than lens-array-based integral imaging. However, because each elemental image has a high resolution, conventional display panels may not be able to display the elemental images for 3D image display. Thus, volumetric computational reconstruction (VCR) was proposed [19,27].

Figure 2.

Synthetic aperture integral imaging (SAII).

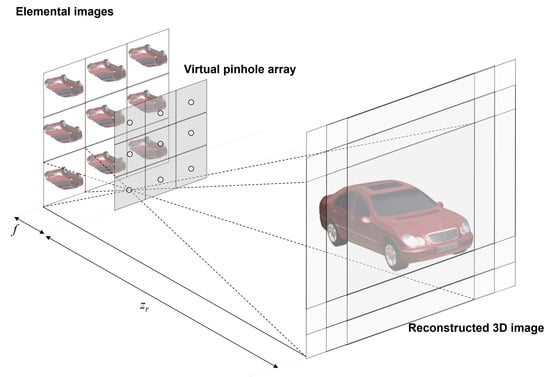

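VCR provides a sectional image of a 3D scene at a given reconstruction depth using the high-resolution elemental images obtained by SAII. As illustrated in Figure 3, it back-projects each elemental image through a virtual pinhole array onto the reconstruction plane, where the elemental images are shifted according to their positions and overlapped. VCR can be implemented as follows [19,27]:

$$\Delta x = \frac{N_x p_x f}{c_x z_r}, \quad \Delta y = \frac{N_y p_y f}{c_y z_r}, \quad \Delta x_k = k \times \operatorname{round}(\Delta x), \quad \Delta y_l = l \times \operatorname{round}(\Delta y) \tag{1}$$

where $N_x$ and $N_y$ are the numbers of pixels in each elemental image in the x and y directions; $f$ is the focal length of the virtual pinhole; $p_x$ and $p_y$ are the pitches between the virtual pinholes; $c_x$ and $c_y$ are the sensor sizes; and $z_r$ is the reconstruction depth. Finally, sectional images at different reconstruction depths can be obtained as follows:

$$I(x,y,z_r) = \frac{1}{O(x,y,z_r)} \sum_{k=0}^{K-1}\sum_{l=0}^{L-1} E_{kl}\left(x + \Delta x_k,\, y + \Delta y_l\right) \tag{2}$$

where $E_{kl}$ is the elemental image in the kth column and lth row produced by SAII, $K$ and $L$ are the numbers of elemental images in the x and y directions, and $O(x,y,z_r)$ is the overlapping matrix at the reconstruction depth $z_r$.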

Figure 3.

Volumetric computational reconstruction (VCR) of integral imaging [26].
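Equation (2) is a shift-and-sum operation followed by division by the overlap count. The following is a minimal NumPy sketch of that operation, assuming integer, non-negative shifts that are already computed (for example, from Equation (1)) and an array layout of shape (K, L, H, W) with k along x and l along y. The function name `vcr` is hypothetical, and the sign of the shift depends on how the cameras are indexed, which the text does not specify.

```python
import numpy as np


def vcr(elemental_images, shift_x, shift_y):
    """Shift-and-sum volumetric computational reconstruction at one depth.

    elemental_images: array of shape (K, L, H, W); k indexes the x direction
                      (array columns), l the y direction (array rows).
    shift_x[k], shift_y[l]: non-negative integer pixel shifts of each image.
    Returns the sectional image divided by the overlapping matrix O.
    """
    K, L, H, W = elemental_images.shape
    canvas = (H + int(np.max(shift_y)), W + int(np.max(shift_x)))
    total = np.zeros(canvas)
    overlap = np.zeros(canvas)  # O(x, y, z_r): how many images cover each pixel
    for k in range(K):
        for l in range(L):
            y0, x0 = int(shift_y[l]), int(shift_x[k])
            total[y0:y0 + H, x0:x0 + W] += elemental_images[k, l]
            overlap[y0:y0 + H, x0:x0 + W] += 1
    # Guard against pixels covered by no image (possible if shifts exceed the image size).
    return total / np.maximum(overlap, 1)


# Toy example: 3 x 3 elemental images of 8 x 8 pixels, 2-pixel shift per image.
rng = np.random.default_rng(0)
ei = rng.random((3, 3, 8, 8))
shifts = np.arange(3) * 2   # stationary shifts k * round(delta) with delta = 2
section = vcr(ei, shifts, shifts)
print(section.shape)        # (12, 12)
```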

In the VCR described above, the number of shifting pixels is rounded once and then applied uniformly to every elemental image, so the quantization error accumulates with the elemental image index. For example, when $\Delta x$ is not an integer and the number of elemental images in the x direction is 10, the shifting pixel values calculated using Equation (1) have quantization errors of 0, 0.3, 0.6, … pixels for successive elemental images. These quantization errors degrade the visual quality of the 3D images, so they need to be reduced. In this paper, we therefore use VCR with nonuniform shifting pixels, in which the shifting pixel value of each elemental image is calculated and rounded individually as follows [27]:

$$\Delta x_k = \operatorname{round}\left(\frac{k N_x p_x f}{c_x z_r}\right), \quad \Delta y_l = \operatorname{round}\left(\frac{l N_y p_y f}{c_y z_r}\right) \tag{3}$$

For the same example, Equation (3) yields quantization errors of 0, 0.3, 0.4, … pixels, because the rounding error no longer accumulates. Thus, VCR with nonuniform shifting pixels has a lower quantization error than VCR with stationary shifting pixels, and the depth resolution of the 3D images may be enhanced. In this paper, we reconstruct 3D images with this VCR method as follows [27]:

$$I(x,y,z_r) = \frac{1}{O(x,y,z_r)} \sum_{k=0}^{K-1}\sum_{l=0}^{L-1} E_{kl}\left(x + \Delta x_k,\, y + \Delta y_l\right) \tag{4}$$

where $\Delta x_k$ and $\Delta y_l$ are given by Equation (3).
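The difference between the stationary shifting of Equation (1) and the nonuniform shifting of Equation (3) can be checked numerically. In this sketch, the per-image shift `delta = 1.3` pixels is a hypothetical value chosen only because it reproduces the error pattern quoted in the text (0, 0.3, 0.6, … versus 0, 0.3, 0.4, …); it is not taken from the original example.

```python
import numpy as np


def stationary_shifts(delta, count):
    # Equation (1) style: round the per-image shift once, then multiply by k.
    return np.arange(count) * np.round(delta)


def nonuniform_shifts(delta, count):
    # Equation (3) style: round the exact shift of every elemental image separately.
    return np.round(np.arange(count) * delta)


delta = 1.3   # hypothetical non-integer shift (pixels)
count = 10    # elemental images in the x direction
exact = np.arange(count) * delta
err_stationary = np.abs(stationary_shifts(delta, count) - exact)
err_nonuniform = np.abs(nonuniform_shifts(delta, count) - exact)

print(np.round(err_stationary, 2).tolist())  # grows linearly: 0.0, 0.3, 0.6, ...
print(np.round(err_nonuniform, 2).tolist())  # stays bounded: 0.0, 0.3, 0.4, ...
```

Because each shift is rounded independently, the nonuniform error never exceeds half a pixel, whereas the stationary error grows with k (2.7 pixels for the tenth elemental image in this example).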

2.2. Photon-Counting Imaging

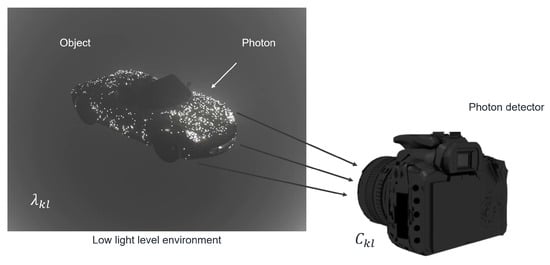

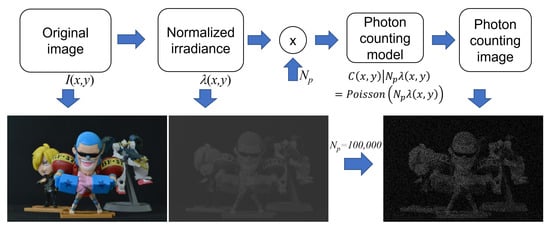

Under photon-starved conditions, only a few photons are reflected from objects, so it may be difficult to record images of the objects. To solve this problem, photon-counting imaging has been proposed. Photons emitted from objects can be recorded by a physical photon-counting detector, as shown in Figure 4. However, with such a detector it is difficult to control the number of extracted photons, and it is very expensive to implement. Therefore, in this paper, we use a computational photon-counting model based on a Poisson distribution, since photons occur rarely in unit time and space [38]. Figure 5 describes the computational photon-counting model. First, the original image, $I(x,y)$, is normalized so that it has unit energy, which allows the number of photons to be controlled. Then, the normalized image, $\tilde{I}(x,y)$, is multiplied by the expected number of photons, $N_p$, and a Poisson random process is applied to obtain a photon-counting image, $C(x,y)$. The computational photon-counting process is expressed as follows [28,29]:

$$\tilde{I}(x,y) = \frac{I(x,y)}{\sum_{x}\sum_{y} I(x,y)} \tag{5}$$

$$C(x,y) \mid \tilde{I}(x,y) \sim \operatorname{Poisson}\left(N_p \tilde{I}(x,y)\right) \tag{6}$$

where $N_p$ is adjustable: when $N_p$ is large, more photons are extracted, and vice versa.
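Equations (5) and (6) translate directly into a few lines of NumPy. In this sketch the scene is random stand-in data (not the experimental images), the function name `photon_counting_image` is hypothetical, and the image size and $N_p$ = 18,800 are borrowed from the experimental setup in Section 3 only for illustration.

```python
import numpy as np


def photon_counting_image(image, n_photons, rng):
    """Computational photon-counting model of Equations (5) and (6)."""
    image = np.asarray(image, dtype=float)
    normalized = image / image.sum()            # Eq. (5): pixel values sum to 1
    return rng.poisson(n_photons * normalized)  # Eq. (6): independent Poisson counts


rng = np.random.default_rng(seed=1)
scene = rng.random((500, 752))                  # stand-in for one elemental image
counts = photon_counting_image(scene, n_photons=18_800, rng=rng)

# The expected total is N_p; the realized total fluctuates around it.
print(counts.sum(), counts.mean())              # roughly 18,800 and 0.05
```

Because the expected count of most pixels is well below one, the majority of entries in `counts` are zero, which is why a single photon-counting image looks sparse at this photon level.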

Figure 4.

Physical photon-counting detector [37].

Figure 5.

Computational photon-counting model.

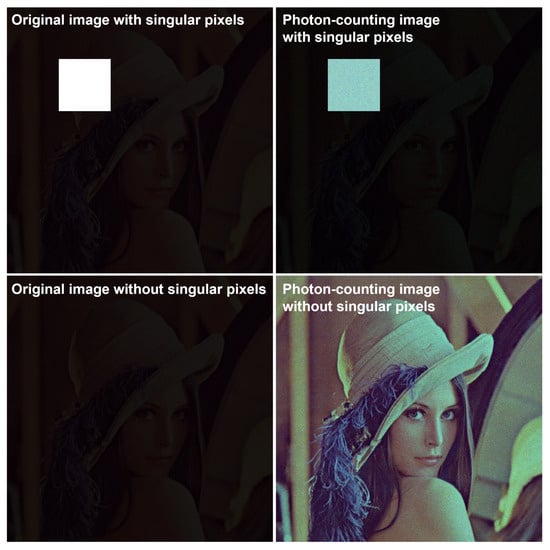

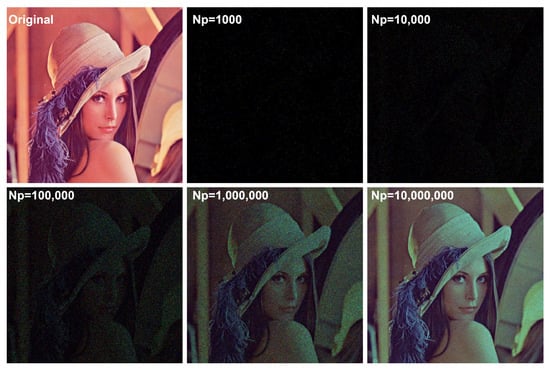

If an object or scene contains a singular pixel intensity (i.e., a pixel value that is extremely high compared with the others), most photons are extracted from the singular pixels, as shown in Figure 6, which makes image visualization or recording difficult. To overcome this difficulty, photon-counting imaging can be applied after choosing a region of interest (ROI) that does not contain any singular pixels. In addition, the visual quality of a photon-counting image is degraded under photon-starved conditions, as shown in Figure 7. To solve these problems, a more accurate estimation method with more samples (i.e., more photons) is required. Since integral imaging produces multiple 2D images with different perspectives via a lens array or a camera array, it can provide a solution when combined with a statistical estimation method such as MLE or a Bayesian approach. Photon-counting integral imaging is presented in the next subsection.

Figure 6.

Photon-counting images with singular pixels.

Figure 7.

Photon-counting images with various numbers of photons.
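The effect of a singular pixel follows directly from the normalization in Equation (5): one very bright pixel absorbs almost all of the unit energy, and therefore almost all of the expected photons. A minimal sketch with a synthetic scene; the pixel values, the scene size, and the ROI bounds are hypothetical:

```python
import numpy as np

rng = np.random.default_rng(2)
n_photons = 1_000

scene = np.full((100, 100), 0.1)   # dim, uniform background
scene[50, 50] = 1e5                # hypothetical singular pixel

normalized = scene / scene.sum()
print(normalized[50, 50])          # about 0.99 of the expected photons go to one pixel

counts = rng.poisson(n_photons * normalized)
background = counts.sum() - counts[50, 50]
print(background)                  # only about 10 photons land on the background

# Choosing an ROI that excludes the singular pixel restores a usable photon budget.
roi = scene[:40, :40]
roi_counts = rng.poisson(n_photons * roi / roi.sum())
print(roi_counts.sum())            # roughly 1,000 photons spread over the ROI
```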

2.3. Photon-Counting Integral Imaging

Integral imaging records multiple 2D images of 3D objects with different perspectives through a lens array or a camera array. These 2D images, referred to as elemental images, provide multiple samples for estimating the scene with statistical estimation in photon-counting imaging. Therefore, photon-counting integral imaging can be used to reconstruct images under photon-starved conditions. To describe photon-counting integral imaging, we consider a statistical estimation method, namely MLE.

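Because the photon-counting images generated from the elemental images follow a Poisson distribution, the likelihood function can be written as follows [28,29]:

$$P\left(C_{kl}(x,y) \mid \tilde{I}_{kl}(x,y)\right) = \frac{\left[N_p \tilde{I}_{kl}(x,y)\right]^{C_{kl}(x,y)} e^{-N_p \tilde{I}_{kl}(x,y)}}{C_{kl}(x,y)!} \tag{7}$$

$$\mathcal{L}\left(\tilde{I}\right) = \prod_{k=0}^{K-1}\prod_{l=0}^{L-1} P\left(C_{kl}(x,y) \mid \tilde{I}_{kl}(x,y)\right) \tag{8}$$

where $\tilde{I}_{kl}$ is the normalized irradiance of the elemental image in the kth column and the lth row calculated using Equation (5), $C_{kl}$ is its photon-counting image calculated using Equation (6), and $K$ and $L$ are the numbers of photon-counting images in the x and y directions, respectively.

By taking the logarithm of Equation (8) and maximizing it, the estimated scene is obtained as follows [28,29]:

$$\ln \mathcal{L}\left(\tilde{I}\right) = \sum_{k=0}^{K-1}\sum_{l=0}^{L-1} \left[ C_{kl}(x,y)\ln\left(N_p \tilde{I}_{kl}(x,y)\right) - N_p \tilde{I}_{kl}(x,y) - \ln\left(C_{kl}(x,y)!\right)\right] \tag{9}$$

$$\frac{\partial \ln \mathcal{L}}{\partial \tilde{I}_{kl}(x,y)} = \frac{C_{kl}(x,y)}{\tilde{I}_{kl}(x,y)} - N_p = 0 \tag{10}$$

$$\hat{I}_{kl}(x,y) = \frac{C_{kl}(x,y)}{N_p} \tag{11}$$

Finally, using VCR with nonuniform shifting pixels, the 3D images under photon-starved conditions are reconstructed by [28,29]:

$$\hat{I}(x,y,z_r) = \frac{1}{O(x,y,z_r)} \sum_{k=0}^{K-1}\sum_{l=0}^{L-1} \hat{I}_{kl}\left(x + \Delta x_k,\, y + \Delta y_l\right) \tag{12}$$

where $O(x,y,z_r)$ is the overlapping matrix at the reconstruction depth $z_r$. Figure 8 shows the results under photon-starved conditions obtained using Equations (7)–(12). As shown in Figure 8, the 3D images reconstructed by photon-counting integral imaging with MLE (Figure 8b,c) have better visual quality than the photon-counting image (Figure 8a).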

Figure 8.

(a) Photon-counting image with $N_p$ = 1,500,000, (b) reconstructed 3D image by photon-counting integral imaging with MLE at $z_r$ = 190 mm, and (c) reconstructed 3D image by photon-counting integral imaging with MLE at $z_r$ = 230 mm.
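The closed-form estimate in Equation (11) can be checked numerically: for a single pixel, a brute-force search over candidate irradiances maximizes the Poisson log-likelihood at $C/N_p$. A minimal sketch; the count $C = 3$, $N_p = 1000$, and the search grid are hypothetical values chosen for illustration:

```python
import math

import numpy as np


def log_likelihood(count, irradiance, n_photons):
    """Poisson log-likelihood of one pixel, i.e., the logarithm of Equation (7)."""
    rate = n_photons * irradiance
    return count * math.log(rate) - rate - math.lgamma(count + 1)


def mle_single(counts, n_photons):
    """Closed-form MLE of Equation (11): normalized irradiance C / N_p."""
    return np.asarray(counts, dtype=float) / n_photons


n_photons, count = 1_000, 3
grid = np.linspace(1e-4, 1e-2, 991)          # candidate irradiances, step 1e-5
scores = [log_likelihood(count, g, n_photons) for g in grid]
best = grid[int(np.argmax(scores))]
print(best, mle_single(count, n_photons))    # both approximately 0.003
```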

Under severely photon-starved conditions, only a few photons are available, so photon-counting integral imaging may fail to reconstruct 3D images; that is, an accurate scene with good visual quality may not be visualized. To solve this problem, we propose photon-counting integral imaging with N observations.

2.4. N-Observation Photon-Counting Integral Imaging

In photon-counting imaging, photons are detected randomly with probabilities given by a Poisson distribution. Under severely photon-starved conditions, a photon-counting image obtained from a single observation using Equations (5) and (6) may not be visualized well, since only a few photons are detected from the scene. This means that the number of detected photons (i.e., the number of samples) determines the visual quality of a photon-counting image under these conditions. To increase the number of photons, we observe N photon-counting images of the same scene and then estimate the scene using a statistical estimation method such as MLE. We refer to this method as N-observation photon-counting imaging.

In N-observation photon-counting imaging, multiple photon-counting images are generated randomly from a Poisson distribution as follows:

$$C_{kl}^{n}(x,y) \mid \tilde{I}_{kl}(x,y) \sim \operatorname{Poisson}\left(N_p \tilde{I}_{kl}(x,y)\right), \quad n = 1, 2, \ldots, N \tag{13}$$

where $n$ is the observation index. Because the N photon-counting images are statistically independent, they form the following likelihood function for each elemental image:

$$\mathcal{L}\left(\tilde{I}_{kl}\right) = \prod_{n=1}^{N} \frac{\left[N_p \tilde{I}_{kl}(x,y)\right]^{C_{kl}^{n}(x,y)} e^{-N_p \tilde{I}_{kl}(x,y)}}{C_{kl}^{n}(x,y)!} \tag{14}$$

For convenience of calculation, we take the logarithm of the likelihood function:

$$\ln \mathcal{L}\left(\tilde{I}_{kl}\right) = \sum_{n=1}^{N} \left[ C_{kl}^{n}(x,y)\ln\left(N_p \tilde{I}_{kl}(x,y)\right) - N_p \tilde{I}_{kl}(x,y) - \ln\left(C_{kl}^{n}(x,y)!\right)\right] \tag{15}$$

Then, using MLE, the scene (i.e., each elemental image) under photon-starved conditions is estimated by the following equations:

$$\frac{\partial \ln \mathcal{L}}{\partial \tilde{I}_{kl}(x,y)} = \frac{1}{\tilde{I}_{kl}(x,y)}\sum_{n=1}^{N} C_{kl}^{n}(x,y) - N N_p = 0 \tag{16}$$

$$\bar{C}_{kl}(x,y) = \frac{1}{N}\sum_{n=1}^{N} C_{kl}^{n}(x,y) \tag{17}$$

$$\hat{I}_{kl}(x,y) = \frac{\bar{C}_{kl}(x,y)}{N_p} \tag{18}$$

Thus, in N-observation photon-counting imaging, the estimated scene is the average of the N observed photon-counting images, normalized by the expected number of photons, and its visual quality may be enhanced statistically. For the 3D reconstruction of the scene under these conditions, VCR with nonuniform shifting pixels is utilized as follows:

$$\hat{I}(x,y,z_r) = \frac{1}{O(x,y,z_r)} \sum_{k=0}^{K-1}\sum_{l=0}^{L-1} \hat{I}_{kl}\left(x + \Delta x_k,\, y + \Delta y_l\right) \tag{19}$$

Figure 9 shows the results obtained by conventional photon-counting integral imaging and by our proposed method with 100 observations under severely photon-starved conditions ($N_p$ = 5000). As shown in Figure 9, the 3D image reconstructed by our proposed method has better visual quality than that reconstructed by the conventional method, which contains more noise.

Figure 9.

3D reconstructed images by (a) conventional photon-counting integral imaging and (b) our proposed method with 100 observations, where the expected number of photons is $N_p$ = 5000.
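Equations (13)–(18) reduce to averaging N independent photon-counting images and dividing by $N_p$. The sketch below, on a random stand-in scene (hypothetical data, not the experimental images), shows the root-mean-square error of the estimate falling as N grows; the function name `n_observation_mle` is illustrative. This behavior is expected: the variance of the estimate in Equation (18) is $\tilde{I}/(N N_p)$, so the error decreases roughly as $1/\sqrt{N}$.

```python
import numpy as np


def n_observation_mle(normalized, n_photons, n_obs, rng):
    """Estimate a normalized scene from N photon-counting observations.

    Draws N independent Poisson images as in Equation (13) and returns the MLE of
    Equations (17) and (18): the mean count divided by the expected photon number.
    """
    counts = rng.poisson(n_photons * normalized, size=(n_obs,) + normalized.shape)
    return counts.mean(axis=0) / n_photons


rng = np.random.default_rng(3)
scene = rng.random((64, 64)) + 0.1
normalized = scene / scene.sum()              # Equation (5)

rmse = {}
for n_obs in (1, 10, 100):
    estimate = n_observation_mle(normalized, n_photons=5_000, n_obs=n_obs, rng=rng)
    rmse[n_obs] = np.sqrt(np.mean((estimate - normalized) ** 2))
    print(n_obs, rmse[n_obs])                 # error shrinks roughly as 1 / sqrt(N)
```

Since a sum of independent Poisson variables is itself Poisson, averaging N observations at $N_p$ photons gives the same statistics as a single observation with $N \cdot N_p$ photons; the N-observation scheme obtains this benefit without raising the photon level of each individual image.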

3. Experimental Results

3.1. Experimental Setup

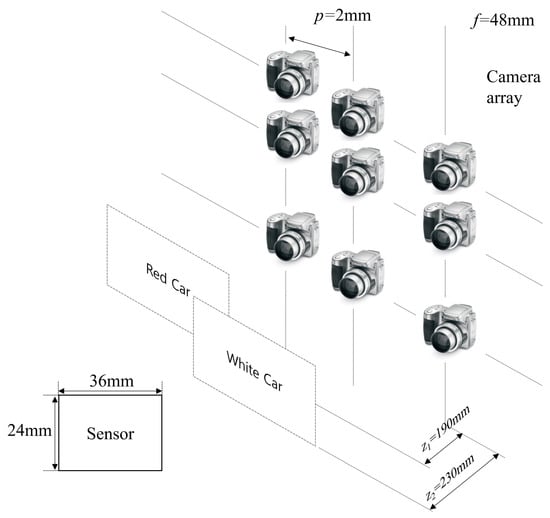

To show the feasibility of our proposed method, we performed an optical experiment; Figure 10 illustrates the experimental setup. To capture high-resolution elemental images, we used SAII with a camera array (Nikon D850, Tokyo, Japan) under white light illumination. The focal length of the camera, f, was 48 mm; the pitch between cameras, p, was 2 mm; the first object (a red car) was located 190 mm from the camera array; and the second object (a white car) was located 230 mm from the camera array. The resolution of each elemental image was 752(H) × 500(V), and the number of elemental images was 10(H) × 10(V). So that the reconstructed 3D images could still be observed by the human eye, we set the expected number of photons to $N_p$ = 18,800, which corresponds to 0.05 photons per pixel of each elemental image. In addition, the number of observations was varied from 1 to 100.

Figure 10.

Experimental setup.
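The photon budget stated above can be checked with one line of arithmetic, and the same parameters give the per-image shift of Equation (1) at the two object depths. The sensor width $c_x$ = 35.9 mm is an assumption (the nominal width of a full-frame sensor such as that of the Nikon D850), since the text does not state the value used; the function name `shift_per_image` is hypothetical.

```python
import numpy as np

n_x, n_y = 752, 500             # pixels per elemental image (H x V)
n_photons = 18_800              # expected photons per elemental image
print(n_photons / (n_x * n_y))  # 0.05 photons per pixel


def shift_per_image(n_pixels, pitch_mm, focal_mm, sensor_mm, depth_mm):
    """Per-image shift in pixels, Delta x = N_x p f / (c_x z_r), as in Equation (1)."""
    return n_pixels * pitch_mm * focal_mm / (sensor_mm * depth_mm)


SENSOR_WIDTH_MM = 35.9          # ASSUMPTION: full-frame sensor width, not given in the text
for depth in (190.0, 230.0):    # red car and white car
    dx = shift_per_image(n_x, 2.0, 48.0, SENSOR_WIDTH_MM, depth)
    shifts = np.round(np.arange(10) * dx).astype(int)   # nonuniform shifts, Equation (3)
    print(depth, round(dx, 2), shifts.tolist())
```

Under this assumption, the shift is about 10.58 pixels per elemental image at 190 mm and about 8.74 pixels at 230 mm; a different effective sensor width would scale both values proportionally.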

3.2. Experimental Results

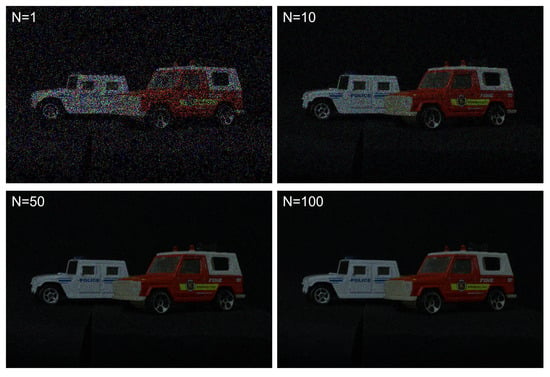

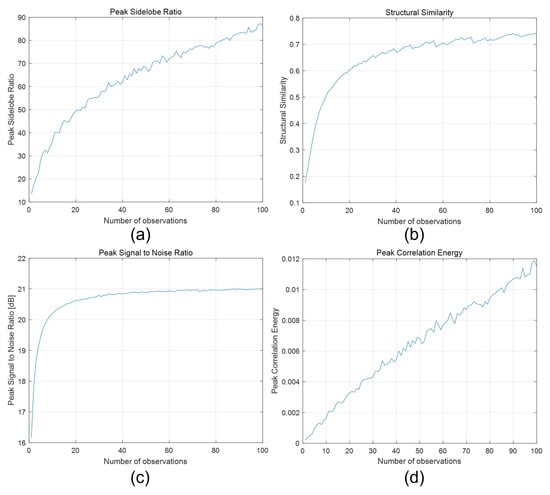

To generate photon-counting images, Equations (5) and (6) were applied to the elemental images obtained by SAII. Figure 11 shows the photon-counting images for different numbers of observations, where $N_p$ = 18,800 (i.e., 0.05 photons/pixel). A photon-counting image with N = 1 observation is identical to one produced by conventional photon-counting imaging. As shown in Figure 11, the visual quality improves as the number of observations increases. To evaluate the visual quality of the photon-counting images for different numbers of observations, we calculated performance metrics, namely the peak sidelobe ratio (PSR), structural similarity (SSIM), peak signal-to-noise ratio (PSNR), and peak-to-correlation energy (PCE), as shown in Figure 12. Among these metrics, PSR and PCE were calculated as follows:

$$c(x,y) = \mathcal{F}^{-1}\left\{ |S(u,v)|^{k}\, |R(u,v)|^{k} \exp\left[i\left(\phi_S(u,v) - \phi_R(u,v)\right)\right] \right\} \tag{20}$$

$$\mathrm{PSR} = \frac{\max(c) - \mu_c}{\sigma_c} \tag{21}$$

$$\mathrm{PCE} = \frac{\max\left(|c|^2\right)}{E_c}, \quad E_c = \sum_{x}\sum_{y} |c(x,y)|^2 \tag{22}$$

where $c$ is the correlation output of the kth-law nonlinear filter [39], $S$ is the Fourier transform of the photon-counting image, $R$ is the Fourier transform of the reference image, $k$ is the nonlinear coefficient of the kth-law nonlinear filter, $\phi_S$ is the phase of the Fourier transform of the photon-counting image, and $\phi_R$ is the phase of the Fourier transform of the reference image. In addition, $\max(c)$ is the maximum of the correlation values, $\mu_c$ is their mean, $\sigma_c$ is their standard deviation, $\max(|c|^2)$ is the maximum of the squared correlation values, and $E_c$ is the total energy of the correlation output.
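A minimal NumPy sketch of the kth-law correlation and the PSR and PCE definitions above, with PSNR included for completeness. Assumptions not fixed by the text: the correlation plane is taken as the magnitude of the inverse FFT, the nonlinear coefficient defaults to a hypothetical k = 0.3, PSNR assumes images scaled to [0, 1], and the function names are illustrative.

```python
import numpy as np


def kth_law_correlation(image, reference, k=0.3):
    """Correlation plane of the kth-law nonlinear filter (magnitude, zero lag centered)."""
    S = np.fft.fft2(image)
    R = np.fft.fft2(reference)
    spectrum = np.abs(S) ** k * np.abs(R) ** k * np.exp(1j * (np.angle(S) - np.angle(R)))
    return np.abs(np.fft.fftshift(np.fft.ifft2(spectrum)))


def psr(c):
    # (peak - mean) / standard deviation of the correlation values
    return (c.max() - c.mean()) / c.std()


def pce(c):
    # peak of the squared correlation divided by the total correlation energy
    energy = c ** 2
    return energy.max() / energy.sum()


def psnr(reference, image, peak=1.0):
    mse = np.mean((reference - image) ** 2)
    return 10.0 * np.log10(peak ** 2 / mse)


rng = np.random.default_rng(4)
reference = rng.random((64, 64))
noisy = np.clip(reference + 0.2 * rng.standard_normal((64, 64)), 0.0, 1.0)
unrelated = rng.random((64, 64))

for name, img in (("noisy", noisy), ("unrelated", unrelated)):
    c = kth_law_correlation(img, reference)
    print(name, psr(c), pce(c), psnr(reference, img))
```

A noisy version of the reference produces a sharp correlation peak at zero lag and therefore higher PSR and PCE than an unrelated image, which is the behavior the metrics are meant to capture.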

Figure 11.

Photon-counting images for different numbers of observations, where $N_p$ = 18,800.

Figure 12.

Performance metrics via different observations. (a) Peak sidelobe ratio, (b) structural similarity, (c) peak signal-to-noise ratio, and (d) peak-to-correlation energy, where the number of observations N ranged from 1 to 100.

In Figure 12a, the PSR values for a single observation and 100 observations were 13.3691 and 86.0931, respectively; that is, the PSR for 100 observations was approximately 6.4 times that for a single observation. In Figure 12b, the SSIM values for a single observation and 100 observations were 0.1752 and 0.7436, respectively, so the SSIM for 100 observations was more than 4 times that for a single observation. In Figure 12c, the PSNRs for a single observation and 100 observations were 16.1721 dB and 21.0083 dB, respectively; the difference of approximately 4.8 dB again indicates that 100 observations performed better than a single observation. Finally, in Figure 12d, the PCE for 100 observations was 0.0115, approximately 54 times that for a single observation. As a result, N-observation photon-counting imaging can enhance the visual quality of a photon-counting image under photon-starved conditions.
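The improvement factors quoted above follow from the reported values in Figure 12; a quick arithmetic check in Python (the values are copied from the text, not recomputed from the images):

```python
# Reported values for N = 1 and N = 100 observations (Figure 12a-c).
psr_1, psr_100 = 13.3691, 86.0931
ssim_1, ssim_100 = 0.1752, 0.7436
psnr_1, psnr_100 = 16.1721, 21.0083   # dB

psr_ratio = psr_100 / psr_1
ssim_ratio = ssim_100 / ssim_1
psnr_gain_db = psnr_100 - psnr_1

print(round(psr_ratio, 2))     # 6.44
print(round(ssim_ratio, 2))    # 4.24
print(round(psnr_gain_db, 2))  # 4.84
```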

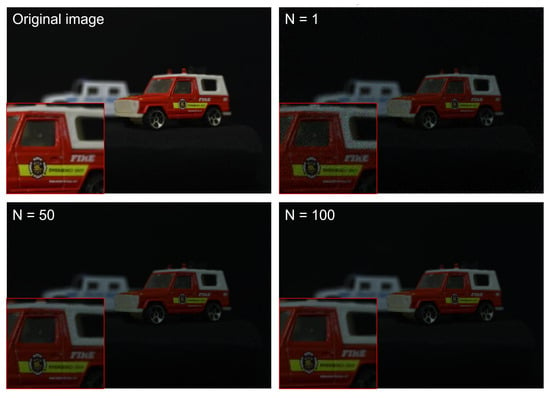

Next, we consider the 3D images reconstructed by N-observation photon-counting integral imaging, which is implemented by Equations (13)–(19). Figure 13 shows the 3D reconstruction results for the red car at a reconstruction depth of 190 mm for different numbers of observations, where $N_p$ = 18,800. As shown in Figure 13, the red car is in focus, and the visual quality of the reconstructed 3D images improves markedly as the number of observations increases.

Figure 13.

3D reconstruction results at a reconstruction depth of 190 mm for different numbers of observations, where $N_p$ = 18,800.

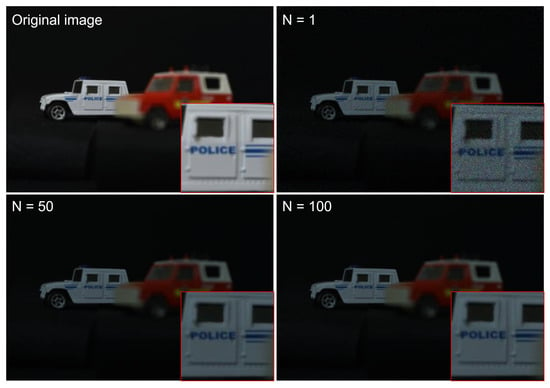

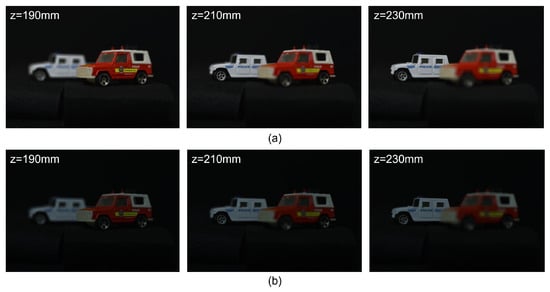

Figure 14 shows the 3D reconstruction results for the white car at a reconstruction depth of 230 mm for different numbers of observations, where $N_p$ = 18,800. Again, the higher the number of observations, the better the visual quality of the reconstructed 3D images. In addition, to demonstrate 3D reconstruction over depth, we reconstructed 3D images at various reconstruction depths using Equations (4) and (19); the results are shown in Figure 15.

Figure 14.

3D reconstruction results at a reconstruction depth of 230 mm for different numbers of observations, where $N_p$ = 18,800.

Figure 15.

3D reconstruction results at different reconstruction depths using (a) the original elemental images and (b) N = 20 observation photon-counting elemental images, where $N_p$ = 18,800.

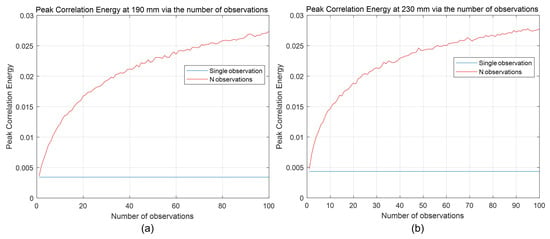

To evaluate the visual quality of our 3D reconstruction results, we calculated the PCE defined earlier. Figure 16 shows the PCE of the 3D reconstruction results at 190 mm and 230 mm for various numbers of observations, where $N_p$ = 18,800. In Figure 16a, the PCE values at 190 mm for a single observation and 100 observations were 0.0036 and 0.0274, respectively; the result for 100 observations was approximately 7.54 times better than that for a single observation. In Figure 16b, the PCE values at 230 mm for a single observation and 100 observations were 0.0043 and 0.0278, respectively; the PCE for 100 observations was approximately 6.4 times that for a single observation. Therefore, N-observation photon-counting integral imaging can enhance the visual quality of 3D images under severely photon-starved conditions.

Figure 16.

Peak-to-correlation energy of the 3D reconstruction results (a) at 190 mm and (b) at 230 mm for various numbers of observations, where $N_p$ = 18,800.

4. Conclusions

In this paper, we proposed N-observation photon-counting integral imaging for 3D image visualization under severely photon-starved conditions. In conventional photon-counting integral imaging, the reconstructed 3D images may not be visualized sufficiently because of a lack of photons. In our proposed method, by contrast, the number of samples increases through multiple observations (i.e., multiple photon-counting image generations), so the estimated scene is statistically more accurate than that obtained from a single observation. Moreover, when the number of photons cannot be increased, conventional photon-counting integral imaging may fail to visualize 3D images, whereas our proposed method can still visualize them by increasing the number of observations, which is the novelty of our method. Therefore, we believe that our method can be utilized in various applications under severely photon-starved conditions, such as unmanned vehicles in inclement weather, low-radiation medical devices, defense, and astronomy. However, our method has several drawbacks. First, it requires considerable processing time because it performs photon-counting imaging multiple times to obtain the photon-counting images. Second, the accuracy of the estimation results may be insufficient because our method uses a uniform distribution as the prior information of the original scene. This may be addressed by Bayesian approaches that use a specific statistical distribution, such as a gamma distribution, as the prior information. In addition, processing time can be reduced by detecting photons only from the objects and by reducing the number of observations. We will investigate these issues in future work.

Author Contributions

Conceptualization, H.-W.K. and M.C.; writing—original draft preparation, H.-W.K. and M.C.; writing—review and editing, M.-C.L. and M.C.; supervision, M.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by a research grant from Hankyong National University in 2022.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

All data are contained within the article.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| MLE | maximum likelihood estimation |
| PCE | peak-to-correlation energy |
| PSNR | peak signal-to-noise ratio |
| PSR | peak sidelobe ratio |
| SAII | synthetic aperture integral imaging |
| SSIM | structural similarity |
| VCR | volumetric computational reconstruction |

References

- Stitch, M.L.; Woodbury, E.J.; Morse, J.H. Optical ranging system uses laser transmitter. Electronics 1961, 34, 51–53.

- Wheatstone, C. Contributions to the physiology of vision—Part the first. On some remarkable, and hitherto unobserved, phenomena of binocular vision. Philos. Trans. R. Soc. Lond. 1838, 128, 371–394.

- Dodgson, N.A. Autostereoscopic 3D displays. Computer 2005, 38, 31–36.

- Chen, C.H.; Huang, Y.P.; Chuang, S.C.; Wu, C.L.; Shieh, H.P.D.; Mphepo, W.; Hsieh, C.T.; Hsu, S.C. Liquid crystal panel for high-efficiency barrier type autostereoscopic three-dimensional displays. Appl. Opt. 2009, 48, 3446–3454.

- Wang, Q.H.; Zhao, W.X.; Tao, Y.H.; Li, D.H.; Zhao, R.L. Stereo viewing zone in parallax-barrier-based autostereoscopic display. Optik 2010, 121, 2008–2011.

- Hong, J.; Kim, Y.; Choi, H.J.; Hahn, J.; Park, J.H.; Kim, H.; Min, S.W.; Chen, N.; Lee, B. Three-dimensional display technologies of recent interest: Principles, status, and issues [Invited]. Appl. Opt. 2011, 50, 87–115.

- Gabor, D. A new microscopic principle. Nature 1948, 161, 777–778.

- Wang, H.; Lyu, M.; Situ, G. eHoloNet: A learning-based end-to-end approach for in-line digital holographic reconstruction. Opt. Exp. 2018, 26, 22603–22614.

- Ren, Z.; Xu, Z.; Lam, E.Y. End-to-end deep learning framework for digital holographic reconstruction. Adv. Photonics 2019, 1, 016004.

- Shevkunov, I.; Katkovnik, V.; Claus, D.; Pedrini, G.; Petrov, N.V.; Egiazarian, K. Spectral object recognition in hyperspectral holography with complex-domain denoising. Sensors 2019, 19, 5188.

- Bordbar, B.; Zhou, H.; Banerjee, P.P. 3D object recognition through processing of 2D holograms. Appl. Opt. 2019, 58, G197–G203.

- Lippmann, G. La Photographie Integrale. Comp. Ren. Acad. Des Sci. 1908, 146, 446–451.

- Burckhardt, C.B. Optimum parameters and resolution limitation of integral photography. J. Opt. Soc. Am. 1968, 58, 71–76.

- Arai, J.; Okano, F.; Hoshino, H.; Yuyama, I. Gradient index lens array method based on real time integral photography for three dimensional images. Appl. Opt. 1998, 37, 2034–2045.

- Jang, J.-S.; Javidi, B. Improved viewing resolution of three-dimensional integral imaging by use of nonstationary micro-optics. Opt. Lett. 2002, 27, 324–326.

- Jang, J.-S.; Javidi, B. Three-dimensional synthetic aperture integral imaging. Opt. Lett. 2002, 27, 1144–1146.

- Jang, J.-S.; Javidi, B. Improvement of viewing angle in integral imaging by use of moving lenslet arrays with low fill factor. Appl. Opt. 2003, 42, 1996–2002.

- Jang, J.-S.; Javidi, B. Large depth-of-focus time-multiplexed three-dimensional integral imaging by use of lenslets with nonuniform focal lengths and aperture sizes. Opt. Lett. 2003, 28, 1924–1926.

- Hong, S.-H.; Jang, J.-S.; Javidi, B. Three-dimensional volumetric object reconstruction using computational integral imaging. Opt. Exp. 2004, 12, 483–491.

- Martinez-Corral, M.; Javidi, B.; Martinez-Cuenca, R.; Saavedra, G. Formation of real, orthoscopic integral images by smart pixel mapping. Opt. Exp. 2005, 13, 9175–9180.

- Martinez-Cuenca, R.; Saavedra, G.; Martinez-Corral, M.; Javidi, B. Extended depth-of-field 3-D display and visualization by combination of amplitude-modulated microlenses and deconvolution tools. IEEE J. Disp. Tech. 2005, 1, 321–327.

- Martinez-Cuenca, R.; Pons, A.; Saavedra, G.; Martinez-Corral, M.; Javidi, B. Optically-corrected elemental images for undistorted integral image display. Opt. Exp. 2006, 14, 9657–9663.

- Stern, A.; Javidi, B. Three-dimensional image sensing, visualization, and processing using integral imaging. Proc. IEEE 2006, 94, 591–607.

- Levoy, M. Light fields and computational imaging. IEEE Comput. Mag. 2006, 39, 46–55.

- Martinez-Cuenca, R.; Saavedra, G.; Martinez-Corral, M.; Javidi, B. Progress in 3-D multiperspective display by integral imaging. Proc. IEEE 2009, 97, 1067–1077.

- Cho, M.; Daneshpanah, M.; Moon, I.; Javidi, B. Three-Dimensional Optical Sensing and Visualization Using Integral Imaging. Proc. IEEE 2010, 99, 556–575.

- Cho, B.; Kopycki, P.; Martinez-Corral, M.; Cho, M. Computational volumetric reconstruction of integral imaging with improved depth resolution considering continuously non-uniform shifting pixels. Opt. Laser Eng. 2018, 111, 114–121.

- Tavakoli, B.; Javidi, B.; Watson, E. Three-dimensional visualization by photon counting computational integral imaging. Opt. Exp. 2008, 16, 4426–4436.

- Jung, J.; Cho, M.; Dey, D.-K.; Javidi, B. Three-dimensional photon counting integral imaging using Bayesian estimation. Opt. Lett. 2010, 35, 1825–1827.

- Aloni, D.; Stern, A.; Javidi, B. Three-dimensional photon counting integral imaging reconstruction using penalized maximum likelihood expectation maximization. Opt. Exp. 2011, 19, 19681–19687.

- Cho, M.; Javidi, B. Three-dimensional photon counting integral imaging using moving array lens technique. Opt. Lett. 2012, 37, 1487–1489.

- Markman, A.; Javidi, B.; Tehranipoor, M. Photon-counting security tagging and verification using optically encoded QR codes. IEEE Photonics J. 2013, 6, 1–9.

- Markman, A.; Javidi, B. Full-phase photon-counting double-random-phase encryption. JOSA A 2014, 31, 394–403.

- Cho, M. Three-dimensional color photon counting microscopy using Bayesian estimation with adaptive priori information. Chin. Opt. Lett. 2015, 13, 070301.

- Rajput, S.K.; Kumar, D.; Nishchal, N.K. Photon counting imaging and phase mask multiplexing for multiple images authentication and digital hologram security. Appl. Opt. 2015, 54, 1657–1666.

- Gupta, A.K.; Nishchal, N.K. Low-light phase imaging using in-line digital holography and the transport of intensity equation. J. Opt. 2021, 23, 025701.

- Jang, J.-Y.; Cho, M. Lensless three-dimensional imaging under photon-starved conditions. Sensors 2023, 23, 2336.

- Goodman, J.W. Statistical Optics, 2nd ed.; Wiley: New York, NY, USA, 2015.

- Javidi, B. Nonlinear joint power spectrum based optical correlation. Appl. Opt. 1989, 28, 2358–2367.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).