Multilayer Perceptron-Based Error Compensation for Automatic On-the-Fly Camera Orientation Estimation Using a Single Vanishing Point from Road Lane

Abstract

1. Introduction

1.1. Background

1.2. Purpose of Study

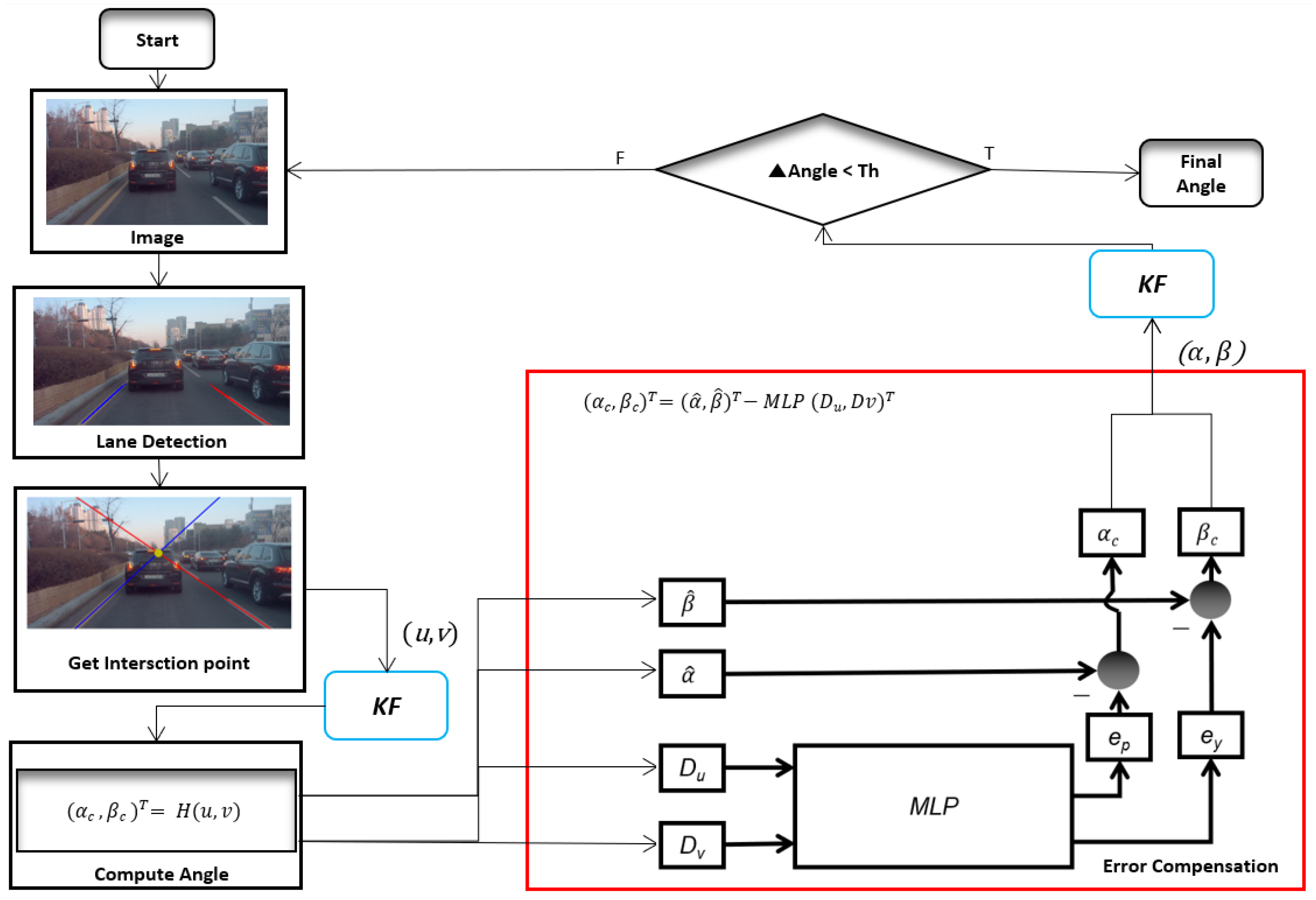

- A working pipeline is proposed for automatic on-the-fly camera orientation estimation from a single vanishing point (VP) of the road lane, with MLP-based adaptive error correction, together with a quantitative analysis.

- A stable variant of on-the-fly camera orientation estimation is proposed; it uses a Kalman filter to estimate the pitch and yaw angles relative to the road lane.

- The residual error of VP-based camera orientation estimation is compensated for, and several compensation modules are compared.
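The first two contributions can be illustrated with a minimal sketch. It assumes an ideal pinhole camera with known intrinsics (`fx`, `fy`, `cx`, `cy`), a straight lane whose direction is parallel to the vehicle's forward axis, and a simple random-walk Kalman filter applied independently to pitch and yaw. The function and class names, the sign convention, the noise variances `q` and `r`, and the sample detections are all hypothetical illustrations, not the authors' implementation.

```python
import math
from dataclasses import dataclass
from typing import Optional


def vp_to_pitch_yaw(u, v, fx, fy, cx, cy):
    """Convert a vanishing point (u, v) in pixels to (pitch, yaw) in degrees.

    Assumed convention: image x right, y down, optical axis forward.
    Yaw > 0 when the VP lies right of the principal point (cx, cy);
    pitch > 0 when the VP lies above it, i.e. the camera tilts down.
    """
    yaw = math.atan((u - cx) / fx)
    # With yaw, the VP's vertical offset is fy * tan(pitch) / cos(yaw),
    # so multiply by cos(yaw) to undo that factor.
    pitch = math.atan((cy - v) * math.cos(yaw) / fy)
    return math.degrees(pitch), math.degrees(yaw)


@dataclass
class ScalarKalman:
    """1-D Kalman filter assuming the angle follows a random walk."""
    q: float = 1e-4            # process-noise variance (deg^2), hypothetical
    r: float = 0.25            # measurement-noise variance (deg^2), hypothetical
    x: Optional[float] = None  # current angle estimate
    p: float = 1.0             # variance of the estimate

    def update(self, z: float) -> float:
        if self.x is None:                # initialise from the first measurement
            self.x, self.p = z, self.r
            return self.x
        self.p += self.q                  # predict: angle assumed unchanged
        k = self.p / (self.p + self.r)    # Kalman gain
        self.x += k * (z - self.x)        # correct with the new measurement
        self.p *= 1.0 - k                 # shrink the estimate variance
        return self.x


# Usage with made-up VP detections and intrinsics.
pitch_kf, yaw_kf = ScalarKalman(), ScalarKalman()
for u, v in [(980.0, 520.0), (975.0, 525.0), (985.0, 518.0)]:
    pitch, yaw = vp_to_pitch_yaw(u, v, fx=1000.0, fy=1000.0, cx=960.0, cy=540.0)
    print(f"pitch {pitch_kf.update(pitch):.3f} deg, yaw {yaw_kf.update(yaw):.3f} deg")
```

Filtering each angle separately keeps the sketch short; a filter over the joint (pitch, yaw) state, or one with a motion model, would be a natural extension under different assumptions.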

2. Related Work

3. Proposed Method

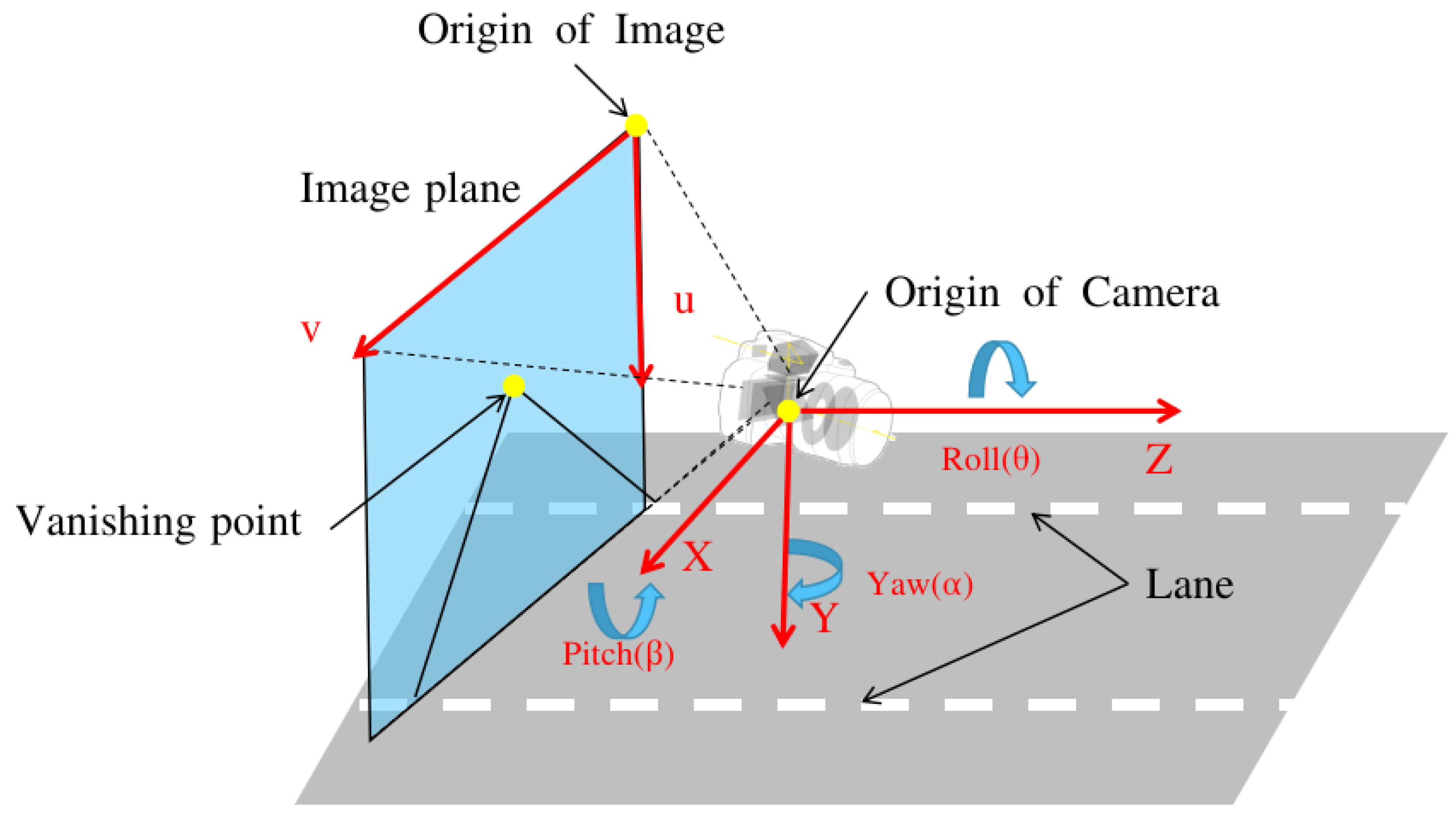

3.1. Camera Orientation Estimation

3.2. Error Compensation of Orientation Estimation

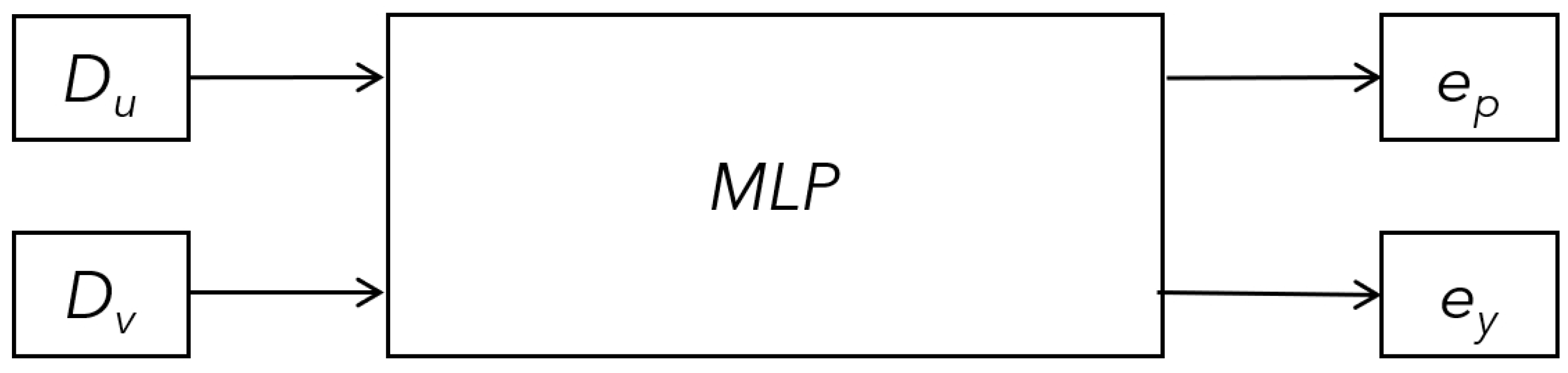

3.3. Multilayer Perceptron

4. Experimental Result

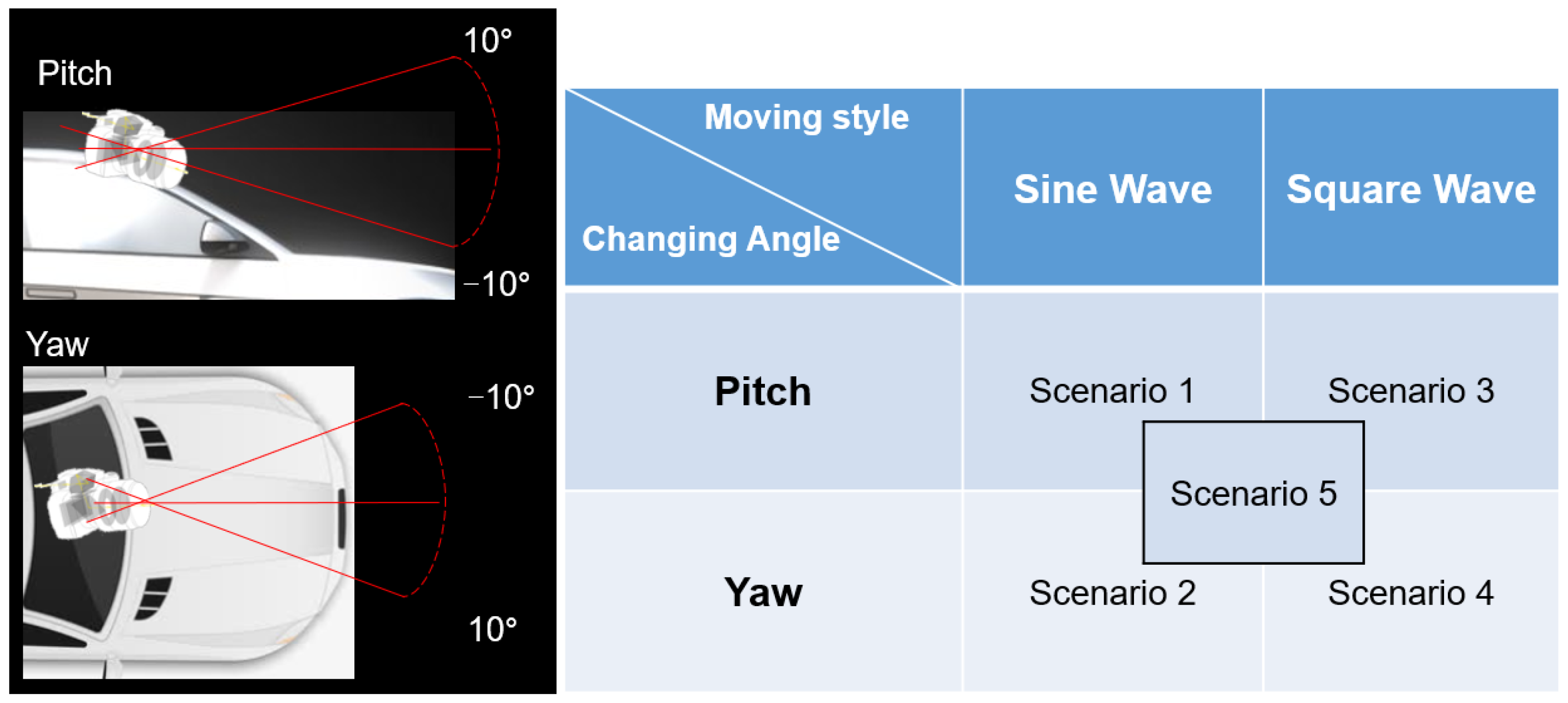

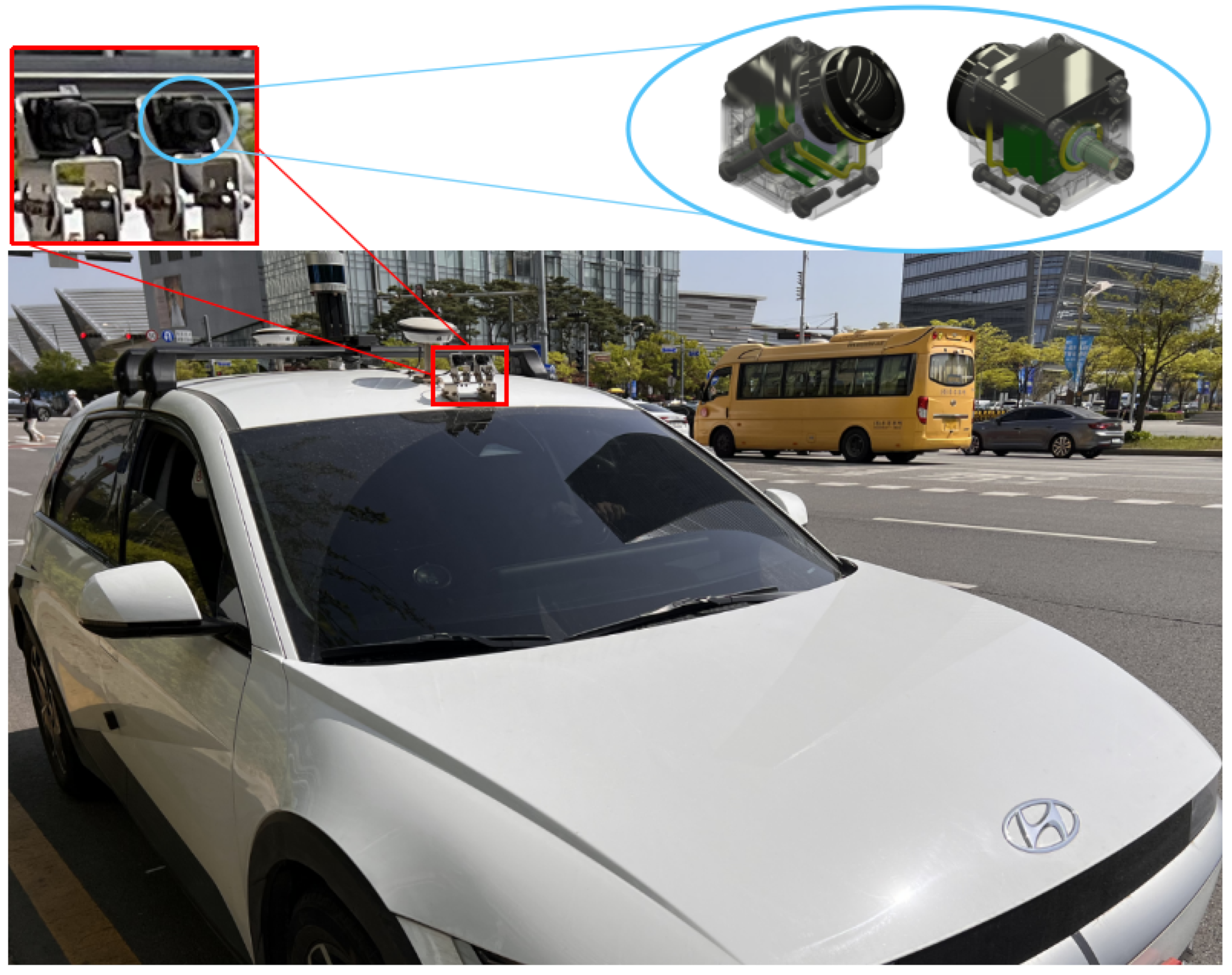

4.1. Experimental Environment: Simulation and Real

- Scenario 1: To test whether the algorithm follows pitch motion with a 0.1° variation, the pitch angle is changed continuously while the yaw angle is fixed to .

- Scenario 2: To test whether the algorithm follows yaw motion with a 0.1° variation, the yaw angle is changed continuously while the pitch angle is fixed to .

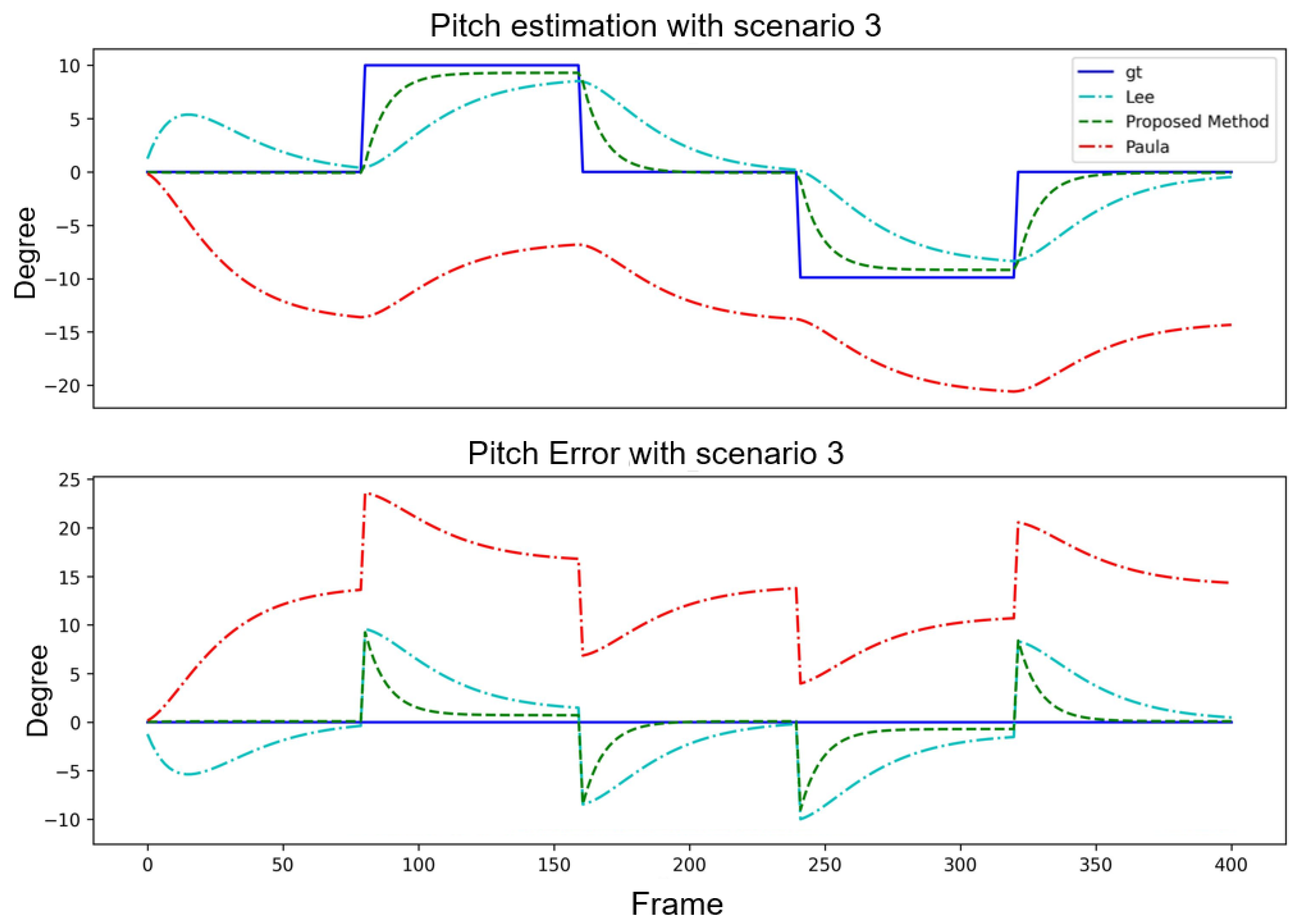

- Scenario 3: To test whether the system converges within a certain number of frames when correcting the pitch angle with a variation, the pitch angle is modified by in each frame while the yaw angle is fixed to .

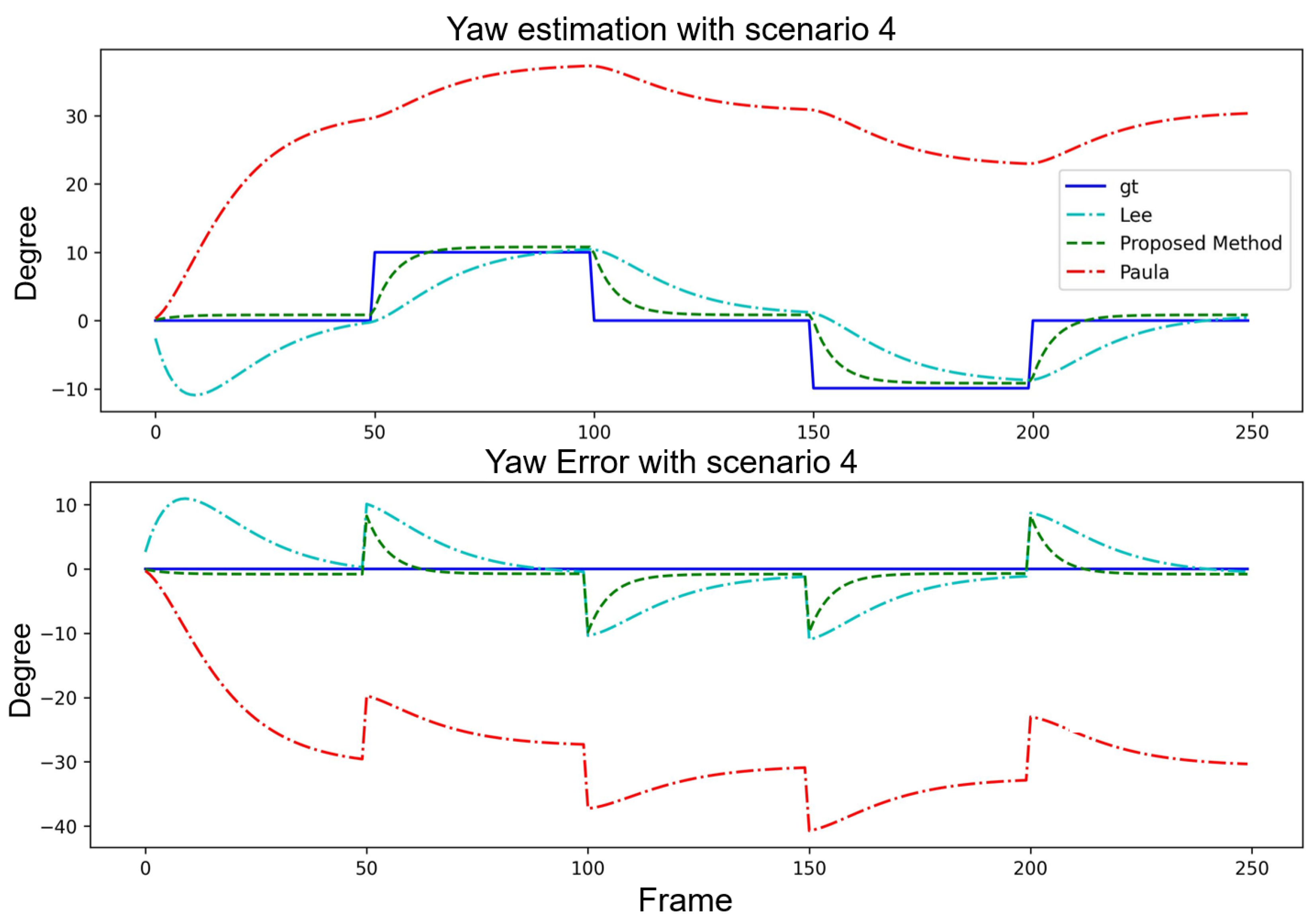

- Scenario 4: To test whether the system converges within a certain number of frames when correcting the yaw angle with a variation, the yaw angle is modified by in each frame while the pitch angle is fixed to .

- Scenario 5: To test whether the system converges within a certain number of frames when correcting both angles with a variation, the pitch and yaw angles are modified by in each frame.

4.2. Results

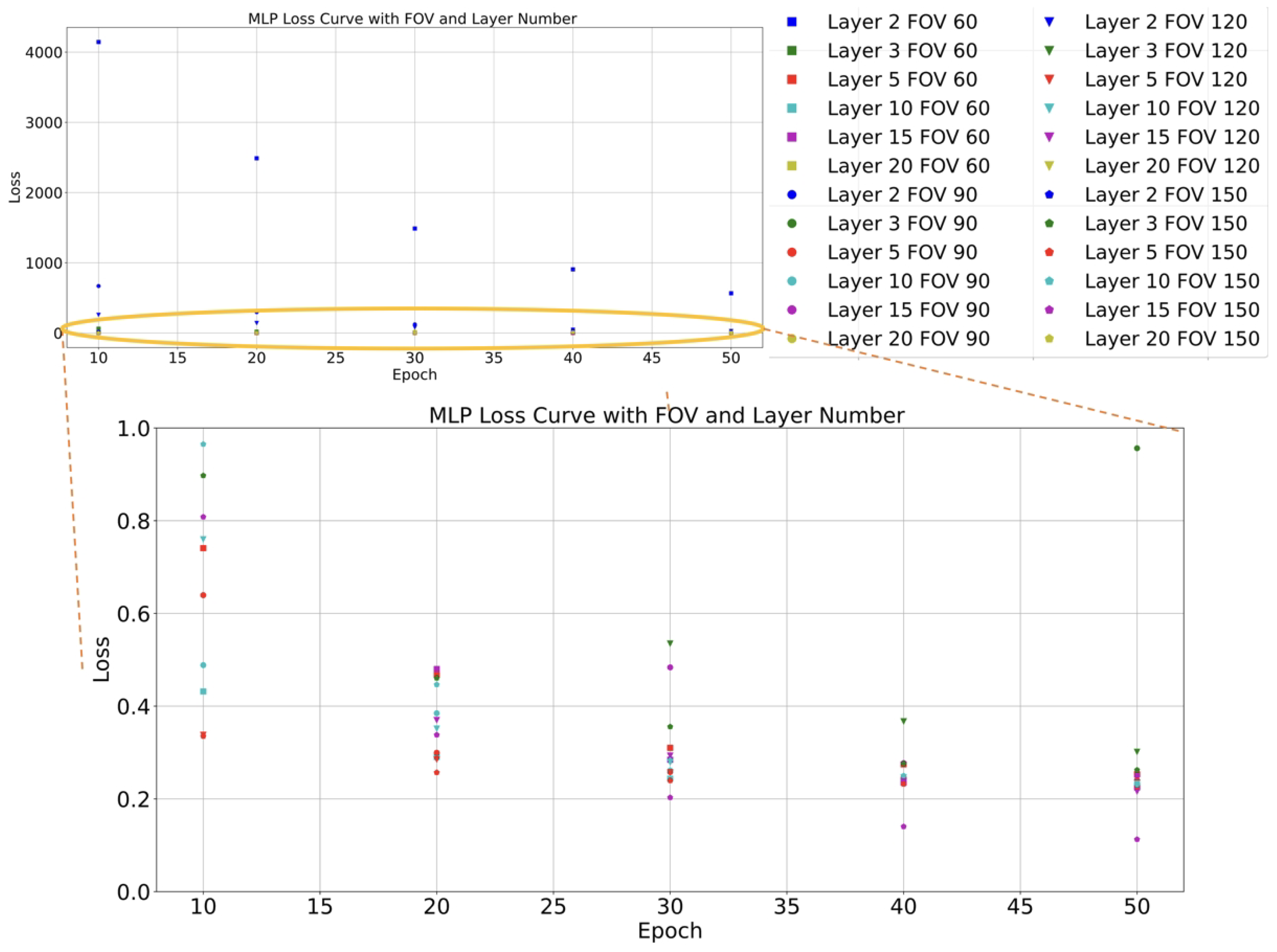

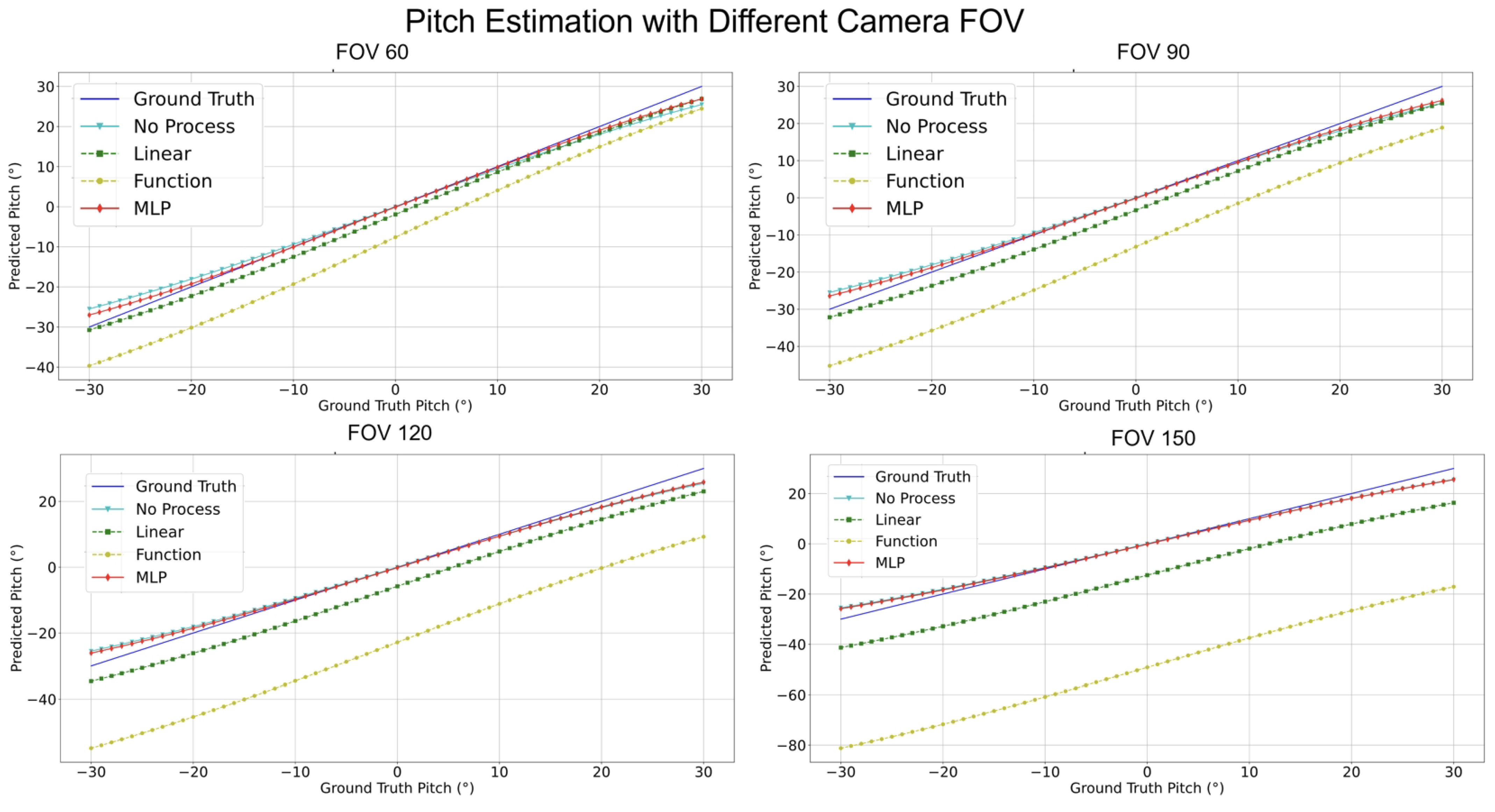

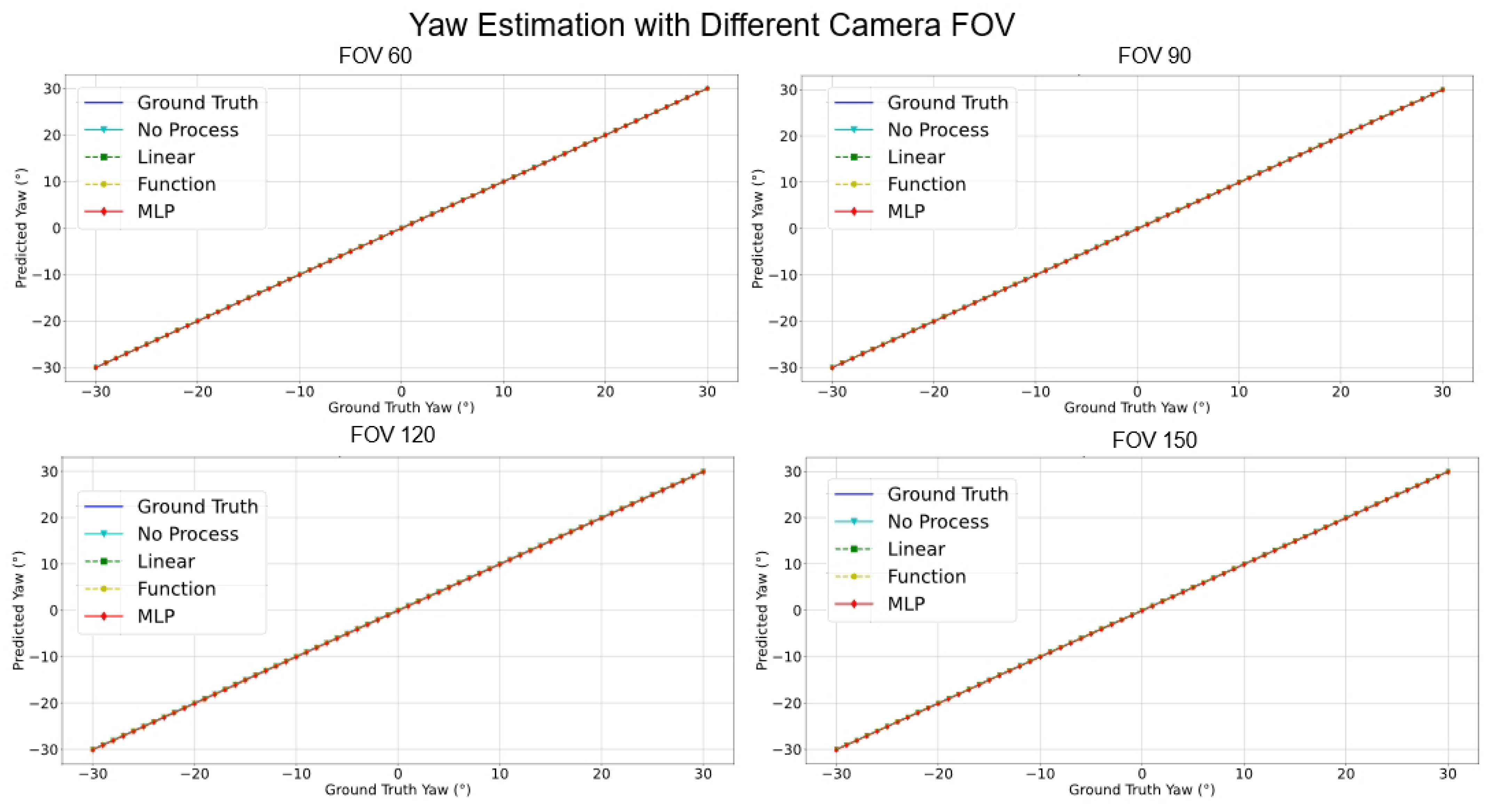

5. Ablation Study

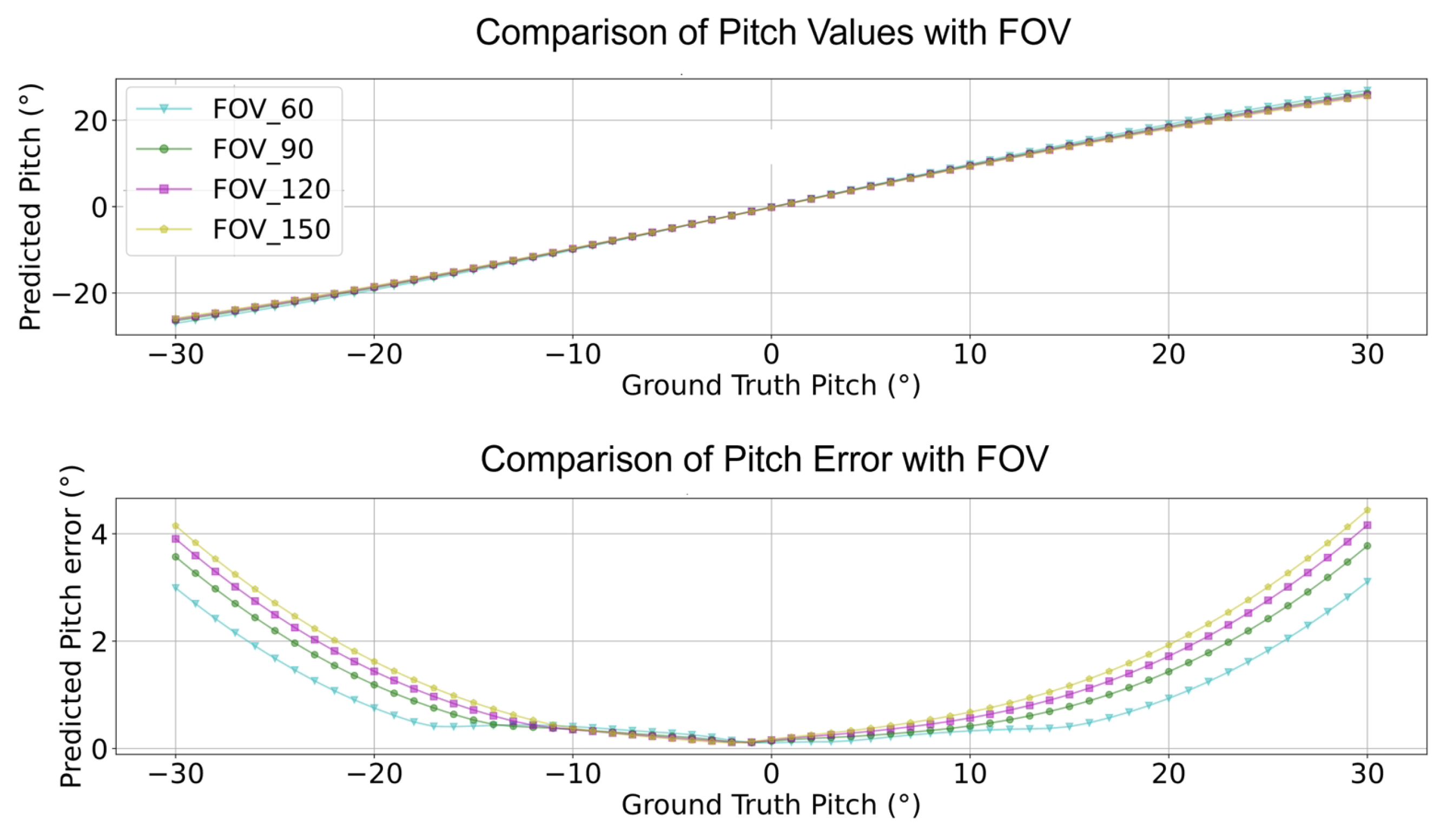

- Pitch Analysis: The MLP method consistently outperformed the other techniques in estimating pitch angles across all FOV ranges, and its estimates remained close to the ground truth (GT). In contrast, the uncompensated “No process” method deviated substantially from the true values.

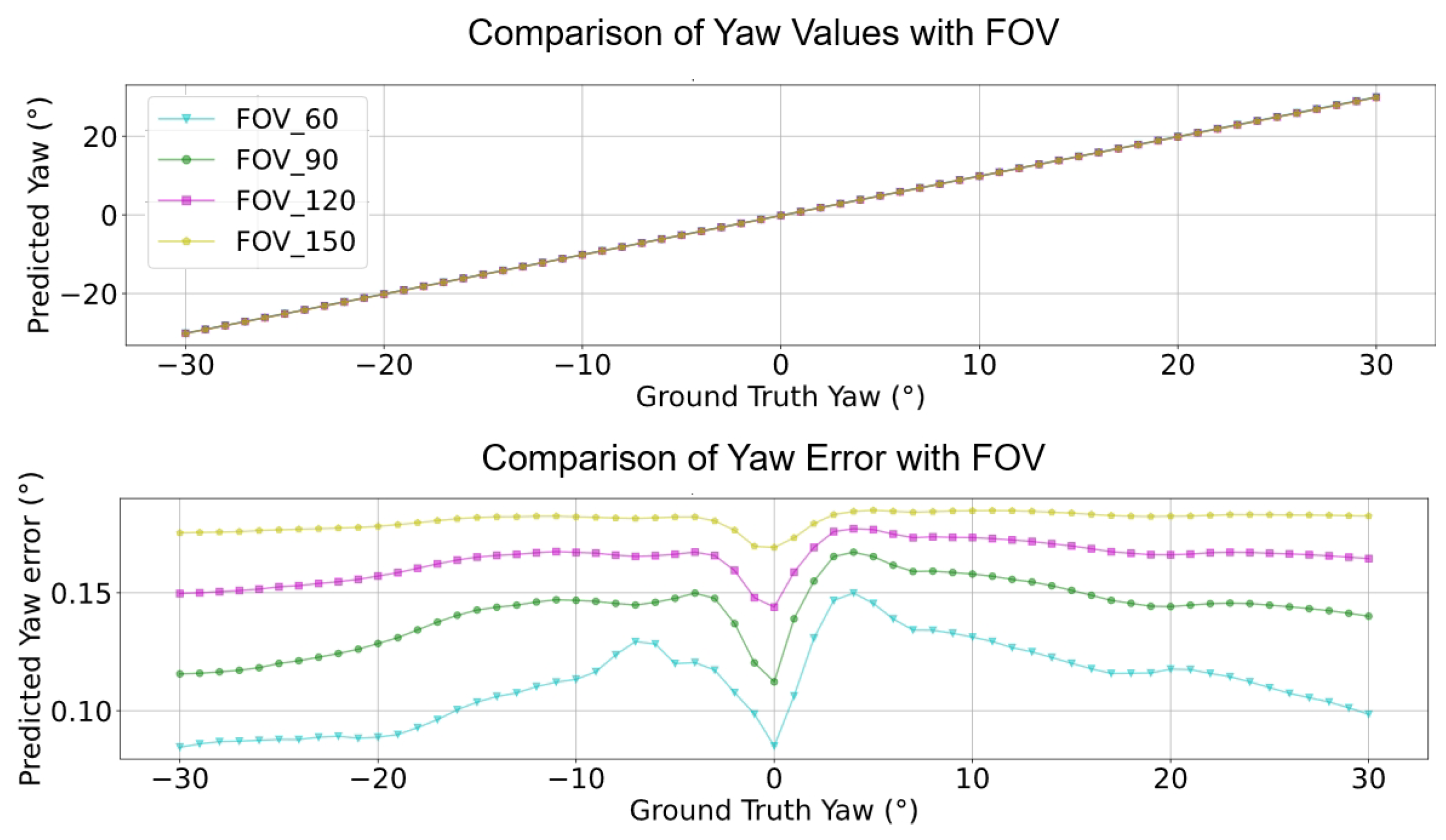

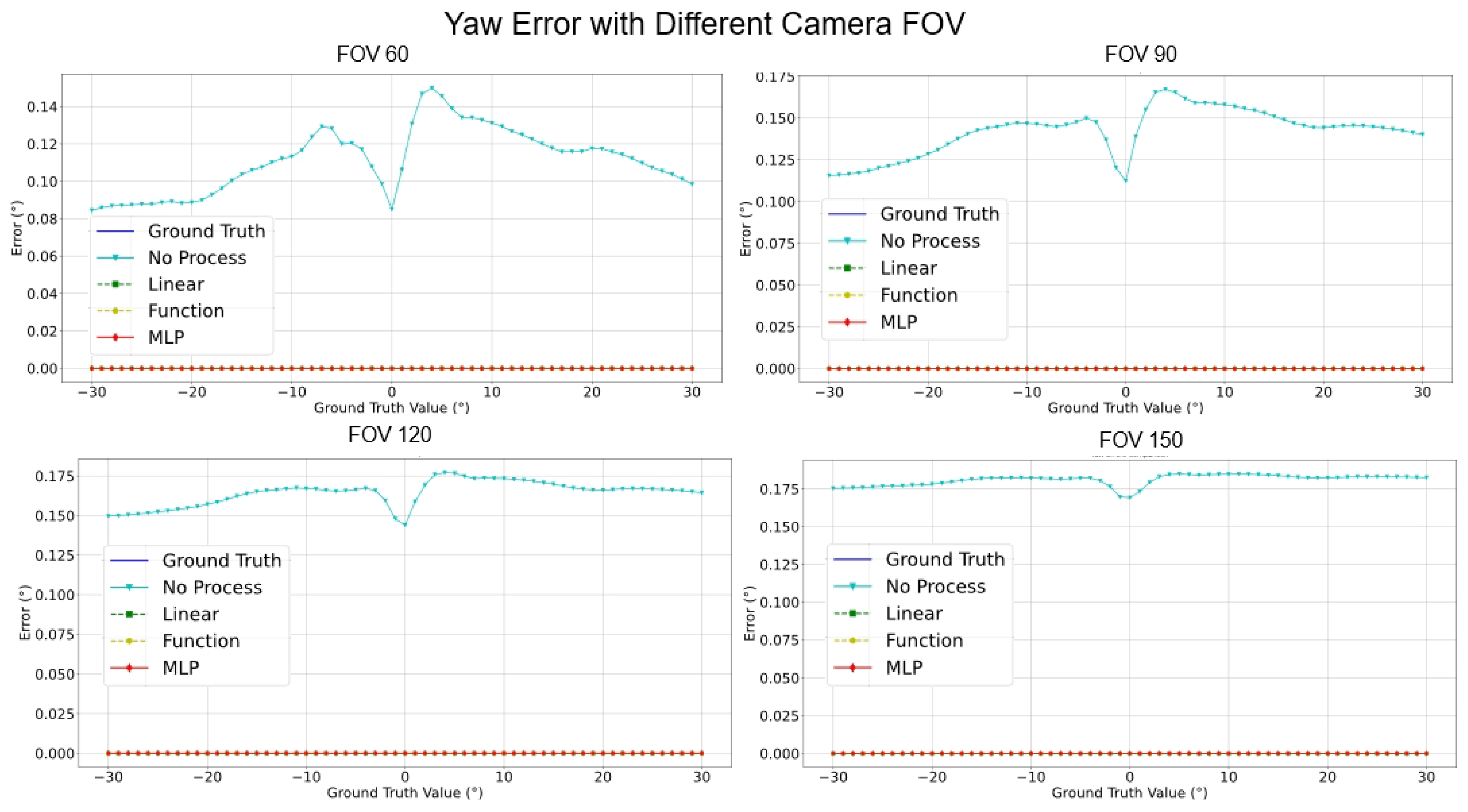

- Yaw Analysis: Several methods estimated yaw precisely across most FOVs. However, the “Linear” method showed small error increases at specific angles, whereas the accuracy of the MLP remained nearly constant.

- FOV Assessment: Across the tested FOVs, the MLP method gave the most accurate pitch and yaw estimates. The Linear and other methods were effective within certain angular ranges but deviated noticeably under particular conditions.

- Estimation Error for Pitch: MLP registered the smallest error at every FOV (from 0.881° at 60° to 1.473° at 150°). Its advantage over the other compensation modules was most pronounced at 150°, where the Linear and Function methods reached 12.482° and 49.164°, respectively. The Linear Regression error rose steeply as the FOV increased, and the “Function” error grew the most with FOV enlargement.

- Estimation Error for Yaw: The Linear Regression, Function Compensation, and MLP methods all reported zero yaw error at every FOV. The uncompensated “No process” method remained accurate, but its error grew slightly as the FOV widened, from 0.111° at 60° to 0.181° at 150°.

- Processing Time Assessment: The “Function” method was the fastest at every FOV (0.003 ms), whereas the “Linear” method was the slowest (0.094 to 0.098 ms). MLP (0.061 to 0.063 ms) and “No process” (0.024 to 0.025 ms) had nearly constant processing times across FOVs.
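The pitch comparisons above can be checked arithmetically against the FOV ablation table. The short sketch below transcribes the pitch errors from that table and reports, for each FOV, the method with the lowest error and the MLP's relative reduction with respect to the uncompensated “No process” baseline. The dictionary layout and the `summarize` helper are illustrative choices, not part of the original study.

```python
# Pitch estimation error (degrees) transcribed from the FOV ablation table.
PITCH_ERROR = {
    60: {"No process": 1.572, "Linear": 1.944, "Function": 7.606, "MLP": 0.881},
    90: {"No process": 1.572, "Linear": 3.344, "Function": 13.174, "MLP": 1.155},
    120: {"No process": 1.572, "Linear": 5.793, "Function": 22.817, "MLP": 1.336},
    150: {"No process": 1.572, "Linear": 12.482, "Function": 49.164, "MLP": 1.473},
}


def summarize(errors):
    """Return {fov: (best_method, mlp_reduction_vs_no_process_in_percent)}."""
    summary = {}
    for fov, row in sorted(errors.items()):
        best = min(row, key=row.get)          # method with the lowest error
        baseline = row["No process"]
        reduction = 100.0 * (baseline - row["MLP"]) / baseline
        summary[fov] = (best, round(reduction, 1))
    return summary


for fov, (best, reduction) in summarize(PITCH_ERROR).items():
    print(f"FOV {fov}: best = {best}, MLP vs No process: -{reduction}%")
```

On these numbers the MLP has the lowest pitch error at every FOV, but its margin over the uncompensated baseline narrows from about 44% at 60° to about 6% at 150°; its large advantage at wide FOVs is relative to the Linear and Function modules.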

6. Discussions

6.1. Practical Application and Limitations

6.2. Future Work

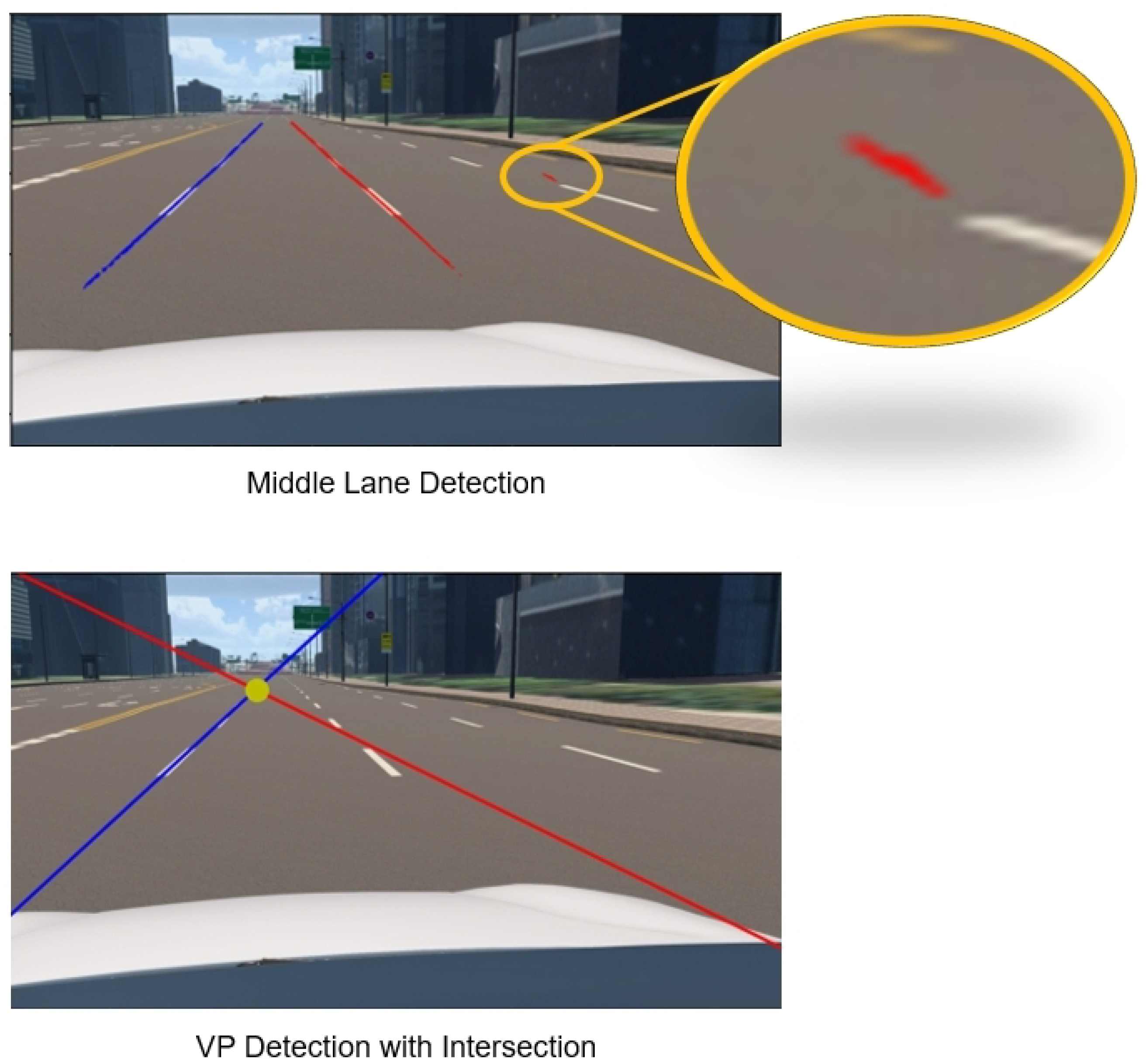

- Accurate Lane Detection and Its Processing: More accurate VP prediction requires accurate lane instance segmentation or a similar instance classification function. Building an end-to-end deep neural network from VP detection to orientation estimation may be a promising research direction.

- More Accurate Angle Estimation: Although the compensated angles show an advantage over the other methods, the method itself still leaves room for improvement, for example in pitch prediction in real environments and in the generalization of the compensation module across camera FOVs.


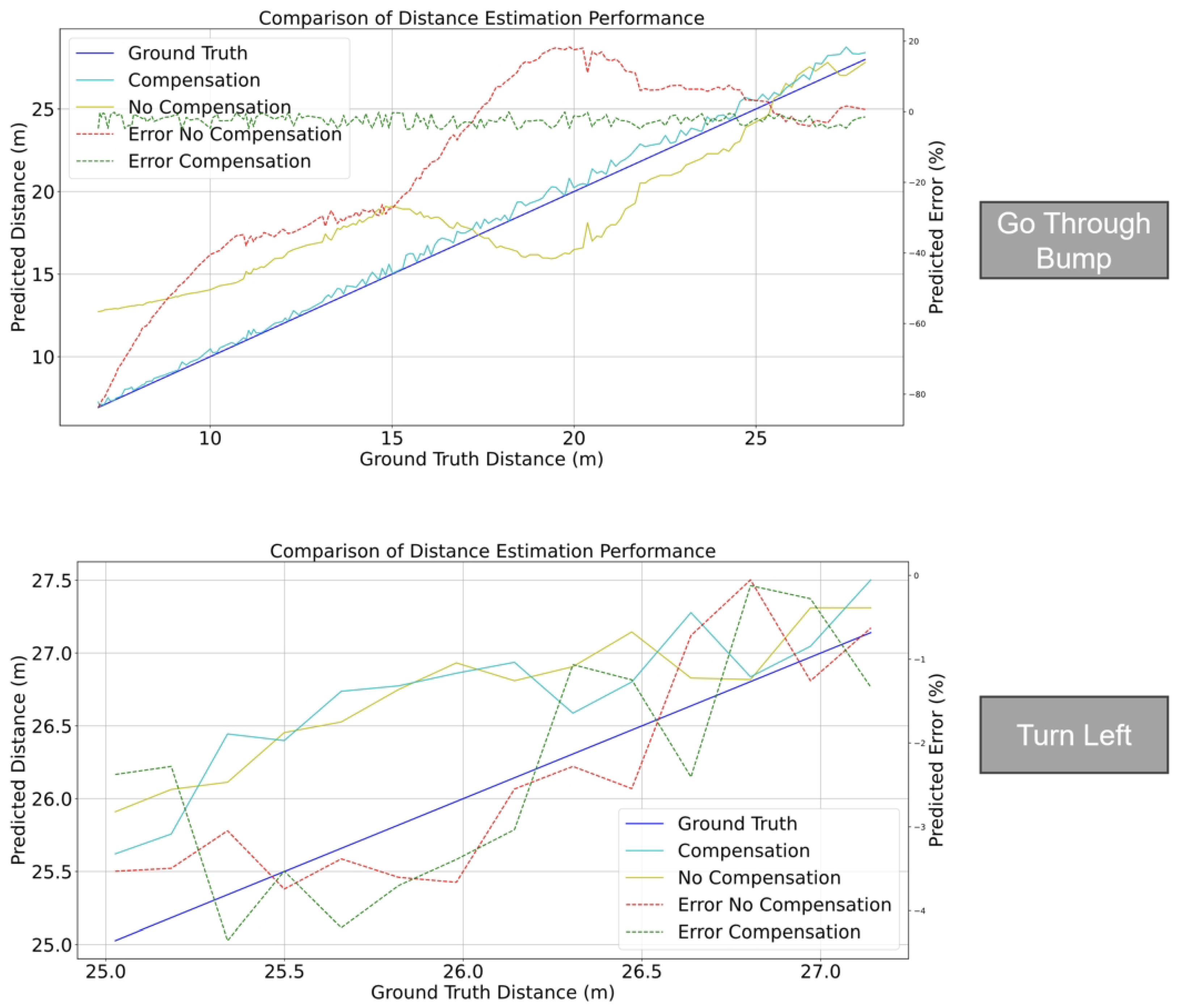

- Curve Case Processing: The proposed method fails during cornering, which is a practical shortcoming. It is therefore also necessary to determine whether, and how, orientation estimation should be applied on curves.
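Because VP accuracy hinges on lane detection, it may help to see how a single VP can be obtained from two detected lane boundaries. This is a minimal sketch using the standard homogeneous-coordinates construction, not necessarily the authors' detector: it assumes each boundary has already been fitted as a straight line through two image points. The line through two points is their cross product, and the intersection of two lines is the cross product of the lines. The helper names, the sample points, and the parallel-line tolerance `eps` are hypothetical.

```python
from typing import Optional, Tuple

Point = Tuple[float, float]
Vec3 = Tuple[float, float, float]


def cross(a: Vec3, b: Vec3) -> Vec3:
    """Cross product of two 3-vectors."""
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def line_through(p: Point, q: Point) -> Vec3:
    """Homogeneous line l = p x q through two image points."""
    return cross((p[0], p[1], 1.0), (q[0], q[1], 1.0))


def vanishing_point(left: Tuple[Point, Point], right: Tuple[Point, Point],
                    eps: float = 1e-9) -> Optional[Point]:
    """Intersect two lane boundaries; return None if they are (nearly) parallel."""
    x, y, w = cross(line_through(*left), line_through(*right))
    if abs(w) < eps:
        return None  # the lines meet at infinity in the image plane
    return (x / w, y / w)


# Usage with made-up lane segments (pixel coordinates, y pointing down).
vp = vanishing_point(((400.0, 1000.0), (800.0, 600.0)),
                     ((1500.0, 1000.0), (1100.0, 600.0)))
print(vp)
```

The resulting point would then be converted to pitch and yaw using the camera intrinsics. On a curve, straight-line fits to different parts of the lane intersect at different points, which is consistent with the cornering failure noted above.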

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Gupta, A.; Choudhary, A. A Framework for Camera-Based Real-Time Lane and Road Surface Marking Detection and Recognition. IEEE Trans. Intell. Veh. 2018, 3, 476–485.

2. Chen, L.; Tang, T.; Cai, Z.; Li, Y.; Wu, P.; Li, H.; Qiao, Y. Level 2 autonomous driving on a single device: Diving into the devils of openpilot. arXiv 2022, arXiv:2206.08176.

3. Kim, J.K.; Kim, H.R.; Oh, J.S.; Li, X.Y.; Jang, K.J.; Kim, H. Distance Measurement of Tunnel Facilities for Monocular Camera-based Localization. J. Inst. Control Robot. Syst. 2023, 29, 7–14.

4. Vajgl, M.; Hurtik, P.; Nejezchleba, T. Dist-YOLO: Fast object detection with distance estimation. Appl. Sci. 2022, 12, 1354.

5. Zhang, Y.; Zhou, L.; Liu, H.; Shang, Y. A flexible online camera calibration using line segments. J. Sens. 2016, 2016, 2802343.

6. Hold-Geoffroy, Y.; Sunkavalli, K.; Eisenmann, J.; Fisher, M.; Gambaretto, E.; Hadap, S.; Lalonde, J.F. A perceptual measure for deep single image camera calibration. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2354–2363.

7. Ge, D.-Y.; Yao, X.-F.; Xiang, W.-J.; Liu, E.-C.; Wu, Y.-Z. Calibration on Camera’s Intrinsic Parameters Based on Orthogonal Learning Neural Network and Vanishing Points. IEEE Sens. J. 2020, 20, 11856–11863.

8. Lébraly, P.; Deymier, C.; Ait-Aider, O.; Royer, E.; Dhome, M. Flexible extrinsic calibration of non-overlapping cameras using a planar mirror: Application to vision-based robotics. In Proceedings of the 2010 IEEE/RSJ International Conference on Intelligent Robots and Systems, Taipei, Taiwan, 18–22 October 2010; pp. 5640–5647.

9. Ly, D.S.; Demonceaux, C.; Vasseur, P.; Pégard, C. Extrinsic calibration of heterogeneous cameras by line images. Mach. Vis. Appl. 2014, 25, 1601–1614.

10. Jansen, W.; Laurijssen, D.; Daems, W.; Steckel, J. Automatic calibration of a six-degrees-of-freedom pose estimation system. IEEE Sens. J. 2019, 19, 8824–8831.

11. Domhof, J.; Kooij, J.F.P.; Gavrila, D.M. A Joint Extrinsic Calibration Tool for Radar, Camera and Lidar. IEEE Trans. Intell. Veh. 2021, 6, 571–582.

12. Lee, J.H.; Lee, D.-W. A Hough-Space-Based Automatic Online Calibration Method for a Side-Rear-View Monitoring System. Sensors 2020, 20, 3407.

13. Wu, Z.; Fu, W.; Xue, R.; Wang, W. A Novel Line Space Voting Method for Vanishing-Point Detection of General Road Images. Sensors 2016, 16, 948.

14. de Paula, M.B.; Jung, C.R.; da Silveira, L.G. Automatic on-the-fly extrinsic camera calibration of onboard vehicular cameras. Expert Syst. Appl. 2014, 41, 1997–2007.

15. Lee, J.K.; Baik, Y.K.; Cho, H.; Yoo, S. Online Extrinsic Camera Calibration for Temporally Consistent IPM Using Lane Boundary Observations with a Lane Width Prior. arXiv 2020, arXiv:2008.03722.

16. Jang, J.; Jo, Y.; Shin, M.; Paik, J. Camera Orientation Estimation Using Motion-Based Vanishing Point Detection for Advanced Driver-Assistance Systems. IEEE Trans. Intell. Transp. Syst. 2021, 22, 6286–6296.

17. Guo, K.; Ye, H.; Gu, J.; Tian, Y. A Fast and Simple Method for Absolute Orientation Estimation Using a Single Vanishing Point. Appl. Sci. 2022, 12, 8295.

18. Wang, L.L.; Tsai, W.H. Camera calibration by vanishing lines for 3-D computer vision. IEEE Trans. Pattern Anal. Mach. Intell. 1991, 13, 370–376.

19. Meng, L.; Li, Y.; Wang, Q.L. A calibration method for mobile omnidirectional vision based on structured light. IEEE Sens. J. 2021, 21, 11451–11460.

20. Bellino, M.; de Meneses, Y.L.; Kolski, S.; Jacot, J. Calibration of an embedded camera for driver-assistant systems. In Proceedings of the IEEE International Conference on Industrial Informatics, Perth, Australia, 10–12 August 2005; pp. 354–359.

21. Zhuang, S.; Zhao, Z.; Cao, L.; Wang, D.; Fu, C.; Du, K. A Robust and Fast Method to the Perspective-n-Point Problem for Camera Pose Estimation. IEEE Sens. J. 2023, 23, 11892–11906.

22. Bazin, J.C.; Pollefeys, M. 3-line RANSAC for orthogonal vanishing point detection. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura, Portugal, 7–12 October 2012; pp. 4282–4287.

23. Tabb, A.; Yousef, K.M.A. Parameterizations for reducing camera reprojection error for robot-world hand-eye calibration. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–3 October 2015; pp. 3030–3037.

24. Taud, H.; Mas, J.F. Multilayer perceptron (MLP). In Geomatic Approaches for Modeling Land Change Scenarios; Springer: Cham, Switzerland, 2018; pp. 451–455.

25. Rong, G.; Shin, B.H.; Tabatabaee, H.; Lu, Q.; Lemke, S.; Možeiko, M.; Kim, S. LGSVL simulator: A high fidelity simulator for autonomous driving. In Proceedings of the 2020 IEEE 23rd International Conference on Intelligent Transportation Systems (ITSC), Rhodes, Greece, 20–23 September 2020; pp. 1–6.

26. Hong, C.J.; Aparow, V.R. System configuration of Human-in-the-loop Simulation for Level 3 Autonomous Vehicle using IPG CarMaker. In Proceedings of the 2021 IEEE International Conference on Internet of Things and Intelligence Systems (IoTaIS), Bandung, Indonesia, 23–24 November 2021; pp. 215–221.

27. Dosovitskiy, A.; Ros, G.; Codevilla, F.; Lopez, A.; Koltun, V. CARLA: An open urban driving simulator. In Proceedings of the Conference on Robot Learning (CoRL), Mountain View, CA, USA, 13–15 November 2017; pp. 1–16.

28. Samak, T.V.; Samak, C.V.; Xie, M. Autodrive simulator: A simulator for scaled autonomous vehicle research and education. In Proceedings of the 2021 2nd International Conference on Control, Robotics and Intelligent System, Qingdao, China, 20–22 August 2021; pp. 1–5.

29. Rojas, M.; Hermosilla, G.; Yunge, D.; Farias, G. An Easy to Use Deep Reinforcement Learning Library for AI Mobile Robots in Isaac Sim. Appl. Sci. 2022, 12, 8429.

30. Shah, S.; Dey, D.; Lovett, C.; Kapoor, A. AirSim: High-Fidelity Visual and Physical Simulation for Autonomous Vehicles. In Field and Service Robotics: Results of the 11th International Conference; Springer International Publishing: Cham, Switzerland, 2017; pp. 621–635.

31. Park, C.; Kee, S.C. Online local path planning on the campus environment for autonomous driving considering road constraints and multiple obstacles. Appl. Sci. 2021, 11, 3909.

| Simulator | Image Resolution | FOV (°) | Camera Model | Camera Pose | Map Generating | CPU/GPU Minimum Requirements |
|---|---|---|---|---|---|---|
| LGSVL [25] | 0∼1920 × 0∼1080 | 30∼90 | Ideal | R (0.1)/T (0.1) | - | 4 GHz quad-core CPU/GTX 1080 8 GB |
| CarMaker [26] | 0∼1920 × 0∼1920 | 30∼180 | Physical | R (0.1)/T (0.1) | Unity | RAM 4 GB, 1 GHz CPU/- |
| CARLA [27] | 0∼1920 × 0∼1920 | 30∼180 | Physical | R (0.1)/T (0.1) | Unity/Unreal | RAM 8 GB, Intel i5/GTX 970 |
| NVIDIA DRIVE Sim [28] | 0∼1920 × 0∼1920 | 30∼200 | Physical | - | Unity/Unreal | RAM 64 GB/RTX 3090 |
| Isaac Sim [29] | 0∼1920 × 0∼1920 | 0∼90 | Physical | R (0.001)/T (10 × 10⁻⁵) | Unity/Unreal | RAM 32 GB, Intel i7 7th gen/RTX 2070 8 GB |
| AirSim [30] | 0∼1920 × 0∼1920 | 30∼180 | Physical | R (0.1)/T (0.1) | Unity/Unreal | RAM 8 GB, Intel i5/GTX 970 |
| MORAI [31] | 0∼1920 × 0∼1920 | 30∼179 | Ideal/Physical | R (10 × 10⁻⁶)/T (10 × 10⁻⁶) | Unity | RAM 16 GB, Intel i5 9th gen/RTX 2060 Super |

| Metric | Paula et al. [14] | Lee et al. [15] | Proposed Method |
|---|---|---|---|
| avgE | 2.551 | 0.020 | 0.015 |
| minE | 3.865 | 0.130 | 0.120 |
| maxE | 4.958 | 0.236 | 0.220 |
| Stdev | 2.525 | 1.817 | 1.352 |

| Metric | Paula et al. [14] | Lee et al. [15] | Proposed Method |
|---|---|---|---|
| avgE | −29.109 | −0.105 | −0.566 |
| minE | 0.307 | 0.017 | 0.002 |
| maxE | 34.221 | 11.810 | 6.211 |
| Stdev | 5.576 | 3.285 | 1.726 |

| Metric | Paula et al. [14] | Lee et al. [15] | Proposed Method |
|---|---|---|---|
| avgE | −10.392 | 1.465 | 1.731 |
| minE | −13.210 | −0.088 | −0.057 |
| maxE | −3.766 | 3.073 | 4.317 |
| Stdev | 5.013 | 5.051 | 5.009 |

| Metric | Paula et al. [14] | Lee et al. [15] | Proposed Method |
|---|---|---|---|
| avgE | −20.334 | 4.523 | 4.804 |
| minE | 0.307 | 0.673 | 0.112 |
| maxE | 38.270 | 20.763 | 14.877 |
| Stdev | 13.340 | 9.049 | 6.347 |

| Metric | Paula et al. [14] Pitch | Paula et al. [14] Yaw | Lee et al. [15] Pitch | Lee et al. [15] Yaw | Proposed Method Pitch | Proposed Method Yaw |
|---|---|---|---|---|---|---|
| avgE | 9.241 | −22.823 | −2.573 | −0.297 | −2.567 | −0.256 |
| ssE | −14.266 | 24.854 | −0.836 | −1.400 | −0.847 | −1.395 |
| Stdev | 2.680 | 2.630 | 0.165 | 0.199 | 0.172 | 0.156 |

| FOV (°) | Metric | No Process | Linear | Function | MLP |
|---|---|---|---|---|---|
| 60 | Pitch error (°) | 1.572 | 1.944 | 7.606 | 0.881 |
| 60 | Yaw error (°) | 0.111 | 0 | 0 | 0 |
| 60 | Processing time (ms) | 0.025 | 0.098 | 0.003 | 0.063 |
| 60 | FLOPs | 70 | 80 | 120 | 37,318 |
| 90 | Pitch error (°) | 1.572 | 3.344 | 13.174 | 1.155 |
| 90 | Yaw error (°) | 0.142 | 0 | 0 | 0 |
| 90 | Processing time (ms) | 0.025 | 0.097 | 0.003 | 0.063 |
| 90 | FLOPs | 70 | 80 | 120 | 37,318 |
| 120 | Pitch error (°) | 1.572 | 5.793 | 22.817 | 1.336 |
| 120 | Yaw error (°) | 0.164 | 0 | 0 | 0 |
| 120 | Processing time (ms) | 0.024 | 0.094 | 0.003 | 0.061 |
| 120 | FLOPs | 70 | 80 | 120 | 37,318 |
| 150 | Pitch error (°) | 1.572 | 12.482 | 49.164 | 1.473 |
| 150 | Yaw error (°) | 0.181 | 0 | 0 | 0 |
| 150 | Processing time (ms) | 0.024 | 0.096 | 0.003 | 0.062 |
| 150 | FLOPs | 70 | 80 | 120 | 37,318 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Li, X.; Kim, H.; Kakani, V.; Kim, H. Multilayer Perceptron-Based Error Compensation for Automatic On-the-Fly Camera Orientation Estimation Using a Single Vanishing Point from Road Lane. Sensors 2024, 24, 1039. https://doi.org/10.3390/s24031039

Li X, Kim H, Kakani V, Kim H. Multilayer Perceptron-Based Error Compensation for Automatic On-the-Fly Camera Orientation Estimation Using a Single Vanishing Point from Road Lane. Sensors. 2024; 24(3):1039. https://doi.org/10.3390/s24031039

Chicago/Turabian Style: Li, Xingyou, Hyoungrae Kim, Vijay Kakani, and Hakil Kim. 2024. "Multilayer Perceptron-Based Error Compensation for Automatic On-the-Fly Camera Orientation Estimation Using a Single Vanishing Point from Road Lane" Sensors 24, no. 3: 1039. https://doi.org/10.3390/s24031039

APA Style: Li, X., Kim, H., Kakani, V., & Kim, H. (2024). Multilayer Perceptron-Based Error Compensation for Automatic On-the-Fly Camera Orientation Estimation Using a Single Vanishing Point from Road Lane. Sensors, 24(3), 1039. https://doi.org/10.3390/s24031039