1. Introduction

Parkinson’s disease (PD) is a neurodegenerative condition that affects the motor system due to a reduction in dopamine-producing neurons within the brain [1]. While PD symptoms may vary from person to person, one of the common and disabling motor symptoms in more advanced stages of the disease is freezing of gait (FOG) [2,3]. FOG is a walking disturbance that interferes with the ability to perform cyclical stepping activities and leads to an involuntary arrest of forward walking progression [1]. FOG has been described as feeling like one’s feet are ‘glued to the floor’ [4,5]. Freezing can also lead to overall reduced mobility and falling [4].

Rhythmic cues (i.e., cues administered at a constant rhythm in the form of auditory, visual, or tactile stimuli) can improve gait speed, reduce the number of freezing episodes, and increase the distance that people with PD can walk without experiencing FOG [6]. However, constant cues throughout the day, as well as cueing at a rhythm different from a person’s intended stepping rhythm, could be distracting and may even induce FOG [7]. Intelligent cues, which are administered only when needed, could reduce the disadvantages of constant rhythmic cueing. To enable intelligent cueing, it is necessary to first develop a system capable of detecting or, preferably, predicting FOG.

Detection and prediction of FOG episodes in real-time have been addressed through various methods, primarily leveraging wearable sensors [8,9,10,11,12,13,14]. Existing studies report varying sensitivities (63% to 100%) and specificities (59% to 100%) for FOG detection [8]. In [15], a convolutional neural network was used to predict FOG, on average, 2.72 s prior to onset, with a sensitivity of 84.61% and a specificity of 94.74%. This study classified FOG identification as a prediction. The study utilized acceleration data from the left leg and wrist, which may be difficult to acquire in a real-world device. In [16], a convolutional neural network employing a single inertial sensor placed on the waist achieved 87.7% sensitivity and 88.3% specificity. This model identified FOG episodes 1.2 s prior to onset. While many studies have focused on inertial measurement unit-based wearable devices [9,10,11,12,13,16,17,18], plantar-pressure sensors offer reduced measurement noise and added discretion in a real-world device. In [19], plantar-pressure data were utilized, yielding 96% sensitivity and 94% specificity for FOG detection. However, FOG episode predictive capabilities were not specified. While FOG identification methods provide valuable insights, their limitations lie in the reactive nature of detection, responding either during or after the onset of a freeze. Anticipating freezing events before they occur could enable timely intervention to prevent the onset of a freeze.

To advance FOG prediction using plantar-pressure data, this paper extends previous work [20,21] on the development of a FOG prediction and detection system. The model in [20] was trained on data from only 11 participants with PD. A new dataset could have different FOG presentations, occurrence rates, and durations that can affect model performance. Furthermore, a larger number of participants would provide more varied training data, which could improve overall performance. There is a need to determine whether expanding the dataset improves model generalizability and to validate the model on a larger number of participants. In this paper, the number of participants was doubled, and models trained on the different datasets were analyzed to assess their generalizability. Furthermore, outliers in the datasets were removed to analyze their effect on the generated models. This analysis will help in understanding the effect of different datasets on the proposed FOG prediction models. Such models could be implemented in intelligent cueing to enhance the mobility and safety of people with PD.

The rest of the paper is organized as follows: Section 2 outlines the design and implementation of the plantar-pressure sensor system, along with the data collection and analysis protocols. Section 3 presents the results, including the system’s accuracy, reliability, and performance metrics in real-time FOG detection. Section 4 discusses the implications and limitations of these findings, comparing the results from multiple datasets. Finally, Section 5 concludes with a summary of the contributions, potential applications, and recommendations for future research.

2. Materials and Methods

2.1. Participants

This study extends the model development and testing in [20]. The data collection methods are the same as in [20] and are briefly described here. In the previous study, data were collected from 11 participants (formally diagnosed with Parkinson’s disease by a neurologist), 7 of whom froze during testing (Table 1). In this paper, 4 participants from [20] were re-tested (nearly 2 years after they were first tested), and 6 new participants were tested (Table 2). Ethics approval was obtained from the University of Ottawa (H-05-19-3547) and the University of Waterloo (40954). A convenience sample of 6 new participants and 4 previously tested participants was recruited from the Ottawa-Outaouais community for this study. All participants provided informed consent.

The current paper explores the performance of a freeze prediction/detection model when trained using 3 groups of participants, referred to as Dataset 1, Dataset 2, and Dataset 3. Dataset 1 (n = 11) consisted of the initial 11 participants in [20]. Dataset 2 (n = 10) consisted of the second group of participants who were tested two years later: four of the participants from Dataset 1 and six new participants. Dataset 3 (n = 21) consisted of all participants from both groups (Datasets 1 and 2).

Table 1 and Table 2 show the participants’ ages and times since PD diagnosis at the time of testing.

Walking trial data were collected in 21 sessions from 17 participants with PD. The participants for Dataset 2 were screened using the following criteria: experiencing freezing of gait at least once a day and being able to walk the trial path unassisted. Participants performed walking trials at the Human Movement Performance Laboratory, University of Ottawa, while on their regular medication schedule. To increase the likelihood of FOG during walking, trials were scheduled prior to the participant’s next regularly scheduled medication dose, when possible. The New Freezing of Gait Questionnaire (NFOG-Q) [22] and the Unified Parkinson’s Disease Rating Scale (UPDRS) Motor Examination (Part III) [23] were used to assess the severity of FOG and PD motor symptoms.

The participants’ shoes were fitted with pressure-sensing insoles (FScan, Tekscan, Boston, MA, USA) for the walking trials. For each participant, a new set of FScan insoles was fitted to their shoes and calibrated according to the manufacturer’s instructions. Participants were asked to walk a freeze-inducing path [20] 30 times. When necessary to induce freezing, participants were asked to carry a tray with objects on top while performing a verbal task, which consisted of continuously saying words out loud beginning with a random letter.

2.2. Data Labeling

The plantar-pressure data and the videos were synchronized using a custom application written in MATLAB 2019b (The MathWorks Inc., Natick, MA, USA). The application used a one-foot stomp at the beginning of each trial as a synchronization event and several subsequent heel-strike events for verification. FOG episodes were labeled by researcher S.P. in consultation with researcher J.N. in cases of doubt. The labels were applied to the 30 Hz video data and were transferred to the 100 Hz plantar-pressure data using linear interpolation to the closest data point.
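The label transfer from the 30 Hz video timeline to the 100 Hz plantar-pressure timeline can be illustrated with a minimal sketch. It assumes that both streams are already synchronized to a common time zero and that each pressure sample simply takes the label of the video frame closest in time; the function name `transfer_labels` and its parameters are hypothetical and are not taken from the MATLAB application described above.

```python
import numpy as np

def transfer_labels(video_labels, video_rate_hz=30.0, pressure_rate_hz=100.0):
    """Assign each pressure sample the label of the nearest video frame in time.

    Assumes both streams start at the same synchronized instant (t = 0).
    """
    video_labels = np.asarray(video_labels)
    duration_s = len(video_labels) / video_rate_hz
    n_pressure = int(round(duration_s * pressure_rate_hz))
    # Time stamp of every pressure sample, in seconds.
    t_pressure = np.arange(n_pressure) / pressure_rate_hz
    # Index of the closest video frame for each pressure sample.
    nearest_frame = np.rint(t_pressure * video_rate_hz).astype(int)
    nearest_frame = np.clip(nearest_frame, 0, len(video_labels) - 1)
    return video_labels[nearest_frame]

# Example: 1 s of non-FOG (0) followed by 1 s of FOG (1) in the video labels.
video = [0] * 30 + [1] * 30
pressure_labels = transfer_labels(video)
```

With this nearest-frame rule, a label change in the video lands within half a video frame (about 17 ms) of the corresponding pressure sample.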

As in [

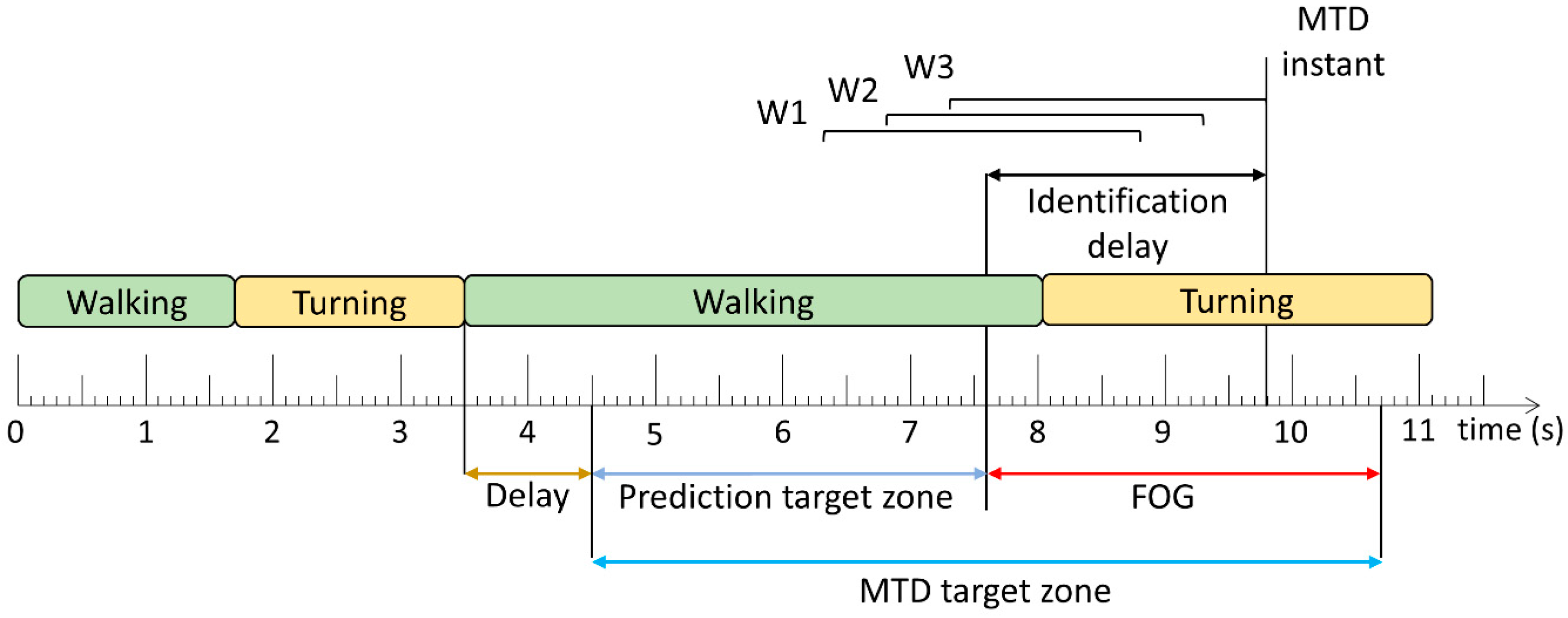

20], a FOG episode was defined as “the instant the stepping foot fails to leave the ground despite the clear intention to step” until “the instant the stepping foot begins or resumes an effective step”. An “effective step” was defined as a step where “the heel lifted from the ground, provided that it was followed by a smooth toe-off with the entire foot lifting from the ground and advancing into the next step without loss of balance”. As shown in

Figure 1, each timestamp was labeled as either FOG or non-FOG, and a pre-FOG segment was defined as the 2 s immediately preceding the beginning of the FOG episode. The pre-FOG segment was set to be 2 s based on previous FOG prediction studies [

18,

20,

24,

25].

2.3. Windowing

The data were grouped into 1 s windows, which overlapped by 0.8 s (i.e., 0.2 s shift between consecutive windows, Figure 1). Machine learning models classified each window as the target class or non-target class. The target class was a FOG episode and the short period immediately prior. Purely FOG windows (W19) and pre-FOG windows (W9–W18) were assigned to the target class, and the non-FOG windows (windows that included any non-FOG data) (W1–W8, W20) were assigned to the non-target class.
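The windowing and class assignment can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: the label codes `NON_FOG`, `PRE_FOG`, and `FOG` and both function names are hypothetical, and the rule "a window is a target window only if it contains no non-FOG samples" is one reading of the description above.

```python
import numpy as np

NON_FOG, PRE_FOG, FOG = 0, 1, 2  # hypothetical per-sample label codes

def window_starts(n_samples, rate_hz=100, window_s=1.0, shift_s=0.2):
    """Return start indices of 1 s windows shifted by 0.2 s, plus the window length."""
    win = int(round(window_s * rate_hz))
    step = int(round(shift_s * rate_hz))
    return list(range(0, n_samples - win + 1, step)), win

def window_is_target(labels, start, win):
    """A window is in the target class only if it holds no non-FOG samples."""
    segment = np.asarray(labels[start:start + win])
    return bool(np.all(segment != NON_FOG))

# Example: 3 s of walking, a 2 s pre-FOG segment, then 1 s of FOG at 100 Hz.
labels = [NON_FOG] * 300 + [PRE_FOG] * 200 + [FOG] * 100
starts, win = window_starts(len(labels))
targets = [window_is_target(labels, s, win) for s in starts]
```

In this example, a 2 s pre-FOG segment followed by FOG yields ten pre-FOG windows (six purely pre-FOG, four spanning the transition into FOG) before the first purely FOG window, which matches the W9–W18 and W19 pattern described above.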

2.4. Feature Extraction

Each data window was used to calculate features, which were passed to the FOG identification models for training. These features were based on the foot center-of-pressure (COP) position during the 1 s window, where X is the medial/lateral (ML) direction, and Y is the anterior/posterior (AP) direction. At any time, the COP coordinates were only considered valid if the total ground reaction force of one foot accounted for more than 5% of the total ground reaction force for two feet. This was done to remove erroneous COP position data caused by residual pressure data while the foot was in the swing phase. Following this check, the COP velocity was calculated as the first derivative of the COP position.
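A minimal sketch of the 5% validity check and the COP velocity calculation is given below. The function names are hypothetical, marking invalid samples as NaN is an assumption (the text does not state how invalid samples were handled), and a central finite difference is used as one possible numerical first derivative.

```python
import numpy as np

def valid_cop_mask(force_foot, force_other, threshold=0.05):
    """True where one foot carries more than 5% of the total force of both feet."""
    force_foot = np.asarray(force_foot, dtype=float)
    total = force_foot + np.asarray(force_other, dtype=float)
    # Avoid division by zero when neither foot is loaded.
    share = np.divide(force_foot, total, out=np.zeros_like(total), where=total > 0)
    return share > threshold

def cop_velocity(cop, valid, rate_hz=100.0):
    """First derivative of COP position; invalid samples become NaN (assumption)."""
    cop = np.where(valid, np.asarray(cop, dtype=float), np.nan)
    return np.gradient(cop, 1.0 / rate_hz)

# Example: the foot carries 1%, 10%, and 50% of the total force.
mask = valid_cop_mask([1.0, 10.0, 50.0], [99.0, 90.0, 50.0])
velocity = cop_velocity([0.0, 1.0, 2.0, 3.0], [True] * 4)
```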

Based on previous research [20], the models used 4 features from each foot for a total of 8 features. One feature was in the time domain (number of AP COP reversals), and the other features were in the frequency domain. Fast Fourier transform was used to calculate the dominant frequency of the COP velocity in X (ML) and the dominant frequency of the COP velocity in Y (AP), and a wavelet transform was used to calculate the mean approximation coefficient power COP position Y (AP). All features were calculated for the right and left sides. The features are summarized in Table 3.
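Two of these features can be sketched for a single 1 s window as follows. The definitions used here are assumptions made for illustration: the dominant frequency is taken as the FFT bin with the largest magnitude after removing the mean, and a reversal is counted whenever the AP COP changes direction. The wavelet feature is omitted because the wavelet family and decomposition level are not stated in this section.

```python
import numpy as np

def dominant_frequency(signal, rate_hz=100.0):
    """Frequency (Hz) of the largest-magnitude FFT bin after mean removal."""
    signal = np.asarray(signal, dtype=float)
    spectrum = np.abs(np.fft.rfft(signal - signal.mean()))
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / rate_hz)
    return float(freqs[np.argmax(spectrum)])

def count_reversals(cop_y):
    """Number of direction changes in the AP COP position within a window."""
    signs = np.sign(np.diff(np.asarray(cop_y, dtype=float)))
    signs = signs[signs != 0]  # ignore samples where the COP did not move
    return int(np.sum(signs[1:] != signs[:-1]))

# Example: a 5 Hz sinusoid sampled at 100 Hz for 1 s.
t = np.arange(100) / 100.0
f_dom = dominant_frequency(np.sin(2 * np.pi * 5 * t))
n_rev = count_reversals([0, 1, 2, 1, 0, 1])
```

With a 1 s window at 100 Hz, the frequency resolution of this estimate is 1 Hz.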

2.5. FOG Prediction Model Training

To facilitate dataset comparisons, all prediction models used the same parameters and training methods. Similar to those in [20], the models developed in the current paper were binary decision tree ensembles using random undersampling boosting (RUSBoosting). Each of the 100 trees had 5 splits. A leave-one-freezer-out cross-validation was performed, as in [25]. Participants who did not freeze were always included in the training dataset and never held out as test participants.
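The fold structure of the leave-one-freezer-out cross-validation can be sketched as below. Only the data split is shown; the RUSBoost classifier itself is left out, and the participant identifiers and the function name `leave_one_freezer_out` are hypothetical.

```python
def leave_one_freezer_out(participant_froze):
    """Yield (training_ids, held_out_id) pairs.

    participant_froze maps a participant ID to True if that participant froze
    during testing. Only freezers are held out; non-freezers stay in every
    training set.
    """
    freezers = [pid for pid, froze in participant_froze.items() if froze]
    for held_out in freezers:
        training = [pid for pid in participant_froze if pid != held_out]
        yield training, held_out

# Example with three hypothetical participants, one of whom did not freeze.
folds = list(leave_one_freezer_out({"A": True, "B": False, "C": True}))
```

Each fold would train one ensemble on the training participants and evaluate it on the held-out freezer, so the number of folds equals the number of freezers in the dataset.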

2.6. Performance Evaluation

Two methods were used to evaluate the trained models: window-based and FOG episode-based. For the window-based evaluation, each window classification from the models was compared to the ground truth label to calculate sensitivity and specificity. The FOG episode-based evaluation determined if and when each episode was detected by the model. A model trigger decision (MTD) was defined as classifications of 3 consecutive windows as FOG (Figure 2). The time between FOG onset and successful MTD identification was the identification delay (ID). The ID was negative if the model predicted the episode before FOG onset and positive if the model detected the episode after onset.
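The MTD rule and the identification delay can be sketched as follows. Two details are assumptions made for illustration: an MTD is time-stamped at the end of the third consecutive FOG window, and an MTD is issued at every window for which the last three classifications were all FOG. The function names are hypothetical.

```python
def find_mtd_times(window_preds, window_end_times, n_consecutive=3):
    """Return the times at which n consecutive windows were classified as FOG."""
    times, run = [], 0
    for pred, end_time in zip(window_preds, window_end_times):
        run = run + 1 if pred else 0  # length of the current run of FOG windows
        if run >= n_consecutive:
            times.append(end_time)
    return times

def identification_delay(mtd_time, fog_onset_time):
    """Negative when the MTD precedes FOG onset (prediction), positive otherwise."""
    return mtd_time - fog_onset_time

# Example: window end times every 0.2 s starting at 1.0 s.
end_times = [round(1.0 + 0.2 * i, 1) for i in range(8)]
mtds = find_mtd_times([0, 1, 1, 1, 1, 0, 1, 1], end_times)
delay = identification_delay(mtds[0], fog_onset_time=2.5)
```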

The MTD target zone defines the time window during which the upcoming FOG can be predicted or detected. Before this zone, any MTD would likely not be related to the upcoming FOG, and after this zone, the FOG has ended and any MTD would be irrelevant. In [20] and this paper, the prediction target zone was set to a 6 s period initially. However, if another FOG episode, turn-to-walk transition, or stand-to-walk transition occurred within the initial prediction target zone, the prediction target zone’s length was adjusted to exclude FOG, turning, and standing data. The start of the prediction target zone was set to 1 s (shown as delay in Figure 2) after these turning and standing events so that false positives during turning or standing were not erroneously considered as early predictions of subsequent, unrelated FOG episodes.

Similar to [20], MTDs were classified into true positives (within the MTD target zone) and false positives (outside the MTD target zone). The false positive rate (false positives/walking trial) was calculated for each participant. Furthermore, for all datasets in this paper, false positive MTDs that occurred during standing or gait initiation were ignored. Gait initiation was defined as the first second of walking after standing. As a final stage in model development, a 2.5 s no-cue interval was used, wherein MTDs were ignored if they occurred less than 2.5 s after the previous MTD [25].
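The no-cue interval and the true/false positive classification can be sketched as below. This is a simplified illustration: the "previous MTD" is assumed to be the last MTD that was kept, target zones are passed in as hypothetical (start, end) time pairs, and the exclusion of MTDs during standing or gait initiation is not modeled.

```python
def apply_no_cue_interval(mtd_times, no_cue_s=2.5):
    """Drop MTDs that occur less than no_cue_s after the previously kept MTD."""
    kept = []
    for t in sorted(mtd_times):
        if not kept or t - kept[-1] >= no_cue_s:
            kept.append(t)
    return kept

def classify_mtds(mtd_times, target_zones):
    """Split MTDs into true positives (inside a target zone) and false positives."""
    true_pos, false_pos = [], []
    for t in mtd_times:
        if any(start <= t <= end for start, end in target_zones):
            true_pos.append(t)
        else:
            false_pos.append(t)
    return true_pos, false_pos

# Example: four MTDs and one target zone from 3.0 s to 9.5 s.
kept = apply_no_cue_interval([1.0, 2.0, 3.6, 9.0])
tp, fp = classify_mtds(kept, [(3.0, 9.5)])
```

Dividing the number of false positives by the number of walking trials for a participant would then give the false positive rate described above.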

The modeling approach shown in Figure 3, adapted from [20,21], was used to study the effects of expanding the dataset on model performance. To achieve this, three new models were trained: one on the existing dataset, one on a new dataset, and one on the combination of both datasets. This combination is possible because of the similarity of the data acquisition and walking path between datasets. The sensitivity, specificity, and ID from each model give a unique overview of the model performance on a micro level, exploring what kind of data most effectively impacts model performance.

3. Results

Table 4 and Table 5 present the NFOG-Q score, UPDRS-III score, and whether the participant froze during testing for Datasets 1 and 2. Table 6 and Table 7 show the number of trials, total trial duration, number of FOG episodes, total FOG episode duration, and total MTD target zone duration. Compared to Dataset 1, the average age of the participants for Dataset 2 was 1.7 years lower, the average time since PD diagnosis was 0.45 years longer, the average NFOG-Q score was 5.1 points higher (maximum = 28), and the average UPDRS-III score was 0.9 points lower (maximum = 56). The standard deviation of age and time since PD diagnosis did not differ by more than 1.07 years. The NFOG-Q standard deviation differed by 0.1 points, and the UPDRS-III score standard deviation differed by 1.6 points. In Dataset 1, 7 of the 11 participants froze during testing, whereas in Dataset 2, all 10 participants froze during testing.

Table 4 and Table 5 also show the change in NFOG-Q and UPDRS-III scores for participants in both Dataset 1 and Dataset 2 (P05, P07, P08, and P11). Given the degenerative nature of PD, it was expected that NFOG-Q and UPDRS-III would increase after 2 years, indicating a worsening of Parkinson’s symptoms. For participants P07 and P11, the NFOG-Q scores were indeed higher, indicating more severe freezing symptoms. For P11, this is also supported by not freezing during Dataset 1 testing but freezing during testing 2 years later. Based on the NFOG-Q score, participant P05 reported less freezing in the second questionnaire, but they did not freeze during the first data collection and did freeze during the second.

Table 8 shows the metrics used to evaluate model performance on the three datasets. The model trained on Dataset 2 had the highest sensitivity (82.50%), percentage of FOG episodes identified (94.86%), and the lowest ID (−1.10 s), though it had the lowest specificity (77.27%) of the three models. Dataset 1 had greater differences than Dataset 2 when both were compared with Dataset 3.

Figure 4 shows the distribution of FOG identifications relative to FOG onset. Dataset 1 shows the highest ID and the lowest relative number of identifications before onset. Dataset 2 has the lowest ID and shows the highest relative number of identifications before onset. Dataset 3’s performance lies mostly between Datasets 1 and 2.

To analyze possible outlier effects, outliers were removed from Datasets 1 and 3. The outliers include P07, who experienced FOG significantly more than the other participants, and non-freezers, who did not experience FOG during testing, as shown in Figure 5. The datasets were modified in three ways: P07 removed, all non-freezers removed, or both P07 and all non-freezers removed. Note that P07B (P07 was re-tested approximately 2 years later) did not have as many FOG occurrences or as much total FOG duration as P07 compared to their respective dataset and was thus not removed from the modifications. The results from these modifications are shown in Table 9.

As shown in Figure 6, removing P07 from Dataset 1 (Dataset 1a) caused sensitivity to drop by 20.09% from 75.40% to 55.31% and caused specificity to increase by 5.38% from 83.08% to 88.56%. Removing non-freezers from Dataset 1 (Dataset 1b) improved the performance compared to the model trained on Dataset 1. Sensitivity increased by 2.92% from 75.40% to 78.32%, and specificity dropped by 0.48% from 83.08% to 82.16%. When both P07 and non-freezers were removed from Dataset 1 (Dataset 1c), the model performance metrics were between the results for Datasets 1a and 1b, except for ID, which was higher and indicated later detection.

4. Discussion

The performance evaluation of the models across three datasets demonstrates their effectiveness in predicting FOG episodes. The models exhibit comparable performance to the existing methods in detecting and predicting FOG in a real-time system. This finding underscores the potential readiness of the model for integration into FOG identification and prevention systems, offering promising implications for enhancing care and quality of life for people who experience FOG.

The model trained on Dataset 2 outperformed the model trained on Dataset 1 in all metrics except specificity, and although there may be other contributing factors, there are two possible reasons for this difference. Firstly, the difference between Dataset 1 and the other datasets may have been caused in part by the presence of four participants who did not experience FOG during testing. Dataset 2, by comparison, had zero non-freezers. Secondly, P07, who is present in Dataset 1 but not Dataset 2, experienced 221 freezing episodes, while all other participants in the dataset combined experienced 141 freezes (14.1 freezes per participant excluding P07). P07’s total freeze time for all trials was 335.92 s, while all other participants combined experienced a total of 226.34 s (22.63 s of freezing per participant, excluding P07). Therefore, the increased severity and duration of P07’s freezing may have biased the model to prioritize identifying P07’s freezes over those of other participants.

Removing P07 from Dataset 1 (to produce Dataset 1a) adversely affected model performance. While the model became better at identifying true negatives and avoiding false positives, it was much worse at identifying true positives and avoiding false negatives. The model took less risk with its predictions, meaning it avoided false positives at the cost of missing true positives. This is most likely due to insufficient training data with FOG in the dataset. While RUSBoosting was used for all datasets to address the class imbalance usually present in FOG data, the method has limitations. Without sufficient target class data, undersampling and boosting cannot generate a well-performing model. In Dataset 3, which has more freeze data overall than Dataset 1, the effect of removing P07 was much less apparent. The model trained on Dataset 3a (P07 removed) outperformed the model trained on Dataset 3 in all metrics except for sensitivity, which dropped by only 0.61%. This shows that while P07’s data differ significantly from the other participants’ data, P07’s data contains many FOG instances and is, therefore, helpful for model performance when FOG instances in the training data are limited.

On the other hand, removing only non-freezers from Dataset 1 (to produce Dataset 1b) improved sensitivity by 2.92% with minimal effect on specificity. Interestingly, doing the same for Dataset 3 slightly worsened performance in all metrics, indicating that some non-freeze data were not detrimental to model performance when a dataset has a large amount of freeze data. In summary, the model trained on Dataset 1b (non-freezers removed) outperformed the model trained on Dataset 1, and the model trained on Dataset 3a (P07 removed) slightly outperformed the model trained on Dataset 3.

The composition of the training datasets significantly influenced model performance. Removing P07 from Dataset 1 led to a 20.09% drop in sensitivity but improved specificity by 5.38%, highlighting P07’s contribution to critical freeze data despite its distinctiveness. Conversely, removing non-freezers (Dataset 1b) improved sensitivity by 2.92% with minimal specificity impact, suggesting that non-freezers’ data diluted the model’s ability to focus on FOG patterns. These findings emphasize the need for balanced datasets that include diverse FOG severities and non-freezing behaviors to enhance both sensitivity and specificity. This demonstrates the importance of larger, more representative datasets for robust and generalizable models.

In all metrics and ID distribution (Figure 4), Dataset 3’s model performance was between the performance of Datasets 1 and 2 models because Dataset 3 is a combination of Datasets 1 and 2. However, the difference between the models was not large. Sensitivity and specificity varied by a maximum of 7.1% and 5.81% between models, respectively. In general, the models identified over 74% of FOG episodes and predicted over 40% of FOG episodes. The models identified FOG episodes before freezing onset by 0.64 s, 1.10 s, and 0.94 s for Datasets 1–3, respectively. The normalized ID distribution also had a nearly identical shape for all three models. The similarity of results across datasets indicated that the model trained on Dataset 3 was generalizable to predict FOG for people with PD who experience FOG once per day or more, thus supporting the readiness of the model for deployment in a FOG identification and prevention system.

Despite this study’s promising findings, there are some limitations. One such limitation is the size of the dataset. This study used data from 21 sessions and 17 participants to generate results. Even though the similarity between datasets signifies that the model is sufficiently trained, further increasing the number of participants could provide a more robust validation of the findings. Another limitation of the study is regarding the uncontrolled presence of various FOG presentations that may have influenced the results, as the most common manifestation in the data could have biased the analysis. Researchers have categorized FOG into three manifestations (also known as phenotypes) based on leg movement: akinesia, trembling, and shuffling steps [26]. Each of these categories of FOG has unique characteristics that may require different treatment. Exploring different classifications and developing a separate model for each type of FOG manifestation could yield more comprehensive insights, but this would require more participants. Further limitations stem from a finite amount of data for training the machine learning model. The model’s performance may have been affected by the imbalance between freezers and non-freezers in the training dataset. Optimizing the balance between these groups could mitigate potential biases in the model. Moreover, FOG is a symptom of mid to late-stage PD, meaning that the participants in this study have relatively higher disease severity compared to the broader PD population. Lastly, while the leave-one-freezer-out approach was employed for testing each model, evaluating a model’s performance on an entirely separate dataset would provide further validation of this study’s findings.

5. Conclusions

This paper presented an analysis of real-time FOG prediction and detection in PD using machine learning techniques. A viable model was developed and evaluated for FOG prediction and detection based on varying datasets created from 21 data collection sessions on 17 participants. This study highlights the value of expanding the dataset size and combining multiple datasets to improve the performance and robustness of FOG prediction models. Larger and more diverse datasets can significantly enhance the generalizability and accuracy of predictive models. These findings underscore the importance of exploring new datasets and methodologies in advancing the accuracy and effectiveness of FOG detection systems.

The results of this research demonstrate the feasibility and effectiveness of machine learning models to predict and detect FOG episodes based only on plantar-pressure data. The models showed good performance across multiple metrics, including sensitivity, specificity, FOG identification rates, and prediction lead times. The model trained on the largest dataset (Dataset 3) achieved a FOG identification rate of 86.84% and predicted FOG episodes on average 0.94 s before their onset. The model had 77.69% sensitivity and 79.99% specificity using leave-one-freezer-out cross-validation. These results suggest that the model has the potential to provide useful FOG instance information that can be used in a cueing device to help prevent FOG episodes and improve mobility for people with PD.

An in-depth analysis of the participants in the datasets revealed that the model performs best when trained on a dataset with a balance of freeze data between participants. Unbalanced datasets with too much or too little freezing hindered model performance. To address this, future models could be trained while limiting the amount of freezing data used from a single participant to reduce model bias. The number of non-freezers could also be limited to achieve the same effect. However, this should be done while maintaining substantial freezing and non-freezing data from freezers. Training the model with too little freezing data decreased model sensitivity and slightly increased the ID.

Furthermore, this study revealed that the proposed approach generated consistent and generalizable results for different datasets, indicating robustness and readiness for implementation in real-world applications. The comparison between datasets with and without specific participants highlighted the importance of data diversity in model training, as the presence of non-freezers or participants with varying degrees of FOG affected model performance.

Overall, this research contributes valuable insights into the development of a real-time FOG prediction and detection system, which could have a substantial effect on the quality of life for individuals living with PD. With further refinement and validation, the model could be integrated into wearable devices or smartphone applications to provide on-demand assistance and support for FOG management, ultimately enhancing mobility and safety for people with PD.

Author Contributions

Conceptualization, S.P., J.K., J.N. and E.D.L.; methodology, S.P., J.K., J.N. and E.D.L.; investigation, S.P. and A.A.; data curation, S.P. and A.A.; writing—original draft preparation, A.A., S.P. and E.J.; writing—review and editing, A.A., S.P., J.K., J.N. and E.D.L.; supervision, J.K., J.N. and E.D.L.; project administration, J.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Weston Family Foundation through its Weston Brain Institute, Microsoft Canada, Waterloo Artificial Intelligence Institute and Network for Aging Research at the University of Waterloo, Natural Sciences and Engineering Research Council of Canada (NSERC), Ontario Ministry of Colleges and Universities, and the University of Waterloo.

Institutional Review Board Statement

This study was conducted according to the guidelines of the Declaration of Helsinki and approved by the Ethics Committee of the University of Waterloo (#40954, 23 May 2019) and the Research Ethics Board of the University of Ottawa (H-05-19-3547, 11 July 2019).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study. Written informed consent has been obtained from the participants to publish this paper.

Data Availability Statement

The data will be made available from the authors upon reasonable request.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study, in the collection, analyses, or interpretation of data, in the writing of the manuscript, or in the decision to publish the results.

References

- Jankovic, J. Parkinson’s Disease: Clinical Features and Diagnosis. J. Neurol. Neurosurg. Psychiatry 2008, 79, 368–376. [Google Scholar] [CrossRef] [PubMed]

- Crouse, J.J.; Phillips, J.R.; Jahanshahi, M.; Moustafa, A.A. Postural Instability and Falls in Parkinson’s Disease. Rev. Neurosci. 2016, 27, 549–555. [Google Scholar] [CrossRef] [PubMed]

- Okuma, Y.; Silva de Lima, A.L.; Fukae, J.; Bloem, B.R.; Snijders, A.H. A Prospective Study of Falls in Relation to Freezing of Gait and Response Fluctuations in Parkinson’s Disease. Park. Relat. Disord. 2018, 46, 30–35. [Google Scholar] [CrossRef] [PubMed]

- Bloem, B.R.; Hausdorff, J.M.; Visser, J.E.; Giladi, N. Falls and Freezing of Gait in Parkinson’s Disease: A Review of Two Intercon-nected, Episodic Phenomena. Mov. Disord. 2004, 19, 871–884. [Google Scholar] [CrossRef]

- Nutt, J.G.; Bloem, B.R.; Giladi, N.; Hallett, M.; Horak, F.B.; Nieuwboer, A. Freezing of Gait: Moving Forward on a Mysterious Clinical Phenomenon. Lancet Neurol. 2011, 10, 734–744. [Google Scholar] [CrossRef] [PubMed]

- Ginis, P.; Nackaerts, E.; Nieuwboer, A.; Heremans, E. Cueing for People with Parkinson’s Disease with Freezing of Gait: A Narrative Review of the State-of-the-Art and Novel Perspectives. Ann. Phys. Rehabil. Med. 2018, 61, 407–413. [Google Scholar] [CrossRef] [PubMed]

- Nieuwboer, A. Cueing for Freezing of Gait in Patients with Parkinson’s Disease: A Rehabilitation Perspective. Mov. Disord. 2008, 23, S475–S481. [Google Scholar] [CrossRef] [PubMed]

- Huang, T.; Li, M.; Huang, J. Recent Trends in Wearable Device Used to Detect Freezing of Gait and Falls in People with Parkinson’s Disease: A Systematic Review. Front. Aging Neurosci. 2023, 15, 1119956. [Google Scholar] [CrossRef] [PubMed]

- Barzallo, B.; Punin, C.; Llumiguano, C.; Huerta, M. Wireless Assistance System During Episodes of Freezing of Gait by Means Superficial Electrical Stimulation. In Proceedings of the World Congress on Medical Physics and Biomedical Engineering 2018, Prague, Czech Republic, 3–8 June 2018; Springer Singapore Pte. Limited: Singapore, 2018; Volume 68, pp. 865–870. [Google Scholar]

- Mikos, V.; Heng, C.-H.; Tay, A.; Yen, S.-C.; Yu Chia, N.S.; Ling Koh, K.M.; Leng Tan, D.M.; Lok Au, W. A Neural Network Accelerator with Integrated Feature Extraction Processor for a Freezing of Gait Detection System. In Proceedings of the 2018 IEEE Asian Solid-State Circuits Conference (A-SSCC), Tainan, Taiwan, 5–7 November 2018; pp. 59–62. [Google Scholar]

- Punin, C.; Barzallo, B.; Huerta, M.; Bermeo, A.; Bravo, M.; Llumiguano, C. Wireless Devices to Restart Walking during an Episode of FOG on Patients with Parkinson’s Disease. In Proceedings of the 2017 IEEE Second Ecuador Technical Chapters Meeting (ETCM), Salinas, Ecuado, 16–20 October 2017; pp. 1–6. [Google Scholar]

- Rodríguez-Martín, D.; Pérez-López, C.; Samà, A.; Català, A.; Moreno Arostegui, J.M.; Cabestany, J.; Mestre, B.; Alcaine, S.; Prats, A.; de la Cruz Crespo, M.; et al. A Waist-Worn Inertial Measurement Unit for Long-Term Monitoring of Parkinson’s Disease Patients. Sensors 2017, 17, 827. [Google Scholar] [CrossRef] [PubMed]

- Bächlin, M.; Plotnik, M.; Roggen, D.; Giladi, N.; Hausdorff, J.M.; Tröster, G. A Wearable System to Assist Walking of Parkinson’s Disease Patients: Benefits and Challenges of Context-Triggered Acoustic Cueing. Methods Inf. Med. 2010, 49, 88–95.

- Pardoel, S.; Shalin, G.; Nantel, J.; Lemaire, E.D.; Kofman, J. Early Detection of Freezing of Gait during Walking Using Inertial Measurement Unit and Plantar Pressure Distribution Data. Sensors 2021, 21, 2246.

- Xia, Y.; Sun, H.; Zhang, B.; Xu, Y.; Ye, Q. Prediction of Freezing of Gait Based on Self-Supervised Pretraining via Contrastive Learning. Biomed. Signal Process. Control 2024, 89, 105765.

- Borzì, L.; Sigcha, L.; Rodríguez-Martín, D.; Olmo, G. Real-Time Detection of Freezing of Gait in Parkinson’s Disease Using Multi-Head Convolutional Neural Networks and a Single Inertial Sensor. Artif. Intell. Med. 2023, 135, 102459.

- Sigcha, L.; Borzì, L.; Pavón, I.; Costa, N.; Costa, S.; Arezes, P.; López, J.M.; De Arcas, G. Improvement of Performance in Freezing of Gait Detection in Parkinson’s Disease Using Transformer Networks and a Single Waist-Worn Triaxial Accelerometer. Eng. Appl. Artif. Intell. 2022, 116, 105482.

- Borzì, L.; Mazzetta, I.; Zampogna, A.; Suppa, A.; Olmo, G.; Irrera, F. Prediction of Freezing of Gait in Parkinson’s Disease Using Wearables and Machine Learning. Sensors 2021, 21, 614.

- Marcante, A.; Di Marco, R.; Gentile, G.; Pellicano, C.; Assogna, F.; Pontieri, F.E.; Spalletta, G.; Macchiusi, L.; Gatsios, D.; Giannakis, A.; et al. Foot Pressure Wearable Sensors for Freezing of Gait Detection in Parkinson’s Disease. Sensors 2021, 21, 128.

- Pardoel, S.; Nantel, J.; Kofman, J.; Lemaire, E.D. Prediction of Freezing of Gait in Parkinson’s Disease Using Unilateral and Bilateral Plantar-Pressure Data. Front. Neurol. 2022, 13, 831063.

- Shalin, G.; Pardoel, S.; Lemaire, E.D.; Nantel, J.; Kofman, J. Prediction and Detection of Freezing of Gait in Parkinson’s Disease from Plantar Pressure Data Using Long Short-Term Memory Neural-Networks. J. Neuroeng. Rehabil. 2021, 18, 167.

- Nieuwboer, A.; Rochester, L.; Herman, T.; Vandenberghe, W.; Emil, G.E.; Thomaes, T.; Giladi, N. Reliability of the New Freezing of Gait Questionnaire: Agreement between Patients with Parkinson’s Disease and Their Carers. Gait Posture 2009, 30, 459–463.

- Goetz, C.G.; Tilley, B.C.; Shaftman, S.R.; Stebbins, G.T.; Fahn, S.; Martinez-Martin, P.; Poewe, W.; Sampaio, C.; Stern, M.B.; Dodel, R.; et al. Movement Disorder Society-Sponsored Revision of the Unified Parkinson’s Disease Rating Scale (MDS-UPDRS): Scale Presentation and Clinimetric Testing Results. Mov. Disord. 2008, 23, 2129–2170.

- Palmerini, L.; Rocchi, L.; Mazilu, S.; Gazit, E.; Hausdorff, J.M.; Chiari, L. Identification of Characteristic Motor Patterns Preceding Freezing of Gait in Parkinson’s Disease Using Wearable Sensors. Front. Neurol. 2017, 8, 394.

- Pardoel, S.; Shalin, G.; Lemaire, E.D.; Kofman, J.; Nantel, J. Grouping Successive Freezing of Gait Episodes Has Neutral to Detrimental Effect on Freeze Detection and Prediction in Parkinson’s Disease. PLoS ONE 2021, 16, e0258544.

- Schaafsma, J.D.; Balash, Y.; Gurevich, T.; Bartels, A.L.; Hausdorff, J.M.; Giladi, N. Characterization of Freezing of Gait Subtypes and the Response of Each to Levodopa in Parkinson’s Disease. Eur. J. Neurol. 2003, 10, 391–398.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).