High-Resolution Single-Pixel Imaging of Spatially Sparse Objects: Real-Time Imaging in the Near-Infrared and Visible Wavelength Ranges Enhanced with Iterative Processing or Deep Learning

Abstract

1. Introduction

2. Materials and Methods

2.1. Constraints and Objectives for the Proposed Sampling Framework: SPI Pattern Requirements for DMD Modulators

- Sampling patterns should be binary (with values 0 and 1) because DMD mirrors have two states.

- Sampling patterns should have approximately half of their pixels in the on state (for reasons of light efficiency, Fellgett’s advantage, the level of quantization errors introduced by the data acquisition (DAQ) system, and signal entropy).

- Sampling should be differential, meaning that image reconstruction is based on the differences between measurements with consecutive patterns. This eliminates any constant bias from stray light, the background, or inactive areas of the DMD.

- Sampling patterns should have a spatial spectrum dominated by low spatial frequencies, because the Fourier representation of most real-world images is concentrated at low spatial frequencies (the usual approach in compressive SPI, e.g., selecting a subset of Walsh–Hadamard, DCT, or wavelet patterns).

- Sampling should provide easily accessible information on the locations of sparse areas of the probed image. Identifying sparse image regions makes it possible to reconstruct the dense regions more accurately, even at a low sampling rate. For instance, random binary patterns would mostly measure the mean value of the entire image. Patterns consisting of small figures of various shapes would be best suited to this purpose.
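The differential-sampling requirement can be checked with a few lines of NumPy. This is a minimal sketch under our own assumptions, not the authors' acquisition code. It uses a random test image, random binary patterns with roughly half of the pixels on, and a constant additive offset standing in for stray light and background. All sizes and values are hypothetical. The sketch shows that differences between measurements taken with consecutive patterns do not depend on the offset.

```python
import numpy as np

rng = np.random.default_rng(0)

n = 64 * 64                        # number of image pixels (illustrative size)
k = 200                            # number of sampling patterns (illustrative)
image = rng.random(n)              # hypothetical non-negative test image

# Binary (0/1) patterns, matching the two DMD mirror states, ~half of pixels on.
patterns = (rng.random((k, n)) < 0.5).astype(float)

bias = 37.5                        # hypothetical constant offset (stray light, background)
y_clean = patterns @ image         # ideal single-pixel measurements
y_biased = y_clean + bias          # measurements corrupted by the constant offset

# Differential sampling: use differences between consecutive measurements.
dy_clean = np.diff(y_clean)
dy_biased = np.diff(y_biased)

print(np.allclose(dy_clean, dy_biased))   # True: the constant bias cancels
print(round(patterns.mean(), 3))          # fraction of mirrors in the on state
```

Random patterns satisfy the first three requirements, but as noted above they mostly probe the mean image value. They appear here only because they make the cancellation easy to see.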

2.2. Sampling Patterns

| Algorithm 1 Construction of a binary measurement matrix from image maps. | |
|---|---|
| Input: — matrix containing l maps with pixels | |
| Input: — vector of length n with random integer values in the range | |
| Input: — a look-up table; a binary matrix such that is full rank. Here, is the finite-difference operator that subtracts consecutive matrix rows. | |
| Output: — measurement matrix with rows containing binary patterns with n pixels each | |
| function make patterns() | |
| for do | ▹ Iterate maps |
| ▹i-th map | |
| for do | ▹ Iterate rows of |
| for do | ▹ Iterate sectors of map |
| ▹ Index of the next sampling pattern | |
| ▹ Get indices of all pixels of j-th sector of i-th map | |
| ▹ Assign binary values to pixels of a sector | |
| end for | |
| end for | |
| end for | |
| return | ▹ Return the measurement matrix |
| end function | |
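The map-based construction of Algorithm 1 can be sketched in simplified form. This is an illustration under our own assumptions, not the authors' implementation:

- each map is an integer array that assigns every pixel to a sector;
- each row of a binary look-up table produces one pattern per map;
- every pixel of sector j takes the table value in column j;
- the random-integer input vector of Algorithm 1 is omitted.

The look-up table here is a Sylvester-Hadamard matrix mapped to {0, 1} with an all-zero row prepended. We chose this construction because its row-differenced version is provably full rank. The names `sylvester_lut` and `make_patterns` are hypothetical.

```python
import numpy as np

def sylvester_lut(s):
    """Binary look-up table of shape (s + 1, s) whose row differences are full rank.

    Built as [zero row; (H + 1) / 2], where H is the s x s Sylvester-Hadamard
    matrix (s must be a power of two). An illustrative choice, not the paper's.
    """
    h = np.array([[1]])
    while h.shape[0] < s:
        h = np.kron(h, np.array([[1, 1], [1, -1]]))
    b = (h + 1) // 2                              # map {-1, +1} -> {0, 1}
    return np.vstack([np.zeros((1, s), dtype=int), b])

def make_patterns(maps, lut):
    """Binary measurement matrix with one row per (map, look-up-table row) pair.

    maps: integer array (l, n); maps[i, p] is the sector of pixel p in map i.
    lut:  binary array (m, s); column j holds the values assigned to sector j.
    """
    l, n = maps.shape
    m, s = lut.shape
    patterns = np.zeros((l * m, n), dtype=np.uint8)
    for i in range(l):                            # iterate maps
        for t in range(m):                        # iterate rows of the look-up table
            row = i * m + t                       # index of the next sampling pattern
            for j in range(s):                    # iterate sectors of map i
                pixels = np.flatnonzero(maps[i] == j)   # pixels of sector j
                patterns[row, pixels] = lut[t, j]       # assign a binary value
    return patterns

# Two hypothetical maps of a 4 x 4 image, each with 4 sectors:
# map 0 splits the image into quadrants, map 1 into horizontal stripes.
rows, cols = np.indices((4, 4))
maps = np.stack([(2 * (rows // 2) + cols // 2).ravel(), rows.ravel()])
lut = sylvester_lut(4)
P = make_patterns(maps, lut)
print(P.shape)                                        # (10, 16)
print(np.linalg.matrix_rank(np.diff(lut, axis=0)))    # 4, i.e., full rank
```

The inner loop over sectors mirrors the structure of Algorithm 1. In practice it could be replaced by the vectorized assignment `patterns[row] = lut[t, maps[i]]`.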

2.3. Differential Multiplexing

2.4. Initial Image Reconstruction

| Algorithm 2 Image reconstruction from a compressive measurement. | |
|---|---|
| Input: — measurement vector of length k (it is assumed that , where is the image size in pixels, and that the measurement equation is , where is the measured image and is the binary measurement matrix) | |
| Input: — binary array such that at pixels p belonging to region j of map i unless . In further notation, () represents a slice of that corresponds to the i-th map. | |
| Input: — array of l matrices () with dimensions . Here, is the generalized matrix inverse g applied to the measurement matrix after taking the differences of its rows with operator | |
| Input: — the initial image reconstruction vector of size (set by us to the initial reconstruction result, but it may also be filled with constant positive values) | |
| Input: — learning rate (we took ) | |
| Output: — vector of size n with the reconstructed image | |
| function IMAGE RECONSTRUCT(, , , , f ) | |
| for do | |
| for do | ▹ Loop over maps |
| ▹ Expected pixel sums in sectors of map | |
| ▹ Current pixel sums in sectors of map j | |
| where else 0 | |
| end for | |
| end for | |
| return | ▹ Return the reconstructed image |
| end function | |
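The idea behind Algorithm 2 can be sketched under several assumptions of our own, because the update formula is not legible in this version of the text. The steps are:

- The patterns of each map are assumed to be piecewise constant over its sectors.
- Under that assumption, the differential measurements equal the row-differenced look-up table times the vector of sector sums.
- Applying the Moore-Penrose pseudoinverse of the differenced table therefore yields the expected sector sums.
- The image estimate is then rescaled sector by sector so that its current sums approach the expected ones, with a learning rate `mu`.

With `mu = 1` this rescaling is the classical iterative proportional fitting procedure. The exact update used in Algorithm 2 may differ. The names `sector_sums`, `expected_sector_sums`, and `reconstruct`, and all numerical values, are hypothetical.

```python
import numpy as np

def sector_sums(x, labels, s):
    """Sum of the pixel values of x within each of the s sectors given by labels."""
    return np.bincount(labels, weights=x, minlength=s)

def expected_sector_sums(y, lut):
    """Recover sector sums from the measurements taken with one map's patterns.

    Assumes y = lut @ (sector sums) + constant bias. Differencing consecutive
    measurements removes the bias; the Moore-Penrose pseudoinverse of the
    row-differenced look-up table then yields the sector sums.
    """
    return np.linalg.pinv(np.diff(lut, axis=0)) @ np.diff(y)

def reconstruct(y_per_map, maps, lut, x0, mu=1.0, n_iter=100):
    """Multiplicative sector-sum matching (an assumed form of the update)."""
    s = lut.shape[1]
    # Expected sums, clipped at zero because pixel intensities are non-negative.
    targets = [np.clip(expected_sector_sums(y, lut), 0.0, None) for y in y_per_map]
    x = np.asarray(x0, dtype=float).copy()
    for _ in range(n_iter):
        for labels, target in zip(maps, targets):      # loop over maps
            current = sector_sums(x, labels, s)         # current sector sums
            # Ratio of expected to current sums; 0 where a current sum vanishes.
            ratio = np.divide(target, current,
                              out=np.zeros_like(current), where=current != 0)
            x *= (1.0 - mu) + mu * ratio[labels]        # rescale every sector
    return x

# Hypothetical 4 x 4 example with two 4-sector maps: quadrants and horizontal stripes.
rows, cols = np.indices((4, 4))
maps = np.stack([(2 * (rows // 2) + cols // 2).ravel(), rows.ravel()])
lut = np.array([[0, 0, 0, 0], [1, 1, 1, 1], [1, 0, 1, 0],
                [1, 1, 0, 0], [1, 0, 0, 1]])            # np.diff(lut, axis=0) is full rank
x_true = np.arange(1.0, 17.0)                            # hypothetical test image
bias = 5.0                                               # hypothetical constant background
# Patterns of map i take the value lut[t, j] on every pixel of sector j.
y_per_map = [lut[:, labels] @ x_true + bias for labels in maps]

x_hat = reconstruct(y_per_map, maps, lut, x0=np.ones(16))
print(sector_sums(x_hat, maps[1], 4), sector_sums(x_true, maps[1], 4))
```

With only two coarse maps, the estimate matches the measured sector sums without reproducing `x_true` pixel by pixel. Adding more maps with differently shaped sectors tightens the constraints.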

2.5. Reconstruction Enhancement with an Iterative Algorithm

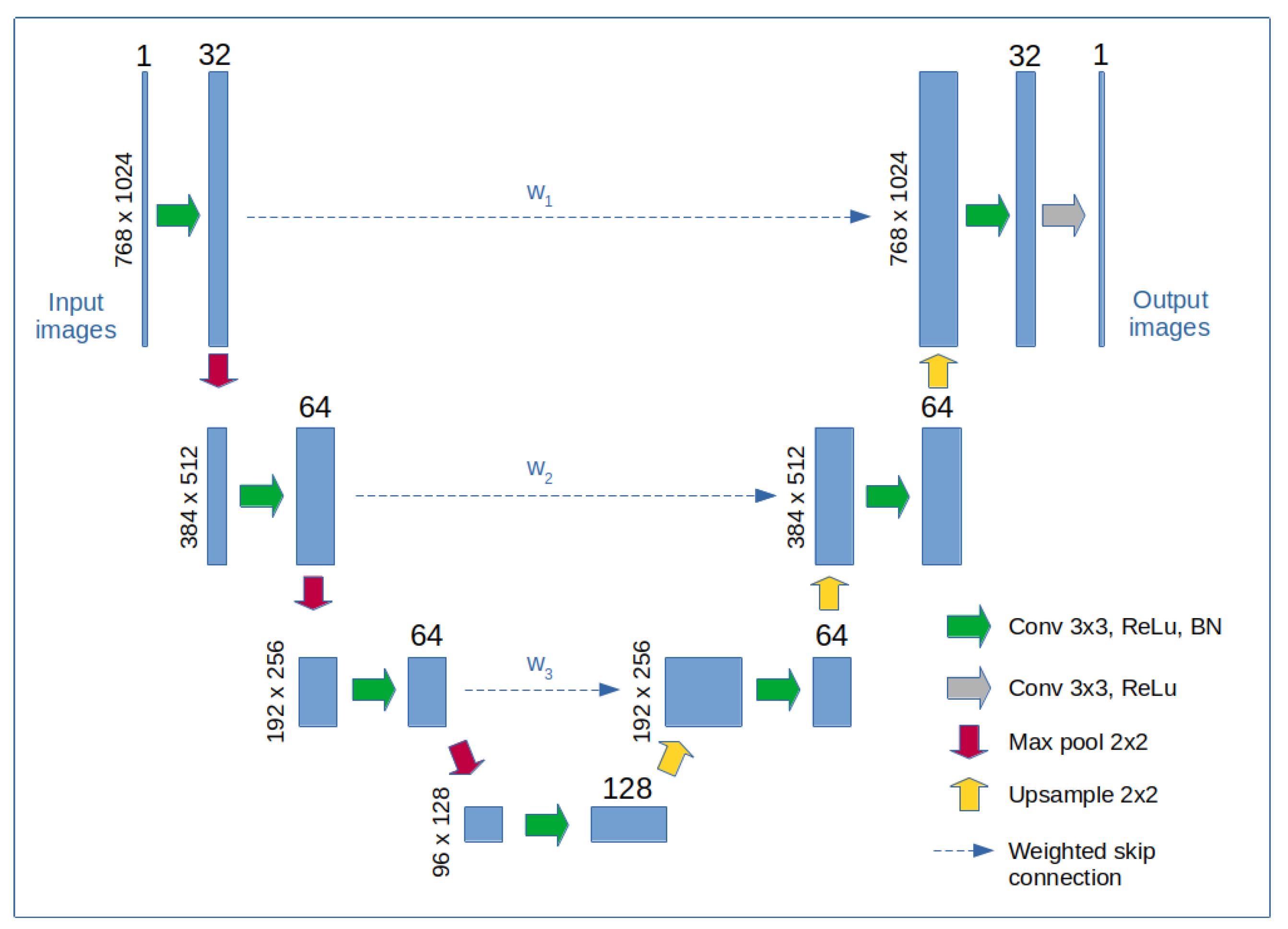

2.6. Reconstruction Enhancement with a Neural Network

2.7. Optical Set-Up

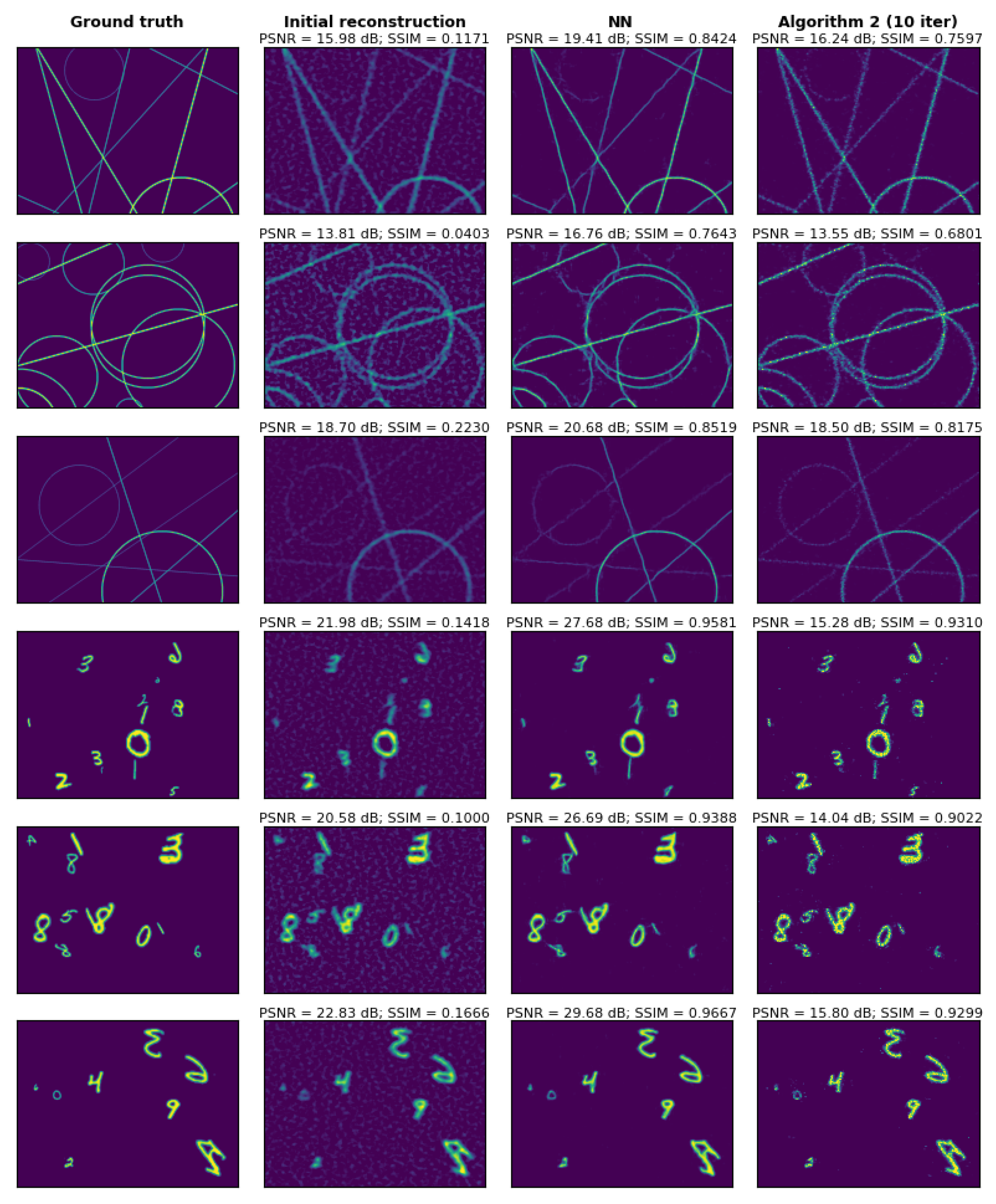

3. Results

3.1. Image Acquisition and Reconstruction Times and Computational Requirements

3.2. Effect of Compression, Background Noise, and Detector Noises on Imaging

3.3. Experimental Results

4. Discussion

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| SPI | Single-Pixel Imaging |
| DMD | Digital Micromirror Device |
| NN | Neural Network |
| PSNR | Peak Signal-to-Noise Ratio |
| SSIM | Structural Similarity Index |
| MSE | Mean Square Error |
| FDRI | Fourier Domain Regularized Inversion |
| MD-FDRI | Map-based Differential FDRI |
| SNR | Signal-to-Noise Ratio |
| GPU | Graphics Processing Unit |

Appendix A

References

- Duarte, M.F.; Davenport, M.A.; Takhar, D.; Laska, J.N.; Sun, T.; Kelly, K.F.; Baraniuk, R.G. Single-pixel imaging via compressive sampling. IEEE Sig. Proc. Mag. 2008, 25, 83–91.

- Candes, E.J.; Romberg, J.K.; Tao, T. Stable signal recovery from incomplete and inaccurate measurements. Commun. Pure Appl. Math. 2006, 59, 1207–1223.

- Osorio Quero, C.A.; Durini, D.; Rangel-Magdaleno, J.; Martinez-Carranza, J. Single-pixel imaging: An overview of different methods to be used for 3D space reconstruction in harsh environments. Rev. Sci. Instrum. 2021, 92, 111501.

- Gibson, G.M.; Johnson, S.D.; Padgett, M.J. Single-pixel imaging 12 years on: A review. Opt. Express 2020, 28, 28190–28208.

- Edgar, M.P.; Gibson, G.M.; Padgett, M.J. Principles and prospects for single-pixel imaging. Nat. Photonics 2018, 13, 13–20.

- Mahalanobis, A.; Shilling, R.; Murphy, R.; Muise, R. Recent results of medium wave infrared compressive sensing. Appl. Opt. 2014, 53, 8060–8070.

- Radwell, N.; Mitchell, K.J.; Gibson, G.M.; Edgar, M.P.; Bowman, R.; Padgett, M.J. Single-pixel infrared and visible microscope. Optica 2014, 1, 285–289.

- Denk, O.; Musiienko, A.; Žídek, K. Differential single-pixel camera enabling low-cost microscopy in near-infrared spectral region. Opt. Express 2019, 27, 4562.

- Zhou, Q.; Ke, J.; Lam, E.Y. Near-infrared temporal compressive imaging for video. Opt. Lett. 2019, 44, 1702.

- Pastuszczak, A.; Stojek, R.; Wróbel, P.; Kotyński, R. Differential real-time single-pixel imaging with Fourier domain regularization: Applications to VIS-IR imaging and polarization imaging. Opt. Express 2021, 29, 26685–26700.

- Ye, J.T.; Yu, C.; Li, W.; Li, Z.P.; Lu, H.; Zhang, R.; Zhang, J.; Xu, F.; Pan, J.W. Ultraviolet photon-counting single-pixel imaging. Appl. Phys. Lett. 2023, 123, 024005.

- Stantchev, R.; Yu, X.; Blu, T.; Pickwell-MacPherson, E. Real-time terahertz imaging with a single-pixel detector. Nat. Commun. 2020, 11, 2535.

- Zanotto, L.; Piccoli, R.; Dong, J.; Morandotti, R.; Razzari, L. Single-pixel terahertz imaging: A review. Opto-Electron Adv. 2020, 3, 200012.

- Olbinado, M.P.; Paganin, D.M.; Cheng, Y.; Rack, A. X-ray phase-contrast ghost imaging using a single-pixel camera. Optica 2021, 8, 1538–1544.

- Li, J.; Cheng, K.; Qi, S.; Zhang, Z.; Zheng, G.; Zhong, J. Full-resolution, full-field-of-view, and high-quality fast Fourier single-pixel imaging. Opt. Lett. 2023, 48, 49–52.

- Czajkowski, K.; Pastuszczak, A.; Kotynski, R. Real-time single-pixel video imaging with Fourier domain regularization. Opt. Express 2018, 26, 20009–20022.

- Higham, C.; Murray-Smith, R.; Padgett, M.; Edgar, M. Deep learning for real-time single-pixel video. Sci. Rep. 2018, 8, 2369.

- Rizvi, S.; Cao, J.; Zhang, K.; Hao, Q. DeepGhost: Real-time computational ghost imaging via deep learning. Sci. Rep. 2020, 10, 11400.

- Wang, G.; Deng, H.; Ma, M.; Zhong, X.; Gong, X. A non-iterative foveated single-pixel imaging using fast transformation algorithm. Appl. Phys. Lett. 2023, 123, 081101.

- Zhu, X.; Li, Y.; Zhang, Z.; Zhong, J. Adaptive real-time single-pixel imaging. Opt. Lett. 2024, 49, 1065–1068.

- Zhang, Z.; Zheng, S.; Qiu, M.; Situ, G.; Brady, D.J.; Dai, Q.; Suo, J.; Yuan, X. A Decade Review of Video Compressive Sensing: A Roadmap to Practical Applications. Engineering 2024. In Press.

- Ni, Y.; Zhou, D.; Yuan, S.; Bai, X.; Xu, Z.; Chen, J.; Li, C.; Zhou, X. Color computational ghost imaging based on a generative adversarial network. Opt. Lett. 2021, 46, 1840–1843.

- Wu, H.; Wang, R.; Zhao, G.; Xiao, H.; Wang, D.; Liang, J.; Tian, X.; Cheng, L.; Zhang, X. Sub-Nyquist computational ghost imaging with deep learning. Opt. Express 2020, 28, 3846–3853.

- Wang, F.; Wang, H.; Wang, H.; Li, G.; Situ, G. Learning from simulation: An end-to-end deep-learning approach for computational ghost imaging. Opt. Express 2019, 27, 25560–25572.

- Wang, F.; Wang, C.; Deng, C.; Han, S.; Situ, G. Single-pixel imaging using physics enhanced deep learning. Photon. Res. 2022, 10, 104–110.

- Tian, Y.; Fu, Y.; Zhang, J. Plug-and-play algorithms for single-pixel imaging. Opt. Lasers Eng. 2022, 154, 106970.

- Cheng, Z.; Lu, R.; Wang, Z.; Zhang, H.; Chen, B.; Meng, Z.; Yuan, X. BIRNAT: Bidirectional Recurrent Neural Networks with Adversarial Training for Video Snapshot Compressive Imaging. In Proceedings of the Computer Vision – ECCV 2020, Glasgow, UK, 23–28 August 2020; Vedaldi, A., Bischof, H., Brox, T., Frahm, J.M., Eds.; Springer: Berlin/Heidelberg, Germany, 2020; pp. 258–275.

- Qin, C.; Schlemper, J.; Caballero, J.; Price, A.N.; Hajnal, J.V.; Rueckert, D. Convolutional Recurrent Neural Networks for Dynamic MR Image Reconstruction. IEEE Trans. Med. Imaging 2019, 38, 280–290.

- Li, Z.; Huang, J.; Shi, D.; Chen, Y.; Yuan, K.; Hu, S.; Wang, Y. Single-pixel imaging with untrained convolutional autoencoder network. Opt. Laser Technol. 2023, 167, 109710.

- Liu, S.; Meng, X.; Yin, Y.; Wu, H.; Jiang, W. Computational ghost imaging based on an untrained neural network. Opt. Lasers Eng. 2021, 147, 106744.

- Wang, C.H.; Li, H.Z.; Bie, S.H.; Lv, R.B.; Chen, X.H. Single-Pixel Hyperspectral Imaging via an Untrained Convolutional Neural Network. Photonics 2023, 10, 224.

- Stojek, R.; Pastuszczak, A.; Wrobel, P.; Kotynski, R. Single pixel imaging at high pixel resolutions. Opt. Express 2022, 30, 22730–22745.

- Pastuszczak, A.; Stojek, R.; Wróbel, P.; Kotyński, R. Single pixel imaging at high resolution with sampling based on image maps. In Proceedings of the 2024 International Workshop on the Theory of Computational Sensing and its Applications to Radar, Multimodal Sensing and Imaging (CoSeRa), Santiago de Compostela, Spain, 18–20 September 2024; pp. 86–90.

- Zhao, W.; Gao, L.; Zhai, A.; Wang, D. Comparison of Common Algorithms for Single-Pixel Imaging via Compressed Sensing. Sensors 2023, 23, 4678.

- Bian, L.; Suo, J.; Dai, Q.; Chen, F. Experimental comparison of single-pixel imaging algorithms. J. Opt. Soc. Am. A 2018, 35, 78–87.

- Zhang, Z.; Ma, X.; Zhong, J. Single-pixel imaging by means of Fourier spectrum acquisition. Nat. Commun. 2015, 6, 6225.

- Czajkowski, K.M.; Pastuszczak, A.; Kotynski, R. Single-pixel imaging with sampling distributed over simplex vertices. Opt. Lett. 2019, 44, 1241–1244.

- Yu, W.K.; Liu, X.F.; Yao, X.R.; Wang, C.; Zhai, Y.; Zhai, G.J. Complementary compressive imaging for the telescopic system. Sci. Rep. 2014, 4, 5834.

- Gong, W. Disturbance-free single-pixel imaging camera via complementary detection. Opt. Express 2023, 31, 30505–30513.

- Yu, W.K.; Yao, X.R.; Liu, X.F.; Li, L.Z.; Zhai, G.J. Compressive moving target tracking with thermal light based on complementary sampling. Appl. Opt. 2015, 54, 4249–4254.

- Fellgett, P. Conclusions on multiplex methods. J. Phys. Colloq. 1967, 28, C2-165–C2-171.

- Scotté, C.; Galland, F.; Rigneault, H. Photon-noise: Is a single-pixel camera better than point scanning? A signal-to-noise ratio analysis for Hadamard and Cosine positive modulation. J. Phys. Photonics 2023, 5, 035003.

- Phillips, D.B.; Sun, M.J.; Taylor, J.M.; Edgar, M.P.; Barnett, S.M.; Gibson, G.M.; Padgett, M.J. Adaptive foveated single-pixel imaging with dynamic supersampling. Sci. Adv. 2017, 3, e1601782.

- Cui, H.; Cao, J.; Zhang, H.; Zhou, C.; Yao, H.; Hao, Q. Uniform-sampling foveated Fourier single-pixel imaging. Opt. Laser Technol. 2024, 179, 111249.

- Deng, L. The mnist database of handwritten digit images for machine learning research. IEEE Signal Process. Mag. 2012, 29, 141–142.

- Sobczak, K.; Kotynski, R.; Stojek, R.; Pastuszczak, A.; Cwojdzinska, M.; Wróbel, P. Large Datasets for High Resolution Single Pixel Imaging; Data Repository of the University of Warsaw: Warsaw, Poland, 2024.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Stojek, R.; Pastuszczak, A.; Wróbel, P.; Cwojdzińska, M.; Sobczak, K.; Kotyński, R. High-Resolution Single-Pixel Imaging of Spatially Sparse Objects: Real-Time Imaging in the Near-Infrared and Visible Wavelength Ranges Enhanced with Iterative Processing or Deep Learning. Sensors 2024, 24, 8139. https://doi.org/10.3390/s24248139
