Mind the Step: An Artificial Intelligence-Based Monitoring Platform for Animal Welfare

Abstract

1. Introduction

2. Materials and Methods

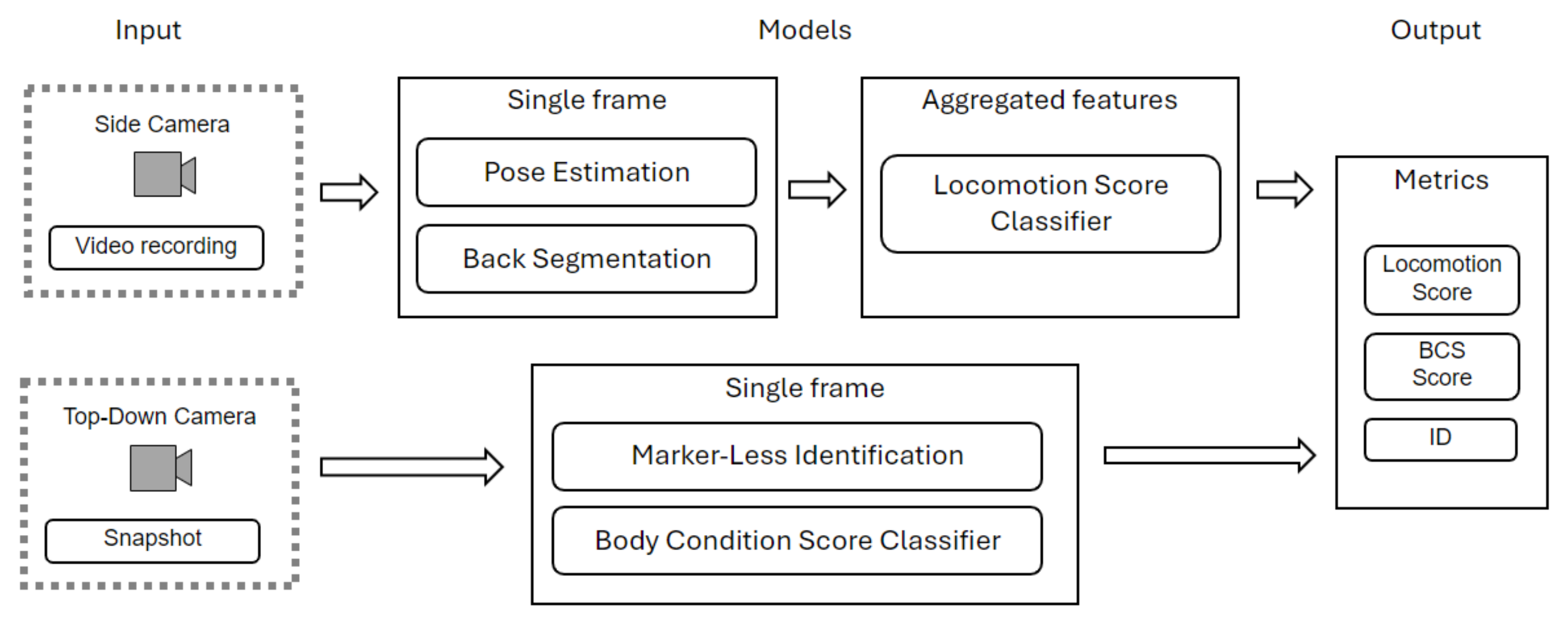

2.1. System 1—Health Status Assessment

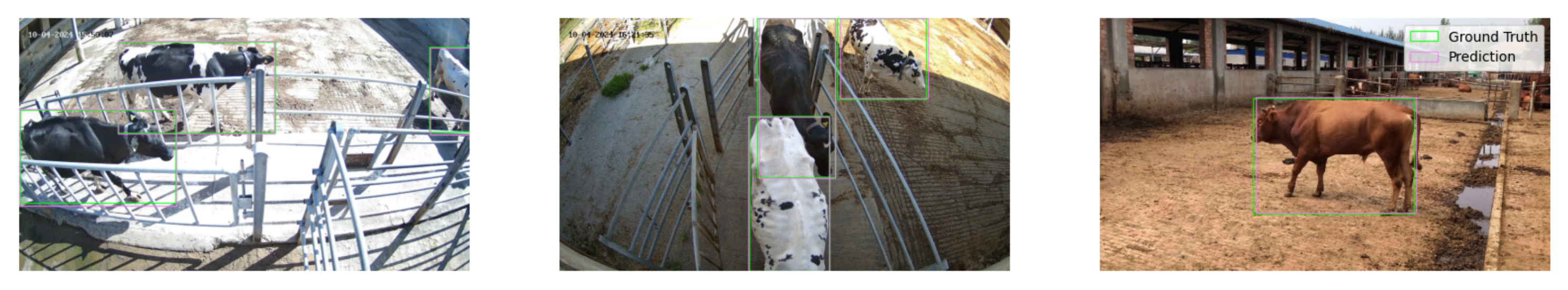

2.1.1. Setup and Data

2.1.2. Cattle Detection and Tracking

2.1.3. Pose Estimation and Locomotion Features

2.1.4. Locomotion Score Classifier

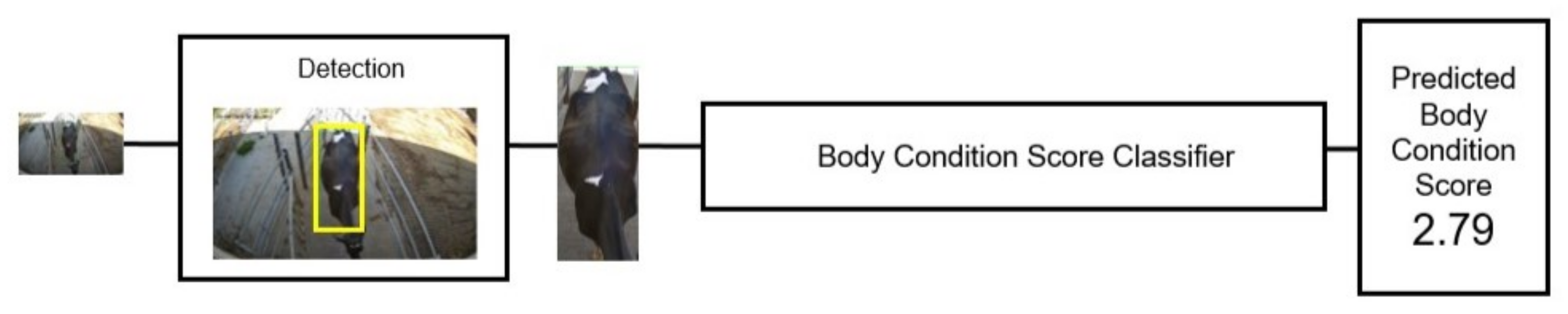

2.1.5. Body Condition Score Classifier

2.1.6. Markerless Identification

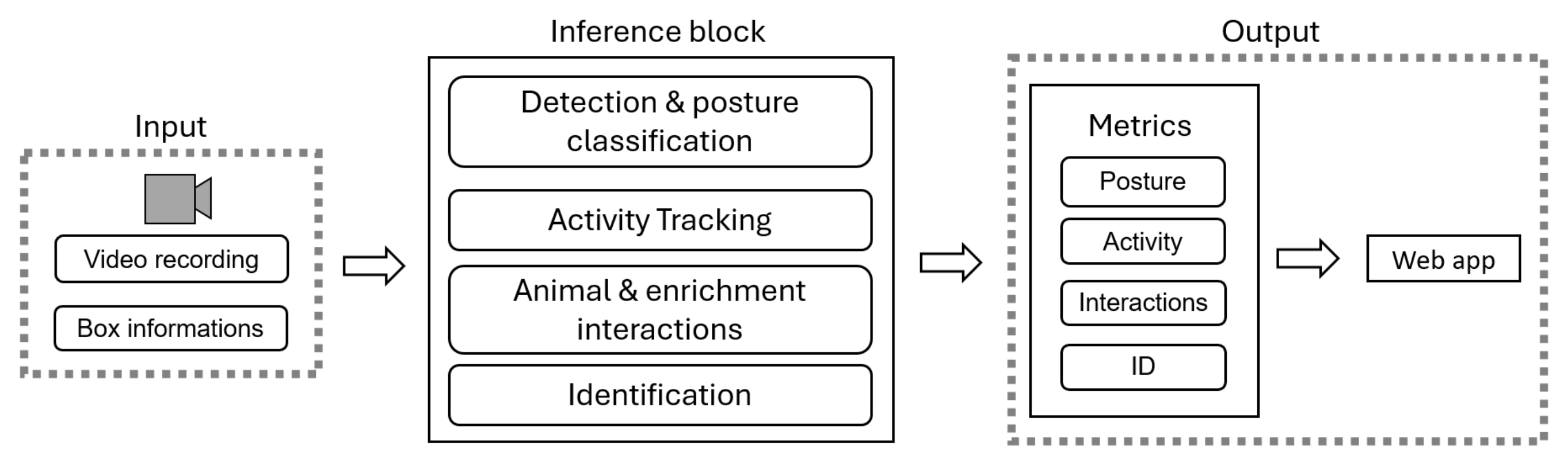

2.2. System 2—Behavioral Analysis

2.2.1. Setup and Data

2.2.2. Identification and Monitoring Models

2.2.3. Pig Detection, Classification, and Tracking

2.2.4. Activity Tracking and Body-Part Segmentation

2.2.5. Interactions

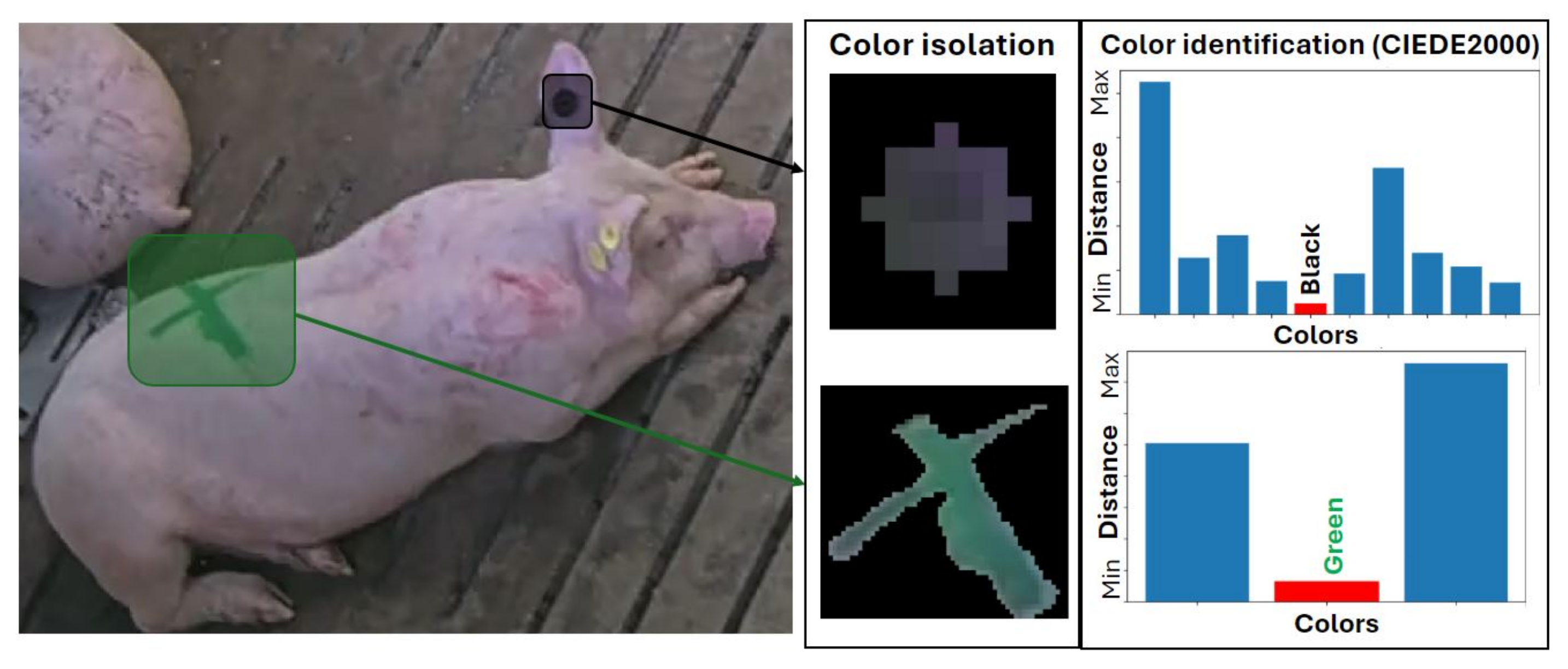

2.2.6. Identification

3. Results

3.1. Dairy Cattle Health Monitoring

3.1.1. Comparative Results Across Datasets

3.1.2. Performance

3.2. Pig Behavioral Analysis

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Feature | Description |
|---|---|
| Body length | Distance between withers and pin key points |
| Right step size | Front-back hoof distance (n1) |
| Left step size | Front-back hoof distance (n1) |
| Head position | Head height (n2) |
| Front-right hoof–elbow shift | Horizontal shift from front-right hoof to elbow (n1) |
| Front-left hoof–elbow shift | Horizontal shift from front-left hoof to elbow (n1) |
| Back-right hoof–elbow shift | Horizontal shift from back-right hoof to elbow (n1) |
| Back-left hoof–elbow shift | Horizontal shift from back-left hoof to elbow (n1) |
| Back straightness score | Back segmented edge line score |
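The geometric features in the table above can be illustrated with a minimal sketch. It is not the authors' implementation: the keypoint names, the use of Euclidean distance for step size, and the reading of "(n1)" as division by body length are all assumptions made for illustration. Head position "(n2)" and the back straightness score are omitted because the table does not define them precisely enough to reproduce.

```python
import math


def locomotion_features(kp):
    """Compute a subset of the tabulated features from 2D keypoints.

    `kp` maps hypothetical keypoint names to (x, y) pixel coordinates.
    ASSUMPTION: "(n1)" is read here as normalization by body length.
    """
    body_length = math.dist(kp["withers"], kp["pin"])
    if body_length == 0:
        raise ValueError("withers and pin coincide; cannot normalize")

    feats = {"body_length": body_length}
    for side in ("right", "left"):
        front_hoof = kp[f"front_{side}_hoof"]
        back_hoof = kp[f"back_{side}_hoof"]
        # ASSUMPTION: step size is the Euclidean front-back hoof distance.
        feats[f"{side}_step_size"] = math.dist(front_hoof, back_hoof) / body_length
        for pos in ("front", "back"):
            hoof = kp[f"{pos}_{side}_hoof"]
            elbow = kp[f"{pos}_{side}_elbow"]
            # Signed horizontal (x-axis) offset from elbow to hoof.
            feats[f"{pos}_{side}_hoof_elbow_shift"] = (hoof[0] - elbow[0]) / body_length
    return feats


# Made-up keypoints for a single frame (pixel coordinates, not real data).
sample = {
    "withers": (100, 50), "pin": (300, 50),
    "front_right_hoof": (110, 250), "back_right_hoof": (290, 250),
    "front_left_hoof": (130, 250), "back_left_hoof": (270, 250),
    "front_right_elbow": (100, 150), "back_right_elbow": (300, 150),
    "front_left_elbow": (100, 150), "back_left_elbow": (300, 150),
}
features = locomotion_features(sample)
```

Dividing by body length makes the features comparable across animals of different size and across distances from the camera, which is one plausible reason for the normalization markers in the table.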

| Model | Public Portion: No. of Instances | Public Portion: mAP50 | Acquired Portion: No. of Instances | Acquired Portion: mAP50 | Complete Test Set: No. of Instances | Complete Test Set: mAP50 |
|---|---|---|---|---|---|---|
| Detection | 13,629 | 0.985 | 2418 | 0.957 | 16,047 | 0.978 |
| Pose | 3288 | 0.983 | 400 | 0.881 | 3688 | 0.972 |

| Model | Metric | Inference Time, RTX2060 [ms] | Inference Time, RTX3060 [ms] | Inference Time, H100 [ms] |
|---|---|---|---|---|
| Detection | mAP50 = 0.978 | 37.29 | 34.44 | 26.54 |
| Pose | mAP50 = 0.972; OKS = 0.786 | 20.17 | 19.51 | 10.57 |
| Back | mAP50 = 0.951; IoU = 0.931 | 26.29 | 19.34 | 13.29 |
| LS Score | = 0.96 | 1.93 | 1.48 | 0.15 |
| BCS | = 0.83 | 22.64 | 18.06 | 10.31 |
| Identif. | 98% (Acc) | 13.17 | 11.30 | 7.57 |
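As a rough reading aid for the inference times above, the following sketch sums the per-model times for each GPU and converts the total into an upper bound on frames per second. It assumes that all six models run sequentially once per frame, which the table does not state; batching, skipping models on some frames, or running them in parallel would change the figures. The helper name `sequential_budget` is hypothetical.

```python
# Per-model inference times in ms, copied from the table above
# (Detection, Pose, Back, LS Score, BCS, Identif.).
INFERENCE_MS = {
    "RTX2060": [37.29, 20.17, 26.29, 1.93, 22.64, 13.17],
    "RTX3060": [34.44, 19.51, 19.34, 1.48, 18.06, 11.30],
    "H100": [26.54, 10.57, 13.29, 0.15, 10.31, 7.57],
}


def sequential_budget(times_ms):
    """Return (total ms per frame, max frames per second).

    ASSUMPTION: every model runs once per frame, one after another.
    """
    total = sum(times_ms)
    return total, 1000.0 / total


for gpu, times in INFERENCE_MS.items():
    total, fps = sequential_budget(times)
    print(f"{gpu}: {total:.2f} ms per frame, at most {fps:.1f} frames/s")
```

Under this sequential assumption the totals exclude video decoding, tracking, and data transfer, so real throughput would be lower.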

| Model | Inference Time, V100 [ms] | Inference Time, H100 [ms] |
|---|---|---|
| Detection and Classification | 25 | 18 |
| Body Segmentation | 27 | 18 |
| Interactions | 17 | 11 |
| Identification (both models) | 40 | 30 |
| Activity Tracking | 8 | 5 |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Michielon, A.; Litta, P.; Bonelli, F.; Don, G.; Farisè, S.; Giannuzzi, D.; Milanesi, M.; Pietrucci, D.; Vezzoli, A.; Cecchinato, A.; Chillemi, G.; Gallo, L.; Mele, M.; Furlanello, C. Mind the Step: An Artificial Intelligence-Based Monitoring Platform for Animal Welfare. Sensors 2024, 24, 8042. https://doi.org/10.3390/s24248042