LiDAR-Based Negative Obstacle Detection for Unmanned Ground Vehicles in Orchards

Abstract

1. Introduction

2. Methods

2.1. Tilted Installation of LiDAR

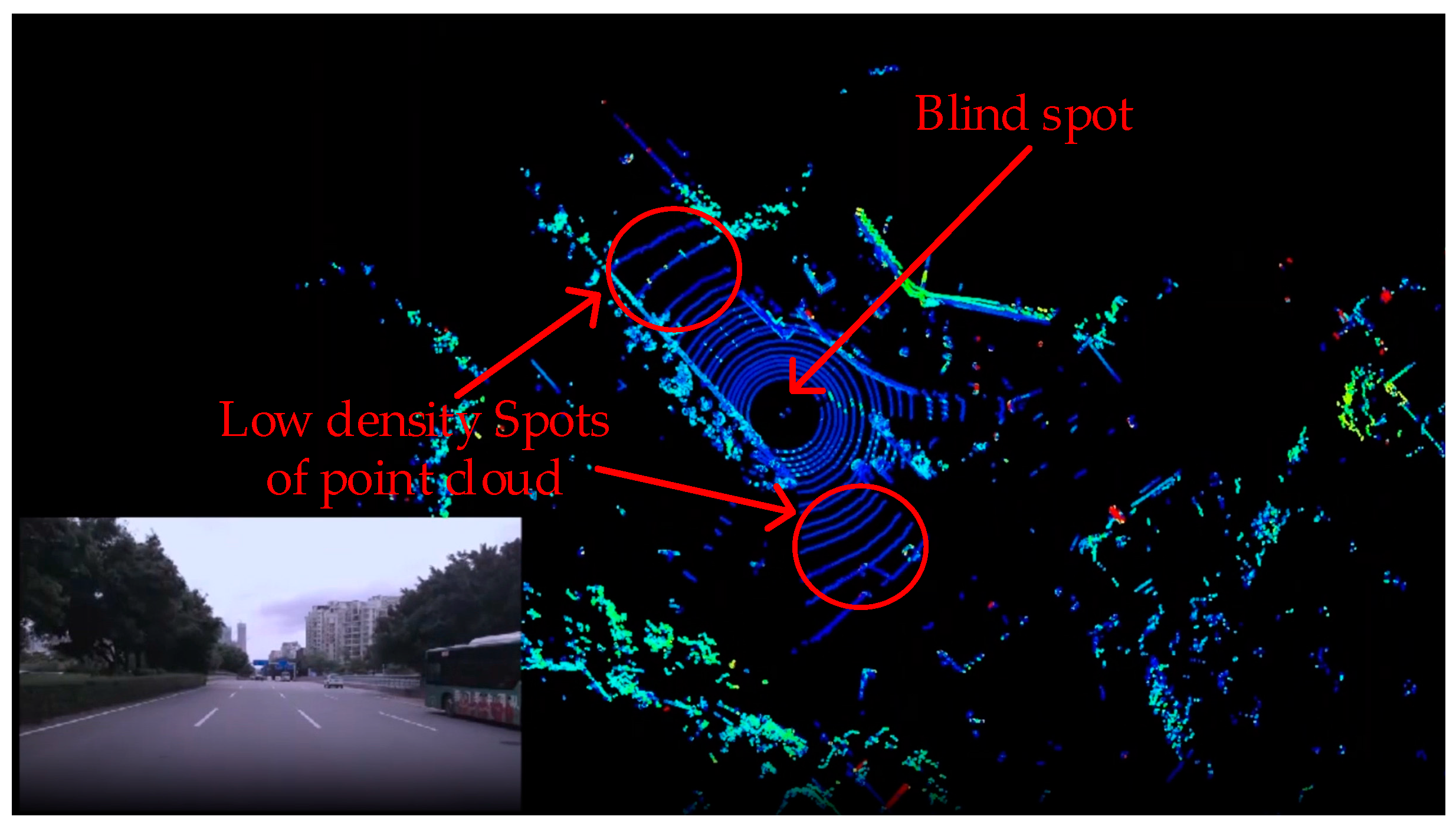

2.1.1. The Disadvantages of Traditional Upright Setup

2.1.2. The Use of MID-70 LiDAR

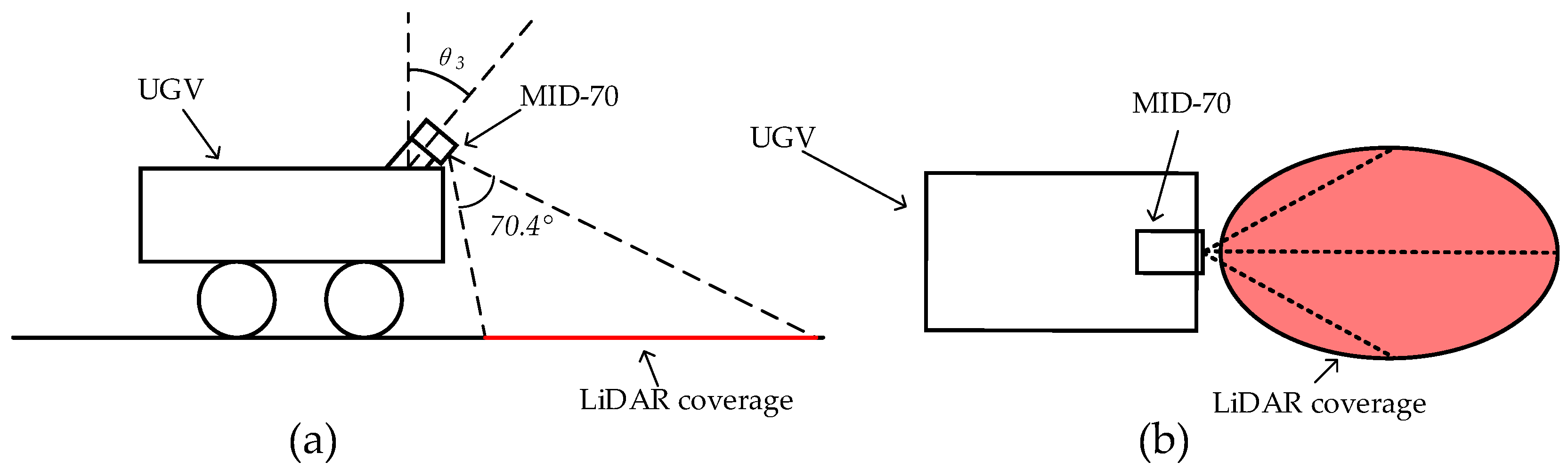

2.1.3. The Tilted Installation of LiDAR

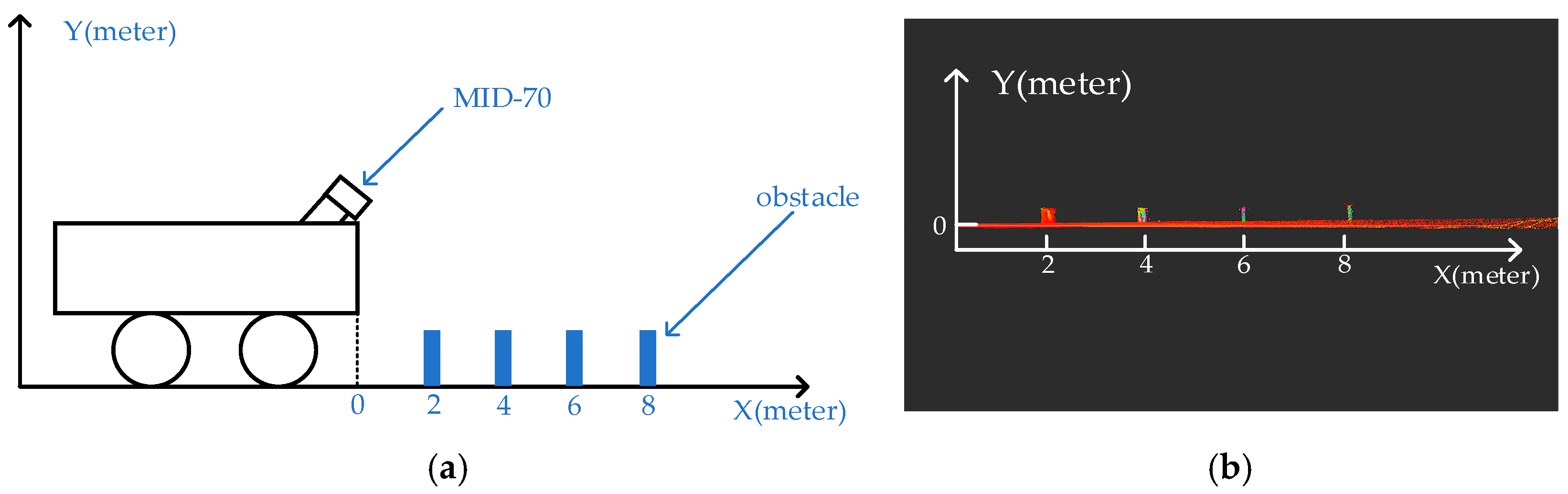

2.1.4. Experimental Arrangements

2.2. Negative Obstacle Detection Method Based on Point Cloud Spatial Geometric Features

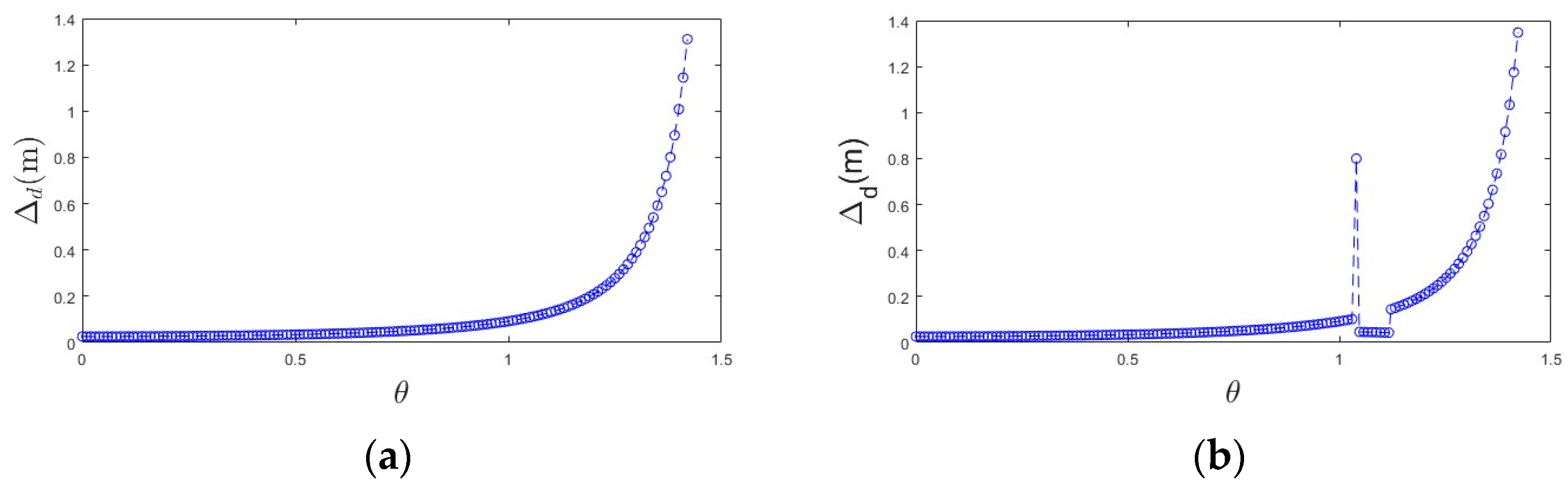

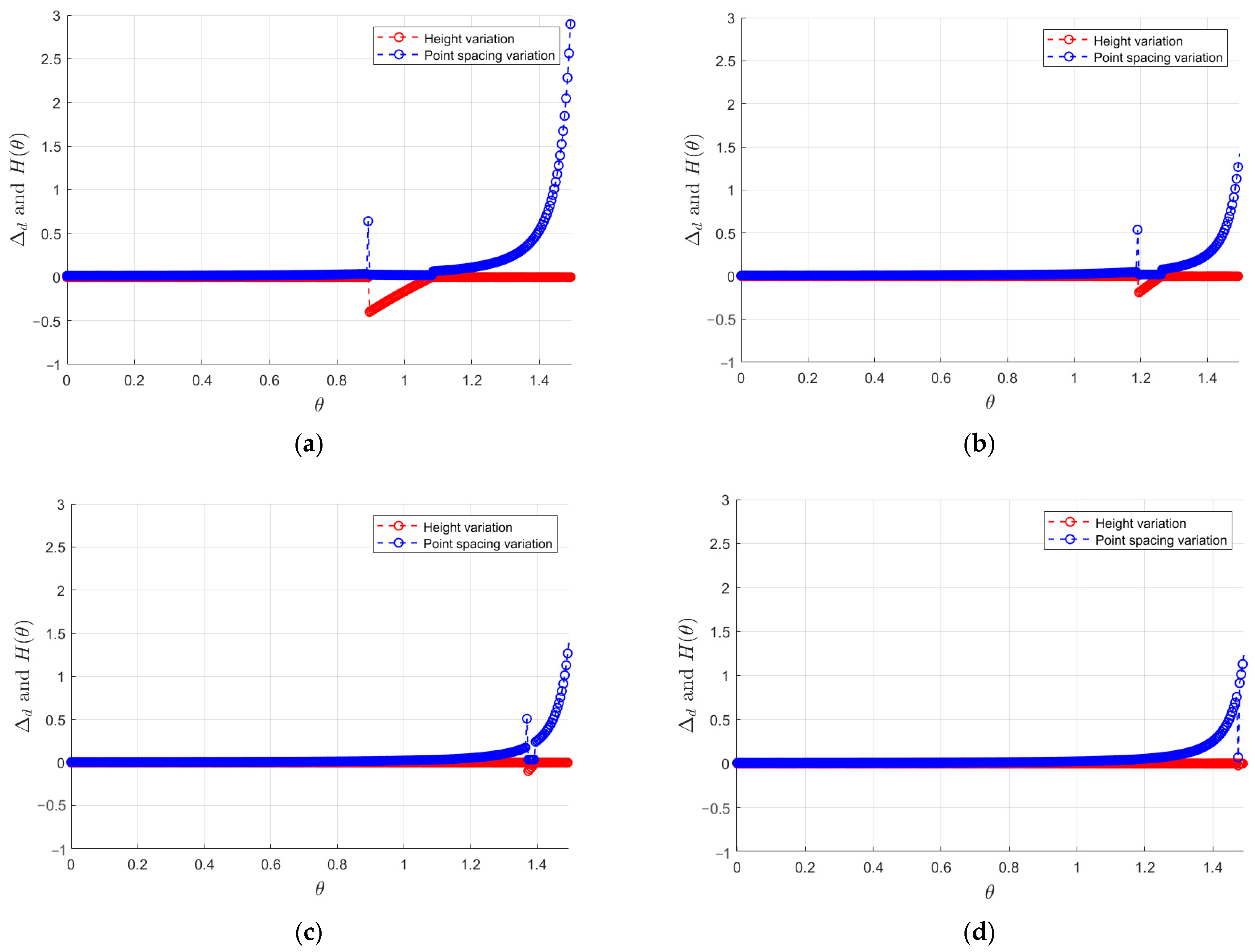

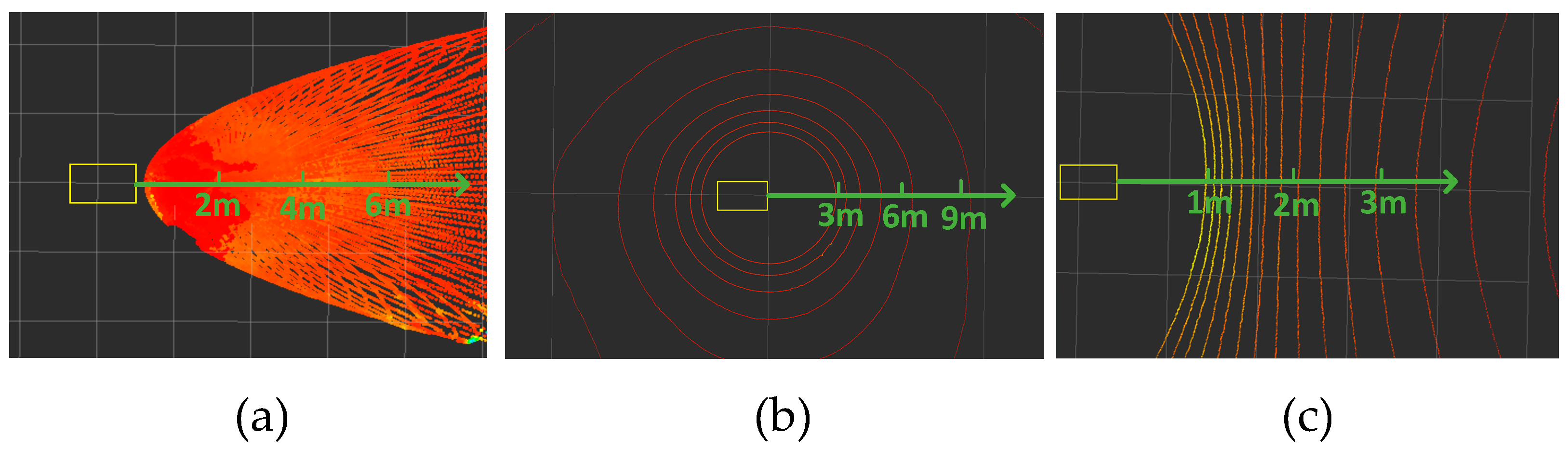

2.2.1. Spatial Geometric Characterization of Negative Obstacle Point Cloud

2.2.2. Negative Obstacle Detection: An Algorithm-Specific Process

2.2.3. Experimental Arrangement

2.3. Experimental System and Evaluation Indicators

3. Results

3.1. Experimental Result and Analysis of Tilt-Mounting Method

3.2. Experimental Result and Analysis of Negative Obstacles Detection Algorithms

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Setup Method | S1 | S2 | S3 | S4 |
|---|---|---|---|---|
| Traditional upright mount | 0 | 36 | 22 | 18 |
| Tilted mechanical LiDAR mount | 105 | 82 | 27 | 25 |
| Tilted solid-state LiDAR mount | 835 | 551 | 264 | 161 |

| Negative Obstacle Scenario | N0 | N1 | N2 | Psuccess | Pfalse | Pmiss | Dmax (m) | Average Time Spent (ms) |
|---|---|---|---|---|---|---|---|---|
| O1 | 525 | 490 | 30 | 93.3% | 5.7% | 6.7% | 8.0 | 16.2 |
| O2 | 684 | 635 | 36 | 92.8% | 5.3% | 7.2% | 8.5 | 17.1 |
| O3 | 396 | 352 | 32 | 88.9% | 8.1% | 11.1% | 6.9 | 18.9 |
| O4 | 384 | 368 | 10 | 95.8% | 2.6% | 4.2% | 8.4 | 16.8 |
| Average | 497 | 461 | 27 | 92.7% | 5.4% | 7.3% | 8.0 | 17.3 |
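The percentage columns in the table above can be reproduced from the count columns. The sketch below assumes Psuccess = N1/N0, Pfalse = N2/N0, and Pmiss = 1 − Psuccess; these relations are inferred from the reported numbers and are not definitions given in this excerpt. The `counts` dictionary and the `rates` function are hypothetical names introduced for illustration only.

```python
# Counts copied from the results table: (N0, N1, N2) per scenario.
# What N0, N1 and N2 stand for is an assumption inferred from the percentages.
counts = {
    "O1": (525, 490, 30),
    "O2": (684, 635, 36),
    "O3": (396, 352, 32),
    "O4": (384, 368, 10),
}


def rates(n0, n1, n2):
    """Return (success, false, miss) rates in percent.

    Assumed relations (not stated in the excerpt):
    success = N1 / N0, false = N2 / N0, miss = 1 - success.
    """
    p_success = 100.0 * n1 / n0
    p_false = 100.0 * n2 / n0
    p_miss = 100.0 - p_success
    return p_success, p_false, p_miss


for name, (n0, n1, n2) in counts.items():
    s, f, m = rates(n0, n1, n2)
    print(f"{name}: success={s:.1f}% false={f:.1f}% miss={m:.1f}%")
```

Under these assumptions, each scenario row matches the table to one decimal place. The "Average" row appears to be the arithmetic mean of the per-scenario values rather than a rate pooled over all frames.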

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Xie, P.; Wang, H.; Huang, Y.; Gao, Q.; Bai, Z.; Zhang, L.; Ye, Y. LiDAR-Based Negative Obstacle Detection for Unmanned Ground Vehicles in Orchards. Sensors 2024, 24, 7929. https://doi.org/10.3390/s24247929
