Integrating Vision and Olfaction via Multi-Modal LLM for Robotic Odor Source Localization

Abstract

1. Introduction

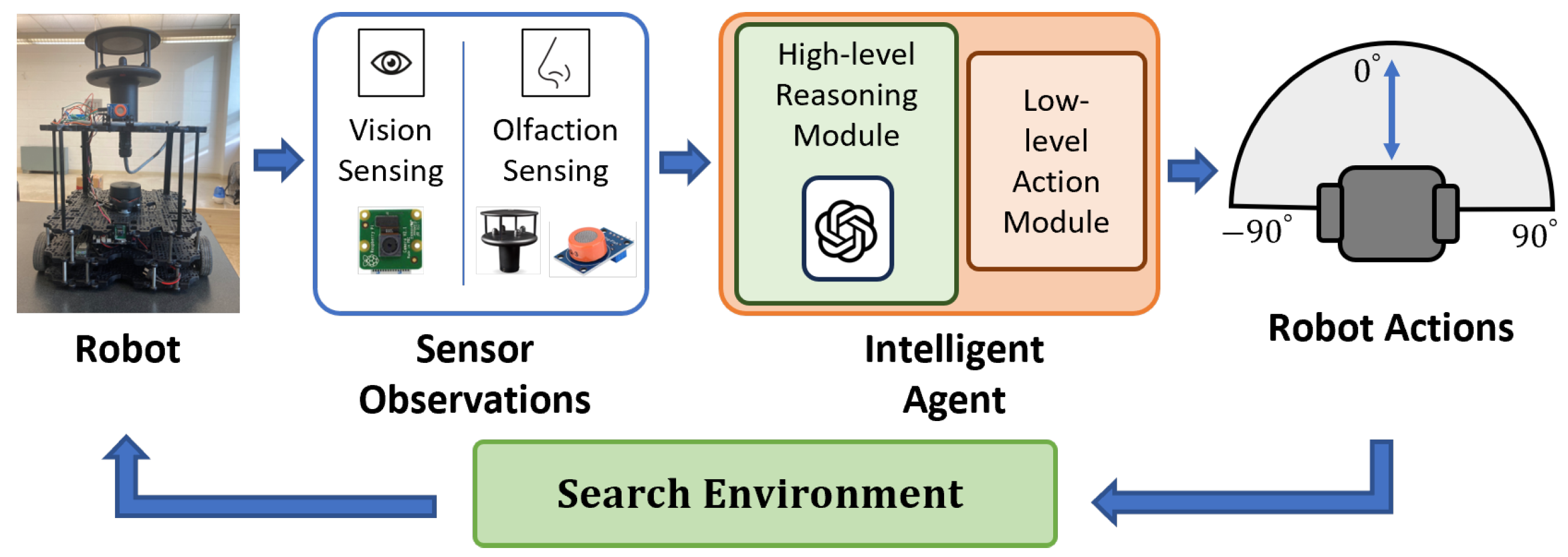

- Integrating vision and olfaction sensing to localize odor sources in complex real-world environments.

- Developing an OSL navigation algorithm that exploits the zero-shot multi-modal reasoning capability of a multi-modal LLM, including modules that process the inputs to and the outputs from the LLM.

- Implementing the proposed intelligent agent in real-world experiments and comparing its search performance to the rule-based Fusion navigation algorithm [21].

2. Related Works

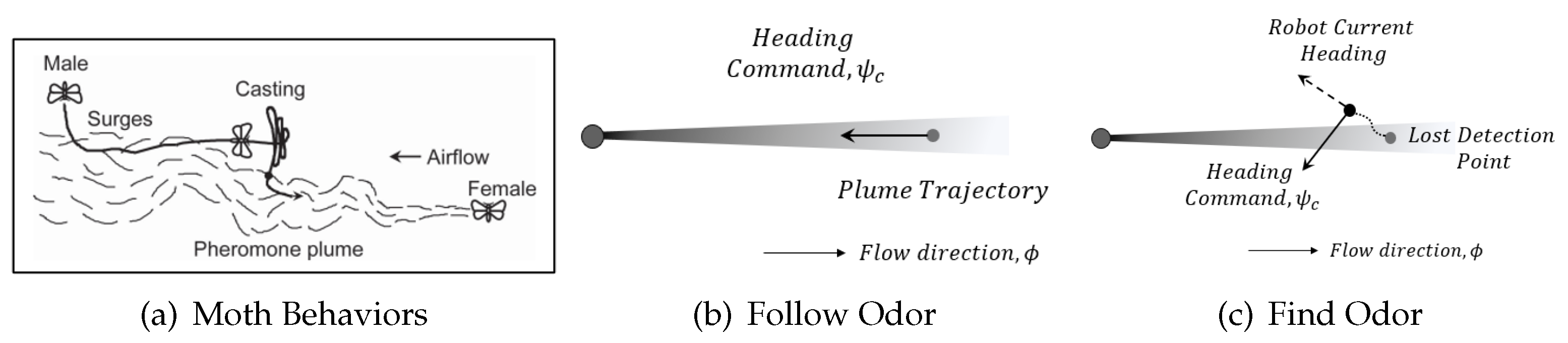

2.1. Olfactory-Based Methods

2.2. Vision and Olfaction Integration in OSL

2.3. LLMs in Robotics

2.4. Research Niche

3. Methodology

3.1. Problem Statement

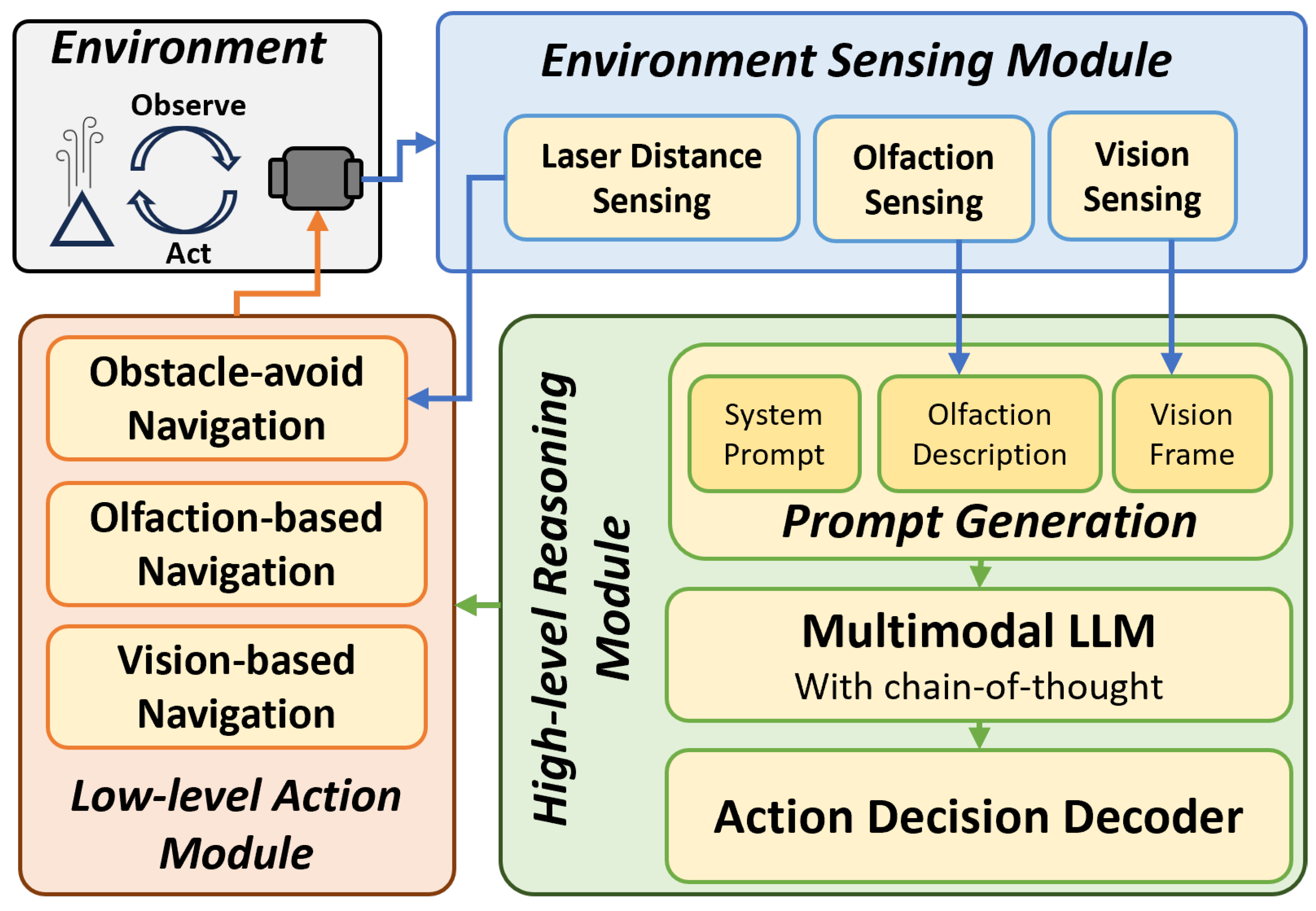

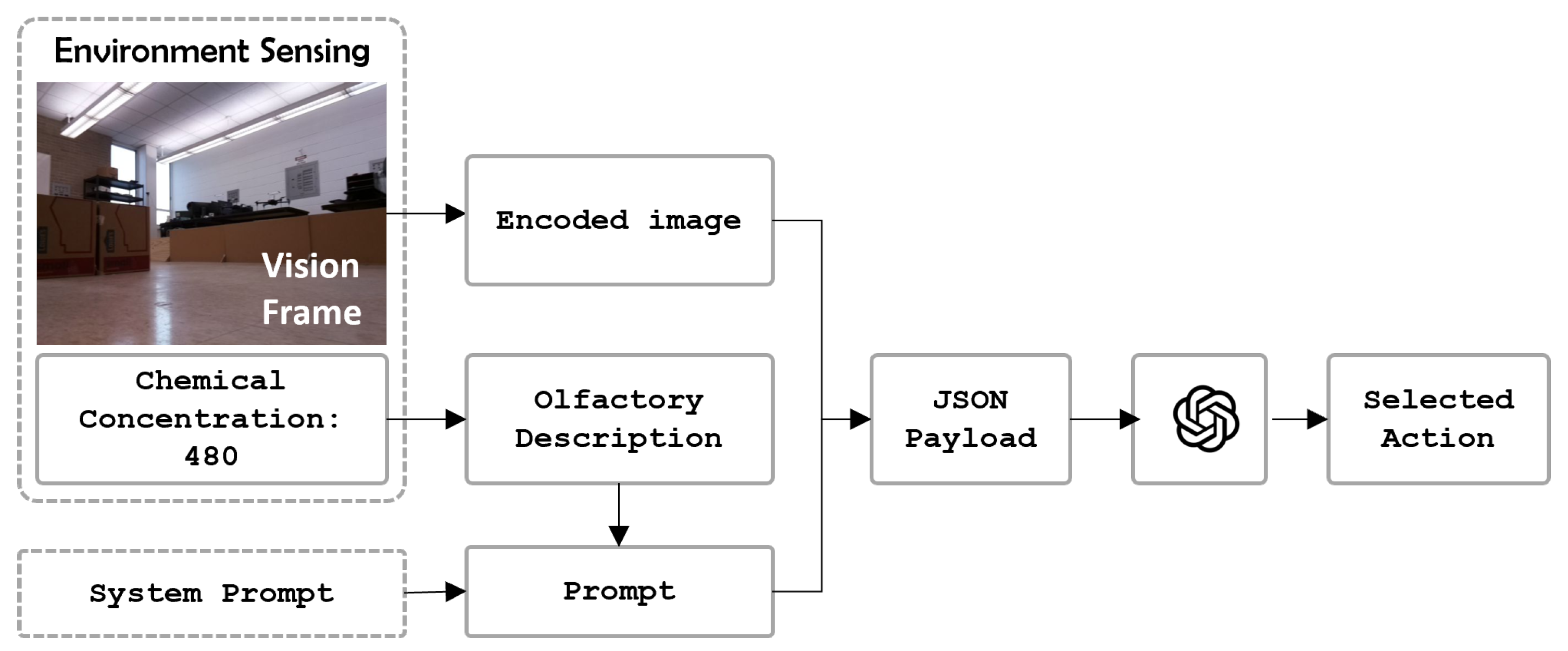

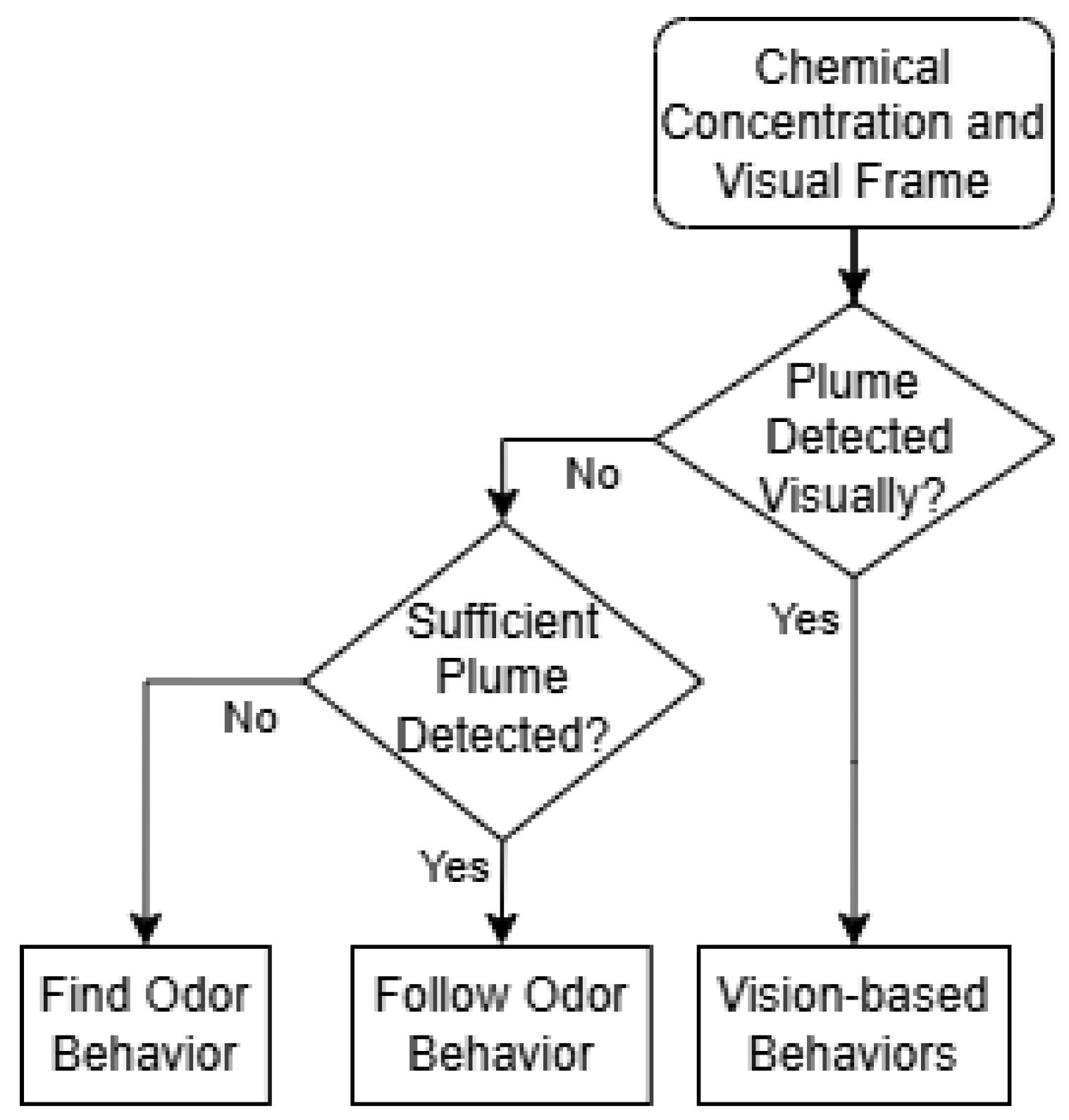

3.2. Environment Sensing Module

3.3. High-Level Reasoning Module

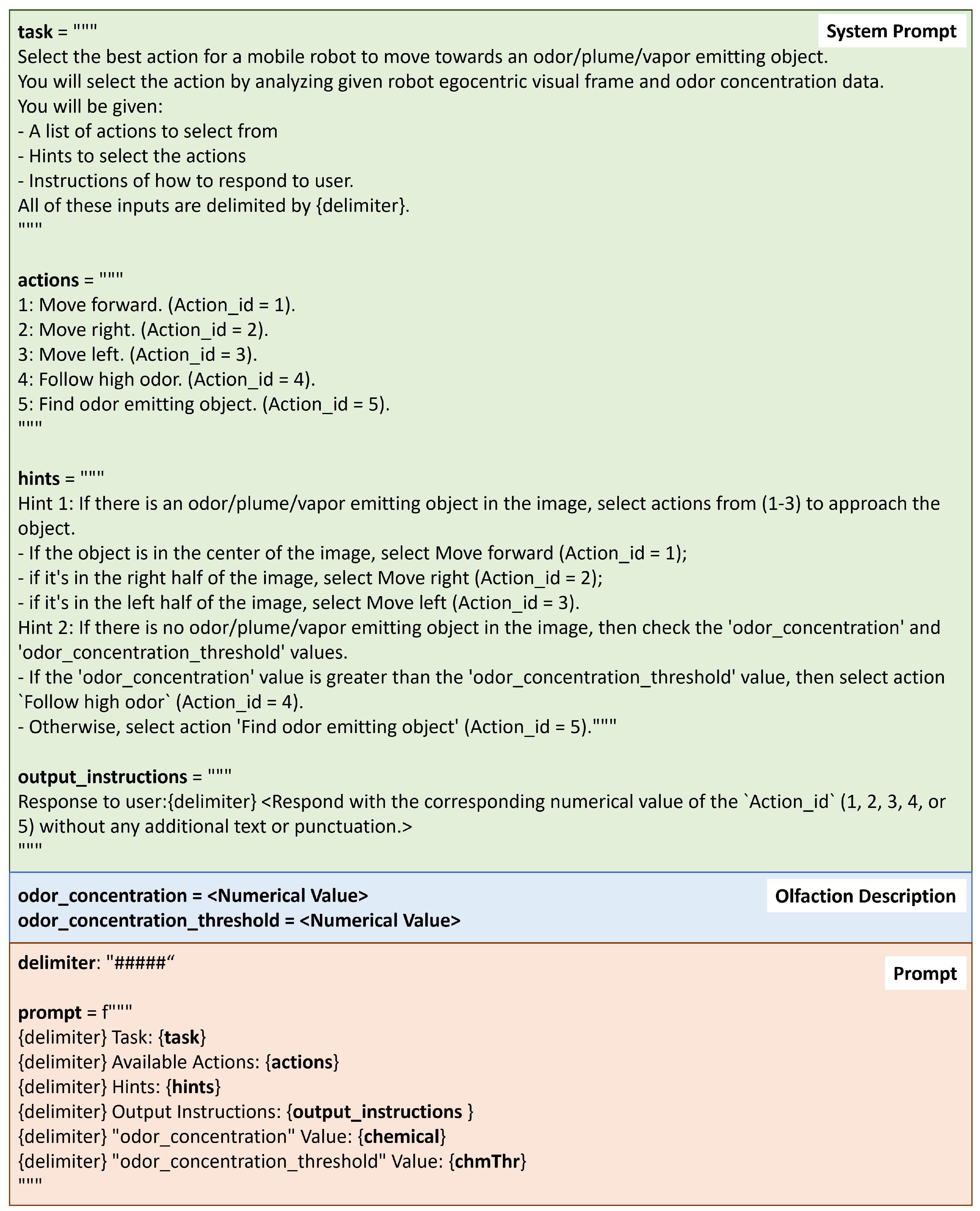

- Task: Describes the objective and process the LLM should follow.

- Actions: Lists the actions available for the LLM to choose from.

- Hints: Guides the LLM to select appropriate vision-based or olfaction-based actions based on multi-modal reasoning.

- Output instruction: Directs the LLM to generate only the action without additional reasoning.
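The four prompt components above can be illustrated with a minimal Python sketch. It assumes a plain-text prompt and a hypothetical action vocabulary (`ACTIONS`). The paper's actual prompt wording, action names, and LLM API are not reproduced here, and the fallback rule in `parse_action` is an assumption about how an off-list reply might be handled.

```python
from typing import Sequence

# Hypothetical action vocabulary: placeholders, not the paper's action list.
ACTIONS = ("move_forward", "turn_left", "turn_right", "approach_object", "follow_wind")


def build_prompt(task: str, actions: Sequence[str], hints: str, output_instruction: str) -> str:
    """Assemble the Task, Actions, Hints, and Output instruction parts into one prompt."""
    action_lines = "\n".join(f"- {a}" for a in actions)
    return (
        f"Task: {task}\n"
        f"Actions:\n{action_lines}\n"
        f"Hints: {hints}\n"
        f"Output instruction: {output_instruction}"
    )


def parse_action(reply: str, actions: Sequence[str], fallback: str) -> str:
    """Map the raw LLM reply onto exactly one allowed action.

    Surrounding whitespace plus leading or trailing quotes and periods are
    removed and the text is lower-cased; a reply that still does not match an
    allowed action exactly is replaced by `fallback`.
    """
    cleaned = reply.strip().strip("\"'.").strip().lower()
    return cleaned if cleaned in actions else fallback


if __name__ == "__main__":
    prompt = build_prompt(
        task="Navigate the robot to the odor source.",
        actions=ACTIONS,
        hints="Prefer vision-based actions when the source is visible; otherwise use olfaction-based actions.",
        output_instruction="Reply with one action name only.",
    )
    print(prompt)
    print(parse_action(" Turn_Left. ", ACTIONS, fallback="move_forward"))
```

Keeping the reply parser strict is one way to enforce the output instruction: a reply that does not name exactly one allowed action is replaced by a default instead of being interpreted.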

3.4. Low-Level Action Module

4. Experiment

4.1. Experiment Setup

4.2. Comparison of Algorithms

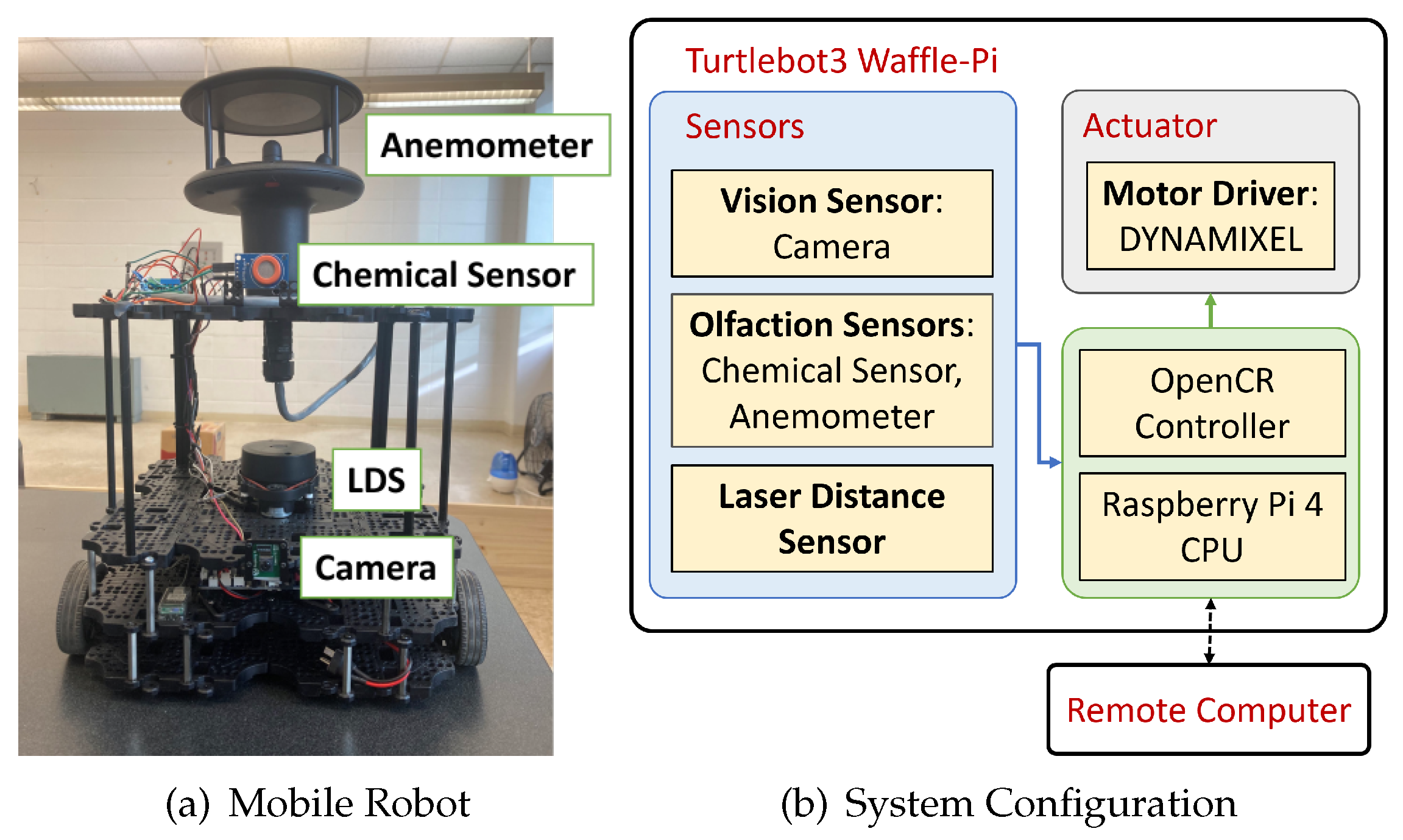

4.3. Robot Platform

- Camera: Raspberry Pi Camera v2, which can record 1080p video at 30 frames per second (FPS). It was used to capture the robot’s egocentric vision frames.

- LDS: LDS-02 laser distance sensor, which can detect distances of 160–8000 mm over 360 degrees. It was used to measure distances to obstacles.

- Anemometer: WindSonic (Gill Inc.), which can sense wind speeds of 0–75 m/s over 360 degrees. It was used to record wind direction and speed.

- Chemical sensor: MQ3 alcohol detector, which can sense alcohol concentrations of 25–500 ppm. It was used to record odor concentration.
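To make the sensor list concrete, the sketch below bundles one time step of readings into a Python dataclass and flags values outside the ranges quoted above for the anemometer and the chemical sensor. The field names, the wrap-around convention for wind direction, and the idea of range-checking readings at all are assumptions for illustration, not details taken from the paper.

```python
from dataclasses import dataclass, field
from typing import List

WIND_SPEED_RANGE_MS = (0.0, 75.0)   # WindSonic range quoted in the sensor list
ODOR_RANGE_PPM = (25.0, 500.0)      # MQ3 range quoted in the sensor list


@dataclass
class Observation:
    """One time step of multi-modal sensor data (hypothetical field names)."""
    frame_id: int            # index of the egocentric camera frame (visual observation)
    wind_speed: float        # m/s, from the anemometer
    wind_direction: float    # degrees, robot body frame
    odor_ppm: float          # alcohol concentration from the chemical sensor
    warnings: List[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        # Wrap wind direction into [0, 360) so downstream logic sees one convention.
        self.wind_direction %= 360.0
        lo, hi = WIND_SPEED_RANGE_MS
        if not lo <= self.wind_speed <= hi:
            self.warnings.append("wind_speed out of sensor range")
        lo, hi = ODOR_RANGE_PPM
        if self.odor_ppm < lo:
            # Below the quoted lower bound: treat as no reliable detection.
            self.warnings.append("odor below detection range")
        elif self.odor_ppm > hi:
            self.warnings.append("odor above detection range")
```

For example, `Observation(frame_id=3, wind_speed=1.2, wind_direction=-90.0, odor_ppm=10.0)` stores a wind direction of 270.0 degrees and records a single warning that the odor reading is below the detection range.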

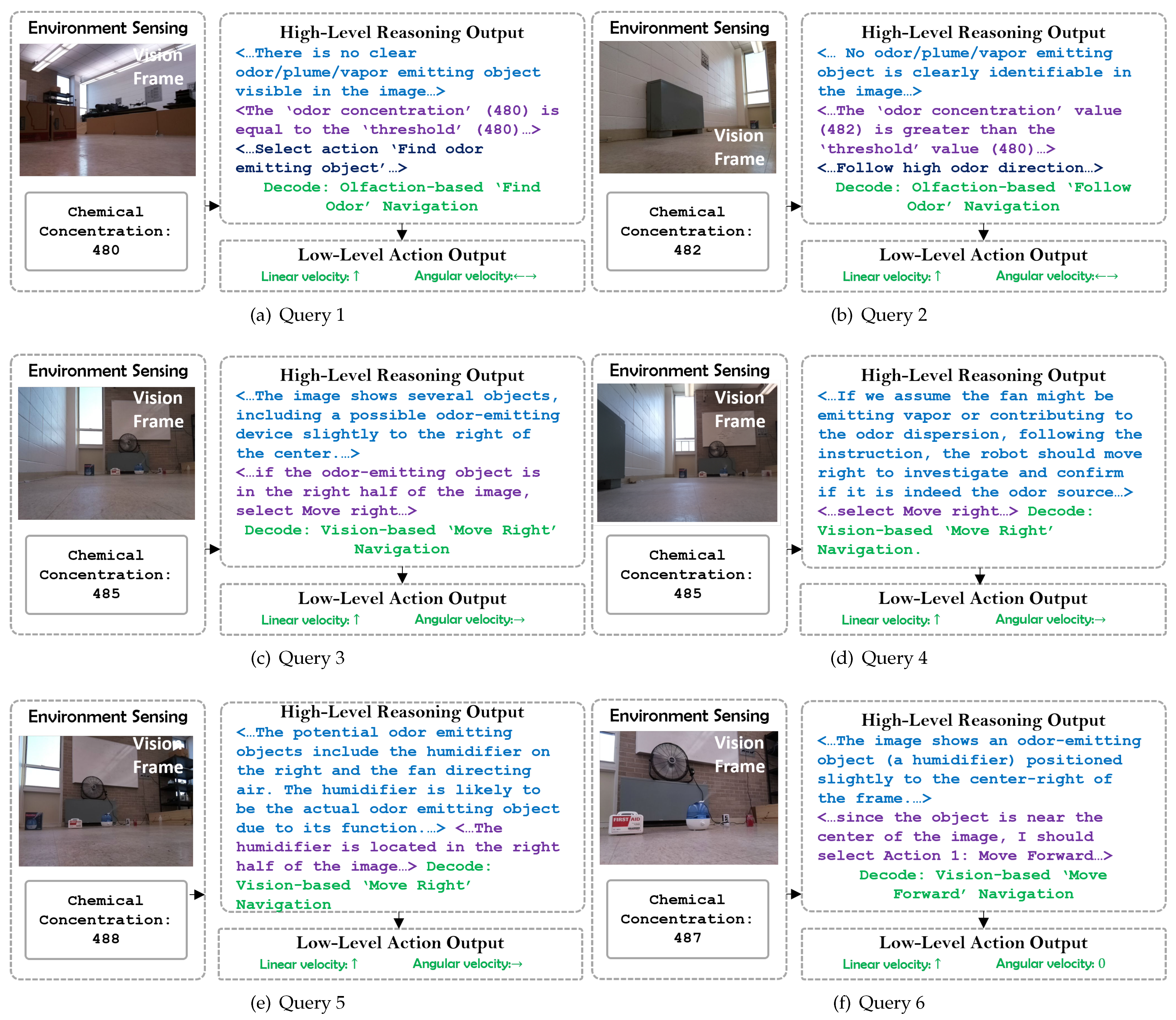

4.4. Sample Run

4.5. Repeated Test Results

5. Limitations and Future Work

6. Conclusions

- Our proposed method is a multi-modal navigation algorithm that integrates olfactory and visual sensors. Unlike single-modal algorithms such as moth-inspired navigation, our approach leverages visual inputs to enhance performance. For instance, when the robot visually identifies the odor source, it can approach it directly, substantially improving the success rate in locating odor sources in both unidirectional and non-unidirectional flow environments. Moth-inspired navigation (the olfaction-only algorithm) relies primarily on wind measurements; it succeeded in 10/16 trials in unidirectional flows (Table 2) but in none of the 16 trials in non-unidirectional flows (Table 3). In contrast, with the help of visual detection, our method performs robustly in both scenarios, succeeding in 16/16 and 12/16 trials, respectively. Additionally, in unidirectional search environments, the proposed method reduces the average search time by 18.1 s compared to the olfaction-only navigation algorithm and by 14.9 s compared to the vision-only algorithm. In non-unidirectional search environments, it shortens the average search time by 5.37 s relative to the vision-only algorithm.

- Furthermore, compared to the rule-based vision and olfaction fusion algorithm (Fusion in Tables 2 and 3), our approach incorporates the reasoning and semantic understanding capabilities of LLMs, which allows decision-making beyond predefined rules. For example, when presented with an electric fan (query 3 in Figure 11), the LLM can infer that the odor source is likely near the fan, an inference unattainable by the rule-based Fusion algorithm, which relies solely on recognizing visible odor plumes. As a result, compared to the Fusion navigation algorithm, the proposed method reduces the average search time by 3.87 s in unidirectional search environments and by 12.49 s in non-unidirectional search environments.
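The search-time reductions quoted in these conclusions are differences between mean search times in Tables 2 and 3. The short Python check below recomputes them from the table values; it introduces no new data, only the arithmetic.

```python
from typing import Dict

# Mean search times (s) copied from Table 2 (unidirectional flow) and Table 3
# (non-unidirectional flow). Olfaction-only has no mean in Table 3 because it
# had no successful trials there.
UNIDIRECTIONAL = {
    "Olfaction-only": 98.46,
    "Vision-only": 95.23,
    "Fusion": 84.2,
    "Proposed LLM-based": 80.33,
}
NON_UNIDIRECTIONAL = {
    "Vision-only": 90.67,
    "Fusion": 97.79,
    "Proposed LLM-based": 85.3,
}


def reductions(table: Dict[str, float], method: str = "Proposed LLM-based") -> Dict[str, float]:
    """Return how many seconds lower `method`'s mean search time is than each baseline."""
    ours = table[method]
    # Round to 0.01 s to strip floating-point noise from the subtraction.
    return {name: round(t - ours, 2) for name, t in table.items() if name != method}


if __name__ == "__main__":
    for label, table in (("Unidirectional", UNIDIRECTIONAL), ("Non-unidirectional", NON_UNIDIRECTIONAL)):
        for baseline, delta in reductions(table).items():
            print(f"{label}: {delta:.2f} s faster than {baseline}")
```

The unidirectional differences against the olfaction-only and vision-only baselines come out as 18.13 s and 14.90 s, which the text reports rounded to one decimal place; the Fusion comparisons give 3.87 s and 12.49 s, and the non-unidirectional vision-only comparison gives 5.37 s.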

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ANN | Artificial neural network |
| CNN | Convolutional neural network |
| OSL | Odor source localization |
| LLM | Large language model |
| LSTM | Long short-term memory |
| Fusion | Vision and olfaction fusion |
| FWER | Family-wise error rate |
| Tukey’s HSD | Tukey’s Honestly Significant Difference |
| VLMs | Vision language models |
| WOL | Web Ontology Language |

References

- Purves, D.; Augustine, G.; Fitzpatrick, D.; Katz, L.; LaMantia, A.; McNamara, J.; Williams, S. The Organization of the Olfactory System. Neuroscience 2001, 337–354.

- Sarafoleanu, C.; Mella, C.; Georgescu, M.; Perederco, C. The importance of the olfactory sense in the human behavior and evolution. J. Med. Life 2009, 2, 196.

- Kowadlo, G.; Russell, R.A. Robot odor localization: A taxonomy and survey. Int. J. Robot. Res. 2008, 27, 869–894.

- Wang, L.; Pang, S.; Noyela, M.; Adkins, K.; Sun, L.; El-Sayed, M. Vision and Olfactory-Based Wildfire Monitoring with Uncrewed Aircraft Systems. In Proceedings of the 2023 20th International Conference on Ubiquitous Robots (UR), Honolulu, HI, USA, 25–28 June 2023; pp. 716–723.

- Burgués, J.; Hernández, V.; Lilienthal, A.J.; Marco, S. Smelling nano aerial vehicle for gas source localization and mapping. Sensors 2019, 19, 478.

- Fu, Z.; Chen, Y.; Ding, Y.; He, D. Pollution source localization based on multi-UAV cooperative communication. IEEE Access 2019, 7, 29304–29312.

- Chen, Z.; Wang, J. Underground odor source localization based on a variation of lower organism search behavior. IEEE Sens. J. 2017, 17, 5963–5970.

- Russell, R.A. Robotic location of underground chemical sources. Robotica 2004, 22, 109–115.

- Wang, L.; Pang, S.; Xu, G. 3-dimensional hydrothermal vent localization based on chemical plume tracing. In Proceedings of the Global Oceans 2020: Singapore–US Gulf Coast, Biloxi, MS, USA, 5–30 October 2020; pp. 1–7.

- Jing, T.; Meng, Q.H.; Ishida, H. Recent progress and trend of robot odor source localization. IEEJ Trans. Electr. Electron. Eng. 2021, 16, 938–953.

- Cardé, R.T.; Mafra-Neto, A. Mechanisms of flight of male moths to pheromone. In Insect Pheromone Research; Springer: Berlin/Heidelberg, Germany, 1997; pp. 275–290.

- López, L.L.; Vouloutsi, V.; Chimeno, A.E.; Marcos, E.; i Badia, S.B.; Mathews, Z.; Verschure, P.F.; Ziyatdinov, A.; i Lluna, A.P. Moth-like chemo-source localization and classification on an indoor autonomous robot. In On Biomimetics; IntechOpen: London, UK, 2011.

- Zhu, H.; Wang, Y.; Du, C.; Zhang, Q.; Wang, W. A novel odor source localization system based on particle filtering and information entropy. Robot. Auton. Syst. 2020, 132, 103619.

- Vergassola, M.; Villermaux, E.; Shraiman, B.I. ‘Infotaxis’ as a strategy for searching without gradients. Nature 2007, 445, 406.

- Luong, D.N.; Kurabayashi, D. Odor Source Localization in Obstacle Regions Using Switching Planning Algorithms with a Switching Framework. Sensors 2023, 23, 1140.

- Jakuba, M.V. Stochastic Mapping for Chemical Plume Source Localization with Application to Autonomous Hydrothermal Vent Discovery. Ph.D. Thesis, Massachusetts Institute of Technology, Cambridge, MA, USA, 2007.

- Hu, H.; Song, S.; Chen, C.P. Plume Tracing via Model-Free Reinforcement Learning Method. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 2515–2527.

- Kim, H.; Park, M.; Kim, C.W.; Shin, D. Source localization for hazardous material release in an outdoor chemical plant via a combination of LSTM-RNN and CFD simulation. Comput. Chem. Eng. 2019, 125, 476–489.

- Frye, M.A.; Duistermars, B.J. Visually mediated odor tracking during flight in Drosophila. JoVE (J. Vis. Exp.) 2009, 28, e1110.

- Huang, D.; Yan, C.; Li, Q.; Peng, X. From Large Language Models to Large Multimodal Models: A Literature Review. Appl. Sci. 2024, 14, 5068.

- Hassan, S.; Wang, L.; Mahmud, K.R. Robotic Odor Source Localization via Vision and Olfaction Fusion Navigation Algorithm. Sensors 2024, 24, 2309.

- Berg, H.C. Motile behavior of bacteria. Phys. Today 2000, 53, 24–29.

- Lockery, S.R. The computational worm: Spatial orientation and its neuronal basis in C. elegans. Curr. Opin. Neurobiol. 2011, 21, 782–790.

- Radvansky, B.A.; Dombeck, D.A. An olfactory virtual reality system for mice. Nat. Commun. 2018, 9, 839.

- Sandini, G.; Lucarini, G.; Varoli, M. Gradient driven self-organizing systems. In Proceedings of the 1993 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS’93), Yokohama, Japan, 26–30 July 1993; Volume 1, pp. 429–432.

- Grasso, F.W.; Consi, T.R.; Mountain, D.C.; Atema, J. Biomimetic robot lobster performs chemo-orientation in turbulence using a pair of spatially separated sensors: Progress and challenges. Robot. Auton. Syst. 2000, 30, 115–131.

- Russell, R.A.; Bab-Hadiashar, A.; Shepherd, R.L.; Wallace, G.G. A comparison of reactive robot chemotaxis algorithms. Robot. Auton. Syst. 2003, 45, 83–97.

- Lilienthal, A.; Duckett, T. Experimental analysis of gas-sensitive Braitenberg vehicles. Adv. Robot. 2004, 18, 817–834.

- Ishida, H.; Nakayama, G.; Nakamoto, T.; Moriizumi, T. Controlling a gas/odor plume-tracking robot based on transient responses of gas sensors. IEEE Sens. J. 2005, 5, 537–545.

- Murlis, J.; Elkinton, J.S.; Carde, R.T. Odor plumes and how insects use them. Annu. Rev. Entomol. 1992, 37, 505–532.

- Vickers, N.J. Mechanisms of animal navigation in odor plumes. Biol. Bull. 2000, 198, 203–212.

- Cardé, R.T.; Willis, M.A. Navigational strategies used by insects to find distant, wind-borne sources of odor. J. Chem. Ecol. 2008, 34, 854–866.

- Nevitt, G.A. Olfactory foraging by Antarctic procellariiform seabirds: Life at high Reynolds numbers. Biol. Bull. 2000, 198, 245–253.

- Wallraff, H.G. Avian olfactory navigation: Its empirical foundation and conceptual state. Anim. Behav. 2004, 67, 189–204.

- Shigaki, S.; Sakurai, T.; Ando, N.; Kurabayashi, D.; Kanzaki, R. Time-varying moth-inspired algorithm for chemical plume tracing in turbulent environment. IEEE Robot. Autom. Lett. 2017, 3, 76–83.

- Shigaki, S.; Shiota, Y.; Kurabayashi, D.; Kanzaki, R. Modeling of the Adaptive Chemical Plume Tracing Algorithm of an Insect Using Fuzzy Inference. IEEE Trans. Fuzzy Syst. 2019, 28, 72–84.

- Jin, W.; Rahbar, F.; Ercolani, C.; Martinoli, A. Towards efficient gas leak detection in built environments: Data-driven plume modeling for gas sensing robots. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; pp. 7749–7755.

- Ojeda, P.; Monroy, J.; Gonzalez-Jimenez, J. Robotic gas source localization with probabilistic mapping and online dispersion simulation. IEEE Trans. Robot. 2024, 40, 3551–3564.

- Rahbar, F.; Marjovi, A.; Kibleur, P.; Martinoli, A. A 3-D bio-inspired odor source localization and its validation in realistic environmental conditions. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 3983–3989.

- Shigaki, S.; Yoshimura, Y.; Kurabayashi, D.; Hosoda, K. Palm-sized quadcopter for three-dimensional chemical plume tracking. IEEE Trans. Instrum. Meas. 2022, 71, 1–12.

- Hutchinson, M.; Liu, C.; Chen, W.H. Information-based search for an atmospheric release using a mobile robot: Algorithm and experiments. IEEE Trans. Control. Syst. Technol. 2018, 27, 2388–2402.

- Rahbar, F.; Marjovi, A.; Martinoli, A. An algorithm for odor source localization based on source term estimation. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 973–979.

- Jiu, H.; Chen, Y.; Deng, W.; Pang, S. Underwater chemical plume tracing based on partially observable Markov decision process. Int. J. Adv. Robot. Syst. 2019, 16, 1729881419831874.

- Luong, D.N.; Tran, H.Q.D.; Kurabayashi, D. Reactive-probabilistic hybrid search method for odour source localization in an obstructed environment. SICE J. Control. Meas. Syst. Integr. 2024, 17, 2374569.

- Pang, S.; Zhu, F. Reactive planning for olfactory-based mobile robots. In Proceedings of the 2009 IEEE/RSJ International Conference on Intelligent Robots and Systems, St. Louis, MO, USA, 11–15 October 2009; pp. 4375–4380.

- Wang, L.; Pang, S. Chemical Plume Tracing using an AUV based on POMDP Source Mapping and A-star Path Planning. In Proceedings of the OCEANS 2019 MTS/IEEE SEATTLE, Seattle, WA, USA, 27–31 October 2019; pp. 1–7.

- Bilgera, C.; Yamamoto, A.; Sawano, M.; Matsukura, H.; Ishida, H. Application of convolutional long short-term memory neural networks to signals collected from a sensor network for autonomous gas source localization in outdoor environments. Sensors 2018, 18, 4484.

- Thrift, W.J.; Cabuslay, A.; Laird, A.B.; Ranjbar, S.; Hochbaum, A.I.; Ragan, R. Surface-enhanced Raman scattering-based odor compass: Locating multiple chemical sources and pathogens. ACS Sens. 2019, 4, 2311–2319.

- Wang, L.; Pang, S. An Implementation of the Adaptive Neuro-Fuzzy Inference System (ANFIS) for Odor Source Localization. In Proceedings of the 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems, Las Vegas, NV, USA, 24 October 2020–24 January 2021.

- Monroy, J.; Ruiz-Sarmiento, J.R.; Moreno, F.A.; Melendez-Fernandez, F.; Galindo, C.; Gonzalez-Jimenez, J. A semantic-based gas source localization with a mobile robot combining vision and chemical sensing. Sensors 2018, 18, 4174.

- Chowdhary, K. Natural language processing for word sense disambiguation and information extraction. arXiv 2020, arXiv:2004.02256.

- Nayebi, A.; Rajalingham, R.; Jazayeri, M.; Yang, G.R. Neural foundations of mental simulation: Future prediction of latent representations on dynamic scenes. Adv. Neural Inf. Process. Syst. 2024, 36, 70548–70561.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30.

- Wei, J.; Wang, X.; Schuurmans, D.; Bosma, M.; Xia, F.; Chi, E.; Le, Q.V.; Zhou, D. Chain-of-thought prompting elicits reasoning in large language models. Adv. Neural Inf. Process. Syst. 2022, 35, 24824–24837.

- Ouyang, L.; Wu, J.; Jiang, X.; Almeida, D.; Wainwright, C.; Mishkin, P.; Zhang, C.; Agarwal, S.; Slama, K.; Ray, A.; et al. Training language models to follow instructions with human feedback. Adv. Neural Inf. Process. Syst. 2022, 35, 27730–27744.

- Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language models are few-shot learners. Adv. Neural Inf. Process. Syst. 2020, 33, 1877–1901.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805.

- Touvron, H.; Lavril, T.; Izacard, G.; Martinet, X.; Lachaux, M.A.; Lacroix, T.; Rozière, B.; Goyal, N.; Hambro, E.; Azhar, F.; et al. LLaMA: Open and efficient foundation language models. arXiv 2023, arXiv:2302.13971.

- Li, C.; Gan, Z.; Yang, Z.; Yang, J.; Li, L.; Wang, L.; Gao, J. Multimodal foundation models: From specialists to general-purpose assistants. Found. Trends® Comput. Graph. Vis. 2024, 16, 1–214.

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning transferable visual models from natural language supervision. In Proceedings of the International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 8748–8763.

- Shi, Y.; Shang, M.; Qi, Z. Intelligent layout generation based on deep generative models: A comprehensive survey. Inf. Fusion 2023, 100, 101940.

- Wang, J.; Wu, Z.; Li, Y.; Jiang, H.; Shu, P.; Shi, E.; Hu, H.; Ma, C.; Liu, Y.; Wang, X.; et al. Large language models for robotics: Opportunities, challenges, and perspectives. arXiv 2024, arXiv:2401.04334.

- Dorbala, V.S.; Sigurdsson, G.; Piramuthu, R.; Thomason, J.; Sukhatme, G.S. CLIP-Nav: Using CLIP for zero-shot vision-and-language navigation. arXiv 2022, arXiv:2211.16649.

- Chen, P.; Sun, X.; Zhi, H.; Zeng, R.; Li, T.H.; Liu, G.; Tan, M.; Gan, C. A2Nav: Action-Aware Zero-Shot Robot Navigation by Exploiting Vision-and-Language Ability of Foundation Models. arXiv 2023, arXiv:2308.07997.

- Zhou, G.; Hong, Y.; Wu, Q. NavGPT: Explicit reasoning in vision-and-language navigation with large language models. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; Volume 38, pp. 7641–7649.

- Schumann, R.; Zhu, W.; Feng, W.; Fu, T.J.; Riezler, S.; Wang, W.Y. VELMA: Verbalization embodiment of LLM agents for vision and language navigation in street view. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; Volume 38, pp. 18924–18933.

- Shah, D.; Osinski, B.; Ichter, B.; Levine, S. Robotic Navigation with Large Pre-Trained Models of Language. arXiv 2022, arXiv:2207.04429v2.

- Yu, B.; Kasaei, H.; Cao, M. L3MVN: Leveraging large language models for visual target navigation. In Proceedings of the 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Detroit, MI, USA, 1–5 October 2023; pp. 3554–3560.

- Zhou, K.; Zheng, K.; Pryor, C.; Shen, Y.; Jin, H.; Getoor, L.; Wang, X.E. ESC: Exploration with soft commonsense constraints for zero-shot object navigation. In Proceedings of the International Conference on Machine Learning, Honolulu, HI, USA, 23–29 July 2023; pp. 42829–42842.

- Jatavallabhula, K.M.; Kuwajerwala, A.; Gu, Q.; Omama, M.; Chen, T.; Maalouf, A.; Li, S.; Iyer, G.; Saryazdi, S.; Keetha, N.; et al. ConceptFusion: Open-set multimodal 3D mapping. arXiv 2023, arXiv:2302.07241.

- Brohan, A.; Brown, N.; Carbajal, J.; Chebotar, Y.; Dabis, J.; Finn, C.; Gopalakrishnan, K.; Hausman, K.; Herzog, A.; Hsu, J.; et al. RT-1: Robotics transformer for real-world control at scale. arXiv 2022, arXiv:2212.06817.

- Farrell, J.A.; Pang, S.; Li, W. Chemical plume tracing via an autonomous underwater vehicle. IEEE J. Ocean. Eng. 2005, 30, 428–442.

- Ishida, H.; Moriizumi, T. Machine olfaction for mobile robots. In Handbook of Machine Olfaction: Electronic Nose Technology; Wiley-VCH: Weinheim, Germany, 2002; pp. 399–417.

- Hassan, S.; Wang, L.; Mahmud, K.R. Multi-Modal Robotic Platform Development for Odor Source Localization. In Proceedings of the 2023 Seventh IEEE International Conference on Robotic Computing (IRC), Laguna Hills, CA, USA, 11–13 December 2023; pp. 59–62.

| Symbol | Parameter |
|---|---|
| p | Visual observation |
| u | Wind speed |
|  | Wind direction in body frame |
|  | Odor concentration |

Table 2. Repeated test results in unidirectional flow environments.

| Navigation Algorithm | Search Time Mean (s) ↓ | Search Time Std. Dev. (s) ↓ | Traveled Distance Mean (m) ↓ | Traveled Distance Std. Dev. (m) ↓ | Success Rate ↑ |
|---|---|---|---|---|---|
| Olfaction-only | 98.46 | 11.87 | 6.86 | 0.35 | 10/16 |
| Vision-only | 95.23 | 3.91 | 6.68 | 0.27 | 8/16 |
| Fusion | 84.2 | 12.42 | 6.12 | 0.52 | 12/16 |
| Proposed LLM-based | 80.33 | 4.99 | 6.14 | 0.34 | 16/16 |

Table 3. Repeated test results in non-unidirectional flow environments.

| Navigation Algorithm | Search Time Mean (s) ↓ | Search Time Std. Dev. (s) ↓ | Traveled Distance Mean (m) ↓ | Traveled Distance Std. Dev. (m) ↓ | Success Rate ↑ |
|---|---|---|---|---|---|
| Olfaction-only | - | - | - | - | 0/16 |
| Vision-only | 90.67 | - | 6.69 | - | 2/16 |
| Fusion | 97.79 | 4.69 | 7.08 | 0.53 | 8/16 |
| Proposed LLM-based | 85.3 | 5.03 | 6.37 | 0.31 | 12/16 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hassan, S.; Wang, L.; Mahmud, K.R. Integrating Vision and Olfaction via Multi-Modal LLM for Robotic Odor Source Localization. Sensors 2024, 24, 7875. https://doi.org/10.3390/s24247875


Chicago/Turabian Style: Hassan, Sunzid, Lingxiao Wang, and Khan Raqib Mahmud. 2024. "Integrating Vision and Olfaction via Multi-Modal LLM for Robotic Odor Source Localization" Sensors 24, no. 24: 7875. https://doi.org/10.3390/s24247875
