An Improved Generative Adversarial Network-Based and U-Shaped Transformer Method for Glass Curtain Crack Deblurring Using UAVs

Abstract

1. Introduction

1.1. Image Deblurring

1.2. GAN

1.3. U-Net

1.4. Transformer

2. Methodology

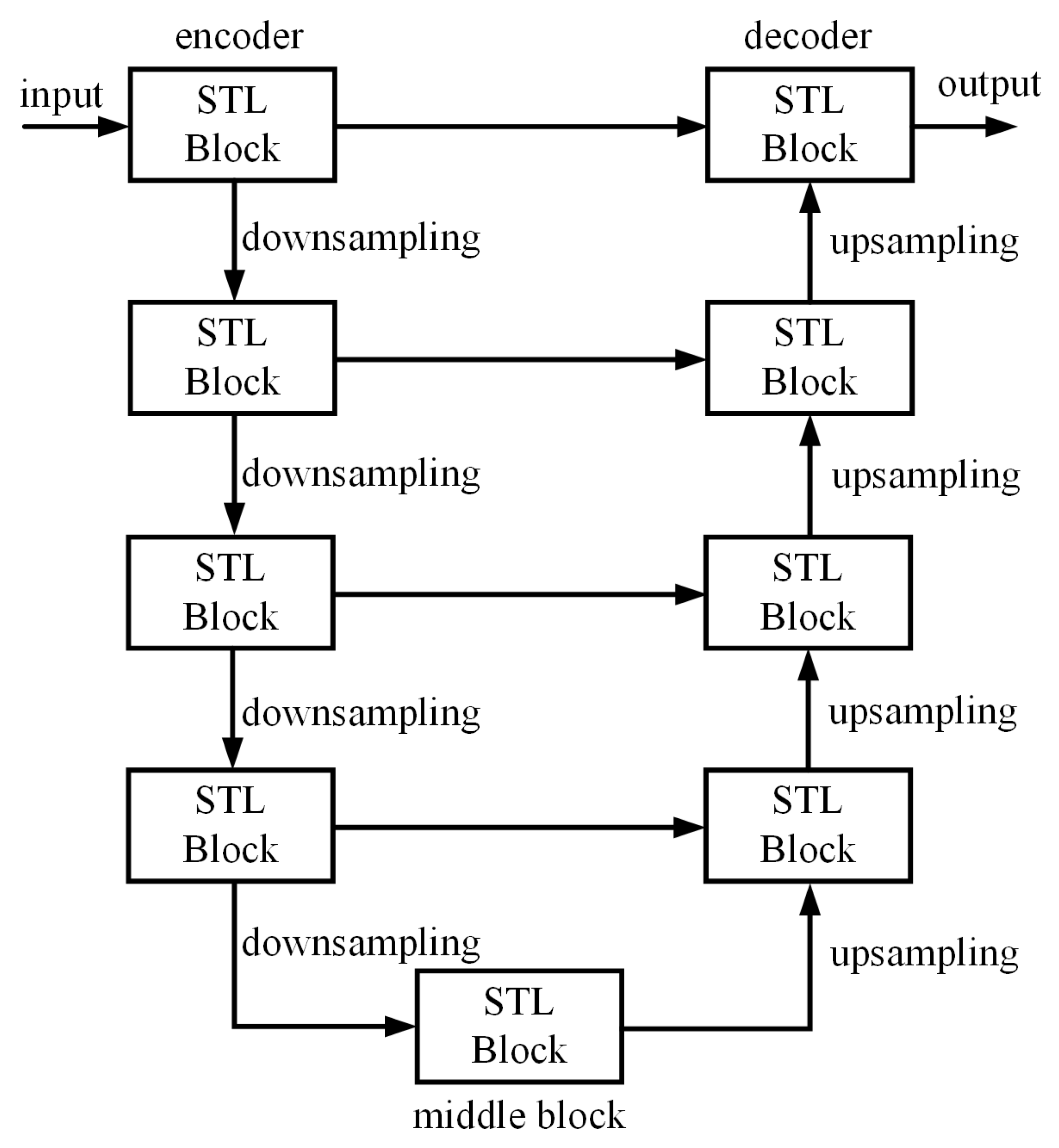

2.1. GlassCurtainCrackDeblurNet

2.2. GlassCurtainCrackDeblurImage Generator

2.3. Swin Transformer Layer Block

3. Experiments

3.1. Datasets

3.2. Evaluation Metrics
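The comparison tables report MAE, PSNR (dB), and SSIM. As a minimal sketch of the first two, the snippet below computes MAE and PSNR for a pair of images. It assumes pixel intensities are normalized to [0, 1] (an assumption, though consistent with the magnitude of the reported MAE values) and that each image is given as a flattened list. The function names `mae` and `psnr` and the toy pixel values are illustrative only and are not taken from the paper. SSIM is omitted because it needs windowed local statistics that are better taken from an image-processing library.

```python
import math
from typing import Sequence


def mae(restored: Sequence[float], sharp: Sequence[float]) -> float:
    """Mean absolute error between two equally sized, flattened images."""
    if len(restored) != len(sharp) or len(sharp) == 0:
        raise ValueError("images must be non-empty and the same size")
    return sum(abs(r - s) for r, s in zip(restored, sharp)) / len(sharp)


def psnr(restored: Sequence[float], sharp: Sequence[float],
         max_value: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    if len(restored) != len(sharp) or len(sharp) == 0:
        raise ValueError("images must be non-empty and the same size")
    mse = sum((r - s) ** 2 for r, s in zip(restored, sharp)) / len(sharp)
    if mse == 0.0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / mse)


# Toy 2x2 "images", flattened row by row (hypothetical values in [0, 1]).
sharp = [0.0, 0.5, 1.0, 0.25]
restored = [0.1, 0.5, 0.9, 0.25]

print(f"MAE  = {mae(restored, sharp):.4f}")
print(f"PSNR = {psnr(restored, sharp):.2f} dB")
```

Lower MAE and higher PSNR both indicate a restored image closer to the sharp reference. With 8-bit images, `max_value` would be 255 instead of 1.0.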

3.3. Training Details

4. Results and Discussion

4.1. Results of Comparative Experiments

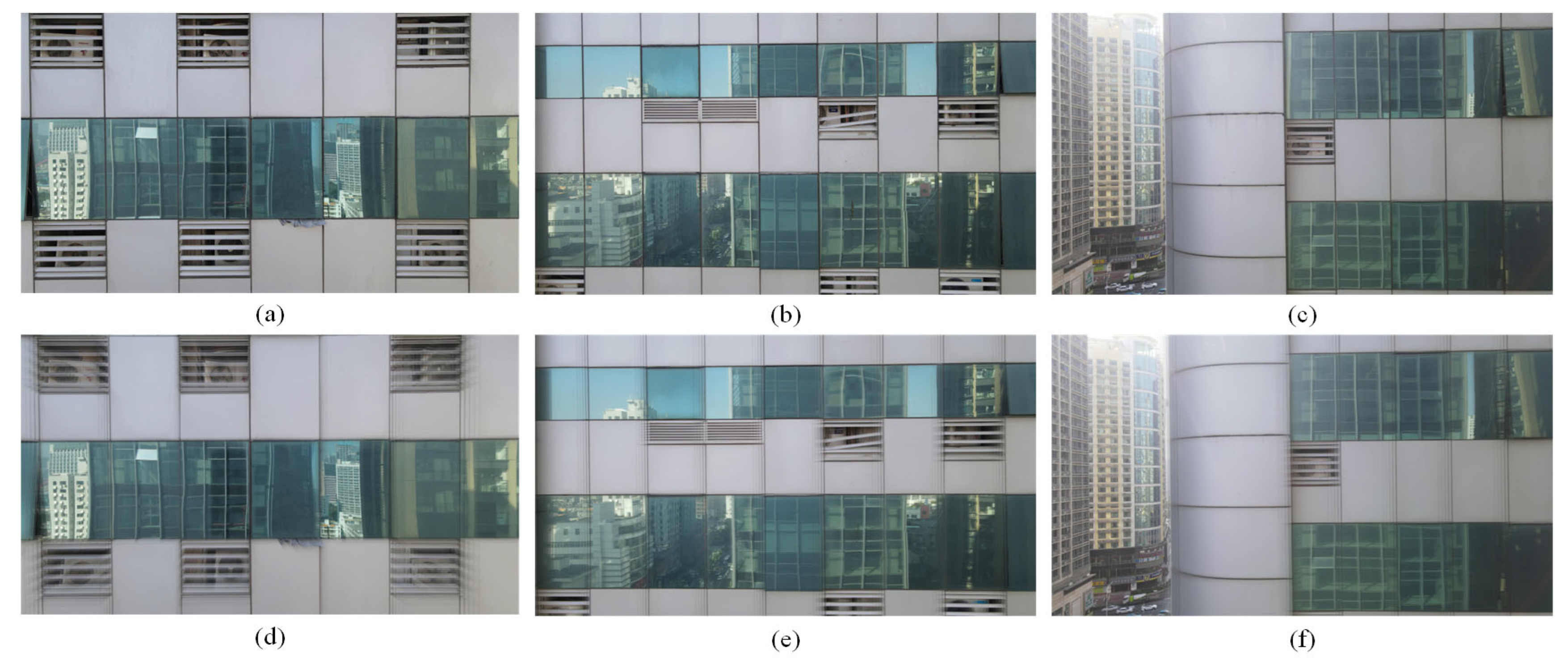

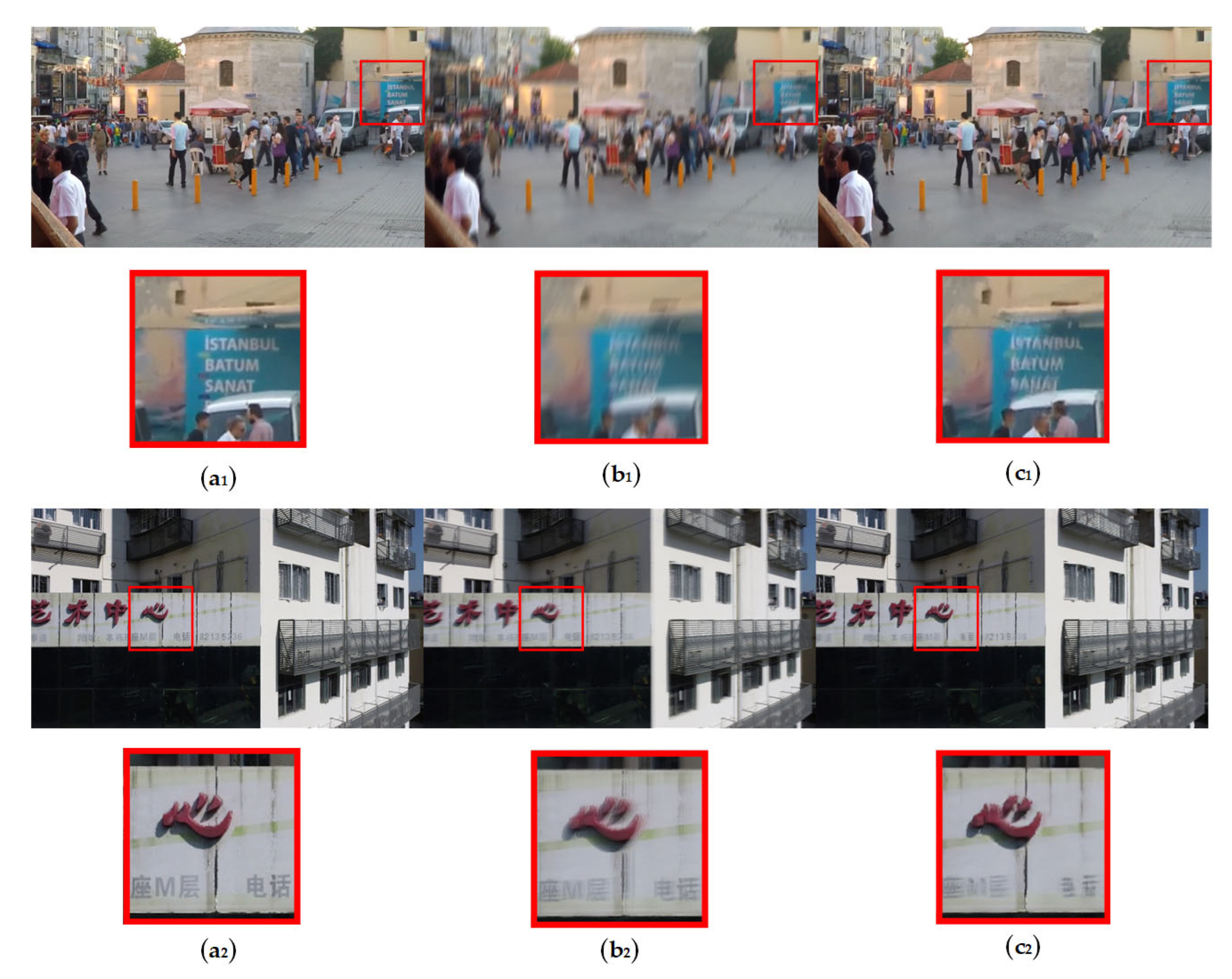

4.1.1. Qualitative Evaluation

4.1.2. Quantitative Evaluation

4.2. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Kejriwal, L.; Singh, I. A Hybrid Filtering Approach of Digital Video Stabilization for UAV Using Kalman and Low Pass Filter. Procedia Comput. Sci. 2016, 93, 359–366.

2. Zhan, Z.; Yang, X.; Li, Y.; Pang, C. Video deblurring via motion compensation and adaptive information fusion. Neurocomputing 2019, 341, 88–98.

3. Liu, Y.; Wang, J.; Qiu, T.; Qi, W. An Adaptive Deblurring Vehicle Detection Method for High-Speed Moving Drones: Resistance to Shake. Entropy 2021, 23, 1358.

4. Sun, F.; Zhao, T.; Zhu, B.; Jia, X.; Wang, F. Deblurring transformer tracking with conditional cross-attention. Multimed. Syst. 2022, 29, 1131–1144.

5. Jia, J. Mathematical models and practical solvers for uniform motion deblurring. In Motion Deblurring; Cambridge University Press: Cambridge, UK, 2014; pp. 1–30.

6. Xu, Y.; Wang, L.; Hu, X.; Peng, S. Single-Image Blind Deblurring for Non-uniform Camera-Shake Blur. In Proceedings of the Asian Conference on Computer Vision, Daejeon, Republic of Korea, 5–9 November 2012; pp. 336–348.

7. Schmidt, U.; Rother, C.; Nowozin, S.; Jancsary, J.; Roth, S. Discriminative Non-blind Deblurring. In Proceedings of the 2013 IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 604–611.

8. Riegler, G.; Schulter, S.; Ruther, M.; Bischof, H. Conditioned Regression Models for Non-blind Single Image Super-Resolution. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 522–530.

9. Das, R.; Bajpai, A.; Venkatesan, S.M. Fast Non-blind Image Deblurring with Sparse Priors. In Proceedings of the International Conference on Computer Vision and Image Processing, Roorkee, India, 26–28 February 2016; pp. 629–641.

10. Rangaswamy, M.A.D. Blind and Non-Blind Deblurring using Residual Whiteness Measures. Int. J. Res. Appl. Sci. Eng. Technol. 2017, 5, 1004–1009.

11. Wang, R.; Ma, G.; Qin, Q.; Shi, Q.; Huang, J. Blind UAV Images Deblurring Based on Discriminative Networks. Sensors 2018, 18, 2874.

12. Zhang, H.; Yang, J.; Zhang, Y.; Huang, T.S. Sparse representation based blind image deblurring. In Proceedings of the 2011 IEEE International Conference on Multimedia and Expo, Barcelona, Spain, 11–15 July 2011; pp. 1–6.

13. Liu, G.; Chang, S.; Ma, Y. Blind Image Deblurring Using Spectral Properties of Convolution Operators. IEEE Trans. Image Process. 2014, 23, 5047–5056.

14. Leclaire, A.; Moisan, L. No-Reference Image Quality Assessment and Blind Deblurring with Sharpness Metrics Exploiting Fourier Phase Information. J. Math. Imaging Vis. 2015, 52, 145–172.

15. Pan, J.; Sun, D.; Pfister, H.; Yang, M. Blind Image Deblurring Using Dark Channel Prior. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 1628–1636.

16. Yan, Y.; Ren, W.; Guo, Y.; Wang, R.; Cao, X. Image Deblurring via Extreme Channels Prior. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6978–6986.

17. Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.C.; Bengio, Y. Generative adversarial networks. Commun. ACM 2020, 63, 139–144.

18. Kupyn, O.; Budzan, V.; Mykhailych, M.; Mishkin, D.; Matas, J. DeblurGAN: Blind Motion Deblurring Using Conditional Adversarial Networks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8183–8192.

19. Kupyn, O.; Martyniuk, T.; Wu, J.; Wang, Z. DeblurGAN-v2: Deblurring (Orders-of-Magnitude) Faster and Better. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 8877–8886.

20. Ramakrishnan, S.; Pachori, S.; Gangopadhyay, A.; Raman, S. Deep Generative Filter for Motion Deblurring. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Venice, Italy, 22–29 October 2017; pp. 2993–3000.

21. Chen, J.; Chen, J.; Chao, H.; Yang, M. Image Blind Denoising with Generative Adversarial Network Based Noise Modeling. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3155–3164.

22. Zhang, K.; Luo, W.; Zhong, Y.; Ma, L.; Liu, W.; Li, H. Adversarial Spatio-Temporal Learning for Video Deblurring. IEEE Trans. Image Process. 2018, 28, 291–301.

23. Zhang, K.; Luo, W.; Zhong, Y.; Lin, M.; Stenger, B.; Liu, W.; Li, H. Deblurring by Realistic Blurring. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 2734–2743.

24. Nah, S.; Kim, T.H.; Lee, K.M. Deep Multi-scale Convolutional Neural Network for Dynamic Scene Deblurring. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 257–265.

25. Liu, Y.; Yeoh, J.K.W.; Chua, D.K.H. Deep Learning–Based Enhancement of Motion Blurred UAV Concrete Crack Images. J. Comput. Civ. Eng. 2020, 34, 04020028.

26. Lee, J.; Gwon, G.; Kim, I.; Jung, H. A Motion Deblurring Network for Enhancing UAV Image Quality in Bridge Inspection. Drones 2023, 7, 657.

27. Sharif, S.M.A.; Naqvi, R.A.; Ali, F.; Biswas, M. DarkDeblur: Learning single-shot image deblurring in low-light condition. Expert Syst. Appl. 2023, 222, 119739.

28. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015.

29. Hu, T.; Sun, W.; Liang, M.; Shao, T. Algorithm for raw silk stem and defect extraction based on multi-scale fusion and attention mechanism. China Meas. Test 2023, 8, 1–9.

30. Mao, X.; Shen, C.; Yang, Y. Image Restoration Using Very Deep Convolutional Encoder-Decoder Networks with Symmetric Skip Connections. In Proceedings of the Advances in Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; Volume 29, pp. 2802–2810.

31. Liu, P.; Zhang, H.; Zhang, K.; Lin, L.; Zuo, W. Multi-level Wavelet-CNN for Image Restoration. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; pp. 886–88609.

32. Chen, L.; Lu, X.; Zhang, J.; Chu, X.; Chen, C. HINet: Half Instance Normalization Network for Image Restoration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 182–192.

33. Wang, Z.; Cun, X.; Bao, J.; Liu, J. Uformer: A General U-Shaped Transformer for Image Restoration. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 17662–17672.

34. Chen, L.; Chu, X.; Zhang, X.; Sun, J. Simple Baselines for Image Restoration. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022.

35. Zhang, Y.; Zhang, L. A generative adversarial network approach for removing motion blur in the automatic detection of pavement cracks. Comput.-Aided Civ. Infrastruct. Eng. 2024, 39, 3412–3434.

36. Vaswani, A.; Shazeer, N.M.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is All you Need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Volume 30.

37. Tang, X.; Gao, R. Research on voice control of wheeled mobile robot combined with CTC and Transformer. China Meas. Test 2024, 50, 117–123.

38. Meng, W.; Yu, B.; Bai, L.; Xu, J.; Gu, J.; Guo, F. STGCN-Transformer-based short-term electricity net load forecasting. China Meas. Test 2024, 92, 102864.

39. Wang, X.; Bu, C.; Shi, Z. Face anti-spoofing based on improved SwinTransformer. China Meas. Test 2024, 3, 1–10.

40. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16 × 16 Words: Transformers for Image Recognition at Scale. arXiv 2020, arXiv:2010.11929.

41. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer using Shifted Windows. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 9992–10002.

42. Liang, J.; Cao, J.; Sun, G.; Zhang, K.; Gool, L.V.; Timofte, R. SwinIR: Image Restoration Using Swin Transformer. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision Workshops (ICCVW), Montreal, QC, Canada, 10–17 October 2021; pp. 1833–1844.

43. Zamir, S.W.; Arora, A.; Khan, S.; Hayat, M.; Khan, F.S.; Yang, M. Restormer: Efficient Transformer for High-Resolution Image Restoration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 5718–5729.

44. Radford, A.; Metz, L.; Chintala, S. Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks. arXiv 2015, arXiv:1511.06434.

45. Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. arXiv 2015, arXiv:1502.03167.

46. Kong, L.; Dong, J.; Li, M.; Ge, J.; Pan, J. Efficient Frequency Domain-based Transformers for High-Quality Image Deblurring. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 5886–5895.

47. Isola, P.; Zhu, J.; Zhou, T.; Efros, A.A. Image-to-Image Translation with Conditional Adversarial Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5967–5976.

48. Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. PyTorch: An Imperative Style, High-Performance Deep Learning Library. Adv. Neural Inf. Process. Syst. 2019, 32, 8026–8037.

49. Fatica, M. CUDA toolkit and libraries. In Proceedings of the 2008 IEEE Hot Chips 20 Symposium (HCS), Stanford, CA, USA, 24–26 August 2008; pp. 1–22.

50. Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980.

51. Loshchilov, I.; Hutter, F. SGDR: Stochastic Gradient Descent with Warm Restarts. arXiv 2016, arXiv:1608.03983.

| Key Characteristics | Number |
|---|---|
| Without cracks | 2899 |
| Simple cracks | 828 |
| Complex cracks | 414 |
| Single-directional blur | 3106 |
| Multi-directional blur | 1035 |

| Method | MAE | PSNR (dB) | SSIM | Time (s) |
|---|---|---|---|---|
| DeblurGANv2 | 0.0401 | 25.51 | 0.80 | 0.12 |
| Method [35] | 0.0384 | 27.85 | 0.85 | 1.46 |
| Restormer | 0.0215 | 28.94 | 0.88 | 1.97 |
| GlassCurtainCrackDeblurNet (ours) | 0.0198 | 29.76 | 0.89 | 0.93 |

| Method | MAE | PSNR (dB) | SSIM | Time (s) |
|---|---|---|---|---|
| DeblurGANv2 | 0.0521 | 25.36 | 0.81 | 0.71 |
| Method [35] | 0.0352 | 27.97 | 0.84 | 1.49 |
| Restormer | 0.0207 | 28.66 | 0.87 | 18.32 |
| GlassCurtainCrackDeblurNet (ours) | 0.0161 | 30.40 | 0.91 | 0.92 |
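To make the gaps in the two comparison tables above easier to read, the following sketch derives, for each baseline, the PSNR gain of GlassCurtainCrackDeblurNet and the ratio of the baseline's inference time to its own. The PSNR and time values are copied from the tables. The labels "first" and "second", the dictionary layout, and the helper `gaps_to_ours` are illustrative assumptions and not part of the paper.

```python
from typing import Dict, Tuple

# (PSNR in dB, inference time in s), copied from the two comparison tables.
first_table: Dict[str, Tuple[float, float]] = {
    "DeblurGANv2": (25.51, 0.12),
    "Method [35]": (27.85, 1.46),
    "Restormer": (28.94, 1.97),
    "GlassCurtainCrackDeblurNet": (29.76, 0.93),
}
second_table: Dict[str, Tuple[float, float]] = {
    "DeblurGANv2": (25.36, 0.71),
    "Method [35]": (27.97, 1.49),
    "Restormer": (28.66, 18.32),
    "GlassCurtainCrackDeblurNet": (30.40, 0.92),
}

OURS = "GlassCurtainCrackDeblurNet"


def gaps_to_ours(
    table: Dict[str, Tuple[float, float]],
) -> Dict[str, Tuple[float, float]]:
    """Map each baseline to (PSNR gain of ours in dB, baseline time / our time)."""
    ours_psnr, ours_time = table[OURS]
    return {
        name: (ours_psnr - psnr, time / ours_time)
        for name, (psnr, time) in table.items()
        if name != OURS
    }


for label, table in (("first", first_table), ("second", second_table)):
    for name, (gain, ratio) in gaps_to_ours(table).items():
        print(f"{label} table, {name}: {gain:+.2f} dB PSNR, "
              f"time ratio {ratio:.2f}x")
```

A time ratio below 1 means the baseline is faster than GlassCurtainCrackDeblurNet; this is the case for DeblurGANv2 in both tables, while the PSNR gain is positive against every baseline.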

| Method | Image Refinement | Structural Awareness | Instant Inference Ability |
|---|---|---|---|
| DeblurGANv2 | √ | | |
| Method [35] | √ | | |
| Restormer | √ | | |
| GlassCurtainCrackDeblurNet (ours) | √ | √ | √ |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Huang, J.; Liu, G. An Improved Generative Adversarial Network-Based and U-Shaped Transformer Method for Glass Curtain Crack Deblurring Using UAVs. Sensors 2024, 24, 7713. https://doi.org/10.3390/s24237713
