1. Introduction

Blind face restoration (BFR) aims to restore a high-quality (HQ) face image from a low-quality (LQ) image that has been degraded by unknown and complex factors, such as downsampling, blur, noise, and compression artifacts. BFR is a highly ill-posed problem: because the degradation is unknown, a given LQ image admits multiple plausible HQ solutions rather than a single one. Since even subtle differences are noticeable in facial images, detailed information is essential for accurate restoration. HQ reference images of the same individual make it possible to reach a level of quality that is difficult to attain with BFR methods that do not use reference images. In this context, reference-based blind face restoration (RefBFR) has gained significant attention for its ability to leverage additional reference images to improve accuracy in practical scenarios. As a result, it can be applied to various tasks, including face recognition [1, 2], face detection [3, 4, 5] and age estimation [6, 7].

Recently, several deep-learning-based [14] RefBFR methods [8, 9, 10, 11, 12, 13] have been proposed. Among them, PGDiff [13] has demonstrated outstanding performance in RefBFR using a training-free guided diffusion model [15]. It guides an unconditional diffusion model pre-trained for face image generation by incorporating the gradients of its losses during the reverse diffusion process. Its loss function combines multiple distances, each representing a desired attribute taken from an additional image. These images include the coarsely restored image, generated using an external restorer such as CodeFormer [16], and the reference image, processed through the ArcFace network [1]. However, the guidance technique of PGDiff [13] may not be optimal for RefBFR. Its gradients draw low-level information solely from the coarsely restored image and high-level information exclusively from the reference image. As a result, this approach fails to capture the crucial low-level details of the reference image and the high-level features of the coarsely restored image. Moreover, guidance obtained by merely summing the gradients of multiple loss functions often yields sub-optimal results, because these gradients may be incompatible and conflict with one another.
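To make the notion of incompatible gradients concrete, the following minimal sketch checks whether two gradients conflict, using the criterion popularized by PCGrad [17] (a negative inner product). The function names and the toy vectors `g_low` and `g_high` are illustrative assumptions and are not taken from PGDiff or from this paper.

```python
import numpy as np

def cosine_similarity(g_a, g_b):
    """Cosine of the angle between two flattened gradients."""
    g_a, g_b = np.ravel(g_a), np.ravel(g_b)
    denom = np.linalg.norm(g_a) * np.linalg.norm(g_b)
    return float(g_a @ g_b / denom) if denom > 0 else 0.0

def is_conflicting(g_a, g_b):
    """Two gradients conflict when their inner product is negative."""
    return float(np.ravel(g_a) @ np.ravel(g_b)) < 0.0

# Hypothetical toy gradients of two losses with respect to the same variable.
g_low = np.array([1.0, 0.0])
g_high = np.array([-0.8, 0.6])

# Naive guidance: a plain sum. The component along g_low shrinks
# from 1.0 to 0.2 because g_high partly points the opposite way.
g_sum = g_low + g_high
```

In this toy case the summed gradient still moves along `g_low`, but most of that progress is cancelled, which is the kind of interference the gradient adjustment is meant to reduce.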

To address these problems, we propose a novel gradient adjustment method for RefBFR called Pivot Direction Gradient guidance (PDGrad) within a guided diffusion framework. Inspired by PCGrad [17], the essence of our method is to reduce gradient interference by directly modifying the conflicting gradients of the losses. To this end, we first define the loss function based on both low-level and high-level features. Similar to PGDiff [13], we utilize external information: the coarsely restored image, obtained using a pre-trained restoration method such as CodeFormer [16], and the reference image. However, unlike PGDiff, we utilize both the coarsely restored image and the reference image to compute the loss at each level, because these two images capture complementary characteristics of face images.

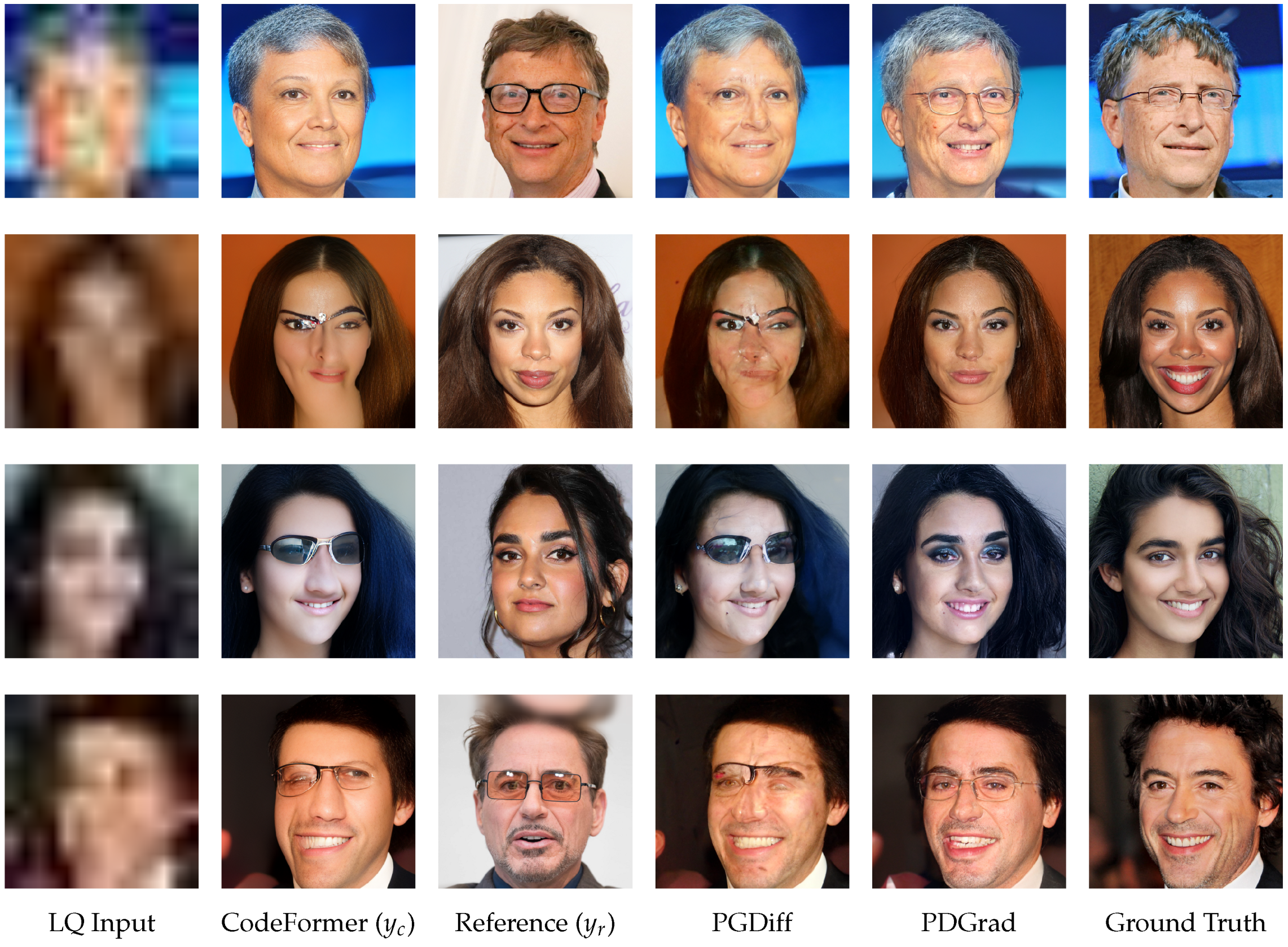

Figure 1 illustrates the complementary properties of the coarsely restored image and the reference image. Generally, the coarsely restored image is well aligned with the LQ input, which makes it easy to compare with the prediction in terms of low-level information such as edges, color and shape. However, certain areas of the coarsely restored image are not restored effectively. In contrast, the reference image provides more reliable high-level information, such as identity, and is partially aligned with the input, which helps to compensate for low-level details in regions where the coarsely restored image is significantly degraded. Based on this observation, our approach leverages both images efficiently and comprehensively, integrating detailed and contextual information from both the coarsely restored image and the reference image.

In this situation, simply summing the gradients of the losses at each level can lead to conflicting gradients. To address this issue, we establish a proper pivot gradient for the loss at each feature level and align the other gradients to this pivot when conflicts arise. This approach allows us to fully harness the distinct advantages of both the coarsely restored image and the reference image. Specifically, for the loss using low-level features, the gradient of the loss computed with the coarsely restored image is prioritized as the pivot, and the gradient of the loss computed with the reference image is modified by projecting it onto the plane orthogonal to the pivot gradient when a conflict arises. Conversely, for the loss using high-level features, the gradient of the loss computed with the reference image is emphasized as the pivot, and the gradient of the loss computed with the coarsely restored image is projected in the same manner relative to the reference-image gradient, which avoids conflict while retaining the compatible part of its information. Additionally, if the magnitude of the adjusted gradient exceeds that of the pivot gradient, it is adaptively scaled according to the ratio between the two, placing greater emphasis on the pivot. As exemplified in Figure 1, the proposed PDGrad outperforms previous methods by preserving the properties of the prioritized image at each feature level while selectively extracting those properties of the other image that align with the prioritized image.
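The pivot-based adjustment described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names `align_to_pivot` and `combine` are hypothetical, a conflict is assumed to mean a negative inner product (as in PCGrad [17]), the rescaling factor is assumed to be the ratio of the pivot norm to the adjusted norm, and the rescaling is assumed to apply whether or not a conflict occurred.

```python
import numpy as np

def align_to_pivot(g_pivot, g_other, eps=1e-12):
    """Adjust g_other so that it does not oppose g_pivot.

    Assumption 1: conflict means a negative inner product.
    Assumption 2: when the adjusted gradient is larger than the pivot,
    it is rescaled to the pivot's norm (ratio ||g_pivot|| / ||g_other||).
    """
    g_p = np.ravel(g_pivot).astype(float)
    g_o = np.ravel(g_other).astype(float)

    dot = g_o @ g_p
    if dot < 0.0:
        # Remove the component of g_other that points against the pivot,
        # i.e. project onto the plane orthogonal to the pivot gradient.
        g_o = g_o - dot / (g_p @ g_p + eps) * g_p

    norm_p = np.linalg.norm(g_p)
    norm_o = np.linalg.norm(g_o)
    if norm_o > norm_p:
        # Keep the non-pivot gradient from dominating the pivot.
        g_o = g_o * (norm_p / (norm_o + eps))

    return g_o.reshape(np.shape(g_other))

def combine(g_pivot, g_other):
    """Guidance direction for one feature level: pivot plus aligned gradient."""
    return np.asarray(g_pivot, dtype=float) + align_to_pivot(g_pivot, g_other)

# Toy usage: the non-pivot gradient conflicts with the pivot and is larger.
g_pivot = np.array([1.0, 0.0])
g_other = np.array([-2.0, 3.0])
g_adjusted = align_to_pivot(g_pivot, g_other)
g_level = combine(g_pivot, g_other)
```

Under these assumptions, the same routine would be called once per feature level with the roles swapped: the gradient from the coarsely restored image as the pivot for the low-level loss, and the gradient from the reference image as the pivot for the high-level loss.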

In summary, the proposed method provides the following key contributions:

We propose a novel gradient adjustment method called PDGrad for RefBFR within a training-free guided diffusion framework.

The loss function of the proposed method consists of two components: low-level and high-level losses, where both the coarsely restored image and the reference image are fully incorporated.

Our proposed PDGrad establishes a proper pivot gradient for the loss at each level and adjusts other gradients to align with this pivot by modifying their direction and magnitude, thereby mitigating gradient interference.

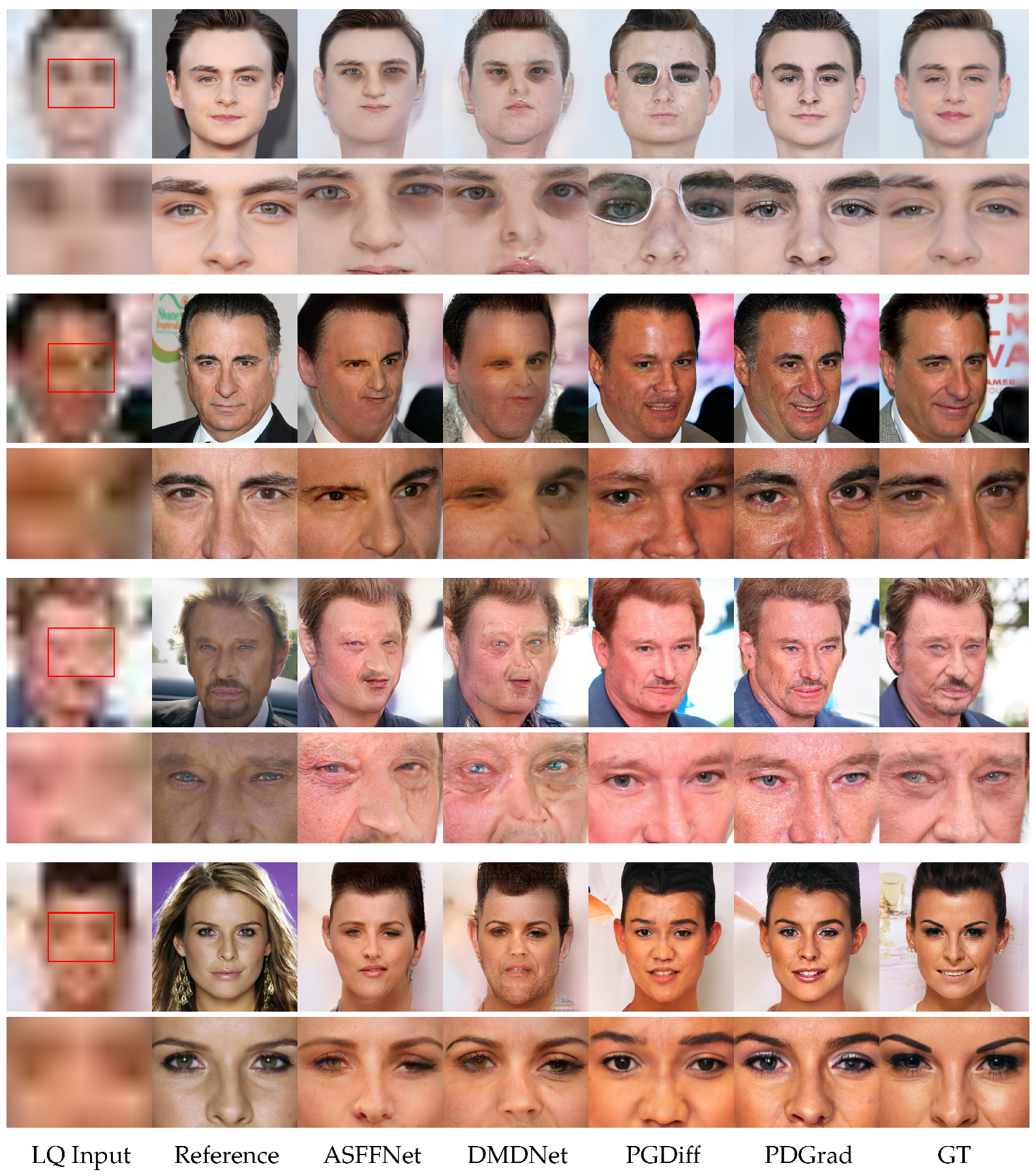

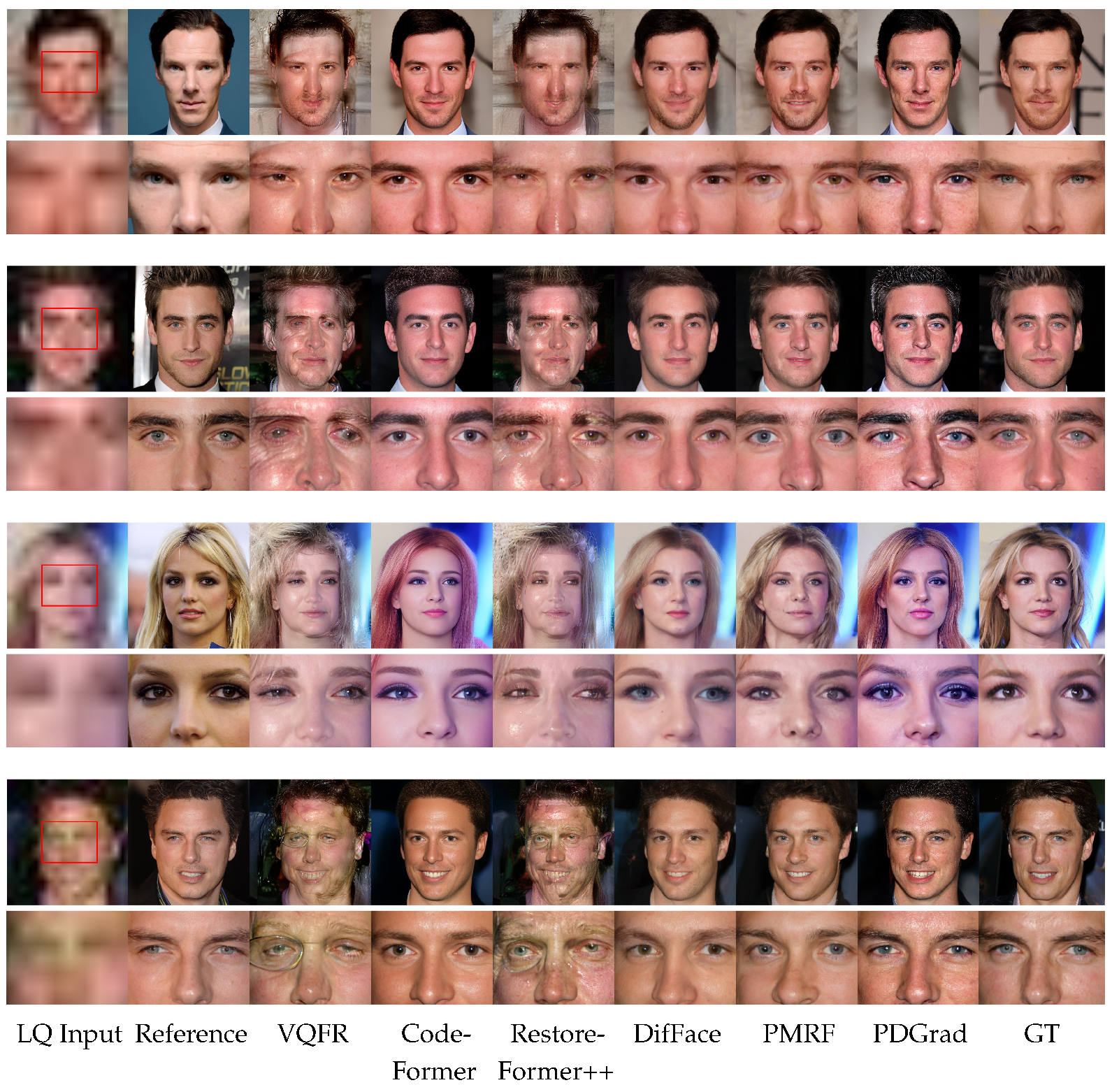

Extensive comparisons show the superiority of our method against previous state-of-the-art RefBFR methods.

The remainder of this paper is organized as follows. Section 2 discusses previous works on blind face restoration. Section 3 provides a detailed explanation of the proposed PDGrad. In Section 4, we compare and analyze the experimental outcomes of several methods, including our proposed approach. Finally, in Section 5, we present the conclusions.

2. Related Works

Most recent BFR studies have focused on utilizing face-specific prior information, such as geometric facial priors, reference priors and generative facial priors. Note that our proposed method can be viewed as a study that exploits both reference priors and generative facial priors.

Unlike natural images, faces share a common structural shape and common components (e.g., eyes, nose, mouth and hair). Inspired by this, several approaches have been proposed to utilize geometric priors, including facial landmarks [18, 19], semantic segmentation maps [20, 21, 22, 23] and 3D shapes [24, 25, 26]. However, as pointed out by [16, 27], such priors have limitations in guiding the fine details and texture information of the face (e.g., wrinkles and eye pupils). Furthermore, geometric face priors are difficult to estimate reliably from severely degraded inputs, which can degrade restoration performance.

Various methods [8, 9, 11] have been developed to utilize high-quality facial images of the same individual as references for restoration, aiming to leverage the distinct facial features of each person. However, these methods rely heavily on reference images of the same individual, which are not always easily accessible. To mitigate this issue, DFDNet [10] utilizes a facial component dictionary as reference information. However, this approach may be sub-optimal for face restoration tasks since its dictionary is extracted from a pre-trained face recognition model. Inspired by [10], DMDNet [12] introduces dual dictionaries that extend beyond a single general dictionary, allowing for more flexible handling of degraded inputs regardless of whether reference images are present. While [10, 12] utilize a facial component dictionary extracted from a face recognition model, ENTED [28] introduces a vector quantized dictionary along with a latent space refinement technique. In contrast to the above methods that leverage a single reference image, ASFFNet [11] utilizes multiple reference images to select the most suitable guidance image and learns landmark weights to improve the reconstruction quality. ENTED [28] restores a single degraded input image using a high-quality reference image: it substitutes corrupted semantic features with high-quality codes, inspired by vector quantization, and generates style codes containing high-quality texture information. PFStorer [29] utilizes a diffusion model for face restoration and super-resolution, using several images of the individual's face to customize the restoration process while preserving fine details.

Recently, numerous studies have leveraged the capability of generative models such as the Generative Adversarial Network (GAN) [30], the Vector Quantized-Variational AutoEncoder (VQVAE) [31], the Vector Quantized-Generative Adversarial Network (VQGAN) [32] and denoising diffusion probabilistic models (DDPMs) [33, 34, 35]. GAN inversion-based methods [36, 37] try to find the latent vector in the GAN latent space that is closest to a given input image. GFP-GAN [27] and GPEN [38] design encoder networks that find the latent vector for an input image and then use a pre-trained GAN model as the decoder. VQFR [39] uses a vector-quantized (VQ) codebook as a dictionary to enhance high-quality facial details. By employing a parallel decoder that fuses input features with texture features from the VQ codebook, this approach preserves fidelity while achieving detailed facial restoration. CodeFormer [16] is a transformer-based architecture for code prediction that captures the global structure of low-quality facial images, enabling the generation of natural faces even from severely degraded inputs. To adapt to varying levels of degradation, it includes a controllable feature transformation (CFT) module that offers a flexible trade-off between fidelity and quality. RestoreFormer++ [40] enhances facial image restoration by utilizing fully spatial and multi-head cross-attention to merge contextual, semantic and structural information from degraded face features with high-quality priors. PMRF [41] presents an algorithm that predicts the posterior mean and then uses a rectified flow model to transport it to a high-quality image.
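As a rough illustration of what using a VQ codebook as a dictionary means, the sketch below performs a generic nearest-neighbour codebook lookup. It is not the implementation of VQFR or CodeFormer (the latter, as noted above, predicts codes with a transformer instead of a nearest-neighbour search); the function name `quantize`, the array shapes and the toy values are all assumptions made for this example.

```python
import numpy as np

def quantize(features, codebook):
    """Replace each feature vector by its nearest codebook entry.

    features: array of shape (N, D), e.g. flattened encoder features.
    codebook: array of shape (K, D), the learned high-quality codes.
    Returns the quantized features (N, D) and the chosen indices (N,).
    """
    # Squared Euclidean distance between every feature and every code.
    diff = features[:, None, :] - codebook[None, :, :]
    dist = (diff ** 2).sum(axis=-1)          # shape (N, K)
    indices = dist.argmin(axis=1)            # nearest code per feature
    return codebook[indices], indices

# Hypothetical toy data: three codes and two degraded feature vectors.
codebook = np.array([[0.0, 0.0], [1.0, 1.0], [4.0, 4.0]])
features = np.array([[0.9, 1.2], [3.5, 3.9]])
quantized, indices = quantize(features, codebook)
```

Each degraded feature is snapped to a clean code, which is why such a dictionary can supply high-quality detail even when the input features are corrupted.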

Building on the powerful generative capabilities of the diffusion model [33, 34, 35], several studies [13, 42, 43, 44, 45, 46, 47] have explored its application to BFR. DR2 [46] proposes a two-stage framework, DR2E, for blind face restoration: a pre-trained diffusion model removes various types of degradation, and a subsequent module performs detail enhancement and upsampling. It also eliminates the need for synthetically degraded data during training. IPC [47] proposes a conditional diffusion-based BFR framework, similar to SR3, to restore severely degraded face images. This framework employs a region-adaptive strategy that enhances restoration quality while preserving identity information. DifFace [44] establishes a posterior distribution for mapping LQ images to HQ counterparts via a pre-trained diffusion model. To achieve this, the approach estimates a transition distribution from the LQ input image to an intermediate noisy image using a diffused estimator within the diffusion model, which enhances robustness to severe degradations. Additionally, it incorporates a Markov chain that transitions the intermediate image to the HQ target image by repeatedly applying a pre-trained diffusion model, which further improves face restoration performance. Lu et al. [48] propose a diffusion-based architecture that incorporates 3D facial priors. These priors are derived from a 3D face reconstructed from an initially restored image and are integrated into the reverse diffusion process to provide structural and identity information.
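For context, the intermediate noisy image that such pipelines start from can be understood through the forward marginal of the standard DDPM formulation [33, 34, 35]. The expression below is that generic formulation, not the specific transition distribution of any method discussed above; the symbols $x_0$ (clean image), $x_t$ (noisy image at step $t$) and $\beta_s$ (noise schedule) are introduced here for illustration only.

$$
q(x_t \mid x_0) = \mathcal{N}\big(x_t;\ \sqrt{\bar{\alpha}_t}\,x_0,\ (1-\bar{\alpha}_t)\,I\big),
\qquad
x_t = \sqrt{\bar{\alpha}_t}\,x_0 + \sqrt{1-\bar{\alpha}_t}\,\epsilon,
\quad \epsilon \sim \mathcal{N}(0, I),
\qquad
\bar{\alpha}_t = \prod_{s=1}^{t} (1-\beta_s)
$$

As $t$ grows, $\bar{\alpha}_t$ shrinks and the noise term dominates, which gives an intuition for why starting the reverse process from a sufficiently noisy intermediate state can mask the original degradation.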

However, these studies were not designed to leverage reference images for further enhancement. In contrast, PGDiff [13] proposed a partial guidance approach that can be extended to use a reference image. By incorporating an identity loss into the diffusion-based restoration method, it outperforms existing diffusion-prior-based methods. Inspired by this, we also propose a method that incorporates both a diffusion prior and a reference prior. However, unlike PGDiff [13], which does not consider conflicts between the gradients arising from multiple losses, our approach addresses these conflicts through the proposed PDGrad, leading to more consistent and higher-quality results for RefBFR.