Emergency Detection in Smart Homes Using Inactivity Score for Handling Uncertain Sensor Data

Abstract

1. Introduction

2. Related Work

3. Inactivity Score-Based Approach for Emergency Detection

3.1. Inactivity Score

3.2. Emergency Detection Using the Inactivity Score

4. Evaluation

- CASAS, Aruba https://casas.wsu.edu/datasets/aruba.zip (last accessed: 16 July 2024), and CASAS, Aruba2 https://casas.wsu.edu/datasets/aruba2.zip (last accessed: 16 July 2024) [52]. These datasets contain sensor data collected in the home of a single resident. For the evaluation, only the ON-events of the motion sensors were considered. Because sensors within the same room are strongly correlated, only the motion sensors with the IDs 'M007', 'M019', 'M020', 'M024', and 'M027' were used. A rolling filter was applied that allows only one sensor to be activated per hour, which prevents frequent or nearly continuous trigger events from motion sensors when a person simply stays in a room. Due to a data gap, only the sequence up to 2012-03-18 18:49:34 was used for Aruba2.

- CASAS, Kyoto https://casas.wsu.edu/datasets/kyoto.zip (last accessed: 16 July 2024) [53]. This dataset is similar to Aruba and Aruba2 but was recorded in a different household with two residents. As with Aruba and Aruba2, only the ON-events of the motion sensors were considered, and a rolling filter was applied. The sensors used were 'M007', 'M017', 'M020', 'M021', 'M029', 'M031', 'M038', 'M045', and 'M051'.

- Wilhelm, Water HH-01, HH-05, HH-11, and HH-12 https://zenodo.org/records/7506076 (last accessed: 16 July 2024) [26]. These datasets contain water consumption data from various households, measured by smart water meters. The data were analyzed and converted into activity data as presented by Wilhelm et al. [26]. Since all activity events were created by a single sensor, activities could not be assigned to specific rooms. Because the recordings contain larger measurement gaps, only the longest sequence without a gap of more than one hour was considered for each dataset.
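The preprocessing steps described in the list above can be sketched in Python. This is a minimal sketch under stated assumptions, not the authors' implementation: events are assumed to be `(timestamp, sensor_id, state)` tuples sorted by time, the rolling filter is read as "keep an ON-event only if the last kept event of the same sensor is at least one hour old", and the function names `filter_motion_events` and `longest_gap_free_run` as well as the sample timestamps are hypothetical.

```python
from datetime import datetime, timedelta

ONE_HOUR = timedelta(hours=1)


def filter_motion_events(events, sensor_ids, min_gap=ONE_HOUR):
    """Keep ON-events of the selected sensors, at most one per sensor per `min_gap`.

    Assumption: the rolling window is applied per sensor and restarts
    at each event that passes the filter.
    """
    last_kept = {}  # sensor_id -> timestamp of the last event that passed the filter
    kept = []
    for ts, sensor, state in events:
        if state != "ON" or sensor not in sensor_ids:
            continue
        previous = last_kept.get(sensor)
        if previous is None or ts - previous >= min_gap:
            kept.append((ts, sensor))
            last_kept[sensor] = ts
    return kept


def longest_gap_free_run(timestamps, max_gap=ONE_HOUR):
    """Return (start, end) of the longest stretch in which no two consecutive
    timestamps are more than `max_gap` apart; None for empty input.

    `timestamps` must be sorted in ascending order.
    """
    if not timestamps:
        return None
    best = (timestamps[0], timestamps[0])
    run_start = timestamps[0]
    for prev, cur in zip(timestamps, timestamps[1:]):
        if cur - prev > max_gap:
            run_start = cur  # gap too large: a new run starts at the current reading
        if cur - run_start > best[1] - best[0]:
            best = (run_start, cur)
    return best


# Made-up sample data for illustration only.
ARUBA_SENSORS = {"M007", "M019", "M020", "M024", "M027"}
t0 = datetime(2024, 1, 1, 8, 0)
raw = [
    (t0, "M007", "ON"),
    (t0 + timedelta(minutes=5), "M007", "OFF"),   # OFF-events are ignored
    (t0 + timedelta(minutes=20), "M007", "ON"),   # within one hour of the kept M007 event
    (t0 + timedelta(minutes=30), "M019", "ON"),
    (t0 + timedelta(minutes=40), "M001", "ON"),   # sensor not in the selected set
    (t0 + timedelta(minutes=70), "M007", "ON"),
]
filtered = filter_motion_events(raw, ARUBA_SENSORS)

offsets_min = [0, 30, 60, 180, 210, 240, 285, 330]  # two-hour gap after minute 60
readings = [t0 + timedelta(minutes=m) for m in offsets_min]
run = longest_gap_free_run(readings)
```

If the filter is instead meant to apply across all sensors at once, the per-sensor dictionary would be replaced by a single shared timestamp. For the water data, the one-hour limit is assumed here to refer to consecutive meter readings rather than to the derived activity events.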

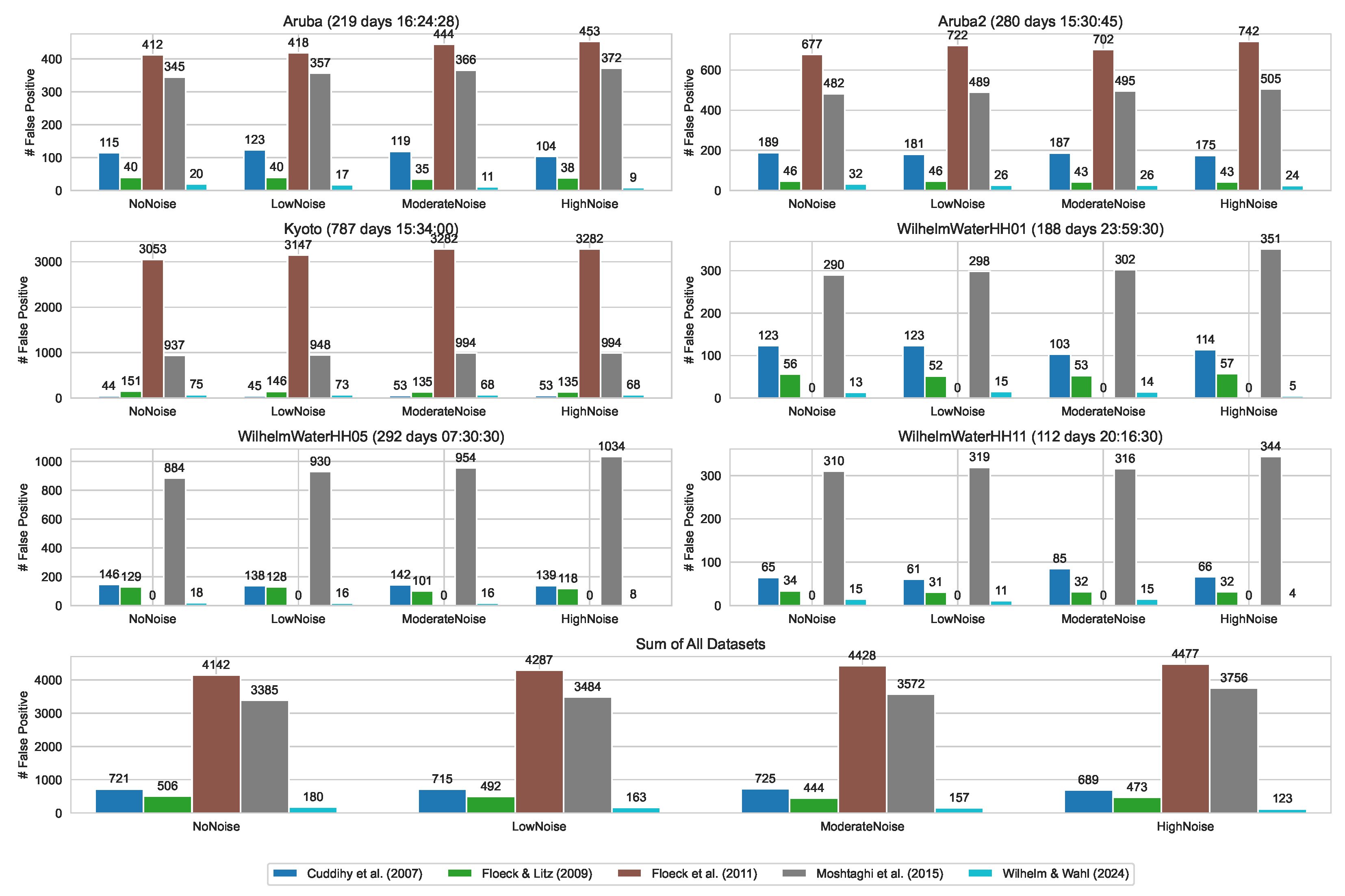

4.1. False-Positive Detection

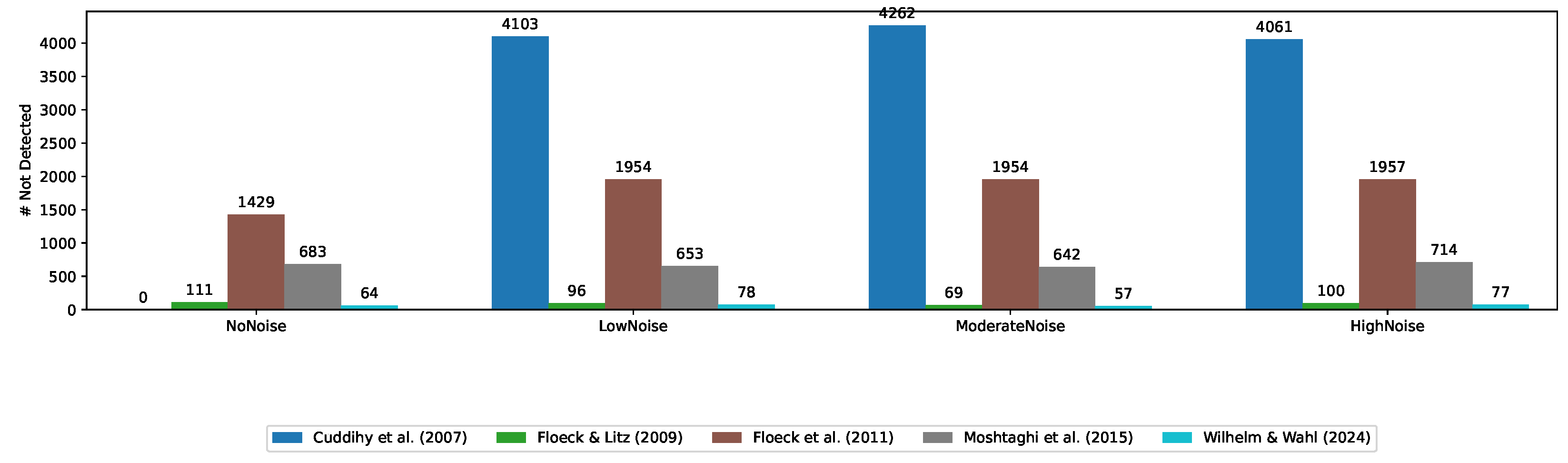

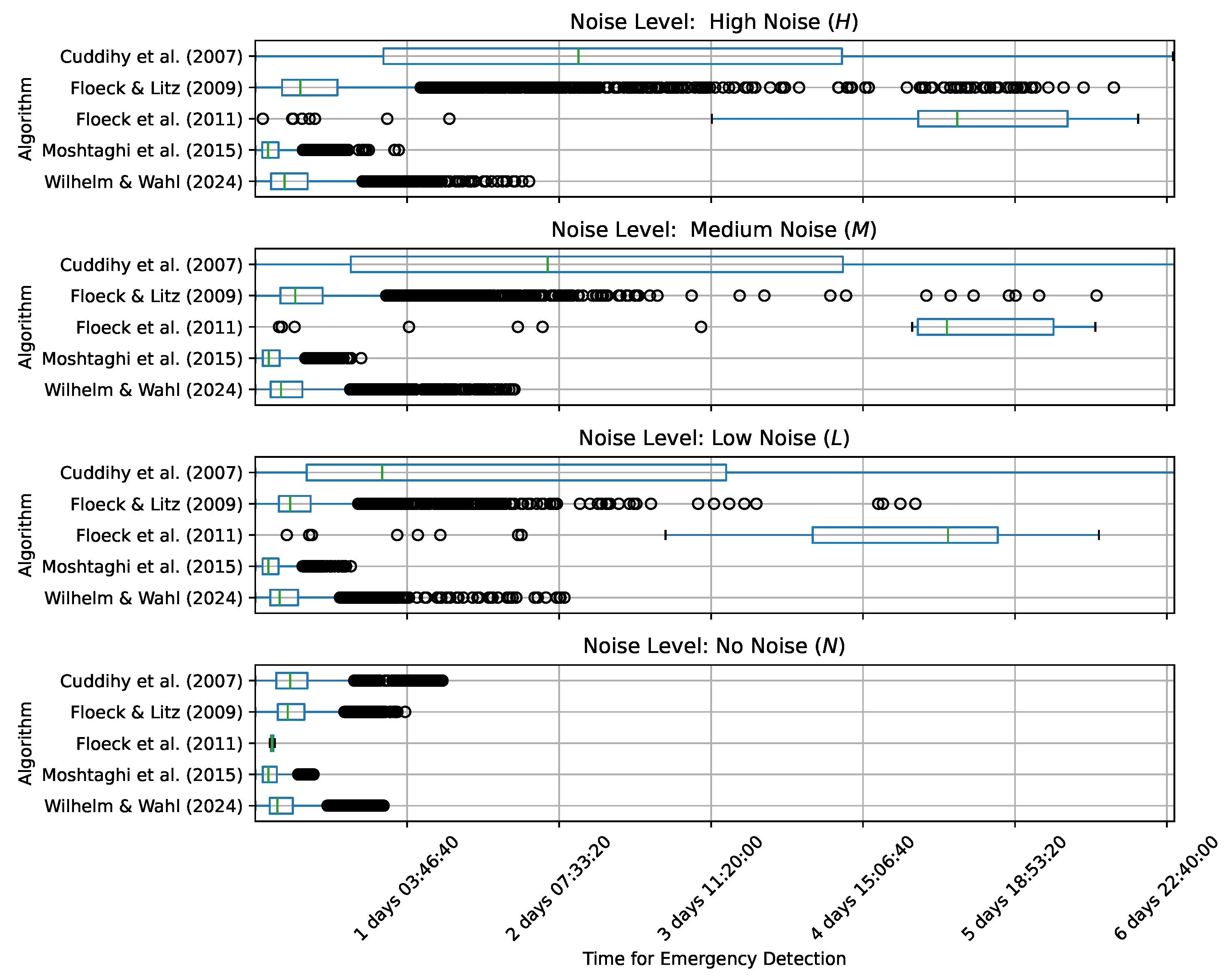

4.2. Emergency Detection Time

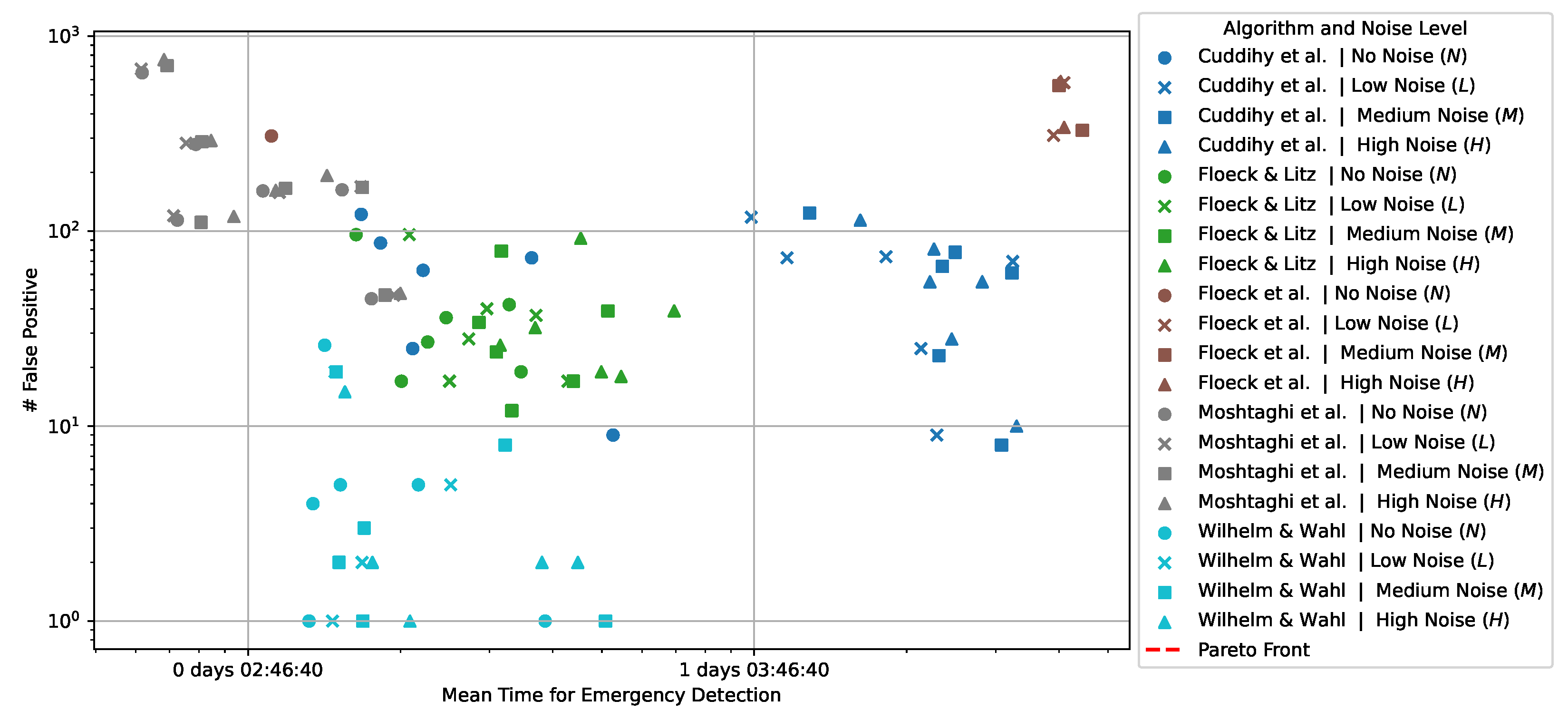

4.3. False Positives vs. Emergency Detection Times

5. Discussion

6. Conclusions and Outlook

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| AAL | Ambient-Assisted Living |
| PIR | Passive Infrared Motion Sensors |
| IQR | Interquartile Range |

References

1. United Nations, Department of Economic and Social Affairs, Population Division. World Population Ageing 2019 (ST/ESA/SER.A/444). 2020. Available online: https://www.un.org/en/development/desa/population/publications/pdf/ageing/WorldPopulationAgeing2019-Report.pdf (accessed on 23 September 2024).

2. Kanasi, E.; Ayilavarapu, S.; Jones, J. The aging population: Demographics and the biology of aging. Periodontology 2000 2016, 72, 13–18.

3. Naumann, D.; Oswald, F. Wohnen im Alter. In Proceedings of the Handbuch Soziale Arbeit und Alter; Springer VS: Wiesbaden, Germany, 2020; pp. 369–377.

4. Scharlach, A.E.; Moore, K.D. Aging in Place. In Proceedings of the Handbook of Theories of Aging, New York, NY, USA, 19 May 2016; pp. 407–426.

5. DIN SPEC 91280:2012-09; Technikunterstütztes Leben (AAL) - Klassifikation von Dienstleistungen für Technikunterstütztes Leben im Bereich der Wohnung und des Direkten Wohnumfelds. DIN Deutsches Institut für Normung e. V.: Berlin, Germany, 2012.

6. Rashidi, P.; Mihailidis, A. A Survey on Ambient-Assisted Living Tools for Older Adults. IEEE J. Biomed. Health Inform. 2013, 17, 579–590.

7. Kubitza, J.; Reuschenbach, B. Gestürzt und über Tage Hilflos Allein; Springer Science and Business Media LLC: Berlin/Heidelberg, Germany, 2021; Volume 74, pp. 30–32.

8. Prückner, S. Notfallmedizin im demographischen Wandel - Möglichkeiten und Grenzen einer automatisierten Notfallerkennung bei alten Menschen im häuslichen Umfeld. Postdoctoral Thesis, Ludwig-Maximilians-Universität München, München, Germany, 2022.

9. von Renteln-Kruse, W. Stürze älterer Menschen. Dmw-Dtsch. Med. Wochenschr. 2004, 129, 880–883.

10. Szepanski, J. Die Zahl der Stürze steigt. Heilberufe 2016, 68, 26–27.

11. Tinetti, M.E.; Liu, W.L.; Claus, E.B. Predictors and Prognosis of Inability to Get up after Falls among Elderly Persons; American Medical Association: Chicago, IL, USA, 1993; Volume 269, pp. 65–70.

12. Schell, B.; Prückner, S.; Luiz, T.; Madler, C. Zum Problem der Verzögerten Alarmierung bei häuslichen Sturzereignissen. Available online: https://www.ai-online.info/abstracts/pdf/dacAbstracts/2008/P_PO_2.4_3_00800.pdf (accessed on 23 September 2024).

13. Fleming, J.; Brayne, C. Inability to get up after falling, subsequent time on floor, and summoning help: Prospective cohort study in people over 90. BMJ 2008, 337, a2227.

14. Simpson, P.M.; Bendall, J.C.; Tiedemann, A.; Lord, S.R.; Close, J.C.T. Epidemiology of Emergency Medical Service Responses to Older People Who Have Fallen: A Prospective Cohort Study; Informa UK Limited: London, UK, 2014; Volume 18, pp. 185–194.

15. Wild, D.; Nayak, U.; Isaacs, B. How dangerous are falls in old people at home? Br. Med. J. (Clin. Res. Ed.) 1981, 282, 266–268.

16. Vellas, B.; Cayla, F.; Bocquet, H.; De Pemille, F.; Albarede, J. Prospective Study of Restriction of Activity in Old People after Falls; Oxford University Press: Oxford, UK, 1987; Volume 16, pp. 189–193.

17. Gurley, R.J.; Lum, N.; Sande, M.; Lo, B.; Katz, M.H. Persons Found in Their Homes Helpless or Dead; Mass Medical Soc.: Waltham, MA, USA, 1996; Volume 334, pp. 1710–1716.

18. Prückner, S.; Madler, C.; Beyer, D.; Berger, M.; Kleinberger, T.; Becker, M. Emergency Monitoring and Prevention-eu Project Emerge; Fraunhofer IESE: Kaiserslautern, Germany, 2007.

19. Bloch, F.; Lundy, J.E.; Rigaud, A.S. Profile differences of purchasers, non-purchasers, and users and non-users of Personal Emergency Response Systems: Results of a prospective cohort study. Disabil. Health J. 2017, 10, 607–610.

20. Heinbüchner, B.; Hautzinger, M.; Becker, C.; Pfeiffer, K. Satisfaction and use of personal emergency response systems. Z. Gerontol. Geriatr. 2010, 43, 219–223.

21. Elbert, D.; Storf, H.; Eisenbarth, M.; Ünalan, Ö.; Schmitt, M. An approach for detecting deviations in daily routine for long-term behavior analysis. In Proceedings of the 5th International ICST Conference on Pervasive Computing Technologies for Healthcare, Dublin, Ireland, 23–26 May 2011.

22. Floeck, M.; Litz, L. Inactivity patterns and alarm generation in senior citizens’ houses. In Proceedings of the 2009 European Control Conference (ECC), Budapest, Hungary, 23–26 August 2009.

23. Fouquet, Y.; Franco, C.; Demongeot, J.; Villemazet, C.; Vuillerme, N. Telemonitoring of the elderly at home: Real-time pervasive follow-up of daily routine, automatic detection of outliers and drifts. Smart Home Syst. 2010, 121–138.

24. Wilhelm, S.; Jakob, D.; Ahrens, D. Human Presence Detection by Monitoring the Indoor CO2 Concentration. In Proceedings of the Conference on Mensch und Computer, New York, NY, USA, 6–9 September 2020; MuC ’20. pp. 199–203.

25. Wilhelm, S.; Kasbauer, J. Exploiting Smart Meter Power Consumption Measurements for Human Activity Recognition (HAR) with a Motif-Detection Based Non-Intrusive Load Monitoring (NILM) Approach. Sensors 2021, 21, 8036.

26. Wilhelm, S.; Kasbauer, J.; Jakob, D.; Elser, B.; Ahrens, D. Exploiting Smart Meter Water Consumption Measurements for Human Activity Event Recognition. J. Sens. Actuator Netw. 2023, 12, 46.

27. Bakar, U.A.B.U.A.; Ghayvat, H.; Hasanm, S.F.; Mukhopadhyay, S.C. Activity and Anomaly Detection in Smart Home: A Survey. In Proceedings of the Smart Sensors, Measurement and Instrumentation; Springer International Publishing: Berlin/Heidelberg, Germany, 2015; pp. 191–220.

28. Demiris, G.; Hensel, B.K. Technologies for an Aging Society: A Systematic Review of “Smart Home” Applications. Yearb. Med. Inform. 2008, 17, 33–40.

29. Lapierre, N.; Neubauer, N.; Miguel-Cruz, A.; Rincon, A.R.; Liu, L.; Rousseau, J. The state of knowledge on technologies and their use for fall detection: A scoping review. Int. J. Med. Inform. 2018, 111, 58–71.

30. Ren, L.; Peng, Y. Research of Fall Detection and Fall Prevention Technologies: A Systematic Review. IEEE Access 2019, 7, 77702–77722.

31. Barger, T.S.; Brown, D.E.; Alwan, M. Health-Status Monitoring Through Analysis of Behavioral Patterns. IEEE Trans. Syst. Man Cybern. Part A Syst. Hum. 2005, 35, 22–27.

32. Dong, Q.; Yang, Y.; Wang, H.; Xu, J.-H. Fall alarm and inactivity detection system design and implementation on Raspberry Pi. In Proceedings of the 2015 17th International Conference on Advanced Communication Technology (ICACT), PyeongChang, Republic of Korea, 1–3 July 2015.

33. Jansen, B.; Deklerck, R. Context aware inactivity recognition for visual fall detection. In Proceedings of the 2006 Pervasive Health Conference and Workshops, Innsbruck, Austria, 29 November–1 December 2006.

34. Liu, C.L.; Lee, C.H.; Lin, P.M. A fall detection system using k-nearest neighbor classifier. Expert Syst. Appl. 2010, 37, 7174–7181.

35. Youm, S.; Kim, C.; Choi, S.; Kang, Y.S. Development of a methodology to predict and monitor emergency situations of the elderly based on object detection. Multimed. Tools Appl. 2019, 78, 5427–5444.

36. El-Bendary, N.; Tan, Q.; Pivot, F.C.; Lam, A. Fall Detection and Prevention for the Elderly: A Review of Trends and Challenges. Int. J. Smart Sens. Intell. Syst. 2013, 6, 1230–1266.

37. Kim, J.; Min, K.; Jung, M.; Chi, S. Occupant behavior monitoring and emergency event detection in single-person households using deep learning-based sound recognition. Build. Environ. 2020, 181, 107092.

38. Sharma, J.; Granmo, O.C.; Goodwin, M. Emergency Detection with Environment Sound Using Deep Convolutional Neural Networks. In Proceedings of the Fifth International Congress on Information and Communication Technology; Advances in Intelligent Systems and Computing; Springer: Singapore, 2020; Volume 1184, pp. 144–154.

39. Principi, E.; Squartini, S.; Bonfigli, R.; Ferroni, G.; Piazza, F. An integrated system for voice command recognition and emergency detection based on audio signals. Expert Syst. Appl. 2015, 42, 5668–5683.

40. Wang, Y.; Wang, X.; Arifoglu, D.; Lu, C.; Bouchachia, A.; Geng, Y.; Zheng, G. A Survey on Ambient Sensor-Based Abnormal Behaviour Detection for Elderly People in Healthcare. Electronics 2023, 12, 1539.

41. Masciadri, A.; Scarantino, C.; Comai, S.; Salice, F. Understanding Home Inactivity for Human Behavior Anomaly Detection. In Proceedings of the 5th EAI International Conference on Smart Objects and Technologies for Social Good, ACM, Valencia, Spain, 25–27 September 2019.

42. Floeck, M.; Litz, L. Activity- and Inactivity-Based Approaches to Analyze an Assisted Living Environment. In Proceedings of the 2008 Second International Conference on Emerging Security Information, Systems and Technologies, Cap Esterel, France, 25–31 August 2008.

43. Cuddihy, P.; Weisenberg, J.; Graichen, C.; Ganesh, M. Algorithm to Automatically Detect Abnormally Long Periods of Inactivity in a Home. In Proceedings of the 1st ACM SIGMOBILE International Workshop on Systems and Networking Support for Healthcare and Assisted Living Environments (HealthNet ’07); Association for Computing Machinery: New York City, NY, USA, 2007.

44. Weisenberg, J.; Cuddihy, P.; Rajiv, V. Augmenting Motion Sensing to Improve Detection of Periods of Unusual Inactivity. In Proceedings of the 2nd International Workshop on Systems and Networking Support for Health Care and Assisted Living Environments (HealthNet ’08); Association for Computing Machinery: New York City, NY, USA, 2008.

45. Floeck, M.; Litz, L.; Rodner, T. An Ambient Approach to Emergency Detection Based on Location Tracking. In Proceedings of the Toward Useful Services for Elderly and People with Disabilities, Montreal, Canada, 20–22 June 2011; Lecture Notes in Computer Science. Springer: Berlin/Heidelberg, Germany, 2011; Volume 6719, pp. 296–302.

46. Moshtaghi, M.; Zukerman, I.; Russell, R.A. Statistical models for unobtrusively detecting abnormal periods of inactivity in older adults. User Model. User-Adapt. Interact. 2015, 25, 231–265.

47. Moshtaghi, M.; Zukerman, I.; Albrecht, D.; Russell, R.A. Monitoring Personal Safety by Unobtrusively Detecting Unusual Periods of Inactivity. In Proceedings of the User Modeling, Adaptation, and Personalization (UMAP 2013); Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2013; Volume 7899, pp. 139–151.

48. Moshtaghi, M.; Zukerman, I.; Russell, R.A.; Albrecht, D. Towards Detecting Inactivity Using an In-Home Monitoring System. In Proceedings of the 2013 IEEE 8th Conference on Industrial Electronics and Applications (ICIEA), Melbourne, VIC, Australia, 19–21 June 2013.

49. Moshtaghi, M.; Zukerman, I. Modeling the Tail of a Hyperexponential Distribution to Detect Abnormal Periods of Inactivity in Older Adults. In Lecture Notes in Computer Science, Proceedings of the Trends in Artificial Intelligence (PRICAI 2014); Springer: Cham, Switzerland, 2014; Volume 8862, pp. 985–997.

50. Valera, A.C.; Tan, H.P.; Bai, L. Improving the Sensitivity of Unobtrusive Inactivity Detection in Sensor-Enabled Homes for the Elderly. In Proceedings of the 2016 IEEE International Conference on Pervasive Computing and Communication Workshops (PerCom Workshops), Sydney, NSW, Australia, 14–18 March 2016; pp. 1–6.

51. Wilhelm, S. Exploiting Home Infrastructure Data for the Good: Emergency Detection by Reusing Existing Data Sources. In Proceedings of the Advances in Intelligent Systems and Computing; Springer International Publishing: Berlin/Heidelberg, Germany, 2021; pp. 51–58.

52. Cook, D. Learning Setting-Generalized Activity Models for Smart Spaces. IEEE Intell. Syst. 2010, 27, 32–38.

53. Cook, D.J.; Crandall, A.S.; Thomas, B.L.; Krishnan, N.C. CASAS: A Smart Home in a Box. Computer 2012, 46, 62–69.

54. Polst, S.; Storck, S. Fehlalarme bei AAL-Systemen - Ergebnisse einer Pilotstudie. 2020. Available online: https://dl.gi.de/items/c17673cf-2694-4977-8aaf-43d8a6d8b4b5 (accessed on 8 August 2024).

| Time | Sensor | Certainty |
|---|---|---|
| 06:32:00 | Sensor-4 | 0.8 |
| 06:48:00 | Sensor-1 | 0.6 |
| 06:53:00 | Sensor-1 | 0.6 |
| 07:13:00 | Sensor-1 | 0.6 |
| 07:20:00 | Sensor-2 | 0.9 |
| 08:13:00 | Sensor-3 | 0.3 |
| 09:56:00 | Sensor-1 | 0.6 |
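To make the event log above concrete, the following sketch loads the rows into Python and measures the time between consecutive events. It does not compute the inactivity score itself, whose definition is not reproduced in this section. It only illustrates, as an assumption-laden example, how a low-certainty event changes the apparent length of an inactivity period. The function name `inter_event_gaps` and the certainty threshold of 0.5 are hypothetical.

```python
from datetime import datetime

# Rows copied from the table above: (time of day, sensor, certainty).
EVENTS = [
    ("06:32:00", "Sensor-4", 0.8),
    ("06:48:00", "Sensor-1", 0.6),
    ("06:53:00", "Sensor-1", 0.6),
    ("07:13:00", "Sensor-1", 0.6),
    ("07:20:00", "Sensor-2", 0.9),
    ("08:13:00", "Sensor-3", 0.3),
    ("09:56:00", "Sensor-1", 0.6),
]


def inter_event_gaps(events):
    """Return the gap in minutes between each pair of consecutive events."""
    times = [datetime.strptime(t, "%H:%M:%S") for t, _, _ in events]
    return [(cur - prev).total_seconds() / 60 for prev, cur in zip(times, times[1:])]


# Gaps when every event is taken at face value.
all_gaps = inter_event_gaps(EVENTS)

# Gaps when events below a (hypothetical) certainty threshold are ignored.
THRESHOLD = 0.5  # assumption for illustration, not a value from the paper
trusted_gaps = inter_event_gaps([e for e in EVENTS if e[2] >= THRESHOLD])

print(max(all_gaps))      # 103.0
print(max(trusted_gaps))  # 156.0
```

With all events counted, the longest gap is 103 minutes (08:13 to 09:56). If the Sensor-3 event with certainty 0.3 is disregarded, the longest gap grows to 156 minutes (07:20 to 09:56), which shows why the certainty of an individual event matters for inactivity-based detection.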

| Dataset | #Sensors | #Events | Days Covered |
|---|---|---|---|
| CASAS Aruba | 5 | 2861 | ≈220 days |
| CASAS Aruba2 | 5 | 4146 | ≈281 days |
| CASAS Kyoto | 9 | 13,501 | ≈788 days |
| Wilhelm, Water HH-01 | 1 | 3731 | ≈189 days |
| Wilhelm, Water HH-05 | 1 | 13,773 | ≈292 days |
| Wilhelm, Water HH-11 | 1 | 3843 | ≈113 days |
| Wilhelm, Water HH-12 | 1 | 1099 | ≈111 days |
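The datasets above differ considerably in how densely events occur, which is easier to see as an average number of events per day. The short sketch below derives that figure from the table. The day counts are the approximate values given there, so the results are approximate as well, and the helper name `events_per_day` is hypothetical.

```python
# (dataset, number of events, approximate days covered), copied from the table above.
DATASETS = [
    ("CASAS Aruba", 2861, 220),
    ("CASAS Aruba2", 4146, 281),
    ("CASAS Kyoto", 13501, 788),
    ("Wilhelm, Water HH-01", 3731, 189),
    ("Wilhelm, Water HH-05", 13773, 292),
    ("Wilhelm, Water HH-11", 3843, 113),
    ("Wilhelm, Water HH-12", 1099, 111),
]


def events_per_day(n_events, n_days):
    """Average number of events per covered day."""
    return n_events / n_days


density = {name: events_per_day(n, d) for name, n, d in DATASETS}
for name, value in density.items():
    print(f"{name}: {value:.1f} events/day")

densest = max(density, key=density.get)
sparsest = min(density, key=density.get)
```

On these numbers, the averages range from roughly 10 events per day (Water HH-12) to roughly 47 events per day (Water HH-05).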

| Noise Level | Description |
|---|---|
| No noise (N) | |
| Low noise (L) | |
| Medium noise (M) | |
| High noise (H) | |

| Algorithm | No Noise (N) | Low Noise (L) | Medium Noise (M) | High Noise (H) |
|---|---|---|---|---|
| Cuddihy et al. [43] | 0 days 07:44:37 | 2 days 01:09:09 | 2 days 17:00:13 | 2 days 19:36:40 |
| Floeck and Litz [22,42] | 0 days 06:59:19 | 0 days 08:26:53 | 0 days 10:09:28 | 0 days 13:17:45 |
| Floeck et al. [45] | 0 days 03:05:23 | 4 days 15:00:54 | 4 days 22:27:07 | 4 days 16:54:23 |
| Moshtaghi et al. [46] | 0 days 02:55:34 | 0 days 03:05:59 | 0 days 03:11:37 | 0 days 03:10:15 |
| Wilhelm and Wahl | 0 days 05:23:28 | 0 days 06:09:02 | 0 days 06:48:56 | 0 days 07:16:23 |
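The durations in the table above are easier to compare once they are parsed into a numeric type. The following sketch converts the "D days HH:MM:SS" strings of the no-noise (N) and high-noise (H) columns into `timedelta` objects and computes how much each algorithm's value grows between the two. The helper `parse_duration` is hypothetical, and the code makes no assumption about what the durations measure beyond what the table states.

```python
import re
from datetime import timedelta

_DURATION = re.compile(r"(\d+) days (\d{2}):(\d{2}):(\d{2})")


def parse_duration(text):
    """Parse a string such as '2 days 01:09:09' into a timedelta."""
    match = _DURATION.fullmatch(text.strip())
    if match is None:
        raise ValueError(f"unrecognized duration: {text!r}")
    days, hours, minutes, seconds = map(int, match.groups())
    return timedelta(days=days, hours=hours, minutes=minutes, seconds=seconds)


# (no noise, high noise) per algorithm, copied from the table above.
TABLE = {
    "Cuddihy et al. [43]": ("0 days 07:44:37", "2 days 19:36:40"),
    "Floeck and Litz [22,42]": ("0 days 06:59:19", "0 days 13:17:45"),
    "Floeck et al. [45]": ("0 days 03:05:23", "4 days 16:54:23"),
    "Moshtaghi et al. [46]": ("0 days 02:55:34", "0 days 03:10:15"),
    "Wilhelm and Wahl": ("0 days 05:23:28", "0 days 07:16:23"),
}

# Absolute increase from the no-noise to the high-noise column.
increase = {
    name: parse_duration(high) - parse_duration(none)
    for name, (none, high) in TABLE.items()
}

for name, delta in sorted(increase.items(), key=lambda item: item[1]):
    print(f"{name}: +{delta}")
```

Sorted by increase, Moshtaghi et al. [46] changes least (under 15 minutes), followed by Wilhelm and Wahl (just under two hours) and Floeck and Litz [22,42] (a little over six hours), while the values for Cuddihy et al. [43] and Floeck et al. [45] grow by more than two and more than four days, respectively.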

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Wilhelm, S.; Wahl, F. Emergency Detection in Smart Homes Using Inactivity Score for Handling Uncertain Sensor Data. Sensors 2024, 24, 6583. https://doi.org/10.3390/s24206583
