Dual-Path Large Kernel Learning and Its Applications in Single-Image Super-Resolution †

Abstract

1. Introduction

- An architecture called DLKL has been proposed.

- A dual-path large kernel attention mechanism is designed based on DLKL.

- A lightweight SISR model employing the DLKL method is introduced.

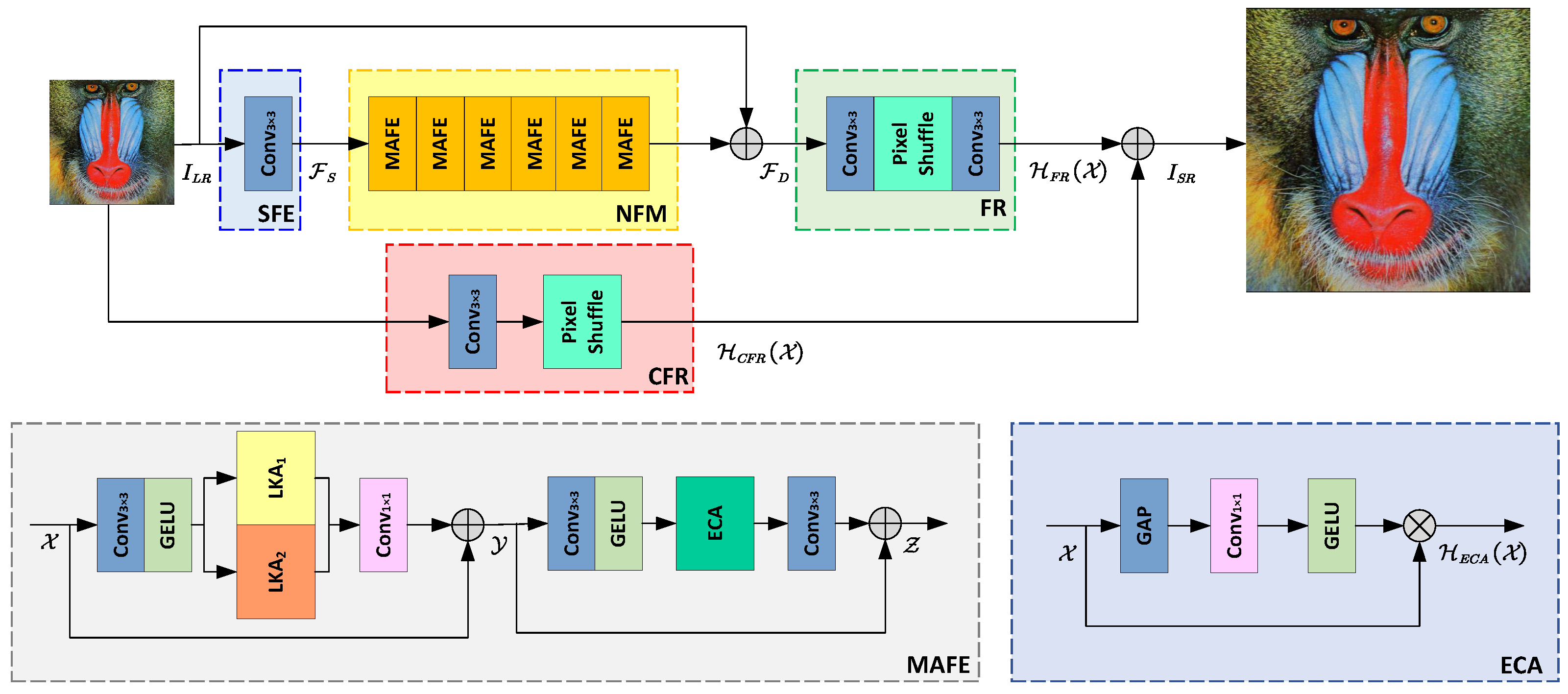

2. Proposed Method

2.1. Shallow Feature Extraction (SFE)

2.2. Nonlinear Feature Mapping (NFM)

2.2.1. MAFE

2.2.2. LKA

2.3. Feature Reconstruction (FR)

2.4. Coarse Feature Reconstruction (CFR)

2.5. Loss Function

3. Experimental Results and Analysis

3.1. Datasets

3.2. Experimental Details

3.3. Quantitative Analytics

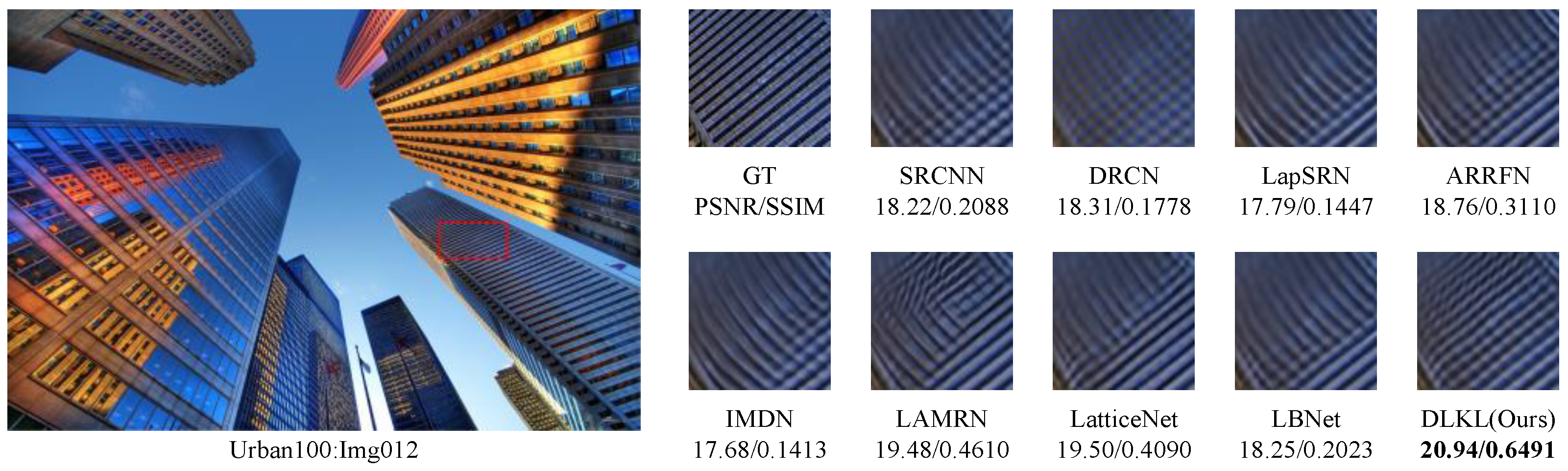

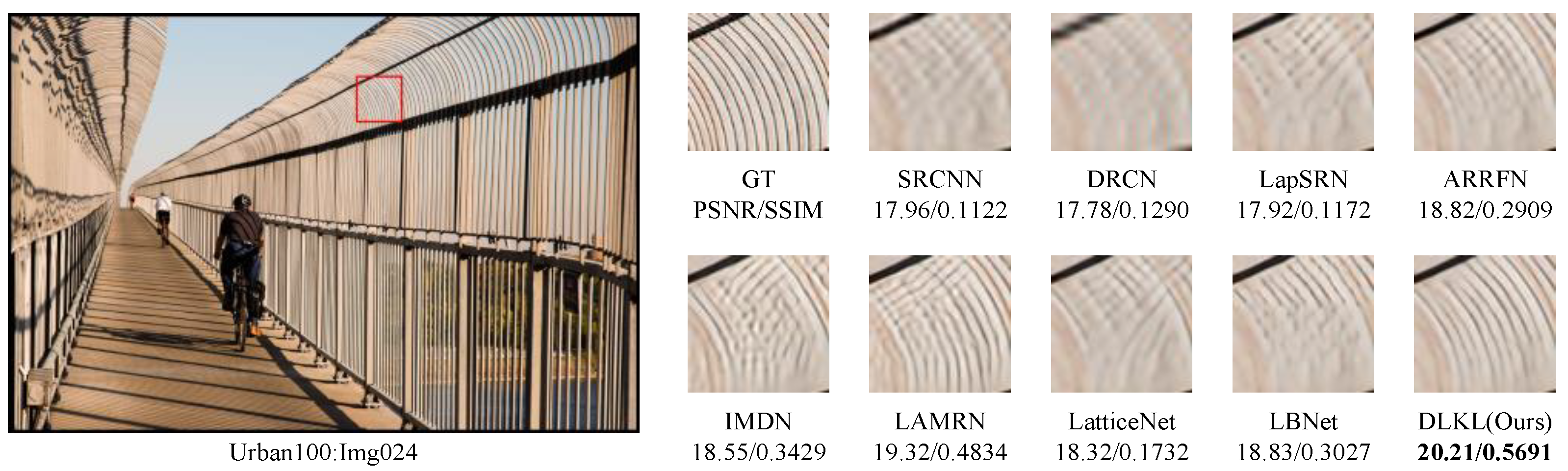

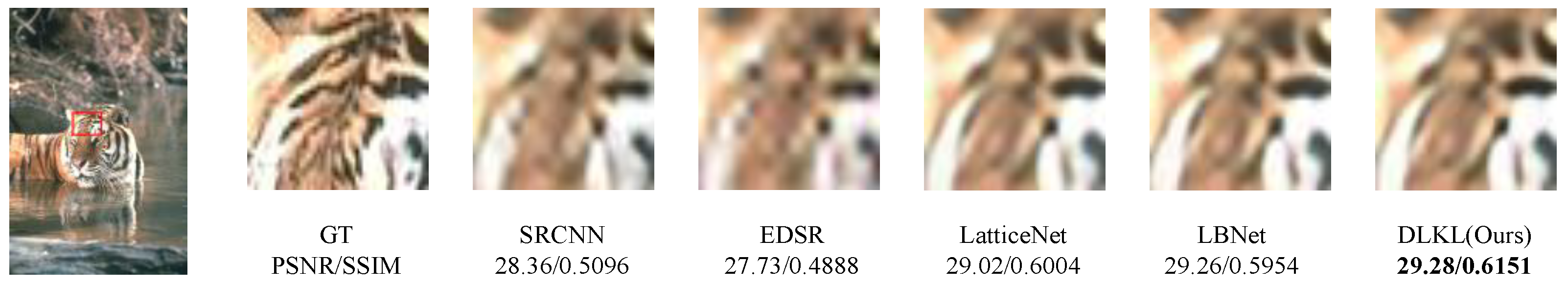

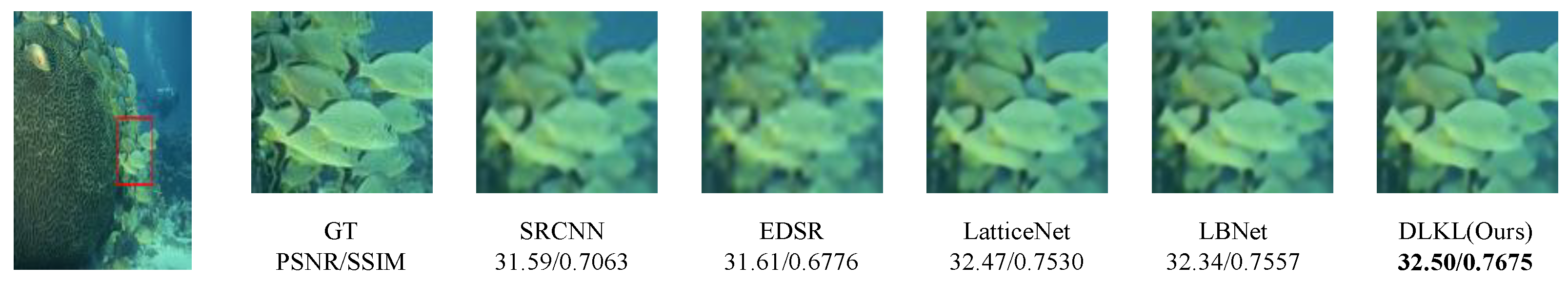

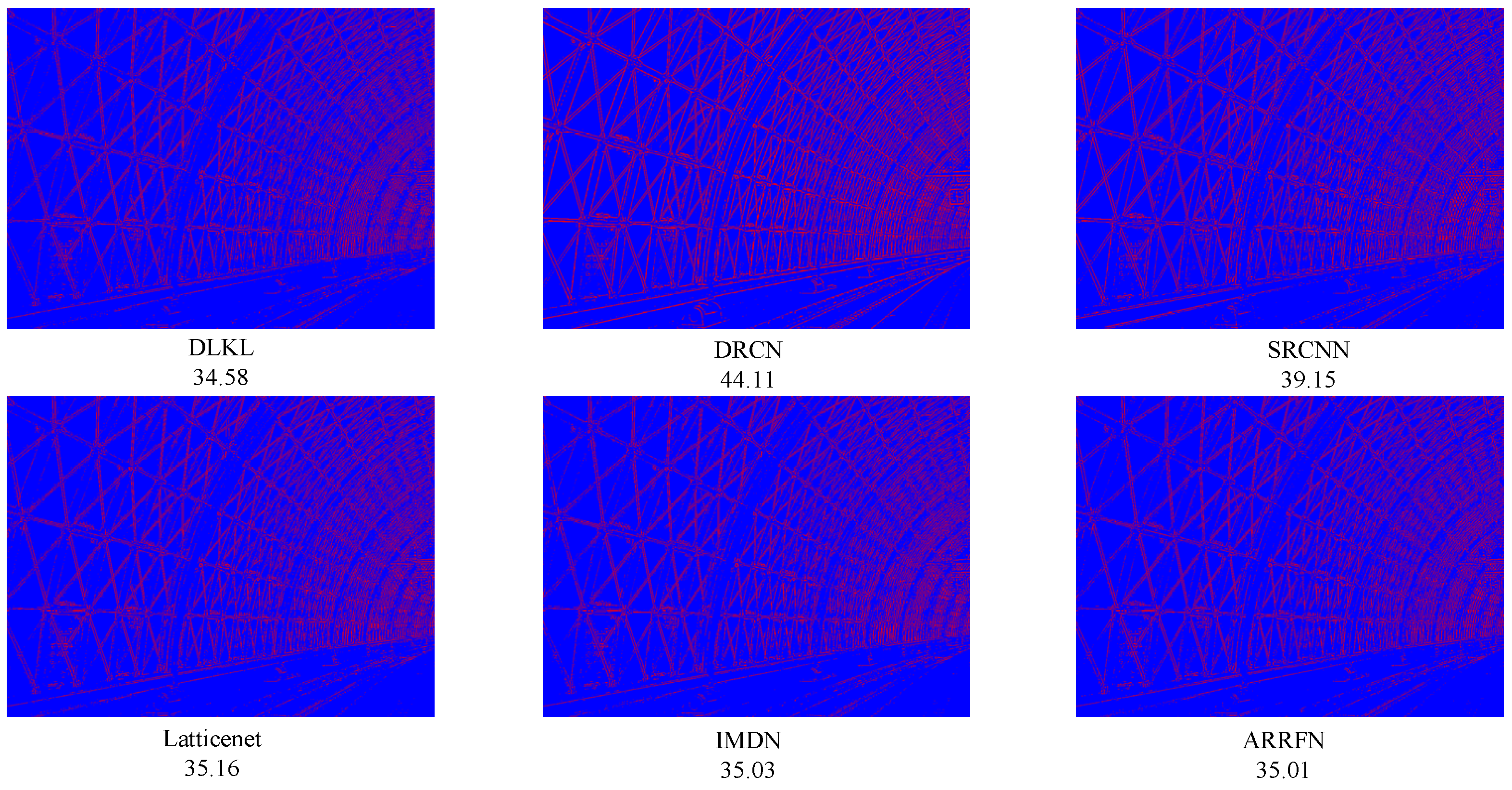

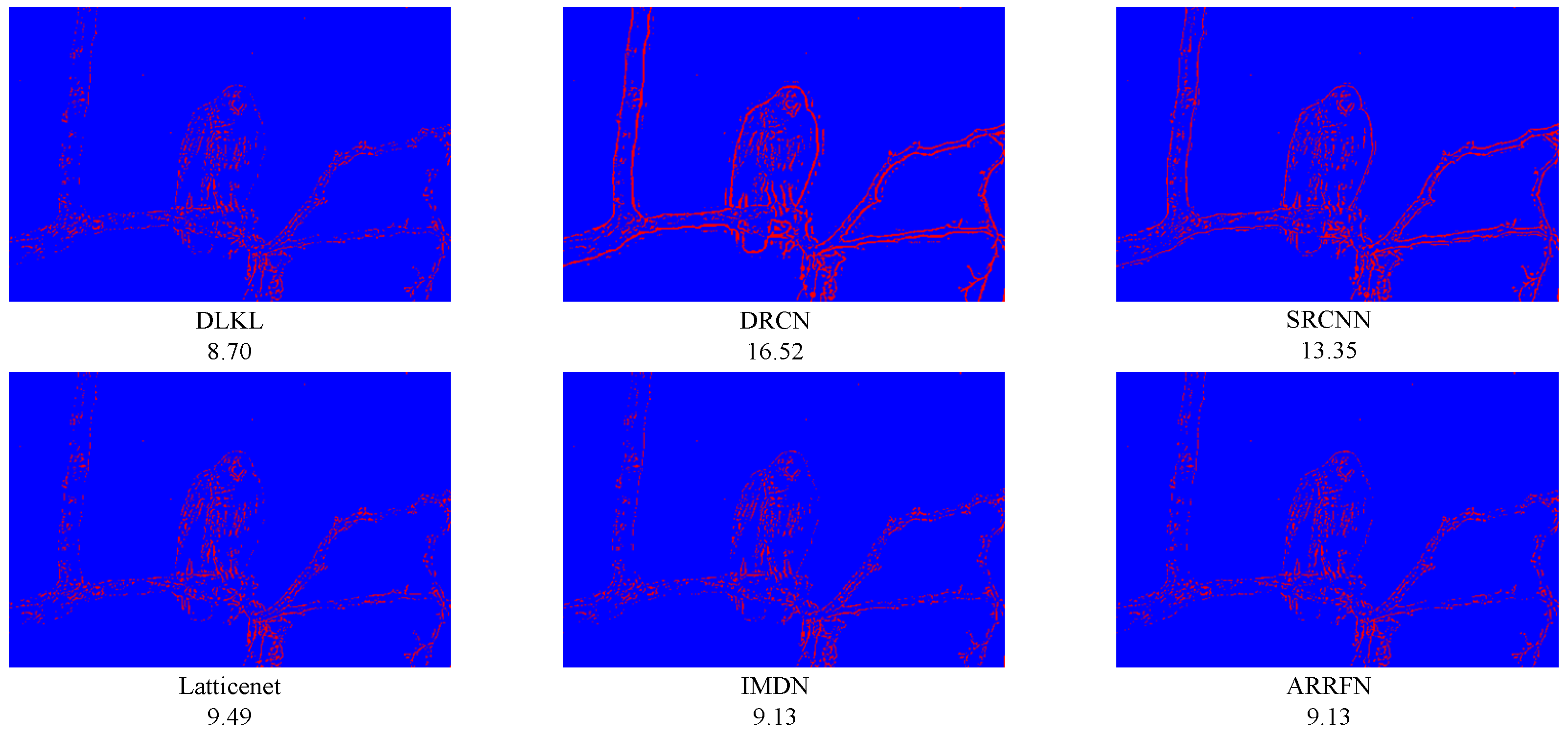

3.4. Visual Perception

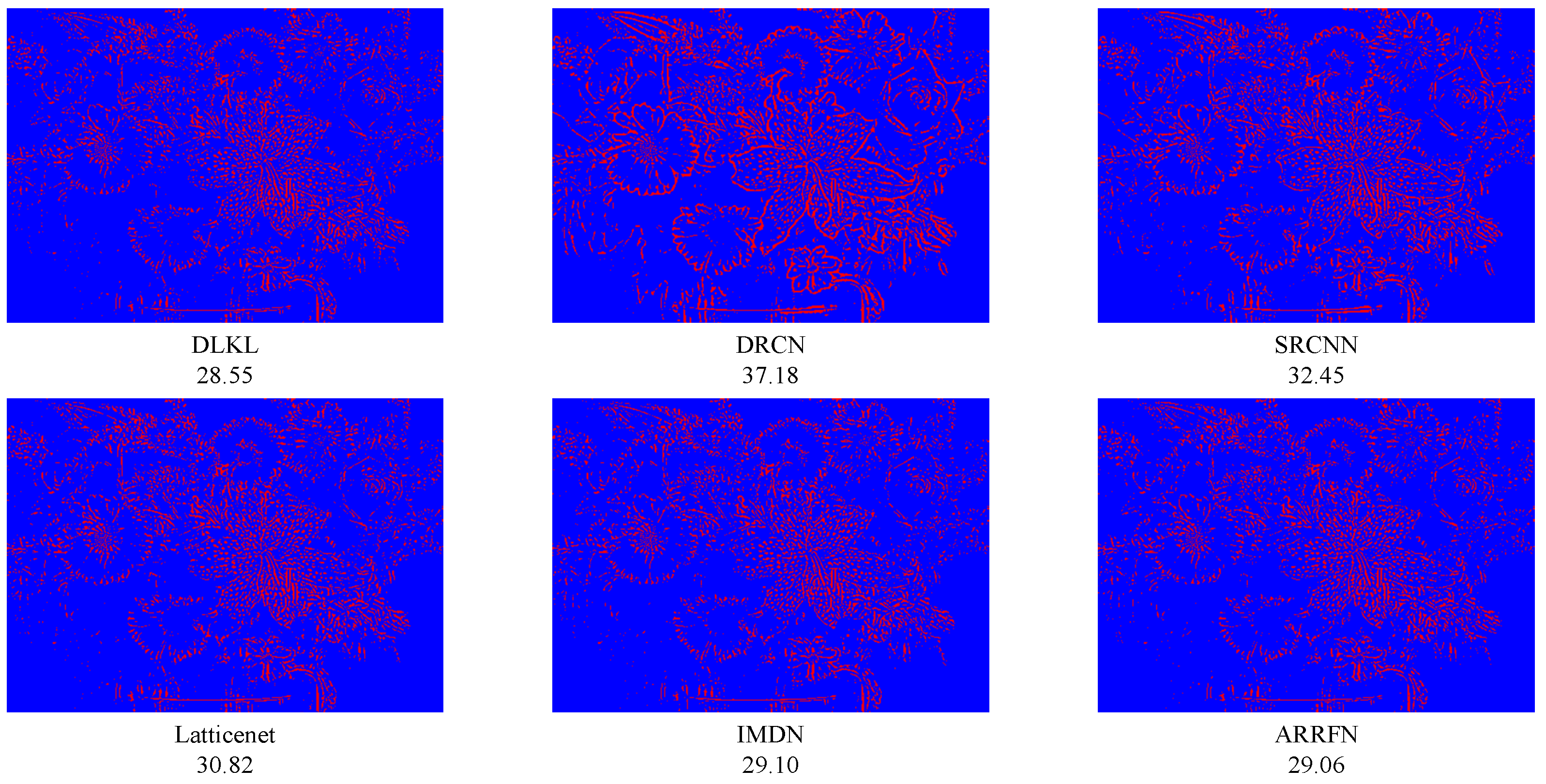

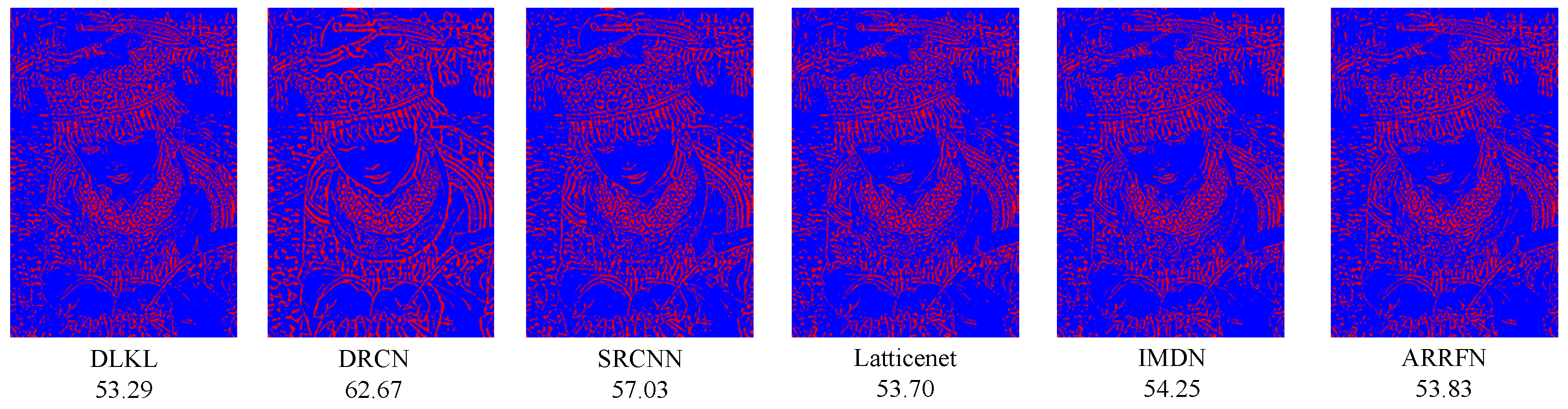

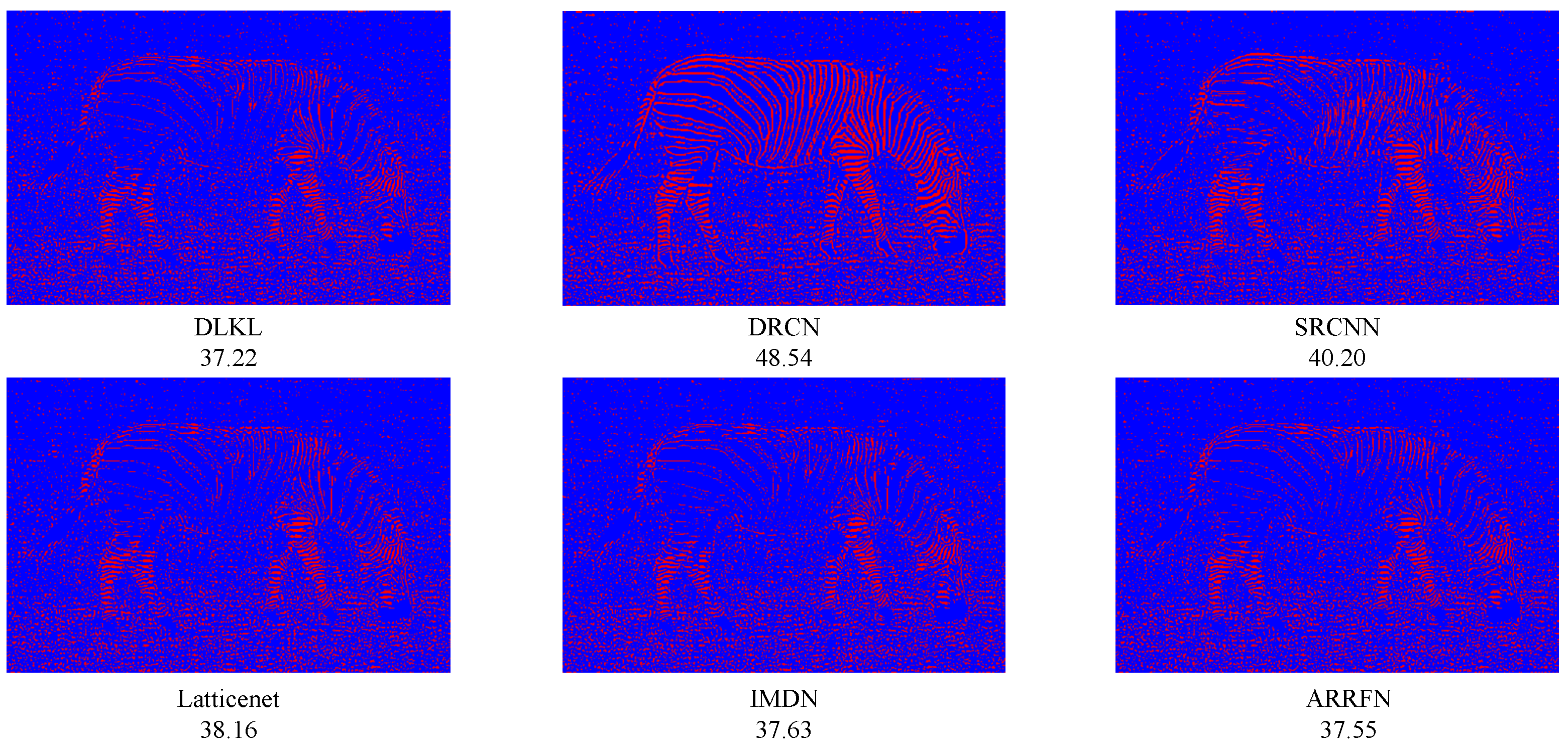

3.5. Reconstruction Error

3.6. MOS Comparisons

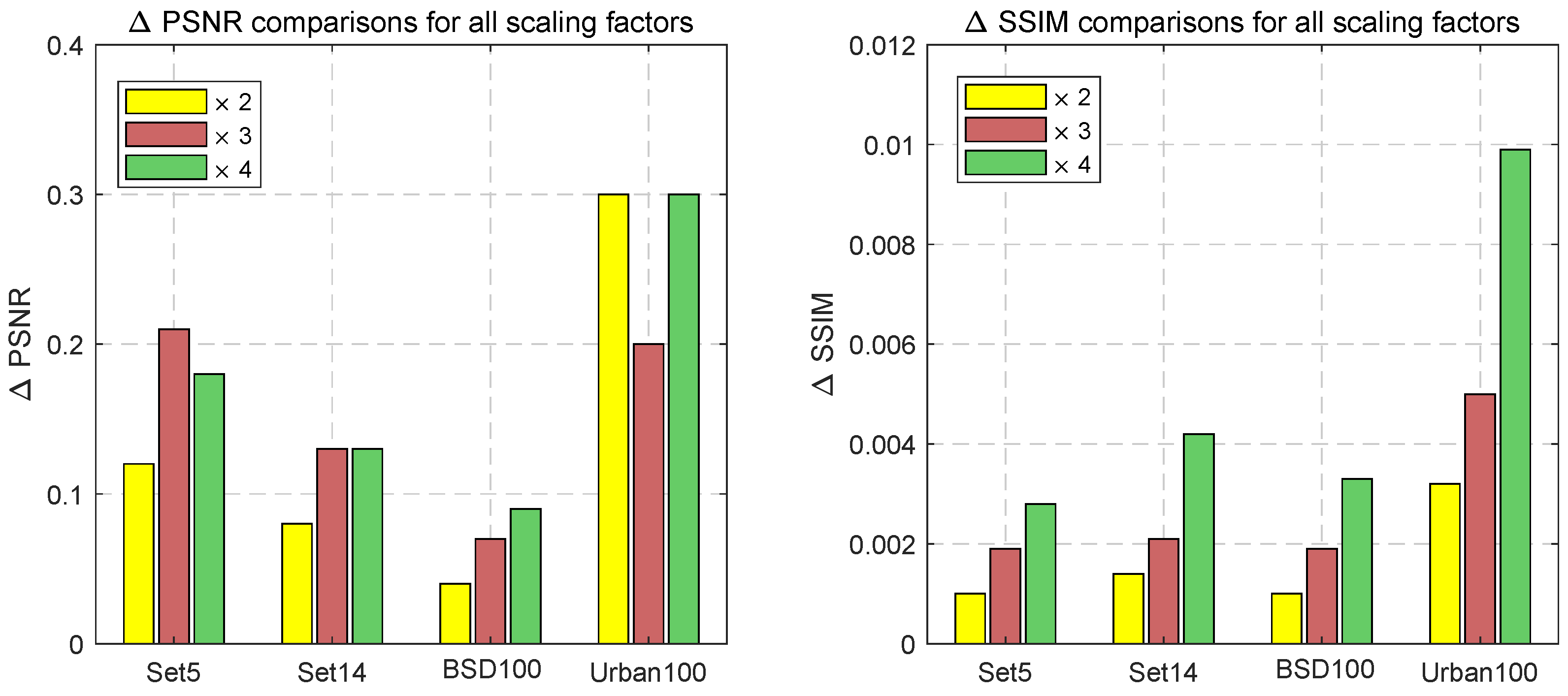

3.7. Comparisons with the Benchmark Model IMDN

3.8. Comparisons with the Recurrent-Based Methods

3.9. Comparison with Transformer-Based Methods

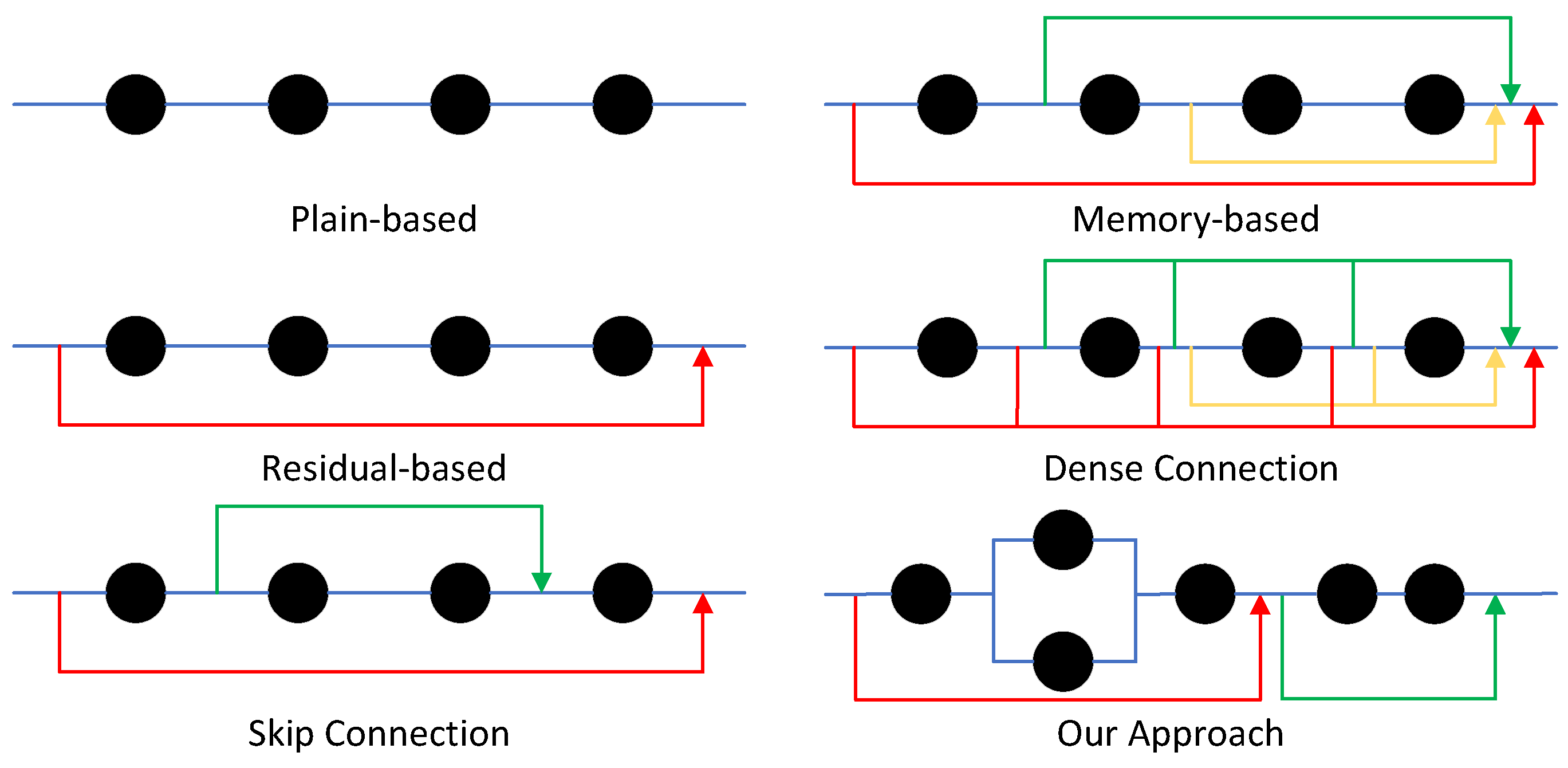

3.10. Comparisons of Topologies of Information Flow

3.11. Comparisons of the Inference Speed

3.12. Ablation Study

3.13. Trade-off between Performance and Efficiency

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Zhang, H.; Cheng, D.; Kou, Q.; Asad, M.; Jiang, H. Indicative Vision Transformer for end-to-end zero-shot sketch-based image retrieval. Adv. Eng. Inform. 2024, 60, 102398. [Google Scholar] [CrossRef]

- Zhang, H.; Cheng, D.; Jiang, H.; Liu, J.; Kou, Q. Task-like training paradigm in CLIP for zero-shot sketch-based image retrieval. Multimed. Tools Appl. 2024, 83, 57811–57828. [Google Scholar] [CrossRef]

- Jiang, H.; Asad, M.; Liu, J.; Zhang, H.; Cheng, D. Single image detail enhancement via metropolis theorem. Multimed. Tools Appl. 2024, 83, 36329–36353. [Google Scholar] [CrossRef]

- Park, S.; Choi, G.; Park, S.; Kwak, N. Dual-stage Super-resolution for edge devices. IEEE Access 2023, 11, 123798–123806. [Google Scholar] [CrossRef]

- Nguyen, D.P.; Vu, K.H.; Nguyen, D.D.; Pham, H.A. F2SRGAN: A Lightweight Approach Boosting Perceptual Quality in Single Image Super-Resolution via a Revised Fast Fourier Convolution. IEEE Access 2023, 11, 29062–29073. [Google Scholar] [CrossRef]

- Cheng, D.; Wang, Y.; Zhang, H.; Li, L.; Kou, Q.; Jiang, H. Intermediate-term memory mechanism inspired lightweight single image super resolution. Multimed. Tools Appl. 2024, 83, 76905–76934. [Google Scholar] [CrossRef]

- Cheng, D.; Yuan, H.; Qian, J.; Kou, Q.; Jiang, H. Image Super-Resolution Algorithms Based on Deep Feature Differentiation Network. J. Electron. Inf. Technol. 2024, 46, 1–10. [Google Scholar]

- Cheng, D.; Chen, J.; Kou, Q.; Nie, S.; Zhang, L. Lightweight Super-resolution Reconstruction Method Based on Hierarchical Features Fusion and Attention Mechanism for Mine Image. Chin. J. Sci. Instrum. 2022, 43, 73–84. [Google Scholar]

- Dong, C.; Loy, C.C.; He, K.; Tang, X. Image Super-Resolution Using Deep Convolutional Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 38, 295–307. [Google Scholar] [CrossRef]

- Kim, J.; Lee, J.K.; Lee, K.M. Accurate Image Super-Resolution Using Very Deep Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1646–1654. [Google Scholar]

- Lim, B.; Son, S.; Kim, H.; Nah, S.; Mu Lee, K. Enhanced Deep Residual Networks for Single Image Super-Resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Honoluu, HI, USA, 21–26 July 2017; pp. 1132–1140. [Google Scholar]

- Kim, J.; Lee, J.K.; Lee, K.M. Deeply-Recursive Convolutional Network for Image Super-Resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 1637–1645. [Google Scholar]

- Zhang, Y.; Li, K.; Li, K.; Wang, L.; Zhong, B.; Fu, Y. Image Super-Resolution Using Very Deep Residual Channel Attention Networks. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 286–301. [Google Scholar]

- Zhao, H.; Kong, X.; He, J.; Qiao, Y.; Dong, C. Efficient Image Super-Resolution Using Pixel Attention. In Proceedings of the Computer Vision—ECCV 2020 Workshops, Glasgow, UK, 23–28 August 2020; pp. 56–72. [Google Scholar]

- Chen, H.; Wang, Y.; Guo, T.; Xu, C.; Deng, Y.; Liu, Z.; Ma, S.; Xu, C.; Xu, C.; Gao, W. Pre-Trained Image Processing Transformer. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 12294–12305. [Google Scholar]

- Kou, Q.; Cheng, D.; Zhang, H.; Liu, J.; Guo, X.; Jiang, H. Single Image Super Resolution via Multi-Attention Fusion Recurrent Network. IEEE Access 2023, 11, 98653–98665. [Google Scholar] [CrossRef]

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 3–19. [Google Scholar]

- Wan, J.; Yin, H.; Liu, Z.; Chong, A.; Liu, Y. Lightweight Image Super-Resolution by Multi-Scale Aggregation. IEEE Trans. Broadcast. 2021, 67, 372–382. [Google Scholar] [CrossRef]

- Wang, Z.; Gao, G.; Li, J.; Yu, Y.; Lu, H. Lightweight Image Super-Resolution with Multi-Scale Feature Interaction Network. In Proceedings of the 2021 IEEE International Conference on Multimedia and Expo (ICME), Shenzhen, China, 5–9 July 2021; pp. 1–6. [Google Scholar]

- Lan, R.; Sun, L.; Liu, Z.; Lu, H.; Pang, C.; Luo, X. MADNet: A Fast and Lightweight Network for Single-Image Super Resolution. IEEE Trans. Cybern. 2020, 51, 1443–1453. [Google Scholar] [CrossRef] [PubMed]

- Guo, M.H.; Lu, C.Z.; Liu, Z.N.; Cheng, M.M.; Hu, S.M. Visual attention network. Comput. Vis. Media 2022, 9, 733–752. [Google Scholar] [CrossRef]

- Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient Channel Attention for Deep Convolutional Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 11531–11539. [Google Scholar]

- Bevilacqua, M.; Roumy, A.; Guillemot, C.; Alberi-Morel, M.L. Low-complexity single-image super-resolution based on nonnegative neighbor embedding. In Proceedings of the British Machine Vision Conference, Surrey, UK, 3–7 September 2012; pp. 135.1–135.10. [Google Scholar]

- Zeyde, R.; Elad, M.; Protter, M. On Single Image Scale-Up Using Sparse-Representations. In Curves and Surfaces; Springer: Berlin/Heidelberg, Germany, 2010; pp. 711–730. [Google Scholar]

- Martin, D.; Fowlkes, C.; Tal, D.; Malik, J. A database of human segmented natural images and its application to evaluating segmentation algorithms and measuring ecological statistics. In Proceedings of the Eighth IEEE International Conference on Computer Vision, Vancouver, BC, Canada, 7–14 July 2001; Volume 2, pp. 416–423. [Google Scholar]

- Huang, J.B.; Singh, A.; Ahuja, N. Single image super-resolution from transformed self-exemplars. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 5197–5206. [Google Scholar]

- Lai, W.S.; Huang, J.B.; Ahuja, N.; Yang, M.H. Deep Laplacian Pyramid Networks for Fast and Accurate Super-Resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5835–5843. [Google Scholar]

- Hui, Z.; Gao, X.; Yang, Y.; Wang, X. Lightweight Image Super-Resolution with Information Multi-distillation Network. In Proceedings of the ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; pp. 2024–2032. [Google Scholar]

- Wang, L.; Dong, X.; Wang, Y.; Ying, X.; Lin, Z.; An, W.; Guo, Y. Exploring Sparsity in Image Super-Resolution for Efficient Inference. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 4915–4924. [Google Scholar]

- Sun, L.; Pan, J.; Tang, J. Shufflemixer: An efficient convnet for image super-resolution. Adv. Neural Inf. Process. Syst. 2022, 35, 17314–17326. [Google Scholar]

- Gao, G.; Wang, Z.; Li, J.; Li, W.; Yu, Y.; Zeng, T. Lightweight bimodal network for single-image super-resolution via symmetric CNN and recursive transformer. In Proceedings of the International Joint Conferences on Artificial Intelligence Organization, Vienna, Austria, 23–29 July 2022; pp. 913–919. [Google Scholar]

- Qin, J.; Zhang, R. Lightweight single image super-resolution with attentive residual refinement network. Neurocomputing 2022, 500, 846–855. [Google Scholar] [CrossRef]

- Luo, X.; Qu, Y.; Xie, Y.; Zhang, Y.; Li, C.; Fu, Y. Lattice Network for Lightweight Image Restoration. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 4826–4842. [Google Scholar] [CrossRef]

- Qin, J.; Chen, L.; Jeon, S.; Yang, X. Progressive interaction-learning network for lightweight single-image super-resolution in industrial applications. IEEE Trans. Ind. Inform. 2023, 19, 2183–2191. [Google Scholar] [CrossRef]

- Huang, H.; Shen, L.; He, C.; Dong, W.; Liu, W. Differentiable Neural Architecture Search for Extremely Lightweight Image Super-Resolution. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 2672–2682. [Google Scholar] [CrossRef]

- Chen, Y.; Xia, R.; Yang, K.; Zou, K. MICU: Image super-resolution via multi-level information compensation and U-net. Expert Syst. Appl. 2024, 245, 123111. [Google Scholar] [CrossRef]

- Wanga, K.; Yanga, X.; Jeon, G. Hybrid attention feature refinement network for lightweight image super-resolution in metaverse immersive display. IEEE Trans. Consum. Electron. 2024, 70, 3232–3244. [Google Scholar] [CrossRef]

- Hao, F.; Wu, J.; Liang, W.; Xu, J.; Li, P. Lightweight blueprint residual network for single image super-resolution. Expert Syst. Appl. 2024, 250, 123954. [Google Scholar] [CrossRef]

- Yan, Y.; Xu, X.; Chen, W.; Peng, X. Lightweight Attended Multi-Scale Residual Network for Single Image Super-Resolution. IEEE Access 2021, 9, 52202–52212. [Google Scholar] [CrossRef]

- Lu, Z.; Li, J.; Liu, H.; Huang, C.; Zhang, L.; Zeng, T. Transformer for single image super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 457–466. [Google Scholar]

- Choi, H.; Lee, J.; Yang, J. N-gram in swin transformers for efficient lightweight image super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 2071–2081. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778. [Google Scholar]

- Mao, X.; Shen, C.; Yang, Y.B. Image restoration using very deep convolutional encoder-decoder networks with symmetric skip connections. Adv. Neural Inf. Process. Syst. 2016, 29, 2810–2818. [Google Scholar]

- Tai, Y.; Yang, J.; Liu, X.; Xu, C. Memnet: A persistent memory network for image restoration. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 4539–4547. [Google Scholar]

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708. [Google Scholar]

- Wang, X.; Yu, K.; Wu, S.; Gu, J.; Liu, Y.; Dong, C.; Change Loy, C.; Qiao, Y.; Tang, X. Esrgan: Enhanced super-resolution generative adversarial networks. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, Munich, Germany, 8–14 September 2018; pp. 63–79. [Google Scholar]

- Muqeet, A.; Hwang, J.; Yang, S.; Kang, J.; Kim, Y.; Bae, S.H. Multi-attention based ultra lightweight image super-resolution. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, Glasgow, UK, 23–28 August 2020; pp. 103–118. [Google Scholar]

| Model | Scale | FLOP | Set5 [23] | Set14 [24] | BSD100 [25] | Urban100 [26] |

|---|---|---|---|---|---|---|

| PSNR/SSIM | PSNR/SSIM | PSNR/SSIM | PSNR/SSIM | |||

| SRCNN (TPAMI 2014) [9] | 52.7 G | 36.66/0.9542 | 32.43/0.9073 | 31.34/0.8879 | 29.50/0.8953 | |

| LapSRN (TPAMI 2017) [27] | 29.9 G | 37.52/0.9590 | 33.08/0.9130 | 31.80/0.8950 | 30.41/0.9100 | |

| IMDN (ACM MM 2019) [28] | 186.7 G | 38.00/0.9604 | 33.63/0.9173 | 32.19/0.8994 | 32.17/0.9283 | |

| SMSR (CVPR 2021) [29] | 131.6 G | 38.00/0.9601 | 33.64/0.9173 | 32.17/0.8990 | 32.19/0.9284 | |

| ShuffleMixer (NeurIPS 2022) [30] | 91.0 G | 38.01/0.9606 | 33.63/0.9180 | 32.17/0.8995 | 31.89/0.9257 | |

| LBNet (IJCAI 2022) [31] | - | 38.05/0.9607 | 33.65/0.9177 | 32.15/0.8994 | 32.30/0.9291 | |

| ARRFN (NC 2022) [32] | - | 38.01/0.9603 | 33.66/0.9174 | 32.20/0.8999 | 32.37/0.9295 | |

| LatticeNet (TPAMI 2023) [33] | 169.0 G | 38.06/0.9607 | 33.70/0.9187 | 32.20/0.8999 | 32.25/0.9302 | |

| PILN (TII 2023) [34] | - | 38.08/0.9607 | 33.72/0.9181 | 32.23/0.9003 | 32.38/0.9306 | |

| DLSR (TCSVT 2023) [35] | - | 38.04/0.9606 | 33.67/0.9183 | 32.21/0.9002 | 32.26/0.9297 | |

| MICU (ESWA 2024) [36] | - | 37.93/0.9601 | 33.63/0.9170 | 32.17/0.8987 | 32.09/0.9271 | |

| HAFRN (TCE 2024) [37] | - | 38.05/0.9606 | 33.66/0.9187 | 32.21/0.8999 | 32.20/0.9289 | |

| LBRN (ESWA 2024) [38] | - | 38.08/0.9608 | 33.57/0.9173 | 32.23/0.9005 | 32.35/0.9303 | |

| Our DLKL | 174.1 G | 38.12/0.9614 | 33.71/0.9187 | 32.23/0.9004 | 32.47/0.9315 | |

| SRCNN (TPAMI 2014) [9] | 52.7 G | 32.75/0.9090 | 29.30/0.8219 | 28.41/0.7863 | 26.25/0.7989 | |

| LapSRN (TPAMI 2017) [27] | - | 33.82/0.9232 | 29.87/0.8324 | 28.82/0.7980 | 27.07/0.8281 | |

| IMDN (ACM MM 2019) [28] | 84.0 G | 34.36/0.9270 | 30.32/0.8417 | 29.09/0.8046 | 28.17/0.8519 | |

| SMSR (CVPR 2021) [29] | 67.8 G | 34.40/0.9270 | 30.33/0.8412 | 29.10/0.8050 | 28.25/0.8536 | |

| ShuffleMixer (NeurIPS 2022) [30] | 43.0 G | 34.40/0.9272 | 30.37/0.8423 | 29.12/0.8051 | 28.08/0.8498 | |

| LBNet (IJCAI 2022) [31] | - | 34.47/0.9277 | 30.38/0.8417 | 29.13/0.8061 | 28.42/0.8559 | |

| ARRFN (NC 2022) [32] | - | 34.38/0.9272 | 30.36/0.8422 | 29.09/0.8050 | 28.22/0.8533 | |

| LatticeNet (TPAMI 2023) [33] | 76.0 G | 34.40/0.9272 | 30.32/0.8416 | 29.10/0.8049 | 28.19/0.8513 | |

| PILN (TII 2023) [34] | - | 34.39/0.9269 | 30.34/0.8415 | 29.08/0.8048 | 28.09/0.8500 | |

| DLSR (TCSVT 2023) [35] | - | 34.49/0.9279 | 30.39/0.8428 | 29.13/0.8061 | 28.26/0.8548 | |

| MICU (ESWA 2024) [36] | - | 34.38/0.9274 | 30.35/0.8419 | 29.10/0.8048 | 28.14/0.8518 | |

| HAFRN (TCE 2024) [37] | - | 34.45/0.9276 | 30.40/0.8433 | 29.12/0.8058 | 28.16/0.8528 | |

| LBRN (ESWA 2024) [38] | - | 34.43/0.9276 | 30.39/0.8429 | 29.13/0.8059 | 28.29/0.8545 | |

| Our DLKL | 101.3 G | 34.57/0.9289 | 30.45/0.8438 | 29.16/0.8065 | 28.37/0.8569 | |

| SRCNN (TPAMI 2014) [9] | 52.7 G | 30.48/0.8628 | 27.50/0.7503 | 26.90/0.7110 | 24.53/0.7212 | |

| LapSRN (TPAMI 2017) [27] | 149.4 G | 31.54/0.8850 | 28.19/0.7720 | 27.32/0.7280 | 25.21/0.7560 | |

| IMDN (ACM MM 2019) [28] | 48.0 G | 32.21/0.8948 | 28.58/0.7811 | 27.56/0.7353 | 26.04/0.7838 | |

| SMSR (CVPR 2021) [29] | 41.6 G | 32.12/0.8932 | 28.55/0.7808 | 27.55/0.7351 | 26.11/0.7868 | |

| ShuffleMixer (NeurIPS 2022) [30] | 28.0 G | 32.21/0.8953 | 28.66/0.7827 | 27.61/0.7366 | 26.08/0.7835 | |

| LBNet (IJCAI 2022) [31] | - | 32.29/0.8960 | 28.68/0.7832 | 27.62/0.7382 | 26.27/0.7906 | |

| ARRFN (NC 2022) [32] | - | 32.22/0.8952 | 28.60/0.7817 | 27.57/0.7355 | 26.09/0.7858 | |

| LatticeNet (TPAMI 2023) [33] | 43.0 G | 32.18/0.8943 | 28.61/0.7812 | 27.57/0.7355 | 26.14/0.7844 | |

| PILN (TII 2023) [34] | - | 32.22/0.8949 | 28.62/0.7813 | 27.59/0.7365 | 26.19/0.7878 | |

| DLSR (TCSVT 2023) [35] | 20.41 G | 32.33/0.8963 | 28.68/0.7832 | 27.61/0.7374 | 26.19/0.7892 | |

| MICU (ESWA 2024) [36] | - | 32.21/0.8945 | 28.65/0.7820 | 27.57/0.7359 | 26.15/0.7872 | |

| HAFRN (TCE 2024) [37] | - | 32.24/0.8953 | 28.60/0.7816 | 27.58/0.7365 | 26.02/0.7849 | |

| LBRN (ESWA 2024) [38] | - | 32.33/0.8964 | 28.62/0.7826 | 27.60/0.7377 | 26.17/0.7882 | |

| Our DLKL | 66.1 G | 32.39/0.8976 | 28.71/0.7853 | 27.65/0.7386 | 26.34/0.7937 |

| Dataset | Scale | Top 5 Algorithms |

|---|---|---|

| Set5 [23] | DLKL > LBNet > LatticeNet> LAMRN > ARRFN | |

| Set14 [24] | LatticeNet > DLKL > LAMRN > IMDN > LBNet | |

| BSD100 [25] | DLKL > LAMRN > ARRFN > LatticeNet> LBNet | |

| Urban100 [26] | DLKL > LBNet > ARRFN> LAMRN > LatticeNet |

| Transformer-Based Methods | Scale | Para | Set5 [23] |

|---|---|---|---|

| LBNet (IJCAI 2022) [31] | 742 K | 32.29/0.8960 | |

| ESRT (CVPR 2022) [40] | 751 K | 32.19/0.8947 | |

| NGswin (CVPR 2023) [41] | 1019 K | 32.33/0.8963 | |

| DLKL | 864 K | 32.39/0.8976 |

| Method | Speed | Method | Speed | Method | Speed | Method | Speed |

|---|---|---|---|---|---|---|---|

| SRCNN [9] | 297 | LapSRN [27] | 189 | EDSR-BASE [11] | 315 | IMDN [28] | 38 |

| SMSR [29] | 309 | ARRFN [32] | 234 | LBNet [31] | 679 | DLKL | 142 |

| LKA 3-5-1 | LKA 5-7-1 | LKA 7-9-1 | Para | FLOP | Set5 [23] PSNR/SSIM | Set14 [24] PSNR/SSIM | BSD100 [25] PSNR/SSIM | Urban100 [26] PSNR/SSIM |

|---|---|---|---|---|---|---|---|---|

| ✓ | × | × | 767.96 K | 113.69 G | 37.93/0.9587 | 33.43/0.9152 | 32.08/0.8975 | 31.74/0.9237 |

| × | ✓ | × | 778.20 K | 115.20 G | 37.94/0.9592 | 33.46/0.9159 | 32.10/0.8978 | 31.74/0.9238 |

| × | × | ✓ | 791.01 K | 117.31 G | 37.93/0.9584 | 33.44/0.9154 | 32.09/0.8977 | 31.73/0.9234 |

| ✓ | ✓ | × | 837.08 K | 123.73 G | 37.97/0.9598 | 33.50/0.9168 | 32.12/0.8983 | 31.80/0.9243 |

| × | ✓ | ✓ | 864.25 K | 127.35 G | 37.98/0.9601 | 33.51/0.9171 | 32.11/0.8981 | 31.78/0.9238 |

| ✓ | × | ✓ | 853.47 K | 125.84 G | 37.96/0.9601 | 33.50/0.9166 | 32.10/0.8976 | 31.77/0.9235 |

| ✓ | ✓ | ✓ | 904.38 K | 133.47 G | 37.98/0.9604 | 33.52/0.9176 | 32.11/0.8984 | 31.80/0.9246 |

| MAFEn | Chan | Para | FLOP | Set5 [23] PSNR/SSIM | Set14 [24] PSNR/SSIM | BSD100 [25] PSNR/SSIM | Urban100 [26] PSNR/SSIM |

|---|---|---|---|---|---|---|---|

| 4 | 64 | 0.84 M | 123.78 G | 38.06/0.9603 | 33.61/0.9160 | 32.18/0.8994 | 32.15/0.9281 |

| 4 | 96 | 1.86 M | 274.45 G | 38.13/0.9617 | 33.71/0.9186 | 32.24/0.9006 | 32.40/0.9304 |

| 6 | 64 | 1.18 M | 174.1 G | 38.12/0.9614 | 33.71/0.9187 | 32.23/0.9004 | 32.47/0.9315 |

| 6 | 96 | 2.62 M | 386.26 G | 38.16/0.9621 | 33.83/0.9197 | 32.29/0.9011 | 32.60/0.9327 |

| LKA | ECA | Para | FLOP | Set5 [23] PSNR/SSIM | Set14 [24] PSNR/SSIM | BSD100 [25] PSNR/SSIM | Urban100 [26] PSNR/SSIM |

|---|---|---|---|---|---|---|---|

| × | × | 743.92 K | 109.99 G | 37.81/0.9573 | 33.34/0.9142 | 32.03/0.8969 | 31.62/0.9213 |

| ✓ | × | 837.08 K | 123.73 G | 37.97/0.9598 | 33.50/0.9168 | 32.12/0.8983 | 31.80/0.9243 |

| × | ✓ | 743.93 K | 110.03 G | 37.84/0.9576 | 33.40/0.9149 | 32.06/0.8972 | 31.72/0.9225 |

| ✓ | ✓ | 837.09 K | 123.77 G | 37.99/0.9602 | 33.51/0.9169 | 32.15/0.8987 | 31.91/0.9255 |

| Block | Number | Chan | Para | FLOP | Set5 [23] PSNR/SSIM | Set14 [24] PSNR/SSIM | BSD100 [25] PSNR/SSIM | Urban100 [26] PSNR/SSIM |

|---|---|---|---|---|---|---|---|---|

| Resblock [42] | 12 | 64 | 1.04 M | 153.48 G | 37.74/0.9587 | 33.25/0.9139 | 31.95/0.8963 | 31.07/0.9162 |

| RRDB [46] | 4 | 64 | 0.89 M | 131.73 G | 37.87/0.9598 | 33.38/0.9155 | 32.05/0.8972 | 31.48/0.9193 |

| RCAB [13] | 4 | 64 | 0.74 M | 66.54 G | 37.77/0.9591 | 33.21/0.9132 | 31.92/0.8960 | 31.09/0.9165 |

| MAB [47] | 14 | 90 | 1.08 M | 158.50 G | 37.94/0.9601 | 33.48/0.9162 | 32.15/0.8986 | 31.79/0.9232 |

| DLKL | 4 | 64 | 0.83 M | 123.77 G | 37.99/0.9604 | 33.51/0.9165 | 32.15/0.8987 | 31.91/0.9254 |

| Dual Path | Set5 [23] PSNR/SSIM | Set14 [24] PSNR/SSIM | BSD100 [25] PSNR/SSIM | Urban100 [26] PSNR/SSIM |

|---|---|---|---|---|

| × | 37.99/0.9602 | 33.51/0.9169 | 32.15/0.8987 | 31.91/0.9255 |

| ✓ | 38.00/0.9605 | 33.55/0.9175 | 32.16/0.8993 | 31.95/0.9264 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Su, Z.; Sun, M.; Jiang, H.; Ma, X.; Zhang, R.; Lv, C.; Kou, Q.; Cheng, D. Dual-Path Large Kernel Learning and Its Applications in Single-Image Super-Resolution. Sensors 2024, 24, 6174. https://doi.org/10.3390/s24196174

Su Z, Sun M, Jiang H, Ma X, Zhang R, Lv C, Kou Q, Cheng D. Dual-Path Large Kernel Learning and Its Applications in Single-Image Super-Resolution. Sensors. 2024; 24(19):6174. https://doi.org/10.3390/s24196174

Chicago/Turabian StyleSu, Zhen, Mang Sun, He Jiang, Xiang Ma, Rui Zhang, Chen Lv, Qiqi Kou, and Deqiang Cheng. 2024. "Dual-Path Large Kernel Learning and Its Applications in Single-Image Super-Resolution" Sensors 24, no. 19: 6174. https://doi.org/10.3390/s24196174

APA StyleSu, Z., Sun, M., Jiang, H., Ma, X., Zhang, R., Lv, C., Kou, Q., & Cheng, D. (2024). Dual-Path Large Kernel Learning and Its Applications in Single-Image Super-Resolution. Sensors, 24(19), 6174. https://doi.org/10.3390/s24196174