SiamDCFF: Dynamic Cascade Feature Fusion for Vision Tracking

Abstract

1. Introduction

- We introduce a dynamic attention mechanism and propose a local feature guidance module. Exploiting the controllability of the dynamic attention structure, we design comparative experiments which verify that establishing global dependencies for the features output by depth-wise cross-correlation can significantly improve the performance of fully convolutional Siamese trackers.

- Based on the results of these comparative experiments, we present a dynamic cascade feature fusion module. This module cascades a depth-wise cross-correlation module, a local feature guidance module, and dynamic attention modules to achieve multi-level feature integration. Building on it, we propose a tracking model named SiamDCFF, which models the dynamic process of local-to-global feature fusion.
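Both contributions build on depth-wise cross-correlation, so a minimal sketch of that operation may help. It is written in plain Python and is not the authors' implementation: the function name `depthwise_xcorr`, the nested-list feature layout `[channel][row][col]`, and the toy values are all assumptions made for illustration. Each template channel is slid over the matching search channel, with no padding and stride 1.

```python
from typing import List

# Assumed layout for illustration: features indexed as [channel][row][col].
Feature = List[List[List[float]]]


def depthwise_xcorr(search: Feature, template: Feature) -> Feature:
    """Correlate each template channel with the matching search channel."""
    if len(search) != len(template):
        raise ValueError("search and template need the same channel count")
    out: Feature = []
    for s_ch, t_ch in zip(search, template):
        sh, sw = len(s_ch), len(s_ch[0])
        th, tw = len(t_ch), len(t_ch[0])
        response = []
        for i in range(sh - th + 1):
            row = []
            for j in range(sw - tw + 1):
                # Dot product between the template and the window at (i, j).
                acc = 0.0
                for u in range(th):
                    for v in range(tw):
                        acc += s_ch[i + u][j + v] * t_ch[u][v]
                row.append(acc)
            response.append(row)
        out.append(response)
    return out


# Toy example: 2 channels, 3x3 search features, 2x2 template features.
search = [
    [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]],
    [[1.0, 0.0, 1.0], [0.0, 1.0, 0.0], [1.0, 0.0, 1.0]],
]
template = [
    [[1.0, 0.0], [0.0, 1.0]],
    [[1.0, 1.0], [1.0, 1.0]],
]
response = depthwise_xcorr(search, template)
print(response)  # [[[6.0, 8.0], [12.0, 14.0]], [[2.0, 2.0], [2.0, 2.0]]]
```

Each response value depends only on a template-sized window of the search features and on a single channel. This locality is what the global dependencies described in the first contribution are meant to complement.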

2. Related Works

2.1. Siamese Network-Based Visual Trackers

2.2. Vision Transformer

3. Method

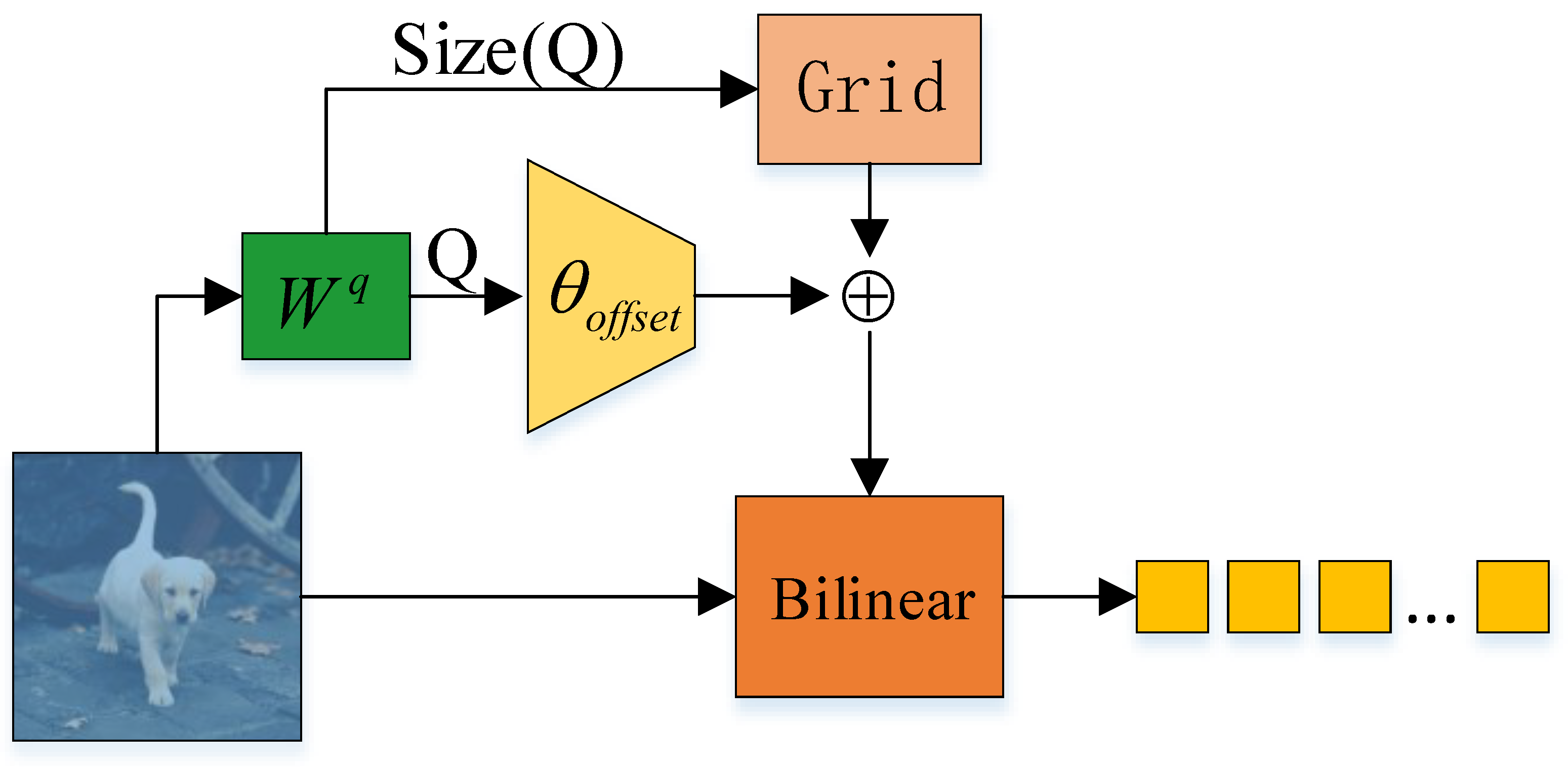

3.1. Dynamic Attention Module

3.2. Location for Adding Global Information

3.3. Local Feature Guidance Module

3.4. Dynamic Cascade Feature Fusion Module

3.5. Detection Subnetwork

4. Experiments

4.1. Implementation Details

4.2. Dataset Introduction

4.3. Comparative Experiments

4.4. Evaluation on Public Datasets

4.5. Visualization of Tracking Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Li, B.; Wu, W.; Wang, Q.; Zhang, F.; Xing, J.; Yan, J. Siamrpn++: Evolution of siamese visual tracking with very deep networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 4282–4291.

2. Chen, Z.; Zhong, B.; Li, G.; Zhang, S.; Ji, R. Siamese box adaptive network for visual tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 6668–6677.

3. Guo, D.; Wang, J.; Cui, Y.; Wang, Z.; Chen, S. SiamCAR: Siamese fully convolutional classification and regression for visual tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 6269–6277.

4. Bertinetto, L.; Valmadre, J.; Henriques, J.F.; Vedaldi, A.; Torr, P.H.S. Fully-convolutional siamese networks for object tracking. In Proceedings of the Computer Vision–ECCV 2016 Workshops, Amsterdam, The Netherlands, 8–10 and 15–16 October 2016; Proceedings, Part II 14. Springer: Cham, Switzerland, 2016; pp. 850–865.

5. Li, B.; Yan, J.; Wu, W.; Zhu, Z.; Hu, X. High performance visual tracking with siamese region proposal network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 8971–8980.

6. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems 25 (NIPS 2012): 26th Annual Conference on Neural Information Processing Systems 2012, Lake Tahoe, NV, USA, 3–6 December 2012; Volume 25.

7. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

8. Chopra, S.; Hadsell, R.; LeCun, Y. Learning a similarity metric discriminatively, with application to face verification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), San Diego, CA, USA, 20–25 June 2005; Volume 1, pp. 539–546.

9. Ye, B.; Chang, H.; Ma, B.; Shan, S.; Chen, X. Joint feature learning and relation modeling for tracking: A one-stream framework. In Proceedings of the European Conference on Computer Vision (ECCV), Tel Aviv, Israel, 23–27 October 2022; pp. 341–357.

10. Cui, Y.; Jiang, C.; Wang, L.; Wu, G. Mixformer: End-to-end tracking with iterative mixed attention. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 13608–13618.

11. Xia, Z.; Pan, X.; Song, S.; Li, L.E.; Huang, G. Vision transformer with deformable attention. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; pp. 4794–4803.

12. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

13. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the 31st International Conference on Neural Information Processing Systems (NIPS), Long Beach, CA, USA, 4–9 December 2017; Curran Associates: Red Hook, NY, USA, 2017; pp. 6000–6010.

14. Guo, D.; Shao, Y.; Cui, Y.; Wang, Z.; Zhang, L.; Shen, C. Graph attention tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 9543–9552.

15. Yu, Y.; Xiong, Y.; Huang, W.; Scott, M.R. Deformable siamese attention networks for visual object tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 6728–6737.

16. Wang, N.; Zhou, W.; Wang, J.; Li, H. Transformer meets tracker: Exploiting temporal context for robust visual tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 1571–1580.

17. Lin, T.-Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft coco: Common objects in context. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Proceedings, Part V 13. Springer: Cham, Switzerland, 2014; pp. 740–755.

18. Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M. ImageNet large scale visual recognition challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

19. Real, E.; Shlens, J.; Mazzocchi, S.; Pan, X.; Vanhoucke, V. Youtube-boundingboxes: A large high-precision human-annotated data set for object detection in video. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5296–5305.

20. Mueller, M.; Smith, N.; Ghanem, B. A benchmark and simulator for uav tracking. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part I 14. Springer: Cham, Switzerland, 2016; pp. 445–461.

21. Huang, L.; Zhao, X.; Huang, K. GOT-10k: A large high-diversity benchmark for generic object tracking in the wild. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 1562–1577.

22. Wu, Y.; Lim, J.; Yang, M.H. Object Tracking Benchmark. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1834–1848.

23. Zhao, M.; Okada, K.; Inaba, M. Trtr: Visual tracking with transformer. arXiv 2021, arXiv:2105.03817.

24. Zhang, Z.; Peng, H.; Fu, J.; Li, B.; Hu, W. Ocean: Object-aware anchor-free tracking. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part XXI 16. Springer: Cham, Switzerland, 2020; pp. 771–787.

25. Zhang, Z.; Peng, H. Deeper and wider siamese networks for real-time visual tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 4591–4600.

26. Cao, Z.; Fu, C.; Ye, J.; Li, B.; Li, Y. HiFT: Hierarchical feature transformer for aerial tracking. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; pp. 15457–15466.

27. Du, F.; Liu, P.; Zhao, W.; Tang, X. Correlation-guided attention for corner detection based visual tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 6836–6845.

28. Bhat, G.; Danelljan, M.; Gool, L.V.; Timofte, R. Learning discriminative model prediction for tracking. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6182–6191.

29. Zheng, L.; Tang, M.; Wang, J.; Lu, H. Learning features with differentiable closed-form solver for tracking. arXiv 2019, arXiv:1906.10414.

30. Nam, G.; Oh, S.W.; Lee, J.Y.; Kim, S.J. DMV: Visual object tracking via part-level dense memory and voting-based retrieval. arXiv 2020, arXiv:2003.09171.

31. Zhu, Z.; Wang, Q.; Li, B.; Wu, W.; Yan, J.; Hu, W. Distractor-aware siamese networks for visual object tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 101–117.

32. Wang, G.; Luo, C.; Xiong, Z.; Zeng, W. SPM-Tracker: Series-parallel matching for real-time visual object tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 3643–3652.

33. Xu, Y.; Wang, Z.; Li, Z.; Yuan, Y.; Yu, G. Siamfc++: Towards robust and accurate visual tracking with target estimation guidelines. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 12549–12556.

34. Danelljan, M.; Bhat, G.; Khan, F.S.; Felsberg, M. Atom: Accurate tracking by overlap maximization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 4660–4669.

35. Hu, S.; Zhou, S.; Lu, J.; Yu, H. Flexible Dual-Branch Siamese Network: Learning Location Quality Estimation and Regression Distribution for Visual Tracking. IEEE Trans. Comput. Soc. Syst. 2023, 11, 1451–1459.

36. Gao, J.; Zhang, T.; Xu, C. Graph convolutional tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 4649–4659.

37. Danelljan, M.; Bhat, G.; Khan, F.S.; Felsberg, M. Eco: Efficient convolution operators for tracking. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6638–6646.

38. Danelljan, M.; Gool, L.V.; Timofte, R. Probabilistic regression for visual tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 7183–7192.

| Tracker | Success Rate ↑ | Norm.P ↑ | Precision ↑ |
|---|---|---|---|
| SiamCAR_paper [3] | 0.614 | 0.760 | - |
| SiamCAR_Ours | 0.607 | 0.753 | 0.788 |
| SiamCAR+L | 0.621 | 0.773 | 0.811 |
| SiamCAR+L+D | 0.623 | 0.773 | 0.818 |
| SiamCAR+L+1D | 0.633 | 0.787 | 0.831 |
| SiamCAR+L+3D | 0.623 | 0.779 | 0.815 |
| SiamCAR+L+6D | 0.610 | 0.759 | 0.795 |

| Tracker | AO ↑ | SR0.5 ↑ | SR0.75 ↑ |
|---|---|---|---|
| SiamCAR_paper [3] | 0.569 | 0.670 | 0.415 |
| SiamCAR_Ours | 0.547 | 0.635 | 0.426 |
| SiamCAR+L | 0.541 | 0.608 | 0.409 |
| SiamCAR+L+D | 0.584 | 0.687 | 0.467 |
| SiamCAR+L+1D | 0.586 | 0.688 | 0.455 |
| SiamCAR+L+3D | 0.589 | 0.697 | 0.463 |
| SiamCAR+L+6D | 0.552 | 0.640 | 0.434 |
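The AO and SR columns above are not defined in the surviving text. The sketch below assumes the usual GOT-10k-style reading: AO is the mean intersection-over-union (IoU) between predicted and ground-truth boxes, and SR at threshold t is the fraction of frames whose IoU exceeds t. The box layout `(x, y, width, height)`, the helper names, and the toy boxes are illustrative assumptions, and the sketch averages over the frames of a single sequence only.

```python
from typing import List, Tuple

# Assumed box layout for illustration: (x, y, width, height).
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    inter_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    inter_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = inter_w * inter_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def average_overlap(overlaps: List[float]) -> float:
    """AO: mean IoU over all frames."""
    return sum(overlaps) / len(overlaps)


def success_rate(overlaps: List[float], threshold: float) -> float:
    """SR_t: fraction of frames whose IoU exceeds the threshold."""
    return sum(o > threshold for o in overlaps) / len(overlaps)


# Toy sequence of four frames (made-up boxes, not data from the paper).
predicted = [(0, 0, 10, 10), (0, 0, 10, 10), (0, 0, 10, 10), (20, 20, 10, 10)]
ground_truth = [(0, 0, 10, 10), (0, 2, 10, 10), (5, 0, 10, 10), (0, 0, 10, 10)]
overlaps = [iou(p, g) for p, g in zip(predicted, ground_truth)]

ao = average_overlap(overlaps)
sr50 = success_rate(overlaps, 0.5)
sr75 = success_rate(overlaps, 0.75)
print(f"AO={ao:.3f} SR0.5={sr50:.3f} SR0.75={sr75:.3f}")
```

Under this reading, SR0.75 is the stricter of the two success rates, so it rewards tight boxes, while AO summarizes overlap across all frames, including failures.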

| Tracker | Success ↑ | Norm.P ↑ | Precision ↑ |
|---|---|---|---|
| SiamDW [25] | 0.536 | 0.728 | 0.776 |
| SiamRPN [5] | 0.581 | 0.737 | 0.772 |
| HiFT [26] | 0.589 | 0.758 | 0.787 |
| TrTr-offline [23] | 0.594 | - | - |
| SiamRPN++ [1] | 0.611 | 0.765 | 0.804 |
| SiamCAR [3] | 0.614 | 0.760 | - |
| CGACD [27] | 0.620 | 0.782 | 0.815 |
| Ocean [24] | 0.621 | 0.788 | 0.823 |
| SiamBAN [2] | 0.631 | - | 0.833 |
| SiamGAT [14] | 0.640 | 0.806 | 0.835 |
| SiamAttn [15] | 0.650 | - | 0.845 |
| TrTr-online [23] | 0.652 | - | - |
| Ours | 0.645 | 0.800 | 0.845 |

| Tracker | AO ↑ | SR0.5 ↑ | SR0.75 ↑ | FPS |
|---|---|---|---|---|
| DaSiamRPN [31] | 0.444 | 0.536 | 0.202 | 134.40 |
| CGACD [27] | 0.511 | 0.612 | 0.323 | 37.73 |
| SPM [32] | 0.513 | 0.593 | 0.359 | 72.30 |
| SiamFC++ [33] | 0.526 | 0.625 | 0.347 | 186.29 |
| ATOM [34] | 0.556 | 0.634 | 0.402 | 20.71 |
| SiamCAR [3] | 0.569 | 0.670 | 0.415 | 17.21 |
| SiamFDB [35] | 0.586 | 0.658 | 0.434 | 36.52 |
| DMV50 [30] | 0.601 | 0.695 | 0.492 | 31.55 |
| DCFST [29] | 0.610 | 0.716 | 0.463 | 50.65 |
| DiMP50 [28] | 0.611 | 0.717 | 0.492 | 34.05 |
| Ours | 0.610 | 0.718 | 0.494 | 21.76 |

| Tracker | Precision ↑ | Success ↑ |
|---|---|---|
| GCT [36] | 0.853 | 0.647 |
| ECO-HC [37] | 0.856 | 0.643 |
| DaSiamRPN [31] | 0.880 | 0.658 |
| TrTr-online [23] | 0.901 | 0.691 |
| Ocean-offline [24] | 0.902 | 0.673 |
| PrDiMP [38] | - | 0.696 |
| SiamCAR [3] | 0.910 | 0.697 |
| SiamBAN [2] | 0.910 | 0.696 |
| SiamRPN++ [1] | 0.915 | 0.696 |
| Ocean-online [24] | 0.920 | 0.684 |
| TrTr-online [23] | 0.933 | 0.715 |
| Ours | 0.916 | 0.704 |
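The Precision and Success columns above are likewise not defined in the surviving text. The sketch below assumes the common OTB-style convention: precision is the fraction of frames whose center location error is at most 20 pixels, and success is the area under the success plot, approximated as the mean success rate over 21 evenly spaced IoU thresholds in [0, 1]. The function names, the 20-pixel and 21-step defaults, and the toy values are assumptions, not details taken from the paper.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # (x, y) box center, an assumed layout


def center_error(pred: Point, gt: Point) -> float:
    """Euclidean distance between predicted and ground-truth centers."""
    return math.hypot(pred[0] - gt[0], pred[1] - gt[1])


def precision_at(errors: List[float], pixels: float = 20.0) -> float:
    """Fraction of frames whose center error is within `pixels`."""
    return sum(e <= pixels for e in errors) / len(errors)


def success_auc(overlaps: List[float], steps: int = 21) -> float:
    """Mean success rate over `steps` IoU thresholds spaced evenly in [0, 1]."""
    thresholds = [k / (steps - 1) for k in range(steps)]
    curve = [sum(o > t for o in overlaps) / len(overlaps) for t in thresholds]
    return sum(curve) / len(curve)


# Toy sequence of four frames (made-up values, not data from the paper).
pred_centers = [(0.0, 0.0), (0.0, 0.0), (0.0, 0.0), (0.0, 0.0)]
gt_centers = [(3.0, 4.0), (0.0, 20.0), (30.0, 40.0), (6.0, 8.0)]
errors = [center_error(p, g) for p, g in zip(pred_centers, gt_centers)]
overlaps = [1.0, 0.5, 0.0, 0.5]

precision = precision_at(errors)
success = success_auc(overlaps)
print(f"precision={precision:.3f} success={success:.3f}")
```

Precision depends only on box centers, so a tracker can score well on it while estimating scale poorly. The success score is overlap-based and penalizes such scale errors.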


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lu, J.; Wu, N.; Hu, S. SiamDCFF: Dynamic Cascade Feature Fusion for Vision Tracking. Sensors 2024, 24, 4545. https://doi.org/10.3390/s24144545