Abstract

The bird’s-eye view (BEV) method, a vision-centric, representation-based perception approach, is essential and promising for future autonomous vehicle perception. It is fusion-friendly, intuitive, and end-to-end optimizable, and it is cheaper than LiDAR. However, the performance of existing BEV methods deteriorates when a tire blows out. These methods rely heavily on accurate camera calibration, which is invalidated by the noisy camera parameters that arise during a blow-out. It is therefore extremely unsafe to use existing BEV methods in a tire blow-out situation. In this paper, we propose a geometry-guided auto-resizable kernel transformer (GARKT) method designed specifically for vehicles with a tire blow-out. Specifically, we establish a camera deviation model for vehicles with a tire blow-out. We then use geometric priors to obtain prior positions in the perspective view and cover them with auto-resizable kernels. The resizable perception areas are encoded and flattened to generate the BEV representation. GARKT achieves a nuScenes detection score (NDS) of 0.439 on a newly created blow-out dataset based on nuScenes, and still reaches 0.431 when the tire is completely flat, which is much more robust than other transformer-based BEV methods. Moreover, GARKT runs in almost real time, at about 20.5 fps on one GPU.

1. Introduction

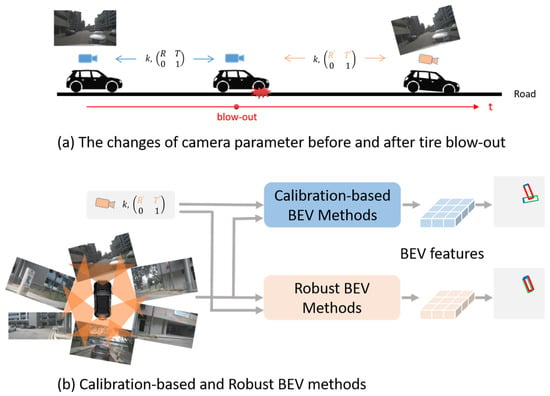

Three-dimensional object detection in BEV is one of the basic functions that allow autonomous vehicles to understand the physical world. LiDAR-based methods [1,2,3] provide accurate 3D information from point clouds but are expensive. Vision-centric methods [4,5,6], which use only multi-view cameras as input, are low-cost and easy to deploy, and have therefore attracted considerable attention from both the research and industrial communities. Nevertheless, recovering 3D information in BEV from 2D perspective images is an ill-posed problem. According to how the view transformation is performed, existing vision-centric methods can be roughly classified into two main categories: geometry-based methods and learning-based methods [7]. Most existing methods do not consider the conditions imposed by a tire blow-out. As a highly hazardous safety event, a tire blow-out not only makes the vehicle difficult to handle but also introduces a large amount of noise into 3D object detection. The main challenge is that the original calibration parameters become invalid in a way that cannot be measured, which directly degrades the performance of existing methods, as shown in Figure 1a. Before the blow-out, the camera parameters remain almost unchanged; after it, they deviate significantly from the calibrated values.

Figure 1.

Schematic diagram of tire blow-out and our proposed solution.

Geometry-based methods perform the view transformation based on physical principles (e.g., camera extrinsics and intrinsics). The most classic one is the homography-based method, which uses a homography matrix to transform perspective images to BEV under the strict assumption of flat ground [7]. Similarly, depth-based methods lift 2D information into 3D space through explicit or implicit depth estimation. In general, geometry-based methods rely entirely on camera parameters, so they are not robust to a tire blow-out. Leveraging the powerful representation capabilities of neural networks, learning-based methods are becoming increasingly popular, and their main representative is the transformer-based method. Transformer-based methods learn the view transformation directly through the attention mechanism, which follows a top-down paradigm. Two types of techniques are frequently used in transformer-based methods: point-wise transformation and global transformation. Point-wise transformation first uses the camera parameters to compute, for each BEV grid cell, the corresponding position on the perspective images, and then uses the extracted 2D features to form features in BEV space. Its main drawback is its heavy reliance on camera parameters, which makes it unable to cope with a tire blow-out. In contrast, global transformation is completely decoupled from camera parameters, because each BEV grid cell can interact with pixels from all images. Although this independence from camera parameters is an advantage, the computational cost of global transformation increases linearly with image size, which easily leads to low efficiency.
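To make the flat-ground assumption concrete, here is a minimal sketch (not taken from any cited method) of the classic ground-plane homography H = K [r1 r2 t], which maps a BEV point (X, Y, 0) to a pixel. The camera intrinsics, the 1.5 m mounting height, and the 0.5° pitch error are hypothetical illustrative values, not nuScenes calibration.

```python
import math

def matmul(a, b):
    """Multiply matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def matvec(a, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a[i][j] * v[j] for j in range(len(v))) for i in range(len(a))]

def rot_x(theta):
    """Rotation about the camera x-axis (a pitch error) by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]

def ground_homography(K, R, t):
    """H = K [r1 r2 t]: valid only for world points on the plane Z = 0."""
    Rt = [[R[i][0], R[i][1], t[i]] for i in range(3)]
    return matmul(K, Rt)

def project_ground_point(H, X, Y):
    """Map a BEV ground point (X, Y, 0) to pixel coordinates (u, v)."""
    u, v, w = matvec(H, [X, Y, 1.0])
    return u / w, v / w

# Hypothetical camera: world x right, y forward, z up; camera x right, y down,
# z forward; mounted 1.5 m above flat ground and looking straight ahead.
K = [[1000.0, 0.0, 800.0], [0.0, 1000.0, 450.0], [0.0, 0.0, 1.0]]
R = [[1.0, 0.0, 0.0], [0.0, 0.0, -1.0], [0.0, 1.0, 0.0]]
t = [0.0, 1.5, 0.0]

u0, v0 = project_ground_point(ground_homography(K, R, t), 0.0, 10.0)

# Hypothetical 0.5 degree pitch error: the camera rotates about its own centre,
# so both the rotation and the translation of the extrinsics are rotated.
dR = rot_x(math.radians(0.5))
u1, v1 = project_ground_point(ground_homography(K, matmul(dR, R), matvec(dR, t)), 0.0, 10.0)
print(f"calibrated: ({u0:.1f}, {v0:.1f})  perturbed: ({u1:.1f}, {v1:.1f})")
```

With these numbers, the 0.5° error moves the projection of a ground point 10 m ahead by roughly 9 pixels vertically, so a method that keeps using the calibrated homography samples features away from the true location once a blow-out tilts the camera.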

To solve the BEV perception problem for vehicles with a tire blow-out, we propose GARKT, a method that is robust to noise in the camera parameters, as shown in Figure 1b. Unlike calibration-based methods, our robust BEV method can handle noisy camera parameters. In the perception results, red rectangles represent the ground truth, green rectangles represent the predictions, and blue lines indicate the heading of each vehicle. Based on the characteristics of camera deviation in a blow-out vehicle, we first project the BEV positions to 2D positions on the multi-view feature maps using the imprecise camera parameters. Then, we cover these prior 2D positions with kernels whose sizes adapt automatically to the camera deviation, and the BEV representation is generated as the BEV queries interact with the flattened kernel features.

Our contributions are summarized as follows:

- Based on the vibration characteristics of vehicles with tire blow-out, we establish noise models for cameras at different mounting positions to simulate the camera deviation in real scenarios.

- A geometry-guided auto-resizable kernel transformer method, namely GARKT, is proposed to address the perception problem in a tire blow-out situation in a robust and efficient way.

- Experimental results demonstrate that GARKT can handle the tire blow-out situation and achieves acceptable 3D object detection performance, which greatly enhances driving safety.

2. Related Work

The 3D object detection technology uses sensors such as LiDAR and cameras to perceive the environment around the vehicle, identify obstacles, vehicles, pedestrians, and other objects that appear during driving, and measure their distances and speeds. In this paper, we focus on camera-only 3D detection methods.

2.1. Camera-Based 3D Perception

Camera-based 3D object detection comprises mono-view and multi-view detection [8]. In the monocular setting, object detection methods fall into three categories [9]: direct, depth-based, and grid-based methods. An early 3D detection method is Mono3D [10], which first directly samples many candidate 3D bounding boxes and then selects among them by exploiting multiple features such as semantics and shape. A standard approach for predicting 3D objects is to lift the bounding boxes produced by 2D detectors into 3D [11]. Another approach with good performance is direct 3D key-point detection, as in SMOKE [12] and FCOS3D [13]. Depth-based methods use depth pre-training to improve performance [14]. BEV grid-based methods are becoming mainstream in unified autonomous driving pipelines, even for the mono-view setting. Roddick et al. [15] were the first to transform image features into an orthographic BEV 3D space. CaDDN [9] builds voxel features and then collapses them into BEV features that can be used for object detection.

In multi-view 3D detection, one obvious approach is simply to fuse the results of several independent monocular detectors through post-processing. However, post-processing is suboptimal and does not allow end-to-end training [16]. DETR3D [17] was the first work to fuse multi-view image features with a transformer model at an early stage. According to Qian et al. [8], BEV perception performs better than 3D object detection in the perspective view. BEV features have shown great potential for various perception tasks because of their impressive and unified 3D perception capability [18]. Consequently, more and more researchers have tried to generate BEV features from multi-camera features. BEV methods fall into two categories, 2D-to-3D and 3D-to-2D [19]. The 2D-to-3D methods [20,21,22] perform explicit depth estimation, an idea that originated in LSS [23]. Depth estimation depends heavily on accurate camera extrinsic parameters, which can be disturbed when a tire blows out. Therefore, only transformer-based 3D-to-2D approaches are considered in this paper. Typical 3D-to-2D methods learn BEV features from multiple image features by incorporating temporal and spatial attention [18,24,25,26].

2.2. Camera Calibration

Many of the aforementioned 3D-to-2D methods still use camera extrinsic parameters when projecting 3D points onto the 2D image plane. BEVFormer [18] relies on camera intrinsics and extrinsics to obtain the reference points in the 2D views. It builds spatial cross-attention on deformable attention [27] and uses temporal information to improve robustness to extrinsic noise. PolarFormer [24] constructs a polar alignment module that transforms polar rays from multiple camera views into a shared world coordinate system, taking camera intrinsics and extrinsics as inputs. PETRv2 [26] develops a feature-guided position encoder to mitigate the effect of the extrinsics, and it applies random 3D rotations to the camera extrinsics for robustness analysis.

Camera extrinsics, however, may become biased in various real scenarios, such as camera shake caused by a car bump or camera offset caused by environmental forces. Because autonomous driving systems have strict safety and reliability requirements, many researchers have put great effort into camera calibration to obtain accurate camera intrinsics and extrinsics. Compared with initial calibration using a calibration target, online calibration, which continuously calibrates the cameras on the fly, is much preferred. Most researchers introduce online intelligent calibration for object detection with multi-modal sensors [28,29,30]. Calibration-free or camera-only methods, by contrast, are currently a hot research area [31,32,33]. Fan et al. [31] proposed a calibration-free BEV representation network for an infrastructure camera, constructing a similarity-based cross-view fusion module for 3D detection without calibration parameters or additional depth supervision. Zhang et al. [33] predicted camera extrinsic parameters by detecting vanishing points and horizon changes, and then extracted features immune to extrinsic perturbation for monocular 3D object detection. Jiang et al. [32] presented a multi-camera calibration-free transformer for robust BEV representation that does not rely on camera intrinsics or extrinsics.

In summary, extrinsic-based object detection methods are susceptible to external perturbation. Online calibration, as a compensation, introduces an additional calibration procedure and higher computational cost. In addition, extrinsic-based detection methods only consider the same extrinsic drift for all cameras, which does not hold in a tire blow-out scenario. Extrinsic-free object detection methods, by contrast, do not use camera extrinsics at all, which inevitably sacrifices detection performance. We find that the geometry-guided kernel transformer (GKT) [34] offers a possible compromise between fully extrinsic-based and extrinsic-free methods. In a tire blow-out, the camera extrinsics may be slightly disturbed but still remain within an acceptable range, so they still carry valuable information for view transformation and feature fusion. This observation is the main motivation of this research: we start from the original extrinsics and propose a new approach for blow-out-related perception.

3. Method

3.1. Modeling of Camera Deviation for Vehicles with Tire Blow-Out

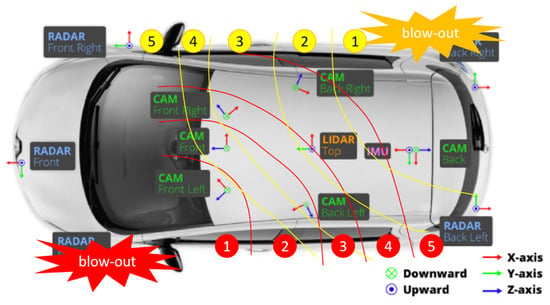

Tire blow-out, which is often caused by tire abrasion, road potholes, abnormal tire pressure, and similar factors, is one of the hidden dangers to driving safety. A blow-out lasts an extremely short time (less than 0.1 s). By using tire pressure sensors (AutelTPMS, 315 MHz/433 MHz) to monitor the pressure of all four tires, we can quickly and accurately determine which tire has blown out. Moreover, the vibration wave generated by the blown-out tire weakens as the propagation distance increases. We therefore divide camera deviation into five degrees according to the positions of the blown-out tire and of the cameras, as presented in Figure 2. The camera installation follows the nuScenes dataset. When the left-front tire blows out (red), the cameras are assigned deviation degrees from 1 to 5; the lower the degree, the greater the camera deviation caused by the blow-out. The same applies to a blow-out of the right-rear tire (yellow).

Figure 2.

Visualization of tire blow-out and the different deviation levels of cameras in different positions.

Specifically, Table 1 shows the different blow-out positions and the degree of impact on corresponding cameras. In order to simulate the impact of blow-out on cameras, we introduce rotation deviation and translation deviation into the original calibration parameters. Taking the left-front tire blow-out as an example, the translation deviation of can be expressed as

and the rotation deviation of can be expressed as:

Specifically, , , and can be expressed as:

The , , and correspond to the noise of rotation. Similarly, the , , and correspond to the noise of translation. Both the rotation and translation noises are relative to the camera coordinate axis and subject to normal distribution with different standard deviation:

where the subscript represents the degree of camera vibration as shown in Table 1. is the standard deviation of camera translation, and is the standard deviation of camera rotation. The higher the value of is, the smaller the camera deviation. To simulate how the blow-out vibration decays, we fix the standard deviation of the fifth level and build a sequence that decreases from the first level to the fifth with a fixed interval. Algorithm 1 gives the pseudocode for modeling camera deviation at the different levels:

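| Algorithm 1 Modeling of camera deviation |
|---|
| … ; |
| … ; |
| … ; |
| Sample the noise terms according to Formulas (6) and (7), separately; |
| Compute the translation deviation according to Formula (1); |
| Compute the rotation components according to Formulas (3)–(5); |
| Compute the rotation deviation according to Formula (2); |
| … . |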
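Formulas (1)–(7) did not survive extraction, so the following is only a sketch of Algorithm 1 under explicit assumptions, not the authors' exact model. It assumes that the standard deviations grow linearly from the fixed fifth level toward level 1 (σ_i = σ_5 + (5 − i)·Δ, following the fixed-interval description above), that the three translation offsets and three rotation angles are zero-mean Gaussian, that the small rotations are composed as R_z·R_y·R_x and applied on the left of the calibrated rotation, and that translation noise is added to the calibrated translation. The fifth-level values 0.01 m and 0.001 rad come from Section 4.3; the intervals and helper names are hypothetical.

```python
import math
import random

def sigma_schedule(sigma_5, interval, levels=5):
    """Std. devs for degrees 1..levels: fixed at the last level, growing by `interval` toward level 1."""
    return {i: sigma_5 + (levels - i) * interval for i in range(1, levels + 1)}

def rot_x(a):
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]

def rot_y(b):
    c, s = math.cos(b), math.sin(b)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]

def rot_z(g):
    c, s = math.cos(g), math.sin(g)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def matmul(a, b):
    """3 x 3 matrix product."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]

def perturb_extrinsics(R, t, degree, sig_t, sig_r, rng):
    """Return a noisy (R', t') for a camera at the given impact degree (1 = most affected)."""
    # Assumed: independent zero-mean Gaussian offset on each translation axis.
    dt = [rng.gauss(0.0, sig_t[degree]) for _ in range(3)]
    t_noisy = [ti + di for ti, di in zip(t, dt)]
    # Assumed: three small Gaussian angles composed into one rotation (order is a guess).
    a, b, g = (rng.gauss(0.0, sig_r[degree]) for _ in range(3))
    R_noise = matmul(rot_z(g), matmul(rot_y(b), rot_x(a)))
    return matmul(R_noise, R), t_noisy

# Fifth-level values from Section 4.3; the intervals are hypothetical.
sig_t = sigma_schedule(0.01, 0.01)    # metres
sig_r = sigma_schedule(0.001, 0.001)  # radians
rng = random.Random(0)
R_id = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
R_fl, t_fl = perturb_extrinsics(R_id, [0.0, 0.0, 0.0], degree=1,
                                sig_t=sig_t, sig_r=sig_r, rng=rng)
```

Passing an explicit `random.Random` instance keeps the perturbed dataset reproducible, which matters when the same noisy extrinsics must be used for training and validation splits.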

Table 1.

The different blow-out positions and the degree of impact on the corresponding cameras. F: front camera, FR: front-right camera, FL: front-left camera, BR: back-right camera, BL: back-left camera, B: back camera.

| The Position of Tire Blow-Out | Degree 1 | Degree 2 | Degree 3 | Degree 4 | Degree 5 |
|---|---|---|---|---|---|
| Left-front tire | FL | F, BL | FR | BR | B |
| Left-rear tire | B | BL | FL, BR | F | FR |
| Right-front tire | FR | F, BR | FL | BL | B |
| Right-rear tire | B | BR | FR, BL | F | FL |
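Table 1 can be used directly as a lookup once the tire pressure sensors report which tire has blown out. The sketch below inverts each row into a camera-to-degree map and attaches a kernel size to each degree. The 11-9-7-5-3 assignment is the setting studied in Section 4.3 and is used here only as an assumed example, since the contents of Table 2 are not reproduced in this text; the helper names are hypothetical.

```python
# Table 1: cameras at each impact degree (1 = most affected) per blow-out position.
IMPACT_TABLE = {
    "left-front":  {1: ["FL"], 2: ["F", "BL"], 3: ["FR"], 4: ["BR"], 5: ["B"]},
    "left-rear":   {1: ["B"], 2: ["BL"], 3: ["FL", "BR"], 4: ["F"], 5: ["FR"]},
    "right-front": {1: ["FR"], 2: ["F", "BR"], 3: ["FL"], 4: ["BL"], 5: ["B"]},
    "right-rear":  {1: ["B"], 2: ["BR"], 3: ["FR", "BL"], 4: ["F"], 5: ["FL"]},
}

def camera_degrees(position):
    """Invert one row of Table 1 into a camera -> impact degree mapping."""
    return {cam: deg for deg, cams in IMPACT_TABLE[position].items() for cam in cams}

def kernel_sizes_for(position, size_by_degree):
    """Give every camera the (square) kernel side length assigned to its degree."""
    return {cam: size_by_degree[deg] for cam, deg in camera_degrees(position).items()}

# Assumed example: the 11-9-7-5-3 setting from Section 4.3.
KERNEL_BY_DEGREE = {1: 11, 2: 9, 3: 7, 4: 5, 5: 3}
print(kernel_sizes_for("right-front", KERNEL_BY_DEGREE))
```

For the right-front blow-out assumed in Figure 3, this gives FR the 11 × 11 kernel and F and BR the 9 × 9 kernels.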

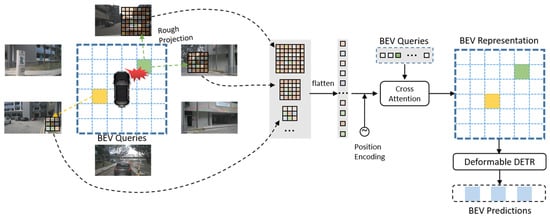

3.2. Overall Architecture

In a blow-out vehicle, each camera deviates to a different degree. For instance, if the front-left wheel blows out, the front camera and the back-left camera deteriorate significantly, whereas the back camera is almost unaffected. To improve BEV perception performance in this special circumstance, we propose a new BEV perception framework based on GARKT, which is efficient and robust in the blow-out setting. Figure 3 assumes that the front-right wheel blows out. First, a common image backbone extracts multi-view feature maps from all cameras, and the prior calibration parameters guide a rough projection from 3D BEV positions to the multi-view images. Second, based on the position of the blown-out tire and its different impact on each camera, we extract kernel features with different kernel sizes and unfold them to interact with the BEV queries, generating the BEV representation. Since the front camera and the back-right camera are affected to different degrees, we assign a wider perception area to the cameras that are most disturbed. The perception location in the perspective view is first determined by the camera’s geometric priors. Specifically, each BEV grid position is roughly projected to 2D coordinates in the multi-view images:

where is the camera intrinsic matrix, is the rotation matrix, and is the translation vector. Kernels of different sizes are then generated, centered at . The more a camera is affected by deviation, the larger its kernel. Any part of a kernel that extends beyond the image is set to zero. Finally, all kernel regions are unfolded to interact with the BEV queries and generate the BEV representation.

Figure 3.

Geometry-guided auto-resizable kernel transformer framework.
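The projection-and-kernel step can be sketched as follows. The sketch assumes the standard pinhole model z·[u, v, 1]ᵀ = K(R·p + t) in place of the missing projection formula, a single-channel feature map stored as nested lists, and a hypothetical backbone stride that maps image pixels to feature cells. Kernel cells that fall outside the feature map are filled with zero, as described above. The training-time adjustment of the kernel size is not modeled; `k` is simply passed in, and all function names are hypothetical.

```python
def project_to_image(K, R, t, point):
    """Pinhole projection of a 3D BEV point; returns (u, v), or None if behind the camera."""
    x, y, z = (sum(R[i][j] * point[j] for j in range(3)) + t[i] for i in range(3))
    if z <= 0:
        return None
    u = (K[0][0] * x + K[0][1] * y + K[0][2] * z) / z
    v = (K[1][0] * x + K[1][1] * y + K[1][2] * z) / z
    return u, v

def unfold_kernel(feature, center, k, stride):
    """Gather a k x k window of a (H, W) feature map around a projected pixel.

    `stride` converts image pixels to feature cells; cells outside the map are zero.
    """
    h, w = len(feature), len(feature[0])
    cx, cy = round(center[0] / stride), round(center[1] / stride)
    r = k // 2
    tokens = []
    for dy in range(-r, r + 1):
        for dx in range(-r, r + 1):
            x, y = cx + dx, cy + dy
            tokens.append(feature[y][x] if 0 <= x < w and 0 <= y < h else 0.0)
    return tokens  # k * k flattened kernel features for one camera

# Toy example: a 64 x 32 image with stride 4 gives a 16 x 8 feature map.
K = [[100.0, 0.0, 32.0], [0.0, 100.0, 16.0], [0.0, 0.0, 1.0]]
R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
t = [0.0, 0.0, 0.0]
feature = [[y * 100 + x + 1 for x in range(16)] for y in range(8)]
uv = project_to_image(K, R, t, [0.0, 0.0, 10.0])
tokens = unfold_kernel(feature, uv, k=3, stride=4)
```

In the full model, each BEV query would interact with the flattened tokens from every camera that sees its grid position; that attention step is omitted here.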

3.3. Configuration of Kernel

Chen et al. [34] found that large kernels are more robust to deviation than small kernels, although they may impose a heavy computational load. To strike a balance between robustness and computational efficiency, we initially set the kernel size for each degree of impact as shown in Table 2. For convenience in the simulation experiments, the rectangular kernels assigned to the different degrees of impact form an arithmetic progression. There are no restrictions on the size or shape of the kernels; they can be adjusted to balance computational efficiency against receptive field. The basic principle is that cameras with a stronger impact (a lower degree number) should be assigned a larger receptive field. Furthermore, the kernel size is adjusted automatically during network training. Starting from the default kernel size configuration, we further study the performance of different kernel settings under noisy extrinsics in Section 4.

Table 2.

Initial kernel size for different impact degrees.
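The robustness and cost trade-off can be made concrete with a little arithmetic: a k × k kernel contributes k² flattened features per camera that sees a BEV position. The sketch below generates kernel sizes as an arithmetic progression over the five impact degrees. Its defaults (smallest side 3, step 2) are assumptions that reproduce the 11-9-7-5-3 setting of Section 4.3; they are not confirmed to be the contents of Table 2.

```python
def arithmetic_kernel_sizes(smallest=3, step=2, levels=5):
    """Kernel side lengths for impact degrees 1..levels, largest for degree 1."""
    return [smallest + step * (levels - d) for d in range(1, levels + 1)]

def tokens_per_kernel(sizes):
    """A k x k kernel contributes k * k flattened features per camera."""
    return [k * k for k in sizes]

uniform = [3] * 5                   # GKT-like 3-3-3-3-3 setting
graded = arithmetic_kernel_sizes()  # assumed 11-9-7-5-3 setting
print(graded, tokens_per_kernel(graded), tokens_per_kernel(uniform))
```

A degree-1 camera therefore contributes 121 features instead of the 9 contributed by a uniform 3 × 3 kernel, which is in line with the lower inference speed reported for larger kernels.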

4. Experiment

4.1. Experiment Setting

Datasets and evaluation metrics. We evaluate the proposed GARKT method on the public large-scale autonomous driving dataset nuScenes [35], which contains 1000 driving scenes of about 20 s each. The 1000 scenes are split into 700, 150, and 150 scenes for training, validation, and testing, respectively. The six surround-view images are resized to . Because a tire blow-out immediately invalidates the camera extrinsics, we created two datasets based on nuScenes by adding different levels of camera translation and rotation noise to the camera extrinsics to simulate camera deviation. In Dataset_1, the standard deviations of translation and rotation are set to 0.05 m and 0.005 rad. In Dataset_2, they are set to 0.2 m and 0.02 rad, which corresponds to an almost completely flat tire compared with Dataset_1. The noise for each view is set according to Section 3.1. All models are trained only on this new nuScenes training set and evaluated on the new nuScenes validation set. We use the nuScenes detection score (NDS) provided by nuScenes as our evaluation metric. NDS combines the mean average precision (mAP) with five true-positive metrics that measure translation (ATE), scale (ASE), orientation (AOE), velocity (AVE), and attribute (AAE) errors. NDS is calculated as follows:

$$\mathrm{NDS} = \frac{1}{10}\left[5\,\mathrm{mAP} + \sum_{\mathrm{mTP}\in\mathbb{TP}}\left(1 - \min(1, \mathrm{mTP})\right)\right]$$

where $\mathbb{TP}$ is the set of the five mean true-positive metrics (mATE, mASE, mAOE, mAVE, and mAAE).
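The NDS definition translates directly into code. The function below follows the standard nuScenes formula, in which each true-positive error is clipped at 1 before being converted into a score; the metric values in the example are hypothetical and are not results from this paper.

```python
def nds(mAP, mATE, mASE, mAOE, mAVE, mAAE):
    """nuScenes detection score: (5 * mAP + sum(1 - min(1, mTP))) / 10."""
    tp_errors = [mATE, mASE, mAOE, mAVE, mAAE]
    return (5.0 * mAP + sum(1.0 - min(1.0, e) for e in tp_errors)) / 10.0

# Hypothetical metrics: a velocity error above 1 m/s contributes nothing to the score.
print(nds(mAP=0.4, mATE=0.5, mASE=0.5, mAOE=0.5, mAVE=1.2, mAAE=0.5))
```

Because mAP carries half of the total weight, a change in mAP moves NDS five times as much as the same change in any single true-positive score.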

Implementation Details. To extract image features, GARKT uses EfficientNet-B4 [36] as the image backbone, with an input image size of . In our base model, the kernel sizes for the different impact degrees are set as in Table 2; the influence of the kernel size setting is examined in the ablation study. We train our models with a batch size of 8 on 4 NVIDIA GPUs for 10 epochs, using the Adam optimizer with a learning rate of ; training takes about 7 h to converge. FPS is measured from the inference time on a single GPU. Neither data augmentation nor historical frames are used. All experiments are implemented in PyTorch 2.2.0.

4.2. Main Results

Our proposed GARKT (with kernel sizes set as in Table 2) with two image backbones (EfficientNet-B4 and ResNet101) is compared with other BEV-based methods, such as BEVFormer [18], PolarFormer [24], BEVDet [20], PETR [37], and Fast-BEV [38]. All of these baselines use the same ResNet101-DCN backbone, initialized from an FCOS3D [13] checkpoint trained on the nuScenes 3D detection dataset. To compare the vanilla models, we do not adopt any performance-improving tricks such as data augmentation or multi-frame feature fusion; without these tricks, the performance of Fast-BEV drops considerably.

The results on Dataset_1 are shown in Table 3. GARKT, which is designed specifically for blow-out vehicles, has a slightly lower NDS than BEVFormer but a higher NDS than PolarFormer, BEVDet, PETR, and Fast-BEV. This indicates that BEVFormer, with its deformable attention, is also robust to the slight camera deviation in Dataset_1. PolarFormer has a lower NDS than BEVFormer under tire blow-out, whereas the reverse is reported in its original paper. This is because PolarFormer uses polar coordinates, which are much more sensitive to camera deviation.

Table 3.

Comparison with other methods on Dataset_1. The standard deviations of translation and rotation are 0.05 m and 0.005 rad, respectively.

One advantage of GARKT is that it is lightweight, so its inference is fast, almost real-time. GARKT with a ResNet101 backbone achieves a slightly higher NDS than GARKT with an EfficientNet-B4 backbone, at the expense of reducing the inference speed from 20.577 FPS to 18.412 FPS. Fast-BEV, which was developed from M2BEV [16], also has a high inference speed but a lower NDS without data augmentation and multi-frame features.

To test the robustness of GARKT, the results on Dataset_2, which simulates a flat tire, are shown in Table 4. The NDS of GARKT with either backbone drops only slightly, whereas the NDS of BEVFormer, PolarFormer, BEVDet, PETR, and Fast-BEV drops severely. This demonstrates the effectiveness of the resizable perception kernel proposed in this research.

Table 4.

Comparison with other methods on Dataset_2. The standard deviations of translation and rotation are 0.2 m and 0.02 rad, respectively.

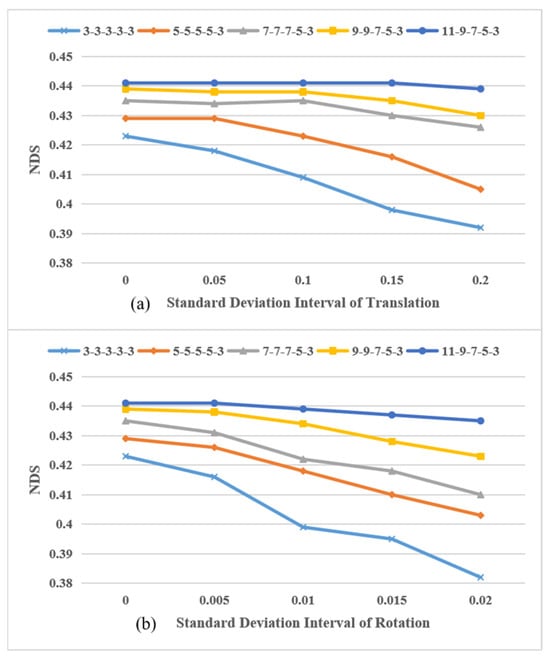

4.3. Noisy Extrinsics Analysis

To analyze the influence of the noisy extrinsics introduced by a tire blow-out, we study the performance of models with different kernel configurations under different standard deviation intervals of translation and rotation. Figure 4a shows the influence of the translation standard deviation interval, and Figure 4b shows the influence of the rotation standard deviation interval. The fixed standard deviations of the fifth level for translation and rotation are 0.01 m and 0.001 rad. The curve 3-3-3-3-3 means that the kernel size is set to 3 × 3 for impact degrees 1 to 5, which can be regarded as the GKT model [34]. Similarly, the curve 9-9-7-5-3 means that the kernel size is set to 9 × 9 for impact degrees 1 and 2, and to 7 × 7, 5 × 5, and 3 × 3 for impact degrees 3, 4, and 5, respectively. The other curves follow the same pattern. When the influence of translation is studied, the rotation noise is turned off, and vice versa. For both translation and rotation, the NDS of all kernel settings decreases as the standard deviation interval increases. However, the reasonable kernel setting of 11-9-7-5-3 greatly enhances robustness to calibration noise, because larger kernels are assigned to the more strongly affected cameras.

Figure 4.

The performance of different kernel settings under noisy extrinsics.

4.4. Convergence and Inference Speed

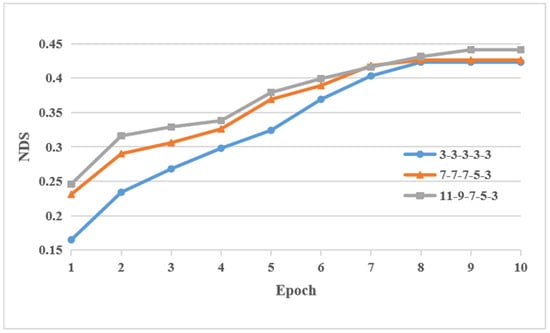

Furthermore, Figure 5 shows the convergence of models with different kernel configurations without calibration deviation. Among the three kernel settings, curves 3-3-3-3-3 and 7-7-7-5-3 converge faster than curve 11-9-7-5-3, but the NDS of curve 11-9-7-5-3 is slightly higher than that of the other curves. Table 5 reports the inference speed of models with different kernel configurations on a V100 GPU. As the kernel size increases, the inference speed decreases significantly. However, the lowest speed of 20.577 FPS still meets the basic needs of an emergency situation such as a tire blow-out.

Figure 5.

The convergence speed of different kernel settings without calibration deviation. The kernel setting of each curve is the same as in Figure 4.

Table 5.

The inference speed of models with different kernel configurations.
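As a back-of-the-envelope check on the real-time claim, the reported throughputs can be converted into per-frame latency and compared with the 750 ms deflation window mentioned in Section 4.6. This treats 1/FPS as latency and ignores sensor, pre-processing, and transfer delays on a real vehicle, so it is only an optimistic estimate; the helper names are hypothetical.

```python
def frame_latency_ms(fps):
    """Per-frame time implied by a throughput measurement."""
    return 1000.0 / fps

def frames_within(window_ms, fps):
    """Whole frames that complete inside a time window at the given throughput."""
    return int(window_ms // frame_latency_ms(fps))

for name, fps in [("GARKT, EfficientNet-B4", 20.577), ("GARKT, ResNet101", 18.412)]:
    print(f"{name}: {frame_latency_ms(fps):.1f} ms/frame, "
          f"{frames_within(750.0, fps)} frames within a 750 ms deflation window")
```

Under this simplification, the EfficientNet-B4 model processes about 15 frames and the ResNet101 model about 13 frames during a 750 ms deflation.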

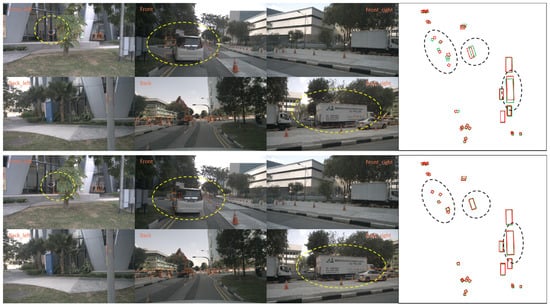

4.5. Visualization Results

For the qualitative results, we visualize the detection results on our created datasets. Figure 6 shows the 3D bounding boxes in the images and on the BEV plane. The predicted 3D bounding boxes of BEVFormer (top) and our model (bottom) are shown on the camera extrinsics perturbation dataset. In the bird’s-eye view, green boxes denote the ground truth and red boxes denote the predicted obstacles. The most pronounced differences between the predictions are circled with dashed lines. The figure shows that our model is effective against camera extrinsics perturbation.

Figure 6.

Qualitative results on camera extrinsics perturbation dataset.

4.6. Real Tire Blow-Out Experiment

A tire blow-out may cause a severe car accident and endanger the driver, passengers, and surrounding vehicles, so it is not easy to collect real tire blow-out data for our experiment. We therefore developed a device with an adjustable deflation speed that is mounted on the wheel hub to deflate the tire, as shown in Figure 7; the deflation process resembles a real-life tire puncture. This device can make the tire lose air quickly (in less than the 750 ms required by the national standard), thus achieving the same effect as a tire blow-out. We installed the device on the right-front wheel hub and placed five fake cars and five dummies on the vehicle’s driving path to check whether our method can recognize these obstacles properly after a tire blow-out. We collected data for 10 s before and 10 s after each blow-out and repeated this process 10 times, collecting a total of 10 data packets. In all 10 experiments, our method accurately detected the dummies and fake cars after the tire blow-out, whereas other methods may have missed objects, demonstrating the effectiveness of the method.

Figure 7.

Precise control equipment for tire blow-out.

5. Conclusions

In this paper, the proposed GARKT is designed based on the characteristics of vehicles with a tire blow-out. We establish a camera deviation model for vehicles with a tire blow-out. Then, because the cameras of a blow-out vehicle deviate to significantly different degrees, we first use geometric priors to obtain the prior positions in the perspective view, and then flatten auto-resizable kernels (the larger the camera deviation, the larger the kernel) to generate the BEV representation. In addition, the noisy extrinsics analysis and the convergence study indicate robust perception under tire blow-out. However, the current work focuses only on the single-tire blow-out scenario, and the evaluation relies mainly on simulation on the dataset, because a real tire blow-out may cause a severe accident and real blow-out data are therefore difficult to collect; our real-vehicle test used a wheel-hub-mounted device that deflates the tire in a manner similar to a real-life puncture. In future work, more complex tire blow-out situations will be verified with further real experiments.

Author Contributions

D.Y. carried out the study and experiments and drafted the manuscript. X.F. and W.D. edited the manuscript and guided experiments. C.H. and J.L. provided computational support. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the Research on the Mechanical Load Response Mechanism and Safety Reliability of Autonomous Vehicles under Grant 52172388.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used in this paper were obtained from a third-party database (https://www.nuscenes.org/download), accessed on 10 September 2023.

Conflicts of Interest

The authors declare no conflict of interest. The funding sponsors had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Chen, X.; Ma, H.; Wan, J.; Li, B.; Xia, T. Multi-view 3d object detection network for autonomous driving. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1907–1915. [Google Scholar]

- Lang, A.; Vora, S.; Caesar, H.; Zhou, L.; Yang, J.; Beijbom, O. Pointpillars: Fast encoders for object detection from point clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 12697–12705. [Google Scholar]

- Bewley, A.; Sun, P.; Mensink, T.; Anguelov, D.; Sminchisescu, C. Range conditioned dilated convolutions for scale invariant 3d object detection. arXiv 2020, arXiv:2005.09927. [Google Scholar]

- Ma, X.; Wang, Z.; Li, H.; Zhang, P.; Ouyang, W.; Fan, X. Accurate monocular 3d object detection via color-embedded 3d reconstruction for autonomous driving. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6851–6860. [Google Scholar]

- Zhang, R.; Qiu, H.; Wang, T.; Xu, X.; Guo, Z.; Qiao, Y.; Gao, P.; Li, H. MonoDETR: Depth-aware transformer for monocular 3d object detection. arXiv 2022, arXiv:2203.13310. [Google Scholar]

- Rukhovich, D.; Vorontsova, A.; Konushin, A. ImVoxelNet: Image to voxels projection for monocular and multi-view general-purpose 3d object detection. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2022; pp. 2397–2406. [Google Scholar]

- Ma, Y.; Wang, T.; Bai, X. Vision-Centric BEV Perception: A Survey. arXiv 2022, arXiv:2208.02797. [Google Scholar]

- Qian, R.; Lai, X.; Li, X. 3D Object Detection for Autonomous Driving: A Survey. Pattern Recognit. 2022, 130, 108796. [Google Scholar] [CrossRef]

- Reading, C.; Harakeh, A.; Chae, J.; Waslander, S.L. Categorical Depth Distribution Network for Monocular 3D Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021. [Google Scholar]

- Chen, X.; Kundu, K.; Zhang, Z.; Ma, H.; Fidler, S.; Urtasun, R. Monocular 3D Object Detection for Autonomous Driving. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2147–2156. [Google Scholar] [CrossRef]

- Mousavian, A.; Anguelov, D.; Košecká, J.; Flynn, J. 3D bounding box estimation using deep learning and geometry. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, Honolulu, HI, USA, 21–26 July 2017; pp. 5632–5640.

- Liu, Z.; Wu, Z.; Toth, R. SMOKE: Single-stage monocular 3D object detection via keypoint estimation. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020.

- Wang, T.; Zhu, X.; Pang, J.; Lin, D. FCOS3D: Fully Convolutional One-Stage Monocular 3D Object Detection. In Proceedings of the IEEE International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 913–922.

- Park, D.; Ambruş, R.; Guizilini, V.; Li, J.; Gaidon, A. Is Pseudo-Lidar needed for Monocular 3D Object detection? In Proceedings of the IEEE International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 3122–3132.

- Roddick, T.; Kendall, A.; Cipolla, R. Orthographic feature transform for monocular 3D object detection. In Proceedings of the 30th British Machine Vision Conference 2019, BMVC 2019, Cardiff, UK, 9–12 September 2019.

- Xie, E.; Yu, Z.; Zhou, D.; Philion, J. M2BEV: Multi-Camera Joint 3D Detection and Segmentation with Unified Bird’s-Eye View Representation. arXiv 2022, arXiv:2204.05088.

- Wang, Y.; Guizilini, V.; Zhang, T.; Wang, Y.; Zhao, H.; Solomon, J. DETR3D: 3D Object Detection from Multi-view Images via 3D-to-2D Queries. arXiv 2021, arXiv:2110.06922.

- Li, Z.; Wang, W.; Li, H.; Xie, E.; Sima, C.; Lu, T.; Qiao, Y.; Dai, J. BEVFormer: Learning Bird’s-Eye-View Representation from Multi-camera Images via Spatiotemporal Transformers. In Proceedings of the 17th European Conference on Computer Vision (ECCV 2022), Tel Aviv, Israel, 23–27 October 2022; Springer Nature: Cham, Switzerland, 2022; pp. 1–18.

- Li, H.; Sima, C.; Dai, J.; Wang, W.; Lu, L.; Wang, H.; Geng, X.; Zeng, J.; Li, Y.; Yang, J.; et al. Delving into the Devils of Bird’s-eye-view Perception: A Review, Evaluation and Recipe. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 2151–2170.

- Huang, J.; Huang, G.; Zhu, Z.; Ye, Y.; Du, D. BEVDet: High-performance Multi-camera 3D Object Detection in Bird-Eye-View. arXiv 2021, arXiv:2112.11790.

- Li, Y.; Ge, Z.; Yu, G.; Yang, J.; Wang, Z.; Shi, Y.; Sun, J.; Li, Z. BEVDepth: Acquisition of Reliable Depth for Multi-view 3D Object Detection. Proc. AAAI Conf. Artif. Intell. 2023, 37, 1477–1485.

- Zhang, Y.; Zhu, Z.; Zheng, W.; Huang, J.; Huang, G.; Zhou, J.; Lu, J. BEVerse: Unified Perception and Prediction in Birds-Eye-View for Vision-Centric Autonomous Driving. arXiv 2022, arXiv:2205.09743.

- Philion, J.; Fidler, S. Lift, Splat, Shoot: Encoding Images from Arbitrary Camera Rigs by Implicitly Unprojecting to 3D. In Computer Vision–ECCV 2020, Proceedings of the 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part XIV; Lecture Notes in Computer Science, Volume 12359; Springer International Publishing: Cham, Switzerland, 2020; pp. 194–210.

- Jiang, Y.; Zhang, L.; Miao, Z.; Zhu, X.; Gao, J.; Hu, W.; Jiang, Y.-G. PolarFormer: Multi-camera 3D Object Detection with Polar Transformers. arXiv 2022, arXiv:2206.15398.

- Chen, S.; Wang, X.; Cheng, T.; Zhang, Q.; Huang, C.; Liu, W. Polar Parametrization for Vision-based Surround-View 3D Detection. arXiv 2022, arXiv:2206.10965.

- Liu, Y.; Yan, J.; Jia, F.; Li, S.; Gao, A.; Wang, T.; Zhang, X.; Sun, J. PETRv2: A Unified Framework for 3D Perception from Multi-Camera Images. arXiv 2022, arXiv:2206.01256.

- Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable DETR: Deformable Transformers for End-to-End Object Detection. arXiv 2020, arXiv:2010.04159.

- Xu, H.; Lan, G.; Wu, S.; Hao, Q. Online Intelligent Calibration of Cameras and LiDARs for Autonomous Driving Systems. In Proceedings of the 2019 IEEE Intelligent Transportation Systems Conference, ITSC 2019, Auckland, New Zealand, 27–30 October 2019.

- Schneider, N.; Piewak, F.; Stiller, C.; Franke, U. RegNet: Multimodal sensor registration using deep neural networks. In Proceedings of the 2017 IEEE Intelligent Vehicles Symposium (IV), Los Angeles, CA, USA, 11–14 June 2017.

- Kodaira, A.; Zhou, Y.; Zang, P.; Zhan, W.; Tomizuka, M. SST-Calib: Simultaneous Spatial-Temporal Parameter Calibration between LIDAR and Camera. In Proceedings of the IEEE Conference on Intelligent Transportation Systems, ITSC, Macau, China, 8–12 October 2022; pp. 2896–2902.

- Fan, S.; Wang, Z.; Huo, X.; Wang, Y.; Liu, J. Calibration-free BEV Representation for Infrastructure Perception. arXiv 2023, arXiv:2303.03583.

- Jiang, H.; Meng, W.; Zhu, H.; Zhang, Q.; Yin, J. Multi-Camera Calibration Free BEV Representation for 3D Object Detection. arXiv 2022, arXiv:2210.17252.

- Zhou, Y.; He, Y.; Zhu, H.; Wang, C.; Li, H.; Jiang, Q. Monocular 3D Object Detection: An Extrinsic Parameter Free Approach. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 7552–7562.

- Chen, S.; Cheng, T.; Wang, X.; Meng, W.; Zhang, Q.; Liu, W. Efficient and Robust 2D-to-BEV Representation Learning via Geometry-guided Kernel Transformer. arXiv 2022, arXiv:2206.04584.

- Caesar, H.; Bankiti, V.; Lang, A.H.; Vora, S.; Liong, V.E.; Xu, Q.; Krishnan, A.; Pan, Y.; Baldan, G.; Beijbom, O. nuScenes: A multimodal dataset for autonomous driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020.

- Tan, M.; Le, Q. EfficientNet: Rethinking model scaling for convolutional neural networks. In Proceedings of the 36th International Conference on Machine Learning, PMLR, Long Beach, CA, USA, 9–15 June 2019.

- Liu, Y.; Wang, T.; Zhang, X.; Sun, J. PETR: Position embedding transformation for multi-view 3D object detection. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022.

- Huang, B.; Li, Y.; Xie, E.; Liang, F.; Wang, L.; Shen, M.; Liu, F.; Wang, T.; Luo, P.; Shao, J. Fast-BEV: Towards Real-time On-vehicle Bird’s-Eye View Perception. arXiv 2023, arXiv:2301.07870.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).