Abstract

This paper addresses the critical need for advanced real-time vehicle detection methodologies in Vehicle Intelligence Systems (VIS), especially in the context of using Unmanned Aerial Vehicles (UAVs) for data acquisition in severe weather conditions, such as heavy snowfall typical of the Nordic region. Traditional vehicle detection techniques, which often rely on custom-engineered features and deterministic algorithms, fall short in adapting to diverse environmental challenges, leading to a demand for more precise and sophisticated methods. The limitations of current architectures, particularly when deployed in real-time on edge devices with restricted computational capabilities, are highlighted as significant hurdles in the development of efficient vehicle detection systems. To bridge this gap, our research focuses on the formulation of an innovative approach that combines the fractional B-spline wavelet transform with a tailored U-Net architecture, operational on a Raspberry Pi 4. This method aims to enhance vehicle detection and localization by leveraging the unique attributes of the NVD dataset, which comprises drone-captured imagery under the harsh winter conditions of northern Sweden. The dataset, featuring 8450 annotated frames with 26,313 vehicles, serves as the foundation for evaluating the proposed technique. The comparative analysis of the proposed method against state-of-the-art detectors, such as YOLO and Faster RCNN, in both accuracy and efficiency on constrained devices, emphasizes the capability of our method to balance the trade-off between speed and accuracy, thereby broadening its utility across various domains.

1. Introduction

Vehicle detection is a crucial element of most Vehicle Intelligence Systems (VIS), which are typically employed to ensure safety, optimize traffic flow, and enable autonomous driving. Traditional approaches to vehicle detection often relied on custom-engineered features and rule-based algorithms, resulting in a constrained capacity to adjust to different environmental scenarios. Furthermore, as real-life situations grew more complex, a clear need emerged for detection methodologies that were both more precise and more advanced [1]. While complicated architectures may enhance detection precision, they introduce additional hurdles, particularly in the context of real-time applications operating on devices with limited capabilities. In many critical transportation solutions where drones are used for data acquisition and processing, a range of difficulties arises. Among these, issues concerning images captured by drones are notable, including oblique angles, non-uniform illumination, degradation, blurring, occlusion, and reduced visibility [2]. Concurrently, the necessity for on-the-fly processing as the drone captures data imposes constraints on the available computational resources [3]. The lack of sufficient data and research on vehicle detection using drones in snowy conditions is the main motivation for this work [4]. Many researchers have concentrated on improving the detection of various objects, such as lanes and traffic lights, in different environments. However, it remains uncertain how these advancements will perform in the context of our specific vehicle detection challenges [5,6]. The goal is to shed light on vehicle detection challenges in snowy conditions using drones and offer valuable insights to improve the accuracy and reliability of object detection systems in adverse weather scenarios.
Furthermore, this investigation seeks to assess the effectiveness of the proposed methodologies on edge computing devices and to conduct comparative analyses of both the performance and accuracy against state-of-the-art detection frameworks such as YOLO and Faster RCNN. At the inception of this study, a thorough review of the recent research was conducted, focusing on research that addresses vehicle detection on edge devices under severe weather conditions. This search aimed to establish a solid foundation for the current work by identifying gaps in the existing body of knowledge and confirming the necessity of further exploration in this area. The investigation revealed a significant lack of studies that specifically tackle the challenges associated with real-time vehicle detection using edge computing in adverse weather scenarios, such as heavy snow. This finding not only underscored the relevance and urgency of the present research but also highlighted the potential for contributing novel insights and methodologies to the field of intelligent transportation systems and computer vision, particularly in enhancing the robustness and efficiency of vehicle detection technologies in less-than-ideal environmental conditions. Table 1 summarizes the findings from our inquiry into the previously implemented models by researchers, the specific customizations applied, the specifications of the edge device employed, and the weather conditions under which the system was tested.

Table 1.

Applied search criteria over available vehicle dataset.

These above-mentioned studies collectively push the boundaries of object detection, providing tailored solutions to meet distinct needs within various application domains. However, these advancements are not without their challenges. Common obstacles across these studies include navigating the trade-off between detection speed and accuracy, ensuring consistent performance across different environmental conditions, and managing the computational demands of sophisticated models without sacrificing their effectiveness. Such challenges underscore the inherent complexities in object detection and the continuous need for innovative solutions and optimizations [16].

2. Proposed Method

The advancement of vehicle detection methods, especially with UAV images, is a specific instance where deep learning has driven significant improvements. Nevertheless, the complexity and diversity of features in aerial images captured in severe weather conditions demand further enhancements. In response to this requirement, this study proposes a technique that combines the fractional B-spline wavelet transform with a customized U-Net architecture, implemented on a Raspberry Pi 4 Model B. This strategy is specifically designed to enhance vehicle detection and localization, with an emphasis on evaluating its efficacy using the NVD dataset. The NVD dataset is accessible at https://nvd.ltu-ai.dev/ (accessed on 12 June 2024), and a full explanation of the data extraction process can be found in [4]. NVD was compiled amidst the harsh snowy winter conditions of northern Sweden. It encompasses a collection of images taken from heights varying between 120 and 250 m, including 8450 frames that have been annotated to highlight 26,313 vehicles, alongside approximately 10 h of video content that awaits annotation. This dataset is characterized by its variation in video resolutions and frame rates, as well as differences in Ground Sample Distance (GSD) measurements, providing a comprehensive portrayal of vehicles under the demanding winter weather typical of the Nordic region.

2.1. Fractional B-Spline Wavelet Transform

A two-dimensional fractional B-spline wavelet transform was applied to extract relevant features from aerial images. The fractional B-spline wavelet transform is an advanced mathematical tool that extends the traditional B-spline wavelet transform by incorporating the concept of fractional calculus. Fractional calculus allows for operations that can be applied at any real or complex order, providing more flexible and precise analysis of data, especially when dealing with complex patterns or irregularities. This extension allows for a more flexible manipulation of the wavelet functions, enabling the extraction of features with varying degrees of smoothness and detail from an image. The “fractional” aspect refers to the use of non-integer derivatives, which provide a richer set of parameters to adjust the wavelet functions, thereby offering more control over the feature extraction process. The fractional nature allows for better detection of edges and boundaries in snowy conditions, where the edges of vehicles can become blurred or indistinct. The transform can also analyze images at multiple scales, which is particularly useful for drone imagery, where vehicles can appear at various sizes and orientations. The implementation involves applying wavelet filters separately to the vertical and horizontal dimensions. Usually, the wavelet transform involves decomposing a signal into shifted and scaled versions of a base wavelet function. In the fractional domain, this is extended by employing fractional B-spline functions as the base wavelets. The transform coefficients at scale a and shift b for a signal f(t) using a fractional wavelet (derived from the fractional B-spline functions) can be defined as in Equation (1):

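$$W_f(a, b) = \frac{1}{\sqrt{|a|}} \int_{-\infty}^{\infty} f(t)\, \psi_{\alpha}^{*}\!\left(\frac{t - b}{a}\right) dt \tag{1}$$

where $\psi_{\alpha}^{*}$ denotes the complex conjugate of the fractional wavelet $\psi_{\alpha}$, $a$ is the scale, and $b$ is the shift.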

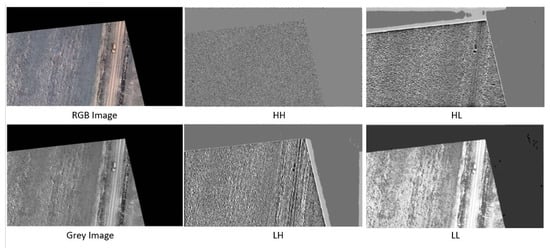

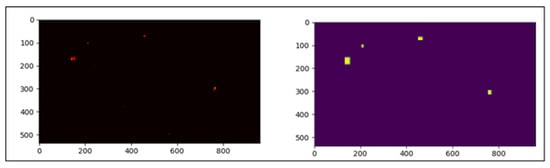

The resulting High-Low (HL) and Low-High (LH) channels were found to contain valuable information for car detection as shown in Figure 1.

Figure 1.

Sample result of applying the fractional B-spline wavelet transform to the NVD dataset.
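The separable decomposition described in this section can be illustrated with a short sketch. It filters an image along each axis with a low-pass/high-pass pair whose frequency responses follow a symmetric fractional B-spline of degree `alpha`, then downsamples by two. This is a simplified, non-orthonormalized stand-in and not the transform implementation used in this work; the function names, the default `alpha`, and the naming of the LH and HL sub-bands are assumptions made for illustration.

```python
import numpy as np


def fractional_spline_filters(n, alpha):
    """Frequency responses (length n) of a symmetric fractional B-spline pair.

    Assumption: low-pass sqrt(2)*|cos(w/2)|^(alpha+1) and its mirrored
    high-pass sqrt(2)*|sin(w/2)|^(alpha+1); no orthonormalization is applied.
    """
    omega = 2.0 * np.pi * np.fft.fftfreq(n)  # angular frequencies in [-pi, pi)
    low = np.sqrt(2.0) * np.abs(np.cos(omega / 2.0)) ** (alpha + 1.0)
    high = np.sqrt(2.0) * np.abs(np.sin(omega / 2.0)) ** (alpha + 1.0)
    return low, high


def analyze_axis(x, alpha, axis):
    """Filter x along one axis in the Fourier domain and keep every second sample."""
    n = x.shape[axis]
    low, high = fractional_spline_filters(n, alpha)
    shape = [1] * x.ndim
    shape[axis] = n
    spectrum = np.fft.fft(x, axis=axis)
    lo = np.fft.ifft(spectrum * low.reshape(shape), axis=axis).real
    hi = np.fft.ifft(spectrum * high.reshape(shape), axis=axis).real
    take = [slice(None)] * x.ndim
    take[axis] = slice(None, None, 2)  # downsample by two
    return lo[tuple(take)], hi[tuple(take)]


def fractional_wavelet_2d(image, alpha=0.5):
    """One decomposition level of a 2-D array with even height and width.

    Naming assumption: the first letter refers to the horizontal filter,
    the second to the vertical filter (HL = high horizontally, low vertically).
    """
    lo_h, hi_h = analyze_axis(image, alpha, axis=1)  # horizontal filtering
    ll, lh = analyze_axis(lo_h, alpha, axis=0)       # vertical filtering
    hl, hh = analyze_axis(hi_h, alpha, axis=0)
    return ll, lh, hl, hh
```

For a colour frame, the function would be called once per channel, and only the LH and HL outputs would be kept for the detection network.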

2.2. Integration with U-Net

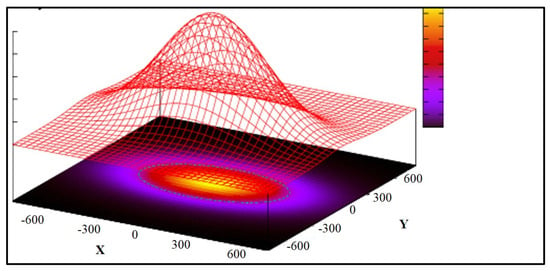

The fractional B-spline wavelet transform was implemented using an existing two-dimensional fractional spline wavelet transform routine. The transform was applied to each channel of the input image, producing four channels. Only the LH and HL channels were retained; they were resized and concatenated with the output of the first convolutional layer, and the result was used as input to the second convolutional layer of the U-Net architecture. The U-Net architecture, named CarLocalizationCNN, was employed for its effectiveness in semantic segmentation tasks. Feeding the transformed channels into the second convolutional layer aims to enhance the network’s ability to discern subtle features related to car presence. Skip connections, present in the original U-Net structure, were intentionally omitted to streamline the architecture, because heatmap generation does not require accurate delineation of car boundaries. The final layer of the CarLocalizationCNN model produces a heatmap highlighting potential car locations in the input image. This heatmap serves as a valuable tool for visualizing and interpreting the network’s car detection predictions. The target heatmaps are generated by applying a Gaussian elliptical function. The Gaussian function was selected to provide a gradual increase in pixel intensity (a proxy for the likelihood of the presence of a vehicle) as one moves toward the central region of the car. This smoothness benefits gradient descent during the training process [17]. Considering the rectangular shape of cars, we opted for an elliptical function, which entails setting one dimension of the Gaussian function with a higher sigma value than the other.
This approach allows us to better represent the vehicles’ shape in the analysis. Furthermore, we rotated this elliptical Gaussian function using values derived from the original annotations to ensure an optimal fit to the vehicle’s orientation, as shown in Figures 2 and 3.

Figure 2.

Illustration of the Gaussian elliptical function over the heatmap.

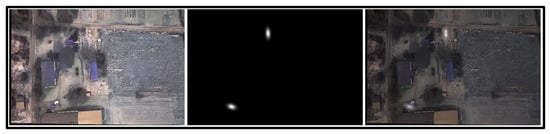

Figure 3.

From left to right: original image, produced heatmaps, overlay of both.

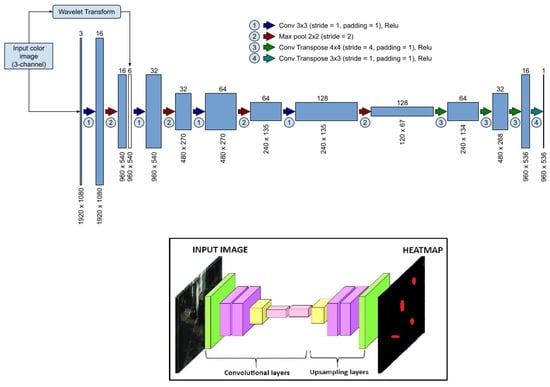
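The rotated elliptical Gaussian used for the target heatmaps can be sketched as follows. This is a minimal illustration under stated assumptions: the function names, the tuple layout of a vehicle annotation, and the choice of setting each sigma to a quarter of the vehicle's length or width are hypothetical and are not taken from the original implementation.

```python
import numpy as np


def elliptical_gaussian(shape, center, sigma_long, sigma_short, angle_rad):
    """Rotated 2-D Gaussian with peak value 1 at center = (x, y)."""
    h, w = shape
    ys, xs = np.mgrid[0:h, 0:w].astype(float)
    dx = xs - center[0]
    dy = ys - center[1]
    # Rotate pixel offsets into the vehicle's own frame (u along its length).
    u = dx * np.cos(angle_rad) + dy * np.sin(angle_rad)
    v = -dx * np.sin(angle_rad) + dy * np.cos(angle_rad)
    return np.exp(-0.5 * ((u / sigma_long) ** 2 + (v / sigma_short) ** 2))


def render_heatmap(shape, vehicles):
    """Combine one Gaussian per vehicle; vehicles are (cx, cy, length, width, angle)."""
    heat = np.zeros(shape)
    for cx, cy, length, width, angle in vehicles:
        # Assumption: sigma is a quarter of the corresponding box dimension.
        blob = elliptical_gaussian(shape, (cx, cy), length / 4.0, width / 4.0, angle)
        heat = np.maximum(heat, blob)  # keep the strongest response per pixel
    return heat
```

Taking the pixel-wise maximum rather than the sum keeps the heatmap in the range [0, 1] when vehicles are close together, which is one possible design choice among several.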

The CarLocalizationCNN model consists of convolutional and up-sampling layers. The convolutional layers capture hierarchical features, while the up-sampling layers restore spatial information as shown in Figure 4. The integration of the fractional B-spline transformed channels into the network is handled seamlessly within the architecture, culminating in a heatmap highlighting potential car locations.

Figure 4.

CarLocalizationCNN architecture (CNN-based U-Net model).
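The channel fusion that feeds the second convolutional layer can be sketched with plain NumPy arrays. The sketch only shows the resize-and-concatenate step; the array shapes, the nearest-neighbour resizing, and the function names are assumptions for illustration, and the actual network layers are not reproduced here.

```python
import numpy as np


def resize_nearest(channels, out_h, out_w):
    """Nearest-neighbour resize of a (C, H, W) array to (C, out_h, out_w)."""
    _, h, w = channels.shape
    rows = np.arange(out_h) * h // out_h
    cols = np.arange(out_w) * w // out_w
    return channels[:, rows[:, None], cols[None, :]]


def fuse_features(first_layer_out, lh, hl):
    """Concatenate first-layer feature maps with resized LH and HL channels."""
    _, out_h, out_w = first_layer_out.shape
    wavelet = np.concatenate([lh, hl], axis=0)       # stack the two sub-bands
    wavelet = resize_nearest(wavelet, out_h, out_w)  # match the feature-map size
    return np.concatenate([first_layer_out, wavelet], axis=0)
```

With, for example, 16 first-layer feature maps and LH and HL sub-bands computed for three colour channels, the second convolutional layer would receive 16 + 6 = 22 input channels.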

3. Dataset

Models have been trained and assessed using frames taken from videos that vary in several aspects, including height, snow coverage, cloud coverage, and Ground Sample Distance (GSD) pixel dimensions. The video information and samples of the extracted frames are shown in Tables 2 and 3 and Figure 5.

Table 2.

Training dataset: 3 videos with 6056 annotated frames containing 18,976 vehicles.

Table 3.

Testing dataset: 2 videos with 2394 annotated frames containing 7337 vehicles.

Figure 5.

Samples of NVD frames.

4. Testing and Evaluation

The outcomes of the proposed model were compared against three detectors that are widely used in both academic research and industrial applications:

- YOLOv5s.

- YOLOv8s.

- Faster R-CNN.

4.1. Evaluation Metrics and Benchmarking

The main evaluation metrics used to compare the models are mean Average Precision (mAP) and inference time. Since the output of the proposed model is a heatmap, the heatmap was converted to corresponding bounding boxes by thresholding and grouping the heatmap pixels, as shown in Figure 6.

Figure 6.

Conversion of the heatmap to bounding boxes.
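The thresholding and grouping step can be sketched as a connected-component pass over the binarized heatmap. This is one plausible way to carry out the conversion, not necessarily the procedure used in this work; the threshold value, the 4-connectivity, and the `(x_min, y_min, x_max, y_max)` box format with exclusive maxima are assumptions.

```python
from collections import deque

import numpy as np


def heatmap_to_boxes(heatmap, threshold=0.5):
    """Group above-threshold pixels into 4-connected blobs and box each blob."""
    mask = heatmap >= threshold
    visited = np.zeros(mask.shape, dtype=bool)
    h, w = mask.shape
    boxes = []
    for y in range(h):
        for x in range(w):
            if not mask[y, x] or visited[y, x]:
                continue
            # Breadth-first flood fill from this seed pixel.
            queue = deque([(y, x)])
            visited[y, x] = True
            y0 = y1 = y
            x0 = x1 = x
            while queue:
                cy, cx = queue.popleft()
                y0, y1 = min(y0, cy), max(y1, cy)
                x0, x1 = min(x0, cx), max(x1, cx)
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not visited[ny, nx]:
                        visited[ny, nx] = True
                        queue.append((ny, nx))
            boxes.append((x0, y0, x1 + 1, y1 + 1))
    return boxes
```

A lower threshold yields larger boxes and may merge neighbouring vehicles into one blob, so in practice the threshold would need to be tuned on validation data.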

After we have extracted these bounding boxes representing car predictions, we need to compare them with actual annotated bounding boxes. For this comparison, we employ an adapted version of the Intersection over Union (IoU) metric, which essentially calculates the proportion of the overlapping area between two bounding boxes relative to their combined area, ensuring the overlapped section is accounted for only once (Union).

- When a pair of bounding boxes (one from the predictions and one from the ground truth) achieves an IoU exceeding a predefined threshold, we classify the prediction as accurate, or a True Positive.

- Should a predicted bounding box fail to meet the IoU threshold with any ground-truth bounding boxes, we categorize the prediction as a False Positive.

- Conversely, if a ground-truth bounding box does not reach the IoU threshold with any predicted bounding box, we count that ground-truth box as a False Negative.
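The three rules above can be sketched in a few lines. The sketch uses the standard IoU rather than the adapted version mentioned in the text, whose details are not given here, and it assumes a greedy one-to-one matching between predictions and ground-truth boxes; the function names and the default threshold of 0.5 are likewise illustrative.

```python
def iou(box_a, box_b):
    """Standard IoU of two boxes given as (x_min, y_min, x_max, y_max)."""
    ix0, iy0 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix1, iy1 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0, ix1 - ix0) * max(0, iy1 - iy0)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter  # overlap counted only once
    return inter / union if union > 0 else 0.0


def count_matches(predictions, ground_truths, iou_threshold=0.5):
    """Return (true positives, false positives, false negatives)."""
    matched = set()
    tp = fp = 0
    for pred in predictions:
        best_iou, best_idx = 0.0, None
        for idx, gt in enumerate(ground_truths):
            if idx in matched:
                continue  # each ground-truth box may be matched once
            value = iou(pred, gt)
            if value > best_iou:
                best_iou, best_idx = value, idx
        if best_idx is not None and best_iou > iou_threshold:
            tp += 1
            matched.add(best_idx)
        else:
            fp += 1
    fn = len(ground_truths) - len(matched)
    return tp, fp, fn
```

Precision and recall then follow as `tp / (tp + fp)` and `tp / (tp + fn)`, respectively.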

4.2. Experimental Results

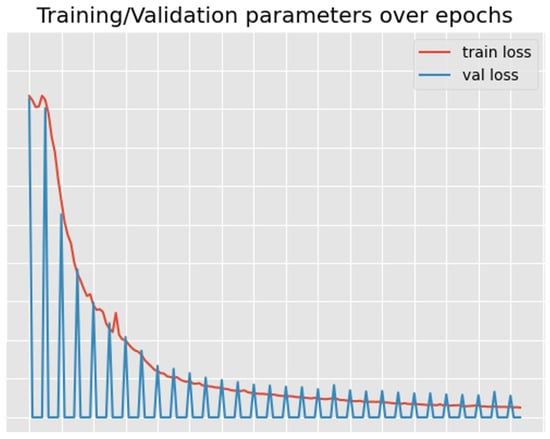

The models were trained on the PC specified in Table 4; the training and validation loss of the proposed model is shown in Figure 7. The evaluation was conducted on a Raspberry Pi 4 Model B.

Table 4.

Specification of the PC used for training.

Figure 7.

Loss of the proposed model.

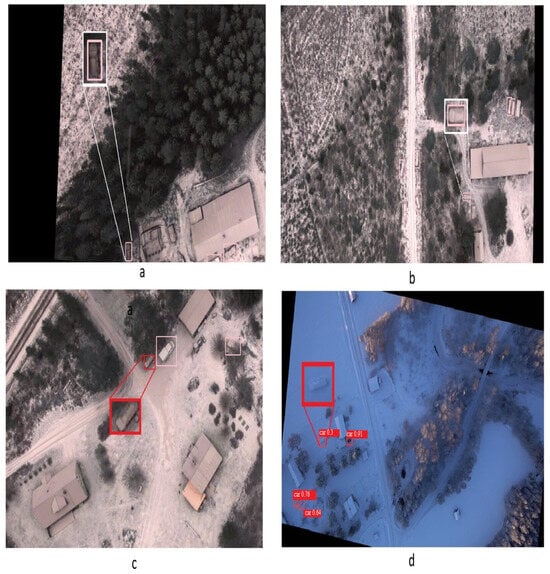

The core aim of the experiments is to assess the model’s performance through two essential metrics: mean average precision (mAP50) for accuracy and inference time for efficiency. mAP50 measures how well the model predicts and localizes objects, while inference time measures how quickly the model processes images, reflecting its practical utility in real-time applications. Together, these metrics provide a concise evaluation of the model’s overall effectiveness and applicability, as shown in Tables 5 and 6 and Figure 8.
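For reference, average precision at a fixed IoU threshold can be computed from ranked detections as sketched below; when a single class is evaluated, mAP50 reduces to this value at an IoU threshold of 0.5. The sketch uses the common all-point interpolation of the precision-recall curve and assumes each detection carries a confidence score (for the heatmap-based model this could be, for example, the peak heatmap value inside the box). These choices are assumptions and may differ from the evaluation code behind the reported numbers.

```python
import numpy as np


def average_precision(scores, is_true_positive, num_ground_truth):
    """Area under the interpolated precision-recall curve for one class."""
    order = np.argsort(-np.asarray(scores, dtype=float))  # highest score first
    hits = np.asarray(is_true_positive, dtype=float)[order]
    tp = np.cumsum(hits)
    fp = np.cumsum(1.0 - hits)
    recall = tp / max(num_ground_truth, 1)
    precision = tp / np.maximum(tp + fp, 1e-12)
    # Pad the curve and make precision monotonically non-increasing.
    mrec = np.concatenate(([0.0], recall, [1.0]))
    mpre = np.concatenate(([0.0], precision, [0.0]))
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum rectangle areas wherever recall changes.
    steps = np.where(mrec[1:] != mrec[:-1])[0]
    return float(np.sum((mrec[steps + 1] - mrec[steps]) * mpre[steps + 1]))
```

The `is_true_positive` flags would come from matching each detection to the ground truth at the chosen IoU threshold.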

Table 5.

Accuracy comparison between SOTA detectors and the proposed model.

Table 6.

Efficiency comparison between SOTA detectors and the proposed model.

Figure 8.

Detection comparisons among the different detectors. (a) Detected by carLocalization only, (b) detected by carLocalization and YOLOv8s_aug, (c) not detected by any detector, (d) detected by YOLOv8s_aug but not by carLocalization.

The proposed CarLocalizationCNN model demonstrates an enhancement in recall, mAP50, and mAP50-90 metrics when contrasted with YOLOv8s, YOLOv5s, YOLOv5s_aug*, YOLOv8s_aug*, and Faster R-CNN (FRCNN). These improvements highlight the model’s enhanced ability to correctly identify and localize vehicles across a range of conditions and overlaps, thus offering a more robust solution for vehicle detection tasks. However, it is worth mentioning that YOLOv8s_aug outperforms the proposed model in terms of precision. This indicates that while the CarLocalizationCNN model is adept at reducing false negatives and improving detection coverage, YOLOv8s_aug produces fewer false positives among its detections.

In terms of efficiency, the proposed model achieves faster inference than several established models. Specifically, it processes images approximately 1.83 times quicker than YOLOv5s, a notable speed improvement that can be particularly beneficial in scenarios demanding rapid data processing. Compared to YOLOv5s, the proposed model exhibits a modest speed increment of 1.25%, which, while smaller, still reflects an advancement in processing efficiency. The model also outperforms Faster R-CNN (FRCNN) by a factor of 6.7, indicating a significant leap in inference speed. This acceleration in processing times could enhance the applicability of the model in real-time applications, such as the drone-based decision-making scenario discussed here. The improvements underscore the model’s potential in balancing the trade-off between speed and accuracy, thereby broadening its utility across various domains.
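Speed-up factors of the kind quoted above are ratios of mean per-image inference times. The following sketch shows one simple way such timings could be collected on a device; the warm-up count, the function names, and the `predict` callable are hypothetical and do not describe the measurement procedure actually used.

```python
import time


def mean_inference_time(predict, inputs, warmup=2):
    """Mean wall-clock seconds per input, after a few untimed warm-up calls."""
    for item in inputs[:warmup]:
        predict(item)  # warm-up runs are not timed
    start = time.perf_counter()
    for item in inputs:
        predict(item)
    return (time.perf_counter() - start) / len(inputs)


def speedup(baseline_seconds, candidate_seconds):
    """How many times faster the candidate is than the baseline."""
    return baseline_seconds / candidate_seconds
```

For example, a baseline that needs 2.0 s per frame and a candidate that needs 1.0 s per frame give `speedup(2.0, 1.0) == 2.0`.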

5. Ethical Considerations

- Accuracy: Emphasis was placed on the high-quality annotations of the dataset, which is specific to snowy conditions in the Nordic region, acknowledging potential limitations in generalizability and the importance of accurate data for training algorithms.

- Transparency: The methodology for data collection and model training is thoroughly documented, promoting scrutiny and validation by the scientific community. The use of deep learning for vehicle detection is well-explained, with intentions to share findings and ensure the algorithms perform as expected without unintended behaviors. The dataset used is publicly available.

- Privacy: The aerial data collection minimizes privacy risks by focusing on vehicle tops, excluding identifiable details like license plates or human faces, ensuring anonymity and compliance with privacy standards.

- Fairness and Bias: The dataset encompasses a diverse range of vehicles under snowy conditions to mitigate bias. Careful dataset splitting ensures balanced training and testing sets, allowing for an equitable assessment of the model’s performance in snow-covered environments.

6. Conclusions and Future Work

In conclusion, this study represents a step forward in the domain of Vehicle Intelligence Systems (VIS), especially for real-time vehicle detection in adverse weather conditions using Unmanned Aerial Vehicles (UAVs). The proposed method addresses some of the challenges presented by heavy snowfall, common in Nordic regions. The CarLocalizationCNN model developed here improves on established detectors such as YOLO and Faster R-CNN in key metrics such as recall, mAP50, and mAP50-90. Moreover, the model exhibits improvements in inference speed, making it well suited to time-sensitive applications like UAV-based surveillance in snowy environments. The research also draws attention to the research gap exposed by the NVD dataset: there remains a substantial need for further investigation to enhance vehicle detection capabilities in such harsh weather conditions. The extensive evaluation using the NVD dataset lays the groundwork for future research in VIS, particularly in optimizing performance for edge devices operating in challenging environments.

Future work should focus on developing detection algorithms specifically tailored for snowy conditions, accounting for unique visual challenges such as reduced contrast, varying snow textures, and occlusions caused by snow accumulation. Techniques like multi-scale feature extraction and context-aware detection can be explored to enhance robustness. Additionally, more advanced signal processing techniques can offer improved flexibility in modeling the irregular and complex shapes of snow-covered vehicles, potentially leading to more accurate detections. Research can also explore optimizing the parameters of fractional splines to balance computational efficiency and detection accuracy.

Funding

This research received no external funding.

Data Availability Statement

Data can be accessed at https://nvd.ltu-ai.dev/ (accessed on 10 June 2024).

Conflicts of Interest

Author Olle Hagner was employed by the company smartplanes. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Xu, Y.; Liu, X.; Cao, X.; Huang, C.; Liu, E.; Qian, S.; Liu, X.; Wu, Y.; Dong, F.; Qiu, C.W.; et al. Artificial intelligence: A powerful paradigm for scientific research. Innovation 2021, 2, 100179.

- Tahir, N.U.A.; Zhang, Z.; Asim, M.; Chen, J.; ELAffendi, M. Object Detection in Autonomous Vehicles under Adverse Weather: A Review of Traditional and Deep Learning Approaches. Algorithms 2024, 17, 103.

- Chen, C.; Wang, C.; Liu, B.; He, C.; Cong, L.; Wan, S. Edge intelligence empowered vehicle detection and image segmentation for autonomous vehicles. IEEE Trans. Intell. Transp. Syst. 2023, 24, 13023–13034.

- Mokayed, H.; Nayebiastaneh, A.; De, K.; Sozos, S.; Hagner, O.; Backe, B. Nordic Vehicle Dataset (NVD): Performance of vehicle detectors using newly captured NVD from UAV in different snowy weather conditions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 5313–5321.

- Lee, S.H.; Lee, S.H. U-Net-Based Learning Using Enhanced Lane Detection with Directional Lane Attention Maps for Various Driving Environments. Mathematics 2024, 12, 1206.

- Chen, B.; Fan, X. MSGC-YOLO: An Improved Lightweight Traffic Sign Detection Model under Snow Conditions. Mathematics 2024, 12, 1539.

- Li, S.; Yang, X.; Lin, X.; Zhang, Y.; Wu, J. Real-time vehicle detection from UAV aerial images based on improved YOLOv5. Sensors 2023, 23, 5634.

- Bulut, A.; Ozdemir, F.; Bostanci, Y.S.; Soyturk, M. Performance Evaluation of Recent Object Detection Models for Traffic Safety Applications on Edge. In Proceedings of the 2023 5th International Conference on Image Processing and Machine Vision, Macau, China, 13–15 January 2023; pp. 1–6.

- Liu, Z.; Gao, Y.; Du, Q.; Chen, M.; Lv, W. YOLO-extract: Improved YOLOv5 for aircraft object detection in remote sensing images. IEEE Access 2023, 11, 1742–1751.

- Huang, F.; Chen, S.; Wang, Q.; Chen, Y.; Zhang, D. Using deep learning in an embedded system for real-time target detection based on images from an unmanned aerial vehicle: Vehicle detection as a case study. Int. J. Digit. Earth 2023, 16, 910–936.

- Mokayed, H.; Nayebiastaneh, A.; Alkhaled, L.; Sozos, S.; Hagner, O.; Backe, B. Challenging YOLO and Faster RCNN in Snowy Conditions: UAV Nordic Vehicle Dataset (NVD) as an Example. In Proceedings of the 2024 2nd International Conference on Unmanned Vehicle Systems-Oman (UVS), Muscat, Oman, 12–14 February 2024; pp. 1–6.

- John, S.M.; Kareem, F.A.; Paul, S.G.; Gafur, A.; Al Mansoori, S.; Panthakkan, A. Enhanced YOLOv7 Model for Accurate Vehicle Detection from UAV Imagery. In Proceedings of the 2023 International Conference on Innovations in Engineering and Technology (ICIET), Muvattupuzha, India, 13–14 July 2023; pp. 1–4.

- Javid, I.; Ghazali, R.; Saeed, W.; Batool, T.; Al-Wajih, E. CNN with New Spatial Pyramid Pooling and Advanced Filter-Based Techniques: Revolutionizing Traffic Monitoring via Aerial Images. Sustainability 2023, 16, 117.

- Tanasa, I.Y.; Budiarti, D.H.; Guno, Y.; Yunata, A.S.; Wibowo, M.; Hidayat, A.; Purnamastuti, F.N.; Purwanto, A.; Wicaksono, G.; Domiri, D.D. U-Net Utilization on segmentation of Aerial Captured Images. In Proceedings of the 2023 International Conference on Radar, Antenna, Microwave, Electronics, and Telecommunications (ICRAMET), Bandung, Indonesia, 15–16 November 2023; pp. 107–112.

- Mokayed, H.; Shivakumara, P.; Woon, H.H.; Kankanhalli, M.; Lu, T.; Pal, U. A new DCT-PCM method for license plate number detection in drone images. Pattern Recognit. Lett. 2021, 148, 45–53.

- Chen, Z.; Yang, J.; Feng, Z.; Zhu, H. RailFOD23: A dataset for foreign object detection on railroad transmission lines. Sci. Data 2024, 11, 72.

- McKean, H.; Moll, V. Elliptic Curves: Function Theory, Geometry, Arithmetic; Cambridge University Press: Cambridge, UK, 1999.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).