Vehicle Detection Algorithms for Autonomous Driving: A Review

Abstract

1. Introduction

2. Preliminaries for Vehicle Detection

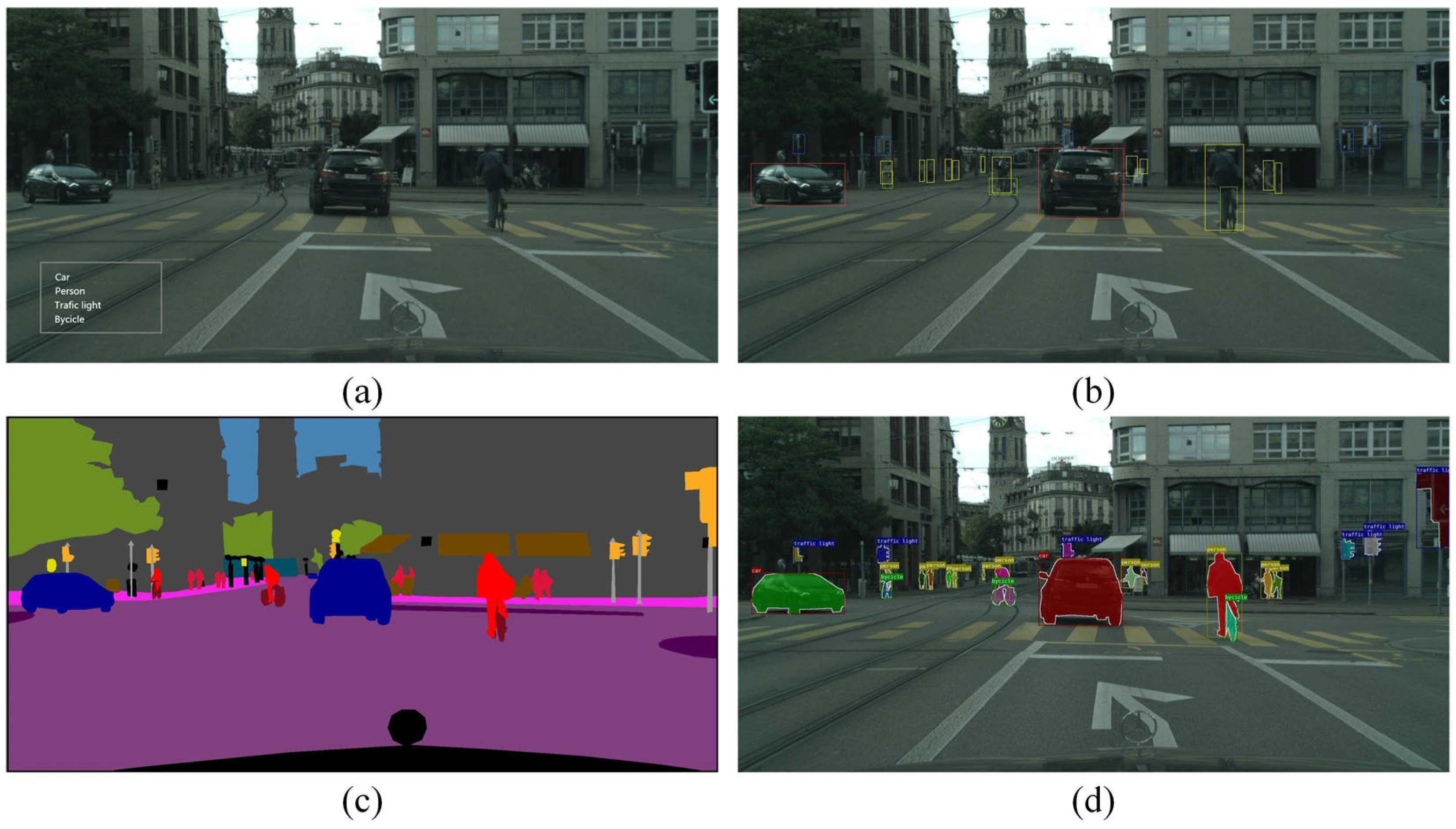

2.1. Tasks

2.2. Evaluation Metrics

2.3. Datasets

3. Vehicle Detection Algorithms Based on Machine Vision

3.1. Traditional Methods for Vehicle Detection

- Color: Because a vehicle's colors are continuous and concentrated within the image, vehicles can be separated from the background by working in suitable color channels and setting appropriate segmentation thresholds [40,41]. However, color-based techniques are sensitive to illumination changes and specular reflections [42].

- Symmetry: Most cars have symmetrical rear ends. Searching the regions of interest (ROIs) in an image for highly symmetric areas therefore helps distinguish vehicles from non-vehicle objects. Symmetry can also be used to refine vehicle bounding boxes and, in the hypothesis verification (HV) stage, to confirm whether an ROI contains a vehicle [43]. However, computing symmetry adds processing time and reduces detection efficiency.

- Edges: Vehicle features such as silhouettes, bumpers, rear windows, and license plates exhibit strong linear edges in both the vertical and horizontal directions [44]. Extracting these characteristic edges from the image helps locate the vehicle’s bounding box [45,46]. However, vehicle edges can coincide with textured lines in the image background, which can produce false positives in some scenes.

- Texture: Road surfaces typically have a relatively uniform texture, whereas car surfaces are less uniform because they contain highly varied regions. Vehicles can therefore be detected indirectly by distinguishing between these two texture patterns [47]. However, relying on texture features to detect vehicles may yield low detection accuracy.

- Shadows: In bright daylight, vehicles on the road cast stable shadows beneath them. The shadowed region has a clearly lower gray value than the surrounding road surface, so thresholding can extract the under-vehicle shadow as the vehicle ROI during the hypothesis generation (HG) stage [48,49]. However, because it relies on such lighting conditions, this approach applies to a relatively limited range of scenarios.
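
As a minimal sketch of two of the cues above, the following Python code thresholds a grayscale image to extract an under-vehicle shadow ROI and scores the left-right symmetry of an image patch. The function names, the list-of-rows image representation, and the threshold rule (mean road intensity minus k standard deviations, estimated from rows assumed to contain only road) are illustrative assumptions and are not taken from the cited works.

```python
from statistics import mean, pstdev
from typing import List, Optional, Sequence, Tuple

Gray = List[List[float]]  # grayscale image: rows of intensities in [0, 255]


def shadow_mask(gray: Gray, road_rows: Sequence[int], k: float = 2.0) -> List[List[bool]]:
    """Flag pixels darker than (road mean - k * road std) as candidate shadow.

    Assumption: the rows listed in road_rows show only free road surface.
    """
    road = [value for r in road_rows for value in gray[r]]
    threshold = mean(road) - k * pstdev(road)
    return [[value < threshold for value in row] for row in gray]


def mask_roi(mask: List[List[bool]]) -> Optional[Tuple[int, int, int, int]]:
    """Return (top, left, bottom, right) of the flagged pixels, or None if none."""
    hits = [(r, c) for r, row in enumerate(mask) for c, flag in enumerate(row) if flag]
    if not hits:
        return None
    rows = [r for r, _ in hits]
    cols = [c for _, c in hits]
    return min(rows), min(cols), max(rows), max(cols)


def symmetry_score(patch: Gray) -> float:
    """Score left-right mirror symmetry in [0, 1]; 1.0 is perfectly symmetric."""
    diffs = [abs(row[c] - row[-1 - c]) for row in patch for c in range(len(row) // 2)]
    return 1.0 if not diffs else 1.0 - mean(diffs) / 255.0


# Synthetic 6x8 frame: road near gray level 120, dark shadow in rows 3-4, columns 2-5.
frame = [[118.0 if (r + c) % 2 == 0 else 122.0 for c in range(8)] for r in range(6)]
for r in (3, 4):
    for c in range(2, 6):
        frame[r][c] = 20.0

roi = mask_roi(shadow_mask(frame, road_rows=[0, 1]))
print(roi)
```

In a hypothesis generation and verification pipeline, a box such as `roi` would serve as a candidate region, and a symmetry score computed over the image area above the shadow could then help accept or reject it. Any acceptance threshold would need to be tuned on real data.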

3.2. Machine Learning-Based Methods for Vehicle Detection

3.2.1. Feature Extraction

3.2.2. Classifier

3.3. Deep Learning-Based Methods for Vehicle Detection

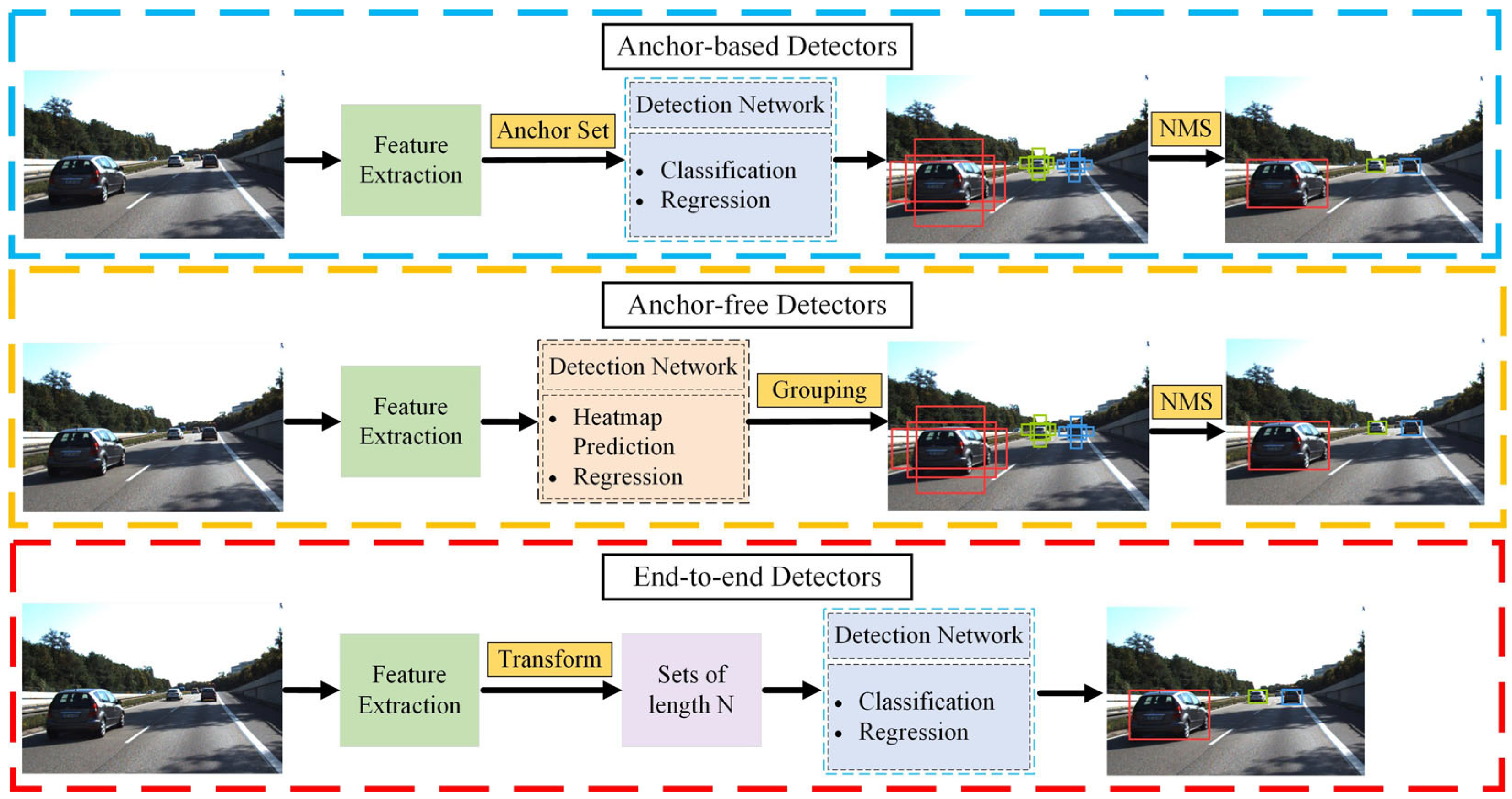

3.3.1. Object-Detection-Based Methods

- (1) Anchor-Based Detectors

- (2) Anchor-Free Detectors

- (3) End-To-End Detectors

3.3.2. Segmentation-Based Methods

4. Vehicle Detection Algorithms Based on Radar and LiDAR

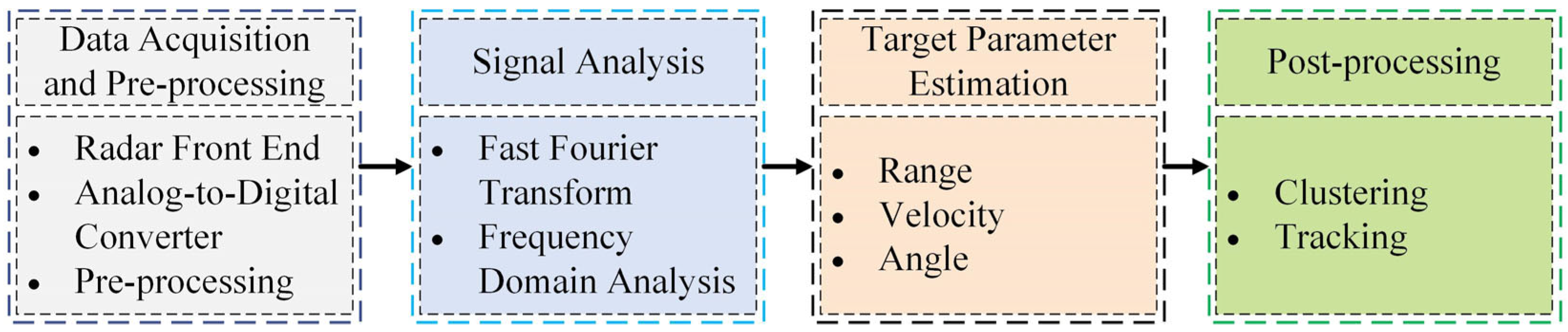

4.1. Millimeter-Wave Radar-Based Methods for Vehicle Detection

4.1.1. Target-Level Radar

4.1.2. Image-Level Radar

4.2. LiDAR-Based Methods for Vehicle Detection

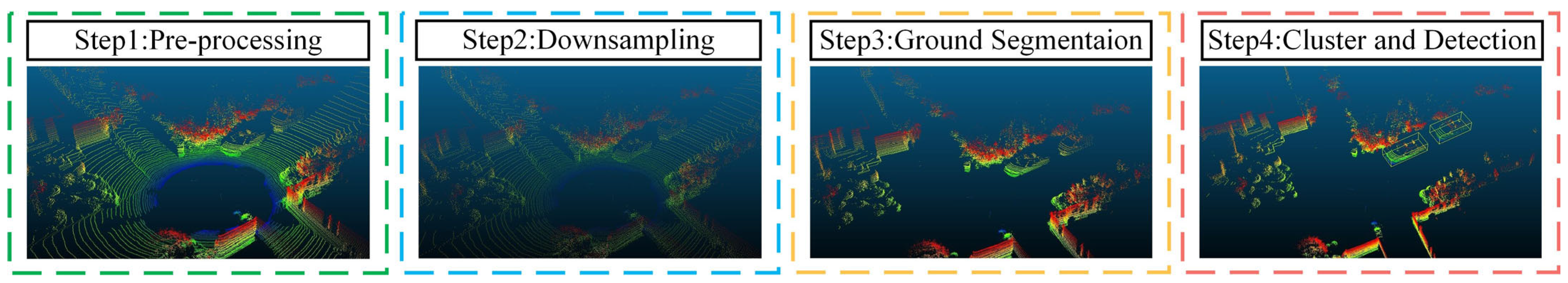

4.2.1. Traditional Methods

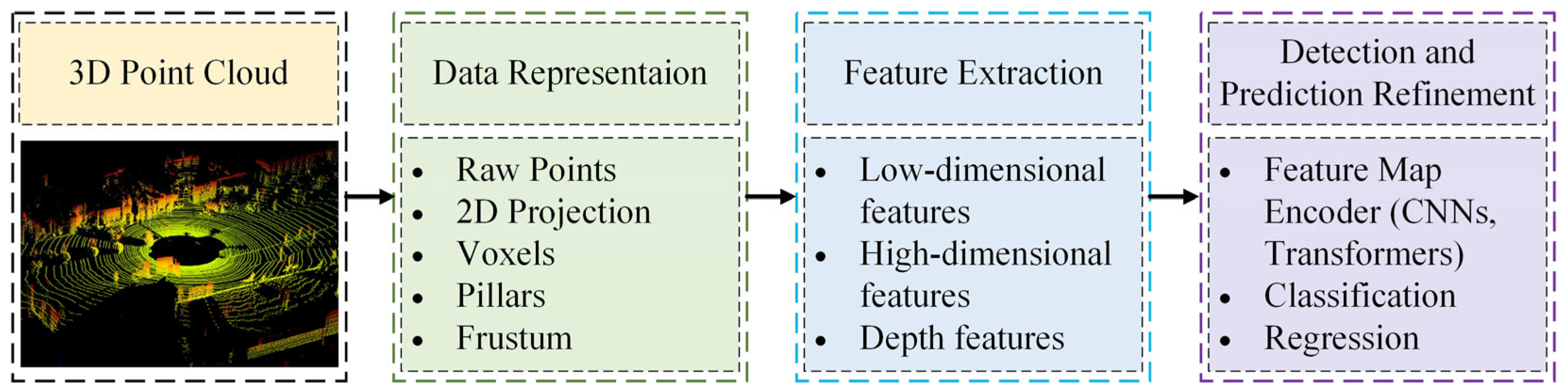

4.2.2. Deep Learning-Based Methods

- (1) Point-Based Methods

- (2) Projection-Based Methods

- (3) Voxel-Based Methods

4.2.3. Point Cloud Segmentation-Based Methods

- (1) Point-Based Methods

- (2) Projection-Based Methods

- (3) Voxel-Based Methods

5. Vehicle Detection Algorithms Based on Multi-Sensor Fusion

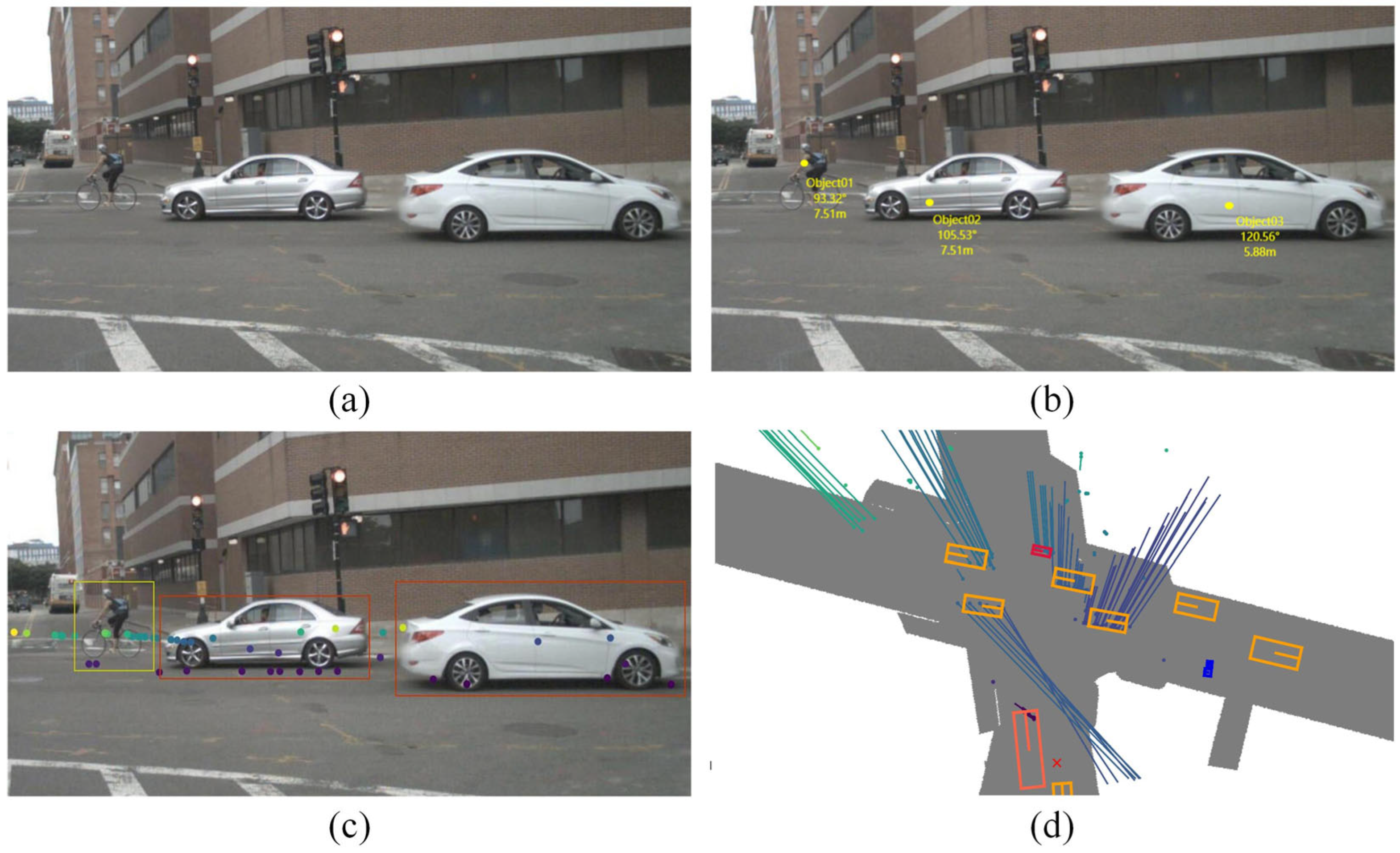

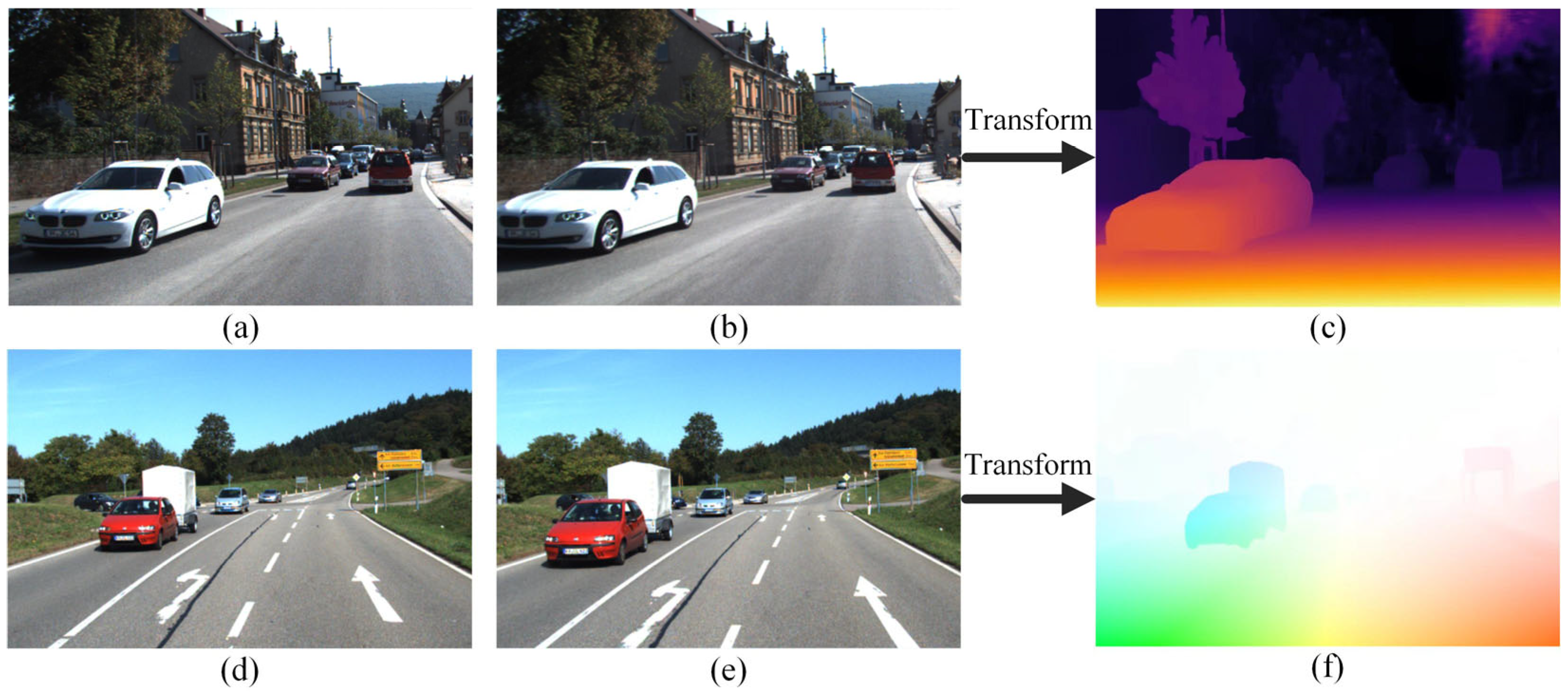

5.1. Stereo Vision-Based Methods for Vehicle Detection

- (1) Appearance-Based Methods

- (2) Motion-Based Methods

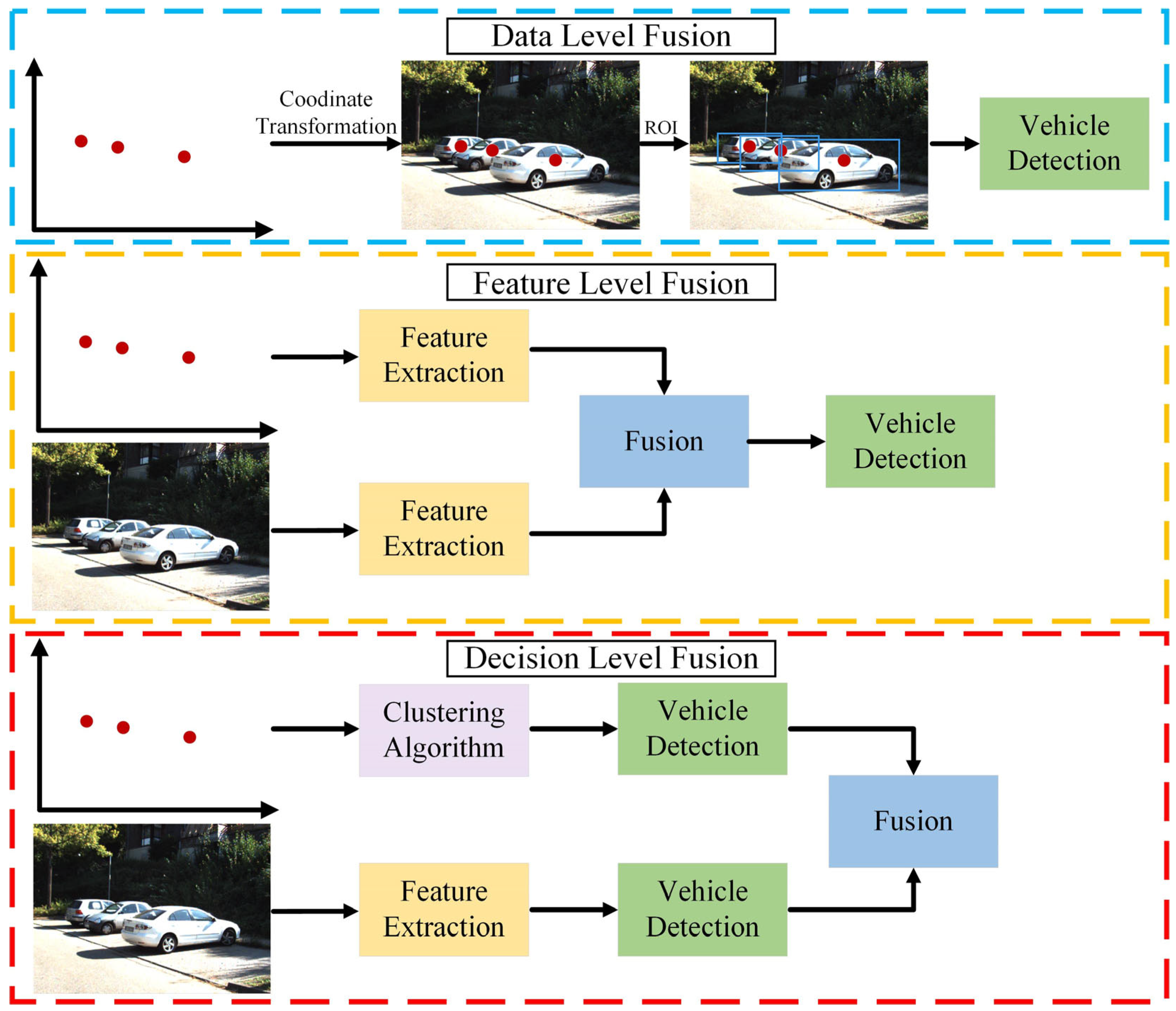

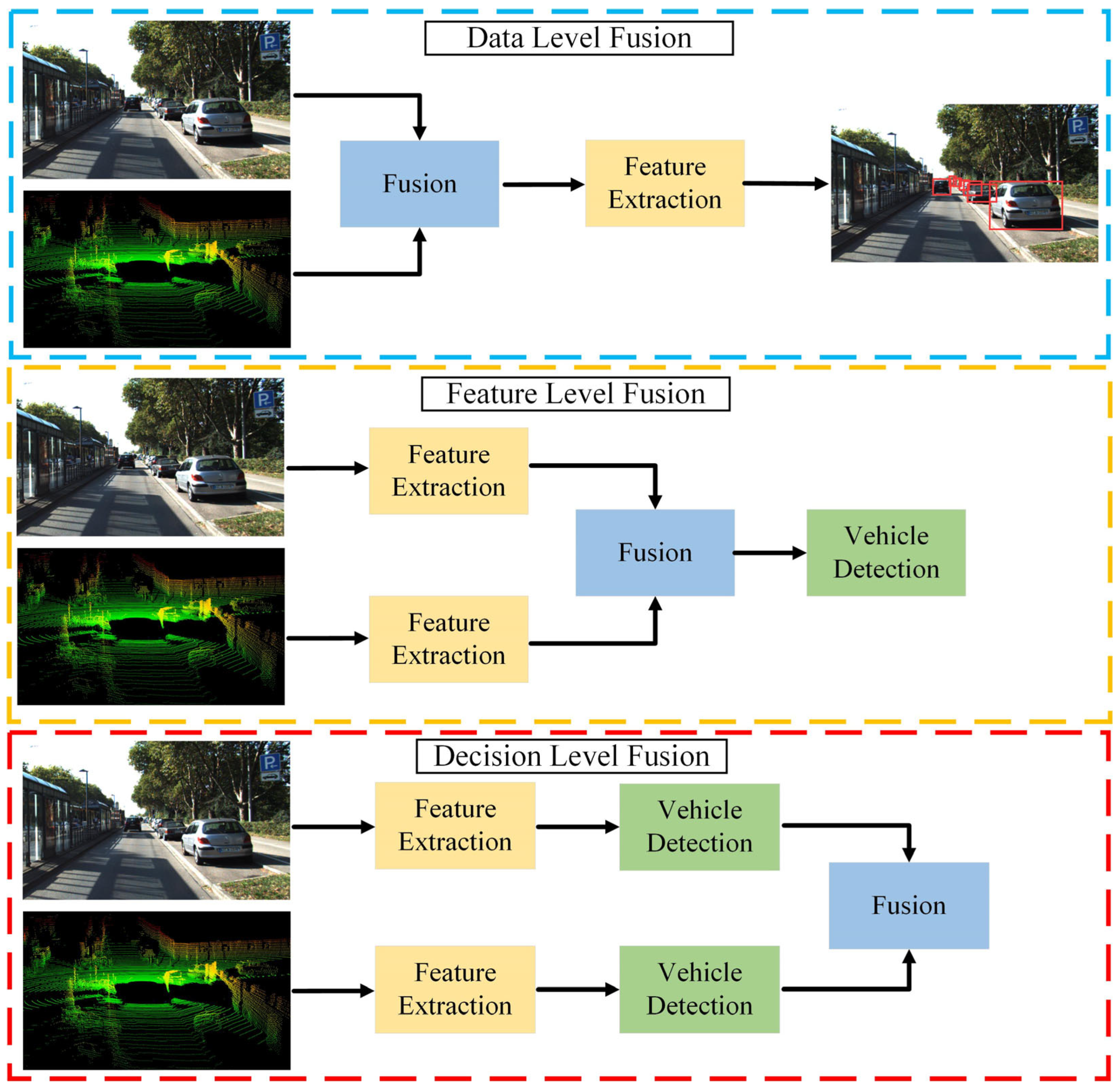

5.2. Fusion of Radar and Vision-Based Methods for Vehicle Detection

- (1) Data-Level Fusion

- (2) Feature-Level Fusion

- (3) Decision-Level Fusion

5.3. Fusion of LiDAR and Vision-Based Methods for Vehicle Detection

- (1) Data-Level Fusion

- (2) Feature-Level Fusion

- (3) Decision-Level Fusion

5.4. Multi-Sensor-Based Methods for Vehicle Detection

6. Discussion and Future Trends

6.1. Discussion

- (1) Machine Vision

- (2) Millimeter-Wave Radar

- (3) LiDAR

- (4) Sensor Settings

6.2. Future Trends

- (1) Balancing Speed and Accuracy of Algorithms

- (2) Multi-Sensor Fusion Strategy

- (3) Multi-tasking Algorithms

- (4) Unsupervised Learning

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Kukkala, V.K.; Tunnell, J.; Pasricha, S.; Bradley, T. Advanced driver-assistance systems: A path toward autonomous vehicles. IEEE Consum. Electron. Mag. 2018, 7, 18–25.

- Crayton, T.J.; Meier, B.M. Autonomous vehicles: Developing a public health research agenda to frame the future of transportation policy. J. Transp. Health 2017, 6, 245–252.

- Shadrin, S.S.; Ivanova, A.A. Analytical review of standard SAE J3016 «taxonomy and definitions for terms related to driving automation systems for on-road motor vehicles» with latest updates. Avtomob. Doroga Infrastrukt. 2019, 3, 10.

- Karangwa, J.; Liu, J.; Zeng, Z. Vehicle detection for autonomous driving: A review of algorithms and datasets. IEEE Trans. Intell. Transp. Syst. 2023, 24, 11568–11594.

- Alam, F.; Mehmood, R.; Katib, I.; Altowaijri, S.M.; Albeshri, A. TAAWUN: A decision fusion and feature specific road detection approach for connected autonomous vehicles. Mob. Netw. Appl. 2023, 28, 636–652.

- Sivaraman, S.; Trivedi, M.M. A review of recent developments in vision-based vehicle detection. In Proceedings of the 2013 IEEE Intelligent Vehicles Symposium (IV), Gold Coast City, Australia, 23–26 June 2013; pp. 310–315.

- Bouguettaya, A.; Zarzour, H.; Kechida, A.; Taberkit, A.M. Vehicle detection from UAV imagery with deep learning: A review. IEEE Trans. Neural Netw. Learn. Syst. 2021, 33, 6047–6067.

- Gormley, M.; Walsh, T.; Fuller, R. Risks in the Driving of Emergency Service Vehicles. Ir. J. Psychol. 2008, 29, 7–18.

- Chadwick, S.; Maddern, W.; Newman, P. Distant vehicle detection using radar and vision. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 8311–8317.

- Sivaraman, S.; Trivedi, M.M. Looking at Vehicles on the Road: A Survey of Vision-Based Vehicle Detection, Tracking, and Behavior Analysis. IEEE Trans. Intell. Transp. Syst. 2013, 14, 1773–1795.

- Sun, Z.; Bebis, G.; Miller, R. On-Road Vehicle Detection: A Review. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 694–711.

- Wang, Z.; Zhan, J.; Duan, C.; Guan, X.; Lu, P.; Yang, K. A review of vehicle detection techniques for intelligent vehicles. IEEE Trans. Neural Netw. Learn. Syst. 2022, 34, 3811–3831.

- Liu, Q.; Li, Z.; Yuan, S.; Zhu, Y.; Li, X. Review on vehicle detection technology for unmanned ground vehicles. Sensors 2021, 21, 1354.

- Wei, Z.; Zhang, F.; Chang, S.; Liu, Y.; Wu, H.; Feng, Z. Mmwave radar and vision fusion for object detection in autonomous driving: A review. Sensors 2022, 22, 2542.

- The KITTI Vision Benchmark Suite. Available online: https://www.cvlibs.net/datasets/kitti (accessed on 7 May 2024).

- Cityscapes Dataset. Available online: https://www.cityscapes-dataset.com (accessed on 7 May 2024).

- Oxford Radar RobotCar Dataset. Available online: https://oxford-robotics-institute.github.io/radar-robotcar-dataset (accessed on 7 May 2024).

- Mapillary Vistas Dataset. Available online: https://www.mapillary.com (accessed on 7 May 2024).

- Berkeley DeepDrive. Available online: http://bdd-data.berkeley.edu (accessed on 7 May 2024).

- ApolloScape Advanced Open Tools and Datasets for Autonomous Driving. Available online: https://apolloscape.auto (accessed on 7 May 2024).

- KAIST Multispectral Pedestrian Detection Benchmark. Available online: http://multispectral.kaist.ac.kr (accessed on 7 May 2024).

- Waymo Open Dataset. Available online: https://waymo.com/open (accessed on 7 May 2024).

- Self-Driving Motion Prediction Dataset. Available online: https://github.com/woven-planet/l5kit (accessed on 7 May 2024).

- Argoverse 1. Available online: https://www.argoverse.org/av1.html (accessed on 7 May 2024).

- D2-City. Available online: https://www.v7labs.com/open-datasets/d2-city (accessed on 7 May 2024).

- H3D Honda 3D Dataset. Available online: https://usa.honda-ri.com//H3D (accessed on 7 May 2024).

- nuScenes. Available online: https://www.nuscenes.org/nuscenes (accessed on 7 May 2024).

- Canadian Adverse Driving Conditions Dataset. Available online: http://cadcd.uwaterloo.ca (accessed on 7 May 2024).

- Audi Autonomous Driving Dataset. Available online: https://www.a2d2.audi/a2d2/en.html (accessed on 7 May 2024).

- A*3D: An Autonomous Driving Dataset in Challeging Environments. Available online: https://github.com/I2RDL2/ASTAR-3D (accessed on 7 May 2024).

- Heriot-Watt RADIATE Dataset. Available online: https://pro.hw.ac.uk/radiate (accessed on 7 May 2024).

- ACDC DATASET. Available online: https://acdc.vision.ee.ethz.ch (accessed on 7 May 2024).

- KITTI-360: A Large-Scale Dataset with 3D&2D Annotations. Available online: https://www.cvlibs.net/datasets/kitti-360 (accessed on 7 May 2024).

- SHIFT DATASET: A Synthetic Driving Dataset For Continuous Multi-Task Domain Adaptation. Available online: https://www.vis.xyz/shift (accessed on 7 May 2024).

- Argoverse 2. Available online: https://www.argoverse.org/av2.html (accessed on 7 May 2024).

- V2v4real: The First Large-Scale, Real-World Multimodal Dataset for Vehicle-to-Vehicle (V2V) Perception. Available online: https://mobility-lab.seas.ucla.edu/v2v4real (accessed on 7 May 2024).

- Bertozzi, M.; Broggi, A.; Fascioli, A. Vision-based intelligent vehicles: State of the art and perspectives. Robot. Auton. Syst. 2000, 32, 1–16.

- Endsley, M.R. Autonomous driving systems: A preliminary naturalistic study of the Tesla Model S. J. Cognit. Eng. Decis. Making 2017, 11, 225–238.

- Yoffie, D.B. Mobileye: The Future of Driverless Cars; Harvard Business School Case; Harvard Business Review Press: Cambridge, MA, USA, 2014; pp. 421–715.

- Russell, A.; Zou, J.J. Vehicle detection based on color analysis. In Proceedings of the 2012 International Symposium on Communications and Information Technologies (ISCIT), Gold Coast, Australia, 2–5 October 2012; pp. 620–625.

- Shao, H.X.; Duan, X.M. Video vehicle detection method based on multiple color space information fusion. Adv. Mater. Res. 2012, 546, 721–726.

- Chen, H.-T.; Wu, Y.-C.; Hsu, C.-C. Daytime preceding vehicle brake light detection using monocular vision. IEEE Sens. J. 2015, 16, 120–131.

- Teoh, S.S.; Bräunl, T. Symmetry-based monocular vehicle detection system. Mach. Vis. Appl. 2012, 23, 831–842.

- Tsai, W.-K.; Wu, S.-L.; Lin, L.-J.; Chen, T.-M.; Li, M.-H. Edge-based forward vehicle detection method for complex scenes. In Proceedings of the 2014 IEEE International Conference on Consumer Electronics-Taiwan, Taipei, Taiwan, 26–28 May 2014; pp. 173–174.

- Mu, K.; Hui, F.; Zhao, X.; Prehofer, C. Multiscale edge fusion for vehicle detection based on difference of Gaussian. Optik 2016, 127, 4794–4798.

- Nur, S.A.; Ibrahim, M.; Ali, N.; Nur, F.I.Y. Vehicle detection based on underneath vehicle shadow using edge features. In Proceedings of the 2016 6th IEEE International Conference on Control System, Computing and Engineering (ICCSCE), Penang, Malaysia, 25–27 November 2016; pp. 407–412.

- Creusot, C.; Munawar, A. Real-time small obstacle detection on highways using compressive RBM road reconstruction. In Proceedings of the 2015 IEEE Intelligent Vehicles Symposium (IV), Seoul, Republic of Korea, 28 June–1 July 2015; pp. 162–167.

- Chen, X.; Chen, H.; Xu, H. Vehicle detection based on multifeature extraction and recognition adopting RBF neural network on ADAS system. Complexity 2020, 2020, 8842297.

- Ibarra-Arenado, M.; Tjahjadi, T.; Pérez-Oria, J.; Robla-Gómez, S.; Jiménez-Avello, A. Shadow-based vehicle detection in urban traffic. Sensors 2017, 17, 975.

- Kosaka, N.; Ohashi, G. Vision-based nighttime vehicle detection using CenSurE and SVM. IEEE Trans. Intell. Transp. Syst. 2015, 16, 2599–2608.

- Satzoda, R.K.; Trivedi, M.M. Looking at vehicles in the night: Detection and dynamics of rear lights. IEEE Trans. Intell. Transp. Syst. 2016, 20, 4297–4307.

- Gao, L.; Li, C.; Fang, T.; Xiong, Z. Vehicle detection based on color and edge information. In Proceedings of the Image Analysis and Recognition: 5th International Conference, Berlin/Heidelberg, Germany, 25–27 June 2008; pp. 142–150.

- Pradeep, C.S.; Ramanathan, R. An improved technique for night-time vehicle detection. In Proceedings of the 2018 International Conference on Advances in Computing, Communications and Informatics (ICACCI), Karnataka, India, 19–22 September 2018; pp. 508–513.

- Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; pp. 886–893.

- Yan, G.; Yu, M.; Yu, Y.; Fan, L. Real-time vehicle detection using histograms of oriented gradients and AdaBoost classification. Optik 2016, 127, 7941–7951.

- Khairdoost, N.; Monadjemi, S.A.; Jamshidi, K. Front and rear vehicle detection using hypothesis generation and verification. Signal Image Process. 2013, 4, 31.

- Cheon, M.; Lee, W.; Yoon, C.; Park, M. Vision-based vehicle detection system with consideration of the detecting location. IEEE Trans. Intell. Transp. Syst. 2012, 13, 1243–1252.

- Felzenszwalb, P.F.; Girshick, R.B.; McAllester, D.; Ramanan, D. Object detection with discriminatively trained part-based models. IEEE Trans. Pattern Anal. Mach. Intell. 2009, 32, 1627–1645.

- Wen, X.; Shao, L.; Fang, W.; Xue, Y. Efficient feature selection and classification for vehicle detection. IEEE Trans. Circuits Syst. Video Technol. 2014, 25, 508–517.

- Ojala, T.; Pietikainen, M.; Harwood, D. Performance evaluation of texture measures with classification based on Kullback discrimination of distributions. In Proceedings of the 12th International Conference on Pattern Recognition, Jerusalem, Israel, 9–13 October 1994; pp. 582–585.

- Feichtinger, H.G.; Strohmer, T. Gabor Analysis and Algorithms: Theory and Applications; Springer: New York, NY, USA, 2012.

- Bay, H.; Tuytelaars, T.; Van Gool, L. Surf: Speeded up robust features. In Proceedings of the European Conference on Computer Vision, Graz, Austria, 7–13 May 2006; pp. 404–417.

- Yang, X.; Yang, Y. A method of efficient vehicle detection based on HOG-LBP. Comput. Eng. 2014, 40, 210–214.

- Arunmozhi, A.; Park, J. Comparison of HOG, LBP and Haar-like features for on-road vehicle detection. In Proceedings of the 2018 IEEE International Conference on Electro/Information Technology (EIT), Rochester, MI, USA, 3–5 May 2018; pp. 0362–0367.

- Webb, G.I.; Zheng, Z. Multistrategy ensemble learning: Reducing error by combining ensemble learning techniques. IEEE Trans. Knowl. Data Eng. 2004, 16, 980–991.

- Dong, X.; Yu, Z.; Cao, W.; Shi, Y.; Ma, Q. A survey on ensemble learning. Front. Comput. Sci. 2020, 14, 241–258.

- Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. Vision meets robotics: The kitti dataset. Int. J. Robot. Res. 2013, 32, 1231–1237.

- Ali, A.M.; Eltarhouni, W.I.; Bozed, K.A. On-road vehicle detection using support vector machine and decision tree classifications. In Proceedings of the 6th International Conference on Engineering & MIS 2020, Istanbul, Turkey, 4–6 July 2020; pp. 1–5.

- Sivaraman, S.; Trivedi, M.M. Active learning for on-road vehicle detection: A comparative study. Mach. Vis. Appl. 2014, 25, 599–611.

- Hsieh, J.-W.; Chen, L.-C.; Chen, D.-Y. Symmetrical SURF and its applications to vehicle detection and vehicle make and model recognition. IEEE Trans. Intell. Transp. Syst. 2014, 15, 6–20.

- Sun, Z.; Bebis, G.; Miller, R. Monocular precrash vehicle detection: Features and classifiers. IEEE Trans. Image Process. 2006, 15, 2019–2034.

- Ho, W.T.; Lim, H.W.; Tay, Y.H. Two-stage license plate detection using gentle Adaboost and SIFT-SVM. In Proceedings of the 2009 First Asian Conference on Intelligent Information and Database Systems, Quang Binh, Vietnam, 1–3 April 2009; pp. 109–114.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. In Proceedings of the Advances in Neural Information Processing Systems, Montréal, QC, Canada, 7–12 December 2015; pp. 91–99.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Girshick, R. Fast r-cnn. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask r-cnn. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Spatial pyramid pooling in deep convolutional networks for visual recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 37, 1904–1916.

- Dai, J.; Li, Y.; He, K.; Sun, J. R-fcn: Object detection via region-based fully convolutional networks. In Proceedings of the Advances in Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; pp. 379–387.

- Lin, T.-Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

- Cai, Z.; Vasconcelos, N. Cascade r-cnn: Delving into high quality object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6154–6162.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. Ssd: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37.

- Zhao, Q.; Sheng, T.; Wang, Y.; Tang, Z.; Chen, Y.; Cai, L.; Ling, H. M2det: A single-shot object detector based on multi-level feature pyramid network. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; pp. 9259–9266.

- Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

- Redmon, J.; Farhadi, A. Yolov3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. Yolov4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- Ultralytics YOLOv5. Available online: https://github.com/ultralytics/yolov5 (accessed on 7 May 2024).

- Wang, C.-Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 7464–7475.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Law, H.; Deng, J. Cornernet: Detecting objects as paired keypoints. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 734–750.

- Yang, Z.; Liu, S.; Hu, H.; Wang, L.; Lin, S. Reppoints: Point set representation for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Long Beach, CA, USA, 15–20 June 2019; pp. 9657–9666.

- Duan, K.; Bai, S.; Xie, L.; Qi, H.; Huang, Q.; Tian, Q. Centernet: Keypoint triplets for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Long Beach, CA, USA, 15–20 June 2019; pp. 6569–6578.

- Zhou, X.; Zhuo, J.; Krahenbuhl, P. Bottom-up object detection by grouping extreme and center points. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 850–859.

- Lu, X.; Li, B.; Yue, Y.; Li, Q.; Yan, J. Grid r-cnn. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 7363–7372.

- Zhu, C.; He, Y.; Savvides, M. Feature selective anchor-free module for single-shot object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 840–849.

- Tian, Z.; Shen, C.; Chen, H.; He, T. Fcos: Fully convolutional one-stage object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9627–9636.

- Wang, J.; Chen, K.; Yang, S.; Loy, C.C.; Lin, D. Region proposal by guided anchoring. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2965–2974.

- Kong, T.; Sun, F.; Liu, H.; Jiang, Y.; Li, L.; Shi, J. Foveabox: Beyound anchor-based object detection. IEEE Trans. Image Process. 2020, 29, 7389–7398.

- Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. Yolox: Exceeding yolo series in 2021. arXiv 2021, arXiv:2107.08430.

- Reis, D.; Kupec, J.; Hong, J.; Daoudi, A. Real-Time Flying Object Detection with YOLOv8. arXiv 2023, arXiv:2305.09972.

- Wang, C.-Y.; Yeh, I.-H.; Liao, H.-Y.M. YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information. arXiv 2024, arXiv:2402.13616.

- Wang, J.; Song, L.; Li, Z.; Sun, H.; Sun, J.; Zheng, N. End-to-end object detection with fully convolutional network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 10–25 June 2021; pp. 15849–15858.

- Sun, P.; Zhang, R.; Jiang, Y.; Kong, T.; Xu, C.; Zhan, W.; Tomizuka, M.; Li, L.; Yuan, Z.; Wang, C. Sparse r-cnn: End-to-end object detection with learnable proposals. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 10–25 June 2021; pp. 14454–14463.

- Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 213–229.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008.

- Sutskever, I.; Vinyals, O.; Le, Q.V. Sequence to sequence learning with neural networks. In Proceedings of the Annual Conference on Neural Information Processing Systems 2014, Montreal, QC, Canada, 8–13 December 2014; pp. 3104–3112.

- Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable detr: Deformable transformers for end-to-end object detection. arXiv 2020, arXiv:2010.04159.

- Wang, Y.; Zhang, X.; Yang, T.; Sun, J. Anchor detr: Query design for transformer-based detector. In Proceedings of the AAAI Conference on Artificial Intelligence, Palo Alto, CA, USA, 22 February–1 March 2022; pp. 2567–2575.

- Lv, W.; Xu, S.; Zhao, Y.; Wang, G.; Wei, J.; Cui, C.; Du, Y.; Dang, Q.; Liu, Y. Detrs beat yolos on real-time object detection. arXiv 2023, arXiv:2304.08069.

- Zhu, Y.; Zhao, C.; Wang, J.; Zhao, X.; Wu, Y.; Lu, H. Couplenet: Coupling global structure with local parts for object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 4126–4134.

- Li, Z.; Peng, C.; Yu, G.; Zhang, X.; Deng, Y.; Sun, J. Detnet: A backbone network for object detection. arXiv 2018, arXiv:1804.06215.

- Bodla, N.; Singh, B.; Chellappa, R.; Davis, L.S. Soft-NMS--improving object detection with one line of code. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 5561–5569.

- Huang, J.; Rathod, V.; Sun, C.; Zhu, M.; Korattikara, A.; Fathi, A.; Fischer, I.; Wojna, Z.; Song, Y.; Guadarrama, S. Speed/accuracy trade-offs for modern convolutional object detectors. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7310–7311.

- Singh, B.; Davis, L.S. An analysis of scale invariance in object detection snip. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 3578–3587.

- Fu, C.-Y.; Liu, W.; Ranga, A.; Tyagi, A.; Berg, A.C. Dssd: Deconvolutional single shot detector. arXiv 2017, arXiv:1701.06659.

- Zhang, S.; Wen, L.; Bian, X.; Lei, Z.; Li, S.Z. Single-shot refinement neural network for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4203–4212.

- Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W. YOLOv6: A single-stage object detection framework for industrial applications. arXiv 2022, arXiv:2209.02976.

- Yao, Z.; Ai, J.; Li, B.; Zhang, C. Efficient detr: Improving end-to-end object detector with dense prior. arXiv 2021, arXiv:2104.01318.

- Zhou, Z.H. A brief introduction to weakly supervised learning. Natl. Sci. Rev. 2017, 5, 44–53.

- Pinheiro, P.O.O.; Collobert, R.; Dollár, P. Learning to segment object candidates. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; pp. 1990–1998.

- Pinheiro, P.O.; Lin, T.-Y.; Collobert, R.; Dollár, P. Learning to refine object segments. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 75–91.

- Zagoruyko, S.; Lerer, A.; Lin, T.-Y.; Pinheiro, P.O.; Gross, S.; Chintala, S.; Dollár, P. A multipath network for object detection. arXiv 2016, arXiv:1604.02135.

- Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. Segnet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

- Chen, L.-C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Semantic image segmentation with deep convolutional nets and fully connected crfs. arXiv 2014, arXiv:1412.7062.

- Chen, L.-C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Chen, L.-C.; Papandreou, G.; Schroff, F.; Adam, H. Rethinking atrous convolution for semantic image segmentation. arXiv 2017, arXiv:1706.05587.

- Chen, L.-C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818.

- Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

- Lin, G.; Milan, A.; Shen, C.; Reid, I. Refinenet: Multi-path refinement networks for high-resolution semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1925–1934.

- Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid scene parsing network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2881–2890.

- Zhao, H.; Qi, X.; Shen, X.; Shi, J.; Jia, J. Icnet for real-time semantic segmentation on high-resolution images. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 405–420.

- Luc, P.; Couprie, C.; Chintala, S.; Verbeek, J. Semantic segmentation using adversarial networks. arXiv 2016, arXiv:1611.08408.

- Souly, N.; Spampinato, C.; Shah, M. Semi supervised semantic segmentation using generative adversarial network. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 5688–5696.

- Zheng, S.; Lu, J.; Zhao, H.; Zhu, X.; Luo, Z.; Wang, Y.; Fu, Y.; Feng, J.; Xiang, T.; Torr, P.H. Rethinking semantic segmentation from a sequence-to-sequence perspective with transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 10–25 June 2021; pp. 6881–6890.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Xie, E.; Wang, W.; Yu, Z.; Anandkumar, A.; Alvarez, J.M.; Luo, P. SegFormer: Simple and efficient design for semantic segmentation with transformers. In Proceedings of the Advances in Neural Information Processing Systems, Virtual, 6–14 December 2021; pp. 12077–12090.

- Wan, Q.; Huang, Z.; Lu, J.; Yu, G.; Zhang, L. Seaformer: Squeeze-enhanced axial transformer for mobile semantic segmentation. arXiv 2023, arXiv:2301.13156.

- Mehta, S.; Rastegari, M.; Caspi, A.; Shapiro, L.; Hajishirzi, H. Espnet: Efficient spatial pyramid of dilated convolutions for semantic segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 552–568.

- Li, H.; Xiong, P.; Fan, H.; Sun, J. Dfanet: Deep feature aggregation for real-time semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 9522–9531.

- Wang, Y.; Zhou, Q.; Liu, J.; Xiong, J.; Gao, G.; Wu, X.; Latecki, L.J. Lednet: A lightweight encoder-decoder network for real-time semantic segmentation. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 1860–1864.

- Welch, G.; Bishop, G. An Introduction to the Kalman Filter; University of North Carolina: Chapel Hill, NC, USA, 1995.

- Kim, C.; Li, F.; Ciptadi, A.; Rehg, J.M. Multiple hypothesis tracking revisited. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 4696–4704.

- Roberts, W.; Stoica, P.; Li, J.; Yardibi, T.; Sadjadi, F.A. Iterative adaptive approaches to MIMO radar imaging. IEEE J. Sel. Top. Signal Process. 2010, 4, 5–20.

- Pang, S.; Zeng, Y.; Yang, Q.; Deng, B.; Wang, H.; Qin, Y. Improvement in SNR by adaptive range gates for RCS measurements in the THz region. Electronics 2019, 8, 805. [Google Scholar] [CrossRef]

- Major, B.; Fontijne, D.; Ansari, A.; Teja Sukhavasi, R.; Gowaikar, R.; Hamilton, M.; Lee, S.; Grzechnik, S.; Subramanian, S. Vehicle detection with automotive radar using deep learning on range-azimuth-doppler tensors. In Proceedings of the IEEE/CVF International Conference on Computer Vision Workshops, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1–9. [Google Scholar]

- Sligar, A.P. Machine learning-based radar perception for autonomous vehicles using full physics simulation. IEEE Access 2020, 8, 51470–51476. [Google Scholar] [CrossRef]

- Akita, T.; Mita, S. Object tracking and classification using millimeter-wave radar based on LSTM. In Proceedings of the 2019 IEEE Intelligent Transportation Systems Conference (ITSC), Auckland, New Zealand, 27–30 October 2019; pp. 1110–1115. [Google Scholar]

- Zhao, Z.; Song, Y.; Cui, F.; Zhu, J.; Song, C.; Xu, Z.; Ding, K. Point cloud features-based kernel SVM for human-vehicle classification in millimeter wave radar. IEEE Access 2020, 8, 26012–26021. [Google Scholar] [CrossRef]

- Guan, J.; Madani, S.; Jog, S.; Gupta, S.; Hassanieh, H. Through fog high-resolution imaging using millimeter wave radar. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 11464–11473. [Google Scholar]

- Li, P.; Wang, P.; Berntorp, K.; Liu, H. Exploiting temporal relations on radar perception for autonomous driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 17071–17080. [Google Scholar]

- Huang, Z.; Pan, Z.; Lei, B. Transfer learning with deep convolutional neural network for SAR target classification with limited labeled data. Remote Sens. 2017, 9, 907. [Google Scholar] [CrossRef]

- Kim, W.; Cho, H.; Kim, J.; Kim, B.; Lee, S. YOLO-based simultaneous target detection and classification in automotive FMCW radar systems. Sensors 2020, 20, 2897. [Google Scholar] [CrossRef] [PubMed]

- Douillard, B.; Underwood, J.; Kuntz, N.; Vlaskine, V.; Quadros, A.; Morton, P.; Frenkel, A. On the segmentation of 3D LIDAR point clouds. In Proceedings of the 2011 IEEE International Conference on Robotics and Automation, Shanghai, China, 9–13 May 2011; pp. 2798–2805. [Google Scholar]

- Wen, M.; Cho, S.; Chae, J.; Sung, Y.; Cho, K. Range image-based density-based spatial clustering of application with noise clustering method of three-dimensional point clouds. Int. J. Adv. Robot. Syst. 2018, 15, 1735–1754. [Google Scholar] [CrossRef]

- Lee, S.-M.; Im, J.J.; Lee, B.-H.; Leonessa, A.; Kurdila, A. A real-time grid map generation and object classification for ground-based 3D LIDAR data using image analysis techniques. In Proceedings of the 2010 IEEE International Conference on Image Processing, Hong Kong, China, 26–29 September 2010; pp. 2253–2256. [Google Scholar]

- Reymann, C.; Lacroix, S. Improving LiDAR point cloud classification using intensities and multiple echoes. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 5122–5128. [Google Scholar]

- Bogoslavskyi, I.; Stachniss, C. Efficient online segmentation for sparse 3D laser scans. PFG-J. Photogramm. Remote Sens. Geoinf. Sci. 2017, 85, 41–52. [Google Scholar] [CrossRef]

- Byun, J.; Na, K.-I.; Seo, B.-S.; Roh, M. Drivable road detection with 3D point clouds based on the MRF for intelligent vehicle. In Field and Service Robotics: Results of the 9th International Conference; Springer International Publishing: Berlin/Heidelberg, Germany, 2015; Volume 105, pp. 49–60. [Google Scholar]

- Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395. [Google Scholar] [CrossRef]

- Asvadi, A.; Premebida, C.; Peixoto, P.; Nunes, U. 3D Lidar-based static and moving obstacle detection in driving environments: An approach based on voxels and multi-region ground planes. Robot. Auton. Syst. 2016, 83, 299–311. [Google Scholar] [CrossRef]

- Engelcke, M.; Rao, D.; Wang, D.Z.; Tong, C.H.; Posner, I. Vote3deep: Fast object detection in 3d point clouds using efficient convolutional neural networks. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 1355–1361. [Google Scholar]

- Qi, C.R.; Su, H.; Mo, K.; Guibas, L.J. Pointnet: Deep learning on point sets for 3d classification and segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 652–660. [Google Scholar]

- Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. Pointnet++: Deep hierarchical feature learning on point sets in a metric space. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 5099–5108. [Google Scholar]

- Shi, S.; Wang, X.; Li, H. Pointrcnn: 3d object proposal generation and detection from point cloud. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 770–779. [Google Scholar]

- Yang, Z.; Sun, Y.; Liu, S.; Jia, J. 3dssd: Point-based 3d single stage object detector. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 11040–11048. [Google Scholar]

- Asvadi, A.; Garrote, L.; Premebida, C.; Peixoto, P.; Nunes, U.J. DepthCN: Vehicle detection using 3D-LIDAR and ConvNet. In Proceedings of the 2017 IEEE 20th International Conference on Intelligent Transportation Systems (ITSC), Yokohama, Japan, 16–19 October 2017; pp. 1–6. [Google Scholar]

- Zeng, Y.; Hu, Y.; Liu, S.; Ye, J.; Han, Y.; Li, X.; Sun, N. Rt3d: Real-time 3-d vehicle detection in lidar point cloud for autonomous driving. IEEE Robot. Autom. Lett. 2018, 3, 3434–3440. [Google Scholar] [CrossRef]

- Beltrán, J.; Guindel, C.; Moreno, F.M.; Cruzado, D.; Garcia, F.; De La Escalera, A. Birdnet: A 3d object detection framework from lidar information. In Proceedings of the 2018 21st International Conference on Intelligent Transportation Systems (ITSC), Maui, HI, USA, 4–7 November 2018; pp. 3517–3523. [Google Scholar]

- Barrera, A.; Guindel, C.; Beltrán, J.; García, F. Birdnet+: End-to-end 3d object detection in lidar bird’s eye view. In Proceedings of the 2020 IEEE 23rd International Conference on Intelligent Transportation Systems (ITSC), Rhodes, Greece, 20–23 September 2020; pp. 1–6. [Google Scholar]

- Wang, Y.; Chao, W.-L.; Garg, D.; Hariharan, B.; Campbell, M.; Weinberger, K.Q. Pseudo-lidar from visual depth estimation: Bridging the gap in 3d object detection for autonomous driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 8445–8453. [Google Scholar]

- Qian, R.; Garg, D.; Wang, Y.; You, Y.; Belongie, S.; Hariharan, B.; Campbell, M.; Weinberger, K.Q.; Chao, W.-L. End-to-end pseudo-lidar for image-based 3d object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 5881–5890. [Google Scholar]

- Chen, Y.-N.; Dai, H.; Ding, Y. Pseudo-stereo for monocular 3d object detection in autonomous driving. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 887–897. [Google Scholar]

- Zhou, Y.; Tuzel, O. Voxelnet: End-to-end learning for point cloud based 3d object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4490–4499. [Google Scholar]

- Deng, J.; Shi, S.; Li, P.; Zhou, W.; Zhang, Y.; Li, H. Voxel r-cnn: Towards high performance voxel-based 3d object detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Palo Alto, CA, USA, 2–9 February 2021; pp. 1201–1209. [Google Scholar]

- Shi, S.; Guo, C.; Jiang, L.; Wang, Z.; Shi, J.; Wang, X.; Li, H. Pv-rcnn: Point-voxel feature set abstraction for 3d object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 10529–10538. [Google Scholar]

- Shi, S.; Jiang, L.; Deng, J.; Wang, Z.; Guo, C.; Shi, J.; Wang, X.; Li, H. PV-RCNN++: Point-voxel feature set abstraction with local vector representation for 3D object detection. Int. J. Comput. Vis. 2023, 131, 531–551. [Google Scholar] [CrossRef]

- Chen, Y.; Liu, J.; Zhang, X.; Qi, X.; Jia, J. Voxelnext: Fully sparse voxelnet for 3d object detection and tracking. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 21674–21683. [Google Scholar]

- Liu, M.; Ma, J.; Zheng, Q.; Liu, Y.; Shi, G. 3D Object Detection Based on Attention and Multi-Scale Feature Fusion. Sensors 2022, 22, 3935. [Google Scholar] [CrossRef]

- Hu, J.S.; Kuai, T.; Waslander, S.L. Point density-aware voxels for lidar 3d object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 8469–8478. [Google Scholar]

- Wang, L.; Song, Z.; Zhang, X.; Wang, C.; Zhang, G.; Zhu, L.; Li, J.; Liu, H. SAT-GCN: Self-attention graph convolutional network-based 3D object detection for autonomous driving. Knowl.-Based Syst. 2023, 259, 110080. [Google Scholar] [CrossRef]

- Yang, Z.; Sun, Y.; Liu, S.; Shen, X.; Jia, J. Std: Sparse-to-dense 3d object detector for point cloud. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1951–1960. [Google Scholar]

- Shi, S.; Wang, Z.; Shi, J.; Wang, X.; Li, H. From points to parts: 3d object detection from point cloud with part-aware and part-aggregation network. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 2647–2664. [Google Scholar] [CrossRef] [PubMed]

- He, C.; Zeng, H.; Huang, J.; Hua, X.-S.; Zhang, L. Structure aware single-stage 3d object detection from point cloud. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 11873–11882. [Google Scholar]

- Mao, J.; Niu, M.; Bai, H.; Liang, X.; Xu, H.; Xu, C. Pyramid r-cnn: Towards better performance and adaptability for 3d object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual Event, 11–17 October 2021; pp. 2723–2732. [Google Scholar]

- Yang, J.; Shi, S.; Wang, Z.; Li, H.; Qi, X. St3d: Self-training for unsupervised domain adaptation on 3d object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 10368–10378. [Google Scholar]

- Chen, C.; Chen, Z.; Zhang, J.; Tao, D. Sasa: Semantics-augmented set abstraction for point-based 3d object detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Palo Alto, CA, USA, 22 February–1 March 2022; pp. 221–229. [Google Scholar]

- Zhang, L.; Dong, R.; Tai, H.-S.; Ma, K. Pointdistiller: Structured knowledge distillation towards efficient and compact 3d detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 21791–21801. [Google Scholar]

- Xiong, S.; Li, B.; Zhu, S. DCGNN: A single-stage 3D object detection network based on density clustering and graph neural network. Complex Intell. Syst. 2023, 9, 3399–3408. [Google Scholar] [CrossRef]

- Yang, B.; Luo, W.; Urtasun, R. Pixor: Real-time 3d object detection from point clouds. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7652–7660. [Google Scholar]

- Simon, M.; Milz, S.; Amende, K.; Gross, H.-M. Complex-yolo: An euler-region-proposal for real-time 3d object detection on point clouds. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 197–209. [Google Scholar]

- Zhang, X.; Wang, L.; Zhang, G.; Lan, T.; Zhang, H.; Zhao, L.; Li, J.; Zhu, L.; Liu, H. RI-Fusion: 3D object detection using enhanced point features with range-image fusion for autonomous driving. IEEE Trans. Instrum. Meas. 2022, 72, 1–13. [Google Scholar] [CrossRef]

- Li, B. 3d fully convolutional network for vehicle detection in point cloud. In Proceedings of the 2017 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 1513–1518. [Google Scholar]

- Yan, Y.; Mao, Y.; Li, B. Second: Sparsely embedded convolutional detection. Sensors 2018, 18, 3337. [Google Scholar] [CrossRef] [PubMed]

- Ye, M.; Xu, S.; Cao, T. Hvnet: Hybrid voxel network for lidar based 3d object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 1631–1640. [Google Scholar]

- Liu, Z.; Zhao, X.; Huang, T.; Hu, R.; Zhou, Y.; Bai, X. Tanet: Robust 3d object detection from point clouds with triple attention. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; pp. 11677–11684. [Google Scholar]

- Xiao, W.; Peng, Y.; Liu, C.; Gao, J.; Wu, Y.; Li, X. Balanced Sample Assignment and Objective for Single-Model Multi-Class 3D Object Detection. IEEE Trans. Circuits Syst. Video Technol. 2023, 33, 5036–5048. [Google Scholar] [CrossRef]

- Wang, X.; Zou, L.; Shen, X.; Ren, Y.; Qin, Y. A region-growing approach for automatic outcrop fracture extraction from a three-dimensional point cloud. Comput. Geosci. 2017, 99, 100–106. [Google Scholar] [CrossRef]

- Sun, S.; Li, C.; Chee, P.W.; Paterson, A.H.; Jiang, Y.; Xu, R.; Robertson, J.S.; Adhikari, J.; Shehzad, T. Three-dimensional photogrammetric mapping of cotton bolls in situ based on point cloud segmentation and clustering. ISPRS-J. Photogramm. Remote Sens. 2020, 160, 195–207. [Google Scholar] [CrossRef]

- Zhao, B.; Hua, X.; Yu, K.; Xuan, W.; Chen, X.; Tao, W. Indoor point cloud segmentation using iterative gaussian mapping and improved model fitting. IEEE Trans. Geosci. Remote Sens. 2020, 58, 7890–7907. [Google Scholar] [CrossRef]

- Hu, Q.; Yang, B.; Xie, L.; Rosa, S.; Guo, Y.; Wang, Z.; Trigoni, N.; Markham, A. Randla-net: Efficient semantic segmentation of large-scale point clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 11108–11117. [Google Scholar]

- Cheng, R.; Razani, R.; Ren, Y.; Bingbing, L. S3Net: 3D LiDAR sparse semantic segmentation network. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 14040–14046. [Google Scholar]

- Thomas, H.; Qi, C.R.; Deschaud, J.-E.; Marcotegui, B.; Goulette, F.; Guibas, L.J. Kpconv: Flexible and deformable convolution for point clouds. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6411–6420. [Google Scholar]

- Landrieu, L.; Simonovsky, M. Large-scale point cloud semantic segmentation with superpoint graphs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4558–4567. [Google Scholar]

- Wu, B.; Wan, A.; Yue, X.; Keutzer, K. Squeezeseg: Convolutional neural nets with recurrent crf for real-time road-object segmentation from 3d lidar point cloud. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 1887–1893. [Google Scholar]

- Iandola, F.N.; Han, S.; Moskewicz, M.W.; Ashraf, K.; Dally, W.J.; Keutzer, K. SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5 MB model size. arXiv 2016, arXiv:1602.07360. [Google Scholar]

- Wu, B.; Zhou, X.; Zhao, S.; Yue, X.; Keutzer, K. Squeezesegv2: Improved model structure and unsupervised domain adaptation for road-object segmentation from a lidar point cloud. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 4376–4382. [Google Scholar]

- Xu, C.; Wu, B.; Wang, Z.; Zhan, W.; Vajda, P.; Keutzer, K.; Tomizuka, M. Squeezesegv3: Spatially-adaptive convolution for efficient point-cloud segmentation. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 1–19. [Google Scholar]

- Feng, Y.; Zhang, Z.; Zhao, X.; Ji, R.; Gao, Y. Gvcnn: Group-view convolutional neural networks for 3d shape recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 264–272. [Google Scholar]

- Maturana, D.; Scherer, S. Voxnet: A 3d convolutional neural network for real-time object recognition. In Proceedings of the 2015 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 28 September–2 October 2015; pp. 922–928. [Google Scholar]

- Tchapmi, L.; Choy, C.; Armeni, I.; Gwak, J.; Savarese, S. Segcloud: Semantic segmentation of 3d point clouds. In Proceedings of the 2017 International Conference on 3D Vision (3DV), Qingdao, China, 10–12 October 2017; pp. 537–547. [Google Scholar]

- Klokov, R.; Lempitsky, V. Escape from cells: Deep kd-networks for the recognition of 3d point cloud models. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 863–872. [Google Scholar]

- Tang, H.; Liu, Z.; Zhao, S.; Lin, Y.; Lin, J.; Wang, H.; Han, S. Searching efficient 3d architectures with sparse point-voxel convolution. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 685–702. [Google Scholar]

- Le, T.; Duan, Y. Pointgrid: A deep network for 3d shape understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 9204–9214. [Google Scholar]

- Riegler, G.; Osman Ulusoy, A.; Geiger, A. Octnet: Learning deep 3d representations at high resolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3577–3586. [Google Scholar]

- Wang, P.-S.; Liu, Y.; Guo, Y.-X.; Sun, C.-Y.; Tong, X. O-cnn: Octree-based convolutional neural networks for 3d shape analysis. ACM Trans. Graph. 2017, 36, 1–11. [Google Scholar] [CrossRef]

- Hou, Y.; Zhu, X.; Ma, Y.; Loy, C.C.; Li, Y. Point-to-voxel knowledge distillation for lidar semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 8479–8488. [Google Scholar]

- Hu, Z.; Uchimura, K. UV-disparity: An efficient algorithm for stereovision based scene analysis. In Proceedings of the IEEE Intelligent Vehicles Symposium, Las Vegas, NV, USA, 6–8 June 2005; pp. 48–54. [Google Scholar]

- Xie, Y.; Zeng, S.; Zhang, Y.; Chen, L. A cascaded framework for robust traversable region estimation using stereo vision. In Proceedings of the 2017 Chinese Automation Congress (CAC), Jinan, China, 20–22 October 2017; pp. 3075–3080. [Google Scholar]

- Ma, W.; Zhu, S. A Multifeature-Assisted Road and Vehicle Detection Method Based on Monocular Depth Estimation and Refined UV Disparity Mapping. IEEE Trans. Intell. Transp. Syst. 2022, 23, 16763–16772. [Google Scholar] [CrossRef]

- Lefebvre, S.; Ambellouis, S. Vehicle detection and tracking using mean shift segmentation on semi-dense disparity maps. In Proceedings of the 2012 IEEE Intelligent Vehicles Symposium, Alcalá de Henares, Spain, 3–7 June 2012; pp. 855–860. [Google Scholar]

- Neumann, D.; Langner, T.; Ulbrich, F.; Spitta, D.; Goehring, D. Online vehicle detection using Haar-like, LBP and HOG feature based image classifiers with stereo vision preselection. In Proceedings of the 2017 IEEE Intelligent Vehicles Symposium (IV), Los Angeles, CA, USA, 11–14 June 2017; pp. 773–778. [Google Scholar]

- Xie, Q.; Long, Q.; Li, J.; Zhang, L.; Hu, X. Application of intelligence binocular vision sensor: Mobility solutions for automotive perception system. IEEE Sens. J. 2023, 24, 5578–5592. [Google Scholar] [CrossRef]

- Kale, K.; Pawar, S.; Dhulekar, P. Moving object tracking using optical flow and motion vector estimation. In Proceedings of the 2015 4th International Conference on Reliability, Infocom Technologies and Optimization (ICRITO) (Trends and Future Directions), Noida, India, 2–4 September 2015; pp. 1–6. [Google Scholar]

- Sengar, S.S.; Mukhopadhyay, S. Detection of moving objects based on enhancement of optical flow. Optik 2017, 145, 130–141. [Google Scholar] [CrossRef]

- Chen, Q.; Koltun, V. Full flow: Optical flow estimation by global optimization over regular grids. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 4706–4714. [Google Scholar]

- Yin, Z.; Shi, J. Geonet: Unsupervised learning of dense depth, optical flow and camera pose. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 1983–1992. [Google Scholar]

- Wang, T.; Zheng, N.; Xin, J.; Ma, Z. Integrating millimeter wave radar with a monocular vision sensor for on-road obstacle detection applications. Sensors 2011, 11, 8992–9008. [Google Scholar] [CrossRef] [PubMed]

- Wang, X.; Xu, L.; Sun, H.; Xin, J.; Zheng, N. On-road vehicle detection and tracking using MMW radar and monovision fusion. IEEE Trans. Intell. Transp. Syst. 2016, 17, 2075–2084. [Google Scholar] [CrossRef]

- Kim, Y.; Kim, S.; Choi, J.W.; Kum, D. Craft: Camera-radar 3d object detection with spatio-contextual fusion transformer. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; pp. 1160–1168. [Google Scholar]

- Lekic, V.; Babic, Z. Automotive radar and camera fusion using generative adversarial networks. Comput. Vis. Image Underst. 2019, 184, 1–8. [Google Scholar] [CrossRef]

- Chang, S.; Zhang, Y.; Zhang, F.; Zhao, X.; Huang, S.; Feng, Z.; Wei, Z. Spatial attention fusion for obstacle detection using mmwave radar and vision sensor. Sensors 2020, 20, 956. [Google Scholar] [CrossRef] [PubMed]

- Zhou, T.; Chen, J.; Shi, Y.; Jiang, K.; Yang, M.; Yang, D. Bridging the view disparity between radar and camera features for multi-modal fusion 3d object detection. IEEE Trans. Intell. Veh. 2023, 8, 1523–1535. [Google Scholar] [CrossRef]

- Zhong, Z.; Liu, S.; Mathew, M.; Dubey, A. Camera radar fusion for increased reliability in ADAS applications. Electron. Imaging 2018, 17, 258. [Google Scholar] [CrossRef]

- Bai, J.; Li, S.; Huang, L.; Chen, H. Robust detection and tracking method for moving object based on radar and camera data fusion. IEEE Sens. J. 2021, 21, 10761–10774. [Google Scholar] [CrossRef]

- Sengupta, A.; Cheng, L.; Cao, S. Robust multiobject tracking using mmwave radar-camera sensor fusion. IEEE Sens. Lett. 2022, 6, 1–4. [Google Scholar] [CrossRef]

- Nabati, R.; Qi, H. Centerfusion: Center-based radar and camera fusion for 3d object detection. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 3–8 January 2021; pp. 1527–1536. [Google Scholar]

- Wu, Z.; Chen, G.; Gan, Y.; Wang, L.; Pu, J. Mvfusion: Multi-view 3d object detection with semantic-aligned radar and camera fusion. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; pp. 2766–2773. [Google Scholar]

- Kim, Y.; Shin, J.; Kim, S.; Lee, I.-J.; Choi, J.W.; Kum, D. Crn: Camera radar net for accurate, robust, efficient 3d perception. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–6 October 2023; pp. 17615–17626. [Google Scholar]

- Chen, X.; Ma, H.; Wan, J.; Li, B.; Xia, T. Multi-view 3d object detection network for autonomous driving. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1907–1915. [Google Scholar]

- Ku, J.; Mozifian, M.; Lee, J.; Harakeh, A.; Waslander, S.L. Joint 3d proposal generation and object detection from view aggregation. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 1–8. [Google Scholar]

- Qi, C.R.; Liu, W.; Wu, C.; Su, H.; Guibas, L.J. Frustum pointnets for 3d object detection from rgb-d data. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 918–927. [Google Scholar]

- Zhao, X.; Sun, P.; Xu, Z.; Min, H.; Yu, H. Fusion of 3D LIDAR and camera data for object detection in autonomous vehicle applications. IEEE Sens. J. 2020, 20, 4901–4913. [Google Scholar] [CrossRef]

- An, P.; Liang, J.; Yu, K.; Fang, B.; Ma, J. Deep structural information fusion for 3D object detection on LiDAR–camera system. Comput. Vis. Image Underst. 2022, 214, 103295. [Google Scholar] [CrossRef]

- Li, J.; Li, R.; Li, J.; Wang, J.; Wu, Q.; Liu, X. Dual-view 3d object recognition and detection via lidar point cloud and camera image. Robot. Auton. Syst. 2022, 150, 103999. [Google Scholar] [CrossRef]

- Liang, T.; Xie, H.; Yu, K.; Xia, Z.; Lin, Z.; Wang, Y.; Tang, T.; Wang, B.; Tang, Z. Bevfusion: A simple and robust lidar-camera fusion framework. In Proceedings of the Advances in Neural Information Processing Systems, New Orleans, LA, USA, 28 November–9 December 2022; pp. 10421–10434. [Google Scholar]

- Liu, Z.; Tang, H.; Amini, A.; Yang, X.; Mao, H.; Rus, D.L.; Han, S. Bevfusion: Multi-task multi-sensor fusion with unified bird’s-eye view representation. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 May–2 June 2023; pp. 2774–2781. [Google Scholar]

- Wu, H.; Wen, C.; Shi, S.; Li, X.; Wang, C. Virtual Sparse Convolution for Multimodal 3D Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 21653–21662. [Google Scholar]

- Oh, S.-I.; Kang, H.-B. Object detection and classification by decision-level fusion for intelligent vehicle systems. Sensors 2017, 17, 207. [Google Scholar] [CrossRef] [PubMed]

- Guan, L.; Chen, Y.; Wang, G.; Lei, X. Real-time vehicle detection framework based on the fusion of LiDAR and camera. Electronics 2020, 9, 451. [Google Scholar] [CrossRef]

- Xu, D.; Anguelov, D.; Jain, A. Pointfusion: Deep sensor fusion for 3D bounding box estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 244–253. [Google Scholar]

- Liang, M.; Yang, B.; Wang, S.; Urtasun, R. Deep continuous fusion for multi-sensor 3D object detection. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 641–656. [Google Scholar]

- Yang, Z.; Sun, Y.; Liu, S.; Shen, X.; Jia, J. Ipod: Intensive point-based object detector for point cloud. arXiv 2018, arXiv:1812.05276. [Google Scholar]

- Liang, M.; Yang, B.; Chen, Y.; Hu, R.; Urtasun, R. Multi-task multi-sensor fusion for 3d object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 7345–7353. [Google Scholar]

- Wang, Z.; Jia, K. Frustum convnet: Sliding frustums to aggregate local point-wise features for amodal 3d object detection. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; pp. 1742–1749. [Google Scholar]

- Zhao, X.; Liu, Z.; Hu, R.; Huang, K. 3D Object Detection Using Scale Invariant and Feature Reweighting Networks. In Proceedings of the Thirty-Third AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; pp. 9267–9274. [Google Scholar]

- Vora, S.; Lang, A.H.; Helou, B.; Beijbom, O. Pointpainting: Sequential fusion for 3d object detection. In Proceedings of the 2020 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 4603–4611. [Google Scholar]

- Huang, T.; Liu, Z.; Chen, X.; Bai, X. EPNet: Enhancing point features with image semantics for 3D object detection. In Computer Vision—ECCV; Springer: Berlin/Heidelberg, Germany, 2020; pp. 35–52. [Google Scholar]

- Paigwar, A.; Sierra-Gonzalez, D.; Erkent, Ö.; Laugier, C. Frustum-pointpillars: A multi-stage approach for 3d object detection using rgb camera and lidar. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Virtual Event, 11–17 October 2021; pp. 2926–2933. [Google Scholar]

- Pang, S.; Morris, D.; Radha, H. Fast-CLOCs: Fast camera-LiDAR object candidates fusion for 3D object detection. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2022; pp. 187–196. [Google Scholar]

- Wu, X.; Peng, L.; Yang, H.; Xie, L.; Huang, C.; Deng, C.; Liu, H.; Cai, D. Sparse Fuse Dense: Towards High Quality 3D Detection with Depth Completion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 5408–5417. [Google Scholar]

- Zhu, H.; Deng, J.; Zhang, Y.; Ji, J.; Mao, Q.; Li, H.; Zhang, Y. VPFNet: Improving 3D Object Detection with Virtual Point based LiDAR and Stereo Data Fusion. IEEE Trans. Multimedia 2022, 25, 5291–5304. [Google Scholar] [CrossRef]

- Chen, Y.; Li, Y.; Zhang, X.; Sun, J.; Jia, J. Focal Sparse Convolutional Networks for 3D Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 5428–5437. [Google Scholar]

- Li, Y.; Qi, X.; Chen, Y.; Wang, L.; Li, Z.; Sun, J.; Jia, J. Voxel field fusion for 3d object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 1120–1129. [Google Scholar]

- Liu, Z.; Huang, T.; Li, B.; Chen, X.; Wang, X.; Bai, X. Epnet++: Cascade bi-directional fusion for multi-modal 3d object detection. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 8324–8341. [Google Scholar] [CrossRef]

- Wang, M.; Zhao, L.; Yue, Y. PA3DNet: 3-D vehicle detection with pseudo shape segmentation and adaptive camera-LiDAR fusion. IEEE Trans. Ind. Inf. 2023, 19, 10693–10703. [Google Scholar] [CrossRef]

- Mahmoud, A.; Hu, J.S.K.; Waslander, S.L. Dense Voxel Fusion for 3D Object Detection. In Proceedings of the 2023 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 2–7 January 2023; pp. 663–672. [Google Scholar]

- Li, X.; Ma, T.; Hou, Y.; Shi, B.; Yang, Y.; Liu, Y.; Wu, X.; Chen, Q.; Li, Y.; Qiao, Y.; et al. LoGoNet: Towards Accurate 3D Object Detection with Local-to-Global Cross-Modal Fusion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 17524–17534. [Google Scholar]

- Song, Z.; Zhang, G.; Xie, J.; Liu, L.; Jia, C.; Xu, S.; Wang, Z. VoxelNextFusion: A Simple, Unified and Effective Voxel Fusion Framework for Multi-Modal 3D Object Detection. arXiv 2024, arXiv:2401.02702. [Google Scholar]

- Chavez-Garcia, R.O.; Aycard, O. Multiple sensor fusion and classification for moving object detection and tracking. IEEE Trans. Intell. Transp. Syst. 2015, 17, 525–534. [Google Scholar] [CrossRef]

- Yi, C.; Zhang, K.; Peng, N. A multi-sensor fusion and object tracking algorithm for self-driving vehicles. Proc. Inst. Mech. Eng. Part D-J. Automob. Eng. 2019, 233, 2293–2300. [Google Scholar] [CrossRef]

| Dataset | Year | Location | Scenes | Classes | Annotated Frames | 3D Boxes | Application Scenarios |

|---|---|---|---|---|---|---|---|

| KITTI [15] | 2012 | Karlsruhe (DE) | 22 | 8 | 15 k | 200 k | Multiple application scenarios. |

| Cityscapes [16] | 2016 | 50 cities | - | 30 | 25 k | - | Mainly oriented to segmentation tasks. |

| Oxford RobotCar [17] | 2016 | Central Oxford (UK) | - | - | - | - | Multimodal joint calibration tasks can be conducted. |

| Vistas [18] | 2017 | Global | - | 152 | 25 k | - | Globally constructed dataset for autonomous driving. |

| BDD100K [19] | 2018 | San Francisco and New York (US) | 100 k | 10 | 100 k | - | The total volume of data is enormous, nearly 2 terabytes. |

| ApolloScape [20] | 2018 | 4 cities in CN | - | 8–35 | 144 k | 70 k | Contains extensive and rich labels. |

| KAIST [21] | 2018 | South Korea | - | 3 | 8.9 k | - | Primarily targets SLAM tasks, emphasizing the provision of examples in complex scenarios. |

| Waymo open [22] | 2019 | 6 cities in US | 1 k | 4 | 200 k | 12 M | Focused on computer vision tasks, and utilizes data collected in all-weather conditions. |

| Lyft L5 [23] | 2019 | California (US) | 366 | - | - | 55 k | More than 1000 h of driving record data. |

| Argoverse [24] | 2019 | Pittsburgh and Miami (US) | 1 k | - | 22 k | 993 k | Focuses on two tasks: 3D tracking and action prediction. |

| D2-City [25] | 2019 | 5 cities in CN | 1 k | 12 | 700 k | - | Suitable for detection and tracking tasks. |

| H3D [26] | 2019 | San Francisco (US) | 160 | 8 | 27 k | 1.1 M | It is a large-scale full-surround 3D multi-object detection and tracking dataset. |

| nuScenes [27] | 2019 | Boston (US), Singapore | 1 k | 23 | 40 k | 1.4 M | Collected in dense traffic and highly challenging driving situations. |

| CADC [28] | 2020 | Waterloo (CA) | 75 | 10 | 7 k | - | Focused on constructing a dataset for driving in snowy conditions. |

| A2D2 [29] | 2020 | 3 cities in DE | - | 14 | 12 k | 43 k | Perception for autonomous driving. |

| A*3D [30] | 2020 | Singapore | - | 7 | 39 k | 230 k | Offers significant diversity in scene, time of day, and weather. |

| RADIATE [31] | 2021 | UK | 7 | 8 | - | - | Focuses on tracking and scene understanding using radar sensors in adverse weather. |

| ACDC [32] | 2021 | Switzerland | 4 | 19 | 4.6 k | - | A large semantic segmentation dataset for adverse visual conditions. |

| KITTI-360 [33] | 2022 | Karlsruhe (DE) | - | 37 | - | 68 k | An extension of the KITTI dataset. It established benchmarks for tasks relevant to mobile perception. |

| SHIFT [34] | 2022 | 8 cities | - | 23 | 2.5 M | 2.5 M | A synthetic driving dataset for continuous multi-task domain adaptation. |

| Argoverse 2 [35] | 2023 | 6 cities in US | 250 k | 30 | - | - | The successor to the Argoverse 3D tracking dataset. It is the largest ever collection of LiDAR sensor data. |

| V2V4Real [36] | 2023 | Ohio (US) | - | 5 | 20 k | 240 k | The first large-scale real-world multimodal dataset for V2V perception. |

| Feature | Classifier | Dataset | Accuracy | Reference |

|---|---|---|---|---|

| HOG | Adaboost | GTI vehicle database and real traffic scene videos | 98.82% | [55] |

| HOG | GA-SVM | 1648 vehicles and 1646 non-vehicles | 97.76% | [56] |

| HOG | SVM | 420 road images from real on-road driving tests | 93.00% | [57] |

| HOG | SVM | GTI vehicle database and another 400 images from real traffic scenes | 93.75% | [68] |

| Haar-like | Adaboost | Hand-labeled data of 10,000 positive and 15,000 negative examples | - | [69] |

| SURF | SVM | 2846 vehicles from 29 vehicle makes and models | 99.07% | [70] |

| PCA | SVM | 1051 vehicle images and 1051 non-vehicle images | 96.11% | [71] |

| SIFT | SVM | 880 positive samples and 800 negative samples | - | [72] |

| Model | Backbone | AP | AP50 | AP75 | APS | APM | APL |

|---|---|---|---|---|---|---|---|

| Anchor-based two-stage | |||||||

| Faster RCNN [73] | VGG-16 | 21.9 | 42.7 | - | - | - | - |

| R-FCN [78] | ResNet-101 | 29.9 | 51.9 | - | 10.8 | 32.8 | 45.0 |

| CoupleNet [110] | ResNet-101 | 34.4 | 54.8 | 37.2 | 13.4 | 38.1 | 50.8 |

| Mask RCNN [76] | ResNeXt-101 | 39.8 | 62.3 | 43.4 | 22.1 | 43.2 | 51.2 |

| DetNet [111] | DetNet-59 | 40.3 | 62.1 | 43.8 | 23.6 | 42.6 | 50.0 |

| Soft-NMS [112] | ResNet-101 | 40.8 | 62.4 | 44.9 | 23.0 | 43.4 | 53.2 |

| G-RMI [113] | - | 41.6 | 61.9 | 45.4 | 23.9 | 43.5 | 54.9 |

| Cascade R-CNN [80] | Res101-FPN | 42.8 | 62.1 | 46.3 | 23.7 | 45.5 | 55.2 |

| SNIP [114] | DPN-98 | 45.7 | 67.3 | 51.5 | 29.3 | 48.8 | 57.1 |

| Anchor-based one-stage | |||||||

| SSD [81] | VGG-16 | 28.8 | 48.5 | 30.3 | 10.9 | 31.8 | 43.5 |

| DSSD [115] | ResNet-101 | 33.2 | 53.3 | 35.2 | 13.0 | 35.4 | 51.1 |

| M2Det [82] | VGG-16 | 33.5 | 52.4 | 35.6 | 14.4 | 37.6 | 47.6 |

| RefineDet [116] | ResNet-101 | 36.4 | 57.5 | 39.5 | 16.6 | 39.9 | 51.4 |

| RetinaNet [83] | ResNet-101 | 39.1 | 59.1 | 42.3 | 21.8 | 42.7 | 50.2 |

| YOLOv2 [84] | DarkNet-19 | 21.6 | 44.0 | 19.2 | 5.0 | 22.4 | 35.5 |

| YOLOv3 [85] | DarkNet-53 | 33.0 | 57.9 | 34.4 | 18.3 | 35.4 | 41.9 |

| YOLOv4 [86] | CSPDarkNet-53 | 41.2 | 62.8 | 44.3 | 20.4 | 44.4 | 56.0 |

| YOLOv5 [87] | CSPDarkNet-53 | 49.0 | 67.3 | - | - | - | - |

| YOLOv7 [88] | ELAN | 52.9 | 71.1 | 57.5 | 36.9 | 57.7 | 68.6 |

| Anchor-free keypoint-based | |||||||

| CornerNet [90] | Hourglass-104 | 40.5 | 56.5 | 43.1 | 19.4 | 42.7 | 53.9 |

| RepPoints [91] | Res101-DCN | 45.9 | 66.1 | 49.0 | 26.6 | 48.6 | 57.2 |

| CenterNet [92] | Hourglass-104 | 44.9 | 62.4 | 48.1 | 25.6 | 47.4 | 57.4 |

| ExtremeNet [93] | Hourglass-104 | 40.2 | 55.5 | 43.2 | 20.4 | 43.2 | 53.1 |

| Grid R-CNN [94] | ResNeXt-DCN | 43.2 | 63.0 | 46.6 | 25.1 | 46.5 | 55.2 |

| Anchor-free center-based | |||||||

| FSAF [95] | ResNeXt-101 | 42.9 | 63.8 | 46.3 | 26.6 | 46.2 | 52.7 |

| FCOS [96] | ResNeXt-101 | 43.2 | 62.8 | 46.6 | 26.5 | 46.2 | 53.3 |

| GA-RPN [97] | ResNet-50 | 39.8 | 59.2 | 43.5 | 21.8 | 42.6 | 50.7 |

| FoveaBox [98] | ResNeXt-101 | 42.1 | 61.9 | 45.2 | 24.9 | 46.8 | 55.6 |

| YOLOX [99] | CSPDarkNet-53 | 50.0 | 68.5 | 54.5 | 29.8 | 54.5 | 64.4 |

| YOLOv6 [117] | EfficientRep | 52.8 | 70.3 | 57.7 | 34.4 | 58.1 | 70.1 |

| YOLOv8 [100] | DarkNet-53 | 52.9 | 69.8 | 57.5 | 35.3 | 58.3 | 69.8 |

| YOLOv9 [101] | GELAN | 53.0 | 70.2 | 57.8 | 36.2 | 58.5 | 69.3 |

| End-to-end-based | |||||||

| DeFCN [102] | - | 38.6 | 57.6 | 41.3 | - | - | - |

| Sparse R-CNN [103] | ResNet-50 | 42.8 | 61.2 | 45.7 | 26.7 | 44.6 | 57.6 |

| DETR [104] | ResNet-50 | 43.3 | 63.1 | 45.9 | 22.5 | 47.3 | 61.1 |

| Deformable DETR [107] | ResNet-50 | 46.2 | 65.2 | 50.0 | 28.8 | 49.2 | 61.7 |

| Anchor-DETR [108] | ResNet-101 | 45.1 | 65.7 | 48.8 | 25.8 | 49.4 | 61.6 |

| Efficient-DETR [118] | ResNet-101 | 45.7 | 64.1 | 49.5 | 28.8 | 49.1 | 60.2 |

| RT-DETR [109] | ResNet-101 | 54.3 | 72.7 | 58.6 | 36.0 | 58.8 | 72.1 |
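
The AP50 and AP75 columns above differ only in the IoU threshold a prediction must reach to count as a match, while APS, APM, and APL split objects by size. The following is a minimal sketch of those two ingredients, not the official COCO evaluator. The function names are illustrative, boxes are assumed to be `(x_min, y_min, x_max, y_max)` in pixels, and box area is used as a stand-in for the segmentation area that COCO uses for its size buckets.

```python
from typing import Tuple

# Assumed box layout: (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


def box_area(box: Box) -> float:
    """Area of an axis-aligned box; zero if the box is degenerate."""
    return max(0.0, box[2] - box[0]) * max(0.0, box[3] - box[1])


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = box_area(a) + box_area(b) - inter
    return inter / union if union > 0 else 0.0


def is_match(pred: Box, gt: Box, threshold: float) -> bool:
    """A prediction matches a ground-truth box when IoU >= threshold."""
    return iou(pred, gt) >= threshold


def coco_size_bucket(box: Box) -> str:
    """COCO size buckets: small < 32^2, medium < 96^2, otherwise large.

    Box area is used here as an approximation of the segmentation area.
    """
    area = box_area(box)
    if area < 32 ** 2:
        return "small"
    if area < 96 ** 2:
        return "medium"
    return "large"


gt = (0.0, 0.0, 100.0, 100.0)
pred = (10.0, 10.0, 110.0, 110.0)
print(round(iou(gt, pred), 3))   # 0.681
print(is_match(pred, gt, 0.50))  # True  (counts toward AP50)
print(is_match(pred, gt, 0.75))  # False (does not count toward AP75)
print(coco_size_bucket(gt))      # large
```

In this example the prediction is shifted by 10 pixels in each direction, which is enough to pass the 0.5 threshold but not the stricter 0.75 one.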

| Model | Car AP, Easy (IoU = 0.7) | Car AP, Moderate (IoU = 0.7) | Car AP, Hard (IoU = 0.7) | FPS | Year | Reference |

|---|---|---|---|---|---|---|

| Point-based | ||||||

| Vote3deep | 76.79 | 68.24 | 63.23 | 0.9 | 2017 | [163] |

| PointRCNN | 85.95 | 75.76 | 68.32 | 3.8 | 2019 | [166] |

| STD | 86.61 | 77.63 | 76.06 | - | 2019 | [183] |

| Part-A2 | 85.94 | 77.95 | 72.00 | - | 2020 | [184] |

| 3DSSD | 88.36 | 79.57 | 74.55 | 26.3 | 2020 | [167] |

| SA-SSD | 88.75 | 79.79 | 74.16 | 24.9 | 2020 | [185] |

| Pyramid RCNN | 87.03 | 80.30 | 76.48 | 8.9 | 2021 | [186] |

| ST3D | - | - | 74.61 | - | 2021 | [187] |

| SASA | 88.76 | 82.16 | 77.16 | 27.8 | 2022 | [188] |

| PointDistiller | 88.10 | 76.90 | 73.80 | - | 2023 | [189] |

| DCGNN | 89.65 | 79.80 | 74.52 | 9.0 | 2023 | [190] |

| Projection-based | ||||||

| DepthCN | 37.59 | 23.21 | 18.01 | - | 2017 | [168] |

| RT3D | 72.85 | 61.64 | 64.38 | 11.2 | 2018 | [169] |

| BirdNet | 88.92 | 67.56 | 68.59 | 9.1 | 2018 | [170] |

| PIXOR | 81.70 | 77.05 | 72.95 | 10.8 | 2018 | [191] |

| Complex-YOLO | 67.72 | 64.00 | 63.01 | 59.4 | 2018 | [192] |

| BirdNet+ | 70.14 | 51.85 | 50.03 | 10.0 | 2020 | [171] |

| E2E-PL | 79.60 | 58.80 | 52.10 | - | 2020 | [173] |

| Pseudo-L | 23.74 | 17.74 | 15.14 | - | 2022 | [174] |

| Ri-Fusion | 85.62 | 75.35 | 68.31 | 26.0 | 2023 | [193] |

| Voxel-based | ||||||

| 3DFCN | 84.20 | 75.30 | 68.00 | - | 2017 | [194] |

| VoxelNet | 77.47 | 65.11 | 57.73 | 30.3 | 2018 | [175] |

| SECOND | 83.13 | 73.66 | 66.20 | 20.0 | 2018 | [195] |

| PV-RCNN | 90.25 | 81.43 | 76.82 | 12.5 | 2020 | [177] |

| HVNet | 87.21 | 77.58 | 71.79 | 31.3 | 2020 | [196] |

| TANet | 83.81 | 75.38 | 67.66 | 28.8 | 2020 | [197] |

| Voxel RCNN | 90.09 | 81.62 | 77.06 | 25.0 | 2021 | [176] |

| MA-MFFC | 92.60 | 84.98 | 83.21 | 7.1 | 2022 | [180] |

| PDV | 90.43 | 81.86 | 77.49 | 7.4 | 2022 | [181] |

| SAT-GCN | 79.46 | 86.55 | 78.12 | 8.2 | 2023 | [182] |

| BSAODet | 88.89 | 81.74 | 77.24 | - | 2023 | [198] |
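
The Easy, Moderate, and Hard columns above follow the KITTI difficulty levels, which are defined by the 2D box height, occlusion state, and truncation of each labeled object. The following is a minimal sketch of that assignment using the thresholds commonly cited for the KITTI object benchmark. The class and function names are illustrative assumptions, and the function returns the strictest level an object satisfies, whereas the benchmark itself evaluates the levels cumulatively.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class KittiObject:
    """Hypothetical container for the three fields that decide difficulty."""
    bbox_height_px: float  # height of the 2D bounding box in pixels
    occlusion: int         # 0 fully visible, 1 partly, 2 largely occluded, 3 unknown
    truncation: float      # fraction of the object outside the image, 0.0 to 1.0


# (level, min box height in px, max occlusion state, max truncation)
KITTI_LEVELS = [
    ("Easy", 40.0, 0, 0.15),
    ("Moderate", 25.0, 1, 0.30),
    ("Hard", 25.0, 2, 0.50),
]


def kitti_difficulty(obj: KittiObject) -> Optional[str]:
    """Return the strictest level the object satisfies, or None if it
    fails even the Hard criteria (such objects are ignored in evaluation)."""
    for level, min_height, max_occlusion, max_truncation in KITTI_LEVELS:
        if (obj.bbox_height_px >= min_height
                and obj.occlusion <= max_occlusion
                and obj.truncation <= max_truncation):
            return level
    return None


print(kitti_difficulty(KittiObject(45.0, 0, 0.10)))  # Easy
print(kitti_difficulty(KittiObject(30.0, 1, 0.20)))  # Moderate
print(kitti_difficulty(KittiObject(30.0, 2, 0.40)))  # Hard
print(kitti_difficulty(KittiObject(20.0, 0, 0.00)))  # None
```

Because the Hard set includes small, occluded, and truncated cars, the Hard column is normally the lowest of the three for a given model.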

| Sensor | Camera | Radar | LiDAR |

|---|---|---|---|

| Silhouette Representation | 5 | 2 | 3 |

| Color Perception | 5 | 1 | 1 |

| Velocity Measurement | 2 | 5 | 2 |

| Angle Resolution | 5 | 3 | 4 |

| Range Resolution | 2 | 4 | 5 |

| Object Detection | 5 | 3 | 4 |

| Object Classification | 5 | 1 | 3 |

| Field of View | 3 | 4 | 4 |

| Adaptability to Complex Weather | 2 | 5 | 2 |

| Sensor Size | 2 | 2 | 4 |

| Cost | 3 | 1 | 5 |

| Model | mAP | NDS | mATE | mASE | mAOE | mAVE | mAAE | FPS | Year | Reference |

|---|---|---|---|---|---|---|---|---|---|---|

| CenterFusion | 32.6 | 44.9 | 63.1 | 26.1 | 51.6 | 61.4 | 11.5 | - | 2021 | [238] |

| CRAFT | 41.1 | 52.3 | 46.7 | 26.8 | 45.3 | 51.9 | 11.4 | 4.1 | 2023 | [231] |

| RCBEV | 40.6 | 48.6 | 48.4 | 25.7 | 58.7 | 70.2 | 14.0 | - | 2023 | [234] |

| MVFusion | 45.3 | 51.7 | 56.9 | 24.6 | 37.9 | 78.1 | 12.8 | - | 2023 | [239] |

| CRN | 57.5 | 62.4 | 46.0 | 27.3 | 44.3 | 35.2 | 18.0 | 7.2 | 2023 | [240] |
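
The NDS column above is the nuScenes detection score, which combines mAP with the five true-positive error metrics as NDS = (5 · mAP + Σ (1 − min(1, mTP))) / 10. The following is a minimal sketch of that formula. It assumes, as the magnitudes in the table suggest, that all values are reported multiplied by 100. The function name and argument layout are illustrative.

```python
from typing import Sequence


def nuscenes_detection_score(map_pct: float, tp_errors_pct: Sequence[float]) -> float:
    """Compute NDS (in percent) from mAP and the five TP errors.

    map_pct        mAP in percent, e.g. 32.6
    tp_errors_pct  (mATE, mASE, mAOE, mAVE, mAAE), each multiplied by 100
    """
    if len(tp_errors_pct) != 5:
        raise ValueError("expected mATE, mASE, mAOE, mAVE, mAAE")
    mean_ap = map_pct / 100.0
    # Each error becomes a score in [0, 1]; errors above 1 are clipped to 0.
    tp_scores = [1.0 - min(1.0, err / 100.0) for err in tp_errors_pct]
    return 100.0 * (5.0 * mean_ap + sum(tp_scores)) / 10.0


# CenterFusion row: mAP 32.6, errors 63.1 / 26.1 / 51.6 / 61.4 / 11.5
print(round(nuscenes_detection_score(32.6, [63.1, 26.1, 51.6, 61.4, 11.5]), 1))  # 44.9

# CRAFT row: mAP 41.1, errors 46.7 / 26.8 / 45.3 / 51.9 / 11.4
print(round(nuscenes_detection_score(41.1, [46.7, 26.8, 45.3, 51.9, 11.4]), 1))  # 52.3
```

Half of the score comes from mAP and half from the error terms, so a model with good localization, orientation, and velocity estimates can outrank one with slightly higher mAP.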

| Model | Fusion | Car AP3D, Easy (IoU = 0.7) | Car AP3D, Moderate (IoU = 0.7) | Car AP3D, Hard (IoU = 0.7) | FPS | Year | Reference |

|---|---|---|---|---|---|---|---|

| MV3D | Feature | - | - | - | 2.8 | 2017 | [241] |

| AVOD-FPN | Feature | 81.94 | 71.88 | 66.38 | 10.0 | 2018 | [242] |

| PointFusion | Feature | 77.92 | 63.00 | 53.27 | 0.8 | 2018 | [252] |

| ContFuse | Feature | 82.54 | 66.22 | 64.04 | 16.7 | 2018 | [253] |

| F-PointNet | Decision | 83.76 | 70.92 | 63.65 | - | 2018 | [243] |

| IPOD | Decision | 84.10 | 76.40 | 75.30 | - | 2018 | [254] |

| MMF | Feature | 86.81 | 76.75 | 68.41 | 12.5 | 2019 | [255] |

| F-ConvNet | Decision | 85.88 | 76.51 | 68.08 | - | 2019 | [256] |

| SIFRNet | Feature | 85.62 | 72.05 | 64.19 | - | 2019 | [257] |

| PointPainting | Feature | 92.45 | 88.11 | 83.36 | - | 2020 | [258] |

| EPNet | Feature | 88.94 | 80.67 | 77.15 | - | 2020 | [259] |

| F-PointPillars | Feature | 88.90 | 79.28 | 78.07 | 14.3 | 2021 | [260] |

| Fast-CLOCs | Feature | 89.11 | 80.34 | 76.98 | 13.0 | 2022 | [261] |

| SFD | Feature | 91.73 | 84.76 | 77.92 | - | 2022 | [262] |

| VPFNet | Feature | 91.02 | 83.21 | 78.20 | 10.0 | 2022 | [263] |

| FocalsConv | Feature | 92.26 | 85.32 | 82.95 | 6.3 | 2022 | [264] |