Abstract

Cervical auscultation is a simple, noninvasive method for diagnosing dysphagia, although the reliability of the method largely depends on the subjectivity and experience of the evaluator. Recently developed methods for the automatic detection of swallowing sounds facilitate a rough automatic diagnosis of dysphagia, although a reliable method of detection specialized in the peculiar feature patterns of swallowing sounds in actual clinical conditions has not been established. We investigated a novel approach for automatically detecting swallowing sounds in which basic statistics and dynamic features were extracted from two acoustic features, Mel Frequency Cepstral Coefficients and Mel Frequency Magnitude Coefficients, and an ensemble learning model combining a Support Vector Machine and a Multi-Layer Perceptron was applied. The proposed method was evaluated on a swallowing-sounds database synchronized to a video fluorographic swallowing study and compiled from 74 advanced-age patients with dysphagia. It achieved an F1-macro average of approximately 0.92 and an accuracy of 95.20%. The method, shown to be effective on the current clinical recording database, suggests a meaningful advance in the objectivity of cervical auscultation. However, validating its efficacy in other databases is crucial for confirming its broad applicability and potential impact.

1. Introduction

Dysphagia is a disease that is frequently caused by stroke; cerebrovascular, neurodegenerative (e.g., Alzheimer’s disease or Parkinson’s disease), or neuromuscular disease; or aging [1,2,3,4], with a particularly high morbidity rate among older patients. It is characterized by symptoms such as choking or coughing fits when eating, or by saliva incorrectly entering the trachea or lungs. These symptoms, in turn, may cause aspiration pneumonia, which is a serious disease that is among the top causes of death worldwide [5,6,7]. Therefore, dysphagia warrants early diagnosis and proper rehabilitation. Current methods used to diagnose dysphagia include a video fluorographic swallowing study (VFSS) and a fiberoptic endoscopic examination of swallowing (FEES) [8,9,10,11,12,13,14].

Both VFSS and FEES are effective for diagnosing swallowing disorders, but each has its advantages and limitations. Cervical auscultation (CA) is a useful noninvasive method for assessing swallowing disorders; however, it has limitations as a diagnostic tool due to issues of subjectivity and accuracy [15,16]. According to a systematic review by Lagarde et al. [17], the diagnostic accuracy of CA varies widely, with sensitivity ranging from 23% to 95% and specificity from 50% to 74%. This wide range reflects the susceptibility of CA results to subjective interpretation, which raises questions about its reliability, especially when not combined with acoustic analysis or other sophisticated methods [17]. Furthermore, advancements in digital signal processing technology have improved the analysis of swallowing sounds obtained through CA. The data from swallowing sounds and vibrations are analyzed through specific digital processing steps, and the results are utilized to evaluate swallowing disorders. Such technology is expected to enhance the accuracy of CA signals and reduce subjectivity [18].

The evaluation of the deglutition function based on swallowing sounds is being investigated [19,20,21,22]. Rayneau et al. developed and evaluated the effectiveness of a smartphone application for automatically detecting and analyzing swallowing sounds in healthy individuals and patients with pharyngeal cancer when engaged in specific types of eating. Based on the results, swallowing sounds were detected with 90% or higher sensitivity in healthy persons when consuming all three food forms (mashed potatoes, water, and yogurt) that were tested; however, the application of the method in patients with cancer proved difficult [20]. Among various studies, the analysis of swallowing sounds using machine learning is a particularly promising approach. Frakking et al. used a Support Vector Machine (SVM) to identify swallowing sounds in healthy children and children with dysphagia and demonstrated 98% accuracy [21], whereas Sarraf Shirazi et al. used K-means clustering to detect aspiration, with 86.4% accuracy, from a sub-band frequency of 300 Hz or less [22].

Advances in machine learning confer the possibility of further improvement of accuracy when detecting swallowing sounds [22,23,24,25]. Khlaifi et al. combined the Mel Frequency Cepstral Coefficient (MFCC) and a Gaussian Mixture Model (GMM) to achieve an 84.57% recognition rate [23]. Kuramoto et al. applied a deep learning model to a recording database of healthy persons and patients with dysphagia, distinguished swallowing sounds from noise with a high accuracy of 97.3%, and showed that deep learning can effectively support a diagnosis of dysphagia [24]. However, to our knowledge, research on detection that focuses on the peculiar feature patterns of swallowing sounds in real-world clinical conditions is lacking. Additionally, deep learning models require a large data set and advanced computational resources.

This study was conducted with the aim of enabling the automatic detection of swallowing sounds from a clinical recording database by using a novel approach and evaluating the performance thereof.

2. Proposed Method

2.1. Recording Database

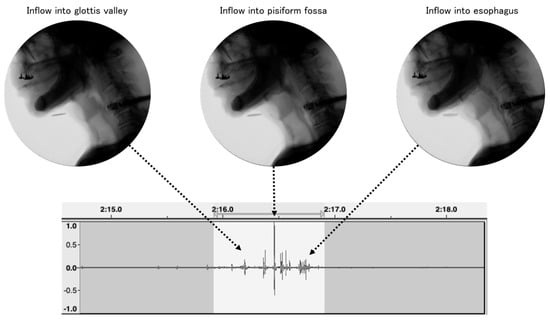

Audio recordings were made of 74 patients having or suspected of having dysphagia who consented to participate in this study. The participants ingested 2–3 g of gelatinous jelly; then, using a throat microphone (SH-12jk, NANZU ELECTRIC Co., Ltd., Shizuoka, Japan), the sounds during swallowing videofluorography were recorded at a sampling frequency of 44,100 Hz with 16-bit digital resolution. Considering previous reports of swallowing-sound research, the acoustic features of swallowing sounds, and the frequency characteristics of the throat microphone, the recorded data were down-sampled to 16,000 Hz [26]. All recordings were synchronized with video recordings of swallowing videofluorography, and the body posture of each participant during ingestion was adjusted by a medical specialist to minimize the participants’ risk of aspiration. Using a database containing 93 recordings obtained from 74 participants, including multiple recordings from the same participants, we identified the intervals of swallowing sounds. This process involved careful labeling by a dentist based on the recording waveforms, the swallowing videofluorography video, and the swallowing sounds themselves (Figure 1). The calibration for the swallowing event identification was performed by two dentists prior to labeling. Each recording included 1–13 swallowing-sound intervals; in total, 240 swallowing-sound intervals were recorded.

Figure 1.

Example of swallowing sounds detected from video fluorographic swallowing study (VFSS).

This study was conducted with the approval (No. 3332-1) of the Tokushima University Hospital Clinical Research Ethics Review Committee.

2.2. Detection of Loud Events from Recorded Data

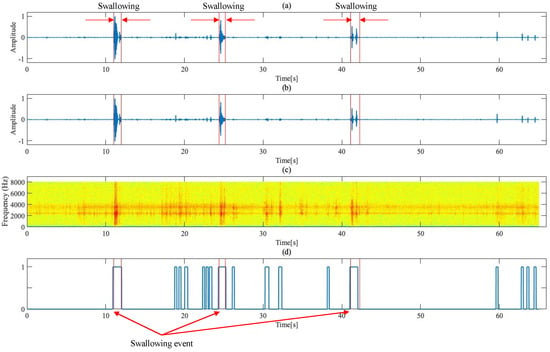

Figure 2 shows the process steps followed from recording data to detecting loud events. The frequency component of swallowing sounds is greater than 750 Hz [27], and the main component is approximately 3500 Hz [28,29]. Based on these reports, a third-order Butterworth bandpass filter with a cutoff frequency in the range of 200–8000 Hz was used, and the results of bandpass filter processing for the recording data are shown in Figure 2a,b.

Figure 2.

Steps from recording data to loud event detection. (a) Recording data waveform, including swallowing during jelly ingestion (red vertical line area is the swallowing-sound interval). (b) Waveform of recording data after bandpass filter processing. (c) Spectrogram for recording data waveform. (d) Recording of loud events and swallowing events (light-colored highlighted sections).

Next, the filtered signals were divided into frames using a frame width of 410 samples and a frameshift of 160 samples. Subsequently, the logarithmic energy of the signal in each frame was calculated (Figure 2c); then, frames exceeding a set threshold were identified as loud frames. This threshold was set through experimental trial and error to 7.5% of the maximum energy value of the signal. Continuous loud frames were treated as a single loud event. Furthermore, an offset of 6 frames (0.06 s) was applied to each side of every detected loud event, so that loud events separated by less than 0.12 s were merged into one. The loud events obtained in this way were evaluated through comparison with the swallowing-sound intervals that were previously defined by a dentist (Figure 2d).
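The framing, thresholding, and merging steps described above can be sketched as follows. This is a minimal illustration rather than the implementation used in the study: the function name `detect_loud_events` is hypothetical, and the sketch assumes that the threshold is applied as the logarithm of 7.5% of the peak frame energy and that events are merged when their padded spans overlap.

```python
import math

def detect_loud_events(signal, frame_len=410, hop=160,
                       rel_threshold=0.075, pad_frames=6):
    """Return loud events as (start_frame, end_frame) pairs, end exclusive."""
    if len(signal) < frame_len:
        return []
    n_frames = 1 + (len(signal) - frame_len) // hop
    # Short-time energy of each frame.
    energy = [sum(x * x for x in signal[i * hop:i * hop + frame_len])
              for i in range(n_frames)]
    peak = max(energy)
    if peak == 0:
        return []
    eps = 1e-12  # avoids log(0) in silent frames
    log_energy = [math.log10(e + eps) for e in energy]
    # Assumption: the threshold is the log of 7.5% of the peak frame energy.
    threshold = math.log10(rel_threshold * peak + eps)
    loud = [le > threshold for le in log_energy]

    # Group runs of consecutive loud frames into events.
    events, start = [], None
    for i, flag in enumerate(loud + [False]):
        if flag and start is None:
            start = i
        elif not flag and start is not None:
            events.append((start, i))
            start = None

    # Pad each side, then merge events whose padded spans overlap.
    merged = []
    for s, e in events:
        s, e = max(0, s - pad_frames), min(n_frames, e + pad_frames)
        if merged and s < merged[-1][1]:
            merged[-1] = (merged[-1][0], max(merged[-1][1], e))
        else:
            merged.append((s, e))
    return merged
```

At a sampling frequency of 16,000 Hz and a frameshift of 160 samples, a frame index converts to seconds as `index * 160 / 16000`, so the 6-frame padding corresponds to 0.06 s.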

In this study, based on the swallowing-sound intervals previously defined by a dentist, a labeling process was used to classify the loud events extracted from recording data into two categories: swallowing and non-swallowing. Regarding the classification criteria, if loud events overlapped swallowing-sound intervals indicated by a dentist by 35% or more, then those loud events were labeled as “swallowing sounds”. Conversely, if detected loud events overlapped the indicated swallowing-sound intervals by less than 35%, or swallowing sounds were too low in volume and not detected, they were labeled “non-swallowing sounds”. Using this method, a total of 234 swallowing-sound events and 697 non-swallowing-sound events were identified. The system followed in this study analyzes a binary classification problem (class 1: swallowing-sound events, and class 0: non-swallowing-sound events), based on these loud events.
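The labeling rule can be illustrated with a short sketch. The text does not state which duration the 35% overlap is measured against; the sketch below assumes it is the fraction of the dentist-annotated interval covered by the loud event, and the function names are hypothetical.

```python
def overlap_ratio(event, interval):
    """Fraction of the annotated interval that the loud event covers."""
    overlap = min(event[1], interval[1]) - max(event[0], interval[0])
    length = interval[1] - interval[0]
    if length <= 0:
        return 0.0
    return max(0.0, overlap) / length

def label_events(events, swallow_intervals, min_overlap=0.35):
    """Label each loud event 1 (swallowing) or 0 (non-swallowing)."""
    labels = []
    for event in events:
        # Assumption: the best-matching annotated interval decides the label.
        best = max((overlap_ratio(event, iv) for iv in swallow_intervals),
                   default=0.0)
        labels.append(1 if best >= min_overlap else 0)
    return labels
```

Events and intervals are (start, end) pairs in seconds. Measuring the overlap against the loud event's own duration instead would only require changing the denominator in `overlap_ratio`.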

In this study cohort comprising 49 men and 25 women (mean age: 78.01 ± 9.88 years old), we examined the results of loud event detection using the novel swallowing-sound-detection system, as well as the classification of swallowing-sound and non-swallowing-sound events.

As described earlier, a swallowing videofluorography video was recorded simultaneously with the swallowing sounds. Through a detailed analysis of the recorded data waveform and video, we identified 234 swallowing-sound events and 697 non-swallowing-sound events. The non-swallowing-sound events were further subdivided through a manual labeling process (see Table 1).

Table 1.

Results of the manual labeling-based classification of loud events.

The 697 analyzed non-swallowing-sound events included diverse sounds heard in actual clinical settings, including respiration, coughing, voice and speech, environmental sounds, and rustling of clothes (Table 1). The mean duration and standard deviation for swallowing-sound and non-swallowing-sound events were 1.34 ± 4.19 s and 0.67 ± 0.74 s, respectively. Notably, swallowing-sound events tended to have longer durations than non-swallowing-sound events. These non-swallowing-sound events exemplify the unique challenges encountered in clinical environments, and a database comprising such sounds was utilized to evaluate the performance of the proposed swallowing-sound and non-swallowing-sound event classification system in this study.

2.3. Feature Extraction

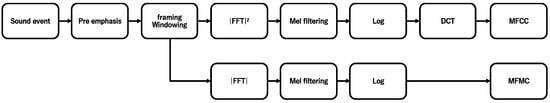

In this study, we used the MFCC and MFMC as features for binary classification. As shown in Figure 3, these features were derived using different specialized steps for feature extraction from loud events.

Figure 3.

Steps for calculating the MFCC and MFMC.

The MFCC is a feature that is frequently used for voice recognition [30,31]. The cepstral analysis accounts for human hearing characteristics, and the MFCC has the property of aggregating features at a low order. In this study, features were extracted from loud events by the following procedure. First, a pre-emphasis filter was applied to the signals to emphasize the high-frequency component. Next, we performed frame processing: each frame had a length of 410 samples, the shift width between frames was set to 160 samples, and a Hamming window was applied to each frame. Thereafter, we performed a 1024-point Fast Fourier Transform (FFT) on each frame and obtained a power spectrum. The power spectrum was multiplied by a Mel scale filter bank of 40 channels, and the logarithm of each filter-bank output was taken. Finally, a discrete cosine transform (DCT) was applied to these logarithm values to extract a 12-dimensional MFCC. The MFCC can effectively capture the frequency characteristics of sound signals and, in particular, provides features that imitate human hearing characteristics.

The MFMC is a technique for extracting the Mel Frequency Magnitude Coefficient from sound signals. In this study, we applied the MFMC extraction process based on reports that the MFMC is superior to the MFCC in speaker emotion recognition and early diagnosis of disease [30,32]. The MFMC extraction process followed the same procedure that was used for the MFCC in its initial stages. Specifically, we performed a 1024-point FFT on each audio frame and obtained the amplitude spectrum. Next, the amplitude spectrum was multiplied by a 40-channel Mel scale filter bank, and the logarithm of each filter-bank output was taken. Up to this point, the process mirrors the MFCC extraction; for the MFMC, however, the DCT was not performed on the logarithmic amplitude values, which were instead used directly as features.
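The difference between the two features in their final step can be sketched as follows, starting from filter-bank outputs that are assumed to have been computed already. The function names are hypothetical, the DCT is an unnormalized DCT-II, and keeping coefficients 0 to 11 is an assumption, since the text does not state which 12 coefficients were retained.

```python
import math

def dct_ii(values, n_coeffs):
    """Unnormalized DCT-II, returning the first n_coeffs coefficients."""
    n = len(values)
    return [sum(v * math.cos(math.pi * k * (2 * i + 1) / (2 * n))
                for i, v in enumerate(values))
            for k in range(n_coeffs)]

def mfcc_and_mfmc(power_fbank, magnitude_fbank, n_mfcc=12):
    """Final step of both features for one frame.

    power_fbank: Mel filter-bank outputs of the power spectrum.
    magnitude_fbank: Mel filter-bank outputs of the amplitude spectrum.
    """
    eps = 1e-12  # avoids log(0) for empty filter-bank channels
    log_power = [math.log(v + eps) for v in power_fbank]
    log_magnitude = [math.log(v + eps) for v in magnitude_fbank]
    mfcc = dct_ii(log_power, n_mfcc)  # DCT compacts energy into low orders
    mfmc = log_magnitude              # no DCT: log outputs used directly
    return mfcc, mfmc
```

With a 40-channel filter bank, this yields 12 MFCC values and 40 MFMC values per frame.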

In this study, we calculated representative statistics for each loud event from the two types of features, the MFCC and MFMC, extracted from the frames within that event. Specifically, we calculated the following basic statistics of the MFCC and MFMC: mean, standard deviation, median, range, and skewness, as well as their dynamic characteristics, namely, the mean, standard deviation, median, range, and skewness of the deltas of these features. We then used these features to construct 14 feature patterns: six each for the MFCC and MFMC, and two “MIX” feature patterns comprising a combination of the MFCC and MFMC. Table 2 shows that the allocation of statistics in the MFCC feature patterns (MFCC P1–P6), MFMC feature patterns (MFMC P1–P6), and MIX feature patterns (MIX P1 and P2) differs depending on the combination of features. With these feature patterns, we performed a binary classification, and thereby quantitatively evaluated the effectiveness of features in classifying swallowing and non-swallowing in loud events.

According to Table 2, the number of feature dimensions in each feature pattern tends to increase from P1 to P6. A similar increasing tendency is observed in the MIX feature patterns, with MIX P2 having the largest number of dimensions.

Table 2.

Feature patterns and number of dimensions. ○ represents patterns of MFCC, while ● represents patterns of MFMC.
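As an illustration of how per-frame coefficients are summarized into one feature vector per loud event, the following sketch computes the five basic statistics and the same five statistics of the deltas. It rests on assumptions not stated in the text: the delta is taken as a simple first-order difference between consecutive frames, and the standard deviation and skewness use population formulas. The function names are hypothetical.

```python
import statistics

def skewness(xs):
    """Population skewness; defined as 0.0 for a constant sequence."""
    mean = statistics.fmean(xs)
    sd = statistics.pstdev(xs)
    if sd == 0:
        return 0.0
    return sum(((x - mean) / sd) ** 3 for x in xs) / len(xs)

def summarize(xs):
    """Mean, standard deviation, median, range, and skewness."""
    return [statistics.fmean(xs), statistics.pstdev(xs),
            statistics.median(xs), max(xs) - min(xs), skewness(xs)]

def event_features(frames):
    """frames: per-frame coefficient vectors of one loud event."""
    features = []
    for track in zip(*frames):  # one coefficient followed over time
        # Assumption: delta = difference between consecutive frames.
        deltas = [b - a for a, b in zip(track, track[1:])] or [0.0]
        features += summarize(track) + summarize(deltas)
    return features
```

With 12 MFCC and 40 MFMC coefficients per frame, the ten statistics give (12 + 40) × 10 = 520 values, which is consistent with the 520 dimensions reported for MIX P2.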

2.4. Classification of Loud Events Using Machine Learning Models

In this proposed system, we adopted the following three machine learning models: an SVM, Multi-Layer Perceptron (MLP), and an ensemble learning model that integrates both. This was to facilitate binary classification based on the feature patterns extracted from loud events.

2.4.1. Support Vector Machine

The purpose of an SVM is to determine the decision boundary having the largest margin between two classes based on a learning data set. This achieves high identification performance for unknown data. The learning data set consists of $N$ samples of dimension $d$, $\mathbf{x}_i \in \mathbb{R}^d$, and the corresponding class labels, $y_i \in \{-1, +1\}$, for $i = 1, \dots, N$. In this study, we solve the following optimization problem:

$$\min_{\mathbf{w},\, b,\, \boldsymbol{\xi}} \; \frac{1}{2}\lVert \mathbf{w} \rVert^2 + C \sum_{i=1}^{N} \xi_i \quad \text{subject to} \quad y_i\left(\mathbf{w}^\top \mathbf{x}_i + b\right) \ge 1 - \xi_i, \quad \xi_i \ge 0, \quad i = 1, \dots, N.$$

Here margin violations in the samples are tolerated, with $\mathbf{w}$ being the normal vector of the decision boundary, $b$ the offset, and $\xi_i$ the slack variable. $C$ is a regularization parameter that we used for overfitting prevention and margin balance adjustment. The optimum value of the regularization parameter, $C$, was determined through a grid search and was selected to maximize the generalization performance of the model.

We applied Platt scaling to convert the score obtained from this linear SVM to a posterior probability, whereby the score was normalized to a range of 0 to 1 based on the distance from the separating hyperplane. Specifically, a sigmoid function was learned to output the posterior probability, and this function was used for the following conversion:

$$P(y = 1 \mid f) = \frac{1}{1 + \exp(Af + B)}$$

Here $f$ indicates the distance from the separating hyperplane, and $A$ and $B$ are the learned sigmoid function parameters. This method provides the output of the model in a probabilistic format that is easier to interpret.
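A minimal sketch of the Platt conversion is given below, using the standard form in which the probability of class 1 is 1 / (1 + exp(a · score + b)). The function name is hypothetical, and the parameter values in the usage note are purely illustrative; in practice the two parameters are fitted on held-out decision scores.

```python
import math

def platt_probability(score, a, b):
    """Map an SVM decision score to P(class 1) with a fitted sigmoid."""
    z = a * score + b
    # Numerically stable evaluation of 1 / (1 + exp(z)).
    if z >= 0:
        ez = math.exp(-z)
        return ez / (1.0 + ez)
    return 1.0 / (1.0 + math.exp(z))
```

With a negative slope such as `a = -2.0` and `b = 0.0` (illustrative values only), a score of 0 on the hyperplane maps to 0.5, and scores farther on the positive side approach 1.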

2.4.2. Multi-Layer Perceptron

The MLP is an artificial neural network that consists of three types of layers: an input layer, one or more intermediate layers of perceptrons, and an output layer that outputs predictions in response to input vectors [33]. The layers are fully connected: each node is connected to all nodes in the adjacent layer. For the activation functions used in this study, a hyperbolic tangent sigmoid function (tanh) is used in the intermediate layers, and a SoftMax function is used in the output layer. The hyperbolic tangent function improves the expressive power of the model through its nonlinearity, and the SoftMax function expresses the predicted class probabilities in the output layer. In this study, based on the results of repeated trial and error, the number of units in the intermediate layers was set to the number of feature dimensions plus 2. We adopted a backpropagation algorithm based on the scaled conjugate gradient (SCG) method for the learning process. With this algorithm, the learning process was set to stop when the number of epochs reached 2000 or when the performance gradient became smaller than a predetermined threshold.

2.4.3. Ensemble Learning Model

In this study, we aimed to exceed the limits of individual learners through an Ensemble Learning Machine (ELM) that combined the SVM and MLP. Specifically, we utilized the “Ensemble Learning Toolbox” [34] proposed by Ribeiro et al. and used a boosting method to integrate a total of 10 learners: five linear SVMs and five MLPs. Boosting is an approach in which multiple learners are trained successively, with each learner correcting the misclassifications of the preceding learners, thereby improving the overall classification performance. This process is used to capture both linear and nonlinear data characteristics and is expected to enable more accurate predictions in the classification of loud events. Initially, a weak learner (a linear SVM or an MLP) is trained using the training data. The performance of the learner is evaluated based on its error rate, and the learner is weighted accordingly. Subsequent learners are then trained on a probability distribution that emphasizes the samples misclassified by previous learners. This step is repeated until all learners have been trained. The outputs of the weak learners are combined using the assigned weights, and the ensemble model determines the final class label by weighted voting: if the overall confidence in class 1 is higher than that in class 0, the event is assigned to class 1; otherwise, it is assigned to class 0. With this strategy, the model is expected to exhibit high adaptability and generalization ability for specific problems and provide accurate predictions, even for complex data sets and real-world problems.
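The weighting and voting steps can be sketched in an AdaBoost-style form. This is an assumption made for illustration: the text does not specify the boosting variant implemented in the toolbox, and the function names below are hypothetical.

```python
import math

def learner_weight(error_rate, eps=1e-10):
    """AdaBoost-style weight: larger for learners with lower error."""
    err = min(max(error_rate, eps), 1.0 - eps)
    return 0.5 * math.log((1.0 - err) / err)

def update_sample_weights(weights, y_true, y_pred, alpha):
    """Raise the weight of misclassified samples, then renormalize."""
    updated = [w * math.exp(alpha if t != p else -alpha)
               for w, t, p in zip(weights, y_true, y_pred)]
    total = sum(updated)
    return [w / total for w in updated]

def weighted_vote(predictions, alphas):
    """predictions: one list of 0/1 labels per learner."""
    labels = []
    for j in range(len(predictions[0])):
        score1 = sum(a for p, a in zip(predictions, alphas) if p[j] == 1)
        score0 = sum(a for p, a in zip(predictions, alphas) if p[j] == 0)
        labels.append(1 if score1 > score0 else 0)
    return labels
```

In this form, a learner with an error rate of 0.5 receives a weight of zero, so it contributes nothing to the vote, while the samples it misclassified gain influence on the next learner.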

2.5. Evaluation of Loud Event Classification with K-Fold Cross-Validation

In this study, we performed a 5-fold cross-validation to evaluate the generalization performance of the proposed system. With this method, we divided the data set into five equal parts, used each part in turn as the test set, and used the remaining four parts as the training set. Thus, we were able to comprehensively evaluate the system’s performance.
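The partitioning can be sketched as follows. The text does not state whether the events were shuffled or stratified by class before splitting; this sketch assumes a seeded shuffle without stratification, and the function name is hypothetical.

```python
import random

def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_indices, test_indices) for each of the k folds."""
    indices = list(range(n_samples))
    # Assumption: samples are shuffled once with a fixed seed.
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        test = folds[i]
        train = [idx for j, fold in enumerate(folds) if j != i
                 for idx in fold]
        yield train, test
```

For the 931 loud events in this study (234 + 697), each test fold holds 186 or 187 events, and every event is tested exactly once.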

We used the following six indices for performance evaluation: accuracy, sensitivity, specificity, positive predictive value (PPV), negative predictive value (NPV), and F1 score. These indices were calculated by the following equations based on the definition of swallowing-sound events as positive and of non-swallowing-sound events as negative, where $TP$, $TN$, $FP$, and $FN$ denote the numbers of true positives, true negatives, false positives, and false negatives, respectively:

$$\begin{aligned}
\text{Accuracy} &= \frac{TP + TN}{TP + TN + FP + FN}, &
\text{Sensitivity} &= \frac{TP}{TP + FN}, &
\text{Specificity} &= \frac{TN}{TN + FP}, \\
\text{PPV} &= \frac{TP}{TP + FP}, &
\text{NPV} &= \frac{TN}{TN + FN}, &
\text{F1} &= \frac{2 \times \text{PPV} \times \text{Sensitivity}}{\text{PPV} + \text{Sensitivity}}.
\end{aligned}$$

Accuracy is the percentage of all events that were classified correctly, whereas sensitivity is the percentage of actual swallowing-sound events that were correctly identified. Specificity is the percentage of actual non-swallowing-sound events that were correctly identified. The PPV is the percentage of events predicted to be swallowing-sound events that were actually swallowing-sound events, whereas the NPV is the percentage of events predicted to be non-swallowing-sound events that were actually non-swallowing-sound events. The F1 score is the harmonic mean of sensitivity and PPV; it ranges from 0 to 1 and equals 1 if the system has classified everything correctly. It is a particularly useful performance evaluation index if there is a bias in the number of positive and negative samples [35].
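The six indices can be computed from the four confusion-matrix counts as in the following sketch. The function name is hypothetical, and returning 0.0 for an undefined ratio is a convention chosen here, not one stated in the text.

```python
def classification_metrics(tp, fp, tn, fn):
    """Six evaluation indices with swallowing-sound events as positive."""
    def ratio(num, den):
        # Convention chosen for this sketch: undefined ratios become 0.0.
        return num / den if den else 0.0

    sensitivity = ratio(tp, tp + fn)
    ppv = ratio(tp, tp + fp)
    return {
        "accuracy": ratio(tp + tn, tp + fp + tn + fn),
        "sensitivity": sensitivity,
        "specificity": ratio(tn, tn + fp),
        "ppv": ppv,
        "npv": ratio(tn, tn + fn),
        "f1": ratio(2 * ppv * sensitivity, ppv + sensitivity),
    }
```

For example, with hypothetical counts of 90 true positives, 10 false positives, 290 true negatives, and 10 false negatives, the accuracy is 380/400 = 0.95, while sensitivity, PPV, and F1 are all 0.90. These counts are illustrative and are not results from this study.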

3. Results

Results of Evaluating Performance of Swallowing-Sound and Non-Swallowing-Sound Event Classification System

We used a swallowing-sound and non-swallowing-sound event classification system and classified 234 swallowing-sound events and 697 non-swallowing-sound events. Furthermore, we performed a five-fold cross-validation to evaluate system classification performance. Performance evaluation was performed based on 14 feature patterns and using three machine learning models: the SVM, MLP, and ELM. The results of the evaluation of the classification performance are shown in Table 3 based on the F1 score and accuracy.

Table 3.

Results of 5-fold cross-validation based on 14 feature patterns and using three types of machine learning models.

According to the results shown in Table 3, when the feature pattern is MIX P2 and the ELM is adopted as the machine learning model, an F1 score of 0.90 and an accuracy of 95.24% are achieved, which is the highest performance of all the evaluated combinations. MIX P2 comprehensively uses the respective basic statistics and dynamic features of the MFCC and MFMC. Here, we present the performance metrics obtained when each of the three machine learning models recorded its highest F1 score.

Table 4 shows the performance metrics (six evaluation indices, including F1 score, accuracy, sensitivity, etc.) when the three machine learning models achieved their respective highest F1 scores. The ensemble learning model has a high F1 score compared to other models and exhibits similarly high values for sensitivity and PPV, which are important for calculating the F1 score. According to the analysis of Table 3, the ELM had the highest F1 score in 11 cases out of the 14 feature patterns. Although no clear improvement was seen compared to other machine learning models with some feature patterns, these results suggest that the ELM more effectively classifies swallowing-sound events as compared to other models. Additionally, it may be broadly observed from Table 3 that classification performance tends to improve in the three machine learning models when the number of dimensions of a feature increases. Therefore, we conducted a detailed investigation of the relationship between feature patterns (number of dimensions of a feature) and classification performance (accuracy) using ensemble learning.

Table 4.

Feature patterns and performance evaluation values when the classification performance was highest for each machine learning model.

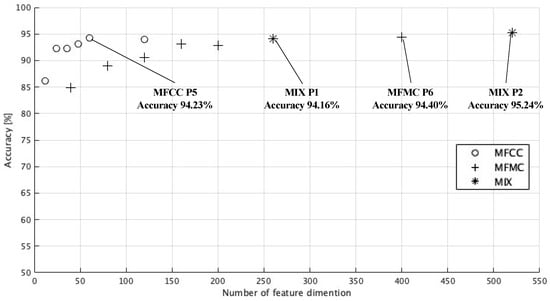

Figure 4 shows a scatterplot of the classification accuracy of the proposed system (vertical axis) against the number of feature dimensions of the feature patterns shown in Table 2 (horizontal axis), based on the classification results of the ensemble learning model. In this scatterplot, the highest classification accuracy of 95.24% is achieved with MIX P2 (number of feature dimensions: 520), and the classification performance tends to improve as the number of dimensions of both the MFCC and MFMC features increases; however, there are also cases in which the classification accuracy for swallowing sounds is approximately 95%, even with relatively few feature dimensions. In particular, MFCC P5 (number of feature dimensions: 60) shows a good performance with a high classification accuracy of 94.23%, suggesting that an efficient classification may be possible, even with a small number of dimensions.

Figure 4.

Comparison of number of feature dimensions and swallowing-sound classification performance.

Owing to the utilization of an unbalanced data set, we re-conducted a five-fold cross-validation to classify class 1 as swallowing-sound events and class 0 as non-swallowing-sound events. Subsequently, we re-calculated the mean and standard deviation of the performance metrics for each class when identified as positive. The results are presented in Table 5. For this purpose, we utilized the feature pattern MIX P2 and adopted the ELM as the machine learning model.

Table 5.

Average and standard deviation of performance metrics for positive identification of swallowing-sound event (class 1) vs. non-swallowing-sound event (class 0).

Our analysis indicates minimal differences among classes, suggesting that our method yields a high detection performance for swallowing sounds. The F1-macro average, computed by averaging the mean F1 scores for class 1 and class 0, is approximately 0.92. These outcomes demonstrate the effectiveness of the feature pattern and machine learning model used, highlighting their suitability for handling unbalanced data sets in our study.
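The F1-macro average described above treats each class in turn as the positive class and averages the resulting F1 scores with equal weight. A minimal sketch, with hypothetical function names, is:

```python
def f1_for_class(y_true, y_pred, positive):
    """F1 score when the given class is treated as positive."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0

def macro_f1(y_true, y_pred, classes=(0, 1)):
    """Unweighted mean of the per-class F1 scores."""
    scores = [f1_for_class(y_true, y_pred, c) for c in classes]
    return sum(scores) / len(scores)
```

Because both classes contribute equally regardless of their size, the macro average is not dominated by the larger non-swallowing class.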

4. Discussion

In this study, we undertook an automatic classification of swallowing-sound and non-swallowing-sound events, for which we built three machine learning models and compared their performance. Based on the results, if we exclude the feature patterns for MFCC P4, MFMC P2, and MFMC P5, the ELM exhibited the highest classification performance.

Zhao et al. [33] used vibration signals from the pharynx obtained from healthy persons and patients with dysphagia, and, with an integrated classifier combining Adaboost, an SVM, and MLP, reported 72.09% accuracy for detecting dysphagia. Similarly, the results of the present study suggest that an ELM can effectively detect swallowing sounds from specific clinical recording data. A model including MFCC features, in particular, demonstrated good performance. The MFCC is a feature that is widely used for voice recognition [30,31] and for identifying swallowing sounds [23,36]. In this study, feature patterns using the MFCC as the basis showed the best performance. Furthermore, we adopted MFMC features that are reportedly effective for speech emotion recognition; however, no significant difference in performance was seen as compared to the analysis using MFCC feature patterns. This may be due to differences in the type and scale of the databases used.

Table 6 summarizes a literature review of the past 15 years, with the latest study results on the automated detection of swallowing sounds. Sazonov et al. used the Mel Scale Fourier Spectrum and reported approximately 85% accuracy in classifying swallowing sounds using an SVM, with data sets including patients who were obese [37]. Moreover, Khlaifi et al. used a classification method that calculates a single MFCC as an acoustic feature for automatically detected acoustic events and, with a GMM and EM algorithm, classified swallowing at a recognition rate of 95.94% from acoustic events obtained from a swallowing data set of only healthy persons [23]; the authors used features that mimic human hearing characteristics. In this study, we used feature patterns comprising basic statistics and dynamic features of the MFCC, and confirmed that, on actual clinical recording data, classification performance improves clearly compared with using a single MFCC. In addition, in a study on the construction of a swallowing-sound-detection system using a recording database of patients with dysphagia and healthy participants, the real-time detection system for swallowing sounds proposed by Jayatilake et al. detected loud intervals and identified swallowing sounds based on their duration and frequency. Swallowing sounds recorded in repetitive saliva swallowing tests performed on healthy participants were reportedly detectable with 83.7% precision and 93.9% recall when using the proposed algorithm. Furthermore, the results show that swallowing sounds produced when patients with dysphagia swallow 3 mL of water (25% barium mixture) can be detected with 79.3% accuracy [38]. Kuramoto et al. reported that it was possible to classify swallowing sounds and noise with 97.3% accuracy with a Convolutional Neural Network (CNN) model using spectrogram features [24]. However, a direct comparison is difficult because other performance metrics were not evaluated. Nevertheless, it is noteworthy that with the technique reported here, we were able to achieve a performance comparable to theirs even when using recording data from patients with dysphagia that contained more complex swallowing-sound patterns than those in healthy individuals, and when using shallow learning [39,40].

Table 6.

Comparison with other studies on swallowing-sound detection.

Swallowing detection was previously possible with various sensors, such as microphones, respiratory flow sensors, and electromyograms [25,36,41,42,43]. In recent years, studies of swallowing-sound detection have combined high-resolution CA (HRCA) signals and deep learning. Khalifa et al. reported that spectrograms of signals from 248 patients with dysphagia, obtained from three accelerometers and microphones and learned using a DNN, enabled the detection of swallowing with accuracy greater than 95% [25]. However, when multiple sensors are used, they are difficult to attach, their attachment positions must be adjusted for each patient, and they place a burden on patients; these issues hamper the practical use of such methods in clinical settings [44]. In this study, we adopted an approach that involved using microphones attached only around the pharynx to detect swallowing, which has significant advantages in simplifying attachment and mitigating the patient burden.

The method for automatically detecting swallowing sounds using actual clinical condition data in this study achieved high detection accuracy under specific conditions, albeit with several constraints. Although only a very small number of errors occurred, their main causes were identified as a decreased signal-to-noise ratio (SNR) in swallowing sounds and sound episodes with multiple voices mixed together. To resolve these issues, it will be necessary to expand the database and develop more advanced feature extraction methods.

The method may occasionally detect long swallowing-sound events. Future analyses may require the implementation of techniques for emphasizing swallowing sounds.

The scale of data sets used was small, and there was limited diversity among the patients; therefore, the possibility of generalizing this method requires further validation. Moreover, additional testing will be essential for the evaluation of its robustness to interference that may be encountered in actual medical settings, such as external noise and patient motions. It will be important to take these constraints into account in future studies to focus on diverse validations using wider data sets, and thereby test robustness in actual clinical environments.

5. Conclusions

In this study, we proposed a novel method for automatically detecting swallowing sounds using recordings obtained in actual clinical settings from 74 patients with dysphagia. By extracting basic statistics and dynamic features based on the MFCC and MFMC and applying an ensemble learning model, we achieved an F1-macro average of approximately 0.92 and an accuracy of 95.20%. The method proved effective for swallowing-sound detection in the current clinical recording database. Therefore, we believe that the proposed method will contribute to enhancing objectivity in CA.
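For readers less familiar with the two figures quoted here, the following minimal sketch shows how a macro-averaged F1 score and an accuracy could be computed from reference and predicted labels, and why the macro-averaged F1 can be lower than the accuracy when the classes are imbalanced. It is an illustration only: the function names are hypothetical, the labels are made up (1 = swallowing sound, 0 = other sound), and it does not reproduce the evaluation protocol or the results of this study.

```python
def f1_for_label(y_true, y_pred, label):
    """F1 score of one class, treating `label` as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == label and p == label)
    fp = sum(1 for t, p in pairs if t != label and p == label)
    fn = sum(1 for t, p in pairs if t == label and p != label)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def macro_f1(y_true, y_pred):
    """Unweighted mean of the per-class F1 scores."""
    labels = sorted(set(y_true) | set(y_pred))
    return sum(f1_for_label(y_true, y_pred, c) for c in labels) / len(labels)


def accuracy(y_true, y_pred):
    """Fraction of items whose predicted label equals the reference label."""
    return sum(1 for t, p in zip(y_true, y_pred) if t == p) / len(y_true)


# Made-up, imbalanced toy labels: 2 swallowing sounds among 10 segments,
# one of which is missed by the hypothetical detector.
y_true = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
y_pred = [1, 0, 0, 0, 0, 0, 0, 0, 0, 0]

print("accuracy:", accuracy(y_true, y_pred))
print("macro F1:", round(macro_f1(y_true, y_pred), 4))
```

In this toy case, the single missed swallowing sound lowers the F1 score of the minority class to 2/3, so the macro average (about 0.80) falls below the accuracy (0.90). This is why the two figures are usually reported together.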

Author Contributions

All authors have read and agreed to the published version of the manuscript. Conceptualization, T.E. and Y.S.; methodology, S.K., T.E. and Y.S.; software, S.K.; validation, T.E., S.K., M.S., A.S. and F.S.; formal analysis, S.K.; investigation, M.S. and A.S.; resources, F.S. and T.E.; data curation, F.S.; writing—original draft preparation, S.K.; writing—review and editing, T.E., S.K., M.S., A.S. and F.S.; visualization, S.K.; supervision, T.E.; project administration, T.E.; funding acquisition, Y.S. and T.E.

Funding

This research was funded by an Ono Acoustics Research Grant.

Institutional Review Board Statement

This study was conducted with the approval (No. 3332-1) of the Tokushima University Hospital Clinical Research Ethics Review Committee.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

Data are unavailable due to privacy or ethical restrictions.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Clavé, P.; Terré, R.; de Kraa, M.; Serra, M. Approaching Oropharyngeal Dysphagia. Rev. Esp. Enferm. Dig. 2004, 96, 119–131.

- Aslam, M.; Vaezi, M.F. Dysphagia in the Elderly. Gastroenterol. Hepatol. 2013, 9, 784–795.

- Sura, L.; Madhavan, A.; Carnaby, G.; Crary, M.A. Dysphagia in the Elderly: Management and Nutritional Considerations. Clin. Interv. Aging 2012, 7, 287–298.

- Nawaz, S.; Tulunay-Ugur, O.E. Dysphagia in the Older Patient. Otolaryngol. Clin. N. Am. 2018, 51, 769–777.

- Kertscher, B.; Speyer, R.; Palmieri, M.; Plant, C. Bedside Screening to Detect Oropharyngeal Dysphagia in Patients with Neurological Disorders: An Updated Systematic Review. Dysphagia 2014, 29, 204–212.

- Walton, J.; Silva, P. Physiology of Swallowing. Surgery 2018, 36, 529–534.

- Sherman, V.; Flowers, H.; Kapral, M.K.; Nicholson, G.; Silver, F.; Martino, R. Screening for Dysphagia in Adult Patients with Stroke: Assessing the Accuracy of Informal Detection. Dysphagia 2018, 33, 662–669.

- Giraldo-Cadavid, L.F.; Leal-Leaño, L.R.; Leon-Basantes, G.A.; Bastidas, A.R.; Garcia, R.; Ovalle, S.; Abondano-Garavito, J.E. Accuracy of Endoscopic and Videofluoroscopic Evaluations of Swallowing for Oropharyngeal Dysphagia. Laryngoscope 2017, 127, 2002–2010.

- Fattori, B.; Giusti, P.; Mancini, V.; Grosso, M.; Barillari, M.R.; Bastiani, L.; Molinaro, S.; Nacci, A. Comparison Between Videofluoroscopy, Fiberoptic Endoscopy and Scintigraphy for Diagnosis of Oro-Pharyngeal Dysphagia. Acta Otorhinolaryngol. Ital. 2016, 36, 395–402.

- Wu, C.H.; Hsiao, T.Y.; Chen, J.C.; Chang, Y.C.; Lee, S.Y. Evaluation of Swallowing Safety with Fiberoptic Endoscope: Comparison with Videofluoroscopic Technique. Laryngoscope 1997, 107, 396–401.

- Horiguchi, S.; Suzuki, Y. Screening Tests in Evaluating Swallowing Function. JMAJ 2011, 54, 31–34.

- Ozaki, K.; Kagaya, H.; Yokoyama, M.; Saitoh, E.; Okada, S.; González-Fernández, M.; Palmer, J.B.; Uematsu, A.H. The Risk of Penetration or Aspiration During Videofluoroscopic Examination of Swallowing Varies Depending on Food Types. Tohoku J. Exp. Med. 2010, 220, 41–46.

- Helliwell, K.; Hughes, V.J.; Bennion, C.M.; Manning-Stanley, A. The Use of Videofluoroscopy (VFS) and Fibreoptic Endoscopic Evaluation of Swallowing (FEES) in the Investigation of Oropharyngeal Dysphagia in Stroke Patients: A Narrative Review. Radiography 2023, 29, 284–290.

- Takahashi, N.; Kikutani, T.; Tamura, F.; Groher, M.; Kuboki, T. Videoendoscopic Assessment of Swallowing Function to Predict the Future Incidence of Pneumonia of the Elderly. J. Oral Rehabil. 2012, 39, 429–437.

- Leslie, P.; Drinnan, M.J.; Finn, P.; Ford, G.A.; Wilson, J.A. Reliability and Validity of Cervical Auscultation: A Controlled Comparison Using Videofluoroscopy. Dysphagia 2004, 19, 231–240.

- Borr, C.; Hielscher-Fastabend, M.; Lücking, A. Reliability and Validity of Cervical Auscultation. Dysphagia 2007, 22, 225–234.

- Lagarde, M.L.J.; Kamalski, D.M.A.; van den Engel-Hoek, L. The Reliability and Validity of Cervical Auscultation in the Diagnosis of Dysphagia: A Systematic Review. Clin. Rehabil. 2016, 30, 199–207.

- Dudik, J.M.; Kurosu, A.; Coyle, J.L.; Sejdić, E. Dysphagia and Its Effects on Swallowing Sounds and Vibrations in Adults. Biomed. Eng. OnLine 2018, 17, 69.

- Takahashi, K.; Groher, M.E.; Michi, K. Methodology for Detecting Swallowing Sounds. Dysphagia 1994, 9, 54–62.

- Rayneau, P.; Bouteloup, R.; Rouf, C.; Makris, P.; Moriniere, S. Automatic Detection and Analysis of Swallowing Sounds in Healthy Subjects and in Patients with Pharyngolaryngeal Cancer. Dysphagia 2021, 36, 984–992.

- Frakking, T.T.; Chang, A.B.; Carty, C.; Newing, J.; Weir, K.A.; Schwerin, B.; So, S. Using an Automated Speech Recognition Approach to Differentiate Between Normal and Aspirating Swallowing Sounds Recorded from Digital Cervical Auscultation in Children. Dysphagia 2022, 37, 1482–1492.

- Sarraf Shirazi, S.; Buchel, C.; Daun, R.; Lenton, L.; Moussavi, Z. Detection of Swallows with Silent Aspiration Using Swallowing and Breath Sound Analysis. Med. Biol. Eng. Comput. 2012, 50, 1261–1268.

- Khlaifi, H.; Istrate, D.; Demongeot, J.; Malouche, D. Swallowing Sound Recognition at Home Using GMM. IRBM 2018, 39, 407–412.

- Kuramoto, N.; Ichimura, K.; Jayatilake, D.; Shimokakimoto, T.; Hidaka, K.; Suzuki, K. Deep Learning-Based Swallowing Monitor for Realtime Detection of Swallow Duration. In Proceedings of the 2020 42nd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Montreal, QC, Canada, 20–24 July 2020; Volume 2020, pp. 4365–4368.

- Khalifa, Y.; Coyle, J.L.; Sejdić, E. Non-invasive Identification of Swallows via Deep Learning in High Resolution Cervical Auscultation Recordings. Sci. Rep. 2020, 10, 8704.

- Suzuki, T.; Ogata, J.; Tsunakawa, T.; Nishida, M.; Nishimura, M. Bottleneck Feature-Mediated DNN-Based Feature Mapping for Throat Microphone Speech Recognition. In Proceedings of the 2018 Asia-Pacific Signal and Information Processing Association Annual Summit and Conference (APSIPA ASC), Honolulu, HI, USA, 12–15 November 2018; pp. 1738–1741.

- Yagi, N.; Nagami, S.; Lin, M.K.; Yabe, T.; Itoda, M.; Imai, T.; Oku, Y. A Noninvasive Swallowing Measurement System Using a Combination of Respiratory Flow, Swallowing Sound, and Laryngeal Motion. Med. Biol. Eng. Comput. 2017, 55, 1001–1017.

- Movahedi, F.; Kurosu, A.; Coyle, J.L.; Perera, S.; Sejdić, E. A Comparison Between Swallowing Sounds and Vibrations in Patients with Dysphagia. Comput. Methods Programs Biomed. 2017, 144, 179–187.

- Cichero, J.A.; Murdoch, B.E. The Physiologic Cause of Swallowing Sounds: Answers from Heart Sounds and Vocal Tract Acoustics. Dysphagia 1998, 13, 39–52.

- Ancilin, J.; Milton, A. Improved Speech Emotion Recognition with Mel Frequency Magnitude Coefficient. Appl. Acoust. 2021, 179, 108046.

- Kerkeni, L.; Serrestou, Y.; Mbarki, M.; Raoof, K.; Mahjoub, M.A.; Cleder, C. Automatic Speech Emotion Recognition Using Machine Learning; IntechOpen: London, UK, 2019.

- Nayak, S.S.; Darji, A.D.; Shah, P.K. Machine Learning Approach for Detecting COVID-19 from Speech Signal Using Mel Frequency Magnitude Coefficient. Signal Image Video Process. 2023, 17, 3155–3162.

- Zhao, H.; Jiang, Y.; Wang, S.; He, F.; Ren, F.; Zhang, Z.; Yang, X.; Zhu, C.; Yue, J.; Li, Y.; et al. Dysphagia Diagnosis System with Integrated Speech Analysis from Throat Vibration. Expert Syst. Appl. 2022, 204, 117496.

- Ribeiro, V.H.A.; Reynoso-Meza, G. Ensemble Learning Toolbox: Easily Building Custom Ensembles in MATLAB; Version 1.0.0; MathWorks: Beijing, China, 2020.

- Chicco, D.; Jurman, G. The Advantages of the Matthews Correlation Coefficient (MCC) over F1 Score and Accuracy in Binary Classification Evaluation. BMC Genom. 2020, 21, 6.

- Nakamura, A.; Saito, T.; Ikeda, D.; Ohta, K.; Mineno, H.; Nishimura, M. Automatic Detection of Chewing and Swallowing. Sensors 2021, 21, 3378.

- Sazonov, E.S.; Makeyev, O.; Schuckers, S.; Lopez-Meyer, P.; Melanson, E.L.; Neuman, M.R. Automatic Detection of Swallowing Events by Acoustical Means for Applications of Monitoring of Ingestive Behavior. IEEE Trans. Biomed. Eng. 2010, 57, 626–633.

- Jayatilake, D.; Ueno, T.; Teramoto, Y.; Nakai, K.; Hidaka, K.; Ayuzawa, S.; Eguchi, K.; Matsumura, A.; Suzuki, K. Smartphone-Based Real-Time Assessment of Swallowing Ability from the Swallowing Sound. IEEE J. Transl. Eng. Health Med. 2015, 3, 2900310.

- Honda, T.; Baba, T.; Fujimoto, K.; Goto, T.; Nagao, K.; Harada, M.; Honda, E.; Ichikawa, T. Characterization of Swallowing Sound: Preliminary Investigation of Normal Subjects. PLoS ONE 2016, 11, e0168187.

- Ohyado, S. Cervical Auscultation. Jpn. J. Gerodontol. 2013, 28, 331.

- Miyagi, S.; Sugiyama, S.; Kozawa, K.; Moritani, S.; Sakamoto, S.I.; Sakai, O. Classifying Dysphagic Swallowing Sounds with Support Vector Machines. Healthcare 2020, 8, 103.

- Suzuki, K.; Shimizu, Y.; Ohshimo, S.; Oue, K.; Saeki, N.; Sadamori, T.; Tsutsumi, Y.; Irifune, M.; Shime, N. Real-Time Assessment of Swallowing Sound Using an Electronic Stethoscope and an Artificial Intelligence System. Clin. Exp. Dent. Res. 2022, 8, 225–230.

- Golabbakhsh, M.; Rajaei, A.; Derakhshan, M.; Sadri, S.; Taheri, M.; Adibi, P. Automated Acoustic Analysis in Detection of Spontaneous Swallows in Parkinson’s Disease. Dysphagia 2014, 29, 572–577.

- Santoso, L.F.; Baqai, F.; Gwozdz, M.; Lange, J.; Rosenberger, M.G.; Sulzer, J.; Paydarfar, D. Applying Machine Learning Algorithms for Automatic Detection of Swallowing from Sound. In Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; pp. 2584–2588.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).