Abstract

Video compression algorithms are commonly used to reduce the number of bits required to represent a video, often at a high compression ratio. However, high compression can cause loss of content detail and visual artifacts that degrade the overall quality of the video. To address this issue, we propose a learning-based restoration method that handles varying degrees of compression artifacts with a single model by predicting the difference between the original and compressed video frames. To achieve this, we adopted a recursive neural network with dilated convolution, which enlarges the receptive field while keeping the number of parameters low, making the model suitable for deployment on a variety of hardware devices. We also designed a temporal fusion module and integrated the color channels into the objective function, enabling the model to exploit temporal correlation and repair chromaticity artifacts. Although it also processes the color channels, our lightweight model has only about 269k parameters, about one-twelfth of those used by other methods, and, unlike those methods, which train a separate model for each quantization parameter (QP), it uses one model for all QPs. Applied to the HEVC test model (HM), our model improves compressed video quality by an average BD-PSNR of 0.18 dB and a BD-BR of −5.06%.

1. Introduction

As the resolution of video equipment increases, reducing recorded video size becomes more important for lessening storage requirements and network transmission burden. Video compression technology has therefore become more prevalent, with the HEVC [1] codec becoming one of the most widely used video standards. HEVC is a block-based video compression standard that uses flexible, variable-size block partitioning to handle objects of varying sizes in video frames. HEVC also achieves additional compression by predicting between frames, a process known as interframe prediction. Because video compression discards subtle information deemed unnecessary, increasing the compression ratio causes greater information loss and noticeable artifacts that significantly degrade perceptual quality. To remove these artifacts, the HEVC in-loop filter provides two standard tools: the deblocking filter (DBF) [2] and the sample adaptive offset (SAO) [3]. Unfortunately, the improvement these two tools provide is limited, and the results still contain significant artifacts, so other works have proposed various ways to improve on them.

Taking advantage of deep learning, several studies have developed restoration methods for removing artifacts caused by video compression. These works [4,5,6,7,8] added trained restoration models to the HEVC in-loop filter, or used them to replace its tools, to further improve compression quality. Yang et al. [9] used different network architectures to enhance frames encoded with different compression types, because interframe prediction artifacts are much more complicated than intraframe prediction artifacts. All of these methods are specific to the HEVC in-loop filter and are thus tied to a particular codec; they cannot be generalized to other codecs.

More general solutions, classified as single-image restoration methods, predict the original content by analyzing the spatial information in a single degraded image; because they do not depend on codec internals, they generalize easily to other codecs. Several researchers [10,11,12] designed shallow models to retrieve spatial information and generate restoration results. Because these results were unsatisfactory, deeper network architectures were subsequently adopted [13,14,15,16,17]. Cavigelli et al. [13] adjusted the filter stride in the network to process feature maps at four different scales to accommodate different object sizes. Zhan et al. [14] proposed a parallel structure that jointly processes the pixel and wavelet domains. Zhang et al. [15] proposed a denoising convolutional network (DnCNN) that uses 20 convolutional layers and batch normalization to build a deeper network. Tai et al. [16] used multiple recursions and designed a memory block inspired by LSTM [18] to avoid losing feature information.

More sophisticated methods use information from multiple frames, rather than a single image, to restore video frames. These multi-frame restoration methods [19,20] take the current frame and its adjacent frames as input simultaneously, allowing the model to learn to analyze spatial and temporal information and use it to enhance the quality of the current frame. Jia et al. [19] created spatial–temporal residue networks (STResNets) to handle interframe prediction artifacts; these networks use two convolutional layers with different filter sizes to capture features. Yang et al. [20] first used a support vector machine (SVM)-based detector to determine whether the compressed frame is a peak quality frame (PQF), and then applied QECNN [9] to the current frame if it was a PQF.

However, these methods have several limitations, and deep-learning-based approaches face further obstacles. To improve prediction accuracy, deep learning methods usually increase the number of network layers, because shallow networks struggle to handle severe artifacts effectively. Deeper networks, however, are harder to train and have considerably more parameters, placing additional strain on the target devices, especially low-end platforms. Another issue is that video compression artifacts are complicated because of the complex prediction mechanisms used in HEVC, particularly interframe prediction. Furthermore, because different compression configurations produce varying degrees of artifacts, it is difficult to develop a single method that handles such complex and diverse compression distortion. We also note that the models and training methods proposed in several previous works [5,6,7,9,10,11,12,13,14,15,16,19,20] target only the luminance channel Y of the YCbCr color space. Although luminance quality has the most significant visual impact, artifacts in the chroma channels can still noticeably affect perceived visual quality. This work aims to address these problems.

Building on our previous paper [21], we propose a single lightweight model that restores quality after various levels of compression. The model uses a recursive structure and ResBlocks [22] to emulate the architecture of DnCNN [15], because DnCNN has good restoration performance and an architecture that is flexible to improve. Both our model design and our training objective take the color channels into account, allowing the model to remove chrominance artifacts with a minimal increase in complexity. In addition, we incorporate dilated convolution [23] and the ConvGRU [24] concept into our model to expand its receptive field and to exploit temporal features, respectively.

2. Proposed Method

We first present the model architecture for single images and explain how we optimize it for image restoration and how we address color aberration with a low computational burden. We then describe the model training setup, the dataset preprocessing, and the design of the objective function. Finally, we extend the model to video processing to handle interframe prediction artifacts and discuss its architecture and training strategy. Figure 1 shows the flowchart of the entire compression system.

Figure 1.

Flowchart of the compression system.

2.1. Single Image Restoration Method

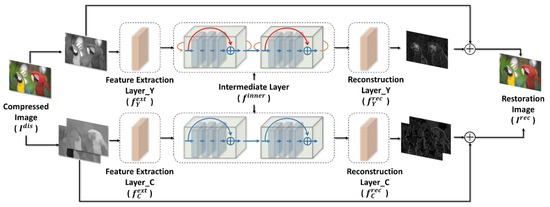

The architecture of deep-learning-based restoration methods commonly consists of three parts: feature extraction, feature processing, and reconstruction. Depending on what the network predicts, the reconstruction layer follows one of two approaches: predicting the whole restored image [6,9,10,11,13,14] or predicting the residual information that compensates for the artifacts [5,7,12,15,16,17,19,20]. Because the residual contains less information than the full image, it is easier to predict, which can improve accuracy. Our approach therefore adopts residual-based restoration, building on DnCNN [15]. The architecture of our model, shown in Figure 2, predicts the residual image between the compressed and original images for all YCbCr channels. The model can be roughly divided into three parts: the feature extraction layer, which has separate luminance and chroma branches; the intermediate layer; and the reconstruction layer, which also has separate luminance and chroma branches. The operation of the whole model can be expressed as Equations (1) and (2):

where the inputs are the luminance and chroma components of the compressed image, respectively. The model outputs the reconstructed luminance and chroma images, which we then concatenate back into a three-channel image as in Equation (3):
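The residual formulation can be illustrated with a minimal plain-Python sketch. It assumes each plane is a list of rows of 8-bit samples, that the chroma planes have already been resampled to the luminance size so the three planes can be stacked, and that the restored output is clipped to [0, 255]; all function names are hypothetical, and the network that predicts the residual is not shown.

```python
# Residual-based restoration on plain nested lists (hypothetical helpers).

def residual_target(original, compressed):
    """Training target: original minus compressed, sample by sample."""
    return [[o - c for o, c in zip(row_o, row_c)]
            for row_o, row_c in zip(original, compressed)]


def apply_residual(compressed, residual):
    """Restored plane = compressed + predicted residual, clipped to 8 bits."""
    return [[min(255, max(0, c + r)) for c, r in zip(row_c, row_r)]
            for row_c, row_r in zip(compressed, residual)]


def concat_channels(y, cb, cr):
    """Stack equally sized Y, Cb and Cr planes into a [3][H][W] image."""
    if not (len(y) == len(cb) == len(cr)):
        raise ValueError("planes must have the same height")
    return [y, cb, cr]


orig = [[100, 102], [98, 97]]
comp = [[96, 104], [98, 90]]
res = residual_target(orig, comp)          # [[4, -2], [0, 7]]
print(apply_residual(comp, res) == orig)   # True when the residual is exact
```

In practice the network only approximates the residual, so the restored plane is close to, rather than equal to, the original.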

Figure 2.

Flowchart of our architecture for a single-image restoration method.

Both the feature extraction and the reconstruction layers consist of a single convolutional layer. The luminance feature extraction layer extracts 64 feature channels from the single luminance channel, while the chroma feature extraction layer extracts the same total of 64 feature channels from both chroma channels together. Because the human visual system is more sensitive to changes in luminance than in chroma, the chroma branch can use a lighter architecture, reducing complexity and the number of parameters. The feature extraction layers use 3 × 3 filters. Mirroring the feature extraction layer, the reconstruction layer generates the luminance residual image from the 64 luminance feature channels and the two chroma residual images from the 64 chroma feature channels; that is, only 32 feature channels are used to reconstruct each chroma channel. Section 3.1 explains why the chroma channels need to be processed.

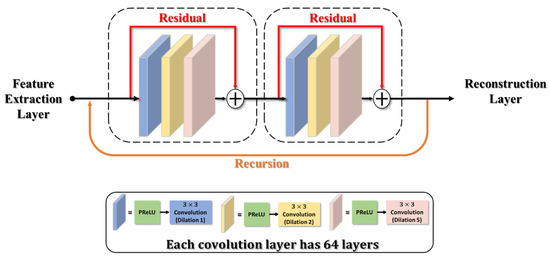

The intermediate layer contains six convolutional layers, as shown in Figure 3. Each convolutional layer uses 64 filters of size 3 × 3 and is activated by PReLU. We use a recursive structure [25] that lets the model build a deep network with a small number of parameters. By repeatedly applying the same layers to the output of the previous iteration, this lightweight structure achieves an effect similar to that of a deep network, so deeper image features that aid restoration can be extracted. Note that the recursive structure is applied only to the luminance component.
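A rough parameter count shows why recursion keeps the model small. This sketch assumes 3 × 3 convolutions with bias and 64 input and output channels, ignores PReLU parameters, and uses a hypothetical recursion count of 3, which is our reading of the conclusion's statement that eight layers of parameters simulate a 20-layer CNN (1 + 6 × 3 + 1 = 20); it is not taken from Table 1.

```python
# Parameter count of the recursive intermediate layer (rough sketch).

def conv_params(kernel, c_in, c_out, groups=1, bias=True):
    """Learnable weights of a (grouped) 2-D convolution."""
    weights = kernel * kernel * (c_in // groups) * c_out
    return weights + (c_out if bias else 0)


LAYERS_PER_PASS = 6   # six convolutional layers in the intermediate layer
RECURSIONS = 3        # hypothetical: 1 + 6 * 3 + 1 = 20 layers in total

per_layer = conv_params(3, 64, 64)
shared = LAYERS_PER_PASS * per_layer                    # stored once, reused
unrolled = LAYERS_PER_PASS * RECURSIONS * per_layer     # if each pass had its own weights
effective_depth = 1 + LAYERS_PER_PASS * RECURSIONS + 1  # + extraction and reconstruction

print(per_layer, shared, unrolled, effective_depth)  # 36928 221568 664704 20
```

Under these assumptions, weight sharing stores one third of the convolution weights that an unrolled network of the same depth would need.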

Figure 3.

Structure of our intermediate layer.

However, recursive networks are prone to vanishing gradients. To address this issue, we incorporate the residual block concept [22,26] into our intermediate layer: the six convolutional layers of the recursive network are divided into two residual blocks connected in series, as shown in Figure 3. Note that in our design each PReLU is placed before, rather than after, its convolutional layer, following the pre-activation design proposed in ResNet-v2 [27], which effectively improves the performance of residual blocks.
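The pre-activation ordering (PReLU, then convolution, inside the residual branch) can be shown with a toy single-channel, 1-D residual block. The 3-tap weights, the PReLU slope of 0.25, zero padding, and the dilation list are illustrative assumptions; the actual block uses 64-channel 2-D convolutions.

```python
# Toy 1-D pre-activation residual block: y = x + F(x), F = (PReLU -> conv) x 3.

def prelu(x, a=0.25):
    """PReLU: identity for non-negative inputs, slope `a` for negative ones."""
    return [v if v >= 0 else a * v for v in x]


def dilated_conv1d(x, w, r=1):
    """Same-size 1-D cross-correlation with a 3-tap kernel w, dilation r, zero padding."""
    n = len(x)
    out = []
    for i in range(n):
        acc = 0.0
        for k, wk in zip((-1, 0, 1), w):
            j = i + k * r
            if 0 <= j < n:
                acc += wk * x[j]
        out.append(acc)
    return out


def preact_residual_block(x, kernels, dilations):
    """Apply (PReLU -> dilated conv) per layer, then add the identity shortcut."""
    h = x
    for w, r in zip(kernels, dilations):
        h = dilated_conv1d(prelu(h), w, r)
    return [xi + hi for xi, hi in zip(x, h)]


x = [1.0, -2.0, 3.0, 0.5]
print(preact_residual_block(x, [(0.0, 0.0, 0.0)] * 3, [1, 2, 5]) == x)  # True
```

With all-zero kernels the residual branch outputs zero and the block reduces to the identity; this clean shortcut path is what lets gradients pass through the stacked blocks.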

If standard 3 × 3 convolutions were used in our model, the receptive field would reach only 41 × 41. To improve performance with a limited number of layers, we adopt dilated convolution [23,28] to enlarge the receptive field. A dilated convolution spaces its kernel taps apart according to a dilation coefficient, so a kernel of the same size covers a larger area; with a coefficient of one, it degenerates to standard convolution. Although dilated convolution increases the receptive field, the architecture must be configured with great care: Wang et al. [29] pointed out that improperly chosen dilation coefficients cause a gridding issue in the model output. We therefore designed our intermediate layer following the hybrid dilated convolution design rules outlined in [29]. For the three convolutional layers, we assigned the dilation coefficients listed in Table 1, with 5 for the last layer. A 3 × 3 kernel with dilation r behaves like a (2r + 1) × (2r + 1) filter, so these layers have correspondingly larger equivalent filter sizes (for example, 11 × 11 for r = 5).
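The gridding issue can be checked by listing which input offsets can reach one output sample through a stack of dilated convolutions; offsets that are never reached are the "holes". For square kernels the 2-D pattern is the product of the 1-D pattern with itself, so a 1-D check suffices. The dilation lists below are illustrative: (1, 2, 5) is the standard hybrid-dilated-convolution example and is not necessarily the exact setting in Table 1.

```python
# Detect gridding holes in a stack of 1-D dilated convolutions.

def coverage(dilations, k=3):
    """Input offsets reachable from output offset 0 through the stack."""
    half = k // 2
    reach = {0}
    for r in dilations:
        reach = {o + t * r for o in reach for t in range(-half, half + 1)}
    return reach


def holes(dilations, k=3):
    """Offsets inside the receptive-field span that are never sampled."""
    reach = coverage(dilations, k)
    return sorted(set(range(min(reach), max(reach) + 1)) - reach)


def equivalent_size(k, r):
    """Equivalent dense filter size of a k-tap kernel with dilation r."""
    return k + (k - 1) * (r - 1)


print(holes([2, 2, 2]))   # [-5, -3, -1, 1, 3, 5]: only even offsets are sampled
print(holes([1, 2, 5]))   # []: dense coverage
print([equivalent_size(3, r) for r in (1, 2, 5)])  # [3, 5, 11]
```

Repeating the same dilation (or using rates with a common factor) leaves regular holes, which is the gridding pattern Wang et al. warn about.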

Our model design is summarized in Table 1. With dilated convolution, the receptive field of the final model is enlarged in the luminance channel and is also increased in the chroma channels.
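For stride-1 convolutions the receptive field grows by (k − 1) · r per layer, so it can be computed directly from a list of (kernel size, dilation) pairs. The layer stack below is hypothetical: it assumes 3 × 3 kernels everywhere, a luminance path of extraction, three recursions of the two residual blocks, and reconstruction, and per-block dilations of (1, 2, 5). The standard case reproduces the 41 × 41 figure quoted above; the dilated value is only what these assumptions give, and Table 1 holds the actual settings.

```python
# Receptive field of a stride-1 convolution stack: RF = 1 + sum((k - 1) * r).

def receptive_field(layers):
    """layers: iterable of (kernel_size, dilation) pairs, all with stride 1."""
    return 1 + sum((k - 1) * r for k, r in layers)


def luminance_stack(block_dilations, recursions=3, blocks_per_pass=2):
    """Hypothetical luminance path: extraction, recursive blocks, reconstruction."""
    layers = [(3, 1)]                                 # feature extraction
    for _ in range(recursions * blocks_per_pass):
        layers += [(3, r) for r in block_dilations]   # one residual block
    layers.append((3, 1))                             # reconstruction
    return layers


print(receptive_field(luminance_stack((1, 1, 1))))  # 41: all standard 3x3 layers
print(receptive_field(luminance_stack((1, 2, 5))))  # 101 under the assumed dilations
```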

Table 1.

Summary of the filter settings of each layer in our model.

To train our model to restore single images, we used two datasets, BSDS500 [30] and DIV2K [31]. These datasets contain uncompressed raw images of various scenes stored in the RGB color space. BSDS500 contains 500 images intended for segmentation tasks, and DIV2K is a dataset of 900 high-resolution images released for super-resolution tasks.

We compressed the BSDS500 images with JPEG at a quality factor (QF) of 10 to create the compressed images for initial model training. To cover different compression qualities, we generated an extended dataset by compressing DIV2K with various quality factors (QF = 10, 20, 30, and 40). Initially, only the BSDS500 training and test sets were used as our training set, and the remaining BSDS500 images were used as the validation set to fine-tune the model. To reduce training time when training with the extended dataset, we used transfer learning to fine-tune the parameters of the model trained on BSDS500 data compressed at QF = 10. The training and testing images were converted to the YCbCr color space and cropped into 80 × 80 patches before being fed to the model. When loading training data, we used three data augmentation methods so that the network learns more diverse characteristics: (1) randomly rotating images by one of two fixed angles; (2) randomly flipping images horizontally; and (3) cropping patches at random locations away from the image boundaries.
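The augmentation steps above can be sketched on planes stored as lists of rows. The key point is that the compressed input and its ground truth must receive identical random choices. Assumptions: rotations are multiples of 90 degrees (a hypothetical choice of 0, 90, or 180 degrees here; the exact angles are the authors'), the crop is 80 × 80, and a hypothetical `margin` keeps crops away from the image boundary.

```python
# Paired data augmentation sketch: identical rotation/flip/crop for input and target.
import random


def rot90(plane):
    """Rotate a plane 90 degrees clockwise."""
    return [list(row) for row in zip(*plane[::-1])]


def hflip(plane):
    """Mirror a plane horizontally."""
    return [row[::-1] for row in plane]


def augment_pair(compressed, original, size=80, margin=8, rng=random):
    """Apply the same random rotation, flip and crop to an input/target pair."""
    turns = rng.choice((0, 1, 2))   # hypothetical: 0, 90 or 180 degrees
    flip = rng.random() < 0.5
    out = []
    for plane in (compressed, original):
        for _ in range(turns):
            plane = rot90(plane)
        if flip:
            plane = hflip(plane)
        out.append(plane)
    h, w = len(out[0]), len(out[0][0])
    # Top-left corner chosen so the patch stays `margin` pixels from every edge.
    top = rng.randint(margin, h - size - margin)
    left = rng.randint(margin, w - size - margin)
    return [[row[left:left + size] for row in p[top:top + size]] for p in out]
```

A seeded generator such as `random.Random(0)` makes the augmentation reproducible; the image must be at least `size + 2 * margin` pixels in each dimension.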

To train the model, we used mini-batch stochastic gradient descent with momentum (SGD + m). The learning rate started at 0.1 and was decreased in two steps, at the start of the 100,000th and 200,000th iterations. The batch size was 32, and the parameters were initialized with the He initialization method [32].
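The two optimizer ingredients named above can be written out in a few lines. This sketch assumes the PyTorch-style momentum update (buffer = μ · buffer + gradient, then weight −= lr · buffer) with a hypothetical μ = 0.9, and He normal initialization with standard deviation sqrt(2 / fan_in), where fan_in = k · k · C_in; the momentum value actually used is not stated in the text.

```python
# SGD with momentum and He initialization, sketched in plain Python.
import math
import random


def he_std(kernel, c_in):
    """He (Kaiming) normal std for a conv layer: sqrt(2 / (k * k * c_in))."""
    return math.sqrt(2.0 / (kernel * kernel * c_in))


def he_init(n, kernel, c_in, rng=random):
    """Draw n weights from N(0, he_std**2)."""
    return [rng.gauss(0.0, he_std(kernel, c_in)) for _ in range(n)]


def sgd_momentum_step(w, grad, buf, lr, mu=0.9):
    """One SGD + momentum update; returns (new_weights, new_buffer)."""
    buf = [mu * b + g for b, g in zip(buf, grad)]
    w = [wi - lr * bi for wi, bi in zip(w, buf)]
    return w, buf


# Usage: two steps on f(w) = w^2 (gradient 2w) from w = 1.0 with lr = 0.1.
w, buf = [1.0], [0.0]
for _ in range(2):
    w, buf = sgd_momentum_step(w, [2 * w[0]], buf, lr=0.1)
print(w)  # approximately [0.46]
```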

We used the L2 loss as the objective function for model training. Unlike other methods in the literature, which deal only with luminance artifacts, we trained on the luminance and chroma channels simultaneously to achieve better results, so the objective function accounts for both luminance and chroma. The chroma weighting factor is set to 0.25, in accordance with the 4:1:1 chroma subsampling ratio commonly used with YCbCr.
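A luminance/chroma weighted L2 objective can be sketched as follows. The exact form is an assumption: the mean squared error on the luminance residual plus the chroma weight (0.25, as stated) times the mean of the squared errors on the two chroma residuals. The authors' normalization may differ.

```python
# Weighted L2 objective over luminance and chroma (assumed form).

def mse(pred, target):
    """Mean squared error between two planes stored as lists of rows."""
    flat_p = [v for row in pred for v in row]
    flat_t = [v for row in target for v in row]
    return sum((p - t) ** 2 for p, t in zip(flat_p, flat_t)) / len(flat_p)


def yc_loss(pred_y, true_y, pred_c, true_c, chroma_weight=0.25):
    """pred_c / true_c: lists of chroma planes (Cb, Cr)."""
    chroma = sum(mse(p, t) for p, t in zip(pred_c, true_c)) / len(pred_c)
    return mse(pred_y, true_y) + chroma_weight * chroma


# Luma MSE = 2, chroma MSEs = 4 and 0, so the loss is 2 + 0.25 * 2 = 2.5.
print(yc_loss([[1, 2]], [[1, 4]], [[[0]], [[0]]], [[[2]], [[0]]]))  # 2.5
```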

2.2. Multi-Frame Restoration Method

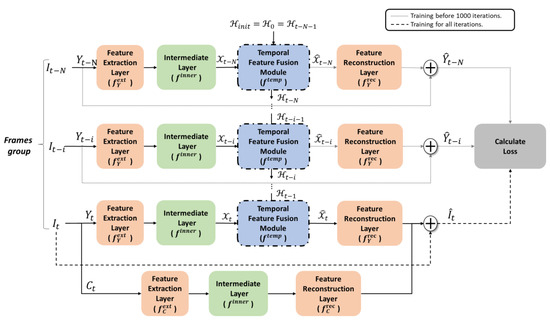

A video codec takes previous frames as references, searches them for similar content, and applies that content to the current frame through motion compensation; the resulting prediction loss produces complex compression artifacts. To let our method handle these video compression artifacts, we designed a temporal feature fusion module that extends our single-image restoration method to multi-frame restoration. Figure 4 depicts the architecture of our multi-frame restoration method, in which the temporal feature fusion module integrates the spatial features of the current frame with the temporal features of previous frames in the luminance component. For chroma, because of the computational complexity and the lower importance of chroma relative to luminance, we keep the single-image architecture; spatial information is sufficient to eliminate visible chroma artifacts. As shown in Figure 4, because the intermediate layer already handles the spatial features of a single frame, as discussed previously, we use its output directly as the spatial feature of the current frame. We then concatenate this spatial feature with the temporal feature from the previous frame to obtain the module input; both are 64-channel features, as in the single-frame restoration scheme, so the module input has 128 channels.

Figure 4.

Flowchart of our architecture for multi-frame restoration method.

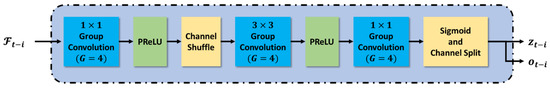

The detailed structure of the temporal feature fusion module is shown in Figure 5. We designed the module based on the ConvGRU [24,33] concept and borrowed its structure from ShuffleNet [34] to avoid the computational complexity and parameter increase caused by a large number of channels. As shown in Figure 5, the 128 input feature channels are first divided evenly into four groups, each processed by a 1 × 1 group convolution, and the channels are then shuffled as in ShuffleNet so that subsequent layers process information from different groups. The result is then processed sequentially by 3 × 3 and 1 × 1 group convolutional layers; that is, we use three group convolutional layers in total to fuse the spatial and temporal features. Finally, a sigmoid function maps the output to [0, 1], representing the importance of each feature, and the output is divided into two 64-channel gates: the update gate z and the output gate o. After the spatial and temporal features are fused, the new feature and the hidden state are computed using the gates z and o according to Equations (5) and (6), where ⊙ denotes the Hadamard product. Once the new feature is calculated, a feature reconstruction layer generates the residual information for restoring the compressed frame, and the hidden state is passed on to the next frame for processing and updating, as shown in Figure 4.
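Two building blocks of the fusion module can be sketched on per-channel values (one number per channel, i.e., a single pixel). The channel shuffle follows the ShuffleNet recipe (reshape to groups × n, transpose, flatten). The gated update is an assumed, common ConvGRU-style form, h_t = (1 − z) ⊙ h_{t−1} + z ⊙ s_t with output o ⊙ h_t; it is not necessarily the authors' Equations (5) and (6).

```python
# Channel shuffle and an assumed GRU-style gated update, per pixel.
import math


def channel_shuffle(channels, groups):
    """Reshape to (groups, n), transpose to (n, groups), flatten."""
    n = len(channels) // groups
    return [channels[g * n + i] for i in range(n) for g in range(groups)]


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def gated_update(gate_logits, spatial, hidden):
    """Split 2C sigmoid outputs into update gate z and output gate o (C each)."""
    c = len(spatial)
    gates = [sigmoid(v) for v in gate_logits]
    z, o = gates[:c], gates[c:]
    new_hidden = [(1 - zi) * h + zi * s for zi, h, s in zip(z, hidden, spatial)]
    output = [oi * h for oi, h in zip(o, new_hidden)]
    return output, new_hidden


print(channel_shuffle(list(range(8)), groups=4))  # [0, 2, 4, 6, 1, 3, 5, 7]
```

The grouping is what saves parameters: a 1 × 1 convolution on 128 channels has 128 × 128 = 16,384 weights when dense, but only 128 × 32 = 4096 with four groups, and the shuffle restores cross-group information flow.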

Figure 5.

The structure of temporal feature fusion module.

The training dataset was obtained from Xiph.org [35] as raw video files. This collection contains a variety of videos; we excluded animated videos and videos with resolutions above 4K from training. We used the HEVC reference software (HM-16.15) [1] to compress the Xiph.org sequences, configured with the HEVC standard low-delay P (LDP) profile and quantization parameter (QP) values of 22, 27, 32, and 37. Because LDP-coded content involves both interframe and intraframe prediction, this configuration is suitable for creating a dataset to train the multi-frame restoration model. To allow comparison with the artifact removal tools in the HEVC standard, we disabled DBF and SAO when compressing the videos.
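The encoding setup can be scripted by building one HM command line per QP. This sketch only constructs the commands and does not run them. It assumes the HM encoder binary is called `TAppEncoder`, uses HM's `cfg/encoder_lowdelay_P_main.cfg`, and uses the option names `-i`, `-wdt`, `-hgt`, `-fr`, `-f`, `-q`, `-b`, `-o`, `--LoopFilterDisable` and `--SAO` as we understand them from HM; verify them against the manual of the HM version used. The YUV path, resolution and frame count are hypothetical placeholders.

```python
# Build HM low-delay P encoder commands with DBF and SAO disabled (not executed).
import shlex

QPS = (22, 27, 32, 37)


def hm_ldp_command(yuv, width, height, fps, frames, qp, out_prefix):
    return [
        "TAppEncoder",
        "-c", "cfg/encoder_lowdelay_P_main.cfg",
        "-i", yuv,
        "-wdt", str(width), "-hgt", str(height),
        "-fr", str(fps), "-f", str(frames),
        "-q", str(qp),
        "--LoopFilterDisable=1",   # disable the deblocking filter (DBF)
        "--SAO=0",                 # disable sample adaptive offset
        "-b", f"{out_prefix}_qp{qp}.bin",
        "-o", f"{out_prefix}_qp{qp}_rec.yuv",
    ]


for qp in QPS:
    print(shlex.join(hm_ldp_command("example_1920x1080.yuv", 1920, 1080, 30, 64, qp, "example")))
```

Each printed command could then be run with `subprocess.run(cmd, check=True)` once the paths point at real files.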

We sample multiple sets of consecutive frames from each sequence to match the input of our model. However, in some sequences the content is nearly identical across all frames and contains a great deal of duplicate information. Therefore, when creating the training dataset, we extract only four sets of frames from each video sequence and skip frames without substantial content. Because the HEVC LDP configuration uses a group of pictures (GoP) of four frames, we likewise train the model on sets of four frames (N = 3).
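The sampling rule can be sketched as picking up to four non-overlapping runs of N + 1 = 4 consecutive frame indices, spread evenly over the sequence. The `is_static` predicate is hypothetical (for example, a threshold on frame differences) and stands in for the authors' unspecified test for frames without substantial content.

```python
# Frame-group sampling sketch: up to 4 non-overlapping 4-frame runs per sequence.

def sample_groups(num_frames, group_len=4, groups_per_seq=4, is_static=lambda i: False):
    """Return up to `groups_per_seq` runs of consecutive frame indices."""
    starts = [s for s in range(0, num_frames - group_len + 1, group_len)
              if not any(is_static(i) for i in range(s, s + group_len))]
    if len(starts) > groups_per_seq:
        step = len(starts) / groups_per_seq      # spread the picks evenly
        starts = [starts[int(k * step)] for k in range(groups_per_seq)]
    return [list(range(s, s + group_len)) for s in starts]


print(sample_groups(100))
# [[0, 1, 2, 3], [24, 25, 26, 27], [48, 49, 50, 51], [72, 73, 74, 75]]
```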

During training, we used the same preprocessing as for the single-image restoration model: the same random rotation, random horizontal flipping, and random cropping are applied to all four consecutive frames to augment the training samples. Because the spatial-processing part of our architecture was already trained, we can use transfer learning to fine-tune this pre-trained model, reducing the time needed to train the video model. However, the pre-trained model was trained on JPEG compression artifacts, whose spatial characteristics differ slightly from those of HEVC. The parameters of the spatial model are therefore gradually updated during training to best handle HEVC video compression artifacts.

Because the pre-trained model can already remove compression artifacts, its parameters do not need large adjustments, so we set the initial learning rate to 0.01. After the 50,000th and 100,000th iterations, the learning rate was reduced to 0.001 and 0.0001, respectively, and training was completed after 150,000 parameter updates. For the first 1000 iterations, we use Equation (7) as the objective function, which requires reconstructing all frames in a frame group, as shown in Figure 4. To speed up training, after 1000 iterations we switch to the objective function in Equation (4), which measures the loss only for the current frame instead of all frames in the group, reducing complexity. The weighting factor is set to 0.25 in both objective functions.
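The schedule above can be expressed as two small functions. The learning rates, the 1000-iteration switch between objectives, and the 150,000-iteration total come from the text; treating "after the 50,000th iteration" as iteration ≥ 50,000 is our assumption, and the returned objective labels are hypothetical names.

```python
# Video-model training schedule (values from the text; boundary handling assumed).

TOTAL_ITERATIONS = 150_000


def video_lr(iteration):
    """Step learning-rate schedule: 0.01 -> 0.001 -> 0.0001."""
    if iteration < 50_000:
        return 0.01
    if iteration < 100_000:
        return 0.001
    return 0.0001


def objective_name(iteration):
    """Equation (7) over all frames of the group first, then Equation (4)."""
    return "eq7_all_frames" if iteration < 1_000 else "eq4_current_frame"
```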

3. Experiment Results

In our experiments, model training and testing were performed on a personal computer with an Intel i7-4790 CPU, an NVIDIA GeForce GTX 1080 (8 GB) GPU, and the Ubuntu operating system. We implemented our neural network models in Python with the PyTorch deep learning framework and used the HEVC reference software HM-16.15 for video compression. To assess the restoration methods, we used PSNR, SSIM [36], and PSNR-B [37], where larger values indicate higher quality. PSNR is the most straightforward and widely used metric for measuring the difference between the reference and evaluated images. Related studies also use SSIM and PSNR-B, which are intended to bring image quality assessment closer to human vision. SSIM is currently the most prevalent metric based on the characteristics of the human visual system: adjacent pixels in natural images are usually strongly correlated, and the human eye is sensitive to differences in local structure. Therefore, unlike PSNR, which computes pixel-by-pixel differences, SSIM compares local image blocks. PSNR-B evaluates block-based compressed images by adding a blocking effect factor (BEF) to the mean squared error used in PSNR. BEF measures the discontinuity between boundary pixels of horizontally and vertically adjacent blocks to quantify blocking artifacts.
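PSNR and PSNR-B can be sketched for 8-bit grayscale images stored as lists of rows. The BEF follows our reading of Yim and Bovik's definition with a single block size B = 8: the mean squared difference across block boundaries minus that across non-boundary neighbours, scaled by η = log2 B / log2(min(H, W)) when the boundary term is larger, and zero otherwise. Check it against a reference implementation before using it for reported numbers.

```python
# PSNR and PSNR-B (single block size) on plain nested lists.
import math


def mse(ref, test):
    n = len(ref) * len(ref[0])
    return sum((a - b) ** 2 for ra, rb in zip(ref, test) for a, b in zip(ra, rb)) / n


def psnr(ref, test, peak=255.0):
    m = mse(ref, test)
    return math.inf if m == 0 else 10 * math.log10(peak ** 2 / m)


def bef(img, B=8):
    """Blocking effect factor of the test image."""
    h, w = len(img), len(img[0])
    sb = nb = sc = nc = 0
    for r in range(h):                 # horizontal neighbours (r, c)-(r, c+1)
        for c in range(w - 1):
            d = (img[r][c] - img[r][c + 1]) ** 2
            if (c + 1) % B == 0:
                sb, nb = sb + d, nb + 1
            else:
                sc, nc = sc + d, nc + 1
    for r in range(h - 1):             # vertical neighbours (r, c)-(r+1, c)
        for c in range(w):
            d = (img[r][c] - img[r + 1][c]) ** 2
            if (r + 1) % B == 0:
                sb, nb = sb + d, nb + 1
            else:
                sc, nc = sc + d, nc + 1
    db = sb / nb if nb else 0.0
    dbc = sc / nc if nc else 0.0
    eta = math.log2(B) / math.log2(min(h, w)) if db > dbc else 0.0
    return eta * (db - dbc)


def psnr_b(ref, test, peak=255.0, B=8):
    m = mse(ref, test) + bef(test, B)
    return math.inf if m == 0 else 10 * math.log10(peak ** 2 / m)
```

For a 16 × 16 image made of flat 8 × 8 blocks, all differences occur at block boundaries, so BEF is positive and PSNR-B falls below PSNR even though each block is smooth.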

3.1. Experiment of Single-Image Restoration Method

Table 2 summarizes the datasets used in the single-image compression artifact experiments. The use of BSDS500 and DIV2K for training is described in Section 2.1. We tested our single-image restoration method on the LIVE1 [38,39] dataset compressed with JPEG, using the same quality factors (QF = 10, 20, 30, and 40) as for the training set.

Table 2.

Summary of image datasets.

We conducted ablation tests to validate our model design by adding each model component in turn and analyzing the gain and efficiency it provides. The basic model consists of eight standard convolutional layers. The tested components are the recursive network, the residual blocks, and the dilated convolution introduced in Section 2.1; finally, we evaluate the model trained with the chroma channels added. Table 3 shows the evaluation results of the trained models on images compressed with a JPEG quality factor of 10. The results show that performance increases steadily as each design optimization is added. Adding color processing slightly decreases Y-channel quality but significantly improves the chroma channels. Finally, training with DIV2K images further improves the performance of the network and allows it to handle all other QFs with the same model parameters.

Table 3.

Average PSNR for the LIVE1 dataset (QF = 10).

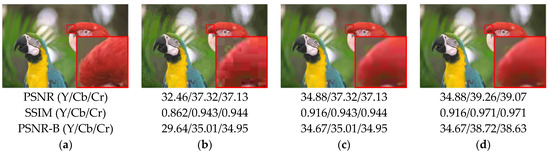

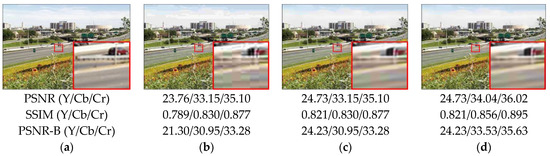

Figure 6 and Figure 7 show that adding color processing improves not only the evaluation metrics but also the visual quality. As Figure 6b,c show, restoring only the luminance already greatly reduces the overall artifacts compared with the compressed image, but visible color artifacts remain. Figure 6d shows that the color blocks in Figure 6c are effectively removed when the chroma channels are also restored, and Figure 7 shows the same improvement. These results demonstrate the importance of restoring color artifacts, which is why we designed our method to process both luminance and chroma.

Figure 6.

Comparison of compressed and restored images on parrots from LIVE1 (QF = 10). (a) Ground truth; (b) compressed image; (c) image with only Y channel restored; (d) image with both Y and CbCr channels restored.

Figure 7.

Comparison of compressed and restored images on Flowersonih35 from LIVE1 (QF = 10). (a) Ground truth; (b) compressed image; (c) image with only Y channel restored; (d) image with both Y and CbCr channels restored.

Table 4 compares the results of our method with those of other studies [10,12,13,14,15,16,17] on the LIVE1 dataset. Because no existing work reports results for the chroma channels, we compare our chroma results only with the chroma quality of the JPEG-compressed images. Moreover, because the SSIM settings used in [14,15,16] differ from those in other works, we compare only PSNR and PSNR-B. The values in parentheses give the percentage improvement of our method over each other method.

Table 4.

Comparison of performance to other works on the LIVE1 dataset.

Among all the methods, only ours handles different degrees of artifacts with the same model parameters, and its PSNR and PSNR-B results are very close to those of the best-performing method, differing by at most 0.1 dB. Note also that the other methods have 3 to 22 times as many parameters as our model and require a separate set of parameters for each degree of artifacts, so their total parameter counts are several times higher than those listed in Table 4.

3.2. Experiment of Multi-Frame Restoration Method

To create the test set for evaluating the multi-frame restoration model, we compressed the HEVC test sequences [40] with the same settings as the training set: the low-delay P (LDP) profile of the HEVC default configuration, QP values of 22, 27, 32, and 37, and the in-loop filter disabled. We did not test Class F, which contains artificially produced sequences, because our model was developed for natural scenes. This evaluation fairly assesses whether our method can effectively remove artifacts by exploiting the relationships between frames. Table 5 summarizes all of the datasets used to train and test our multi-frame restoration method.

Table 5.

Summary of video datasets.

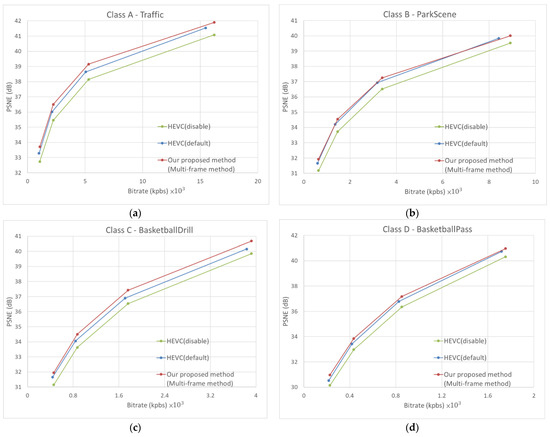

We used the BD-PSNR and BD-BR metrics proposed by Bjontegaard et al. [41] to measure the quality difference and the bitrate difference, respectively, when evaluating video restoration performance. A higher BD-PSNR indicates better encoded quality, and a lower BD-BR indicates that fewer bits are required for the encoded bitstream at the same quality. As shown in Table 6, our method can handle the artifacts caused by HEVC compression with DBF and SAO disabled. Compared with HEVC encoding with SAO and DBF enabled, our method improves BD-PSNR by 0.18 dB while reducing BD-BR by 5.06%. Figure 8 presents the rate-distortion (RD) curves for a randomly selected sequence from each class (Traffic from Class A, ParkScene from Class B, BasketballDrill from Class C, BasketballPass from Class D, and FourPeople from Class E), as well as the overall performance. These plots compare the luminance PSNR of our method and HEVC. Our method achieves higher PSNR than the default HEVC configuration for all the sequence classes and QP values shown.
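The Bjontegaard metrics can be sketched for the four-point case used here (QP 22, 27, 32, 37). As in the common implementation, each RD curve is fitted by a cubic in log-rate (for BD-PSNR) or in PSNR (for BD-BR), and the gap between the two fits is averaged over the overlapping interval. With exactly four points the cubic interpolates them, so this sketch uses Lagrange interpolation and Simpson's rule, which is exact for cubics. It is an illustration, not the authors' evaluation script, and the RD points in the usage example are hypothetical.

```python
# Bjontegaard delta metrics (BD-PSNR, BD-BR) for two 4-point RD curves.
import math


def _lagrange(xs, ys):
    """Cubic through four points (what a degree-3 least-squares fit gives)."""
    def f(x):
        total = 0.0
        for i, (xi, yi) in enumerate(zip(xs, ys)):
            term = yi
            for j, xj in enumerate(xs):
                if j != i:
                    term *= (x - xj) / (xi - xj)
            total += term
        return total
    return f


def _mean_gap(x1, y1, x2, y2):
    """Average of curve2 - curve1 over the overlapping x-interval."""
    lo, hi = max(min(x1), min(x2)), min(max(x1), max(x2))
    if hi <= lo:
        raise ValueError("RD curves do not overlap")
    f1, f2 = _lagrange(x1, y1), _lagrange(x2, y2)
    g = lambda x: f2(x) - f1(x)
    # Simpson's rule is exact for cubics; integral / (hi - lo) reduces to this.
    return (g(lo) + 4 * g((lo + hi) / 2) + g(hi)) / 6


def bd_psnr(rates1, psnr1, rates2, psnr2):
    """Average PSNR difference (dB) of curve 2 over curve 1 at equal bitrate."""
    return _mean_gap([math.log(r) for r in rates1], psnr1,
                     [math.log(r) for r in rates2], psnr2)


def bd_rate(rates1, psnr1, rates2, psnr2):
    """Average bitrate difference (%) of curve 2 vs. curve 1 at equal PSNR."""
    gap = _mean_gap(psnr1, [math.log(r) for r in rates1],
                    psnr2, [math.log(r) for r in rates2])
    return (math.exp(gap) - 1) * 100


rates = [100, 200, 400, 800]        # kbps, hypothetical
anchor = [30.0, 33.0, 35.5, 37.0]   # dB, hypothetical
print(round(bd_psnr(rates, anchor, rates, [p + 0.5 for p in anchor]), 6))  # 0.5
print(round(bd_rate(rates, anchor, [0.9 * r for r in rates], anchor), 6))  # -10.0
```

A curve shifted up by 0.5 dB yields a BD-PSNR of 0.5 dB, and a curve needing 10% fewer bits at every quality yields a BD-BR of −10%, which matches the sign convention used in Table 6 (negative BD-BR means bitrate savings).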

Table 6.

BD-PSNR and BD-BR comparison to the HEVC baseline with LDP configuration.

Figure 8.

RD-curve results for different sequences using QP = 22, 27, 32, and 37. (a) Class A—Traffic; (b) Class B—ParkScene; (c) Class C—BasketballDrill; (d) Class D—BasketballPass; (e) Class E—FourPeople; (f) average performance over all sequences.

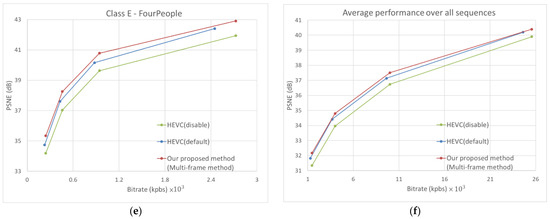

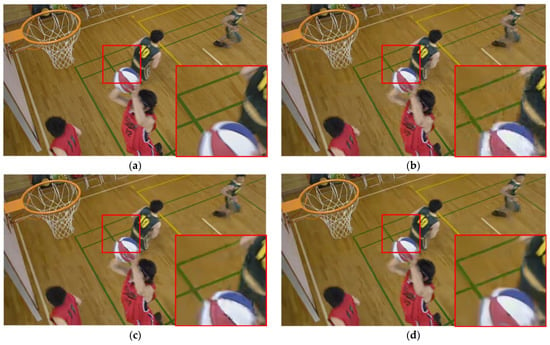

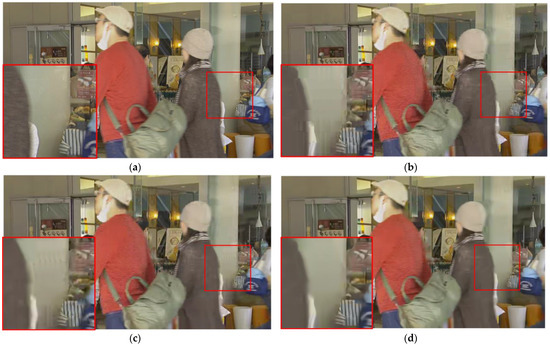

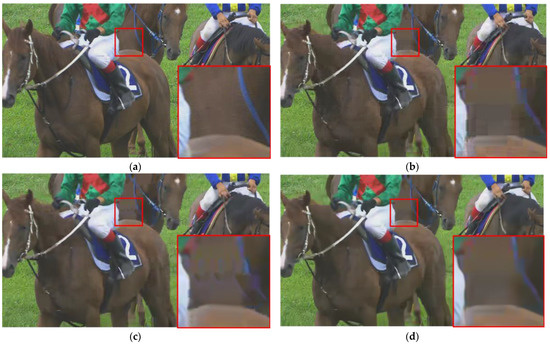

Figure 9, Figure 10 and Figure 11 present a visual comparison of HEVC with the in-loop filter disabled, the HEVC baseline, and our method. Our method effectively removes not only blocking and ringing artifacts but also temporal artifacts such as edge floating [42].

Figure 9.

Comparison of visual experience in BasketballDrill from HEVC test sequences (QP = 37). (a) Ground truth; (b) HEVC disabled in-loop filter; (c) HEVC baseline; (d) our proposed method.

Figure 10.

Comparison of visual experience in BQMall from HEVC test sequences (QP = 37). (a) Ground truth; (b) HEVC disabled in-loop filter; (c) HEVC baseline; (d) our proposed method.

Figure 11.

Comparison of visual experience in RaceHorses from HEVC test sequences (QP = 37). (a) Ground truth; (b) HEVC disabled in-loop filter; (c) HEVC baseline; (d) our proposed method.

Although the blocking artifacts visible when the in-loop filter is disabled are absent from the HEVC baseline results, temporal artifacts and color blocks remain. For example, there is a grain artifact on the ball in Figure 9c, a jerkiness artifact on the wall in Figure 10c, and mosquito noise on the horse in Figure 11c. Our method effectively removes all of these types of artifacts caused by video compression, as shown in Figure 9d, Figure 10d, and Figure 11d.

We compare our model with other multi-frame restoration methods [5,6] that operate in the LDP configuration, using the results reported in those works. Because RHCNN [5] uses the datasets differently from our method and He et al. [6], we compare our restoration performance with RHCNN [5] separately in Table 7.

Table 7.

Comparison of our method to RHCNN [5] using HEVC test sequences.

The authors of RHCNN [5] used most of the HEVC test sequences as their training set, and their test sets are mostly the same as our Xiph [35] training set. Table 7 therefore covers only the sequences that belong to both test sets and, following the comparison format of RHCNN [5], reports I frames and P frames separately. The results show that our model significantly outperforms RHCNN in almost all cases, the exception being I frames in the PeopleOnStreet sequence.

Next, we compare our method with that of He et al. [6], who use postprocessing to improve the quality of standard HEVC with both SAO and DBF enabled. As shown previously, our model handles varying degrees of compression artifacts with a single model, and, as Table 8 shows, it also approaches the performance of the model trained for a specific QP value in [6]. Note that Table 8 compares PSNR rather than BD-PSNR to match the presentation in [6]. Comparing PSNR at a fixed QP is not very informative, because the actual bitrate differs between the two methods even at the same QP. Table 8 therefore only indicates that the two methods achieve similar quality.

Table 8.

Comparison of our method to [6] in terms of PSNR (dB) (QP = 37).

Table 6, Table 7, and Table 8 show that our model has nearly the same restoration capability as the other methods [5,6]. Although our method scores slightly lower in some cases, it needs only one model to handle all QPs, unlike the other methods [5,6], which train a separate model for each QP. As shown in Table 9, our model is also much lighter, requiring only about one-twelfth of the parameters used by the other methods (269k vs. 3098k/3340k). Furthermore, if four QPs must be supported, those methods need four models, so our parameter count is only about 1/48 of theirs.
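The parameter ratios quoted above follow from simple arithmetic on the counts in Table 9. The small sketch below assumes, as the text states, that each competing method trains one model per QP, so supporting four QPs multiplies its total by four; it does not assume which of the two counts belongs to which method, and the helper name is hypothetical.

```python
OURS_K = 269             # our single model, thousands of parameters (Table 9)
OTHERS_K = (3098, 3340)  # the two competing models, thousands of parameters (Table 9)
NUM_QPS = 4              # QPs to support; the other methods need one model per QP


def param_ratio(ours_k, theirs_k, models_needed=1):
    """How many times more parameters the competing method needs in total."""
    return theirs_k * models_needed / ours_k


for theirs_k in OTHERS_K:
    one = param_ratio(OURS_K, theirs_k)
    four = param_ratio(OURS_K, theirs_k, NUM_QPS)
    print(f"{theirs_k}k: {one:.1f}x for one QP, {four:.1f}x for {NUM_QPS} QPs")
# 3098k: 11.5x for one QP, 46.1x for 4 QPs
# 3340k: 12.4x for one QP, 49.7x for 4 QPs
```

The two single-QP ratios bracket the "one-twelfth" figure, and the two four-QP ratios bracket the "1/48" figure.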

Table 9.

Comparison of the number of parameters with other multi-frame restoration models.

4. Conclusions

We have developed a lightweight method for removing compression artifacts. To achieve this, we employed a recursive network that uses only eight layers of convolutional parameters to emulate a 20-layer CNN, and adopted dilated convolutions to increase the receptive field without increasing the depth of the network. To remove the artifacts caused by video compression, we designed a temporal fusion module that extracts features from the luminance components of adjacent frames, and then trained a single model, with a single set of parameters, for all compression levels and frame prediction modes. Compared with previous CNN-based compression artifact removal work, our method effectively removes both luminance and chroma artifacts, improving the overall visual quality. In addition, our model integrates spatial and temporal information to reduce the artifacts caused by inter prediction. When four QP-specific models are counted, our method requires only about 1/48 of the parameters of the multi-frame methods [5,6] while achieving comparable performance.
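The receptive-field benefit of dilated convolution can be checked with the standard formula for stacked stride-1 convolutions: a layer with kernel size k and dilation d widens the receptive field by (k − 1)·d pixels. The sketch below compares 20 plain layers with 20 dilated ones of the same depth. The 3 × 3 kernel size and the dilation schedule are hypothetical, chosen only for illustration, since this section does not list the model's actual kernel sizes or dilation rates.

```python
def receptive_field(kernel_sizes, dilations):
    """Receptive-field width (pixels) of stacked stride-1 convolutions.

    A layer with kernel size k and dilation d adds (k - 1) * d pixels.
    """
    if len(kernel_sizes) != len(dilations):
        raise ValueError("need one dilation per layer")
    rf = 1
    for k, d in zip(kernel_sizes, dilations):
        rf += (k - 1) * d
    return rf


DEPTH = 20                    # effective depth mentioned in the text
KERNELS = [3] * DEPTH         # ASSUMED 3x3 kernels throughout
PLAIN = [1] * DEPTH           # ordinary (undilated) convolutions
DILATED = [1, 2, 4, 2] * 5    # HYPOTHETICAL dilation schedule, not the paper's

print(receptive_field(KERNELS, PLAIN))    # 41
print(receptive_field(KERNELS, DILATED))  # 91
```

Dilation spaces out the same k × k taps, so each layer keeps its weight count while the receptive field grows; this is why dilation suits a model whose parameter budget is kept small.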

Author Contributions

Conceptualization, T.-Y.K.; Methodology, T.-Y.K.; Software, C.-H.C.; Validation, Y.-J.W. and C.-H.C.; Investigation, Y.-J.W.; Resources, T.-Y.K.; Data curation, Y.-J.W.; Writing—original draft, Y.-J.W.; Writing—review & editing, T.-Y.K. and P.-C.S.; Supervision, T.-Y.K. and P.-C.S. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Science and Technology Council, grant number 111-2221-E-027-065.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Sullivan, G.J.; Ohm, J.-R.; Han, W.-J.; Wiegand, T. Overview of the high efficiency video coding (HEVC) standard. IEEE Trans. Circuits Syst. Video Technol. 2012, 22, 1649–1668.

- Norkin, A.; Bjontegaard, G.; Fuldseth, A.; Narroschke, M.; Ikeda, M.; Andersson, K.; Zhou, M.; Van der Auwera, G. HEVC deblocking filter. IEEE Trans. Circuits Syst. Video Technol. 2012, 22, 1746–1754.

- Fu, C.-M.; Alshina, E.; Alshin, A.; Huang, Y.-W.; Chen, C.-Y.; Tsai, C.-Y.; Hsu, C.-W.; Lei, S.-M.; Park, J.-H.; Han, W.-J. Sample adaptive offset in the HEVC standard. IEEE Trans. Circuits Syst. Video Technol. 2012, 22, 1755–1764.

- Dai, Y.; Liu, D.; Wu, F. A convolutional neural network approach for post-processing in HEVC intra coding. In Proceedings of the International Conference on Multimedia Modeling, Reykjavik, Iceland, 4–6 January 2017; pp. 28–39.

- Zhang, Y.; Shen, T.; Ji, X.; Zhang, Y.; Xiong, R.; Dai, Q. Residual highway convolutional neural networks for in-loop filtering in HEVC. IEEE Trans. Image Process. 2018, 27, 3827–3841.

- He, X.; Hu, Q.; Zhang, X.; Zhang, C.; Lin, W.; Han, X. Enhancing HEVC compressed videos with a partition-masked convolutional neural network. In Proceedings of the 2018 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018; pp. 216–220.

- Park, W.-S.; Kim, M. CNN-based in-loop filtering for coding efficiency improvement. In Proceedings of the 2016 IEEE 12th Image, Video, and Multidimensional Signal Processing Workshop (IVMSP), Bordeaux, France, 11–12 July 2016; pp. 1–5.

- Wang, Y.; Zhu, H.; Li, Y.; Chen, Z.; Liu, S. Dense Residual Convolutional Neural Network based In-Loop Filter for HEVC. In Proceedings of the 2018 IEEE Visual Communications and Image Processing (VCIP), Taichung, Taiwan, 9–12 December 2018; pp. 1–4.

- Yang, R.; Xu, M.; Wang, Z. Decoder-side HEVC quality enhancement with scalable convolutional neural network. In Proceedings of the 2017 IEEE International Conference on Multimedia and Expo (ICME), Hong Kong, China, 10–14 July 2017; pp. 817–822.

- Dong, C.; Deng, Y.; Change Loy, C.; Tang, X. Compression artifacts reduction by a deep convolutional network. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 576–584.

- Yu, K.; Dong, C.; Loy, C.C.; Tang, X. Deep convolution networks for compression artifacts reduction. arXiv 2016, arXiv:1608.02778.

- Svoboda, P.; Hradis, M.; Barina, D.; Zemcik, P. Compression artifacts removal using convolutional neural networks. arXiv 2016, arXiv:1605.00366.

- Cavigelli, L.; Hager, P.; Benini, L. CAS-CNN: A deep convolutional neural network for image compression artifact suppression. In Proceedings of the 2017 International Joint Conference on Neural Networks (IJCNN), Anchorage, AK, USA, 14–19 May 2017; pp. 752–759.

- Zhan, W.; He, X.; Xiong, S.; Ren, C.; Chen, H. Image deblocking via joint domain learning. J. Electron. Imaging 2018, 27, 033006.

- Zhang, K.; Zuo, W.; Chen, Y.; Meng, D.; Zhang, L. Beyond a Gaussian denoiser: Residual learning of deep CNN for image denoising. IEEE Trans. Image Process. 2017, 26, 3142–3155.

- Tai, Y.; Yang, J.; Liu, X.; Xu, C. MemNet: A persistent memory network for image restoration. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 4539–4547.

- Schiopu, I.; Munteanu, A. Deep Learning Post-Filtering Using Multi-Head Attention and Multiresolution Feature Fusion for Image and Intra-Video Quality Enhancement. Sensors 2022, 22, 1353.

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Jia, C.; Wang, S.; Zhang, X.; Wang, S.; Ma, S. Spatial-temporal residue network based in-loop filter for video coding. In Proceedings of the 2017 IEEE Visual Communications and Image Processing (VCIP), St. Petersburg, FL, USA, 10–13 December 2017; pp. 1–4.

- Yang, R.; Xu, M.; Wang, Z.; Li, T. Multi-frame quality enhancement for compressed video. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6664–6673.

- Kuo, T.-Y.; Wei, Y.-J.; Chao, C.-H. Restoration of Compressed Picture Based on Lightweight Convolutional Neural Network. In Proceedings of the 2019 International Symposium on Intelligent Signal Processing and Communication Systems (ISPACS), Taipei, Taiwan, 3–6 December 2019; pp. 1–2.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Yu, F.; Koltun, V. Multi-scale context aggregation by dilated convolutions. arXiv 2015, arXiv:1511.07122.

- Ballas, N.; Yao, L.; Pal, C.; Courville, A. Delving deeper into convolutional networks for learning video representations. arXiv 2015, arXiv:1511.06432.

- Guo, Q.; Yu, Z.; Wu, Y.; Liang, D.; Qin, H.; Yan, J. Dynamic recursive neural network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5147–5156.

- Jian, L.; Yang, X.; Liu, Z.; Jeon, G.; Gao, M.; Chisholm, D. SEDRFuse: A symmetric encoder–decoder with residual block network for infrared and visible image fusion. IEEE Trans. Instrum. Meas. 2020, 70, 1–15.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Identity mappings in deep residual networks. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 630–645.

- Qin, K.; Huang, W.; Zhang, T. Multitask deep label distribution learning for blood pressure prediction. Inf. Fusion 2023, 95, 426–445.

- Wang, P.; Chen, P.; Yuan, Y.; Liu, D.; Huang, Z.; Hou, X.; Cottrell, G. Understanding convolution for semantic segmentation. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, NV, USA, 12–15 March 2018; pp. 1451–1460.

- Arbelaez, P.; Maire, M.; Fowlkes, C.; Malik, J. Contour detection and hierarchical image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 33, 898–916.

- Agustsson, E.; Timofte, R. NTIRE 2017 challenge on single image super-resolution: Dataset and study. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 126–135.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Delving deep into rectifiers: Surpassing human-level performance on ImageNet classification. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1026–1034.

- Lu, X.; Wang, W.; Danelljan, M.; Zhou, T.; Shen, J.; Van Gool, L. Video object segmentation with episodic graph memory networks. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Part III; pp. 661–679.

- Zhang, X.; Zhou, X.; Lin, M.; Sun, J. ShuffleNet: An extremely efficient convolutional neural network for mobile devices. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6848–6856.

- Xiph.org. Xiph.org Video Test Media. Available online: https://media.xiph.org/video/derf/ (accessed on 20 December 2022).

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Yim, C.; Bovik, A.C. Quality assessment of deblocked images. IEEE Trans. Image Process. 2010, 20, 88–98.

- Sheikh, H.R.; Wang, Z.; Cormack, L.; Bovik, A.C. LIVE Image Quality Assessment Database. Available online: https://live.ece.utexas.edu/research/quality/subjective.htm (accessed on 20 December 2022).

- Sheikh, H.R.; Sabir, M.F.; Bovik, A.C. A statistical evaluation of recent full reference image quality assessment algorithms. IEEE Trans. Image Process. 2006, 15, 3440–3451.

- Bossen, F. Common HM test conditions and software reference Configurations. In Proceedings of the Joint Collaborative Team on Video Coding (JCT-VC) Meeting, San Jose, CA, USA, 11–20 July 2012. Report No. JCTVC-G1100.

- Bjontegaard, G. Calculation of average PSNR differences between RD-curves. Document VCEG-M33 ITU-T SG16/Q6. In Proceedings of the 13th Video Coding Experts Group (VCEG) Meeting, Austin, TX, USA, 2–4 April 2001.

- Zeng, K.; Zhao, T.; Rehman, A.; Wang, Z. Characterizing perceptual artifacts in compressed video streams. In Proceedings of the Human Vision and Electronic Imaging XIX, San Francisco, CA, USA, 3–6 February 2014; pp. 173–182.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).