A Model for Infrastructure Detection along Highways Based on Remote Sensing Images from UAVs

Abstract

1. Introduction

- An improved anchor-free multi-scale target detection method, MSA-CenterNet based on CenterNet [5], is proposed;

- To better detect various types of infrastructures of different sizes in UAV images, we introduce a multi-scale feature fusion module to enhance the feature extraction capability of the network model and obtain more contextual semantic information;

- Due to the high proportion of small targets, such as warning stakes, milestones, and traffic signs in the dataset, an attention mechanism is used to enrich the semantic information of the feature map while enhancing the feature representation capability;

- A high-resolution infrastructure dataset along the highway is obtained through processing such as UAV photography and filtering and labeling to provide basic training data.

2. Related Works

3. Proposed Method

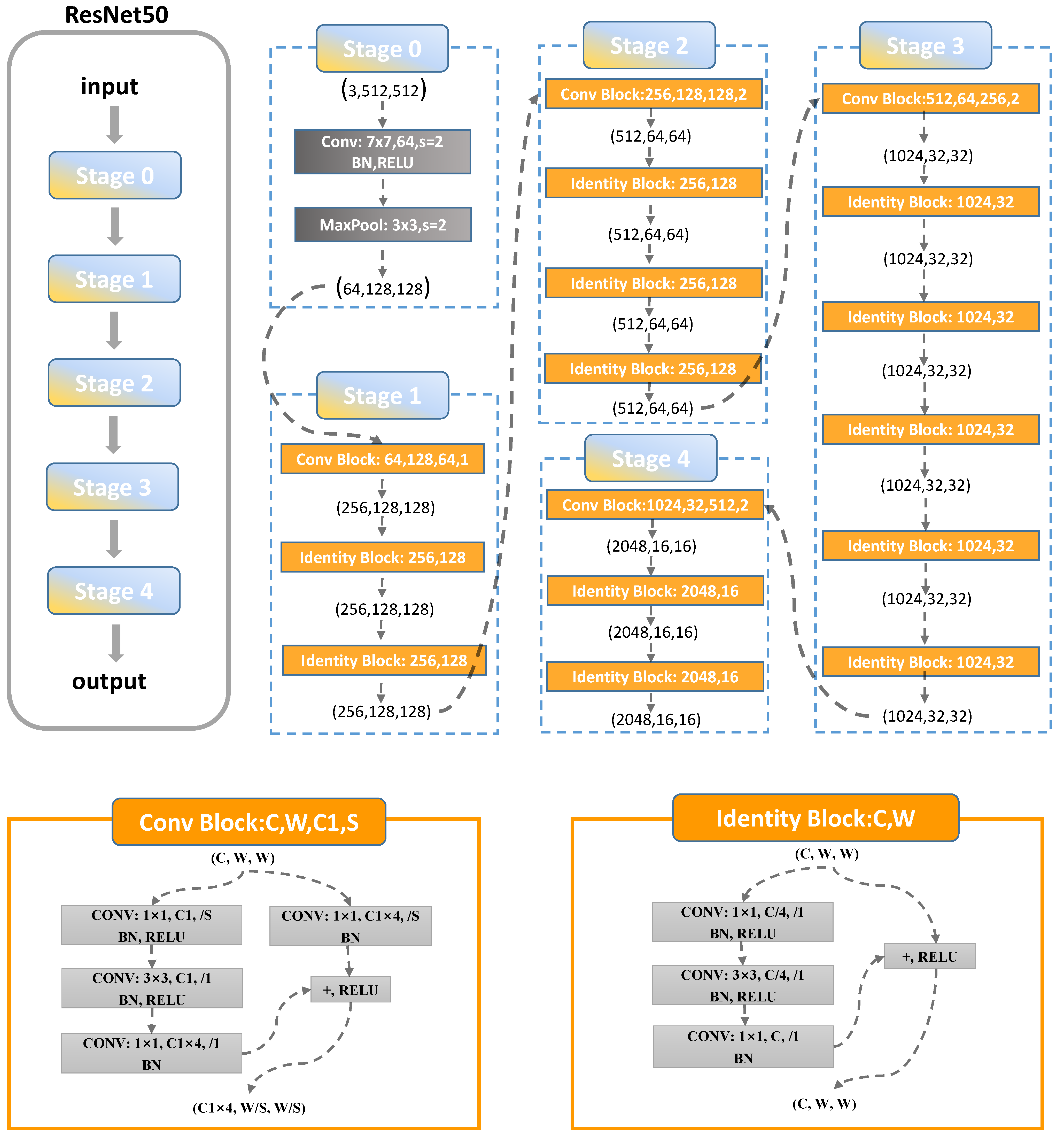

3.1. Backbone Network Overview

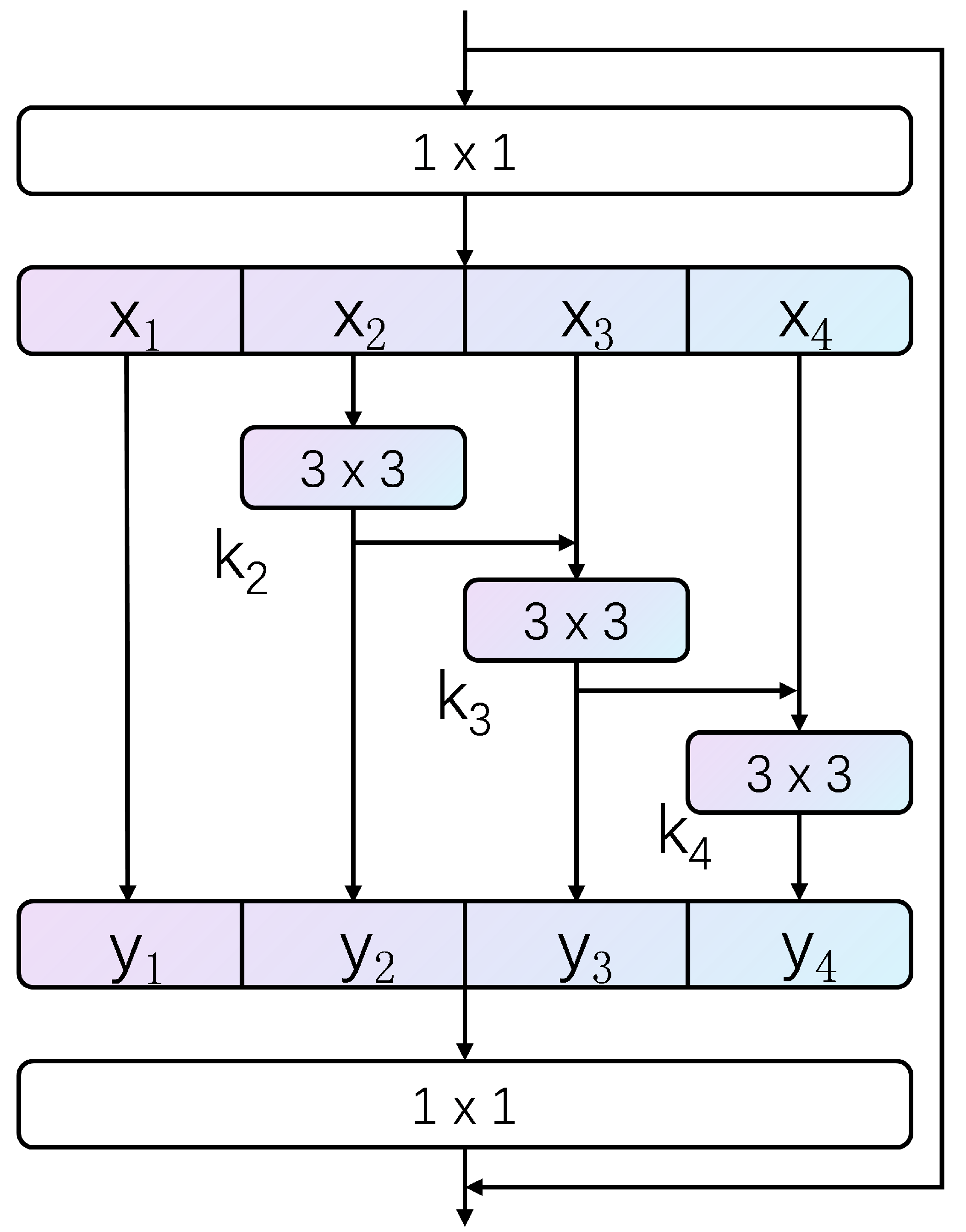

3.2. Multi-Scale Feature Fusion Module

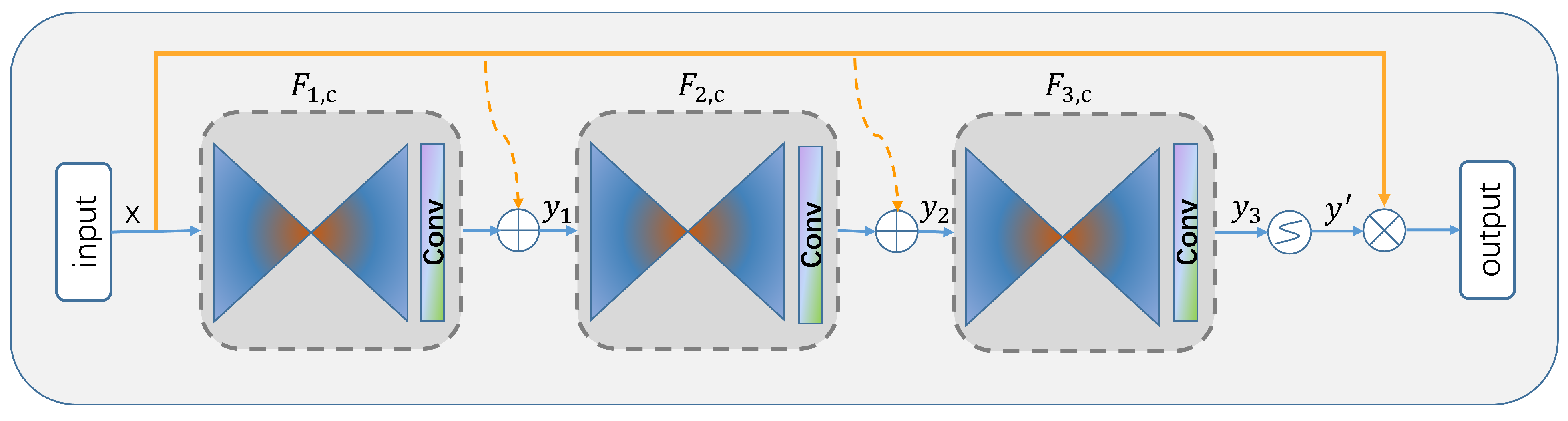

3.3. Inverted Residual Attention Module

3.4. Loss Function

4. Experiments Section

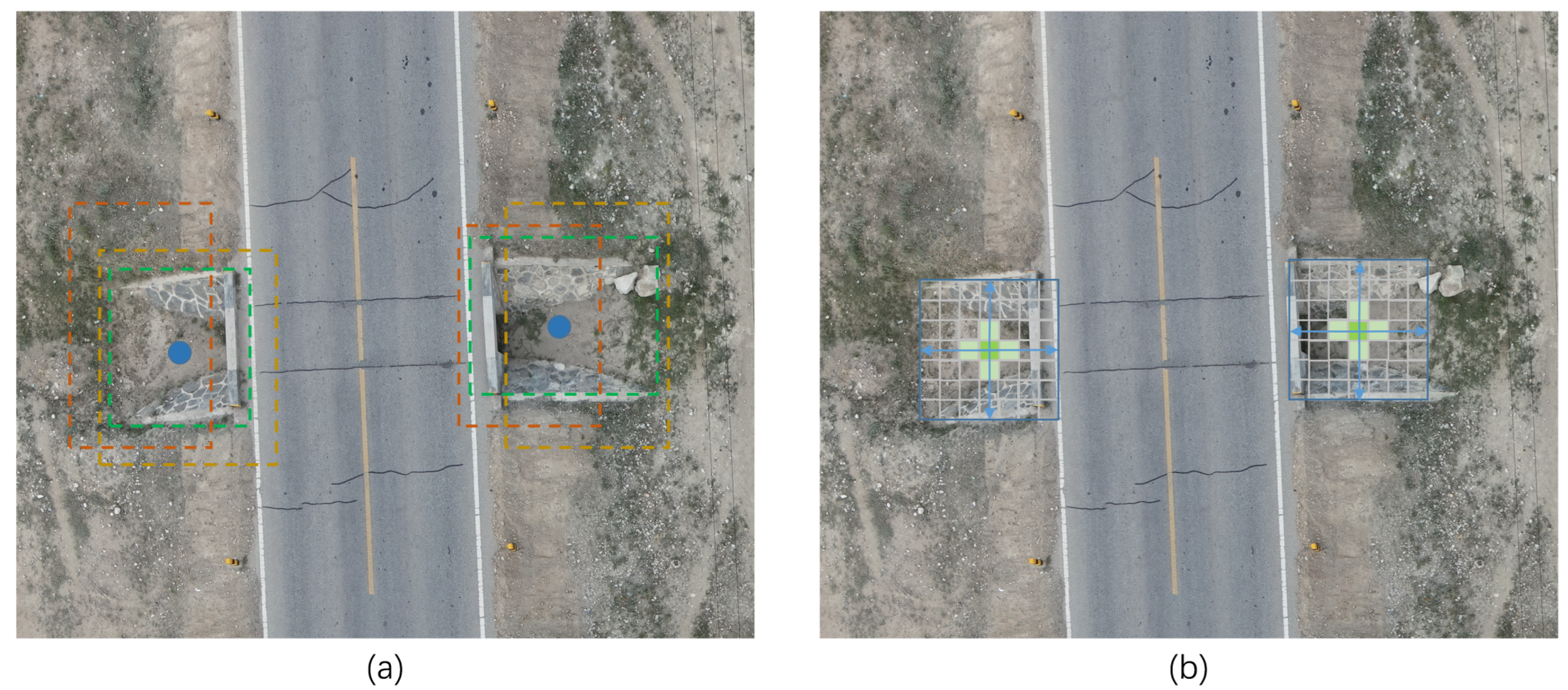

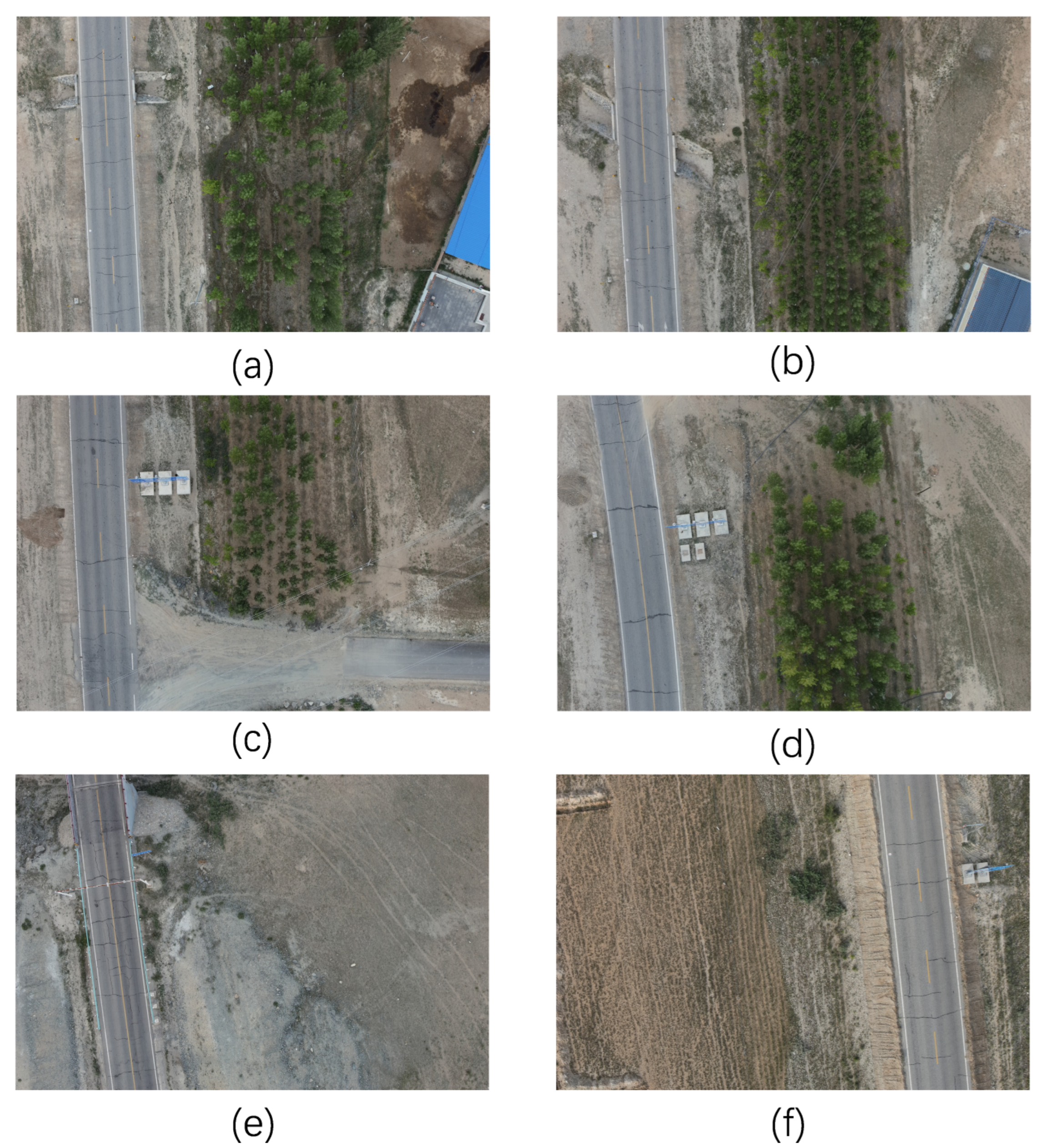

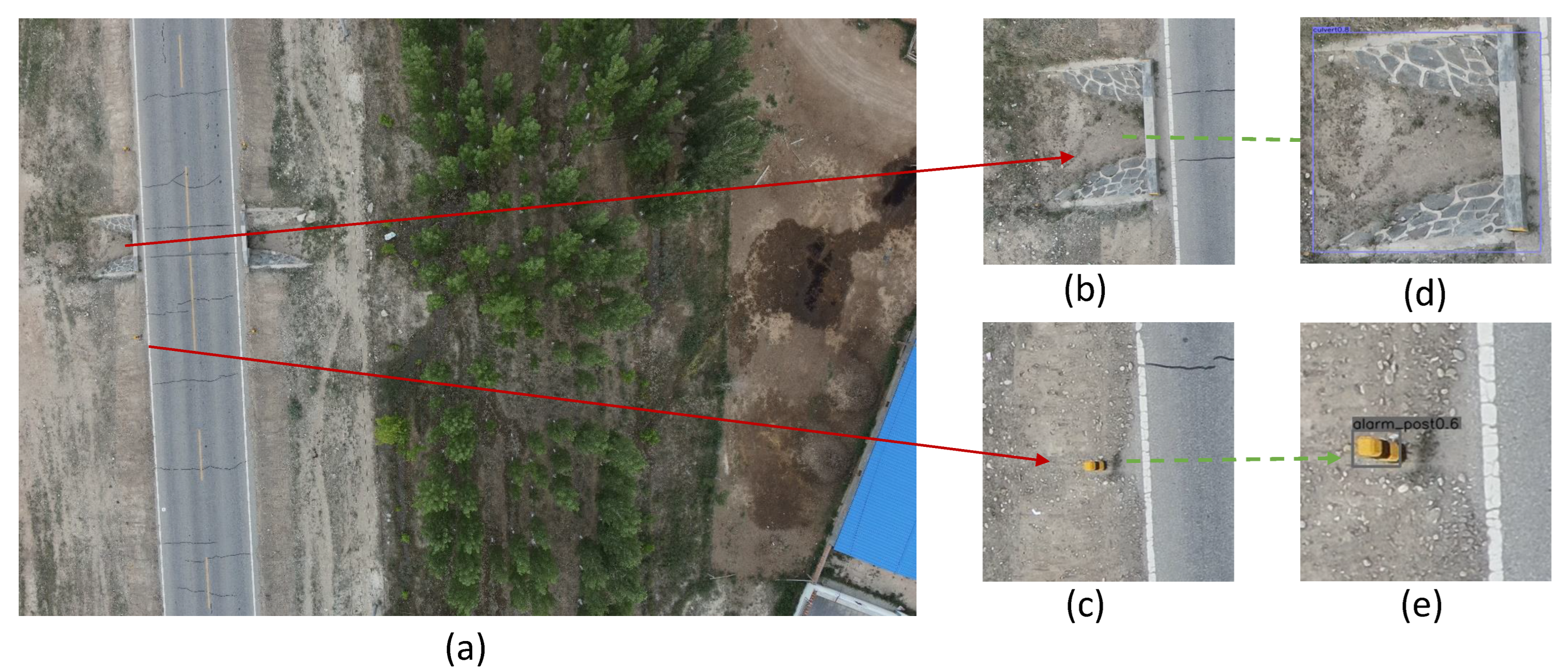

4.1. Infrastructure Datasets along Highways

4.2. Evaluation Metrics

4.3. Ablation Experiments

4.4. Comparative Experiments

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Wu, J.; Wang, X.; Dang, Y.; Lv, Z. Digital twins and artificial intelligence in transportation infrastructure: Classification, application, and future research directions. Comput. Electr. Eng. 2022, 101, 107983. [Google Scholar] [CrossRef]

- Macioszek, E. Changes in values of traffic volume-case study based on general traffic measurements in Opolskie Voivodeship (Poland). In Proceedings of the Directions of Development of Transport Networks and Traffic Engineering: 15th Scientific and Technical Conference “Transport Systems. Theory and Practice 2018”, Katowice, Poland, 17–19 September 2018; Selected Papers. Springer: Berlin/Heidelberg, Germany, 2019; pp. 66–76. [Google Scholar]

- Macioszek, E. Oversize cargo transport in road transport–problems and issues. Sci. J. Silesian Univ. Technol. Ser. Transp. 2020, 108, 133–140. [Google Scholar] [CrossRef]

- Gallo, I.; Rehman, A.U.; Dehkordi, R.H.; Landro, N.; La Grassa, R.; Boschetti, M. Deep Object Detection of Crop Weeds: Performance of YOLOv7 on a Real Case Dataset from UAV Images. Remote Sens. 2023, 15, 539. [Google Scholar] [CrossRef]

- Zhou, X.; Wang, D.; Krähenbühl, P. Objects as points. arXiv 2019, arXiv:1904.07850. [Google Scholar]

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110. [Google Scholar] [CrossRef]

- Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; Volume 1, pp. 886–893. [Google Scholar]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90. [Google Scholar] [CrossRef]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788. [Google Scholar]

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6517–6525. [Google Scholar] [CrossRef]

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767. [Google Scholar] [CrossRef]

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934. [Google Scholar] [CrossRef]

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. arXiv 2016, arXiv:1512.02325. [Google Scholar] [CrossRef]

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. arXiv 2018, arXiv:1708.02002. [Google Scholar] [CrossRef]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Sydney, NSW, Australia, 2015; Volume 28. [Google Scholar]

- Cai, Z.; Vasconcelos, N. Cascade R-CNN: Delving Into High Quality Object Detection. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 6154–6162. [Google Scholar] [CrossRef]

- Jeziorek, K.; Kryjak, T. Traffic sign detection and recognition using event camera image reconstruction. arXiv 2022, arXiv:2212.08387. [Google Scholar] [CrossRef]

- Manzari, O.N.; Boudesh, A.; Shokouhi, S.B. Pyramid Transformer for Traffic Sign Detection. arXiv 2022, arXiv:2207.06067. [Google Scholar] [CrossRef]

- Mehta, D.; Mehta, A.; Narang, P.; Chamola, V.; Zeadally, S. Deep Learning Enhanced UAV Imagery for Critical Infrastructure Protection. IEEE Internet Things Mag. 2022, 5, 30–34. [Google Scholar] [CrossRef]

- Velusamy, P.; Rajendran, S.; Mahendran, R.K.; Naseer, S.; Shafiq, M.; Choi, J.G. Unmanned Aerial Vehicles (UAV) in Precision Agriculture: Applications and Challenges. Energies 2022, 15, 217. [Google Scholar] [CrossRef]

- Lygouras, E.; Santavas, N.; Taitzoglou, A.; Tarchanidis, K.; Mitropoulos, A.; Gasteratos, A. Unsupervised Human Detection with an Embedded Vision System on a Fully Autonomous UAV for Search and Rescue Operations. Sensors 2019, 19, 3542. [Google Scholar] [CrossRef] [PubMed]

- Almagbile, A. Estimation of crowd density from UAVs images based on corner detection procedures and clustering analysis. Geo-Spat. Inf. Sci. 2019, 22, 23–34. [Google Scholar] [CrossRef]

- Krajewski, R.; Bock, J.; Kloeker, L.; Eckstein, L. The highD Dataset: A Drone Dataset of Naturalistic Vehicle Trajectories on German Highways for Validation of Highly Automated Driving Systems. In Proceedings of the 2018 21st International Conference on Intelligent Transportation Systems (ITSC), Maui, HI, USA, 4–7 November 2018; pp. 2118–2125. [Google Scholar] [CrossRef]

- Zhu, P.; Wen, L.; Bian, X.; Ling, H.; Hu, Q. Vision Meets Drones: A Challenge. arXiv 2018, arXiv:1804.07437. [Google Scholar] [CrossRef]

- Vaddi, S.; Kumar, C.; Jannesari, A. Efficient Object Detection Model for Real-Time UAV Applications. arXiv 2019, arXiv:1906.00786. [Google Scholar] [CrossRef]

- Tang, T.; Zhou, S.; Deng, Z.; Zou, H.; Lei, L. Vehicle Detection in Aerial Images Based on Region Convolutional Neural Networks and Hard Negative Example Mining. Sensors 2017, 17, 336. [Google Scholar] [CrossRef]

- Duan, T.; Sang, L.; Hu, P.; Liu, R.; Wang, L. Panoramic reconstruction of central green belt of different levels of highway based on UAV platform. In Proceedings of the 2019 5th International Conference on Transportation Information and Safety (ICTIS), Liverpool, UK, 14–17 July 2019; pp. 229–235. [Google Scholar] [CrossRef]

- Li, Z.; Zhang, B. Application Study of UAV and IOT Technology in Intelligent Detection of Bridge Engineering. In Proceedings of the 2022 International Conference on Machine Learning, Cloud Computing and Intelligent Mining (MLCCIM), Xiamen, China, 5–7 August 2022; pp. 13–18. [Google Scholar] [CrossRef]

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. arXiv 2017, arXiv:1612.03144. [Google Scholar] [CrossRef]

- Gao, S.H.; Cheng, M.M.; Zhao, K.; Zhang, X.Y.; Yang, M.H.; Torr, P. Res2Net: A New Multi-Scale Backbone Architecture. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 43, 652–662. [Google Scholar] [CrossRef] [PubMed]

- Wang, F.; Jiang, M.; Qian, C.; Yang, S.; Li, C.; Zhang, H.; Wang, X.; Tang, X. Residual Attention Network for Image Classification. arXiv 2017, arXiv:1704.06904. [Google Scholar] [CrossRef]

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. arXiv 2018, arXiv:1807.06521. [Google Scholar] [CrossRef]

- Tzutalin. LabelImg. Available online: https://github.com/tzutalin/labelImg (accessed on 4 April 2023).

| Dataset | Pictures | Culverts | Alarm Posts | Traffic Signs | Milestones | Isolated Gate | Kerb Sign | Portal Frame | Monitor |

|---|---|---|---|---|---|---|---|---|---|

| Training set | 1200 | 1875 | 5766 | 970 | 418 | 533 | 457 | 472 | 389 |

| Validation set | 150 | 158 | 724 | 135 | 41 | 83 | 62 | 50 | 46 |

| Test set | 150 | 293 | 562 | 78 | 72 | 56 | 55 | 65 | 51 |

| Total | 1500 | 2326 | 7052 | 1183 | 531 | 672 | 574 | 587 | 486 |

| Positive | Negative | |

|---|---|---|

| True | TP (forecast as a positive example; actual as a positive example) | TN (forecast as a negative example; actual as a negative example) |

| False | FP (forecast as a positive example; actual as a negative example) | FN (forecast as a negative example; actual as a positive example) |

| Model | Precision/% | Recall/% | mAP/% |

|---|---|---|---|

| Baseline | 81.5 | 82.3 | 83.6 |

| + ResNet50 | 81.8 | 83.6 | 84.2 |

| + ResNet50 + Feature_Fusion | 86.7 | 84.3 | 85.1 |

| + Attention | 84.2 | 88.9 | 84.3 |

| + ResNet50 + Attention | 87.4 | 88.1 | 86.3 |

| + ResNet50 + Feature_Fusion + Attention | 89.2 | 90.6 | 86.7 |

| Models | CL | AP | TS | IG | MS | KS | PF | MO | mAP |

|---|---|---|---|---|---|---|---|---|---|

| SSD | 81.9 | 65.1 | 78.6 | 63.4 | 69.5 | 73.1 | 78.2 | 71.4 | 74.9 |

| Faster R-CNN | 83.5 | 72.8 | 82.3 | 64.7 | 82.2 | 75.5 | 76.5 | 72.7 | 77.4 |

| RetinaNet | 86.7 | 64.6 | 81.4 | 65.6 | 71.6 | 73.7 | 78.8 | 70.6 | 78.3 |

| YOLOv5 | 90.4 | 79.3 | 84.8 | 73.7 | 83.1 | 74.3 | 84.3 | 73.8 | 82.6 |

| Ours | 90.7 | 86.5 | 87.7 | 68.3 | 89.6 | 80.2 | 78.5 | 76.1 | 86.7 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Jiang, X.; Cui, Q.; Wang, C.; Wang, F.; Zhao, Y.; Hou, Y.; Zhuang, R.; Mei, Y.; Shi, G. A Model for Infrastructure Detection along Highways Based on Remote Sensing Images from UAVs. Sensors 2023, 23, 3847. https://doi.org/10.3390/s23083847

Jiang X, Cui Q, Wang C, Wang F, Zhao Y, Hou Y, Zhuang R, Mei Y, Shi G. A Model for Infrastructure Detection along Highways Based on Remote Sensing Images from UAVs. Sensors. 2023; 23(8):3847. https://doi.org/10.3390/s23083847

Chicago/Turabian StyleJiang, Xian, Qing Cui, Chongguo Wang, Fan Wang, Yingxiang Zhao, Yongjie Hou, Rujun Zhuang, Yunfei Mei, and Gang Shi. 2023. "A Model for Infrastructure Detection along Highways Based on Remote Sensing Images from UAVs" Sensors 23, no. 8: 3847. https://doi.org/10.3390/s23083847

APA StyleJiang, X., Cui, Q., Wang, C., Wang, F., Zhao, Y., Hou, Y., Zhuang, R., Mei, Y., & Shi, G. (2023). A Model for Infrastructure Detection along Highways Based on Remote Sensing Images from UAVs. Sensors, 23(8), 3847. https://doi.org/10.3390/s23083847