Abstract

With the changes in human work and lifestyle, the incidence of cervical spondylosis is increasing substantially, especially for adolescents. Cervical spine exercises are an important means to prevent and rehabilitate cervical spine diseases, but no mature unmanned evaluating and monitoring system for cervical spine rehabilitation training has been proposed. Patients often lack the guidance of a physician and are at risk of injury during the exercise process. In this paper, we first propose a cervical spine exercise assessment method based on a multi-task computer vision algorithm, which can replace physicians to guide patients to perform rehabilitation exercises and evaluations. The model based on the Mediapipe framework is set up to construct a face mesh and extract features to calculate the head pose angles in 3-DOF (three degrees of freedom). Then, the sequential angular velocity in 3-DOF is calculated based on the angle data acquired by the computer vision algorithm mentioned above. After that, the cervical vertebra rehabilitation evaluation system and index parameters are analyzed by data acquisition and experimental analysis of cervical vertebra exercises. A privacy encryption algorithm combining YOLOv5 and mosaic noise mixing with head posture information is proposed to protect the privacy of the patient’s face. The results show that our algorithm has good repeatability and can effectively reflect the health status of the patient’s cervical spine.

1. Introduction

Cervical spondylosis is ranked as one of the most common pain problems, especially in Asia. According to [1], China had a pain incidence ratio of over 30% prior to the year 2016. Nearly 150 million people in China suffer from cervical spondylosis, and figures showed a gradual trend towards a younger average age. Of all the groups that develop cervical spondylosis, the adolescent group that suffers from it deserves our special attention [2,3,4,5,6]. According to medical research, active exercise of the neck, shoulder, and back muscles can enhance muscle strength, reinforce the normal physiological curvature of the cervical spine, increase the stability of the biomechanical structure of the cervical spine, and promote blood and lymphatic circulation, which is beneficial to the prevention and health recovery of cervical spondylosis [7]. However, due to the diverse types of cervical spondylosis and complex pathogenesis, such as cervical osteoarthritis, cervical disc disease, cervical stenosis, vertebral fracture and malignancies, the rehabilitation effects of the intensity and frequency of cervical spine rehabilitation exercises on different types of cervical spine diseases are not clear. This is limited by key issues such as the absence of cervical spine rehabilitation measurement methods and the lack of big data on the rehabilitation process. Moreover, in traditional medical programs, in the face of cervical spondylosis, medical experts are often required to manually guide patients through rehabilitation training, manually assess the recovery status of the patient’s cervical spine based on experience, and make adjustments to the rehabilitation training program accordingly, in order to enable patients with cervical spondylosis to fully recover. However, traditional medical rehabilitation training programs require professional guidance at all times, with low unmanned levels and high labor costs. To this end, this paper proposes a cervical vertebra rehabilitation evaluation method based on a computer vision algorithm using artificial intelligence technology. In addition, a cervical spine exercise training dataset is constructed for algorithm validation to provide artificial intelligence technology support for long-term tracking of patient health conditions and efficacious assessment of physical rehabilitation exercise.

In particular, head pose estimation (HPE) is an important part of the whole evaluation process, which is the task of obtaining a 3D head pose deflection angle parameter through the analysis of a 2D digital image. The methods of head pose estimation can be broadly classified into two types based on whether they depend on landmarks.

Landmark-dependent methods: The core idea of this type of methods is to extract the landmarks on a human face first and then substitute these landmarks into the formula to calculate the head pose. In recent years, with the rapid development of convolutional neural networks (CNN), many CNN-based face landmark extraction methods have been proposed. For example, Liu et al. proposed a head pose estimation approach including two phases: the 3D face reconstruction and the 3D–2D matching keypoints. They first used CNNs, jointly optimized by an asymmetric Euclidean loss and a keypoint loss, to reconstruct a personalized 3D face model from the 2D image, and then used an iterative optimization algorithm to match the landmarks on the 2D image and the 3D face model reconstructed before under the constraint of perspective transformation [8]. Ranjan, R. et al. used a separate CNN to fuse the intermediate layers of a deep CNN and used a multi-task learning algorithm to operate on the fused features. The method was called HyperFace, performing the multiple tasks of face detection, landmarks localization, pose estimation and gender recognition at the same time, and outperformed many other competitive algorithms in each task [9]. Guo et al. proposed a regression framework called 3DDFA-V2, which utilizes a meta-joint optimization strategy to enhance speed and guarantee accuracy simultaneously [10]. However, CNNs often have difficulty solving the problem when the image structure does not conform to translation invariance. The graph convolutional neural network (GCN), on the other hand, can sometimes provide better results when dealing with graph structures, such as topological graphs made by connecting feature points of faces. Xin viewed head pose estimation as a graph regression problem. He built a landmark-connection graph and designed a novel GCN architecture to stimulate a complex nonlinear mapping relationship between the angle parameters of head pose and the graph typologies and overcame the unstable landmark detection with a joint Edge-Vertex Attention (EVA) mechanism [11]. Some methods regard head pose estimation as an auxiliary task, but also achieve good performance. Kumar, A. et al. proposed KEPLER to solve the unconstrained face alignment problem, and it also provides estimation of the head pose in three dimensions as a by-product [12].

Landmark-independent methods: This type of method has the advantage that the task of head pose estimation does not rely on the quality of the landmarks, which enhances robustness in a sense. Ruiz, N. et al. designed a loss including a pose bin classification and a regression component for each head pose Euler angle to compose a multi-loss network. When combined with data augmentation, their method has a good performance in estimating head poses in low-resolution images [13]. Huang et al. presented an estimation method using two-stage ensembles with average top-k regression to address the fragility of regression-based methods in estimating extreme poses [14]. Cao, Z. et al. used three vectors in a 9D rotation matrix to estimate head pose and adopted the mean absolute error of vectors (MAEV) to assess the performance [15]. Dhingra, N. proposed a light network LwPosr based on fine-grained regression using DSCs and transformer encoders, which can predict head poses efficiently with a very small number of parameters and memory [16]. Thai, C. et al. trained teacher models on the synthesis dataset to get pseudo labels of head poses. Then, they used a network based on ResNet18 to perform knowledge distillation and train the head pose estimation model with the ensemble of the pseudo labels [17].

Based on what has been mentioned above, landmark-independent methods perform slightly better than landmark-dependent methods in terms of accuracy of head pose estimation, but the network structures of landmark-independent methods are usually so heavy and complex that they are unsuitable for running on mobile and embedded platforms. In contrast, landmark-dependent methods run faster and have a smaller computational scale, which is more in line with the requirements for a lightweight estimation system in this task.

Head pose estimation has a wide range of applications in research areas such as driver attention detection [18,19], gaze estimation [20], iris recognition [21], face frontalization [22], best frame selection [23], dangerous behavior detection [24], and so on. However, although HPE has wide application prospects in the intersection of medicine and artificial intelligence, there are still few studies on the application of head pose estimation in the medical field, especially in the field of cervical spine health condition diagnosis and rehabilitation training guidance.

Contributions: To fill the vacancy in the area of an unmanned evaluating and monitoring system for cervical spine rehabilitation training, in this paper, we proposed a complete cervical spine health condition assessment system with a face encryption algorithm, and constructed a cervical exercise dataset which can be utilized in related studies. We adopted Mediapipe to acquire the necessary landmarks on human faces for the calculation of Euler angles. Mediapipe Face Mesh offers high-precision real-time detection of 468 3D face landmarks. Its lightweight architecture, together with GPU acceleration, enables the model to run on mobile devices with only a single camera input [25]. We estimated head movements based on certain landmarks and developed a correlation model between the head pose estimation algorithm and cervical spine rehabilitation. Moreover, we proposed a face encryption algorithm with controllable grain size to solve the privacy problem of patients in rehabilitation training.

The remaining chapters of this paper are as follows: Section 2 reviews related work and mentions some drawbacks of current methods. Section 3 summarizes the whole architecture of the evaluation method and analyzes each component in detail. Section 4 tests the repeatability of the head pose estimation algorithm, introduces the construction of the cervical spine operation dataset, analyzes the scoring results, performs an ablation study, and discusses the mosaic encryption effect. Finally, there is a conclusion summarizing the overall estimation process and presenting an outlook on future research directions.

2. Related Work

With the gradual maturation of computer vision technology, the application of computer vision in the medical field to improve the efficiency and accuracy of diagnosis has become a hot research topic in the academic community. However, little work has been done on the intersection of cervical spine health assessment and its rehabilitation guidance with computer vision, and the task focus and methods used in these works are different. For example, Xiong, J. et al. used computer vision and AR technology for acupuncture point selection in acupuncture therapy for cervical spondylosis and achieved an effective rate of 95.13% [26]. Murugaviswanathan, R. et al. proposed a method to automatically select points and calculate curvature for lateral views of the spine in videos using OpenCV [27]. This method can be transferred into the estimation of cervical vertebra. However, since in the actual scene, both the spine and the cervical vertebrae are wrapped with skin and muscle, rather than a thin curve exposed as in the text, this can cause great interference to this method of point selection and evaluation, making the results inaccurate. Jiang, J. addressed this problem by extracting the left edge after image enhancement of X-ray images of the cervical spine and analyzing the patient’s cervical spine health [28]. Wang, Y. et al. adopted an improved Yolov3 algorithm to extract the cervical vertebrae in infrared thermal images [29]. These two methods mentioned above have improved the precision of cervical spine extraction; however, these methods can only work after the patient’s cervical spine condition has been dissected using other cumbersome techniques (such as X-ray and thermal imaging), which is inconvenient and complicated. They can only process a single static image at one time and do not take into account a full range of factors when assessing the health of a patient’s cervical spine. These problems can all be solved in our method.

3. Methodologies

3.1. Model Architecture and Design

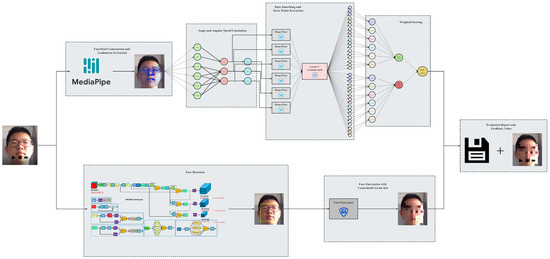

The proposed evaluation model can calculate the head posture and other information by tracking the key points of the face in the input cervical spine exercise video and calculate the cervical spine score accordingly to generate a cervical spine health status assessment report; at the same time, it generates an assessment video with mosaic-processed face and real-time head posture data attached, which aims to provide scientific spine health status assessment and rehabilitation guidance while protecting the privacy of patients. The model architecture is shown below in Figure 1.

Figure 1.

Model architecture.

The specific working steps are as follows:

Step 1: Face Landmarks Extraction

The landmark points on the human face are first extracted using Mediapipe Face Mesh and are then inputted into the angle calculation module.

Step 2: Euler Angle Calculation

The formula that provides an accurate angle estimate with the least number of landmarks, is easy to understand, and reduces the computational overhead, is the one we choose for calculating the head pose based on coordinates.

Step 3: Face Encryption

YOLOv5 is used to detect and localize human faces, and grain-size-adjustable mosaics are put on the detected area to protect patients’ privacy.

Step 4: Feedback Video Synthesis and Evaluation Report Generation

Continuous head pose information is inputted in temporal sequence into the scoring system to get the final evaluation report, and a feedback video with encrypted face and head pose data attached is generated.

3.2. Scenario-Driven Improved YOLO Algorithm

You Only Look Once (YOLO) is a widely used algorithm, especially in the field of target detection. As its name suggests, the most important feature of YOLO is that it can output detection results at once after inputting the images to be predicted into the network, truly realizing the task of end-to-end target detection. Its lightweight network, extremely fast detection speed and excellent detection results make it widely used in many target detection-related scenarios. However, YOLO was not perfect in the beginning and many endeavors have been made by numerous scholars to improve YOLO’s performance.

The first version of YOLO is based on GoogLeNet, including 24 convolution layers and two fully connected layers [30]. It can perceive global information well while excluding the interference of background information, but there are still some problems, such as difficulty in detecting overlapping objects, inability to solve the multi-label problem, difficulty in detecting small objects, single aspect ratio of preselected boxes, etc. YOLOv2 uses Darknet-19 instead of GoogLeNet, adds batch normalization layers after all convolutional layers, adds 10 epochs of high-resolution fine-tuning during the training process, uses multi-label prediction, uses a K-means clustering algorithm to improve the size selection of anchor boxes, and adds a passthrough layer to increase detection accuracy [31]. YOLOv3 extends Darknet-19 to Darknet-53, using a residual network-like structure, and adopts multi-scale training. Another highlight in YOLOv3 is the strategy of ignoring the bounding boxes whose values of IOU are greater than a certain threshold, which is beneficial for improving the accuracy of predictions [32]. YOLOv4 replaces Darknet-53 with CSPDarknet-53, changes the activation function from ReLU to Mish, uses Dropblock to prevent overfitting, uses Mosaic data enhancement, cmBN, SAT self-adversarial training and other improvements on the input side, introduces an SSP module and FPN+PAN structure in the neck part, and changes IOU in the loss function to CIOU [33]. The biggest differences between YOLOX and the previous YOLO models are the application of the decoupled head, the adjustment in the process of data augmentation, and the deletion of anchor boxes (anchor-free) [34]. Recently, YOLOv6 has been proposed by Meituan’s team. It also uses the anchor-free approach and changes the backbone to EfficientRep, achieving great improvements in both detection accuracy and speed, especially with its impressive performance in small object detection in dense environments [35]. However, YOLOv6 is weaker in stability and less customizable in deployment compared with YOLOv5.

In the construction of our face encryption model, since the location and contour of the face in the video to be processed by the model are very clear and moderate in size, it is not necessary to pursue the ultra-high performance of the algorithm in the field of small target detection or other specific fields, but rather to focus on achieving a good balance between accuracy, processing speed, stability, flexibility, and ease of deployment. Taking the above factors into account, we decided that YOLOv5, with its high accuracy, fast detection speed, and customizable model, is the most suitable algorithm for our task.

YOLOv5 has four different networks, and the width and depth of the network can be adjusted according to the parameter configuration in the .yaml file [36]. On the input side, data augmentation, such as random mix-up, random scaling, and random cropping (similar to YOLOv4), is adopted for enhancing the generalization of the model; the process of calculating the initial value of the anchor box is changed to be embedded in the training code, and the method of pixel complementation of non-standard-size images is improved to reduce redundant information and improve the inference speed. In the backbone, the Focus structure is added, and the network structure of CSPDarknet + SPP is retained and improved. In the neck part, the structure of FPN + PAN is retained, and some ordinary convolutional layers in YOLOv4 are replaced by the CSP2 structure to strengthen the ability of network feature fusion. As is shown in Equation (1) (proposed in [37,38]), the loss function is CIoU_Loss, which takes into account the overlap area, aspect ratio, and centroid distance, avoiding the defects of IoU_Loss that cannot distinguish two boxes with the same intersection area but different intersection ways and gradient disappearance in the non-overlapping state.

Many other tricks are also used, such as abandoning the more complex activation function Mish and replacing it with LeakyReLU and Sigmoid to speed up inference; adopting a warm-up strategy when adjusting the learning rate to avoid using a larger learning rate at the beginning of training, which helps avoid early overfitting and ensure the stability of the model; and, as shown in Equation (2) (proposed in [38]), changing IoU_nms to DIoU_nms and adopting a weighting approach, which is conducive to more accurate detection of obscured or overlapping targets.

YOLOv5 is slightly weaker than YOLOv4 in terms of accuracy, but much stronger in terms of flexibility and prediction speed, and has a huge advantage in the rapid deployment of models.

3.3. Head Movement Estimation Algorithm

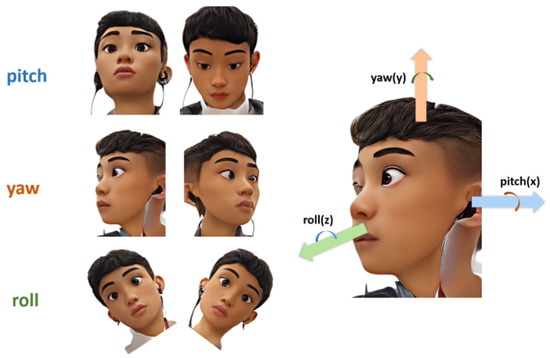

As is shown in Figure 2, three degrees of freedom can be used to describe the movement of the head: pitch, yaw, and roll.

Figure 2.

Three degrees of freedom to describe the movement of the head.

Pitch represents the head-up and head-down movements, and the main muscles used are longus colli, longus capitis, infrahyoids, splenius capitis, seminispinalis capitis, suboccipitals, and the trapezius muscles. Yaw represents the movement of turning the head to the left or to the right, and the main muscles used are the levator scapula and suboccipitals. Roll represents the movement of tilting the head to one side so that the ear on that side gets closer to the shoulder on that side, and the main muscles used are the scalene muscles [39].

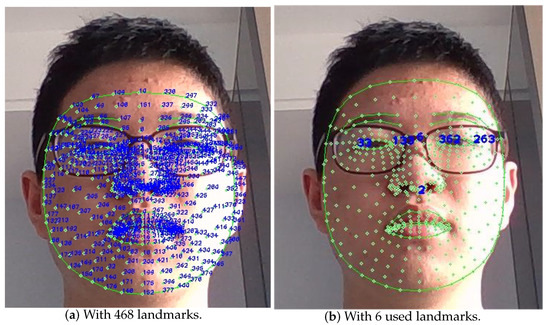

In this paper, feature extraction is performed based on the Mediapipe face detection algorithm and calculates the angles of head poses in three degrees of freedom. The formulas are shown below:

where , , and is (lm is short for landmark)

The formulas in Equations (3) and (4) appeared in [40], although the expression of was incorrect. In this paper, the error is corrected and these formulas are further derived based on the selection of specific face feature points in our experiments. In this algorithm, as is shown in Figure 3, the face mesh with 468 landmarks in Mediapipe is used [41]. Six important landmarks are chosen to calculate angles in three dimensions, and the indices of these landmarks are as follows: 2 (bottom point of the nose), 6 (top point of the nose), 33 (left corner of the left eye), 133 (right corner of the left eye), 263 (right corner of the right eye), and 362 (left corner of the right eye). We can take the midpoint of the left corner and the right corner as the coordinates of the eye, and then, the tangent value of three angles can be calculated according to the coordinates of the left eye, the right eye, and two points on the nose.

Figure 3.

The face mesh landmarks.

3.4. Face Encryption Model

The mosaic is performed by dividing the selected face area into several small squares, each of which is unified into the color of the pixel in the upper left corner of the small square. The process of mosaicking is irreversible due to the loss of information during the encryption process, which effectively protects the patient’s privacy. Equation (5) for the pixel points at each location in the face region after being mosaicked is as follows.

4. Experiment

4.1. Construction of Cervical Spine Operation Dataset

A complete set of cervical spine exercise movements was designed, including axial rotation, lateral bending, flexion, and extension in each direction three times.

To evaluate the method on videos of cervical vertebra rehabilitation, continuous videos were collected for two steps.

First, the cervical spine exercise of a volunteer was recorded as a standard video to test the repeatability of this algorithm. Then, another ten volunteers (5 males and 5 females with an average age of 16) were invited to do this exercise and the validation dataset for the scoring system was constructed.

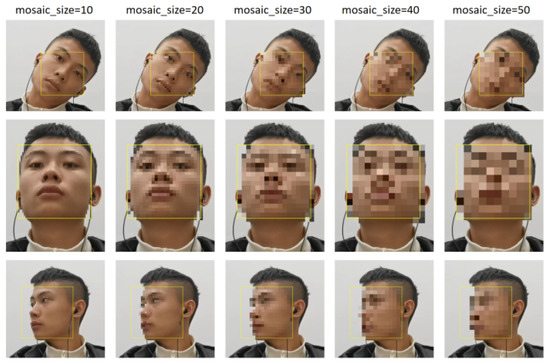

All the volunteers’ movements were recorded by the OV9734 azurewave camera on a laptop at 30 fps, and Figure 4 shows six movements of some volunteers.

Figure 4.

Different angles of the mosaic-processed faces.

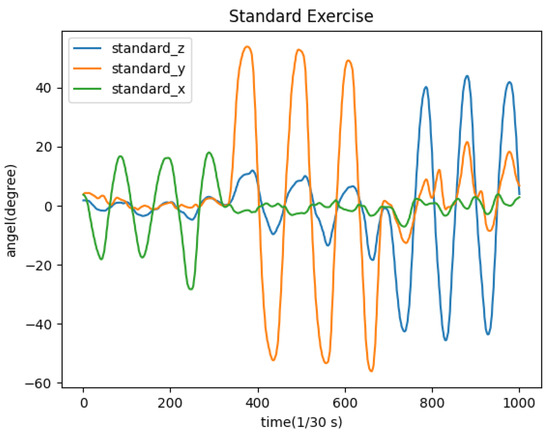

4.2. Analysis of Standard Cervical Spine Exercises

Assuming the cervical spine exercise in one of the videos represents standard movements, the relationship between the standard angle and time is shown in Figure 5.

Figure 5.

Changes of angles in the standard video.

The same video is tested 10 times to evaluate the repeatability of the algorithm and the consistency of the angles calculated in three directions. We chose the standard deviation of the values of angles in each frame in 10 experiments as the measure of the repeatability of the algorithm. In this specific case, n = 10 in Equation (6).

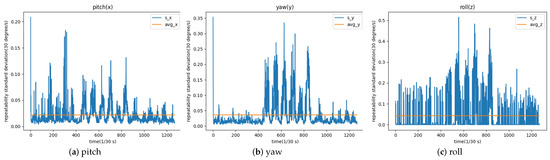

The results are as shown in Figure 6.

Figure 6.

Standard deviation of angles in three directions.

Consistency test statistics charts are generated in three dimensions. The horizontal coordinate in each graph is the number of video frames, and the vertical coordinate is the standard deviation of the deflection angle of each frame in the video in one dimension after ten tests. The orange horizontal line is the average of the standard deviation of all frames, which can reflect the repeatability of our head pose estimation algorithm in that dimension. The average of the standard deviation in pitch, yaw, and roll is 0.0217, 0.0362, and 0.0432, which are all relatively low, and that means the algorithm has good repeatability.

5. Results and Discussion

In this section, a cervical spine condition scoring system is given and 10 volunteers (five males and five females) were tested and analyzed for their cervical spine condition using this system.

5.1. Scoring System

The range and speed of the head motion are two significant scoring indices of the health condition of the cervical spine. Calculation of head motion amplitude can detect problems such as joint misalignment and abnormal cervical curvature, while calculation of head motion speed can reflect the flexibility of the cervical spine and the degree of stiffness of related muscle groups. Many methods of assessing cervical spine health conditions only consider the range of head motion in all directions [42,43,44], that is, only the stationary state in which the patient performs an action is considered. This type of assessment, which is valid most of the time, sometimes misses some information. For example, a person with cervical spondylosis may be able to extend his neck slowly to the same angle as a healthy person, but this does not indicate that his cervical spine is as healthy as a healthy person’s cervical spine. If he stretches quickly, he may still feel pain, whereas a healthy person would not. Therefore, it is necessary to include the speed of head movements in the cervical spine health assessment system to evaluate the condition of the patient’s cervical spine under dynamic movement. Thus, the following scoring formula (7) is proposed:

where means the standard head motion amplitude when doing flexion and extension, means the standard head motion amplitude when doing axial rotation in left and right directions, and means the standard head motion amplitude when performing lateral bending in left and right directions; means the maximum three deflection angles in a given direction; means the angular velocity of the head movement and the meaning of its subscript is similar to that of . We set 18 score points (6 directions × 3 extremes) for and , respectively, and each score point is set as a full score if its value is greater than or equal to the standard value; if it is less than the standard value, it is assigned a score in proportion. The total full score is 100. Weighting factors for each score point are displayed in Table 1.

Table 1.

Weighting factors for each score point.

Ohberg, F. et al. evaluated the importance of the range and the speed of head motion in [45], which is also the basis for the weighting of each score point in the above scoring formula.

5.2. Experiment Results

According to the research of Lind, B. et al. [46], the normal range of motion of the cervical spine is shown in Table 2.

Table 2.

Standard value of angles in three directions.

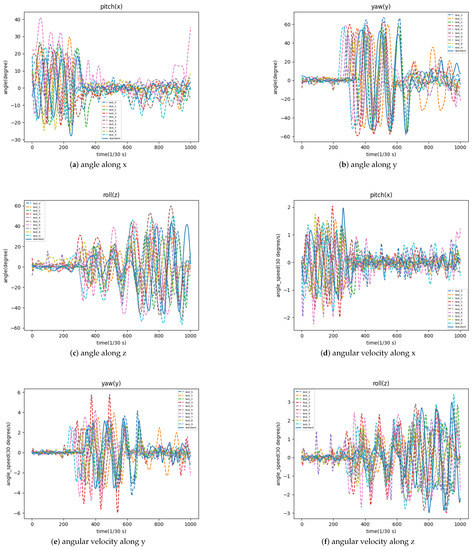

Additionally, a video of a volunteer’s cervical spine exercise was recorded as standard movements and the extreme values of angular velocity were calculated as standard values. The cervical exercise videos of 10 volunteers were tested with this scoring system and the variation of head deflection amplitude and angular velocity in three dimensions over the time domain are plotted in Figure 7.

Figure 7.

The variation of head deflection amplitude and angular velocity in three dimensions.

5.3. Analysis of Experiment Results

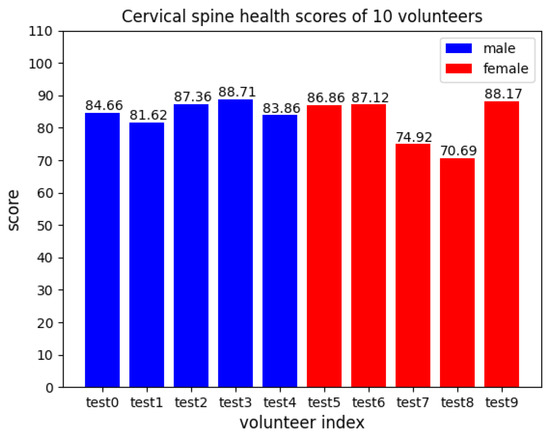

The cervical spine health scores of 10 volunteers in Figure 8 are calculated according to the experiment results using the scoring system mentioned above.

Figure 8.

Cervical spine health scores of 10 volunteers.

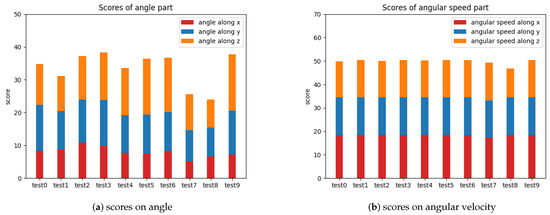

Their more detailed scores on head movement amplitude and movement angular velocity in the three dimensions are shown in Figure 9 below.

Figure 9.

Detailed scores on head movement amplitude and movement angular velocity.

In a practical application scenario, the general health status of a patient’s cervical spine can be assessed based on the overall score, and the patient’s cervical spine problems can be localized based on the detailed score of each index.

5.4. Ablation Study

A human-in-the-loop method was adopted to evaluate and compare the quality of different scoring standards. Professional cervical spine physicians were invited to score the cervical exercise of each volunteer based on their medical examination reports and extensive clinical experience as physicians, and this assessment was set as the benchmark score. (root mean square error), (mean absolute error), and (coefficient of determination) were calculated in different scoring standards. However, the human assessment of the absolute score of the quality of cervical spine exercises performed by a single patient is not always accurate, but the error is much smaller for the relatively good or bad assessment of the quality of cervical spine exercises performed by two patients. Considering the possible subjective errors in the human-in-the-loop method, (Kendall Tau) and (rank biased overlap) are also calculated to assess the relative quality of cervical exercises performed by different patients, in order to assist in evaluating the quality of different scoring standards. The results are shown in Table 3. We can see that our method outperforms other scoring approaches in all evaluation metrics.

Table 3.

Comparisons between different scoring standards.

5.5. Discussion of the Mosaic Encryption Effect

In the final output video, the size of the mosaic can be adjusted according to the resolution of the video, the diagnostic needs, the patient’s request for personal privacy, and other factors. The encryption effect of five different sizes of mosaic on the face was tested on our cervical exercise video with a resolution of 1920 × 1080, as shown in Figure 10.

Figure 10.

The encryption effect of five different sizes of mosaic on the face.

6. Conclusions

In this paper, we proposed an evaluation method for cervical vertebral rehabilitation based on computer vision. The method first uses Mediapipe to construct a face mesh and extract several important landmarks to calculate the angles of head poses in three degrees of freedom. Then, the angular velocity in three dimensions over the time domain is calculated based on the angle data acquired before. After that, the health condition of the patient’s cervical vertebra can be evaluated generally and in detail using the scoring system. Finally, we use YOLOv5 to localize the human face, put mosaics on the detected area, and compose the full video with head posture information attached.

We validated the repeatability, scoring performance, and encryption effect of the proposed evaluation method by experiments based on the cervical exercise videos of volunteers with even gender distribution. The experimental results showed that our algorithm has good repeatability and that our scoring system can effectively reflect the health status of the patient’s cervical spine.

In the future, we plan to work with hospitals to access cervical spine data from real patients to expand the dataset to patients all of ages and study the correlation between changes in various indicators of the patient’s cervical spine during treatment and the patient’s recovery progress, and further improve the assessment indicators and weights in the scoring system. In addition, we will also try to expand and transfer this method of assessing patients’ rehabilitation status with the help of machine vision to more medical fields to better utilize the advantages of cross-disciplinary research.

Author Contributions

Conceptualization, Q.D., H.B. and Z.Z.; methodology, Q.D., H.B. and Z.Z.; software, Q.D. and H.B.; validation, Q.D., H.B. and Z.Z.; formal analysis, Q.D. and Z.Z.; investigation, Q.D., H.B. and Z.Z.; writing—original draft preparation, Q.D. and H.B.; writing—review and editing, Q.D. and Z.Z.; supervision, Z.Z.; funding acquisition, Z.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by National Key Research and Development Program of China grant number 2021YFE0193900, Fundamental Research Funds for the Central Universities grant number 22120220642, and National Natural Science Foundation of China grant number 5200286.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study. Written informed consent has been obtained from the patient(s) to publish this paper.

Data Availability Statement

The data for this article can be obtained by contacting the authors by email.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Yongjun, Z.; Tingjie, Z.; Xiaoqiu, Y.; Zhiying, F.; Feng, Q.; Guangke, X.; Jinfeng, L.; Fachuan, N.; Xiaohong, J.; Yanqing, L. A survey of chronic pain in China. Libyan J. Med. 2020, 15, 1. [Google Scholar]

- Singh, N.N.; Bawa, P.S. Cervical spondylosis in adolescents: A review of the literature. Asian Spine J. 2014, 8, 704–710. [Google Scholar]

- Mummaneni, P.V.; Ames, C.P. Cervical spondylosis in young adults: A review of the literature. Neurosurg. Focus 2009, 27, E6. [Google Scholar]

- Gautam, D.K.; Shrestha, M.K. Cervical spondylosis in adolescents: Clinical and radiographic evaluation. J. Orthop. Surg. 2017, 25, 2309499016682792. [Google Scholar]

- Baker, J.C.; Glotzbecker, M.P. Cervical spondylosis in adolescents: A rare cause of neck pain. J. Pediatr. Orthop. 2014, 34, e49–e51. [Google Scholar]

- Elawady, M.E.M.; El-Sabbagh, M.H.; Eldesouky, S.S. Cervical spondylosis in adolescents: A case report. J. Orthop. Case Rep. 2016, 6, 56–59. [Google Scholar]

- O’Riordan, C.; Clifford, A.; Van De Ven, P.; Nelson, J. Chronic neck pain and exercise interventions: Frequency, intensity, time, and type principle. Arch. Phys. Med. Rehabil. 2014, 95, 770–783. [Google Scholar]

- Liu, L.; Ke, Z.; Huo, J.; Chen, J. Head pose estimation through keypoints matching between reconstructed 3D face model and 2D image. Sensors 2021, 21, 1841. [Google Scholar]

- Ranjan, R.; Patel, V.M.; Chellappa, R. Hyperface: A deep multi-task learning framework for face detection, landmark localization, pose estimation, and gender recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 41, 121–135. [Google Scholar]

- Guo, J.; Zhu, X.; Yang, Y.; Yang, F.; Lei, Z.; Li, S.Z. Towards fast, accurate and stable 3d dense face alignment. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; pp. 152–168. [Google Scholar]

- Xin, M.; Mo, S.; Lin, Y. Eva-gcn: Head pose estimation based on graph convolutional networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 1462–1471. [Google Scholar]

- Kumar, A.; Alavi, A.; Chellappa, R. KEPLER: Simultaneous estimation of keypoints and 3D pose of unconstrained faces in a unified framework by learning efficient H-CNN regressors. Image Vis. Comput. 2018, 79, 49–62. [Google Scholar] [CrossRef]

- Ruiz, N.; Chong, E.; Rehg, J.M. Fine-grained head pose estimation without keypoints. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2074–2083. [Google Scholar]

- Huang, B.; Chen, R.; Xu, W.; Zhou, Q. Improving head pose estimation using two-stage ensembles with top-k regression. Image Vis. Comput. 2020, 93, 103827. [Google Scholar]

- Cao, Z.; Chu, Z.; Liu, D.; Chen, Y. A vector-based representation to enhance head pose estimation. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2021; pp. 1188–1197. [Google Scholar]

- Dhingra, N. LwPosr: Lightweight Efficient Fine Grained Head Pose Estimation. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2022; pp. 1495–1505. [Google Scholar]

- Thai, C.; Tran, V.; Bui, M.; Ninh, H.; Tran, H.Y. An Effective Deep Network for Head Pose Estimation without Keypoints. In Proceedings of the ICPRAM, Online, 3–5 February 2022. [Google Scholar]

- Alioua, N.; Amine, A.; Rogozan, A.; Bensrhair, A.; Rziza, M. Driver head pose estimation using efficient descriptor fusion. EURASIP J. Image Video Process. 2016, 2016, 2. [Google Scholar]

- Paone, J.; Bolme, D.; Ferrell, R.; Aykac, D.; Karnowski, T. Baseline face detection, head pose estimation, and coarse direction detection for facial data in the SHRP2 naturalistic driving study. In Proceedings of the 2015 IEEE Intelligent Vehicles Symposium (IV), Seoul, Republic of Korea, 28 June–1 July 2015; pp. 174–179. [Google Scholar]

- Yücel, Z.; Salah, A.A. Head pose and neural network based gaze direction estimation for joint attention modeling in embodied agents. In Proceedings of the Annual Meeting of Cognitive Science Society, Amsterdam, The Netherlands, 29 July–1 August 2009. [Google Scholar]

- Akrout, B.; Fakhfakh, S. Three-Dimensional Head-Pose Estimation for Smart Iris Recognition from a Calibrated Camera. Math. Probl. Eng. 2020, 2020, 9830672. [Google Scholar]

- Hassner, T.; Harel, S.; Paz, E.; Enbar, R. Effective face frontalization in unconstrained images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Patna, India, 16–19 December 2015; pp. 4295–4304. [Google Scholar]

- Barra, P.; Barra, S.; Bisogni, C.; De Marsico, M.; Nappi, M. Web-shaped model for head pose estimation: An approach for best exemplar selection. IEEE Trans. Image Process. 2020, 29, 5457–5468. [Google Scholar] [CrossRef]

- Miyoshi, K.; Nomiya, H.; Hochin, T. Detection of Dangerous Behavior by Estimation of Head Pose and Moving Direction. In Proceedings of the 2018 5th International Conference on Computational Science/Intelligence and Applied Informatics (CSII), Yonago, Japan, 10–12 July 2018; pp. 121–126. [Google Scholar]

- Kartynnik, Y.; Ablavatski, A.; Grishchenko, I.; Grundmann, M. Real-time facial surface geometry from monocular video on mobile GPUs. arXiv 2019, arXiv:1907.06724. [Google Scholar]

- Xiong, J.; Wang, Y. Study on the Prescription of Acupuncture in the Treatment of Cervical Spondylotic Radiculopathy Based on Computer Vision Image Analysis. Contrast Media Mol. Imaging 2022, 2022, 8121636. [Google Scholar] [PubMed]

- Murugaviswanathan, R.; D, M.; Subramaniyam, M.; Min, S. Measurement of Spine Curvature using Flexicurve Integrated with Machine Vision. Hum. Factors Wearable Technol. 2022, 29, 82–86. [Google Scholar] [CrossRef]

- Jiang, J. Research on the improved image tracking algorithm of athletes’ cervical health. Rev. Bras. Med. Esporte 2021, 27, 476–480. [Google Scholar]

- Wang, Y.; Sun, D.; Liu, L.; Ye, L.; Fu, K.; Jin, X. Detection of cervical vertebrae from infrared thermal imaging based on improved Yolo v3. In Proceedings of the 5th International Conference on Computer Science and Software Engineering, Guilin, China, 21–23 October 2022; pp. 312–318. [Google Scholar]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788. [Google Scholar]

- Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271. [Google Scholar]

- Redmon, J.; Farhadi, A. Yolov3: An incremental improvement. arXiv 2018, arXiv:1804.02767. [Google Scholar]

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. Yolov4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934. [Google Scholar]

- Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. Yolox: Exceeding yolo series in 2021. arXiv 2021, arXiv:2107.08430. [Google Scholar]

- meituan. YOLOv6. 2022. Available online: https://github.com/meituan/YOLOv6/ (accessed on 31 July 2022).

- ultralytics. YOLOv5. 2022. Available online: https://github.com/ultralytics/yolov5/ (accessed on 31 July 2022).

- Yu, J.; Jiang, Y.; Wang, Z.; Cao, Z.; Huang, T. Unitbox: An advanced object detection network. In Proceedings of the 24th ACM international conference on Multimedia, Amsterdam, The Netherlands, 15–19 October 2016; pp. 516–520. [Google Scholar]

- Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU loss: Faster and better learning for bounding box regression. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 12993–13000. [Google Scholar]

- TECHNOGYM. The Neck—The Power Behind the Head. 2022. Available online: https://www.technogym.com/us/wellness/the-neck-the-power-behind-the-head/ (accessed on 25 July 2022).

- Radmehr, A.; Asgari, M.; Masouleh, M.T. Experimental Study on the Imitation of the Human Head-and-Eye Pose Using the 3-DOF Agile Eye Parallel Robot with ROS and Mediapipe Framework. In Proceedings of the 2021 9th RSI International Conference on Robotics and Mechatronics (ICRoM), Tehran, Iran, 17–19 November 2021; pp. 472–478. [Google Scholar]

- Lugaresi, C.; Tang, J.; Nash, H.; McClanahan, C.; Uboweja, E.; Hays, M.; Zhang, F.; Chang, C.L.; Yong, M.G.; Lee, J.; et al. Mediapipe: A framework for building perception pipelines. arXiv 2019, arXiv:1906.08172. [Google Scholar]

- Aşkin, A.; BAYRAM, K.B.; Demirdal, Ü.S.; Atar, E.; Karaman, Ç.A.; Güvendi, E.; Tosun, A. The evaluation of cervical spinal angle in patients with acute and chronic neck pain. Turk. J. Med Sci. 2017, 47, 806–811. [Google Scholar] [CrossRef]

- Sukari, A.A.A.; Singh, S.; Bohari, M.H.; Idris, Z.; Ghani, A.R.I.; Abdullah, J.M. Examining the range of motion of the cervical spine: Utilising different bedside instruments. Malays. J. Med. Sci. MJMS 2021, 28, 100. [Google Scholar] [PubMed]

- Moreno, A.J.; Utrilla, G.; Marin, J.; Marin, J.J.; Sanchez-Valverde, M.B.; Royo, A.C. Cervical spine assessment using passive and active mobilization recorded through an optical motion capture. J. Chiropr. Med. 2018, 17, 167–181. [Google Scholar]

- Ohberg, F.; Grip, H.; Wiklund, U.; Sterner, Y.; Karlsson, J.S.; Gerdle, B. Chronic whiplash associated disorders and neck movement measurements: An instantaneous helical axis approach. IEEE Trans. Inf. Technol. Biomed. 2003, 7, 274–282. [Google Scholar] [PubMed]

- Lind, B.; Sihlbom, H.; Nordwall, A.; Malchau, H. Normal range of motion of the cervical spine. Arch. Phys. Med. Rehabil. 1989, 70, 692–695. [Google Scholar] [PubMed]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).