Cyclist Orientation Estimation Using LiDAR Data

Abstract

1. Introduction

2. Cyclist Orientation Estimation Based on 2D and 3D Methods

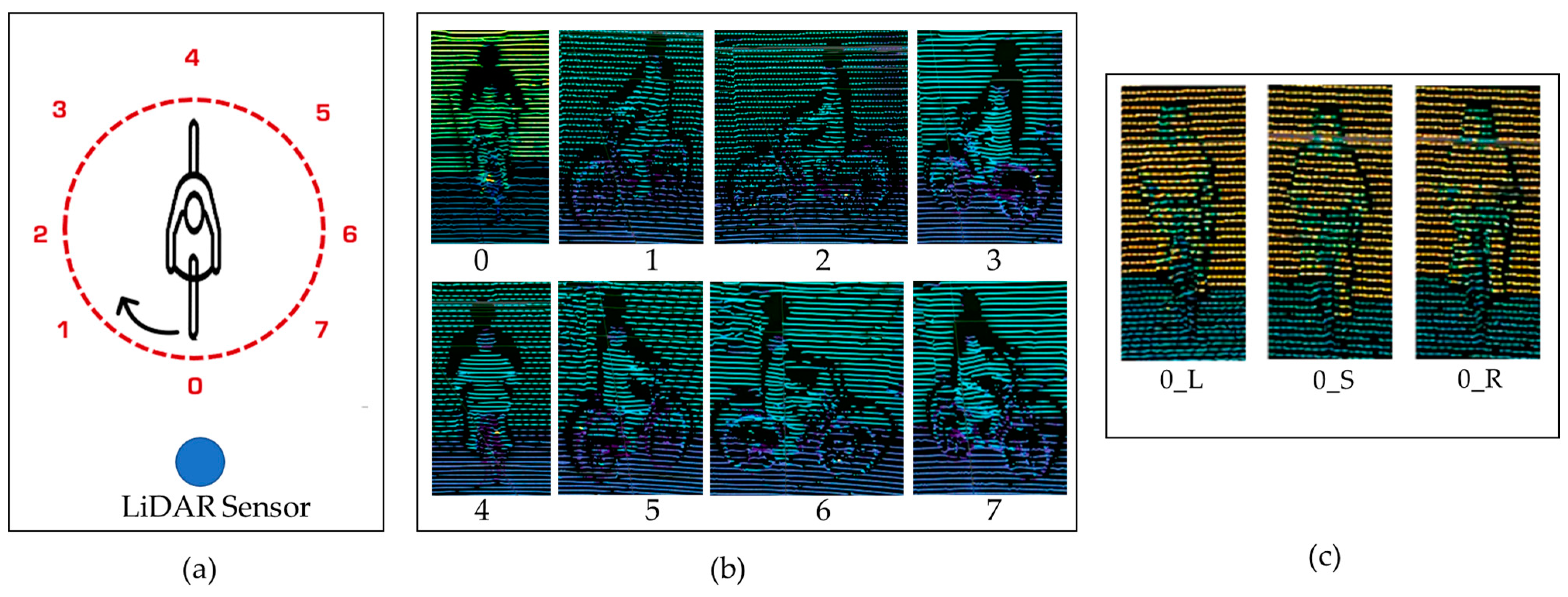

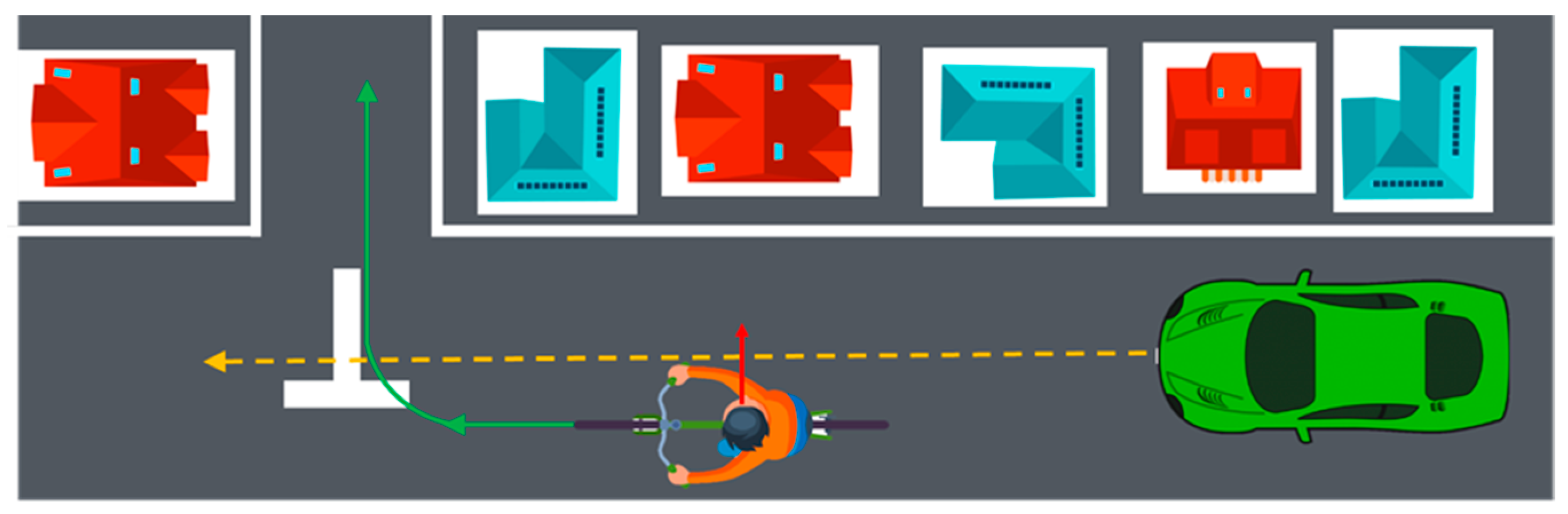

2.1. Definition for Cyclist Body and Head Orientations

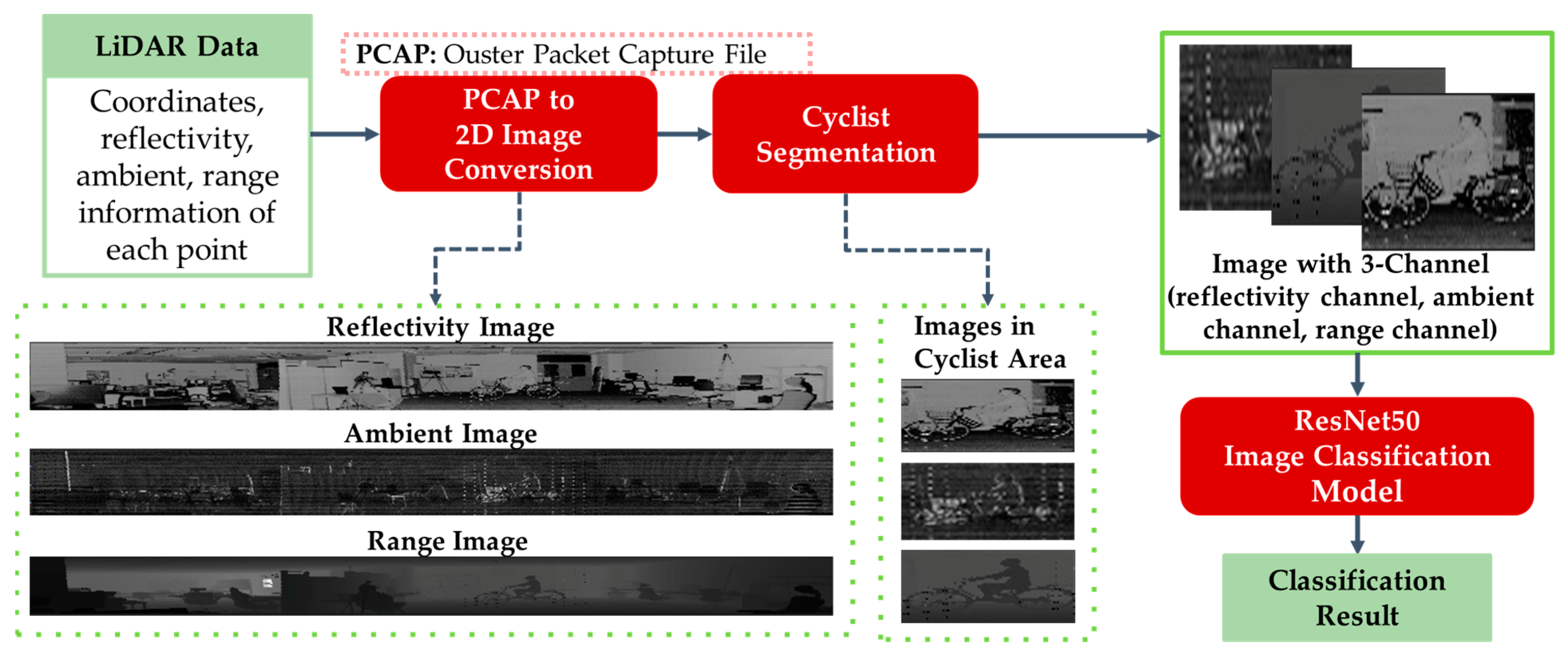

2.2. 2D Image-Based Cyclist Orientation Estimation

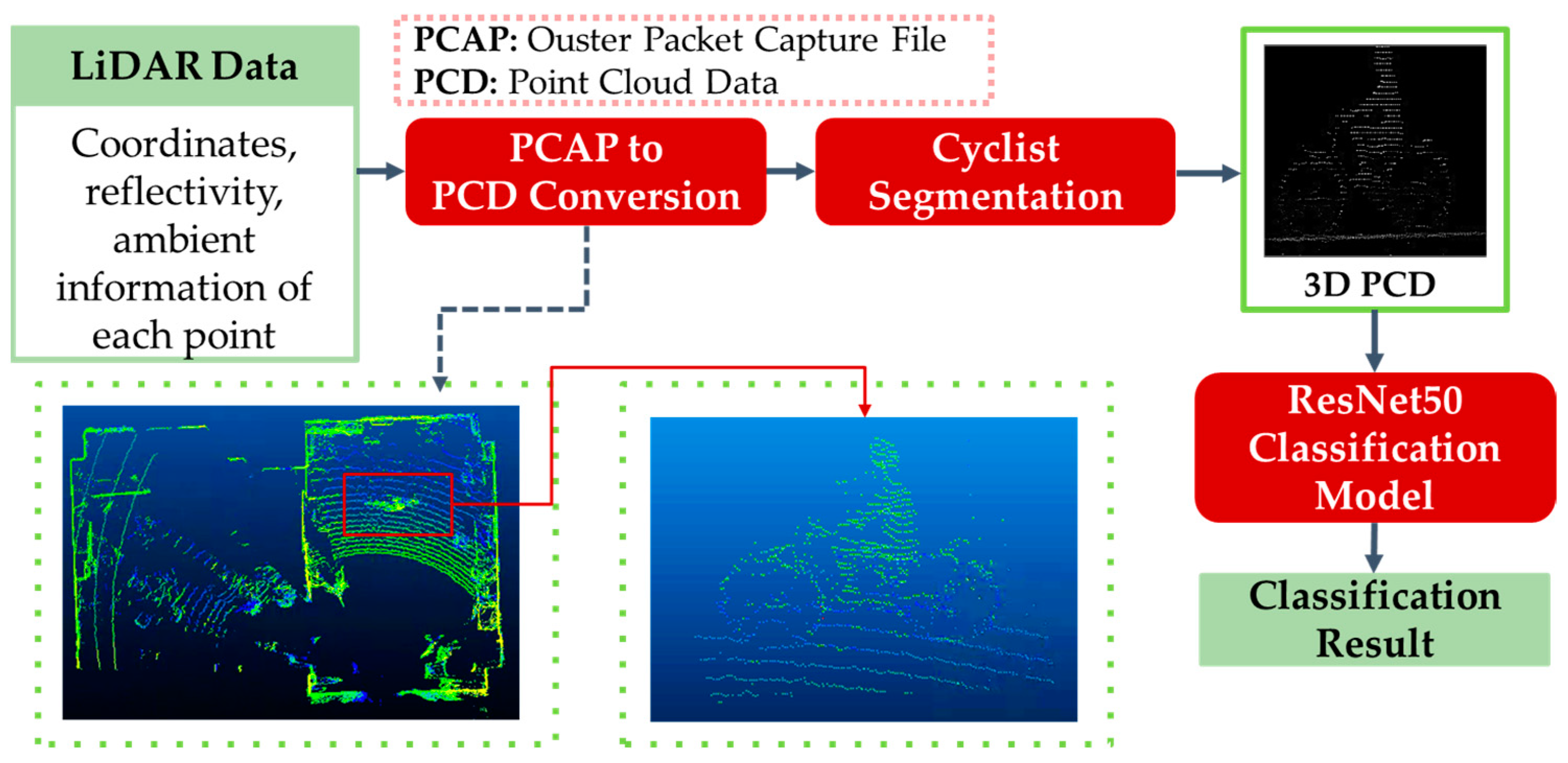

2.3. 3D Point Cloud-Based Cyclist Orientation Estimation

3. Experiments

3.1. Data Collection

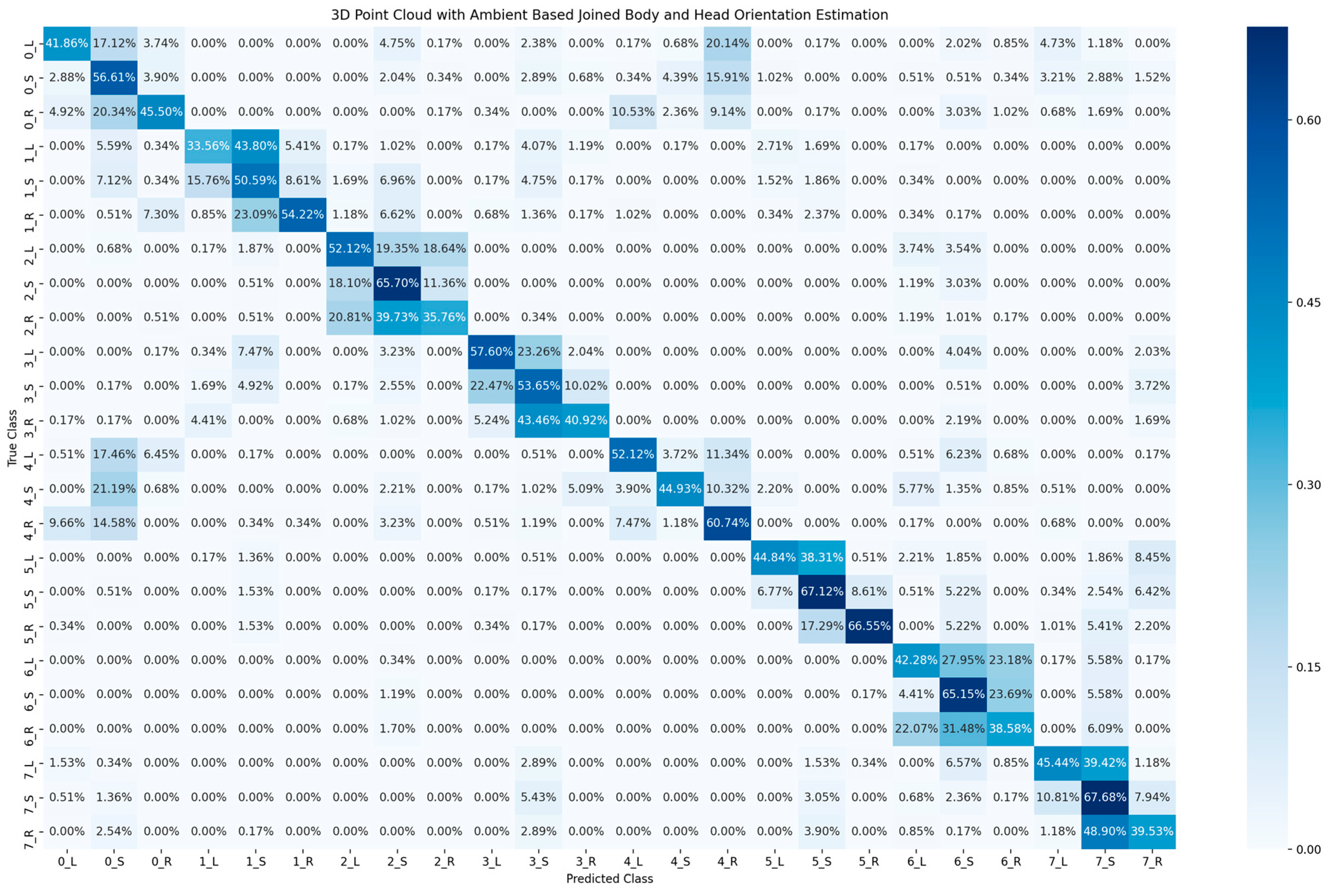

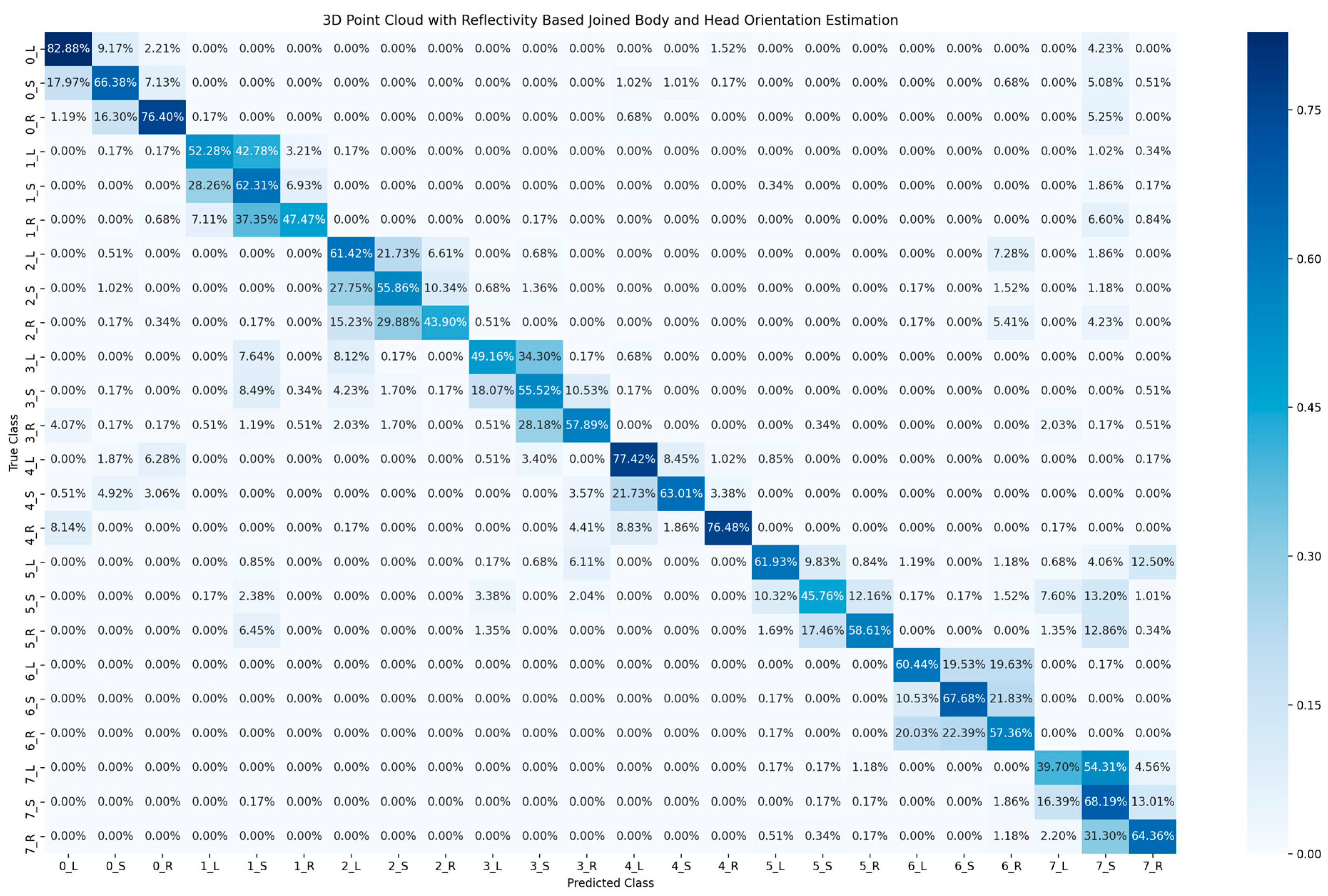

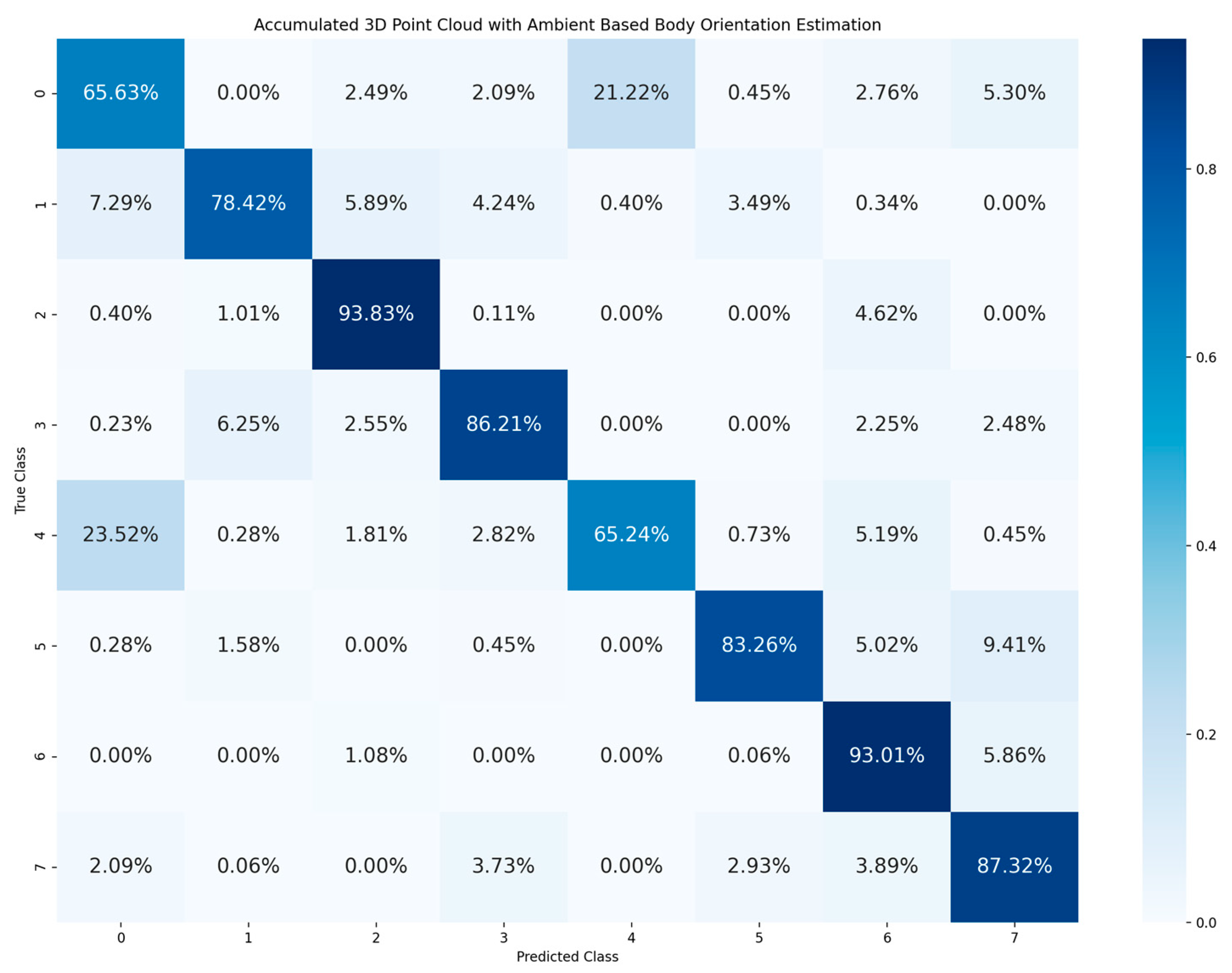

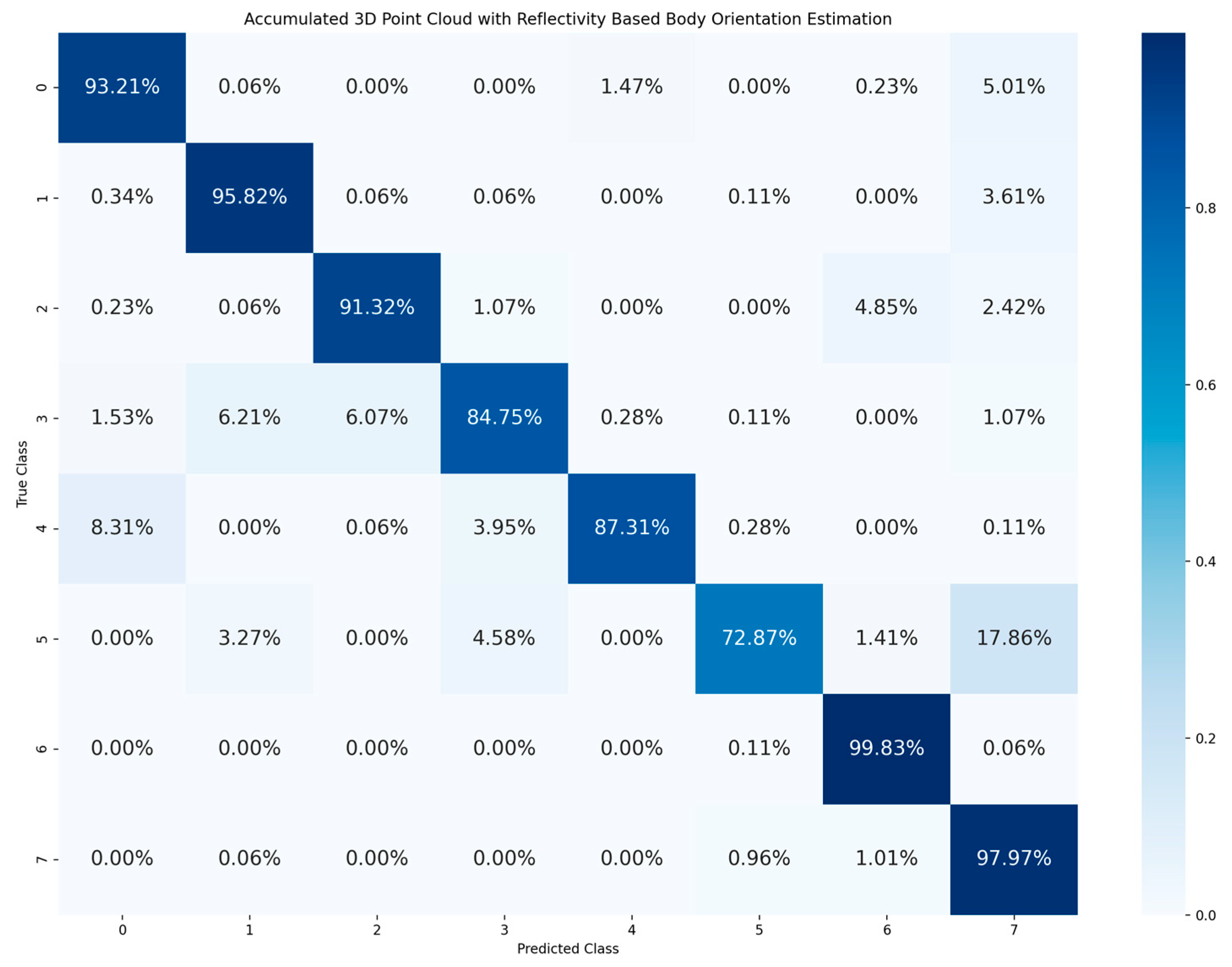

3.2. Experimental Results

3.3. Discussions

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Head Orientation | Body Orientation 0 | Body Orientation 1 | Body Orientation 2 | Body Orientation 3 | Body Orientation 4 | Body Orientation 5 | Body Orientation 6 | Body Orientation 7 | Total |
|---|---|---|---|---|---|---|---|---|---|
| Left | 590 | 591 | 591 | 592 | 589 | 591 | 589 | 592 | |
| Straight | 589 | 589 | 589 | 589 | 592 | 590 | 594 | 591 | |
| Right | 589 | 592 | 590 | 589 | 591 | 592 | 591 | 592 | |
| Sub-total | 1768 | 1772 | 1770 | 1770 | 1772 | 1773 | 1774 | 1775 | 14,174 |
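The sample counts listed for each head and body orientation class can be cross-checked with a few lines of Python. This is only an illustrative sketch: the variable names are hypothetical, and the per-row totals it computes are derived from the listed counts rather than quoted from the article.

```python
# Counts copied from the dataset table: rows are head orientations,
# columns are body orientation classes 0-7.
counts = {
    "Left":     [590, 591, 591, 592, 589, 591, 589, 592],
    "Straight": [589, 589, 589, 589, 592, 590, 594, 591],
    "Right":    [589, 592, 590, 589, 591, 592, 591, 592],
}

# Total per head orientation (derived here; not listed in the table).
row_totals = {head: sum(values) for head, values in counts.items()}

# Sub-total per body orientation class (column sums).
sub_totals = [sum(column) for column in zip(*counts.values())]

# Overall number of samples.
grand_total = sum(sub_totals)

print("Row totals:", row_totals)
print("Sub-totals:", sub_totals)
print("Grand total:", grand_total)
```

The column sums reproduce the Sub-total row and the overall total of 14,174, which suggests the 24 combined classes are close to evenly balanced.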

| | 2D Image-Based Method | 3D Point Cloud-Based Method (Ambient) | 3D Point Cloud-Based Method (Reflectivity) |
|---|---|---|---|
| Accuracy | 47.69% | 50.96% | 60.52% |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chang, H.; Gu, Y.; Goncharenko, I.; Hsu, L.-T.; Premachandra, C. Cyclist Orientation Estimation Using LiDAR Data. Sensors 2023, 23, 3096. https://doi.org/10.3390/s23063096