Mapping Fire Severity in Southwest China Using the Combination of Sentinel 2 and GF Series Satellite Images

Abstract

1. Introduction

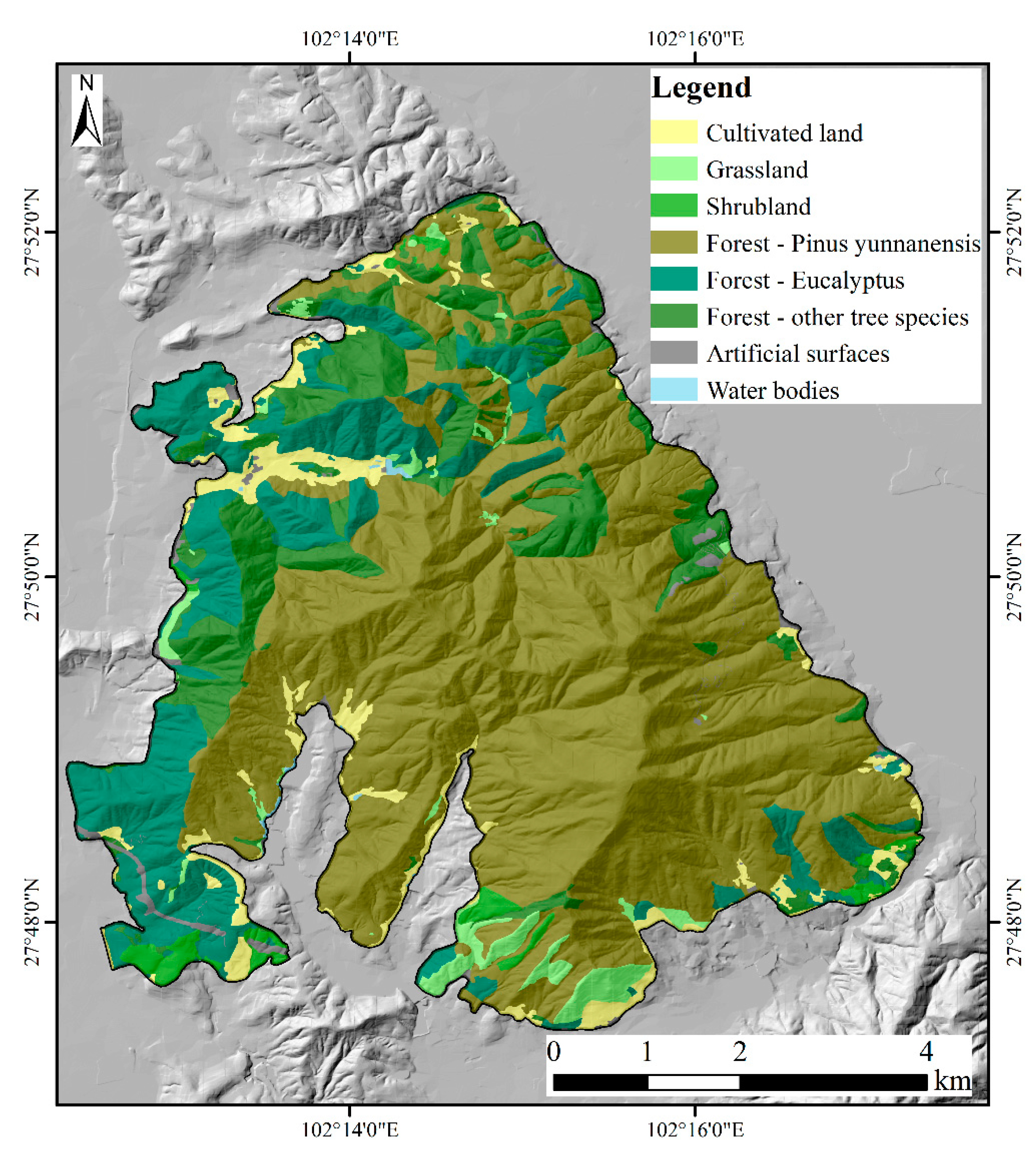

2. Study Area

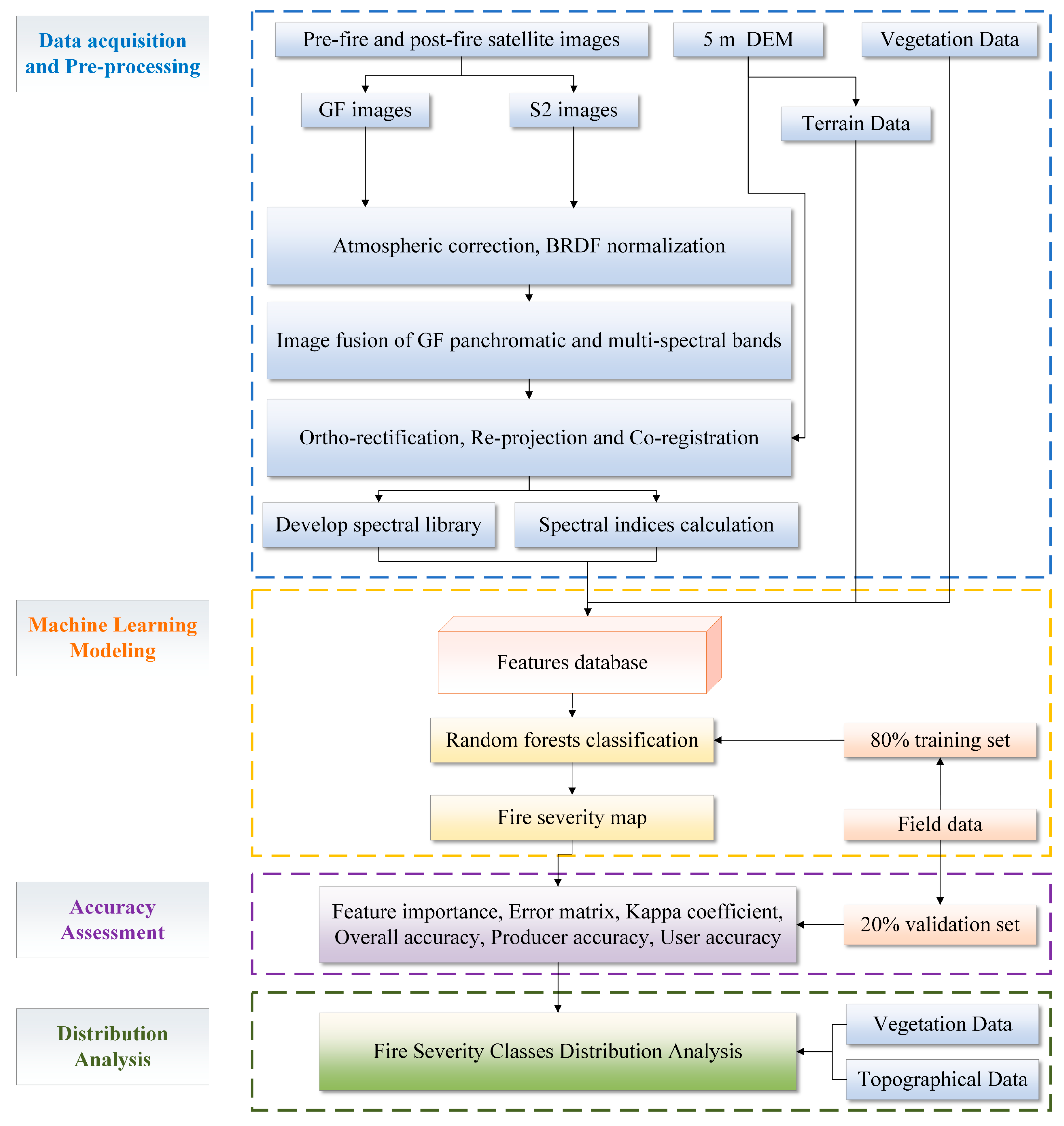

3. Methodology

3.1. Data Acquisition and Pre-Processing

3.1.1. Satellite Images

3.1.2. Topographical Data and Vegetation Data

3.1.3. Training and Validation Dataset

3.2. Machine Learning Modeling

3.3. Classification Accuracy Assessment

3.4. Fire Severity Classes Distribution Analysis

4. Results

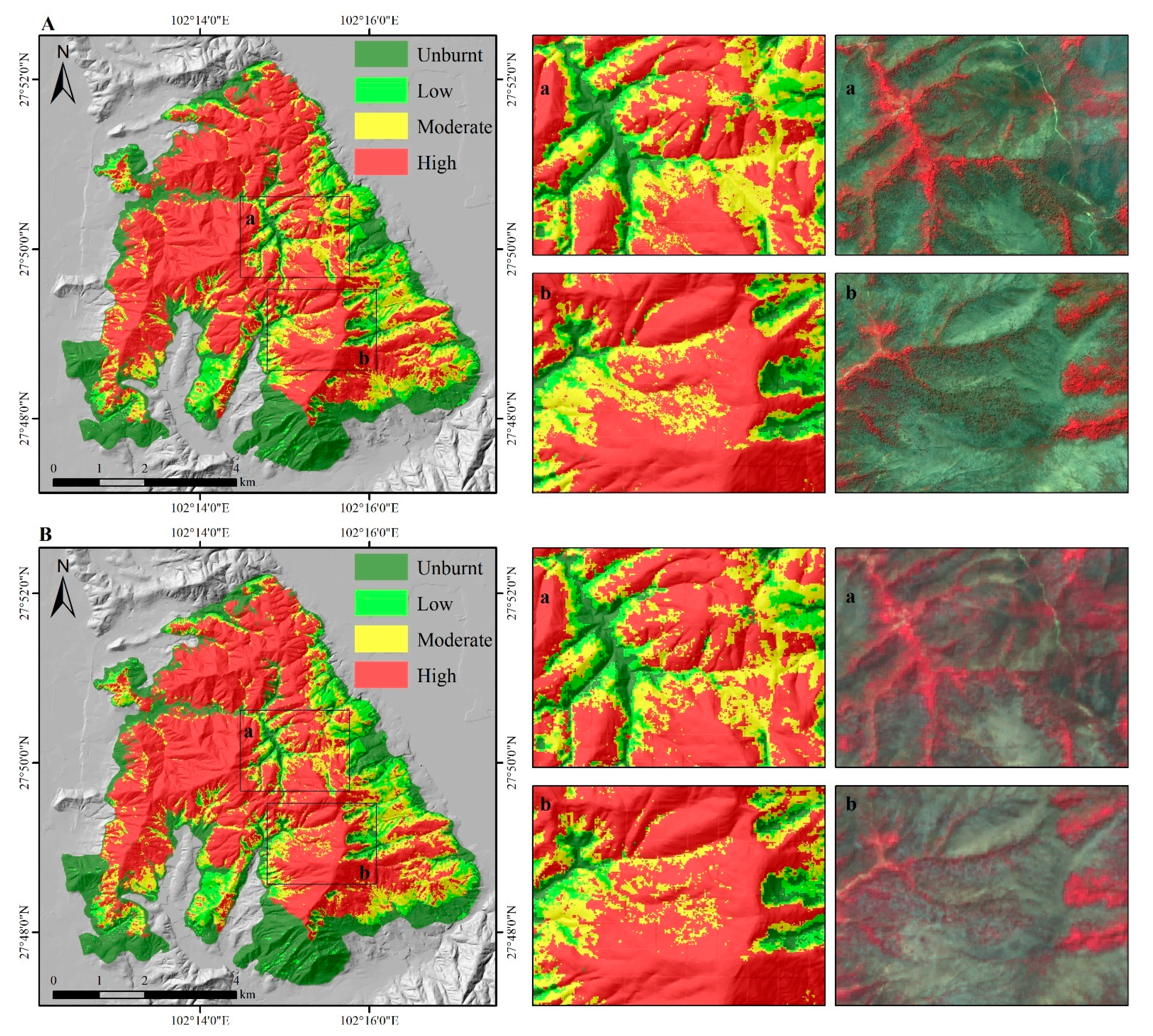

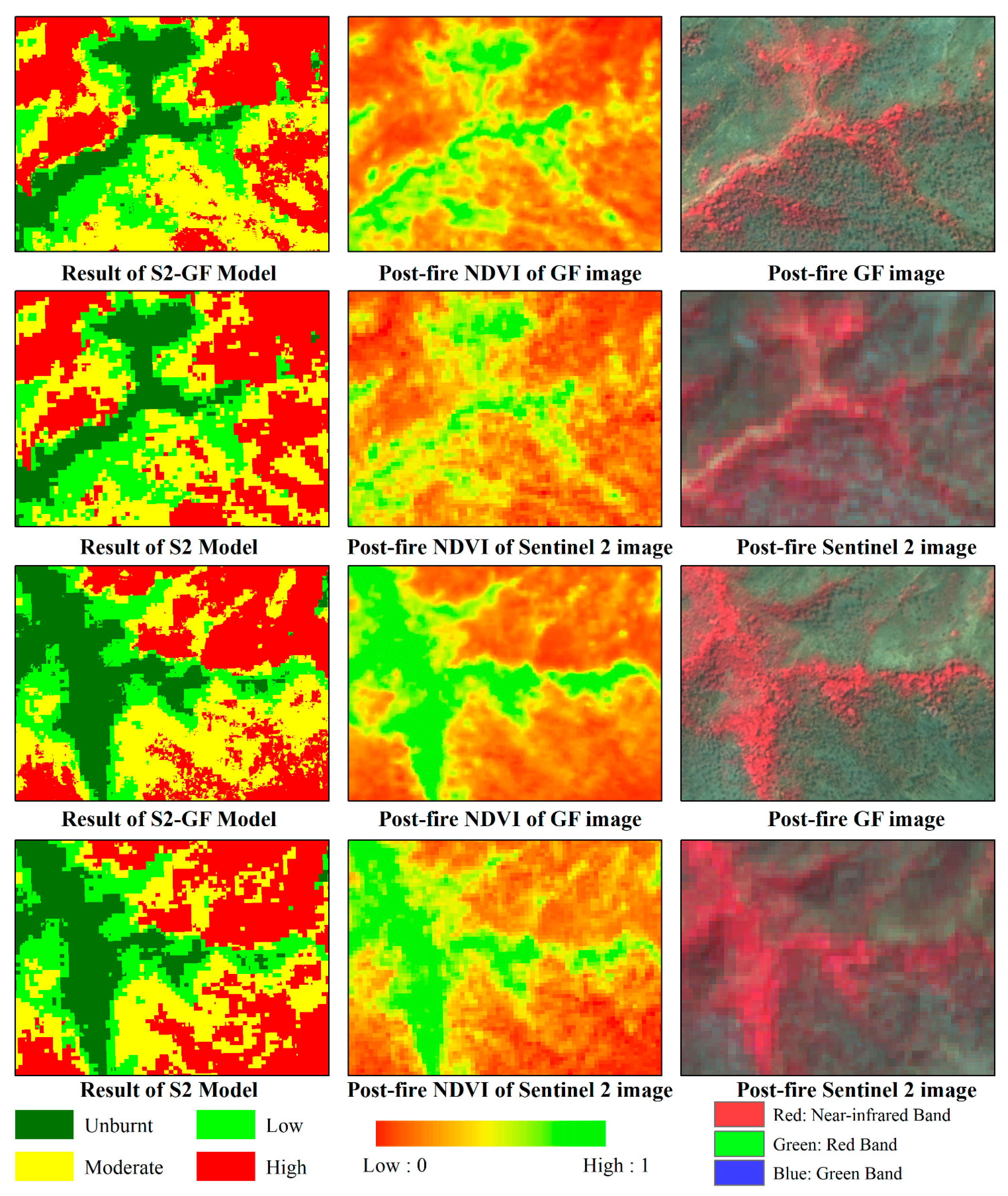

4.1. Model Results and Accuracy Comparison

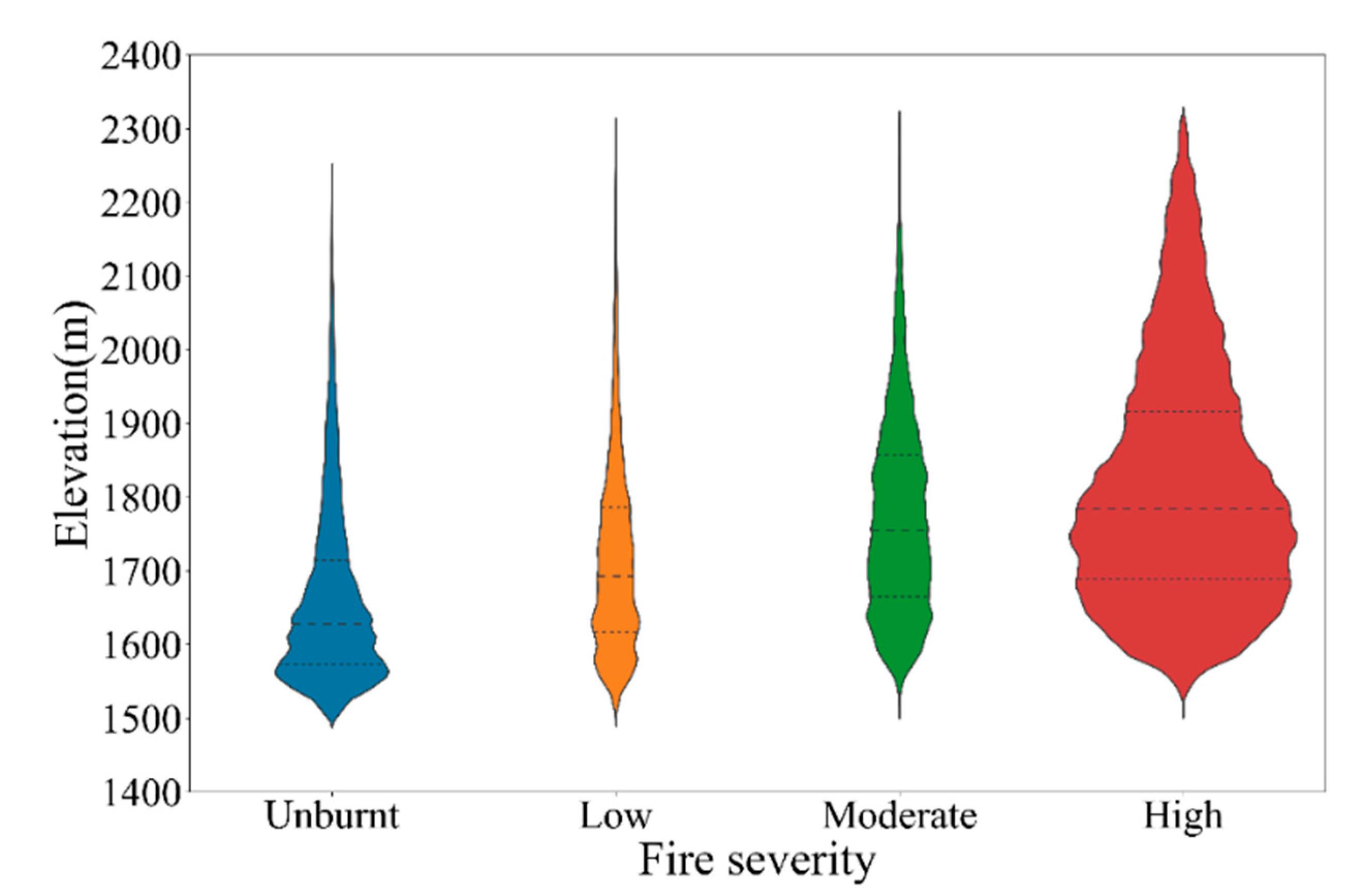

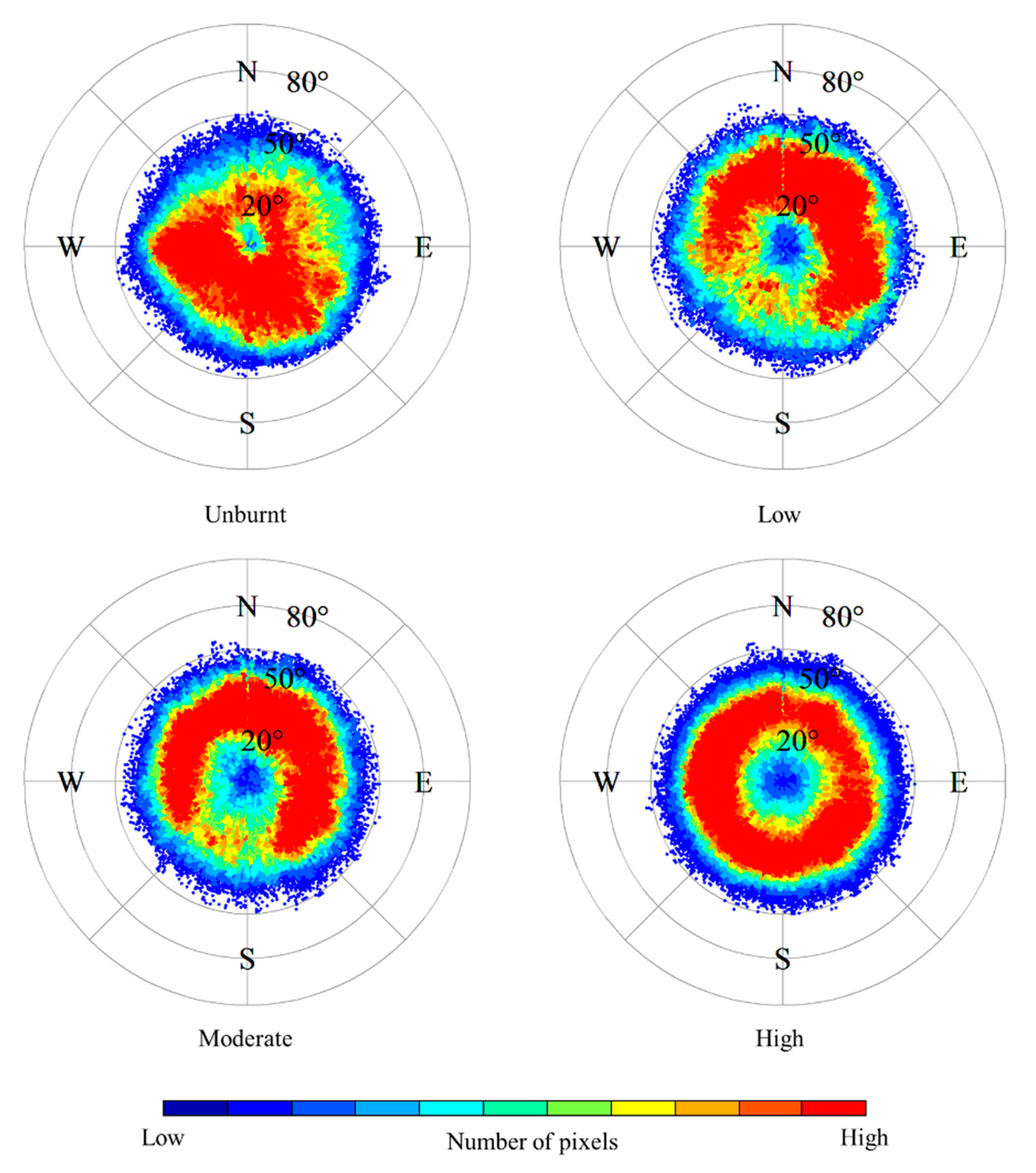

4.2. Relationship between Fire Severity Classes and Topography

4.3. Relationship between Fire Severity Classes and Vegetation Type

5. Discussion

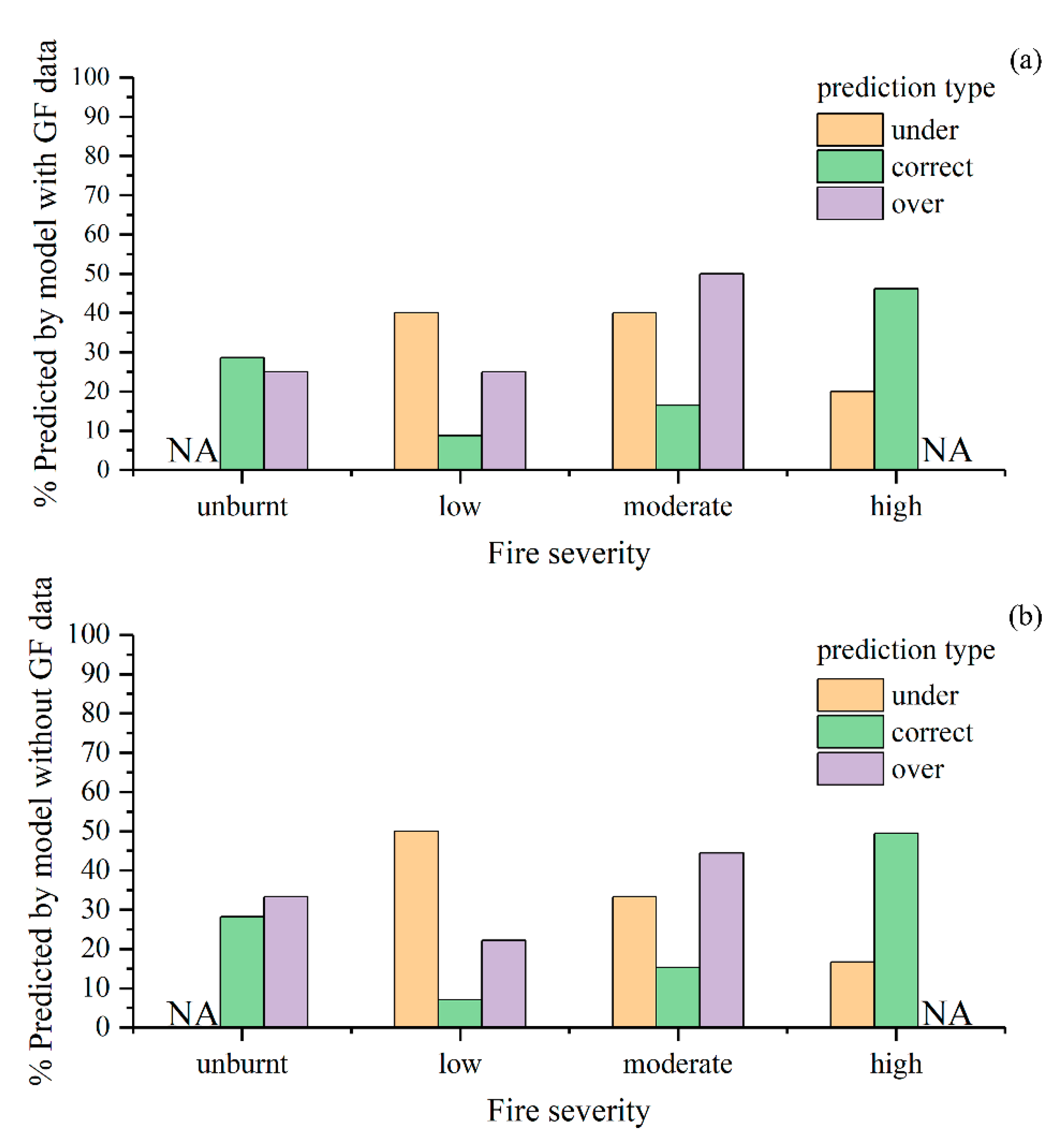

5.1. Error Analysis

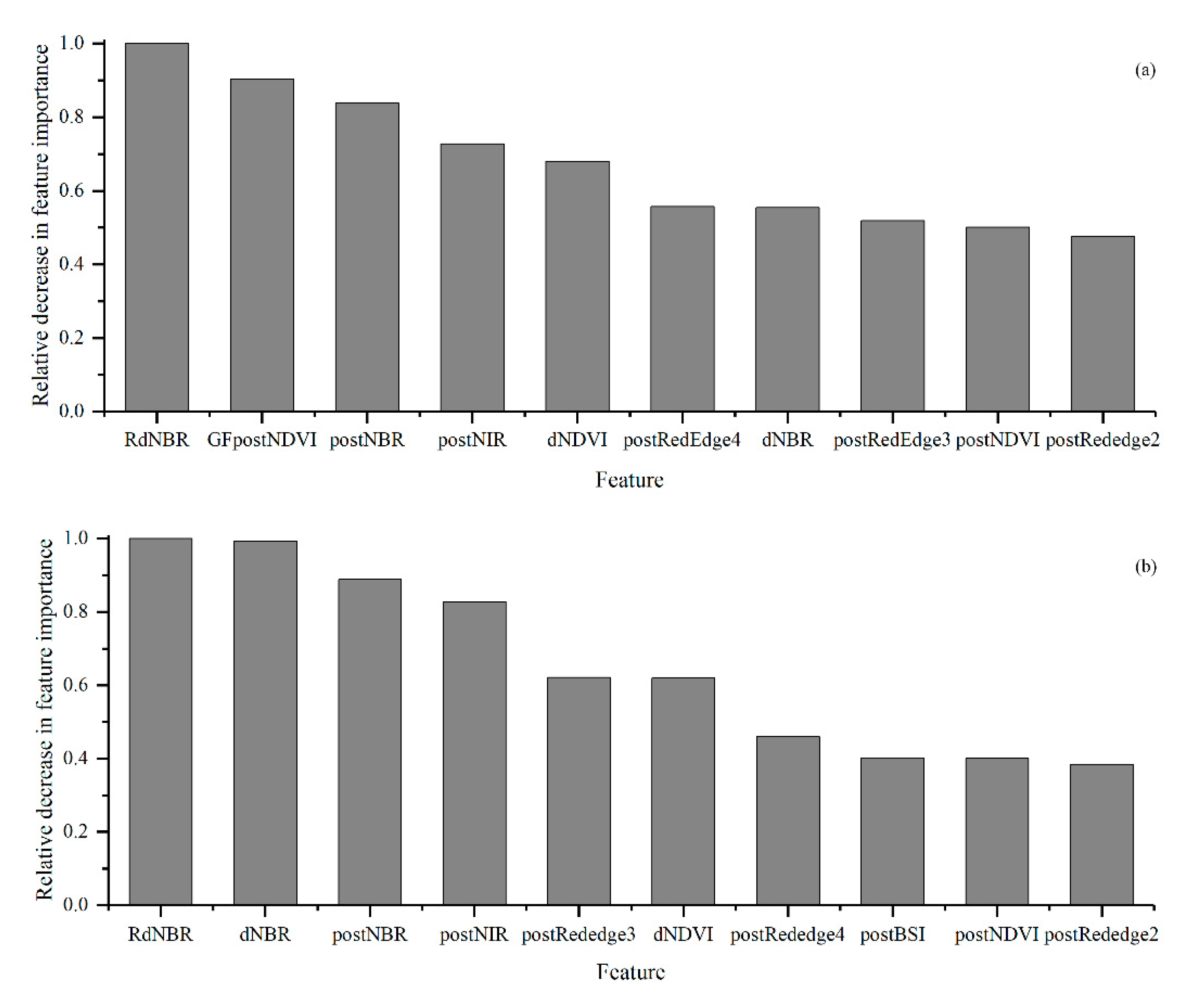

5.2. Feature Importance Comparison

5.3. Fire Severity Distribution

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Wang, Y.; Hu, X.; Wu, L.; Ma, G.; Yang, Y.; Jing, T. Evolutionary History of Post-Fire Debris Flows in Ren’e Yong Valley in Sichuan Province of China. Landslides 2022, 19, 1479–1490.

- Sazeides, C.I.; Christopoulou, A.; Fyllas, N.M. Coupling Photosynthetic Measurements with Biometric Data to Estimate Gross Primary Productivity (GPP) in Mediterranean Pine Forests of Different Post-Fire Age. Forests 2021, 12, 1256.

- Depountis, N.; Michalopoulou, M.; Kavoura, K.; Nikolakopoulos, K.; Sabatakakis, N. Estimating Soil Erosion Rate Changes in Areas Affected by Wildfires. IJGI 2020, 9, 562.

- van Wees, D.; van der Werf, G.R.; Randerson, J.T.; Andela, N.; Chen, Y.; Morton, D.C. The Role of Fire in Global Forest Loss Dynamics. Glob. Chang. Biol. 2021, 27, 2377–2391.

- Roces-Díaz, J.V.; Santín, C.; Martínez-Vilalta, J.; Doerr, S.H. A Global Synthesis of Fire Effects on Ecosystem Services of Forests and Woodlands. Front. Ecol. Environ. 2022, 20, 170–178.

- Geary, W.L.; Buchan, A.; Allen, T.; Attard, D.; Bruce, M.J.; Collins, L.; Ecker, T.E.; Fairman, T.A.; Hollings, T.; Loeffler, E.; et al. Responding to the Biodiversity Impacts of a Megafire: A Case Study from South-eastern Australia’s Black Summer. Divers. Distrib. 2022, 28, 463–478.

- Li, J.; Pei, J.; Liu, J.; Wu, J.; Li, B.; Fang, C.; Nie, M. Spatiotemporal Variability of Fire Effects on Soil Carbon and Nitrogen: A Global Meta-analysis. Glob. Chang. Biol. 2021, 27, 4196–4206.

- Sirin, A.; Maslov, A.; Makarov, D.; Gulbe, Y.; Joosten, H. Assessing Wood and Soil Carbon Losses from a Forest-Peat Fire in the Boreo-Nemoral Zone. Forests 2021, 12, 880.

- Rostami, N.; Heydari, M.; Uddin, S.M.M.; Esteban Lucas-Borja, M.; Zema, D.A. Hydrological Response of Burned Soils in Croplands, and Pine and Oak Forests in Zagros Forest Ecosystem (Western Iran) under Rainfall Simulations at Micro-Plot Scale. Forests 2022, 13, 246.

- Keeley, J.E. Fire Intensity, Fire Severity and Burn Severity: A Brief Review and Suggested Usage. Int. J. Wildland Fire 2009, 18, 116–126.

- Gibson, R.; Danaher, T.; Hehir, W.; Collins, L. A Remote Sensing Approach to Mapping Fire Severity in South-Eastern Australia Using Sentinel 2 and Random Forest. Remote Sens. Environ. 2020, 240, 111702.

- Meddens, A.J.H.; Kolden, C.A.; Lutz, J.A. Detecting Unburned Areas within Wildfire Perimeters Using Landsat and Ancillary Data across the Northwestern United States. Remote Sens. Environ. 2016, 186, 275–285.

- Meng, R.; Wu, J.; Schwager, K.L.; Zhao, F.; Dennison, P.E.; Cook, B.D.; Brewster, K.; Green, T.M.; Serbin, S.P. Using High Spatial Resolution Satellite Imagery to Map Forest Burn Severity across Spatial Scales in a Pine Barrens Ecosystem. Remote Sens. Environ. 2017, 191, 95–109.

- Collins, L.; Griffioen, P.; Newell, G.; Mellor, A. The Utility of Random Forests for Wildfire Severity Mapping. Remote Sens. Environ. 2018, 216, 374–384.

- Pérez-Rodríguez, L.A.; Quintano, C.; Marcos, E.; Suarez-Seoane, S.; Calvo, L.; Fernández-Manso, A. Evaluation of Prescribed Fires from Unmanned Aerial Vehicles (UAVs) Imagery and Machine Learning Algorithms. Remote Sens. 2020, 12, 1295.

- Montorio, R.; Pérez-Cabello, F.; Borini Alves, D.; García-Martín, A. Unitemporal Approach to Fire Severity Mapping Using Multispectral Synthetic Databases and Random Forests. Remote Sens. Environ. 2020, 249, 112025.

- García-Llamas, P.; Suárez-Seoane, S.; Fernández-Guisuraga, J.M.; Fernández-García, V.; Fernández-Manso, A.; Quintano, C.; Taboada, A.; Marcos, E.; Calvo, L. Evaluation and Comparison of Landsat 8, Sentinel-2 and Deimos-1 Remote Sensing Indices for Assessing Burn Severity in Mediterranean Fire-Prone Ecosystems. Int. J. Appl. Earth Obs. Geoinf. 2019, 80, 137–144.

- Miller, J.D.; Thode, A.E. Quantifying Burn Severity in a Heterogeneous Landscape with a Relative Version of the Delta Normalized Burn Ratio (DNBR). Remote Sens. Environ. 2007, 109, 66–80.

- Miller, J.D.; Knapp, E.E.; Key, C.H.; Skinner, C.N.; Isbell, C.J.; Creasy, R.M.; Sherlock, J.W. Calibration and Validation of the Relative Differenced Normalized Burn Ratio (RdNBR) to Three Measures of Fire Severity in the Sierra Nevada and Klamath Mountains, California, USA. Remote Sens. Environ. 2009, 113, 645–656.

- Soverel, N.O.; Perrakis, D.D.B.; Coops, N.C. Estimating Burn Severity from Landsat DNBR and RdNBR Indices across Western Canada. Remote Sens. Environ. 2010, 114, 1896–1909.

- Quintano, C.; Fernández-Manso, A.; Roberts, D.A. Enhanced Burn Severity Estimation Using Fine Resolution ET and MESMA Fraction Images with Machine Learning Algorithm. Remote Sens. Environ. 2020, 244, 111815.

- Xie, X.; Li, A.; Tian, J.; Wu, C.; Jin, H. A Fine Spatial Resolution Estimation Scheme for Large-Scale Gross Primary Productivity (GPP) in Mountain Ecosystems by Integrating an Eco-Hydrological Model with the Combination of Linear and Non-Linear Downscaling Processes. J. Hydrol. 2023, 616, 128833.

- Zhou, Q.; Yu, Q.; Liu, J.; Wu, W.; Tang, H. Perspective of Chinese GF-1 High-Resolution Satellite Data in Agricultural Remote Sensing Monitoring. J. Integr. Agric. 2017, 16, 242–251.

- Xia, T.; He, Z.; Cai, Z.; Wang, C.; Wang, W.; Wang, J.; Hu, Q.; Song, Q. Exploring the Potential of Chinese GF-6 Images for Crop Mapping in Regions with Complex Agricultural Landscapes. Int. J. Appl. Earth Obs. Geoinf. 2022, 107, 102702.

- Tian, Y.; Wu, Z.; Li, M.; Wang, B.; Zhang, X. Forest Fire Spread Monitoring and Vegetation Dynamics Detection Based on Multi-Source Remote Sensing Images. Remote Sens. 2022, 14, 4431.

- White, L.A.; Gibson, R.K. Comparing Fire Extent and Severity Mapping between Sentinel 2 and Landsat 8 Satellite Sensors. Remote Sens. 2022, 14, 1661.

- Howe, A.A.; Parks, S.A.; Harvey, B.J.; Saberi, S.J.; Lutz, J.A.; Yocom, L.L. Comparing Sentinel-2 and Landsat 8 for Burn Severity Mapping in Western North America. Remote Sens. 2022, 14, 5249.

- Fernández-Manso, A.; Fernández-Manso, O.; Quintano, C. SENTINEL-2A Red-Edge Spectral Indices Suitability for Discriminating Burn Severity. Int. J. Appl. Earth Obs. Geoinf. 2016, 50, 170–175.

- Dadrass Javan, F.; Samadzadegan, F.; Mehravar, S.; Toosi, A.; Khatami, R.; Stein, A. A Review of Image Fusion Techniques for Pan-Sharpening of High-Resolution Satellite Imagery. ISPRS J. Photogramm. Remote Sens. 2021, 171, 101–117.

- Flood, N.; Danaher, T.; Gill, T.; Gillingham, S. An Operational Scheme for Deriving Standardised Surface Reflectance from Landsat TM/ETM+ and SPOT HRG Imagery for Eastern Australia. Remote Sens. 2013, 5, 83–109.

- Roy, D.P.; Zhang, H.K.; Ju, J.; Gomez-Dans, J.L.; Lewis, P.E.; Schaaf, C.B.; Sun, Q.; Li, J.; Huang, H.; Kovalskyy, V. A General Method to Normalize Landsat Reflectance Data to Nadir BRDF Adjusted Reflectance. Remote Sens. Environ. 2016, 176, 255–271.

- Vermote, E.; Justice, C.; Claverie, M.; Franch, B. Preliminary Analysis of the Performance of the Landsat 8/OLI Land Surface Reflectance Product. Remote Sens. Environ. 2016, 185, 46–56.

- Millard, K.; Richardson, M. On the Importance of Training Data Sample Selection in Random Forest Image Classification: A Case Study in Peatland Ecosystem Mapping. Remote Sens. 2015, 7, 8489–8515.

- Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

- Tucker, C.J. Red and Photographic Infrared Linear Combinations for Monitoring Vegetation. Remote Sens. Environ. 1979, 8, 127–150.

- Rikimaru, A.; Roy, P.; Miyatake, S. Tropical Forest Cover Density Mapping. Trop. Ecol. 2002, 43, 39–47.

- Allouche, O.; Tsoar, A.; Kadmon, R. Assessing the Accuracy of Species Distribution Models: Prevalence, Kappa and the True Skill Statistic (TSS). J. Appl. Ecol. 2006, 43, 1223–1232.

- Collins, L.; McCarthy, G.; Mellor, A.; Newell, G.; Smith, L. Training Data Requirements for Fire Severity Mapping Using Landsat Imagery and Random Forest. Remote Sens. Environ. 2020, 245, 111839.

- Yang, X.; Chen, R.; Zhang, F.; Zhang, L.; Fan, X.; Ye, Q.; Fu, L. Pixel-Level Automatic Annotation for Forest Fire Image. Eng. Appl. Artif. Intell. 2021, 104, 104353.

- Wang, H.; Li, Y.; Dang, L.M.; Lee, S.; Moon, H. Pixel-Level Tunnel Crack Segmentation Using a Weakly Supervised Annotation Approach. Comput. Ind. 2021, 133, 103545.

- Hoy, E.E.; French, N.H.F.; Turetsky, M.R.; Trigg, S.N.; Kasischke, E.S. Evaluating the Potential of Landsat TM/ETM+ Imagery for Assessing Fire Severity in Alaskan Black Spruce Forests. Int. J. Wildland Fire 2008, 17, 500–514.

- Cao, X.; Hu, X.; Han, M.; Jin, T.; Yang, X.; Yang, Y.; He, K.; Wang, Y.; Huang, J.; Xi, C.; et al. Characteristics and Predictive Models of Hillslope Erosion in Burned Areas in Xichang, China, on March 30, 2020. Catena 2022, 217, 106509.

| Name | Pre-Fire Data Date | Post-Fire Data Date | Spatial Resolution |
|---|---|---|---|
| Sentinel 2 images | 30 March 2020 (S2B) | 4 April 2020 (S2A) | 10 m/20 m |
| GF series images | 6 March 2020 (GF-6) | 18 April 2020 (GF-1) | 2 m/8 m |
| Digital elevation model | 2013 | 2013 | 5 m |
| Plot-based vegetation maps | 2019 | 2020 | 2 m |
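
The inputs listed above come at different pixel sizes (2 m/8 m GF, 10 m/20 m Sentinel 2, 5 m DEM), so per-pixel features can only be stacked once everything sits on one grid. The resampling method is not described in this section. As a minimal sketch, assuming the grids are already co-registered and that simple averaging is acceptable, a hypothetical `block_mean` helper aggregates a finer grid to a coarser one when the size ratio is an integer (2 m to 10 m is a factor of 5; 5 m to 10 m a factor of 2):

```python
import numpy as np


def block_mean(grid: np.ndarray, factor: int) -> np.ndarray:
    """Aggregate a fine 2-D grid to a coarser one by averaging
    non-overlapping factor x factor blocks (hypothetical helper).

    Trailing rows/columns that do not fill a complete block are dropped.
    """
    if factor < 1:
        raise ValueError("factor must be a positive integer")
    rows = (grid.shape[0] // factor) * factor
    cols = (grid.shape[1] // factor) * factor
    trimmed = grid[:rows, :cols]
    # Give each block its own pair of axes, then average over them.
    blocks = trimmed.reshape(rows // factor, factor, cols // factor, factor)
    return blocks.mean(axis=(1, 3))


# Example: a 10 x 10 grid of 2 m pixels becomes 2 x 2 pixels of 10 m.
gf_2m = np.arange(100, dtype=float).reshape(10, 10)
s2_grid = block_mean(gf_2m, 5)
print(s2_grid.shape)  # (2, 2)
```

The 8 m GF multispectral bands do not divide evenly into 10 m cells, so they would need an interpolating resampler (for example, area-weighted or bilinear), which this sketch does not cover.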

| Spectral Region | GF-1 Band | GF-1 Range (nm) | GF-1 Spatial Resolution (m) | GF-6 Band | GF-6 Range (nm) | GF-6 Spatial Resolution (m) |
|---|---|---|---|---|---|---|
| Pan (panchromatic) | 1 | 450–900 | 2 | 1 | 450–900 | 2 |
| Blue | 2 | 450–520 | 8 | 2 | 450–520 | 8 |
| Green | 3 | 520–590 | 8 | 3 | 520–600 | 8 |
| Red | 4 | 630–690 | 8 | 4 | 630–690 | 8 |
| NIR (near-infrared) | 5 | 770–890 | 8 | 5 | 760–900 | 8 |

| Spectral Region | Band | Sentinel 2A Central Wavelength (nm) | Sentinel 2A Bandwidth (nm) | Sentinel 2B Central Wavelength (nm) | Sentinel 2B Bandwidth (nm) | Spatial Resolution (m) |
|---|---|---|---|---|---|---|
| Blue | 2 | 492.4 | 66 | 492.1 | 66 | 10 |
| Green | 3 | 559.8 | 36 | 559 | 36 | 10 |
| Red | 4 | 664.6 | 31 | 665 | 31 | 10 |
| Red Edge 1 | 5 | 704.1 | 15 | 703.8 | 16 | 20 |
| Red Edge 2 | 6 | 740.5 | 15 | 739.1 | 15 | 20 |
| Red Edge 3 | 7 | 782.8 | 20 | 779.7 | 20 | 20 |
| NIR (near-infrared) | 8 | 832.8 | 106 | 833 | 106 | 10 |
| Red Edge 4 | 8A | 864.7 | 21 | 864 | 22 | 20 |
| SWIR 1 (shortwave infrared) | 11 | 1613.7 | 91 | 1610.4 | 94 | 20 |
| SWIR 2 | 12 | 2202.4 | 175 | 2185.7 | 185 | 20 |

| Severity Class | Description | % Foliage Fire Affected | Number of Samples |
|---|---|---|---|
| Unburnt | Unburnt surface with green canopy | 0% canopy and understory burnt | 138 |
| Low | Burnt surface with unburnt canopy or partial canopy scorch | >50% green canopy; <50% canopy scorched | 63 |
| Moderate | Most or full canopy scorch | >50% canopy scorched; <50% canopy biomass consumed | 82 |
| High | Most or full canopy consumption | >50% canopy biomass consumed | 217 |
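
The class definitions above amount to a threshold rule on field observations. A minimal sketch, assuming a hypothetical `severity_class` function that takes the observed percentages for one plot; the table does not say how values of exactly 50% were assigned, so the strict `>` comparisons are an assumption:

```python
def severity_class(surface_burnt: bool, canopy_scorched_pct: float,
                   canopy_consumed_pct: float) -> str:
    """Simplified, hypothetical reading of the severity class definitions.

    surface_burnt: whether the surface/understory shows any fire effect
    canopy_scorched_pct: % of canopy scorched (consumed canopy counts as scorched)
    canopy_consumed_pct: % of canopy biomass consumed
    Strict '>' at the 50% thresholds is an assumption.
    """
    if canopy_consumed_pct > 50:
        return "High"
    if canopy_scorched_pct > 50:
        return "Moderate"
    if surface_burnt or canopy_scorched_pct > 0:
        return "Low"
    return "Unburnt"


# Plot counts per class, as listed in the table
SAMPLES = {"Unburnt": 138, "Low": 63, "Moderate": 82, "High": 217}
print(severity_class(True, 30, 0), sum(SAMPLES.values()))  # Low 500
```

Checking the most severe condition first keeps the rule unambiguous: a plot with most of its canopy consumed also has most of it scorched, and should still be labeled High.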

| Feature Type | S2-GF Model (Trained with GF Data) | S2 Model (Trained without GF Data) |
|---|---|---|
| Reflectance | Reflectance of each band of the pre-fire and post-fire Sentinel 2 images; reflectance of each band of the pre-fire and post-fire GF images | Reflectance of each band of the pre-fire and post-fire Sentinel 2 images |
| Spectral indexes | Spectral indexes of the pre-fire and post-fire Sentinel 2 images; spectral indexes of the pre-fire and post-fire GF images | Spectral indexes of the pre-fire and post-fire Sentinel 2 images |
| Additional data | DEM, aspect, slope, and vegetation type | DEM, aspect, slope, and vegetation type |
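
The two models differ only in whether GF-derived features are added, so the S2 feature set is a subset of the S2-GF set. The sketch below enumerates hypothetical feature names to make that nesting explicit. Two assumptions are labeled in the code: the GF panchromatic band is left out, and only the NDVI family is derived from GF imagery, because the GF-1 and GF-6 bands listed earlier include no SWIR band, which NBR and BSI both require.

```python
S2_BANDS = ["B2", "B3", "B4", "B5", "B6", "B7", "B8", "B8A", "B11", "B12"]
GF_BANDS = ["Blue", "Green", "Red", "NIR"]  # assumption: Pan band excluded
S2_INDICES = ["NBR", "NDVI", "BSI"]
GF_INDICES = ["NDVI"]                       # assumption: no SWIR, so no NBR/BSI
ANCILLARY = ["DEM", "aspect", "slope", "vegetation_type"]


def reflectance_features(sensor, bands):
    """One feature per band and per acquisition (pre-fire, post-fire)."""
    return [f"{sensor}_{date}_{band}" for date in ("pre", "post") for band in bands]


def index_features(sensor, indices):
    """Pre-fire, post-fire and differenced index, plus RdNBR for NBR."""
    names = []
    for index in indices:
        names += [f"{sensor}_pre{index}", f"{sensor}_post{index}", f"{sensor}_d{index}"]
        if index == "NBR":
            names.append(f"{sensor}_RdNBR")
    return names


def feature_names(with_gf):
    """Hypothetical feature list for the S2 model (False) or S2-GF model (True)."""
    names = reflectance_features("S2", S2_BANDS) + index_features("S2", S2_INDICES)
    if with_gf:
        names += reflectance_features("GF", GF_BANDS) + index_features("GF", GF_INDICES)
    return names + ANCILLARY


print(len(feature_names(False)), len(feature_names(True)))  # 34 45
```

Keeping the S2 list nested inside the S2-GF list is what makes the comparison controlled: the only difference between the two models is the GF-derived inputs.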

| Spectral Index | Formula | References |
|---|---|---|
| Pre-Fire Normalized Burn Ratio (preNBR) | | [18,19,20] |
| Post-Fire Normalized Burn Ratio (postNBR) | | [18,19,20] |
| Differenced Normalized Burn Ratio (dNBR) | | [18,19,20] |
| Relative Differenced Normalized Burn Ratio (RdNBR) | | [18,19,20] |
| Pre-Fire Normalized Difference Vegetation Index (preNDVI) | | [35] |
| Post-Fire Normalized Difference Vegetation Index (postNDVI) | | [35] |
| Differenced Normalized Difference Vegetation Index (dNDVI) | | [35] |
| Pre-Fire Bare Soil Index (preBSI) | | [36] |
| Post-Fire Bare Soil Index (postBSI) | | [36] |
| Differenced Bare Soil Index (dBSI) | | [36] |
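
The Formula column above is empty in this version. The sketch below uses the standard definitions from the cited literature: NBR = (NIR − SWIR)/(NIR + SWIR), NDVI = (NIR − Red)/(NIR + Red), BSI = ((SWIR + Red) − (NIR + Blue))/((SWIR + Red) + (NIR + Blue)), differenced indices as pre-fire minus post-fire, and RdNBR = dNBR/√|preNBR| in unscaled form. It is not necessarily the authors' exact formulation: the Sentinel 2 band assignments (for example B8 for NIR and B12 for SWIR in NBR, B11 for SWIR in BSI), the sign convention for dBSI, and the small floor that avoids division by zero in RdNBR are all assumptions.

```python
import numpy as np


def normalized_difference(a, b):
    """(a - b) / (a + b), returning 0 wherever a + b == 0."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    denom = a + b
    out = np.zeros_like(denom)
    np.divide(a - b, denom, out=out, where=denom != 0)
    return out


def nbr(nir, swir):
    """Normalized Burn Ratio (assumed Sentinel 2 bands: B8 NIR, B12 SWIR)."""
    return normalized_difference(nir, swir)


def ndvi(nir, red):
    """Normalized Difference Vegetation Index (Sentinel 2: B8, B4; GF: NIR, Red)."""
    return normalized_difference(nir, red)


def bsi(blue, red, nir, swir):
    """Bare Soil Index: ((SWIR + Red) - (NIR + Blue)) / ((SWIR + Red) + (NIR + Blue))."""
    return normalized_difference(np.add(swir, red), np.add(nir, blue))


def differenced(pre, post):
    """dNBR, dNDVI, dBSI as pre-fire minus post-fire (sign assumed for dBSI)."""
    return np.asarray(pre, dtype=float) - np.asarray(post, dtype=float)


def rdnbr(pre_nbr, post_nbr, floor=1e-3):
    """Unscaled RdNBR = dNBR / sqrt(|preNBR|); `floor` (assumed) avoids dividing by 0."""
    pre_nbr = np.asarray(pre_nbr, dtype=float)
    return differenced(pre_nbr, post_nbr) / np.sqrt(np.maximum(np.abs(pre_nbr), floor))


# Example pixel: healthy canopy before the fire, burnt afterwards
pre = nbr([0.30], [0.10])   # about 0.5
post = nbr([0.15], [0.25])  # about -0.25
print(differenced(pre, post), rdnbr(pre, post))
```

Miller and Thode's RdNBR is usually computed on NBR values scaled by 1000; with unscaled NBR, as here, the result differs from theirs only by that constant factor, which does not change the ranking of pixels.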

| Model | Predicted Class | Field Reference: Unburnt | Field Reference: Low | Field Reference: Moderate | Field Reference: High | Precision (%) | F1 Score (%) | Kappa |
|---|---|---|---|---|---|---|---|---|
| S2-GF model | Unburnt | 26 | 2 | 0 | 0 | 92.86 | 94.55 | 0.8697 |
| | Low | 1 | 8 | 2 | 0 | 72.73 | 72.73 | |
| | Moderate | 0 | 1 | 15 | 1 | 88.24 | 83.34 | |
| | High | 0 | 0 | 2 | 42 | 95.45 | 96.55 | |
| | Recall (%) | 96.30 | 72.73 | 78.95 | 97.67 | 91.00 | 91 | |
| S2 model | Unburnt | 24 | 3 | 0 | 0 | 88.89 | 88.89 | 0.7808 |
| | Low | 3 | 6 | 2 | 0 | 54.55 | 54.55 | |
| | Moderate | 0 | 1 | 13 | 1 | 86.67 | 76.47 | |
| | High | 0 | 1 | 4 | 42 | 89.36 | 93.33 | |
| | Recall (%) | 88.89 | 54.55 | 68.42 | 97.67 | 85.00 | 85 | |
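
Every accuracy figure in the table above follows from the two confusion matrices. The sketch below recomputes precision, recall, F1 (2TP/(2TP + FP + FN)), overall accuracy, and Cohen's kappa; `accuracy_report` is a hypothetical helper that treats rows as predicted classes and columns as field reference classes, matching the table layout:

```python
CLASSES = ["Unburnt", "Low", "Moderate", "High"]

# Rows: predicted class; columns: field reference class (order as in CLASSES)
S2_GF = [[26, 2, 0, 0],
         [1, 8, 2, 0],
         [0, 1, 15, 1],
         [0, 0, 2, 42]]
S2 = [[24, 3, 0, 0],
      [3, 6, 2, 0],
      [0, 1, 13, 1],
      [0, 1, 4, 42]]


def accuracy_report(cm):
    """Per-class precision, recall, F1 plus overall accuracy and Cohen's kappa."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    predicted = [sum(row) for row in cm]                             # row totals
    reference = [sum(cm[i][j] for i in range(n)) for j in range(n)]  # column totals
    hits = [cm[k][k] for k in range(n)]
    precision = [h / p for h, p in zip(hits, predicted)]
    recall = [h / r for h, r in zip(hits, reference)]
    # predicted + reference totals = 2TP + FP + FN for each class
    f1 = [2 * h / (p + r) for h, p, r in zip(hits, predicted, reference)]
    overall = sum(hits) / total
    # Chance agreement expected from the marginal totals
    expected = sum(p * r for p, r in zip(predicted, reference)) / total ** 2
    kappa = (overall - expected) / (1 - expected)
    return precision, recall, f1, overall, kappa


for name, cm in (("S2-GF", S2_GF), ("S2", S2)):
    precision, recall, f1, overall, kappa = accuracy_report(cm)
    print(name, f"OA={overall:.2%}", f"kappa={kappa:.4f}")
    for cls, p, r, f in zip(CLASSES, precision, recall, f1):
        print(f"  {cls:<8} P={p:.2%} R={r:.2%} F1={f:.2%}")
```

The recomputed overall accuracies (91% and 85%) and kappas (0.8697 and 0.7808) match the table. The one small difference is the S2-GF moderate-class F1: 30/36 gives 83.33%, while 83.34% results from combining the already-rounded precision (88.24%) and recall (78.95%).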

| Vegetation Type | Measure | Unburnt | Low | Moderate | High | Total |
|---|---|---|---|---|---|---|
| The whole study area | Area (ha) | 1017.62 | 430.63 | 597.74 | 2039.01 | 4085.00 |
| | Percentage (%) | 24.91 | 10.54 | 14.63 | 49.92 | 100.00 |
| *Pinus yunnanensis* | Area (ha) | 356.67 | 339.38 | 497.35 | 1294.33 | 2487.73 |
| | Percentage (%) | 14.34 | 13.64 | 19.99 | 52.03 | 100.00 |
| *Eucalyptus* | Area (ha) | 195.74 | 50.13 | 63.34 | 341.96 | 651.17 |
| | Percentage (%) | 30.06 | 7.70 | 9.73 | 52.51 | 100.00 |
| Other areas of the study area | Area (ha) | 465.21 | 41.12 | 37.05 | 402.72 | 946.10 |
| | Percentage (%) | 49.17 | 4.35 | 3.91 | 42.57 | 100.00 |
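
The whole-study-area row is the sum of the three land-cover rows, and each percentage is a class area divided by its row total. A short sketch of that arithmetic with the areas from the table (the `shares` helper is hypothetical):

```python
# Area (ha) per severity class, in the order Unburnt, Low, Moderate, High
AREAS_HA = {
    "Pinus yunnanensis": [356.67, 339.38, 497.35, 1294.33],
    "Eucalyptus": [195.74, 50.13, 63.34, 341.96],
    "Other areas": [465.21, 41.12, 37.05, 402.72],
}


def shares(row):
    """Return the row total and each class's share of it in percent."""
    total = sum(row)
    return total, [100 * a / total for a in row]


# Whole study area = column-wise sum over the land-cover rows
whole = [sum(col) for col in zip(*AREAS_HA.values())]
total, pct = shares(whole)
print(round(total, 2))             # 4085.0
print([round(p, 2) for p in pct])  # [24.91, 10.54, 14.63, 49.91]
for name, row in AREAS_HA.items():
    row_total, row_pct = shares(row)
    print(name, round(row_total, 2), [round(p, 2) for p in row_pct])
```

Rounding each share independently gives 49.91% rather than 49.92% for high severity over the whole study area (that row would then sum to 99.99%), and 3.92% rather than 3.91% for moderate severity in other areas, so the published percentages appear to have been adjusted to total exactly 100.00%.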

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, X.; Fan, J.; Zhou, J.; Gui, L.; Bi, Y. Mapping Fire Severity in Southwest China Using the Combination of Sentinel 2 and GF Series Satellite Images. Sensors 2023, 23, 2492. https://doi.org/10.3390/s23052492
