Abstract

Image super-resolution based on convolutional neural networks (CNNs) is an active topic in image processing. However, it still faces significant challenges in practical applications, and improving its performance on lightweight architectures is important for real-time super-resolution. In this paper, a joint algorithm combining a modified particle swarm optimization (SMCPSO) with the fast super-resolution convolutional neural network (FSRCNN) is proposed, together with a mutation mechanism for particle swarm optimization (PSO). Specifically, the SMCPSO algorithm was introduced to optimize the weights and biases of the CNN, and the aggregation degree of the particles was adjusted adaptively by the mutation mechanism to preserve the global search ability of the particles and the diversity of the population. The results showed that SMCPSO-FSRCNN achieved its most significant improvement, about 4.84% over the FSRCNN model, on the BSD100 data set at a scale factor of 2. In addition, a classification experiment on super-resolved chest X-ray images was conducted, and the results demonstrated that the reconstruction ability of this model could improve the classification accuracy by 13.46%; in particular, the precision and recall rate for COVID-19 were improved by 45.3% and 6.92%, respectively.

1. Introduction

Single-image super-resolution (SR) refers to the reconstruction of high-resolution (HR) images that are as realistic as possible from low-resolution (LR) images [1]. It has promising applications in medical imaging, remote sensing, visual surveillance, etc. [2,3,4]. Earlier SR approaches were based on interpolation [5] and degradation [6] models. Currently, learning-based methods [1,7] have received wide attention, among which deep learning models have shown powerful performance in image SR [8].

Convolutional neural networks (CNNs) are widely used in image SR models. Many CNN-based methods attempt to achieve better reconstruction performance by using deeper networks [9,10]. They have shown powerful performance in image SR; however, their high computing costs make them inconvenient for real-time problems. Dong et al. [11] proposed a lightweight model called FSRCNN, which performs comparably to SRCNN-EX [1] while being up to 40 times faster. Studies have further explored methods to improve the quality of SR images generated by FSRCNN. The FSRCNN model [11] is trained with stochastic gradient descent (SGD) [12]. However, the optimization problem for SR is non-convex and thus sensitive to the initial location. This property may cause traditional neural network models to easily fall into a local optimum [13,14]. Particle swarm optimization (PSO) [15] has become a commonly used optimization method for training neural networks because of its simple rules and high search speed [16,17]. Kennedy et al. [18] used PSO to optimize the weights of a feedforward neural network, proposing the first combination of PSO and a neural network. Dong et al. [14] presented a modified PSO combined with an information entropy function to optimize the weights and biases of a back propagation neural network. The results showed that the joint algorithm had better performance in terms of accuracy and stability. Tu et al. [13] proposed an evolutionary convolutional neural network, which uses ModPSO and the backpropagation algorithm to train convolutional neural networks and keep the models from falling into local minima. This is the most advanced attempt so far; however, that study did not optimize the particle swarm in terms of population diversity.

In order to further explore the effect of the PSO algorithm on CNN training and SR image quality, a CNN training method based on the PSO algorithm was constructed in this paper; that is, the PSO algorithm was used to optimize the CNN parameters. In addition, to counter the aggregation of particles in PSO, a mutation of particles with high similarity is proposed according to the cosine similarity between particles, with a mutation probability that decreases linearly with the number of iterations. The cosine similarity mutation strategy reinitializes the aggregated particles, which maintains a better spatial distribution of the particle swarm in the solution space. Finally, the model was used to perform SR on low-resolution chest X-ray (CXR) images and to analyze its impact on the diagnosis of pneumonia. The CXR image classification experiment showed that although the hybrid model was trained on a 91-image dataset, it could still super-resolve CXR images effectively and enhance the accuracy of their classification.

2. Related Work

2.1. Deep Learning for SR

Dong et al. [1] first proposed the use of a CNN model for image SR, called SRCNN. It is a lightweight model with only three layers, yet it demonstrates advanced restoration quality. It preprocesses the images using bicubic interpolation and then reconstructs SR images through the nonlinear mapping of a three-layer convolutional neural network.

At that time, SRCNN was superior to all other reconstruction methods, but its interpolation structure would lead to too much computation when processing large images [11,19]. To speed up the process, Dong et al. [11] proposed the fast super-resolution convolutional neural networks (FSRCNN) model, which is 40 times faster than the SRCNN-EX model. In the FSRCNN model, the mean square error (MSE) is used as the cost function, which is formulated as:

$$L(\theta) = \frac{1}{n}\sum_{i=1}^{n}\left\| F(Y_s^{i};\theta) - X^{i} \right\|_2^2$$

where $X^{i}$ and $Y_s^{i}$ are the $i$-th HR and LR training data pair, and $F(Y_s^{i};\theta)$ denotes the neural network output with the parameters $\theta$. The goal of the stochastic gradient descent algorithm is to drive $L(\theta)$ toward 0. Its iteration formula is:

$$\theta_{t+1} = \theta_{t} - \eta \nabla_{\theta} L(\theta_{t})$$

where $\eta$ represents the step size of each update, usually 0.01, 0.001, or 0.0001.
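The MSE cost and the SGD update described above can be illustrated with a small sketch. This is not the FSRCNN training code: the "network" is a hypothetical linear model on synthetic data, and the names `mse_loss`, `sgd_step`, `lr_batch`, and `hr_batch` are assumptions introduced only for illustration.

```python
import numpy as np

def mse_loss(pred, target):
    """Mean squared error between the model output and the target."""
    return float(np.mean((pred - target) ** 2))

def sgd_step(theta, grad, lr=0.001):
    """One update: move the parameters against the gradient by step size lr."""
    return theta - lr * grad

# Toy stand-in for a network: a linear map with 4 parameters (assumption).
rng = np.random.default_rng(0)
lr_batch = rng.normal(size=(64, 4))            # plays the role of LR inputs
true_theta = np.array([1.0, -2.0, 0.5, 3.0])   # hypothetical target parameters
hr_batch = lr_batch @ true_theta               # plays the role of HR targets

theta = np.zeros(4)
losses = []
for _ in range(200):
    losses.append(mse_loss(lr_batch @ theta, hr_batch))  # loss on all data
    idx = rng.choice(len(lr_batch), size=16, replace=False)  # random mini-batch
    xb, yb = lr_batch[idx], hr_batch[idx]
    grad = 2.0 * xb.T @ (xb @ theta - yb) / len(xb)      # gradient of batch MSE
    theta = sgd_step(theta, grad, lr=0.05)
```

The step size 0.05 is chosen only so that this toy problem converges quickly; the values quoted in the text (0.01, 0.001, 0.0001) are more typical for deep networks.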

2.2. PSO Based on Centroid Opposition-Based Learning

PSO is an interaction-based optimization algorithm imitating the foraging behavior of birds [15]. Each particle represents a group of weights and biases, and the optimal solution is obtained by iteratively moving the particles through the solution space. The particles update their positions under the guidance of two quantities: the individual optimal solution (pbest) and the global group optimal solution (gbest). The dynamical formulas of PSO are as follows:

$$v_i^{t+1} = \omega v_i^{t} + c_1 r_1 \left(pbest_i - x_i^{t}\right) + c_2 r_2 \left(gbest - x_i^{t}\right), \quad i = 1, \dots, N$$

$$x_i^{t+1} = x_i^{t} + v_i^{t+1}$$

where $N$ is the initial number of particles, $v_i^{t}$ and $x_i^{t}$ are the velocity and position of the $i$-th particle at the $t$-th iteration, respectively, $\omega$ denotes the inertia weight, which reflects the effect of the previous velocity on the current velocity, $c_1$ and $c_2$ are acceleration factors, usually two real constants, and $r_1$ and $r_2$ are random numbers from the interval (0,1).
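As a rough illustration of the velocity and position update described above, the following sketch runs standard PSO on a simple sphere function. The parameter values (`w=0.7`, `c1=c2=1.5`), the swarm size, and the test function are assumptions chosen for the example, not settings taken from this paper.

```python
import numpy as np

def pso_step(pos, vel, pbest, gbest, rng, w=0.7, c1=1.5, c2=1.5):
    """One PSO update: new velocity from inertia, pbest pull, and gbest pull."""
    r1 = rng.random(pos.shape)  # random numbers in [0, 1), one per coordinate
    r2 = rng.random(pos.shape)
    vel = w * vel + c1 * r1 * (pbest - pos) + c2 * r2 * (gbest - pos)
    return pos + vel, vel

def sphere(x):
    """Toy fitness: sum of squares, minimum 0 at the origin."""
    return np.sum(x ** 2, axis=-1)

rng = np.random.default_rng(0)
pos = rng.uniform(-5, 5, size=(20, 3))   # 20 particles in 3 dimensions
vel = np.zeros_like(pos)
pbest = pos.copy()
pbest_val = sphere(pbest)
initial_best = pbest_val.min()

for _ in range(100):
    gbest = pbest[np.argmin(pbest_val)].copy()
    pos, vel = pso_step(pos, vel, pbest, gbest, rng)
    val = sphere(pos)
    improved = val < pbest_val           # particles that beat their own record
    pbest[improved] = pos[improved]
    pbest_val[improved] = val[improved]

final_best = pbest_val.min()
```

Because `pbest` is only overwritten when a particle improves, the best recorded fitness can never get worse from one iteration to the next.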

Opposition-based learning (OBL) [20] has been shown to be an effective means of improving particle swarm optimization algorithms [21]. The central idea of OBL is to improve the optimization ability by searching a solution and its corresponding opposing solution simultaneously in the solution space. Centroid opposition-based learning (COBL) [22] makes use of the centroid of the swarm when calculating the position of opposing solutions and utilizes the swarm experience to improve the searching efficiency of the particle swarm. Assuming that $(X_1, \dots, X_n)$ is the location of n particles, the centroid is calculated as follows:

$$M = \frac{X_1 + X_2 + \dots + X_n}{n}$$

The opposite solution based on the centroid of the swarm can be formulated as:

$$\breve{X}_i = 2M - X_i$$

The opposite solution exists in a search space with dynamic boundaries, which is expressed as:

$$a_j = \min_i\left(x_{i,j}\right), \quad b_j = \max_i\left(x_{i,j}\right)$$

If the opposite solution exceeds the dynamic boundary, the opposite solution is recalculated according to the following equation:

$$\breve{x}_{i,j} = \begin{cases} a_j + \mathrm{rand}(0,1)\left(M_j - a_j\right), & \breve{x}_{i,j} < a_j \\ M_j + \mathrm{rand}(0,1)\left(b_j - M_j\right), & \breve{x}_{i,j} > b_j \end{cases}$$

By comparing the current solution with the opposite solution, the better one can be selected.
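A minimal sketch of centroid opposition follows, assuming the reflection through the swarm centroid and a simple random repair for coordinates that leave the per-dimension minimum and maximum of the swarm. The function names and the specific repair rule are assumptions for illustration; the paper's exact boundary handling may differ.

```python
import numpy as np

def centroid_opposition(pos, rng):
    """Reflect every particle through the swarm centroid, then repair
    coordinates that fall outside the swarm's current min/max per dimension."""
    centroid = pos.mean(axis=0)
    opposite = 2.0 * centroid - pos
    lower = pos.min(axis=0)               # dynamic lower bound per dimension
    upper = pos.max(axis=0)               # dynamic upper bound per dimension
    r = rng.random(pos.shape)
    below = opposite < lower
    above = opposite > upper
    # Assumed repair rule: resample between the violated bound and the centroid.
    opposite = np.where(below, lower + r * (centroid - lower), opposite)
    opposite = np.where(above, centroid + r * (upper - centroid), opposite)
    return opposite

def select_better(pos, opposite, fitness):
    """Keep, for each particle, whichever of the pair has the lower fitness."""
    keep_opposite = fitness(opposite) < fitness(pos)
    return np.where(keep_opposite[:, None], opposite, pos)

rng = np.random.default_rng(1)
swarm = rng.uniform(-3, 7, size=(10, 4))
opp = centroid_opposition(swarm, rng)
sphere = lambda x: np.sum(x ** 2, axis=1)   # toy fitness (assumption)
chosen = select_better(swarm, opp, sphere)
```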

3. Methods

3.1. Cosine Similarity Variation Strategy

In the late phase of COBL, the algorithm tends to become trapped in a local optimum due to extreme population aggregation. Cosine similarity is mainly used to measure the difference between two individuals, and here it is used to quantitatively describe the aggregation degree of the particles and of the population, according to the formulas:

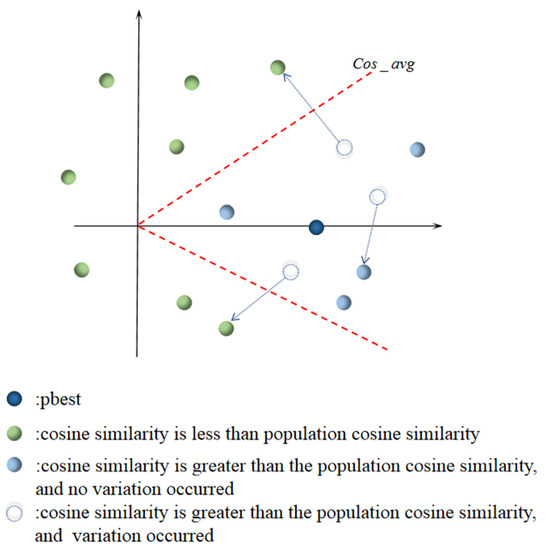

where gbest represents the global optimal solution of the current iteration, and $x_i$ denotes the location of the $i$-th particle. In order to improve the search efficiency, some particles, whose cosine similarity is greater than the average cosine similarity of the population, are re-initialized to maintain the diversity of the particles. The average cosine similarity was calculated by the cosine similarity between each particle and pbest, and the mutation region was defined according to the average cosine similarity, as indicated by the red dotted line in Figure 1. The region whose cosine similarity to pbest is greater than the average similarity is the mutation region, and the particles in this region have a certain probability to be randomly initialized. The region whose cosine similarity to pbest is less than the average similarity is defined as the non-mutation region, and the particles in this region continue the iterative optimization.
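The split into a mutation region and a non-mutation region can be sketched as follows, using the standard definition of cosine similarity (dot product divided by the product of norms). The function names and the four example particles are assumptions for illustration, and the reference vector stands for the best solution that the particles are compared against.

```python
import numpy as np

def cosine_similarity(particles, reference):
    """Cosine similarity between each particle (row) and a reference vector."""
    num = particles @ reference
    den = np.linalg.norm(particles, axis=1) * np.linalg.norm(reference)
    return num / np.maximum(den, 1e-12)   # guard against zero-length vectors

def mutation_region(particles, reference):
    """Mark particles whose similarity exceeds the population average."""
    sims = cosine_similarity(particles, reference)
    return sims > sims.mean(), sims

reference = np.array([1.0, 0.0])
particles = np.array([[1.0, 0.0],    # same direction as the reference
                      [1.0, 1.0],    # 45 degrees away
                      [0.0, 1.0],    # orthogonal
                      [-1.0, 0.0]])  # opposite direction
mask, sims = mutation_region(particles, reference)
```

In this toy example the similarities are 1, about 0.707, 0, and -1, so the average is about 0.177 and only the first two particles fall in the mutation region.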

Figure 1.

Schematic diagram of the cosine similarity mutation strategy. The red dotted line represents the average cosine similarity of the population, and the dotted arrow shows one possible position of the particle after the variation.

In addition, the cosine similarity of the population is affected by some extreme particles, which may lead to a higher mutation rate. However, a higher mutation rate may be detrimental to the later convergence of the algorithm. To address this issue, a mutation factor is introduced, which reduces the probability of mutation linearly from 50% to 5% as the number of iterations increases. The mutation factor can be defined as:

$$p(t) = p_{\mathrm{init}} - \left(p_{\mathrm{init}} - p_{\mathrm{final}}\right)\frac{t}{T}$$

where $p_{\mathrm{init}}$ and $p_{\mathrm{final}}$ represent the initial and final mutation probability, respectively, $t$ indicates the number of current iterations, and $T$ denotes the limit number of iterations.
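A small sketch of a linearly decreasing mutation probability and its use is given below, assuming the 50% and 5% endpoints stated in the text. The helper names, the uniform re-initialization range, and the example swarm are assumptions for illustration only.

```python
import numpy as np

def mutation_probability(t, t_max, p_init=0.5, p_final=0.05):
    """Linear decay of the mutation probability from p_init to p_final."""
    return p_init - (p_init - p_final) * t / t_max

def mutate(particles, in_region, prob, low, high, rng):
    """Re-initialize particles that are in the mutation region AND whose
    random draw falls below the current mutation probability."""
    draw = rng.random(len(particles)) < prob
    chosen = in_region & draw
    out = particles.copy()
    n_new = int(chosen.sum())
    out[chosen] = rng.uniform(low, high, size=(n_new, particles.shape[1]))
    return out, chosen

rng = np.random.default_rng(2)
swarm = rng.uniform(-1, 1, size=(6, 3))
region = np.array([True, True, True, False, False, False])  # hypothetical mask
p_mid = mutation_probability(50, 100)
mutated, chosen = mutate(swarm, region, p_mid, -1.0, 1.0, rng)
```

Particles outside the mutation region are never touched, so late in the run, when the probability is small, most of the swarm keeps refining its current positions.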

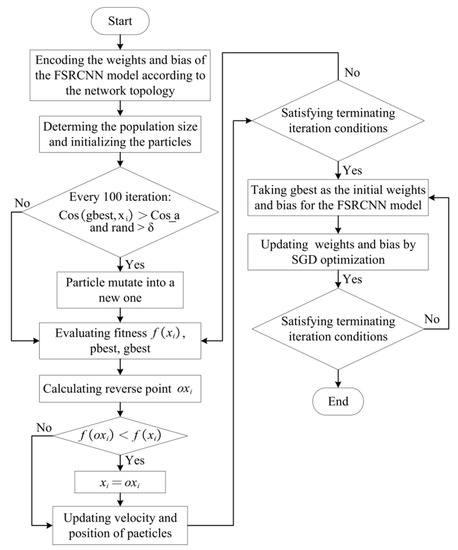

3.2. FSRCNN Model Based on SMCPSO

In our implementation, we utilized the SMCPSO method to initialize the weights and biases of the FSRCNN model. The MSE is defined as the fitness function of SMCPSO, and the dimension of the particles is the number of parameters to be learned in the FSRCNN network, so that each dimension of a particle corresponds to one weight or bias of the model. Figure 2 illustrates the flowchart of the joint algorithm. The number of particles was set to 50, and each particle represented a set of possible weights and biases of the FSRCNN model. The number of iterations was set to 10,000. Every 100 iterations, each particle whose cosine similarity to the optimal particle was greater than the average cosine similarity, and whose random draw fell below the mutation factor, was re-initialized, consistent with the mutation region defined in Section 3.1. In each iteration, the opposite particle of every particle is also evaluated, and if it offers a better solution, the particle is replaced by its opposite particle. When the iteration stop condition is reached, the particle swarm training ends. The value of each dimension of the optimal particle is then assigned to the corresponding weight or bias of the FSRCNN model, and the SGD algorithm is used to further optimize the weights and biases until training is completed. Because the SGD algorithm is greatly affected by the initial position, PSO is used to search for suitable weights and biases of the CNN, which serve as the initial parameters of the SGD algorithm. Higher accuracy can be achieved through this joint training method.
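The two-stage idea (swarm search for an initial parameter vector, then gradient-based refinement) can be sketched on a toy problem. This is only an illustration under stated assumptions: plain PSO stands in for SMCPSO (no opposition or mutation step), full-batch gradient descent stands in for SGD, and a one-layer linear model with hypothetical shapes stands in for FSRCNN.

```python
import numpy as np

def unflatten(particle, shapes):
    """Split a flat particle vector into arrays with the given shapes."""
    out, start = [], 0
    for shape in shapes:
        size = int(np.prod(shape))
        out.append(particle[start:start + size].reshape(shape))
        start += size
    return out

def fitness(particle, shapes, x, y):
    """MSE of the toy model whose weights and bias are stored in the particle."""
    w, b = unflatten(particle, shapes)
    return float(np.mean((x @ w + b - y) ** 2))

rng = np.random.default_rng(0)
x = rng.normal(size=(128, 3))
y = x @ np.array([[2.0], [-1.0], [0.5]]) + 0.3   # synthetic targets
shapes = [(3, 1), (1,)]                          # weight matrix and bias
dim = sum(int(np.prod(s)) for s in shapes)       # particle dimension = 4

# Stage 1: swarm search over flat parameter vectors (50 particles).
swarm = rng.uniform(-1, 1, size=(50, dim))
vel = np.zeros_like(swarm)
pbest = swarm.copy()
pbest_fit = np.array([fitness(p, shapes, x, y) for p in swarm])
init_loss = pbest_fit.min()
for _ in range(50):
    gbest = pbest[np.argmin(pbest_fit)].copy()
    r1, r2 = rng.random(swarm.shape), rng.random(swarm.shape)
    vel = 0.7 * vel + 1.5 * r1 * (pbest - swarm) + 1.5 * r2 * (gbest - swarm)
    swarm = swarm + vel
    fit = np.array([fitness(p, shapes, x, y) for p in swarm])
    better = fit < pbest_fit
    pbest[better], pbest_fit[better] = swarm[better], fit[better]
theta = pbest[np.argmin(pbest_fit)].copy()       # best particle = initial weights
pso_loss = fitness(theta, shapes, x, y)

# Stage 2: gradient refinement starting from the best particle.
for _ in range(200):
    w, b = unflatten(theta, shapes)
    err = x @ w + b - y
    grad_w = 2.0 * x.T @ err / len(x)
    grad_b = 2.0 * err.mean(axis=0)
    theta = theta - 0.05 * np.concatenate([grad_w.ravel(), grad_b.ravel()])
final_loss = fitness(theta, shapes, x, y)
```

The flat-vector layout is the key design choice: it lets the swarm treat all weights and biases as one point in the search space, while `unflatten` restores the per-layer arrays that the model needs.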

Figure 2.

Flowchart of SMCPSO-based CNNs.

3.3. Classification of Pneumonia

Deep convolutional neural networks are increasingly used in medical diagnosis. ResNet34 [23] adopts a residual network structure to achieve a good balance between classification accuracy and network complexity. Therefore, ResNet34 [23] was selected as the diagnostic classifier for pneumonia. Since the pneumonia data set publicly available online is not large, transfer learning was used to train the model. ResNet34 [23] was initialized with ImageNet-pretrained weights, and the fully connected layer was modified to fit the four categories of the experimental data set.

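Five indexes, i.e., accuracy, precision, sensitivity, F1 score, and specificity [24], were used to evaluate the classification results of ResNet34 [23]. The calculation formulas are as follows:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

$$\mathrm{Sensitivity} = \frac{TP}{TP + FN}$$

$$\mathrm{F1} = \frac{2 \times \mathrm{Precision} \times \mathrm{Sensitivity}}{\mathrm{Precision} + \mathrm{Sensitivity}}$$

$$\mathrm{Specificity} = \frac{TN}{TN + FP}$$

where $TP$, $TN$, $FP$, and $FN$ denote the numbers of true positives, true negatives, false positives, and false negatives for a given class, respectively.

We treated COVID-19, Lung Opacity, Normal, and Viral Pneumonia as a four-class problem.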
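For a multi-class problem, the five indexes are usually computed per class in a one-vs-rest fashion from the confusion matrix. The sketch below uses the standard definitions; the function name and the small two-class matrix are made up for illustration and are not results from this paper.

```python
import numpy as np

def per_class_metrics(conf):
    """One-vs-rest metrics from a confusion matrix (rows: true, cols: predicted)."""
    conf = np.asarray(conf, dtype=float)
    tp = np.diag(conf)
    fn = conf.sum(axis=1) - tp          # true class missed
    fp = conf.sum(axis=0) - tp          # other classes predicted as this class
    tn = conf.sum() - tp - fn - fp

    def safe_div(a, b):
        # Return 0 where the denominator is 0 (e.g., a class never predicted).
        return np.divide(a, b, out=np.zeros_like(a), where=b > 0)

    precision = safe_div(tp, tp + fp)
    sensitivity = safe_div(tp, tp + fn)
    return {
        "accuracy": safe_div(tp + tn, tp + tn + fp + fn),
        "precision": precision,
        "sensitivity": sensitivity,
        "f1": safe_div(2 * precision * sensitivity, precision + sensitivity),
        "specificity": safe_div(tn, tn + fp),
    }

# Hypothetical 2-class confusion matrix, for illustration only.
metrics = per_class_metrics([[8, 2],
                             [1, 9]])
```

The zero-denominator guard matters in practice: if a class is never predicted, its precision would otherwise be undefined.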

4. Experiments and Results

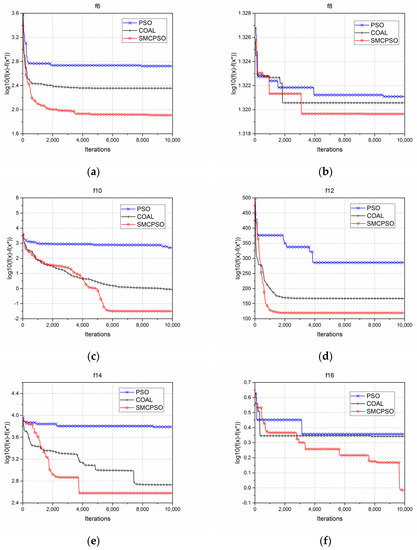

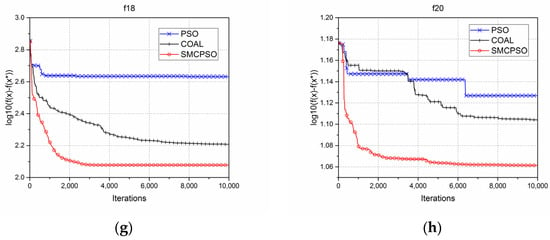

4.1. Improved Particle Swarm Optimization

To evaluate the search capability of the proposed SMCPSO algorithm, 15 multimodal test functions (F6–F20), recommended by the IEEE Congress on Evolutionary Computation (CEC) 2013 [25], were used to test the algorithm. The optimal solutions of the F6–F20 functions are −900, −800, −700, −600, −500, −400, −300, −200, −100, 100, 200, 300, 400, 500, 600, respectively. In the experiment, the population size was N = 40, the maximum number of function evaluations was 10,000, and the problem dimension was D = 30. The standard PSO, COBL, and SMCPSO algorithms were each run in 20 independent tests. Table 1 records the best value, worst value, mean value, standard deviation, mean absolute error, and time of each algorithm. The results in Table 1 show that SMCPSO could find better solutions for the F6–F20 multi-peak test functions. Especially for the F6, F10, F14, and F19 functions, the optimization of PSO and COBL was not satisfactory, but SMCPSO found a solution extraordinarily close to the global optimal solution.

Table 1.

Statistical summary of the best value, worst value, average value, standard deviation, mean absolute error, and search time for the PSO, COBL, and SMCPSO algorithms in relation to 15 multimodal test functions (F6–F20) recommended by the IEEE Congress on Evolutionary Computation (CEC) 2013 [25] (the optimal solutions of the F6–F20 functions are −900, −800, −700, −600, −500, −400, −300, −200, −100, 100, 200, 300, 400, 500, 600, respectively).

As shown in Figure 3, SMCPSO demonstrated improved searching ability for most test functions, with only a slightly lower ability than COBL in the initial stage. This is because, in the early stage of the SMCPSO search, the particles with high cosine similarity to the pbest were mutated during the optimization process, which slowed convergence early on; however, this made the algorithm less likely to be trapped by a local optimal solution. As the number of function evaluations increased, the SMCPSO algorithm showed a better global searching ability than the standard PSO and COBL algorithms in most multimodal optimization problems.

Figure 3.

Comparison of the results for PSO, COBL, and SMCPSO in relation to multi-peak test functions. (The plotted quantity is the logarithm of the error between the optimal solution of the current iteration and the actual optimal solution of the function; the smaller the error is, the better the algorithm performance is.) (a) Errors between the optimal value of each iteration and the actual optimal value of the f6 multi-peak test function by the standard PSO, COBL, and SMCPSO methods, respectively; similarly, this is shown for (b) f8, (c) f10, (d) f12, (e) f14, (f) f16, (g) f18, (h) f20.

4.2. FSRCNN Model Based on SMCPSO

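Consistent with the work of SRCNN and FSRCNN, a 91-image dataset [26] was used for training, and Set5 [27], Set14 [28], BSD100 [29], and Urban100 [30] were used for testing. The peak signal-to-noise ratio (PSNR) [31] and the structural similarity index (SSIM) [32] were employed to evaluate the quality of the images.

$$\mathrm{PSNR} = 10 \log_{10}\left(\frac{\mathrm{MAX}^2}{\mathrm{MSE}}\right)$$

where $\mathrm{MAX}$ is the maximum possible pixel value and, for images of size $m \times n$,

$$\mathrm{MSE} = \frac{1}{mn}\sum_{i=1}^{m}\sum_{j=1}^{n}\left[X(i,j) - Y(i,j)\right]^2$$

$$\mathrm{SSIM}(X,Y) = \frac{\left(2\mu_X\mu_Y + C_1\right)\left(2\sigma_{XY} + C_2\right)}{\left(\mu_X^2 + \mu_Y^2 + C_1\right)\left(\sigma_X^2 + \sigma_Y^2 + C_2\right)}$$

where $\mu_X$ and $\mu_Y$ represent the mean values of images X and Y, respectively, and were used for the estimation of image brightness; $\sigma_X$ and $\sigma_Y$ denote the standard deviations of images X and Y, respectively, and were used for the estimation of contrast; $\sigma_{XY}$ represents the covariance of images X and Y, and was used for the measurement of structural similarity. $C_1$ and $C_2$ are constants that prevent the denominator from being 0.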
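Both metrics can be sketched in a few lines of NumPy. Note the assumptions: 8-bit images (`max_val=255`), the commonly used constants `k1=0.01` and `k2=0.03`, and a single global window for SSIM. Reference SSIM implementations average the index over local windows, so this global version is a simplification for illustration rather than a drop-in replacement.

```python
import numpy as np

def psnr(x, y, max_val=255.0):
    """Peak signal-to-noise ratio in dB between two images of equal shape."""
    mse = np.mean((x.astype(float) - y.astype(float)) ** 2)
    if mse == 0:
        return float("inf")             # identical images
    return float(10.0 * np.log10(max_val ** 2 / mse))

def global_ssim(x, y, max_val=255.0, k1=0.01, k2=0.03):
    """SSIM computed once over the whole image (simplified, no local windows)."""
    x = x.astype(float)
    y = y.astype(float)
    c1, c2 = (k1 * max_val) ** 2, (k2 * max_val) ** 2   # stabilizing constants
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov = ((x - mu_x) * (y - mu_y)).mean()
    num = (2 * mu_x * mu_y + c1) * (2 * cov + c2)
    den = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(num / den)

rng = np.random.default_rng(0)
img = rng.integers(0, 200, size=(32, 32)).astype(float)   # synthetic image
shifted = img + 1.0                                       # every pixel off by 1
psnr_shift = psnr(img, shifted)
ssim_same = global_ssim(img, img)
```

With every pixel off by exactly one gray level, the MSE is 1, so the PSNR equals 20·log10(255), about 48.13 dB.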

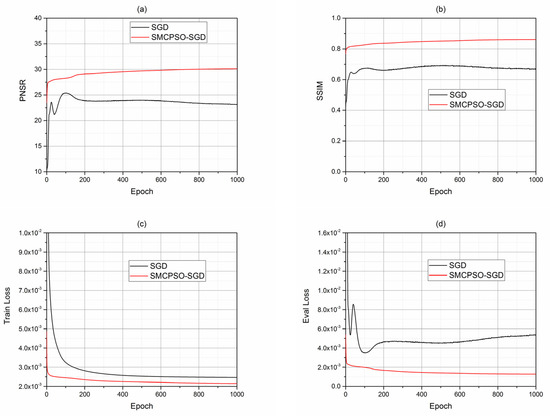

SMCPSO was used to optimize FSRCNN, and the result was compared with SGD-only optimization on Set5 [27]. In Figure 4a,b, compared with the SGD method, the images generated by the SMCPSO-SGD method show better performance in terms of the PSNR [31] and the SSIM [32]. Moreover, the improvement of PSNR and SSIM with SMCPSO-SGD training was relatively stable.

Figure 4.

Comparison of SGD-alone training and SMCPSO-SGD combined training with Set5. In (a,b), PSNR and SSIM of images generated during SGD and SMCPSO-SGD training are compared. The red curve is smoother than the black curve, and the PSNR and SSIM of the images are higher in each epoch, indicating better quality of the generated image. In (c,d), the train loss and eval loss of the red curve are always lower, and the black curve declines steadily in (c) but fluctuates greatly in (d), while the red curve declines steadily all the time, indicating that SMCPSO helps SGD to train better.

In Figure 4c, the training loss function of the SMCPSO-SGD method converges fast and shows a small error; in Figure 4d, the eval loss function of the SMCPSO-SGD method declines stably and shows a lower error. These results showed that SMCPSO can help the SGD algorithm find better solutions faster.

To verify the universality of the algorithm, image quality was evaluated using four general test sets. Table 2 presents the test results of SMCPSO-SGD on the general test sets Set5 [27], Set14 [28], BSD100 [29], and Urban100 [30]. The results indicated that the model trained with SMCPSO performed best in terms of PSNR and SSIM at all scales. With the BSD100 dataset, when the scale factor was 2, the improvement of the SMCPSO-SGD model was the most significant, about 1.54 dB, compared with the SGD model. This suggests that the SMCPSO optimization algorithm can help models generate more realistic images.

Table 2.

PSNR and SSIM of the bicubic, SGD, and SMCPSO-SGD models with the test sets Set5 [27], Set14 [28], BSD100 [29], and Urban100 [30].

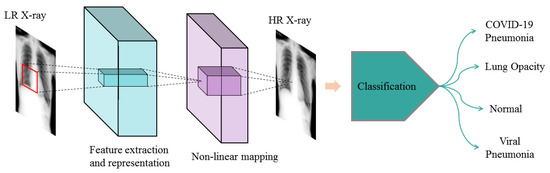

4.3. Classification Evaluation

To confirm the effectiveness of the generated image details, we conducted an experiment on chest X-ray (CXR) SR image classification. The data set we used was from Kaggle (available on the website: https://www.kaggle.com/datasets/tawsifurrahman/covid19-radiography-database (accessed on 19 March 2022)) [24]. It has a total of 21,165 CXR images, divided into four categories. The “COVID-19” and “Viral Pneumonia” classes were augmented to balance the training set. Table 3 summarizes the number of images per class used for training, validation, and testing. CXR LR images for each category were obtained from CXR HR images by down-sampling. Then, the CXR LR images, CXR SR images, and CXR HR images were fed into the ResNet34 [23] classification network. The implementation scheme is shown in Figure 5.

Table 3.

Number of images per class.

Figure 5.

Pneumonia diagnosis flow diagram. (The SMCPSO-SGD network was first used to convert LR X-rays into SR X-rays, then ResNet34 was used to classify the SR X-ray images).

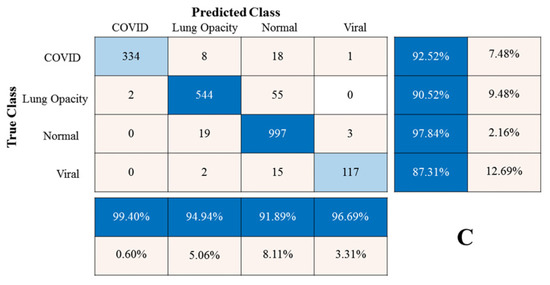

In order to evaluate the reliability of the restored details, the ResNet34 [23] classification network was used to classify LR CXR images, SR CXR images, and HR CXR images. Five performance indicators, i.e., accuracy, precision, sensitivity, F1 score, and specificity [24], were used to evaluate the classification results. Table 4 shows the evaluation results.

Table 4.

Comparison of HR, LR, and SR image classification results.

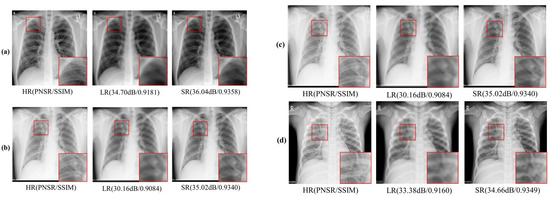

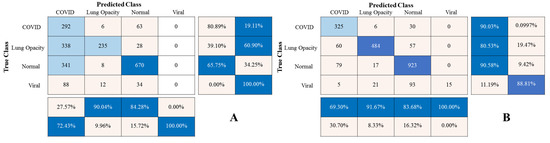

Figure 6 displays the CXR HR, LR, and SR images; the CXR SR image was recovered from the CXR LR image using the SMCPSO-SGD model. Images processed by SMCPSO-SGD looked visually better and achieved higher PSNR and SSIM scores. Although the given CXR LR images had lost a large amount of valid information, this model could help recover a better visual effect. Figure 7 shows the confusion matrices of CXR HR, LR, and SR image classification for subsequent diagnosis using the ResNet34 model. It was found that the CXR SR images improved the diagnostic accuracy of COVID-19, lung opacity, normal and viral pneumonia from 80.89% to 90.03%, 39.10% to 80.53%, 65.75% to 90.58%, and 0.00% to 11.09%, respectively.

Figure 6.

Examples of CXR HR, LR, and SR images for the COVID-19 category (a), Lung Opacity category (b), Normal category (c), Viral Pneumonia category (d).

Figure 7.

Classification confusion matrices for the three kinds of images. (The vertical axis represents the true category, the horizontal axis represents the predicted category, the ratio matrix on the right represents the accuracy of each of the four categories, and the lower ratio matrix represents the recall rate of each of the four categories.) (A) Classification results for the CXR LR images. Since the CXR LR images were obtained from CXR HR images by down-sampling, there was a loss of information, resulting in the worst classification. (B) Classification results for the CXR SR images. The SMCPSO-SGD model was used to process the CXR LR images, and the details of the CXR LR images could be restored. The generated CXR SR images could effectively improve the number of correct classifications for the four categories. (C) Classification results for the CXR HR images. The CXR HR images are the original images; therefore, it is important to classify them correctly.

In order to further evaluate the comprehensive performance of the algorithm, the five indexes of accuracy, precision, sensitivity, F1 scores, and specificity were used to evaluate the classification results. As can be seen in Table 4, the CXR LR image classification accuracy was the lowest, indicating that the LR images contained very little useful information conducive to classification, while the SMCPSO-SGD model led to improvement in five evaluation indicators, increasing the CXR LR image classification accuracy to values close to those obtained with CXR HR images. The results revealed that the model could recover the images’ real and useful details well, which is beneficial to the diagnosis of pneumonia.

5. Conclusions

A training method for convolutional neural networks based on an improved particle swarm optimization algorithm was proposed. The experiments showed that the joint training method could improve the efficiency and accuracy of the model. A mutation strategy was proposed and shown to prevent premature convergence and improve the optimization ability of the particle swarm optimization algorithm. Moreover, the chest X-ray image classification experiment showed that the proposed model can reconstruct useful information for pneumonia recognition.

Author Contributions

Conceptualization, C.Z. and A.X.; methodology, C.Z.; software, C.Z.; validation, C.Z. and A.X.; formal analysis, C.Z.; investigation, A.X.; resources, A.X.; data curation, C.Z.; writing – original draft preparation, C.Z. and A.X.; writing – review and editing, A.X.; visualization, C.Z. and A.X.; supervision, A.X.; project administration, A.X.; funding acquisition, A.X. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data is not available due to privacy restrictions.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Dong, C.; Loy, C.C.; He, K.; Tang, X. Learning a Deep Convolutional Network for Image Super-Resolution. In Proceedings of the European Conference on Computer Vision (ECCV), Zurich, Switzerland, 6–12 September 2014; pp. 184–199.

- Huang, J.; Wang, L.; Qin, J.; Chen, Y.; Cheng, X.; Zhu, Y. Super-Resolution of Intravoxel Incoherent Motion Imaging Based on Multisimilarity. IEEE Sens. J. 2020, 20, 10963–10973.

- Dong, R.; Zhang, L.; Fu, H. RRSGAN: Reference-Based Super-Resolution for Remote Sensing Image. IEEE T. Geosci. Remote 2022, 60, 1–17.

- Ha, Y.; Tian, J.; Miao, Q.; Yang, Q.; Guo, J.; Jiang, R. Part-Based Enhanced Super Resolution Network for Low-Resolution Person Re-Identification. IEEE Access 2020, 8, 57594–57605.

- Li, X.; Orchard, M.T. New Edge Directed Interpolation. In Proceedings of the International Conference on Image Processing (ICIP), Vancouver, BC, Canada, 10–13 September 2000; pp. 311–314.

- Capel, D.; Zisserman, A. Super-Resolution Enhancement of Text Image Sequences. In Proceedings of the 15th International Conference on Pattern Recognition (ICPR), Barcelona, Spain, 3–7 September 2000; pp. 311–314.

- Huang, Y.; Jiang, Z.; Lan, R.; Zhang, S.; Pi, K. Infrared Image Super-Resolution via Transfer Learning and PSRGAN. IEEE Signal Proc. Let. 2021, 28, 982–986.

- Shen, H.; Hou, B.; Wen, Z.; Jiao, L. Structural-Correlated Self-Examples Based Super-Resolution of Single Remote Sensing Image. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 3209–3223.

- Hu, J.; Li, T.; Zhao, M.; Ning, J. Hyperspectral Image Super-Resolution via Deep Structure and Texture Interfusion. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 8665–8678.

- Shang, C.; Li, X.; Foody, G.M.; Du, Y.; Ling, F. Super-Resolution Land Cover Mapping Using a Generative Adversarial Network. IEEE Geosci. Remote Sens. 2022, 19, 1–5.

- Dong, C.; Loy, C.C.; Tang, X. Accelerating the Super-Resolution Convolutional Neural Network. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 8–16 October 2016; pp. 391–407.

- Ruder, S. An Overview of Gradient Descent Optimization Algorithms; National University of Ireland Galway: Galway, Ireland, 2017.

- Tu, S.; Waqas, M.; Shah, Z.; Rehman, O.; Yang, Z.; Koubaa, A.; Rehman, S.U. ModPSO-CNN: An Evolutionary Convolution Neural Network with Application to Visual Recognition. Soft Comput. 2021, 25, 2165–2176.

- Dong, X.; Lian, Y.; Liu, Y. Small and Multi-Peak Nonlinear Time Series Forecasting Using a Hybrid Back Propagation Neural Network. Inform. Sci. 2017, 424, 39–54.

- Kennedy, J.; Eberhart, R. Particle Swarm Optimization. In Proceedings of the IEEE International Conference on Neural Networks (ICNN), Perth, WA, Australia, 27–30 November 1995; pp. 1942–1948.

- Peng, Y.; Deng, W.; Wu, W.; Luo, Z.; Huang, J. Hybrid Modeling Routine for Metal-Oxide TFTs Based on Particle Swarm Optimization and Artificial Neural Network. Electron. Lett. 2020, 56, 453–456.

- Zhang, L.; Zhao, L. High-Quality Face Image Generation Using Particle Swarm Optimization-Based Generative Adversarial Networks. Future Gener. Comp. Syst. 2021, 122, 98–104.

- Kennedy, J.; Eberhart, R.; Shi, Y. Swarm Intelligence; Morgan Kaufmann Publishers: San Francisco, CA, USA, 2001.

- Kim, J.; Lee, J.K.; Lee, K.M. Accurate Image Super-Resolution Using Very Deep Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 1646–1654.

- Tizhoosh, H.R. Opposition-Based Learning: A New Scheme for Machine Intelligence. In Proceedings of the International Conference on Computational Intelligence for Modelling, Control and Automation and International Conference on Intelligent Agents, Web Technologies and Internet Commerce (CIMCA), Vienna, Austria, 28–30 November 2005; pp. 695–701.

- Xu, Q.; Wang, L.; Wang, N.; Hei, X.; Zhao, L. A Review of Opposition-Based Learning from 2005 to 2012. Eng. Appl. Artif. Intell. 2014, 29, 1–12.

- Rahnamayan, S.; Jesuthasan, J.; Bourennani, F.; Salehinejad, H.; Naterer, G. Computing Opposition by Involving Entire Population. In Proceedings of the IEEE Congress on Evolutionary Computation (CEC), Beijing, China, 6–11 July 2014; pp. 1800–1807.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 1026–1034.

- Chowdhury, M.E.H.; Khandakar, A.; Mazhar, R.; Rahman, T.; Mahbub, Z.B.; Islam, K.R.; Khan, M.S.; Iqbal, A.; Al-Emadi, N.; Islam, M.T.; et al. Can AI Help in Screening Viral and COVID-19 Pneumonia? IEEE Access 2020, 8, 132665–132676.

- Liang, J.J.; Qu, B.Y.; Suganthan, P.N.; Hernández-Díaz, A. Problem Definitions and Evaluation Criteria for the CEC 2013 Special Session on Real-Parameter Optimization. Tech. Rep. 2013, 201212, 281–295.

- Yang, J.; Wright, J.; Huang, T.S.; Ma, Y. Image Super-Resolution Via Sparse Representation. IEEE Trans. Image Process. 2010, 19, 2861–2873.

- Bevilacqua, M.; Roumy, A.; Guillemot, C.; Alberi-Morel, M.L. Low-Complexity Single-Image Super-Resolution Based on Nonnegative Neighbor Embedding. In Proceedings of the 23rd British Machine Vision Conference (BMVC), Guildford, UK, 3 September 2012; pp. 135.1–135.10.

- Zeyde, R.; Elad, M.; Protter, M. On Single Image Scale-Up Using Sparse-Representations. In Proceedings of the International Conference on Curves and Surfaces, Avignon, France, 24–30 June 2010; pp. 711–730.

- Martin, D.; Fowlkes, C.; Tal, D.; Malik, J. A Database of Human Segmented Natural Images and Its Application to Evaluating Segmentation Algorithms and Measuring Ecological Statistics. In Proceedings of the Eighth IEEE International Conference on Computer Vision (ICCV), Vancouver, BC, Canada, 7–14 July 2001; pp. 416–423.

- Huang, J.B.; Singh, A.; Ahuja, N. Single Image Super-Resolution from Transformed Self-Exemplars. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 8–10 June 2015; pp. 5197–5206.

- Horé, A.; Ziou, D. Image Quality Metrics: PSNR vs. SSIM. In Proceedings of the International Conference on Pattern Recognition (ICPR), Istanbul, Turkey, 23–26 August 2010; pp. 2366–2369.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image Quality Assessment: From Error Visibility to Structural Similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).