Abstract

In recent years, fractional calculus has gained popularity in various engineering applications. Often, the mathematical model describing a given phenomenon is a differential equation with a fractional derivative. As numerous studies show, using a fractional derivative instead of a classical one allows some processes to be modeled more accurately. This paper presents a numerical solution of the anomalous heat conduction equation with a Riemann-Liouville fractional derivative with respect to space. First, a finite difference scheme is provided to solve the direct problem. Then, the inverse problem is considered, which consists of identifying model parameters such as the thermal conductivity, the order of the derivative and the heat transfer coefficient. The data used to solve the inverse problem are temperature values on the right boundary of the considered domain. To solve the problem, a functional describing the error of the solution is constructed, and the unknown parameters of the model are identified by determining the minimum of this functional. To find this minimum, selected meta-heuristic algorithms are presented and compared: Ant Colony Optimization (ACO) for continuous functions, the Butterfly Optimization Algorithm (BOA), the Dynamic Butterfly Optimization Algorithm (DBOA) and the Aquila Optimizer (AO). The accuracy of the presented algorithms is illustrated by examples.

1. Introduction

With the increase in computing power, simulations of phenomena occurring in physics, biology and technology, among other fields, are gaining in importance. The mathematical models under consideration are increasingly complex and can be used to describe various processes in nature, science and engineering. Fractional derivatives play a special role in modeling anomalous diffusion (e.g., heat conduction in porous materials) and processes with long memory. There are many different fractional derivatives, of which the Caputo, Riemann-Liouville and Riesz derivatives are the most popular. The authors of [1] present a model of corporate default risk that can be described as a fractional self-exciting model. The model and methods introduced in that study were validated on real market data, and the fractional model turned out to perform better. Ming et al. [2] used the Caputo fractional derivative to model China’s gross domestic product and compared the fractional model with a model based on the classical derivative; the fractional model predicted China’s gross domestic product more precisely. Further applications of fractional derivatives, including biological ones, can be found in [3], where the Atangana-Baleanu fractional derivative is used to build models of Newton’s law of cooling, population growth and blood alcohol concentration. In [4], the authors used the Caputo fractional derivative to model the population dynamics of tumor cells and macrophages. That study also estimated unknown model parameters from samples collected from a hospitalized, chemotherapy-naive patient with non-small cell lung cancer. De Gaetano et al. [5] presented a mathematical model with a fractional derivative for Continuous Glucose Monitoring, together with a numerical solution of this fractional model. Based on experimental data from diabetic patients, the authors determined the order of the fractional derivative for which the model best fits the data. Their results show that the fractional model fits the data better than the integer-order models (of both first and second order). More about fractional calculus and its applications can be found in [6,7,8].

Ever more accurate and faster computer simulations require improved numerical methods and algorithms for solving direct and inverse problems. Solving the inverse problem makes it possible to design a process and to select the input parameters of a model so that the desired output state is obtained. Such problems are considered difficult because they are ill-conditioned [9]. In inverse problems, additional information is often provided by sensor measurements; based on these measurements, the input parameters of the model are selected and the entire process is designed. In [10], a variational approach for reconstructing the thermal conductivity coefficient is presented; the authors also state results on the existence and uniqueness of the solution and give numerical examples. In [11], the inverse problem consists of identifying the coefficients of a heat conduction model from temperature measurements taken by sensors. Several mathematical models were also compared there, in particular fractional models with the classical model, and parameters such as the order of the fractional derivative, the thermal conductivity and the heat transfer coefficient were identified. The inverse problem is also considered in [12], where the authors present a deep neural network approach in which all free parameters and functions are learned through training; back-propagation of the training data is one possible method for training the deep network. More examples of inverse problems in mathematical modeling and simulation can be found in [13,14,15,16,17,18,19,20].

In this article, a mathematical model of heat conduction with a Riemann-Liouville fractional derivative is presented. Boundary conditions of the second kind (Neumann) and the third kind (Robin) are adopted in the model. Then, the solution of the direct problem, obtained with a finite difference scheme, is briefly described. The inverse problem posed in this article consists of reconstructing the heat transfer coefficient in the third-kind boundary condition and identifying parameters such as the order of the fractional derivative and the thermal conductivity. To solve the inverse problem, a fitness function describing the error of the approximate solution is constructed, and the parameters are identified by finding the minimum of this function. The following algorithms are used and compared for minimizing the fitness function: Ant Colony Optimization (ACO), the Butterfly Optimization Algorithm (BOA), the Dynamic Butterfly Optimization Algorithm (DBOA) and the Aquila Optimizer (AO). The presented procedure has been tested on numerical examples.

2. Anomalous Diffusion Model

We consider an anomalous diffusion equation in the form of a differential equation with a fractional derivative with respect to the spatial variable:

In this setting, the anomalous diffusion equation describes heat flow in a porous medium [11,21,22]. In Equation (1), we use the following notation: —temperature, —spatial variable, —time, —specific heat, —density, —order of the derivative, and is the scaled heat conduction coefficient, where is a scale parameter. The heat conduction coefficient is scaled to keep the units consistent. Equation (1) is supplemented with the initial condition:

At the left end of the spatial interval, a homogeneous boundary condition of the second kind (Neumann) is imposed:

and at the right end of the spatial interval, a boundary condition of the third kind (Robin) is assumed:

The symbols , h appearing in Equation (4) denote the ambient temperature and the heat transfer coefficient, respectively.

Equation (1) contains a fractional derivative with respect to space, defined as the Riemann-Liouville derivative [23]:
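
To illustrate what this derivative computes, the sketch below approximates a left-sided Riemann-Liouville derivative with lower terminal $x = 0$ (an assumption) by the standard Grünwald-Letnikov sum $\partial^{\alpha} f(x_i)/\partial x^{\alpha} \approx \Delta x^{-\alpha} \sum_{j=0}^{i} g_j f(x_{i-j})$ with $g_j = (-1)^j \binom{\alpha}{j}$, and compares it with the closed form $\partial^{\alpha} x^2/\partial x^{\alpha} = \frac{\Gamma(3)}{\Gamma(3-\alpha)} x^{2-\alpha}$. It is a first-order check under these assumptions, not necessarily the approximation used in Equation (6); the function names are hypothetical.

```python
import math

import numpy as np


def grunwald_weights(alpha, n):
    """Return g_0, ..., g_{n-1} with g_j = (-1)^j * binom(alpha, j)."""
    g = np.empty(n)
    g[0] = 1.0
    for j in range(1, n):
        # Recurrence avoids evaluating large binomial coefficients directly.
        g[j] = g[j - 1] * (1.0 - (alpha + 1.0) / j)
    return g


def rl_derivative_gl(f_vals, alpha, dx):
    """Grünwald-Letnikov approximation of the left-sided RL derivative
    (lower terminal at the first grid point) at every grid point."""
    n = len(f_vals)
    g = grunwald_weights(alpha, n)
    out = np.empty(n)
    for i in range(n):
        # History sum g_0 f(x_i) + g_1 f(x_{i-1}) + ... + g_i f(x_0).
        out[i] = np.dot(g[: i + 1], f_vals[i::-1]) / dx**alpha
    return out


alpha = 1.5
x = np.linspace(0.0, 1.0, 2001)
dx = x[1] - x[0]
approx = rl_derivative_gl(x**2, alpha, dx)
exact = math.gamma(3) / math.gamma(3 - alpha) * x ** (2 - alpha)
rel_err = abs(approx[-1] - exact[-1]) / exact[-1]
print(f"relative error at x = 1: {rel_err:.1e}")
```

The whole history of the function enters every point, which reflects the nonlocal character of the fractional derivative; the error of this sum decreases roughly linearly with $\Delta x$.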

3. Numerical Solution of Direct Problem

To solve the direct problem (1)–(4), we use a finite difference scheme. The domain is discretized by a mesh , , where and and . The Riemann-Liouville derivative is then approximated as follows [23]:

as well as boundary conditions (3) and (4):

where is the ambient temperature, is the approximate value of the function T at the point , and h is a function describing the heat transfer coefficient. Equations (6)–(8) yield a finite difference scheme, i.e., a system of equations; solving this system gives the values of the function T at the mesh points.
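
A minimal sketch of such a scheme follows. The exact forms of (1)–(8) used by the authors may differ; the sketch assumes the model $c\rho\,\partial T/\partial t = \lambda\,\partial^{\alpha} T/\partial x^{\alpha}$ with $1 < \alpha < 2$, the implicit (backward) Euler method in time, the shifted Grünwald formula for the Riemann-Liouville derivative, and first-order one-sided differences for the Neumann and Robin conditions (with the same coefficient $\lambda$ in the Robin flux). The name `solve_direct`, the quadratic `h_poly` and all default values are hypothetical.

```python
import numpy as np


def shifted_grunwald_weights(alpha, n):
    """g_j = (-1)^j * binom(alpha, j) for j = 0, ..., n - 1."""
    g = np.empty(n)
    g[0] = 1.0
    for j in range(1, n):
        g[j] = g[j - 1] * (1.0 - (alpha + 1.0) / j)
    return g


def solve_direct(lam, alpha, h, *, c=1.0, rho=1.0, length=1.0, t_end=1.0,
                 nx=50, nt=100, T0=500.0, T_amb=300.0):
    """Return T[k, i], the temperature at time t_k and node x_i (hypothetical model).

    c*rho*dT/dt = lam * D^alpha T on (0, length), 1 < alpha < 2 (left-sided RL),
    dT/dx = 0 at x = 0, -lam*dT/dx = h(t)*(T - T_amb) at x = length, T = T0 at t = 0.
    """
    dx, dt = length / nx, t_end / nt
    g = shifted_grunwald_weights(alpha, nx + 1)
    coef = lam / dx**alpha
    T = np.full(nx + 1, T0, dtype=float)
    history = [T.copy()]
    for k in range(1, nt + 1):
        t = k * dt
        A = np.zeros((nx + 1, nx + 1))
        b = np.zeros(nx + 1)
        # Left boundary (Neumann), one-sided difference: T_0 - T_1 = 0.
        A[0, 0], A[0, 1] = 1.0, -1.0
        # Interior nodes: backward Euler in time and the shifted Grünwald sum
        # D^alpha T(x_i) ~ dx^-alpha * sum_{j=0}^{i+1} g_j T_{i-j+1} in space.
        for i in range(1, nx):
            A[i, i] += c * rho / dt
            for j in range(i + 2):
                A[i, i - j + 1] -= coef * g[j]
            b[i] = c * rho / dt * T[i]
        # Right boundary (Robin): lam*(T_n - T_{n-1})/dx + h(t)*(T_n - T_amb) = 0.
        A[nx, nx] = lam / dx + h(t)
        A[nx, nx - 1] = -lam / dx
        b[nx] = h(t) * T_amb
        T = np.linalg.solve(A, b)
        history.append(T.copy())
    return np.array(history)


h_poly = lambda t: 20.0 + 5.0 * t - t**2  # hypothetical quadratic heat transfer coefficient
T_hist = solve_direct(lam=1.0, alpha=1.5, h=h_poly)
sensor = T_hist[:, -1]  # temperature history at the right boundary x = length
```

The implicit step is chosen because shifted-Grünwald discretizations are commonly paired with backward Euler for their stability; the right-boundary column `sensor` plays the role of the temperature measurements used in the inverse problem.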

4. Inverse Problem and the Procedure for Its Solution

This article is concerned with an inverse problem: determining the input parameters of the model so that the temperature computed at the boundary corresponds to the sensor measurements. The parameters to be identified are the thermal conductivity , the order of the derivative and the heat transfer function h in the form of a second-degree polynomial. In the presented approach, the direct problem is solved for fixed values of the unknown parameters, and the resulting approximation of T is compared with the measurement data. This comparison defines the fitness function:

where N is the number of measurements, are the temperature values at the measurement point computed from the model, and are the sensor measurements. To find the minimum of function (9), we use selected meta-heuristic algorithms, described in Section 5.
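
The sketch below shows how the fitness function can be connected to a direct solver. It assumes that (9) is the sum of squared differences between computed and measured temperatures, $F = \sum_{i=1}^{N} (T_i - U_i)^2$ (the symbols $T_i$ and $U_i$ are ours), and that h depends on time as $h(t) = p_0 + p_1 t + p_2 t^2$; the solver callable and all function names are hypothetical.

```python
import numpy as np


def fitness(T_model, T_measured):
    """Assumed form of F: sum of squared deviations over the N measurement points."""
    T_model = np.asarray(T_model, dtype=float)
    T_measured = np.asarray(T_measured, dtype=float)
    if T_model.shape != T_measured.shape:
        raise ValueError("model and measurement arrays must have the same shape")
    return float(np.sum((T_model - T_measured) ** 2))


def heat_transfer(t, p):
    """Second-degree polynomial h(t) = p0 + p1*t + p2*t**2 (time dependence assumed)."""
    p0, p1, p2 = p
    return p0 + p1 * t + p2 * t**2


def make_objective(solver, T_measured):
    """Wrap a direct solver into the function minimized by the meta-heuristics.

    `solver(lam, alpha, h)` is a hypothetical callable returning the model temperatures
    at the measurement points; the decision vector is theta = (lam, alpha, p0, p1, p2).
    """
    def objective(theta):
        lam, alpha, *p = theta
        return fitness(solver(lam, alpha, lambda t: heat_transfer(t, p)), T_measured)

    return objective
```

Packing all unknowns into one vector `theta` lets every meta-heuristic in Section 5 treat the inverse problem as minimization of an ordinary function of five real variables.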

5. Meta-Heuristic Algorithms

In this section, we present selected meta-heuristic algorithms for finding the minimum of a function: Ant Colony Optimization (ACO) for continuous function optimization, the Dynamic Butterfly Optimization Algorithm (DBOA), which builds on the Butterfly Optimization Algorithm (BOA), and the Aquila Optimizer (AO).

5.1. ACO for Continuous Function Optimization

This algorithm for finding the minimum of a function was inspired by the behavior of ants searching for food. In the first stage, the ants randomly search the area around their nest. While foraging, ants secrete a chemical called a pheromone, which allows them to communicate with each other. The amount of secreted pheromone depends on the amount of food found. If an ant has found a food source, it returns to the nest with a food sample, leaving a pheromone trail that allows other ants to find the source. This mechanism was adapted to create the ACO algorithm for continuous function optimization [24]. More on the algorithm and its applications can be found, among others, in [25,26,27,28].

There are three main parts to the algorithm:

- Solution (pheromone) representation. Points of the search area are identified with pheromone spots; in other words, a pheromone spot plays the role of a solution. Thus, the k-th pheromone spot (approximate solution) can be represented as . The quality of each solution (pheromone spot) is calculated from the fitness function . In each iteration of the algorithm, a fixed number of pheromone spots (set at the start of the algorithm) is stored in the set of solutions.

- Transformation of the solution by the ant. To construct a new solution, the ant first chooses one of the current solutions (pheromone spots) with a probability determined by the solution's quality: the better the solution, the higher the probability of selecting it. In this paper, the probability of choosing the k-th solution is calculated from its rank: where L denotes the number of pheromone spots and is given by: The symbol in Equation (11) denotes the rank of the k-th solution in the set of solutions. The parameter q narrows the search area: for small values of q, the best solutions are strongly preferred, and the greater q is, the more uniform the probabilities of choosing the individual solutions become. After the k-th solution is chosen, Gaussian sampling is performed using the formula: where is the i-th coordinate of the k-th solution and is the average distance between the chosen k-th solution and all the other solutions.

- Pheromone spots update. In each iteration of the ACO algorithm, M new solutions are created (M denotes the number of ants) and added to the solution set, which then contains pheromone spots in total. The spots (solutions) are sorted by quality and the M worst ones are removed. Thus, the solution set always has a fixed number of elements equal to L.
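
A minimal sketch of the selection and sampling steps follows. It assumes the Gaussian rank weighting commonly used in ACO for continuous domains, $\omega_k = \frac{1}{qL\sqrt{2\pi}} \exp\left(-\frac{(\mathrm{rank}(k)-1)^2}{2q^2L^2}\right)$ with $p_k = \omega_k / \sum_{l=1}^{L} \omega_l$, as the forms of (10) and (11). The scaling factor `xi` for the standard deviation is an extra assumption; `xi = 1` gives the plain average distance described above. Function names are hypothetical.

```python
import numpy as np


def selection_probabilities(L, q):
    """Rank-based probabilities for an archive of L spots sorted best-first (rank 1 = best).

    Assumed weights: w_k = exp(-(rank_k - 1)^2 / (2 q^2 L^2)) / (q L sqrt(2 pi)).
    """
    ranks = np.arange(1, L + 1)
    w = np.exp(-((ranks - 1) ** 2) / (2.0 * (q * L) ** 2)) / (q * L * np.sqrt(2.0 * np.pi))
    return w / w.sum()


def gaussian_sample(archive, k, rng, xi=1.0):
    """New solution drawn around spot k; per coordinate, sigma is xi times the
    average distance from spot k to the other L - 1 spots."""
    L = archive.shape[0]
    sigma = xi * np.abs(archive - archive[k]).sum(axis=0) / (L - 1)
    return rng.normal(archive[k], sigma)


p_narrow = selection_probabilities(L=10, q=0.1)
p_wide = selection_probabilities(L=10, q=1.0)
print(np.round(p_narrow, 3))
print(np.round(p_wide, 3))
```

With q = 0.1 almost all probability mass falls on the few best-ranked spots, whereas q = 1 spreads it nearly evenly, which matches the role of q described above.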

The pseudocode of the ACO algorithm for continuous function optimization is presented in Algorithm 1.

| Algorithm 1 Pseudocode of ACO algorithm. |
|---|
| 1: Initialization part. |
| 2: Set the parameters of the ACO algorithm. |
| 3: Initialize the starting population (pheromone spots) randomly. |
| 4: Calculate the value of the fitness function F for all pheromone spots and sort them by rank (quality). |
| 5: Iterative part. |
| 6: for do |
| 7: Assign probabilities to the pheromone spots according to Equation (10). |
| 8: for do |
| 9: The ant chooses the k-th () solution with probability . |
| 10: for do |
| 11: Using the probability density function (12), the ant samples a new value of the j-th coordinate of the k-th solution. |
| 12: end for |
| 13: end for |
| 14: Calculate the value of the fitness function F for the M new solutions. |
| 15: Add the M new solutions to the archive of old solutions, sort the archive by quality and discard the M worst solutions. |
| 16: end for |
| 17: return the best solution . |
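
Algorithm 1 can be turned into a short runnable sketch. It keeps the archive sorted best-first, so the rank weights are computed once; it assumes the Gaussian rank weighting $\omega_k \propto \exp\left(-(\mathrm{rank}(k)-1)^2/(2q^2L^2)\right)$ for (10) and (11), clips samples to the search box, and scales the standard deviation by `xi = 0.85`, a value commonly used for this kind of ACO. The sphere test function, the defaults and the name `aco_r` are assumptions for illustration.

```python
import numpy as np


def aco_r(F, lower, upper, L=20, M=10, q=0.1, xi=0.85, iterations=300, seed=0):
    """Minimize F over the box [lower, upper]; returns (best solution, best value)."""
    rng = np.random.default_rng(seed)
    lower, upper = np.asarray(lower, float), np.asarray(upper, float)
    n = lower.size
    # Lines 2-4: random archive of L pheromone spots, sorted best-first.
    archive = rng.uniform(lower, upper, size=(L, n))
    values = np.array([F(s) for s in archive])
    order = np.argsort(values)
    archive, values = archive[order], values[order]
    # Line 7: rank weights (constant factor cancels after normalization);
    # they stay fixed because the archive is always kept sorted.
    ranks = np.arange(1, L + 1)
    w = np.exp(-((ranks - 1) ** 2) / (2.0 * (q * L) ** 2))
    p = w / w.sum()
    for _ in range(iterations):
        new = np.empty((M, n))
        for m in range(M):                       # one new solution per ant
            k = rng.choice(L, p=p)               # line 9: choose spot k
            # Lines 10-12: Gaussian sampling of every coordinate around spot k.
            sigma = xi * np.abs(archive - archive[k]).sum(axis=0) / (L - 1)
            new[m] = np.clip(rng.normal(archive[k], sigma), lower, upper)
        new_values = np.array([F(s) for s in new])    # line 14
        # Line 15: merge, sort by quality and drop the M worst spots.
        archive = np.vstack([archive, new])
        values = np.concatenate([values, new_values])
        order = np.argsort(values)[:L]
        archive, values = archive[order], values[order]
    return archive[0], values[0]


sphere = lambda z: float(np.sum(z**2))
x_best, f_best = aco_r(sphere, lower=[-5.0, -5.0], upper=[5.0, 5.0])
print(x_best, f_best)
```

For the inverse problem, `F` would be the fitness function (9) evaluated through a direct solver, with the box given by admissible ranges of the thermal conductivity, the derivative order and the coefficients of h.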

5.2. Dynamic Butterfly Optimization Algorithm

The next algorithm is an improved version of the Butterfly Optimization Algorithm (BOA), namely the Dynamic Butterfly Optimization Algorithm (DBOA) [29].

Butterflies use the senses of smell, taste and touch to communicate, search for food, find a mate and escape from predators. The most important of these senses is smell, which butterflies use to look for food sources. Sensory receptors, called chemoreceptors, are scattered all over the butterfly's body (e.g., on the legs).

Scientists studying butterflies have noticed that they locate the source of a fragrance with great precision; in addition, they can distinguish fragrances and recognize their intensity. These abilities inspired the development of the Butterfly Optimization Algorithm (BOA) [30]. Each butterfly emits a fragrance of a given intensity, which other butterflies can sense, allowing them to communicate with each other. In this way, a “collective knowledge network” is created. The global optimum search is based on the butterflies' ability to sense fragrance: if a butterfly cannot sense any fragrance in its environment, it moves randomly.

The key concept is fragrance and the way it is received and processed. Sensing and processing a modality (here, fragrance) is described by three parameters: the stimulus intensity (I), the sensory modality (c) and the power exponent (a). In BOA, the stimulus intensity I is related to the fitness function. Consequently, the more fragrance a butterfly emits (i.e., the better its solution), the easier it is for other butterflies in the environment to sense it and be attracted to it. This relationship is described as follows:

where f denotes the fragrance, c is the sensory modality, I denotes the stimulus intensity, and a is the modality-dependent power exponent. In this article, we assume values of the parameters a and c in the range . The parameter a accounts for variability in absorption, and its value may decrease in subsequent iterations; thus, a can control the behavior of the algorithm and its convergence. The parameter c is also important for the operation of BOA. In theory , while in practice it is assumed that . The values of a and c have a significant impact on the speed of the algorithm, so they must be selected appropriately for the optimization task at hand.
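
A sketch of the fragrance formula, assuming the standard BOA form $f = c I^a$ and two further assumptions: the fitness F is non-negative and minimized, and it is mapped to a stimulus intensity by $I = 1/(1+F)$, so that better solutions emit more fragrance. The defaults c = 0.01 and a = 0.1 are illustrative choices, not values from this study.

```python
import numpy as np


def stimulus_intensity(F_values):
    """Hypothetical mapping of a non-negative fitness F (minimized) to intensity I in (0, 1]."""
    return 1.0 / (1.0 + np.asarray(F_values, dtype=float))


def fragrance(I, c=0.01, a=0.1):
    """Perceived fragrance f = c * I**a."""
    return c * np.asarray(I, dtype=float) ** a


f = fragrance(stimulus_intensity([0.0, 1.0, 10.0]))
print(f)  # better (smaller) F gives larger fragrance
```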

In the BOA we can distinguish the following stages:

- Butterflies in the considered environment emit fragrances of different intensity, depending on the quality of the solution. The butterflies communicate by sensing the emitted fragrances.

- A butterfly moves in one of two ways: towards a more intense fragrance emitted by another butterfly, or in a random direction.

- The global search move is given by: where is the position of the butterfly (agent) before the move, is its position after the move, is the position of the best butterfly in the current population, f is the fragrance of the butterfly, and r is a random number from the range .

- The local search move is given by: where and are butterflies selected randomly from the population.
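
The two moves can be sketched as follows, assuming the standard BOA updates $x_i^{t+1} = x_i^t + (r^2 g^* - x_i^t) f_i$ for global search and $x_i^{t+1} = x_i^t + (r^2 x_j^t - x_k^t) f_i$ for local search, with a scalar r drawn uniformly from [0, 1). The function names are hypothetical.

```python
import numpy as np


def global_move(x, g_best, f_i, rng):
    """Global search: x + (r^2 * g_best - x) * f_i with r ~ U[0, 1)."""
    r = rng.random()
    return x + (r**2 * g_best - x) * f_i


def local_move(x, x_j, x_k, f_i, rng):
    """Local search: x + (r^2 * x_j - x_k) * f_i with x_j, x_k random butterflies."""
    r = rng.random()
    return x + (r**2 * x_j - x_k) * f_i


rng = np.random.default_rng(1)
x = np.array([1.0, -2.0])
print(global_move(x, g_best=np.array([0.5, 0.5]), f_i=0.02, rng=rng))
```

In both moves the fragrance $f_i$ acts as a step length, so a butterfly with more fragrance moves further in a single iteration.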

At the end of each iteration, after the population of agents (butterflies) has been modified, a local search algorithm based on a mutation operator (LSAM) is run; this is a significant modification compared with BOA. In this article, LSAM selects several individuals (solutions) and transforms them with the mutation operator; if a mutated solution is better, it replaces the old one. The LSAM algorithm is presented as pseudocode in Algorithm 2. More information on applications of the butterfly algorithm can be found in [31,32,33].

| Algorithm 2 Pseudocode of LSAM operator. |
|---|
| 1: —a random solution from the better half of the population (obtained from BOA). |
| 2: —value of the fitness function for . |
| 3: I—number of iterations, —mutation rate. |
| 4: Iterative part. |
| 5: for do |
| 6: Calculate: Mutate(), . |
| 7: if then |
| 8: , . |
| 9: else |
| 10: Select a random solution from the population, other than . |
| 11: Compute the fitness function . |
| 12: if then |
| 13: |
| 14: end if |
| 15: end if |
| 16: end for |
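
A minimal sketch of Algorithm 2, following one reading of lines 10 to 13: if the mutant does not improve the selected agent, it replaces a randomly chosen other agent when it is better than that agent. The coordinate-wise Gaussian mutation (described in the next paragraph) is assumed to use the current coordinate as the mean and $0.1\,(u_j - l_j)$ as the standard deviation, clipped to the bounds; these choices and all names are assumptions.

```python
import numpy as np


def mutate(x, lower, upper, rate, rng, scale=0.1):
    """Redraw each coordinate with probability `rate` from N(x_j, scale*(upper_j - lower_j)),
    then clip to the bounds (mean and sigma are assumptions)."""
    y = x.copy()
    mask = rng.random(x.size) < rate
    sigma = scale * (upper - lower)
    y[mask] = rng.normal(x[mask], sigma[mask])
    return np.clip(y, lower, upper)


def lsam(pop, values, F, lower, upper, iters=10, rate=0.2, rng=None):
    """Sketch of Algorithm 2; updates `pop` and `values` in place and returns them."""
    if rng is None:
        rng = np.random.default_rng()
    better_half = np.argsort(values)[: max(1, len(pop) // 2)]
    i = rng.choice(better_half)              # line 1: random agent from the better half
    x, fx = pop[i].copy(), values[i]         # line 2
    for _ in range(iters):                   # line 5
        y = mutate(x, lower, upper, rate, rng)
        fy = F(y)                            # line 6
        if fy < fx:                          # lines 7-8: keep the improvement
            x, fx = y, fy
        else:                                # lines 10-13: try another random agent
            j = rng.choice([k for k in range(len(pop)) if k != i])
            if fy < values[j]:
                pop[j], values[j] = y, fy
    pop[i], values[i] = x, fx
    return pop, values


rng = np.random.default_rng(0)
sphere = lambda z: float(np.sum(z**2))
lower, upper = np.full(2, -5.0), np.full(2, 5.0)
pop = rng.uniform(lower, upper, size=(6, 2))
vals = np.array([sphere(p) for p in pop])
before = vals.copy()
pop, vals = lsam(pop, vals, sphere, lower, upper, rng=rng)
```

Because every replacement requires a strictly better fitness value, LSAM can never make any agent of the population worse.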

In Algorithm 2, individual coordinates of a solution are transformed with the mutation operator: a number is drawn from a normal distribution and replaces the old coordinate. For the j-th coordinate, we use the normal distribution:

where is the mean and is the standard deviation, and and denote the lower and upper bounds of the coordinate. Algorithm 3 presents the pseudocode of DBOA.

| Algorithm 3 Pseudocode of DBOA. |
|---|
| 1: Initialization part. |
| 2: Determine the parameters of the BOA algorithm: N—number of butterflies in the population, n—dimension, c—sensory modality, and a, , p parameters. |
| 3: Randomly generate the starting population . |
| 4: Calculate the value of the fitness function F (hence the stimulus intensity ) for each butterfly in the population. |
| 5: Iterative part. |
| 6: for do |
| 7: for do |
| 8: Calculate the value of fragrance for using Equation (13). |
| 9: end for |
| 10: Determine the best agent among the butterflies. |
| 11: for do |
| 12: Draw a random number r from the range . |
| 13: if then |
| 14: Update solution according to Equation (14). |
| 15: else |
| 16: Update solution according to Equation (15). |
| 17: end if |
| 18: end for |
| 19: Change the value of the parameter a. |
| 20: Apply the LSAM algorithm to the agent population with mutation rate . |
| 21: end for |
| 22: return . |
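
Algorithm 3 combines the BOA moves with the LSAM step. The compact sketch below assumes $I = 1/(1+F)$ for a non-negative fitness, a switch probability p between the global and local moves, greedy replacement of an agent only when its new position is better, a linear decrease of a over the iterations, and a Gaussian mutation with standard deviation $0.1\,(u_j - l_j)$ in the LSAM step; none of these choices, nor the defaults or the name `dboa`, is taken from the study.

```python
import numpy as np


def dboa(F, lower, upper, n_agents=20, iters=200, c=0.01, a_start=0.3, a_end=0.1,
         p=0.8, lsam_iters=5, rate=0.2, seed=0):
    """Minimize a non-negative F over a box; returns (best solution, best value)."""
    rng = np.random.default_rng(seed)
    lower, upper = np.asarray(lower, float), np.asarray(upper, float)
    pop = rng.uniform(lower, upper, size=(n_agents, lower.size))   # random start
    vals = np.array([F(x) for x in pop])
    for t in range(iters):
        a = a_start + (a_end - a_start) * t / max(1, iters - 1)    # a decreases (assumed)
        frag = c * (1.0 / (1.0 + vals)) ** a                        # f = c * I^a
        best = pop[np.argmin(vals)].copy()                          # best butterfly
        for i in range(n_agents):
            if rng.random() < p:                                    # global move, Eq. (14)
                cand = pop[i] + (rng.random() ** 2 * best - pop[i]) * frag[i]
            else:                                                   # local move, Eq. (15)
                j, k = rng.choice(n_agents, size=2, replace=False)
                cand = pop[i] + (rng.random() ** 2 * pop[j] - pop[k]) * frag[i]
            cand = np.clip(cand, lower, upper)
            f_cand = F(cand)
            if f_cand < vals[i]:                                    # greedy replacement
                pop[i], vals[i] = cand, f_cand
        # LSAM: mutate a random agent from the better half of the population.
        i = rng.choice(np.argsort(vals)[: max(1, n_agents // 2)])
        x, fx = pop[i].copy(), vals[i]
        for _ in range(lsam_iters):
            y = x.copy()
            mask = rng.random(x.size) < rate
            y[mask] = rng.normal(x[mask], 0.1 * (upper - lower)[mask])
            y = np.clip(y, lower, upper)
            f_y = F(y)
            if f_y < fx:
                x, fx = y, f_y
            else:
                j = rng.choice([k for k in range(n_agents) if k != i])
                if f_y < vals[j]:
                    pop[j], vals[j] = y, f_y
        pop[i], vals[i] = x, fx
    best_idx = np.argmin(vals)
    return pop[best_idx], vals[best_idx]


sphere = lambda z: float(np.sum(z**2))
x_best, f_best = dboa(sphere, lower=[-5.0, -5.0], upper=[5.0, 5.0])
print(x_best, f_best)
```

Since both the BOA moves and LSAM only accept improvements in this sketch, the best fitness value in the population never increases from one iteration to the next.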

5.3. Aquila Optimizer

Another algorithm considered is the Aquila Optimizer (AO), a mathematical representation of the hunting behavior of birds of the genus Aquila (eagles of the family Accipitridae). Four main hunting techniques of these predators can be distinguished:

- Expanded exploration. When the predator is high in the air and hunts other birds, it tilts into a vertical dive: after locating the prey from a height, Aquila nosedives with increasing speed. This behavior is expressed by the following equation: where is the solution after the transformation, is the best solution so far (representing the position of the prey), i is the current iteration, I is the maximum number of iterations and is a random number from . Here, can also be interpreted as the optimization goal or an approximate solution. The vector is the mean of all solutions in the population:

- Narrowed exploration. In this technique, the bird circles above the prey and prepares to descend and attack it. It is also known as short stroke contour flight. It is described in the algorithm by the equation: where and have the same meaning as in expanded exploration, is a random solution from the population and is a random number from the interval . The term simulates the spiral flight of Aquila. The expression is a random value from the Levy flight distribution: where are constants, denote random numbers from the range , and is given by [34]: In the above equation, denotes the gamma function. The values of the parameters r and are determined by the following formulas: where is a fixed integer from , are small constants, and is an integer from .

- Expanded exploitation. This hunting technique begins with a vertical attack on the prey, whose location is approximately known; this approximate location defines the search area. Using this information, Aquila gets as close to its prey as possible. This can be described as follows: where is the solution after the transformation, is the current best solution and is the mean solution of the whole population, determined with Formula (18). As before, denotes a random number from the range , while and are the lower and upper bounds, and the constant parameters and control exploitation.

- Narrowed exploitation. This technique is characterized by stochastic movements of the bird attacking the prey at close range. It can be described by the formula: where denotes the solution before the transformation, is the quality function: and are given by: These parameters can be used to tune the algorithm.
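
The auxiliary quantities of the narrowed techniques can be sketched as below. The constants follow the original Aquila Optimizer formulation as we understand it and are assumptions here: $s = 0.01$, $\beta = 1.5$, u and v uniform on (0, 1), $r_1 = 10$, $U = 0.00565$, $\omega = 0.005$, $\theta_1 = 3\pi/2$, $QF(i) = i^{(2\,\mathrm{rand} - 1)/(1-I)^2}$, $G_1 = 2\,\mathrm{rand} - 1$ and $G_2 = 2(1 - i/I)$, with i the current iteration and I the maximum number of iterations. Function names are hypothetical.

```python
import math

import numpy as np


def levy(dim, rng, beta=1.5, s=0.01):
    """Levy-flight step: s * u * sigma / |v|^(1/beta), u and v uniform (assumed)."""
    sigma = (math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
             / (math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2))) ** (1 / beta)
    u = rng.random(dim)
    v = 1.0 - rng.random(dim)  # in (0, 1], avoids division by zero
    return s * u * sigma / np.abs(v) ** (1 / beta)


def spiral(dim, r1=10, U=0.00565, omega=0.005, theta1=1.5 * math.pi):
    """Spiral terms (y, x) with r = r1 + U*D1 and theta = -omega*D1 + theta1, D1 = 1..dim."""
    D1 = np.arange(1, dim + 1)
    r = r1 + U * D1
    theta = -omega * D1 + theta1
    return r * np.cos(theta), r * np.sin(theta)


def qf_g1_g2(i, I, rng):
    """Quality function QF(i) and the parameters G1, G2 at iteration i of I."""
    qf = i ** ((2 * rng.random() - 1) / (1 - I) ** 2)
    g1 = 2 * rng.random() - 1
    g2 = 2 * (1 - i / I)
    return qf, g1, g2


rng = np.random.default_rng(0)
step = levy(3, rng)
y, x = spiral(3)
qf, g1, g2 = qf_g1_g2(1, 100, rng)
```

$G_2$ decreases linearly from 2 to 0, so the Levy-flight term in narrowed exploitation fades out as the search approaches its final iterations.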

Aquila’s food-gathering behavior consists of the four hunting techniques described above. Formulas (17)–(26), which describe these four transformations, make up the AO algorithm. Algorithm 4 presents the pseudocode of AO. More about the Aquila Optimizer can be found in [34,35].

| Algorithm 4 Pseudocode of AO. |
|---|
| 1: Initialization part. |
| 2: Set up the parameters of the AO algorithm. |
| 3: Initialize the population in a random way . |
| 4: Iterative part. |
| 5: for do |
| 6: Determine the values of the fitness function F for each agent in the population. |
| 7: Determine the best solution in the population. |
| 8: for do |
| 9: Calculate the mean solution in the population. |
| 10: Update the parameters of the algorithm. |
| 11: if then |
| 12: if then |
| 13: Perform expanded exploration (17) by updating solution . |
| 14: As a result, solution is obtained. |
| 15: if then make substitution |
| 16: end if |
| 17: if then make substitution |
| 18: end if |
| 19: else |
| 20: Perform narrowed exploration (19) by updating solution . |
| 21: As a result, solution is obtained. |
| 22: if then make substitution . |
| 23: end if |
| 24: if then make substitution . |
| 25: end if |
| 26: end if |
| 27: else |
| 28: if then |
| 29: Perform expanded exploitation (23) by updating solution . |
| 30: As a result, solution is obtained. |
| 31: if then make substitution . |
| 32: end if |
| 33: if then make substitution . |
| 34: end if |
| 35: else |
| 36: Perform narrowed exploitation (24) by updating solution . |
| 37: As a result, solution is obtained. |
| 38: if then make substitution . |
| 39: end if |
| 40: if then make substitution . |
| 41: end if |
| 42: end if |
| 43: end if |
| 44: end for |
| 45: end for |
| 46: return . |
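
A compact sketch of Algorithm 4 follows. The branch conditions in lines 11, 12 and 28 and the substitution tests are filled with assumptions taken from the original AO formulation: the first two thirds of the iterations are used for exploration, each technique within a phase is chosen with probability 0.5, and an agent (and, separately, the best solution) is replaced only when the new solution is better. The exploitation constants α = δ = 0.1, the spiral and Levy constants, the defaults and the name `aquila_optimizer` are likewise assumptions.

```python
import math

import numpy as np


def aquila_optimizer(F, lower, upper, n_agents=20, iters=200, alpha=0.1, delta=0.1, seed=0):
    """Minimize F over a box; returns (best solution, best value). Requires iters > 1."""
    rng = np.random.default_rng(seed)
    lower, upper = np.asarray(lower, float), np.asarray(upper, float)
    dim = lower.size
    beta = 1.5
    sigma = (math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
             / (math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2))) ** (1 / beta)

    def levy():
        return 0.01 * rng.random(dim) * sigma / (1.0 - rng.random(dim)) ** (1 / beta)

    D1 = np.arange(1, dim + 1)
    r = 10 + 0.00565 * D1                          # r1 = 10 (assumed)
    theta = -0.005 * D1 + 1.5 * math.pi
    y, x = r * np.cos(theta), r * np.sin(theta)    # spiral terms

    pop = rng.uniform(lower, upper, size=(n_agents, dim))   # line 3
    vals = np.array([F(p) for p in pop])
    k = np.argmin(vals)
    best, best_val = pop[k].copy(), vals[k]
    for t in range(1, iters + 1):
        mean = pop.mean(axis=0)                    # Eq. (18), once per iteration here
        qf = t ** ((2 * rng.random() - 1) / (1 - iters) ** 2)
        g2 = 2 * (1 - t / iters)
        for i in range(n_agents):
            if t <= 2 * iters / 3:                 # exploration phase (assumed split)
                if rng.random() < 0.5:             # expanded exploration, Eq. (17)
                    new = best * (1 - t / iters) + (mean - best * rng.random())
                else:                              # narrowed exploration, Eq. (19)
                    new = best * levy() + pop[rng.integers(n_agents)] + (y - x) * rng.random()
            elif rng.random() < 0.5:               # expanded exploitation, Eq. (23)
                new = ((best - mean) * alpha - rng.random()
                       + ((upper - lower) * rng.random() + lower) * delta)
            else:                                  # narrowed exploitation, Eq. (24)
                g1 = 2 * rng.random() - 1
                new = qf * best - g1 * pop[i] * rng.random() - g2 * levy() + rng.random() * g1
            new = np.clip(new, lower, upper)
            f_new = F(new)
            if f_new < vals[i]:                    # replace the agent
                pop[i], vals[i] = new, f_new
            if f_new < best_val:                   # replace the best solution
                best, best_val = new.copy(), f_new
    return best, best_val


sphere = lambda z: float(np.sum(z**2))
x_best, f_best = aquila_optimizer(sphere, lower=[-5.0, -5.0], upper=[5.0, 5.0])
print(x_best, f_best)
```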

6. Numerical Example and Test of Algorithms

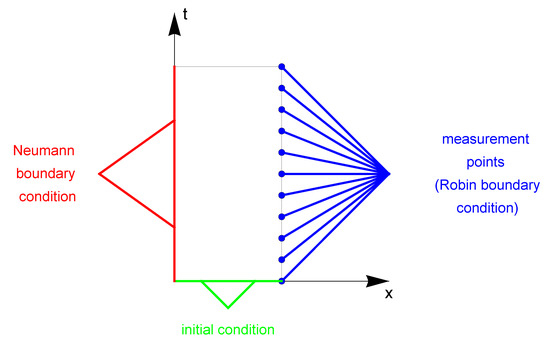

In this section, we present a numerical example illustrating the effectiveness of the algorithms described above and compare the algorithms on the inverse problem for the heat flow model. As described in Section 4, the unknown model parameters to be identified are: —thermal conductivity, —order of the derivative and h—heat transfer function. Temperature measurements on the right boundary of the considered domain (Figure 1) are the supplementary data needed to solve the inverse problem. The parameters should be chosen so that the temperature values computed from the mathematical model match the measurement data. The calculations in the inverse problem are performed on the grid .

Figure 1.

Considered area with marked measuring points (a fragment of the boundary with Neumann boundary condition is marked in red, a fragment of the boundary with Robin boundary condition is marked in blue, and a fragment of the boundary with initial condition is marked in green).

The heuristic algorithms are verified by comparing the parameter values obtained from the inverse problem solution with the exact values. The exact values of the searched parameters are presented below.

For the heat transfer function, the error between the exact function h and the reconstructed function is defined by the following formula:
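
For illustration only, one common way to measure such an error, assumed here rather than taken from the study, is the discrete relative error over a grid of time points, $\delta_h = 100\% \cdot \sqrt{\sum_i (h(t_i) - \tilde h(t_i))^2} \big/ \sqrt{\sum_i h(t_i)^2}$. The function name and the sample functions are hypothetical.

```python
import numpy as np


def relative_error_percent(h_exact, h_approx, t):
    """Discrete relative L2 error (in %) between two functions sampled at times t."""
    he, ha = h_exact(t), h_approx(t)
    return 100.0 * np.linalg.norm(he - ha) / np.linalg.norm(he)


t = np.linspace(0.0, 1.0, 101)
h_exact = lambda s: 20.0 + 5.0 * s - s**2          # hypothetical exact h
h_rec = lambda s: 1.1 * h_exact(s)                  # reconstruction 10% too large everywhere
print(relative_error_percent(h_exact, h_rec, t))
```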

Table 1 presents the results obtained for the individual algorithms. Judged by the value of the fitness function F (9), the DBOA algorithm turned out to be the most appropriate: its fitness value is substantially lower than for the other algorithms. The reconstruction errors of the parameters and h are also the smallest for DBOA. The ACO algorithm comes second. The results show that minimizing the fitness function is difficult and that the inverse problem is ill-posed. The value of the fitness function (9) is strongly dependent on changes in the values of the searched parameters.

Table 1.

Results of calculations (—identified value of thermal conductivity coefficient; —identified value of derivative order; —identified value of heat transfer function; —the relative error of reconstruction; F—the value of the fitness function).

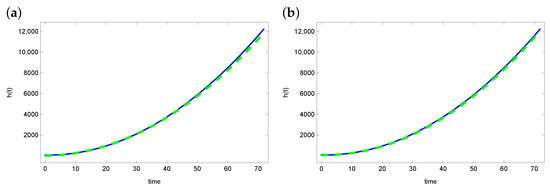

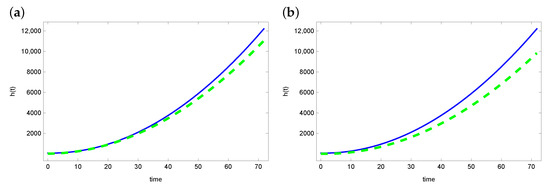

Figures 2 and 3 present graphs of the exact function h and the reconstructed function obtained from solving the inverse problem. For the ACO and DBOA algorithms, the reconstruction of h is satisfactory: the reconstructed function matches the exact one well. The reconstruction is somewhat worse for the AO and BOA algorithms; especially in the latter case, the green line (reconstructed h) diverges from the blue line (exact function h).

Figure 2.

The exact heat transfer (blue line) and approximate heat transfer (dashed green line) for (a) ACO and (b) DBOA.

Figure 3.

The exact heat transfer (blue line) and approximate heat transfer (dashed green line) for (a) AO and (b) BOA.

Next, we compare the reconstructed temperature values at the measurement points with the measurement data. Table 2 shows that the best results are obtained for the DBOA algorithm and the worst for the BOA algorithm. In general, these errors are small. Hence, the reconstructed temperature matches the measurement data well; at the same time, since the reconstructed parameters in Table 1 differ between the algorithms, the problem is ill-posed and the fitness function is difficult to minimize.
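
The two error measures reported in Table 2, the maximal and the mean absolute difference between computed and measured temperatures at the measurement points, can be computed as in this short sketch; the function name and sample values are illustrative.

```python
import numpy as np


def temperature_errors(T_model, T_measured):
    """Return (maximal absolute error, mean absolute error) over the measurement points."""
    diff = np.abs(np.asarray(T_model, dtype=float) - np.asarray(T_measured, dtype=float))
    return float(diff.max()), float(diff.mean())


d_max, d_mean = temperature_errors([500.0, 480.0, 470.0], [500.5, 479.0, 472.0])
```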

Table 2.

Errors of the reconstructed temperature function T at the measurement points (—maximal absolute error; —mean absolute error).

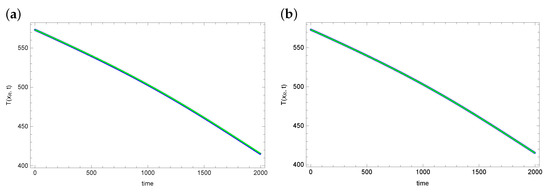

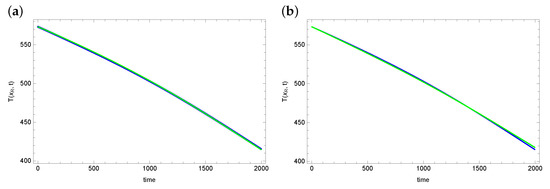

An important criterion for evaluating the results is how well the temperature values at the measurement points match the measurement data. Figures 4 and 5 show the reconstructed temperature and the measurement data for each of the algorithms. The reconstructed temperature values match the measurement data well, even though the reconstructed values of the searched parameters and h differ significantly between the algorithms. This indicates that the graph of the objective function is flat in the vicinity of the exact solution. Consequently, the inverse problem is difficult to solve, and the solution found (the reconstructed parameter values) may contain significant errors.

Figure 4.

The exact temperature at the measurement point (blue line) and the reconstructed temperature (green line) for (a) ACO and (b) DBOA.

Figure 5.

The exact temperature at the measurement point (blue line) and the reconstructed temperature (green line) for (a) AO and (b) BOA.

7. Conclusions

The paper presents an inverse heat flow problem consisting of identifying the parameters of the model from given temperature measurements. The unknown parameters of the model are the thermal conductivity, the order of the fractional derivative and the heat transfer function. To solve the inverse problem, the function describing the error of the approximate solution is minimized. Four meta-heuristic algorithms were used and compared: ACO, DBOA, AO and BOA. DBOA turned out to be the best in terms of the value of the minimized function. For DBOA, the value of the minimized function was , which is a satisfactory result; for the other algorithms, these values were much higher: ACO ; BOA and AO . DBOA was also the best in terms of the errors in the reconstructed model parameters and the fit of the reconstructed temperature to the measurement data. For DBOA, the error of the reconstructed temperature at the measurement points is equal to , while for the other algorithms this error was of the order of . The considered problems turned out to be difficult to solve: the graph of the fitness function is very flat in the vicinity of the searched solution, so even significant differences in the values of the reconstructed parameters have little impact on the value of the fitness function.

Author Contributions

Conceptualization, R.B. and D.S.; methodology, R.B. and D.S.; software, R.B.; validation, D.S., A.W. and R.B.; formal analysis, D.S.; investigation, R.B. and A.W.; writing—original draft preparation, A.W. and R.B.; writing—review and editing, A.W.; supervision, D.S.; All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Ketelbuters, J.J.; Hainaut, D. CDS pricing with fractional Hawkes processes. Eur. J. Oper. Res. 2022, 297, 1139–1150.

2. Ming, H.; Wang, J.; Fečkan, M. The Application of Fractional Calculus in Chinese Economic Growth Models. Appl. Math. Comput. 2019, 7, 665.

3. Bas, E.; Ozarslan, R. Real world applications of fractional models by Atangana–Baleanu fractional derivative. Chaos Solitons Fractals 2018, 116, 121–125.

4. Ozkose, F.; Yılmaz, S.; Yavuz, M.; Ozturk, I.; Şenel, M.T.; Bağcı, B.S.; Dogan, M.; Onal, O. A Fractional Modeling of Tumor–Immune System Interaction Related to Lung Cancer with Real Data. Eur. Phys. J. Plus 2022, 137, 1–28.

5. De Gaetano, A.; Sakulrang, S.; Borri, A.; Pitocco, D.; Sungnul, S.; Moore, E.J. Modeling continuous glucose monitoring with fractional differential equations subject to shocks. J. Theor. Biol. 2021, 526, 110776.

6. Singh, H.; Kumar, D.; Baleanu, D. Methods of Mathematical Modelling. Fractional Differential Equations; CRC Press: Boca Raton, FL, USA, 2019.

7. Meng, R. Application of Fractional Calculus to Modeling the Non-Linear Behaviors of Ferroelectric Polymer Composites: Viscoelasticity and Dielectricity. Membranes 2021, 11, 409.

8. Maslovskaya, A.; Moroz, L. Time-fractional Landau–Khalatnikov model applied to numerical simulation of polarization switching in ferroelectrics. Nonlinear Dyn. 2023, 111, 4543–4557.

9. Kaipio, J.; Somersalo, E. Statistical and Computational Inverse Problems; Springer: New York, NY, USA, 2005.

10. Marinov, T.; Marinova, R. An inverse problem solution for thermal conductivity reconstruction. WSEAS Trans. Syst. 2021, 20, 187–195.

11. Brociek, R.; Słota, D.; Król, M.; Matula, G.; Kwaśny, W. Comparison of mathematical models with fractional derivative for the heat conduction inverse problem based on the measurements of temperature in porous aluminum. Int. J. Heat Mass Transf. 2019, 143, 118440.

12. Liang, D.; Cheng, J.; Ke, Z.; Ying, L. Deep Magnetic Resonance Image Reconstruction: Inverse Problems Meet Neural Networks. IEEE Signal Process. Mag. 2020, 37, 141–151.

13. Drezet, J.M.; Rappaz, M.; Grün, G.U.; Gremaud, M. Determination of thermophysical properties and boundary conditions of direct chill-cast aluminum alloys using inverse methods. Metall. Mater. Trans. A 2000, 31, 1627–1634.

14. Zielonka, A.; Słota, D.; Hetmaniok, E. Application of the Swarm Intelligence Algorithm for Reconstructing the Cooling Conditions of Steel Ingot Continuous Casting. Energies 2020, 13, 2429.

15. Okamoto, K.; Li, B. A regularization method for the inverse design of solidification processes with natural convection. Int. J. Heat Mass Transf. 2007, 50, 4409–4423.

16. Özişik, M.; Orlande, H. Inverse Heat Transfer: Fundamentals and Applications; Taylor & Francis: New York, NY, USA, 2000.

17. Neto, F.M.; Neto, A.S. An Introduction to Inverse Problems with Applications; Springer: Berlin, Germany, 2013.

18. Brociek, R.; Pleszczyński, M.; Zielonka, A.; Wajda, A.; Coco, S.; Sciuto, G.L.; Napoli, C. Application of Heuristic Algorithms in the Tomography Problem for Pre-Mining Anomaly Detection in Coal Seams. Sensors 2022, 22, 7297.

19. Chen, T.; Yang, D. Modeling and Inversion of Airborne and Semi-Airborne Transient Electromagnetic Data with Inexact Transmitter and Receiver Geometries. Remote Sens. 2022, 14, 915.

20. Gao, H.; Zahr, M.J.; Wang, J.X. Physics-informed graph neural Galerkin networks: A unified framework for solving PDE-governed forward and inverse problems. Comput. Methods Appl. Mech. Eng. 2022, 390, 114502.

21. Kukla, S.; Siedlecka, U.; Ciesielski, M. Fractional Order Dual-Phase-Lag Model of Heat Conduction in a Composite Spherical Medium. Materials 2022, 15, 7251.

22. Žecová, M.; Terpák, J. Heat conduction modeling by using fractional-order derivatives. Appl. Math. Comput. 2015, 257, 365–373.

23. Podlubny, I. Fractional Differential Equations; Academic Press: San Diego, CA, USA, 1999.

24. Socha, K.; Dorigo, M. Ant colony optimization for continuous domains. Eur. J. Oper. Res. 2008, 185, 1155–1173.

25. Ojha, V.K.; Abraham, A.; Snášel, V. ACO for continuous function optimization: A performance analysis. In Proceedings of the 14th International Conference on Intelligent Systems Design and Applications, Okinawa, Japan, 28–30 November 2014; pp. 145–150.

26. Moradi, B.; Kargar, A.; Abazari, S. Transient stability constrained optimal power flow solution using ant colony optimization for continuous domains (ACOR). IET Gener. Transm. Distrib. 2022, 16, 3734–3747.

27. Omran, M.G.; Al-Sharhan, S. Improved continuous Ant Colony Optimization algorithms for real-world engineering optimization problems. Eng. Appl. Artif. Intell. 2019, 85, 818–829.

28. Brociek, R.; Chmielowska, A.; Słota, D. Comparison of the Probabilistic Ant Colony Optimization Algorithm and Some Iteration Method in Application for Solving the Inverse Problem on Model With the Caputo Type Fractional Derivative. Entropy 2020, 22, 555.

29. Tubishat, M.; Alswaitti, M.; Mirjalili, S.; Al-Garadi, M.A.; Alrashdan, M.T.; Rana, T.A. Dynamic Butterfly Optimization Algorithm for Feature Selection. IEEE Access 2020, 8, 194303–194314.

30. Arora, S.; Singh, S. Butterfly optimization algorithm: A novel approach for global optimization. Soft Comput. 2019, 23, 715–734.

31. Chen, S.; Chen, R.; Gao, J. A Monarch Butterfly Optimization for the Dynamic Vehicle Routing Problem. Algorithms 2017, 10, 107.

32. Xia, Q.; Ding, Y.; Zhang, R.; Liu, M.; Zhang, H.; Dong, X. Blind Source Separation Based on Double-Mutant Butterfly Optimization Algorithm. Sensors 2022, 22, 3979.

33. Zhang, M.; Wang, D.; Yang, J. Hybrid-Flash Butterfly Optimization Algorithm with Logistic Mapping for Solving the Engineering Constrained Optimization Problems. Entropy 2022, 24, 525.

34. Abualigah, L.; Yousri, D.; Abd Elaziz, M.; Ewees, A.A.; Al-qaness, M.A.; Gandomi, A.H. Aquila Optimizer: A novel meta-heuristic optimization algorithm. Comput. Ind. Eng. 2021, 157, 107250.

35. Wang, Y.; Xiao, Y.; Guo, Y.; Li, J. Dynamic Chaotic Opposition-Based Learning-Driven Hybrid Aquila Optimizer and Artificial Rabbits Optimization Algorithm: Framework and Applications. Processes 2022, 10, 2703.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).