1. Introduction

Autonomous vehicles depend heavily on their perception systems to sense the surrounding environment and detect other road users, because this information is essential for their planning and control systems. However, in adverse weather, captured images have low contrast and poor visibility, which can degrade the performance of the visual algorithms used in autonomous vehicle perception systems, such as detection, tracking, and intention estimation [1,2,3,4,5]. This degradation is caused by tiny particles in the atmosphere that absorb and scatter light [6]. To improve the performance of perception systems in adverse weather, researchers have developed de-hazing, de-raining, and de-snowing methods that remove these effects and produce clearer images.

Previous works have proposed heuristic priors, derived from observations, to remove weather-related degradation from images, such as the Dark Channel Prior [7], Haze-Lines Prior [8], Sparse Coding [9], Layer Prior [10], Saturation and Visibility Features [11], and Multi-guided Filter [12]. These works rely on meteorological models. For example, the haze model is given in Equation (1):

$$I(x) = J(x)\,t(x) + A\,\big(1 - t(x)\big) \qquad (1)$$

where $I$ is the input hazy image, $J$ is its scene radiance, $A$ is the atmospheric light, and $t$ is the transmittance map measuring the amount of light able to pass through the haze layer [13].
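To make Equation (1) concrete, the following minimal sketch applies the haze model to a few pixel values and then inverts it when $t$ and $A$ are known. It assumes the common exponential transmittance $t = e^{-\beta d}$ from atmospheric scattering theory, with a scattering coefficient `beta`, an airlight value, and scene depths chosen purely for illustration. In practice $t$ and $A$ are unknown, which is exactly why priors such as the Dark Channel Prior are needed.

```python
import math

def add_haze(radiance, depth, airlight=0.9, beta=0.05):
    """Apply I = J*t + A*(1 - t) per pixel, with t = exp(-beta * depth).

    radiance: clear-scene intensities J in [0, 1]
    depth: per-pixel scene depth (illustrative units)
    """
    hazy, transmittance = [], []
    for j, d in zip(radiance, depth):
        t = math.exp(-beta * d)  # fraction of scene light that survives the haze
        transmittance.append(t)
        hazy.append(j * t + airlight * (1.0 - t))
    return hazy, transmittance

def remove_haze(hazy, transmittance, airlight=0.9, t_min=0.1):
    """Invert Equation (1): J = (I - A) / t + A, clamping t to avoid division blow-up."""
    return [(i - airlight) / max(t, t_min) + airlight for i, t in zip(hazy, transmittance)]

clear = [0.2, 0.5, 0.8]
depth = [5.0, 20.0, 40.0]
hazy, t = add_haze(clear, depth)
restored = remove_haze(hazy, t)
print([round(v, 3) for v in hazy])
print([round(v, 3) for v in restored])
```

Distant pixels (small $t$) are pulled toward the airlight, and the inversion recovers the radiance only because the true $t$ and $A$ are supplied here.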

The same paradigm can also be used for de-raining and de-snowing. The simplified models for simulating rain [14] and snow [15] are given in Equations (2) and (3), respectively:

$$O = B + R \qquad (2)$$

where $O$, $B$, and $R$ denote a rainy image, a clean background, and a rain layer, respectively. Equation (3) represents a snowy image in an analogous way, following the same concept as Equations (1) and (2).
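Because Equation (2) is additive, a rain layer can be composed onto a background and, if the layer were known, subtracted again. The sketch below assumes intensities in [0, 1] and clips the composite to that range; the clipping illustrates one reason real de-raining is not a simple subtraction, since saturated pixels lose information. The background and rain values are hypothetical.

```python
def add_rain(background, rain_layer):
    """O = B + R per pixel, clipped to the valid intensity range [0, 1]."""
    return [min(1.0, max(0.0, b + r)) for b, r in zip(background, rain_layer)]

def subtract_rain(rainy, rain_layer):
    """Naive inverse B = O - R; exact only where the composite was not clipped."""
    return [min(1.0, max(0.0, o - r)) for o, r in zip(rainy, rain_layer)]

background = [0.3, 0.6, 0.9]
rain = [0.0, 0.25, 0.25]  # a bright streak over the last two pixels (illustrative)
rainy = add_rain(background, rain)
recovered = subtract_rain(rainy, rain)
print(rainy, recovered)
```

The last pixel saturates at 1.0, so subtracting the rain layer yields 0.75 instead of the original 0.9.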

With the advancement of deep learning, researchers have explored alternative solutions to overcome the limited generalization ability of traditional approaches. Approaches that have gained traction include convolutional neural networks (CNNs) [16,17,18,19,20,21] and generative adversarial networks (GANs) [22,23] trained on prepared datasets. These methods directly learn the mapping between paired weather-affected and clear images.

However, there are several reasons for pursuing a new approach. First, most current weather removal methods are designed to solve only one type of weather problem. Second, researchers still tend to use paired datasets containing synthetic weather images, even though unpaired, real-world weather images would likely provide more authentic and richer details for deep modeling. Third, current restoration models usually allow only one-way processing, i.e., the removal of weather effects, although studies have shown that models that learn generation and removal simultaneously can achieve better transformation results. Finally, generating inclement weather images and using them to train visual algorithms to output clear images could improve autonomous vehicle sensing under adverse weather conditions. Therefore, in this study, we propose a dual-purpose framework that generates images of multiple adverse weather conditions from clear weather images and also removes these conditions. Our contributions are as follows:

A deep-learning model for the generation and removal of multiple weather conditions in visual images. Inspired by CycleGAN, we introduce a model consisting of four generators and four discriminators. The four GANs are trained to generate and remove haze, rain, snow, and clear weather conditions. Once training is completed, the clear weather generator can be used to restore images, no matter which of the three weather conditions described above is present.

A disentangled training strategy based on unpaired, real-world weather images. By training our model with images of real weather conditions, ground truth images and parameters for meteorological physical models are unnecessary.

We meticulously curated our newly created dataset, Realistic Driving Scene under Bad Weather (RDSBW) [24], by removing images affected by blur or windshield wipers. The dataset now comprises 20,000 1080p images extracted from videos. These images show driving scenes captured through the windshield in various weather conditions, such as clear, hazy, rainy, and snowy.

We evaluate the performance of our model using the RDSBW and Weather Cityscapes datasets, using structural similarity (SSIM) and peak signal-to-noise ratio (PSNR) to measure image quality before and after removing adverse weather. Furthermore, we assess detection accuracy using a state-of-the-art pedestrian detector, demonstrating promising results for both accuracy and speed.

2. Related Work

2.1. Removal of One Type of Adverse Weather

Approaches that remove a single type of adverse weather, built on image restoration methods, have gained extensive attention because of their potential applications in computer vision. As a result, many researchers have proposed methods for de-hazing, de-raining, and de-snowing images.

2.1.1. De-Hazing

Early learning-based de-hazing models required an intermediate step to estimate the transmission map and atmospheric light [25,26,27,28]. Errors or bias in this estimation process resulted in artifacts or color distortion, requiring extra processing to reconstruct clear images [29]. In contrast, more recent deep-learning models directly map hazy images to clear ones using datasets of paired images with ground truth labels [16]. Researchers have also proposed various innovative modules to enhance their de-hazing networks, resulting in substantial advances. These include the feature attention module [17], the dense feature fusion module [18], and the spatially weighted channel attention module [19].

2.1.2. De-Raining

Deep learning methods for de-raining were first introduced in 2017, when Yang et al. [20] proposed a region-dependent rain model with contextualized dilated modules to jointly detect and remove rain. In the same year, Fu et al. [21] proposed DerainNet, which uses only high-frequency details as inputs. These two pioneering studies inspired other CNN-based methods that adopted more advanced network architectures and achieved better results. However, methods supervised with synthetic rainy images often produce unsatisfactory results on real-world rainy images.

The most powerful generative models proposed to date are based on generative adversarial networks (GANs), which optimize a min–max objective to reduce the distance between two domains; this property can be used to bridge the visual gap between synthetic and real rain [14]. Zhang et al. [22] proposed an effective conditional GAN-based single-image de-raining framework with a novel densely connected generator and a multi-scale discriminator. Li et al. [23] proposed a coarse-to-fine framework that first estimates the underlying physical parameters and then recovers the background using a depth-guided GAN.

2.1.3. De-Snowing

Compared with de-hazing and de-raining, learning-based de-snowing is still at an early stage of development because snowflakes vary widely in shape and size. Liu et al. [30] created DesnowNet, a multi-stage network consisting of translucency recovery modules and residual generation modules. Thanks to its context-aware features and loss functions, DesnowNet outputs images with better illumination, color, and contrast distributions than traditional methods.

To address the problems caused by snowflake diversity, Chen et al. [15] proposed a hierarchical network optimized with a contradictory channel loss, which is based on contradicting the state of the prior channel. Zhang et al. [31] proposed a framework with four sub-networks, including their newly designed DDMSNet, to exploit semantic and depth information. After learning the semantic and geometric representations of snowy images, their method recovers clean images with clearer details.

2.2. Removal of Multiple Types of Adverse Weather

Only a few studies have proposed generic solutions for removing multiple types of adverse weather interference from visual images. Li et al. [32] used a neural architecture search framework to obtain a network that takes an image degraded by any type of weather condition as input and predicts a clean image as output. This All-in-One method was tested on three datasets of rainy, hazy, and snowy images and achieved performance comparable to or better than that of dedicated adverse weather removal models. Valanarasu et al. [33] proposed a transformer-based encoder–decoder network called TransWeather. After careful filtering, they created a dataset combining the Snow100K, Raindrop, and Outdoor-Rain corpora. Extensive experiments on multiple synthetic and real-world images showed that TransWeather can effectively remove any type of weather degradation present in an image.

2.3. Image-to-Image Translation and CycleGAN

If we regard adverse and clear weather as two different domains, the removal of weather effects can be viewed as an image-to-image translation process, whose objective is to transform an image from one domain into the other [34]. Early work on image-to-image translation applied conditional adversarial networks to learn the mapping between domains, which required paired datasets. This approach is not suitable for weather removal, however, because atmospheric lighting changes constantly, so we cannot collect images of different weather conditions while keeping the background identical at the pixel level. Unpaired image-to-image translation offers a possible solution. To preserve the attributes of the source image, as well as the relationships between objects, pioneering methods such as CycleGAN [35], DiscoGAN [36], and DualGAN [37] have employed GAN-based reconstruction objectives, such as the one shown in Equation (4):

$$\mathcal{L}_{rec} = \mathbb{E}_{a \sim A}\big[\lVert G_{BA}(G_{AB}(a)) - a \rVert_1\big] + \mathbb{E}_{b \sim B}\big[\lVert G_{AB}(G_{BA}(b)) - b \rVert_1\big] \qquad (4)$$

where $\mathcal{L}_{rec}$ represents the reconstruction loss, $G_{AB}$ represents the generator from domain A to domain B, $G_{BA}$ is the generator from domain B to domain A, and $a$ and $b$ represent samples from the two domains.
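A toy version of Equation (4) shows how the reconstruction loss is computed. The two "generators" here are hypothetical pixel-wise affine maps standing in for neural networks, and the L1 distance is averaged over pixels, as is common in implementations. When the generators are exact inverses the loss is zero; any mismatch is penalized.

```python
def l1(x, y):
    """Mean absolute difference between two equally sized flattened images."""
    return sum(abs(a - b) for a, b in zip(x, y)) / len(x)

def reconstruction_loss(g_ab, g_ba, samples_a, samples_b):
    """Equation (4): E_a ||G_BA(G_AB(a)) - a||_1 + E_b ||G_AB(G_BA(b)) - b||_1."""
    loss_a = sum(l1(g_ba(g_ab(a)), a) for a in samples_a) / len(samples_a)
    loss_b = sum(l1(g_ab(g_ba(b)), b) for b in samples_b) / len(samples_b)
    return loss_a + loss_b

# Hypothetical generators: simple pixel-wise maps in place of networks.
g_ab = lambda img: [0.5 * p + 0.25 for p in img]
g_ba_exact = lambda img: [(p - 0.25) / 0.5 for p in img]   # true inverse of g_ab
g_ba_biased = lambda img: [(p - 0.20) / 0.5 for p in img]  # slightly wrong inverse

domain_a = [[0.0, 0.5], [1.0, 0.25]]
domain_b = [[0.25, 0.5], [0.75, 0.5]]
print(reconstruction_loss(g_ab, g_ba_exact, domain_a, domain_b))
print(reconstruction_loss(g_ab, g_ba_biased, domain_a, domain_b))
```

With the biased inverse, every A-cycle is off by 0.1 and every B-cycle by 0.05, giving a total of about 0.15.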

The approach proposed in this paper is related to previous methods for removing degradation caused by multiple types of adverse weather, but it is not restricted to eliminating noise. Instead, we draw on image-to-image translation and formulate the problem as unpaired translation among multiple weather domains, which can transform images from any weather domain into the clear domain, or vice versa, without requiring ground truth.

3. Multiple Weather Translation GAN

We propose a novel multiple weather translation GAN, called MWTG, inspired by CycleGAN [35]. The goal of our work is to translate clean images of traffic scenes into versions with different types of weather degradation, and then to convert the weather-degraded images back into clean ones. The proposed method can also convert real-world, weather-degraded images into clearer ones. Overall, MWTG consists of three GANs for weather effect generation and one GAN for weather effect removal. Our rationale for a multi-weather model is that it is convenient to use a single model to remove all the types of adverse weather effects that drivers are likely to encounter.

3.1. General Pipeline

To explain the theoretical basis of our approach in more detail, suppose that haze, rain, and snow are three sub-domains of an adverse weather set $X = \{X_{haze}, X_{rain}, X_{snow}\}$ and that $Y$ represents the clear weather domain. As shown in Figure 1, there exist three mappings from adverse to clear weather: $X_{haze} \rightarrow Y$, $X_{rain} \rightarrow Y$, and $X_{snow} \rightarrow Y$. Conversely, there also exist three mappings from clear to adverse weather: $Y \rightarrow X_{haze}$, $Y \rightarrow X_{rain}$, and $Y \rightarrow X_{snow}$. To simplify the mapping process, we compress the mapping $X \rightarrow Y$ into one network. Therefore, our model requires four generators: $G_{haze}$, $G_{rain}$, and $G_{snow}$ for generating adverse weather effects (haze, rain, and snow, respectively) and $G_{clear}$ for adverse weather removal. Correspondingly, four discriminators ($D_{haze}$, $D_{rain}$, $D_{snow}$, and $D_{clear}$) are introduced to distinguish real images from generated, fake images. The pseudo-code of MWTG is provided in Algorithm 1.

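Algorithm 1 Multiple Weather Translation GAN (MWTG)

Input: Training data (A, B, C, D) ▹ In order of haze, rain, snow, and clear

Output: Generator networks $G_{haze}$, $G_{rain}$, $G_{snow}$, and $G_{clear}$

1: Initialize generators and discriminators

2: Define loss functions

3: Define optimizers for the generators and discriminators

4: while the maximum number of epochs has not been reached do

5: for data tuple (A, B, C, D) in data_loader do

6: Generate fake images: $G_{clear}(A)$, $G_{clear}(B)$, $G_{clear}(C)$ and $G_{haze}(D)$, $G_{rain}(D)$, $G_{snow}(D)$

7: Generate reconstructed images: $G_{haze}(G_{clear}(A))$, $G_{rain}(G_{clear}(B))$, $G_{snow}(G_{clear}(C))$ and $G_{clear}(G_{haze}(D))$, $G_{clear}(G_{rain}(D))$, $G_{clear}(G_{snow}(D))$

8: Update discriminators $D_{haze}$, $D_{rain}$, $D_{snow}$, and $D_{clear}$

9: Update generators $G_{haze}$, $G_{rain}$, $G_{snow}$, and $G_{clear}$

10: end for

11: end while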

Since we want to translate unpaired, real-world weather images, MWTG borrows the cycle consistency principle from pioneering works [35,38,39,40] to constrain the structure of the output images so that it remains the same as that of the input images. Therefore, after an image is translated by a weather removal or generation network, we can translate it back into its original domain using the corresponding inverse generator.

We use A, B, C, and D to represent the sets of hazy, rainy, snowy, and clear weather images, respectively. In each processing step, the image data flow along two paths simultaneously. On the one hand, real A, B, and C images are input to $G_{clear}$, which outputs fake clear images. These fake images are then input to $G_{haze}$, $G_{rain}$, and $G_{snow}$, respectively, to obtain the reconstructed adverse weather images. On the other hand, real D images are simultaneously input to $G_{haze}$, $G_{rain}$, and $G_{snow}$ to obtain fake adverse weather images, which then pass through $G_{clear}$ to obtain the reconstructed clear images.
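The two data paths described above can be sketched as a single routing function. Everything here is hypothetical: the four generators are placeholder callables rather than the ResNet-based networks of Section 3.2, and images are represented by their names so that the path each one takes through the generators is visible in the output.

```python
def mwtg_forward(real, gens):
    """Route real images through the four generators as in one MWTG step.

    real: {"haze": A, "rain": B, "snow": C, "clear": D}
    gens: {"haze": G_haze, "rain": G_rain, "snow": G_snow, "clear": G_clear}
    """
    out = {"fake_clear": {}, "rec_weather": {}, "fake_weather": {}, "rec_clear": {}}
    for w in ("haze", "rain", "snow"):
        # Adverse path: A/B/C -> G_clear -> fake clear -> G_w -> reconstructed A/B/C
        fake_clear = gens["clear"](real[w])
        out["fake_clear"][w] = fake_clear
        out["rec_weather"][w] = gens[w](fake_clear)
        # Clear path: D -> G_w -> fake weather image -> G_clear -> reconstructed D
        fake_weather = gens[w](real["clear"])
        out["fake_weather"][w] = fake_weather
        out["rec_clear"][w] = gens["clear"](fake_weather)
    return out

def make_tracer(name):
    """Hypothetical stand-in generator that records which network was applied."""
    return lambda img: f"{name}({img})"

gens = {w: make_tracer(f"G_{w}") for w in ("haze", "rain", "snow", "clear")}
real = {"haze": "A", "rain": "B", "snow": "C", "clear": "D"}
out = mwtg_forward(real, gens)
print(out["rec_weather"]["haze"])
print(out["rec_clear"]["snow"])
```

The reconstructed images produced by this routing are the inputs to the cycle consistency losses in Section 3.4.2.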

3.2. Weather Generators and Discriminators

As the backbone of each of the four MWTG generators, we use ResNet [41], whose residual blocks carry the previous output forward through skip connections; this design has proven effective for training deeper neural networks. The input image is first downsampled twice using large convolutional filters. At the reduced resolution, the feature maps pass through nine ResNet blocks, which produce denser representations with more channels. Similar to the encoder–decoder architecture used in [42], two transposed convolutional layers then follow to map the dense representations back to normal-size RGB images.
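The encoder–decoder structure of the generator can be checked with the standard output-size formulas for convolutions and transposed convolutions. The layer settings below are assumptions borrowed from the widely used CycleGAN ResNet generator (a 7×7 stride-1 stem, two 3×3 stride-2 downsampling convolutions, nine size-preserving residual blocks, and two 3×3 stride-2 transposed convolutions with output padding 1); MWTG's exact settings may differ. The sketch confirms that the output returns to the input resolution.

```python
def conv_out(size, kernel, stride, padding):
    """Output length of a convolution along one spatial axis."""
    return (size + 2 * padding - kernel) // stride + 1

def deconv_out(size, kernel, stride, padding, output_padding):
    """Output length of a transposed convolution along one spatial axis."""
    return (size - 1) * stride - 2 * padding + kernel + output_padding

def generator_shapes(height, width):
    """Spatial size after each stage, under the assumed CycleGAN-style settings."""
    shapes = [(height, width)]
    h, w = conv_out(height, 7, 1, 3), conv_out(width, 7, 1, 3)  # stem keeps the size
    shapes.append((h, w))
    for _ in range(2):  # two downsampling steps
        h, w = conv_out(h, 3, 2, 1), conv_out(w, 3, 2, 1)
        shapes.append((h, w))
    # Nine residual blocks use 3x3 convolutions with padding 1: size unchanged.
    for _ in range(2):  # two upsampling steps
        h, w = deconv_out(h, 3, 2, 1, 1), deconv_out(w, 3, 2, 1, 1)
        shapes.append((h, w))
    return shapes

print(generator_shapes(256, 256))
```

For a 256×256 input, the residual blocks operate on 64×64 feature maps, i.e., one sixteenth of the original pixel count.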

For the discriminators, we use simple three-layer convolutional neural networks in which the number of filters gradually increases. The last layer outputs a one-channel prediction map, which is passed to the criterion (loss) function. Because our datasets consist of high-resolution images, processing entire images would be time- and memory-consuming. Therefore, in the training stage, we crop the images into fixed-size pixel patches to reduce the computational burden, and these patches are then learned using PatchGANs [35,43,44].
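To see what each element of the discriminator's one-channel prediction map judges, one can compute its receptive field and the map size for a cropped patch. The sketch assumes three 4×4 convolutions with strides 2, 2, and 1 and padding 1, applied to a hypothetical 256×256 crop; these values are illustrative, since the exact layer settings and crop size are not listed above.

```python
def patchgan_geometry(crop, layers):
    """Return (output map size, receptive field) for a stack of square convolutions.

    layers: list of (kernel, stride, padding) tuples, applied in order.
    """
    size, field, jump = crop, 1, 1
    for kernel, stride, padding in layers:
        size = (size + 2 * padding - kernel) // stride + 1
        field += (kernel - 1) * jump  # each layer widens the field by (k - 1) input steps
        jump *= stride                # input-pixel distance between adjacent outputs
    return size, field

# Hypothetical three-layer discriminator applied to a 256 x 256 crop.
layers = [(4, 2, 1), (4, 2, 1), (4, 1, 1)]
print(patchgan_geometry(256, layers))
```

Under these assumptions, each of the 63×63 outputs classifies a 22×22 pixel region as real or fake, which is what makes PatchGANs cheap on high-resolution inputs.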

3.3. Weather Information Guidance

To obtain better results, we introduce a disentangled training strategy [24,45] that regards an image degraded by adverse weather as a composite of a weather layer and a clean background. We calculate the numerical distance between the input and output of each generator and store these distance values in a tensor with the same dimensions as the input image. We refer to this tensor as the weather layer.
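A minimal sketch of the weather layer follows. It assumes that the "numerical distance" is the per-pixel, per-channel absolute difference between a generator's input and output; the exact distance is not specified above. As required, the result has the same dimensions as the input image. The pixel values are hypothetical.

```python
def weather_layer(generator_input, generator_output):
    """Per-pixel |input - output| with the same [row][col][channel] shape as the input.

    The absolute difference is an assumed choice of distance.
    """
    return [
        [[abs(a - b) for a, b in zip(px_in, px_out)] for px_in, px_out in zip(row_in, row_out)]
        for row_in, row_out in zip(generator_input, generator_output)
    ]

hazy = [[[0.7, 0.7, 0.8], [0.6, 0.6, 0.7]]]     # 1 x 2 RGB image
dehazed = [[[0.4, 0.5, 0.6], [0.6, 0.6, 0.7]]]  # second pixel left unchanged
layer = weather_layer(hazy, dehazed)
print(layer)
```

Pixels the generator leaves untouched produce zeros, so the layer highlights where weather effects were added or removed.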

To provide additional input to the generator, we incorporate a spatial feature transform (SFT) that combines the weather layer feature with the extracted feature maps, allowing the weather layer to serve as guidance. SFT, first introduced by Wang et al. [46] for super-resolution image reconstruction, spatially fuses intermediate-layer features with the image's original features using affine transformations. We adopt the method proposed by Shao et al. [47] and use a two-layer convolution module to extract the condition map $\Psi$ from the weather layer. The extracted map is then fed into two convolutional layers to predict the modulation parameters $\gamma$ and $\beta$. Lastly, we use Equation (5) to obtain the shifted features:

$$\mathrm{SFT}(F \mid \gamma, \beta) = \gamma \odot F \oplus \beta \qquad (5)$$

where ⊙ denotes the element-wise product and ⊕ denotes element-wise summation. We use the feature maps of the penultimate convolutional layer of the GAN generator as the input $F$ to the SFT module. When the fake image output by the generator is similar to its input image, the elements of the weather layer are close to 0, which can lead to vanishing gradients. We therefore normalize the weather layer before it reaches the SFT module.
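The modulation in Equation (5), together with the normalization applied beforehand, can be sketched on a single flattened feature channel. The min–max normalization and the hand-picked mapping from the normalized guide to `gamma` and `beta` are assumptions for illustration only: in MWTG the modulation parameters are predicted by convolutional layers from the condition map, and the normalization method is not stated above.

```python
def min_max_normalize(values, eps=1e-8):
    """Rescale a near-zero weather layer to roughly [0, 1] (assumed normalization)."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo + eps) for v in values]

def sft(features, gamma, beta):
    """Equation (5): element-wise gamma * F + beta."""
    return [g * f + b for f, g, b in zip(features, gamma, beta)]

weather = [0.001, 0.002, 0.004]     # nearly zero before normalization
guide = min_max_normalize(weather)  # approximately [0.0, 0.333, 1.0]
features = [1.0, 2.0, 3.0]
# Hypothetical modulation parameters derived directly from the normalized guide.
gamma = [1.0 + g for g in guide]
beta = [0.5 * g for g in guide]
shifted = sft(features, gamma, beta)
print(shifted)
```

Without normalization, `gamma` and `beta` would stay close to their neutral values, and the weather layer would have almost no influence on the features.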

3.4. Loss Function

Three kinds of loss functions are used to formulate the MWTG model: adversarial loss, cycle consistency loss, and identity loss.

3.4.1. Adversarial Loss

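We use adversarial losses to learn four mappings: three from clear to adverse weather ($G_{haze}: Y \rightarrow X_{haze}$, $G_{rain}: Y \rightarrow X_{rain}$, and $G_{snow}: Y \rightarrow X_{snow}$) and one from adverse to clear weather ($G_{clear}: X \rightarrow Y$). The first three mappings can be expressed as shown in Equation (6), for $i \in \{haze, rain, snow\}$:

$$\mathcal{L}_{GAN}(G_i, D_i, Y, X_i) = \mathbb{E}_{x_i \sim p_{data}(x_i)}\big[\log D_i(x_i)\big] + \mathbb{E}_{y \sim p_{data}(y)}\big[\log\big(1 - D_i(G_i(y))\big)\big] \qquad (6)$$

where $G_i$ tries to generate images $G_i(y)$ that look similar to images from domain $X_i$, while $D_i$ aims to distinguish between the translated samples $G_i(y)$ and real samples $x_i$. The base of the logarithm is usually 2 or e.

The transformation from adverse to clear weather involves three components, one for each weather sub-domain, whose mean is calculated as follows:

$$\mathcal{L}_{GAN}(G_{clear}, D_{clear}, X, Y) = \frac{1}{3}\sum_{i} \Big(\mathbb{E}_{y \sim p_{data}(y)}\big[\log D_{clear}(y)\big] + \mathbb{E}_{x_i \sim p_{data}(x_i)}\big[\log\big(1 - D_{clear}(G_{clear}(x_i))\big)\big]\Big)$$

where averaging over the three sub-domains drives $G_{clear}$ to produce better clear images from haze, rain, and snow images alike, while $D_{clear}$ must keep identifying the fake images as the generator evolves.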
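Numerically, Equation (6) and the averaged objective for $G_{clear}$ reduce to log-likelihood terms over discriminator outputs. The sketch below uses hypothetical discriminator probabilities for real and generated images (in practice these come from the PatchGAN prediction maps), evaluates the objective for each weather generator, and averages the three sub-domain terms for $G_{clear}$. Natural logarithms are used; the base only rescales the loss.

```python
import math

def gan_value(d_real, d_fake, eps=1e-12):
    """E[log D(real)] + E[log(1 - D(fake))]: D maximizes this, G minimizes it."""
    real_term = sum(math.log(p + eps) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p + eps) for p in d_fake) / len(d_fake)
    return real_term + fake_term

# Hypothetical outputs of D_haze, D_rain, D_snow (probability that an image is real).
weather_terms = {
    "haze": gan_value(d_real=[0.9, 0.8], d_fake=[0.3, 0.2]),  # real A vs. G_haze(D)
    "rain": gan_value(d_real=[0.7, 0.6], d_fake=[0.5, 0.4]),  # real B vs. G_rain(D)
    "snow": gan_value(d_real=[0.8, 0.9], d_fake=[0.1, 0.2]),  # real C vs. G_snow(D)
}
# Hypothetical D_clear outputs: real D vs. G_clear applied to each weather type.
clear_terms = [
    gan_value(d_real=[0.85], d_fake=[0.4]),  # haze -> clear
    gan_value(d_real=[0.85], d_fake=[0.6]),  # rain -> clear
    gan_value(d_real=[0.85], d_fake=[0.3]),  # snow -> clear
]
clear_value = sum(clear_terms) / len(clear_terms)
print(weather_terms, clear_value)
```

A perfect discriminator drives the value toward 0, while a discriminator that outputs 0.5 everywhere yields 2 log 0.5, the equilibrium value of this objective.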

3.4.2. Cycle Consistency Loss

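The concept of cycle consistency loss was introduced in the CycleGAN paper [35]. It is calculated as the L1 norm between the input image and the reconstructed image, and it prevents the second generator from producing arbitrary images of the target domain that are unrelated to the input. An example of forward cycle consistency is shown in Figure 2, where images of each type of adverse weather are first translated into the clear domain and then restored to the original adverse weather images. For $i \in \{haze, rain, snow\}$, this process can be formulated as follows:

$$x_i \rightarrow G_{clear}(x_i) \rightarrow G_i\big(G_{clear}(x_i)\big) \approx x_i$$

Likewise, for backward cycle consistency, a clear image that is first translated into each weather domain should be restored to its input state, i.e.:

$$y \rightarrow G_i(y) \rightarrow G_{clear}\big(G_i(y)\big) \approx y$$

Accordingly, the cycle loss for each weather type $i$ is:

$$\mathcal{L}_{cyc}^{i} = \mathbb{E}_{x_i}\big[\lVert G_i(G_{clear}(x_i)) - x_i \rVert_1\big] + \mathbb{E}_{y}\big[\lVert G_{clear}(G_i(y)) - y \rVert_1\big]$$

To force the weather removal generator $G_{clear}$ to update at the same pace as the adverse weather generators, we compute the average of the three cycle losses as the loss of $G_{clear}$:

$$\mathcal{L}_{cyc}(G_{clear}) = \frac{1}{3}\sum_{i} \mathcal{L}_{cyc}^{i}$$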
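The cycle terms can be sketched in the same way. Given input images and their reconstructions (hypothetical values below), each weather type contributes a forward term (adverse image versus its reconstruction after passing through the clear generator and back) and a backward term (clear image versus its reconstruction after passing through the weather generator and back). The loss attributed to the clear generator is the average of the three per-weather cycle losses, which keeps it on the same scale as each adverse weather generator's loss.

```python
def l1(x, y):
    """Mean absolute difference between two flattened images."""
    return sum(abs(a - b) for a, b in zip(x, y)) / len(x)

def cycle_losses(inputs, reconstructions):
    """Per-weather cycle loss (forward + backward) and the averaged loss for G_clear.

    inputs / reconstructions: dict weather -> {"fwd": image, "bwd": image}
    "fwd" pairs x_i with G_i(G_clear(x_i)); "bwd" pairs y with G_clear(G_i(y)).
    """
    per_weather = {
        w: l1(reconstructions[w]["fwd"], inputs[w]["fwd"])
        + l1(reconstructions[w]["bwd"], inputs[w]["bwd"])
        for w in inputs
    }
    clear_loss = sum(per_weather.values()) / len(per_weather)
    return per_weather, clear_loss

inputs = {
    "haze": {"fwd": [0.75, 0.5], "bwd": [0.5, 0.5]},
    "rain": {"fwd": [0.25, 0.25], "bwd": [0.5, 0.5]},
    "snow": {"fwd": [1.0, 1.0], "bwd": [0.5, 0.5]},
}
recs = {
    "haze": {"fwd": [0.75, 0.25], "bwd": [0.5, 0.5]},
    "rain": {"fwd": [0.5, 0.25], "bwd": [0.25, 0.5]},
    "snow": {"fwd": [1.0, 1.0], "bwd": [0.5, 0.5]},
}
per_weather, clear_loss = cycle_losses(inputs, recs)
print(per_weather, clear_loss)
```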

3.4.3. Identity Loss

Identity loss was used in CycleGAN to preserve the color composition of images when translating paintings into realistic photos. We also find it useful for large weather images with obvious base color tones. The goal is to make each generator act as an identity mapping when it receives images from its own target domain as input. This identity loss can be expressed as:

$$\mathcal{L}_{idt}(G) = \mathbb{E}_{z \sim p_{data}(z)}\big[\lVert G(z) - z \rVert_1\big]$$

where $z$ is a sample from the target domain of generator $G$.

3.4.4. Overall Objective Function

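Based on the components described above, the overall objective function can be formulated as shown in Equation (10), where $\lambda_{cyc}$ and $\lambda_{idt}$ are weights that control the cycle consistency loss and identity loss, respectively:

$$\mathcal{L} = \mathcal{L}_{GAN} + \lambda_{cyc}\,\mathcal{L}_{cyc} + \lambda_{idt}\,\mathcal{L}_{idt} \qquad (10)$$

Here, $\mathcal{L}_{GAN}$, $\mathcal{L}_{cyc}$, and $\mathcal{L}_{idt}$ collect the adversarial, cycle consistency, and identity terms defined above for all four generators.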
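Putting the pieces together, the overall objective in Equation (10) is a weighted sum of the component losses. This sketch computes an identity loss for a hypothetical clear-weather generator that slightly darkens every pixel, then combines it with placeholder adversarial and cycle values. The weights passed in the usage line are placeholders, not a statement of which coefficient MWTG assigns to which term.

```python
def l1(x, y):
    """Mean absolute difference between two flattened images."""
    return sum(abs(a - b) for a, b in zip(x, y)) / len(x)

def identity_loss(generator, target_images):
    """Average ||G(z) - z||_1 when G receives images already in its target domain."""
    return sum(l1(generator(z), z) for z in target_images) / len(target_images)

def total_objective(adv, cyc, idt, lambda_cyc, lambda_idt):
    """Equation (10): adversarial + lambda_cyc * cycle + lambda_idt * identity."""
    return adv + lambda_cyc * cyc + lambda_idt * idt

# Hypothetical clear-weather generator that darkens every pixel by 0.125.
g_clear = lambda img: [p - 0.125 for p in img]
clear_images = [[0.5, 0.75], [0.25, 1.0]]
idt = identity_loss(g_clear, clear_images)  # 0.125 for this toy generator
total = total_objective(adv=1.5, cyc=0.125, idt=idt, lambda_cyc=10.0, lambda_idt=2.0)
print(idt, total)
```

Because the weights multiply the L1 terms directly, a large $\lambda_{cyc}$ makes structural fidelity dominate the adversarial signal.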

4. Evaluation

In this section, we present the results of our qualitative and quantitative evaluations and also discuss the results when the mixed dataset or the new RDSBW dataset is used as an input.

4.1. Datasets

4.1.1. Cityscapes Weather Datasets

Cityscapes [48] is an annotated corpus of 5000 driving scene images captured in urban areas. Later studies simulated various weather effects on this dataset using information such as depth maps and atmospheric scattering models. Foggy Cityscapes [49] includes three haze densities for each image, representing visibilities of 150 m, 300 m, and 600 m, respectively. Rain Cityscapes [50] is based on 295 images, each of which was used to generate 36 different combinations of haze concentration and rain type. The training and testing sets of Snow Cityscapes [31] each consist of 2000 pairs of images, and the images in both sets share the same resolution.

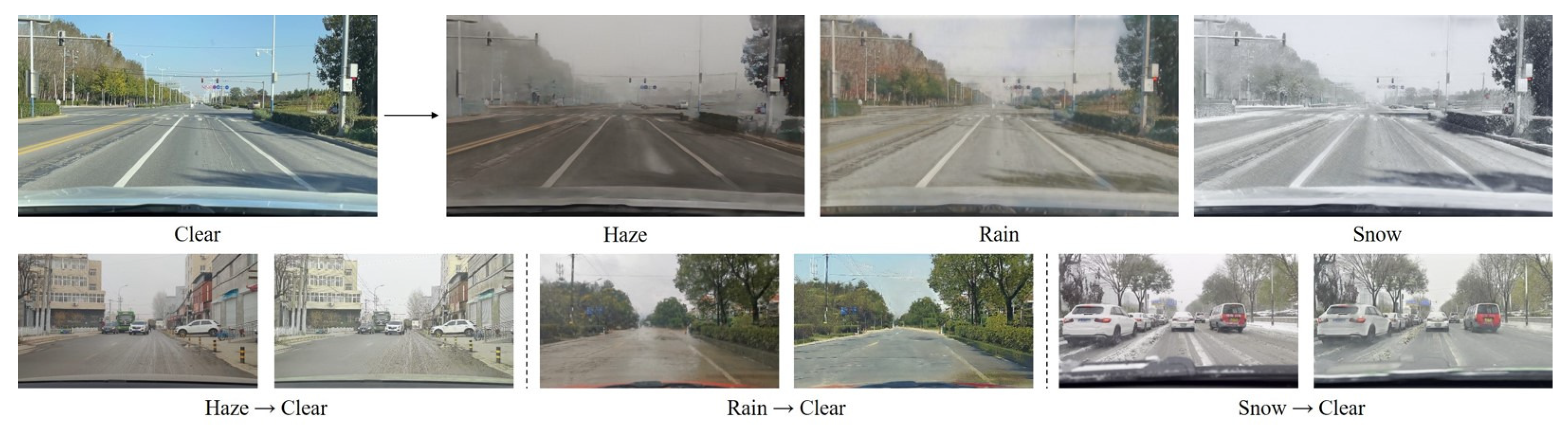

To train MWTG, we reorganized the datasets described above into a Cityscapes weather dataset, as shown in Figure 3. The synthetic weather datasets reuse the same backgrounds and depth maps multiple times to simulate different weather intensities. Since low-intensity weather does not degrade visual applications much and high-intensity weather occurs relatively infrequently, we used only the 300 m haze images from Foggy Cityscapes and selected 12 types of rain patterns from Rain Cityscapes. To keep all the images in the training set at the same resolution, which is important for reducing domain differences, we resized the Snow Cityscapes images to the resolution of the other two datasets using linear interpolation.

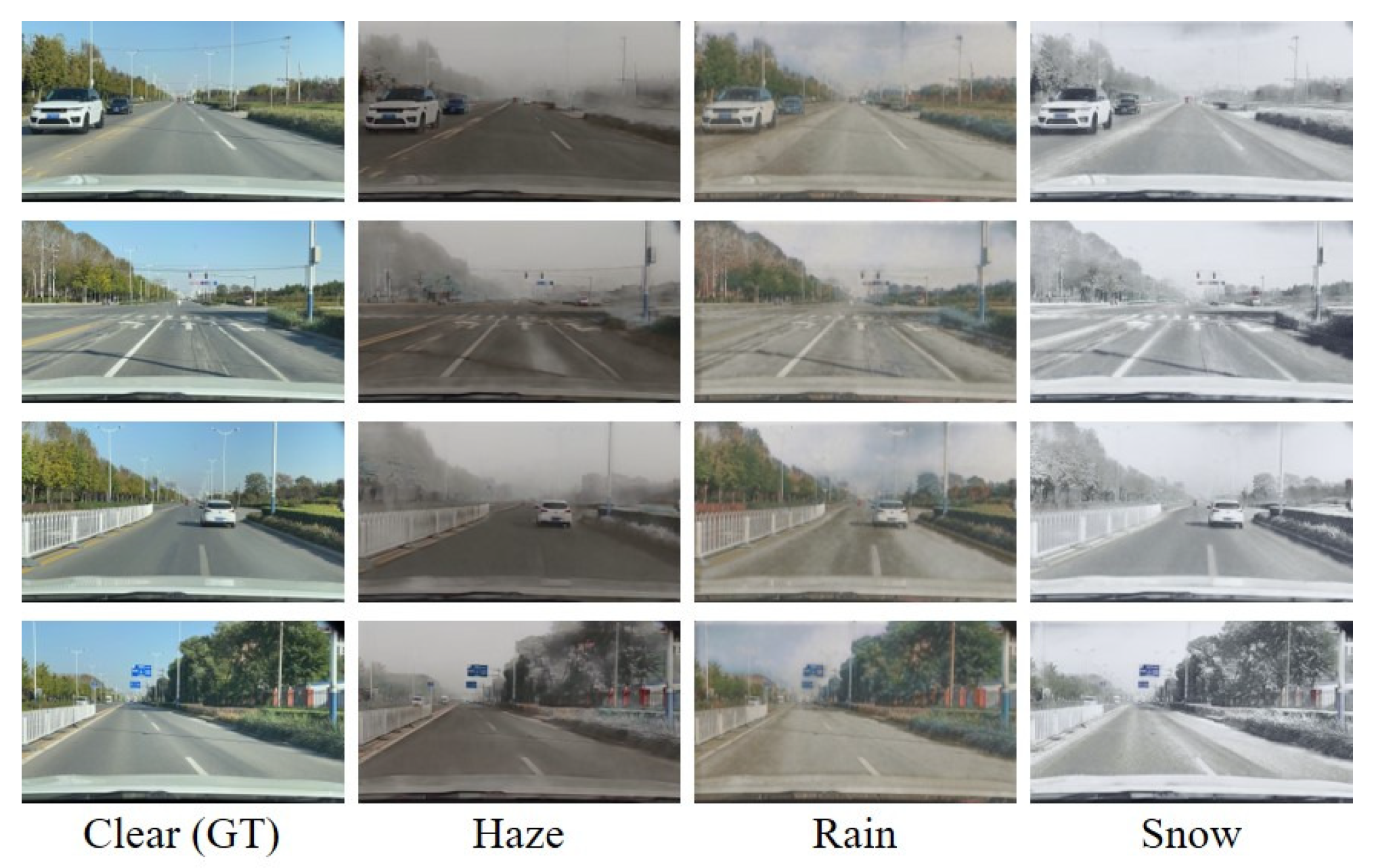

4.1.2. Realistic Driving Scenes under Bad Weather Dataset

RDSBW is a new dataset that we created; it includes 2831 clear, 2052 hazy, 4171 rainy, and 4777 snowy images. We selected high-quality 1080p images from driving scene videos recorded in urban settings. Figure 4 shows example RDSBW images from each set.

4.2. Implementation Details

We used the PyTorch framework for training, testing, and image preprocessing. Training was performed on two NVIDIA RTX A6000 graphics cards with a batch size of 4. We trained the MWTG model for 200 epochs on each dataset to ensure convergence, using the Adam optimizer and a step learning rate schedule. In addition, we set the two loss weights to 10 and the extra identity loss weight to 2.

4.3. Evaluation Results and Comparisons

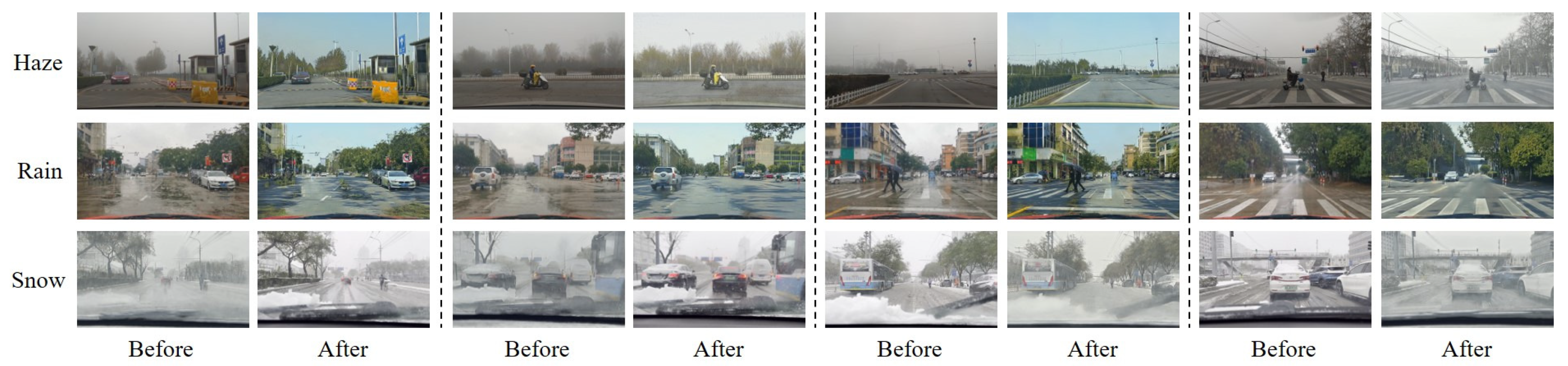

4.3.1. Qualitative Evaluation Using RDSBW Data

We first conducted a qualitative experiment using MWTG on the RDSBW dataset. Sample weather generation results are shown in Figure 5. MWTG can translate an unseen clear image into hazy, rainy, and snowy images without changing the original background context. The proposed method seemed especially effective for adding haze and snow, for the following three reasons. First, the color of the image shifted according to the type of adverse weather effect being added. Second, the weather effects resembled those observed in real scenes, since MWTG does not simply add an extra layer to the input image but instead applies appropriately generated weather effects to each region of the image, such as the sky, roads, and trees. Third, MWTG appears to take semantic information into account. For example, the wires connecting the power and telephone poles were partly hidden under hazy conditions, and the lane markings were covered by ice and snow under snowy conditions. However, for rainy weather, the generated results were not ideal, because the patterns in the rainy source images were not conspicuous enough for the model to learn them effectively. We will address this problem in future work by collecting more useful rainy weather image data.

For the weather removal results, we again observe accurate color transformation and realistic scene translation, as shown in Figure 6. However, MWTG occasionally fabricated content, generating artifacts in some cases, most notably fake grass inserted in the middle of the road when removing rainy weather effects. We believe this is due to limitations of the generation process: when weather effects are very extensive, the network cannot determine the original context without some guessing.

4.3.2. Qualitative and Quantitative Evaluation Using Cityscapes Weather Data

Here, we present qualitative results on the Cityscapes corpora and, since these include ground truth images without any adverse weather phenomena, quantitative results as well. As shown in Figure 7, MWTG performed well at removing weather effects in the qualitative experiment. This is because, in this setting, the only difference between the adverse and clear weather domains was the weather effects. Therefore, even though the data were unpaired during training, MWTG could still infer what is hidden behind the haze, rain streaks, or snowflakes and recover the original images.

In our quantitative evaluation, we compare MWTG with state-of-the-art single weather removal methods, each on its specific task. For de-hazing, we compared MWTG with DehazeNet [25], MSCNN [26], AODNet [27], and GridDehazeNet [51]. For de-raining, we compared MWTG with RCDNet [52], MPRNet [53], PReNet [54], and RESCAN [55]. For de-snowing, we compared it with RESCAN [55], SPANet [56], and DesnowNet [30]. Note that although RESCAN appears in both the de-raining and de-snowing comparisons, it is still categorized as a single weather removal method, since it must be retrained for each removal task. In contrast, MWTG performs all these tasks with the same model.

PSNR and SSIM were used to compare the performance of each model on the Cityscapes images. The results are tabulated in Table 1, Table 2, and Table 3.
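For reference, PSNR compares a restored image with its ground truth through the mean squared error, $\mathrm{PSNR} = 10\log_{10}(\mathrm{MAX}^2/\mathrm{MSE})$. A minimal sketch for images scaled to [0, 1] (so MAX = 1) follows, with hypothetical pixel values. SSIM, which compares local luminance, contrast, and structure statistics, is more involved and is typically computed with an existing implementation such as `skimage.metrics.structural_similarity` in scikit-image.

```python
import math

def psnr(restored, ground_truth, max_value=1.0):
    """Peak signal-to-noise ratio in dB for two equally sized flattened images."""
    mse = sum((r - g) ** 2 for r, g in zip(restored, ground_truth)) / len(ground_truth)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)

gt = [0.25, 0.5, 0.75, 1.0]
restored = [0.35, 0.4, 0.85, 0.9]  # every pixel off by 0.1, so MSE = 0.01
print(round(psnr(restored, gt), 2))
```

Because PSNR depends only on pixel-wise error, it complements SSIM, which is more sensitive to structural distortions.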

These results show that MWTG achieved similar or better PSNR and SSIM than the other de-hazing and de-raining methods. However, for the de-snowing task, MWTG was impaired by differences in pixel resolution and therefore did not achieve satisfactory performance. This is because the conventional methods were evaluated on the original Snow Cityscapes images at their native, lower resolution, whereas we resized these images to match the resolution of the other two datasets when training MWTG. As a result, MWTG deals with images that are 16 times larger than those processed by the conventional methods.

4.4. Evaluation Using Perception Algorithm

To verify the suitability of MWTG for visual applications, we tested it with the state-of-the-art pedestrian detector ACSP on Foggy Cityscapes images. An example of MWTG's de-hazing performance is shown in Figure 8, and ACSP detection results before and after de-hazing are shown in Table 4. The detection results clearly improved after the images were de-hazed using MWTG. For further investigation, we ran ACSP on the validation set of Foggy Cityscapes and tabulated the log-average miss rate over false positives per image (FPPI); the results are shown in Table 5. We also applied a state-of-the-art object detector based on Cascade R-CNN [57] to Weather Cityscapes, as shown in Figure 9, and observed that the number of detections improved under different weather conditions.
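The log-average miss rate summarizes a miss-rate-versus-FPPI curve in a single number. One common definition, popularized by the Caltech pedestrian benchmark, samples the miss rate at nine FPPI values evenly spaced in log space between $10^{-2}$ and $10^{0}$ and takes their geometric mean. The sketch below follows that definition with a simple step-wise lookup; the curve points are hypothetical, and ACSP's own evaluation code may differ in details such as how unreachable FPPI values are handled.

```python
import math

def log_average_miss_rate(fppi, miss_rate, num_points=9):
    """Geometric mean of miss rates sampled at log-spaced FPPI values in [1e-2, 1e0].

    fppi, miss_rate: curve points sorted by increasing FPPI.
    At each reference FPPI, take the miss rate of the last curve point whose
    FPPI does not exceed it; if no such point exists, assume a miss rate of 1.0.
    """
    refs = [10 ** (-2 + 2 * k / (num_points - 1)) for k in range(num_points)]
    samples = []
    for ref in refs:
        candidates = [m for f, m in zip(fppi, miss_rate) if f <= ref]
        samples.append(candidates[-1] if candidates else 1.0)
    # Clamp to avoid log(0) when a detector misses nothing at some operating point.
    return math.exp(sum(math.log(max(m, 1e-10)) for m in samples) / len(samples))

# Hypothetical curve with a constant 20% miss rate over the whole FPPI range.
print(log_average_miss_rate([0.001, 0.1, 1.0], [0.2, 0.2, 0.2]))
```

Lower values are better; averaging in log space keeps the low-FPPI operating points, which matter most for safety-critical detection, from being swamped by the high-FPPI region.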

4.5. Discussion

Based on the results of our evaluations, we can confirm that MWTG is able to translate images of multiple types of adverse weather into clear images, since a constraint on cycle-consistency loss allows the background context to remain unchanged. We now discuss in more detail the capabilities and drawbacks of weather generation and removal using MWTG.

In the experiment with the RDSBW dataset, the number of samples for each weather condition was unbalanced. Normally, models learn better conversion rules with more training samples. However, for hazy weather, the model achieved satisfactory results with only about 2000 training samples. In contrast, for rainy weather, the translation results were less accurate even though the model was trained with about 4000 samples. This is because rain creates more complex patterns in images. For example, rain streaks in the air are thin, so they are difficult for the camera to capture. Furthermore, rain is often accompanied by high humidity, so there is often fog in the background of rainy images. When encountering finer and more varied distinctions, our model tends to learn simpler representations, which is why the rainy images generated by MWTG look like an intermediate between haze and snow. We also observe that MWTG's weather generation performance is superior to its weather removal performance. The CycleGAN model [35] is good at translation tasks involving color and texture changes, and since MWTG is based on CycleGAN, it should inherit this ability. However, even though we intuitively regard the generation and removal of weather as two separate tasks, our model treats both as translation tasks. This means that the primary target of the model is not erasing noise, such as blocks of haze or flakes of snow on occluded objects, but rather adding appearance changes, such as a layer of snow on the road or a layer of haze in the distance. Note that this difference in conversion performance is less obvious on the Cityscapes data, where domain variance is minimal because all the images are synthesized from the same dataset.

5. Conclusions

In this work, we have explored a solution to the visibility degradation problem that intelligent vehicles encounter when operating under adverse weather conditions, which can lead to malfunctioning of the perception module. Our proposed, dual-purpose framework, called Multiple Weather Translation GAN (MWTG), is able to perform adverse weather generation and removal tasks simultaneously. In particular, we trained our image translation model using unpaired data. Three weather generators were used to create adverse weather effects on images of normal driving scenes obtained from video datasets, while a fourth clear weather generator was used to recover clear images by removing hazy, rainy, and snowy noise. To avoid translation deviation, we added a spatial feature transform layer to fuse the feature maps of the front-end network, as an information guide to the subsequent network.

A qualitative evaluation of MWTG using our own RDSBW dataset and qualitative and quantitative evaluations using reorganized images from the Cityscapes and Cityscapes weather datasets showed that MWTG can achieve promising de-noising performance. Moreover, the results of a practical experiment showed that our model boosted the performance of state-of-the-art pedestrian detector ACSP when tested using the Foggy Cityscapes images.

In the future, we intend to expand the range of adverse weather that the model can handle to include strong light and nighttime scenes, without complicating the present MWTG framework.

Author Contributions

Conceptualization, H.Y. and Y.Z.; methodology, H.Y.; formal analysis, A.C.; writing–original draft preparation, H.Y.; supervision, K.T. All authors have read and agreed to the published version of the manuscript.

Funding

This work was funded by the Tokai National Higher Education and Research System (THERS) “Interdisciplinary Frontier Next Generation Researcher Scholarship” (RG211057), supported by the Ministry of Education, Culture, Sports, Science and Technology (MEXT) of Japan and Nagoya University. This work was supported by JSPS KAKENHI Grant Number JP21H04892 and JP21K12073.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Lee, Y.; Jeon, J.; Ko, Y.; Jeon, B.; Jeon, M. Task-driven deep image enhancement network for autonomous driving in bad weather. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp. 13746–13753.

2. Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In Proceedings of the European Conference on Computer Vision, ECCV 2020, Glasgow, UK, 20–28 August 2020; Springer: Cham, Switzerland, 2020; pp. 213–229.

3. Stadler, D.; Beyerer, J. Improving multiple pedestrian tracking by track management and occlusion handling. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 10958–10967.

4. Zhang, S.; Abdel-Aty, M.; Wu, Y.; Zheng, O. Pedestrian crossing intention prediction at red-light using pose estimation. IEEE Trans. Intell. Transp. Syst. 2021, 23, 2331–2339.

5. Zhang, Y.; Carballo, A.; Yang, H.; Takeda, K. Perception and sensing for autonomous vehicles under adverse weather conditions: A survey. ISPRS J. Photogramm. Remote Sens. 2023, 196, 146–177.

6. Narasimhan, S.G.; Nayar, S.K. Vision and the atmosphere. Int. J. Comput. Vis. 2002, 48, 233–254.

7. He, K.; Sun, J.; Tang, X. Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 33, 2341–2353.

8. Berman, D.; Treibitz, T.; Avidan, S. Single image dehazing using haze-lines. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 42, 720–734.

9. Kang, L.W.; Lin, C.W.; Fu, Y.H. Automatic single-image-based rain streaks removal via image decomposition. IEEE Trans. Image Process. 2011, 21, 1742–1755.

10. Li, Y.; Tan, R.T.; Guo, X.; Lu, J.; Brown, M.S. Single image rain streak decomposition using layer priors. IEEE Trans. Image Process. 2017, 26, 3874–3885.

11. Pei, S.C.; Tsai, Y.T.; Lee, C.Y. Removing rain and snow in a single image using saturation and visibility features. In Proceedings of the 2014 IEEE International Conference on Multimedia and Expo Workshops (ICMEW), Chengdu, China, 14–18 July 2014; pp. 1–6.

12. Zheng, X.; Liao, Y.; Guo, W.; Fu, X.; Ding, X. Single-image-based rain and snow removal using multi-guided filter. In Proceedings of the International Conference on Neural Information Processing, ICONIP 2013, Daegu, Republic of Korea, 3–7 November 2013; Springer: Berlin/Heidelberg, Germany, 2013; pp. 258–265.

13. Fattal, R. Single image dehazing. ACM Trans. Graph. (TOG) 2008, 27, 1–9.

14. Yang, W.; Tan, R.T.; Wang, S.; Fang, Y.; Liu, J. Single image deraining: From model-based to data-driven and beyond. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 43, 4059–4077.

15. Chen, W.T.; Fang, H.Y.; Hsieh, C.L.; Tsai, C.C.; Chen, I.; Ding, J.J.; Kuo, S.Y. All snow removed: Single image desnowing algorithm using hierarchical dual-tree complex wavelet representation and contradict channel loss. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 4196–4205.

16. Ren, W.; Ma, L.; Zhang, J.; Pan, J.; Cao, X.; Liu, W.; Yang, M.H. Gated fusion network for single image dehazing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3253–3261.

17. Qin, X.; Wang, Z.; Bai, Y.; Xie, X.; Jia, H. FFA-Net: Feature fusion attention network for single image dehazing. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 11908–11915.

18. Dong, H.; Pan, J.; Xiang, L.; Hu, Z.; Zhang, X.; Wang, F.; Yang, M.H. Multi-scale boosted dehazing network with dense feature fusion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 2157–2167.

19. Hong, M.; Xie, Y.; Li, C.; Qu, Y. Distilling image dehazing with heterogeneous task imitation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 3462–3471.

20. Yang, W.; Tan, R.T.; Feng, J.; Liu, J.; Guo, Z.; Yan, S. Deep joint rain detection and removal from a single image. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1357–1366.

21. Fu, X.; Huang, J.; Ding, X.; Liao, Y.; Paisley, J. Clearing the skies: A deep network architecture for single-image rain removal. IEEE Trans. Image Process. 2017, 26, 2944–2956.

22. Zhang, H.; Sindagi, V.; Patel, V.M. Image de-raining using a conditional generative adversarial network. IEEE Trans. Circuits Syst. Video Technol. 2019, 30, 3943–3956.

23. Li, R.; Cheong, L.F.; Tan, R.T. Heavy rain image restoration: Integrating physics model and conditional adversarial learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 1633–1642.

24. Yang, H.; Carballo, A.; Takeda, K. Disentangled Bad Weather Removal GAN for Pedestrian Detection. In Proceedings of the 2022 IEEE 95th Vehicular Technology Conference (VTC2022-Spring), Helsinki, Finland, 19–22 June 2022; pp. 1–6.

25. Cai, B.; Xu, X.; Jia, K.; Qing, C.; Tao, D. DehazeNet: An end-to-end system for single image haze removal. IEEE Trans. Image Process. 2016, 25, 5187–5198.

26. Ren, W.; Liu, S.; Zhang, H.; Pan, J.; Cao, X.; Yang, M.H. Single image dehazing via multi-scale convolutional neural networks. In Proceedings of the European Conference on Computer Vision, ECCV 2016, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Cham, Switzerland, 2016; pp. 154–169.

27. Li, B.; Peng, X.; Wang, Z.; Xu, J.; Feng, D. AOD-Net: All-in-one dehazing network. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 4770–4778.

28. Zhang, H.; Patel, V.M. Densely connected pyramid dehazing network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3194–3203.

29. Wu, H.; Qu, Y.; Lin, S.; Zhou, J.; Qiao, R.; Zhang, Z.; Xie, Y.; Ma, L. Contrastive learning for compact single image dehazing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 10551–10560.

30. Liu, Y.F.; Jaw, D.W.; Huang, S.C.; Hwang, J.N. DesnowNet: Context-aware deep network for snow removal. IEEE Trans. Image Process. 2018, 27, 3064–3073.

31. Zhang, K.; Li, R.; Yu, Y.; Luo, W.; Li, C. Deep dense multi-scale network for snow removal using semantic and depth priors. IEEE Trans. Image Process. 2021, 30, 7419–7431.

32. Li, R.; Tan, R.T.; Cheong, L.F. All in one bad weather removal using architectural search. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 3175–3185.

33. Valanarasu, J.M.J.; Yasarla, R.; Patel, V.M. TransWeather: Transformer-based restoration of images degraded by adverse weather conditions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 2353–2363.

34. Solanki, A.; Nayyar, A.; Naved, M. Generative Adversarial Networks for Image-to-Image Translation; Academic Press: Cambridge, MA, USA, 2021.

35. Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2223–2232.

36. Kim, T.; Cha, M.; Kim, H.; Lee, J.K.; Kim, J. Learning to discover cross-domain relations with generative adversarial networks. In Proceedings of the International Conference on Machine Learning, PMLR, Sydney, Australia, 6–11 August 2017; pp. 1857–1865.

37. Yi, Z.; Zhang, H.; Tan, P.; Gong, M. DualGAN: Unsupervised dual learning for image-to-image translation. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2849–2857.

38. Huang, Q.X.; Guibas, L. Consistent shape maps via semidefinite programming. Comput. Graph. Forum 2013, 32, 177–186.

39. Wang, F.; Huang, Q.; Guibas, L.J. Image co-segmentation via consistent functional maps. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 849–856.

40. Zhou, T.; Krahenbuhl, P.; Aubry, M.; Huang, Q.; Efros, A.A. Learning dense correspondence via 3D-guided cycle consistency. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 117–126.

41. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

42. Hinton, G.E.; Salakhutdinov, R.R. Reducing the dimensionality of data with neural networks. Science 2006, 313, 504–507.

43. Isola, P.; Zhu, J.Y.; Zhou, T.; Efros, A.A. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1125–1134.

44. Ledig, C.; Theis, L.; Huszár, F.; Caballero, J.; Cunningham, A.; Acosta, A.; Aitken, A.; Tejani, A.; Totz, J.; Wang, Z.; et al. Photo-realistic single image super-resolution using a generative adversarial network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4681–4690.

45. Ye, Y.; Chang, Y.; Zhou, H.; Yan, L. Closing the loop: Joint rain generation and removal via disentangled image translation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 2053–2062.

46. Wang, X.; Yu, K.; Dong, C.; Loy, C.C. Recovering realistic texture in image super-resolution by deep spatial feature transform. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 606–615.

47. Shao, Y.; Li, L.; Ren, W.; Gao, C.; Sang, N. Domain adaptation for image dehazing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 2808–2817.

48. Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The Cityscapes dataset for semantic urban scene understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 3213–3223.

49. Sakaridis, C.; Dai, D.; Van Gool, L. Semantic foggy scene understanding with synthetic data. Int. J. Comput. Vis. 2018, 126, 973–992.

50. Hu, X.; Fu, C.W.; Zhu, L.; Heng, P.A. Depth-attentional features for single-image rain removal. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 8022–8031.

51. Liu, X.; Ma, Y.; Shi, Z.; Chen, J. GridDehazeNet: Attention-based multi-scale network for image dehazing. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 7314–7323.

52. Wang, H.; Xie, Q.; Zhao, Q.; Meng, D. A model-driven deep neural network for single image rain removal. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 3103–3112.

53. Zamir, S.W.; Arora, A.; Khan, S.; Hayat, M.; Khan, F.S.; Yang, M.H.; Shao, L. Multi-stage progressive image restoration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 14821–14831.

54. Ren, D.; Zuo, W.; Hu, Q.; Zhu, P.; Meng, D. Progressive image deraining networks: A better and simpler baseline. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3937–3946.

55. Li, X.; Wu, J.; Lin, Z.; Liu, H.; Zha, H. Recurrent squeeze-and-excitation context aggregation net for single image deraining. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 254–269.

56. Wang, T.; Yang, X.; Xu, K.; Chen, S.; Zhang, Q.; Lau, R.W. Spatial attentive single-image deraining with a high quality real rain dataset. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 12270–12279.

57. Cai, Z.; Vasconcelos, N. Cascade R-CNN: High quality object detection and instance segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2019, 43, 1483–1498.

Figure 1.

Architecture of the proposed Multiple Weather Translation GAN (MWTG). MWTG consists of four generators corresponding to four mappings: , , , and . All the generators use a ResNet encoder–decoder with nine residual blocks. Each associated discriminator is a three-layer CNN that outputs a one-channel prediction map. The weather layers, obtained by subtracting the fake output from the input, are used as guidance information to improve the results. Note that represent hazy, rainy, snowy, and clear weather, respectively, and that are the respective networks used for generating these weather effects.
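The weather-layer guidance mentioned in the caption amounts to a pixel-wise difference between a generator's input and its output. The following is a minimal sketch, not the authors' implementation: it assumes images are floating-point NumPy arrays of identical shape, and the helper name `weather_layer` and the toy data are hypothetical. The toy rainy image follows the additive rain model of Equation (2) (rainy image = clean background + rain layer).

```python
import numpy as np


def weather_layer(inp: np.ndarray, fake: np.ndarray) -> np.ndarray:
    """Hypothetical helper: weather layer = generator input - generator output.

    For the de-weathering direction, `inp` is the adverse-weather image and
    `fake` is the generated clear image, so the difference keeps only what
    the generator removed (haze, rain streaks, or snowflakes).
    """
    if inp.shape != fake.shape:
        raise ValueError("input and fake output must have the same shape")
    return inp.astype(np.float32) - fake.astype(np.float32)


# Toy data: build a rainy image additively, as in the rain model of Equation (2).
rng = np.random.default_rng(0)
background = rng.uniform(0.0, 0.8, size=(4, 4, 3)).astype(np.float32)
rain = np.zeros_like(background)
rain[:, 1, :] = 0.2  # a single vertical "streak" in column 1
rainy = background + rain

# If a de-raining generator returned the clean background exactly,
# the weather layer would recover the rain layer.
layer = weather_layer(rainy, background)
print(np.allclose(layer, rain))  # True
```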


Figure 2.

MWTG translates clear images into hazy, rainy, or snowy images using three different generators, creating a set of images representing different weather conditions (top row). In contrast, only one generator is needed to translate all three types of adverse weather images into clear ones (bottom row).
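The generator layout in this figure (three clear-to-weather generators and one shared weather-to-clear generator) can be sketched as a small routing structure. This is a hypothetical illustration rather than MWTG's code: the class `WeatherTranslator`, its method names, and the L1 cycle-reconstruction check (in the spirit of cycle-consistent unpaired translation [35]) are assumptions, and the toy generators are closed-form stand-ins for trained networks. The toy haze generator uses the standard atmospheric scattering form of Equation (1), hazy = radiance · t + A · (1 − t), with an assumed constant transmittance t and atmospheric light A.

```python
from dataclasses import dataclass
from typing import Callable, Dict

import numpy as np

Image = np.ndarray
Generator = Callable[[Image], Image]


@dataclass
class WeatherTranslator:
    """Hypothetical container mirroring the figure's generator layout."""

    to_weather: Dict[str, Generator]  # one generator per weather, e.g. "haze", "rain", "snow"
    to_clear: Generator  # a single generator shared by all weather types

    def add_weather(self, clear: Image, weather: str) -> Image:
        return self.to_weather[weather](clear)

    def remove_weather(self, degraded: Image) -> Image:
        # The same generator is applied regardless of the weather type.
        return self.to_clear(degraded)

    def cycle_l1(self, clear: Image, weather: str) -> float:
        """Mean absolute error of clear -> weather -> clear (assumed cycle term)."""
        recon = self.remove_weather(self.add_weather(clear, weather))
        return float(np.mean(np.abs(clear - recon)))


# Toy stand-ins: constant transmittance T and atmospheric light A (assumed values).
T, A = 0.6, 0.9


def toy_haze(clear: Image) -> Image:
    return clear * T + A * (1.0 - T)


def toy_rain(clear: Image) -> Image:
    return np.clip(clear + 0.1, 0.0, 1.0)  # uniform additive "rain" layer


def toy_dehaze(degraded: Image) -> Image:
    # Exact inverse of toy_haze only; it is NOT a valid de-rainer.
    return (degraded - A * (1.0 - T)) / T


model = WeatherTranslator(to_weather={"haze": toy_haze, "rain": toy_rain}, to_clear=toy_dehaze)
x = np.linspace(0.0, 0.8, 12).reshape(2, 2, 3)
print(model.cycle_l1(x, "haze"))  # close to 0
print(model.cycle_l1(x, "rain"))  # clearly greater than 0
```

The rain case illustrates why, under this layout, the single shared weather-to-clear generator has to learn to undo all three degradations: a mapping that inverts only haze leaves a large cycle error on rainy inputs.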


Figure 3.

Sample scenes from the Cityscapes and rearranged Cityscapes weather datasets. We combined images selected from Foggy Cityscapes [49], Rain Cityscapes [50], Snow Cityscapes [31], and the original Cityscapes [48] datasets into one corpus consisting of 5000, 3540, 6000, and 5000 images from each dataset, respectively. The number of samples differs across datasets because our synthesis strategy varied by weather type. The original resolution of all the images is

.


Figure 4.

Sample scenes from the Realistic Driving Scenes under Bad Weather (RDSBW) dataset. We first captured driving-scene videos under different weather conditions using a camera with resolution mounted behind a car windshield. We then selected sharp and unique images and manually categorized them by weather type. The “haze” set contains 2052 images, the “rain” set contains 4171 images, the “snow” set contains 4777 images, and the “clear” set contains 2831 images. Note that the images are uncorrelated by location.
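The per-weather image counts stated in the Figure 3 and Figure 4 captions can be tallied to see how balanced each unpaired corpus is. The snippet below only re-adds the numbers given in those captions; the dictionary and function names are illustrative.

```python
# Image counts per weather domain, copied from the Figure 3 and Figure 4 captions.
cityscapes_weather = {"haze": 5000, "rain": 3540, "snow": 6000, "clear": 5000}
rdsbw = {"haze": 2052, "rain": 4171, "snow": 4777, "clear": 2831}


def summarize(name: str, counts: dict) -> int:
    """Print each domain's share of the corpus and return the corpus size."""
    total = sum(counts.values())
    shares = ", ".join(f"{k} {v / total:.1%}" for k, v in counts.items())
    print(f"{name}: {total} images ({shares})")
    return total


total_cs = summarize("Cityscapes weather corpus", cityscapes_weather)  # 19540 images
total_rd = summarize("RDSBW", rdsbw)  # 13831 images
```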


Figure 5.

Weather generation results for RDSBW. Even though it is trained without paired images, MWTG can still translate clear images into each of the three adverse weather conditions without corrupting the background content.


Figure 6.

Weather removal results for the RDSBW dataset, showing examples of MWTG’s translation of adverse weather images into clear weather images. Although MWTG was unable to recover objects and buildings hidden behind dense haze, it did not insert spurious objects.


Figure 7.

Weather removal results for the Cityscapes datasets. Although MWTG is trained with an unpaired paradigm, the synthesized Cityscapes weather datasets, unlike RDSBW, have ground truth in the original Cityscapes images. As a result, even when objects are occluded by haze, rain, or snowflakes, MWTG can still recover the original Cityscapes scenes and generate clear images.


Figure 8.

Application of MWTG on Foggy Cityscapes using a state-of-the-art (SOTA) pedestrian detector.


Figure 9.

Application of MWTG on Weather Cityscapes using a state-of-the-art (SOTA) object detector.


Table 1.

Comparison of de-hazing results on Foggy Cityscapes.


| Type | Method | Venue | PSNR (dB) | SSIM |
|---|---|---|---|---|
| Task-specific | DehazeNet [25] | TIP | 14.971 | 0.487 |
|  | MSCNN [26] | ECCV | 18.994 | 0.859 |
|  | AOD-Net [27] | ICCV | 15.446 | 0.631 |
|  | GridDehazeNet [51] | ICCV | 23.72 | 0.922 |
|  | Previous Work [24] | VTC | 24.071 | 0.915 |
| Multi-task | MWTG | - | 23.844 | 0.911 |
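PSNR, reported in Tables 1–4, follows the standard definition PSNR = 10 · log10(MAX² / MSE) in dB. The sketch below is a generic NumPy implementation for images scaled to [0, 1] (MAX = 1); it is not the evaluation script used for these tables, and choices such as color space, cropping, or 8-bit quantization, which affect the exact values, are not specified in this section.

```python
import numpy as np


def psnr(reference: np.ndarray, estimate: np.ndarray, max_value: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB: 10 * log10(max_value**2 / MSE)."""
    if reference.shape != estimate.shape:
        raise ValueError("images must have the same shape")
    diff = reference.astype(np.float64) - estimate.astype(np.float64)
    mse = float(np.mean(diff ** 2))
    if mse == 0.0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))


# A uniform error of 0.1 on a [0, 1] image gives MSE = 0.01, i.e. 20 dB.
clear = np.full((8, 8, 3), 0.5)
restored = clear + 0.1
print(round(psnr(clear, restored), 3))  # 20.0
```

Because MAX is fixed, every 10 dB gain is a tenfold reduction in MSE; for example, the 2.702 dB gap between MWTG (25.16 dB) and Previous Work [24] (22.458 dB) on Rain Cityscapes corresponds to roughly 1.86 times lower MSE, assuming both were evaluated under the same protocol.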

Table 2.

Comparison of de-raining results on Rain Cityscapes.


| Type | Method | Venue | PSNR (dB) | SSIM |
|---|---|---|---|---|
| Task-specific | RCDNet [52] | CVPR | 20.39 | 0.6498 |
|  | MPRNet [53] | CVPR | 20.10 | 0.6815 |
|  | PReNet [54] | CVPR | 20.48 | 0.6598 |
|  | RESCAN [55] | ECCV | 20.44 | 0.6681 |
|  | Previous Work [24] | VTC | 22.458 | 0.886 |
| Multi-task | MWTG | - | 25.16 | 0.911 |

Table 3.

Comparison of de-snowing results on Snow Cityscapes.


| Type | Method | Venue | PSNR (dB) | SSIM |
|---|---|---|---|---|
| Task-specific | RESCAN [55] | ECCV | 33.63 | 0.9627 |
|  | SPANet [56] | CVPR | 35.73 | 0.9741 |
|  | DesnowNet [30] | TIP | 33.58 | 0.9382 |
|  | Previous Work [24] | VTC | 27.42 | 0.871 |
| Multi-task | MWTG | - | 25.233 | 0.858 |

Table 4.

Weather generation results on Cityscapes.


| Type | Weather | PSNR (dB) | SSIM |
|---|---|---|---|
| Multi-task | Haze | 21.091 | 0.924 |
|  | Rain | 21.375 | 0.849 |
|  | Snow | 19.021 | 0.679 |

Table 5.

Log-average miss rate over false positives per image (FPPI) for the ACSP pedestrian detector on Foggy Cityscapes, before and after de-hazing with MWTG (lower is better).


| Data | Reasonable | Bare | Partial | Heavy |
|---|---|---|---|---|
| Foggy (before) | 23.73% | 16.32% | 25.93% | 58.70% |
| De-hazed (after) | 20.65% | 14.98% | 21.30% | 58.70% |
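The log-average miss rate in Table 5 is, in the Caltech/CityPersons protocol, obtained by sampling the miss rate at nine FPPI values evenly spaced in log space over [10^-2, 10^0] and taking their geometric mean. The sketch below assumes that protocol and a miss-rate/FPPI curve given as FPPI-sorted lists; the function name, the step interpolation, and the fallback miss rate of 1.0 when no curve point exists below a reference FPPI are assumptions, not the exact evaluation code used with ACSP.

```python
import math
from bisect import bisect_right
from typing import Sequence


def log_average_miss_rate(fppi: Sequence[float], miss_rate: Sequence[float]) -> float:
    """Geometric mean of the miss rate sampled at nine log-spaced FPPI points.

    `fppi` must be sorted in ascending order and aligned with `miss_rate`.
    At each reference FPPI we take the miss rate of the last curve point whose
    FPPI does not exceed it (step interpolation); if no such point exists, we
    assume a miss rate of 1.0.
    """
    if len(fppi) != len(miss_rate):
        raise ValueError("fppi and miss_rate must have the same length")
    refs = [10.0 ** (-2.0 + 0.25 * i) for i in range(9)]  # 1e-2 ... 1e0
    samples = []
    for ref in refs:
        idx = bisect_right(fppi, ref) - 1
        mr = miss_rate[idx] if idx >= 0 else 1.0
        samples.append(max(mr, 1e-10))  # guard against log(0)
    return math.exp(sum(math.log(s) for s in samples) / len(samples))


# Toy curve: a constant 20% miss rate yields a log-average miss rate of 20%.
curve_fppi = [0.001, 0.01, 0.1, 1.0, 10.0]
curve_mr = [0.2] * 5
print(f"{log_average_miss_rate(curve_fppi, curve_mr):.2%}")  # 20.00%
```

Read with this metric, the Reasonable-setting change in Table 5 from 23.73% to 20.65% is a reduction of 3.08 percentage points, while the Heavy-occlusion setting is unchanged at 58.70%.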

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).