Enhancing System Performance through Objective Feature Scoring of Multiple Persons’ Breathing Using Non-Contact RF Approach

Abstract

1. Introduction

- Unlike Wi-Fi-based RF sensing, the developed system can adopt multiple wireless standards.

- Introducing optimal feature scoring methods improved the performance of classical feature extraction approaches for building ML models.

- This study is the first to consider multi-person breathing monitoring using RF sensing by exploiting SDR technology to offer a portable and adaptable solution.

2. Related Work

2.1. Radar-Based RF Sensing

2.2. Wi-Fi-Based Breathing Sensing

2.3. SDR-Based Breathing Sensing

3. Design Methodology

3.1. Wireless Signal Sensing

3.1.1. Transmitter

3.1.2. Wireless Channel

3.1.3. Receiver

3.2. Signal Preprocessing

3.2.1. Subcarrier Selection

3.2.2. Outlier Removal

3.2.3. Smoothening

3.2.4. Normalization

3.3. Breathing Monitoring

3.4. Breathing Classification

3.4.1. Optimal Feature Scoring

- (a) Feature extraction

- (b) Feature selection

  - I. Minimum redundancy maximum relevance (MRMR) algorithm

  - II. Principal component analysis (PCA)

Algorithm 1. MRMR algorithm: pseudocode for optimal feature selection
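The body of Algorithm 1 is not reproduced here, so the following is only a minimal sketch of a greedy MRMR selection, not the authors' exact procedure. It assumes the common "relevance minus mean redundancy" criterion, estimates mutual information from quantile-binned features, and expects integer class labels; the function names `discretize`, `mutual_information`, and `mrmr_select` and the default of 8 bins are illustrative choices.

```python
import numpy as np


def discretize(x, bins=8):
    """Map a continuous feature to integer bin labels using quantile edges."""
    edges = np.quantile(x, np.linspace(0, 1, bins + 1)[1:-1])
    return np.digitize(x, edges)


def mutual_information(a, b):
    """Plug-in mutual information (in nats) of two non-negative integer label arrays."""
    joint = np.zeros((a.max() + 1, b.max() + 1))
    np.add.at(joint, (a, b), 1)          # joint histogram of label pairs
    joint /= joint.sum()
    pa = joint.sum(axis=1, keepdims=True)
    pb = joint.sum(axis=0, keepdims=True)
    nz = joint > 0
    return float(np.sum(joint[nz] * np.log(joint[nz] / (pa @ pb)[nz])))


def mrmr_select(X, y, k, bins=8):
    """Greedily pick k feature indices by relevance minus mean redundancy."""
    Xd = np.column_stack([discretize(X[:, j], bins) for j in range(X.shape[1])])
    n_features = Xd.shape[1]
    k = min(k, n_features)
    # Relevance: mutual information between each feature and the class label.
    relevance = np.array([mutual_information(Xd[:, j], y) for j in range(n_features)])
    selected = [int(np.argmax(relevance))]
    redundancy_sum = np.zeros(n_features)
    while len(selected) < k:
        last = selected[-1]
        # Accumulate redundancy with the most recently selected feature.
        redundancy_sum += np.array(
            [mutual_information(Xd[:, j], Xd[:, last]) for j in range(n_features)]
        )
        score = relevance - redundancy_sum / len(selected)
        score[selected] = -np.inf        # never re-select a feature
        selected.append(int(np.argmax(score)))
    return selected
```

With this criterion an exact duplicate of an already selected feature is penalised heavily, so a less redundant feature is normally chosen before it even though both are equally relevant to the label.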

3.4.2. Breathing Patterns Classification

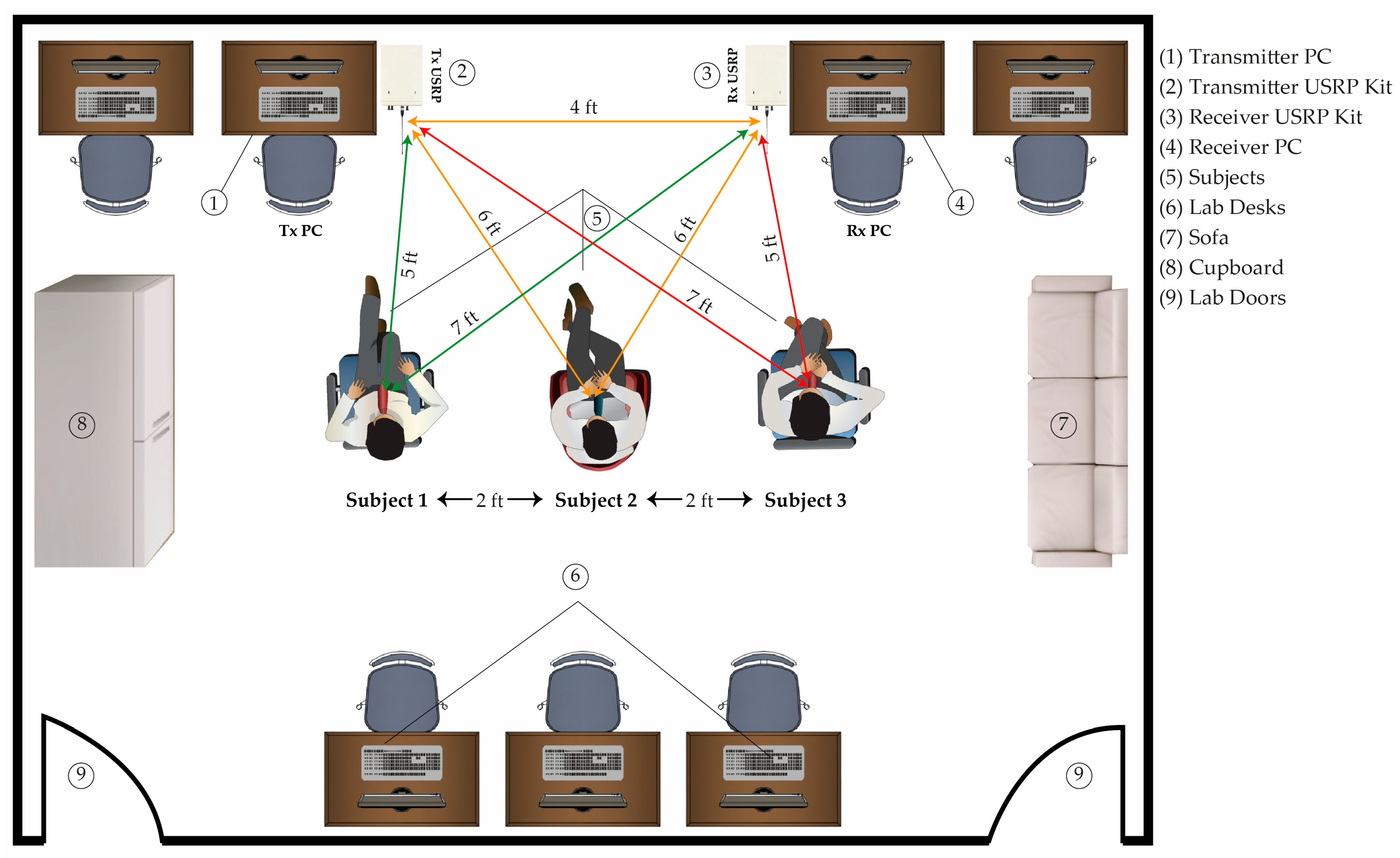

4. Experimental Setup

5. Results and Discussions

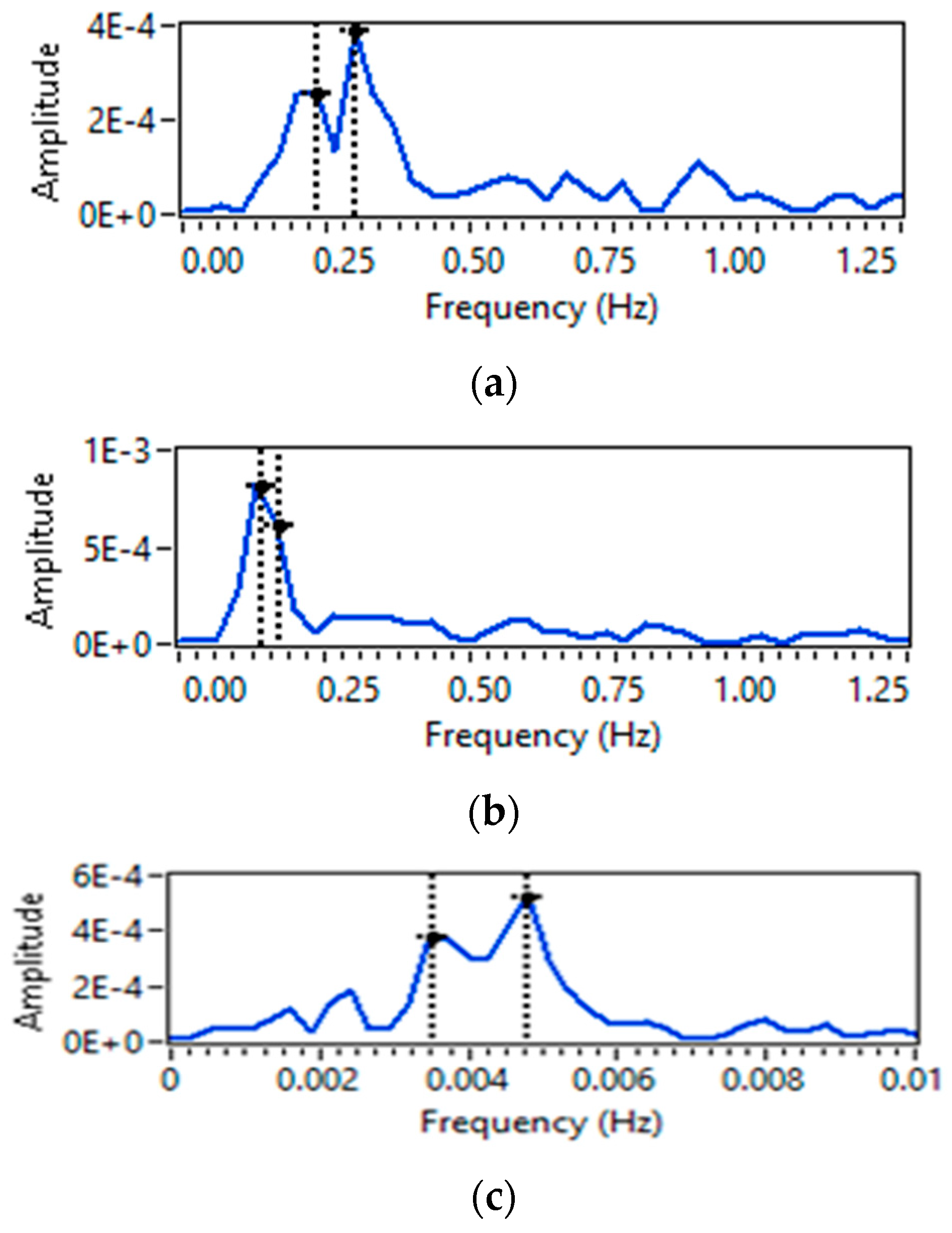

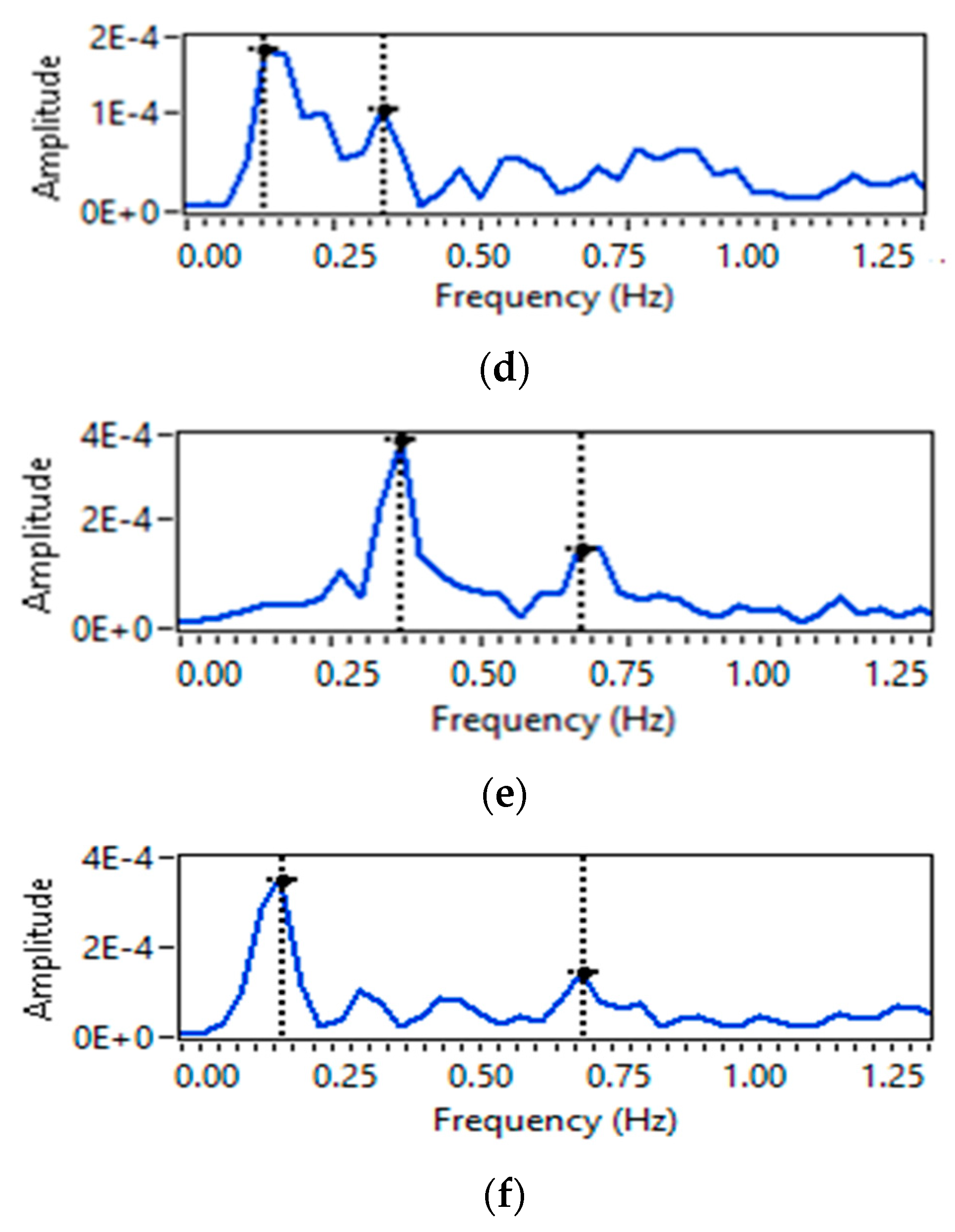

5.1. Breathing Pattern Extraction

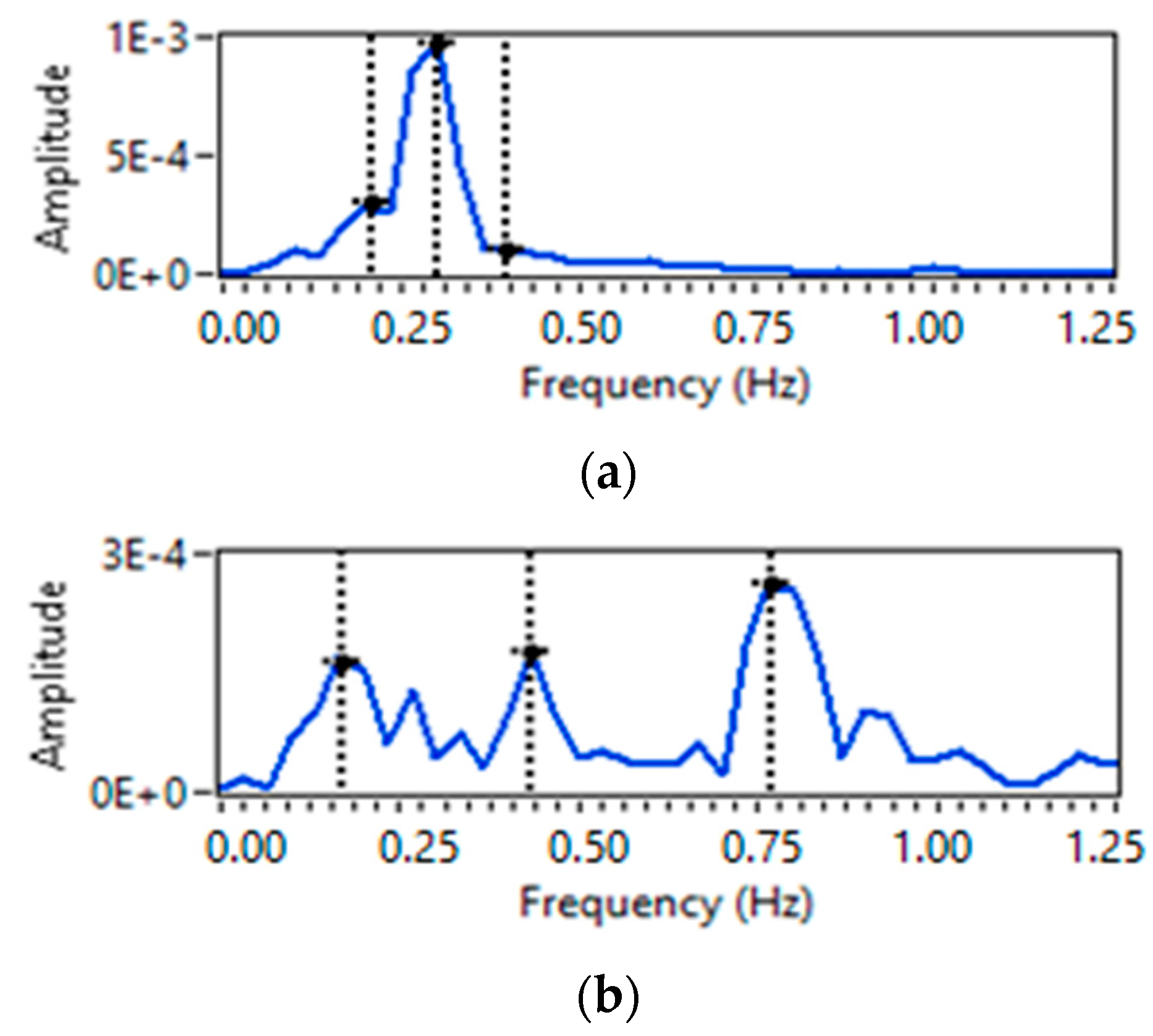

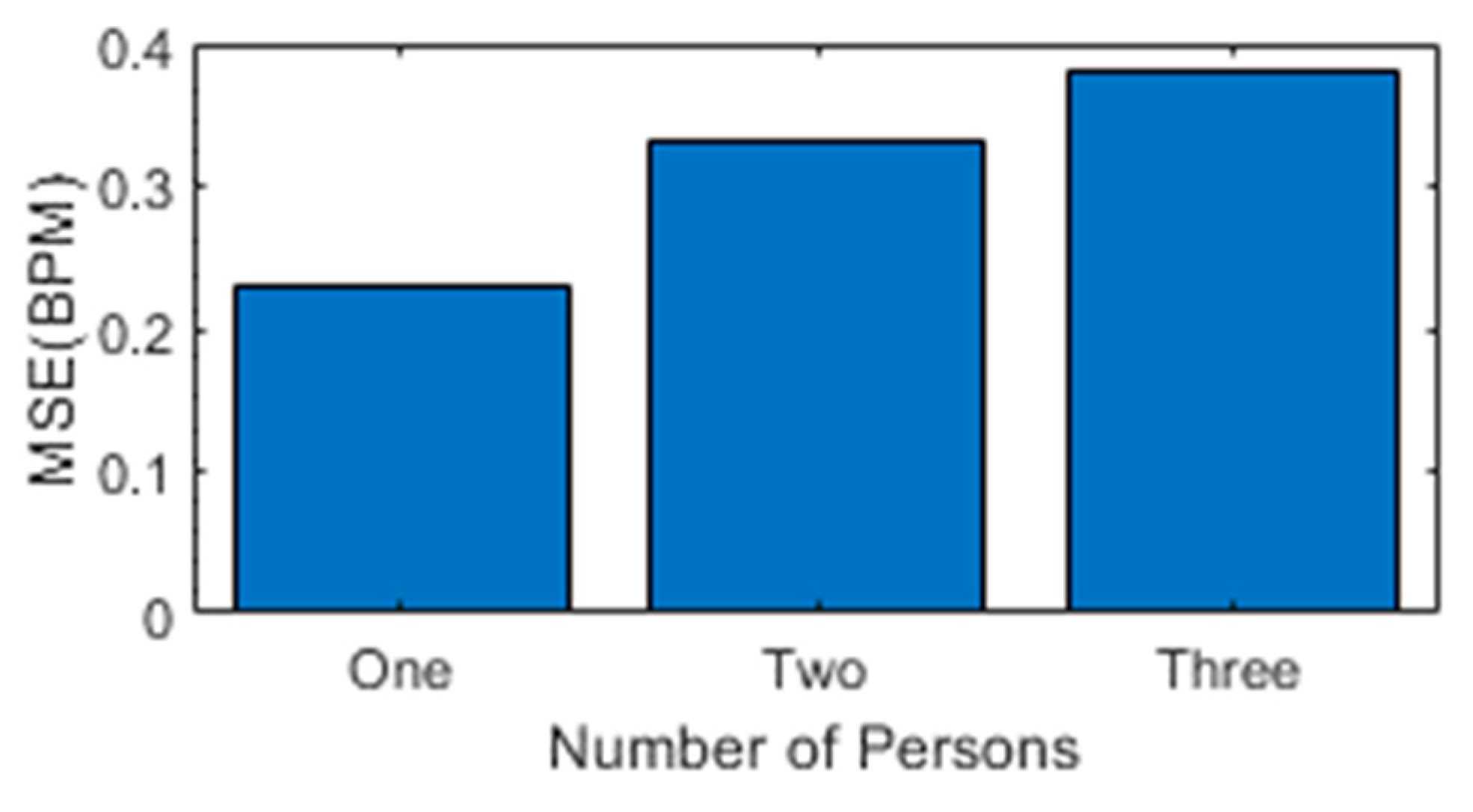

5.2. Breath Rate Extraction

5.3. Comparison with Wearable Sensor

5.4. Breathing Patterns Classification

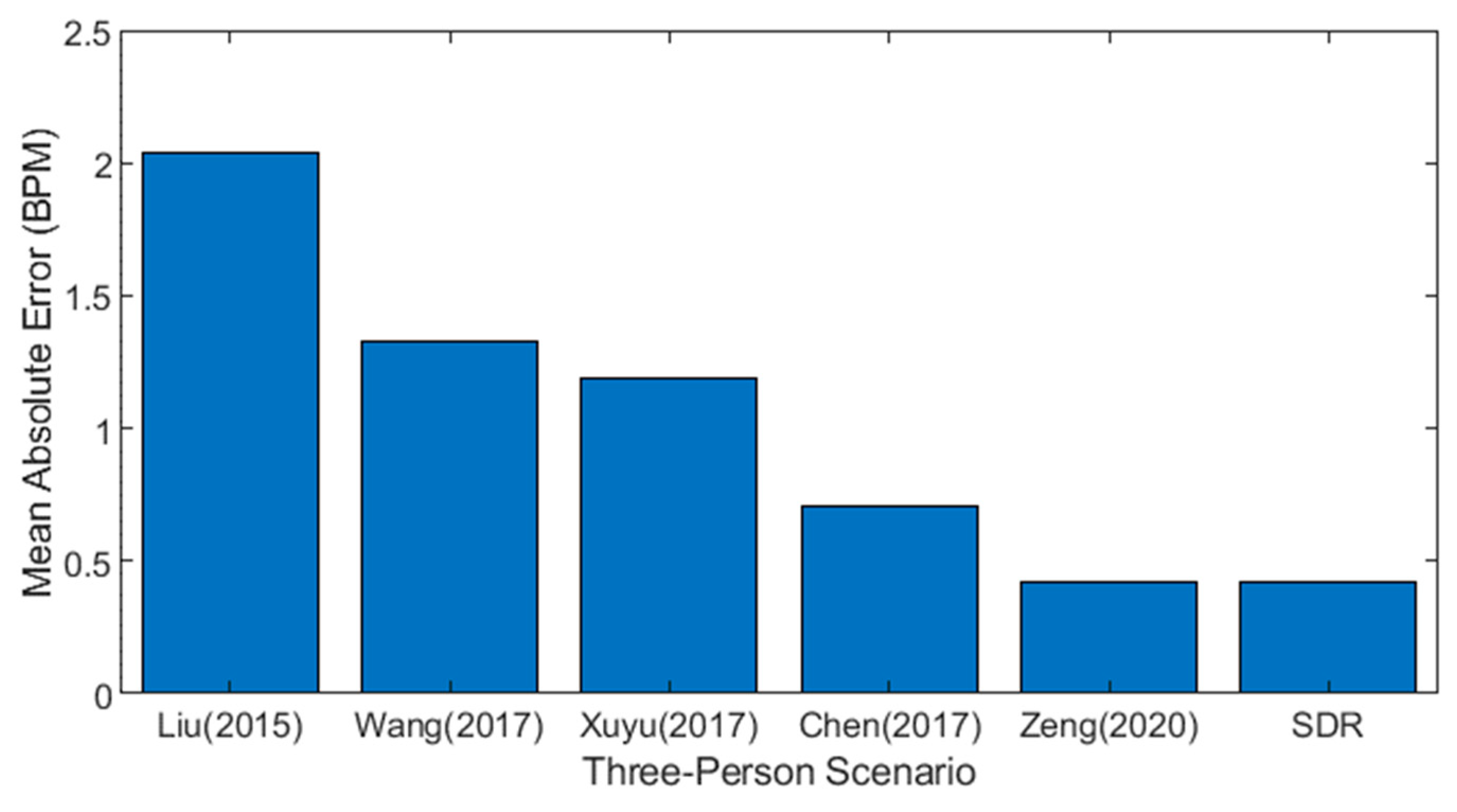

5.5. Comparison with Previous Approaches

6. Conclusions and Future Recommendations

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| No. | Statistical Feature | Detail | Equation |
|---|---|---|---|
| 1. | Mean | Data mean | |
| 2. | Standard deviation | Data dispersion relative to mean | |
| 3. | Peak-to-peak | Max. to min. value difference | |
| 4. | RMS | Root mean square | |
| 5. | Kurtosis | Frequency peaks distribution | |
| 6. | Skewness | Symmetry in data distribution | |
| 7. | Shape Factor | Square root of variance | |
| 8. | Crest Factor | Peak height value to RMS value | |
| 9. | Impulse Factor | Peak height value to mean value | |
| 10. | Entropy | Measure of randomness of data | |
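As an illustration of how the ten statistical features could be computed from one window of a preprocessed breathing signal, here is a minimal NumPy sketch. The equation column of the table did not survive, so the definitions below are the conventional signal-processing ones and are an assumption; they may differ from the authors' formulas. In particular, shape factor is taken as RMS over mean absolute value, impulse factor as peak over mean absolute value, and entropy as the Shannon entropy of a 16-bin histogram. The function name `breathing_features` is hypothetical.

```python
import numpy as np


def breathing_features(x, bins=16):
    """Return ten statistical features for a 1-D signal window (assumed definitions)."""
    x = np.asarray(x, dtype=float)
    mean = x.mean()
    std = x.std()                       # population standard deviation
    centered = x - mean
    rms = np.sqrt(np.mean(x ** 2))
    peak = np.max(np.abs(x))
    mean_abs = np.mean(np.abs(x))
    # Histogram-based Shannon entropy (bits); the bin count is an arbitrary choice.
    counts, _ = np.histogram(x, bins=bins)
    p = counts[counts > 0] / counts.sum()
    return {
        "mean": mean,
        "std": std,
        "peak_to_peak": x.max() - x.min(),
        "rms": rms,
        "kurtosis": np.mean(centered ** 4) / std ** 4,
        "skewness": np.mean(centered ** 3) / std ** 3,
        "shape_factor": rms / mean_abs,
        "crest_factor": peak / rms,
        "impulse_factor": peak / mean_abs,
        "entropy": -np.sum(p * np.log2(p)),
    }
```

A constant window has zero standard deviation, so kurtosis and skewness are undefined for it; a real pipeline would need to guard against that case.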

| No. | Age (years) | Height (in) | Weight (kg) | BMI (kg/m²) |
|---|---|---|---|---|
| 1. | 24 | 68 | 70 | 23.5 |
| 2. | 26 | 68 | 76 | 25.5 |
| 3. | 28 | 70 | 65 | 20.6 |
| 4. | 31 | 69 | 52 | 16.9 |
| 5. | 31 | 70 | 51 | 16.1 |
| 6. | 31 | 68 | 65 | 21.8 |
| 7. | 32 | 70 | 83 | 26.3 |
| 8. | 33 | 61 | 91 | 37.9 |
| 9. | 35 | 62 | 88 | 35.5 |
| 10. | 37 | 68 | 84 | 28.2 |
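Because the table mixes inches and kilograms, the BMI column is easiest to check by converting height to metres first. The short sketch below does this, assuming the standard definition BMI = weight (kg) / height (m)²; the helper name `bmi` and the tuple layout are illustrative.

```python
INCH_TO_M = 0.0254  # exact conversion factor


def bmi(weight_kg, height_in):
    """Body mass index in kg/m^2 from weight in kg and height in inches."""
    height_m = height_in * INCH_TO_M
    return weight_kg / height_m ** 2


# (height in inches, weight in kg, BMI as reported in the table)
subjects = [
    (68, 70, 23.5), (68, 76, 25.5), (70, 65, 20.6), (69, 52, 16.9), (70, 51, 16.1),
    (68, 65, 21.8), (70, 83, 26.3), (61, 91, 37.9), (62, 88, 35.5), (68, 84, 28.2),
]

for height_in, weight_kg, reported in subjects:
    print(f"{height_in} in, {weight_kg} kg -> {bmi(weight_kg, height_in):.1f} (reported {reported})")
```

Under this definition every recomputed value rounds to the figure listed in the table.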

| ML Algorithm | Parameter | Without Feature Selection | Using MRMR Algorithm | Using PCA |
|---|---|---|---|---|
| Fine Gaussian SVM | Accuracy (%) | 92.7 | 93.2 | 93.7 |
| Fine Gaussian SVM | Training Time (s) | 43.53 | 40.949 | 41.04 |
| Fine Gaussian SVM | Prediction Speed (obs/s) | ~49,000 | ~7,400 | ~3,700 |
| Medium KNN | Accuracy (%) | 89.8 | 92.7 | 92.3 |
| Medium KNN | Training Time (s) | 81.461 | 64.55 | 62.086 |
| Medium KNN | Prediction Speed (obs/s) | ~17,000 | ~48,000 | ~12,000 |
| Wide Neural Network | Accuracy (%) | 91.7 | 93.8 | 93.6 |
| Wide Neural Network | Training Time (s) | 392.32 | 324.43 | 329.13 |
| Wide Neural Network | Prediction Speed (obs/s) | ~99,000 | ~260,000 | ~82,000 |
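To make the effect of feature selection easier to read off, the accuracy and training-time figures from the table can be turned into relative changes against the "without feature selection" baseline. The sketch below only rearranges the reported numbers; the dictionary layout and the helper `pct_change` are illustrative.

```python
# Values copied from the table: (without selection, MRMR, PCA)
results = {
    "Fine Gaussian SVM": {"accuracy": (92.7, 93.2, 93.7), "train_s": (43.53, 40.949, 41.04)},
    "Medium KNN": {"accuracy": (89.8, 92.7, 92.3), "train_s": (81.461, 64.55, 62.086)},
    "Wide Neural Network": {"accuracy": (91.7, 93.8, 93.6), "train_s": (392.32, 324.43, 329.13)},
}


def pct_change(baseline, value):
    """Relative change of value with respect to baseline, in percent."""
    return 100.0 * (value - baseline) / baseline


for model, r in results.items():
    base_acc, mrmr_acc, pca_acc = r["accuracy"]
    base_t, mrmr_t, pca_t = r["train_s"]
    print(
        f"{model}: accuracy {mrmr_acc - base_acc:+.1f} pts (MRMR), "
        f"{pca_acc - base_acc:+.1f} pts (PCA); training time "
        f"{pct_change(base_t, mrmr_t):+.1f}% (MRMR), {pct_change(base_t, pca_t):+.1f}% (PCA)"
    )
```

Prediction speed is left out because the table reports it only approximately, and it does not improve uniformly: for the Fine Gaussian SVM it is lower with both MRMR and PCA than without feature selection.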


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Rehman, M.; Shah, R.A.; Ali, N.A.A.; Khan, M.B.; Shah, S.A.; Alomainy, A.; Hayajneh, M.; Yang, X.; Imran, M.A.; Abbasi, Q.H. Enhancing System Performance through Objective Feature Scoring of Multiple Persons’ Breathing Using Non-Contact RF Approach. Sensors 2023, 23, 1251. https://doi.org/10.3390/s23031251
