Abstract

This article proposes the application of a new mathematical model of spots for solving inverse problems using a learning method, which is similar to using deep learning. In general, the spots represent vague figures in abstract “information spaces” or crisp figures with a lack of information about their shapes. However, crisp figures are regarded as a special and limiting case of spots. A basic mathematical apparatus, based on L4 numbers, has been developed for the representation and processing of qualitative information of elementary spatial relations between spots. Moreover, we defined L4 vectors, L4 matrices, and mathematical operations on them. The developed apparatus can be used in Artificial Intelligence, in particular, for knowledge representation and for modeling qualitative reasoning and learning. Another application area is the solution of inverse problems by learning. For example, this can be applied to image reconstruction using ultrasound, X-ray, magnetic resonance, or radar scan data. The introduced apparatus was verified by solving problems of reconstruction of images, utilizing only qualitative data of its elementary relations with some scanning figures. This article also demonstrates the application of a spot-based inverse Radon algorithm for binary image reconstruction. In both cases, the spot-based algorithms have demonstrated an effective denoising property.

1. Introduction

Imaging based on the scan data of the object under study, and the processing of scattered signals received by sensors, refers to inverse problems. Relevant direct problems are the modeling of wave signals scattered from an object with a known distribution of material properties within it. [1,2]. In particular, medical devices that provide such imaging are CT, MRI, microwave tomography, electrical resistance and capacitance tomography, ultrasound imaging, and others [3]. There are other areas of application for such image reconstruction, including radar, ground-penetrating radar, and through-the-wall radar. Geophysics also uses visualization obtained by sounding the earth with the help of sound or electrical impulses, etc. [1,4]. Imaging in all these areas, with the exception of MRI, is associated with the solution of inverse scattering problems in one or another approximation [5].

The fundamental point here is that practically it is impossible to obtain a mathematically exact solution to the inverse problem, however, it is possible to approximately reconstruct an image with a finite spatial resolution. Generally speaking, inverse problems relate to ill-posed mathematical problems, and such property can be explained by a lack of information for an exact solution due to the noise and the finite amount of sensor signals. Therefore, approximate solution methods are used that utilize regularization, filtering, interpolation, and other approaches [3]. For example, this applies to CT, MRI, and ultrasound, as well as to studies on microwave tomography [6,7,8,9,10].

Note that conventional approximate reconstruction methods such as filtered back-projection in CT or simple inverse FFT in MRI are not always adequate and may lead to artifacts. Therefore, new methods based on appropriate models have been developed that more strictly take into account the physics of objects and the real properties of sensors [11,12,13,14]. In a rigorous formulation, the inverse problem is considered a nonlinear optimization with the regularization to find the minimum of the residual error (the norm of deviation of the received and calculated sensor data). Its solution is sought by the iterative method, where the direct problem is solved and the current residual error is calculated at each iteration step [5]. This rigorous approach is especially relevant for microwave tomography, where one has to solve the nonlinear inverse problem of electromagnetic scattering. Unfortunately, using simple and approximate reconstruction methods is inadequate here, since microwave scanning of a part of the body is performed in the near-field area of antennas; furthermore, multi-pass scattering effects are significant [6,7,8,9,10]. A big issue is that iterative solutions, especially for electromagnetic scattering, require a long execution time and consume large computer resources [7].

In recent years, much attention has been paid to solving nonlinear inverse problems using the artificial intelligence (AI) approach, applying neural networks of deep learning [15,16,17,18,19,20,21]. The idea of this method is that if the neural network is successfully trained on examples, then when new scattering signals are fed to the input of the network, the trained system can create the desired image directly on the basis of the acquired knowledge, without solving the complex inverse scattering problem. Another area of application of learning neural networks is their use for image recognition, classification, and segmentation [22,23,24].

However, many authors point out the disadvantage of the traditional model of artificial neurons in neural networks, which consists of excessive computational accuracy and an excessive number of adjustable system parameters [25]. Indeed, if the apparatus of 32-bit floating-point numbers are utilized in learning algorithms for input signals, weight coefficients, activation, and loss functions, then a lot of the training operations lead to large consumption of computer resources and a long execution time. For example, the training time for image recognition (which is a simple task for humans) can be on the order of several weeks even in high-performance systems [26]. It cannot be implemented on compact devices with limited resources and limited power consumption.

Therefore, intensive research is currently underway to develop algorithms using reduced bit width of numbers: 8 bits, 4 bits, 2 bits, and even 1 bit. This provides a rough approximation for all the network parameters and such optimized neural networks are called binarized [25]. It should be noted that deep learning networks, which are used, for example, for image recognition tasks, usually operate with 32-bit numbers, while it is obvious that high accuracy is not required for such an image classification [26].

A new mathematical model of spots is proposed in [27,28] for the representation and processing of incomplete, inaccurate, or qualitative information. In particular, it allows for the creation of algorithms of a new type for AI and building neuromorphic systems, which operate in a way that is close to human thinking. Instead of real numbers, the proposed model introduces logical L4 numbers, L4 vectors, and L4 matrices. The spot model allows one to represent mental images and semantic content of information, as well as make classification and fragmentation. A new approach to machine learning has also been suggested, which is applicable for solving inverse problems. In addition, a new architecture of neural networks is proposed, consisting of layers that are modeled by L4 matrices, input and output data are L4 vectors, and weight coefficients are L4 numbers [27].

Thus, in the spots model, information representation, storage, and processing are carried out using 4-bit logical L4 numbers, rather than 32-bit numbers that can significantly reduce the consumption of machine resources. Behind this, the developed learning algorithm does not use such complex calculations and iterations as in the backpropagation algorithm for learning from examples [29].

The main idea of the spot theory is that the synthesis of a large amount of even insignificant information allows one to extract detailed and even quantitative information about the object of interest. Since the ill-posedness of inverse problems leads to indefinite, fuzzy, and ambiguous solutions, the application of the spot apparatus seems to be quite adequate.

The proposed model turned out to be ideologically close to the research areas of mereotopology and qualitative geometry [30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47], the idea of which was laid down by Whitehead in 1929 [30]. On the other hand, the basic ideas of the spots model are also close to the rough set theory [48,49,50,51,52,53,54,55], the formal concept analysis [52,56,57,58], and the fuzzy set theory [59], including fuzzy geometry [60,61]. Moreover, the suggested concept is in good agreement with the ideology of granular computing [62,63,64,65,66,67,68].

Instead of points, mereotopology uses regions of space as primitive spatial entities and utilizes qualitative information about their relations. Among other areas, it has been applied to geographical information science, and image analysis [38]. One of the important fields of mereotopology is region connection calculus (RCC) [34,35,36], which has two variants. RCC-8 defines eight relations between regions, including overlapping, disconnection, external connection, and connections (touch) of the region’s boundaries. Bennett [42], as well as Jonsson and Drakengren [43] considered a shortened version of these relations—RCC-5, which does not consider the boundaries connection. A feature of RCC-5 is the uncertainty of boundaries, since here it is impossible to distinguish internal points from boundary ones.

Although most authors considered spatial relations as logical values, Egenhofer et al. [44,45] encoded them in form of logical tables. Namely, they introduced the concepts of 4-intersection [44] and 9-intersection [45] matrixes, which are logical matrices that encode the spatial relations between spatial regions. Notice that these matrixes are similar but differ from the L4 numbers proposed in [27,28] because authors of [44,45] also consider relations with the boundaries. Clementini et al. [46] generalized 9-intersection matrixes, replacing intersections for the crisp boundary with intersections for broad boundaries. Stell [47] also considered the way of representation for spatial relations using logical vectors created on the base of notions part and compliment only. Finally, Butenkov et al. [69] introduced logic tables for Cartesian granules, which are equivalent to L4 numbers for spots, and applied them for spatial data mining algorithms.

The rough set theory suggested by Zdzisław Pawlak [48,50] is a mathematical approach to the representation of the vagueness. One of the main advantages of the rough set approach is the fact that it does not need any preliminary or additional information about data, such as probability in statistics, or membership in the fuzzy set theory. This theory regards sets with incomplete information that does not allow to distinguish elements in some of their subsets, which are called granules. Pawlak’s theory introduced such notions as the lower and upper approximations of rough sets, the boundary region, and even the membership function for elements, which is similar to that for the fuzzy sets.

A general formulation and consideration of granules, including the problem of information granulation, which was later called the concept of granular computing, were first carried out by Zadeh in [62]. His definition of granules: “the information may be said to be granular in the sense that the data points within a granule have to be dealt with as a whole rather than individually” corresponds to the equivalence classes of the universe. Zadeh regards both crisp and fuzzy granules and “considers granular computing as a basis for computing with words” [63]. The elements of a granule are indiscernible that “depends on available knowledge” [65]. The importance of the application of granulation and granular computing relates to the fact that such approximation can lead to simplification in solving practical problems.

The graph is a mathematical model convenient for the representation of the structure of links (labeled edges) between elements (nodes or vertexes) of the system under study [70]. Nowadays, the apparatus of graphs is well suited for the analysis and processing of digital images in digital geometry [71], and the analysis and metrics of the structure of the physical connection of brain neurons [72]. On the other hand, graph theory is actively used in AI to model semantic networks in the knowledge bases, which are called knowledge graphs [73,74,75,76,77]. Note that, unlike spots, the graph is only an abstraction for the representation of a structure of the relations between the entities, rather than a spatial object. However, recent works utilize graphs embedded in some continuous space to reduce the dimension of the graph when processing its data [75,76,77].

Despite the ideological closeness to these theories, the proposed model of spots has a significantly different nature, since spots are not based on sets or fuzzy set concepts, and spot elements do not define. Instead of the elements, a spot can have a structure inside that is determined from spatial relations with other spots. Having elementary spatial properties, spots combine the concepts of discreteness and continuity, while graphs are discrete mathematical objects. Generally, the presence of similar mathematical models allows us to use some approaches and ideas from them.

2. Definition of Spots and the Apparatus of L4 Numbers

Spots are mathematical objects with elementary spatial properties, for which their inner region, outer region (environment), and a logical connection between these regions for any two spots are defined. The logical connection of two spots is determined by two axioms [31,35]

Environments of spots are also considered to be spots, therefore, a logical connection is also defined for them, satisfying the axioms (1) and (2). Axiomatically, we regard spots do not connect their environments, that is

In general, the “shape” of spots and the properties of their environment, such as dimension and curvature of space, are not predetermined but can be evaluated from qualitative information about their elementary spatial relations (ER) to other spots, such as separation, intersection, inclusion, indistinguishability, etc. We consider crisp geometric figures as a special limiting case of spots.

Note that the connection can be defined not only in the case of the existence of a common region of space between two spots but also in a more general sense. For example, two geometric figures can be considered indistinguishable if they can be precisely coincided by a rigid movement. In general, any spot mapping can be defined with help of ER.

2.1. Definition of L4 Numbers

The elementary relations can be formalized using logical L4 numbers [27]. For spots and their environments , the L4 number is defined as a table

where , … denote the logical connections. Such L4 numbers, in general, permit distinguishing up to 16 different ER between spots. Examples of the ER and their corresponding L4 numbers are shown in Table 1.

Table 1.

Some ER of spots.

We call these spatial relations as elementary relations because they carry the lowest-level qualitative information about spots. However, a large amount of such qualitative data allows for extracting higher-level qualitative information and even numerical information.

The mathematical apparatus of the spot model is based on the L4 numbers, rather than on real numbers. As far as the basis of this apparatus is described in more detail in the previous works [27,28], here we will briefly outline the main content and reveal the meanings of the concepts introduced there.

In [27,28], the basis of spots is defined as a collection of “known” spots that can be in some mutual ER. The representation of a spot by their ER on the basis of spots is a mapping or imaging of the spot on this basis. Note that the system of basis spots is analogous to the numerical basis functions, and the orthogonality of the base functions is analogous to the separated basis spots, which we call orthogonal spots. Let us call the collection of spots with a certain ER between them the structure of spots. The structure of the basis spots included in a spot will also be called the structure of the spot .

Note that spot mapping is generally similar to the concept of projection for crisp figures. Consequently, the spot is analogous to some volumetric object, which is determined by its projections on different planes. Hence, one can improve knowledge about the structure of the spot by fusion its mappings on different bases into a “volumetric” image.

Let us define the operations union and the intersection for the spots, which permits the creation of new spots. We suggest the following definitions, which are similar but different from those of the set theory:

Here, the symbol + denotes the logical disjunction operation. Note that, in contrast to the sets, (5) does not define the images of spots absolutely, because they depend on the spots’ basis . Following the equality , see (3), it is possible, for example, to derive the following equations from (5):

The definitions (5) also permit to derive simple properties for zero spots :

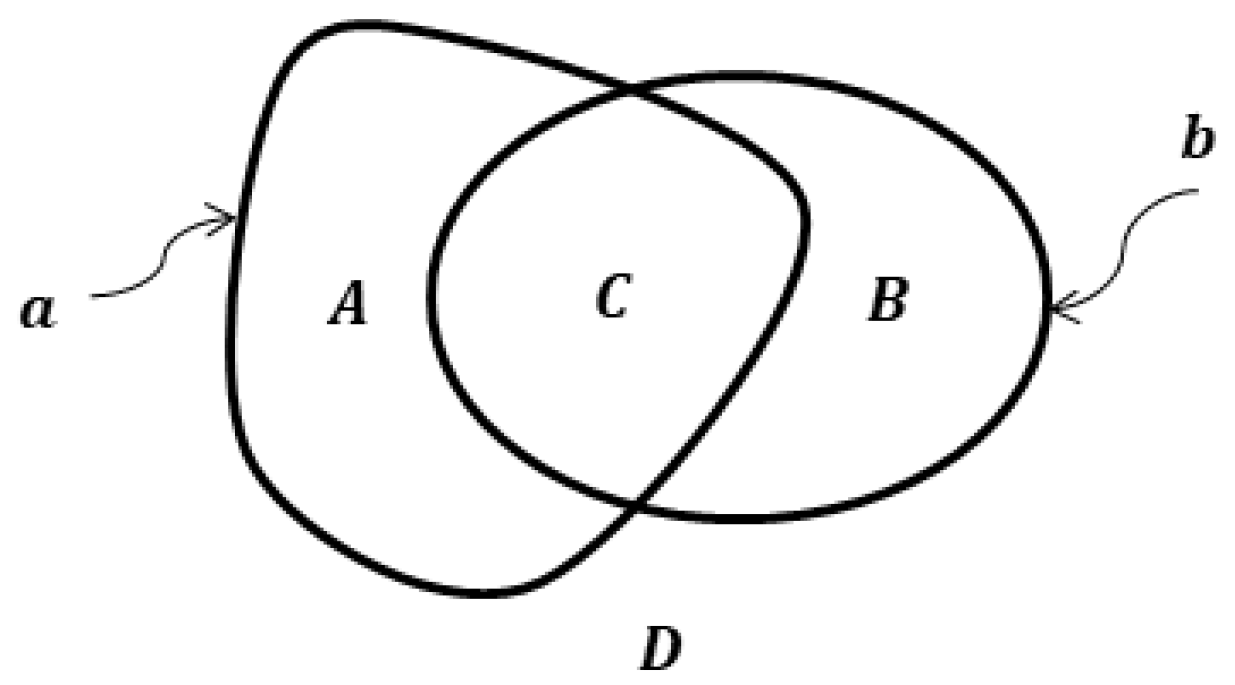

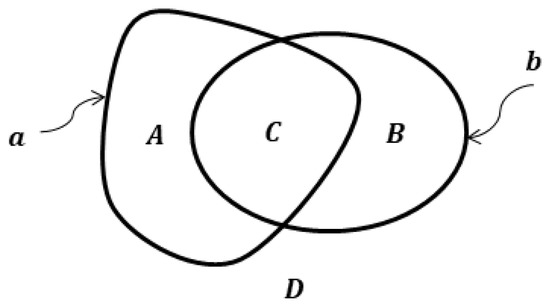

and express intersection parts , , , and of spots and in Figure 1, using the operation :

Figure 1.

Euler-Venn diagram for the elementary relations between spots.

2.2. Definition and Geometric Meaning of L4 Vectors and L4 Matrices

As mentioned earlier, information about any spot can be defined by its mapping on some basis. Then it can be encoded as a vector with coordinates, corresponding to its ER with basis spots. Such a vector of L4 numbers is called an L4 vector. For example, the L4 column vector [27] of the spot , represented on the base , is

where n is the number of spots in the basis . L4 vector (9) is similar to a numerical vector but its elements are L4 numbers. On the other hand, mapping (9) is also similar to the projection of a 3D body on some plane, which, can only represent partial information about the body.

Papers [27,28] introduce the idealized concept of atomic basis and atomic spots, which do not intersect each other and other spots. Note that the atomic spots are similar to points, pixels, voxels, or elements of sets. Another useful notion is orthogonal spots, for which their mutual ER is separated. For example, intersection parts of spots are orthogonal and can be regarded as some approximation for the atomic basis.

The L4 matrix defines ER between the spots of two bases, and and formalizes the mapping from basis to basis:

Here, are row L4 vectors of spot , represented on the basis . Note that the L4 matrix can be used to transform the L4 vector from one to another basis [27] which is similar to the mapping function in topology and geometry. Formally, it can be represented in the form of

however, there is no simple solution to define the rules for such a product, and we will address this issue in the next section. The exception is the special case of the L4-matrix, which we call the indistinguishability matrix that is similar to the numerical identity matrix. The indiscernibility matrix has diagonal elements corresponding to the indiscernibility and all other elements—to separation. Then multiplication of the L4 matrix and any L4 vector corresponds to an identity transformation:

There is a special case when all the spots of two bases are separated that is analogous to orthogonal coordinates in geometry. It is obvious that in this case, it is impossible to obtain a mapping transformation using the product of an L4 matrix and L4 vector, and it is necessary to obtain additional independent data.

2.3. Multiplication Rules for L4 Vectors and L4 Matrixes

First, consider the simplest case of an atomic basis , where basis spots are orthogonal and do not intersect other spots [27,28]. For it, one can define an ER between the spots and with respect to the basis and the “scalar” product of vectors according to the rule

where the symbol “∙” denotes a logical conjunction. We will apply the same rule for an orthogonal basis , consisting of separated spots.

Let us regard a spot , basis and an atomic basis . We suppose that and all spots can be mapped on the atomic basis . Then the rule for the product of the L4 matrix (10) and L4 vector (9) can be defined as the following:

where L4 number is defined in (11). Note that Equation (12) defines the transformation of the mapping of the spot from basis to basis .

For an arbitrary basis , when spots can be intersected, the definition of is more complicated. First, let us consider the orthogonal basis of all intersections of the spots in and find the vectors and on the basis . Then, we define the following equality for calculation :

and apply the rule (11). We define the vectors and using the following formal matrix equations:

where is the L4 matrix that consists on elements and is used for mapping vectors from basis to basis .

To determine the transformation rule for (13), we first apply a convenient method of numbering the intersections of spots , using a binary code. Namely, generalizing (8), each can be defined in terms of the spots or connected by operator . For example, the binary index corresponds to the following spot [28]:

ER for any spot and for (14) can be found using the following approximate equation [28]

which defines the rule for the product in (13). A similar equation can be written for the spot to determine the product in (13).

Equation (15) was tested in [28] when solving the problem of reconstruction of the shape of plane figures, processing its ER data with basis figures (Figures 4–6 in [28]). It turned out that (15) gives uncertainty in the form of a blurred boundary. However, it is possible to eliminate it if to apply additional rules, correcting ER in (15):

where the symbols and denote the separation and inclusion (more) relations, respectively (see Table 1).

Equations (15) and (16) help to determine the general rule for multiplying an arbitrary L4 vector and an L4 matrix defined on the basis and . We can write this rule in the following form:

Here the basis consists of the intersections of the points , is the basis of the intersections of the spots , and is the basis of the intersections of the spots of and basis. Note that Equation (17) should be considered as a series of transformations from one basis to another, namely

Product can be calculated, using (15) and (16), but the vectors and —using (11), (12), regarding , as an atomic basis. Finally, let us use the following natural rule for calculating the product :

where symbols denotes relation inclusion (less) (see Table 1). Note that rule (19) is also approximate.

3. General Approach to Inverse Problems and Learning Using L4 Matrices

3.1. Solution of Inverse Problems

It follows from the definition of L4 matrices (10) that its inverse matrix is equal to

and hence it must always exist and be equal to the transposed matrix with additional transposed elements (L4 numbers). Therefore, as it following from (17) and (20), formally the solution of the equation can be represented as

where, as in (17), the basis consists of intersections of the spots in , the basis —intersections of the spots in and —intersections of the spots of and basis. Considering that Equations (15), (17), and (19) are approximate, we can conclude that in the general case, the inverse solution (21) is also approximate:

3.2. Solving Inverse Problems Using L4 Matrices by Learning Method

As mentioned in the introduction, the practical application of solving inverse problems, especially electromagnetic inverse scattering, requires a large amount of computation time and resources. Alternative approaches involve the use of neural networks to train a solving system, which, after training, can make an inverse solution for newly measured data. In the spots model, this has an analogy with the situation when the matrix in (17) is unknown and it is wanted to be determined on training examples. Let us evaluate an unknown L4 matrix on the basis of a set of training examples , using the equality

We can regard as L4 vectors for spots and that form the basis and of the training data. Then, we can compose an L4 matrix and represent the matrix in (22) as:

where and are atomic bases for L4 vectors and , correspondingly. Obviously, for the testing set the matrix is equal to the indistinguishability matrix . Note that Equation (23) is a schematic interpretation of the learning process [29].

Let us consider the application (23) of the learning system to obtain the inverse solution of the following equation:

To do this, we can use the transformation of the matrix (23), similar to (21), to represent the inverse solution in the following general form:

We should especially consider the case when input data and/or output data are numeric. Then, instead of matrixes or in (24) we have to apply the corresponding operators and that transforms L4 data to numerical data or vice versa. Then, the forward problem has the form of

and its inverse solution instead of (24) can be represented in the following form:

where and are the inverse operators.

3.3. Image Reconstruction by Processing Qualitative Data

Although the proposed theory is developed for spots, which in general correspond to vague figures, it is convenient to verify its mathematical apparatus on crisp figures, which are the limiting case of spots. Let us consider the figure under test as a conditionally unknown spot, and the figures, which are used for mapping this spot (or “sampling”) as the known basis of spots [28]. More specifically, we consider the shape reconstruction of a crisp plane figure, utilizing the only qualitative information of its ER with the bases figures without additional details about these relations. However, it is possible to infinitely refine the reconstructed shape of the unknown figure by increasing the number of samplings and processing all the ER data. It may seem surprising, but it is theoretically possible, to reconstruct the shape of an object with absolute precision. This is a consequence of the following theorem.

Theorem 1.

In order for two figures to be pointwise equal, it is necessary and sufficient that their elementary relations with any other figure of finite size be the same.

Proof.

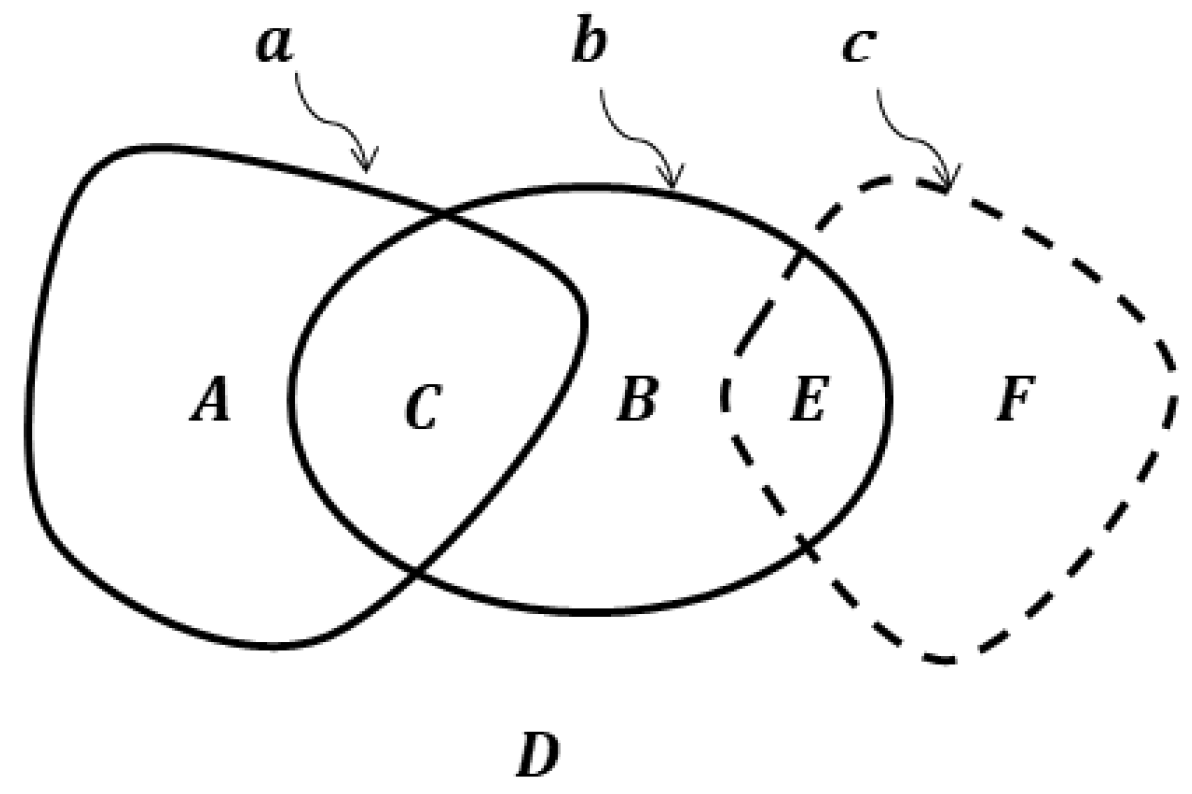

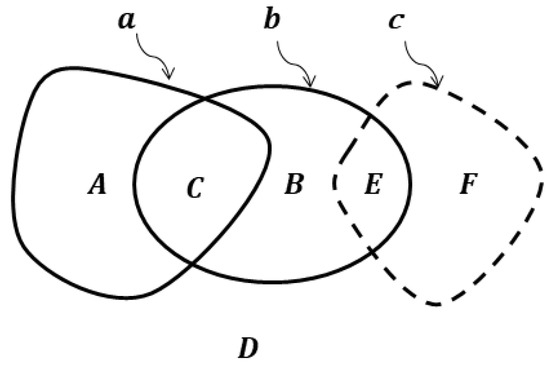

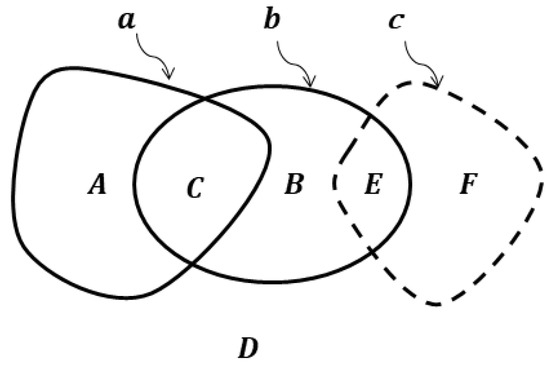

Necessity. As can be seen from the diagram in Figure 2, the condition of equality of figures and is equivalent to the equality of both their intersection parts and to the zero figure . Let us suppose there is a figure that has different ER with and , i.e., different connection values with these figures. For example, , (Figure 2). Then . Therefore, , which proves the necessity condition.

Figure 2.

Euler-Venn diagram for ER between figures .

Sufficiency. Let us prove this by contradiction. Assume that for two figures and their ER is equal with any finite figure, but . Then, (see Figure 2). If, for example, , then that contradicts the condition of equality ER with any finite figure. Therefore, the assumption is false, which proves the sufficiency condition. □

It follows from Theorem 1 that all information about the shape of each figure is contained in the infinite set of its ER with all other figures of finite size. Therefore, in principle, it is possible to reconstruct this shape using such qualitative information. However, due to the incomplete, finite amount of ER data, figure shape reconstruction can only be approximate. This corresponds to the fact that the result of such a reconstruction corresponds to a blurry figure, that is, to a spot.

Note that the shape’s reconstruction, by processing qualitative data, refers to inverse problems. Indeed, its forward problems can be formulated as

where is a basis for atomic spots—pixels or voxels, is a basis for scanning figures for testing, —L4 vector of ER data for the reconstruction of the figure under test . Following (21), the reconstructed figure is the inverse solution of (26) that, similar to (21), can be represented in the form of the equation

where is the basis of intersections of spots . The mapping can be found using (15) and (16).

3.4. Inverse Radon Algorithm for Binary Figures

Let us consider scanning figures–squares as the basis , and use the calculated sinograms (projections) of these squares as training data, which we will assign to the basis . As before, will be considered training data for the learning system, and we will determine an algorithm for the inverse solution by learning.

The forward problem is the Radon transform Sec. 8.7.3 of [2] that can be written in the form of

where is the basis of pixels, is the Radon transform operator, is the sinograms of the image. Following (25), the inverse Radon solution of (28) can be represented as

where is the basis of intersections of . Note, the operator depends on the training sinograms data and matrix is the indiscernibility matrix for solving by learning methods, as it was mentioned before. Therefore,

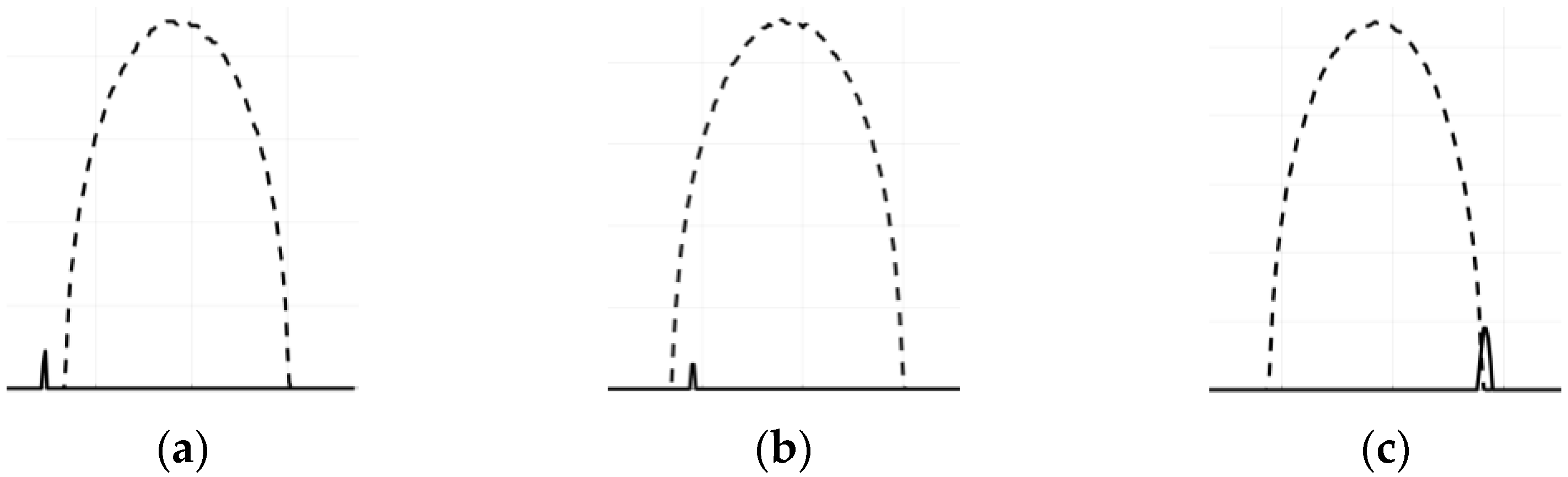

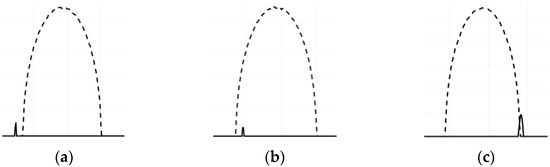

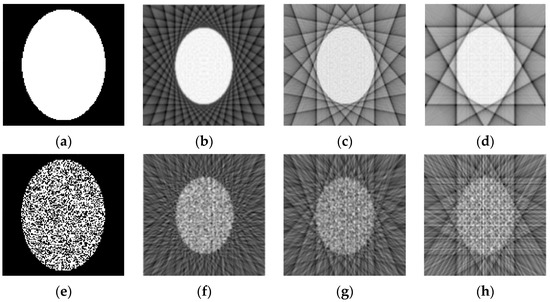

Let us find rules for calculation (30), defining such ER between sinograms that are presented in Figure 3. Here, small spiking sinograms (continue lines) correspond to relatively small basis squares and oval-type sinograms (dashed lines) correspond to ellipse figure (see Section 4.3).

Figure 3.

ER between sinograms of an ellipse and a base square. (a) Separation; (b) Inclusion; (c) Intersection.

The main idea of suggesting an algorithm is that if the basis figure has such ER with the figure under test as separation or intersection, then there are projection angles for which their sinograms are separated or intersected. However, if is included in then all their sinograms have ER inclusion as well. Hence, we can define the following rules for ER of projections and , which are converted to logical values:

Here, is the logical negation, -index corresponds to that of square, -index corresponds to the projection coordinate and -index corresponds to the projection angle Sec. 8.7.3 of [2]. Using (30) and (31) we get a spot-based inverse Radon algorithm for the reconstruction of binary images.

4. Results of Image Reconstruction

To illustrate the suggested theory, MATLAB programs were written that provide processing of ER data between the figure under test and basis spots (crisp figures) , which are scanning (or basis) squares. To obtain a better resolution, we utilize quite tight distribution of the basis spots that makes the intersections (14) to be relatively small. We used a computer with an AMD Ryzen 7 2700 X processor, 8 cores, 3.70 GHz, 32GB RAM, and no GPU.

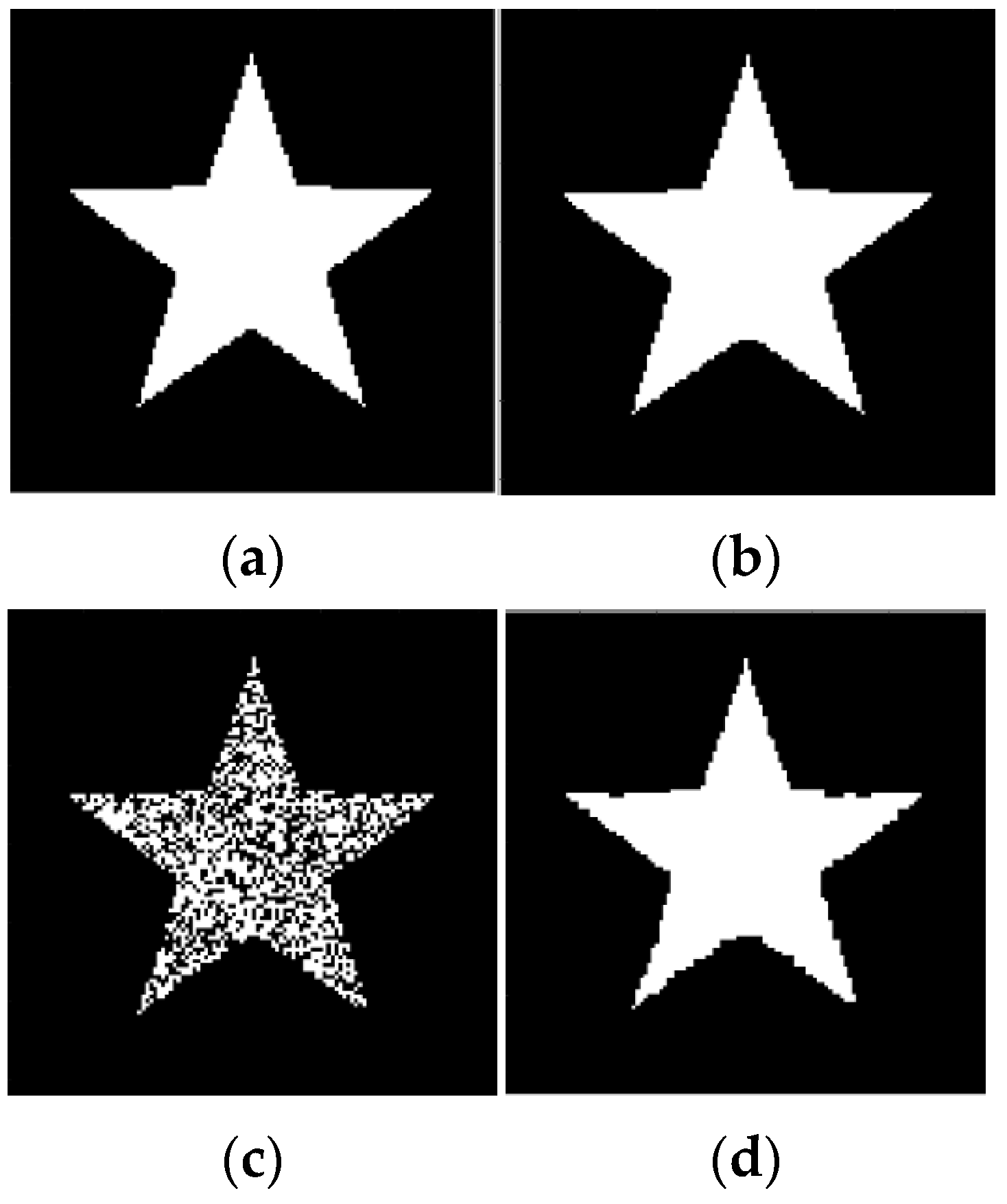

4.1. Reconstruction of Binary Images

The ER data were obtained using scanning of pixels squares with the scan period 1 pixel and processed them using (27) and algorithm (15), (16). The number of basis squares was approx. 20,000, and the calculation time was about 9 min in all cases.

To compare the original and reconstructed binary images we calculated the misfit error

where and are numbers of pixels that correspond to the inner regions of the original (noise-free) and the reconstructed images, correspondingly.

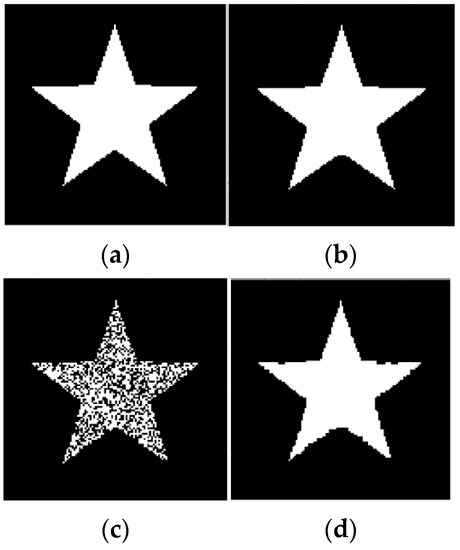

Figure 4 represents the reconstruction of images of a five-pointed star without and with strong noise, utilizing only data from its ER with scanning squares. Note that Figure 4d demonstrates the effective denoising capability of the algorithm (15), (16), (27). The image sizes were pixels, and the misfit errors for the reconstructed images are = 0.1% for Figure 4b, and = 3.1% for Figure 4d.

Figure 4.

Results of reconstruction of five-pointed star based on qualitative data. (a) Original star; (b) Reconstructed star; (c) Original strong noisy star; (d) Reconstructed noisy star.

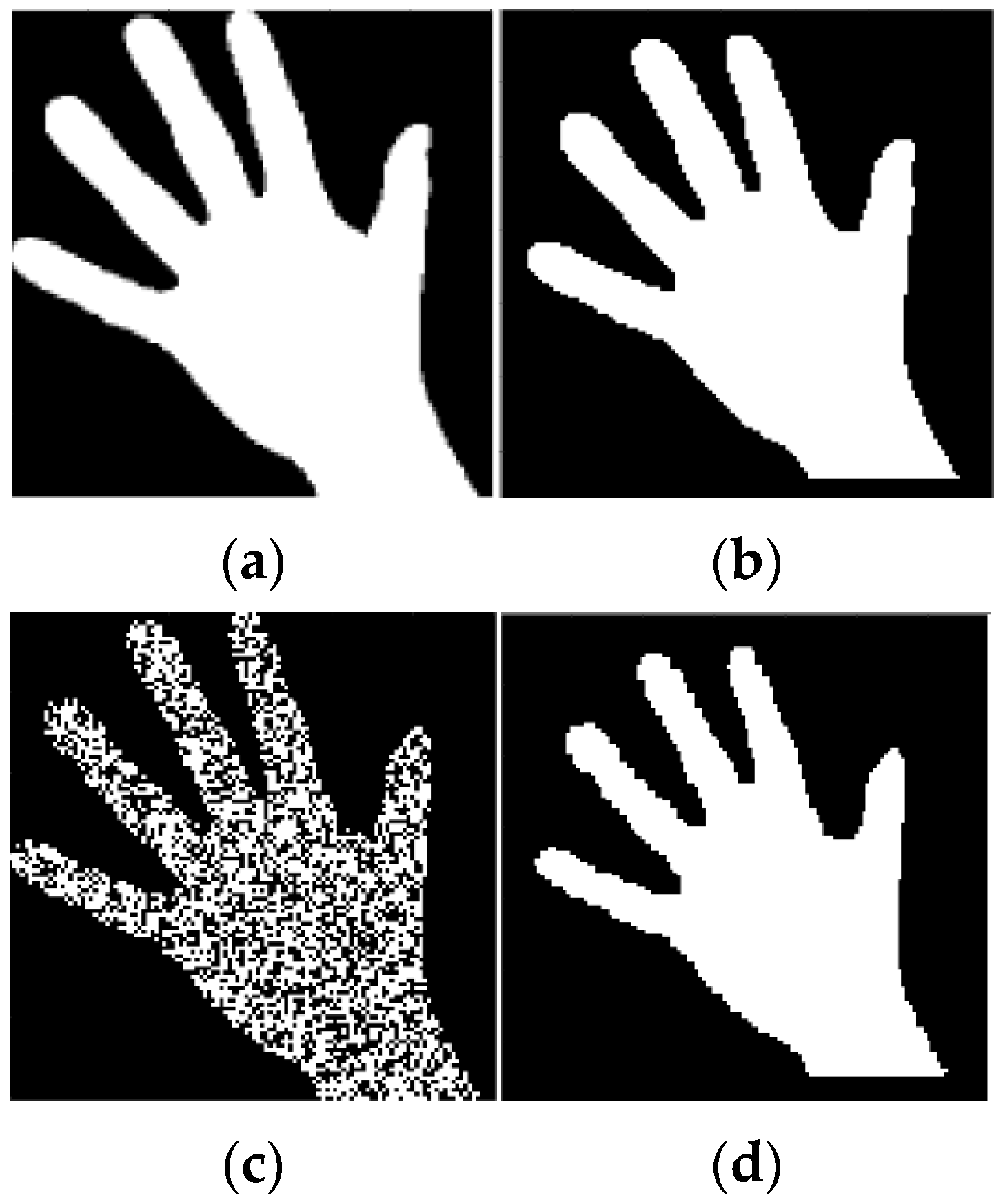

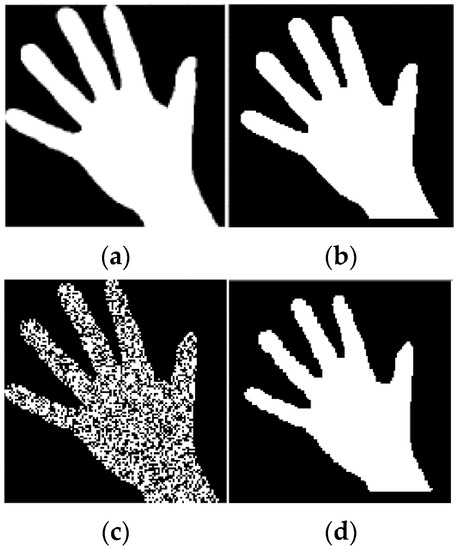

Figure 5 demonstrates the reconstruction of hand-mask images—noise-free and strongly noisy, using similar ER data and rules. Note that Figure 5d also demonstrates the effective denoising capability of the algorithm (15), (16), (27). The image sizes were pixels and the misfit errors for the reconstructed images are = 3.1% (for Figure 5b), and = 4.7% (for Figure 5d).

Figure 5.

Results of reconstruction of a hand-mask image based on qualitative data. (a) Original image; (b) Reconstructed image; (c) Original strong noisy image; (d) Reconstructed noisy image.

4.2. Reconstruction of Gray Scale Images

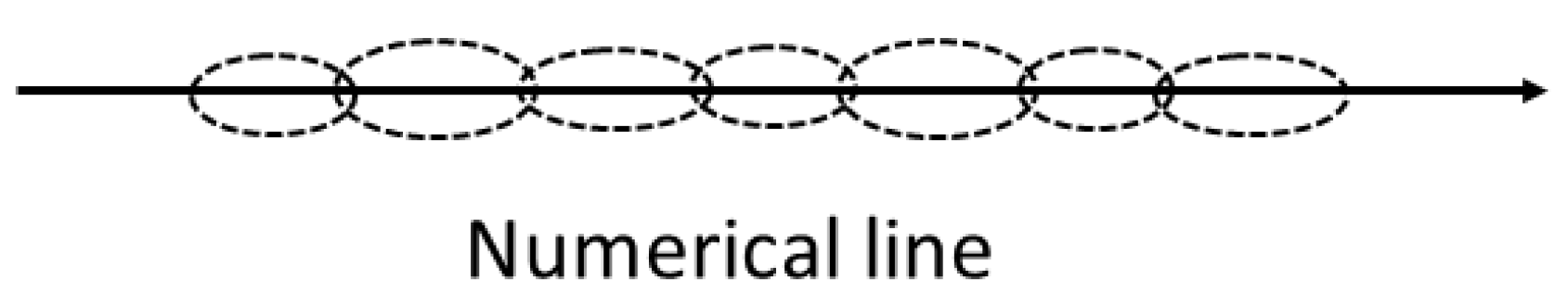

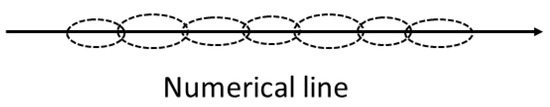

To be able to apply the developed reconstruction algorithm (15), (16), (27) to grayscale images, it is necessary to add a new dimension to 2D spots corresponding to their intensity value. In order for this numerical coordinate to be consistent with the general spot ideology, we represent the intensity axes as a linear structure, a chain of intersected spots (Figure 6).

Figure 6.

Representation of a numerical line as a chain of intersected spots.

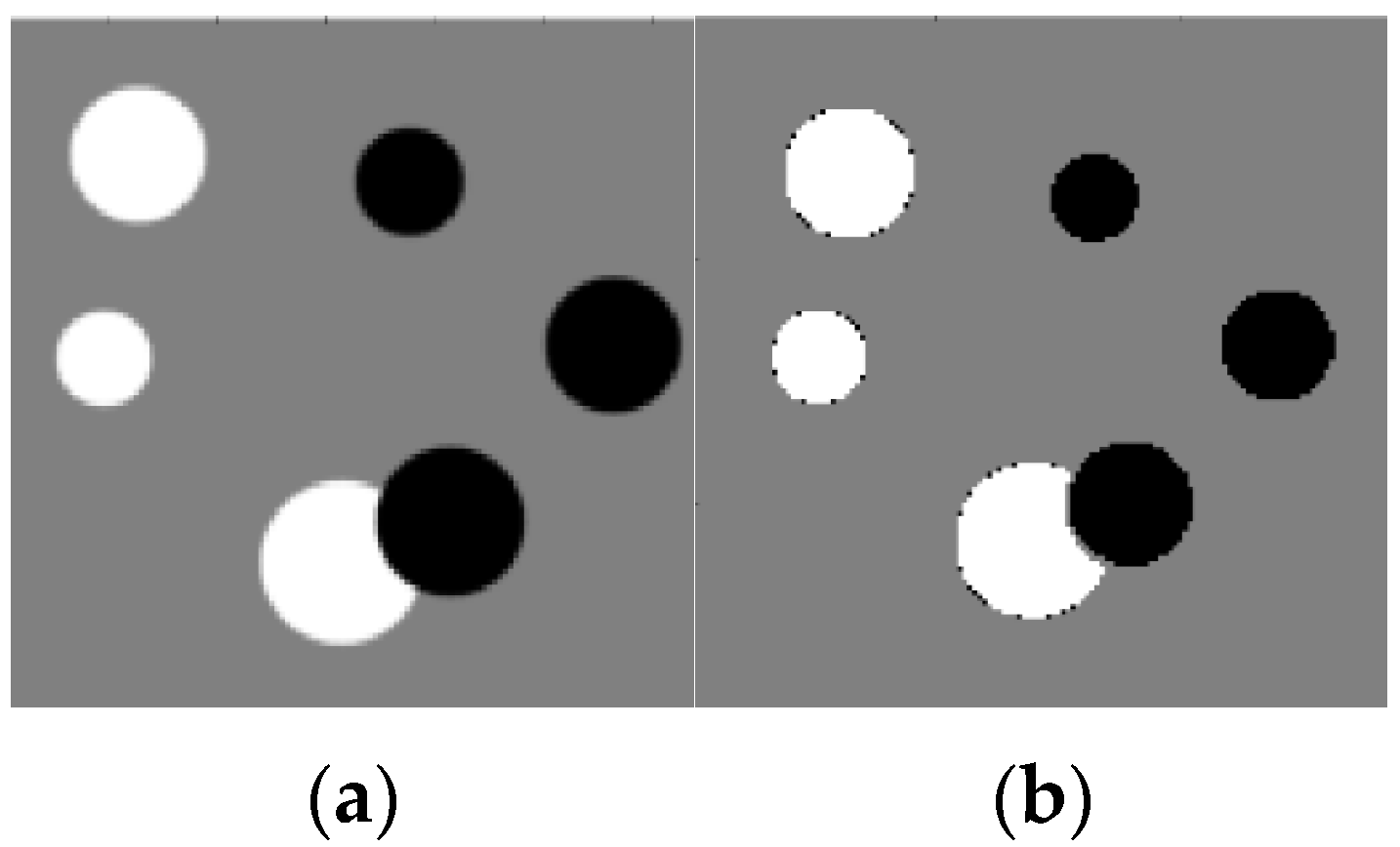

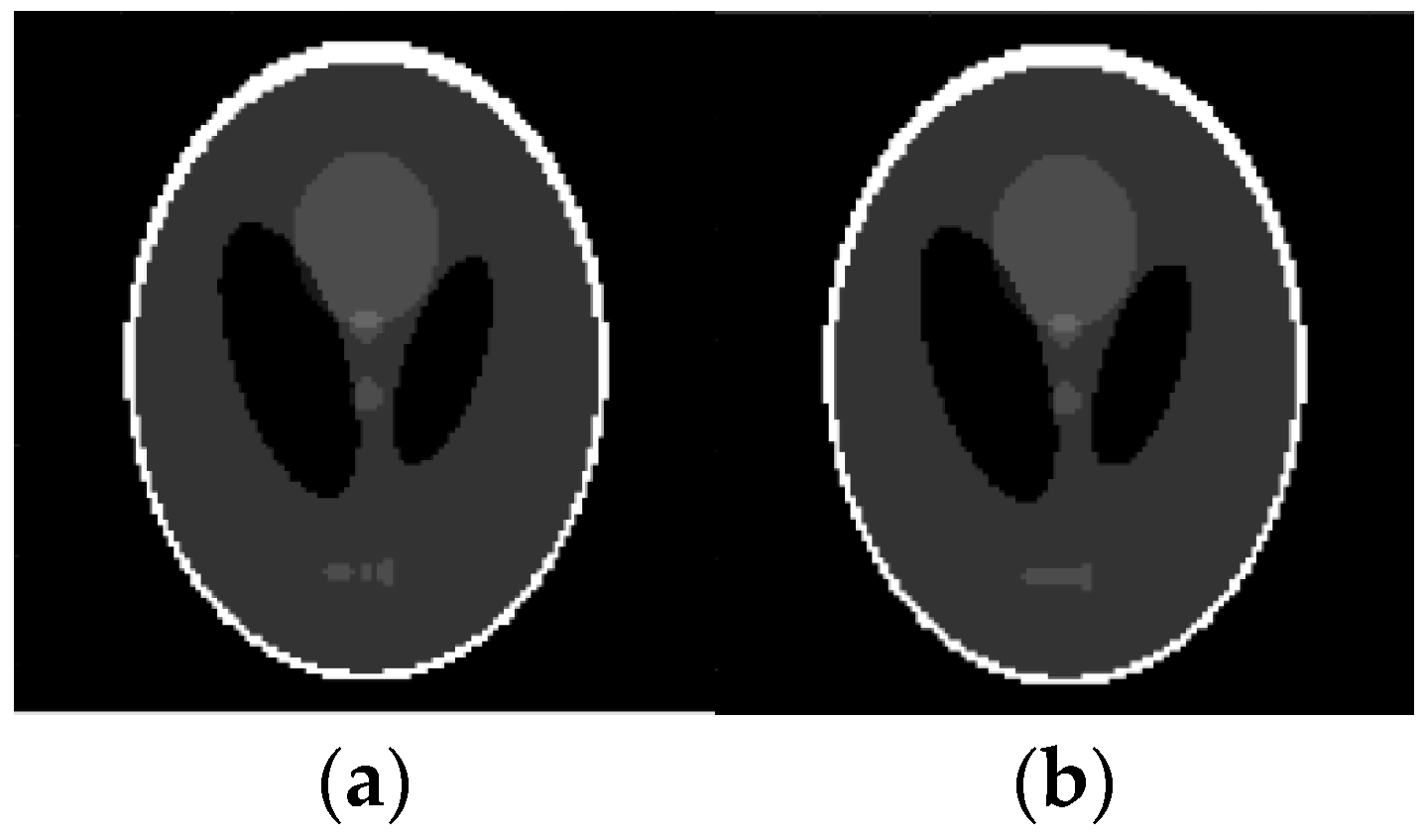

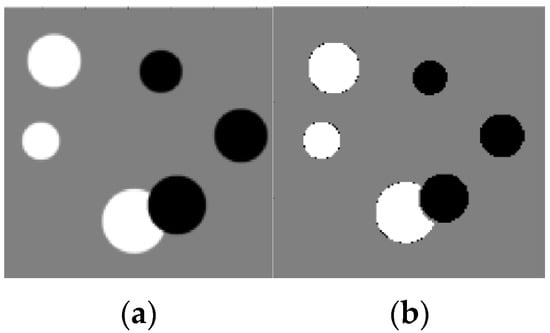

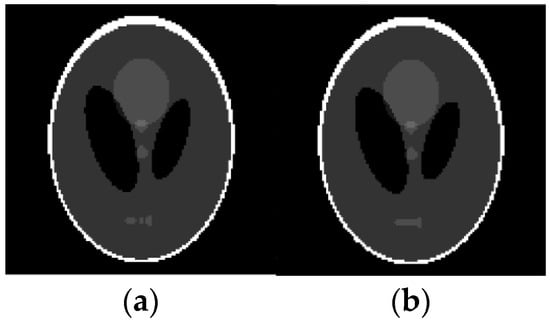

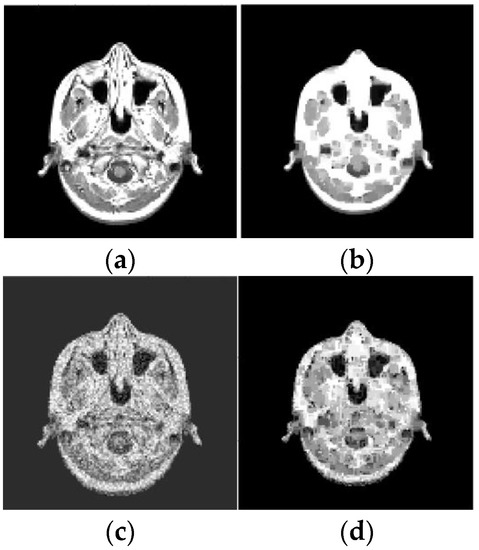

For example, these spots can be numerical intervals, and hence we can split the grayscale image into flat layers, corresponding to these intervals. Then one can reconstruct images in the layers independently and combine them again into the entire image. Results of the reconstruction are demonstrated in Figure 7, Figure 8, Figure 9 and Figure 10, where the intensity axes of pixels images were divided into 20 layers. The number of basis squares was about 20,000, and the calculation times were 6–9 min.

Figure 7.

Reconstruction of a circle’s image based on qualitative data. (a) Original image; (b) Reconstructed image.

Figure 8.

Reconstruction of Shepp-Logan image based on qualitative data. (a) Original image; (b) Reconstructed image.

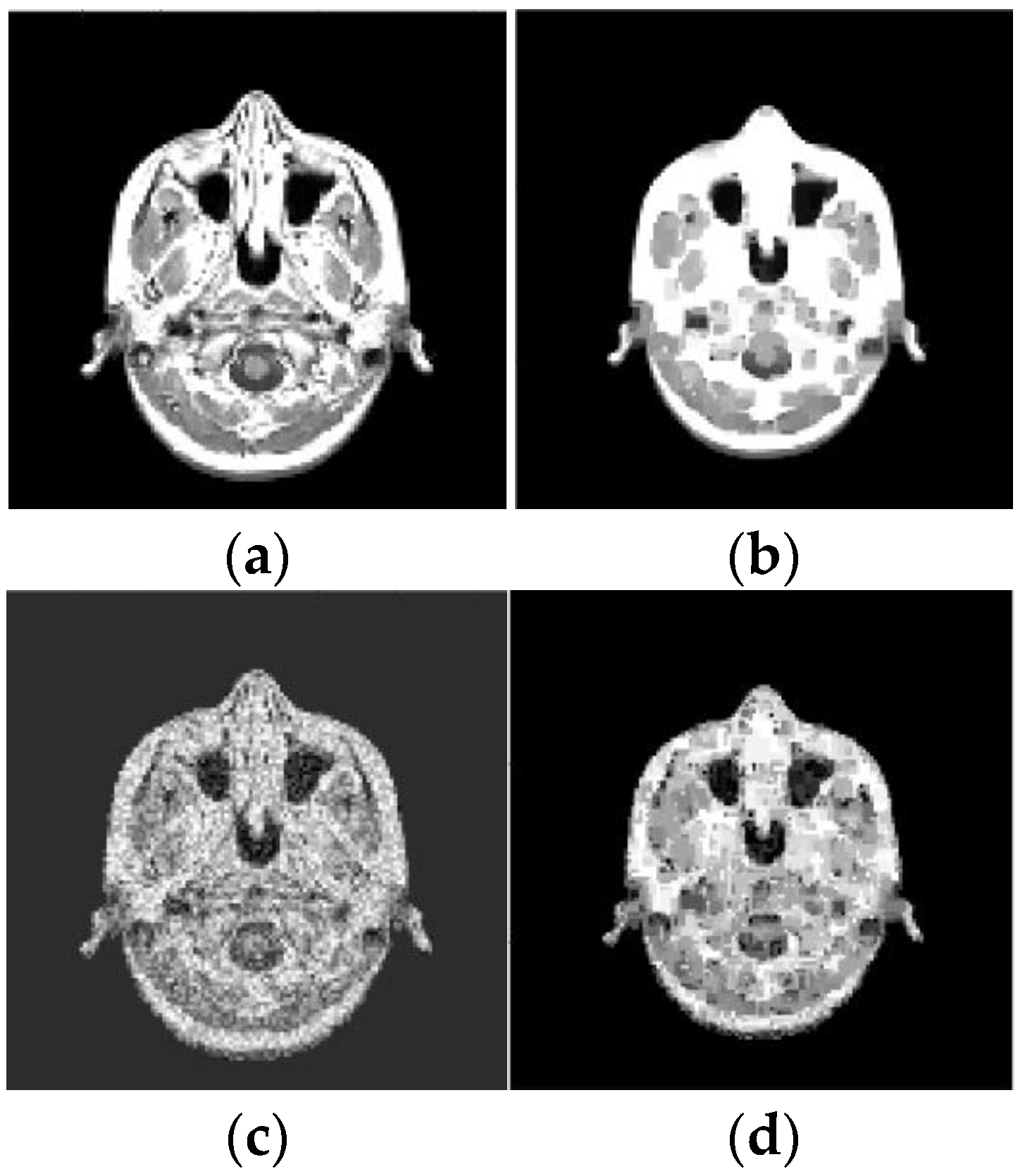

Figure 9.

Results of reconstruction of MRI image based on qualitative data. (a) Original image; (b) Reconstructed image; (c) Original noisy image; (d) Reconstructed noisy image.

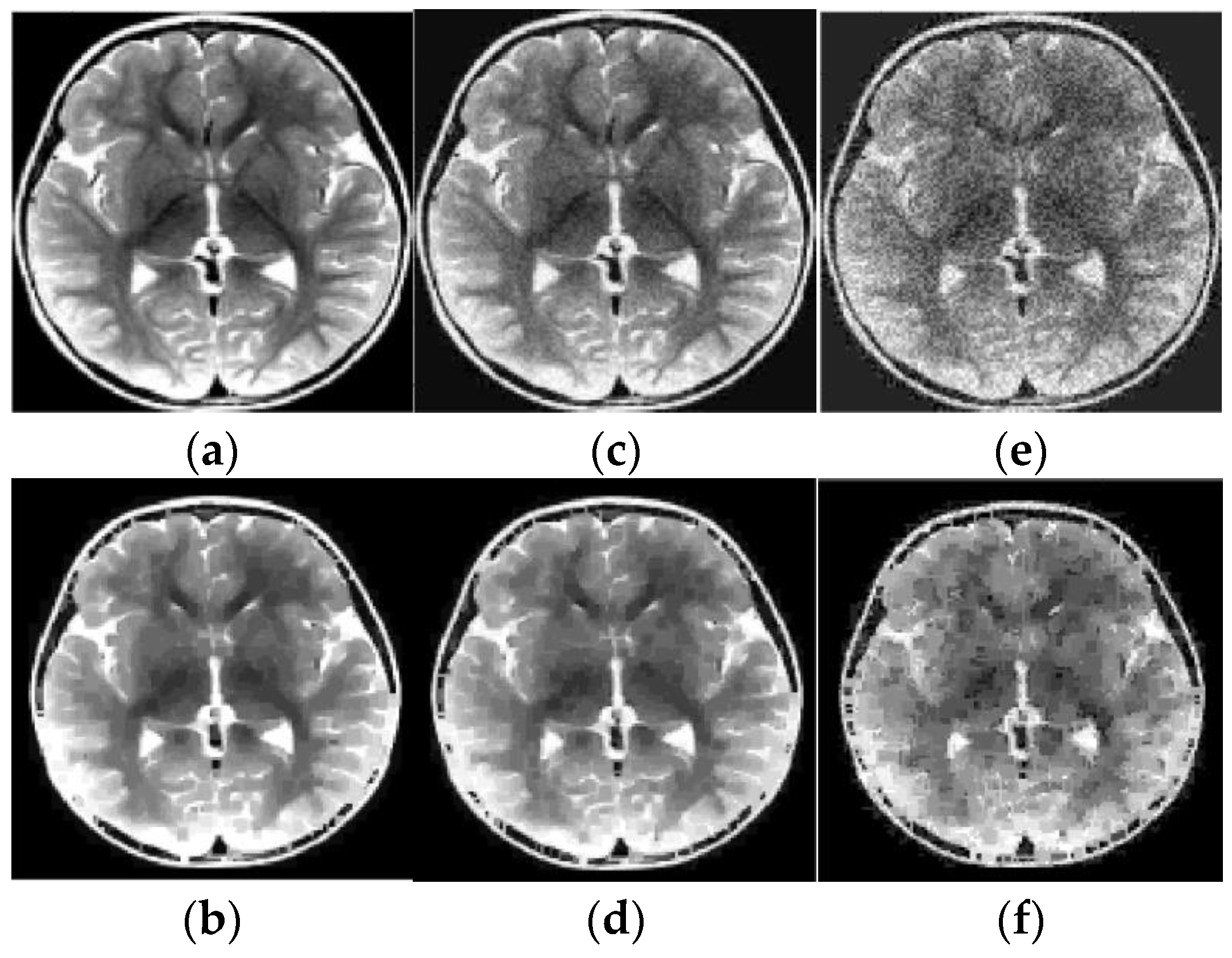

Figure 10.

Results of reconstruction of MRI human brain image based on qualitative data. (a) Original image; (b) Reconstructed image; (c) Original low noise image; (d) Reconstructed low noise image; (e) Original noisy image; (f) Reconstructed noisy image.

As before, the ER data were obtained using pixels squares that were scanned in each of 20 layers, and their scan period was 1 pixel. The SNR, which is defined for an average image intensity, is 9.7 dB (for Figure 9c), 23.3 dB (for Figure 10c), and 9.3 dB (for Figure 10e). Note that Figure 9d and Figure 10d,f also demonstrate the noise reduction ability of the reconstruction algorithm (15), (16), (27).

4.3. Inverse Radon Image Reconstruction

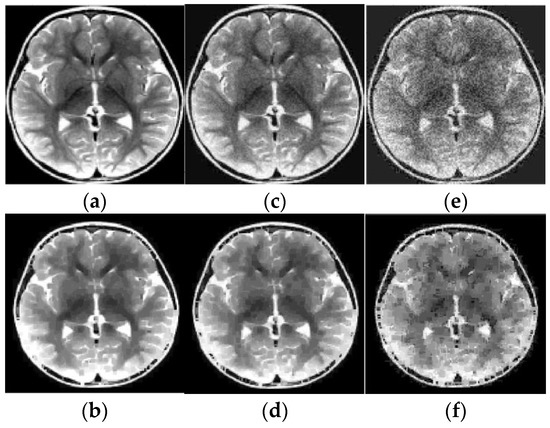

We compared a conventional back-projection and a spot-based (31) algorithm for the reconstruction of binary images, which used under-sampled parallel-beam sinograms for 6, 9, and 18 projection angles only. Figure 11 and Figure 12 show the results of this comparison for the pixels image reconstruction. The sinograms are calculated using the Radon transform, but they imitate the real experimental sinograms of X-ray transmission through the body in a CT system with parallel-beam geometry [3]. Note that typically a CT scanner collects projection signals in approximately 1° increments, and hence the simulated examples in Figure 11 and Figure 12 are indeed highly under-sampled.

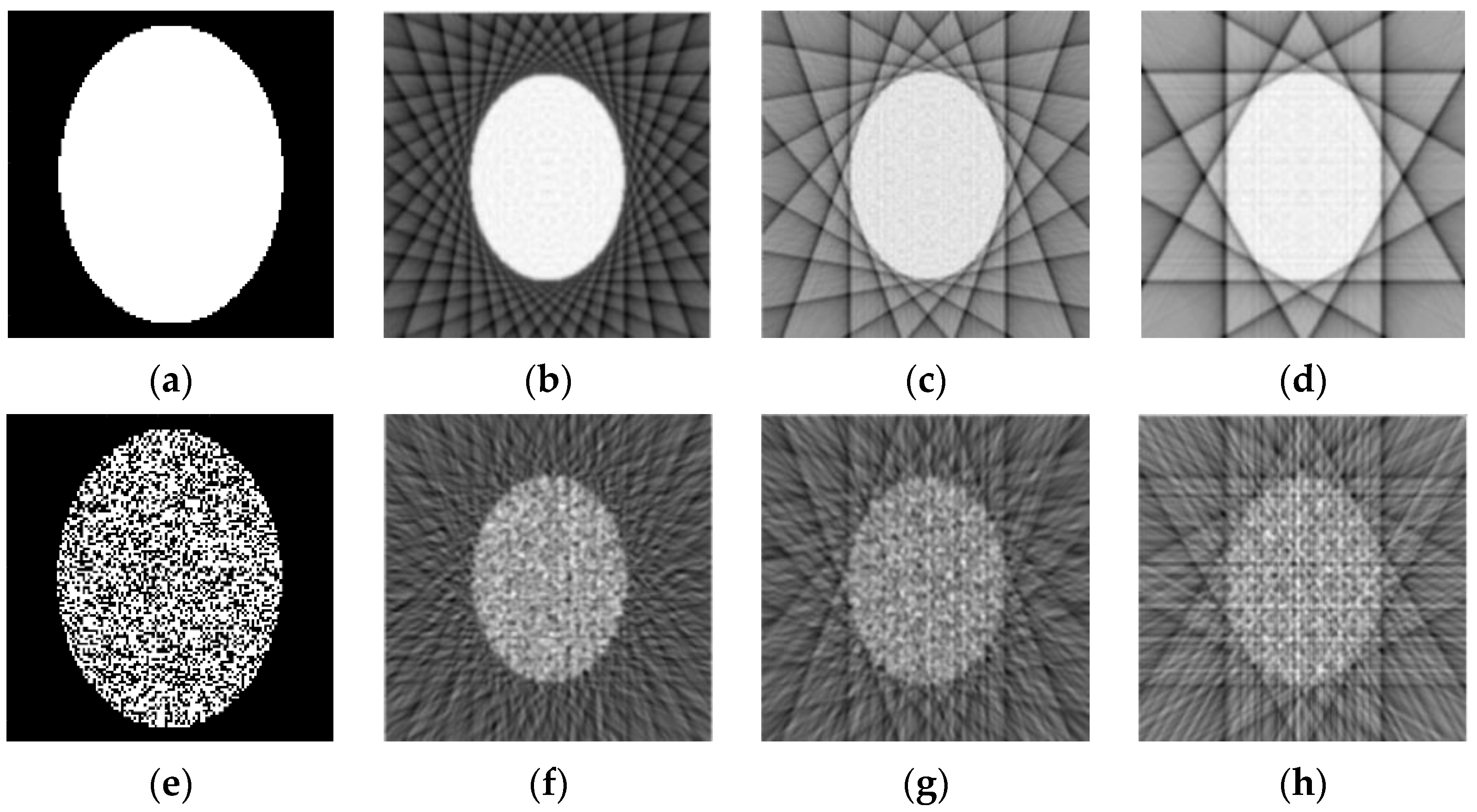

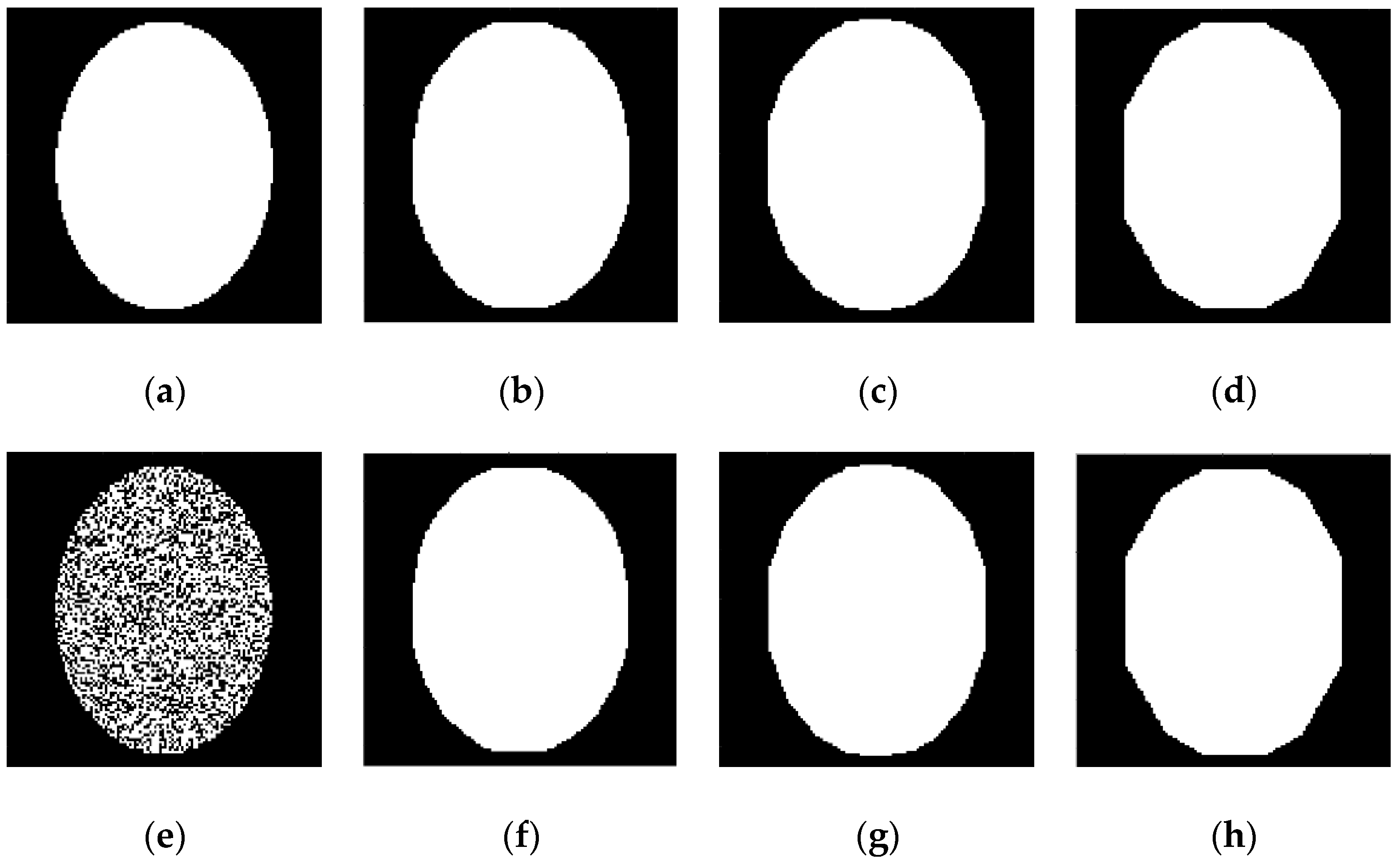

Figure 11.

Examples of reconstruction of two ellipses, using their under-sampled parallel-beam sinograms by the back-projection algorithm with Hann filter. (a) Original ellipse; (b–d) Reconstructed images for 18, 9, and 6 projection angles, correspondingly; (e) Original strong noisy ellipse; (f–h) Reconstructed images for 18, 9, and 6 projection angles, correspondingly.

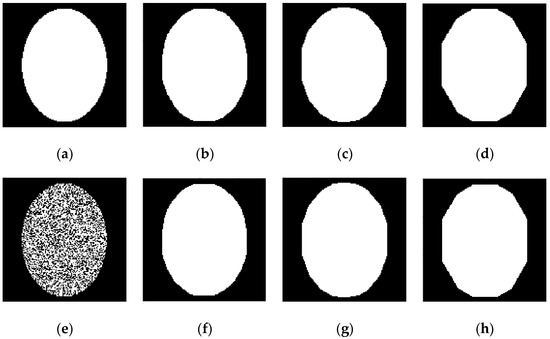

Figure 12.

Reconstruction of two ellipses, using their different under-sampled sinograms. (a) Original ellipse; (b–d) Reconstructed images for 18, 9, and 6 projection angles, correspondingly; (e) Original strong noisy ellipse; (f–h) Reconstructed images for 18, 9, and 6 projection angles, correspondingly.

Application of the back-projection algorithm with Hann filter is demonstrated in Figure 11 for two images of the ellipse: noise-free (Figure 11a) and strong noisy (Figure 11e). It is clear that the results of the noisy image reconstruction in Figure 11f–h demonstrate significant blurring for the reconstructed image.

Figure 12 shows the results of the same image reconstructions, using the spot-based algorithms (30) and (31) with pixels square and 1 pixel scan period. The number of basis squares was about 20,000, and the calculation times were about 6 min. These results of the reconstruction demonstrate the fact that the suggested algorithm allows the reconstruction of unblurred images even for a small number of projection angles. Images in Figure 12f–h also demonstrate a strong denoising effect for the spot-based algorithm, in contrast to the filtered back-projection algorithm. The misfit errors (32) were 3.6% (for Figure 12b,f), 4.8% (for Figure 12c,g), and 5.6% (for Figure 12d,h).

5. Discussion

In [27], the use of the apparatus of the spots model for creating neural networks of a new type is considered, in which each layer corresponds to an L4 matrix and L4 numbers are used instead of real numbers. Here, the L4 vectors play the role of input and output signals for each layer, and the L4 matrix of each layer plays the role of the weight matrix. For example, Equations (21) and (23) can be implemented using such a neural network, which consists of 4 and 3 layers, respectively. In addition, it is possible to create a neural network in the form of a neuromorphic electronic device built on solid-state elements such as field-effect transistors (FETs), FeFETs, or memristors [27].

The potential advantage of the proposed neural networks over conventional ones is, in particular, that the L4 matrix apparatus does not use real numbers with complex calculations during iterations in the backpropagation algorithm of learning by examples. Instead, Equation (24) uses the inverse matrix product. Although the proposed algorithms are approximate, they are adequate to the fact that it is almost always impossible to obtain an exact solution to inverse problems due to the finite number of measured signals. In addition, the tasks of recognition and classification, in principle, do not belong to the class of tasks that require accurate calculations.

The reconstructed images in Figure 4, Figure 5, Figure 7, Figure 8, Figure 9, Figure 10, Figure 11, Figure 12 demonstrate a good denoising ability of the proposed algorithm. This property relates to the fact that scanning squares of or pixels plays the role of a spatial filter and averages the sampled data. However, the spatial resolution of the reconstructed image is determined by the scan period of 1 pixel. This can be explained using Formulas (15) and (27), from which it follows that the resolution corresponds to the intersections size of 1×1 pixels.

An imaging algorithm using the spot-based inverse Radon transformation (Equation (31) and Figure 12) illustrates the processing of qualitative data for solving by learning. Indeed, sampling figures are basis spots and also relates to the training set, whereas the figure under test corresponds to the test example in the machine learning paradigm [29]. Finally, the reconstructed image, which is mapped on the basis of the intersections, corresponds to the solution of the trained system.

As it was noted in the Introduction, we can draw a general conclusion about the ideological proximity of the models of the spots and rough sets [48,49,50,51,52], although they have a fundamental difference. In addition, there are several close concepts between the spot model and the rough set theory (see Table 2).

Table 2.

Analogies between the concepts of rough sets and spots.

As it is shown in Table 2, spots are similar to granules, which is also the main concept in the granular computing (GC) research area [62,63,64,65,66,67,68]. A comparison of the spot and granule concepts in GC allows us to conclude that both models are also very close in many aspects.

The suggested spots model can be used for the theory of qualitative geometry (QG). For this application, it is necessary to introduce new concepts that are low-level analogies of the notion in the geometry and topology, including line, surface, dimensionality, curvature of space, etc. Based on the CG, one can introduce the concept of a semantic information space, which is an analogue of an information system characterized by an information table and is used, for example, in the theory of rough sets [50,52].

6. Conclusions

This article is devoted to the description of the concept and the basis of the apparatus of new mathematical objects–spots, which are introduced to represent and process qualitative data. It can be used to model human mental images, perceptions, and reasoning in AI. Furthermore, this paper demonstrates another application of the developed apparatus—for solving inverse problems by the learning method.

The proposed model uses such qualitative information about spots as elementary relations between them and introduces L4 logical numbers that encode these relations. Based on L4 numbers, the theory introduces L4 vectors and L4 matrices using the analogy with numerical matrix algebra. Although L4 numbers correspond to an elementary level of qualitative data, fusing a large number of them allows you to extract a higher level of information, including numerical.

Equations have been derived for reconstructing an image using only qualitative information about its elementary relations with a set of base figures. A general scheme for solving inverse problems for L4 and numerical data is proposed, including a learning method for solving.

The introduced apparatus was tested by solving image reconstruction problems using only qualitative data of its elementary relations with the scan figures. The application of spot-based inverse Radon’s algorithm for the reconstruction of a binary image was also demonstrated.

Further research in the field of the proposed theory involves the development of algorithms for solving various inverse problems, including inverse electromagnetic scattering. Another goal of the work is to design neural networks based on the proposed spots model, where each layer corresponds to the L4 matrix.

Funding

The investigation was supported by Program no. FFNN-2022-0019 of the Ministry of Science and Higher Education of Russia for Valiev Institute of Physics and Technology of RAS.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The author expresses deep appreciation to Lukichev V.F. and Rudenko K.V. for the support and discussion of this work.

Conflicts of Interest

The author declares no conflict of interest.

References

- Ramm, A.G. Inverse Problems: Mathematical and Analytical Techniques with Applications to Engineering; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2006. [Google Scholar]

- Devaney, A.J. Mathematical Foundations of Imaging, Tomography and Wavefield Inversion; Cambridge University Press: Cambridge, UK, 2012. [Google Scholar]

- Mudry, K.M.; Plonsey, R.; Bronzino, J.D. Biomedical Imaging; CRC Press: Boca Raton, FL, USA, 2003. [Google Scholar]

- Maisto, M.A.; Masoodi, M.; Pierri, R.; Solimene, R. Sensor Arrangement in Through-the Wall Radar Imaging. IEEE Open J. Antennas Propag. 2022, 3, 333–341. [Google Scholar] [CrossRef]

- Chen, X. Computational Methods for Electromagnetic Inverse Scattering; John Wiley & Sons: Hoboken, NJ, USA, 2018. [Google Scholar]

- Chandra, R.; Zhou, H.; Balasingham, I.; Narayanan, R.M. On the Opportunities and Challenges in Microwave Medical Sensing and Imaging. IEEE Trans. Biomed. Eng. 2015, 62, 1667–1682. [Google Scholar] [CrossRef]

- Simonov, N.; Kim, B.-R.; Lee, K.-J.; Jeon, S.-I.; Son, S.-H. Advanced Fast 3-D Electromagnetic Solver for Microwave Tomography Imaging. IEEE Trans. Med Imaging 2017, 36, 2160–2170. [Google Scholar] [CrossRef]

- O’Loughlin, D.; O’Halloran, M.J.; Moloney, B.M.; Glavin, M.; Jones, E.; Elahi, M.A. Microwave Breast Imaging: Clinical Advances and Remaining Challenges. IEEE Trans. Biomed. Eng. 2018, 65, 2580–2590. [Google Scholar] [CrossRef] [PubMed]

- Shao, W.; McCollough, T. Advances in Microwave Near-Field Imaging: Prototypes, Systems, and Applications. IEEE Microw. Mag. 2020, 21, 94–119. [Google Scholar] [CrossRef] [PubMed]

- Simonov, N.; Son, S.-H. Overcoming Insufficient Microwave Scattering Data in Microwave Tomographic Imaging. IEEE Access 2021, 9, 111231–111237. [Google Scholar] [CrossRef]

- Li, K.; Tang, J.; Chen, G.-H. Statistical model based iterative reconstruction (MBIR) in clinical CT systems: Experimental assessment of noise performance. Med. Phys. 2014, 41, 041906. [Google Scholar] [CrossRef] [PubMed]

- Pickhardt, P.J.; Lubner, M.G.; Kim, D.H.; Tang, J.; Ruma, J.A.; del Rio, A.M.; Chen, G.-H. Abdominal CT With Model-Based Iterative Reconstruction (MBIR): Initial Results of a Prospective Trial Comparing Ultralow-Dose With Standard-Dose Imaging. Am. J. Roentgenol. 2012, 199, 1266–1274. [Google Scholar] [CrossRef]

- Fessler, J.A. Model-Based Image Reconstruction for MRI. IEEE Signal Process. Mag. 2010, 27, 81–89. [Google Scholar] [CrossRef]

- Wang, X.; Tan, Z.; Scholand, N.; Roeloffs, V.; Uecker, M. Physics-based reconstruction methods for magnetic resonance imaging. Philos. Trans. R. Soc. A Math. Phys. Eng. Sci. 2021, 379, 20200196. [Google Scholar] [CrossRef]

- McLeavy, C.; Chunara, M.; Gravell, R.; Rauf, A.; Cushnie, A.; Talbot, C.S.; Hawkins, R. The future of CT: Deep learning reconstruction. Clin. Radiol. 2021, 76, 407–415. [Google Scholar] [CrossRef] [PubMed]

- Lee, D.; Lee, J.; Ko, J.; Yoon, J.; Ryu, K.; Nam, Y. Deep learning in MR image processing. Investig. Magn. Reson. Imaging 2019, 23, 81–99. [Google Scholar] [CrossRef]

- Khoshdel, V.; Ashraf, A.; LoVetri, J. Enhancement of Multimodal Microwave-Ultrasound Breast Imaging Using a Deep-Learning Technique. Sensors 2019, 19, 4050. [Google Scholar] [CrossRef] [PubMed]

- Wu, H.; Ren, X.; Guo, L.; Li, Z. A Non-Iterative Method Combined with Neural Network Embedded in Physical Model to Solve the Imaging of Electromagnetic Inverse Scattering Problem. Electronics 2021, 10, 3104. [Google Scholar] [CrossRef]

- Gao, F.; Huang, T.; Sun, J.; Wang, J.; Hussain, A.; Yang, E. A New Algorithm for SAR Image Target Recognition Based on an Improved Deep Convolutional Neural Network. Cogn. Comput. 2018, 11, 809–824. [Google Scholar] [CrossRef]

- Chen, X.; Wei, Z.; Li, M.; Rocca, P. A review of deep learning approaches for inverse scattering problems (invited review). Prog. Electromagn. Res. 2020, 167, 67–81. [Google Scholar] [CrossRef]

- Zhu, X.X.; Montazeri, S.; Ali, M.; Hua, Y.; Wang, Y.; Mou, L.; Shi, Y.; Xu, F.; Bamler, R. Deep Learning Meets SAR: Concepts, models, pitfalls, and perspectives. IEEE Geosci. Remote Sens. Mag. 2021, 9, 143–172. [Google Scholar] [CrossRef]

- Alshammari, M.M.; Almuhanna, A.; Alhiyafi, J. Mammography Image-Based Diagnosis of Breast Cancer Using Machine Learning: A Pilot Study. Sensors 2022, 22, 203. [Google Scholar] [CrossRef]

- Fayaz, M.; Torokeldiev, N.; Turdumamatov, S.; Qureshi, M.S.; Qureshi, M.B.; Gwak, J. An Efficient Methodology for Brain MRI Classification Based on DWT and Convolutional Neural Network. Sensors 2021, 21, 7480. [Google Scholar] [CrossRef]

- Awan, M.J.; Rahim, M.S.M.; Salim, N.; Rehman, A.; Garcia-Zapirain, B. Automated Knee MR Images Segmentation of Anterior Cruciate Ligament Tears. Sensors 2022, 22, 1552. [Google Scholar] [CrossRef]

- Simons, T.; Lee, D.-J. A Review of Binarized Neural Networks. Electronics 2019, 8, 661. [Google Scholar] [CrossRef]

- Aggarwal, C.C. Neural Networks and Deep Learning; Springer: Cham, Switzerland, 2018; Volume 10, pp. 978–983. [Google Scholar] [CrossRef]

- Simonov, N.A. Spots Concept for Problems of Artificial Intelligence and Algorithms of Neuromorphic Systems. Russ. Microelectron. 2020, 49, 431–444. [Google Scholar] [CrossRef]

- Simonov, N. The Spot Model for Representation and Processing of Qualitative Data and Semantic Information. In Proceedings of the Selected Contributions to the Russian Advances in Artificial Intelligence Track at RCAI 2021, Taganrog, Russia, 11–16 October 2021; pp. 55–69. [Google Scholar]

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, UK, 2016. [Google Scholar]

- Whitehead, A.N. Process and Reality: An Essay in Cosmology; Cambridge University Press: Cambridge, UK, 1929. [Google Scholar]

- Clarke, B.L. A calculus of individuals based on connection. Notre Dame J. Form. Log. 1981, 22, 204–218. [Google Scholar] [CrossRef]

- Asher, N.; Vieu, L. Toward a geometry of common sense: A semantics and a complete axiomatization of mereotopology. In Proceedings of the IJCAI’95-ATAL Workshop, Montreal, Canada, 19–20 August 1995; pp. 846–852. [Google Scholar]

- Stell, J.G. Mereotopology and Computational Representations of the Body. Comput. Cult. 2017, 6. [Google Scholar]

- Cohn, A.G.; Bennett, B.; Gooday, J.; Gotts, N.M. Qualitative Spatial Representation and Reasoning with the Region Connection Calculus. Geoinformatica 1997, 1, 275–316. [Google Scholar] [CrossRef]

- Cohn, A.G.; Renz, J. Qualitative Spatial Representation and Reasoning. In Handbook of Knowledge Representation; van Harmelen, F., Lifschitz, V., Porter, B., Eds.; Elsevier B.V.: Amsterdam, Netherlands, 2008; Chapter 13; pp. 551–596. [Google Scholar] [CrossRef]

- Cohn, A.G.; Gotts, N.M. A mereological approach to representing spatial vagueness. In Proceedings of the 5th Conference Principles of Knowledge Representation and Reasoning, Cambridge, MA, USA, 5–8 November 1996; Morgan Kaufmann: Burlington, NJ, USA, 1996; pp. 230–241. [Google Scholar]

- Galton, A. The mereotopology of discrete space. In Proceedings of the International Conference COSIT’99, Stade, Germany, 25–29 August 1999; pp. 251–266. [Google Scholar]

- Galton, A. Discrete mereotopology. In Mereology and the Sciences; Synthese Library (Studies in Epistemology, Logic, Methodology, and Philosophy of Science); Calosi, C., Graziani, P., Eds.; Springer: Cham, Switzerland, 2014; Volume 371, pp. 293–321. [Google Scholar]

- Balbiani, P.; Gencer, C. Finitariness of elementary unification in Boolean Region Connection Calculus. In International Symposium on Frontiers of Combining Systems; Springer: Cham, Switzerland, 2017; pp. 281–297. [Google Scholar]

- Izadi, A.; Stock, K.M.; Guesgen, H.W. Multidimensional Region Connection Calculus. In Proceedings of the 30th International Workshop on Qualitative Reasoning, Melbourne, Australia, 21 August 2017. [Google Scholar]

- Davari, S.; Ghadiri, N. Fuzzy region connection calculus and its application in fuzzy spatial skyline queries. In Intelligent Computing-Proceedings of the Computing Conference; Arai, K., Bhatia, R., Kapoor, S., Eds.; Springer: Cham, Switzerland, 2019; Volume 997, pp. 659–677. [Google Scholar]

- Bennett, B. Spatial reasoning with propositional logics. In Proceedings of the 4th International Conference on Principles on Knowledge Representation and Reasoning (KR-94); Doyle, J., Sandewall, E., Torasso, P., Eds.; Morgan Kaufmann: Bonn, Germany, 1994; pp. 165–176. [Google Scholar]

- Drakengren, T.; Jonsson, P. A Complete Classification of Tractability in RCC-5. J. Artif. Intell. Res. 1997, 6, 211. [Google Scholar] [CrossRef]

- Egenhofer, M.J.; Franzosa, R. Point-set topological spatial relations. Int. J. Geogr. Inf. Syst. 1991, 5, 161–174. [Google Scholar] [CrossRef]

- Egenhofer, M.J.; Herring, J.R. Categorizing Binary Topological Relations between Regions, Lines and Points in Geographic Databases; Technical Report; Department of Surveying Engineering, University of Maine: Orono, ME, USA, 1991. [Google Scholar]

- Clementini, E.; Di Felice, P. Approximate topological relations. Int. J. Approx. Reason. 1997, 16, 173–204. [Google Scholar] [CrossRef]

- Stell, J.G. Part and complement: Fundamental concepts in spatial relations. Ann. Math. Artif. Intell. 2004, 41, 1–17. [Google Scholar] [CrossRef]

- Pawlak, Z. Rough sets. Int. J. Comput. Inf. Sci. 1982, 11, 341–356. [Google Scholar] [CrossRef]

- Pawlak, Z. Rough set theory and its applications to data analysis. Cybern. Syst. 1998, 29, 661–688. [Google Scholar] [CrossRef]

- Suraj, Z. An Introduction to Rough Set Theory and Its Applications: A Tutorial. In Proceedings of the 1st International Computer Engineering Conference (ICENCO’) New Technologies for the Information Society, Cairo, Egypt, 27–30 December 2004; pp. 1–39. [Google Scholar]

- Peters, G.; Lingras, P.; Slezak, D.; Yao, Y.Y. (Eds.) Rough Sets: Selected Methods and Applications in Management and Engineering; Springer: London, UK, 2012. [Google Scholar]

- Yao, Y.Y. Rough set approximations: A concept analysis point of view. In Computational Intelligence; Ishibuchi, H., Ed.; Encyclopedia of Life Support Systems; UNESCO-EOLSS: Paris, France, 2015; Volume 1, pp. 282–296. [Google Scholar]

- Skowron, A.; Dutta, S. Rough sets: Past, present, and future. Nat. Comput. 2018, 17, 855–876. [Google Scholar] [CrossRef] [PubMed]

- Sun, L.; Wang, L.; Xu, J.; Zhang, S. A Neighborhood Rough Sets-Based Attribute Reduction Method Using Lebesgue and Entropy Measures. Entropy 2019, 21, 138. [Google Scholar] [CrossRef]

- Slim, H.; Nadeau, S. A Mixed Rough Sets/Fuzzy Logic Approach for Modelling Systemic Performance Variability with FRAM. Sustainability 2020, 12, 1918. [Google Scholar] [CrossRef]

- Wille, R. Restructuring lattice theory: An approach based on hierarchies of concepts. In Ordered Sets; Rival, I., Ed.; Reidel: Dordrecht, The Netherlands, 1982; pp. 445–470. [Google Scholar]

- Ganter, B.; Wille, R. Formal Concept Analysis: Mathematical Foundations; Springer: New York, NY, USA, 1999. [Google Scholar]

- Wille, R. Formal Concept Analysis as Mathematical Theory of Concepts and Concept Hierarchies. In Formal Concept Analysis, LNAI 3626; Ganter, B., Stumme, G., Wille, R., Eds.; Springer: Berlin/Heidelberg, Germany, 2005; pp. 1–33. [Google Scholar]

- Zadeh, L.A. Fuzzy sets. Inf. Control 1965, 8, 338–353. [Google Scholar] [CrossRef]

- Buckley, J.J.; Eslami, E. Fuzzy plane geometry I: Points and lines. Fuzzy Sets Syst 1997, 86, 179–187. [Google Scholar] [CrossRef]

- Buckley, J.J.; Eslami, E. Fuzzy plane geometry II: Circles and polygons. Fuzzy Sets Syst. 1997, 87, 79–85. [Google Scholar] [CrossRef]

- Zadeh, L.A. Towards a theory of fuzzy information granulation and its centrality in human reasoning and fuzzy logic. Fuzzy Sets Syst. 1997, 19, 111–127. [Google Scholar] [CrossRef]

- Yao, J.T. A Ten-year Review of Granular Computing. In Proceedings of the IEEE International Conference on Granular Computing, Silicon Valley, CA, USA, 2–5 November 2007; pp. 734–739. [Google Scholar]

- Lin, T.Y. Data mining: Granular computing approach. In Proceedings of the Pacific-Asia Conference on Knowledge Discovery and Data Mining, Beijing, China, 26–28 April 1999; Springer: Berlin/Heidelberg, Germany, 1999; pp. 24–33. [Google Scholar]

- Yao, Y.Y. Information granulation and rough set approximation. Int. J. Intell. Syst. 2001, 16, 87–104. [Google Scholar] [CrossRef]

- Nguyen, S.H.; Skowron, A.; Stepaniuk, J. Granular computing: A rough set approach. Comput. Intell. 2001, 17, 514–544. [Google Scholar] [CrossRef]

- Yao, J.T.; Vasilakos, A.V.; Pedrycz, W. Granular Computing: Perspectives and Challenges. IEEE Trans. Cybern. 2013, 43, 1977–1989. [Google Scholar] [CrossRef] [PubMed]

- Skowron, A.; Jankowski, A.; Dutta, S. Interactive granular computing. Granul. Comput. 2016, 1, 95–113. [Google Scholar] [CrossRef]

- Butenkov, S.A.; Krivsha, V.V.; Krivsha, N.S. The Use of the Mathematical Apparatus of Spatial Granulation in The Problems of Perception and Image Recognition. Recognit. Percept. Images Fundam. Appl. 2021, 221–259. [Google Scholar] [CrossRef]

- Diestel, R. Graph Theory, 3rd ed.; Springer: Berlin/Heidelberg, Germany; New York, NY, USA, 2005. [Google Scholar]

- Klette, R.; Rosenfeld, A. Digital Geometry: Geometric Methods for Digital Picture Analysis; Elsevier, Morgan Kaufmann Publishers: San Francisco, CA, USA, 2004; Chapters 4–5. [Google Scholar]

- Rubinov, M.; Sporns, O. Complex network measures of brain connectivity: Uses and interpretations. NeuroImage 2010, 52, 1059–1069. [Google Scholar] [CrossRef] [PubMed]

- Cao, S.; Lu, W.; Xu, Q. Deep neural networks for learning graph representations. In Proceedings of the 30th AAAI Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; pp. 1145–1152. [Google Scholar]

- Defferrard, M.; Bresson, X.; Vandergheynst, P. Convolutional neural networks on graphs with fast localized spectral filtering. In Proceedings of the 30th Conference on Neural Information Processing Systems (NIPS 2016), Barcelona, Spain, 5–10 December 2016; pp. 3844–3852. [Google Scholar]

- Cui, P.; Wang, X.; Pei, J.; Zhu, W. A survey on network embedding. IEEE Trans. Knowl. Data Eng. 2019, 31, 833–852. [Google Scholar] [CrossRef]

- Bordes, A.; Usunier, N.; Garcia-Dur’an, A.; Weston, J.; Yakhnenko, O. Translating embeddings for modeling multi-relational data. In Advances in Neural Information Processing Systems 26; Burges, C.J.C., Bottou, L., Welling, M., Ghahramani, Z., Eds.; Curran Associates, Inc.: Redhook, NY, USA, 2018; pp. 2787–2795. [Google Scholar]

- Wang, Z.; Zhang, J.; Feng, J.; Chen, Z. Knowledge graph embedding by translating on hyperplanes. In Proceedings of the 28th AAAI Conference on Artificial Intelligence, Québec City, QC, Canada, 27–31 July 2014; pp. 1112–1119. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2023 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).